Abstract

In this paper, an approach for classifying gastrointestinal (GI) diseases from endoscopic images is proposed. The proposed approach is built using a convolutional neural network (CNN) with batch normalization (BN) and the exponential linear unit (ELU) as the activation function. It consists of eight layers (six convolutional and two fully connected layers) and is used to identify eight classes of GI diseases in version two of the Kvasir dataset. The proposed approach was compared with other CNN architectures (VGG16, VGG19 and Inception-v3) using five criteria: the number of convolutional layers, the total number of parameters of the convolutional layers, the number of epochs, validation accuracy and test accuracy. The proposed approach achieved good results compared with these architectures: its validation accuracy of 88% is higher than that of the other architectures, and its test accuracy of 87% outperforms the Inception-v3 architecture. Moreover, the proposed approach is trained on fewer images, without pre-training, and has lower computational complexity in the training phase.

Keywords

- Endoscopic gastrointestinal (GI) images

- Kvasir dataset

- Convolutional neural network (CNN)

- Batch normalization (BN)

- Exponential linear unit (ELU)

1 Introduction

The American Society for Gastrointestinal Endoscopy (ASGE) supports the analysis of endoscopic images of the gastrointestinal (GI) tract to assist clinicians in making correct decisions [1]. Advances in endoscopic imaging technology have refined its diagnostic and therapeutic uses, offering alternatives that can allow patients to avoid biopsy and surgical procedures [2]. The digestive system is explored with endoscopic imaging using gastroscopy for the upper GI tract and colonoscopy for the lower GI tract. Statistical analyses of GI disorders show that the GI tract is affected by various potentially fatal diseases, such as oesophageal, stomach and colorectal cancer [3]. The most common GI cancers are colorectal cancer, with 1.80 million cases, and stomach cancer, with 1.03 million cases. Worldwide, approximately 862,000 colorectal cancer deaths and 783,000 stomach cancer deaths occur each year [4]. The factors related to GI diseases include environmental factors (Helicobacter pylori infection, an unhealthy diet, food storage), treatment factors (using antibiotics to kill a specific bacterium, poorly qualified gastroenterologists), genetic factors (inherited cancer genes) and unknown factors. In some cases, optical diagnoses by endoscopic examination suffer from endoscopist errors, lengthy procedures and poor-quality images [5]. Therefore, computer-assisted diagnosis systems for GI images can enable accurate and rapid classification by discriminating between normal and diseased GI tract images, helping to reduce mortality from GI diseases [6].

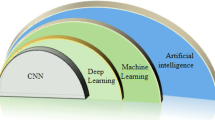

In general, endoscopic images of the GI tract are considered biomedical images, and deep learning algorithms are needed to process these large volumes of images before diagnosis. A major challenge with biomedical images is classifying the low-level visual images obtained from imaging devices. The deep convolutional neural network (CNN) is a common learning algorithm that has been successful in medical image classification [7]. For example, CNNs have been applied effectively to polyp detection in colonoscopy videos [8], lung image classification [9], pancreas segmentation in CT images [10] and brain tumour segmentation in magnetic resonance imaging (MRI) scans [11]. Additionally, CNN frameworks running on accelerated hardware have been used for medical image retrieval [12] and medical image segmentation [13]. Thus, CNN architectures have enabled rapid automated classification of large numbers of medical images.

This paper presents a CNN model for classifying GI diseases from endoscopic images. The remainder of this paper is structured as follows. Related works are discussed in Sect. 2, with particular attention to previous CNN architectures that use BN [14] or ELU as the activation function [15]. Section 3 describes the image dataset used in this paper. Section 4 explains the proposed methodology. The experimental results are reported in Sect. 5. Finally, concluding remarks are given in Sect. 6.

2 Related Works

CNNs have been used extensively to solve computer vision problems such as image identification [16], because a CNN is one of the most effective ways to extract features for non-trivial tasks [17]. Numerous CNN architectures in the literature have advanced the results of different image classification tasks, for example VGG16 and VGG19 [18], which won the runner-up award in ILSVRC-2014. VGG16 is a 16-layer network containing 13 convolutional layers, three fully connected layers and five max-pooling layers, while VGG19 is a 19-layer network containing 16 convolutional layers, three fully connected layers and five max-pooling layers. Despite the successes of these architectures, one of their drawbacks is that they are difficult to train [19].
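As a rough illustration of how per-architecture figures such as those compared in Sect. 5 can be obtained, the sketch below counts the convolutional layers and convolutional parameters of VGG16 and VGG19 from their layer configurations. It assumes 3 × 3 kernels with one bias per filter and RGB (3-channel) input, as in the original VGG design; the list encoding and the helper name `conv_stack_stats` are illustrative only.

```python
# Convolutional stacks of VGG16 and VGG19: integers are the output channels of
# 3x3 convolutional layers, "M" marks a 2x2 max-pooling layer.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
VGG19 = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
         512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def conv_stack_stats(config, in_channels=3, kernel=3):
    """Return (number of conv layers, number of conv weights + biases)."""
    n_layers, n_params = 0, 0
    channels = in_channels
    for item in config:
        if item == "M":              # pooling layers have no parameters
            continue
        n_layers += 1
        n_params += kernel * kernel * channels * item + item  # weights + biases
        channels = item
    return n_layers, n_params


for name, cfg in [("VGG16", VGG16), ("VGG19", VGG19)]:
    layers, params = conv_stack_stats(cfg)
    print(f"{name}: {layers} conv layers, {params:,} conv parameters")
```

It prints 13 layers with 14,714,688 parameters for VGG16 and 16 layers with 20,024,384 parameters for VGG19. The counts do not depend on the input resolution, because convolution weights are shared across spatial positions.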

In addition, a wide range of techniques have been developed to improve the performance or facilitate the training of CNNs, such as incorporating BN or using ELU as the activation function. BN is a technique introduced by Ioffe and Szegedy [14] for accelerating deep network training. BN has become a standard element of modern high-performing CNN designs such as Inception-v3 [20], which reported a top-5 error of 3.5% on the ImageNet validation set with an ensemble of four models. BN helps the network train faster and achieve higher accuracy, stabilizes the distribution of layer inputs and reduces the internal covariate shift [14, 21, 22].

The experimental results in [15] indicated that the ELU activation function accelerates learning in deep neural networks and leads to higher classification accuracies and better generalization than other activation functions such as rectified linear units (ReLUs), and that ELU with BN outperforms ReLU with BN. Motivated by these findings, this paper uses a CNN model that incorporates BN and uses ELU as the activation function to identify GI diseases from endoscopic images.

3 Dataset Description

The Kvasir dataset [23] has two versions. Deep learning methods were applied to version one in [24, 25]; however, version two, released in 2017, had not been used in any previous study in this field at the time of writing. Therefore, in this paper the proposed model is applied to version two of the Kvasir dataset. Kvasir version two has a size of 2.3 GB and contains 8,000 images, with resolutions ranging from 720 × 576 up to 1920 × 1072 pixels. The images are divided into eight classes with 1,000 images per class. The Kvasir dataset was created from endoscopic images of the GI tract, and its eight classes are described in Table 1. The classes fall into three groups: anatomical landmarks, pathological findings and polyp removal, as shown in Fig. 1.

4 The Proposed Approach

The proposed approach involves five phases: image preprocessing, data augmentation, feature extraction, classification and checkpoint ensemble. The feature extraction phase includes convolutional layers, BN layers, ELU layers and max-pooling layers, while the classification phase contains fully connected layers, BN layers, an ELU layer, a dropout layer and a softmax layer. Figure 2 shows a structural representation of the proposed approach. The RMSProp optimizer [26] with a learning rate of 1e−4, categorical cross-entropy as the loss function [27], a batch size of 32 and 115 epochs were used, as listed in Table 2.
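The loss function and optimizer named above can be written out for scalar weights as a minimal sketch. It uses the textbook form of RMSProp with rho = 0.9 and epsilon = 1e-7 as assumed defaults; Keras's `RMSprop` exposes `rho` and `epsilon` as arguments, but its implementation may differ in small details such as where epsilon is added. The last lines derive the number of weight updates, assuming each epoch makes one pass over the 5,600 training images of Sect. 4.1.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-7):
    """Per-sample loss -sum_k y_k * log(p_k); p is clipped to avoid log(0)."""
    return -sum(t * math.log(min(max(p, eps), 1.0 - eps))
                for t, p in zip(y_true, y_pred))


def rmsprop_step(weights, grads, cache, lr=1e-4, rho=0.9, eps=1e-7):
    """One textbook RMSProp update on lists of scalar weights.

    `cache` is the running average of squared gradients; each weight moves by
    its gradient divided by the square root of that average.
    """
    cache = [rho * c + (1.0 - rho) * g * g for c, g in zip(cache, grads)]
    weights = [w - lr * g / (math.sqrt(c) + eps)
               for w, g, c in zip(weights, grads, cache)]
    return weights, cache


# Update count implied by Table 2 (batch size 32, 115 epochs) and Sect. 4.1.
STEPS_PER_EPOCH = math.ceil(5600 / 32)   # mini-batches per epoch
TOTAL_UPDATES = STEPS_PER_EPOCH * 115    # weight updates over training
```

With a batch size of 32 this gives 175 mini-batches per epoch and 20,125 weight updates over 115 epochs.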

4.1 Images Preprocessing Phase

The dataset was split into three separate folders. The first contained the training set, with 700 images of each class; the second contained the validation set, with 150 images of each class; and the third contained the test set, with 150 images of each class. Within each folder, each class was stored in its own subfolder.
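One way to produce such a per-class 700/150/150 split is sketched below. The paper does not say how images were assigned to the three sets, so the seeded random shuffle is an assumption, as are the class folder names (taken to be those of the Kvasir v2 archive) and the file names, which are placeholders. Storing each split as one subfolder per class is the layout that Keras's `flow_from_directory` expects.

```python
import random

# Class folder names, assumed to match the Kvasir v2 archive.
CLASSES = ["dyed-lifted-polyps", "dyed-resection-margins", "esophagitis",
           "normal-cecum", "normal-pylorus", "normal-z-line",
           "polyps", "ulcerative-colitis"]


def split_class(filenames, n_train=700, n_val=150, n_test=150, seed=0):
    """Shuffle one class's images reproducibly and cut them into three disjoint lists."""
    if len(filenames) < n_train + n_val + n_test:
        raise ValueError("not enough images in this class")
    files = sorted(filenames)
    random.Random(seed).shuffle(files)
    return (files[:n_train],
            files[n_train:n_train + n_val],
            files[n_train + n_val:n_train + n_val + n_test])


splits = {"train": {}, "validation": {}, "test": {}}
for cls in CLASSES:
    # Placeholder names standing in for the 1,000 images of each class.
    names = [f"{cls}/img_{i:04d}.jpg" for i in range(1000)]
    tr, va, te = split_class(names)
    splits["train"][cls], splits["validation"][cls], splits["test"][cls] = tr, va, te

print({name: sum(len(files) for files in per_class.values())
       for name, per_class in splits.items()})
```

It prints `{'train': 5600, 'validation': 1200, 'test': 1200}`, matching the set sizes above.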

Before being fed to the proposed approach, all images in the training, validation and test sets were resized to 400 × 400 pixels to reduce computational time, and normalized by dividing the colour value of each pixel by 255 so that the values lie in the range [0, 1].
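The two preprocessing operations can be illustrated on a toy single-channel image, as below. In the actual pipeline Keras performs them through `flow_from_directory(..., target_size=(400, 400))` and `ImageDataGenerator(rescale=1./255)`; the nearest-neighbour interpolation and the helper names here are simplifying assumptions.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list of pixel values."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def rescale(image):
    """Map 8-bit intensities 0..255 to floats in [0, 1] (dividing by 255)."""
    return [[px / 255.0 for px in row] for row in image]


toy = [[0, 255], [128, 64]]            # hypothetical 2x2 single-channel image
resized = resize_nearest(toy, 4, 4)    # stands in for the 400x400 resize
normalized = rescale(resized)
```

A real 720 × 576 RGB frame would be handled the same way per channel, with `out_h = out_w = 400`.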

4.2 Data Augmentation Phase

Data augmentation techniques increase the amount of training data available, which is crucial when training a deep learning model from scratch [28]. In this paper, data augmentation was used to reduce the overfitting that can result from a small training set. Data augmentation includes many transformations, such as rotation, width shift, height shift, shear, zoom and horizontal flip, together with a fill mode that determines how pixels newly exposed by a transformation are filled. These transformations were applied to the images with the settings listed in Table 3.
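Two of these transformations are sketched below in plain Python on a 2-D list: a horizontal flip, and a width shift whose exposed border pixels take the nearest edge value, which is what `fill_mode="nearest"` does in Keras's `ImageDataGenerator`. The function names, the 0.5 flip probability and the integer pixel shift are illustrative assumptions; the actual ranges are those in Table 3, and Keras's `width_shift_range` also accepts a fraction of the image width.

```python
import random


def horizontal_flip(image):
    """Mirror a 2-D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def width_shift(image, shift):
    """Shift columns right (shift > 0) or left (shift < 0); exposed border
    pixels take the nearest edge value, like fill_mode="nearest"."""
    w = len(image[0])
    return [[row[min(max(c - shift, 0), w - 1)] for c in range(w)] for row in image]


def augment(image, max_shift, rng):
    """Randomly flip (probability 0.5), then shift by up to max_shift columns."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return width_shift(image, rng.randint(-max_shift, max_shift))


rng = random.Random(42)
sample = [[1, 2, 3, 4], [5, 6, 7, 8]]
augmented = [augment(sample, max_shift=1, rng=rng) for _ in range(3)]
```

For example, shifting `[[1, 2, 3, 4]]` one column to the right gives `[[1, 1, 2, 3]]`: the new left column repeats the original edge value instead of being left empty.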

4.3 Feature Extraction Phase

The convolutional layers, BN layers, ELU layers and max-pooling layers were used to extract important features from the images.

-

Convolutional Layers: the proposed approach involves six convolutional layers [29]. All convolutional layers contain 64 filters, except for the last two (layers five and six), each of which contains 128 filters. A kernel size of 3 × 3, a stride of 2 and "same" padding were used in all convolutional layers (see Table 2).

-

Batch Normalization is a recent approach for accelerating deep neural network training. It normalizes each scalar feature independently to have zero mean and unit variance, as shown in steps one, two and three of Algorithm 1. The normalized value for each training mini-batch is then scaled and shifted by the learnable parameters \(\gamma\) and \(\beta\), as shown in step four of Algorithm 1. This transformation keeps the input distribution of each layer stable across mini-batches; thus, BN reduces the internal covariate shift and the number of iterations required for convergence while improving the final performance. BN also maintains non-trainable weights (the moving mean and variance vectors), which are updated as running averages during training rather than through backpropagation [30]. BN can be treated as another layer inserted into the model architecture, like a convolutional, activation or fully connected layer [31]. The proposed CNN approach includes eight BN layers, six in the feature extraction phase and two in the classification phase, and each BN layer is placed before an activation function layer.

-

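An Exponential Linear Unit (ELU) is the activation function used in the proposed approach; following [32], it is defined as

\[ f(x) = \begin{cases} x, & \text{if } x > 0 \\ \alpha\,(e^{x} - 1), & \text{if } x \le 0 \end{cases} \]

in which the gradient with respect to the input is

\[ f'(x) = \begin{cases} 1, & \text{if } x > 0 \\ f(x) + \alpha, & \text{if } x \le 0 \end{cases} \]

where \(\alpha = 1\).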

The proposed CNN approach involves seven ELU layers, six in the feature extraction phase and one in the classification phase. Each ELU layer directly follows a BN layer, as shown in Fig. 2.

-

Max-Pooling down-samples the input representation in the feature extraction phase [33]. In this paper, six max-pooling layers of size 2 × 2 were used, one after each ELU layer of the feature extraction phase.
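To make the order of operations concrete, the sketch below runs one feature-extraction block (convolution, BN, ELU, 2 × 2 max-pooling) on a toy mini-batch of single-channel images with a single 3 × 3 filter. It is a simplified illustration, not the authors' implementation: real layers use 64 or 128 filters over all input channels and add a bias, and BN also keeps running statistics for inference, all of which are omitted here. The convolution uses stride 1 and symmetric zero padding, leaving the downsampling to the pooling layers; under that assumption six blocks reduce a 400 × 400 input to 6 × 6. The BN epsilon of 1e-3 mirrors the Keras default and is an assumption.

```python
import math


def conv2d_same(img, kernel):
    """Stride-1 cross-correlation with zero 'same' padding (one channel, no bias)."""
    h, w, k = len(img), len(img[0]), len(kernel)
    p = k // 2
    return [[sum(img[i + a - p][j + b - p] * kernel[a][b]
                 for a in range(k) for b in range(k)
                 if 0 <= i + a - p < h and 0 <= j + b - p < w)
             for j in range(w)]
            for i in range(h)]


def batch_norm(maps, gamma=1.0, beta=0.0, eps=1e-3):
    """Normalize one channel over the mini-batch (all images, all positions),
    then scale by gamma and shift by beta."""
    vals = [v for m in maps for row in m for v in row]
    mu = sum(vals) / len(vals)                          # mini-batch mean
    var = sum((v - mu) ** 2 for v in vals) / len(vals)  # mini-batch variance
    inv_std = 1.0 / math.sqrt(var + eps)
    return [[[gamma * (v - mu) * inv_std + beta for v in row] for row in m]
            for m in maps]


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_grad(x, alpha=1.0):
    # For x <= 0 the derivative alpha * exp(x) equals elu(x) + alpha.
    return 1.0 if x > 0 else elu(x, alpha) + alpha


def max_pool_2x2(img):
    """2x2 max-pooling with stride 2; a trailing odd row/column is dropped."""
    return [[max(img[i][j], img[i][j + 1], img[i + 1][j], img[i + 1][j + 1])
             for j in range(0, len(img[0]) - 1, 2)]
            for i in range(0, len(img) - 1, 2)]


def feature_block(batch, kernel):
    """Conv -> BN -> ELU -> max-pool, in the order described in Sect. 4.3."""
    conv = [conv2d_same(img, kernel) for img in batch]
    normed = batch_norm(conv)
    activated = [[[elu(v) for v in row] for row in m] for m in normed]
    return [max_pool_2x2(m) for m in activated]


# Spatial size after six blocks: 400 -> 200 -> 100 -> 50 -> 25 -> 12 -> 6.
SIDE_AFTER_SIX_BLOCKS = 400 // 2 ** 6
```

Because ELU maps negative inputs smoothly towards -alpha instead of clipping them to zero, the normalized activations keep a mean closer to zero than with ReLU, which is the property the ELU paper credits for faster learning.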

4.4 Classification Phase

The classification phase classifies the images after the output of the feature extraction phase has been flattened [34]. It uses two fully connected (FC) layers, of which the first (FC1) contains 512 neurons and the second (FC2) contains 8 neurons, together with BN layers, an ELU layer and a dropout layer [35] with a dropout rate of 0.3 to prevent overfitting. Finally, a softmax layer [36] produces the class probabilities.
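The last two operations of the classification phase can be sketched as follows: inverted dropout (the variant used by Keras's `Dropout` layer), which zeroes units only during training and rescales the survivors, and a numerically stable softmax over the eight FC2 outputs. The logits in the example are made-up values and the function names are illustrative.

```python
import math
import random


def softmax(logits):
    """Turn the 8 FC2 outputs into class probabilities that sum to 1."""
    m = max(logits)                              # subtract the max for stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dropout(x, rate=0.3, training=True, rng=None):
    """Inverted dropout: during training each unit is zeroed with probability
    `rate` and survivors are scaled by 1 / (1 - rate); identity at inference."""
    if not training or rate == 0.0:
        return list(x)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [xi / keep if rng.random() < keep else 0.0 for xi in x]


probs = softmax([2.0, 1.0, 0.1, 0.0, -1.0, 0.5, 0.3, -0.2])
predicted_class = max(range(len(probs)), key=probs.__getitem__)
hidden = dropout([1.0] * 512, rate=0.3, rng=random.Random(0))
```

Scaling the surviving units during training keeps the expected activation unchanged, so no rescaling is needed when dropout is switched off at test time.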

4.5 Checkpoint Ensemble Phase

When training a neural network model, the checkpoint technique [37] can be used either to save all the model weights and use them to obtain the final prediction, or to checkpoint only the model's improvements, saving just the best weights and using them to obtain the final prediction, as shown in Fig. 3. In this paper, checkpointing was used to save the best network weights (those achieving the lowest classification loss on the validation set).

The rounded boxes, from left to right, represent a model's weights at each step of a particular training process, and lighter shades represent better weights. When checkpointing the neural network model, all the model weights are saved to obtain the final prediction P. When checkpointing neural network improvements, only the best weights are saved to obtain the final prediction P.
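The rule for keeping only the best weights can be simulated in a few lines. In Keras this corresponds to passing `ModelCheckpoint(filepath, monitor="val_loss", save_best_only=True, mode="min")` in the `callbacks` list of `fit`; the sketch below only reproduces the decision logic, with hypothetical loss values.

```python
def save_best_only(val_losses):
    """Simulate checkpointing that keeps only the best weights.

    An epoch's weights are written whenever its validation loss is lower than
    every earlier one; the last write is the model kept for prediction.
    Returns (epochs_written, epoch_kept) with 1-based epoch numbers.
    """
    best = float("inf")
    written = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:              # improvement: overwrite the checkpoint
            best = loss
            written.append(epoch)
    return written, (written[-1] if written else None)


# Hypothetical validation losses for five epochs.
written, kept = save_best_only([0.90, 0.70, 0.80, 0.60, 0.65])
print(written, kept)
```

Here the weights are written after epochs 1, 2 and 4, so it prints `[1, 2, 4] 4`, and the epoch-4 weights are the ones used for the final prediction.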

5 Experimental Results

The architecture of the proposed approach was built with the Keras library [38], using TensorFlow [39] as the backend. The Keras ImageDataGenerator class [40] was used to normalize the images during the image preprocessing phase and to perform the data augmentation, as shown in Fig. 4, while the ModelCheckpoint callback was used to implement the checkpoint ensemble phase. The proposed approach has 2,702,056 parameters in total, of which 2,699,992 are trainable and 2,064 are non-trainable; the non-trainable parameters are the moving means and variances of the BN layers.
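The reported parameter totals can be cross-checked by hand. The sketch below is one reconstruction under stated assumptions, not the authors' code: it assumes stride-1 convolutions with all downsampling done by the six 2 × 2 max-pooling layers (so a 400 × 400 input becomes 6 × 6 × 128 before flattening), and a BN layer after every convolution, after FC1 (512 units) and after FC2 (8 units). Each BN layer holds two trainable values (gamma, beta) and two non-trainable values (moving mean, moving variance) per channel.

```python
def conv_params(c_in, c_out, k=3):
    """Weights (k * k * c_in * c_out) plus one bias per filter."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus one bias per unit."""
    return n_in * n_out + n_out


FILTERS = [64, 64, 64, 64, 128, 128]   # six convolutional layers (Sect. 4.3)
side, channels = 400, 3                # 400x400 RGB input (Sect. 4.1)
conv_total = 0
for f in FILTERS:
    conv_total += conv_params(channels, f)
    channels = f
    side //= 2                         # assumed: only the 2x2 pooling downsamples

flat = side * side * channels          # inputs to FC1
fc_total = dense_params(flat, 512) + dense_params(512, 8)

bn_channels = sum(FILTERS) + 512 + 8   # assumed: BN after every conv, FC1 and FC2
bn_trainable = 2 * bn_channels         # gamma and beta per channel
bn_non_trainable = 2 * bn_channels     # moving mean and moving variance per channel

trainable = conv_total + fc_total + bn_trainable
total = trainable + bn_non_trainable
print(f"total={total:,} trainable={trainable:,} non-trainable={bn_non_trainable:,}")
```

It prints `total=2,702,056 trainable=2,699,992 non-trainable=2,064`, matching the figures above. The convolutional layers account for only 334,016 parameters, while FC1 alone has 2,359,808, so most of the model's parameters sit in the first fully connected layer.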

The proposed approach was evaluated using standard metrics: accuracy (Table 4), precision, recall and F1 score (Table 5), and a confusion matrix (Table 6).

Accuracy measures the ratio of correct predictions to the total number of instances evaluated and is calculated by the following formula [41]:

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

where TP, TN, FP and FN denote the numbers of true positives, true negatives, false positives and false negatives, respectively.

Precision measures the fraction of the instances predicted to belong to a particular class that actually belong to it, and is calculated as follows:

\[ \text{Precision} = \frac{TP}{TP + FP} \]

Recall measures the fraction of positive patterns that are correctly classified and is calculated by the following formula:

\[ \text{Recall} = \frac{TP}{TP + FN} \]

The F1 score is the harmonic mean of precision and recall:

\[ F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \]

The confusion matrix maps the predicted outputs against the actual outputs [42]. In addition, the pyplot module of Matplotlib [43] was used to plot the loss and accuracy of the model on the training and validation data during training, to check that the model did not overfit, as shown in Fig. 5.
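Given a confusion matrix such as Table 6, the per-class precision, recall and F1 score, and the overall accuracy, can be computed as in the following sketch. Overall multi-class accuracy is taken here as the trace of the matrix divided by the number of test images, i.e. the ratio of correct predictions to all instances; the 3-class matrix in the example is hypothetical and is not taken from Table 6.

```python
def metrics_from_confusion(cm):
    """cm[i][j] = number of images of actual class i predicted as class j.

    Returns the overall accuracy and a list of (precision, recall, F1) per class.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(n)) / total
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, actually another class
        fn = sum(cm[k]) - tp                         # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    return accuracy, per_class


# Hypothetical 3-class confusion matrix (rows: actual, columns: predicted).
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]
accuracy, per_class = metrics_from_confusion(cm)
```

For this matrix the accuracy is 27/30 = 0.9, and class 0 has precision 8/9 and recall 0.8. The same function applies unchanged to an 8 × 8 matrix.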

To evaluate the proposed approach, transfer learning and fine-tuning [44], together with data augmentation, were applied to the VGG16, VGG19 and Inception-v3 architectures using the same dataset and batch size as the proposed approach. Transfer learning was conducted first by replacing the fully connected layers with two new fully connected layers, FC1 with 512 neurons and FC2 with 8 neurons, and by adding a dropout layer after the flatten layer, with a dropout rate of 0.3 in the VGG architectures and 0.5 in the Inception-v3 architecture, to prevent overfitting. During transfer learning, the RMSProp optimizer with a learning rate of 1e−4 was used and the models were trained for 15 epochs. Then, the top convolutional block of the VGG16 and VGG19 architectures and the top two blocks of the Inception-v3 architecture were fine-tuned with a small learning rate of 1e−5 for 35 epochs.
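The two-stage schedule can be summarised in a small sketch. It is a simplified illustration rather than the authors' code: it assumes the layer names of the Keras VGG16 convolutional base (`block1_conv1` to `block5_pool`, input layer omitted), interprets "the top convolutional block" as all `block5_` layers, and only computes which base layers would be trainable in each stage. In an actual Keras model one would set each layer's `trainable` attribute accordingly and recompile the model before the second stage, because changes to `trainable` take effect only after compiling.

```python
# Layer names of the Keras VGG16 convolutional base (include_top=False),
# input layer omitted; listed inline so the sketch runs without TensorFlow.
VGG16_LAYERS = (["block1_conv1", "block1_conv2", "block1_pool",
                 "block2_conv1", "block2_conv2", "block2_pool"]
                + [f"block3_conv{i}" for i in (1, 2, 3)] + ["block3_pool"]
                + [f"block4_conv{i}" for i in (1, 2, 3)] + ["block4_pool"]
                + [f"block5_conv{i}" for i in (1, 2, 3)] + ["block5_pool"])

# (stage, learning rate, epochs) as described in the text.
SCHEDULE = [(1, 1e-4, 15),   # transfer learning: train only the new FC head
            (2, 1e-5, 35)]   # fine-tuning: also unfreeze the top conv block


def trainable_flags(layer_names, stage, top_prefix="block5_"):
    """Which base layers are trainable in each stage (the new head always is)."""
    if stage == 1:
        return {name: False for name in layer_names}
    return {name: name.startswith(top_prefix) for name in layer_names}


for stage, lr, epochs in SCHEDULE:
    flags = trainable_flags(VGG16_LAYERS, stage)
    print(stage, lr, epochs, [n for n, t in flags.items() if t])
```

The pre-trained models therefore train for 50 epochs in total, compared with the 115 epochs of the proposed network trained from scratch. For VGG19 the same prefix unfreezes four convolutional layers instead of three.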

The elements used for comparison were the number of convolutional layers, the total number of parameters of the convolutional layers, the number of epochs, the validation accuracy and the test accuracy; Table 7 compares the models' results when identifying GI diseases. The first and second comparative elements are the number of convolutional layers and the number of parameters of the convolutional layers. The proposed approach has the fewest convolutional layers and parameters of all the compared architectures, which reduces its computational complexity in the training phase. The third comparative element is the number of epochs in the training phase. The proposed approach requires the largest number of epochs; this was expected because, unlike the other architectures, the proposed network was not pre-trained, so more epochs are required to reach a stable accuracy. In terms of validation accuracy, the proposed approach obtained 88%, which is higher than that of the compared models. In terms of test accuracy, the proposed approach achieved 87%, similar to VGG16 and VGG19, whereas Inception-v3 achieved only 80%.

6 Conclusion

Automatic classification of GI diseases from images is increasingly important: it can assist endoscopists in determining the appropriate treatment for patients with GI diseases and reduce the costs of disease therapy. This paper introduced an approach for this purpose, consisting of a CNN with BN and ELU. The comparisons show that, although the proposed approach is trained on fewer images (without pre-training) and has lower computational complexity in the training phase, it outperforms the VGG16, VGG19 and Inception-v3 architectures in validation accuracy and outperforms the Inception-v3 model in test accuracy.

References

Subramanian, V., Ragunath, K.: Advanced endoscopic imaging: a review of commercially available technologies. Clin. Gastroenterol. Hepatol. 12, 368–376 (2014)

Pereira, S.P., Goodchild, G., Webster, G.J.M.: The endoscopist and malignant and non-malignant biliary obstruction. BBA – Mol. Basis Dis. 1864, 1478–1483 (2018)

Ferlay, J., et al.: GLOBOCAN 2012 v1.0, cancer incidence and mortality worldwide: IARC CancerBase No. 11. International Agency for Research on Cancer (2013). http://globocan.iarc.fr

American Cancer Society: Cancer Facts & Figures 2018. American Cancer Society, Atlanta, Ga (2018). https://www.cancer.org/

Repici, A., Hassan, C.: The endoscopist, the anesthesiologists, and safety in GI endoscopy. Gastrointest. Endosc. 85(1), 109–111 (2017)

Kominami, Y., Yoshida, S., Tanaka, S., et al.: Computer-aided diagnosis of colorectal polyp histology by using a real-time image recognition system and narrow-band imaging magnifying colonoscopy. Gastrointest. Endosc. 83, 643–649 (2016)

Khalifa, N.E.M., Taha, M.H.N., Ali, D.E., Slowik, A., Hassanien, A.E.: Artificial intelligence technique for gene expression by tumor RNA-Seq data: a novel optimized deep learning approach. IEEE Access 8, 22874–22883 (2020)

Tajbakhsh, N., Gurudu, S.R., Liang, J.: A comprehensive computer aided polyp detection system for colonoscopy videos. In: Information Processing in Medical Imaging. Springer, pp. 327–338 (2015)

Cai, W., Wang, X., Chen, M.: Medical image classification with convolutional neural network. In: 13th International Conference on Control Automation Robotics & Vision (ICARCV) (2014)

Roth, H.R., Farag, A., Lu, L., Turkbey, E.B., Summers, R.M.: Deep convolutional networks for pancreas segmentation in CT imaging. In: SPIE Medical Imaging. International Society for Optics and Photonics, p. 94131G (2015)

Havaei, M., Davy, A., Warde-Farley, D., Biard, A., Courville, A., Bengio, Y., Pal, C., Jodoin, P.-M., Larochelle, H.: Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31 (2017)

Qayyum, A., Anwar, S.M., Awais, M., Majid, M.: Medical image retrieval using deep convolutional neural network. Neurocomputing 266, 8–20 (2017)

Vardhana, M., Arunkumar, N., Lasrado, S., Abdulhay, E., Ramirez-Gonzalez, G.: Convolutional neural network for bio-medical image segmentation with hardware acceleration. Cognit. Syst. Res. 50, 10–14 (2018)

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift (2015). arXiv preprint arXiv:1502.03167v3

Clevert, D.A., Unterthiner, T., Hochreiter, S.: Fast and accurate deep network learning by exponential linear units (ELUs). In: ICLR Conference (2016)

Ezzat, D., Taha, M.H.N., Hassanien, A.E.: An optimized deep convolutional neural network to identify nanoscience scanning electron microscope images using social ski driver algorithm. In: International Conference on Advanced Intelligent Systems and Informatics, pp. 492–501. Springer, Cham (2019)

Albeahdili, H.M., Alwzwazy, H.A., Islam, N.E.: Robust convolutional neural networks for image recognition. Int. J. Adv. Comput. Sci. Appl. (ijacsa) 6(11) (2015). http://dx.doi.org/10.14569/IJACSA.2015.061115

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015 (2015)

Srivastava, R.K., Greff, K., Schmidhuber, J.: Training very deep networks. In: Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, Canada, pp. 2377–2385 (2015)

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016). https://doi.org/10.1109/cvpr.2016.308

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

Ioffe, S.: Batch renormalization: towards reducing minibatch dependence in batch-normalized models. CoRR, abs/1702.03275 (2017)

Asperti, A., Mastronardo, C.: The effectiveness of data augmentation for detection of gastrointestinal diseases from endoscopical images. In: Proceedings of the 11th International Joint Conference on Biomedical Engineering Systems and Technologies, KALSIMIS, Funchal, Madeira, Portugal, vol. 2, pp. 199–205 (2018)

Pogorelov, K., et al.: Kvasir: a multi-class image dataset for computer aided gastrointestinal disease detection. In: Proceedings of the 8th ACM on Multimedia Systems Conference, MMSys 2017, New York, NY, USA, pp. 164–169. ACM (2017)

Bello, I., Zoph, B., Vasudevan, V., Le, Q.V.: Neural optimizer search with reinforcement learning. In: International Conference on Machine Learning, pp. 459–468 (2017)

Santosa, B.: Multiclass classification with cross entropy-support vector machines. Procedia Comput. Sci. 72, 345–352 (2015). https://doi.org/10.1016/j.procs.2015.12.149

Ezzat, D., Hassanien, A.E., Taha, M.H.N., Bhattacharyya, S., Vaclav, S.: Transfer learning with a fine-tuned CNN model for classifying augmented natural images. In: International Conference on Innovative Computing and Communications, pp. 843–856. Springer, Singapore (2020)

Albawi, S., Mohammed, T.A., Al-Zawi, S.: Understanding of a convolutional neural network. In: International Conference on Engineering and Technology (ICET) (2017). https://doi.org/10.1109/icengtechnol.2017.8308186

Guo, Y., Wu, Q., Deng, C., Chen, J., Tan, M.: Double forward propagation for memorized batch normalization. AAAI (2018)

Schilling, F.: The effect of batch normalization on deep convolutional neural networks. Master thesis at CSC CVAP (2016)

Pedamonti, D.: Comparison of non-linear activation functions for deep neural networks on MNIST classification task. (2018). arXiv preprint arXiv:1804.02763v1

Darwish, A., Ezzat, D., Hassanien, A.E.: An optimized model based on convolutional neural networks and orthogonal learning particle swarm optimization algorithm for plant diseases diagnosis. Swarm Evol. Comput. 52, 100616 (2020)

Passalis, N., Tefas, A.: Learning bag-of-features pooling for deep convolutional neural networks. In: IEEE International Conference on Computer Vision (ICCV) (2017). https://doi.org/10.1109/iccv.2017.614

Wu, H., Gu, X.: Towards dropout training for convolutional neural networks. Neural Netw. 71, 1–10 (2015). https://doi.org/10.1016/j.neunet.2015.07.007

Yuan, B.: Efficient hardware architecture of softmax layer in deep neural network. In: 2016 29th IEEE International System-on-Chip Conference (SOCC) (2016). https://doi.org/10.1109/socc.2016.7905501

Chen, H., Lundberg, S., Lee, S.-I.: Checkpoint ensembles: ensemble methods from a single training process (2017). arXiv preprint arXiv:1710.03282v1

Chollet, F.: Keras: deep learning library for Theano and TensorFlow (2015). https://keras.io/. Accessed 19 Apr 2017

Zaccone, G.: Getting Started with TensorFlow. Packt Publishing Ltd. (2016)

Ayan, E., Unver, H.M.: Data augmentation importance for classification of skin lesions via deep learning. In: 2018 Electric Electronics, Computer Science, Biomedical Engineerings Meeting (EBBT). IEEE, pp. 1–4 (2018). https://doi.org/10.1109/EBBT.2018.8391469

Hossin, M., Sulaiman, M.N.: A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 5(2), 1–11 (2015). https://doi.org/10.5121/ijdkp.2015.5201

Maria Navin, J.R., Balaji, K.: Performance analysis of neural networks and support vector machines using confusion matrix. Int. J. Adv. Res. Sci. Eng. Technol. 3(5), 2106–2109 (2016)

Jeffrey, W.: The Matplotlib basemap toolkit user’s guide (v. 1.0.5). Matplotlib Basemap Toolkit documentation. Accessed 24 Apr 2013

Wang, G., Sun, Y., Wang, J.: Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 1–8 (2017). https://doi.org/10.1155/2017/2917536

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ezzat, D., Afify, H.M., Taha, M.H.N., Hassanien, A.E. (2021). Convolutional Neural Network with Batch Normalization for Classification of Endoscopic Gastrointestinal Diseases. In: Hassanien, A.E., Darwish, A. (eds) Machine Learning and Big Data Analytics Paradigms: Analysis, Applications and Challenges. Studies in Big Data, vol 77. Springer, Cham. https://doi.org/10.1007/978-3-030-59338-4_7

DOI: https://doi.org/10.1007/978-3-030-59338-4_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-59337-7

Online ISBN: 978-3-030-59338-4

eBook Packages: Computer Science, Computer Science (R0)