Abstract

We propose an optimization model of automatic grouping (clustering) based on the k-means model with the Mahalanobis distance measure. The model uses a training (parameterization) procedure for the Mahalanobis distance measure, in which an averaged estimate of the covariance matrix is calculated on a training sample. In this work, we investigate the application of the k-means algorithm with various distance measures (Euclidean, Manhattan, Mahalanobis) to the automatic grouping of devices, each of which is described by a large number of measured parameters. If a sample of known composition is available, we use it as a training (parameterizing) sample from which we calculate the averaged estimate of the covariance matrix of homogeneous production batches for the Mahalanobis distance. We propose a new clustering model based on the k-means algorithm with the Mahalanobis distance and an averaged (weighted average) estimate of the covariance matrix. In our computational experiments, we used various optimization models based on the k-means model for the automatic grouping (clustering) of electronic radio components based on the results of their non-destructive testing. Our new model of automatic grouping achieves the highest accuracy in terms of the Rand index.

1 Introduction

The increasing complexity of modern technology raises the requirements for the quality, reliability and durability of industrial products. Product quality is determined by production tests. The quality of products within a single production batch is determined by the stability of the product parameters. Moreover, the stability of product parameters in manufactured batches can be increased by increasing the stability of the technological process.

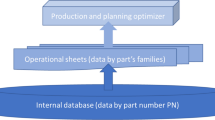

To prevent potentially unreliable electronic and radio components (ERC) from being installed in the onboard equipment of spacecraft with a long active lifetime, the entire electronic component base passes through specialized technical test centers [1, 2]. These centers carry out total incoming inspection of the ERC, total additional screening tests, total diagnostic non-destructive testing and selective destructive physical analysis (DPA). To extend the DPA results to the entire batch of products obtained, we must be sure that the products are manufactured from a single batch of raw materials. Therefore, identifying the original homogeneous ERC production batches within the shipped ERC lots is one of the most important steps during testing [1].

The k-means model is well established for this problem [1, 3,4,5,6,7,8,9,10]. Its application makes it possible to split the shipped lots into homogeneous production batches with sufficiently high accuracy. The problem is solved as a k-means problem [11]: the aim is to find \(k\) points (centers or centroids) \(X_1,\dots ,X_k\) in a \(d\)-dimensional space such that the sum of the squared distances from the known points (data vectors) \(A_1,\dots ,A_N\) to the nearest of the required points reaches its minimum (1):

\[ F(X_1,\dots ,X_k) = \sum_{i=1}^{N} \min_{j \in \{1,\dots ,k\}} \lVert X_j - A_i \rVert^{2} \to \min_{X_1,\dots ,X_k \in \mathbb{R}^{d}} \tag{1} \]
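Objective (1) and the classical alternating heuristic for it (Lloyd's algorithm, which is what "the k-means algorithm" usually denotes) can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions: data vectors are lists of floats, initial centers are random data points, and the names `kmeans_objective` and `lloyd_kmeans` are hypothetical, not the implementation used in the experiments.

```python
import random


def sq_euclidean(a, x):
    """Squared Euclidean distance between two vectors."""
    return sum((ai - xi) ** 2 for ai, xi in zip(a, x))


def kmeans_objective(points, centers):
    """Objective (1): sum over data vectors of the squared distance to the nearest center."""
    return sum(min(sq_euclidean(a, x) for x in centers) for a in points)


def lloyd_kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's heuristic: alternate nearest-center assignment and centroid update."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]  # random data points as initial centers
    labels = None
    for _ in range(max_iter):
        # Assignment step: each data vector goes to its nearest center.
        new_labels = [min(range(k), key=lambda j: sq_euclidean(a, centers[j])) for a in points]
        if new_labels == labels:  # no reassignment: a local optimum is reached
            break
        labels = new_labels
        # Update step: each center moves to the mean of its members.
        for j in range(k):
            members = [a for a, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return centers, labels


points = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
centers, labels = lloyd_kmeans(points, k=2)
print(labels, kmeans_objective(points, centers))
```

On these four toy points every initialization ends with the two natural pairs as clusters and an objective value of 1.0; on real data, Lloyd's algorithm only reaches a local minimum, which is why results of several runs are compared.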

Factor analysis methods cannot significantly reduce the dimension of the space without a loss of accuracy [12]. However, in some cases, the accuracy of partitioning into homogeneous batches (the proportion of objects correctly assigned to “their” cluster representing a homogeneous batch of products) can be significantly improved, especially for samples containing more than 2 or 3 homogeneous batches. In addition, although factor analysis methods do not significantly reduce the dimension of the search space, they reveal linear statistical dependencies (correlations) between the ERC parameters within a homogeneous batch.

Using an ensemble of models yields a slight increase in accuracy [3]. We also applied other clustering models, such as the Expectation-Maximization (EM) model and Kohonen self-organizing maps (SOM) [12].

The distance measure used in practical tasks of automatic object grouping in a real space depends on the features of that space. Changing the distance measure can improve the accuracy of automatic ERC grouping.

The idea of this work is to use the Mahalanobis distance measure in the k-means problem and to study the accuracy of the clustering results. We propose a new algorithm based on the k-means model that uses the Mahalanobis distance measure with an averaged estimate of the covariance matrix.

2 Mahalanobis Distance

In the k-means, k-median [13,14,15] and k-medoid [16,17,18] models, various distance measures may be applied [19, 20]. Correlation dependencies can be taken into account by moving from a search in a space with the Euclidean or rectangular distance to a search in a space with the Mahalanobis distance [21,22,23,24]. The square of the Mahalanobis distance \(D_M\) is defined as follows (2):

\[ D_M^{2}(X,\mu) = (X-\mu)^{T} C^{-1} (X-\mu) \tag{2} \]

where \(X\) is the vector of measured parameter values, \(\mu \) is the vector of coordinates of the cluster center, and \(C\) is the covariance matrix.
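Formula (2) translates directly into code. The sketch below is pure Python and assumes \(C\) is invertible; `invert` (Gauss-Jordan elimination) and `mahalanobis_sq` are hypothetical helper names used only to keep the example dependency-free. \(C^{-1}\) is computed once and reused for every distance evaluation.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:  # simple absolute threshold for this sketch
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mahalanobis_sq(x, mu, c_inv):
    """Squared Mahalanobis distance (2): (X - mu)^T C^{-1} (X - mu)."""
    diff = [xi - mi for xi, mi in zip(x, mu)]
    d = len(diff)
    return sum(diff[i] * c_inv[i][j] * diff[j] for i in range(d) for j in range(d))


c_inv = invert([[2.0, 1.0], [1.0, 2.0]])  # two positively correlated parameters
print(mahalanobis_sq([1.0, 1.0], [0.0, 0.0], c_inv))
print(mahalanobis_sq([1.0, -1.0], [0.0, 0.0], c_inv))
```

Both test points have the same Euclidean distance from \(\mu\), but the first lies along the direction of positive correlation and gets \(D_M^2 = 2/3\), while the second gets \(D_M^2 = 2\).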

Experiments on automatic ERC grouping with the k-medoid and k-median models using the Mahalanobis distance show a slight increase in the clustering accuracy in simple cases (with 2–4 clusters) [25].

3 Data and Preprocessing

In this study, we used the results of tests performed at the testing center on batches of integrated circuits (microchips) [26]. The source data are a set of ERC parameters measured during the mandatory tests. The sample (mixed lot) was originally composed of data on products belonging to different homogeneous batches (in accordance with the manufacturer’s markup). The total number of ERC is 3987 devices: Batch 1 contains 71 devices, Batch 2 contains 116, Batch 3 contains 1867, Batch 4 contains 1250, Batch 5 contains 146, Batch 6 contains 113, and Batch 7 contains 424. The items (devices) in each batch are described by 205 measured input parameters.

Computationally, the k-means problem, whose minimized objective function is the sum of squared distances, is more convenient than the k-median problem, whose objective is the sum of distances: with squared distances, the center point of a cluster (the centroid) coincides with the mean of the coordinates of all objects in the cluster. This property is preserved when passing to the sum of squared Mahalanobis distances.
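A short derivation of why the centroid property carries over, assuming the same fixed, symmetric positive definite \(C\) is used for every object of a cluster \(S\) (the symbols \(F\) and \(S\) are introduced here only for the derivation):

```latex
% Cluster S with centre \mu and a fixed symmetric positive definite C
F(\mu) = \sum_{X \in S} (X - \mu)^{T} C^{-1} (X - \mu)

\nabla_{\mu} F(\mu) = -2\, C^{-1} \sum_{X \in S} (X - \mu) = 0
\;\Longrightarrow\; \sum_{X \in S} (X - \mu) = 0
\;\Longrightarrow\; \mu = \frac{1}{|S|} \sum_{X \in S} X

\nabla_{\mu}^{2} F(\mu) = 2\,|S|\, C^{-1} \succ 0
```

Because the Hessian is positive definite, \(F\) is strictly convex in \(\mu\), so the cluster mean is its unique minimizer, exactly as in the Euclidean case (recovered for \(C = I\)).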

Nevertheless, in many cases the use of the Mahalanobis distance in the automatic ERC grouping problem decreases the accuracy compared with the results achieved with the Euclidean distance, because the advantage of the special data normalization approach is lost (Table 1; the hit percentage is computed as the sum of the algorithm's hits (true positives) in every batch divided by the number of products in the mixed lot).
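The text does not specify how clusters are matched to batches when counting hits. The sketch below assumes a one-to-one matching chosen to maximize the total number of hits, found by brute force over permutations; this is feasible only for a few clusters (7! = 5040 matchings for seven batches). The function name `hit_percentage` is hypothetical.

```python
from collections import Counter
from itertools import permutations


def hit_percentage(true_batches, cluster_labels):
    """Share (in %) of devices whose cluster is matched to their own batch.

    Assumption: clusters and batches are matched one-to-one so that the
    total number of hits (true positives) is maximal.
    """
    batches = sorted(set(true_batches))
    clusters = sorted(set(cluster_labels))
    counts = Counter(zip(true_batches, cluster_labels))  # contingency table
    if len(clusters) >= len(batches):
        matchings = (zip(batches, p) for p in permutations(clusters, len(batches)))
    else:
        matchings = (zip(p, clusters) for p in permutations(batches, len(clusters)))
    best_hits = max(sum(counts[pair] for pair in m) for m in matchings)
    return 100.0 * best_hits / len(true_batches)  # divided by the mixed-lot size


true_batches = [1, 1, 1, 2, 2, 2]
cluster_labels = [0, 0, 1, 1, 1, 1]
print(hit_percentage(true_batches, cluster_labels))
```

Here the best matching pairs batch 1 with cluster 0 and batch 2 with cluster 1, giving 5 hits out of 6 devices.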

The assumption that the statistical dependences between parameter values manifest themselves similarly in different ERC batches has experimental grounds. As can be seen from Fig. 1, the span and variance of the parameters differ significantly between batches. Even where the differences in the span and variance of some parameters among separate batches are insignificant, these values differ significantly from the span and variance of the entire mixed lot (Fig. 2). Thus, it is erroneous to take the variance and covariance coefficients in each homogeneous batch to be equal to those of the whole sample. Experiments with an automatic grouping model based on a mixture of Gaussian distributions, fitted by maximizing the likelihood function with the EM algorithm [27], show relatively high model adequacy only with diagonal covariance matrices (i.e., uncorrelated distributions) that are, moreover, equal for all distributions. In that case, the apparent correlations between the parameters are not taken into account.

The Mahalanobis distance is scale invariant [28]. Due to this property, data normalization does not matter when this distance is applied. At the same time, normalizing the parameters to boundaries determined by their physical nature sets a scale proportional to the permissible fluctuations of these parameters under operating conditions, independent of the span and variance of these values in a particular production batch. To preserve this scale, one could use the Mahalanobis distance with the correlation matrix \(R\) instead of the covariance matrix \(C\) (3):

\[ D_C^{2}(X,\mu) = (X-\mu)^{T} R^{-1} (X-\mu) \tag{3} \]

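Each element \(r_{XY}\) of the matrix \(R\), for parameters \(X\) and \(Y\) with values \(X_i\) and \(Y_i\) measured on \(n\) objects, is calculated as follows (4):

\[ r_{XY} = \frac{\sum_{i=1}^{n} (X_i - \overline{X})(Y_i - \overline{Y})}{(n-1)\, S_X S_Y} \tag{4} \]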

where \(S_X\) and \(S_Y\) are standard deviations of parameters \(X\) and \(Y\), \(\overline{X}\) and \(\overline{Y}\) are their average values.
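The contrast between (2) and (3) can be checked numerically. The sketch below (pure Python; the helper names and toy data are hypothetical) computes \(R\) element-wise as in (4), assuming standard deviations with the divisor \(n-1\), and evaluates both distances between two objects before and after multiplying the first parameter by 1000, as a change of units or normalization would.

```python
import math


def covariance(rows):
    """Sample covariance matrix (divisor n - 1); rows are objects, columns parameters."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[k] for r in rows) / n for k in range(d)]
    return [[sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
             for b in range(d)] for a in range(d)]


def correlation(rows):
    """Correlation matrix R with elements cov(X, Y) / (S_X * S_Y), as in (4)."""
    c = covariance(rows)
    s = [math.sqrt(c[k][k]) for k in range(len(c))]
    return [[c[a][b] / (s[a] * s[b]) for b in range(len(c))] for a in range(len(c))]


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def quad_form(x, mu, m_inv):
    """(X - mu)^T M^{-1} (X - mu): D_M^2 for M = C, D_C^2 for M = R."""
    diff = [xi - mi for xi, mi in zip(x, mu)]
    return sum(diff[i] * m_inv[i][j] * diff[j]
               for i in range(len(diff)) for j in range(len(diff)))


rows = [[1.0, 1.0], [2.0, 3.0], [3.0, 2.0]]  # toy data, already normalized
scaled = [[1000.0 * p, q] for p, q in rows]  # same data, first parameter rescaled

for data in (rows, scaled):
    d_m = quad_form(data[2], data[0], invert(covariance(data)))  # Mahalanobis (2)
    d_c = quad_form(data[2], data[0], invert(correlation(data)))  # distance (3)
    print(f"D_M^2 = {d_m:.6f}, D_C^2 = {d_c:.6f}")
```

\(D_M^2\) equals 4 for both copies of the data, confirming its scale invariance, whereas \(D_C^2\) grows from 4 to about \(5.3 \times 10^6\), so it keeps the scale set by the normalization.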

As the experimental results given below show, this approach has no advantage over the other methods.

4 The K-Means Model with Supervised Mahalanobis Distance Measure

The clustering problem is a classical example of unsupervised learning. However, in some cases of automatic grouping, a sample of known composition is available. This sample can serve as a training (parameterizing) sample. In this case, a single covariance matrix \(C\) (see (2)) is calculated on this training sample and then used on other data. We call the Mahalanobis distance (2) with the covariance matrix \(C\) pre-calculated on a training sample the supervised (or parameterized) Mahalanobis distance.
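The supervised (parameterized) distance amounts to estimating \(C\) once on the training sample of known composition and then freezing it. A minimal pure-Python sketch, assuming \(C\) is estimated with the divisor \(n-1\) and is invertible; `supervised_mahalanobis` and the toy training sample are hypothetical.

```python
def covariance(rows):
    """Sample covariance matrix (divisor n - 1); rows are objects, columns parameters."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[k] for r in rows) / n for k in range(d)]
    return [[sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
             for b in range(d)] for a in range(d)]


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def supervised_mahalanobis(training_rows):
    """Return D_M^2(X, mu) whose C is estimated once on a training sample."""
    c_inv = invert(covariance(training_rows))  # computed once, reused on other data

    def distance_sq(x, mu):
        diff = [xi - mi for xi, mi in zip(x, mu)]
        return sum(diff[i] * c_inv[i][j] * diff[j]
                   for i in range(len(diff)) for j in range(len(diff)))

    return distance_sq


training = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]]  # hypothetical training sample
dist_sq = supervised_mahalanobis(training)
print(dist_sq([5.0, 1.0], [3.0, 1.0]))  # working-sample object vs. a cluster center
```

The training sample here gives \(C = \mathrm{diag}(4/3, 4/3)\), so an offset of 2 along the first parameter yields \(D_M^2 = 3\), regardless of which data the distance is later applied to.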

If there is no training sample, well-known cluster analysis models can be used to isolate presumably homogeneous batches with some accuracy. With this approach, a presumably heterogeneous lot can be divided into presumably homogeneous batches, whose number is determined by the silhouette criterion [29,30,31]. In doing so, a mixed lot may be divided into more batches than it actually contains: smaller clusters are more likely to contain data of the same class, i.e., the probability of falsely assigning objects of different classes to one cluster is reduced. The proportion of objects of the same class falsely assigned to different clusters is less important for estimating the statistical characteristics of homogeneous groups of objects.
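A sketch of the mean silhouette width used to choose the number of presumably homogeneous batches: for each object, \(a\) is its mean distance to the other members of its cluster, \(b\) is the smallest mean distance to another cluster, and \(s = (b-a)/\max(a,b)\). One would compute it for partitions with several candidate numbers of clusters and keep the largest. The function name is hypothetical; singleton clusters score 0, following Rousseeuw's convention, and the distance function is a parameter so that a Mahalanobis distance can be plugged in.

```python
import math


def mean_silhouette(points, labels, dist):
    """Average silhouette width s(i) = (b(i) - a(i)) / max(a(i), b(i)) over all objects."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    scores = []
    for i, p in enumerate(points):
        # Sum and count of distances from p to the other objects of each cluster.
        sums = {c: 0.0 for c in clusters}
        sizes = {c: 0 for c in clusters}
        for j, q in enumerate(points):
            if j != i:
                sums[labels[j]] += dist(p, q)
                sizes[labels[j]] += 1
        own = labels[i]
        if sizes[own] == 0:  # singleton cluster: s(i) = 0 by convention
            scores.append(0.0)
            continue
        a = sums[own] / sizes[own]
        b = min(sums[c] / sizes[c] for c in clusters if c != own)
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


pts = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
print(mean_silhouette(pts, [0, 0, 1, 1], math.dist))  # natural grouping
print(mean_silhouette(pts, [0, 1, 0, 1], math.dist))  # wrong grouping
```

The natural grouping scores about 0.93, while the wrong grouping scores below zero.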

In the next experiment, the training sample contained 6 batches: Batch 1 (71 devices), Batch 2 (116 devices), Batch 4 (1250 devices), Batch 5 (146 devices), Batch 6 (113 devices), and Batch 7 (424 devices). Using the covariance matrix \(C\), datasets containing every combination of 2 batches were clustered with various distance measures. The results were compared with those of the traditional k-means clustering method with the squared Mahalanobis distance (the unsupervised squared Mahalanobis distance) and with the Euclidean and rectangular distances. In Tables 2, 3, 4, the proportion of hits is computed as the sum of the algorithm's hits in every batch divided by the number of products in the batch. For each model, we performed 5 experiments; the average clustering results are shown in Tables 2, 3, 4.

The experiment showed that the results of solving the k-means problem with the supervised Mahalanobis distance measure are better than those of the model with the unsupervised Mahalanobis distance, but still worse than those obtained with the Euclidean and rectangular distances.

5 The K-Means Model with Supervised Mahalanobis Distance Measure Based on Averaged Estimation of the Covariance Matrix

Since the covariance matrices of all batches have the same dimension, we can calculate an averaged estimate of the covariance matrix over all homogeneous batches of products in the training (parameterizing) sample (5):

\[ C = \frac{1}{n} \sum_{j} n_j C_j \tag{5} \]

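where \(n_j\) is the number of objects (components) in the \(j\)th production batch, \(n\) is the total sample size, and \(C_j\) are the covariance matrices calculated on the separate production batches; each element of \(C_j\) can be calculated by (6):

\[ c^{(j)}_{XY} = \frac{1}{n_j - 1} \sum_{i=1}^{n_j} (X_i - \overline{X}_j)(Y_i - \overline{Y}_j) \tag{6} \]

where the sum runs over the objects of the \(j\)th batch, \(X_i\) and \(Y_i\) are the values of parameters \(X\) and \(Y\) for the \(i\)th object, and \(\overline{X}_j\), \(\overline{Y}_j\) are their means within the batch.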
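Formulas (5) and (6) can be sketched in pure Python. The divisor \(n_j - 1\) is an assumption (the unbiased sample estimate), and the toy batches are hypothetical. The example contrasts the averaged estimate with the covariance of the pooled mixed sample: the shift between batches inflates the pooled variance of the first parameter, while the averaged estimate keeps only the within-batch spread. Only the averaged matrix would ever need to be inverted, which matters because a batch with fewer devices than parameters (e.g., 71 devices and 205 parameters) yields a singular \(C_j\).

```python
def covariance(rows):
    """Per-batch covariance as in (6): divisor n_j - 1, rows are objects."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[k] for r in rows) / n for k in range(d)]
    return [[sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
             for b in range(d)] for a in range(d)]


def averaged_covariance(batches):
    """Weighted average (5): C = (1/n) * sum_j n_j * C_j over the training batches."""
    n = sum(len(rows) for rows in batches)
    d = len(batches[0][0])
    avg = [[0.0] * d for _ in range(d)]
    for rows in batches:
        c_j = covariance(rows)  # covariance of one homogeneous batch
        weight = len(rows) / n  # n_j / n
        for a in range(d):
            for b in range(d):
                avg[a][b] += weight * c_j[a][b]
    return avg


batch_1 = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]  # hypothetical homogeneous batch
batch_2 = [[10.0, 0.0], [11.0, 1.0], [12.0, 0.0]]  # same spread, shifted by 10
print(averaged_covariance([batch_1, batch_2]))
print(covariance(batch_1 + batch_2))  # pooled sample: between-batch shift inflates C
```

The averaged estimate gives a variance of 1.0 for the first parameter, whereas the pooled sample gives 30.8.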

We propose a k-means algorithm that uses the Mahalanobis distance measure with the averaged estimate of the covariance matrix. Convergence of the k-means algorithm with a Mahalanobis distance is discussed in [32]. The optimal value of \(k\) was found by the silhouette criterion [30].
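Putting the pieces together, a minimal sketch of the scheme, assuming the steps described in this section: the averaged covariance matrix (5) is estimated on labelled training batches, inverted once, and then used unchanged in Lloyd-style k-means iterations on the working sample, with cluster centers updated as means. Because \(C\) stays fixed, the procedure is ordinary k-means in linearly transformed coordinates, so no iteration increases the objective. Function names, random initialization, and the toy data are hypothetical; choosing \(k\) by the silhouette criterion is not shown.

```python
import random


def covariance(rows):
    n, d = len(rows), len(rows[0])
    means = [sum(r[k] for r in rows) / n for k in range(d)]
    return [[sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
             for b in range(d)] for a in range(d)]


def averaged_covariance(batches):
    """Weighted average (5) of per-batch covariance matrices."""
    n = sum(len(rows) for rows in batches)
    d = len(batches[0][0])
    avg = [[0.0] * d for _ in range(d)]
    for rows in batches:
        c_j, w = covariance(rows), len(rows) / n
        for a in range(d):
            for b in range(d):
                avg[a][b] += w * c_j[a][b]
    return avg


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mahalanobis_sq(x, mu, c_inv):
    diff = [xi - mi for xi, mi in zip(x, mu)]
    return sum(diff[i] * c_inv[i][j] * diff[j] for i in range(len(diff)) for j in range(len(diff)))


def kmeans_averaged_mahalanobis(points, k, training_batches, max_iter=100, seed=0):
    """k-means with D_M^2 whose C is the averaged estimate from training batches."""
    c_inv = invert(averaged_covariance(training_batches))  # fixed for the whole run
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda j: mahalanobis_sq(p, centers[j], c_inv))
                      for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # the mean minimizes the sum of squared Mahalanobis distances
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    objective = sum(mahalanobis_sq(p, centers[lab], c_inv) for p, lab in zip(points, labels))
    return centers, labels, objective


training_batches = [[[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]],
                    [[10.0, 0.0], [11.0, 1.0], [12.0, 0.0]]]  # hypothetical labelled batches
working = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]  # hypothetical working sample
centers, labels, objective = kmeans_averaged_mahalanobis(working, 2, training_batches)
print(labels, objective)
```

The averaged estimate here is approximately \(\mathrm{diag}(1, 1/3)\), so deviations in the second parameter weigh three times as much; the working sample still splits into its two natural pairs with an objective of about 3.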

6 Computational Experiments

A series of experiments was carried out on the data set described above. This mixed lot is convenient because its composition is known in advance, which allows us to evaluate the accuracy of the applied clustering models. Moreover, this data set is difficult for well-known grouping models: some homogeneous batches in it are practically indistinguishable from each other, and the accuracy of known clustering models on this sample is low [12, 33].

As a measure of clustering accuracy, we use the Rand index (RI) [34], which is the proportion of pairs of objects on which the reference and resulting partitions agree, i.e., pairs that both partitions place in the same cluster or both place in different clusters.
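A direct pure-Python sketch of the Rand index (the function name is hypothetical). It enumerates all \(N(N-1)/2\) pairs of objects, which for the 3987 devices here is about 7.9 million pairs, so a formulation based on the contingency table is preferable at that scale.

```python
from itertools import combinations


def rand_index(reference, result):
    """Share of object pairs on which two partitions agree (same/same or different/different)."""
    pairs = list(combinations(range(len(reference)), 2))
    agree = sum(
        (reference[i] == reference[j]) == (result[i] == result[j])
        for i, j in pairs
    )
    return agree / len(pairs)


print(rand_index([1, 1, 2, 2], [0, 0, 1, 1]))  # same partition, different label names
print(rand_index([0, 0, 1, 1], [0, 1, 0, 1]))  # 2 of 6 pairs agree
```

Because only pair relations are compared, the index does not depend on how clusters are numbered.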

To train the model with the averaged Mahalanobis distance measure, new combinations of devices belonging to different homogeneous batches were compiled from the components of the mixed lot. The new combinations consist of 2–7 homogeneous batches. The training sample includes all data from each batch.

Experiments were conducted with 5 different clustering models:

Model DM1: K-means with the Mahalanobis distance measure; the covariance matrix is estimated on the entire training sample. The objective function is the sum of the squared distances.

Model DC: K-means with a distance measure similar to the Mahalanobis distance, but using the correlation matrix instead of the covariance matrix (3). The objective function is the sum of the squared distances.

Model DM2: K-means with the Mahalanobis distance measure based on the averaged estimate of the covariance matrix (5). The objective function is the sum of the squared distances.

Model DR: K-means with the Manhattan distance measure. The objective function is the sum of the distances.

Model DE: K-means with the Euclidean distance measure. The objective function is the sum of the squared distances.

This paper presents the results of three groups of experiments. In each group, for each working sample, the k-means algorithm was run 30 times with each of the five clustering models studied. In these groups of experiments, the highest RI value was achieved by the k-means algorithm with the Mahalanobis distance measure based on the averaged estimate of the covariance matrix.

First Group. The training set coincides with the working sample that was clustered. Five series of experiments were carried out; in each series, the sample is composed of products belonging to 2–7 homogeneous batches. Table 5 presents the maximum, minimum, mean and standard deviation of the Rand index and of the objective function for the 7-batch sample. For the objective function, the coefficient of variation (V) and the span factor (R, where \(R=Max-Min\)) were also calculated.
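The statistics reported for each series of runs can be sketched with the standard library. The text does not state whether the sample or the population standard deviation is used, or whether \(V\) is a ratio or a percentage; this sketch assumes the sample standard deviation and a ratio. The function name and the input values are hypothetical.

```python
import statistics


def run_summary(values):
    """Max, min, mean, standard deviation, coefficient of variation V and span R."""
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample standard deviation (assumption)
    return {
        "max": max(values),
        "min": min(values),
        "mean": mean,
        "std": std,
        "V": std / mean,  # coefficient of variation as a ratio (assumption)
        "R": max(values) - min(values),  # span factor R = Max - Min
    }


summary = run_summary([10.0, 12.0, 14.0])  # hypothetical objective values from 3 runs
print(summary)
```

For these values, the mean is 12, the standard deviation is 2, \(V = 1/6\) and \(R = 4\).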

Second Group. The training and working samples do not match. In practice, the test center can use retrospective data from the supply and testing of products of the same type as a training sample. In this series of experiments, the training set contains no more than seven homogeneous batches. The working sample is a new combination of products belonging to different homogeneous batches. Table 6 presents the results for a 5-batch working set and a 7-batch training set.

Third Group. The training and working samples also do not match, but the results of automatic product grouping (k-means in multistart mode with the Euclidean distance measure) were used as the training sample. In each series of experiments, the training set consists of 10 batches, which are the result of applying the k-means algorithm to a training set containing the entire sample. The working sample is a new combination of products belonging to different homogeneous batches. Table 7 shows the results for a 7-batch working set.

In most cases, the coefficient of variation of the objective function values is highest for the DE model, which uses the Euclidean distance measure. The span factor of the objective function, by contrast, is highest for the DM2 model, which uses the Mahalanobis distance measure with the averaged estimate of the covariance matrix. Therefore, obtaining consistently good values of the objective function requires multiple runs of the k-means algorithm or other algorithms based on the k-means model, such as j-means [35], greedy heuristic algorithms [36], or others.

According to the Rand index, the DM2 model shows the best accuracy among the presented models in almost all series of experiments (Fig. 3(a)–3(c)). In all cases, the DM2 model surpasses the traditional DE model with the Euclidean distance measure (Fig. 3(b), 3(c)).

Experiments showed no correlation between the values of the objective function and the Rand index in the series of experiments with model DM1 for any combination of training and working samples (Fig. 4(a)). For the other models, as the sizes of the training and working samples (\(n_t\) and \(n_w\), respectively) increase, the clustering accuracy stabilizes (Fig. 4(b)). For the DM2 model, there is an inverse correlation between the achieved value of the objective function and the clustering accuracy RI on a small sample (Fig. 5(a)).

It is also noteworthy that, with the Euclidean distance measure, the best (smallest) values of the objective function do not correspond to the best (largest) accuracy values (Fig. 5(b)). This shows that the model with the Euclidean distance measure is not entirely adequate: the most compact clusters do not exactly correspond to homogeneous batches.

7 Conclusion

The proposed clustering model and algorithm, which use the k-means model with the Mahalanobis distance and an averaged (weighted average) estimate of the covariance matrix, were compared with the k-means model with the Euclidean and rectangular distances in solving the problem of automatic grouping of industrial products by homogeneous production batches.

Given its higher average Rand index, the proposed optimization model and algorithm for clustering electronic radio components by homogeneous production batches has an advantage over the models with the traditionally used Euclidean and rectangular (Manhattan) metrics.

References

Orlov, V.I., Kazakovtsev, L.A., Masich, I.S., Stashkov, D.V.: Algorithmic support of decision-making on selection of microelectronics products for space industry. Siberian State Aerospace University, Krasnoyarsk (2017)

Kazakovtsev, L.A., Antamoshkin, A.N.: Greedy heuristic method for location problems. Vestnik SibGAU 16(2), 317–325 (2015)

Rozhnov, I., Orlov, V., Kazakovtsev, L.: Ensembles of clustering algorithms for problem of detection of homogeneous production batches of semiconductor devices. In: 2018 School-Seminar on Optimization Problems and their Applications, OPTA-SCL 2018, vol. 2098, pp. 338–348 (2018)

Kazakovtsev, L.A., Antamoshkin, A.N., Masich, I.S.: Fast deterministic algorithm for EEE components classification. IOP Conf. Ser. Mater. Sci. Eng. 94, 012015. https://doi.org/10.1088/1757-899X/94/1/012015

Li, Y., Wu, H.: A clustering method based on K-means algorithm. Phys. Procedia 25, 1104–1109 (2012). https://doi.org/10.1016/j.phpro.2012.03.206

Ansari, S.A., et al.: Using K-means clustering to cluster provinces in Indonesia. J. Phys. Conf. Ser. 1028, 521–526 (2018). 012006

Hossain, Md., Akhtar, Md.N., Ahmad, R.B., Rahman, M.: A dynamic K-means clustering for data mining. Indones. J. Electr. Eng. Comput. Sci. 13(521), 521–526 (2019)

Perez-Ortega, J., Almanza-Ortega, N.N., Romero, D.: Balancing effort and benefit of K-means clustering algorithms in Big Data realms. PLoS ONE 13(9), e0201874 (2018). https://doi.org/10.1371/journal.pone.0201874

Patel, V.R., Mehta, R.G.: Modified k-Means clustering algorithm. In: Das, V.V., Thankachan, N. (eds.) CIIT 2011. CCIS, vol. 250, pp. 307–312. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-25734-6_46

Na, S., Xumin, L., Yong, G.: Research on k-means clustering algorithm: an improved k-means clustering algorithm. In: 2010 Third International Symposium on Intelligent Information Technology and Security Informatics, Jinggangshan, pp. 63–67 (2010)

MacQueen, J.: Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, vol. 1, pp. 281–297 (1967)

Shkaberina, G.S., Orlov, V.I., Tovbis, E.M., Kazakovtsev, L.A.: Identification of the optimal set of informative features for the problem of separating of mixed production batch of semiconductor devices for the space industry. In: Bykadorov, I., Strusevich, V., Tchemisova, T. (eds.) MOTOR 2019. CCIS, vol. 1090, pp. 408–421. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-33394-2_32

Jain, A.K., Dubes, R.C.: Algorithms for Clustering Data. Prentice-Hall, Englewood Cliffs (1981)

Bradley, P.S., Mangasarian, O.L., Street, W.N.: Clustering via concave minimization. In: Advances in Neural Information Processing Systems, vol. 9, pp. 368–374 (1997)

Har-Peled, S., Mazumdar, S.: Coresets for k-Means and k-Median clustering and their applications. In: Proceedings of the 36th Annual ACM Symposium on Theory of Computing, pp. 291–300 (2003)

Maranzana, F.E.: On the location of supply points to minimize transportation costs. IBM Syst. J. 2(2), 129–135 (1963). https://doi.org/10.1147/sj.22.0129

Kaufman, L., Rousseeuw, P.J.: Clustering by means of Medoids. In: Dodge, Y. (ed.) Statistical Data Analysis Based on the L1-Norm and Related Methods, pp. 405–416. North-Holland, Amsterdam (1987)

Park, H.-S., Jun, C.-H.: A simple and fast algorithm for K-medoids clustering. Expert Syst. Appl. 36(2), 3336–3341 (2009). https://doi.org/10.1016/j.eswa.2008.01.039

Davies, D.L., Bouldin, D.W.: A cluster Separation measure. IEEE Trans. Pattern Anal. Mach. Intell. PAMI–1(2), 224–227 (1979)

Deza, M.M., Deza, E.: Metrics on normed structures. In: Encyclopedia of Distances, pp. 89–99. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-30958-8_5

De Maesschalck, R., Jouan-Rimbaud, D., Massart, D.L.: The Mahalanobis distance. Chem. Intell. Lab. Syst. 50(1), 1–18 (2000). https://doi.org/10.1016/S0169-7439(99)00047-7

McLachlan, G.J.: Mahalanobis distance. Resonance 4(20), 1–26 (1999). https://doi.org/10.1007/BF02834632

Xing, E.P., Jordan, M.I., Russell, S.J., Ng, A.Y.: Distance metric learning with application to clustering with side-information. In: Advances in Neural Information Processing Systems, vol. 15, pp. 521–528 (2003)

Arathi, M., Govardhan, A.: Performance of Mahalanobis distance in time series classification using shapelets. Int. J. Mach. Learn. Comput. 4(4), 339–345 (2014)

Orlov, V.I., Shkaberina, G.S., Rozhnov, I.P., Stupina, A.A., Kazakovtsev, L.A.: Application of clustering algorithms with special distance measures for the problem of automatic grouping of radio products. Sistemy upravleniia I informacionnye tekhnologii 3(77), 42–46 (2019)

Orlov, V.I., Fedosov, V.V.: ERC clustering dataset (2016). http://levk.info/data1526.zip

Kazakovtsev, L.A., Orlov, V.I., Stashkov, D.V., Antamoshkin, A.N., Masich, I.S.: Improved model for detection of homogeneous production batches of electronic components. IOP Conf. Ser. Mater. Sci. Eng. 255 (2017). https://doi.org/10.1088/1757-899x/255/1/012004

Shumskaia, A.O.: Evaluation of the effectiveness of Euclidean distance metrics and Mahalanobis distance metrics in identifying the origin of text. Doklady Tomskogo gosudarstvennogo universiteta system upravleniia I radioelektroniki 3(29), 141–145 (2013)

Kaufman, L., Rousseeuw, P.: Finding Groups in Data: An Introduction to Cluster Analysis. Wiley, New York (1990)

Rousseeuw, P.: Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 20, 53–65 (1987)

Golovanov, S.M., Orlov, V.I., Kazakovtsev, L.A.: Recursive clustering algorithm based on silhouette criterion maximization for sorting semiconductor devices by homogeneous batches. IOP Conf. Ser. Mater. Sci. Eng. 537 (2019). 022035

Lapidot, I.: Convergence problems of Mahalanobis distance-based k-means clustering. In: IEEE International Conference on the Science of Electrical Engineering in Israel (ICSEE) (2018). https://doi.org/10.1109/icsee.2018.8646138

Shkaberina, G.Sh., Orlov, V.I., Tovbis, E.M., Sugak, E.V., Kazakovtsev, L.A.: Estimation of the impact of semiconductor device parameters on the accuracy of separating a mixed production batch. IOP Conf. Ser. Mater. Sci. Eng. 537 (2019). https://doi.org/10.1088/1757-899X/537/3/032088. 032088

Rand, W.M.: Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66(336), 846–850 (1971). https://doi.org/10.1080/01621459.1971.10482356

Hansen, P., Mladenovic, N.: J-means: a new local search heuristic for minimum sum of squares clustering. Pattern Recogn. 34(2), 405–413 (2001). https://doi.org/10.1016/S0031-3203(99)00216-2

Kazakovtsev, L.A., Antamoshkin, A.N.: Genetic algorithm with fast greedy heuristic for clustering and location problems. Informatica 38(3), 229–240 (2014)

Acknowledgement

Results were obtained within the framework of the State Task FEFE-2020-0013 of the Ministry of Science and Higher Education of the Russian Federation.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Shkaberina, G.S., Orlov, V.I., Tovbis, E.M., Kazakovtsev, L.A. (2020). On the Optimization Models for Automatic Grouping of Industrial Products by Homogeneous Production Batches. In: Kochetov, Y., Bykadorov, I., Gruzdeva, T. (eds) Mathematical Optimization Theory and Operations Research. MOTOR 2020. Communications in Computer and Information Science, vol 1275. Springer, Cham. https://doi.org/10.1007/978-3-030-58657-7_33

DOI: https://doi.org/10.1007/978-3-030-58657-7_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58656-0

Online ISBN: 978-3-030-58657-7
