Abstract

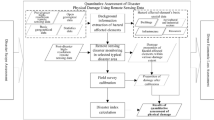

Ever-growing earth observation data enable rapid recognition of damaged areas caused by large-scale disasters. Automation of data processing is the key to obtaining adequate knowledge quickly from big earth observation data. In this chapter, we provide an overview of big earth observation data processing for disaster damage mapping. First, we review current earth observation systems used for disaster damage mapping. Next, we summarize recent studies of global land-cover mapping, which provides essential information for disaster risk management. After that, we showcase state-of-the-art damage recognition techniques for three different types of disaster, namely flood mapping, landslide mapping, and building damage mapping. Finally, we summarize the remaining challenges and future directions.


1 Monitoring Disasters from Space

Earth observation has been receiving considerable attention in disaster management in recent years, and the imaging capability of national and international earth observation missions has been improving steadily. In addition, driven by technological innovation in New Space, the number of satellites has been increasing dramatically. Satellite constellations enable high-frequency data acquisition, which is often required for disaster monitoring and rapid response.

In the last two decades, enormous efforts have been made in international cooperative projects and services for sharing and analyzing satellite imagery in emergency response. Some representative ones are listed below.

- International Charter ‘Space and Major Disasters’: The International Charter ‘Space and Major Disasters’ is an international collaboration among space agencies and companies (e.g., Maxar and Planet Labs) that supports disaster response activities by providing information and products derived from satellite data. The charter was initiated by the European Space Agency (ESA) and the French space agency (CNES) and came into operation in 2000. As of April 1, 2019, it had been activated 601 times for 125 countries, supported by 17 charter members with 34 satellites.

- UNOSAT: UNOSAT is a technology-intensive programme of the United Nations Institute for Training and Research (UNITAR) that provides satellite imagery analysis and solutions to the UN system and its partners for decision making in critical areas, including humanitarian response to natural disasters. UNOSAT was established in 2001; its Humanitarian Rapid Mapping service, launched in 2003, contributed to 28 humanitarian responses to natural disasters in 22 countries in 2018.

- Sentinel Asia: The Sentinel Asia initiative is a voluntary international collaboration among space agencies, disaster management agencies, and international agencies that supports disaster management activities in the Asia-Pacific region by applying remote sensing and Web-GIS technologies. Sentinel Asia was initiated by the Asia-Pacific Regional Space Agency Forum (APRSAF) in 2005, and its members comprise 93 organizations from 28 countries/regions and 16 international organizations. In 2018, there were 25 emergency observation requests, and disaster response activities were supported by 8 data provider nodes and 48 data analysis nodes.

- Copernicus Emergency Management Service (Copernicus EMS): Copernicus EMS analyzes satellite imagery to provide geospatial information for emergency response to disasters as well as for prevention, preparedness, and recovery activities. Copernicus EMS is coordinated by the European Commission as one of the key services of Copernicus, the European Union’s Earth Observation programme. The two mapping services of Copernicus EMS (i.e., Rapid Mapping and Risk and Recovery Mapping) have been in operation since April 2012, and 349 mapping activations had been conducted as of April 3, 2019.

Owing to advances in hardware, big earth observation data are now available from various types of satellites and imaging sensors. The large volume and wide variety of earth observation data promote new applications but also raise challenges in understanding satellite imagery for disaster response. In this book chapter, we summarize recent advances and challenges in the processing of big earth observation data for disaster management.

2 Earth Observation Satellites

Over the last decades, the number of earth observation satellites has steadily increased, providing an unprecedented amount of data. This includes optical (multi- and hyperspectral) images (e.g., Fig. 4.1b) as well as synthetic aperture radar (SAR) images (e.g., Fig. 4.1e, f). For disaster response, the sheer number of satellites ensures quick post-event acquisitions and, owing to the regular acquisition patterns of many satellite missions, often the availability of a recent pre-event image. In the following paragraphs, we summarize current and future earth observation satellite missions and how they benefit the mapping of disaster damage and extent.

2.1 Optical Satellite Missions

Table 4.1 lists optical satellite missions. An enormous amount of data has become available in the last decade; for instance, the Sentinel-2 satellites alone acquire over one petabyte per year. Data policies differ depending on resolution: datasets from moderate-resolution satellites (e.g., Landsat-8 and Sentinel-2) are freely available, whereas those from very high-resolution satellites (e.g., Pleiades and WorldView-3) are commercial. For emergency response, even some commercial satellite images are openly distributed through special data programs (e.g., the Open Data Program for WorldView images and the Disaster Data Program for PlanetScope).

Optical remote sensing records the solar radiation reflected from the surface in the visible, near-infrared, and short-wave infrared ranges, as illustrated in Fig. 4.1a. Reflected spectral signatures allow us to discriminate between different types of land cover. Because of its similarity to human vision, optical imagery is straightforward to analyze for damage recognition. A pair of pre- and post-disaster optical images is commonly used to detect pixel-wise or object-wise changes and identify the damage levels of affected areas. In particular, if landslides or floods cause a clear change in the normalized difference vegetation index (NDVI) (e.g., Fig. 4.1c) or the normalized difference water index (NDWI), affected areas can be detected easily and accurately.
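The NDVI and NDWI change test described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a method from the cited studies: it assumes co-registered pre- and post-event reflectance arrays, uses the green/NIR (McFeeters) form of NDWI, and the thresholds `min_drop` and `water_thresh` are illustrative values that would need calibration for a real scene.

```python
import numpy as np


def normalized_difference(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """(a - b) / (a + b), returning 0 where the denominator is zero."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    den = a + b
    return np.divide(a - b, den, out=np.zeros_like(den), where=den != 0)


def ndvi(nir, red):
    # High over healthy vegetation, low over bare soil or debris.
    return normalized_difference(nir, red)


def ndwi(green, nir):
    # Green/NIR (McFeeters) form: positive over open water.
    return normalized_difference(green, nir)


def vegetation_loss(nir_pre, red_pre, nir_post, red_post, min_drop=0.3):
    """Pixels whose NDVI fell by more than `min_drop` (assumed threshold), e.g. fresh landslide scars."""
    return ndvi(nir_post, red_post) - ndvi(nir_pre, red_pre) < -min_drop


def new_water(green_pre, nir_pre, green_post, nir_post, water_thresh=0.0):
    """Pixels that are water after the event but were not water before, e.g. flooded land."""
    was_water = ndwi(green_pre, nir_pre) > water_thresh
    is_water = ndwi(green_post, nir_post) > water_thresh
    return is_water & ~was_water
```

For example, a pixel whose reflectance changes from NIR 0.5 / red 0.1 (NDVI ≈ 0.67) to NIR 0.25 / red 0.2 (NDVI ≈ 0.11) is flagged by `vegetation_loss`, while unchanged pixels are not.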

Optical satellite imaging systems have evolved in terms of spatial, temporal, and spectral resolution. Spatial and temporal resolutions are critical for disaster damage mapping. Temporal resolution has been improved by forming satellite constellations. For instance, Sentinel-2 achieves a five-day revisit cycle with a constellation of twin satellites (i.e., Sentinel-2A and 2B). An extreme example is PlanetScope: daily acquisition of the entire globe is possible with a constellation of 135+ small satellites (i.e., Doves). This evolution in temporal resolution allows disaster damage mapping within a day under good weather conditions.
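The gain from a constellation can be illustrated with idealized arithmetic. The sketch below assumes identical satellites evenly phased in the same orbit and ignores latitude, swath overlap, and off-nadir pointing; it reproduces the Sentinel-2 case (a 10-day repeat per satellite at the equator, halved by the second satellite) but does not model PlanetScope, whose daily coverage relies on many satellites in several orbits. Both function names are hypothetical.

```python
import math


def constellation_revisit_days(single_repeat_days: float, n_satellites: int) -> float:
    """Idealized revisit of n identical satellites evenly phased in one orbit (assumption)."""
    if n_satellites < 1:
        raise ValueError("n_satellites must be >= 1")
    return single_repeat_days / n_satellites


def satellites_needed(single_repeat_days: float, target_revisit_days: float) -> int:
    """Smallest number of satellites for which the idealized revisit meets the target."""
    return math.ceil(single_repeat_days / target_revisit_days)
```

With a 10-day single-satellite repeat, two satellites give a 5-day revisit, and about ten would be needed for daily coverage under the same idealization.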

Spatial resolution is another key factor for the accuracy of disaster damage mapping. Medium-resolution satellites such as Landsat-8 and Sentinel-2 are sufficient for mapping large-scale surface changes due to floods, landslides, wildfires, and volcanic eruptions. High-resolution satellite data are necessary particularly when analyzing damage in urban areas: visual interpretation in emergency response relies on sub-meter satellite imagery such as Pleiades and WorldView to identify building damage.

The major drawback of optical satellites is that they cannot acquire usable images when affected areas are covered by clouds. Because of this limitation, in many real cases the only images available before and within a few days after a disaster come from different sensors. Integration and fusion of multisensor data sources are therefore crucial for delivering map products of disaster damage.

2.2 SAR Satellite Missions

Unlike optical sensors, SAR sensors are undisturbed by clouds; their reliable image acquisition schedule makes them invaluable for responding to disasters. Table 4.2 lists current and future SAR missions, together with their highest resolution modes, the corresponding swath widths, their frequency bands, and launch dates. All of these satellites also have lower resolution acquisition modes with increased spatial coverage. As can be seen from Table 4.2, even moderately large areas can easily result in multiple GB of data if several sensors are used and acquisitions before and after an event are collected.
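A back-of-the-envelope estimate shows how quickly the data volume grows. This sketch assumes square pixels at a single spacing and 8 bytes per complex sample (two 32-bit floats); real SAR products differ in range and azimuth spacing, slant- versus ground-range geometry, and sample format (some store 16-bit integer pairs), so treat the numbers as orders of magnitude only. The function name is hypothetical.

```python
def sar_volume_gb(area_km2: float, pixel_spacing_m: float,
                  bytes_per_pixel: int = 8, n_acquisitions: int = 1) -> float:
    """Rough raw data volume in GB (1e9 bytes) for square pixels covering `area_km2`.

    bytes_per_pixel=8 assumes complex float32 samples (an assumption, not a product spec).
    """
    n_pixels = area_km2 * 1e6 / pixel_spacing_m ** 2
    return n_pixels * bytes_per_pixel * n_acquisitions / 1e9
```

For a 50 km × 50 km area at 3 m spacing, two sensors each with one pre- and one post-event acquisition (four acquisitions) already amount to roughly 8.9 GB.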

As a quite recent development, several startup companies (ICEYE, Capella and Synspective) have announced plans to create constellations of dozens of comparatively small and cheap satellites that enable frequent, short-notice acquisitions. Such constellations would produce a wealth of data, compounding the need for both big data systems and algorithms.

The following publications provide more detailed information on the respective SAR satellites and list additional references: Morena et al. (2004) for RADARSAT-2, Lee (2010) for KOMPSAT-5, and Werninghaus and Buckreuss (2010) for TerraSAR-X, TanDEM-X and the essentially identical PAZ satellite (Suri et al. 2015). Torres et al. (2012) describe ESA’s Sentinel-1 satellites, Bird et al. (2013) NovaSAR-S, Caltagirone et al. (2014) COSMO-SkyMed, Rosenqvist et al. (2014) SAOCOM, and Sun et al. (2017) Gaofen-3. Future SAR missions are covered in Rosen et al. (2017) for NISAR and Motohka et al. (2017) for ALOS-4, and De Lisle et al. (2018) introduce the RADARSAT Constellation Mission. Technical details and developments regarding small SAR satellite constellations are given in Farquharson et al. (2018) and Obata et al. (2019).

Many satellites have acquisition modes whose resolution suffices to detect changes and damage for individual buildings. In any case, large-scale destruction caused by earthquakes (Karimzadeh et al. 2018), wildfires (Tanase et al. 2010; Verhegghen et al. 2016), landslides, or flooding (Martinis et al. 2018) can be observed by all sensors. We cover these in greater detail in Sects. 4.4.1, 4.4.2 and 4.4.3. Here we introduce the reader to SAR image formation and how its characteristics apply to disaster damage mapping. For a more thorough introduction, we advise the interested reader to consult Moreira et al. (2013).

SAR sensors emit electromagnetic waves and measure the reflected energy (see Fig. 4.1d), called backscatter, which depends on the geometric and geophysical properties of the target. This makes SAR sensors sensitive not only to different kinds of land cover but also to physical parameters such as soil moisture. In addition, depending on the SAR’s operating frequency, part of the electromagnetic wave penetrates the surface and images layers below the uppermost land cover.

Just like visible light, microwaves are polarized, and the polarimetric composition of reflected waves depends on the imaged targets’ geometric and physical properties. These polarimetric signatures permit further analysis and classification of the imaged area.

Inside one SAR resolution cell, i.e., pixel, numerous elemental scatterers reflect the impinging electromagnetic wave. The superposition of all these reflections makes up the signal received at the SAR sensor. Between two SAR acquisitions, the degree to which these elemental scatterers have remained unchanged can be estimated as the so-called coherence, which provides a direct measure of change: coherence close to one indicates a stable arrangement of scatterers, whereas low coherence indicates change.
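Coherence is usually estimated from two co-registered complex (single-look complex) images over a small moving window with the sample estimator |Σ s1·s2*| / √(Σ|s1|² · Σ|s2|²). The sketch below implements that estimator in NumPy (it needs NumPy 1.20 or later for `sliding_window_view`); the 5 × 5 window is an illustrative assumption, and real processing chains remove flat-earth and topographic phase before this step, which is omitted here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window_sum(x: np.ndarray, win: int) -> np.ndarray:
    """Sum over every win x win window (the 'valid' region only)."""
    return sliding_window_view(x, (win, win)).sum(axis=(-2, -1))


def coherence(s1: np.ndarray, s2: np.ndarray, win: int = 5) -> np.ndarray:
    """Sample coherence magnitude of two co-registered complex SAR images.

    Output shape is (rows - win + 1, cols - win + 1); values lie in [0, 1] up to rounding.
    Assumes any deterministic (flat-earth/topographic) phase has already been removed.
    """
    cross = _window_sum(s1 * np.conj(s2), win)
    power1 = _window_sum(np.abs(s1) ** 2, win)
    power2 = _window_sum(np.abs(s2) ** 2, win)
    den = np.sqrt(power1 * power2)
    return np.divide(np.abs(cross), den, out=np.zeros_like(den), where=den > 0)
```

A constant phase offset between the two images leaves the coherence at one, whereas statistically independent images yield low values, which is why a coherence drop between pre- and post-event pairs is read as change.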

All of these properties (backscatter, polarimetric composition, and coherence) are useful when analysing disaster-struck areas.

Some newer SAR satellite systems, namely PAZ, NovaSAR-S and the RADARSAT constellation, are additionally equipped with automatic identification system (AIS) receivers, enabling them to track shipping traffic. In most countries AIS transceivers are mandatory for vessels above a certain size. AIS is an additional data source that could be exploited for responding to disasters affecting ships.

3 Land Cover Mapping

Map information is necessary in all phases of disaster management. Maps of buildings and roads are essential for rescue, relief, and recovery activities. Map information is generally well maintained in developed countries; however, this is not the case in developing countries, particularly where uncontrolled urbanization is occurring. There is thus high demand for automatically updating map information from satellite imagery at a large (e.g., country) scale.

Mapping of buildings, roads, and land cover types is one of the key applications of satellite imagery. High-resolution global land cover maps have been derived from satellite data in the last decade. The Global Urban Footprint (GUF) was created by the German Aerospace Center with a ground sampling distance of 12 m by processing 180,000 TerraSAR-X and TanDEM-X scenes (Esch et al. 2013). The GUF data were released in 2012; they are freely available at full resolution for any scientific use and open to any nonprofit application at a degraded resolution of 84 m. GlobeLand30 is the first open-access, high-resolution land cover map; it comprises 10 land cover classes for the years 2000 and 2010 and was produced by analyzing more than 20,000 Landsat and Chinese HJ-1 satellite images (Jun Chen et al. 2015). In 2014, China donated the GlobeLand30 data to the United Nations to contribute to global sustainable development and climate change mitigation.

Recently, building and road mapping technologies that apply machine learning and deep learning to high-resolution satellite imagery have improved dramatically. For instance, Ecopia U.S. Building Footprints powered by DigitalGlobe (now part of Maxar) was released in 2018 as the first precise, GIS-ready building footprint dataset covering the entire United States, produced by semi-automated processing based on machine learning. The 2D vector polygon dataset will be updated every six months using the latest DigitalGlobe satellite imagery to keep building footprint information up to date. Going beyond 2D is the next step in urban mapping: 3D reconstruction and 3D semantic reconstruction from large-scale satellite imagery have received particular attention in recent years.

Benchmark datasets and data science competitions have been playing key roles in advancing 2D/3D mapping technologies. Representative benchmark datasets are listed below.

- SpaceNet: SpaceNet is a repository of freely available high-resolution satellite imagery and labeled training data for computer vision and machine learning research. SpaceNet was initiated by CosmiQ Works, DigitalGlobe, and NVIDIA in 2016. SpaceNet building and road extraction competitions were organized with over 685,000 building footprints and 8000 km of roads from large cities around the world (i.e., Rio de Janeiro, Las Vegas, Paris, Shanghai, and Khartoum).

- DeepGlobe: DeepGlobe is a challenge-based workshop initiated by Facebook and DigitalGlobe in conjunction with CVPR 2018 to promote research on machine learning and computer vision techniques applied to satellite imagery and to bring together researchers from these fields with their different perspectives. DeepGlobe comprised three challenges: road extraction, building detection, and land cover classification. The building detection challenge used the SpaceNet data; the road extraction and land cover classification challenges used images sampled from the DigitalGlobe Basemap +Vivid dataset. The road extraction challenge dataset comprises images of rural and urban areas in Thailand, Indonesia, and India, whereas the land cover classification challenge focuses on rural areas (Demir et al. 2018).

- BigEarthNet: The BigEarthNet archive was constructed by the Technical University of Berlin and released in 2019. It is a large-scale dataset composed of 590,326 Sentinel-2 image patches with land cover labels. BigEarthNet was created from 125 Sentinel-2 tiles covering 10 European countries, and the corresponding labels were taken from the CORINE Land Cover database. BigEarthNet advances research on the analysis of big earth observation data archives.

- 2019 IEEE GRSS Data Fusion Contest: The 2019 IEEE GRSS Data Fusion Contest, organized by the Image Analysis and Data Fusion Technical Committee (IADF TC) of the IEEE Geoscience and Remote Sensing Society (GRSS) and Johns Hopkins University (JHU), promoted research in semantic 3D reconstruction and stereo using machine learning and satellite images. The contest comprised four challenges: three of them addressed the simultaneous estimation of land cover semantics and height information from single-view, pairwise, and multi-view satellite images, respectively, and the last one addressed 3D point cloud classification. The contest used high-resolution satellite imagery and airborne LiDAR data over Jacksonville and Omaha, US (Le Saux et al. 2019).

One major challenge in land cover mapping is generalization. Most training data have been prepared for a limited number of countries and cities, and models trained on such data do not always work globally because structures differ in character from region to region. The technical focus has therefore been on ensuring generalization across different cities (Yokoya et al. 2018). To exploit the capability of machine learning and maximize mapping accuracy, the simplest approach is to increase the amount of training data. Many mapping projects are progressing in developing countries through annotation efforts by local people (e.g., Open Cities Africa). Collaborative mapping based on crowdsourced data, as represented by OpenStreetMap, plays a major role in creating training data. The synergy of openly available big earth observation data, crowdsourcing-based annotations, and machine learning technologies will accelerate land cover mapping for the entire globe.

4 Disaster Mapping

4.1 Flood Mapping

In addition to the international cooperative projects and services for disaster response mentioned in the introduction, several flood mapping systems are available.

- Global flood detection system. The objective of this system is to detect and map major river floods using daily passive microwave remote sensing observations (AMSR2 and GPM).

- NASA Global flood detection system. This system uses the real-time TRMM Multi-satellite Precipitation Analysis (TMPA) and the Global Precipitation Measurement (GPM) Integrated Multi-Satellite Retrievals.

- Tiger-Net. Through this project, ESA supports African countries in using earth observation data from ESA satellites to monitor water resources, including flood mapping.

- Dartmouth flood observatory. The observatory was founded in 1993 at Dartmouth College, Hanover, NH, USA, and moved to INSTAAR at the University of Colorado in 2010. It uses all available satellite datasets (optical and SAR) to estimate flood inundation maps with change detection methods.

- DLR flood service. Sentinel-1 and TerraSAR-X SAR datasets are used to extract flood maps with a fully automatic processing chain (i.e., pre-processing, auxiliary data collection, initialized classification, and post-processing) accessible via a web client.

For flood mapping, SAR images are a better choice than optical and UAV images because microwaves penetrate clouds, so clouds do not corrupt the resulting image. Water bodies are usually easy to detect because of their low reflectance in optical data and their low backscatter in SAR data. Two traditional but efficient methods are commonly used (see Fig. 4.2). The first applies change detection between pre- and post-flood images and then uses filters (e.g., morphological closing and opening) to remove noise. This kind of technique is suitable for detecting flooded areas using single-source datasets, such as the Landsat series (Chignell et al. 2015), ENVISAT ASAR (Schlaffer et al. 2015), and Sentinel SAR (Li et al. 2018).
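The first (change detection) approach can be sketched as follows, assuming calibrated, co-registered pre- and post-flood SAR backscatter in dB. The -3 dB threshold and the 3 × 3 structuring element are illustrative assumptions, and the morphological operators are written in plain NumPy (instead of, e.g., `scipy.ndimage`) only to keep the example self-contained.

```python
import numpy as np


def _neighbourhood(mask: np.ndarray, r: int) -> np.ndarray:
    """Stack of all shifts of `mask` within a (2r+1) x (2r+1) square.

    Padding with False means pixels outside the image count as non-flooded.
    """
    rows, cols = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    return np.stack([padded[dy:dy + rows, dx:dx + cols]
                     for dy in range(2 * r + 1) for dx in range(2 * r + 1)])


def erode(mask: np.ndarray, r: int = 1) -> np.ndarray:
    return _neighbourhood(mask, r).all(axis=0)


def dilate(mask: np.ndarray, r: int = 1) -> np.ndarray:
    return _neighbourhood(mask, r).any(axis=0)


def flood_change_map(pre_db, post_db, drop_db=-3.0, r=1):
    """Flag pixels whose backscatter fell by more than |drop_db| dB, then clean the mask."""
    # A difference of dB values equals the log-ratio of the linear intensities.
    raw = (np.asarray(post_db) - np.asarray(pre_db)) < drop_db
    opened = dilate(erode(raw, r), r)      # opening: removes isolated false alarms
    return erode(dilate(opened, r), r)     # closing: fills small gaps inside flooded areas
```

On a synthetic scene with a 5 × 5 flooded block and one isolated noisy pixel, the opening removes the noisy pixel while the block survives intact; real scenes typically need larger structuring elements and additional masking (e.g., radar shadow).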

The second extracts water bodies from pre- and post-flood images using classification methods (water vs. non-water) and water indexes (listed in Table 4.3). The flooded area is then obtained by analyzing the changes between the water bodies of the two periods. Tong et al. (2018) applied a support vector machine and the active contour without edges model to extract water from Landsat 8 and COSMO-SkyMed images and then mapped the flood using an image difference method.
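The second (classification) approach can be sketched with a water index thresholded on each date. Here Otsu's automatic histogram thresholding is swapped in as a simple unsupervised stand-in for the classifiers mentioned above; it is not the method of Tong et al. (2018). The sketch assumes a water index (e.g., NDWI) in which water has higher values, and that each date's histogram is roughly bimodal; a scene with almost no water will give an unreliable threshold.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Threshold that maximizes Otsu's between-class variance of the value histogram."""
    v = np.asarray(values, dtype=np.float64)
    v = v[np.isfinite(v)]
    hist, edges = np.histogram(v, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                  # pixel count at or below each bin
    w1 = w0[-1] - w0                      # pixel count above each bin
    s0 = np.cumsum(hist * centers)        # running sum of values in the lower class
    with np.errstate(divide="ignore", invalid="ignore"):
        mu0 = s0 / w0
        mu1 = (s0[-1] - s0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
    k = np.nanargmax(between)             # NaN where one class is empty
    return float(edges[k + 1])            # upper edge of the last "low" bin


def flood_from_water_index(index_pre, index_post):
    """Water = index above its per-date Otsu threshold; flood = water after but not before."""
    water_pre = index_pre > otsu_threshold(index_pre)
    water_post = index_post > otsu_threshold(index_post)
    return water_post & ~water_pre
```

Thresholding each date separately adapts to differences in illumination or calibration between the two images; permanent water bodies are excluded from the flood map because they are water on both dates.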

Technical challenges and future directions are listed as follows:

1. Mapping floods in small areas. Very high resolution remote sensing provides an opportunity to monitor floods at a small scale (e.g., in downtown areas). However, water is often confused with shadows. Separating shadows from water bodies will improve the performance of flood monitoring.

2. Developing more computationally efficient and robust methods that do not depend on spatial resolution, spectral signature, or viewing angle. The Normalized Difference Flood Index (NDFI) (Cian et al. 2018), which is computed from multi-temporal statistics of SAR images, offers inspiration in this direction.

3. Flood detection from satellite data and social media by deep learning. Satellite images can provide large-scale flooding information, but one has to wait for the data to be acquired, whereas social media can provide real-time information. A proper way should be found to integrate the information derived from satellite images and social multimedia. Interested readers can find more details at http://www.multimediaeval.org/mediaeval2018/.
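The NDFI mentioned in item 2 can be computed per pixel from image stacks. As we understand the definition in Cian et al. (2018), it compares the mean backscatter of a reference (non-flood) time series with the minimum backscatter over the reference and flood-period images together, both in linear units: NDFI = (mean(σ⁰_ref) − min(σ⁰_ref+flood)) / (mean(σ⁰_ref) + min(σ⁰_ref+flood)). Values near 1 indicate a strong backscatter drop. The sketch assumes co-registered stacks and leaves the decision threshold to the user, since we do not reproduce the values used in the paper.

```python
import numpy as np


def db_to_linear(db):
    """Convert backscatter from decibels to linear power units."""
    return 10.0 ** (np.asarray(db, dtype=np.float64) / 10.0)


def ndfi(reference_stack, flood_stack):
    """Normalized Difference Flood Index per pixel.

    reference_stack: (n_ref, rows, cols) linear backscatter from non-flood dates.
    flood_stack:     (n_flood, rows, cols) linear backscatter from the flood period.
    """
    ref = np.asarray(reference_stack, dtype=np.float64)
    flood = np.asarray(flood_stack, dtype=np.float64)
    mean_ref = ref.mean(axis=0)
    min_all = np.concatenate([ref, flood], axis=0).min(axis=0)
    return (mean_ref - min_all) / (mean_ref + min_all)
```

Because only per-pixel temporal statistics are used, the index needs no training data, which fits the goal of methods that are robust across resolutions and viewing geometries.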

Here, a typical example of combining medium-resolution SAR (i.e., Sentinel-1) and high-resolution optical (i.e., Jilin-1 sp06) datasets to detect flooded areas in Iran is shown in Fig. 4.3. Because of the coarse resolution of Sentinel-1, the small flooded areas in the city center (red rectangles in Fig. 4.3b) could not be detected using only Sentinel-1 images; however, they can be identified in the high-resolution optical images. Thus, the final flood map combines the urban flooded areas extracted from the high-resolution optical data with the non-urban flooded areas derived from Sentinel-1.
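The combination rule described above can be sketched as a per-pixel selection. This is a hypothetical helper, assuming that both flood maps and an urban mask are already on aligned grids and that the coarse SAR grid maps onto the fine optical grid by an integer factor; real workflows would reproject and resample with GIS tools.

```python
import numpy as np


def upsample_nearest(mask: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a coarse grid by an integer factor."""
    return np.repeat(np.repeat(mask, factor, axis=0), factor, axis=1)


def fuse_flood_maps(sar_flood_coarse, optical_flood_fine, urban_mask_fine, factor):
    """Use the optical result inside urban areas and the upsampled SAR result elsewhere."""
    sar_fine = upsample_nearest(np.asarray(sar_flood_coarse, dtype=bool), factor)
    if sar_fine.shape != np.shape(urban_mask_fine):
        raise ValueError("grids are not aligned")
    return np.where(urban_mask_fine, optical_flood_fine, sar_fine)
```

Selecting by mask, rather than taking the union of both maps, keeps the coarse SAR pixels from overriding the detailed optical result in the city center.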

4.2 Landslide Mapping

Landslides are frequently triggered by heavy rain and earthquakes (Martelloni et al. 2012; Tanyaş et al. 2019), and these deadly events can cause a large number of fatalities (Intrieri et al. 2019). As a result, there have been several efforts to map global landslide susceptibility using big earth observation data sources (Stanley and Kirschbaum 2017). These efforts exploit the relationship between landslides and four main variables: topographic slope computed from global elevation models (SRTM, ASTER GDEM), land cover, rainfall data, and seismic activity (NASA Goddard Space Flight Center 2007; Muthu and Petrou 2007; Kirschbaum et al. 2010, 2015; Kirschbaum and Stanley 2018). These techniques are mainly based on models that integrate all variables using heuristic functions to evaluate the likelihood of landslide occurrence. Such models can map landslide susceptibility at a continental scale (with grid cells of approximately 1 km²) and at regional and local scales with resolutions of a few hundred meters. These studies provide an overview of the landslide hazard and can be used for mitigation and preparedness activities before disasters occur.
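The slope variable and the idea of a heuristic combination can be illustrated as follows. Slope is computed from a gridded DEM with central differences; the weighted-mean combination and the factor names are hypothetical and do not reproduce the model of Stanley and Kirschbaum (2017). Each factor is assumed to be pre-scaled to [0, 1] (for instance, slope divided by some reference angle and clipped).

```python
import numpy as np


def slope_degrees(dem: np.ndarray, cell_size_m: float) -> np.ndarray:
    """Terrain slope in degrees from a gridded DEM using central differences."""
    dz_dy, dz_dx = np.gradient(np.asarray(dem, dtype=np.float64), cell_size_m)
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))


def susceptibility(factors: dict, weights: dict) -> np.ndarray:
    """Hypothetical heuristic: weighted mean of factors pre-scaled to [0, 1].

    factors: e.g. {"slope": ..., "land_cover": ..., "rainfall": ..., "seismic": ...}
    weights: same keys, non-negative weights (illustrative assumptions).
    """
    total = sum(weights.values())
    score = sum(w * np.clip(factors[name], 0.0, 1.0) for name, w in weights.items())
    return score / total
```

A plane rising 1 m per 1 m cell has a slope of 45°, a quick sanity check for the DEM cell size and units.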

Earth observation data are also applied to map landslide damage at smaller scales, focusing on particular events. Visual interpretation methods employ very-high-resolution optical imagery acquired from either spaceborne or airborne platforms. Although these approaches provide highly reliable damage assessments, their applicability is often restricted by the availability of suitable images, e.g., with cloud-free and good illumination conditions. It is also important to note that these techniques require considerable human effort for damage interpretation, especially in the case of rapid disaster response.

Change detection models, on the other hand, use a set of images acquired before and after the disaster to evaluate the damage. Land cover changes estimated from multi-temporal optical imagery are used to delineate the extent of landslides. Furthermore, spectral indexes (e.g., vegetation and soil indexes) are also employed for landslide mapping (Rau et al. 2014; Lv et al. 2018; Yang et al. 2013; Zhuo et al. 2019; Ramos-Bernal et al. 2018). Integrating high-resolution digital terrain models allows the estimation of landslide-induced damage, such as the distribution of debris and landslide scars in the affected area (Dou et al. 2019; Bunn et al. 2019). As with visual interpretation, the deployment of these techniques is strongly constrained by the availability of suitable multi-temporal image datasets.
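Using pre- and post-event terrain models, scars and debris can be separated by the sign of the elevation change. This is a minimal DEM-of-difference sketch, not the method of the cited studies: it assumes co-registered DEMs in the same vertical datum, and `min_change_m` is an assumed stand-in for the combined DEM error level.

```python
import numpy as np


def dem_difference(dem_pre, dem_post, cell_size_m, min_change_m=1.0):
    """DEM of difference with simple scar/deposit masks and volumes.

    Returns (dod, scar_mask, deposit_mask, eroded_m3, deposited_m3).
    """
    dod = np.asarray(dem_post, dtype=np.float64) - np.asarray(dem_pre, dtype=np.float64)
    scar = dod < -min_change_m        # surface lowered: depletion zone / scar
    deposit = dod > min_change_m      # surface raised: debris accumulation
    cell_area = cell_size_m ** 2
    eroded = float(dod[scar].sum() * cell_area)          # negative volume (m^3)
    deposited = float(dod[deposit].sum() * cell_area)    # positive volume (m^3)
    return dod, scar, deposit, eroded, deposited
```

Changes smaller than `min_change_m` are ignored, so the volume estimates are conservative; comparing eroded and deposited volumes gives a rough consistency check on the DEM co-registration.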

SAR data can be acquired under almost all weather conditions, and SAR-based mapping techniques take advantage of the side-looking geometry of these sensors. Two properties of the backscattered signal, its intensity and its phase, are exploited for detecting landslide damage. The latter is widely applied for monitoring and mapping seismic-induced landslides (Cascini et al. 2009; Kalia 2018). Interferometric SAR (InSAR) analysis using detailed DEM data provides the spatial distribution and displacement fields of ground movement (Riedel and Walther 2008; Rabus and Pichierri 2018; Amitrano et al. 2019). Furthermore, time-series InSAR models allow the monitoring of slow-moving landslides (Kang et al. 2017). On the other hand, change detection techniques using SAR intensity images are also powerful means of estimating the spatial distribution of landslide damage (Shi et al. 2015). For instance, texture features computed from multi-temporal datasets show good correlation with the areas affected by landslides (Darvishi et al. 2018; Mondini et al. 2019). In disaster response, where rapid geolocation of affected areas is crucial for rescue efforts, change detection based on intensity information is highly applicable because of its low computation time and direct use of geocoded images. For instance, on September 6, 2018, the Hokkaido Eastern Iburi Earthquake caused several landslides distributed over an extensive area (Yamagishi and Yamazaki 2018). Figure 4.4 shows rapid landslide mapping results (yellow segments) obtained with the combined pixel- and object-based change detection analysis proposed by Adriano et al. (2020), applied to pre- and post-event Sentinel-1 intensity images acquired on September 1 and 13, 2018, respectively.

(a) Location of the target area. The red star shows the earthquake epicenter. (b) Color composite of pre- and post-event Sentinel-1 intensity images (R: pre-event; G, B: post-event). (c) Google Satellite imagery of the same area shown in (b). (d) Landslide mapping results using multi-temporal Sentinel-1 imagery. The background image is the color composite RGB image.
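For the phase-based (InSAR) analysis mentioned above, one full interferometric fringe (2π) corresponds to half a wavelength of line-of-sight (LOS) motion, because the radar signal travels the path twice. A minimal sketch, assuming an already unwrapped interferogram with topographic phase removed; the sign convention d = −λ·Δφ/(4π) is an assumption, since processors differ. Rapid, large movements tend to decorrelate the phase, which is one reason intensity-based change detection is attractive for rapid response.

```python
import numpy as np

SPEED_OF_LIGHT_M_S = 299_792_458.0


def radar_wavelength_m(frequency_hz: float) -> float:
    """Radar wavelength from the carrier frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def los_displacement_m(unwrapped_phase_rad, wavelength_m):
    """Line-of-sight displacement from unwrapped interferometric phase.

    Assumed sign convention: d = -wavelength * phase / (4 * pi); processors differ.
    The 4*pi (rather than 2*pi) accounts for the two-way travel path.
    """
    return -np.asarray(unwrapped_phase_rad, dtype=np.float64) * wavelength_m / (4.0 * np.pi)
```

For Sentinel-1 (C band, 5.405 GHz centre frequency, λ ≈ 5.55 cm), one fringe corresponds to roughly 2.8 cm of LOS displacement.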

Recently, machine learning algorithms have been applied together with earth observation data to detect landslide areas. Well-established classifiers, such as support vector machines and ensemble learning models, are used to identify landslide areas from optical, SAR intensity, and SAR coherence images (Bui et al. 2018; Park et al. 2018; Burrows et al. 2019). Furthermore, deep neural networks are also employed for landslide detection (Ghorbanzadeh et al. 2019; Wang et al. 2019). These approaches focus on high-resolution remote sensing imagery and on landslide-influencing features such as DEM data, land cover, and rainfall information.

4.3 Building Damage Mapping

Assessing building damage in the aftermath of major disasters, such as earthquakes, tsunamis, and typhoons, is crucial for rapid and efficient post-disaster relief activities. In this context, earth observation data are a good alternative for damage mapping because satellite imagery can observe large scenes over remote or inaccessible affected areas (Matsuoka and Yamazaki 2004). Based on the evolution of sensor platforms and their spatial resolution, damage mapping can be divided into two phases. Initial applications for building damage recognition were based on change detection analysis of moderate-resolution optical and SAR imagery, mainly from sensors launched in the 1990s such as the Landsat-7 satellite and the European Remote Sensing (ERS-1) SAR satellite. These applications relied on the interpretation of texture and linear correlation features computed from pre- and post-event datasets. Because of their relatively low spatial resolution (about 30 m), these methods were applied efficiently for building damage mapping at the block scale (Yusuf et al. 2001; Matsuoka and Yamazaki 2005; Kohiyama and Yamazaki 2005).
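The texture and linear correlation features mentioned above can be illustrated with two per-window quantities often used for pre/post SAR comparison: the difference of mean backscatter and the correlation coefficient. This is a minimal sketch, not a reproduction of the cited methods; the 7 × 7 window is an assumption, inputs are assumed to be co-registered backscatter images in dB, and low correlation combined with a backscatter change is only a candidate indicator of damage.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_change_features(pre_db, post_db, win=7):
    """Windowed backscatter difference and correlation coefficient between two dates.

    Returns two arrays of shape (rows - win + 1, cols - win + 1).
    """
    a = sliding_window_view(np.asarray(pre_db, dtype=np.float64), (win, win))
    b = sliding_window_view(np.asarray(post_db, dtype=np.float64), (win, win))
    mean_a = a.mean(axis=(-2, -1))
    mean_b = b.mean(axis=(-2, -1))
    da = a - mean_a[..., None, None]      # deviations from each window mean
    db = b - mean_b[..., None, None]
    cov = (da * db).sum(axis=(-2, -1))
    norm = np.sqrt((da ** 2).sum(axis=(-2, -1)) * (db ** 2).sum(axis=(-2, -1)))
    corr = np.divide(cov, norm, out=np.zeros_like(cov), where=norm > 0)
    return mean_b - mean_a, corr
```

A uniform brightness shift changes the difference but leaves the correlation at one, whereas a rearrangement of scatterers within the window (as when buildings collapse) lowers the correlation.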

The following generation of high-resolution optical and SAR imagery, starting in the early 2000s with satellites such as QuickBird, GeoEye-1, TerraSAR-X, and COSMO-SkyMed, contributed to the development of frameworks for detailed building damage mapping. In addition to change detection techniques, these methods implemented sophisticated pixel- and object-based image processing algorithms for damage recognition (Miura et al. 2016; Tong et al. 2012; Brett and Guida 2013; Gokon et al. 2015; Ranjbar et al. 2018). Moreover, taking advantage of very-high-resolution datasets, sophisticated frameworks were implemented to extract building damage using only post-event images (Gong et al. 2016). Most of these methodologies rely on specific features of SAR data. For instance, some studies analyzed polarimetric characteristics of radar backscattering that are correlated with building damage patterns observed in SAR images (Yamaguchi 2012; Chen and Sato 2013). Furthermore, SAR platforms such as Sentinel-1 and ALOS-2 acquire images repeatedly, building up large time-series datasets. Phase coherence computed from multi-temporal SAR acquisitions can characterize the degree of change in urban areas in the case of earthquake-induced damage (Yun et al. 2015; Olen and Bookhagen 2018; Karimzadeh et al. 2018).

Recently, advanced machine learning algorithms have been applied to multi-temporal and multi-source remote sensing data for mapping building damage. These methodologies learn from limited but properly labeled samples of damaged buildings to assign damage levels across the whole affected area (Endo et al. 2018). In a recent example, Adriano et al. (2019) used an ensemble learning classifier on SAR and optical datasets to map building damage following the 2018 Sulawesi earthquake and tsunami in Palu, Indonesia. Their methodology successfully classified three levels of building damage with an overall accuracy greater than 90% (Fig. 4.5). Furthermore, their framework provided a reliable thematic map only three hours after all raw remote sensing datasets had been acquired.

(a) Location of the target area. The red star shows the earthquake epicenter. (b) Pre-event WorldView-3 image. (c) Post-event WorldView-3 image. (d) Damage mapping results using multi-sensor and multi-temporal remote sensing data. The background image is the pre-event Sentinel-1 SAR image captured on May 26, 2018.
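The general pattern of ensemble-based damage classification can be sketched with scikit-learn's `RandomForestClassifier`, which is one ensemble learner and not necessarily the one used by Adriano et al. (2019). The helper names are hypothetical; feature layers (e.g., coherence change, correlation change, optical index change) and damage labels are assumed to be co-registered on the same grid.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score


def stack_features(*layers):
    """Flatten co-registered (rows, cols) feature layers into an (n_pixels, n_features) matrix."""
    return np.stack([np.asarray(layer, dtype=np.float64).ravel() for layer in layers], axis=1)


def train_damage_classifier(features, labels, n_trees=200, seed=0):
    """Fit a random forest (one kind of ensemble learner) on labeled samples."""
    clf = RandomForestClassifier(n_estimators=n_trees, random_state=seed)
    clf.fit(features, labels)
    return clf


def classify_map(clf, layers):
    """Predict a damage level for every pixel and restore the image shape."""
    shape = np.shape(layers[0])
    return clf.predict(stack_features(*layers)).reshape(shape)


def overall_accuracy(clf, features, labels):
    """Overall accuracy (fraction of correctly labeled samples) on held-out data."""
    return accuracy_score(labels, clf.predict(features))
```

Overall accuracy, the metric quoted above, should be computed on held-out samples; because neighbouring pixels are strongly correlated, holding out spatially separate areas gives a more honest estimate than a random pixel split.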

5 Conclusion and Future Lines

Open data policies in earth observation and international cooperation in emergency response have expanded the practical use of image and signal processing techniques for rapid disaster damage mapping. In this chapter, we have reviewed earth observation systems available for disaster management and showcased recent advances in land cover mapping, flood mapping, landslide mapping, and building damage mapping.

Although human visual interpretation is still required to determine detailed levels of building damage, acquiring high-resolution images and conducting visual interpretation take a long time. One possible future direction is to construct training data from past disasters via human visual interpretation and to develop machine learning models that can respond quickly to new, unseen disasters. Another challenge is that, in many cases, data cannot be obtained from the same sensor before and after a disaster (He and Yokoya 2018). How to extract disaster-induced changes from multisensor, and possibly heterogeneous, data sources acquired before and after a disaster is therefore a practical problem in damage mapping. Furthermore, verifying the accuracy of damage assessment results with in-situ data is important for the entire disaster management process. Integrating and fusing earth observation data with ground-level images and text information available online (e.g., news and social networking services) is another subject for future work. Building on remote sensing image and signal processing technology and human expert knowledge, machine learning has the potential to improve both the accuracy and the speed of damage mapping from big earth observation data.

References

Adriano B, Xia J et al (2019) Multi-source data fusion based on ensemble learning for rapid building damage mapping during the 2018 Sulawesi earthquake and tsunami in Palu, Indonesia. Remote Sens 11(7). ISSN: 2072-4292. https://doi.org/10.3390/rs11070886. https://www.mdpi.com/2072-4292/11/7/886

Adriano B, Yokoya N et al (2020) A semiautomatic pixel-object method for detecting landslides using multitemporal ALOS-2 intensity images. Remote Sens 12(3). ISSN: 2072-4292. https://doi.org/10.3390/rs12030561. https://www.mdpi.com/2072-4292/12/3/561

Amitrano D et al (2019) Long-term satellite monitoring of the Slumgullion landslide using space-borne synthetic aperture radar sub-pixel offset tracking. Remote Sens 11(3):369. https://doi.org/10.3390/rs11030369

Bird R et al (2013) NovaSAR-S: a low cost approach to SAR applications. In: Conference proceedings of 2013 Asia-Pacific conference on synthetic aperture radar (APSAR), pp 84–87

Brett PTB, Guida R (2013) Earthquake damage detection in urban areas using curvilinear features. IEEE Trans Geosci Remote Sens 51(9):4877–4884. ISSN: 0196-2892. https://doi.org/10.1109/TGRS.2013.2271564. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=6565347

Bui DT et al (2018) Landslide detection and susceptibility mapping by AIRSAR data using support vector machine and index of entropy models in Cameron Highlands, Malaysia. Remote Sens 10(10). ISSN: 2072-4292. https://doi.org/10.3390/rs10101527

Bunn MD et al (2019) A simplified, object-based framework for efficient landslide inventorying using LIDAR digital elevation model derivatives. Remote Sens 11(3). ISSN: 2072-4292. https://doi.org/10.3390/rs11030303

Burrows K et al (2019) A new method for large-scale landslide classification from satellite radar. Remote Sens 11(3):237. https://doi.org/10.3390/rs11030237

Caltagirone F et al (2014) The COSMO-SkyMed dual use earth observation program: development, qualification, and results of the commissioning of the overall constellation. IEEE J Sel Top Appl Earth Obs Remote Sens 7(7):2754–2762. ISSN: 1939-1404. https://doi.org/10.1109/JSTARS.2014.2317287

Cascini L, Fornaro G, Peduto D (2009) Analysis at medium scale of low-resolution DInSAR data in slow-moving landslide-affected areas. ISPRS J Photogramm Remote Sens 64(6):598–611. ISSN: 0924-2716. https://doi.org/10.1016/j.isprsjprs.2009.05.003

Chen J et al (2015) Global land cover mapping at 30 m resolution: a POK-based operational approach. ISPRS J Photogramm Remote Sens 103:7–27

Chen S-W, Sato M (2013) Tsunami damage investigation of built-up areas using multitemporal spaceborne full polarimetric SAR images. IEEE Trans Geosci Remote Sens 51(4):1997. ISSN: 0196-2892. https://doi.org/10.1109/TGRS.2012.2210050. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=6353568

Chignell SM et al (2015) Multi-temporal independent component analysis and Landsat 8 for delineating maximum extent of the 2013 Colorado Front Range flood. Remote Sens 7(8):9822–9843. ISSN: 2072-4292. https://doi.org/10.3390/rs70809822. http://www.mdpi.com/2072-4292/7/8/9822

Cian F, Marconcini M, Ceccato P (2018) Normalized difference flood index for rapid flood mapping: taking advantage of EO big data. Remote Sens Environ 209:712–730. ISSN: 0034-4257. https://doi.org/10.1016/j.rse.2018.03.006. http://www.sciencedirect.com/science/article/pii/S0034425718300993

Darvishi M et al (2018) Sentinel-1 and ground-based sensors for continuous monitoring of the Corvara landslide (South Tyrol, Italy). Remote Sens 10(11):1781. https://doi.org/10.3390/rs10111781

De Lisle D et al (2018) RADARSAT constellation mission status update. In: EUSAR 2018; 12th European conference on synthetic aperture radar, pp 1–5

Demir I et al (2018) DeepGlobe 2018: a challenge to parse the Earth through satellite images. In: The IEEE conference on computer vision and pattern recognition (CVPR) workshops

Dou J et al (2019) Evaluating GIS-based multiple statistical models and data mining for earthquake and rainfall-induced landslide susceptibility using the LiDAR DEM. Remote Sens 11(6):638. ISSN: 2072-4292. https://doi.org/10.3390/rs11060638. https://www.mdpi.com/2072-4292/11/6/638

Endo Y et al (2018) New insights into multiclass damage classification of tsunami-induced building damage from SAR images. Remote Sens 10(12):2059. ISSN: 2072-4292. https://doi.org/10.3390/rs10122059. http://www.mdpi.com/2072-4292/10/12/2059

Esch T et al (2013) Urban footprint processor – fully automated processing chain generating settlement masks from global data of the TanDEM-X mission. IEEE Geosci Remote Sens Lett 10(6):1617–1621

Farquharson G et al (2018) The Capella synthetic aperture radar constellation. In: IGARSS 2018–2018 IEEE international geoscience and remote sensing symposium, pp 1873–1876. https://doi.org/10.1109/IGARSS.2018.8518683

Feyisa GL et al (2014) Automated water extraction index: a new technique for surface water mapping using Landsat imagery. Remote Sens Environ 140:23–35. ISSN: 0034-4257. https://doi.org/10.1016/j.rse.2013.08.029. http://www.sciencedirect.com/science/article/pii/S0034425713002873

Ghorbanzadeh O et al (2019) Evaluation of different machine learning methods and deep-learning convolutional neural networks for landslide detection. Remote Sens 11(2). ISSN: 2072-4292. https://doi.org/10.3390/rs11020196

Gokon H et al (2015) A method for detecting buildings destroyed by the 2011 Tohoku earthquake and tsunami using multitemporal TerraSAR-X data. IEEE Geosci Remote Sens Lett 12(6):1277–1281. ISSN: 1545-598X. https://doi.org/10.1109/LGRS.2015.2392792. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=7042770

Gong L et al (2016) Earthquake-induced building damage detection with post-event sub-meter VHR TerraSAR-X staring spotlight imagery. Remote Sens 8(11):1–21. ISSN: 2072-4292. https://doi.org/10.3390/rs8110887

He W, Yokoya N (2018) Multi-temporal Sentinel-1 and -2 data fusion for optical image simulation. ISPRS Int J Geo-Inf 7(10). ISSN: 2220-9964. https://doi.org/10.3390/ijgi7100389. https://www.mdpi.com/2220-9964/7/10/389

Intrieri E, Carlà T, Gigli G (2019) Forecasting the time of failure of landslides at slope-scale: a literature review. Earth-Sci Rev. ISSN: 0012-8252. https://doi.org/10.1016/j.earscirev.2019.03.019. https://linkinghub.elsevier.com/retrieve/pii/S001282521830518X

Kalia AC (2018) Classification of landslide activity on a regional scale using persistent scatterer interferometry at the Moselle Valley (Germany). Remote Sens 10(12). ISSN: 2072-4292. https://doi.org/10.3390/rs10121880

Kang Y et al (2017) Application of InSAR techniques to an analysis of the Guanling landslide. Remote Sens 9(10):1–17. ISSN: 2072-4292. https://doi.org/10.3390/rs9101046

Karimzadeh S et al (2018) Sequential SAR coherence method for the monitoring of buildings in Sarpole-Zahab, Iran. Remote Sens 10(8):1255. ISSN: 2072-4292. https://doi.org/10.3390/rs10081255. http://www.mdpi.com/2072-4292/10/8/1255

Kirschbaum D, Stanley T (2018) Satellite-based assessment of rainfall-triggered landslide hazard for situational awareness. Earth’s Future 6(3):505–523. ISSN: 2328-4277. https://doi.org/10.1002/2017EF000715

Kirschbaum DB et al (2010) A global landslide catalog for hazard applications: method, results, and limitations. Nat Hazards 52(3):561–575. ISSN: 0921-030X. https://doi.org/10.1007/s11069-009-9401-4

Kirschbaum D, Stanley T, Zhou Y (2015) Spatial and temporal analysis of a global landslide catalog. Geomorphology 249:4–15. ISSN: 0169-555X. https://doi.org/10.1016/j.geomorph.2015.03.016

Kohiyama M, Yamazaki F (2005) Damage detection for 2003 Bam, Iran, earthquake using Terra-ASTER satellite imagery. Earthquake Spectra 21(S1):267–274. https://doi.org/10.1193/1.2098947

Le Saux B et al (2019) 2019 data fusion contest [technical committees]. IEEE Geosci Remote Sens Mag 7(1):103–105. ISSN: 2168-6831. https://doi.org/10.1109/MGRS.2019.2893783

Lee S (2010) Overview of KOMPSAT-5 program, mission, and system. In: 2010 IEEE international geoscience and remote sensing symposium, pp 797–800. https://doi.org/10.1109/IGARSS.2010.5652759

Li Y et al (2018) An automatic change detection approach for rapid flood mapping in Sentinel-1 SAR data. Int J Appl Earth Obs Geoinf 73:123–135. ISSN: 0303-2434. https://doi.org/10.1016/j.jag.2018.05.023. http://www.sciencedirect.com/science/article/pii/S0303243418302782

Lv ZY et al (2018) Landslide inventory mapping from bitemporal high-resolution remote sensing images using change detection and multiscale segmentation. IEEE J Sel Top Appl Earth Obs Remote Sens 11(5):1520–1532. ISSN: 2151-1535. https://doi.org/10.1109/JSTARS.2018.2803784

Martelloni G et al (2012) Rainfall thresholds for the forecasting of landslide occurrence at regional scale. Landslides 9(4):485–495. ISSN: 1612-5118. https://doi.org/10.1007/s10346-011-0308-2

Martinis S, Plank S, Ćwik K (2018) The use of Sentinel-1 time-series data to improve flood monitoring in arid areas. Remote Sens 10(4). ISSN: 2072-4292. https://doi.org/10.3390/rs10040583. https://www.mdpi.com/2072-4292/10/4/583

Matsuoka M, Yamazaki F (2004) Use of satellite SAR intensity imagery for detecting building areas damaged due to earthquakes. Earthquake Spectra 20(3):975. ISSN: 8755-2930. https://doi.org/10.1193/1.1774182. http://link.aip.org/link/EASPEF/v20/i3/p975/s1&Agg=doi

Matsuoka M, Yamazaki F (2005) Building damage mapping of the 2003 Bam, Iran, earthquake using Envisat/ASAR intensity imagery. Earthquake Spectra 21(S1):S285. ISSN: 8755-2930. https://doi.org/10.1193/1.2101027. http://link.aip.org/link/EASPEF/v21/iS1/pS285/s1&Agg=doi

McFeeters SK (1996) The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int J Remote Sens 17(7):1425–1432

Miura H, Midorikawa S, Matsuoka M (2016) Building damage assessment using high-resolution satellite SAR images of the 2010 Haiti earthquake. Earthquake Spectra 32(1):591–610. ISSN: 8755-2930. https://doi.org/10.1193/033014EQS042M

Mondini A et al (2019) Sentinel-1 SAR amplitude imagery for rapid landslide detection. Remote Sens 11(7):760. ISSN: 2072-4292. https://doi.org/10.3390/rs11070760. https://www.mdpi.com/2072-4292/11/7/760

Moreira A et al (2013) A tutorial on synthetic aperture radar. IEEE Geosci Remote Sens Mag 1(1):6–43. ISSN: 2168-6831. https://doi.org/10.1109/MGRS.2013.2248301

Morena LC, James KV, Beck J (2004) An introduction to the RADARSAT-2 mission. Can J Remote Sens 30(3):221–234. https://doi.org/10.5589/m04-004

Motohka T et al (2017) Status of the advanced land observing satellite-2 (ALOS-2) and its follow-on L-band SAR mission. In: 2017 IEEE international geoscience and remote sensing symposium (IGARSS), pp 2427–2429. https://doi.org/10.1109/IGARSS.2017.8127482

Muthu K, Petrou M (2007) Landslide-Hazard mapping using an expert system and a GIS. IEEE Trans Geosci Remote Sens 45(2):522–531. ISSN: 0196-2892

NASA Goddard Space Flight Center (2007) The Global Landslide Catalog

Obata T et al (2019) The development status of the first demonstration satellite of our commercial small synthetic aperture radar satellite constellation. In: AIAA/USU conference on small satellites, pp 1–4

Olen S, Bookhagen B (2018) Mapping damage-affected areas after natural hazard events using Sentinel-1 coherence time series. Remote Sens 10(8):1272. ISSN: 2072-4292. https://doi.org/10.3390/rs10081272. http://www.mdpi.com/2072-4292/10/8/1272

Park S-J et al (2018) Landslide susceptibility mapping and comparison using decision tree models: a case study of Jumunjin Area, Korea. Remote Sens 10(10):1545. https://doi.org/10.3390/rs10101545

Rabus B, Pichierri M (2018) A new InSAR phase demodulation technique developed for a typical example of a complex, multi-lobed landslide displacement field, Fels Glacier Slide, Alaska. Remote Sens 10(7). ISSN: 2072-4292. https://doi.org/10.3390/rs10070995

Ramos-Bernal RN et al (2018) Evaluation of unsupervised change detection methods applied to landslide inventory mapping using ASTER imagery. Remote Sens 10(12). ISSN: 2072-4292. https://doi.org/10.3390/rs10121987

Ranjbar HR et al (2018) Using high-resolution satellite imagery to provide a relief priority map after earthquake. Nat Hazards 90(3):1087–1113. ISSN: 0921-030X. https://doi.org/10.1007/s11069-017-3085-y

Rau J, Jhan J, Rau R (2014) Semiautomatic object-oriented landslide recognition scheme from multisensor optical imagery and DEM. IEEE Trans Geosci Remote Sens 52(2):1336–1349. ISSN: 0196-2892

Riedel B, Walther A (2008) InSAR processing for the recognition of landslides. Adv Geosci 14:189–194. ISSN: 1680-7359. https://doi.org/10.5194/adgeo-14-189-2008. https://www.adv-geosci.net/14/189/2008/

Rosen P et al (2017) The NASA-ISRO SAR (NISAR) mission dual-band radar instrument preliminary design. In: 2017 IEEE international geoscience and remote sensing symposium (IGARSS), pp 3832–3835. https://doi.org/10.1109/IGARSS.2017.8127836

Rosenqvist A et al (2014) A brief overview of the SAOCOM Integrated Mission Acquisition Strategy (IMAS). In: 1st ESA SAOCOM companion satellite workshop, ESA ESTEC

Schlaffer S et al (2015) Flood detection from multi-temporal SAR data using harmonic analysis and change detection. Int J Appl Earth Obs Geoinf 38:15–24. ISSN: 0303-2434. https://doi.org/10.1016/j.jag.2014.12.001. http://www.sciencedirect.com/science/article/pii/S0303243414002645

Shi X et al (2015) Landslide deformation monitoring using point-like target offset tracking with multi-mode high-resolution TerraSAR-X data. ISPRS J Photogramm Remote Sens 105:128–140. ISSN: 0924-2716. https://doi.org/10.1016/j.isprsjprs.2015.03.017

Stanley T, Kirschbaum DB (2017) A heuristic approach to global landslide susceptibility mapping. Nat Hazards 87(1):145–164. ISSN: 1573-0840. https://doi.org/10.1007/s11069-017-2757-y

Sun J, Yu W, Deng Y (2017) The SAR payload design and performance for the GF-3 mission. Sensors 17(10). ISSN: 1424-8220. https://doi.org/10.3390/s17102419. http://www.mdpi.com/1424-8220/17/10/2419

Suri S et al (2015) TerraSAR-X/PAZ constellation: CONOPS, highlights and access solution. In: 2015 IEEE 5th Asia-Pacific conference on synthetic aperture radar (APSAR), pp 178–183. https://doi.org/10.1109/APSAR.2015.7306183

Tanase MA et al (2010) TerraSAR-X data for burn severity evaluation in Mediterranean forests on sloped terrain. IEEE Trans Geosci Remote Sens 48(2):917–929. ISSN: 0196-2892. https://doi.org/10.1109/TGRS.2009.2025943

Tanyaş H et al (2019) Rapid prediction of the magnitude scale of landslide events triggered by an earthquake. Landslides 16(4):661–676. ISSN: 1612-5118. https://doi.org/10.1007/s10346-019-01136-4

Tong X, Hong Z et al (2012) Building-damage detection using pre- and post-seismic high-resolution satellite stereo imagery: a case study of the May 2008 Wenchuan earthquake. ISPRS J Photogramm Remote Sens 68:13–27. ISSN: 0924-2716. https://doi.org/10.1016/j.isprsjprs.2011.12.004. http://linkinghub.elsevier.com/retrieve/pii/S0924271611001584

Tong X, Luo X et al (2018) An approach for flood monitoring by the combined use of Landsat 8 optical imagery and COSMO-SkyMed radar imagery. ISPRS J Photogramm Remote Sens 136:144–153. ISSN: 0924-2716

Torres R et al (2012) GMES Sentinel-1 mission. Remote Sens Environ 120:9–24. ISSN: 0034-4257. https://doi.org/10.1016/j.rse.2011.05.028. http://www.sciencedirect.com/science/article/pii/S0034425712000600

Verhegghen A et al (2016) The potential of Sentinel satellites for burnt area mapping and monitoring in the Congo Basin Forests. Remote Sens 8(12). ISSN: 2072-4292. https://doi.org/10.3390/rs8120986. https://www.mdpi.com/2072-4292/8/12/986

Wang Y, Fang Z, Hong H (2019) Comparison of convolutional neural networks for landslide susceptibility mapping in Yanshan County, China. Sci Total Environ 666:975–993. ISSN: 1879-1026. https://doi.org/10.1016/j.scitotenv.2019.02.263

Werninghaus R, Buckreuss S (2010) The TerraSAR-X mission and system design. IEEE Trans Geosci Remote Sens 48(2):606–614. ISSN: 0196-2892. https://doi.org/10.1109/TGRS.2009.2031062

Xu H (2006) Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int J Remote Sens 27(14):3025–3033

Yamagishi H, Yamazaki F (2018) Landslides by the 2018 Hokkaido Iburi-Tobu earthquake on September 6. Landslides 15(12):2521–2524. ISSN: 1612-5118. https://doi.org/10.1007/s10346-018-1092-z

Yamaguchi Y (2012) Disaster monitoring by fully polarimetric SAR data acquired with ALOS-PALSAR. Proc IEEE 100(10):2851–2860. ISSN: 0018-9219. https://doi.org/10.1109/JPROC.2012.2195469. http://ieeexplore.ieee.org/document/6205771/

Yang W, Wang M, Shi P (2013) Using MODIS NDVI time series to identify geographic patterns of landslides in vegetated regions. IEEE Geosci Remote Sens Lett 10(4):707–710. ISSN: 1545-598X. https://doi.org/10.1109/LGRS.2012.2219576

Yokoya N et al (2018) Open data for global multimodal land use classification: outcome of the 2017 IEEE GRSS Data Fusion Contest. IEEE J Sel Top Appl Earth Obs Remote Sens 11(5):1363–1377

Yun S-H et al (2015) Rapid damage mapping for the 2015 Mw 7.8 Gorkha earthquake using synthetic aperture radar data from COSMO-SkyMed and ALOS-2 satellites. Seismological Res Lett 86(6):1549–1556. ISSN: 0895-0695. https://doi.org/10.1785/0220150152. https://pubs.geoscienceworld.org/srl/article/86/6/1549-1556/315478

Yusuf Y, Matsuoka M, Yamazaki F (2001) Damage assessment after 2001 Gujarat earthquake using Landsat-7 satellite images. J Indian Soc Remote Sens 29(1):17–22. ISSN: 0974-3006. https://doi.org/10.1007/BF02989909

Zhuo L et al (2019) Evaluation of remotely sensed soil moisture for landslide hazard assessment. IEEE J Sel Top Appl Earth Obs Remote Sens 12(1):162–173. ISSN: 1939-1404

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Adriano, B., Yokoya, N., Xia, J., Baier, G. (2021). Big Earth Observation Data Processing for Disaster Damage Mapping. In: Werner, M., Chiang, YY. (eds) Handbook of Big Geospatial Data. Springer, Cham. https://doi.org/10.1007/978-3-030-55462-0_4

DOI: https://doi.org/10.1007/978-3-030-55462-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-55461-3

Online ISBN: 978-3-030-55462-0

eBook Packages: Mathematics and Statistics; Mathematics and Statistics (R0)