Abstract

The intrinsic volumes are measures of the content of a convex body. This paper applies probabilistic and information-theoretic methods to study the sequence of intrinsic volumes. The main result states that the intrinsic volume sequence concentrates sharply around a specific index, called the central intrinsic volume. Furthermore, among all convex bodies whose central intrinsic volume is fixed, an appropriately scaled cube has the intrinsic volume sequence with maximum entropy.

Keywords

- Alexandrov–Fenchel inequality

- Concentration

- Convex body

- Entropy

- Information theory

- Intrinsic volume

- Log-concave distribution

- Quermassintegral

- Ultra-log-concave sequence

6.1 Introduction and Main Results

Intrinsic volumes are the fundamental measures of content for a convex body. Some of the most celebrated results in convex geometry describe the properties of the intrinsic volumes and their interrelationships. In this paper, we identify several new properties of the sequence of intrinsic volumes by exploiting recent results from information theory and geometric functional analysis. In particular, we establish that the mass of the intrinsic volume sequence concentrates sharply around a specific index, which we call the central intrinsic volume. We also demonstrate that a scaled cube has the maximum-entropy distribution of intrinsic volumes among all convex bodies with a fixed central intrinsic volume.

6.1.1 Convex Bodies and Volume

For each natural number m, the Euclidean space \(\mathbb {R}^m\) is equipped with the \(\ell_2\) norm \(\left \Vert {\cdot } \right \Vert \), the associated inner product, and the canonical orthonormal basis. The origin of \(\mathbb {R}^m\) is written as \(\boldsymbol{0}_m\).

Throughout the paper, n denotes a fixed natural number. A convex body in \(\mathbb {R}^n\) is a compact and convex subset, possibly empty. Throughout this paper, K will denote a nonempty convex body in \(\mathbb {R}^n\). The dimension of the convex body, \(\dim \mathsf {K}\), is the dimension of the affine hull of K; the dimension takes values in the range {0, 1, 2, …, n}. When K has dimension j, we define the j-dimensional volume \(\mathrm{Vol}_j(\mathsf{K})\) to be the Lebesgue measure of K, computed relative to its affine hull. If K is zero-dimensional (i.e., a single point), then \(\mathrm{Vol}_0(\mathsf{K}) = 1\).

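For sets \(\mathsf {C} \subset \mathbb {R}^n\) and \(\mathsf {D} \subset \mathbb {R}^m\), we define the orthogonal direct product

$$\displaystyle \begin{aligned} \mathsf{C} \times \mathsf{D} := \{ (\boldsymbol{x}, \boldsymbol{y}) \in \mathbb{R}^{n+m} : \boldsymbol{x} \in \mathsf{C} \text{ and } \boldsymbol{y} \in \mathsf{D} \}. \end{aligned}$$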

To be precise, the concatenation \((\boldsymbol {x}, \boldsymbol {y}) \in \mathbb {R}^{n + m}\) places \(\boldsymbol {x} \in \mathbb {R}^n\) in the first n coordinates and \(\boldsymbol {y} \in \mathbb {R}^m\) in the remaining m coordinates. In particular, \(\mathsf{K} \times \{\boldsymbol{0}_m\}\) is the natural embedding of K into \(\mathbb {R}^{n + m}\).

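Several convex bodies merit special notation. The unit-volume cube is the set \(\mathsf {Q}_n := [0, 1]^n \subset \mathbb {R}^n\). We write \(\mathsf {B}_n := \{ \boldsymbol {x} \in \mathbb {R}^n : \left \Vert {\boldsymbol {x}} \right \Vert \leq 1 \}\) for the Euclidean unit ball. The volume \(\kappa_n\) and the surface area \(\omega_n\) of the Euclidean ball are given by the formulas

$$\displaystyle \begin{aligned} \kappa_n := \frac{\pi^{n/2}}{\Gamma(1 + n/2)} \quad \text{and}\quad \omega_n := n \kappa_n = \frac{2 \pi^{n/2}}{\Gamma(n/2)}. \end{aligned} \tag{6.1.1}$$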

As usual, Γ denotes the gamma function.

6.1.2 The Intrinsic Volumes

In this section, we introduce the intrinsic volumes, their properties, and connections to other geometric functionals. A good reference for this material is [32]. Intrinsic volumes are basic tools in stochastic and integral geometry [33], and they appear in the study of random fields [2].

We begin with a geometrically intuitive definition.

Definition 6.1.1 (Intrinsic Volumes)

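For each index j = 0, 1, 2, …, n, let \(\boldsymbol {P}_j \in \mathbb {R}^{n \times n}\) be the orthogonal projector onto a fixed j-dimensional subspace of \(\mathbb {R}^n\). Draw a rotation matrix \(\boldsymbol {Q} \in \mathbb {R}^{n \times n}\) uniformly at random (from the Haar measure on the compact, homogeneous group of n × n orthogonal matrices with determinant one). The intrinsic volumes of the nonempty convex body \(\mathsf {K} \subset \mathbb {R}^n\) are the quantities

$$\displaystyle \begin{aligned} V_j(\mathsf{K}) := \binom{n}{j} \frac{\kappa_n}{\kappa_j \kappa_{n-j}} \operatorname{\mathbb{E}} \big[ \mathrm{Vol}_j( \boldsymbol{P}_j \boldsymbol{Q} \mathsf{K} ) \big] \quad \text{for } j = 0, 1, 2, \dots, n. \end{aligned} \tag{6.1.2}$$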

We write \(\operatorname {\mathbb {E}}\) for expectation and \(\operatorname {\mathbb {E}}_{X}\) for expectation with respect to a specific random variable X. The intrinsic volumes of the empty set are identically zero: V j(∅) = 0 for each index j.

Up to scaling, the jth intrinsic volume is the average volume of a projection of the convex body onto a j-dimensional subspace, chosen uniformly at random. Following Federer [11], we have chosen the normalization in (6.1.2) to remove the dependence on the dimension in which the convex body is embedded. McMullen [25] introduced the term “intrinsic volumes”. In her work, Chevet [10] called V j the j-ième épaisseur or the “jth thickness”.

Example 6.1.2 (The Euclidean Ball)

We can easily calculate the intrinsic volumes of the Euclidean unit ball because each projection is simply a Euclidean unit ball of lower dimension. Thus,

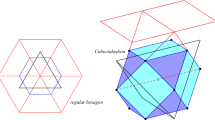

Example 6.1.3 (The Cube)

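We can also determine the intrinsic volumes of a cube:

$$\displaystyle \begin{aligned} V_j(\mathsf{Q}_n) = \binom{n}{j} \quad \text{for } j = 0, 1, 2, \dots, n. \end{aligned}$$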

See Sect. 6.5 for the details of the calculation. A classic reference is [30, pp. 224–227].
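As a quick numerical illustration of Examples 6.1.2 and 6.1.3, the following Python sketch evaluates the standard closed forms \(V_j(\mathsf{B}_n) = \binom{n}{j} \kappa_n / \kappa_{n-j}\) and \(V_j(s\mathsf{Q}_n) = \binom{n}{j} s^j\). The function names are illustrative choices of ours, and the code assumes the usual formula \(\kappa_m = \pi^{m/2} / \Gamma(1 + m/2)\) for the volume of the unit ball.

```python
from math import comb, gamma, pi


def kappa(m: int) -> float:
    """Volume of the Euclidean unit ball in R^m (kappa_0 = 1)."""
    return pi ** (m / 2) / gamma(1 + m / 2)


def ball_intrinsic_volumes(n: int) -> list[float]:
    """V_j(B_n) = C(n, j) * kappa_n / kappa_{n-j} for j = 0, ..., n."""
    return [comb(n, j) * kappa(n) / kappa(n - j) for j in range(n + 1)]


def cube_intrinsic_volumes(n: int, s: float = 1.0) -> list[float]:
    """V_j(s Q_n) = C(n, j) * s**j for j = 0, ..., n (homogeneity)."""
    return [comb(n, j) * s ** j for j in range(n + 1)]


# In R^3 the ball has Euler characteristic 1, half surface area 2*pi,
# and volume 4*pi/3; the unit cube has the binomial row (1, 3, 3, 1).
ball3 = ball_intrinsic_volumes(3)
cube3 = cube_intrinsic_volumes(3)
```

For the unit ball in \(\mathbb{R}^3\), the entry of index 2 is half the surface area and the entry of index 3 is the volume, in agreement with Sect. 6.1.2.1.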

6.1.2.1 Geometric Functionals

The intrinsic volumes are closely related to familiar geometric functionals. The intrinsic volume \(V_0\) is called the Euler characteristic; it takes the value zero for the empty set and the value one for each nonempty convex body. The intrinsic volume \(V_1\) is proportional to the mean width, scaled so that \(V_1([0, 1] \times \{\boldsymbol{0}_{n-1}\}) = 1\). Meanwhile, \(V_{n-1}\) is half the surface area, and \(V_n\) coincides with the ordinary volume measure, \(\mathrm{Vol}_n\).

6.1.2.2 Properties

The intrinsic volumes satisfy many important properties. Let \(\mathsf {C}, \mathsf {K} \subset \mathbb {R}^n\) be nonempty convex bodies. For each index j = 0, 1, 2, …, n, the intrinsic volume V j is…

1. Nonnegative: \(V_j(\mathsf{K}) \geq 0\).

2. Monotone: \(\mathsf{C} \subset \mathsf{K}\) implies \(V_j(\mathsf{C}) \leq V_j(\mathsf{K})\).

3. Homogeneous: \(V_j(\lambda \mathsf{K}) = \lambda^j V_j(\mathsf{K})\) for each λ ≥ 0.

4. Invariant: \(V_j(T\mathsf{K}) = V_j(\mathsf{K})\) for each proper rigid motion T. That is, T acts by rotation and translation.

5. Intrinsic: \(V_j(\mathsf{K}) = V_j(\mathsf{K} \times \{\boldsymbol{0}_m\})\) for each natural number m.

6. A Valuation: \(V_j(\emptyset) = 0\). If \(\mathsf{C} \cup \mathsf{K}\) is also a convex body, then

   $$\displaystyle \begin{aligned} V_j( \mathsf{C} \cap \mathsf{K} ) + V_j( \mathsf{C} \cup \mathsf{K} ) = V_j(\mathsf{C}) + V_j(\mathsf{K}). \end{aligned}$$

7. Continuous: If \(\mathsf{K}_m \to \mathsf{K}\) in the Hausdorff metric, then \(V_j(\mathsf{K}_m) \to V_j(\mathsf{K})\).

With sufficient energy, one may derive all of these facts directly from Definition 6.1.1. See the books [14, 20, 30, 32, 33] for further information about intrinsic volumes and related matters.

6.1.2.3 Hadwiger’s Characterization Theorems

Hadwiger [15,16,17] proved several wonderful theorems that characterize the intrinsic volumes. To state these results, we need a short definition. A valuation F on \(\mathbb {R}^n\) is simple if F(K) = 0 whenever \(\dim \mathsf {K} < n\).

Fact 6.1.4 (Uniqueness of Volume)

Suppose that F is a simple, invariant, continuous valuation on convex bodies in \(\mathbb {R}^n\). Then F is a scalar multiple of the intrinsic volume V n.

Fact 6.1.5 (The Basis of Intrinsic Volumes)

Suppose that F is an invariant, continuous valuation on convex bodies in \(\mathbb {R}^n\). Then F is a linear combination of the intrinsic volumes V 0, V 1, V 2, …, V n.

Together, these theorems demonstrate the fundamental importance of intrinsic volumes in convex geometry. They also construct a bridge to the field of integral geometry, which provides explicit formulas for geometric functionals defined by integrating over geometric groups (e.g., the family of proper rigid motions).

6.1.2.4 Quermassintegrals

With a different normalization, the mean projection volume appearing in (6.1.2) is also known as a quermassintegral. The relationship between the quermassintegrals and the intrinsic volumes is

The notation reflects the fact that the quermassintegral \(W^{(n)}_j\) depends on the ambient dimension n, while the intrinsic volume does not.

6.1.3 The Intrinsic Volume Random Variable

In view of Example 6.1.3, we see that the intrinsic volume sequence of the cube Q n is sharply peaked (around index n∕2). Example 6.1.2 shows that intrinsic volumes of the Euclidean ball B n drop off quickly (starting around index \(\sqrt {2\pi n}\)). This observation motivates us to ask whether the intrinsic volumes of a general convex body also exhibit some type of concentration.

It is natural to apply probabilistic methods to address this question. To that end, we first need to normalize the intrinsic volumes to construct a probability distribution.

Definition 6.1.6 (Normalized Intrinsic Volumes)

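The total intrinsic volume of the convex body K, also known as the Wills functional [18, 25, 37], is the quantity

$$\displaystyle \begin{aligned} W(\mathsf{K}) := \sum_{j=0}^n V_j(\mathsf{K}). \end{aligned} \tag{6.1.3}$$

The normalized intrinsic volumes compose the sequence

$$\displaystyle \begin{aligned} \tilde{V}_j(\mathsf{K}) := \frac{V_j(\mathsf{K})}{W(\mathsf{K})} \quad \text{for } j = 0, 1, 2, \dots, n. \end{aligned}$$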

In particular, the sequence \(\{ \tilde {V}_j(\mathsf {K}) : j = 0, 1, 2, \dots , n \}\) forms a probability distribution.

In spite of the similarity of notation, the total intrinsic volume W should not be confused with a quermassintegral.

We may now construct a random variable that reflects the distribution of the intrinsic volumes of a convex body.

Definition 6.1.7 (Intrinsic Volume Random Variable)

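The intrinsic volume random variable \(Z_{\mathsf{K}}\) associated with a convex body K takes nonnegative integer values according to the distribution

$$\displaystyle \begin{aligned} \mathbb{P}\{ Z_{\mathsf{K}} = j \} = \tilde{V}_j(\mathsf{K}) \quad \text{for } j = 0, 1, 2, \dots, n. \end{aligned} \tag{6.1.4}$$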

The mean of the intrinsic volume random variable plays a special role in the analysis, so we exalt it with its own name and notation.

Definition 6.1.8 (Central Intrinsic Volume)

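The central intrinsic volume of the convex body K is the quantity

$$\displaystyle \begin{aligned} \Delta(\mathsf{K}) := \operatorname{\mathbb{E}} Z_{\mathsf{K}} = \sum_{j=0}^n j \cdot \tilde{V}_j(\mathsf{K}). \end{aligned} \tag{6.1.5}$$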

Equivalently, the central intrinsic volume is the centroid of the sequence of intrinsic volumes.

Since the intrinsic volume sequence of a convex body \(\mathsf {K} \subset \mathbb {R}^n\) is supported on {0, 1, 2, …, n}, it is immediate that the central intrinsic volume satisfies Δ(K) ∈ [0, n]. The extreme n is unattainable (because a nonempty convex body has Euler characteristic V 0(K) = 1). But it is easy to construct examples that achieve values across the rest of the range.

Example 6.1.9 (The Scaled Cube)

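Fix s ∈ [0, ∞). Using Example 6.1.3 and the homogeneity of intrinsic volumes, we see that the total intrinsic volume of the scaled cube is

$$\displaystyle \begin{aligned} W(s \mathsf{Q}_n) = \sum_{j=0}^n \binom{n}{j} s^j = (1 + s)^n. \end{aligned}$$

The central intrinsic volume of the scaled cube is

$$\displaystyle \begin{aligned} \Delta(s \mathsf{Q}_n) = \frac{1}{(1+s)^n} \sum_{j=0}^n j \binom{n}{j} s^j = \sum_{j=0}^n j \binom{n}{j} \left( \frac{s}{1+s} \right)^{j} \left( \frac{1}{1+s} \right)^{n-j} = \frac{ns}{1+s}. \end{aligned}$$

We recognize the mean of the binomial random variable Bin(s∕(1 + s), n) to reach the last identity. Note that the quantity \(\Delta(s\mathsf{Q}_n) = ns/(1+s)\) sweeps through the interval [0, n) as we vary s ∈ [0, ∞).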
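The two closed forms in this example are easy to confirm numerically. The sketch below, with variable names of our own choosing, builds the intrinsic volume sequence \(\binom{n}{j} s^j\) of a scaled cube and compares the total and the centroid with \((1+s)^n\) and \(ns/(1+s)\).

```python
from math import comb


def central_intrinsic_volume(volumes):
    """Centroid of a nonnegative sequence indexed by j = 0, 1, ..., n."""
    total = sum(volumes)
    return sum(j * v for j, v in enumerate(volumes)) / total


n, s = 10, 0.5
volumes = [comb(n, j) * s ** j for j in range(n + 1)]  # V_j(s Q_n)

total = sum(volumes)                        # should match (1 + s)**n
delta = central_intrinsic_volume(volumes)   # should match n*s/(1 + s)
```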

Example 6.1.10 (Large Sets)

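More generally, we can compute the limits of the normalized intrinsic volumes of a growing set:

$$\displaystyle \begin{aligned} \lim_{s \to \infty} \tilde{V}_j(s \mathsf{K}) = \begin{cases} 1, & j = \dim \mathsf{K}; \\ 0, & j \neq \dim \mathsf{K}. \end{cases} \end{aligned}$$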

This point follows from the homogeneity of intrinsic volumes, noted in Sect. 6.1.2.2.

6.1.4 Concentration of Intrinsic Volumes

Our main result states that the intrinsic volume random variable concentrates sharply around the central intrinsic volume.

Theorem 6.1.11 (Concentration of Intrinsic Volumes)

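Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\). The variance satisfies

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] \leq 4n. \end{aligned}$$

Furthermore, in the range \(0 \leq t \leq \sqrt {n}\), we have the tail inequality

$$\displaystyle \begin{aligned} \mathbb{P}\big\{ \vert Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \vert \geq t \sqrt{n} \big\} \leq 2 \mathrm{e}^{-3t^2/28}. \end{aligned}$$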

To prove this theorem, we first convert questions about the intrinsic volume random variable into questions about metric geometry (Sect. 6.2). We reinterpret the metric geometry formulations in terms of the information content of a log-concave probability density. Then we can control the variance (Sect. 6.3) and concentration properties (Sect. 6.4) of the intrinsic volume random variable using the analogous results for the information content random variable.

A general probability distribution on {0, 1, 2, …, n} can have variance as large as \(n^2/4\). In contrast, the intrinsic volume random variable has variance no greater than 4n. Moreover, the intrinsic volume random variable behaves, at worst, like a normal random variable with mean \(\operatorname {\mathbb {E}} Z_{\mathsf {K}}\) and variance less than 5n. Thus, most of the mass of the intrinsic volume sequence is concentrated on an interval of about \(O( \sqrt {n} )\) indices.

Looking back to Example 6.1.3, concerning the unit-volume cube Q n, we see that Theorem 6.1.11 gives a qualitatively accurate description of the intrinsic volume sequence. On the other hand, the bounds for scaled cubes sQ n can be quite poor; see Sect. 6.5.3.
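To see how the variance bound of 4n compares with concrete examples, the following sketch computes the mean and variance of the intrinsic volume random variable for the unit cube and the unit ball in dimension 50. It assumes the standard closed forms \(V_j(\mathsf{Q}_n) = \binom{n}{j}\) and \(V_j(\mathsf{B}_n) = \binom{n}{j}\kappa_n/\kappa_{n-j}\); the helper names are ours.

```python
from math import comb, gamma, pi


def kappa(m):
    """Volume of the Euclidean unit ball in R^m."""
    return pi ** (m / 2) / gamma(1 + m / 2)


def mean_and_variance(volumes):
    """Mean and variance of the index j under weights proportional to volumes."""
    total = sum(volumes)
    probs = [v / total for v in volumes]
    mean = sum(j * p for j, p in enumerate(probs))
    var = sum((j - mean) ** 2 * p for j, p in enumerate(probs))
    return mean, var


n = 50
cube = [comb(n, j) for j in range(n + 1)]
ball = [comb(n, j) * kappa(n) / kappa(n - j) for j in range(n + 1)]

cube_mean, cube_var = mean_and_variance(cube)  # Bin(1/2, n): mean n/2, variance n/4
ball_mean, ball_var = mean_and_variance(ball)
```

For the cube the variance is n∕4, so the bound 4n is correct in order of magnitude but loose by a constant factor.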

6.1.5 Concentration of Conic Intrinsic Volumes

Theorem 6.1.11 and its proof parallel recent developments in the theory of conic intrinsic volumes, which appear in the papers [3, 13, 22]. Using the concentration of conic intrinsic volumes, we were able to establish that random configurations of convex cones exhibit striking phase transitions; these facts have applications in signal processing [3, 21, 23, 24]. We are confident that extending the ideas in the current paper will help us discover new phase transition phenomena in Euclidean integral geometry.

6.1.6 Maximum-Entropy Convex Bodies

The probabilistic approach to the intrinsic volume sequence suggests other questions to investigate. For instance, we can study the entropy of the intrinsic volume random variable, which reflects the dispersion of the intrinsic volume sequence.

Definition 6.1.12 (Intrinsic Entropy)

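Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body. The intrinsic entropy of K is the entropy of the intrinsic volume random variable \(Z_{\mathsf{K}}\):

$$\displaystyle \begin{aligned} \mathrm{IntEnt}(\mathsf{K}) := \mathrm{Ent}[ Z_{\mathsf{K}} ] = - \sum_{j=0}^n \tilde{V}_j(\mathsf{K}) \cdot \log \tilde{V}_j(\mathsf{K}). \end{aligned}$$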

We have the following extremal result.

Theorem 6.1.13 (Cubes Have Maximum Entropy)

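Fix the ambient space \(\mathbb {R}^n\), and let d ∈ [0, n). There is a scaled cube whose central intrinsic volume equals d:

$$\displaystyle \begin{aligned} \Delta( s_{d,n} \mathsf{Q}_n ) = d \quad \text{when}\quad s_{d,n} = \frac{d}{n - d}. \end{aligned}$$

Among convex bodies with central intrinsic volume d, the scaled cube \(s_{d,n} \mathsf{Q}_n\) has the maximum intrinsic entropy. Among all convex bodies, the unit-volume cube has the maximum intrinsic entropy. In symbols,

$$\displaystyle \begin{aligned} \max \{ \mathrm{IntEnt}(\mathsf{K}) : \Delta(\mathsf{K}) = d \} = \mathrm{IntEnt}( s_{d,n} \mathsf{Q}_n ) \leq \mathrm{IntEnt}( \mathsf{Q}_n ). \end{aligned}$$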

The maximum takes place over all nonempty convex bodies \(\mathsf {K} \subset \mathbb {R}^n\).

The proof of Theorem 6.1.13 also depends on recent results from information theory, as well as some deep properties of the intrinsic volume sequence. This analysis appears in Sect. 6.6.
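The role of the unit-volume cube among scaled cubes can be checked directly, because the intrinsic volume random variable of \(s\mathsf{Q}_n\) is binomial with success probability s∕(1 + s). The sketch below is only an illustration restricted to cubes (it does not test the theorem over all convex bodies), and the function names are ours.

```python
from math import comb, log


def intrinsic_entropy(volumes):
    """Shannon entropy (in nats) of the normalized sequence; 0*log(0) is treated as 0."""
    total = sum(volumes)
    probs = [v / total for v in volumes]
    return -sum(p * log(p) for p in probs if p > 0)


def scaled_cube_volumes(n, s):
    """Intrinsic volumes V_j(s Q_n) = C(n, j) * s**j."""
    return [comb(n, j) * s ** j for j in range(n + 1)]


n = 12
scales = [0.0, 0.25, 0.5, 1.0, 2.0, 4.0]
entropies = {s: intrinsic_entropy(scaled_cube_volumes(n, s)) for s in scales}
best_scale = max(entropies, key=entropies.get)  # scale with the largest entropy
```

The scale s = 0 collapses the cube to a point, which has zero intrinsic entropy, consistent with Remark 6.1.14.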

Theorem 6.1.13 joins a long procession of results on the extremal properties of the cube. In particular, the cube solves the (affine) reverse isoperimetric problem for symmetric convex bodies [5]. That is, every symmetric convex body \(\mathsf {K} \subset \mathbb {R}^n\) has an affine image whose volume is one and whose surface area is not greater than 2n, the surface area of Q n. See Sect. 6.1.7.2 for an equivalent statement.

Remark 6.1.14 (Minimum Entropy)

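The convex body consisting of a single point \(\boldsymbol {x}_0 \in \mathbb {R}^n\) has the minimum intrinsic entropy: \(\mathrm{IntEnt}(\{\boldsymbol{x}_0\}) = 0\). Very large convex bodies also have negligible entropy:

$$\displaystyle \begin{aligned} \lim_{s \to \infty} \mathrm{IntEnt}( s \mathsf{K} ) = 0. \end{aligned}$$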

The limit is a consequence of Example 6.1.10.

6.1.7 Other Inequalities for Intrinsic Volumes

The classic literature on convex geometry contains a number of prominent inequalities relating the intrinsic volumes, and this topic continues to arouse interest. This section offers a short overview of the main results of this type. Our presentation is influenced by [26, 28]. See [32, Chap. 7] for a comprehensive treatment.

Remark 6.1.15 (Unrelated Work)

Although the title of the paper [1] includes the phrase “concentration of intrinsic volumes,” the meaning is quite different. Indeed, the focus of that work is to study hyperplane arrangements via the intrinsic volumes of a random sequence associated with the arrangement.

6.1.7.1 Ultra-Log-Concavity

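The Alexandrov–Fenchel inequality (AFI) is a profound result on the behavior of mixed volumes; see [32, Sec. 7.3] or [34]. We can specialize the AFI from mixed volumes to the particular case of quermassintegrals. In this instance, the AFI states that the quermassintegrals of a convex body \(\mathsf {K} \subset \mathbb {R}^n\) compose a log-concave sequence:

$$\displaystyle \begin{aligned} W^{(n)}_j(\mathsf{K})^2 \geq W^{(n)}_{j-1}(\mathsf{K}) \cdot W^{(n)}_{j+1}(\mathsf{K}) \quad \text{for } j = 1, 2, 3, \dots, n-1. \end{aligned} \tag{6.1.6}$$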

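As Chevet [10] and McMullen [26] independently observed, the log-concavity (6.1.6) of the quermassintegral sequence implies that the intrinsic volumes form an ultra-log-concave (ULC) sequence:

$$\displaystyle \begin{aligned} j \cdot V_j(\mathsf{K})^2 \geq (j+1) \cdot V_{j-1}(\mathsf{K}) \cdot V_{j+1}(\mathsf{K}) \quad \text{for } j = 1, 2, 3, \dots, n-1. \end{aligned} \tag{6.1.7}$$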

This fact plays a key role in the proof of Theorem 6.1.13. For more information on log-concavity and ultra-log-concavity, see the survey article [31].
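A small numerical check can make the ULC property concrete. The sketch below assumes that the ULC condition takes the form \(j V_j^2 \geq (j+1) V_{j-1} V_{j+1}\) (equivalently, that the sequence \(j!\, V_j\) is log-concave) and tests it on the closed-form intrinsic volumes of the cube and the ball. The function names and the tolerance are our own choices.

```python
from math import comb, gamma, pi


def kappa(m):
    """Volume of the Euclidean unit ball in R^m."""
    return pi ** (m / 2) / gamma(1 + m / 2)


def is_ultra_log_concave(v, rel_tol=1e-12):
    """Check j*v[j]**2 >= (j+1)*v[j-1]*v[j+1] for interior indices j."""
    return all(
        j * v[j] ** 2 >= (j + 1) * v[j - 1] * v[j + 1] * (1 - rel_tol)
        for j in range(1, len(v) - 1)
    )


n = 20
cube = [float(comb(n, j)) for j in range(n + 1)]
ball = [comb(n, j) * kappa(n) / kappa(n - j) for j in range(n + 1)]

cube_ok = is_ultra_log_concave(cube)
ball_ok = is_ultra_log_concave(ball)
bad_ok = is_ultra_log_concave([1.0, 1.0, 5.0])  # a sequence that violates the condition
```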

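From (6.1.7), Chevet and McMullen both deduce that all of the intrinsic volumes are controlled by the first one, and they derive an estimate for the total intrinsic volume:

$$\displaystyle \begin{aligned} V_j(\mathsf{K}) \leq \frac{1}{j!} V_1(\mathsf{K})^j \quad \text{for } j = 1, 2, 3, \dots, n; \quad \text{in particular,}\quad W(\mathsf{K}) \leq \mathrm{e}^{V_1(\mathsf{K})}. \end{aligned}$$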

This estimate implies some growth and decay properties of the intrinsic volume sequence. An interesting application appears in Vitale’s paper [35], which derives concentration for the supremum of a Gaussian process from the foregoing bound on the total intrinsic volume.

It is possible to establish a concentration result for intrinsic volumes as a direct consequence of (6.1.7). Indeed, it is intuitive that a ULC sequence should concentrate around its centroid. This point follows from Caputo et al. [9, Sec. 3.2], which transcribes the usual semigroup proof of a log-Sobolev inequality to the discrete setting. When applied to intrinsic volumes, this method gives concentration on the scale of the mean width V 1(K) of the convex body K. This result captures a phenomenon different from Theorem 6.1.11, where the scale for the concentration is the dimension n.

6.1.7.2 Isoperimetric Ratios

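Another classical consequence of the AFI is a sequence of comparisons for the isoperimetric ratios of the volume of a convex body \(\mathsf {K} \subset \mathbb {R}^n\), relative to the Euclidean ball \(\mathsf{B}_n\):

$$\displaystyle \begin{aligned} \left( \frac{V_n(\mathsf{K})}{V_n(\mathsf{B}_n)} \right)^{1/n} \leq \left( \frac{V_{n-1}(\mathsf{K})}{V_{n-1}(\mathsf{B}_n)} \right)^{1/(n-1)} \leq \dots \leq \frac{V_1(\mathsf{K})}{V_1(\mathsf{B}_n)}. \end{aligned} \tag{6.1.8}$$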

The first inequality is the isoperimetric inequality, and the inequality between V n and V 1 is called Urysohn’s inequality [32, Sec. 7.2]. Isoperimetric ratios play a prominent role in asymptotic convex geometry; for example, see [4, 6, 29].

Some of the inequalities in (6.1.8) can be inverted by applying affine transformations. For example, Ball’s reverse isoperimetric inequality [5] states that K admits an affine image \(\hat {\mathsf {K}}\) for which

The sharp value for the constant is known; equality holds when K is a simplex. If we restrict our attention to symmetric convex bodies, then the cube is extremal.

The recent paper [28] of Paouris et al. contains a more complete, but less precise, set of reversals. Suppose that K is a symmetric convex body. Then there is a parameter β ⋆ := β ⋆(K) for which

The constants here are universal but unspecified. This result implies that the prefix of the sequence of isoperimetric ratios is roughly constant. The result (6.1.9) leaves open the question about the behavior of the sequence beyond the distinguished point.

It would be interesting to reconcile the work of Paouris et al. [28] with Theorem 6.1.11. In particular, it is unclear whether the isoperimetric ratios remain constant, or whether they exhibit some type of phase transition. We believe that our techniques have implications for this question.

6.2 Steiner’s Formula and Distance Integrals

The first step in our program is to convert questions about the intrinsic volume random variable into questions in metric geometry. We can accomplish this goal using Steiner’s formula, which links the intrinsic volumes of a convex body to its expansion properties. We reinterpret Steiner’s formula as a distance integral, and we use this result to compute moments of the intrinsic volume random variable. This technique, which appears to be novel, drives our approach.

6.2.1 Steiner’s Formula

The Minkowski sum of a nonempty convex body and a Euclidean ball is called a parallel body. Steiner’s formula gives an explicit expansion for the volume of the parallel body in terms of the intrinsic volumes of the convex body.

Fact 6.2.1 (Steiner’s Formula)

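Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body. For each λ ≥ 0,

$$\displaystyle \begin{aligned} \mathrm{Vol}_n( \mathsf{K} + \lambda \mathsf{B}_n ) = \sum_{j=0}^n \lambda^{n-j} \kappa_{n-j} V_j(\mathsf{K}). \end{aligned}$$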

In other words, the volume of the parallel body is a polynomial function of the expansion radius. Moreover, the coefficients depend only on the intrinsic volumes of the convex body. The proof of Fact 6.2.1 is fairly easy; see [14, 32].
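Steiner's formula can be sanity-checked in the plane. For the rectangle \([0,a] \times [0,b]\), the intrinsic volumes are \(V_0 = 1\), \(V_1 = a + b\) (half the perimeter), and \(V_2 = ab\), so the polynomial \(\sum_j \lambda^{2-j} \kappa_{2-j} V_j\) should equal the area of the parallel body. The sketch below compares this polynomial with a midpoint-grid estimate of that area. The grid resolution and the function names are our own assumptions.

```python
from math import hypot, pi


def steiner_area(a, b, lam):
    """Steiner polynomial for the rectangle [0, a] x [0, b] expanded by radius lam."""
    v = [1.0, a + b, a * b]       # V_0, V_1, V_2 of the rectangle
    kappa = [1.0, 2.0, pi]        # kappa_0, kappa_1, kappa_2
    return sum(lam ** (2 - j) * kappa[2 - j] * v[j] for j in range(3))


def grid_area(a, b, lam, steps=400):
    """Midpoint-grid estimate of the area of {x : dist(x, rectangle) <= lam}."""
    hx = (a + 2 * lam) / steps
    hy = (b + 2 * lam) / steps
    count = 0
    for i in range(steps):
        x = -lam + (i + 0.5) * hx
        dx = max(-x, 0.0, x - a)          # horizontal distance to the rectangle
        for k in range(steps):
            y = -lam + (k + 0.5) * hy
            dy = max(-y, 0.0, y - b)      # vertical distance to the rectangle
            if hypot(dx, dy) <= lam:
                count += 1
    return count * hx * hy


exact = steiner_area(2.0, 1.0, 0.5)
estimate = grid_area(2.0, 1.0, 0.5)
```

The polynomial reproduces the familiar decomposition of the parallel body: the rectangle itself, four edge strips of total area equal to the perimeter times λ, and four quarter-disks of total area πλ².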

Remark 6.2.2 (Steiner and Kubota)

Steiner’s formula can be used to define the intrinsic volumes. The definition we have given in (6.1.2) is usually called Kubota’s formula; it can be derived as a consequence of Fact 6.2.1 and Cauchy’s formula for surface area. For example, see [4, Sec. B.5].

6.2.2 Distance Integrals

The parallel body can also be expressed as the set of points within a fixed distance of the convex body. This observation motivates us to introduce the distance to a convex set.

Definition 6.2.3 (Distance to a Convex Body)

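The distance to a nonempty convex body K is the function

$$\displaystyle \begin{aligned} \operatorname{\mathrm{dist}}( \boldsymbol{x}, \mathsf{K} ) := \min \{ \left\Vert \boldsymbol{y} - \boldsymbol{x} \right\Vert : \boldsymbol{y} \in \mathsf{K} \} \quad \text{where } \boldsymbol{x} \in \mathbb{R}^n. \end{aligned}$$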

It is not hard to show that the distance, \( \operatorname {\mathrm {dist}}(\cdot , \mathsf {K})\), and its square, \( \operatorname {\mathrm {dist}}^2( \cdot , \mathsf {K} )\), are both convex functions.

Here is an alternative statement of Steiner’s formula in terms of distance integrals [18].

Proposition 6.2.4 (Distance Integrals)

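Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body. Let \(f : \mathbb {R}_+ \to \mathbb {R}\) be an absolutely integrable function. Provided that the integrals on the right-hand side converge,

$$\displaystyle \begin{aligned} \int_{\mathbb{R}^n} f( \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) ) \, \mathrm{d}\boldsymbol{x} = f(0) \cdot V_n(\mathsf{K}) + \sum_{j=0}^{n-1} \left( \int_0^\infty f(r) \, r^{n-j-1} \, \mathrm{d}r \right) \omega_{n-j} V_j(\mathsf{K}). \end{aligned}$$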

This result is equivalent to Fact 6.2.1.

Proof

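For r > 0, Steiner’s formula gives an expression for the volume of the locus of points within distance r of the convex body:

$$\displaystyle \begin{aligned} \mathrm{Vol}_n \{ \boldsymbol{x} \in \mathbb{R}^n : \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) \leq r \} = \mathrm{Vol}_n( \mathsf{K} + r \mathsf{B}_n ) = \sum_{j=0}^n r^{n-j} \kappa_{n-j} V_j(\mathsf{K}). \end{aligned}$$

The rate of change in this volume satisfies

$$\displaystyle \begin{aligned} \frac{\mathrm{d}}{\mathrm{d}r} \mathrm{Vol}_n \{ \boldsymbol{x} \in \mathbb{R}^n : \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) \leq r \} = \sum_{j=0}^{n-1} (n-j) \, r^{n-j-1} \kappa_{n-j} V_j(\mathsf{K}) = \sum_{j=0}^{n-1} r^{n-j-1} \omega_{n-j} V_j(\mathsf{K}). \end{aligned} \tag{6.2.1}$$

We have used the relation (6.1.1) that \(\omega_{n-j} = (n-j) \kappa_{n-j}\).

Let \(\mu_\sharp\) be the push-forward of the Lebesgue measure on \(\mathbb {R}^n\) to \(\mathbb {R}_+\) by the function \( \operatorname {\mathrm {dist}}(\cdot , \mathsf {K})\). That is,

$$\displaystyle \begin{aligned} \mu_\sharp(\mathsf{A}) := \mathrm{Vol}_n \{ \boldsymbol{x} \in \mathbb{R}^n : \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) \in \mathsf{A} \} \quad \text{for each Borel set } \mathsf{A} \subset \mathbb{R}_+. \end{aligned}$$

This measure clearly satisfies \(\mu_\sharp(\{0\}) = V_n(\mathsf{K})\). Beyond that, when 0 < a < b,

$$\displaystyle \begin{aligned} \mu_\sharp( (a, b] ) = \int_a^b \frac{\mathrm{d}}{\mathrm{d}r} \mathrm{Vol}_n \{ \boldsymbol{x} \in \mathbb{R}^n : \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) \leq r \} \, \mathrm{d}r. \end{aligned}$$

Therefore, by definition of the push-forward,

$$\displaystyle \begin{aligned} \int_{\mathbb{R}^n} f( \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) ) \, \mathrm{d}\boldsymbol{x} = \int_{\mathbb{R}_+} f(r) \, \mathrm{d}\mu_\sharp(r) = f(0) \cdot V_n(\mathsf{K}) + \int_0^\infty f(r) \cdot \frac{\mathrm{d}}{\mathrm{d}r} \mathrm{Vol}_n \{ \boldsymbol{x} \in \mathbb{R}^n : \operatorname{\mathrm{dist}}(\boldsymbol{x}, \mathsf{K}) \leq r \} \, \mathrm{d}r. \end{aligned}$$

Introduce (6.2.1) into the last display to arrive at the result. □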

6.2.3 Moments of the Intrinsic Volume Sequence

We can compute moments (i.e., linear functionals) of the sequence of intrinsic volumes by varying the function f in Proposition 6.2.4. To that end, it is helpful to make another change of variables.

Corollary 6.2.5 (Distance Integrals II)

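Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body. Let \(g : \mathbb {R}_+ \to \mathbb {R}\) be an absolutely integrable function. Provided the integrals on the right-hand side converge,

$$\displaystyle \begin{aligned} \int_{\mathbb{R}^n} g( \pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K}) ) \cdot \mathrm{e}^{-\pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \, \mathrm{d}\boldsymbol{x} = g(0) \cdot V_n(\mathsf{K}) + \sum_{j=0}^{n-1} \left( \frac{1}{\Gamma((n-j)/2)} \int_0^\infty g(r) \, r^{-1 + (n-j)/2} \, \mathrm{e}^{-r} \, \mathrm{d}r \right) V_j(\mathsf{K}). \end{aligned}$$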

Proof

Set \(f(r) = g( \pi r^2 ) \cdot \mathrm {e}^{-\pi r^2}\) in Proposition 6.2.4 and invoke (6.1.1). □

We are now prepared to compute some specific moments of the intrinsic volume sequence by making special choices of g in Corollary 6.2.5.

Example 6.2.6 (Total Intrinsic Volume)

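Consider the case where g(r) = 1. We obtain the appealing formula

$$\displaystyle \begin{aligned} \int_{\mathbb{R}^n} \mathrm{e}^{-\pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \, \mathrm{d}\boldsymbol{x} = \sum_{j=0}^n V_j(\mathsf{K}) = W(\mathsf{K}). \end{aligned}$$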

The total intrinsic volume W(K) was defined in (6.1.3). This identity appears in [18, 25].
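The identity in this example, namely that the integral of \(\mathrm{e}^{-\pi \operatorname{dist}^2(\boldsymbol{x}, \mathsf{K})}\) over \(\mathbb{R}^n\) equals the total intrinsic volume, is easy to test in one dimension. For the interval \(\mathsf{K} = [0, a] \subset \mathbb{R}\), the intrinsic volumes are \(V_0 = 1\) and \(V_1 = a\), so the integral should equal 1 + a. The sketch below uses a plain midpoint rule; the truncation width and step count are our own assumptions.

```python
from math import exp, pi


def dist_to_interval(x, a):
    """Euclidean distance from the point x to the interval [0, a]."""
    return max(-x, 0.0, x - a)


def gaussian_distance_integral(a, half_width=6.0, steps=100_000):
    """Midpoint-rule estimate of the integral of exp(-pi * dist(x, [0, a])**2)."""
    lo, hi = -half_width, a + half_width   # the integrand is negligible outside
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h
        total += exp(-pi * dist_to_interval(x, a) ** 2)
    return total * h


a = 2.5
integral = gaussian_distance_integral(a)
wills = 1.0 + a   # W([0, a]) = V_0 + V_1
```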

Example 6.2.7 (Central Intrinsic Volume)

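The choice g(r) = 2r∕W(K) yields

$$\displaystyle \begin{aligned} \frac{1}{W(\mathsf{K})} \int_{\mathbb{R}^n} 2 \pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K}) \cdot \mathrm{e}^{-\pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \, \mathrm{d}\boldsymbol{x} = \frac{1}{W(\mathsf{K})} \sum_{j=0}^{n} (n - j) \cdot V_j(\mathsf{K}) = n - \Delta(\mathsf{K}). \end{aligned}$$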

We have recognized the total intrinsic volume (6.1.3) and the central intrinsic volume (6.1.5).

Example 6.2.8 (Generating Functions)

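We can also develop an expression for the generating function of the intrinsic volume sequence by selecting \(g(r) = \mathrm {e}^{(1-\lambda ^2) r}\). Thus,

$$\displaystyle \begin{aligned} \int_{\mathbb{R}^n} \mathrm{e}^{-\lambda^2 \pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \, \mathrm{d}\boldsymbol{x} = \lambda^{-n} \sum_{j=0}^n \lambda^j V_j(\mathsf{K}). \end{aligned} \tag{6.2.2}$$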

This expression is valid for all λ > 0. See [18] or [33, Lem. 14.2.1].

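We can reframe the relation (6.2.2) in terms of the moment generating function of the intrinsic volume random variable \(Z_{\mathsf{K}}\). To do so, we make the change of variables \(\lambda = \mathrm{e}^{\theta}\) and divide by the total intrinsic volume W(K):

$$\displaystyle \begin{aligned} \operatorname{\mathbb{E}} \mathrm{e}^{\theta (Z_{\mathsf{K}} - n)} = \frac{1}{W(\mathsf{K})} \int_{\mathbb{R}^n} \mathrm{e}^{-\mathrm{e}^{2\theta} \pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \, \mathrm{d}\boldsymbol{x}. \end{aligned} \tag{6.2.3}$$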

This expression remains valid for all \(\theta \in \mathbb {R}\).

Remark 6.2.9 (Other Moments)

In fact, we can compute any moment of the intrinsic volume sequence by selecting an appropriate function f in Proposition 6.2.4. Corollary 6.2.5 is designed to produce gamma integrals. Beta integrals also arise naturally and lead to other striking relations. For instance,

The intrinsic volumes of the Euclidean ball are computed in Example 6.1.2. Isoperimetric ratios appear naturally in convex geometry (see Sect. 6.1.7.2), so this type of result may have independent interest.

6.3 Variance of the Intrinsic Volume Random Variable

Let us embark on our study of the intrinsic volume random variable. The main result of this section states that the variance of the intrinsic volume random variable is significantly smaller than its range. This is a more precise version of the variance bound in Theorem 6.1.11.

Theorem 6.3.1 (Variance of the Intrinsic Volume Random Variable)

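Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\). We have the inequalities

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] \leq 2 ( n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \leq 4n. \end{aligned}$$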

The proof of Theorem 6.3.1 occupies the rest of this section. We make a connection between the distance integrals from Sect. 6.2 and the information content of a log-concave probability measure. By using recent results on the variance of information, we can develop bounds for the distance integrals. These results, in turn, yield bounds on the variance of the intrinsic volume random variable. A closely related argument, appearing in Sect. 6.4, produces exponential concentration.

Remark 6.3.2 (An Alternative Argument)

Theorem 6.3.1 can be sharpened using variance inequalities for log-concave densities. Indeed, it holds that

To prove this claim, we apply the Brascamp–Lieb inequality [8, Thm. 4.1] to a perturbation of the log-concave density (6.3.4) described below. It is not clear whether similar ideas lead to normal concentration (because the density is not strongly log-concave), so we have chosen to omit this development.

6.3.1 The Varentropy of a Log-Concave Distribution

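First, we outline some facts from information theory about the information content in a log-concave random variable. Let \(\mu : \mathbb {R}^n \to \mathbb {R}_+\) be a log-concave probability density; that is, a probability density that satisfies the inequalities

$$\displaystyle \begin{aligned} \mu( \tau \boldsymbol{x} + (1 - \tau) \boldsymbol{y} ) \geq \mu(\boldsymbol{x})^{\tau} \mu(\boldsymbol{y})^{1-\tau} \quad \text{for } \boldsymbol{x}, \boldsymbol{y} \in \mathbb{R}^n \text{ and } \tau \in [0, 1]. \end{aligned}$$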

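We define the information content \(I_{\mu}\) of a random point drawn from the density μ to be the random variable

$$\displaystyle \begin{aligned} I_{\mu} := - \log \mu(\boldsymbol{y}) \quad \text{where}\quad \boldsymbol{y} \sim \mu. \end{aligned} \tag{6.3.1}$$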

The symbol ∼ means “has the distribution.” The terminology is motivated by the operational interpretation of the information content of a discrete random variable as the number of bits required to represent a random realization using a code with minimal average length [7].

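The expected information content \(\operatorname {\mathbb {E}} I_{\mu }\) is usually known as the entropy of the distribution μ. The varentropy of the distribution is the variance of information content:

$$\displaystyle \begin{aligned} \mathrm{VarEnt}[\mu] := \operatorname{\mathrm{Var}}[ I_{\mu} ] = \operatorname{\mathbb{E}} ( I_{\mu} - \operatorname{\mathbb{E}} I_{\mu} )^2. \end{aligned}$$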

Here and elsewhere, nonlinear functions bind before the expectation.

Bobkov and Madiman [7] showed that the varentropy of a log-concave distribution on \(\mathbb {R}^n\) is not greater than a constant multiple of n. Other researchers quickly determined the optimal constant. The following result was obtained independently by Nguyen [27] and by Wang [36] in their doctoral dissertations.

Fact 6.3.3 (Varentropy of a Log-Concave Distribution)

Let \(\mu : \mathbb {R}^n \to \mathbb {R}_+\) be a log-concave probability density. Then

$$\displaystyle \begin{aligned} \mathrm{VarEnt}[\mu] \leq n. \end{aligned}$$

See Fradelizi et al. [12] for more background and a discussion of this result.

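For future reference, note that the varentropy and related quantities exhibit a simple scale invariance. Consider the shifted information content

$$\displaystyle \begin{aligned} I_{c\mu} := - \log ( c \mu(\boldsymbol{y}) ) \quad \text{where } c > 0 \text{ and } \boldsymbol{y} \sim \mu. \end{aligned}$$

It follows from the definition that

$$\displaystyle \begin{aligned} I_{c\mu} - \operatorname{\mathbb{E}} I_{c\mu} = I_{\mu} - \operatorname{\mathbb{E}} I_{\mu} \quad \text{for each } c > 0. \end{aligned}$$

In particular, \( \operatorname {\mathrm {Var}}[ I_{c\mu } ] = \operatorname {\mathrm {Var}}[ I_{\mu } ]\).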

6.3.2 A Log-Concave Density

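Next, we observe that the central intrinsic volume is related to the information content of a log-concave density. For a nonempty convex body \(\mathsf {K} \subset \mathbb {R}^n\), define

$$\displaystyle \begin{aligned} \mu_{\mathsf{K}}(\boldsymbol{x}) := \frac{1}{W(\mathsf{K})} \mathrm{e}^{-\pi \operatorname{\mathrm{dist}}^2(\boldsymbol{x}, \mathsf{K})} \quad \text{for } \boldsymbol{x} \in \mathbb{R}^n. \end{aligned} \tag{6.3.4}$$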

The density μ K is log-concave because the squared distance to a convex body is a convex function. The calculation in Example 6.2.6 ensures that μ K is a probability density.

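Introduce the (shifted) information content random variable associated with K:

$$\displaystyle \begin{aligned} H_{\mathsf{K}} := - \log ( W(\mathsf{K}) \cdot \mu_{\mathsf{K}}(\boldsymbol{y}) ) = \pi \operatorname{\mathrm{dist}}^2(\boldsymbol{y}, \mathsf{K}) \quad \text{where}\quad \boldsymbol{y} \sim \mu_{\mathsf{K}}. \end{aligned} \tag{6.3.5}$$

Up to the presence of the factor W(K), the random variable \(H_{\mathsf{K}}\) is the information content of a random draw from the distribution \(\mu_{\mathsf{K}}\). In view of (6.3.2) and (6.3.3),

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ H_{\mathsf{K}} ] = \operatorname{\mathrm{Var}}[ I_{\mu_{\mathsf{K}}} ] = \mathrm{VarEnt}[ \mu_{\mathsf{K}} ]. \end{aligned} \tag{6.3.6}$$

More generally, all central moments and cumulants of \(H_{\mathsf{K}}\) coincide with the corresponding central moments and cumulants of \(I_{\mu _{\mathsf {K}}}\):

$$\displaystyle \begin{aligned} \operatorname{\mathbb{E}} f( H_{\mathsf{K}} - \operatorname{\mathbb{E}} H_{\mathsf{K}} ) = \operatorname{\mathbb{E}} f( I_{\mu_{\mathsf{K}}} - \operatorname{\mathbb{E}} I_{\mu_{\mathsf{K}}} ). \end{aligned} \tag{6.3.7}$$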

This expression is valid for any function \(f : \mathbb {R} \to \mathbb {R}\) such that the expectations exist.

6.3.3 Information Content and Intrinsic Volumes

We are now prepared to connect the moments of the intrinsic volume random variable Z K with the moments of the information content random variable H K. These representations allow us to transfer results about information content into data about the intrinsic volumes.

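Using the notation from the last section, Example 6.2.7 gives a relation between the expectations:

$$\displaystyle \begin{aligned} \operatorname{\mathbb{E}} Z_{\mathsf{K}} = n - 2 \operatorname{\mathbb{E}} H_{\mathsf{K}}. \end{aligned} \tag{6.3.8}$$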

The next result provides a similar relationship between the variances.

Proposition 6.3.4 (Variance of the Intrinsic Volume Random Variable)

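Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\) and information content random variable \(H_{\mathsf{K}}\). We have the variance identity

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] = 4 \big( \operatorname{\mathrm{Var}}[ H_{\mathsf{K}} ] - \operatorname{\mathbb{E}} H_{\mathsf{K}} \big). \end{aligned}$$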

Proof

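Apply Corollary 6.2.5 with the function \(g(r) = 4r^2 / W(\mathsf{K})\) to obtain

$$\displaystyle \begin{aligned} 4 \operatorname{\mathbb{E}} H_{\mathsf{K}}^2 &= \frac{1}{W(\mathsf{K})} \sum_{j=0}^{n} (n - j)(n - j + 2) \cdot V_j(\mathsf{K}) \\ &= \operatorname{\mathbb{E}} (n - Z_{\mathsf{K}})^2 + 2 \operatorname{\mathbb{E}} [ n - Z_{\mathsf{K}} ] \\ &= \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] + ( n - \operatorname{\mathbb{E}} Z_{\mathsf{K}} )^2 + 2 ( n - \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \\ &= \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] + 4 ( \operatorname{\mathbb{E}} H_{\mathsf{K}} )^2 + 4 \operatorname{\mathbb{E}} H_{\mathsf{K}}. \end{aligned}$$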

We have used the definition (6.1.4) of the intrinsic volume random variable to express the sum as an expectation. In the last step, we used the relation (6.3.8) twice to pass to the random variable H K. Finally, rearrange the display to complete the proof. □

6.3.4 Proof of Theorem 6.3.1

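We may now establish the main result of this section. Proposition 6.3.4 yields

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] = 4 \big( \operatorname{\mathrm{Var}}[ H_{\mathsf{K}} ] - \operatorname{\mathbb{E}} H_{\mathsf{K}} \big) = 4 \, \mathrm{VarEnt}[ \mu_{\mathsf{K}} ] - 2 ( n - \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \leq 4n - 2 ( n - \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) = 2 ( n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \leq 4n. \end{aligned}$$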

We have invoked (6.3.6) to replace the variance of H K with the varentropy and (6.3.8) to replace \(\operatorname {\mathbb {E}} H_{\mathsf {K}}\) by the central intrinsic volume \(\operatorname {\mathbb {E}} Z_{\mathsf {K}}\). The inequality is a consequence of Fact 6.3.3, which controls the varentropy of the log-concave density μ K. We obtain the final bound by noting that \(\operatorname {\mathbb {E}} Z_{\mathsf {K}} \leq n\).

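Here is an alternative approach to the final bound that highlights the role of the varentropy:

$$\displaystyle \begin{aligned} \operatorname{\mathrm{Var}}[ Z_{\mathsf{K}} ] \leq 4 \operatorname{\mathrm{Var}}[ H_{\mathsf{K}} ] = 4 \, \mathrm{VarEnt}[ \mu_{\mathsf{K}} ] \leq 4n. \end{aligned}$$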

The first inequality follows from Proposition 6.3.4, and the second inequality is Fact 6.3.3.

6.4 Concentration of the Intrinsic Volume Random Variable

The square root of the variance of the intrinsic volume random variable Z K gives the scale for fluctuations about the mean. These fluctuations have size \(O(\sqrt {n})\), which is much smaller than the O(n) range of the random variable. This observation motivates us to investigate the concentration properties of Z K. In this section, we develop a refined version of the tail bound from Theorem 6.1.11.

Theorem 6.4.1 (Tail Bounds for Intrinsic Volumes)

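Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\). For all t ≥ 0, we have the inequalities

$$\displaystyle \begin{aligned} \mathbb{P}\{ Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \geq t \} &\leq \exp \left( - ( n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \cdot \psi^* \left( \frac{t}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}} \right) \right); \\ \mathbb{P}\{ Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \leq - t \} &\leq \exp \left( - ( n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} ) \cdot \psi^* \left( \frac{-t}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}} \right) \right). \end{aligned}$$

Here, the function \(\psi ^*(s) := ((1+s)\log (1+s) - s)/2\) for s > −1.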

The proof of this result follows the same pattern as the argument from Theorem 6.3.1. In Sect. 6.4.5, we derive Theorem 6.1.11 as an immediate consequence.

6.4.1 Moment Generating Function of the Information Content

In addition to the variance, one may study other moments of the information content random variable. In particular, bounds for the moment generating function (mgf) of the centered information content lead to exponential tail bounds for the information content. Bobkov and Madiman [7] proved the first result in this direction. More recently, Fradelizi et al. [12] have obtained the optimal bound.

Fact 6.4.2 (Information Content mgf)

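Let \(\mu : \mathbb {R}^n \to \mathbb {R}_+\) be a log-concave probability density. For β < 1,

$$\displaystyle \begin{aligned} \operatorname{\mathbb{E}} \mathrm{e}^{\beta ( I_{\mu} - \operatorname{\mathbb{E}} I_{\mu} )} \leq \mathrm{e}^{n \varphi(\beta)}, \end{aligned}$$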

where \(\varphi (s) := - s - \log (1-s)\) for s < 1. The information content random variable I μ is defined in (6.3.1).

6.4.2 Information Content and Intrinsic Volumes

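We extract concentration inequalities for the intrinsic volume random variable \(Z_{\mathsf{K}}\) by studying its (centered) exponential moments. Define

$$\displaystyle \begin{aligned} m_{\mathsf{K}}(\theta) := \operatorname{\mathbb{E}} \mathrm{e}^{\theta ( Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} )} \quad \text{for } \theta \in \mathbb{R}. \end{aligned}$$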

The first step in the argument is to represent the mgf in terms of the information content random variable H K defined in (6.3.5).

Proposition 6.4.3 (mgf of Intrinsic Volume Random Variable)

Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\) and information content random variable \(H_{\mathsf{K}}\). For \(\theta \in \mathbb {R}\),

The function φ is defined in Fact 6.4.2.

Proof

The formula (6.2.3) from Example 6.2.8 yields the identity

We can transfer this result to obtain another representation for \(m_{\mathsf{K}}\). First, use the identity (6.3.8) to replace \(\operatorname {\mathbb {E}} Z_{\mathsf {K}}\) with \(\operatorname {\mathbb {E}} H_{\mathsf {K}}\). Then invoke the last display to reach

In the last step, we have made the change of variables \(\beta = 1 - \mathrm{e}^{2\theta}\). Finally, identify the value \(-\varphi(\beta)\) in the first exponent. □

6.4.3 A Bound for the mgf

We are now prepared to bound the mgf \(m_{\mathsf{K}}\). This result will lead directly to concentration of the intrinsic volume random variable.

Proposition 6.4.4 (A Bound for the mgf)

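Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body with intrinsic volume random variable \(Z_{\mathsf{K}}\). For \(\theta \in \mathbb {R}\),

\[
m_{\mathsf{K}}(\theta) \leq \exp\left( (n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}) \cdot \psi(\theta) \right),
\]

where \(\psi(s) := (\mathrm{e}^{2s} - 2s - 1)/2\) for \(s \in \mathbb {R}\).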

Proof

For the parameter \(\beta = 1 - \mathrm{e}^{2\theta}\), Proposition 6.4.3 yields

To reach the second line, we use the equivalence (6.3.7) for the central moments. The inequality is Fact 6.4.2, the mgf bound for the information content \(I_{\mu _{\mathsf {K}}}\) of the log-concave density \(\mu_{\mathsf{K}}\). Afterward, we invoke (6.3.8) to pass from the information content random variable \(H_{\mathsf{K}}\) to the intrinsic volume random variable \(Z_{\mathsf{K}}\). The next step is algebraic. The result follows when we return from the variable β to the variable θ, leading to the appearance of the function ψ. □

6.4.4 Proof of Theorem 6.4.1

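The Laplace transform method, combined with the mgf bound from Proposition 6.4.4, produces Bennett-type inequalities for the intrinsic volume random variable. In brief,

\[
\begin{aligned}
\mathbb{P}\left\{ Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \geq t \right\}
&\leq \inf_{\theta > 0} \mathrm{e}^{-\theta t} \cdot m_{\mathsf{K}}(\theta) \\
&\leq \inf_{\theta > 0} \exp\left( -\theta t + (n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}) \cdot \psi(\theta) \right) \\
&= \exp\left( -(n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}) \cdot \psi^*\left( \frac{t}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}}} \right) \right).
\end{aligned}
\]

The Fenchel–Legendre conjugate \(\psi^*\) of the function ψ has the explicit form given in the statement of Theorem 6.4.1. The lower tail bound follows from the same argument.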
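The conjugate relationship between ψ and ψ* can be checked numerically. The sketch below evaluates sθ − ψ(θ) at the stationary point θ = log(1 + s)∕2, where the derivative s − (e^{2θ} − 1) vanishes, and compares the result with the closed form for ψ*. A brute-force maximization over a grid serves as an independent sanity check. The helper names and the grid parameters are choices made for this illustration.

```python
import math


def psi(theta: float) -> float:
    """psi(theta) = (exp(2 theta) - 2 theta - 1) / 2."""
    return (math.expm1(2.0 * theta) - 2.0 * theta) / 2.0


def psi_star(s: float) -> float:
    """Closed form psi*(s) = ((1 + s) log(1 + s) - s) / 2 for s > -1."""
    return ((1.0 + s) * math.log1p(s) - s) / 2.0


def conjugate_at_stationary_point(s: float) -> float:
    """Value of s * theta - psi(theta) at theta = log(1 + s) / 2."""
    theta = 0.5 * math.log1p(s)
    return s * theta - psi(theta)


def conjugate_by_grid(s: float, lo: float = -5.0, hi: float = 5.0,
                      steps: int = 20001) -> float:
    """Brute-force approximation of sup over theta of s * theta - psi(theta)."""
    width = (hi - lo) / (steps - 1)
    return max(
        s * (lo + k * width) - psi(lo + k * width) for k in range(steps)
    )


for s in (-0.5, 0.5, 1.0, 3.0):
    print(s, psi_star(s), conjugate_at_stationary_point(s), conjugate_by_grid(s))
```

The three columns printed for each s should agree up to the resolution of the grid.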

6.4.5 Proof of Theorem 6.1.11

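The concentration inequality in the main result, Theorem 6.1.11, follows when we weaken the inequalities obtained in the last section. Comparing derivatives, we can verify that \(\psi^*(s) \geq (s^2/4)/(1 + s/3)\) for all s > −1. For the interesting range, 0 ≤ t ≤ n, we have

\[
\begin{aligned}
\mathbb{P}\left\{ Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \geq t \right\} &\leq \exp\left( \frac{-t^2/4}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} + t/3} \right); \\
\mathbb{P}\left\{ Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \leq -t \right\} &\leq \exp\left( \frac{-t^2/4}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} - t/3} \right).
\end{aligned}
\]

We may combine this pair of inequalities into a single bound:

\[
\mathbb{P}\left\{ \left| Z_{\mathsf{K}} - \operatorname{\mathbb{E}} Z_{\mathsf{K}} \right| \geq t \right\} \leq 2 \exp\left( \frac{-t^2/4}{n + \operatorname{\mathbb{E}} Z_{\mathsf{K}} + t/3} \right).
\]

Make the estimate \(\operatorname {\mathbb {E}} Z_{\mathsf {K}} \leq n\), and bound the denominator using t ≤ n. This completes the argument.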
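The comparison between ψ* and its Bernstein-type minorant can be sanity-checked on a grid. The sketch below is a numerical check, not a proof. It also tracks the constant that results from the two final estimates, under the assumption that the denominator in the combined bound is n + E Z + t∕3, so that E Z ≤ n and t ≤ n give a denominator of at most 7n∕3.

```python
import math
from fractions import Fraction


def psi_star(s: float) -> float:
    """psi*(s) = ((1 + s) log(1 + s) - s) / 2 for s > -1."""
    return ((1.0 + s) * math.log1p(s) - s) / 2.0


def bernstein_exponent(s: float) -> float:
    """The weaker exponent (s^2 / 4) / (1 + s / 3)."""
    return (s * s / 4.0) / (1.0 + s / 3.0)


# Grid on (-1, 10] with spacing 1/1000; the point s = 0 is hit exactly.
grid = [k / 1000.0 for k in range(-999, 10001)]
smallest_gap = min(psi_star(s) - bernstein_exponent(s) for s in grid)
print("smallest gap on the grid:", smallest_gap)

# ASSUMPTION: the exponent is (t^2 / 4) / (n + E Z + t / 3).
# With E Z <= n and t <= n, the denominator is at most (1 + 1 + 1/3) n.
worst_denominator_over_n = Fraction(1) + Fraction(1) + Fraction(1, 3)
constant = Fraction(1, 4) / worst_denominator_over_n
print("exponent is at least", constant, "* t^2 / n")
```

Under the stated assumption, the exponent in the final bound is at least (3∕28) t²∕n.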

6.5 Example: Rectangular Parallelotopes

In this section, we work out the intrinsic volume sequence of a rectangular parallelotope. This computation involves the generating function of the intrinsic volume sequence. Because of its elegance, we develop this method in more depth than we need to treat the example at hand.

6.5.1 Generating Functions and Intrinsic Volumes

To begin, we collect some useful information about the properties of the generating function of the intrinsic volumes.

Definition 6.5.1 (Intrinsic Volume Generating Function)

The generating function of the intrinsic volumes of the convex body K is the polynomial

\[
G_{\mathsf{K}}(\lambda) := \sum_{j=0}^n \lambda^j \, V_j(\mathsf{K}).
\]

We can use the generating function to read off some information about a convex body, including the total intrinsic volume and the central intrinsic volume. This is a standard result [38, Sec. 4.1], so we omit the elementary argument.

Proposition 6.5.2 (Properties of the Generating Function)

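For each nonempty convex body \(\mathsf {K} \subset \mathbb {R}^n\),

\[
W(\mathsf{K}) = G_{\mathsf{K}}(1) \quad \text{and} \quad \Delta(\mathsf{K}) = \frac{G_{\mathsf{K}}'(1)}{G_{\mathsf{K}}(1)} = (\log G_{\mathsf{K}})'(1).
\]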

As usual, the prime ′ denotes a derivative.

It is usually challenging to compute the intrinsic volumes of a convex body, but the following fact allows us to make short work of some examples.

Fact 6.5.3 (Direct Products)

Let \(\mathsf {C} \subset \mathbb {R}^{n_1}\) and \(\mathsf {K} \subset \mathbb {R}^{n_2}\) be nonempty convex bodies. The generating function of the intrinsic volumes of the convex body \(\mathsf {C} \times \mathsf {K} \subset \mathbb {R}^{n_1 + n_2}\) takes the form

\[
G_{\mathsf{C} \times \mathsf{K}}(\lambda) = G_{\mathsf{C}}(\lambda) \cdot G_{\mathsf{K}}(\lambda).
\]

For completeness, we include a short proof inspired by Hadwiger [18]; see [33, Lem. 14.2.1].

Proof

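Abbreviate \(n := n_1 + n_2\). For a point \(\boldsymbol {x} \in \mathbb {R}^n\), write \(\boldsymbol{x} = (\boldsymbol{x}_1, \boldsymbol{x}_2)\) where \(\boldsymbol {x}_i \in \mathbb {R}^{n_i}\). Then

\[
\operatorname{dist}^2(\boldsymbol{x}, \mathsf{C} \times \mathsf{K}) = \operatorname{dist}^2(\boldsymbol{x}_1, \mathsf{C}) + \operatorname{dist}^2(\boldsymbol{x}_2, \mathsf{K}).
\]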

Invoke the formula (6.2.2) from Example 6.2.8 for the generating function of the intrinsic volumes (three times!). For λ > 0,

Cancel the leading factors of λ to complete the argument. □

As a corollary, we can derive an expression for the central intrinsic volume of a direct product.

Corollary 6.5.4 (Central Intrinsic Volume of a Product)

Let \(\mathsf {C} \subset \mathbb {R}^{n_1}\) and \(\mathsf {K} \subset \mathbb {R}^{n_2}\) be nonempty convex bodies. Then

\[
\Delta(\mathsf{C} \times \mathsf{K}) = \Delta(\mathsf{C}) + \Delta(\mathsf{K}).
\]

Proof

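According to Proposition 6.5.2 and Fact 6.5.3,

\[
\Delta(\mathsf{C} \times \mathsf{K}) = (\log G_{\mathsf{C} \times \mathsf{K}})'(1) = (\log G_{\mathsf{C}})'(1) + (\log G_{\mathsf{K}})'(1) = \Delta(\mathsf{C}) + \Delta(\mathsf{K}).
\]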

This is what we needed to show. □
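The product rule for generating functions amounts to a convolution of coefficient sequences. The following sketch represents a generating function by its list of coefficients (the intrinsic volumes, indexed by j), multiplies two such lists, and checks that the central intrinsic volume is additive. The two intervals [0, 2] and [0, 3] are hypothetical inputs chosen for the example, and exact rational arithmetic avoids rounding.

```python
from fractions import Fraction


def multiply(a, b):
    """Coefficients of the product of two polynomials (discrete convolution)."""
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, a_i in enumerate(a):
        for j, b_j in enumerate(b):
            out[i + j] += Fraction(a_i) * Fraction(b_j)
    return out


def total(coeffs):
    """Value of the generating function at 1: the total intrinsic volume."""
    return sum(Fraction(c) for c in coeffs)


def central(coeffs):
    """Logarithmic derivative at 1: the central intrinsic volume."""
    return sum(j * Fraction(c) for j, c in enumerate(coeffs)) / total(coeffs)


interval_2 = [1, 2]  # interval [0, 2]: V_0 = 1, V_1 = 2
interval_3 = [1, 3]  # interval [0, 3]: V_0 = 1, V_1 = 3
rectangle = multiply(interval_2, interval_3)  # the rectangle [0, 2] x [0, 3]

print("intrinsic volumes of the rectangle:", rectangle)
print("central intrinsic volume:", central(rectangle))
print("sum over the factors:", central(interval_2) + central(interval_3))
```

The coefficients of the product are 1, 5, 6: the Euler characteristic, half the perimeter, and the area of the rectangle.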

6.5.2 Intrinsic Volumes of a Rectangular Parallelotope

Using Fact 6.5.3, we quickly compute the intrinsic volumes and related statistics for a rectangular parallelotope.

Proposition 6.5.5 (Rectangular Parallelotopes)

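For parameters \(s_1, s_2, \dots, s_n \geq 0\), construct the rectangular parallelotope

\[
\mathsf{P} := [0, s_1] \times [0, s_2] \times \dots \times [0, s_n] \subset \mathbb{R}^n.
\]

The generating function for the intrinsic volumes of the parallelotope P satisfies

\[
G_{\mathsf{P}}(\lambda) = \prod_{i=1}^n (1 + \lambda s_i).
\]

In particular, \(V_j(\mathsf{P}) = e_j(s_1, \dots, s_n)\), where \(e_j\) denotes the jth elementary symmetric function. The total intrinsic volume and central intrinsic volume satisfy

\[
W(\mathsf{P}) = \prod_{i=1}^n (1 + s_i) \quad \text{and} \quad \Delta(\mathsf{P}) = \sum_{i=1}^n \frac{s_i}{1 + s_i}.
\]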

Proof

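Let s ≥ 0. By direct calculation from Definition 6.1.1, the intrinsic volumes of the interval \([0,s] \subset \mathbb {R}^1\) are \(V_0([0, s]) = 1\) and \(V_1([0, s]) = s\). Thus,

\[
G_{[0,s]}(\lambda) = 1 + \lambda s.
\]

Fact 6.5.3 implies that the generating function for the intrinsic volumes of the parallelotope P is

\[
G_{\mathsf{P}}(\lambda) = \prod_{i=1}^n G_{[0,s_i]}(\lambda) = \prod_{i=1}^n (1 + \lambda s_i).
\]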

We immediately obtain formulas for the total intrinsic volume and the central intrinsic volume from Proposition 6.5.2. Alternatively, we can compute the central intrinsic volume of an interval [0, s] and use Corollary 6.5.4 to extend this result to the parallelotope P. □
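As a small worked instance of Proposition 6.5.5, the sketch below computes the intrinsic volumes of a box as elementary symmetric functions of its side lengths, and then compares the total and central intrinsic volumes with the product and sum formulas. The side lengths (1, 2, 3) are a hypothetical choice, and the function names are not from the paper.

```python
from fractions import Fraction
from itertools import combinations
from math import prod


def elementary_symmetric(sides, j):
    """e_j(sides): sum of the products over all j-element subsets."""
    return sum(prod(subset) for subset in combinations(sides, j))


def parallelotope_intrinsic_volumes(sides):
    """Intrinsic volumes V_0, ..., V_n of [0, s_1] x ... x [0, s_n]."""
    return [elementary_symmetric(sides, j) for j in range(len(sides) + 1)]


sides = (1, 2, 3)
volumes = parallelotope_intrinsic_volumes(sides)

# Total intrinsic volume, from the sequence and from the product formula.
total_from_sequence = sum(volumes)
total_from_formula = prod(1 + s for s in sides)

# Central intrinsic volume, from the sequence and from the sum formula.
central_from_sequence = Fraction(
    sum(j * v for j, v in enumerate(volumes)), total_from_sequence
)
central_from_formula = sum(Fraction(s, 1 + s) for s in sides)

print("intrinsic volumes:", volumes)
print("total:", total_from_sequence, total_from_formula)
print("central:", central_from_sequence, central_from_formula)
```

For this box, the sequence is 1, 6, 11, 6: one vertex class, the sum of the side lengths, the sum of the pairwise products, and the volume.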

6.5.3 Intrinsic Volumes of a Cube

As an immediate consequence of Proposition 6.5.5, we obtain a clean result on the intrinsic volumes of a scaled cube.

Corollary 6.5.6 (Cubes)

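Let \(\mathsf {Q}_n \subset \mathbb {R}^n\) be the unit cube. For s ≥ 0, the normalized intrinsic volumes of the scaled cube \(s\mathsf{Q}_n\) coincide with a binomial distribution. For each j = 0, 1, 2, …, n,

\[
\tilde{V}_j(s \mathsf{Q}_n) = \binom{n}{j} \, p^j (1 - p)^{n-j} \quad \text{where } p = \frac{s}{1 + s}.
\]

In particular, the central intrinsic volume of the scaled cube is

\[
\Delta(s \mathsf{Q}_n) = \frac{ns}{1 + s}.
\]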

Corollary 6.5.6 plays a starring role in our analysis of the intrinsic volume sequences that attain the maximum entropy.
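The binomial structure of the cube can be confirmed with exact arithmetic. In the sketch below, the intrinsic volumes of the scaled cube are taken to be the binomial coefficient times s^j, which is the elementary symmetric function of Proposition 6.5.5 with all side lengths equal to s. The normalized sequence is then compared with the binomial probabilities for p = s∕(1 + s). The values n = 6 and s = 3∕2 are arbitrary choices for the illustration.

```python
from fractions import Fraction
from math import comb


def cube_normalized_intrinsic_volumes(n, s):
    """Normalized intrinsic volumes of the cube with side length s."""
    s = Fraction(s)
    volumes = [comb(n, j) * s**j for j in range(n + 1)]  # e_j(s, ..., s)
    total = sum(volumes)  # equals (1 + s)^n
    return [v / total for v in volumes]


def binomial_pmf(n, p):
    """Probabilities of Bin(p, n) as exact fractions."""
    p = Fraction(p)
    return [comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(n + 1)]


n, s = 6, Fraction(3, 2)
p = s / (1 + s)
normalized = cube_normalized_intrinsic_volumes(n, s)
mean = sum(j * w for j, w in enumerate(normalized))

print("p =", p)
print("matches the binomial distribution:", normalized == binomial_pmf(n, p))
print("central intrinsic volume:", mean, "versus n s / (1 + s) =", n * s / (1 + s))
```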

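We can also use Corollary 6.5.6 to test our results on the variance and concentration properties of the intrinsic volume sequence by comparing them with exact computations for the cube. Fix a number s ≥ 0, and let p = s∕(1 + s). Then

\[
\operatorname{Var}[Z_{s\mathsf{Q}_n}] = np(1 - p) = \frac{ns}{(1 + s)^2}.
\]

Meanwhile, Theorem 6.3.1 gives the upper bound

\[
\operatorname{Var}[Z_{s\mathsf{Q}_n}] \leq 2(n + \operatorname{\mathbb{E}} Z_{s\mathsf{Q}_n}) = 2n(1 + p) = \frac{2n(1 + 2s)}{1 + s}.
\]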

For s = 1, the ratio of the upper bound to the exact variance is 12. For s ≈ 0 and s →∞, the ratio becomes arbitrarily large. Similarly, Theorem 6.4.1 gives a qualitatively good description for s = 1, but its predictions are far less accurate for small and large s. There remains more work to do!
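The ratio quoted here can be reproduced with exact arithmetic. The sketch below assumes that the variance bound of Theorem 6.3.1 takes the form 2(n + E Z), which is consistent with the value 12 at s = 1. The exact variance is that of a binomial distribution with p = s∕(1 + s). The function names are hypothetical.

```python
from fractions import Fraction


def cube_variance(n, s):
    """Exact variance n p (1 - p) of Bin(p, n) with p = s / (1 + s)."""
    p = Fraction(s) / (1 + Fraction(s))
    return n * p * (1 - p)


def assumed_variance_bound(n, s):
    """ASSUMED form of the bound: 2 (n + E Z) = 2 n (1 + p)."""
    p = Fraction(s) / (1 + Fraction(s))
    return 2 * n * (1 + p)


def ratio(n, s):
    """Upper bound divided by exact variance; the dimension n cancels."""
    return assumed_variance_bound(n, s) / cube_variance(n, s)


for s in (Fraction(1, 100), 1, 100):
    print("s =", s, "  ratio =", float(ratio(10, s)))
```

The ratio equals 2(1 + p)∕(p(1 − p)), so it grows without bound as p approaches 0 or 1.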

6.6 Maximum-Entropy Distributions of Intrinsic Volumes

We have been using probabilistic methods to study the intrinsic volumes of a convex body, and we have seen that the intrinsic volume sequence is concentrated, as reflected in the variance bound (Theorem 6.3.1) and the exponential tail bounds (Theorem 6.4.1). Therefore, it is natural to consider other measures of the dispersion of the sequence. We recall the intrinsic entropy from Definition 6.1.12, which is the entropy of the normalized intrinsic volume sequence. This concept turns out to be interesting.

In this section, we will establish Theorem 6.1.13. This result states that, among all convex bodies with a fixed central intrinsic volume, a scaled cube has the largest entropy. Moreover, the unit-volume cube has the largest intrinsic entropy among all convex bodies in a fixed dimension. We prove this theorem using some recent observations from information theory.

6.6.1 Ultra-Log-Concavity and Convex Bodies

The key step in proving Theorem 6.1.13 is to draw a connection between intrinsic volumes and ultra-log-concave sequences. We begin with an important definition.

Definition 6.6.1 (Ultra-Log-Concave Sequence)

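A nonnegative sequence \(\{a_j : j = 0, 1, 2, \dots\}\) is called ultra-log-concave, briefly ULC, if it satisfies the relations

\[
j \cdot a_j^2 \geq (j + 1) \cdot a_{j+1} a_{j-1} \quad \text{for } j = 1, 2, 3, \dots.
\]

It is equivalent to say that the sequence \(\{j! \, a_j : j = 0, 1, 2, \dots\}\) is log-concave.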
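The two formulations of ultra-log-concavity are easy to test on finite sequences. The sketch below checks the relation j a_j² ≥ (j + 1) a_{j+1} a_{j−1} directly and also through log-concavity of the sequence j! a_j. A finite list is treated as a sequence that vanishes beyond its last entry. The binomial and uniform examples are chosen for illustration: the first is ULC, while the second is log-concave but not ULC.

```python
from fractions import Fraction
from math import comb, factorial


def is_log_concave(seq):
    """Check a_j^2 >= a_{j-1} a_{j+1} at the interior indices of a finite list."""
    return all(
        seq[j] ** 2 >= seq[j - 1] * seq[j + 1] for j in range(1, len(seq) - 1)
    )


def is_ulc_direct(seq):
    """Check j a_j^2 >= (j + 1) a_{j+1} a_{j-1} at the interior indices."""
    return all(
        j * seq[j] ** 2 >= (j + 1) * seq[j + 1] * seq[j - 1]
        for j in range(1, len(seq) - 1)
    )


def is_ulc_via_factorials(seq):
    """Equivalent check: the sequence j! a_j is log-concave."""
    return is_log_concave([factorial(j) * Fraction(a) for j, a in enumerate(seq)])


p = Fraction(1, 3)
binomial = [comb(5, j) * p**j * (1 - p) ** (5 - j) for j in range(6)]
uniform = [Fraction(1, 3)] * 3

print("binomial ULC:", is_ulc_direct(binomial), is_ulc_via_factorials(binomial))
print("uniform log-concave:", is_log_concave(uniform))
print("uniform ULC:", is_ulc_direct(uniform), is_ulc_via_factorials(uniform))
```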

Among all finitely supported ULC probability distributions, the binomial distributions have the maximum entropy. This result was obtained by Yaming Yu [39] using methods developed by Oliver Johnson [19] for studying the maximum-entropy properties of Poisson distributions.

Fact 6.6.2 (Binomial Distributions Maximize Entropy)

Let p ∈ [0, 1], and fix a natural number n. Among all ULC probability distributions with mean pn that are supported on {0, 1, 2, …, n}, the binomial distribution Bin(p, n) has the maximum entropy.

These facts are relevant to our discussion because the intrinsic volumes of a convex body form an ultra-log-concave sequence.

Fact 6.6.3 (Intrinsic Volumes are ULC)

The normalized intrinsic volumes of a nonempty convex body in \(\mathbb {R}^n\) compose a ULC probability distribution supported on {0, 1, 2, …, n}.

This statement is a consequence of the Alexandrov–Fenchel inequalities [32, Sec. 7.3]; see the papers of Chevet [10] and McMullen [26].

6.6.2 Proof of Theorem 6.1.13

With this information at hand, we quickly establish the main result of the section. Recall that \(\mathsf{Q}_n\) denotes the unit-volume cube in \(\mathbb {R}^n\). Let \(\mathsf {K} \subset \mathbb {R}^n\) be a nonempty convex body. Define the number p ∈ [0, 1) by the relation pn = Δ(K), and set s = p∕(1 − p). According to Corollary 6.5.6, the scaled cube \(s\mathsf{Q}_n\) satisfies

\[
\Delta(s \mathsf{Q}_n) = \frac{ns}{1 + s} = pn = \Delta(\mathsf{K}).
\]

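Fact 6.6.3 ensures that the normalized intrinsic volume sequence of the convex body K is a ULC probability distribution supported on {0, 1, 2, …, n}. Since \(\operatorname {\mathbb {E}} Z_{\mathsf {K}} = \Delta (\mathsf {K}) = pn\), Fact 6.6.2 now delivers

\[
\operatorname{IntEnt}(\mathsf{K}) = \operatorname{Ent}[Z_{\mathsf{K}}] \leq \operatorname{Ent}[\textsc{Bin}(p, n)] = \operatorname{Ent}[Z_{s\mathsf{Q}_n}] = \operatorname{IntEnt}(s\mathsf{Q}_n).
\]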

We have used Corollary 6.5.6 again to see that \(Z_{s \mathsf {Q}_n} \sim \textsc {Bin}(p, n)\). The remaining identities are simply the definition of the intrinsic entropy. In other words, the scaled cube has the maximum intrinsic entropy among all convex bodies that share the same central intrinsic volume.

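It remains to show that the unit-volume cube has maximum intrinsic entropy among all convex bodies. Continuing the analysis in the last display, we find that

\[
\operatorname{IntEnt}(\mathsf{K}) \leq \operatorname{Ent}[\textsc{Bin}(p, n)] \leq \operatorname{Ent}[\textsc{Bin}(1/2, n)] = \operatorname{IntEnt}(\mathsf{Q}_n).
\]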

Indeed, among the binomial distributions Bin(p, n) for p ∈ [0, 1], the maximum entropy distribution is Bin(1∕2, n). But this is the distribution of \(Z_{\mathsf {Q}_n}\), the intrinsic volume random variable of the unit cube \(\mathsf{Q}_n\). This observation implies the remaining claim in Theorem 6.1.13.
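The chain of entropy comparisons in this proof can be illustrated on a concrete body. The sketch below uses the box [0, 1] × [0, 2] × [0, 3], whose intrinsic volumes 1, 6, 11, 6 are the elementary symmetric functions of its side lengths (Proposition 6.5.5). It compares the entropy of its normalized intrinsic volumes with the entropy of the binomial distribution with the same mean, and then with Bin(1∕2, n). The choice of box and the function names are assumptions made for the example, and entropies use the natural logarithm.

```python
import math


def entropy(pmf):
    """Shannon entropy (natural logarithm) of a probability vector."""
    return -sum(q * math.log(q) for q in pmf if q > 0.0)


def binomial_pmf(n, p):
    """Probabilities of Bin(p, n) in floating point."""
    return [math.comb(n, j) * p**j * (1.0 - p) ** (n - j) for j in range(n + 1)]


# Intrinsic volumes of the box with side lengths (1, 2, 3).
volumes = [1.0, 6.0, 11.0, 6.0]
total = sum(volumes)
normalized = [v / total for v in volumes]
n = len(volumes) - 1

# Central intrinsic volume divided by the dimension.
p = sum(j * w for j, w in enumerate(normalized)) / n

box_entropy = entropy(normalized)
scaled_cube_entropy = entropy(binomial_pmf(n, p))  # cube with the same mean
unit_cube_entropy = entropy(binomial_pmf(n, 0.5))

print("box:", box_entropy)
print("scaled cube with the same central intrinsic volume:", scaled_cube_entropy)
print("unit cube:", unit_cube_entropy)
```

The three printed values should be nondecreasing, in agreement with the two inequalities in the proof.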

References

1. K. Adiprasito, R. Sanyal, Whitney numbers of arrangements via measure concentration of intrinsic volumes (2016). http://arXiv.org/abs/1606.09412

2. R.J. Adler, J.E. Taylor, Random fields and geometry. Springer Monographs in Mathematics (Springer, New York, 2007)

3. D. Amelunxen, M. Lotz, M.B. McCoy, J.A. Tropp, Living on the edge: phase transitions in convex programs with random data. Inf. Inference 3(3), 224–294 (2014)

4. S. Artstein-Avidan, A. Giannopoulos, V.D. Milman, Asymptotic geometric analysis. Part I. Mathematical Surveys and Monographs, vol. 202 (American Mathematical Society, Providence, 2015)

5. K. Ball, Volume ratios and a reverse isoperimetric inequality. J. Lond. Math. Soc. 44(2), 351–359 (1991)

6. K. Ball, An elementary introduction to modern convex geometry, in Flavors of geometry. Mathematical Sciences Research Institute Publications, vol. 31 (Cambridge Univ. Press, Cambridge, 1997), pp. 1–58

7. S. Bobkov, M. Madiman, Concentration of the information in data with log-concave distributions. Ann. Probab. 39(4), 1528–1543 (2011)

8. H.J. Brascamp, E.H. Lieb, On extensions of the Brunn-Minkowski and Prékopa-Leindler theorems, including inequalities for log concave functions, and with an application to the diffusion equation. J. Funct. Anal. 22(4), 366–389 (1976)

9. P. Caputo, P. Dai Pra, G. Posta, Convex entropy decay via the Bochner-Bakry-Emery approach. Ann. Inst. Henri Poincaré Probab. Stat. 45(3), 734–753 (2009)

10. S. Chevet, Processus Gaussiens et volumes mixtes. Z. Wahrscheinlichkeitstheorie und Verw. Gebiete 36(1), 47–65 (1976)

11. H. Federer, Curvature measures. Trans. Am. Math. Soc. 93, 418–491 (1959)

12. M. Fradelizi, M. Madiman, L. Wang, Optimal concentration of information content for log-concave densities, in High dimensional probability VII. Progress in Probability, vol. 71 (Springer, Berlin, 2016), pp. 45–60

13. L. Goldstein, I. Nourdin, G. Peccati, Gaussian phase transitions and conic intrinsic volumes: steining the Steiner formula. Ann. Appl. Probab. 27(1), 1–47 (2017)

14. P.M. Gruber, Convex and discrete geometry. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 336 (Springer, Berlin, 2007)

15. H. Hadwiger, Beweis eines Funktionalsatzes für konvexe Körper. Abh. Math. Sem. Univ. Hamburg 17, 69–76 (1951)

16. H. Hadwiger, Additive Funktionale k-dimensionaler Eikörper. I. Arch. Math. 3, 470–478 (1952)

17. H. Hadwiger, Vorlesungen über Inhalt, Oberfläche und Isoperimetrie (Springer, Berlin, 1957)

18. H. Hadwiger, Das Wills’sche Funktional. Monatsh. Math. 79, 213–221 (1975)

19. O. Johnson, Log-concavity and the maximum entropy property of the Poisson distribution. Stoch. Process. Appl. 117(6), 791–802 (2007)

20. D.A. Klain, G.-C. Rota, Introduction to geometric probability. Lezioni Lincee. [Lincei Lectures] (Cambridge University Press, Cambridge, 1997)

21. M.B. McCoy, A geometric analysis of convex demixing (ProQuest LLC, Ann Arbor, 2013). Thesis (Ph.D.)–California Institute of Technology

22. M.B. McCoy, J.A. Tropp, From Steiner formulas for cones to concentration of intrinsic volumes. Discrete Comput. Geom. 51(4), 926–963 (2014)

23. M.B. McCoy, J.A. Tropp, Sharp recovery bounds for convex demixing, with applications. Found. Comput. Math. 14(3), 503–567 (2014)

24. M.B. McCoy, J.A. Tropp, The achievable performance of convex demixing. ACM Report 2017-02, California Institute of Technology (2017). Manuscript dated 28 Sep. 2013

25. P. McMullen, Non-linear angle-sum relations for polyhedral cones and polytopes. Math. Proc. Cambridge Philos. Soc. 78(2), 247–261 (1975)

26. P. McMullen, Inequalities between intrinsic volumes. Monatsh. Math. 111(1), 47–53 (1991)

27. V.H. Nguyen, Inégalités Fonctionnelles et Convexité. Ph.D. Thesis, Université Pierre et Marie Curie (Paris VI) (2013)

28. G. Paouris, P. Pivovarov, P. Valettas, On a quantitative reversal of Alexandrov’s inequality. Trans. Am. Math. Soc. 371(5), 3309–3324 (2019)

29. G. Pisier, The volume of convex bodies and Banach space geometry. Cambridge Tracts in Mathematics, vol. 94 (Cambridge University Press, Cambridge, 1989)

30. L.A. Santaló, Integral geometry and geometric probability. Cambridge Mathematical Library, 2nd edn. (Cambridge University Press, Cambridge, 2004). With a foreword by Mark Kac

31. A. Saumard, J.A. Wellner, Log-concavity and strong log-concavity: a review. Stat. Surv. 8, 45–114 (2014)

32. R. Schneider, Convex bodies: the Brunn-Minkowski theory. Encyclopedia of Mathematics and its Applications, vol. 151, expanded edn. (Cambridge University Press, Cambridge, 2014)

33. R. Schneider, W. Weil, Stochastic and integral geometry. Probability and its Applications (New York) (Springer, Berlin, 2008)

34. Y. Shenfeld, R. van Handel, Mixed volumes and the Bochner method. Proc. Amer. Math. Soc. 147, 5385–5402 (2019)

35. R.A. Vitale, The Wills functional and Gaussian processes. Ann. Probab. 24(4), 2172–2178 (1996)

36. L. Wang, Heat Capacity Bound, Energy Fluctuations and Convexity (ProQuest LLC, Ann Arbor, 2014). Thesis (Ph.D.)–Yale University

37. J.M. Wills, Zur Gitterpunktanzahl konvexer Mengen. Elem. Math. 28, 57–63 (1973)

38. H.S. Wilf, generatingfunctionology, 2nd edn. (Academic Press, Boston, 1994)

39. Y. Yu, On the maximum entropy properties of the binomial distribution. IEEE Trans. Inform. Theory 54(7), 3351–3353 (2008)

Acknowledgements

We are grateful to Emmanuel Milman for directing us to the literature on concentration of information. Dennis Amelunxen, Sergey Bobkov, and Michel Ledoux also gave feedback at an early stage of this project. Ramon van Handel provided valuable comments and citations, including the fact that ULC sequences concentrate. We thank the anonymous referee for a careful reading and constructive remarks.

Parts of this research were completed at Luxembourg University and at the Institute for Mathematics and its Applications (IMA) at the University of Minnesota. Giovanni Peccati is supported by the internal research project STARS (R-AGR-0502-10) at Luxembourg University. Joel A. Tropp gratefully acknowledges support from ONR award N00014-11-1002 and the Gordon and Betty Moore Foundation.

© 2020 Springer Nature Switzerland AG

Cite this chapter

Lotz, M., McCoy, M.B., Nourdin, I., Peccati, G., Tropp, J.A. (2020). Concentration of the Intrinsic Volumes of a Convex Body. In: Klartag, B., Milman, E. (eds) Geometric Aspects of Functional Analysis. Lecture Notes in Mathematics, vol 2266. Springer, Cham. https://doi.org/10.1007/978-3-030-46762-3_6

DOI: https://doi.org/10.1007/978-3-030-46762-3_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46761-6

Online ISBN: 978-3-030-46762-3

eBook Packages: Mathematics and Statistics (R0)