Abstract

Experimental designs intended to match arbitrary target distributions are typically constructed via a variable transformation of a uniform experimental design. The inverse distribution function is one such transformation. The discrepancy is a measure of how well the empirical distribution of any design matches its target distribution. This chapter addresses the question of whether a variable transformation of a low discrepancy uniform design yields a low discrepancy design for the desired target distribution. The answer depends on the two kernel functions used to define the respective discrepancies. If these kernels satisfy certain conditions, then the answer is yes. However, these conditions may be undesirable for practical reasons. In such a case, the transformation of a low discrepancy uniform design may yield a design with a large discrepancy. We illustrate how this may occur. We also suggest some remedies. One remedy is to ensure that the original uniform design has optimal one-dimensional projections, but this remedy works best if the design is dense, that is, if the ratio of the sample size to the dimension of the random variable is relatively large. Another remedy is to use the transformed design as the input to a coordinate-exchange algorithm that optimizes the desired discrepancy, and this works for both dense and sparse designs. The effectiveness of these two remedies is illustrated via simulation.


1 Introduction

Professor Kai-Tai Fang and his collaborators have demonstrated the effectiveness of low discrepancy points as space filling designs [4,5,6, 11]. They have promoted discrepancy as a quality measure for statistical experimental designs to the statistics, science, and engineering communities [7,8,9,10].

Low discrepancy uniform designs, \(\mathscr {U}= \{\varvec{u}_i\}_{i=1}^N\), are typically constructed so that their empirical distributions, \(F_\mathscr {U}\), approximate \(F_{unif }\), the uniform distribution on the unit cube, \((0,1)^d\). The discrepancy measures the magnitude of \(F_{unif }-F_\mathscr {U}\). The uniform design is a commonly used space filling design for computer experiments [5] and can be constructed using JMP® [20].

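When the target probability distribution for the design, \(F\), defined over the experimental domain \(\varOmega \), is not the uniform distribution on the unit cube, then the desired design, \(\mathscr {X}\), is typically constructed by transforming a low discrepancy uniform design, i.e.,

\[\mathscr{X} = \varvec{\varPsi}(\mathscr{U}) := \{\varvec{\varPsi}(\varvec{u}_i)\}_{i=1}^N, \qquad \varvec{\varPsi}: (0,1)^d \rightarrow \varOmega. \tag{5.1}\]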

Note that \(F\) may differ from \(F_{unif }\) because \(\varOmega \ne (0,1)^d\) and/or \(F\) is non-uniform. A natural transformation, \(\varvec{\varPsi }(\varvec{u})=\bigl (\varPsi _1(u_1),\ldots ,\varPsi _d(u_d) \bigr )\), when \(F\) has independent marginals, is the inverse distribution transformation:

\[\varPsi_j(u_j) = F_j^{-1}(u_j), \quad j = 1, \ldots, d, \qquad \text{where } F(\varvec{x}) = F_1(x_1) \cdots F_d(x_d). \tag{5.2}\]

A number of transformation methods for different distributions can be found in [2] and [11, Chap. 1].

This chapter addresses the question of whether the design \(\mathscr {X}\) resulting from transformation (5.1) of a low discrepancy design, \(\mathscr {U}\), is itself low discrepancy with respect to the target distribution \(F\). In other words,

(Q) Is \(D(\mathscr {X},F,K)\) small whenever \(D(\mathscr {U},F_{unif },K_{unif })\) is small?

We show that the answer may be yes or no, depending on how the question is understood. We discuss both cases. For illustrative purposes, we consider the situation where \(F\) is the standard multivariate normal distribution, \(F_{normal }\).

In the next section, we define the discrepancy and motivate it from three perspectives. In Sect. 5.3 we give a simple condition under which the answer to (Q) is yes. But, in Sect. 5.4 we show that under more practical assumptions the answer to (Q) is no. An example illustrates what can go wrong. Section 5.5 provides a coordinate exchange algorithm that improves the discrepancy of a candidate design. Simulation results illustrate the performance of this algorithm. We conclude with a brief discussion.

2 The Discrepancy

Experimental design theory based on discrepancy assumes an experimental region, \(\varOmega \), and a target probability distribution, \(F:\varOmega \rightarrow [0,1]\), which is known a priori. We assume that \(F\) has a probability density, \(\varrho \). It is convenient to also work with measures, \(\nu \), defined on \(\varOmega \). If \(\nu \) is a probability measure, then the associated probability distribution is given by \(F(\varvec{x}) = \nu ((-\varvec{\infty },\varvec{x}])\). The Dirac measure, \(\delta _{\varvec{x}}\), assigns unit measure to the set \(\{\varvec{x}\}\) and zero measure to sets not containing \(\varvec{x}\). A design, \(\mathscr {X}= \{\varvec{x}_i\}_{i=1}^N\), is a finite set of points with empirical distribution \(F_{\mathscr {X}} = N^{-1} \sum _{i=1}^N \mathbbm {1}_{(-\varvec{\infty },\varvec{x}_i]}\) and empirical measure \(\nu _{\mathscr {X}} = N^{-1} \sum _{i=1}^N \delta _{\varvec{x}_i}\).

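Our notation for the discrepancy takes any of the forms

\[D(\mathscr{X},F,K), \quad D(F_{\mathscr{X}},F,K), \quad D(\nu_{\mathscr{X}},\nu,K), \quad D(\mathscr{X},\nu,K), \quad D(\mathscr{X},\varrho,K),\]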

all of which mean the same thing. The first argument always refers to the design, the second argument always refers to the target, and the third argument is a symmetric, positive definite kernel, which is explained below. We abuse the discrepancy notation because sometimes it is convenient to refer to the design as a set, \(\mathscr {X}\), other times by its empirical distribution, \(F_{\mathscr {X}}\), and other times by its empirical measure, \(\nu _{\mathscr {X}}\). Likewise, sometimes it is convenient to refer to the target as a probability measure, \(\nu \), other times by its distribution function, F, and other times by its density function, \(\varrho \).

In the remainder of this section we provide three interpretations of the discrepancy, summarized in Table 5.1. These results are presented in various places, including [14, 15]. One interpretation of discrepancy is the norm of \(\nu - \nu _{\mathscr {X}}\). The second and third interpretations consider the problem of evaluating the mean of a random variable \(Y=f(\varvec{X})\), or equivalently a multidimensional integral

\[\mu = \mathbb{E}(Y) = \mathbb{E}[f(\varvec{X})] = \int_{\varOmega} f(\varvec{x})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{x}, \tag{5.3}\]

where \(\varvec{X}\) is a random vector with density \(\varrho \). The second interpretation of the discrepancy is the worst-case cubature error for integrands, f, in the unit ball of a Hilbert space. The third interpretation is the root mean squared cubature error for integrands, f, which are realizations of a stochastic process.

2.1 Definition in Terms of a Norm on a Hilbert Space of Measures

Let \((\mathscr {M}, \left\langle \cdot , \cdot \right\rangle _{\mathscr {M}})\) be a Hilbert space of measures defined on the experimental region, \(\varOmega \). Assume that \(\mathscr {M}\) includes all Dirac measures. Define the kernel function \(K:\varOmega \times \varOmega \rightarrow \mathbb {R}\) in terms of inner products of Dirac measures:

\[K(\varvec{t},\varvec{x}) := \left\langle \delta_{\varvec{t}}, \delta_{\varvec{x}} \right\rangle_{\mathscr{M}}, \qquad \varvec{t},\varvec{x} \in \varOmega. \tag{5.4}\]

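The squared distance between two Dirac measures in \(\mathscr {M}\) is then

\[\left\Vert \delta_{\varvec{x}} - \delta_{\varvec{t}} \right\Vert_{\mathscr{M}}^2 = K(\varvec{x},\varvec{x}) - 2K(\varvec{x},\varvec{t}) + K(\varvec{t},\varvec{t}). \tag{5.5}\]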

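It is straightforward to show that K is symmetric in its arguments and positive definite, namely:

\[K(\varvec{t},\varvec{x}) = K(\varvec{x},\varvec{t}) \quad \forall \varvec{t},\varvec{x} \in \varOmega, \qquad \sum_{i,k=1}^{n} c_i c_k K(\varvec{x}_i,\varvec{x}_k) = \Bigl\Vert \sum_{i=1}^n c_i \delta_{\varvec{x}_i} \Bigr\Vert_{\mathscr{M}}^2 \ge 0 \quad \forall n \in \mathbb{N},\ \varvec{c} \in \mathbb{R}^n,\ \varvec{x}_1,\ldots,\varvec{x}_n \in \varOmega. \tag{5.6}\]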

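The inner product of arbitrary measures \(\lambda , \nu \in \mathscr {M}\) can be expressed in terms of a double integral of the kernel, K:

\[\left\langle \lambda, \nu \right\rangle_{\mathscr{M}} = \int_{\varOmega \times \varOmega} K(\varvec{t},\varvec{x}) \, \lambda(\mathrm{d}\varvec{t}) \, \nu(\mathrm{d}\varvec{x}). \tag{5.7}\]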

This can be established directly from (5.4) for \(\mathscr {M}_0\), the vector space spanned by all Dirac measures. Letting \(\mathscr {M}\) be the closure of the pre-Hilbert space \(\mathscr {M}_0\) then yields (5.7).

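The discrepancy of the design \(\mathscr {X}\) with respect to the target probability measure \(\nu \) using the kernel K can be defined as the norm of the difference between the target probability measure, \(\nu \), and the empirical probability measure for \(\mathscr {X}\):

\[D^2(\mathscr{X},\nu,K) := \left\Vert \nu - \nu_{\mathscr{X}} \right\Vert_{\mathscr{M}}^2 = \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\nu(\mathrm{d}\varvec{t})\,\nu(\mathrm{d}\varvec{x}) - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\nu(\mathrm{d}\varvec{t}) + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k).\]

The formula for the discrepancy may be written equivalently in terms of the probability distribution, F, or the probability density, \(\varrho \), corresponding to the target probability measure, \(\nu \):

\[\begin{aligned} D^2(\mathscr{X},F,K) &= \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\mathrm{d}F(\varvec{t})\,\mathrm{d}F(\varvec{x}) - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\mathrm{d}F(\varvec{t}) + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k) \\ &= \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\varrho(\varvec{t})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{t}\,\mathrm{d}\varvec{x} - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\varrho(\varvec{t})\,\mathrm{d}\varvec{t} + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k). \end{aligned} \tag{5.8}\]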

Typically the computational cost of evaluating \(K(\varvec{t},\varvec{x})\) for any \((\varvec{t},\varvec{x}) \in \varOmega ^2\) is \(\mathscr {O}(d)\), where \(\varvec{t}\) is a d-vector. Assuming that the integrals above can be evaluated at a cost of \(\mathscr {O}(d)\), the computational cost of evaluating \(D(\mathscr {X},\nu ,K)\) is \(\mathscr {O}(dN^2)\).

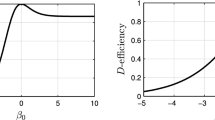

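The formulas for the discrepancy in (5.8) depend inherently on the choice of the kernel K. That choice is key to answering question (Q). An often used kernel is

\[K(\varvec{t},\varvec{x}) = \prod_{k=1}^d \left[1 + \tfrac{1}{2}\left(|t_k| + |x_k| - |x_k - t_k|\right)\right]. \tag{5.9}\]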

This kernel is plotted in Fig. 5.1 for \(d=1\). The distance between two Dirac measures by (5.5) for this kernel in one dimension is

\[\left\Vert \delta_{x} - \delta_{t} \right\Vert_{\mathscr{M}} = \sqrt{|x-t|}.\]

Fig. 5.1 The kernel defined in (5.9) for \(d=1\)

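The discrepancy for the uniform distribution on the unit cube defined in terms of the above kernel is expressed as

\[D^2(\mathscr{U},F_{unif},K) = \left(\frac{4}{3}\right)^d - \frac{2}{N}\sum_{i=1}^N\prod_{k=1}^d\left(1 + u_{ik} - \frac{u_{ik}^2}{2}\right) + \frac{1}{N^2}\sum_{i,j=1}^N\prod_{k=1}^d\left[1 + \min(u_{ik},u_{jk})\right].\]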
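The one-dimensional facts above can be checked numerically. The Python sketch below assumes that (5.9) is the product kernel \(\prod_{k=1}^d[1+\frac{1}{2}(|t_k|+|x_k|-|x_k-t_k|)]\); the function names are our own. It evaluates the Dirac distance (5.5) and the squared uniform discrepancy (5.8), using the fact that on \((0,1)^2\) the one-dimensional factor is \(1+\min(t,x)\), so that \(\int_0^1 [1+\min(t,x)]\,\mathrm{d}t = 1 + x - x^2/2\) and the double integral equals \(4/3\).

```python
import math


def kernel_59(t, x):
    """Product kernel assumed for (5.9): prod_k [1 + (|t_k| + |x_k| - |x_k - t_k|) / 2]."""
    return math.prod(1.0 + 0.5 * (abs(tk) + abs(xk) - abs(xk - tk)) for tk, xk in zip(t, x))


def dirac_distance(x, t):
    """||delta_x - delta_t||_M computed from (5.5)."""
    return math.sqrt(kernel_59(x, x) - 2.0 * kernel_59(x, t) + kernel_59(t, t))


def unif_discrepancy_sq(design):
    """Squared discrepancy (5.8) of a design in (0,1)^d for the uniform target.

    On (0,1)^2 the one-dimensional factor is 1 + min(t, x), so
    int_0^1 (1 + min(t, x)) dt = 1 + x - x^2/2 and the double integral is 4/3.
    """
    n, d = len(design), len(design[0])
    mean_term = sum(math.prod(1.0 + u - 0.5 * u * u for u in point) for point in design)
    pair_term = sum(kernel_59(p, q) for p in design for q in design)
    return (4.0 / 3.0) ** d - 2.0 * mean_term / n + pair_term / n ** 2


# In one dimension the Dirac distance is sqrt(|x - t|), even across the origin.
print(dirac_distance([-1.0], [2.0]), math.sqrt(3.0))
# The midpoint design {(2i - 1)/(2N)} has squared discrepancy 1/(12 N^2) when d = 1.
midpoints = [[(2 * i + 1) / 20] for i in range(10)]
print(unif_discrepancy_sq(midpoints), 1 / 1200)
```

Every term is a product over coordinates, so the cost is \(\mathscr{O}(dN^2)\), as noted above.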

2.2 Definition in Terms of a Deterministic Cubature Error Bound

Now let \((\mathscr {H}, \left\langle \cdot , \cdot \right\rangle _{\mathscr {H}})\) be a reproducing kernel Hilbert space (RKHS) of functions [1], \(f: \varOmega \rightarrow \mathbb {R}\), which appear as the integrand in (5.3). By definition, the reproducing kernel, K, is the unique function defined on \(\varOmega \times \varOmega \) with the properties that \(K(\cdot , \varvec{x})\in \mathscr {H}\) for any \(\varvec{x}\in \varOmega \) and \(f(\varvec{x})=\left\langle K(\cdot ,\varvec{x}), f \right\rangle _{\mathscr {H}}\). The second property means that K reproduces function values via the inner product. It can be verified that K is symmetric in its arguments and positive definite as in (5.6).

The integral \(\mu = \int _{\varOmega } f(\varvec{x}) \, \varrho (\varvec{x}) \, \mathrm {d}\varvec{x}\), which was identified as \(\mathbb {E}[f(\varvec{X})]\) in (5.3), can be approximated by a sample mean:

\[\widehat{\mu} = \frac{1}{N}\sum_{i=1}^N f(\varvec{x}_i). \tag{5.10}\]

The quality of this approximation to the integral, i.e., this cubature, depends in part on how well the empirical distribution of the design, \(\mathscr {X}= \{\varvec{x}_i\}_{i=1}^N\), matches the target distribution F associated with the density function \(\varrho \).

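Define the cubature error as

\[{{\,\mathrm{err}\,}}(f,\mathscr{X}) := \mu - \widehat{\mu} = \int_{\varOmega} f(\varvec{x})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{x} - \frac{1}{N}\sum_{i=1}^N f(\varvec{x}_i). \tag{5.11}\]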

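Under modest assumptions on the reproducing kernel, \({{\,\mathrm{err}\,}}(\cdot , \mathscr {X})\) is a bounded, linear functional. By the Riesz representation theorem, there exists a unique representer, \(\xi \in \mathscr {H}\), such that

\[{{\,\mathrm{err}\,}}(f,\mathscr{X}) = \left\langle \xi, f \right\rangle_{\mathscr{H}} \qquad \forall f \in \mathscr{H}.\]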

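The reproducing kernel allows us to write down an explicit formula for that representer, namely, \(\xi (\varvec{x})=\left\langle K(\cdot ,\varvec{x}), \xi \right\rangle _{\mathscr {H}}=\left\langle \xi , K(\cdot ,\varvec{x}) \right\rangle _{\mathscr {H}}={{\,\mathrm{err}\,}}(K(\cdot ,\varvec{x}),\mathscr {X})\). By the Cauchy-Schwarz inequality, there is a tight bound on the squared cubature error, namely

\[\left|{{\,\mathrm{err}\,}}(f,\mathscr{X})\right|^2 = \left|\left\langle \xi, f \right\rangle_{\mathscr{H}}\right|^2 \le \left\Vert \xi \right\Vert_{\mathscr{H}}^2 \, \left\Vert f \right\Vert_{\mathscr{H}}^2. \tag{5.12}\]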

The first term on the right describes the contribution made by the quality of the cubature rule, while the second term describes the contribution to the cubature error made by the nature of the integrand.

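The squared norm of the representer of the error functional is

\[\left\Vert \xi \right\Vert_{\mathscr{H}}^2 = \left\langle \xi, \xi \right\rangle_{\mathscr{H}} = {{\,\mathrm{err}\,}}(\xi,\mathscr{X}) = \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\varrho(\varvec{t})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{t}\,\mathrm{d}\varvec{x} - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\varrho(\varvec{t})\,\mathrm{d}\varvec{t} + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k).\]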

This formula for \(\left\Vert \xi \right\Vert _{\mathscr {H}}^2\) coincides with the formula for \(D^2(\mathscr {X},F,K)\) in (5.8). Thus, the tight, worst-case cubature error bound in (5.12) can be written in terms of the discrepancy as

\[\left|{{\,\mathrm{err}\,}}(f,\mathscr{X})\right| \le D(\mathscr{X},F,K)\,\left\Vert f \right\Vert_{\mathscr{H}}.\]

This implies our second interpretation of the discrepancy in Table 5.1.

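We now identify the RKHS for the kernel K defined in (5.9). Let \((\varvec{a},\varvec{b})\) be some d-dimensional box containing the origin in the interior or on the boundary. For any \(\mathfrak {u}\subseteq \{1, \ldots , d\}\), define \(\partial ^\mathfrak {u}f(\varvec{x}_\mathfrak {u}) : = \partial ^{|\mathfrak {u}|}f(\varvec{x}_\mathfrak {u},\varvec{0})/\partial \varvec{x}_\mathfrak {u}\), the mixed first-order partial derivative of f with respect to the \(x_j\) for \(j\in \mathfrak {u}\), while setting \(x_j=0\) for all \(j \notin \mathfrak {u}\). Here, \(\varvec{x}_{\mathfrak {u}} = (x_j)_{j \in \mathfrak {u}}\), and \(|\mathfrak {u}|\) denotes the cardinality of \(\mathfrak {u}\). By convention, \(\partial ^{\emptyset }f := f(\varvec{0})\). The inner product for the reproducing kernel K defined in (5.9) is defined as

\[\left\langle f, g \right\rangle_{\mathscr{H}} = \sum_{\mathfrak{u}\subseteq\{1,\ldots,d\}} \int_{(\varvec{a}_{\mathfrak{u}},\varvec{b}_{\mathfrak{u}})} \partial^{\mathfrak{u}} f(\varvec{x}_{\mathfrak{u}})\,\partial^{\mathfrak{u}} g(\varvec{x}_{\mathfrak{u}})\,\mathrm{d}\varvec{x}_{\mathfrak{u}}, \tag{5.13}\]

where the term for \(\mathfrak{u}=\emptyset\) is read as \(\partial^{\emptyset}f\,\partial^{\emptyset}g = f(\varvec{0})\,g(\varvec{0})\).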

To establish that the inner product defined above corresponds to the reproducing kernel K defined in (5.9), we note that

Thus, \(K(\cdot ,\varvec{t})\) possesses sufficient regularity to have finite \(\mathscr {H}\)-norm. Furthermore, K exhibits the reproducing property for the above inner product because

2.3 Definition in Terms of the Root Mean Squared Cubature Error

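Assume \(\varOmega \) is a measurable subset in \(\mathbb {R}^d\) and F is the target probability distribution defined on \(\varOmega \) as defined earlier. Now, let \(f: \varOmega \rightarrow \mathbb {R}\) be a stochastic process with a constant pointwise mean, i.e.,

\[\mathbb{E}[f(\varvec{x})] = \int_{\mathscr{A}} f(\varvec{x},\omega)\,\mathbb{P}(\mathrm{d}\omega) = m \qquad \forall \varvec{x} \in \varOmega,\]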

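where \(\mathscr {A}\) is the sample space for this stochastic process. Now we interpret K as the covariance kernel for the stochastic process:

\[K(\varvec{t},\varvec{x}) := \mathbb{E}\bigl\{[f(\varvec{t}) - m]\,[f(\varvec{x}) - m]\bigr\} = \operatorname{cov}\bigl(f(\varvec{t}), f(\varvec{x})\bigr) \qquad \forall \varvec{t},\varvec{x} \in \varOmega.\]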

It is straightforward to show that the kernel function is symmetric and positive definite.

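Define the error functional \({{\,\mathrm{err}\,}}(\cdot ,\mathscr {X})\) in the same way as in (5.11). Since \(\int_{\varOmega} \varrho(\varvec{x})\,\mathrm{d}\varvec{x} = 1\), the error for the constant m vanishes, so \({{\,\mathrm{err}\,}}(f,\mathscr{X}) = {{\,\mathrm{err}\,}}(f-m,\mathscr{X})\). Expanding the square and exchanging expectation with integration and summation, the mean squared error is

\[\mathbb{E}\bigl[{{\,\mathrm{err}\,}}(f,\mathscr{X})^2\bigr] = \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\varrho(\varvec{t})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{t}\,\mathrm{d}\varvec{x} - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\varrho(\varvec{t})\,\mathrm{d}\varvec{t} + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k).\]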

Therefore, we can identify the discrepancy \(D(\mathscr {X}, F, K)\) defined in (5.8) with the root mean squared error:

\[D(\mathscr{X},F,K) = \sqrt{\mathbb{E}\bigl[{{\,\mathrm{err}\,}}(f,\mathscr{X})^2\bigr]}.\]

3 When a Transformed Low Discrepancy Design Also Has Low Discrepancy

Having motivated the definition of discrepancy in (5.8) from three perspectives, we now turn our attention to question (Q), namely, whether a transformation of low discrepancy points with respect to the uniform distribution yields low discrepancy points with respect to the new target distribution. In this section, we show a positive result, yet recognize some qualifications.

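Consider some symmetric, positive definite kernel, \(K_{unif }: (0,1)^d \times (0,1)^d \rightarrow \mathbb {R}\), some uniform design \(\mathscr {U}\), some other domain, \(\varOmega \), some other target distribution, F, and some invertible, differentiable transformation \(\varvec{\varPsi }:(0,1)^d \rightarrow \varOmega \) as defined in (5.1). Then the squared discrepancy of the uniform design can be expressed according to (5.8) as follows:

\[\begin{aligned} D^2(\mathscr{U},F_{unif},K_{unif}) &= \int_{(0,1)^{2d}} K_{unif}(\varvec{t},\varvec{x})\,\mathrm{d}\varvec{t}\,\mathrm{d}\varvec{x} - \frac{2}{N}\sum_{i=1}^N \int_{(0,1)^d} K_{unif}(\varvec{t},\varvec{u}_i)\,\mathrm{d}\varvec{t} + \frac{1}{N^2}\sum_{i,k=1}^N K_{unif}(\varvec{u}_i,\varvec{u}_k) \\ &= \int_{\varOmega\times\varOmega} K(\varvec{t},\varvec{x})\,\varrho(\varvec{t})\,\varrho(\varvec{x})\,\mathrm{d}\varvec{t}\,\mathrm{d}\varvec{x} - \frac{2}{N}\sum_{i=1}^N \int_{\varOmega} K(\varvec{t},\varvec{x}_i)\,\varrho(\varvec{t})\,\mathrm{d}\varvec{t} + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k) \\ &= D^2(\mathscr{X},F,K). \end{aligned}\]

Here \(\varvec{x}_i = \varvec{\varPsi}(\varvec{u}_i)\), and the second equality follows from the change of variables \(\varvec{t} \mapsto \varvec{\varPsi}^{-1}(\varvec{t})\), \(\varvec{x} \mapsto \varvec{\varPsi}^{-1}(\varvec{x})\), where the kernel K is defined as

\[K(\varvec{t},\varvec{x}) = K_{unif}\bigl(\varvec{\varPsi}^{-1}(\varvec{t}),\varvec{\varPsi}^{-1}(\varvec{x})\bigr), \tag{5.14a}\]

and provided that the density, \(\varrho \), corresponding to the target distribution, F, satisfies

\[\varrho(\varvec{x}) = \left|\det\left(\frac{\partial \varvec{\varPsi}^{-1}(\varvec{x})}{\partial \varvec{x}}\right)\right|. \tag{5.14b}\]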

The above argument is summarized in the following theorem.

Theorem 5.1

Suppose that the design \(\mathscr {X}\) is constructed by transforming the design \(\mathscr {U}\) according to the transformation (5.1). Also suppose that conditions (5.14) are satisfied. Then \(\mathscr {X}\) has the same discrepancy with respect to the target distribution, F, defined by the kernel K as does the original design \(\mathscr {U}\) with respect to the uniform distribution and defined by the kernel \(K_{unif }\). That is,

\[D(\mathscr{X},F,K) = D(\mathscr{U},F_{unif},K_{unif}).\]

As a consequence, under conditions (5.14), question (Q) has a positive answer.
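Theorem 5.1 is essentially a change of variables, which can be seen numerically in one dimension. The sketch below is our own illustration and assumes that \(K_{unif}\) has the centered one-dimensional factor \(1+\frac{1}{2}(|s-\frac{1}{2}|+|u-\frac{1}{2}|-|u-s|)\) (the form we take for (5.15)), \(\varPsi=\varPhi^{-1}\), and K is defined by (5.14a), i.e., \(K(t,x)=K_{unif}(\varPhi(t),\varPhi(x))\). The uniform side uses the closed-form centered discrepancy; the normal side approximates the integrals in (5.8) with a midpoint rule on \([-8,8]\), so the two values agree only up to quadrature error.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def k_unif(s, u):
    """One-dimensional centered kernel factor (assumed form of (5.15))."""
    return 1.0 + 0.5 * (abs(s - 0.5) + abs(u - 0.5) - abs(u - s))


def disc_sq_uniform(us):
    """Closed-form squared discrepancy on (0,1) for the kernel above."""
    n = len(us)
    h = sum(1.0 + 0.5 * abs(u - 0.5) - 0.5 * (u - 0.5) ** 2 for u in us)
    pairs = sum(k_unif(a, b) for a in us for b in us)
    return 13.0 / 12.0 - 2.0 * h / n + pairs / n ** 2


def disc_sq_normal(xs, lo=-8.0, hi=8.0, m=800):
    """Squared discrepancy (5.8) for the N(0,1) target with K(t, x) = k_unif(Phi(t), Phi(x)).

    The integrals are approximated by an m-point midpoint rule on [lo, hi].
    """
    step = (hi - lo) / m
    nodes = [lo + (j + 0.5) * step for j in range(m)]
    cdf = [STD_NORMAL.cdf(t) for t in nodes]            # Phi at the quadrature nodes
    wts = [STD_NORMAL.pdf(t) * step for t in nodes]      # density times step width
    c = sum(wa * wb * k_unif(pa, pb) for pa, wa in zip(cdf, wts) for pb, wb in zip(cdf, wts))
    n = len(xs)
    px = [STD_NORMAL.cdf(x) for x in xs]
    h = sum(sum(w * k_unif(p, q) for p, w in zip(cdf, wts)) for q in px)
    pairs = sum(k_unif(a, b) for a in px for b in px)
    return c - 2.0 * h / n + pairs / n ** 2


us = [0.1, 0.35, 0.6, 0.9]
xs = [STD_NORMAL.inv_cdf(u) for u in us]   # inverse normal transformation, d = 1
d_unif, d_norm = disc_sq_uniform(us), disc_sq_normal(xs)
print(d_unif, d_norm)
```

Replacing K by the kernel (5.9) on the normal side breaks condition (5.14a), and the two numbers then no longer need to agree; that is the situation studied in Sect. 5.4.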

Condition (5.14b) may be easily satisfied. For example, it is automatically satisfied by the inverse cumulative distribution transform (5.2). Condition (5.14a) is simply a matter of definition of the kernel, K, but this definition has consequences. From the perspective of Sect. 5.2.1, changing the kernel from \(K_{unif }\) to K means changing the definition of the distance between two Dirac measures. From the perspective of Sect. 5.2.2, changing the kernel from \(K_{unif }\) to K means changing the definition of the Hilbert space of integrands, f, in (5.3). From the perspective of Sect. 5.2.3, changing the kernel from \(K_{unif }\) to K means changing the definition of the covariance kernel for the integrands, f, in (5.3).

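To illustrate this point, consider a cousin of the kernel in (5.9), which places the reference point at \(\varvec{0.5} = (0.5, \ldots , 0.5)\), the center of the unit cube \((0,1)^d\):

\[K_{unif}(\varvec{t},\varvec{x}) = \prod_{k=1}^d \left[1 + \tfrac{1}{2}\left(|t_k - 0.5| + |x_k - 0.5| - |x_k - t_k|\right)\right]. \tag{5.15}\]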

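This kernel defines the centered \(L^2\)-discrepancy [13]. Consider the standard multivariate normal distribution, \(F_{normal }\), and choose the inverse normal distribution transformation,

\[\varvec{\varPsi}(\varvec{u}) = \bigl(\varPhi^{-1}(u_1), \ldots, \varPhi^{-1}(u_d)\bigr), \tag{5.16}\]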

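where \(\varPhi \) denotes the standard normal distribution function. Then condition (5.14b) is automatically satisfied, and condition (5.14a) is satisfied by defining

\[K(\varvec{t},\varvec{x}) = K_{unif}\bigl((\varPhi(t_1),\ldots,\varPhi(t_d)),\,(\varPhi(x_1),\ldots,\varPhi(x_d))\bigr).\]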

In one dimension, the distance between two Dirac measures defined using the kernel \(K_{unif }\) above is \(\Vert \delta _{x} - \delta _{t} \Vert _{\mathscr {M}} = \sqrt{|x-t|}\), whereas the distance defined using the kernel K above is \(\Vert \delta _{x} - \delta _{t} \Vert _{\mathscr {M}} = \sqrt{|\varPhi (x)-\varPhi (t)|}\). Under kernel K, the distance between two Dirac measures is bounded, even though the domain of the distribution is unbounded. Such an assumption may be unpalatable.
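The contrast in this paragraph can be made concrete on the real line. The sketch below (function names are ours) compares the distance \(\sqrt{|x-t|}\) that kernel (5.9) induces between Dirac measures on \(\mathbb{R}\) with the distance \(\sqrt{|\varPhi(x)-\varPhi(t)|}\) induced by the transformed kernel K; the latter can never exceed 1, however far apart the points are.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal distribution function


def dist_kernel_59(x, t):
    """One-dimensional Dirac distance sqrt(|x - t|) induced by the kernel (5.9)."""
    return math.sqrt(abs(x - t))


def dist_transformed(x, t):
    """One-dimensional Dirac distance sqrt(|Phi(x) - Phi(t)|) induced by K = K_unif(Phi, Phi)."""
    return math.sqrt(abs(PHI(x) - PHI(t)))


for t in (1.0, 10.0, 100.0):
    # the first distance grows like sqrt(t); the second is at most sqrt(1/2) from the origin
    print(t, dist_kernel_59(0.0, t), dist_transformed(0.0, t))
```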

4 Do Transformed Low Discrepancy Points Have Low Discrepancy More Generally

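The discussion above indicates that condition (5.14a) can be too restrictive. We would like to compare the discrepancies of designs under kernels that do not satisfy that restriction. In particular, we consider the centered \(L^2\)-discrepancy for uniform designs on \((0,1)^d\) defined by the kernel in (5.15):

\[D^2(\mathscr{U},F_{unif},K_{unif}) = \left(\frac{13}{12}\right)^d - \frac{2}{N}\sum_{i=1}^N\prod_{k=1}^d\left(1 + \tfrac{1}{2}|u_{ik} - 0.5| - \tfrac{1}{2}|u_{ik} - 0.5|^2\right) + \frac{1}{N^2}\sum_{i,j=1}^N\prod_{k=1}^d\left(1 + \tfrac{1}{2}|u_{ik} - 0.5| + \tfrac{1}{2}|u_{jk} - 0.5| - \tfrac{1}{2}|u_{ik} - u_{jk}|\right),\]

where again, \(F_{unif }\) denotes the uniform distribution on \((0,1)^d\), and \(\mathscr {U}= \{\varvec{u}_i\}_{i=1}^N\) denotes a design on \((0,1)^d\).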

Changing perspectives slightly, if \(F_{unif }'\) denotes the uniform distribution on the cube of volume one centered at the origin, \((-0.5,0.5)^d\), and the design \(\mathscr {U}'\) is constructed by subtracting \(\varvec{0.5}\) from each point in the design \(\mathscr {U}\):

\[\mathscr{U}' = \{\varvec{u}_i - \varvec{0.5}\}_{i=1}^N, \tag{5.17}\]

then

\[D(\mathscr{U},F_{unif},K_{unif}) = D(\mathscr{U}',F_{unif}',K),\]

where K is the kernel defined in (5.9).

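Recall that the origin is a special point in the definition of the inner product for the Hilbert space with K as its reproducing kernel in (5.13). Therefore, this K from (5.9) is appropriate for defining the discrepancy for target distributions centered at the origin, such as the standard normal distribution, \(F_{normal }\). Such a discrepancy is

\[\begin{aligned} D^2(\mathscr{X},F_{normal},K) &= \left(1 + \frac{\sqrt{2}-1}{\sqrt{\pi}}\right)^d - \frac{2}{N}\sum_{i=1}^N\prod_{k=1}^d\left[1 + \phi(0) - \phi(x_{ik}) + |x_{ik}|\,\varPhi(-|x_{ik}|)\right] \\ &\quad + \frac{1}{N^2}\sum_{i,j=1}^N\prod_{k=1}^d\left[1 + \tfrac{1}{2}\left(|x_{ik}| + |x_{jk}| - |x_{ik} - x_{jk}|\right)\right]. \end{aligned} \tag{5.18}\]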

Here, \(\phi \) is the standard normal probability density function. The derivation of (5.18) is given in the Appendix.
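A direct implementation of the normal discrepancy is handy for experimenting with the designs in this section. The sketch below assumes that (5.9) is the product kernel \(\prod_k[1+\frac{1}{2}(|t_k|+|x_k|-|x_k-t_k|)]\); under that assumption a short calculation gives \(\int \widetilde{K}(t,x)\phi(t)\,\mathrm{d}t = 1+\phi(0)-\phi(x)+|x|\varPhi(-|x|)\) and a double integral of \(1+(\sqrt{2}-1)/\sqrt{\pi}\), which we use here instead of the Appendix derivation. Function names are ours.

```python
import math
from statistics import NormalDist

SQRT_2PI = math.sqrt(2.0 * math.pi)


def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / SQRT_2PI


def upper_tail(z):
    """Phi(-z) = 1 - Phi(z), computed with erfc for accuracy in the tail."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def k_tilde(t, x):
    """One-dimensional factor of the kernel assumed for (5.9)."""
    return 1.0 + 0.5 * (abs(t) + abs(x) - abs(x - t))


def h(x):
    """int k_tilde(t, x) phi(t) dt = 1 + phi(0) - phi(x) + |x| Phi(-|x|)."""
    ax = abs(x)
    return 1.0 + phi(0.0) - phi(x) + ax * upper_tail(ax)


C = 1.0 + (math.sqrt(2.0) - 1.0) / math.sqrt(math.pi)  # int int k_tilde(t, x) phi(t) phi(x) dt dx


def normal_discrepancy_sq(design):
    """Squared discrepancy (5.8) of `design` (a list of d-vectors) for the N(0, I_d) target."""
    n, d = len(design), len(design[0])
    mean_term = sum(math.prod(h(v) for v in x) for x in design)
    pair_term = sum(math.prod(k_tilde(a, b) for a, b in zip(s, x)) for s in design for x in design)
    return C ** d - 2.0 * mean_term / n + pair_term / n ** 2


# Usage: a single point at the origin versus the inverse-normal midpoint design in d = 1.
N = 200
design = [[NormalDist().inv_cdf((2 * i + 1) / (2 * N))] for i in range(N)]
print(normal_discrepancy_sq([[0.0]]), normal_discrepancy_sq(design))
```

The factors depend on the coordinates only through absolute values, so the discrepancy is unchanged when every point is reflected through the origin, which is the symmetry discussed in Sect. 5.7.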

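We numerically compare the discrepancy of a uniform design, \(\mathscr {U}'\), given by (5.17), and the discrepancy of a design constructed by the inverse normal transformation, i.e., \(\mathscr {X}= \varvec{\varPsi }(\mathscr {U})\) for \(\varvec{\varPsi }\) in (5.16), where the \(\mathscr {U}\) leading to both \(\mathscr {U}'\) and \(\mathscr {X}\) is identical. We do not expect the magnitudes of the discrepancies to be the same, but we ask

(Q′) For any two uniform designs \(\mathscr {U}\) and \(\mathscr {V}\), does \(D(\mathscr {U}',F_{unif }',K) \le D(\mathscr {V}',F_{unif }',K)\) imply \(D(\varvec{\varPsi }(\mathscr {U}),F_{normal },K) \le D(\varvec{\varPsi }(\mathscr {V}),F_{normal },K)\)?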

Again, K is given by (5.9). So we are actually comparing discrepancies defined by the same kernel, but this kernel does not satisfy (5.14a) for the transformation that maps \(\mathscr {U}'\) to \(\mathscr {X}\).

Let \(d=5\) and \(N=50\). We generate \(B=20\) independent and identically distributed (IID) uniform designs, \(\mathscr {U}\) with \(N=50\) points on \((0,1)^5\) and then use the inverse distribution transformation to obtain IID random \(N(\mathbf{0}, {\mathsf I}_5)\) designs, \(\mathscr {X}= \varvec{\varPsi }(\mathscr {U})\). Figure 5.2 plots the discrepancies for normal designs, \(D(\varvec{\varPsi }(\mathscr {U}),F_{normal }, K)\), against the discrepancies for the uniform designs, \(D(\mathscr {U},F_{unif },K_{unif }) = D(\mathscr {U}',F_{unif }',K)\) for each of the \(B=20\) designs. Question (Q′) has a positive answer if and only if the lines passing through any two points on this plot all have non-negative slopes. However, that is not the case. Thus (Q′) has a negative answer.
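The experiment behind Fig. 5.2 can be sketched as follows. This is not the authors' code: the seed, the function names, and the kernel form (the product kernel we assume for (5.9)) are our assumptions, so the numbers will not reproduce the figure. For each of B IID uniform designs it computes \(D(\mathscr{U}',F_{unif}',K)\) and \(D(\varvec{\varPsi}(\mathscr{U}),F_{normal},K)\), then counts the pairs of designs that the two discrepancies order differently; each such pair is a counterexample to (Q′).

```python
import math
import random
from statistics import NormalDist

INV_PHI = NormalDist().inv_cdf
SQRT_2PI = math.sqrt(2.0 * math.pi)
C_CENTERED = 13.0 / 12.0                                       # uniform on (-1/2, 1/2)
C_NORMAL = 1.0 + (math.sqrt(2.0) - 1.0) / math.sqrt(math.pi)   # standard normal


def k_tilde(t, x):
    """One-dimensional factor of the kernel assumed for (5.9)."""
    return 1.0 + 0.5 * (abs(t) + abs(x) - abs(x - t))


def h_centered(x):
    """int k_tilde(t, x) dt over t in (-1/2, 1/2)."""
    return 1.0 + 0.5 * (abs(x) - x * x)


def h_normal(x):
    """int k_tilde(t, x) phi(t) dt for the standard normal density phi."""
    ax = abs(x)
    return 1.0 + (1.0 - math.exp(-0.5 * x * x)) / SQRT_2PI + ax * 0.5 * math.erfc(ax / math.sqrt(2.0))


def discrepancy(design, h, c):
    """D(design, F, K) from (5.8) for a product kernel and IID marginals."""
    n, d = len(design), len(design[0])
    mean_term = sum(math.prod(h(v) for v in x) for x in design)
    pair_term = sum(math.prod(k_tilde(a, b) for a, b in zip(s, x)) for s in design for x in design)
    return math.sqrt(max(c ** d - 2.0 * mean_term / n + pair_term / n ** 2, 0.0))  # guard round-off


def simulate(d=5, n=50, b=20, seed=2020):
    """Return (D(U', F'_unif, K), D(Psi(U), F_normal, K)) for b IID uniform designs."""
    rng = random.Random(seed)
    results = []
    for _ in range(b):
        u = [[rng.random() or 0.5 for _ in range(d)] for _ in range(n)]  # avoid the event u = 0
        u_shifted = [[v - 0.5 for v in row] for row in u]                 # as in (5.17)
        x = [[INV_PHI(v) for v in row] for row in u]                      # as in (5.16)
        results.append((discrepancy(u_shifted, h_centered, C_CENTERED),
                        discrepancy(x, h_normal, C_NORMAL)))
    return results


def discordant_pairs(results):
    """Number of design pairs ordered one way by the uniform discrepancy and the other way by the normal one."""
    return sum(1 for i in range(len(results)) for j in range(i)
               if (results[i][0] - results[j][0]) * (results[i][1] - results[j][1]) < 0)


res = simulate()
print(discordant_pairs(res), "of", len(res) * (len(res) - 1) // 2, "pairs contradict (Q')")
```

A positive count corresponds to a line with negative slope between two points of Fig. 5.2.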

We further investigate the relationship between the discrepancy of a uniform design and the discrepancy of the same design after inverse normal transformation. Varying the dimension d from 1 to 10, we calculate the sample correlation between \(D(\varvec{\varPsi }(\mathscr {U}),F_{normal }, K)\) and \(D(\mathscr {U},F_{unif },K_{unif }) = D(\mathscr {U}',F_{unif }',K)\) for \(B=500\) IID designs of size \(N=50\). Figure 5.3 displays the correlation as a function of d. Although the correlation is positive, it degrades with increasing d.

Example 5.1

A simple cubature example illustrates that an inverse transformed low discrepancy design, \(\mathscr {U}\), may yield a large \(D(\varvec{\varPsi }(\mathscr {U}),F_{normal }, K)\) and also a large cubature error. Consider the integration problem in (5.3) with

where \(\phi \) is the probability density function for the standard multivariate normal distribution. The function \(f:\mathbb {R}^d \rightarrow \mathbb {R}\) is constructed to asymptote to a constant as \(\left\Vert \varvec{x} \right\Vert_2\) tends to infinity to ensure that f lies inside the Hilbert space corresponding to the kernel K defined in (5.9). Since the integrand in (5.19) is a function of \(\left\Vert \varvec{x} \right\Vert_2\), \(\mu \) can be written as a one-dimensional integral. For \(d=10\), \(\mu = 10\) to at least 15 significant digits using quadrature.

We can also approximate the integral in (5.19) using a \(d=10\), \(N=512\) cubature (5.10). We compare cubatures using two designs. The design \(\mathscr {X}_1\) is the inverse normal transformation of a scrambled Sobol’ sequence, \(\mathscr {U}_1\), which has a low discrepancy with respect to the uniform distribution on the d-dimensional unit cube. The design \(\mathscr {U}_2\) takes the point in \(\mathscr {U}_1\) that is closest to \(\varvec{0}\) and moves it to \(\left( 10^{-15}, \ldots , 10^{-15}\right) \), which is very close to \(\varvec{0}\). As seen in Table 5.2, the two uniform designs have quite similar, small discrepancies. However, the transformed designs, \(\mathscr {X}_j = \varvec{\varPsi }(\mathscr {U}_j)\) for \(j=1,2\), have much different discrepancies with respect to the normal distribution. This is due to the point in \(\mathscr {X}_2\) that has large negative coordinates. Furthermore, the cubatures, \(\widehat{\mu }\), based on these two designs have significantly different errors. The first design has both a smaller discrepancy and a smaller cubature error than the second. This could not have been inferred by looking at the discrepancies of the original uniform designs.
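Why one moved point matters can be seen from the inverse normal transformation and from the diagonal term \(K(\varvec{x}_i,\varvec{x}_i)/N^2\) of (5.8). The sketch below assumes the product kernel we take for (5.9), whose diagonal factor is \(1+|x_k|\); the helper name is hypothetical. A uniform coordinate of \(10^{-15}\) becomes a normal coordinate of about \(-7.94\), and in \(d=10\) that single point contributes a diagonal term many orders of magnitude larger than a typical point does.

```python
import math
from statistics import NormalDist

inv_phi = NormalDist().inv_cdf

for u in (0.05, 1e-5, 1e-15):
    print(f"u = {u:g}: Phi^(-1)(u) = {inv_phi(u):.3f}")


def diagonal_term(point, n):
    """K(x, x) / N^2 for the assumed kernel, whose diagonal factors are 1 + |x_k|."""
    return math.prod(1.0 + abs(v) for v in point) / n ** 2


d, n = 10, 512
moved = [inv_phi(1e-15)] * d      # the relocated point of U_2 after transformation
typical = [inv_phi(0.25)] * d     # a point at the 25% quantile in every coordinate
print(diagonal_term(moved, n), diagonal_term(typical, n))
```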

5 Improvement by the Coordinate-Exchange Method

In this section, we propose an efficient algorithm that improves a design’s quality in terms of the discrepancy for the target distribution. We start with a low discrepancy uniform design, such as a Sobol’ sequence, and transform it into a design that approximates the target distribution. Following the optimal design approach, we then apply a coordinate-exchange algorithm to further improve the discrepancy of the design.

The coordinate-exchange algorithm was introduced in [18], and then applied widely to construct various kinds of optimal designs [16, 19, 21]. The coordinate-exchange algorithm is an iterative method. It finds the “worst” coordinate \(x_{ij}\) of the current design and replaces it to decrease the loss function, in this case the discrepancy. The most appealing advantage of the coordinate-exchange algorithm is that at each step one need only solve a univariate optimization problem.

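First, we define the point deletion function, \(\mathfrak {d}_p\), as the change in the squared discrepancy resulting from removing a point from the design:

\[\mathfrak{d}_p(i) := D^2(\mathscr{X}) - D^2(\mathscr{X}\backslash\{\varvec{x}_i\}), \qquad i = 1,\ldots,N. \tag{5.20}\]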

Here, the design \(\mathscr {X}\backslash \{\varvec{x}_i\}\) is the \((N-1)\)-point design with the point \(\varvec{x}_i\) removed. We suppress the choice of target distribution and kernel in the above discrepancy notation for simplicity. We then choose

\[i^* = \mathop{\mathrm{argmax}}_{i = 1,\ldots,N} \mathfrak{d}_p(i).\]

The definition of \(i^*\) means that removing \(\varvec{x}_{i^*}\) from the design \(\mathscr {X}\) results in the smallest discrepancy among all possible deletions. Thus, \(\varvec{x}_{i^*}\) is helping the least, which makes it a prime candidate for modification.

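Next, we define a coordinate deletion function, \(\mathfrak {d}_{c}\), as the change in the squared discrepancy resulting from removing a coordinate in our calculation of the discrepancy:

\[\mathfrak{d}_c(j) := D^2(\mathscr{X}) - D^2(\mathscr{X}_{-j}), \qquad j = 1,\ldots,d. \tag{5.21}\]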

Here, the design \(\mathscr {X}_{-j}\) still has N points but now only \(d-1\) dimensions, the jth coordinate having been removed. For this calculation to be feasible, the target distribution must have independent marginals. Also, the kernel must be of product form. To simplify the derivation, we assume a somewhat stronger condition, namely that the marginals are identical, with common density \(\widetilde{\varrho}\), and that each term in the product defining the kernel is the same for every coordinate:

\[K(\varvec{t},\varvec{x}) = \prod_{k=1}^d \widetilde{K}(t_k,x_k), \qquad \varrho(\varvec{x}) = \prod_{k=1}^d \widetilde{\varrho}(x_k). \tag{5.22}\]

We then choose

\[j^* = \mathop{\mathrm{argmax}}_{j = 1,\ldots,d} \mathfrak{d}_c(j).\]

For reasons analogous to those given above, the \(j^*\)th coordinate seems to be the best candidate for change.
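Both deletion functions can be computed for all i and all j in \(\mathscr{O}(dN^2)\) operations by reusing the kernel matrix. The sketch below is our own rendering (not the closed forms derived later in this section) and assumes the standard normal target with the product kernel we take for (5.9); every one-dimensional factor is then at least 1, so dividing a coordinate's factor out of a product is safe.

```python
import math

SQRT_2PI = math.sqrt(2.0 * math.pi)
C1 = 1.0 + (math.sqrt(2.0) - 1.0) / math.sqrt(math.pi)  # one-dimensional c under the assumed kernel


def k_tilde(t, x):
    """One-dimensional kernel factor assumed for (5.9); always >= 1."""
    return 1.0 + 0.5 * (abs(t) + abs(x) - abs(x - t))


def h(x):
    """int k_tilde(t, x) phi(t) dt for the standard normal density phi; always >= 1."""
    ax = abs(x)
    return 1.0 + (1.0 - math.exp(-0.5 * x * x)) / SQRT_2PI + ax * 0.5 * math.erfc(ax / math.sqrt(2.0))


def disc_sq(X):
    """Direct O(d N^2) evaluation of D^2(X) via (5.8)."""
    n, d = len(X), len(X[0])
    H = sum(math.prod(h(v) for v in x) for x in X)
    K = sum(math.prod(k_tilde(a, b) for a, b in zip(s, x)) for s in X for x in X)
    return C1 ** d - 2.0 * H / n + K / n ** 2


def deletion_functions(X):
    """Point deletion d_p(i) from (5.20) and coordinate deletion d_c(j) from (5.21)."""
    n, d = len(X), len(X[0])
    H = [math.prod(h(v) for v in x) for x in X]
    K = [[math.prod(k_tilde(a, b) for a, b in zip(s, x)) for x in X] for s in X]
    R = [sum(row) for row in K]                  # row sums of the kernel matrix
    SH, SK = sum(H), sum(R)
    full = C1 ** d - 2.0 * SH / n + SK / n ** 2
    # removing point i drops H_i plus row i and column i of K (K_ii was subtracted twice)
    d_p = [full - (C1 ** d - 2.0 * (SH - H[i]) / (n - 1)
                   + (SK - 2.0 * R[i] + K[i][i]) / (n - 1) ** 2) for i in range(n)]
    # removing coordinate j divides the j-th factor out of every product
    d_c = []
    for j in range(d):
        sh = sum(H[i] / h(X[i][j]) for i in range(n))
        sk = sum(K[i][l] / k_tilde(X[i][j], X[l][j]) for i in range(n) for l in range(n))
        d_c.append(full - (C1 ** (d - 1) - 2.0 * sh / n + sk / n ** 2))
    return d_p, d_c


X = [[0.1, -0.5, 1.2], [0.8, 0.3, -1.1], [-1.4, 0.9, 0.2], [0.5, -2.0, -0.3]]
d_p, d_c = deletion_functions(X)
i_star = max(range(len(X)), key=d_p.__getitem__)
j_star = max(range(len(X[0])), key=d_c.__getitem__)
print(i_star, j_star)
```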

Let \(\mathscr {X}^*(x)\) denote the design that results from replacing \(x_{i^*j^*}\) by x. We now define \(\varDelta (x)\) as the improvement in the squared discrepancy resulting from replacing \(\mathscr {X}\) by \(\mathscr {X}^*(x)\):

\[\varDelta(x) := D^2(\mathscr{X}) - D^2(\mathscr{X}^*(x)). \tag{5.23}\]

We can reduce the discrepancy by finding an x such that \(\varDelta (x)\) is positive. The coordinate-exchange algorithm outlined in Algorithm 1 improves the design by maximizing \(\varDelta (x)\) for one chosen coordinate in one iteration. The algorithm terminates when it exhausts the maximum allowed number of iterations or the optimal improvement \(\varDelta (x^*)\) is so small that it becomes negligible (\(\varDelta (x^*)\le \text {TOL}\)). Algorithm 1 is a greedy algorithm, and thus it can stop at a locally optimal design. We recommend multiple runs of the algorithm with different initial designs to obtain a design with the lowest discrepancy possible. Alternatively, users can include stochasticity in the choice of the coordinate that is to be exchanged, as in [16].
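The loop of Algorithm 1 can be sketched as below for the standard normal target. This is a minimal illustration under stated assumptions, not the authors' implementation: the kernel is the product form we take for (5.9), and the univariate maximization of \(\varDelta\) is done by brute force over a grid of normal quantiles (our simplification), whereas the chapter maximizes an explicit function of x. Replacing one coordinate changes only \(H(\varvec{x}_{i^*})\) and row and column \(i^*\) of the kernel matrix, so each evaluation of \(\varDelta\) costs \(\mathscr{O}(N)\).

```python
import math
import random
from statistics import NormalDist

SQRT_2PI = math.sqrt(2.0 * math.pi)
C1 = 1.0 + (math.sqrt(2.0) - 1.0) / math.sqrt(math.pi)  # c for N(0,1) under the assumed kernel
INV_PHI = NormalDist().inv_cdf


def kt(t, x):
    """One-dimensional kernel factor assumed for (5.9); always >= 1."""
    return 1.0 + 0.5 * (abs(t) + abs(x) - abs(x - t))


def h(x):
    """int kt(t, x) phi(t) dt for the standard normal density phi; always >= 1."""
    ax = abs(x)
    return 1.0 + (1.0 - math.exp(-0.5 * x * x)) / SQRT_2PI + ax * 0.5 * math.erfc(ax / math.sqrt(2.0))


def pieces(X):
    """H_i = prod_k h(x_ik) and the kernel matrix K_il = prod_k kt(x_ik, x_lk)."""
    H = [math.prod(h(v) for v in x) for x in X]
    K = [[math.prod(kt(a, b) for a, b in zip(s, x)) for x in X] for s in X]
    return H, K


def disc_sq(X):
    """Squared normal discrepancy via (5.8)."""
    n, d = len(X), len(X[0])
    H, K = pieces(X)
    return C1 ** d - 2.0 * sum(H) / n + sum(map(sum, K)) / n ** 2


def worst_coordinate(X, H, K):
    """(i*, j*): argmax of the point deletion (5.20) and of the coordinate deletion (5.21)."""
    n, d = len(X), len(X[0])
    R = [sum(row) for row in K]
    SH, SK = sum(H), sum(R)

    def without_point(i):
        # D^2(X minus x_i) without the constant c^d; argmax d_p is argmin of this
        return -2.0 * (SH - H[i]) / (n - 1) + (SK - 2.0 * R[i] + K[i][i]) / (n - 1) ** 2

    def without_coord(j):
        # D^2(X_{-j}) without the constant c^(d-1): divide out the j-th factor
        sh = sum(H[i] / h(X[i][j]) for i in range(n))
        sk = sum(K[i][l] / kt(X[i][j], X[l][j]) for i in range(n) for l in range(n))
        return -2.0 * sh / n + sk / n ** 2

    return min(range(n), key=without_point), min(range(d), key=without_coord)


def improvement(X, H, K, i, j, new):
    """Delta in (5.23) for replacing x_ij by `new`; only H_i and row/column i change."""
    n, old = len(X), X[i][j]
    gain = 2.0 * (H[i] / h(old) * h(new) - H[i]) / n
    for l in range(n):
        if l == i:
            gain -= (K[i][i] / kt(old, old) * kt(new, new) - K[i][i]) / n ** 2
        else:
            gain -= 2.0 * (K[i][l] / kt(old, X[l][j]) * kt(new, X[l][j]) - K[i][l]) / n ** 2
    return gain


def coordinate_exchange(X, max_iter=200, tol=1e-12, grid_size=201):
    """Greedy coordinate exchange; the quantile-grid search for the best x is our simplification."""
    X = [list(x) for x in X]
    grid = [INV_PHI((k + 0.5) / grid_size) for k in range(grid_size)]
    for _ in range(max_iter):
        H, K = pieces(X)
        i, j = worst_coordinate(X, H, K)
        best, best_gain = X[i][j], 0.0
        for cand in grid:
            gain = improvement(X, H, K, i, j, cand)
            if gain > best_gain:
                best, best_gain = cand, gain
        if best_gain <= tol:  # negligible improvement (TOL in Algorithm 1): stop
            break
        X[i][j] = best
    return X


rng = random.Random(7)
start = [[INV_PHI(rng.random() or 0.5) for _ in range(2)] for _ in range(16)]
improved = coordinate_exchange(start, max_iter=50)
print(disc_sq(start), disc_sq(improved))
```

Because a move is accepted only when its exact gain is positive, the discrepancy never increases; like Algorithm 1, the sketch can stop at a local optimum, so restarting from several initial designs is advisable.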

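For kernels of product form, (5.22), and target distributions with independent and identical marginals with common density \(\widetilde{\varrho}\), the formula for the squared discrepancy in (5.8) becomes

\[D^2(\mathscr{X},\varrho,K) = c^d - \frac{2}{N}\sum_{i=1}^N H(\varvec{x}_i) + \frac{1}{N^2}\sum_{i,k=1}^N K(\varvec{x}_i,\varvec{x}_k),\]

where

\[c = \int\!\!\int \widetilde{K}(t,x)\,\widetilde{\varrho}(t)\,\widetilde{\varrho}(x)\,\mathrm{d}t\,\mathrm{d}x, \qquad h(x) = \int \widetilde{K}(t,x)\,\widetilde{\varrho}(t)\,\mathrm{d}t, \qquad H(\varvec{x}) = \prod_{k=1}^d h(x_k). \tag{5.24}\]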

An evaluation of h(x) and \(\widetilde{K}(t,x)\) each requires \(\mathscr {O}(1)\) operations, while an evaluation of \(H(\varvec{x})\) and \(K(\varvec{t},\varvec{x})\) each requires \(\mathscr {O}(d)\) operations. The computation of \(D(\mathscr {X},\varrho ,K)\) requires \(\mathscr {O}(dN^2)\) operations because of the double sum. For a standard multivariate normal target distribution and the kernel defined in (5.9), we have

\[\widetilde{K}(t,x) = 1 + \tfrac{1}{2}\left(|t| + |x| - |x-t|\right), \qquad h(x) = 1 + \phi(0) - \phi(x) + |x|\,\varPhi(-|x|), \qquad c = 1 + \frac{\sqrt{2}-1}{\sqrt{\pi}}.\]
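Closed forms such as these are easy to get wrong, so a brute-force check is worthwhile. Assuming the one-dimensional factor \(\widetilde{K}(t,x)=1+\frac{1}{2}(|t|+|x|-|x-t|)\) for (5.9), we obtain \(h(x)=1+\phi(0)-\phi(x)+|x|\varPhi(-|x|)\) and \(c=1+(\sqrt{2}-1)/\sqrt{\pi}\) for the standard normal marginal; the sketch below compares both with a composite midpoint rule on \([-12,12]\). The names are ours.

```python
import math

SQRT_2PI = math.sqrt(2.0 * math.pi)


def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / SQRT_2PI


def k_tilde(t, x):
    """Assumed one-dimensional factor of (5.9)."""
    return 1.0 + 0.5 * (abs(t) + abs(x) - abs(x - t))


def h_closed(x):
    """Closed form of int k_tilde(t, x) phi(t) dt; 0.5 * erfc(|x|/sqrt(2)) is Phi(-|x|)."""
    ax = abs(x)
    return 1.0 + phi(0.0) - phi(x) + ax * 0.5 * math.erfc(ax / math.sqrt(2.0))


C_CLOSED = 1.0 + (math.sqrt(2.0) - 1.0) / math.sqrt(math.pi)


def midpoint(f, lo=-12.0, hi=12.0, m=24000):
    """Composite midpoint rule for int_lo^hi f(t) dt."""
    step = (hi - lo) / m
    return step * sum(f(lo + (j + 0.5) * step) for j in range(m))


for x in (-2.0, 0.0, 0.7, 3.0):
    print(x, h_closed(x), midpoint(lambda t: k_tilde(t, x) * phi(t)))
print(C_CLOSED, midpoint(lambda x: h_closed(x) * phi(x)))
```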

The point deletion function defined in (5.20) then can be expressed as

The computational cost for \(\mathfrak {d}_p(1), \ldots , \mathfrak {d}_p(N)\) is then \(\mathscr {O}(dN^2)\), which is the same order as the cost of the discrepancy of a single design.

The coordinate deletion function defined in (5.21) can be expressed as

The computational cost for \(\mathfrak {d}_c(1), \ldots , \mathfrak {d}_c(d)\) is also \(\mathscr {O}(dN^2)\), which is the same order as the cost of the discrepancy of a single design.

Finally, the function \(\varDelta \) defined in (5.23) is given by

If we drop the terms that are independent of x, then we can maximize the function

where

Note that \(A, B_1, \ldots , B_N, C\) only need to be computed once for each iteration of the coordinate exchange algorithm.

Note that the coordinate-exchange algorithm we have developed is greedy and deterministic. The coordinate chosen for exchange is the one with the largest point and coordinate deletion function values, and we always make the exchange as long as the new optimal coordinate improves the objective function. Such a deterministic, greedy algorithm is likely to return a design whose discrepancy attains only a local minimum. To overcome this, we can either run the algorithm with multiple random initial designs, or combine the coordinate exchange with stochastic optimization algorithms, such as simulated annealing (SA) [17] or threshold accepting (TA) [12]. For example, we can add a random selection scheme when choosing a coordinate to exchange, and we can make a random decision whether to accept a proposed exchange. The random decision can follow the SA or TA method. Tuning parameters must be chosen carefully for the SA or TA method to be effective. Interested readers can refer to [22] to see how TA can be applied to the minimization of discrepancy.

6 Simulation

To demonstrate the performance of the d-dimensional standard normal design proposed in Sect. 5.5, we compare four families of designs: (1) RAND: inverse transformed IID uniform random numbers; (2) SOBOL: inverse transformed Sobol’ set; (3) E-SOBOL: inverse transformed scrambled Sobol’ set where the one-dimensional projections of the Sobol’ set have been adjusted to be \(\left\{ 1/(2N), 3/(2N), \ldots , (2N-1)/(2N) \right\} \); and (4) CE: improved E-SOBOL via Algorithm 1. We have tried different combinations of dimension, d, and sample size, N. For each (d, N) and each algorithm we generate 500 designs and compute their discrepancies (5.18).
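The E-SOBOL adjustment replaces every one-dimensional projection by the midpoint set while keeping the ordering of the points in that coordinate. A minimal sketch of one way to do this, with a hypothetical helper name: rank each column and map rank r (counting from 0) to \((2r+1)/(2N)\). Generating the scrambled Sobol' points themselves is not shown; SciPy's `scipy.stats.qmc.Sobol` is one possible source.

```python
def adjust_projections(design):
    """Hypothetical helper: make every column of `design` (N points in (0,1)^d) equal to
    {1/(2N), 3/(2N), ..., (2N - 1)/(2N)} while preserving the order within each column."""
    n, d = len(design), len(design[0])
    adjusted = [[0.0] * d for _ in range(n)]
    for j in range(d):
        order = sorted(range(n), key=lambda i: design[i][j])  # ties broken by point index
        for rank, i in enumerate(order):                       # rank = 0, ..., N - 1
            adjusted[i][j] = (2 * rank + 1) / (2 * n)
    return adjusted


design = [[0.12, 0.91], [0.55, 0.33], [0.97, 0.48], [0.30, 0.06]]
print(adjust_projections(design))
# [[0.125, 0.875], [0.625, 0.375], [0.875, 0.625], [0.375, 0.125]]
```

The adjusted design is then passed through the inverse normal transformation (5.16), so each one-dimensional projection becomes the set of normal quantiles \(\varPhi^{-1}((2r+1)/(2N))\).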

Figure 5.4 contains the boxplots of normal discrepancies corresponding to the four generators with \(d=2\) and \(N=32\). It shows that SOBOL, E-SOBOL, and CE all outperform RAND by a large margin. To make the comparison among the better generators easier to see, Fig. 5.5 generally excludes RAND.

We also report the average execution times for the four generators in Table 5.3. All codes were run on a MacBook Pro with 2.4 GHz Intel Core i5 processor. The maximum number of iterations allowed is \(M_{\max } = 200\). Algorithm 1 converges within 20 iterations in all simulation examples.

We summarize the results of our simulation as follows.

1. Overall, CE produces the smallest discrepancy.
2. When the design is relatively dense, i.e., N/d is large, E-SOBOL and CE have similar performance.
3. When the design is more sparse, i.e., N/d is smaller, SOBOL and E-SOBOL have similar performance, but CE is superior to both of them in terms of the discrepancy, not only in terms of the mean but also in terms of the range over the 500 designs generated.
4. CE requires the longest computational time to construct a design, but this time is moderate. When function values are expensive to obtain, the cost of constructing the design may be insignificant.

7 Discussion

This chapter summarizes the three interpretations of the discrepancy. We show that for kernels and variable transformations satisfying conditions (5.14), variable transformations of low discrepancy uniform designs yield low discrepancy designs with respect to the target distribution. However, for more practical choices of kernels, this correspondence may not hold. The coordinate-exchange algorithm can improve the discrepancies of candidate designs that may be constructed by variable transformations.

While discrepancies can be defined for arbitrary kernels, we believe that the choice of kernel can be important, especially for small sample sizes. If the distribution has a symmetry, e.g. \(\varrho (\varvec{T}(\varvec{x})) = \varrho (\varvec{x})\) for some probability preserving bijection \(\varvec{T}:\varOmega \rightarrow \varOmega \), then we would like our discrepancy to remain unchanged under such a bijection, i.e., \(D(\varvec{T}(\mathscr {X}),\varrho ,K) = D(\mathscr {X},\varrho ,K)\). This can typically be ensured by choosing kernels satisfying \(K(\varvec{T}(\varvec{t}),\varvec{T}(\varvec{x})) = K(\varvec{t},\varvec{x})\). The kernel \(K_{unif }\) defined in (5.15) satisfies this assumption for the standard uniform distribution and the transformation \(\varvec{T}(\varvec{x}) = \varvec{1}- \varvec{x}\). The kernel K defined in (5.9) satisfies this assumption for the standard normal distribution and the transformation \(\varvec{T}(\varvec{x}) = -\varvec{x}\).
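The invariance \(K(\varvec{T}(\varvec{t}),\varvec{T}(\varvec{x})) = K(\varvec{t},\varvec{x})\) can be spot-checked numerically. The sketch below assumes the product forms we take for (5.9) and (5.15); both kernels depend on their arguments only through absolute values that are unchanged by \(\varvec{x}\mapsto-\varvec{x}\) and by \(\varvec{x}\mapsto\varvec{1}-\varvec{x}\), respectively.

```python
import random


def k_59(t, x):
    """Kernel assumed for (5.9)."""
    p = 1.0
    for a, b in zip(t, x):
        p *= 1.0 + 0.5 * (abs(a) + abs(b) - abs(b - a))
    return p


def k_unif(t, x):
    """Centered kernel assumed for (5.15)."""
    p = 1.0
    for a, b in zip(t, x):
        p *= 1.0 + 0.5 * (abs(a - 0.5) + abs(b - 0.5) - abs(b - a))
    return p


rng = random.Random(0)
for _ in range(5):
    t = [rng.uniform(-3, 3) for _ in range(4)]
    x = [rng.uniform(-3, 3) for _ in range(4)]
    u = [rng.random() for _ in range(4)]
    v = [rng.random() for _ in range(4)]
    # T(x) = -x for the standard normal target; T(x) = 1 - x for the standard uniform target
    print(k_59([-a for a in t], [-b for b in x]) - k_59(t, x),
          k_unif([1 - a for a in u], [1 - b for b in v]) - k_unif(u, v))
```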

For target distributions with independent marginals and kernels of product form as in (5.22), coordinate weights [3, Sect. 4] are used to determine which projections of the design, denoted by \(\mathfrak {u}\subseteq \{1, \ldots , d\}\), are more important. The product form of the kernel given in (5.22) can be generalized as

Here, the positive coordinate weights are \(\varvec{\gamma }= (\gamma _1, \ldots , \gamma _d)\). The squared discrepancy corresponding to this kernel may then be written as

where c and h are defined in (5.24). Here, \(\mathscr {X}_\mathfrak {u}\) denotes the projection of the design into the coordinates contained in \(\mathfrak {u}\), and \(F_\mathfrak {u}= \prod _{j \in \mathfrak {u}} F_j\) is the \(\mathfrak {u}\)-marginal distribution. Each discrepancy piece, \(D_{\mathfrak {u}} (\mathscr {X}_\mathfrak {u},F_\mathfrak {u},K)\), measures how well the projected design \(\mathscr {X}_\mathfrak {u}\) matches \(F_\mathfrak {u}\).

The values of the coordinate weights can be chosen to reflect the user’s belief as to the importance of the design matching the target for various coordinate projections. A large value of \(\gamma _j\) relative to the other \(\gamma _{j'}\) places more importance on the \(D_{\mathfrak {u}} (\mathscr {X}_\mathfrak {u},F_\mathfrak {u},K)\) with \(j \in \mathfrak {u}\). Thus, \(\gamma _j\) is an indication of the importance of coordinate j in the definition of \(D(\mathscr {X},F,K_{\varvec{\gamma }})\).

If \(\varvec{\gamma }\) is one choice of coordinate weights and \(\varvec{\gamma }'=C\varvec{\gamma }\) is another, where \(C > 1\), then \(\gamma '_\mathfrak {u}= C^{\left|\mathfrak {u} \right|} \gamma _\mathfrak {u}\), since \(\gamma _\mathfrak {u}= \prod _{j \in \mathfrak {u}} \gamma _j\). Thus, relative to \(D(\mathscr {X},F,K_{\varvec{\gamma }})\), the discrepancy \(D(\mathscr {X},F,K_{\varvec{\gamma }'})\) places more emphasis on the projections corresponding to the \(\mathfrak {u}\) with large \(\left|\mathfrak {u} \right|\), i.e., the higher order effects. Conversely, \(D(\mathscr {X},F,K_{\varvec{\gamma }})\) places relatively more emphasis on the lower order effects. Again, the choice of coordinate weights reflects the user’s belief as to the relative importance of the design matching the target distribution for lower order effects or higher order effects.
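The shift of emphasis can be quantified for an illustrative kernel. We assume here a weighted product kernel \(\prod _{j=1}^d[1+\gamma _j\tilde K(t_j,x_j)]\) built from the centered-discrepancy piece \(\tilde K(t,x)=\tfrac12(|t-\tfrac12|+|x-\tfrac12|-|t-x|)\) on \([0,1]\). This is an assumption, not necessarily the chapter's kernel. Write \(a_k=\sum _{|\mathfrak {u}|=k}\gamma _\mathfrak {u}D^2_\mathfrak {u}\). Under \(\varvec{\gamma }'=C\varvec{\gamma }\), the share of the squared discrepancy due to first-order projections is \(a_1/(a_1+Ca_2+\cdots +C^{d-1}a_d)\), which cannot increase as \(C\) grows. The hypothetical helper `order_shares` computes these shares for a random design.

```python
import random
from itertools import combinations
from math import prod


def k_tilde(t, x):
    # Assumed one-dimensional kernel piece (centered L2-discrepancy, uniform on [0, 1]).
    return 0.5 * (abs(t - 0.5) + abs(x - 0.5) - abs(t - x))


def h_tilde(x):
    # Integral of k_tilde(t, x) over t in [0, 1].
    return 0.5 * (abs(x - 0.5) - (x - 0.5) ** 2)


def piece_disc2(design, u):
    """Unweighted piece D_u^2 built from the kernel prod_{j in u} k_tilde(t_j, x_j)."""
    n = len(design)
    const = (1 / 12) ** len(u)
    cross = sum(prod(h_tilde(x[j]) for j in u) for x in design)
    pair = sum(prod(k_tilde(x[j], z[j]) for j in u) for x in design for z in design)
    return const - 2 * cross / n + pair / n ** 2


def order_shares(design, gamma):
    """Share of the weighted squared discrepancy contributed by each order |u| = 1, ..., d."""
    d = len(gamma)
    by_order = [0.0] * d
    for size in range(1, d + 1):
        for u in combinations(range(d), size):
            by_order[size - 1] += prod(gamma[j] for j in u) * piece_disc2(design, u)
    total = sum(by_order)
    return [a / total for a in by_order]


random.seed(4)
X = [[random.random() for _ in range(3)] for _ in range(12)]
gamma = [0.5, 0.5, 0.5]
C = 4.0
base = order_shares(X, gamma)
scaled = order_shares(X, [C * g for g in gamma])
print("first-order share:", base[0], "->", scaled[0])
```

Scaling all weights by \(C>1\) moves weight from the one-dimensional projections toward the higher-order projections. This matches the qualitative statement above.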

References

Aronszajn, N.: Theory of reproducing kernels. Trans. Amer. Math. Soc. 68, 337–404 (1950)

Devroye, L.: Nonuniform Random Variate Generation. Handbooks in Operations Research and Management Science, pp. 83–121 (2006)

Dick, J., Kuo, F., Sloan, I.H.: High-dimensional integration: the quasi-Monte Carlo way. Acta Numer. 22, 133–288 (2013)

Fang, K.T., Hickernell, F.J.: Uniform experimental design. Encyclopedia Stat. Qual. Reliab., 2037–2040 (2008)

Fang, K.T., Li, R., Sudjianto, A.: Design and Modeling for Computer Experiments. Computer Science and Data Analysis. Chapman & Hall, New York (2006)

Fang, K.T., Liu, M.-Q., Qin, H., Zhou, Y.-D.: Theory and Application of Uniform Experimental Designs. Springer Nature (Singapore) and Science Press (Beijing), Mathematics Monograph Series (2018)

Fang, K.T., Ma, C.X.: Wrap-around \(L_2\)-discrepancy of random sampling, Latin hypercube and uniform designs. J. Complexity 17, 608–624 (2001)

Fang, K.T., Ma, C.X.: Relationships between uniformity, aberration and correlation in regular fractions \(3^{s-1}\). In: Monte Carlo and Quasi-Monte Carlo Methods 2000, pp. 213–231 (2002)

Fang, K.T., Ma, C.X., Winker, P.: Centered \(L_2\)-discrepancy of random sampling and Latin hypercube design, and construction of uniform designs. Math. Comp. 71, 275–296 (2002)

Fang, K.T., Mukerjee, R.: A connection between uniformity and aberration in regular fractions of two-level factorials. Biometrika 87, 193–198 (2000)

Fang, K.T., Wang, Y.: Number-Theoretic Methods in Statistics. Chapman and Hall, New York (1994)

Fang, K.T., Lu, X., Winker, P.: Lower bounds for centered and wrap-around \(L_2\)-discrepancies and construction of uniform designs by threshold accepting. J. Complex. 19(5), 692–711 (2003)

Hickernell, F.J.: A generalized discrepancy and quadrature error bound. Math. Comp. 67, 299–322 (1998)

Hickernell, F.J.: Goodness-of-fit statistics, discrepancies and robust designs. Statist. Probab. Lett. 44, 73–78 (1999)

Hickernell, F.J.: The trio identity for Quasi-Monte Carlo error. In: MCQMC: International conference on Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing, pp. 3–27 (2016)

Kang, L.: Stochastic coordinate-exchange optimal designs with complex constraints. Qual. Eng. 31(3), 401–416 (2019). https://doi.org/10.1080/08982112.2018.1508695

Kirkpatrick, S., Gelatt, C.D., Vecchi, M.P.: Optimization by Simulated Annealing. Science 220(4598), 671–680 (1983)

Meyer, R.K., Nachtsheim, C.J.: The coordinate-exchange algorithm for constructing exact optimal experimental designs. Technometrics 37(1), 60–69 (1995)

Overstall, A.M., Woods, D.C.: Bayesian design of experiments using approximate coordinate exchange. Technometrics 59(4), 458–470 (2017)

Sall, J., Lehman, A., Stephens, M.L., Creighton, L.: JMP Start Statistics: a Guide to Statistics and Data Analysis Using JMP, 6th edn. SAS Institute (2017)

Sambo, F., Borrotti, M., Mylona, K.: A coordinate-exchange two-phase local search algorithm for the \(D\)- and \(I\)-optimal designs of split-plot experiments. Comput. Statist. Data Anal. 71, 1193–1207 (2014)

Winker, P., Fang, K.T.: Application of threshold-accepting to the evaluation of the discrepancy of a set of points. SIAM J. Numer. Anal. 34(5), 2028–2042 (1997)

Appendix

We derive the formula in (5.18) for the discrepancy with respect to the standard normal distribution, \(\varPhi \), using the kernel defined in (5.9). We first consider the case \(d=1\). We integrate the kernel once:

Then we integrate once more:

Generalizing this to the d-dimensional case yields

Thus, the discrepancy for the normal distribution is
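As a numerical companion to this derivation, the sketch below checks the one-dimensional integrals by quadrature. For illustration, it assumes that the kernel (5.9) is \(K(\varvec{t},\varvec{x})=\prod _{j=1}^d[1+\tfrac12(|t_j|+|x_j|-|t_j-x_j|)]\), which is invariant under \(\varvec{T}(\varvec{x})=-\varvec{x}\). If (5.9) has a different form, only `kernel_1d`, `h_closed` and `C_CLOSED` need to change. Under that assumption, the first integration uses \(\mathbb {E}|T|=\sqrt{2/\pi }\) and \(\mathbb {E}|T-x|=x(2\varPhi (x)-1)+2\phi (x)\) for \(T\sim N(0,1)\), where \(\phi \) is the standard normal density. Integrating once more gives \(c=1+\sqrt{2/\pi }-1/\sqrt{\pi }\). These closed forms are our own computation under the stated assumption, not formulas quoted from the chapter, and all function names are hypothetical.

```python
import math


def phi(x):
    # Standard normal density.
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)


def Phi(x):
    # Standard normal distribution function via the error function.
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def kernel_1d(t, x):
    # ASSUMED one-dimensional factor of the kernel (5.9).
    return 1 + 0.5 * (abs(t) + abs(x) - abs(t - x))


def h_closed(x):
    # First integral over t ~ N(0, 1): uses E|T| = sqrt(2/pi), E|T - x| = x(2Phi(x) - 1) + 2phi(x).
    return 1 + 0.5 * (math.sqrt(2 / math.pi) + abs(x) - x * (2 * Phi(x) - 1) - 2 * phi(x))


# Second integral (over x ~ N(0, 1)) of h_closed.
C_CLOSED = 1 + math.sqrt(2 / math.pi) - 1 / math.sqrt(math.pi)


def trapezoid(f, a=-12.0, b=12.0, m=100_000):
    # Composite trapezoid rule on [a, b]; normal tails beyond |t| = 12 are negligible.
    step = (b - a) / m
    total = 0.5 * (f(a) + f(b))
    for k in range(1, m):
        total += f(a + k * step)
    return total * step


def normal_disc2(design):
    """Squared discrepancy w.r.t. the standard normal for the assumed product kernel:
    c^d - (2/N) sum_i prod_j h(x_ij) + (1/N^2) sum_{i,k} prod_j K_1(x_ij, x_kj)."""
    n, d = len(design), len(design[0])
    cross = sum(math.prod(h_closed(xj) for xj in x) for x in design)
    pair = sum(math.prod(kernel_1d(xj, zj) for xj, zj in zip(x, z))
               for x in design for z in design)
    return C_CLOSED ** d - 2 * cross / n + pair / n ** 2


x0 = 0.7
print(h_closed(x0), trapezoid(lambda t: kernel_1d(t, x0) * phi(t)))
print(C_CLOSED, trapezoid(lambda x: h_closed(x) * phi(x)))
```

The closed forms and the quadrature values should agree to about six digits. Because every term is even under \(x\mapsto -x\), `normal_disc2` also returns the same value for a design and its negation, which is consistent with the symmetry discussed for the kernel (5.9).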

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Li, Y., Kang, L., Hickernell, F.J. (2020). Is a Transformed Low Discrepancy Design Also Low Discrepancy?. In: Fan, J., Pan, J. (eds) Contemporary Experimental Design, Multivariate Analysis and Data Mining. Springer, Cham. https://doi.org/10.1007/978-3-030-46161-4_5

DOI: https://doi.org/10.1007/978-3-030-46161-4_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46160-7

Online ISBN: 978-3-030-46161-4

eBook Packages: Mathematics and Statistics (R0)