Abstract

Active 3D imaging systems use artificial illumination in order to capture and record digital representations of objects. The use of artificial illumination allows the acquisition of dense and accurate range images of textureless objects. An active 3D imaging system can be based on different measurement principles that include time-of-flight, triangulation, and interferometry. The different time-of-flight technologies allow the development of a plethora of systems that can operate at a range of a few meters to many kilometers. In this chapter, we focus on time-of-flight technologies that operate from a few meters to a few hundred meters. The characterization of these systems is discussed and experimental results are presented using systems related to construction, engineering, and the automobile industry.

1 Introduction

Three-dimensional (3D) vision systems capture and record a digital representation of the geometry and appearance information of visible 3D surfaces of people, animals, plants, objects, and sites. Active 3D imaging systems use an artificial illumination (visible or infrared) to acquire dense range maps with a minimum of ambiguity. In the previous chapter, active 3D imaging systems based on triangulation that operate at a range of a few centimeters to a few meters were presented. In contrast, Time-of-Flight (ToF) technologies allow the development of a plethora of systems that can operate from a range of a few meters to many kilometers. Systems that operate up to a few meters (approx. 5 m) are typically called range cameras or RGB-D cameras and are typically dedicated to indoor applications. Systems that operate at greater ranges are termed LiDAR (Light Detection and Ranging). LiDAR started as a method to directly and accurately capture digital elevation data for Terrestrial Laser Scanning (TLS) and Airborne Laser Scanning (ALS) applications. In the last few years, the appealing features of LiDAR have attracted the automotive industry and LiDAR became one of the most important sensors in autonomous vehicle applications where it is called Mobile Laser Scanning (MLS). Terrestrial, airborne, and mobile laser scanning differ in terms of data capture mode, typical project size, scanning mechanism, and obtainable accuracy and resolution; however, they share many features. In this chapter, we focus on time-of-flight technologies that operate from a few meters to a few hundred meters. They are, by their nature, non-contact measurement instruments and produce a quantifiable 3D digital representation of a surface in a specified finite volume of interest and with a particular measurement uncertainty.

1.1 Historical Context

The fundamental work on Time-of-Flight systems can be traced back to the era of RADAR (Radio Detection And Ranging), which is based on radio waves. With the advent of lasers in the late 1950s, it became possible to image a surface with angular and range resolutions much higher than possible with radio waves. This new technology was termed LiDAR and one of its initial uses was for mapping particles in the atmosphere [38]. During the 1980s, the development of the Global Positioning System (GPS) opened up the possibility of moving sensor systems such as airborne LiDAR, and bathymetric LiDAR was actually one of the first uses [98]. The early 1990s saw the improvement of the Inertial Measurement Unit (IMU) and the ability to begin achieving decimeter accuracies. Some of the earlier non-bathymetric airborne applications were in the measurement of glaciers and how they were changing [113]. TLS systems are also beginning to be used as a way to densely map the three-dimensional nature of features and ground surfaces to a high level of accuracy [79]. TLS is now an important tool in the construction and engineering industry. Many modern ToF systems work in the near and short-wave infrared regions of the electromagnetic spectrum. Some sensors also operate in the green band to penetrate water and detect features at the bottom of it. In recent years, the automotive industry has adopted ToF systems and this is now an essential technology for automated and advanced driver-assistance systems. The entertainment industry has also adopted ToF systems. The progressive addition of motion-sensitive interfaces to gaming platforms and the desire to personalize the gaming experience led to the development of short-range time-of-flight cameras targeted at a wide range of cost-sensitive consumers.

1.2 Basic Measurement Principles

Active three-dimensional (3D) imaging systems can be based on different measurement principles. The three principles most commonly used in commercially available systems are triangulation, interferometry, and time-of-flight. Seitz describes triangulation as a method based on geometry, interferometry as one that uses accurate wavelengths, and time-of-flight as one based on an accurate clock [92]. Figure 4.1 summarizes the typical accuracy of each type of active 3D imaging system technology found on the market as a function of the operating distance. It can be observed from that figure that each optical technique covers a particular range of operations. Many in-depth classifications of optical distance measurement principles have been published in important references in the field of 3D vision, e.g., [17, 19, 52, 78]. Both active and passive triangulation systems are based on the same geometric principle: intersecting light rays in 3D space. Typically, an active system replaces one camera of a passive stereo system by a projection device. This projection device can be a digital video projector, an analog slide projector, or a laser. Interferometry is based on the superposition of two beams of light [52]. Typically, a laser beam is split into two paths. One path is of known length, while the other is of unknown length. The difference in path lengths creates a phase difference between the light beams. The two beams are then combined before reaching a photodetector. The interference pattern seen by the detector resulting from the superposition of these two light beams depends on the path difference (i.e., the distance). The remainder of this chapter will focus on time-of-flight.

1.3 Time-of-Flight Methods

Most Time-of-Flight (ToF) technologies presented in this chapter are classified as active optical non-contact 3D imaging systems because they emit light into the environment and use the reflected optical energy to estimate the distance to a surface in the environment. The distance is computed from the round-trip time which may be estimated directly using a high-resolution clock to measure the time between outgoing and incoming pulses (Pulse-based ToF), or indirectly by measuring the phase shift of an amplitude-modulated optical signal (Phase-based ToF) [105].

There are many ways to classify ToF sensors, according to their components, application fields, and performance. One of the key dimensions within this taxonomy is the way in which the active 3D imaging system illuminates the scene. Some measurement systems are point-based and need to scan the laser spot along two axes in order to acquire a range image. Other systems use multiple laser spots and require scanning along a single axis to obtain a range image. Finally, some systems illuminate the entire scene simultaneously.

The first family of systems that we present are point-based scanners. A large subset within this family are known as Terrestrial Laser Scanning (TLS) systems or Terrestrial LiDAR and have multiple applications within the construction and engineering industry (see top left of Fig. 4.2). Many TLS systems contain a biaxial leveling compensator used to align the coordinate system of the generated range image with respect to gravity.

Fig. 4.2 Various ToF systems. (These are used to generate the experimental results shown in Sect. 4.7.) Top left: a terrestrial LiDAR system. Top right: a laser tracker. Bottom left: a retroreflector used by the laser tracker. Bottom right: a multi-channel LiDAR mounted on the top of a car. Note the GPS receiver on the left and the inertial measurement unit on the right of the LiDAR system.

A second type of point-based system, frequently encountered in the construction and engineering industry, is the Laser Tracker (LT), which is the only type of system presented in this chapter that is classified as a contact technology because the light emitted into the environment is reflected by a retroreflector, which is placed in contact with a surface at the time of measurement (see top right and bottom left of Fig. 4.2).

Systems using multiple laser spots are typically referred to as multi-channel LiDAR. These systems are encountered in automotive applications (see bottom right of Fig. 4.2). Multi-channel LiDAR is considered by many automobile manufacturers to be a key technology for autonomous driving. In the automotive industry, the technology is known as Mobile LiDAR Systems (MLS). Multi-channel LiDAR emits multiple laser beams that are contained within a plane. Each acquisition generates a profile contained within that plane and by modifying the orientation or position of this plane it is possible to generate a range image.

Systems that illuminate the entire scene simultaneously are now frequently encountered in consumer-grade applications. These systems are referred to as area-based systems and the detection of the incoming light is done by a two-dimensional (2D) array of detectors. The second-generation Microsoft Kinect is a popular area-based system. Typically, because of constraints imposed by eye-safety requirements for consumer-grade products, their operational range is smaller than that of other types of ToF system.

ToF systems measure the distance to a point by calculating the round-trip time of light reflected from the surface [24, 105], based on an assumption of the speed at which the light is able to travel through the medium (typically air). Factors such as air temperature and pressure [20, 70], relative humidity, \(CO_2\) concentration [24], atmospheric turbulence [109], and the presence of particulate matter [11, 24] or fog [89] can all affect the speed at which light can travel through the medium. This is further complicated by gradients in these factors along the beam path [42].

Moreover, the measurement quality is strongly dependent on the surface being measured due to factors such as reflectance [46], surface orientation [42], optical penetration [34, 40, 44], and substances, such as water, on the surface [61, 67].

The output of a ToF system is typically a point cloud or range image. A point cloud is an unorganized set of 3D points, while a range image is an organized array of 3D points that implicitly encode the neighborhood relation between points. This neighborhood structure is related to the physical acquisition process. As an example, for a range image produced by a multi-channel LiDAR, one axis represents the laser beam index while the other represents the angular position of the laser beam along the scanning axis. For historical reasons, unstructured point clouds saved into ASCII file format are still encountered today. Wherever possible, we advocate the preservation of the neighborhood structure associated with the physical acquisition process of the ToF system employed.

As discussed in Chap. 3, when working with coherent light sources (lasers) eye safety is of paramount importance and one should never operate laser-based 3D imaging sensors without appropriate eye-safety training. Many 3D imaging systems use a laser in the visible spectrum where fractions of a milliwatt are sufficient to cause eye damage, since the laser light density entering the pupil is magnified, at the retina, through the lens. For an operator using any laser, an important safety parameter is the Maximum Permissible Exposure (MPE) which is defined as the level of laser radiation to which a person may be exposed without hazardous effect or adverse biological changes in the eye or skin [4]. The MPE varies with wavelength and operating conditions of a system. One possible mitigation strategy used by scanner manufacturers is to use a laser at 1.55 \(\upmu \)m. At this wavelength, the light is absorbed by the eye fluids before being focused on the retina. This tends to increase the MPE to the laser source. We do not have space to discuss eye safety extensively here and refer the reader to the American National Standard for Safe Use of Lasers [4].

1.4 Chapter Outline

The core sections in this chapter cover the following materials:

- Point-based systems.
- Laser trackers: a special type of point-based system using retroreflectors.
- Multi-channel systems.
- Area-based systems (these are used in consumer-grade products).
- Characterization of ToF system performance.
- Experimental results for random and systematic ranging errors.
- Sensor fusion and navigation.
- ToF versus photogrammetry for some specific applications.

Toward the end of the chapter, we will present the main challenges for future research followed by concluding remarks and suggestions for further reading. A set of questions and exercises are provided at the end for the reader to develop a good understanding and knowledge of ToF technologies.

2 Point-Based Systems

Point-based systems measure distance one point at a time and need to be scanned along two axes in order to acquire a range image. TLS systems are a large subset within this family and this section will mostly focus on this type of system, which is now commonly used as a survey method for monitoring large structures such as bridges and for as-built building information modeling. TLS systems can be used for forensic applications in large environments and they are regularly used to document cultural heritage sites. In TLS, the scanning is performed by two rotating components. One controls the elevation, while the other determines the azimuth. Many TLS systems contain a biaxial leveling compensator used to align the coordinate system of the generated range image with respect to gravity. Point-based systems that do not include scanning mechanisms are commercially available and are referred to as laser range finders (see footnote 1).

One configuration that can be encountered in a TLS system uses a galvanometer with a mirror to control the elevation, while the azimuth is controlled by rotating the complete scanner head. Using this configuration, the elevation can be scanned at a higher frequency than the azimuth. A typical configuration could have a 360\(^\circ \) field of view in the azimuth and a 30–120\(^\circ \) field of view in elevation. Note that galvanometer scan angles are limited to about ±45\(^\circ \). For larger scan angles, a motor with encoders must be used. In the idealized case, each time a distance measurement is made, the value of the optical encoder of the rotating head and the readout value for the galvanometer are recorded. When the system is calibrated, these values can be converted into angles. Using the distance measurement and the angles, it is possible to compute the position of the 3D points. In the non-idealized case, the rotation axis of the scanner head and that of the galvanometer may not be perpendicular, and some small translation offsets can result from the misalignment of the laser and galvanometer with respect to the rotation center of the scanner head. Moreover, the rotation axis may wobble. For high-end systems, all these issues and others have to be taken into account. Since access to a facility capable of calibrating a TLS system is rare, this topic is not discussed in this chapter. However, the characterization of systems will be discussed in Sect. 4.6. For the remainder of this section, two technologies for the distance measurement known as pulse-based and phase-based are presented. Pulse-based systems perform a direct measurement of the time required by the light to do a round trip between the scanner and the scene. Phase-based systems perform an indirect measurement of the time by measuring a phase offset. Some authors reserve the name time-of-flight for systems performing direct measurement. In this chapter, a less restrictive definition of time-of-flight is used.
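The idealized conversion from one range reading and two calibrated angles to a 3D point can be sketched as follows. This is only an illustration: the axis convention (z along the azimuth rotation axis, elevation measured upward from the x-y plane) and the function name `polar_to_cartesian` are assumptions, not something specified in this chapter, and none of the non-idealized effects (non-perpendicular axes, offsets, wobble) are modeled.

```python
import math

def polar_to_cartesian(r, azimuth, elevation):
    """Convert a range and two angles (radians) to (x, y, z).

    Assumed convention (hypothetical): z is the azimuth rotation axis,
    azimuth is measured in the x-y plane from the x axis, and elevation
    is measured upward from the x-y plane.
    """
    horizontal = r * math.cos(elevation)  # projection onto the x-y plane
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            r * math.sin(elevation))
```

Applying this function to every sample of a scan, while keeping the (azimuth index, elevation index) ordering, yields an organized range image rather than an unstructured point cloud.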

2.1 Pulse-Based Systems

Pulse-based systems continually pulse a laser, and measure how long it takes for each light pulse to reach a surface within the scene and return to the sensor. Typically, the pulse has a Gaussian shape with a half-beam width of 4–10 ns. Since the speed of light is known, the range \(r(\varDelta t)\) of the scene surface is defined as

\[ r(\varDelta t) = \frac{c \, \varDelta t}{2} \tag{4.1} \]

where c is the speed of light, and \(\varDelta t\) is the time between the light being emitted and it being detected. The factor of two accounts for the round trip. Typically, the detector samples the signal every 1 or 2 ns. Different algorithms that perform the detection of pulses in the incoming signal are discussed in Sect. 4.2.1.2. In many applications, the detection of the peak of a pulse with a sub-nanosecond accuracy is critical as the pulse travels approximately 30 cm in one nanosecond. Figure 4.3-left illustrates the principle.

The simplest implementation assumes that a detected pulse corresponds to the last pulse emitted. In some situations, the ordering of the outgoing pulses may be different from the order of the returning pulses. This can occur in scenes with large depth variations. A pulse can reach a distant surface and, before that pulse returns to the sensor, a second pulse can be emitted toward a close surface and return to the sensor first. In order to avoid this situation, the maximum pulsing rate \(f_p\) of a pulse-based system is limited by the maximum range \(R_{max}\) of the system using

\[ f_p \le \frac{c}{2 R_{max}} \tag{4.2} \]

As an example, a system having a maximum range of 1.5 km is expected to generate at most 100,000 range measurements per second.
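As a quick numerical check of the two pulse-based relations, the round-trip range \(r = c\,\varDelta t / 2\) and the pulse-rate limit \(f_p \le c / (2 R_{max})\), the following minimal sketch may help. The function names are illustrative, and the vacuum speed of light is used as an approximation of the speed in air.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s); air is slightly slower

def range_from_round_trip(delta_t, c=C):
    """Range (m) for a measured round-trip time delta_t (s)."""
    return c * delta_t / 2.0

def max_pulse_rate(r_max, c=C):
    """Highest pulse rate (Hz) such that a pulse returns from r_max (m)
    before the next pulse is emitted."""
    return c / (2.0 * r_max)

# One nanosecond of round-trip time corresponds to about 15 cm of range.
print(range_from_round_trip(1e-9))
# A 1.5 km maximum range limits the system to roughly 100,000 pulses/s.
print(max_pulse_rate(1500.0))
```

Note that a 1 ns timing error maps to about 15 cm of range error (half of the 30 cm traveled by the pulse), which is why sub-nanosecond peak detection matters.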

2.1.1 Multiple Returning Pulses

A property of pulse-based systems is that they may register multiple return signals per emitted pulse. Because the laser beam is not infinitely thin [94], an emitted laser pulse may encounter multiple reflecting surfaces and the sensor may register as many returns as there are reflective surfaces. This situation is frequently encountered in airborne applications related to forestry and archaeology where the system may simultaneously register the top of the vegetation and the ground [25, 59, 84]. Note that a pulse can hit a thick branch on its way to the ground and it may not actually reach the ground. For terrestrial applications, the analysis of multiple return signals can sometimes allow detection of a building behind vegetation or can detect both the position of a building’s window and a surface within the building. Some systems also record the complete return signal, which forms a vector of intensity values where each value is associated with a time stamp. These systems are known as waveform LiDAR [68]. Waveform LiDAR is capable of measuring the distance of several objects within the laser footprint and this allows characterization of the vegetation structure [1, 59, 106]. Finally, some detected pulses can be the result of an inter-reflection within the scene. This situation is illustrated in Fig. 4.4. In this figure, a part of the laser light emitted by the system is first reflected on the ground and then reflected on the road sign before reaching the sensor. Typically, multi-channel and area-based systems are more sensitive to inter-reflection artifacts than point-based systems.

2.1.2 Detecting a Returning Pulse

The detection of the peak of the returning signal with a sub-nanosecond accuracy is critical for many commercially available systems, yet scanner manufacturers provide few implementation details. An electronic circuit that can be used to locate the peak is the constant fraction discriminator circuit. This approximates the mathematical operation of finding the maximum of the returning pulse by locating the zero of the first derivative [60]. Implementation details about the peak detector of experimental systems developed for the landing of spacecraft are discussed in [22, 39]. In [39], the returning signal is convolved with a Gaussian and the peaks are located by examining the derivative. The standard deviation of the Gaussian is derived from the physical characteristics of the system. In [22], a sixth-degree polynomial is fitted to the returning signal. Waveform LiDAR records the complete return signal and makes it available to the end user. The end user can implement specialized peak detectors adapted to specific applications. A significant body of knowledge about peak detection for waveform LiDAR is available [68]. One approach is to model the waveform as a series of Gaussian pulses. The theoretical basis for this modeling is discussed in [104].
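The general idea of smoothing the sampled return with a Gaussian and locating the zero crossings of its first derivative can be sketched as below. This is a simplified illustration, not the detector of [39] or of any commercial scanner: the kernel width `sigma`, the amplitude `threshold`, the border handling, and the linear interpolation of the zero crossing are all assumptions made for the example.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized, truncated Gaussian kernel with 2*radius + 1 taps."""
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]

def smooth(signal, kernel):
    """Convolve signal with a symmetric kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - radius, 0), n - 1)
            acc += w * signal[idx]
        out.append(acc)
    return out

def find_peaks(signal, sigma=2.0, threshold=0.0):
    """Return sub-sample peak positions (in sample units) of a sampled waveform."""
    s = smooth(signal, gaussian_kernel(sigma, int(3 * sigma) + 1))
    # Central-difference derivative; d[k] is the slope at sample k + 1.
    d = [(s[i + 1] - s[i - 1]) / 2.0 for i in range(1, len(s) - 1)]
    peaks = []
    for k in range(len(d) - 1):
        # A maximum lies where the slope goes from positive to non-positive.
        if d[k] > 0.0 and d[k + 1] <= 0.0 and s[k + 1] > threshold:
            frac = d[k] / (d[k] - d[k + 1])  # linear interpolation of the zero
            peaks.append(k + 1 + frac)
    return peaks
```

Multiplying a returned position by the sampling period gives the arrival time of that return; a waveform with several returns simply yields several positions.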

2.2 Phase-Based Systems

Phase-based systems emit an Amplitude-Modulated (AM) laser beam [3]. The systems presented in this section are also known as continuous-wave ToF systems. Frequency Modulation (FM) is rarely used so it is not considered here (see footnote 2). The range is deduced from the phase difference between the detected signal and the emitted signal. Figure 4.3b illustrates the principle of the phase-based system. The temporal intensity profile \(I_s(t)\) for the illumination source is

\[ I_s(t) = A_s + B_s \cos (\phi (t) + \theta _s) \tag{4.3} \]

where \(A_s\), \(B_s\), and \(\theta _s\) are constants and \(\phi (t) = 2\pi t f_{cw}\) where \(f_{cw}\) is the modulation frequency. The temporal intensity profile \(I_d(t)\) for the detected signal is

\[ I_d(t) = A_d + B_d \cos (\phi (t) + \theta _d + \delta \phi ) \tag{4.4} \]

where \(\delta \phi \) is the phase offset related to the range. Note that \(A_d\) and \(B_d\) depend on \(A_s\), \(B_s\), the scene surface properties, and the sensor characteristics. In general, the values of \(\theta _s\) and \(\theta _d\) are assumed to be constant but their values are unknown. The conversion from phase offset to range can be achieved using

\[ r(\delta \phi ) = \frac{c \, \delta \phi }{4 \pi f_{cw}} \tag{4.5} \]

where c is the speed of light in the medium. The range over which the system can perform unambiguous measurement can be computed from the modulation frequency. This unambiguous range \(r_{max} \) is defined as

\[ r_{max} = \frac{c}{2 f_{cw}} \tag{4.6} \]

Increasing the modulation frequency \( f_{cw}\) will simultaneously reduce the value of \(r_{max}\) and the measurement uncertainty.
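The trade-off between modulation frequency and unambiguous range can be made concrete with a small sketch of the relations \(r = c\,\delta \phi / (4\pi f_{cw})\) and \(r_{max} = c / (2 f_{cw})\). The function names and the example frequencies are illustrative assumptions, and the vacuum speed of light stands in for the speed in the medium.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s), used as an approximation

def range_from_phase(delta_phi, f_cw, c=C):
    """Range (m) for a phase offset delta_phi (rad) at modulation frequency f_cw (Hz)."""
    return c * delta_phi / (4.0 * math.pi * f_cw)

def unambiguous_range(f_cw, c=C):
    """Largest range (m) measurable without phase wrapping at f_cw (Hz)."""
    return c / (2.0 * f_cw)

for f in (1e6, 10e6, 100e6):
    print(f"{f / 1e6:6.0f} MHz -> r_max = {unambiguous_range(f):8.3f} m")
```

A phase offset of \(2\pi \) maps exactly to \(r_{max}\), so ranges beyond \(r_{max}\) wrap around and become indistinguishable from shorter ones unless the ambiguity is resolved separately.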

2.2.1 Measuring Phase Offset

Phase-based systems combine the detected signal with the emitted signal in order to perform the phase offset measurement. At this point, one should realize a similarity with interferometry. A unified presentation of phase-based ToF and interferometry can be found in [29]. Combining the detected signal with the emitted signal is mathematically equivalent to the cross-correlation between Eqs. 4.3 and 4.4 which results in another sinusoidal function \(I_c(t)\) defined as

\[ I_c(t) = A_c + B_c \cos (\phi (t) + \theta _c + \delta \phi ) \tag{4.7} \]

where \(A_c\), \(B_c\) and \(\delta \phi \) are unknown and \(\phi (t)\) and \(\theta _c\) are known. Typically, \(\theta _c\) is assumed to be zero during the processing of the signal as a nonzero value simply creates a bias in the range measurement that can be compensated by the calibration. By sampling Eq. 4.7 three or more times with different values of \(t_i\), it is possible to construct a system of equations that allows us to solve for \(A_c\), \(B_c\), and \(\delta \phi \). This is done in the same way as is described in the previous chapter and the details are not repeated here. After selecting different sampling times \(t_i\) such that \(\phi (t_i) = \frac{2\pi i}{N}\), where N is the number of samples, we can compute the following:

\[ B_c = \frac{2}{N} \sqrt{ \left( \sum _{i=0}^{N-1} I_c(t_i) \sin \left( \frac{2\pi i}{N} \right) \right) ^2 + \left( \sum _{i=0}^{N-1} I_c(t_i) \cos \left( \frac{2\pi i}{N} \right) \right) ^2 } \tag{4.8} \]

and

\[ \varDelta \Phi = \arctan \left( - \sum _{i=0}^{N-1} I_c(t_i) \sin \left( \frac{2\pi i}{N} \right) , \ \sum _{i=0}^{N-1} I_c(t_i) \cos \left( \frac{2\pi i}{N} \right) \right) \tag{4.9} \]

where \(\arctan (n,d)\) represents the usual \(\tan ^{-1} (n/d)\) where the signs of n and d are used to determine the quadrant. The computed value \(\varDelta \Phi \) equals \(\delta \phi \) up to an integer multiple of \(2\pi \).
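The N-sample phase estimation can be sketched as follows. The sketch assumes the correlation model \(I_c(t_i) = A_c + B_c \cos (2\pi i/N + \delta \phi )\) with \(\theta _c = 0\); the plus sign in front of \(\delta \phi \) is a convention chosen for this example, and with the opposite convention the sign of the first `atan2` argument flips. The function name `estimate_phase` is hypothetical.

```python
import math

def estimate_phase(samples):
    """Recover (A_c, B_c, wrapped phase) from N >= 3 equally spaced samples.

    Assumes samples[i] = A_c + B_c * cos(2*pi*i/N + dphi); the returned
    phase is wrapped into [0, 2*pi).
    """
    n = len(samples)
    if n < 3:
        raise ValueError("at least three samples are required")
    s = sum(v * math.sin(2.0 * math.pi * i / n) for i, v in enumerate(samples))
    c = sum(v * math.cos(2.0 * math.pi * i / n) for i, v in enumerate(samples))
    a_c = sum(samples) / n               # offset: mean of the samples
    b_c = 2.0 * math.hypot(s, c) / n     # modulation amplitude
    dphi = math.atan2(-s, c) % (2.0 * math.pi)
    return a_c, b_c, dphi

# Synthetic example: four samples of a signal with a known phase offset.
true_phase = 1.2
samples = [2.0 + 0.5 * math.cos(2.0 * math.pi * i / 4 + true_phase) for i in range(4)]
print(estimate_phase(samples))
```

The sums work because, for equally spaced samples over one period with N of at least three, the sine and cosine sequences are orthogonal and the constant offset cancels out of both sums.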

2.2.2 Removing the Phase Offset Ambiguity

Once the phase difference \(\varDelta \Phi \) is computed, the range is defined as

\[ r(\varDelta \Phi ) = \frac{c \, (\varDelta \Phi + 2 k \pi )}{4 \pi f_{cw}} \tag{4.10} \]

where k is an unknown integer that represents the phase ambiguity. The value of k must be recovered in order to compute the location of the 3D points. A simple method uses multiple modulation frequencies denoted as \(f^i_{cw} \) with \(i>0\). When using this scheme, a value of \(k^i\) must be recovered for each \( f^i_{cw} \). The lower modulation frequencies are used to remove the range ambiguity while the higher ones are used to improve the accuracy of the measurement. In that scheme, the values of \(f^1_{cw}\) and \(r_{max} \) are selected such that \(k^1\) is always zero and the value of \( \varDelta \Phi ^i\) for \(i{\ge }1\) can be used to determine the value of \(k^{i+1} \) (assuming that \(f^i_{cw} < f^{i+1}_{cw} \)).
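The coarse-to-fine scheme can be sketched as below, using the relation \(r = c(\varDelta \Phi + 2k\pi )/(4\pi f_{cw})\) at each frequency. This is an illustrative sketch under stated assumptions: the frequencies are sorted in ascending order, the lowest one is chosen so that its ambiguity integer is zero, and each range estimate is accurate to better than half the unambiguous range of the next frequency. The function name and the example frequencies are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s), used as an approximation

def unwrap_range(phases, freqs, c=C):
    """Combine wrapped phases (rad) measured at ascending frequencies (Hz).

    phases[0] is assumed unambiguous (k = 0). Each later phase refines the
    range after its integer k is chosen from the previous, coarser estimate.
    """
    r = c * phases[0] / (4.0 * math.pi * freqs[0])
    for phi, f in zip(phases[1:], freqs[1:]):
        r_amb = c / (2.0 * f)                    # unambiguous range at f
        r_fine = c * phi / (4.0 * math.pi * f)   # range modulo r_amb
        k = round((r - r_fine) / r_amb)          # ambiguity integer
        r = r_fine + k * r_amb
    return r

# Synthetic example: wrapped phases that a target at 37.123 m would produce.
freqs = [1e6, 10e6, 100e6]
true_r = 37.123
phases = [(4.0 * math.pi * f * true_r / C) % (2.0 * math.pi) for f in freqs]
print(unwrap_range(phases, freqs))
```

In practice the ratio between successive frequencies is limited by the phase noise: if the coarse estimate is off by more than half of the next unambiguous range, the rounding picks the wrong k.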

3 Laser Trackers

In this section, we are specifically interested in a type of measurement system known as laser trackers, also known as portable coordinate measurement machines. Laser trackers are classified as contact systems because the light emitted into the environment is reflected by a retroreflector, often referred to as a Spherically Mounted Retroreflector or SMR, which is placed in contact with a surface at the time of measurement (see Fig. 4.5). Measurement results obtained by laser tracker systems are also relatively independent of the type of surface being measured because the point of reflection is the retroreflector. Every point obtained by a laser tracker system requires human intervention. For this reason, these systems are used where only a few points need to be measured, but must be measured at high accuracy. The laser tracker instrument is relatively easy to use and it can be employed to evaluate the performance of a time-of-flight 3D imaging system. The laser tracker is used as a reference instrument for medium-range 3D imaging systems in the American Society for Testing and Materials (ASTM) standard ASTM E2641-09 (2017). As seen in Fig. 4.1, laser tracker systems operate in the same range as ToF systems, from the tenth-of-a-meter to the hundreds-of-meters range. Their measurement uncertainties are at least one order of magnitude better than those of ToF systems. The performance of the laser tracker is covered by the American Society of Mechanical Engineers (ASME) ASME B89.4.19-2006 standard which uses the interferometer as a reference instrument [77]. Laser tracker systems use interferometry, ToF or a combination of both to calculate the distance. Distance measurement using interferometry is known as Interferometry Mode (IFM), while distance calculated using ToF is known as Absolute Distance Mode (ADM).

Fig. 4.5 Top left: experimental setup used to compare close-range photogrammetry and LT. Top right: a range image with different targets extracted, shown in red. Center left: a half-sphere mounted on a kinematic mount. Center right: the same setup with the half-sphere substituted with an SMR. Bottom left: a specialized target containing a recessed black bow tie. An operator is currently probing the planar surface using LT. Bottom center: the specialized probing tool with the SMR’s nest but without the SMR. Bottom right: the specialized probing tool with the SMR, as used to probe the center of the bow tie.

Typically, specifications for laser trackers are given as a Maximum Permissible Error (MPE) [55]. Specifically,

\[ \mathrm{MPE} = \pm \left( A + \frac{L}{K} \right) \tag{4.11} \]

where A and K are constants that depend on the laser tracker and L is the range of the SMR. Incidentally, MPE estimates are Type B uncertainties, which are described in GUM JCGM 104:2008 [53]. There are two types of uncertainty defined in [53]. Type A uncertainties are obtained using statistical methods, while Type B uncertainties are obtained by means other than statistical analysis. Specifications for the FARO X Laser Tracker (see footnote 3) are given in Table 4.1.

Measurement accuracy is affected not only by tracker performance but also by the variation in air temperature and by the procedures used to perform the measurement, which are not discussed here. In the remainder of this section, we describe best practices that should be adopted when using laser trackers.

3.1 Good Practices

Before a laser tracker can be used to obtain measurement results, the quality of data produced must be verified by a set of basic tests using SMR nests in fixed positions. These tests are often divided into two categories: ranging tests that assess the radial measurement performance, and system tests that evaluate volumetric measurement performance. The ASME B89.4.19 [70, 76] standard is the most applicable to the average laser tracker user because it provides a clear and well-recognized method for evaluating whether the performance of the laser tracker is within the manufacturer-provided Maximum Permissible Error (MPE). More recently, other research institutes including NIST and NPL have published additional in-field test procedures that can be used to establish the measurement uncertainty associated with any laser tracker measurement result.

3.1.1 Range Measurement Evaluation

A laser tracker performance assessment typically starts by assessing the range (radial) measurement performance of a laser tracker. These systems can use either one of the two operating modes to measure the radial distance to a Spherically Mounted Retroreflector (SMR). The two operating modes are Interferometric distance Measurement (IFM) or Absolute Distance Meter (ADM) [72, 75]. In IFM mode the SMR must initially be placed in the home position, then moved without breaking the beam to the position of interest. The distance is calculated by counting the number of fringes from the measurement beam relative to an internal reference beam, which corresponds to the distance traveled [35]. The distance between each fringe is one wavelength, and the result is a highly accurate measurement result. ADM mode, by contrast, uses a form of time-of-flight measurement so it is less accurate; however, it does not require SMRs to start the measurement process in the home position.

The range measurement results of laser trackers that operate in IFM mode are typically compared with displacement measurement results obtained from a calibrated reference interferometer with measurement uncertainty traceable to the meter [35]. These results are used to verify that the laser tracker range measurement performance is within the specifications provided by the manufacturer. This verification is normally performed at the manufacturer's facility, and repeated periodically as required by the manufacturer, to ensure that the laser tracker continues to operate within specification. The performance of laser trackers that operate in both IFM and ADM mode can be validated on site by calculating the deviation between range measurements obtained in IFM mode (reference) and ADM mode (test).

3.1.2 Angular Encoder Evaluation

Once the radial axis measurement performance has been verified, the performance of the horizontal and vertical angle encoders can be assessed using system tests. The following encoder errors can be identified [76]: beam offset, transit offset, vertical index offset, beam tilt, transit tilt, encoder eccentricity, bird-bath error, and encoder scale errors. A complete calibration requires quantification of each error source using a more comprehensive test regime. For in-field evaluation, however, most error sources can be bundled to reduce the number of encoder performance tests required to complete an in-field calibration.

3.1.3 In-Field Evaluation

The first in-field system test to perform is referred to as the two-face test because it compares the performance of the system between front-face and back-face mode [70, 74, 76]. An SMR is placed in a fixed position relative to the laser tracker and its position is determined in both front-face and back-face mode. Any deviation between the measurement results obtained in each mode indicates that compensation will need to be applied to minimize the deviation. The compensation procedure to correct for what is referred to as R0 (range zero) error is normally available in most software packages provided with the laser tracker. For most applications, a deviation of less than 50 \(\upmu \)m is considered negligible. The two-face test must be repeated after compensation has been applied to verify that the deviation between front-face and back-face mode measurement results has been minimized; however, it may also need to be repeated often during the day if the test situation changes, such as a change in temperature, or if the equipment needs to be turned off and moved to another location.
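The bookkeeping for a two-face check is simple enough to sketch. The example below assumes that the tracker software exports the SMR position as Cartesian coordinates in meters for each face; the function names are hypothetical, and the default tolerance is the 50 \(\upmu \)m figure quoted above, which may not suit every application.

```python
import math

def two_face_deviation(front, back):
    """Euclidean distance (m) between front-face and back-face positions."""
    return math.dist(front, back)

def needs_compensation(front, back, tolerance=50e-6):
    """True when the two-face deviation exceeds the tolerance (m)."""
    return two_face_deviation(front, back) > tolerance

# Hypothetical readings of the same SMR nest, in meters.
front = (1.0, 2.0, 3.0)
back = (1.00003, 2.0, 3.0)
print(two_face_deviation(front, back), needs_compensation(front, back))
```

Logging the deviation each time the test is repeated during the day gives a simple record of how temperature changes or relocation affect the instrument.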

The second in-field test compares the distance between two SMR positions where the distance has been previously established using a more accurate method. A common reference is a fixed-length bar with SMR nests at each end in which the SMR-to-SMR distance has been previously established using a distance measurement device more accurate than the laser tracker encoders, such as a Coordinate Measuring Machine (CMM) [70]. The SMR-to-SMR distance can also be established using the range axis of the laser tracker in IFM mode because the IFM radial uncertainty is typically much smaller than the encoder uncertainty. The second option is feasible only for bars in which the SMR nests are mounted on one side, which permits the SMR nests to be aligned along the radial axis in what is referred to as the “bucked-in” position. An example of one such bar available commercially is the Kinary, recently developed as part of a collaboration between NIST and Brunson Instruments [58].

Having established a fixed-length reference artifact, the encoder performances are evaluated by placing the artifacts in different positions and orientations. The ASME B89.4.19 [70] describes a series of test positions to evaluate whether the performance of the laser tracker is within the stated Maximum Permissible Error (MPE) of the laser tracker, as stated by the manufacturer.
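As a rough illustration of how a fixed-length artifact is used, the sketch below compares measured SMR-to-SMR distances with the reference length and checks them against an MPE. The positions, the reference length, and the MPE value are hypothetical, and the actual test positions and acceptance rules are those defined in ASME B89.4.19, which this sketch does not reproduce.

```python
import math


def length_error(smr_a, smr_b, reference_length_m):
    """Measured SMR-to-SMR distance minus the calibrated reference length (m)."""
    return math.dist(smr_a, smr_b) - reference_length_m


def within_mpe(errors_m, mpe_m):
    """True when every length error is within +/- MPE."""
    return all(abs(e) <= mpe_m for e in errors_m)


# Hypothetical values: a 2.3 m bar measured in two orientations.
REFERENCE_LENGTH_M = 2.300000
MPE_M = 60e-6

measurements = [
    ((1.000000, 0.000000, 0.000000), (3.300020, 0.000000, 0.000000)),  # horizontal
    ((2.000000, 0.000000, -1.150000), (2.000000, 0.000000, 1.149970)),  # vertical
]

errors = [length_error(a, b, REFERENCE_LENGTH_M) for a, b in measurements]
print([f"{e * 1e6:+.1f} um" for e in errors])
print("within MPE:", within_mpe(errors, MPE_M))
```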

3.2 Combining Laser Trackers with Other 3D Imaging Systems

Some applications may require the combined use of a laser tracker and a TLS system. A Laser Tracker (LT) can be used to measure some features with high accuracy and a TLS system can be used to obtain lower accuracy dense point clouds of the surroundings. Aligning the coordinate system of the LT and the TLS system requires pairs of corresponding 3D points. We present the following three methods to compute a correspondence:

- The first method uses three planes that intersect at a 3D point. The LT can be used to probe 3D points on the surfaces of each plane. It is possible to compute the equation of each plane and compute the intersection of the three planes [66]. Using the 3D points scanned by the TLS system, it is possible to compute the equation of the three planes and compute their intersection. Note that the intersection of the planes does not necessarily correspond to an actual physical structure in the scene.

- The second method uses a sphere. The LT can be used to probe points on the surface of a sphere. From these 3D points, it is possible to compute the position of the center of the sphere. Using the 3D points scanned by the TLS, it is also possible to compute the center of the sphere. A specific algorithm has been developed to compute the position of the center of a sphere using the 3D points of the surface of the sphere generated by the TLS system [86].

- The third method uses a specifically designed target and probing tool [30]. This target can be used simultaneously for photogrammetry (shape from motion), LT and TLS. The last row of Fig. 4.5 shows the target and probing tool.

The top right image of Fig. 4.5 shows a range image where inferred 3D points are computed based on the last two presented methods. The combined use of LT and TLS is further discussed in Sects. 4.6.2 and 4.7.1.3.
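The first two methods reduce to standard geometric fits. The sketch below, which assumes NumPy is available, fits a plane to probed or scanned points, intersects three fitted planes, and estimates a sphere center with a generic algebraic least-squares fit. It is not the specific TLS algorithm of [86], and all function names are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane n.x = d through an (N, 3) array; n is a unit normal."""
    centroid = points.mean(axis=0)
    # The normal is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, float(normal @ centroid)


def intersect_three_planes(planes):
    """Solve the 3x3 system formed by three (normal, offset) pairs."""
    normals = np.array([n for n, _ in planes])
    offsets = np.array([d for _, d in planes])
    return np.linalg.solve(normals, offsets)


def fit_sphere_center(points):
    """Algebraic fit based on |p|^2 = 2 c.p + (r^2 - |c|^2)."""
    a = np.hstack([2.0 * points, np.ones((len(points), 1))])
    b = (points ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(a, b, rcond=None)
    center = sol[:3]
    radius = float(np.sqrt(sol[3] + center @ center))
    return center, radius


# Synthetic, noise-free data: planes x = 1, y = 2, z = 3 and a sphere cap.
rng = np.random.default_rng(0)
uv = rng.uniform(-1.0, 1.0, size=(20, 2))
plane_x = np.column_stack([np.full(20, 1.0), uv[:, 0], uv[:, 1]])
plane_y = np.column_stack([uv[:, 0], np.full(20, 2.0), uv[:, 1]])
plane_z = np.column_stack([uv[:, 0], uv[:, 1], np.full(20, 3.0)])
corner = intersect_three_planes([fit_plane(p) for p in (plane_x, plane_y, plane_z)])

true_center, true_radius = np.array([0.5, -1.0, 2.0]), 0.1
theta = rng.uniform(0.0, 0.5 * np.pi, 50)   # only part of the sphere is visible
phi = rng.uniform(0.0, 2.0 * np.pi, 50)
directions = np.column_stack(
    [np.sin(theta) * np.cos(phi), np.sin(theta) * np.sin(phi), np.cos(theta)]
)
center, radius = fit_sphere_center(true_center + true_radius * directions)
print(corner, center, radius)
```

Applying the same fits to the LT data and to the TLS data yields one corresponding 3D point per artifact in each coordinate system. With real scans, noise and partial coverage of the sphere can bias an algebraic fit, which is one reason a dedicated algorithm may be preferred.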

The measurements of an LT and photogrammetry can also be combined using a kinetic mount, half spheres, and SMRs [80]. The middle row of Fig. 4.5 shows a half-sphere installed on a kinetic mount (left) and an SMR installed on the same kinetic mount (right). The center of the circle formed by the planar surface of the half-sphere is located at the same position as the center of the SMR. The top left part of Fig. 4.5 contains a picture of the experimental setup used to compare close-range photogrammetry and an LT [80]. Note the two yellow scale bars used to compute the scale of the sparse point cloud obtained by a shape-from-motion algorithm.

4 Multi-channel Systems

Typically, a multi-channel LiDAR emits multiple laser beams that are contained within a plane. Each acquisition generates a profile contained within that plane and, by modifying the orientation or position of this plane, it is possible to generate a range image. Multi-channel LiDAR is now considered by many automobile manufacturers as a key technology required for autonomous driving and for the remainder of this section we will focus on imaging systems typically encountered in autonomous driving vehicles. Two variants of this concept are presented in the following two subsections. The first one uses physical scanning, while the second one uses a time-multiplexing strategy to perform digital scanning. In both cases, the distance measurement is performed using a pulse-based method.

4.1 Physical Scanning

A simple modification to the point-based approach is the integration into a single scanning head of multiple point-based systems having their laser beams contained within a plane. By scanning the head perpendicularly to this plane, it is possible to generate a range image. This configuration limits the lateral resolution and/or field of view along one axis, but allows a higher sampling rate than point-based systems. This type of system is well adapted to navigation applications where the horizontal orientation requires a larger field of view and higher resolution than the vertical one. A typical system for navigation could generate a range image of \(20000 \times 16\) 3D points by rotating a 16-channel scanner head over 360\(^\circ \). Section 4.7.2 presents results from a 16-channel scanner. Typically, the 3D information along the vertical axis is used to verify that the proper clearance is available for the vehicle, while the horizontal axis is used for obstacle avoidance. For some autonomous driving applications, the desired vertical field of view is about 30\(^\circ \). As shown in Fig. 4.6, a 30\(^\circ \) vertical field of view allows the detection of objects on the road just in front of the autonomous vehicle and approaching hills, and it allows the monitoring of the vertical clearance of garage entrances and other structures. For moving vehicles, the limited vertical resolution can be compensated by integrating multiple range images into a single point cloud.
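To make the geometry concrete, the following sketch converts the profiles of a rotating multi-channel head into a grid of 3D points. It assumes an idealized head in which every channel originates at the rotation axis, and a hypothetical layout of 16 channels spread evenly over a 30\(^\circ \) vertical field of view; real scanners require per-channel calibration parameters that are not modeled here.

```python
import math

# Hypothetical layout: 16 channels from -15 to +15 degrees in 2-degree steps.
CHANNEL_ELEVATIONS_DEG = [-15.0 + 2.0 * j for j in range(16)]


def to_cartesian(r, azimuth_rad, elevation_rad):
    """Convert range, azimuth, and elevation to x, y, z (z is up)."""
    x = r * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = r * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = r * math.sin(elevation_rad)
    return (x, y, z)


def profiles_to_range_image(ranges):
    """ranges[i][j] is the range (m) at azimuth step i for channel j."""
    n_steps = len(ranges)
    image = []
    for i, profile in enumerate(ranges):
        azimuth = 2.0 * math.pi * i / n_steps  # one full revolution
        image.append(
            [
                to_cartesian(r, azimuth, math.radians(e))
                for r, e in zip(profile, CHANNEL_ELEVATIONS_DEG)
            ]
        )
    return image


# Toy example: 8 azimuth steps (instead of 20000), every return at 10 m.
ranges = [[10.0] * 16 for _ in range(8)]
image = profiles_to_range_image(ranges)
print(len(image), "x", len(image[0]), "points")
```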

An example of forward-looking LiDAR with digital scanning. The system is composed of arrays of three photodetectors and four lasers. This system generates a range image of four by three, which is shown on the right. The pixel in yellow represents the 3D point that is being measured, as illustrated on the left. For illustrative purposes, a single photodetector is active.

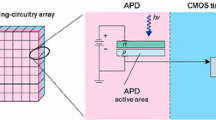

4.2 Digital Scanning

While physical scanning systems can generate 360\(^\circ \) range images, the design discussed in this section is adapted for the forward-looking configuration. This simplified solid-state design is inspired by the commercial documentation by LeddarTech (see footnote 4). The system is composed of a linear array of M photodetectors and a linear array of N laser sources. The projection and collection linear arrays are mounted side by side such that the arrays are perpendicular (see Fig. 4.7). Without loss of generality, it will be assumed that the projection array is oriented vertically, while the detector array is oriented horizontally. There is an optical element installed in front of the projection array that spreads the laser spots into stripes. The optical element is mounted such that the laser stripes are perpendicular to the linear array of projectors. Each individual laser will generate a horizontal stripe on the scene at a given elevation. By successively firing each laser, it is possible to scan (with a discrete number of samples) a horizontal laser line on the scene. Each laser pulse can be detected by the M photodetectors and the range of M 3D points can be computed. This is made possible by the installation, in front of the photodetector array, of an optical element that collects a stripe of laser light reflected from the scene. For a system that generates horizontal laser stripes, the orientation of the collection optical element is arranged such that the stripes of incoming light are vertical. A range image of N by M 3D points can be generated by projecting N laser stripes. Note that the array of N laser stripes could be replaced by a single laser stripe whose orientation is controlled using a Microelectromechanical Systems (MEMS) device.
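The time-multiplexing strategy can be summarized as a loop over the N lasers, each firing producing M range samples. The sketch below assumes the usual pulse-based relation in which the range is half the round-trip delay multiplied by the speed of light; `fire_laser` and `simulated_wall` are hypothetical stand-ins for the hardware interface.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def acquire_range_image(n_lasers, fire_laser):
    """Fire each laser in turn; each firing yields M round-trip delays (s)."""
    image = []
    for laser_index in range(n_lasers):
        delays = fire_laser(laser_index)  # one delay per photodetector
        image.append([C * dt / 2.0 for dt in delays])
    return image


def simulated_wall(laser_index, m_detectors=3, distance_m=20.0):
    """Hypothetical scene: every detector sees a surface at the same range."""
    return [2.0 * distance_m / C] * m_detectors


# Four lasers and three photodetectors give a four-by-three range image.
range_image = acquire_range_image(4, simulated_wall)
for row in range_image:
    print([f"{r:.2f}" for r in row])
```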

5 Area-Based Systems

Area-based ToF systems typically use a single illumination source and a 2D array of detectors that share the same collection optics. They are, to some extent, similar to a regular camera equipped with a flash. This type of system can be based on pulse or phase technologies. Pulse-based systems are also referred to as flash LiDAR. The camera model for the area-based system is presented in Sect. 4.5.1. Phase-based products are now mass produced and distributed as accessories for popular computing platforms. Due to the popularity of these platforms, an overview of the sensors is presented in Sect. 4.5.2. The last type of system presented in Sect. 4.5.3 is range-gated imaging, which is an active vision system for challenging environments that scatter light.

5.1 Camera Model

The simplest mathematical model that can be used to represent a camera is the pinhole model. A pinhole camera can be assembled using a box in which a small hole (i.e., the aperture) is made on one side and a sheet of photosensitive paper is placed on the opposite side. Figure 4.8 is an illustration of a pinhole camera. The pinhole camera has a very small aperture so it requires long integration times; thus, machine vision applications use cameras with lenses which collect more light and hence require shorter integration times. Nevertheless, for many applications, the pinhole model is a valid approximation of a camera. The readers familiar with the camera modeling material presented in Chaps. 2 and 3 can omit the following section.

5.1.1 Pinhole Camera Model

In this mathematical model, the aperture and the photosensitive surface of the pinhole camera are represented by the center of projection and the image plane, respectively. The center of projection is the origin of the camera coordinate system and the optical axis coincides with the Z-axis of the camera. Moreover, the optical axis is perpendicular to the image plane and the intersection of the optical axis and the image plane is the principal point (image center). Note that when approximating a camera with the pinhole model, the geometric distortions of the image introduced by the optical components of an actual camera are not taken into account (see Chap. 2 and [94] for more details). A 3D point \([X_c,Y_c,Z_c]^T\) in the camera reference frame can be transformed into pixel coordinates \([x,y]^T\) using

\[\begin{bmatrix} x \\ y \end{bmatrix} = \frac{d}{Z_c}\begin{bmatrix} X_c / s_x \\ Y_c / s_y \end{bmatrix} + \begin{bmatrix} o_x \\ o_y \end{bmatrix} \tag{4.13}\]

where \(s_x\) and \(s_y\) are the dimensions of the sensor in millimeters divided by the number of pixels along the X and Y-axis, respectively. Moreover, d is the distance in millimeters between the aperture and the sensor chip and \([o_x,o_y]^T\) is the position in pixels of the principal point (image center) in the image. The parameters \(s_x\), \(s_y\), d, \(o_x\), and \(o_y\) are the intrinsic parameters of the camera.

The extrinsic parameters of the camera must be defined in order to locate the position and orientation of the camera in the world coordinate system. This requires three parameters for the rotation and three parameters for the translation. The rotation is represented using a \(3\times 3\) rotation matrix

where \(\theta _x\), \(\theta _y\), and \(\theta _z\) are the rotation angles around the X, Y, and Z axis and translation is represented by a vector \(\mathbf {T}_c=[T_x,T_y,T_z]^T\). Note that the rotation matrix \(\mathsf {R}_c\) is orthogonal (i.e., \(\mathsf {R}^T_c=\mathsf {R}^{-1}_c\)) and \(\det (\mathsf {R}_c)=1\).

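A 3D point \(\mathbf {Q}_w= [X_w ,Y_w,Z_w]^T\) in the world reference frame can be transformed into a point \(\mathbf {Q}_c=[X_c,Y_c,Z_c]^T\) of the camera reference frame by using

\[\mathbf {Q}_c = \mathsf {R}_c \mathbf {Q}_w + \mathbf {T}_c. \tag{4.15}\]

A point in the camera reference frame can be transformed to a point in the world reference frame by using

\[\mathbf {Q}_w = \mathsf {R}^T_c \left[ \mathbf {Q}_c - \mathbf {T}_c \right]. \tag{4.16}\]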
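The pinhole model and the change of reference frame can be exercised with a short numerical sketch, assuming NumPy is available. It makes three assumptions that should be checked against the numbered equations of this chapter: the rotation is composed as \(\mathsf {R}_x \mathsf {R}_y \mathsf {R}_z\), the world-to-camera transformation is \(\mathbf {Q}_c = \mathsf {R}_c \mathbf {Q}_w + \mathbf {T}_c\), and the projection is \(x = (d/s_x)(X_c/Z_c) + o_x\), with the analogous expression for y. The function names and numerical values are hypothetical.

```python
import numpy as np


def rotation_matrix(theta_x, theta_y, theta_z):
    """Rotation composed as Rx @ Ry @ Rz (the order is an assumption)."""
    cx, sx = np.cos(theta_x), np.sin(theta_x)
    cy, sy = np.cos(theta_y), np.sin(theta_y)
    cz, sz = np.cos(theta_z), np.sin(theta_z)
    r_x = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    r_y = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    r_z = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return r_x @ r_y @ r_z


def world_to_camera(q_w, r_c, t_c):
    """Assumed convention: Q_c = R_c Q_w + T_c."""
    return r_c @ q_w + t_c


def camera_to_world(q_c, r_c, t_c):
    """Inverse of world_to_camera, using R_c^{-1} = R_c^T."""
    return r_c.T @ (q_c - t_c)


def project(q_c, d, s_x, s_y, o_x, o_y):
    """Pinhole projection of a camera-frame point to pixel coordinates."""
    x = (d / s_x) * q_c[0] / q_c[2] + o_x
    y = (d / s_y) * q_c[1] / q_c[2] + o_y
    return np.array([x, y])


# Hypothetical intrinsics: d = 8 mm, 0.01 mm pixels, principal point (320, 240).
intrinsics = dict(d=8.0, s_x=0.01, s_y=0.01, o_x=320.0, o_y=240.0)
r_c = rotation_matrix(0.1, -0.2, 0.3)
t_c = np.array([0.5, 0.0, -1.0])

q_c = np.array([0.1, -0.05, 2.0])
pixel = project(q_c, **intrinsics)
q_w = camera_to_world(q_c, r_c, t_c)
print(pixel, q_w, world_to_camera(q_w, r_c, t_c))
```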

5.1.2 Camera Models for Area-Based Systems

For a pulse-based system, a pixel \([x,y]^T\) with a time delay of \(\varDelta t(x,y)\) can be transformed into a 3D point using Eqs. 4.13, 4.16, and 4.1

where \(o_r(x,y)\) is an offset recovered at calibration time that compensates for a systematic error in range. The intensity of the peaks can be used to create an intensity image associated with the range image.

For a phase-based system, a pixel \([x,y]^T\) with a phase offset of \(\varDelta \Phi (x,y)\) can be transformed into a 3D point using Eqs. 4.13, 4.16, and 4.11

where \(o_r(x,y)\) is an offset recovered at calibration time and k is the unknown integer that represents the phase ambiguity (see Sect. 4.2.2.2). The sine-wave magnitude, \(B_c\), allows the creation of the intensity image.

The intensity images associated with the range image can be used to calibrate the camera [101, 110] (see Chap. 2). This allows recovery of the intrinsic and extrinsic parameters of the camera. Once the parameters are recovered and using the pairs of known 3D points and their image coordinates, it is possible to use Eq. 4.17 or Eq. 4.18 to compute the value of the offset \(o_r\). Note that the offset \(o_r\) could also vary with the distance of the 3D points.
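The conversion of a pixel and its time delay or phase offset into a 3D point can be sketched as follows, assuming NumPy is available. Several assumptions are made because the range equations of Sect. 4.2 are not reproduced here: the pulse-based range is taken as \(c\varDelta t/2\), the phase-based range as \(c(\varDelta \Phi + 2k\pi )/(4\pi f)\) with a modulation frequency f, the offset \(o_r\) is added to the range, and the range is measured along the ray joining the center of projection and the pixel. The function names are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum (m/s)


def pixel_ray(x, y, d, s_x, s_y, o_x, o_y):
    """Unit vector, in the camera frame, of the ray through pixel (x, y)."""
    v = np.array([s_x * (x - o_x), s_y * (y - o_y), d])
    return v / np.linalg.norm(v)


def pulse_range(delta_t, o_r=0.0):
    """Assumed pulse-based range: half the round-trip distance plus offset."""
    return C * delta_t / 2.0 + o_r


def phase_range(delta_phi, f_mod, k=0, o_r=0.0):
    """Assumed phase-based range; k is the integer phase ambiguity."""
    return C * (delta_phi + 2.0 * np.pi * k) / (4.0 * np.pi * f_mod) + o_r


def pixel_to_camera_point(x, y, rng, intrinsics):
    """Scale the pixel ray by the measured range."""
    return rng * pixel_ray(x, y, **intrinsics)


# Hypothetical intrinsics: d = 8 mm, 0.01 mm pixels, principal point (320, 240).
intrinsics = dict(d=8.0, s_x=0.01, s_y=0.01, o_x=320.0, o_y=240.0)

# A point at [0.1, -0.05, 2.0] m projects to pixel (360, 220) with this camera.
expected = np.array([0.1, -0.05, 2.0])
delta_t = 2.0 * np.linalg.norm(expected) / C
point = pixel_to_camera_point(360.0, 220.0, pulse_range(delta_t), intrinsics)
print(point)

# At 20 MHz, a phase offset of pi corresponds to about 3.75 m when k = 0.
print(phase_range(np.pi, 20e6))
```

The resulting camera-frame point can then be expressed in the world reference frame using the camera-to-world transformation of Sect. 4.5.1.1.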

5.2 Phase-Based ToF for the Consumer Market

Consumer-grade products that use phase-based technology typically use a modulation frequency in the range of 10–300 MHz. Some major industrial players are designing dedicated imaging sensors for ToF [12, 83]. Table 4.2 contains some characteristics of these sensors. These dedicated designs are made viable by the expected large volume of production associated with consumer-grade products. Rather than examining the actual physical implementation, we will present the guiding principles of these specialized designs. While there are multiple reasons to design a specialized ToF sensor chip, we identify three main reasons as follows:

1. Increasing the dynamic range of the system. For most 3D active imaging systems, increasing the dynamic range usually results in a reduction of the measurement uncertainty. Some specialized ToF sensors achieved this by using a per-pixel gain.

2. Reducing the power consumption of the system. This can be achieved by reducing the power requirement of the sensor chip itself or by increasing the fill factor and quantum efficiency of the sensor, which allows a reduction of the power output of the illumination source. This reduction is key to the integration of ToF cameras into augmented-reality and mixed-reality helmets, which require a 3D map of the user environment.

3. Immunizing the system from ambient lighting variation. To achieve this, some chips acquire two images quasi-simultaneously and output the difference of both images. The difference of two sine waves with the same frequency, but with a phase offset \(\pi \), is a sine wave with twice the amplitude, centered on zero (i.e., without ambient illumination).
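The cancellation of the ambient term in the third item can be verified in one line. In the following worked equation, the symbols are introduced for illustration only: a is the constant ambient contribution, A the amplitude, \(\omega \) the angular frequency, and \(\varphi \) the phase of the received signal. Since \(\sin (\theta + \pi ) = -\sin (\theta )\),

\[\bigl (a + A\sin (\omega t + \varphi )\bigr ) - \bigl (a + A\sin (\omega t + \varphi + \pi )\bigr ) = 2A\sin (\omega t + \varphi ).\]

The ambient term a disappears and the amplitude doubles, provided that the ambient illumination is the same in both images, which is why the two acquisitions need to be quasi-simultaneous.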

5.3 Range-Gated Imaging

Range-gated imaging is a technology typically used for night vision and challenging environments that scatter light. Typically, it is composed of an infrared camera coupled with an infrared light source. When the light source is pulsed and the exposure delay and integration time of the camera are controlled, it is possible to perform some rudimentary range measurements. The integration time (typically referred to as the gate) and exposure delay (referred to as the gate delay) can be specified such that light emitted by the source and captured by the sensor had to be reflected by an object located within a given range interval. One interesting aspect of range-gated imaging is that it can be used during a snowstorm, rain, fog, or underwater to image objects that would be difficult to image using passive 2D imaging technology. However, the resulting images become noisier and, in these weather conditions, the power output of the light source may need to be increased to levels that pose eye-safety issues. One possible mitigation strategy is to use a laser at 1.55 \(\upmu \)m. At this wavelength (known as shortwave infrared or SWIR), the light is absorbed by the eye fluids before being focused on the retina. This tends to increase the maximum permissible exposure to the laser source. Due to possible military applications, some SWIR imagers are regulated by stringent exportation laws. Moreover, they are expensive as they require Indium Gallium Arsenide semiconductors (InGaAs) rather than the more usual Complementary Metal Oxide Semiconductors (CMOS). While range-gated imaging technology is typically used for enhancing 2D vision, it can be used to construct a range image by acquiring multiple images with different acquisition parameters [23, 108]. Moreover, codification techniques, similar to the one used for structured light (see Chap. 3), can also be used [57].
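The relation between the gate parameters and the imaged range interval can be illustrated with a simplified calculation. The sketch assumes an ideal, infinitely short light pulse and an ideal rectangular gate, so that light returning between the gate delay and the end of the gate comes from a slab of the scene; the function name and numerical values are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def gate_to_range_interval(gate_delay_s, gate_s):
    """Nearest and farthest range (m) imaged for a given gate delay and gate."""
    r_min = C * gate_delay_s / 2.0
    r_max = C * (gate_delay_s + gate_s) / 2.0
    return r_min, r_max


# Hypothetical settings: 1 microsecond gate delay, 100 ns gate.
r_min, r_max = gate_to_range_interval(1e-6, 100e-9)
print(f"imaged slab: {r_min:.1f} m to {r_max:.1f} m")
```

With these settings, scatterers closer than about 150 m, such as nearby snowflakes or fog, do not contribute to the image. A real system also has to account for the pulse duration, which widens the slab.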

6 Characterization of ToF System Performance

A prerequisite for the characterization of ToF systems is the definition of uncertainty, accuracy, precision, repeatability, reproducibility, and lateral resolution. None of these terms are interchangeable. Two authoritative texts on the matter of uncertainty and the vocabulary related to metrology are the Guide to the Expression of Uncertainty in Measurement (GUM) and the International Vocabulary of Metrology (VIM) [51, 54]. The document designated E2544 from the ASTM provides the definition and description of terms for 3D imaging systems [6]. Due to space constraints, we provide concise intuitive definitions. For formal definitions, we refer the reader to the abovementioned authoritative texts.

Uncertainty is the expression of the statistical dispersion of the values associated with a measured quantity. There are two types of uncertainty defined in [53]. Type A uncertainties are obtained using statistical methods (standard deviation), while Type B is obtained by other means than statistical analysis (a frequently encountered example is the maximum permissible error). In metrology, a measured quantity must be reported with an uncertainty.

For a metrologist, accuracy is a qualitative description of the measured quantity (see the definition of exactitude in the VIM [54]). However, many manufacturers quote quantitative values that they describe as the accuracy of their systems. Note that accuracy specifications provided by the manufacturer often do not include information about the test procedures used to obtain these values. For many applications, the measured quantities need to be georeferenced and one may encounter the terms relative and absolute accuracy, the latter being critical for autonomous vehicle applications, for example. Relative accuracy relates to the position of something relative to another landmark. It is how close a measured value is to a standard value in relative terms. For example, you can give your location by referencing a known location, such as 100 km West of the CN Tower located in downtown Toronto. Absolute accuracy relates to a fixed position that never changes, regardless of your current location. It is identified by specific coordinates, such as latitude and longitude. Manufacturers usually specify the accuracy of their systems in relative rather than absolute terms. For a given acquisition, relative accuracy is generally better than absolute accuracy.

Intuitively, precision is how close multiple measurements are to each other. Precise measurements are both repeatable and reproducible. You can call it repeatable if you can get the same measurement using the same operator and instrument. It is reproducible if you can get the same measurement using multiple operators and instruments. Figure 4.9 shows the difference between precision and accuracy with a dartboard example. Precision is the grouping of the shots (how close they are to each other on average), whereas accuracy is how close the darts are, on average, to the bullseye.
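The dartboard analogy can be expressed numerically. In this sketch, the spread of repeated measurements (a Type A evaluation based on the sample standard deviation) stands in for precision, and the difference between their mean and a reference value stands in for the quantitative figure often quoted as accuracy. The function name and the data are hypothetical.

```python
import statistics


def bias_and_spread(measurements, reference):
    """Return (mean minus reference, sample standard deviation)."""
    bias = statistics.mean(measurements) - reference
    spread = statistics.stdev(measurements)
    return bias, spread


# Hypothetical repeated range measurements (m) of a target at 10.000 m.
tight_but_offset = [10.051, 10.049, 10.050, 10.052, 10.048]
centered_but_scattered = [9.960, 10.030, 10.010, 9.980, 10.020]

for name, data in [
    ("tight but offset", tight_but_offset),
    ("centered but scattered", centered_but_scattered),
]:
    bias, spread = bias_and_spread(data, reference=10.000)
    print(f"{name}: bias = {bias * 1e3:+.1f} mm, spread = {spread * 1e3:.1f} mm")
```

The first dataset corresponds to darts grouped tightly away from the bullseye, the second to darts scattered around it.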

Intuitively, the lateral resolution is the capability of a scanner to discriminate two adjacent structures on the surface of a sample. The lateral resolution is limited by two factors: structural resolution and spatial resolution. The knowledge of beam footprint (see discussion on laser spot size in Chap. 3) on the scene allows one to determine the structural component of the lateral resolution of the system. The spatial resolution is the smallest possible variation of the scan angle. Increasing the resolution of the scan angle can improve the lateral resolution, as long as the spatial resolution does not exceed structural resolution.

For the remainder of this section, we will first review the use of reference instruments to compare ToF systems. This is used to introduce current standards and guidelines. Then other related studies on the characterization of 3D imaging systems are presented. We then present methods to find inconsistencies in instrument measurement results.

6.1 Comparison to a Reference System

The performance of a 3D imaging system (instrument under test or IUT) is usually evaluated by comparing it to the performance of a reference system to determine if the IUT can be used to accomplish the same task as the reference system. This requires the reference system to be considered the “gold standard” for system performance. The objective is to validate whether, for a given task, both instruments can provide similar measurement results. Many governments have been active in developing standards to test the performance of TLS systems using an LT system as the reference instrument [14, 63, 64, 65]. For small objects, obtaining 3D reference models can be relatively simple because they can be manufactured with great accuracy or they can easily be characterized using a Coordinate Measuring Machine (CMM) [62]. When quantitatively evaluating the performance of a large-volume TLS system, a significant problem is how to acquire reliable 3D reference models. For example, manufacturing a reference object the size of a building would be cost-prohibitive. Moreover, creating a digital reference model of such an object would require the use of a complex software pipeline to merge many 3D scans. It may be difficult to properly characterize the impact of the software pipeline on the uncertainty of the data points comprising the 3D model. For example, consider the study presented in [99, 100] that compared the task-specific performance of a TLS system and a photogrammetry system being used to create a digital model of the entrance of a patrimonial building (10 m \(\times \) 10 m). For the large-volume digital reference model, the authors used a combination of measurement results obtained by both a total station and a TLS system. For a smaller volume digital reference model they combined measurement results obtained using both an LT system and a TLS system. In another study, the authors compared the performance of a photogrammetry system with that of a laser scanning system [18, 41]. Yet another study compared the performance of a photogrammetry system to a manual survey for the task of generating the as-built model of a building [31, 56]. A best practice methodology for acquiring reference models of building-sized objects is presented in [33]. The method uses a TLS system and an LT. A study that uses an LT to evaluate close-range photogrammetry with tilt-shift lenses is presented in [80]. The comparison of TLS and LT with photogrammetry is further discussed in Sect. 4.9.

6.2 Standards and Guidelines

There are relatively few guidelines and standards available for optical non-contact 3D imaging systems, most of which have emerged from the world of CMMs. The primary organizations that develop standards for optical non-contact 3D imaging systems are the International Organization for Standardization (ISO), American Society for Testing and Materials (ASTM), and American Society of Mechanical Engineers (ASME). There is also a set of guidelines published by the Association of German Engineers (the VDI).

ISO 10360 encompasses a group of international standards that provides methods for the acceptance and reverification of 3D imaging systems [49]. Parts 1 through 6 apply specifically to CMMs, but a part 7 was added to include CMMs with imaging probing systems. Parts 8, 9, and 10 apply to the broader class of Coordinate Measuring System (CMS), which includes both CMMs and LT systems. A part 12 is under development to include articulated arm systems under the CMS umbrella. All four of these standards, however, apply only to contact 3D imaging systems. ISO 17123-9 was developed to provide a standard for in-field test procedures for TLS systems [36, 50].

VDI 2634, a set of German guidelines, is devoted to acceptance and reverification testing of optical non-contact 3D imaging systems, but is limited to optical non-contact 3D imaging systems that perform area scanning from a single viewpoint [103]. Part 3 extends the test procedures to multi-view non-contact 3D imaging systems. VDI 2634 was written as an extension to ISO 10360, so it drew heavily from CMM standards; however, 3D imaging systems utilize measurement principles that differ substantially from those of CMMs. VDI 2617 part 6.2 makes some attempt to bridge the gap between contact and non-contact 3D imaging systems, but still approaches acceptance and reverification from the CMM perspective.

The ASTM E57 committee for 3D imaging systems has developed standards specifically for TLS systems. ASTM E2611-09 is a guideline to the best practices for safe use of 3D imaging system technology. This standard was followed by ASTM E2544-11, which provides a set of terminology to facilitate discussion regarding 3D imaging systems. Parallel with this effort was the development of ASTM E2807 to provide a common and vendor-neutral way to encode and exchange LiDAR data, although this was focused mostly on airborne LiDAR systems rather than TLS systems. The most recent standard developed by the committee is ASTM E2938-15, which evaluates the range measurement performance of a TLS system using an LT as the reference instrument. A proposed standard WK43218 is under development to evaluate the volumetric measurement performance of a TLS system, building on the method developed for E2938-15. More details on the development of standards can be found in [15].

6.3 Other Research

Outside the realm of official standards and guidelines, there have been many attempts to quantify the performance of LiDAR systems. Hebert and Krotkov [2] identified a variety of issues that can affect LiDAR data quality, including mixed pixels, range/intensity cross-talk, synchronization problems among the mirrors and range measuring system, motion distortion, incorrect sensor geometry model, and range drift. Mixed pixels refer to multiple return signals associated with a single outgoing signal, each resulting from a different surface along the beam path, and occur when the beam footprint is only partially intersected by a surface. They assessed the accuracy of a LiDAR system from 5 trials of measuring the positions of 6 targets of different materials (untreated cardboard, black-painted cardboard, wood) at known distances from 6 to 16 m under different lighting conditions (sunny and cloudy, with and without lights). Range accuracy was evaluated by averaging 100 scans of each of 6 black-painted cardboard targets at different distances from 6 to 16 m. Angular accuracy was evaluated by measuring the lateral drift of 1000 scans of white circles with radius 12 cm, which were extracted from each image using thresholding.

Tang et al. [96] focused their efforts on quantifying the effect of spatial discontinuities, or edges, on LiDAR data. This is related to the mixed pixel problem discussed by Hebert and Krotkov, but focused on the spatial measurement error that occurs as progressively less of the surface intersects the beam footprint. Indeed, Tang et al. referred specifically to the mixed pixel effect as a significant issue in which both foreground and background surfaces are simultaneously imaged. Different LiDAR systems handle multiple return signals in different ways such as reporting only the first or the last returns, or averaging the returns. In all cases, measurements at discontinuities are extremely noisy, so a challenge is to detect and remove them; however, zero-tolerance removal can significantly degrade the quality of the resulting depth map, especially when surfaces narrower than the beam footprint are removed as noise. In some cases, strips of up to several centimeters wide may be removed at spatial discontinuities.
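As a simple illustration of the detection problem, the sketch below flags samples of a single range profile that sit between a foreground and a background surface, which is the typical signature of an averaged mixed-pixel return. It is a naive heuristic written for this example, not the method of Tang et al. or of any particular LiDAR system, and the function name and threshold are hypothetical.

```python
def flag_mixed_pixels(profile, jump_m):
    """Flag samples that jump by more than jump_m from both neighbors,
    in the same direction (i.e., the sample lies between two surfaces)."""
    flags = [False] * len(profile)
    for i in range(1, len(profile) - 1):
        before = profile[i] - profile[i - 1]
        after = profile[i + 1] - profile[i]
        if abs(before) > jump_m and abs(after) > jump_m and before * after > 0:
            flags[i] = True
    return flags


# Hypothetical profile (m): foreground at 5 m, background at 10 m, and one
# averaged return at the edge between them.
profile = [5.00, 5.00, 5.01, 7.40, 10.00, 10.02, 10.00]
print(flag_mixed_pixels(profile, jump_m=0.5))
```

A threshold that is too aggressive would also remove valid points on surfaces narrower than the beam footprint, which is the degradation described above.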

Cheok and Stone [27], as part of the NIST’s Construction and Metrology Automation Group, have conducted significant research into the problem of characterizing the performance of LiDAR systems. In addition to their participation in the ASTM E57 standard development, they built a facility devoted to the performance assessment and calibration of LiDAR systems. Some of this work was discussed in Sect. 4.6.2. They noted that LiDAR performance is not simply a function of range measurement. Rather, it is complicated by having to function in a multi-system environment that may include equipment to determine the pose of the LiDAR system and other imaging systems such as triangulation or photogrammetry that may be included in the final model. For individual LiDAR systems, range measurement accuracy, surface color, surface reflectivity (shiny to matt), surface finish (rough to smooth), and angle of incidence with the systems are significant factors in assessing LiDAR system performance. As impediments to assessing LiDAR performance, they identified the lack of standard procedures for on-site test setup and equipment alignment, the lack of guidance on how to obtain reference measurements, the lack of standards related to target size or reflectivity, and the lack of guidelines regarding the required number of data points.

Zhu and Brilakis [111] examined different approaches to collecting spatial data from civil infrastructure and how they are converted into digital representations. They noted that while there were many types of optical-spatial data collection systems, no system was ideally suited for civil infrastructure. In their study they compared system accuracy, methods of automating spatial-data acquisition, instrument cost, and portability. According to Teizer and Kahlmann [97], the ideal system for generating digital models of civil infrastructure would be highly accurate, capable of updating information in real time, be affordable, and be portable. Zhu and Brilakis tabulated the benefits and limitations of four classes of optical spatial imaging systems: terrestrial photogrammetry, videogrammetry, terrestrial laser scanning, and video camera ranging. They classified each according to measurement accuracy, measurement spatial resolution, equipment cost, portability, spatial range, and whether or not data about the infrastructure could be obtained in real time (fast and automated data collection).

6.4 Finding Inconsistencies in Final 3D Models

As noted in the previous section, partial or complete automation of a spatial data collection system is the ideal; however, measurement errors often require manual intervention to locate and remove data prior to merging the data into the final model. Moreover, inaccuracies in the final model due to problems in the registration process must also be located and addressed.

Salvi et al. [90] completed a review of image registration techniques in which model accuracy was assessed as part of the process. Image registration is used to address the physical limitations that prevent most spatial imaging systems from accurately modeling a surface. These limitations include occluded surfaces and the limited field of view of typical sensor systems. The registration process determines the motion and orientation change required of one range image to fit a second range image. They divided registration techniques into coarse and fine methods. Coarse methods can handle noisy data and are fast. Fine methods are designed to produce the most accurate final model possible given the quality of the data; however, they are typically slow because they are minimizing the point-to-point or point-to-surface distance. Salvi et al. experimentally compared a comprehensive set of registration techniques to determine the rotation error, translation error, and Root-Mean-Square (RMS) error, as well as computing time of both synthetic and real datasets.
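The three error measures mentioned above can be computed as in the following sketch, which assumes NumPy is available and that a ground-truth rigid transformation and point correspondences are known, as is the case for synthetic datasets. The exact definitions used by Salvi et al. may differ, and the function names are hypothetical.

```python
import numpy as np


def rotation_error(r_est, r_true):
    """Angle (rad) of the residual rotation between estimate and truth."""
    cos_angle = (np.trace(r_est.T @ r_true) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos_angle, -1.0, 1.0)))


def translation_error(t_est, t_true):
    """Euclidean norm of the translation difference."""
    return float(np.linalg.norm(t_est - t_true))


def rms_error(points, r_est, t_est, targets):
    """RMS point-to-point distance after applying the estimated motion."""
    moved = points @ r_est.T + t_est
    return float(np.sqrt(np.mean(np.sum((moved - targets) ** 2, axis=1))))


def rot_z(angle):
    """Rotation about the Z-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Synthetic example: the estimate is off by 0.01 rad and by 5 mm.
rng = np.random.default_rng(1)
points = rng.uniform(-1.0, 1.0, size=(100, 3))
r_true, t_true = rot_z(0.30), np.array([0.2, 0.0, 0.1])
targets = points @ r_true.T + t_true

r_est, t_est = rot_z(0.31), t_true + np.array([0.003, 0.0, 0.004])
print(rotation_error(r_est, r_true))
print(translation_error(t_est, t_true))
print(rms_error(points, r_est, t_est, targets))
```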

Bosche [21] focused on automated CAD generation from laser scans to update as-built dimensions. These dimensions would be used to determine whether as-built features were in compliance with as-designed building tolerances. Even this system, though, was only quasi-automated in that the image registration step required manual intervention. The automated part of the system used the registered laser scans to find a best-fit match to the as-designed CAD with an 80% recognition rate. They noted that fit accuracy could not be properly assessed because it depended on the partially manual registration process in which a human operator was required to provide the match points for the registration system. The system, though, assumed each CAD object corresponded to a pre-cast structure that was already in compliance, so only assessed whether the resulting combination of those structures was in compliance.

Anil et al. [7, 8] tackled the problem of assessing the quality of as-built Building Information Modeling (BIM) models generated from point-cloud data. Significant deviations between as-built and as-designed BIM models should be able to provide information about as-built compliance to as-designed tolerance specifications, but only if the digital model is a reasonably accurate representation of the physical structure. In their case study, they determined that the automated compliance testing identified six times as many compliance deviations as physical test measurement methods alone. Moreover, the errors were discovered more quickly, resulting in a 40% time savings compared to physical measurement methods. The authors did, however, note that automated methods were not as well suited to detecting scale errors. This issue is balanced by the limitations of physical measurements, which include the limited accuracy of tape- and contact-based methods, the limited number of measurements, and the time- and labor-intensive nature of the measurement process. They identified the primary sources of measurement errors in scanned data as data collection errors (mixed pixels, incidence angle, etc.), calibration errors in scanning systems, data artifacts, registration errors, and modeling errors (missing sections, incorrect geometry, location, or orientation). They observed that these errors were, in practice, typically within the building-design tolerance, so the scanned data were well suited to identifying actual deviations in the as-built structure.

7 Experimental Results

In this section, we present experimental results that illustrate some of the applications of terrestrial and mobile LiDAR systems. The discussion of range uncertainty and systematic error of the measurements produced by TLS is also applicable to MLS.

7.1 Terrestrial LiDAR Systems (TLS)

First, the range uncertainty of a pulse-based TLS on different diffuse reflectance surfaces is presented. Then, the impact of sub-surface scattering on pulse-based and phase-based TLS is discussed. Finally, a case study of the use of pulse-based TLS and LT is showcased.

7.1.1 Range Uncertainty

The performance of a Leica ScanStation 2 was verified under laboratory conditions (ISO 1) for distances below 10 m and in a monitored corridor for distances up to 40 m. Figure 4.10 shows two sets of curves for evaluating the noise level on a flat surface (RMS value of the residuals after plane fitting to the data) as a function of distance, and the diffuse reflectance of different materials [5]. Using a Munsell cardboard reference with a diffuse reflectance of 3.5%, we get a maximum range of 20 m; therefore, the measurement of dark areas is limited by distance. At a range that varies between 1 and 90 m, on a surface with diffuse reflectance that varies between 18 and 90%, the RMS noise levels vary between 1.25 and 2.25 mm.

Left: graphical representation of the RMS values obtained after fitting a plane to the 3D point cloud at different distances and as a function of material diffuse reflectance. Right: graphical representation of the RMS values obtained after fitting a plane to the 3D point clouds for different material diffuse reflectance as a function of distance.
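The noise measure used above (RMS value of the residuals after plane fitting) can be sketched as follows. This is a minimal illustration that assumes an orthogonal least-squares fit computed by SVD; the software actually used for the reported measurements is not specified here, and the function name and simulated values are hypothetical.

```python
import numpy as np

def plane_fit_rms(points):
    """Fit a plane by orthogonal least squares.

    points: (N, 3) array of 3D coordinates.
    Returns (unit normal, centroid, RMS of the point-to-plane residuals).
    """
    centroid = points.mean(axis=0)
    centred = points - centroid
    # The right singular vector associated with the smallest singular
    # value is the direction of least variance, i.e. the plane normal.
    _, _, Vt = np.linalg.svd(centred, full_matrices=False)
    normal = Vt[-1]
    residuals = centred @ normal  # signed point-to-plane distances
    rms = np.sqrt(np.mean(residuals**2))
    return normal, centroid, rms

if __name__ == "__main__":
    # Simulated flat target 10 m away with 2 mm range noise (made-up values)
    rng = np.random.default_rng(0)
    n = 5000
    pts = np.column_stack([
        10.0 + rng.normal(0.0, 0.002, n),  # range direction, noisy
        rng.uniform(-0.5, 0.5, n),         # lateral extent of the target
        rng.uniform(-0.5, 0.5, n),
    ])
    normal, centroid, rms = plane_fit_rms(pts)
    print(f"RMS noise: {rms * 1000:.2f} mm")
```

Repeating such a fit for targets of different diffuse reflectance placed at different distances yields curves of the kind plotted in Fig. 4.10.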

7.1.2 Systematic Range Error

Figure 4.11 left shows the range measurement systematic error resulting from optical penetration of a marble surface. This image was acquired in-situ, while performing the 3D data acquisition of the Erechtheion at the Acropolis in Athens [34]. An approximately 0.1 mm thick piece of paper represents an opaque surface held flat onto the semi-opaque marble surface. The penetration makes the marble surface appear offset by almost 5 mm from the paper. This systematic range error may be attributed to a combination of laser penetration and unusual backscattering properties of the laser light on this type of marble. This systematic error was observed in the field using a pulse-based TLS and a phase-based TLS. While both scanners register a systematic error, the magnitude varies depending on the technology used and the type of marble.

Figure 4.11 right shows an experiment conducted under laboratory conditions (ISO 1) that validates this in-situ observation. A 15 mm thick well-characterized metallic plate is installed in front of a polished marble plate. When analyzing scans from a TLS, the metallic plate appears 8.4 mm thicker. Again, this systematic error was observed using a pulse-based TLS and a phase-based TLS and the magnitude of the error varies depending on the technology used.

Facade of a building facing south. Top: color from the onboard laser scanner 2D camera mapped onto the final 3D point cloud. Bottom: shaded view of the 3D point cloud. The polygonal mesh was created without using a hole-filling algorithm, using Polyworks IMMerge (no scale provided on this drawing).

7.1.3 A Case Study in Combining TLS and LT

This section presents a dataset containing a reference model composed of a 3D point cloud of the exterior walls and courtyards of a 130 m \(\times \) 55 m \(\times \) 20 m building that was acquired using the methodology proposed in [33]. This building is located at 100 Sussex Drive in Ottawa and was built from 1930 to 1932 to host the National Research Council Canada. The building is made of large sandstone and granite and it is designated as a classified federal heritage building under the Treasury Board heritage buildings policy of the Government of Canada (see Fig. 4.12). The two courtyards required special attention because they had to be attached to the 3D images of the exterior walls. Multiple scan positions were required and Fig. 4.14 illustrates the different range images that were acquired. The range images, once acquired, were aligned in a reference system linked to the measurement results from the laser tracker. This was possible through the combined use of TLS, LT, and spherical reference objects. The spherical objects were used to combine the TLS and LT coordinate systems (see Sect. 4.3.2). Some contrast targets were used for quality control purposes (see Fig. 4.13). Polyworks IMAlign, IMInspect, and IMMerge (see footnote 5) were used together to perform most of the work.

View of the south facade. The contrast target positions are extracted with the laser scanner interface software Cyclone 2.0. The results are embedded in IMInspect and mapped onto the final 3D point cloud (top). Bottom: close-up of the coordinates of some contrast targets on the west side of the south facade (no scale provided on this drawing).

Due to the combined use of both measurement instruments, it was possible to quantitatively evaluate the quality of the alignment of the different range images produced at different scanning positions. The final model has 47 million polygons and can be characterized by an expanded uncertainty (Type B) U(k\(=\)3) of 33.63 mm [33]. Locally, the RMS on the surface is about 2.36 mm (\(1\sigma \)) and the average spatial resolution is about 20 mm (between 10 and 30 mm).
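As a reading aid, and assuming the reported value follows the usual convention in which the expanded uncertainty \(U\) is the combined standard uncertainty \(u_c\) multiplied by the coverage factor \(k\), the corresponding standard uncertainty of the model can be recovered as

\[ u_c = \frac{U}{k} = \frac{33.63\ \text{mm}}{3} = 11.21\ \text{mm}. \]

Under that assumption, the global standard uncertainty is a little under five times the local surface RMS of 2.36 mm.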

7.2 Mobile LiDAR Systems (MLS)

Some LiDARs installed on mobile platforms are used for acquiring high-resolution maps of urban environments, while others are used for vehicular navigation. In the remainder of this section, 3D scans obtained using a 16-channel LiDAR system installed on top of an automobile are presented. The results shown in this section target navigation applications.

7.2.1 Static Scan

In Fig. 4.15, a 360\(^\circ \) range image and an image from a front-facing camera are presented. These were obtained while the automobile was parked. Standard 2D cameras must generally deal with lighting variation and shadows, which is a significant disadvantage. LiDAR, however, can provide a 3D representation of the surrounding environment largely independently of ambient light. Another example of a 360\(^\circ \) scan is presented in Fig. 4.16. The accompanying image was generated using the panoramic feature on a smartphone.

3D Map of the Collip Circle road located in London (Canada) generated using a SLAM algorithm. The trajectory of the vehicle is shown using a dotted line. This result was obtained using the system presented in [45].

7.2.2 Simultaneous Localization and Mapping (SLAM)

A typical robot integrated with a SLAM system will build a model of the surrounding environment and estimate its trajectory simultaneously. SLAM systems rely on several key algorithms, such as feature extraction, registration, and loop closure detection. SLAM is a central challenge in mobile robotics and SLAM solutions enable autonomous navigation through large, unknown, and unstructured environments. In recent years, SLAM has become an important research topic that has been investigated heavily [9]. In autonomous vehicle and mobile robotics applications, both cameras and LiDAR can be used for localization [95]. As stated above, LiDAR has the advantage of being largely independent of external lighting and of making use of the full 3D representation. Figure 4.17 shows a map of the environment built using the Velodyne VLP-16 LiDAR previously used. Note that the SLAM method that produced this result exploited the 360\(^\circ \) field of view of the VLP-16 LiDAR and the availability of odometry data.
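The role of odometry in building a map can be illustrated with a deliberately simplified 2D sketch. It is not the SLAM method of [45]: it only chains odometry increments into a trajectory and projects each scan into the world frame, that is, mapping with poses taken directly from odometry. A real SLAM system would additionally refine the poses by scan registration and correct accumulated drift through loop closure. All names and the data layout are assumptions made for illustration.

```python
import math

def compose(pose, delta):
    """Apply an odometry increment (dx, dy, dtheta), expressed in the
    vehicle frame, to a world-frame pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)

def scan_to_world(pose, scan):
    """Convert (range, bearing) returns in the sensor frame to world points.
    Assumes the sensor sits at the vehicle origin."""
    x, y, th = pose
    return [(x + r * math.cos(th + b), y + r * math.sin(th + b))
            for r, b in scan]

def build_map(odometry, scans, start=(0.0, 0.0, 0.0)):
    """Accumulate scans into one world-frame point list.

    scans[0] is taken at the start pose; scans[i + 1] is taken after
    applying odometry[i]. Returns (trajectory, world_points).
    """
    pose = start
    trajectory = [pose]
    world_points = scan_to_world(pose, scans[0])
    for delta, scan in zip(odometry, scans[1:]):
        pose = compose(pose, delta)
        trajectory.append(pose)
        world_points.extend(scan_to_world(pose, scan))
    return trajectory, world_points

if __name__ == "__main__":
    # One landmark seen from three poses (made-up values)
    odometry = [(1.0, 0.0, 0.0), (1.0, 0.0, math.pi / 2)]
    scans = [[(5.0, 0.0)], [(4.0, 0.0)], [(3.0, -math.pi / 2)]]
    trajectory, points = build_map(odometry, scans)
    print(trajectory[-1], points)
```

Because odometry errors accumulate along the trajectory, the same landmark would in practice be mapped to slightly different world positions at each pass, which is the inconsistency that registration and loop closure detection are meant to remove.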

8 Sensor Fusion and Navigation

Sensor fusion is the process of integrating data from different sensors in order to construct a more accurate representation of the environment than would otherwise be obtained using any of the sensors alone. In recent years, significant progress has been made in the development of autonomous vehicles and Advanced Driver-Assistance Systems (ADAS). LiDAR is an important sensor that made such progress possible. Nevertheless, LiDAR data must be fused with data from other sensors in order to improve the situational awareness and the overall reliability and security of autonomous vehicles.
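The claim that a fused estimate can be more accurate than any single sensor can be illustrated with the simplest textbook case: inverse-variance weighting of independent measurements of the same quantity. This sketch assumes unbiased sensors with independent Gaussian errors and known standard deviations; the function name and the numerical values are hypothetical and do not describe any particular vehicle system.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion.

    measurements: list of (value, standard_deviation) pairs, all measuring
    the same quantity with independent errors (an assumption).
    Returns (fused value, fused standard deviation).
    """
    weights = [1.0 / (std * std) for _, std in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    fused_std = (1.0 / total) ** 0.5
    return value, fused_std

if __name__ == "__main__":
    # Hypothetical distance to an obstacle: LiDAR 10.0 m +/- 0.1 m,
    # radar 10.4 m +/- 0.2 m
    value, std = fuse([(10.0, 0.1), (10.4, 0.2)])
    print(f"fused distance: {value:.2f} m, std: {std:.3f} m")
```

The fused standard deviation is always smaller than the smallest input standard deviation, which is the sense in which fusion improves on the best individual sensor when these assumptions hold.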

8.1 Sensors for Mobile Applications

For autonomous driving, ADAS, and navigation applications, the sensors may include stereo cameras, cameras, radar, sonar, LiDAR, GPS, Inertial Navigation System (INS), and so on. Radar is one of the most reliable sensors. It can operate in conditions such as fog, snow, rain, and dust, in which most optical sensors fail. However, radar has a limited lateral resolution. Cameras are cheap and have high resolution, but are affected by ambient illumination. Stereo cameras are cheap, but can be computationally expensive and do not cope well with low-texture areas. Sonar is useful for parking assistance, but has a limited range. The main disadvantage of sensor fusion is that different sensors can have incompatible perceptions of the environment; for example, some may detect an obstacle, while others may not.

In autonomous systems and ADAS, all sensor data is fed into the Data Acquisition System (DAQ). The data gathered by the DAQ can also be used for testing, development, improving efficiency, and ensuring reliability and safety. Figure 4.18 illustrates the collaboration of the main sensors in an autonomous or ADAS application. The sensor fusion process starts by using information provided by GPS, INS, and odometry in order to determine the absolute position and orientation of the vehicle. Then sensor information from cameras and LiDARs is compared to known maps that are downloaded from the cloud (Web Security Services).
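How relative motion information (odometry or INS) and absolute fixes (GPS) can be combined into a position estimate is sketched below with a one-dimensional Kalman filter. The text does not state which estimator is used in the system of Fig. 4.18; the Kalman filter is shown here only as a common choice, and the variable names and noise values are assumptions.

```python
def predict(position, variance, displacement, odometry_variance):
    """Dead-reckoning step: move by the odometry displacement.
    Uncertainty grows because odometry errors accumulate."""
    return position + displacement, variance + odometry_variance

def update(position, variance, gps_position, gps_variance):
    """Correction step: blend the prediction with an absolute GPS fix.
    The gain weights the fix by the relative confidence in each source."""
    gain = variance / (variance + gps_variance)
    new_position = position + gain * (gps_position - position)
    new_variance = (1.0 - gain) * variance
    return new_position, new_variance

if __name__ == "__main__":
    # Made-up values: start at 0 m with variance 1 m^2
    position, variance = 0.0, 1.0
    position, variance = predict(position, variance, 1.0, 0.5)
    position, variance = update(position, variance, 2.0, 1.5)
    print(position, variance)
```

In a vehicle the state would also include orientation and velocity, and the same predict and update cycle would run at the rate of the fastest sensor.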

8.2 Cloud-Based, High-Definition Map

The cloud-based information is typically referred to as a High-Definition (HD) map and it allows adaptation of the driving to potential obstacles, including traffic, in order to avoid potential accidents. An HD map consists of geo-spatial coordinates of the static elements that describe roadway features such as lane markings, traffic signs, landmarks, road-edges, and so on. In order to construct these maps, special mapping vehicles are augmented with a number of high-accuracy sensors [91]. This allows the determination of a geo-spatial representation of the roadways that can be used by autonomous vehicles or ADAS that use cheaper sensors. However, this HD-map approach creates significant data storage, computation, data delivery, and cyber-security issues that fall outside the scope of this chapter.

Data acquisition is a sampling process that measures real-world physical conditions and converts the resulting samples into a digital representation recorded on the Data Acquisition System (DAQ). In automotive applications, the data from the different sensors are typically transferred on a data bus known as a Controller Area Network (CAN) or CAN bus.

8.3 Absolute Positioning Systems