Abstract

The work deals with the evolution of Manufacturing Planning and Control (MPC) systems, from the approaches commonly adopted in industry so far to the new implementation schemes introduced by the Industry 4.0 and Cloud Manufacturing paradigms. First, an introductory part presents the different classical approaches and their scope of application, together with some insights into the positioning of Industry 4.0 in future market scenarios. A second section then focuses on the new technologies at the base of the Industry 4.0 concept and on how they can act as enablers for bridging the gap between current and future production control approaches. Finally, the last section explores the state of the art of Industry 4.0 and Cloud Manufacturing architectural implementations and outlines further development possibilities and strategies.

10.1 Classical Approaches to Production Control and Scheduling Prior to Industry 4.0

Manufacturing systems are complex systems whose performance is significantly affected by both configuration and operational management. The goal is to achieve customer satisfaction in terms of the products delivered, expected quality, requested quantity, and desired response time. Customers, who comprise the market, and their needs are driving factors that determine, at a strategic level, the coherent configuration of the system and, at an operational level, the optimal approach for its management. Both of these aspects are strictly correlated, since the fulfilment of customers’ needs can be obtained at a sustainable cost (i.e. a cost that can be transferred to the customer) only by the right combination of strategic decisions and operative management.

Different theories and manufacturing system designs have been developed to face decades of new challenges imposed by market changes, as well as implement technological innovations and progress made available by the scientific and engineering fields. Hence, before exploring the new perspectives involved in the advent of Industry 4.0 and recent market trends, a general picture of which can be found in Panetto et al. (2019), it is convenient to summarise currently accepted views regarding the strategic configuration of manufacturing systems and related implications for their management. Figure 10.1 shows basic configurations that can be adopted to structure a manufacturing system, ordered along with a coherence curve that depicts the relationship between the complexity of the product to be realised and the amount of time the customer is willing to wait until the order is delivered.

These two aspects are the main driving factors that influence the configuration of the system and its management approach. The complexity of a product represents the degree of customisation requested by customers. High complexity means products are formed by many components that frequently differ. Conversely, reduced complexity indicates simple products, formed by a few standardised parts that are likely to be present in different products, typically belonging to a specific family.

The degree of complexity is inversely related to the sales volume of a single product variant (i.e. a specific final product identified by a unique part number). This aspect is the natural consequence of simple products being designed according to basic requirements that cover the needs of many people, while complex, customised products fulfil the needs of a restricted niche of customers. Thus, a standardised product experiences a total demand that is not affected by the behaviour of every customer in the market since it is derived from the sum of a large number of individual demands, resulting in a huge aggregated sales volume that also exhibits low variation and high stability over time. Conversely, a complex, customised product is affected by a low and highly variable sales volume since it is intended to fulfil the needs of a restricted and identifiable group of customers, also resulting in a demand signal that is difficult to forecast given its intrinsic variability and uncertainty.

The required market response time is the other external driving factor impacting system configuration and management. It represents the amount of time that the generic customer in the downstream (i.e. the direct client of the manufacturing system) can wait to see their order satisfied, starting from the moment they place the order with the manufacturing system. This amount of time becomes significant when compared to the (average) cycle time of manufacturing systems, which is the amount of time the generic order takes to cross the system once it has been received. The cycle time includes all the processing times, as well as every lead time and queuing time the order experiences while moving through the system. A required market response time less than the manufacturing system cycle time implies that it is not possible to wait for the customer order before starting production; stock positioning strategies have to be deployed to mask a quota (or all) of the cycle time to assure compliance with the response time required by the customer.

With respect to the two aforementioned driving factors (i.e. product complexity/customisation and the required market response time), different approaches to the configuration and management of the manufacturing system can be adopted following a coherence criterion, which is represented in Fig. 10.1 by the specific position of each manufacturing configuration. The left part of Fig. 10.1 represents the zone with the tightest customer requirement in terms of response time. In this zone, the customer expects an almost immediate fulfilment of the order, making any manufacturing strategy that waits for the customer order before starting production de facto impossible. Customers’ orders have to be fulfilled from a stock of finished products since this is the only way to achieve such a rapid response. The strategy is to mask the production time from the customer, letting them experience only the time required for the final preparation and delivery of the order.

Given the need to keep stock of finished products, a high level of standardisation is required to reduce the risk of obsolescence. Therefore, if manufacturing systems operating in these conditions are coherent (i.e. sustainable from an economic viewpoint), then they produce a restricted number of products or variants of the same product, and each product experiences a large sales volume. Given the stability of demand for such items, forecast-based production planning can be deployed efficiently, making a make-to-stock (MTS) approach possible. Hence, production plans are anticipated with respect to actual demand and computed as a trade-off between inventory costs and production costs.

The production system in this scenario is typically configured as production lines dedicated to specific products or simple variants of them. The paradigm of ‘mass production’ is then adopted since the lines are working at the minimum possible unit cost to produce large batches. The production system is less responsive because every batch takes a long time to be produced; however, this does not constitute an issue with respect to customer order fulfilment since the market demand is directly satisfied by the inventory of finished products, and thus the customer does not experience the cycle time of the production system. Such a manufacturing environment is referred to as ‘flow’ since the production line clearly defines a continuous and stable production flow. When a few different products or variants are alternated in production, realising a cyclic load of the line, the manufacturing environment is called ‘repetitive’.

In the second column in Fig. 10.1, the just-in-time (JIT) manufacturing environment is found. The JIT concept was developed in the 1970s by the Toyota Motor Corporation in response to the need to reduce inventories, viewed as a source of unnecessary costs and inefficiency at the time (Ohno 1988). Toyota introduced the concept of kanban, developed by one of its production engineers in the mid-twentieth century, as a simple tool that enables the propagation of the demand signal along the production system by directly controlling and limiting the work-in-progress (WIP) in the system. The mechanism is simple; every time a station withdraws a predefined quantity of some item, the upstream station is allowed to produce the same amount of the withdrawn item to replenish its stock.

The original idea behind the JIT concept paved the way to define an entire class of manufacturing systems that, as can be seen in Fig. 10.1, covers a wide range of required market response times, from short to comparable to the manufacturing system cycle time. In this area, different configurations of the manufacturing system are possible, as will be described below, but all of them share a common denominator: creating a continuous and balanced production flow across the whole system by timing production activities to the demand pace. This is the real insight behind the JIT concept; it focuses attention on the entire system (i.e. the flow), controlling the WIP and monitoring the resulting throughput and cycle time instead of trying to optimise each resource (i.e. station) individually. Resources are then forced to produce at the demand pace and remain synchronised with the production flow since the demand signal is propagated continuously upstream thanks to the adopted WIP control mechanism.

The JIT concept makes flow-based management of the manufacturing system possible even if customisation is increased with respect to flow and repetitive production, that is, when some flexibility and differences in production routes are present. By maintaining flow-based control, which, in JIT manufacturing, is deployed via direct WIP measurement, it is possible to keep the system cycle time short and stable, resulting in increased predictability and responsiveness in the manufacturing system. Moreover, correlations among the WIP level, throughput, and cycle time can be better understood with respect to the level of variability introduced into the system by common detractors, such as setups or failures, and by market demand, which is mainly related to mix variation rather than aggregated sales volume variation in this context.

Different kinds of configurations can be adopted to implement the basic concept of JIT manufacturing, depending on the degree of customisation, the way it is obtained, and the response time allowed by the market. The original approach developed by Toyota, which can be called a ‘pure pull’ system, can be placed in the bottom-left part of the JIT zone in Fig. 10.1. The pure pull system de facto implements the original kanban mechanics, which considers the adoption of a single kanban loop for each pair of resources to limit the WIP in between them and release the production order. Hence, the presence of a stock point in any kanban loop is determined by the particular behaviour of the system. At this stock point, any item required by production must be present with an inventory level greater than zero to make production possible in the downstream processes. Items are stocked in standardised containers, typically plastic boxes, containing a specified quantity that represents the minimum possible batch size for the related item. Each box in the stock point has a kanban attached to it, and every time a box is withdrawn, the associated kanban is released and sent to the upstream process, which is then allowed to produce just the consumed quantity (i.e. the quantity needed for a new container). The kanban is then called a ‘production kanban’ since its release determines the generation of a production order. A pure pull JIT system falls in the category of MTS systems since market demand is fulfilled by the stock of finished products kept at the end of the production flow. Practically, customer orders entering the system generate the withdrawal of finished products from the final stock point, which releases production kanbans in a cascade, stage by stage, in a backward manner along the flow.

The advantage of these systems is that the customisation delivered to the market can be increased while maintaining a short response time since demand is still satisfied by the inventory of finished products, and the production can be kept under strict control due to the limitation of WIP, even if a more complex production route is present. On the other hand, given the need to keep a stock of every item used at every stage, as well as finished products, the degree of customisation cannot be too high, so as to prevent excessive stocks of slow-moving items and the related obsolescence risk. Moreover, demand must be stable to guarantee a steady pace in the production flow. These systems are unable to react to significant deviations in daily demand or to sharp variations in the demand mix, since these would empty the stock of some items at different stages of production, provoking starvation phenomena and the loss of throughput. This is also why the pure pull JIT system saw its birth in the automotive industry.
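The pure pull mechanics described above can be illustrated with a minimal sketch. It is a deliberately simplified, period-based model and not a representation of any real kanban implementation: the function name `simulate_pure_pull`, the one-period production delay, the unlimited raw material at the first stage, and the demand figures are all assumptions made for illustration.

```python
def simulate_pure_pull(demand_per_period, n_stages=3, kanbans_per_loop=2):
    """Toy pure pull line: one kanban loop (stock point) per stage.

    Assumptions (illustrative only): every container takes one period to
    produce, stage capacity is unlimited, and raw material for the first
    stage is always available.
    """
    stock = [kanbans_per_loop] * n_stages   # full containers at each stock point
    pending = [0] * n_stages                # released production kanbans per stage
    shipped = []
    for demand in demand_per_period:
        # Production: each stage works off its released kanbans, limited by
        # the containers available at the upstream stock point.
        for s in range(n_stages):
            upstream_available = stock[s - 1] if s > 0 else pending[s]
            produced = min(pending[s], upstream_available)
            if s > 0:
                stock[s - 1] -= produced     # withdraw upstream containers...
                pending[s - 1] += produced   # ...which releases upstream kanbans
            stock[s] += produced
            pending[s] -= produced
        # Customer withdrawal from the finished-product stock point.
        served = min(demand, stock[-1])
        stock[-1] -= served
        pending[-1] += served                # released kanbans go back upstream
        shipped.append(served)
    return shipped, stock, pending


shipped, stock, pending = simulate_pure_pull([1, 1, 3, 0], n_stages=2)
print(shipped)  # the spike of 3 containers is only partially served
```

In this sketch the sum of full containers and released kanbans in each loop never exceeds `kanbans_per_loop`, which is how the WIP cap shows up, and the demand spike in the third period illustrates the starvation effect that makes stable demand a prerequisite.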

To the right of the JIT block in Fig. 10.1, the other extreme condition for the application of flow-based control is reached. Here, the level of customisation requested by customers is higher than in the pure pull case, involving a significant increase in the number of finished products to be realised and the related number of items to be managed within the system. In this situation, maintaining stock of every component and the final product is not sustainable from an economic viewpoint given the wide variety to be managed and the implicit obsolescence risk. Stocks have to be moved as far upstream as possible, typically at the level of raw materials or by-products, where a certain level of standardisation is required considering their commonality, at least within the different product families. Customisation is then obtained by successive production stages, which need to be managed on an on-order basis, by adopting a make-to-order (MTO) approach. Given the increased level of customisation, the market must allow the manufacturing system more time to carry out the production.

Nevertheless, at this level of customisation requested by the market, the amount of time available for production activities remains tight with small margins. In this scenario, it is strategic to adopt a flow-based configuration with production order releases based on direct WIP control to increase stability and keep the system’s cycle time as brief as possible. The best configuration and control approach in this scenario is constant WIP (CONWIP; Hopp and Spearman 2011). Practically, CONWIP defines long, closed control loops involving specific parts of the manufacturing system dedicated to the production of particular families of items. Within each loop, anonymous kanbans (i.e. kanbans that are not a priori associated with specific part numbers) limit the number of production orders that can be in process simultaneously in the system. Every time an order is completed, the attached kanban is released and can move upstream to allow a new order to enter, generally for a different type of product. Hence, stocks of customised items are not kept within the flow, while the performance of the flow itself (i.e. throughput and cycle time) can be easily controlled by directly tuning the number of kanbans. The queue of orders at the entrance of each loop is determined by the consumption of items in the downstream stages, so the pull mechanism is preserved, and prioritisation strategies can be adopted in the queue to facilitate the optimal utilisation of resources along the flow.
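As a rough illustration of the CONWIP release logic described above, the following sketch models a single loop with anonymous cards. The class name `ConwipLoop`, its methods, and the first-in-first-out backlog are hypothetical choices made for this example; a real system could apply any prioritisation rule to the entrance queue.

```python
from collections import deque


class ConwipLoop:
    """Toy CONWIP loop: anonymous cards cap the number of orders in process."""

    def __init__(self, n_cards):
        self.free_cards = n_cards   # cards not currently attached to an order
        self.backlog = deque()      # orders waiting at the entrance of the loop
        self.in_process = []        # orders currently holding a card

    def submit(self, order):
        """Queue an order; it enters only if a card is free."""
        self.backlog.append(order)
        self._release()

    def complete(self, order):
        """Finish an order: its card is detached and sent back upstream."""
        self.in_process.remove(order)
        self.free_cards += 1
        self._release()

    def _release(self):
        # Cards are anonymous, so the next order can be of any product type.
        while self.free_cards > 0 and self.backlog:
            self.free_cards -= 1
            self.in_process.append(self.backlog.popleft())


loop = ConwipLoop(n_cards=2)
for order in ["A-001", "B-002", "C-003"]:
    loop.submit(order)
print(loop.in_process, list(loop.backlog))
loop.complete("A-001")
print(loop.in_process, list(loop.backlog))
```

The only tuning parameter in this sketch is `n_cards`, which mirrors the idea that throughput and cycle time are controlled by adjusting the number of kanbans in the loop.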

Finally, the central part of the JIT zone in Fig. 10.1, bounded by the pure pull approach to the left and CONWIP to the right, represents a transition from an MTS environment to an MTO environment. It exists on the part of the horizontal axis in which the required market response time is becoming less tight but remains at a value smaller than the cycle time of the entire manufacturing system, so a pure MTO approach cannot be adopted. Moreover, since the willingness of the customer to wait longer for the product to be delivered must be compensated in some way, the customisation level must increase. Here, a coherent configuration of the manufacturing system can be achieved by adopting a modularisation strategy in product design. If products are modularised, several different final products can be obtained by means of alternative combinations of a limited number of various modules. Customisation can then be kept downstream in the manufacturing process, mainly involving assembly activities (i.e. assembling common parts and different modules) and a few finishing production activities (e.g. painting). This approach facilitates the upstream production of modules, each of which is used in a number of different final products depending on customer orders, a characteristic similar to standardised items, making it more likely that MTS production can be adopted. At this point, the manufacturing system is split into two parts divided by a decoupling point at which most of the stock is kept, allowing the upstream to start production in advance by exploiting an aggregated forecast for module demand estimation over the mid-term horizon, while the downstream operates on an on-order basis given that the cycle time requested by the downstream remains shorter than the required market response time. Since the downstream, which is working directly on fulfilling customer orders, mainly executes assembly activities, this kind of configuration is called assembly-to-order (ATO).
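The positioning of the decoupling point can be sketched as a simple comparison between the required market response time and the cumulative cycle time of the downstream stages. The function names, the stage cycle times, and the three-way MTS/ATO/MTO labelling below are simplifying assumptions for illustration; in particular, the sketch labels every intermediate position as ATO even though the text reserves that term for a downstream made mainly of assembly activities.

```python
def decoupling_point(stage_cycle_times, response_time):
    """Return the index of the first stage that can run on an on-order basis.

    Stages are listed from upstream to downstream. Stages before the returned
    index must be forecast-driven; stages from the index onwards fit within
    the required market response time. An index equal to the number of stages
    means that even the last stage cannot wait for the customer order.
    """
    downstream_time = 0.0
    index = len(stage_cycle_times)
    for i in range(len(stage_cycle_times) - 1, -1, -1):
        if downstream_time + stage_cycle_times[i] > response_time:
            break
        downstream_time += stage_cycle_times[i]
        index = i
    return index


def classify(stage_cycle_times, response_time):
    """Map the decoupling point position to a coarse strategy label."""
    index = decoupling_point(stage_cycle_times, response_time)
    if index == 0:
        return "MTO"   # the whole flow fits within the response time
    if index == len(stage_cycle_times):
        return "MTS"   # demand must be served from finished-product stock
    return "ATO"       # stock at an intermediate decoupling point


# Hypothetical cycle times in days: fabrication, module production, assembly.
stages = [5.0, 3.0, 2.0]
for allowed in (0.5, 2.0, 5.0, 10.0):
    print(allowed, decoupling_point(stages, allowed), classify(stages, allowed))
```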

Next to the JIT block in Fig. 10.1, the ‘on order’ zone can be found. This zone refers to manufacturing production characterised by the highest customisation level, meaning the number of different final products delivered to the market is so large that each of them experiences a very low and unpredictable demand. The stock of final products and related components, highly customised as well, cannot be held in the manufacturing system given the high obsolescence risk. Production orders can be released only after the customer order is received, so the customer must provide the manufacturing system sufficient time to perform all the manufacturing activities. The manufacturing system is asked to maintain a cycle time as short as possible even if the production mix is very complex and formed by a large number of different small production orders moving along different routes in the system.

The high degree of fragmentation in the routes makes it impossible to identify a primary and stable production flow, so it is no longer feasible to manage the system on a flow basis, as in a JIT environment. The solution adopted to tackle the problem, thanks to the advent of information technology (IT), was the introduction of the Material Requirements Planning (MRP) system (Orlicky 1975). The approach adopted by MRP is precisely the opposite of the one taken by JIT: while JIT considers the whole production flow, MRP focuses on resources (i.e. the stations) with the objective of maximising their utilisation as if they were operating individually. To do this, MRP discretises the planning horizon into elementary time units, called ‘time buckets’ and typically sized to a 1-week duration, and by exploding the ordered products into their components, time bucket by time bucket, it computes the production orders to be released to each resource in a way that fills the available production time. The production control mechanism of MRP is also rather different from that of JIT manufacturing, which controls production by means of the WIP; MRP controls production by sending production orders to the resources directly based on estimations of the workload involved. Production orders are then pushed from the MRP system to the resources, defining the concept of ‘push’ production control, which differs from the ‘pull’ control of JIT.
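The explosion step described above can be sketched as a recursive walk over the bill of materials with lead-time offsetting. This is a minimal lot-for-lot illustration that ignores on-hand inventory, lot sizing, and capacity loading; the product structure, the lead times, and the function names are invented for the example.

```python
from collections import defaultdict


def explode(item, quantity, due_bucket, bom, lead_time, releases):
    """Offset the item by its lead time, then explode its components."""
    release_bucket = due_bucket - lead_time[item]
    releases[(item, release_bucket)] += quantity
    # Components must be available when the parent order is released.
    for component, qty_per in bom.get(item, []):
        explode(component, quantity * qty_per, release_bucket,
                bom, lead_time, releases)


def plan_order_releases(orders, bom, lead_time):
    """Return planned order releases as {(item, time_bucket): quantity}."""
    releases = defaultdict(int)
    for item, quantity, due_bucket in orders:
        explode(item, quantity, due_bucket, bom, lead_time, releases)
    return dict(releases)


# Hypothetical product structure; lead times are expressed in time buckets.
bom = {"bike": [("frame", 1), ("wheel", 2)], "wheel": [("rim", 1)]}
lead_time = {"bike": 1, "frame": 2, "wheel": 1, "rim": 1}
orders = [("bike", 10, 8)]   # 10 bikes due in bucket 8

for (item, bucket), qty in sorted(plan_order_releases(orders, bom, lead_time).items()):
    print(f"release {qty:>3} x {item:<5} in bucket {bucket}")
```

Each release is pushed to the resources at a bucket computed from estimated lead times rather than from the observed state of the shop floor, which is the essential contrast with WIP-based pull control.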

Since MRP relies on estimations of the production workload, its behaviour is less stable and less predictable than that of a JIT system, whose control mechanism is based on direct WIP measurement. Moreover, the need to create individual plans for the resources requires MRP production to create the conditions that make them operate as if they were isolated from each other. This result can be obtained by increasing the WIP in the system, which means increasing and distributing inventory along the system so as to make every resource work on a dedicated queue of production orders that never empties. According to Little’s law, the practical way to achieve this result is to impose fixed (significant) lead times on the production phases, resulting in the anticipation of order releases in every stage of the system. The result is a sharp increase in the entire system’s cycle time because of the long periods of time production orders spend in the queue. The cycle time then becomes longer and, therefore, more variable from order to order (i.e. the system becomes less predictable), making the MRP approach sustainable only when the market is rather permissive in terms of the required response time.
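Little’s law relates the three quantities involved as WIP = throughput × cycle time. The short sketch below applies it to the situation just described; the throughput and planned lead time values are made-up numbers, and the function names are hypothetical.

```python
def cycle_time(wip, throughput):
    """Little's law: average cycle time = WIP / throughput."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return wip / throughput


def wip_implied_by_lead_times(throughput, planned_lead_times):
    """WIP that each stage must hold to sustain its fixed planned lead time."""
    return [throughput * lead_time for lead_time in planned_lead_times]


throughput = 10                  # orders per day (assumed)
planned_lead_times = [5, 5, 5]   # days imposed on three production phases (assumed)

stage_wip = wip_implied_by_lead_times(throughput, planned_lead_times)
total_wip = sum(stage_wip)
print(stage_wip, total_wip, cycle_time(total_wip, throughput))
```

With these numbers, sustaining 10 orders per day through three phases with 5-day planned lead times implies 150 orders in process and a 15-day cycle time, whereas capping the WIP at 20 orders at the same throughput would give a 2-day cycle time.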

Until recently, the MRP system has been universally recognised as the best system to adopt in response to a highly customised demand, making users accept its weaknesses implicitly. However, as investigated in Sect. 10.3, recent market changes and future trends highlight critical issues with the sustainability of the MRP approach. Specifically, future market scenarios in developed countries show a continuous increase in the requested product customisation associated with a rise in order fragmentation; this is mainly due to the willingness of the actors involved in supply chains to reduce their stocks of materials, which leads them to ask for even shorter response times (Ivanov et al. 2018a). Hence, the compromise made by MRP of sacrificing cycle times in favour of throughput is no longer an acceptable strategy, especially considering that in modern markets, customers can agree to pay more if products are delivered more quickly.

Industry 4.0 and cloud manufacturing aim to address the need for delivering highly customised products within a short response time while maintaining high efficiency and effectiveness in production execution (Ivanov et al. 2014). Referring to Fig. 10.1, the intended effect is akin to squeezing the ‘on order’ production block to the left, which means reducing the cycle time to the level of a system that is managed on a flow basis, even if customisation is very high. To achieve this goal, Industry 4.0 considers the massive adoption of modern technologies to empower the capability of production resources to exchange data, make decisions, improve the coordination of activities on the shop floor (Dolgui et al. 2019b), and interact with higher decisional levels. Moreover, new models and architectures for manufacturing planning and control (MPC) are needed to best exploit the new capabilities introduced by Industry 4.0, as well as enable operation at the cloud level of the manufacturing system (Ivanov et al. 2016, 2018b; Vespoli et al. 2019). In the next sections, these two aspects will be developed and discussed in detail.

10.2 The Impact of New Industry 4.0 Enabling Technologies on Production Planning, Control, and Execution

The fourth industrial revolution faces problems of an organisational and technological nature. These two aspects are intimately connected because new technologies enable the implementation of new organisational paradigms, and new organisational models in the manufacturing industry encourage the development of new technologies. Among the key elements of Industry 4.0 are interoperability, intelligence, communication, and the decentralisation of decisions. Interoperability is the ability of systems, production technologies, and operators to collaborate (Xu et al. 2018). Decentralising decisions refers to the possibility for intelligent objects (agents) to make decisions within a domain. If a conflict occurs between the decisions of agents cooperating within the same domain, this conflict must be solved via rules or a decision-maker of a higher level. Intelligence is the ability of an agent to learn and train itself using theoretical models and real information from the field. Because of this ability, the agent can make decisions or suggest them to a higher-level decision-maker. Finally, communication is the ability of machines and systems to communicate through multiple technologies. Such communications can take place locally (i.e. between devices and agents placed in a specific area) or in an extended area (i.e. between different plants placed in geographically distant locations).

The aforementioned key elements explain why cyber-physical systems (CPS) and the Internet of things (IoT) are the most relevant key enabling technologies of Industry 4.0. These technologies are not the only ones introduced, but they are, indeed, the ones that characterise the Industry 4.0 paradigm. Additionally, the interest in CPS and the Industrial IoT (IIoT), which refers to migrating the IoT to the industrial context, can be assessed by consulting Google’s search statistics comparing the search terms ‘cyber+physical+systems’ and ‘industrial+Internet+of+things’. Figure 10.2 shows how interest in the IIoT has been growing since 2014, following the same trend as interest in CPS. The graph highlights two main points: (1) public interest in CPS preceded interest in IIoT, and (2) increasing CPS development has stimulated the tailoring of the IoT concept for use in the industrial field.

Fig. 10.2 Google Trends: searches for ‘industrial Internet of things’ and ‘cyber-physical system’. (Source: http://trends.google.it)

10.2.1 Cyber-Physical Systems

In the past, industrial production was mainly based on the human factor and machines. Typically, an operator was the user of a device used to manufacture a product or semi-finished product. Due to progress made in the electronics field in the 1980s, machines acquired the ability to carry out specific processes in an increasingly autonomous manner. Consequently, the operator switched from a user to a machine supervisor. The advantages of this evolution are many: higher repeatability of operations (e.g. as time passes, an operator gets tired, but a machine does not), the possibility of assigning more dangerous activities to a machine rather than an operator, with consequent advantages in terms of safety, and the possibility of achieving massive production rates. At that time, the myth of the ‘lights-out’ factory grew thanks to the birth of the computer integrated manufacturing (CIM) approach, but its application failed substantially because operations technology (OT) systems, which include the hardware and software systems devoted to detecting and imposing changes in physical processes, were not fault-tolerant enough and could not be as adaptive as needed because of the technologies available. Today, production systems are becoming increasingly complex as they need to be able to quickly adapt to product changes and unpredictable demand variations, as a direct consequence of shortening product life cycles and the increased product customisability requested by customers.

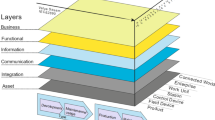

The emphasis on reconfigurability and adaptability in industrial processes has been the driving factor in the integration of operations technology (OT) and information technology (IT) in modern machines and industrial operations, paving the way for the birth of cyber-physical systems (CPS). Each CPS, made up of several devices or other sub-systems, has an internal logic component that utilises IT to perform evaluations and make decisions and a physical component (OT) that performs the actions. The 5C model, shown in Fig. 10.3, is a reference guideline for the development of a CPS in manufacturing processes (Lee et al. 2015). This model provides a base layer (I) where a CPS can communicate with field sensors or other CPSs.

Fig. 10.3 CPS: 5C model. (Inspired by Jin et al. 2017)

The information exchanged between several devices is analysed in the next layer (II), which returns the semantic content of this information (e.g. the identification of information patterns that may be critical to the operation of the machine). Therefore, the system acquires awareness of the state of its components and the devices that comprise it. The next level (III) is a fundamental level in the model because the CPS, through the awareness acquired from the previous level, can compare the performance of the fleet of machines that comprise it. At this level, a digital twin of the system is also available so that what-if analyses can be performed in a risk-free environment. The fourth layer (IV) of the 5C model is defined as cognition. At this level, the CPS acquires the ability to make and propose decisions. These decisions are ranked and optimised with respect to the desired objective. The top level (V) of the model is the configuration level, where decisions made in the ‘cyber environment’ are executed at the ‘physical environment’ level. The CPS, therefore, acquires flexibility and adaptability to ‘real-world’ conditions.

The new perspectives introduced by CPS represent a radical evolution in the concept of automation in industrial processes. CPSs can make decisions, can learn from what happened in the past (i.e. gain experience), share knowledge with other systems, and test possible decisions in virtual environments (i.e. digital twin). The latter is relevant in the context of CPS. The digital twin of the physical system can be represented by a simulation environment or a federation of simulation environments to model the CPS, integrating different techniques, such as discrete events, agents, and system dynamics. For further details, the reader can refer to Guizzi et al. (2019).

Moreover, CPSs can communicate with the company’s enterprise resource planning (ERP) systems, and this is changing the role of ERP within the company’s information systems. In particular, the traditional processes of factory resource planning (i.e. machines and human workers) will undergo a significant change with the introduction of CPSs. One of the characteristics of CPSs is their ability to self-organise (Habib and Chimsom 2019). This means that, once a target has been set, CPSs (representing factory resources in the broad sense) can self-organise and cooperate to reach it. Thus, new architectures that bring about a change in the ERP systems we know today will be achievable. These systems need to have a high-level coordination function, while the factory processes will be managed ‘autonomously’ by the CPSs.

10.2.2 Industrial Internet of Things

The term Internet of things (IoT) first appeared in 1999 in a presentation by Massachusetts Institute of Technology (MIT) researcher Kevin Ashton at Procter & Gamble. Ashton was working with colleagues on RFID tags, special electronic tags that can be applied almost anywhere and read remotely with special radio equipment (Ashton 2009). Since 1999, the IoT has undergone numerous reinterpretations. In some respects, the IoT is identified with a wireless sensor network (WSN). In practice, the IoT and WSN are not synonymous. In fact, the objective of the WSN is to collect data from a network of nodes (sensors) distributed in space, while the IoT aims to perform actions, starting with information from the WSN, to achieve a goal without human intervention, interacting with other intelligent systems. Therefore, some characteristics of the IoT are: (1) the interconnection of things and their connection to the Internet, (2) uniquely identifiable things, (3) ubiquity, (4) sensing/actuation capability, (5) embedded intelligence, (6) interoperable communication capability (based on standard and interoperable communication protocols), (7) self-configurability, and (8) programmability (Minerva et al. 2015). From another viewpoint, the IoT concept can be considered similar to CPS since the objects in an IoT system are able to implement and execute simple control actions. However, a CPS represents much more complex elements that can work as a hub for IoT systems.

In Industry 4.0 applications, the IoT takes the form of the industrial Internet of things (IIoT), a machine-oriented subset of the IoT. In particular, the IIoT facilitates connections between machines, control systems, and business processes in general. Therefore, it is a critical element in the creation of a smart factory (Sisinni et al. 2018). The main communication frameworks of the IIoT are the Data Distribution Service (DDS), the Open Platform Communications Unified Architecture (OPC-UA), and MTConnect.

The DDS is a standard of the Object Management Group (OMG) that distributes messages according to a publish/subscribe logic: a set of publishers sends messages, and a set of subscribers receives them. The DDS has two levels, as shown in Fig. 10.4. The lower level is data-centric publish/subscribe (DCPS), while the upper level is the data-local reconstruction layer (DLRL). The DLRL ensures communication between the application level and the DCPS. The DCPS is structured in seven entities:

1. DomainParticipantFactory: This entity represents the container of the different domains in which the information will be generated.

2. DomainParticipant: This entity represents a specific domain belonging to the DomainParticipantFactory. Within the DomainParticipant, specific topics, publishers, and subscribers are created.

3. Topic: This entity represents a typed part of a domain. It means that within a Topic, there is specific semantic content expressed through a particular data structure.

4. Publisher: This is an object that publishes data sent by the different DataWriters related to it.

5. DataWriter: This is an abstract entity implemented based on the data structure of the topic to which it is uniquely associated.

6. Subscriber: This is the entity that collects the data published on topics related to it and sends them to the associated DataReaders.

7. DataReader: This is an abstract entity, like the DataWriter, which is implemented based on the topic to which it is related.

Fig. 10.4 DDS architecture. (From Corsaro and Schmidt 2012)
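The relationships among the seven DCPS entities can be made more concrete with a small in-memory model. The following is only a sketch in plain Python, not an implementation of the DDS standard or of any vendor API: the class names mirror the entity names listed above, while every method name (e.g. `create_participant`, `write`, `deliver`) and the topic `SpindleTemperature` are assumptions introduced for illustration. Quality-of-service policies, discovery, and network transport are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Topic:
    """A named, typed part of one domain."""
    name: str
    data_type: type


@dataclass
class DataReader:
    """Reader bound to exactly one topic; stores the samples it receives."""
    topic: Topic
    received: List[Any] = field(default_factory=list)


@dataclass
class Subscriber:
    """Collects published samples and forwards them to its DataReaders."""
    readers: List[DataReader] = field(default_factory=list)

    def create_datareader(self, topic: Topic) -> DataReader:
        reader = DataReader(topic)
        self.readers.append(reader)
        return reader

    def deliver(self, topic: Topic, sample: Any) -> None:
        for reader in self.readers:
            if reader.topic is topic:
                reader.received.append(sample)


class DomainParticipant:
    """One domain: topics, publishers and subscribers are created inside it."""

    def __init__(self, domain_id: int) -> None:
        self.domain_id = domain_id
        self.topics: Dict[str, Topic] = {}
        self.subscribers: List[Subscriber] = []

    def create_topic(self, name: str, data_type: type) -> Topic:
        return self.topics.setdefault(name, Topic(name, data_type))

    def create_subscriber(self) -> Subscriber:
        subscriber = Subscriber()
        self.subscribers.append(subscriber)
        return subscriber

    def create_publisher(self) -> "Publisher":
        return Publisher(self)


class Publisher:
    """Publishes the data handed over by the DataWriters related to it."""

    def __init__(self, participant: DomainParticipant) -> None:
        self.participant = participant

    def create_datawriter(self, topic: Topic) -> "DataWriter":
        return DataWriter(self, topic)

    def publish(self, topic: Topic, sample: Any) -> None:
        # Samples never leave the domain of the owning participant.
        for subscriber in self.participant.subscribers:
            subscriber.deliver(topic, sample)


class DataWriter:
    """Writer bound to exactly one topic; enforces the topic's data type."""

    def __init__(self, publisher: Publisher, topic: Topic) -> None:
        self.publisher = publisher
        self.topic = topic

    def write(self, sample: Any) -> None:
        if not isinstance(sample, self.topic.data_type):
            raise TypeError(f"{self.topic.name} expects {self.topic.data_type.__name__}")
        self.publisher.publish(self.topic, sample)


class DomainParticipantFactory:
    """Container of the domains; returns one participant per domain id."""

    def __init__(self) -> None:
        self._participants: Dict[int, DomainParticipant] = {}

    def create_participant(self, domain_id: int) -> DomainParticipant:
        return self._participants.setdefault(domain_id, DomainParticipant(domain_id))
```

In this sketch, a writer created for the topic `SpindleTemperature` in domain 0 reaches only the readers of that same topic object in domain 0, which is the decoupling between publishers and subscribers that the publish/subscribe logic relies on.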

The OPC-UA, instead, is an accepted standard issued by the OPC Foundation and ratified by the International Electrotechnical Commission (IEC 62541) for the exchange of data between programmable logic controllers (PLCs), human-machine interfaces, servers, clients, and other machinery. The OPC-UA specifications are divided into two groups: the first refers to the primary functions/services offered, and the second to the particular specifications of data access. Regarding the information model, the standard provides only the infrastructure of the information model; individual vendors must model the information themselves, which generates significant fragmentation in the solutions. To avoid this problem, OPC-UA offers the ability to define extensions based on a basic information model developed by the OPC Foundation. Each manufacturer can then create solutions applicable to its devices, and clients can access this information regardless of the manufacturer because all solutions share the same basic information model (Pfrommer et al. 2016).

The basic principles of modelling are the following:

- Use of object-oriented techniques, including type and inheritance hierarchies.

- Access to type information in the same way as to instances.

- Possibility of exposing information in many ways by exploiting (fully connected) networks of nodes.

- Extensibility of type hierarchies and node links.

- No limitations on the modelling of information.

- Information modelling always placed on the server side.

The basic concepts of information modelling in OPC-UA are nodes and references between nodes. Nodes and References are grouped in the address space, which represents the set of information exposed by the OPC-UA server and accessible by any OPC-UA client.
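The idea of an address space made of nodes and references, with a type hierarchy that clients browse in the same way as instances, can be sketched in a few lines. The following is a plain-Python illustration under stated assumptions, not the API of any OPC-UA stack: the classes `Node` and `AddressSpace`, the node identifiers, and the machine types are hypothetical. The reference type names `HasSubtype`, `HasTypeDefinition`, and `HasComponent` follow the names used by the OPC-UA specification.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    """A node in the address space with its outgoing references."""
    node_id: str
    browse_name: str
    # Each reference is stored as (reference type, target node id).
    references: List[Tuple[str, str]] = field(default_factory=list)


class AddressSpace:
    """The set of nodes a server would expose to its clients (sketch)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}

    def add_node(self, node_id: str, browse_name: str) -> Node:
        node = Node(node_id, browse_name)
        self.nodes[node_id] = node
        return node

    def add_reference(self, source_id: str, reference_type: str, target_id: str) -> None:
        self.nodes[source_id].references.append((reference_type, target_id))

    def browse(self, node_id: str, reference_type: str) -> List[Node]:
        """Follow all references of one type from a node (types or instances alike)."""
        return [
            self.nodes[target]
            for ref_type, target in self.nodes[node_id].references
            if ref_type == reference_type
        ]

    def _supertype_of(self, type_id: str) -> Optional[Node]:
        # HasSubtype points from the supertype to the subtype, so search backwards.
        for node in self.nodes.values():
            if ("HasSubtype", type_id) in node.references:
                return node
        return None

    def type_chain(self, node_id: str) -> List[str]:
        """Return the type of an instance followed by all of its supertypes."""
        chain: List[str] = []
        definitions = self.browse(node_id, "HasTypeDefinition")
        current = definitions[0] if definitions else None
        while current is not None:
            chain.append(current.browse_name)
            current = self._supertype_of(current.node_id)
        return chain


# Hypothetical vendor model: a base type, a vendor extension, and one instance.
space = AddressSpace()
space.add_node("ns=2;s=BaseMachineType", "BaseMachineType")
space.add_node("ns=2;s=MillingMachineType", "MillingMachineType")
space.add_node("ns=2;s=Mill1", "Mill1")
space.add_node("ns=2;s=Mill1.SpindleSpeed", "SpindleSpeed")
space.add_reference("ns=2;s=BaseMachineType", "HasSubtype", "ns=2;s=MillingMachineType")
space.add_reference("ns=2;s=Mill1", "HasTypeDefinition", "ns=2;s=MillingMachineType")
space.add_reference("ns=2;s=Mill1", "HasComponent", "ns=2;s=Mill1.SpindleSpeed")
```

A client that only knows the base type can still interpret `Mill1`, because `space.type_chain("ns=2;s=Mill1")` leads from the vendor-specific type back to the shared one. This is the mechanism by which extensions of a common basic information model limit fragmentation.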

Comparing the two standards, the strength of DDS is its ability to share information in real time with low sensitivity to failures and high scalability without sacrificing performance. DDS was created for the global information grid, which is the backbone of the current IoT. The OPC-UA, however, was built in the field of industrial automation to allow interoperability/integration between devices, sensors, and PLCs. Therefore, its genesis and initial ecosystem are its strengths. Both standards, in their most recent versions, guarantee the security of information transfer, a characteristic that is fundamental in the current context of Industry 4.0. To this end, in recent years, some researchers have proposed hybrid models combining OPC-UA and DDS to identify an architecture that can overcome the limitations of the individual architectures (OPC Foundation 2019).

Finally, MTConnect (MTConnect Institute 2018) is an open and royalty-free standard released by the MTConnect Institute to exchange data and information. It is based on a data dictionary defined within the standard and provides a semantic data model. The transport protocol used by the standard is HTTP, and the information is semantically encoded in XML. The three main elements of an MTConnect implementation are the equipment, the MTConnect agent, and the client software. The equipment is a data source (e.g. machine tools and workstations) whose data are standardised by an adapter and transferred to an agent. The MTConnect agent is software that collects information from data sources and replies to data requests from clients. This software can be installed directly on the supervisory control and data acquisition (SCADA) system of the machine or on a computer that acquires data from multiple systems. The client is the application that requests data from the MTConnect agent and uses them to support operations. MTConnect is a read-only standard: information is collected and transmitted to a control level, where it is then analysed, but the control level does not send information back to a single machine. Unlike OPC-UA, MTConnect does not comply with any IT security regulations. The OPC Foundation and the MTConnect Institute have developed the MTConnect-OPC UA companion specification (OPC Foundation 2019), which aims to provide an information management model that can be used via either the MTConnect or the OPC-UA standard. In today’s complex production systems, which involve large numbers of machines and production lines, data exchange protocols that allow easy access to information from the shop floor are preferred over legacy systems.
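The read-only, HTTP-plus-XML interaction between a client and an agent can be illustrated with the Python standard library alone. The sketch below rests on several assumptions: the XML in `SAMPLE_XML` is a simplified, namespace-free fragment written for this example and is not guaranteed to conform to the MTConnect schema; the device, component, and data item names are hypothetical; and `fetch_current` assumes an agent reachable at a caller-supplied URL that answers the `current` request.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Dict, List

# Simplified, illustrative response (assumption: not schema-conformant).
SAMPLE_XML = """
<MTConnectStreams>
  <Streams>
    <DeviceStream name="Mill1">
      <ComponentStream component="Linear" name="X">
        <Samples>
          <Position dataItemId="x_pos" timestamp="2020-01-01T10:00:00Z">12.5</Position>
        </Samples>
      </ComponentStream>
      <ComponentStream component="Controller" name="controller">
        <Events>
          <Execution dataItemId="exec" timestamp="2020-01-01T10:00:01Z">ACTIVE</Execution>
        </Events>
      </ComponentStream>
    </DeviceStream>
  </Streams>
</MTConnectStreams>
"""


def fetch_current(agent_url: str, timeout: float = 5.0) -> str:
    """Client side: a plain HTTP GET, since the standard is read-only."""
    with urllib.request.urlopen(f"{agent_url}/current", timeout=timeout) as response:
        return response.read().decode("utf-8")


def parse_observations(xml_text: str) -> List[Dict[str, str]]:
    """Flatten the device/component/category nesting into one record per observation."""
    root = ET.fromstring(xml_text)
    observations: List[Dict[str, str]] = []
    for device in root.iter("DeviceStream"):
        for component in device.iter("ComponentStream"):
            for category in component:  # e.g. Samples or Events
                for item in category:
                    observations.append({
                        "device": device.get("name", ""),
                        "component": component.get("name", ""),
                        "category": category.tag,
                        "type": item.tag,
                        "data_item_id": item.get("dataItemId", ""),
                        "timestamp": item.get("timestamp", ""),
                        "value": (item.text or "").strip(),
                    })
    return observations
```

A monitoring application would call `parse_observations(fetch_current(agent_url))` periodically and analyse the result. Nothing in this flow writes back to the machine, which reflects the read-only nature of the standard described above.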

10.2.3 Additional Enabling Technologies

In addition to these enabling technologies, Industry 4.0 makes extensive use of a large number of technologies that did not necessarily arise in the industrial field (e.g. artificial intelligence [AI], cloud technology, blockchain technology, immersive technologies; Habib and Chimsom 2019). These technologies make it possible to implement production models that, until a few years ago, were only hypothesised in the world of research and could not find a real-world application. AI, for example, is used in CPSs for prediction and decision-making activities. Cloud technologies make it possible to manage and coordinate CPSs distributed across a territory, whether they belong to the same company or to different companies. Therefore, cloud technologies have a significant impact on the coordination and efficiency of supply networks. As a result, companies are increasingly integrated, which increases the need for security and privacy in the management of information. Blockchain technology satisfies this need by guaranteeing immutability, transparency, authenticity, decentralisation, distribution, the absence of intermediaries, and anonymity (Dolgui et al. 2020; Lee et al. 2019). Finally, immersive technologies are currently used in the industrial field, mainly in operator training and plant maintenance. Although they still have limitations (e.g. for health reasons, it is advisable not to use such devices for long periods of time), they can potentially make essential contributions on the shop floor (e.g. in the use of collaborative robots and in manual assembly activities).

10.3 New Models and Perspectives for Production Planning and Control in the Industry 4.0 Era

As discussed earlier, production planning and control systems have never undergone a strong evolution. In previous industrial revolutions, effort was focused on increasing productivity and on the technological aspects of production. For example, except for Taylorism (the division of labour) and its subsequent practical application to mass production by Henry Ford (Fordism) in 1913, the first manufacturing planning and control (MPC) system was not developed until 1960. The intent, of course, was always the same: reduce production costs while maximising the use of the production plant and the exploitation of operators’ working hours. After the rise and development of the information technology (IT) field, material requirements planning (MRP) and, subsequently, manufacturing resource planning (MRP-II, referred to below simply as MRP) systems evolved. MRP systems were developed to tackle the increasing complexity of manufactured products and to reduce the average inventory level since, through such a reduction, a considerable amount of immobilised capital could be released while simultaneously increasing plant flexibility.

Unfortunately, in the following years, such ambitions were not realised. Furthermore, many firms believe that MRP systems, despite further improvements (e.g. rough-cut capacity verification tools), have resulted in a slight increase in the average level of plant supplies (Hopp and Spearman 2011). One might ask what caused this problem. The causes lie in the assumptions underlying MRP and in the way firms react to them: (1) MRP assumes that the lead times of the various components are independent of the state of the production system (i.e. that the system works at infinite capacity); (2) MRP considers component lead times to be static (i.e. it does not take into account potential production problems or, in general, the variability of the processing cycle); and (3) because, in a real-world production environment, a delayed job is considered worse than excess inventory, firms tend to inflate component lead times. The third issue, referred to in the literature as ‘lead time syndrome’ (Knollmann and Windt 2013), leads firms to anticipate the release of production orders in order to limit the risk of delay caused by lead-time variability. However, the propensity to anticipate the processing of components and maximise machine utilisation, which is typical of a push control strategy, resulted in increased work-in-process (WIP) levels, causing a vicious cycle of continuously lengthening production lead times.
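The feedback loop behind the lead time syndrome can be reproduced with a deliberately simple toy model. The sketch below is not taken from the cited works; it assumes a plant that always works at full capacity, uses Little's law (cycle time = WIP / throughput) to estimate the actual lead time, and assumes a planner who never shortens the planned lead time and always keeps a fixed safety margin above the observed one. The function name, parameters, and default values are hypothetical.

```python
from typing import List, Tuple


def simulate_lead_time_syndrome(
    periods: int = 5,
    capacity: float = 10.0,       # jobs the plant can complete per period
    demand: float = 10.0,         # jobs due per period (plant fully loaded)
    initial_wip: float = 18.0,    # jobs on the shop floor at the start
    planned_lead_time: float = 2.0,
    safety_margin: float = 0.5,   # planner wants planned >= actual + margin
) -> List[Tuple[float, float, float]]:
    """Return (planned lead time, WIP, actual lead time) after each period."""
    wip = initial_wip
    planned = planned_lead_time
    history: List[Tuple[float, float, float]] = []
    for _ in range(periods):
        # Little's law with throughput equal to capacity.
        actual = wip / capacity
        # The planner inflates the planned lead time, never deflates it.
        new_planned = max(planned, actual + safety_margin)
        # A longer planned lead time releases orders earlier: the jobs due in
        # the added time window are pulled forward into this period.
        pulled_forward = demand * (new_planned - planned)
        releases = demand + pulled_forward
        completed = min(capacity, wip + releases)
        wip = wip + releases - completed
        planned = new_planned
        history.append((planned, wip, wip / capacity))
    return history


if __name__ == "__main__":
    for planned, wip, actual in simulate_lead_time_syndrome():
        print(f"planned={planned:.2f}  WIP={wip:.1f}  actual={actual:.2f}")
```

Under these assumptions, every increase of the planned lead time adds WIP, the added WIP lengthens the actual lead time, and the planner responds with a further increase, so neither quantity settles. With spare capacity (demand below capacity) the same model eventually drains the extra WIP, which suggests that the vicious cycle is most severe in highly utilised plants.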

As discussed above, the Japanese ‘just-in-time’ (JIT) approach developed in parallel to the MRP production philosophy. Here, firms are forced to standardise products and processes to obtain a continuous and balanced production flow across the entire system, working at the pace of demand. The resulting approach focuses attention on the system as a whole rather than on individual production resources, making it possible to achieve some operational advantages (e.g. shorter production cycles, reduced lead times, and lower inventories). This strategy has shown greater effectiveness in keeping the production flow organised and controlled, acting in a more targeted manner on WIP reduction. Undeniably, firms that have implemented JIT techniques have experienced significant improvements in productivity, as well as a gradual reduction of their WIP levels. However, this WIP reduction requires a trade-off: the standardisation of processes must be pushed continuously, which means that products have to be designed to facilitate this process and allow flow-oriented production. Additionally, this standardisation requirement, which often involves the whole production chain, is not only difficult to achieve but also pushes manufacturing companies into direct competition with industries in developing countries, where labour costs are low. This encourages a shift in the production vision from the ‘mass production’ scenario typical of the previous century toward ‘mass customisation’. Therefore, instead of achieving mere cost reduction, it is desirable to create value while meeting customers’ customisation and speed of delivery requirements. Furthermore, in this dynamic context, it becomes essential to acquire and evolve the managerial capabilities of a manufacturing plant, enabling the MPC system to allocate production resources quickly and effectively (i.e. increasing their interoperability and flexibility). The impact of such a strategy is so critical that it justifies the creation of a new industrial paradigm: the ‘fourth industrial revolution’ and the introduction of the abovementioned cyber-physical system (CPS) concept. This is now further motivated by the need for reconfigurability and responsiveness brought to light by emerging challenges such as pandemic-related critical situations (Ivanov and Dolgui, in press).

The CPS and Internet of things (IoT) concepts are recognised as technological, infrastructural, and enabling elements of this revolution, in which it is advisable to develop new organisation models (Riedl et al. 2014). In this regard, it is possible to identify a multitude of ‘reference models’, ‘reference architectures’, and ‘architectures’ in the literature (Moghaddam et al. 2018). Before mentioning some of the most important ones, we clarify their differences from a taxonomic perspective, considering the various definitions available in the literature. A ‘reference model’ is based on a small number of unifying concepts and can be used as a basis for the development of standards and for their explanation to a non-specialist. Hence, a reference model should not be linked directly to any standards, technology, or other concrete implementation details, but should try to utilise conventional semantics that can be used unambiguously across different implementations. It is therefore a stable model, universally recognised and recommended, from which architectural reference models (i.e. reference architectures) can be derived for a specific area. A ‘reference architecture’ is, in turn, a fundamental structural model that is applicable in a particular domain and accepted as a starting point for the definition of new system-specific architectures. To this end, it must be a sufficiently abstract framework that includes a set of basic concepts, axioms, and descriptions of the main interactions between entities inside and outside the application domain. Finally, an ‘architecture’ is a well-defined system structure that specifies in greater detail the elementary components of the system, its principles, and the relationships existing between its components (Pisching et al. 2018).

Regarding the manufacturing field, reference models available in the literature are Industry 4.0, cloud manufacturing, and the Internet of things. Liu and Xu (2017) conducted a comparative analysis of Industry 4.0 and cloud manufacturing, highlighting the similarities and differences between them. As previously stated, CPS is the enabling technology of the Industry 4.0 model, being able to adequately summarise all the technological aspects required in this production paradigm (Fig. 10.5), including the machine-to-machine (M2M) concept (e.g. inter-communication between machines), ‘horizontal and vertical integration’, and ‘end-to-end integration’. In an Industry 4.0 environment, machines and, hence, CPSs, must be able to interact with their digital counterpart (digital twin) to optimise their operating conditions (e.g. evaluate their state of health with prognostics techniques) and with other CPSs to facilitate cooperation and achieve production objectives (i.e. schedule activities).

Fig. 10.5 The Industry 4.0 reference model. (Inspired from Liu and Xu 2017)

Cloud manufacturing is a new service-oriented business paradigm based on the cloud-computing concept and method. This term first appeared in a published work by Bo-Hu et al. (2010), which defined cloud manufacturing as ‘a new networked manufacturing paradigm that organises manufacturing resources over networks according to consumers’ needs and requirements to provide a variety of on-demand manufacturing services via networks (e.g. the Internet) and cloud manufacturing service platforms’. Figure 10.6 is a diagram from Liu and Xu (2017) that depicts the core concepts of the cloud manufacturing reference model, where ‘providers supply their manufacturing resources, which will be transformed into services and then pooled into the cloud manufacturing platform’. Then, within this platform, customers may submit their requirements for the requested services, which range from product design, manufacturing, and management to all other stages of a product’s production. Therefore, cloud manufacturing extends the concept of manufacturing-as-a-service (MaaS), which shifts production-oriented manufacturing processes into a service-oriented network by representing single manufacturing assets as services.

Fig. 10.6 The Cloud Manufacturing reference model. (Inspired from Liu and Xu 2017)
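The provider/platform/customer interaction described above can be sketched as a small service pool. This is a minimal illustration under stated assumptions, not a description of any existing cloud manufacturing platform: the class names, the attributes of a service (capability, hourly rate, available hours), and the cheapest-feasible matching rule are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ManufacturingService:
    """A manufacturing resource exposed as a service by a provider."""
    provider: str
    capability: str          # e.g. "milling", "3d-printing", "design"
    hourly_rate: float
    available_hours: float


class CloudManufacturingPlatform:
    """Pools the services of many providers and matches customer requests."""

    def __init__(self) -> None:
        self.pool: List[ManufacturingService] = []

    def publish(self, service: ManufacturingService) -> None:
        """Provider side: add a service to the shared pool."""
        self.pool.append(service)

    def request(self, capability: str, hours: float) -> Optional[ManufacturingService]:
        """Customer side: reserve the cheapest service that can cover the request."""
        candidates = [
            s for s in self.pool
            if s.capability == capability and s.available_hours >= hours
        ]
        if not candidates:
            return None
        chosen = min(candidates, key=lambda s: s.hourly_rate)
        chosen.available_hours -= hours  # reserve the requested capacity
        return chosen


platform = CloudManufacturingPlatform()
platform.publish(ManufacturingService("PlantA", "milling", 80.0, 6.0))
platform.publish(ManufacturingService("PlantB", "milling", 95.0, 40.0))
platform.publish(ManufacturingService("StudioC", "design", 60.0, 20.0))
```

The customer never addresses a specific plant: a request such as `platform.request("milling", 5.0)` is resolved by the platform against whatever capacity the providers have pooled, which is the essence of treating manufacturing assets as on-demand services.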

Finally, there are plenty of reference models for the Internet of things (Bandyopadhyay and Sen 2011; Bassi et al. 2013; Xu et al. 2014). However, strictly speaking, some of these models should be considered reference architectures because their structure and definition are too detailed, meaning that it is impossible to recognise the universal, basic principles of the IoT paradigm within them. As discussed earlier, the key aspects of the IoT are interconnection and interoperability. Hence, the IoT enables data acquisition from the connected ‘things’ and facilitates their communication through the network, as well as the analysis of the data for their exploitation.

Once the reference model of a given paradigm has been defined, it is possible to derive the reference architecture. For example, as stated earlier, applying the IoT reference model to the manufacturing context leads to the industrial IoT (IIoT) reference architecture, defined specifically for the industrial context. However, since the objective here is to focus on Industry 4.0, we analyse the reference architectures and related architectures of the latter, clarifying the similarities among the architectural proposals. Since Industry 4.0 was conceived, many working groups have been involved in its development, expressing different viewpoints. For example, the ‘IBM Industry 4.0 architecture’ (IBM 2018) reflects IBM’s vision, introducing the division of manufacturing system architectures into three functional layers (i.e. edge, plant, and enterprise), with enhanced flexibility to deploy and move similar functionality between the three layers. The ‘NIST Service-Oriented Smart Manufacturing System Architecture’ (Lu et al. 2016), instead, introduced the ‘Smart Manufacturing Ecosystems’ concept, integrating for the first time in the same paradigm all the manufacturing competences, such as production, management, design, and engineering functions. Finally, the ‘Reference Architecture Model Industry 4.0’ (RAMI 4.0 [DIN SPEC 91345:2016-04 2016]), depicted in Fig. 10.7, is derived from the CENELEC Smart Grid Architecture Model (SGAM [CEN-CENELEC-ETSI 2014]) and represents the most comprehensive Industry 4.0 reference architecture, considering the many functional levels a manufacturing ‘asset’ may have. The asset is precisely the first concept defined in the reference document DIN SPEC 91345:2016-04, which refers to it as a component or a set of components of the factory. It can be a simple sensor or a set of simple components that together form a processing machine. An asset may be physical (e.g. an industrial machine or a product) as well as logical (e.g. a management system). Once the asset has been positioned on the base plane, which consists of the life cycle and value stream axis, defined with respect to IEC 62890, and a second axis representing the managerial hierarchy level, expanded from IEC 61512, it is possible to characterise its ‘functionalities’ across the layers provided. The model considers six layers of functional interaction, which together describe all the structural properties of the asset: business, functional, information, communication, and integration, as well as the asset itself.
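To make the positioning of an asset more tangible, the three RAMI 4.0 dimensions can be encoded as a small data structure. This is an illustrative sketch only: the class and attribute names are assumptions, the hierarchy level names follow the commonly published RAMI 4.0 diagram as we understand it, the life cycle axis is reduced to the type/instance distinction, and the two example assets and their functionalities are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict


class Layer(Enum):
    """The six layers used to characterise an asset's functionalities."""
    ASSET = "asset"
    INTEGRATION = "integration"
    COMMUNICATION = "communication"
    INFORMATION = "information"
    FUNCTIONAL = "functional"
    BUSINESS = "business"


class HierarchyLevel(Enum):
    """Hierarchy axis of the base plane (assumed level names)."""
    PRODUCT = "product"
    FIELD_DEVICE = "field device"
    CONTROL_DEVICE = "control device"
    STATION = "station"
    WORK_CENTRE = "work centre"
    ENTERPRISE = "enterprise"
    CONNECTED_WORLD = "connected world"


class LifeCycle(Enum):
    """Life cycle and value stream axis, reduced to its two main phases."""
    TYPE = "type"
    INSTANCE = "instance"


@dataclass
class Asset:
    """A physical or logical asset positioned in the model."""
    name: str
    physical: bool
    level: HierarchyLevel
    life_cycle: LifeCycle
    functionalities: Dict[Layer, str] = field(default_factory=dict)

    def describe(self) -> str:
        kind = "physical" if self.physical else "logical"
        layers = ", ".join(l.value for l in Layer if l in self.functionalities)
        return (f"{self.name}: {kind} asset at {self.level.value} level "
                f"({self.life_cycle.value}); layers: {layers}")


# Hypothetical examples: a sensor and a management system.
sensor = Asset(
    "temperature sensor", True, HierarchyLevel.FIELD_DEVICE, LifeCycle.INSTANCE,
    {Layer.ASSET: "thermocouple", Layer.COMMUNICATION: "publishes readings"},
)
planning_system = Asset(
    "management system", False, HierarchyLevel.ENTERPRISE, LifeCycle.INSTANCE,
    {Layer.FUNCTIONAL: "order planning", Layer.BUSINESS: "delivery commitments"},
)
```

Calling `planning_system.describe()` shows how a purely logical asset is placed on the same axes as a physical sensor, differing only in its coordinates and in the layers on which it offers functionality.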

Having explained the reference architecture concept, the discussion now focuses on the analysis and definition of the architecture of industrial production planning and control systems (i.e. MPC systems). The problem is that, to the authors’ best knowledge, there are not many examples of Industry 4.0 architectures and, more importantly, none is derived from a specific reference architecture. When deriving an MPC system architecture model from RAMI 4.0, it becomes clear that an asset or, more precisely, a set of assets may represent an MPC system. Interestingly, from the structural viewpoint, the aforementioned reference architectures reveal the assumptions behind them: they are the result of an effort to generalise existing architectural models. In particular, the ANSI/ISA95 standard shown in Fig. 10.8, which falls into the category of RAMI 4.0 specifications, represents a specific application of the reference architecture, even though it was developed before it. ANSI/ISA95 was defined to rationalise the competencies of the different management levels of a production plant, clarifying the interactions between the levels and identifying the communication standards among them (e.g. OPC-UA). In doing so, the ANSI/ISA95 standard provides a hierarchical view of a production planning and control system.

Moreover, it is from this standard that MRP systems started their development process, with all the limitations described previously. Their ability to react to a problem is limited to the predefined, basic rules that an advanced manufacturing execution system (MES) may have. Generalising, we may say that the limits of hierarchically structured architectures are that (1) most real-world manufacturing systems are too complex to be modelled in exact terms and solved optimally (e.g. optimising operations in an industrial setting is an NP-hard problem), and (2) the split between the enterprise resource planning (ERP) system and the MES creates a situation in which, even if the best mathematical optimisation is found at the ERP level, the resulting plan might not be optimal for the specific state of the shop floor when it is executed (e.g. after an unexpected delay or a machine failure; Guizzi et al. 2017).

RAMI 4.0, like all reference architectures based on the Industry 4.0 reference model, is structured to include the hierarchical organisational system as a particular case (e.g. the ERP level described by ANSI/ISA95 may be represented by a logical asset located at the enterprise level of the RAMI 4.0 base plane, with high-level functionality), while being open to all possible system configurations. Hence, the intention is to overcome the rigid structure of previous MPC systems when possible, proposing a general framework in which all managerial structures may be accepted. Duffie and Prabhu (1996) propose the first complete taxonomy of the management architectures that an MPC system may have, identifying four possible alternatives (Fig. 10.9): hierarchical, oligarchical, semi-heterarchical, and heterarchical.

Fig. 10.9 Spectrum of distributed manufacturing control systems. (From Duffie and Prabhu 1996)

All these alternatives are allowed in RAMI 4.0 and, by analysing the scientific literature, it is possible to find studies that, even prior to RAMI 4.0, propose MPC system transitions from a centralised hierarchical approach (i.e. MRP-based) to a decentralised and heterarchical one (Scholz-Reiter 2004).

However, due to the greater complexity and dynamism imposed by continuously increasing product customisation requirements, shorter product life cycles, and reduced delivery times, the transition from a purely hierarchical structure to a more heterarchical one is not a trivial or quick task (Bozarth et al. 2009). The underlying problem of such a transition is that a considerable amount of decision-making has to be transferred to entities located at the bottom levels of the system. When such an increased level of decision-making autonomy is given to low-level entities (i.e. the assets), productivity can be increased only up to a certain threshold, after which it is reasonable to assume that the individual entities will tend to pursue local goals and lose sight of the system’s objectives (Philipp et al. 2006). This happens because the behaviour of a completely heterarchical system strongly depends on local decisions and, therefore, on the logic that each system component applies (Jeken et al. 2012). In a completely decentralised system, decisions are made with only partial information regarding the system, since giving each entity global knowledge is unrealistic: it would replicate the entire system’s complexity on every component. For the above-stated reasons, more in-depth scientific studies are necessary in this regard, mainly because most of the proposed Industry 4.0 architectures consider purely decentralised (i.e. heterarchical) management architectures.
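The gap between local goals and system objectives can be illustrated with a textbook case that is not part of the studies cited above: a two-machine flow shop. In the sketch below, the first machine sequences jobs by its own shortest processing time (a purely local rule), whereas a coordinator with global knowledge applies Johnson's rule, which is known to minimise the makespan of a two-machine flow shop. The job data and function names are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Sequence, Tuple

# Processing times (machine 1, machine 2) for three hypothetical jobs.
JOBS: Dict[str, Tuple[int, int]] = {"A": (2, 1), "B": (3, 6), "C": (4, 5)}


def makespan(sequence: Sequence[str], jobs: Dict[str, Tuple[int, int]]) -> int:
    """Completion time of the last job on machine 2 for a given sequence."""
    end_m1 = 0
    end_m2 = 0
    for name in sequence:
        t1, t2 = jobs[name]
        end_m1 += t1                          # machine 1 never waits
        end_m2 = max(end_m1, end_m2) + t2     # machine 2 waits for the job or itself
    return end_m2


def local_spt_sequence(jobs: Dict[str, Tuple[int, int]]) -> List[str]:
    """Local decision: machine 1 only looks at its own processing times."""
    return sorted(jobs, key=lambda name: jobs[name][0])


def johnson_sequence(jobs: Dict[str, Tuple[int, int]]) -> List[str]:
    """Global decision: Johnson's rule uses the times on both machines."""
    first = sorted((n for n in jobs if jobs[n][0] < jobs[n][1]),
                   key=lambda n: jobs[n][0])
    last = sorted((n for n in jobs if jobs[n][0] >= jobs[n][1]),
                  key=lambda n: jobs[n][1], reverse=True)
    return first + last


def best_makespan(jobs: Dict[str, Tuple[int, int]]) -> int:
    """Brute-force optimum, feasible only for very small instances."""
    return min(makespan(p, jobs) for p in permutations(jobs))


if __name__ == "__main__":
    print("local rule :", local_spt_sequence(JOBS), makespan(local_spt_sequence(JOBS), JOBS))
    print("global rule:", johnson_sequence(JOBS), makespan(johnson_sequence(JOBS), JOBS))
```

For these three jobs, the local rule yields the sequence A, B, C with a makespan of 16, while the globally informed sequence B, C, A finishes at 15, which is also the brute-force optimum. The example is tiny, but it shows how an entity that optimises with partial information can degrade a system-level objective.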

In conclusion, even in an Industry 4.0 empowered environment, it is still necessary to gain a clear understanding of the dynamics involved in the complex interactions taking place in a manufacturing system. It is also advisable to learn as much as possible from the expertise and advances achieved by academics and practitioners regarding manufacturing-related reference architectures. Therefore, considering RAMI 4.0 as a commonly accepted, established reference architecture for Industry 4.0, future research effort could be focused on formulating architectures that go beyond the limits and problems associated with a strict hierarchical scheme while attempting to avoid the complete delegation of autonomy to entities within the plant.

Future studies should therefore also consider the possibility of developing new MPC configurations based on intermediate approaches, such as oligarchical or semi-heterarchical ones (Grassi et al. 2020). These approaches can better combine the predictive and managerial advantages of a centralised structure with the reactivity of a fully decentralised one, given their intrinsic capability to allow the functional and physical hybridisation needed in the design of an Industry 4.0-oriented MPC system.

References

Ashton, K. (2009). That ‘internet of things’ thing. RFID Journal, 22, 97–114.

Bandyopadhyay, D., & Sen, J. (2011). Internet of things: Applications and challenges in technology and standardization. Wireless Personal Communications, 58(1), 49–69.

Bassi, A., Bauer, M., Fiedler, M., Kramp, T., van Kranenburg, R., Lange, S., & Meissner, S. (Eds.). (2013). Enabling things to talk. Berlin, Heidelberg: Springer Berlin Heidelberg.

Bo-Hu, L., Lin, Z., Shi-Long, W., Fei, T., Jun-Wei, C., Xiao-Dan, J., Xiao, S., & Xu-Dong, C. (2010). Cloud manufacturing: A new service-oriented networked manufacturing model. Computer Integrated Manufacturing Systems, 16, 1–7.

Bozarth, C. C., Warsing, D. P., Flynn, B. B., & Flynn, E. J. (2009). The impact of supply chain complexity on manufacturing plant performance. Journal of Operations Management, 27, 78–93. https://doi.org/10.1016/j.jom.2008.07.003.

CEN-CENELEC-ETSI. (2014). Smart Grid Coordination Group—Smart Grid Information Security, December 2014.

Corsaro, A., & Schmidt, D. C. (2012). The data distribution service the communication middleware fabric for scalable and extensible systems-of-systems, Chapter 2. In A. V. Gheorghe (Ed.), System of systems. Rijeka: IntechOpen.

DIN SPEC 91345:2016-04. (2016). Reference Architecture Model Industrie 4.0 (RAMI4.0).

Dolgui, A., Ivanov, D., Potryasaev, S., Sokolov, B., Ivanova, M., & Werner, F. (2020). Blockchain-oriented dynamic modelling of smart contract design and execution in the supply chain. International Journal of Production Research, 58(7), 2184–2199.

Dolgui, A., Ivanov, D., Sethi, S. P., & Sokolov, B. (2019b). Scheduling in production, supply chain and Industry 4.0 systems by optimal control. International Journal of Production Research, 57(2), 411–432.

Duffie, N., & Prabhu, V. V. (1996). Heterarchical control of highly distributed manufacturing systems. International Journal of Computer Integrated Manufacturing, 9, 270–281. https://doi.org/10.1080/095119296131562.

Grassi, A., Guizzi, G., Santillo, L. C., & Vespoli, S. (2020). A semi-heterarchical production control architecture for industry 4.0-based manufacturing systems. Manufacturing Letters, 24, 43–46. https://doi.org/10.1016/j.mfglet.2020.03.007.

Guizzi, G., Vespoli, S., & Santini, S. (2017). On the architecture scheduling problem of Industry 4.0. In CEUR Workshop Proceedings.

Guizzi, G., Falcone, D., & De Felice, F. (2019). An integrated and parametric simulation model to improve production and maintenance processes: Towards a digital factory performance. Computers and Industrial Engineering, 137, 106052.

Habib, M., & Chimsom, C. (2019). Industry 4.0: Sustainability and design principles. In Proceedings of the 2019 20th International Conference on Research and Education in Mechatronics, REM 2019.

Hopp, W. J., & Spearman, M. L. (2011). Factory physics (3rd ed.). Long Grove, IL: Waveland Press.

IBM. (2018). IBM Industrie 4.0 Architecture—SmartFactoryXL.

Ivanov, D., Sokolov, B., & Dilou Raguinia, E. A. (2014). Integrated dynamic scheduling of material flows and distributed information services in collaborative cyber-physical supply networks. International Journal of Systems Science: Operations & Logistics, 1(1), 18–26.

Ivanov, D., Sokolov, B., Dolgui, A., Werner, F., & Ivanova, M. (2016). A dynamic model and an algorithm for short-term supply chain scheduling in the smart factory Industry 4.0. International Journal of Production Research, 54(2), 386–402.

Ivanov, D., Das, A., & Choi, T.-M. (2018a). New flexibility drivers for manufacturing, supply chain and service operations. International Journal of Production Research, 56(10), 3359–3368.

Ivanov, D., Sethi, S., Dolgui, A., & Sokolov, B. (2018b). A survey on the control theory applications to operational systems, supply chain management and Industry 4.0. Annual Reviews in Control, 46, 134–147.

Ivanov, D., & Dolgui, A. (In press). Viability of intertwined supply networks: Extending the supply chain resilience angles towards survivability. A position paper motivated by COVID-19 outbreak. International Journal of Production Research.

Jeken, O., Duffie, N., Windt, K., Blunck, H., Chehade, A., & Rekersbrink, H. (2012). Dynamics of autonomously acting products and work systems in production and assembly. CIRP Journal of Manufacturing Science and Technology, 5, 267–275. https://doi.org/10.1016/j.cirpj.2012.09.012.

Jin, W., Liu, Z., Shi, Z., Jin, C., & Lee, J. (2017). CPS-enabled worry-free industrial applications. In 2017 Prognostics and System Health Management Conference, PHM—Harbin 2017—Proceedings.

Knollmann, M., & Windt, K. (2013). Control-theoretic analysis of the lead time syndrome and its impact on the logistic target achievement. Procedia CIRP, 7, 97–102. https://doi.org/10.1016/j.procir.2013.05.017.

Lee, J., Bagheri, B., & Kao, H.-A. (2015). A cyber-physical systems architecture for industry 4.0-based manufacturing systems. Manufacturing Letters, 3, 18–23.

Lee, J., Azamfar, M., & Singh, J. (2019). A blockchain enabled cyber-physical system architecture for industry 4.0 manufacturing systems. Manufacturing Letters, 20, 34–39.

Liu, Y., & Xu, X. (2017). Industry 4.0 and cloud manufacturing: A comparative analysis. Journal of Manufacturing Science and Engineering, 139(3), 034701. https://doi.org/10.1115/1.4034667.

Lu, Y., Morris, K., & Frechette, S. (2016). Current standards landscape for smart manufacturing systems. https://doi.org/10.6028/NIST.IR.8107.

Minerva, R., Biru, A., & Rotondi, D. (2015). Towards a definition of the internet of things (IoT). IEEE Internet Initiative.

Moghaddam, M., Cadavid, M. N., Kenley, C. R., & Deshmukh, A. V. (2018). Reference architectures for smart manufacturing: A critical review. Journal of Manufacturing Systems, 49, 215–225. https://doi.org/10.1016/j.jmsy.2018.10.006.

MTConnect Institute. (2018). MTConnect Standard, Version 1.4.0 (ANSI/MTC1.4-2018).

Ohno, T. (1988). Toyota production system: Beyond large-scale production (1st ed.). Boca Raton, FL: CRC Press.

OPC Foundation. (2019). OPC 30070-1 OPC UA Companion Specification release 2.00.00.

Orlicky, J. (1975). Material requirements planning: The new way of life in production and inventory management. New York: McGraw-Hill.

Panetto, H., Iung, B., Ivanov, D., Weichhart, G., & Wang, X. (2019). Challenges for the cyber-physical manufacturing enterprises of the future. Annual Reviews in Control, 47, 200–213.

Pfrommer, J., Gruner, S., & Palm, F. (2016). Hybrid OPC UA and DDS: Combining architectural styles for the industrial internet. In IEEE International Workshop on Factory Communication Systems—Proceedings, WFCS, June (Vol. 2016).

Philipp, T., Böse, F., & Windt, K. (2006). Evaluation of autonomously controlled logistic processes. In Proceedings of the Fifth CIRP International Seminar on Intelligent Computation in Manufacturing Engineering (pp. 347–352).

Pisching, M. A., Pessoa, M. A. O., Junqueira, F., dos Santos Filho, D. J., & Miyagi, P. E. (2018). An architecture based on RAMI 4.0 to discover equipment to process operations required by products. Computers and Industrial Engineering, 125, 574–591. https://doi.org/10.1016/j.cie.2017.12.029.

Riedl, M., Zipper, H., Meier, M., & Diedrich, C. (2014). Cyber-physical systems alter automation architectures. Annual Reviews in Control, 38, 123–133. https://doi.org/10.1016/j.arcontrol.2014.03.012.

Scholz-Reiter, B. (2004). Autonomous logistic processes: New demands and first approaches. In Proceedings of the 37th CIRP International Seminar on Manufacturing Systems (pp. 357–362).

Sisinni, E., Saifullah, A., Han, S., Jennehag, U., & Gidlund, M. (2018). Industrial internet of things: Challenges, opportunities, and directions. IEEE Transactions on Industrial Informatics, 14(11), 4724–4734.

Vespoli, S., Grassi, A., Guizzi, G., & Santillo, L. C. (2019). Evaluating the advantages of a novel decentralised scheduling approach in the industry 4.0 and cloud manufacturing era. IFAC-PapersOnLine, 52(13), 2170–2176. https://doi.org/10.1016/j.ifacol.2019.11.527.

Xu, L. D., He, W., & Li, S. (2014). Internet of things in industries: A survey. IEEE Transactions on Industrial Informatics, 10, 2233–2243. https://doi.org/10.1109/TII.2014.2300753.

Xu, L., Xu, E., & Li, L. (2018). Industry 4.0: State of the art and future trends. International Journal of Production Research, 56(8), 2941–2962.

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Grassi, A., Guizzi, G., Santillo, L.C., Vespoli, S. (2020). The Manufacturing Planning and Control System: A Journey Towards the New Perspectives in Industry 4.0 Architectures. In: Sokolov, B., Ivanov, D., Dolgui, A. (eds) Scheduling in Industry 4.0 and Cloud Manufacturing. International Series in Operations Research & Management Science, vol 289. Springer, Cham. https://doi.org/10.1007/978-3-030-43177-8_10

DOI: https://doi.org/10.1007/978-3-030-43177-8_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-43176-1

Online ISBN: 978-3-030-43177-8

eBook Packages: Business and Management (R0)