Abstract

In approximation theory, it is standard to approximate functions by polynomials expressed in the Chebyshev basis. Evaluating a polynomial f of degree n given in the Chebyshev basis can be done in O(n) arithmetic operations using the Clenshaw algorithm. Unfortunately, the evaluation of f on an interval I using the Clenshaw algorithm with interval arithmetic returns an interval of width exponential in n. We describe a variant of the Clenshaw algorithm based on ball arithmetic that returns an interval of width quadratic in n for an interval of small enough width. As an application, our variant of the Clenshaw algorithm can be used to design an efficient root finding algorithm .

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Clenshaw showed in 1955 that any polynomial given in the form

can be evaluated on a value x with a single loop using the following functions defined by recurrence:

such that \(p(x) = u_0(x)\).

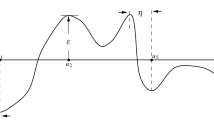

Unfortunately, if we use Eq. (2) with interval arithmetic directly, the result can be an interval of size exponentially larger than the input, as illustrated in Example 1.

Example 1

Let \(\varepsilon > 0\) be a positive real number, and let x be the interval \([\frac{1}{2} - \varepsilon , \frac{1}{2} + \varepsilon ]\) of width \(2\varepsilon \). Assuming that \(a_n = 1\), we can see that \(u_{n-1}\) is an interval of width \(4\varepsilon \). Then by recurrence, we observe that \(u_{n-k}\) is an interval of width at least \(4\varepsilon F_k\) where \({(F_n)}_{n\in \mathbb N}\) denotes the Fibonacci sequence, even if all \(a_i=0\) for \(i<n\).

Note that the constant below the exponent is even higher when x is closer to 1. These numerical instabilities also appear with floating point arithmetic near 1 and \(-1\) as analyzed in [4].

To work around the numerical instabilities near 1 and \(-1\), Reinsch suggested a variant of the Clenshaw algorithm [4, 7]. Let \(d_n(x) = a_n\) and \(u_n(x) = a_n\), and for k between 0 and \(n-1\), define \(d_k(x)\) and \(u_k(x)\) by recurrence as follows:

Computing p(x) with this recurrence is numerically more stable near 1. However, this algorithm does not solve the problem of exponential growth illustrated in Example 1.

Our first main result is a generalization of Eq. 3 for any value in the interval \([-1, 1]\). This leads to Algorithm 1 that returns intervals with tighter radii, as analyzed in Lemma 2. Our second main result is the use of classical backward error analysis to derive Algorithm 2 which gives an even better radii. Then in Sect. 3 we use the new evaluation algorithm to design a root solver for Chebyshev series, detailed in Algorithm 3.

2 Evaluation of Chebyshev Polynomials on Intervals

2.1 Forward Error Analysis

In this section we assume that we want to evaluate a Chebyshev polynomial on the interval I. Let a be the center of I and r be its radius. Furthermore, let \(\gamma \) and \(\overline{\gamma }\) be the 2 conjugate complex roots of the equation:

In particular, using Vieta’s formulas that relate the coefficients to the roots of a polynomial, \(\gamma \) satisfies \(\gamma + \overline{\gamma }= 2a\) and \(\gamma \overline{\gamma }= 1\).

Let \(z_n(x) = a_n\) and \(u_n(x) = a_n\), and for k between 0 and \(n-1\), define \(z_k(x)\) and \(u_k(x)\) by recurrence as follows:

Using Eq. (4), we can check that the \(u_k\) satisfies the recurrence relation \(u_k(x) = 2xu_{k+1}(x) - u_{k+2}(x) + a_k\), such that \(p(x) = xu_1(x) - u_2(x) + a_0\).

Let \((e_k)\) and \((f_k)\) be two sequences of positive real numbers. Let \(\mathcal B_\mathbb R(a,r)\) and \(\mathcal B_\mathbb R(u_k(a), e_k)\) represent the intervals \([a-r, a+r]\) and \([u_k(a)-e_k, u_k(a)+e_k]\). Let \(\mathcal B_\mathbb C(z_k(a), f_k)\) be the complex ball of center \(z_k(a)\) and radius \(f_k\).

Our goal is to compute recurrence formulas on the \(e_k\) and the \(f_k\) such that:

Lemma 1

Let \(e_n=0\) and \(f_n=0\) and for \(n > k \ge 1\):

Then, \((e_k)\) and \((f_k)\) satisfy Eq. (6).

Proof (sketch)

For the inclusion \(z_k(\mathcal B_\mathbb R(a,r)) \subset \mathcal B_\mathbb C(z_k(a), f_k)\), note that \(\gamma \) has modulus 1, such that the radius of \(\gamma z_{k+1}\) is the same as the radius of \(z_{k+1}\) when using ball arithmetics. The remaining terms bounding the radius of \(z_k\) follow from the standard rules of interval arithmetics.

For the inclusion \(u_k(\mathcal B_\mathbb R(a,r)) \subset \mathcal B_\mathbb C(u_k(a), e_k)\), note that the error segment on \(u_k\) is included in the Minkowski sum of a disk of radius \(f_k\) and a segment of radius \(e_{k+1}\), denoted by M. If \(\theta \) is the angle of the segment with the horizontal, we have \(\cos \theta = a\). We conclude that the intersection of M with a horizontal line is a segment of radius at most \(\min (e_{k+1} + f_k, \frac{f_k}{\sqrt{1-a^2}})\).

Corollary 1

Let \(\mathcal B_\mathbb R(u,e)=\mathtt {BallClenshawForward}((a_0, \ldots , a_n), a, r)\) be the result of Algorithm 1, then

Moreover, the following lemma bounds the radius of the ball returned by Algorithm 1.

Lemma 2

Let \(\mathcal B_\mathbb R(u,e)=\mathtt {BallClenshawForward}((a_0, \ldots , a_n), a, r)\) be the result of Algorithm 1, and let M be an upper bound on \(|u_k(a)|\) for \(1 \le k \le n\). Assume that \(\varepsilon _k < Mr\) for \(1 \le k \le n\), then

Proof (sketch)

We distinguish 2 cases. First if \(n < \frac{1}{2\sqrt{1-a^2}}\), we focus on the relation \(e_k \le e_{k+1} + f_k + Mr\), and we prove by descending recurrence that \(e_k \le 2M(n-k)^2r\) and \(f_k \le 2Mr(2(n-k-1)+1)\).

For the case \(\frac{1}{2\sqrt{1-a^2}} \le n\), we use the relation \(e_k \le \frac{f_k}{\sqrt{1-a^2}} + Mr\), that we substitute in the recurrence relation defining \(f_k\) to get \(f_k \le 2rM + \frac{2r}{\sqrt{1-a^2}} f_{k+1} + f_{k+1} + Mr\sqrt{1-a^2}\). We can check by recurrence that \(f_k \le \frac{3}{2}M\sqrt{1-a^2}\left[ (1+\frac{2r}{\sqrt{1-a^2}})^n-1\right] \), which allows us to conclude for the case \(\frac{\sqrt{1-a^2}}{2r} \le n\). Finally, when \(\frac{1}{2\sqrt{1-a^2}} \le n < \frac{\sqrt{1-a^2}}{2r}\), we observe that \((1+\frac{2r}{\sqrt{1-a^2}})^n-1 \le n\exp (1)\frac{2r}{\sqrt{1-a^2}}\) which leads to the bound for the last case.

2.2 Backward Error Analysis

In the literature, we can find an error analysis of the Clenshaw algorithm [3]. The main idea is to add the errors appearing at each step of the Clenshaw algorithm to the input coefficients. Thus the approximate result correspond to the exact result of an approximate input. Finally, the error bound is obtained as the evaluation of a Chebyshev polynomial. This error analysis can be used directly to derive an algorithm to evaluate a polynomial in the Chebyshev basis on an interval in Algorithm 2.

Lemma 3

Let \(e_n=0\) and for \(n > k \ge 1\):

and \(e_0 = r|u_1(a)| + e_1\). Then \((e_k)\) satisfies \(u_k(\mathcal B_\mathbb R(a,r)) \subset \mathcal B_\mathbb R(u_k(a), e_k)\).

Proof (sketch)

In the case where the computations are performed without errors, D. Elliott [3, Equation (4.9)] showed that for \(\gamma = \tilde{x} - x\) we have:

In the case where \(\tilde{x} = a\) and \(x \in \mathcal B_{\mathbb R}(a,r)\) we have \(\gamma \le r\) and \(|T(x)| \le 1\) which implies \(e_k \le r|u_1(a)| + \sum _{i=2}^n 2r|u_i(a)|\).

Corollary 2

Let \(\mathcal B_\mathbb R(u,e)=\mathtt {BallClenshawBackward}((a_0, \ldots , a_n), a, r)\) be the result of Algorithm 2, and let M be an upper bound on \(|u_k(a)|\) for \(1 \le k \le n\). Assume that \(\varepsilon _k < Mr\) for \(1 \le k \le n\), then \(e < 3Mnr\).

3 Application to Root Finding

For classical polynomials, numerous solvers exist in the literature, such as those described in [5] for example. For polynomials in the Chebyshev basis, several approaches exist that reduce the problem to polynomial complex root finding [1], or complex eigenvalue computations [2] among other.

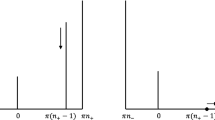

In this section, we experiment a direct subdivision algorithm based on interval evaluation, detailed in Algorithm 3. This algorithm is implemented and publicly available in the software clenshaw [6].

We applied this approach to Chebyshev polynomials whose coefficients are independently and identically distributed with the normal distribution with mean 0 and variance 1.

As illustrated in Fig. 1 our code performs significantly better than the classical companion matrix approach. In particular, we could solve polynomials of degree 90000 in the Chebyshev basis in less than 5 s and polynomials of degree 5000 in 0.043 s on a quad-core Intel(R) i7-8650U cpu at 1.9 GHz. For comparison, the standard numpy function chebroots took more than 65 s for polynomials of degree 5000. Moreover, using least square fitting on the ten last values, we observe that our approach has an experimental complexity closer to \(\varTheta (n^{1.67})\), whereas the companion matrix approach has a complexity closer to \(\varTheta (n^{2.39})\).

References

Boyd, J.: Computing zeros on a real interval through chebyshev expansion and polynomial rootfinding. SIAM J. Numer. Anal. 40(5), 1666–1682 (2002). https://doi.org/10.1137/S0036142901398325

Boyd, J.: Finding the zeros of a univariate equation: proxy rootfinders, chebyshev interpolation, and the companion matrix. SIAM Rev. 55(2), 375–396 (2013). https://doi.org/10.1137/110838297

Elliott, D.: Error analysis of an algorithm for summing certain finite series. J. Aust. Math. Soc. 8(2), 213–221 (1968). https://doi.org/10.1017/S1446788700005267

Gentleman, W.M.: An error analysis of Goertzel’s (Watt’s) method for computing Fourier coefficients. Comput. J. 12(2), 160–164 (1969). https://doi.org/10.1093/comjnl/12.2.160

Kobel, A., Rouillier, F., Sagraloff, M.: Computing real roots of real polynomials ... and now for real! In: Proceedings of the ACM on International Symposium on Symbolic and Algebraic Computation, ISSAC 2016, pp. 303–310. ACM, New York (2016). https://doi.org/10.1145/2930889.2930937

Moroz, G.: Clenshaw 0.1, December 2019. https://doi.org/10.5281/zenodo.3571248, https://gitlab.inria.fr/gmoro/clenshaw

Oliver, J.: An error analysis of the modified Clenshaw method for evaluating Chebyshev and Fourier series. IMA J. Appl. Mathe. 20(3), 379–391 (1977). https://doi.org/10.1093/imamat/20.3.379

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Ledoux, V., Moroz, G. (2020). Evaluation of Chebyshev Polynomials on Intervals and Application to Root Finding. In: Slamanig, D., Tsigaridas, E., Zafeirakopoulos, Z. (eds) Mathematical Aspects of Computer and Information Sciences. MACIS 2019. Lecture Notes in Computer Science(), vol 11989. Springer, Cham. https://doi.org/10.1007/978-3-030-43120-4_4

Download citation

DOI: https://doi.org/10.1007/978-3-030-43120-4_4

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-43119-8

Online ISBN: 978-3-030-43120-4

eBook Packages: Computer ScienceComputer Science (R0)