Abstract

One of the key challenges in ab initio nuclear theory is to understand the emergence of nuclear structure from quantum chromodynamics. I will address this challenge and focus on the statistical aspects of uncertainty quantification and parameter estimation in chiral effective field theory.


It is well known that quantum chromodynamics (QCD) is non-perturbative in the low-energy region where atomic nuclei exist. This feature prevents direct application of perturbation theory. To make progress, two complementary approaches are presently employed: lattice QCD (LQCD) [1] and chiral effective field theory (\(\chi \)EFT) [2]. The former amounts to numerical evaluation of the QCD path integral on a space-time lattice, while the latter exploits the decoupling principles of the renormalization group (RG) to systematically formulate a potential description of the nuclear interaction rooted in QCD. LQCD is computationally expensive: it requires at least exascale resources for a realistic analysis of multi-nucleon systems and will most likely not be the most economical choice for analyzing nuclear systems. Nevertheless, in cases where numerically converged results can be obtained, LQCD offers a unique computational laboratory for theoretical studies of QCD in a low-energy setting [3].

The derivation of a nuclear potential in \(\chi \)EFT proceeds via the construction of an effective Lagrangian consisting of pions, nucleons, and sometimes also the \(\varDelta \) isobar, endowed with all possible interactions compatible with the symmetries of low-energy QCD. The details can be found in extensive reviews [4,5,6]. All short-distance physics, normally associated with quarks and gluons, resides beyond a hard momentum scale \(\varLambda _b \sim 1\) GeV and remains unresolved in \(\chi \)EFT. Such high-momentum dynamics is instead encoded in a set of low-energy constants (LECs) that must be determined from experimental data, or in a future scenario hopefully computed directly from LQCD. \(\chi \)EFT is the theoretical framework for calculating observables in an expansion in powers of the small ratio \(Q/\varLambda _b\), where Q is a soft momentum scale \(\sim m_{\pi }\). If done right, this approach allows for a systematically improvable description of low-energy nuclear properties in harmony with the symmetries of low-energy QCD.

The promise of being systematically improvable is a unique selling point of \(\chi \)EFT, or any EFT for that matter. The order-by-order expansion contains an infinite number of terms and must be truncated; the omitted terms represent neglected physics and contribute to the systematic uncertainty. The upshot is that higher-order corrections should be less important and follow a pattern determined by the EFT expansion ratio. The organization of this expansion, such that increasingly unimportant physics appears at consecutively higher orders, is called power counting (PC).

The leading order (LO) in this expansion consists of the well-known one-pion exchange potential (Yukawa term) accompanied by a contact potential to describe any unresolved short-ranged physics at this order. The potentials at higher orders, i.e. next-to-leading order (NLO) etc., systematically introduce multiple-pion exchanges, accompanied by additional zero-ranged contact potentials, possibly \(\varDelta \) perturbations, and irreducible many-nucleon interactions, see Fig. 90.1.

Achieving an accurate theoretical description of the nuclear interaction, with quantified statistical and systematic uncertainties of the theoretical predictions, can be referred to as reaching a state of precision nuclear physics. There are several interesting facets of this ongoing endeavor:

- On a fundamental physics level, it is well known that the nuclear potentials from \(\chi \)EFT that are based on Weinberg power counting (WPC) do not generate observables that respect RG invariance, see e.g. [7] and references therein. At the same time, there is an ongoing debate regarding the need for, and validity of, probing large momenta in a potential description from an EFT that is only valid at low energies to begin with, see e.g. [8,9,10] for a selection of viewpoints. Presently, most ab initio calculations of atomic nuclei, including the calculations presented here, employ potentials based on WPC. There exist potentials with alternative PCs that fulfill the fundamental tests of RG invariance for observables in two- and three-nucleon systems, see e.g. [11]. Unfortunately, such potentials have not yet been employed in nuclear many-body calculations.

- The numerical values of the LECs in \(\chi \)EFT must be determined from data before any quantitative analysis can proceed. From a frequentist perspective, parameter estimation often amounts to maximizing a likelihood. For \(\chi \)EFT, this turns into a non-linear optimization problem over a high-dimensional parameter domain [12,13,14]. Bayesian parameter estimation is increasingly explored in ab initio nuclear theory [15, 16]. This approach captures the entire probability distribution of the relevant parameters, not just their values at the mode of the distribution. However, the computational demands are substantially higher than for most frequentist methods, mainly because the model must be sampled repeatedly across the parameter domain.

- There are several sources of uncertainty in model calibration. For instance, the calibration data themselves come with uncertainties. Thus, any parameter estimation process will contain covariances that must be quantified and propagated. There exist well-known methods, frequentist as well as Bayesian, for quantifying the statistical uncertainties at any level of the calculation, see e.g. [17, 18]. However, it remains a challenge to achieve full uncertainty quantification in complex models that require substantial high-performance resources for a single evaluation at one point in the parameter domain. Well-designed surrogate models can hopefully provide some leverage, see e.g. [19,20,21].

- A theoretical model will never represent nature fully. Consequently, there are theory errors (sometimes referred to as systematic uncertainties or model discrepancies). The statistical uncertainties stemming from the calibration data discussed above are typically not the main source of error in \(\chi \)EFT predictions [22, 23]. It is therefore of key importance to identify and quantify the sources of systematic errors in \(\chi \)EFT. At the moment, such analyses are rarely performed in ab initio nuclear theory. \(\chi \)EFT models combined with ab initio methods are often computationally complex and require substantial computational resources. As such, Markov chain Monte Carlo sampling with long mixing times can be prohibitively expensive. Furthermore, it is not clear how to identify and exploit the relevant momentum scales in descriptions of atomic nuclei.
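The Bayesian parameter estimation and Markov chain Monte Carlo sampling mentioned in the list above can be illustrated with a minimal random-walk Metropolis sketch. It is not a \(\chi \)EFT calculation: the one-parameter toy model, the flat prior, and the names `log_posterior` and `metropolis` are assumptions made purely for illustration. The point it shows is that every step of the chain needs one model evaluation, which is what becomes expensive when a single evaluation is an ab initio many-body calculation.

```python
import math
import random


def log_posterior(alpha, data, sigma):
    """Log-posterior of a toy model that predicts `alpha` for every datum.

    Assumes a flat prior and independent Gaussian errors of width `sigma`.
    """
    return -0.5 * sum(((alpha - d) / sigma) ** 2 for d in data)


def metropolis(logp, start, step, n_samples, seed=0):
    """Random-walk Metropolis sampler for a one-dimensional target."""
    rng = random.Random(seed)
    chain = [start]
    current_lp = logp(start)
    for _ in range(n_samples - 1):
        proposal = chain[-1] + rng.gauss(0.0, step)
        lp = logp(proposal)  # one model evaluation per step
        # Accept with probability min(1, exp(lp - current_lp)).
        if lp >= current_lp or rng.random() < math.exp(lp - current_lp):
            chain.append(proposal)
            current_lp = lp
        else:
            chain.append(chain[-1])
    return chain


# Hypothetical calibration data for the toy model.
data = [1.0, 1.2, 0.8, 1.1, 0.9]
chain = metropolis(lambda a: log_posterior(a, data, 0.2),
                   start=0.0, step=0.2, n_samples=20000, seed=1)
kept = chain[2000:]  # discard burn-in
mean = sum(kept) / len(kept)
spread = math.sqrt(sum((x - mean) ** 2 for x in kept) / len(kept))
print(f"posterior mean ~ {mean:.3f}, posterior width ~ {spread:.3f}")
```

For this toy problem the posterior is Gaussian with mean 1.0 and width \(0.2/\sqrt{5}\), so the sampled values can be checked against the analytic result. Realistic applications would replace the toy likelihood by the expensive model or by a surrogate for it.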

1 Ab initio Nuclear Theory with \(\chi \)EFT

Ab initio methods, such as the no-core shell model (NCSM) [24], the coupled-cluster method (CC) [25], the in-medium similarity renormalization group (IM-SRG) [26], or lattice EFT [27], for solving the many-nucleon Schrödinger equation

\[ H(\mathbf {\alpha })\,|\varPsi \rangle = E\,|\varPsi \rangle \]

with two-nucleon (NN) and three-nucleon (NNN) potentials derived from \(\chi \)EFT with a set of LECs \(\mathbf {\alpha }\), make use of controlled mathematical approximations. Such many-body approaches can provide numerically exact nuclear wave functions for several bound, resonant, and scattering states in isotopes well into the region of medium-mass nuclei [28,29,30,31]. This development has drastically changed the agenda for developing nuclear interactions for atomic nuclei.

In the beginning of the previous decade, a lot of effort was spent on constructing so-called high-precision nuclear interactions, most prominently Idaho-N3LO [32], AV18 [33], and CD-Bonn [34], that could reproduce the collected data on NN scattering below the pion-production threshold with nearly surgical precision. We now know that such interactions often fail to reproduce important bulk properties of atomic nuclei [31, 35,36,37]. However, fifteen years ago, it was unclear how to gauge the quality of the many-nucleon wave functions since they relied on a series of involved approximations. Although it is still a challenge to quantify the theoretical uncertainty in many-body calculations, modern ab initio methods are tremendously refined. Indeed, their fidelity and domain of applicability have been dramatically extended during the past decade. This development has led to an increased focus on designing improved microscopic nuclear potentials that are based on novel fitting protocols. To ensure steady progress, we need to critically examine and systematically compare the quality of different sets of interaction models and their predictive power.

1.1 Optimization of LECs and Uncertainty Quantification of Predictions from \(\chi \)EFT

The canonical approach to estimating the numerical values of the LECs \(\mathbf {\alpha }\) in \(\chi \)EFT is to minimize some weighted sum of squared residuals

\[ \chi ^2(\mathbf {\alpha }) = \sum _{i \in \mathcal {D}} \left( \frac{\mathcal {O}_i^\mathrm{theo}(\mathbf {\alpha }) - \mathcal {O}_i^\mathrm{expt}}{\sigma _i} \right) ^2, \]

where \(\mathcal {D}\) represents the calibration dataset, and \(\mathcal {O}_{i}\) denotes experimental and theoretical values for observable i in \(\mathcal {D}\) with an obvious notation. The theoretical description of each observable depends explicitly on the LECs \(\mathbf {\alpha }\). In the limit of independent data, the uncertainty associated with each observable is represented by \(\sigma _i\). Known, or estimated, correlations across the data can also be incorporated [38, 39]. Using well-known methods from statistical regression analysis, often assuming normally distributed residuals, it is possible to extract the covariance matrix of the parameters that minimize the objective.
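As a minimal sketch of the minimization and covariance extraction just described, consider a toy model with two parameters, a straight line \(a + b x\), fitted to independent data with uncertainties \(\sigma _i\). The model, the data, and the function names `chi2` and `fit_line` are illustrative assumptions; a \(\chi \)EFT objective is non-linear in the LECs and needs a numerical optimizer instead of the closed-form solution used here. For a linear model the parameter covariance is the inverse of the weighted normal matrix.

```python
def chi2(params, x, y, sigma):
    """Weighted sum of squared residuals for the toy model a + b*x."""
    a, b = params
    return sum(((a + b * xi - yi) / si) ** 2
               for xi, yi, si in zip(x, y, sigma))


def fit_line(x, y, sigma):
    """Minimize chi2 in closed form and return (a, b) with their covariance."""
    w = [1.0 / s ** 2 for s in sigma]
    s0 = sum(w)
    sx = sum(wi * xi for wi, xi in zip(w, x))
    sy = sum(wi * yi for wi, yi in zip(w, y))
    sxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    sxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
    det = s0 * sxx - sx ** 2
    a = (sxx * sy - sx * sxy) / det
    b = (s0 * sxy - sx * sy) / det
    # Inverse of the normal matrix [[s0, sx], [sx, sxx]].
    cov = [[sxx / det, -sx / det],
           [-sx / det, s0 / det]]
    return (a, b), cov


# Hypothetical calibration data with independent uncertainties.
x = [0.0, 1.0, 2.0, 3.0]
y = [1.1, 2.9, 5.2, 6.8]
sigma = [0.1, 0.1, 0.2, 0.2]
best, cov = fit_line(x, y, sigma)
print("best-fit parameters:", best)
print("chi2 at minimum:", chi2(best, x, y, sigma))
print("parameter covariance:", cov)
```

The off-diagonal element of `cov` is the correlation information that a fit quoting only individual parameter errors would discard.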

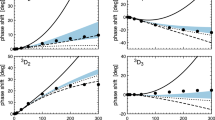

An order-by-order uncertainty analysis of chiral interactions up to NNLO was undertaken in [13]. The objective function in that work incorporated an estimate of the theory uncertainty from \(\chi \)EFT, and the dataset \(\mathcal {D}\) comprised \(\pi N\) and NN scattering data as well as bound-state observables in \(A=2,3\) nuclei. The total covariance matrix for the LECs was determined for each analyzed interaction model. Additional components of the systematic uncertainty were probed by varying the regulator cutoff \(\varLambda \in [450,600]\) MeV as well as the maximally allowed scattering energy in the employed database of measured scattering cross sections. This effort resulted in a family of 42 chiral interactions at NNLO. Together, they furnish a valuable tool for probing uncertainties in ab initio few-nucleon predictions, see [40, 41] for representative examples of their use.
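One common way to use a parameter covariance matrix such as the one discussed above is first-order (linear) error propagation to a predicted observable, \(\sigma _{\mathcal {O}}^2 \approx \mathbf {g}^T C\, \mathbf {g}\), where \(\mathbf {g}\) is the gradient of the observable with respect to the parameters. The sketch below is a generic illustration under that linear assumption and is not claimed to be the procedure of [13]; the function names and the toy observable are hypothetical.

```python
def gradient(f, p, h=1e-6):
    """Central finite-difference gradient of f at the parameter point p."""
    grad = []
    for k in range(len(p)):
        up = list(p)
        down = list(p)
        up[k] += h
        down[k] -= h
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad


def propagate_variance(f, p, cov):
    """First-order variance of f(p) given the parameter covariance cov."""
    g = gradient(f, p)
    n = len(p)
    return sum(g[i] * cov[i][j] * g[j] for i in range(n) for j in range(n))


# Toy observable depending on two parameters (illustrative only).
def observable(p):
    return 2.0 * p[0] + 3.0 * p[1]


cov = [[1.0, 0.5],
       [0.5, 2.0]]
variance = propagate_variance(observable, [0.3, -0.1], cov)
print("propagated variance:", variance)
```

When the observable depends strongly non-linearly on the parameters, sampling parameter sets from the covariance matrix and evaluating the observable for each sample is an alternative to this linearization.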

1.2 With an EFT, We Can Do Better

One way to estimate the effect of the first excluded term in an EFT expansion was suggested in [42]. Building on the work in [43], this was given a Bayesian interpretation in [18]. In brief, if we write the order-by-order expansion of some observable \(\mathcal {O}\) as

\[ \mathcal {O} = \mathcal {O}_0 \sum _{i=0}^{\infty } a_i q^i, \]

where \(\mathcal {O}_0\) is the overall scale, e.g. the leading-order contribution, and we know the expansion parameter, e.g. \(q = (Q/\varLambda _b)\), then we can compute the probability distribution of the expansion coefficient \(a_i\) provided that we know the values of the lower-order coefficients \(a_0,\ldots ,a_{i-1}\). The application of Bayes' theorem with identically distributed, independent, boundless, and uniform prior distributions of the expansion coefficients \(a_i\) leads to a simple expression for the estimate of \(a_i\), with \((100 \times i/(i+1))\%\) confidence, given by

\[ a_i = \max (|a_0|, \ldots , |a_{i-1}|). \]

Although the above expression only provides an estimate, theoretical predictions equipped with truncation errors provide important guidance and demonstrate one of the main advantages of using an EFT. Refined methods for quantifying EFT truncation errors in nuclear physics are of key importance.

2 Muon-Capture on the Deuteron

An excellent example of where theoretical uncertainty quantification plays an important role is in the theoretical analysis of the muon-deuteron \(\mu -d\) (doublet) capture rate \(\varGamma _D\), i.e. the rate of

\[ \mu ^- + d \rightarrow \nu _{\mu } + n + n. \]

Experimentally, this will be determined with 1.5% precision in the MuSun experiment. Such precision, if attained, corresponds to a tenfold improvement over previous experiments. The centerpiece of the MuSun experiment is to extract the two-body weak LEC \(d_R\) from a two-nucleon process. This LEC is of central importance in several other low-energy processes that are currently studied. It is proportional to the proton-proton (pp) fusion cross section, an important low-energy process that generates energy in the Sun. Because the pp-fusion cross section is extremely small, it cannot be measured on Earth. The LEC \(d_R\) also appears in neutrino-deuteron scattering, and once the \(\pi N\) couplings \(c_3\) and \(c_4\) are fixed, it determines the LEC \(c_D\), which governs the strength of the one-pion exchange plus contact piece of the leading NNN interaction. A thorough analysis of the uncertainties in theoretical descriptions of \(\mu -d\) capture was carried out in [44], using the covariance matrices from [13], yielding for the \(S\)-wave contribution \( \varGamma _D^{^1S_0} = 252.4 ^{+1.5}_{-2.1} \, \mathrm{s}^{-1}\).

Exploiting the Roy-Steiner analysis from [45], it was also possible to quantify the correlation between the \(\mu -d\) capture rate and the pp-fusion low-energy cross section in terms of the LEC \(c_D\). Furthermore, assuming an EFT expansion ratio \(q=\frac{m_{\pi }}{\varLambda _b} \sim 0.28\), i.e. estimating \(\varLambda _b \sim 500\) MeV, allowed for an order-by-order estimate of the EFT truncation error of the capture rate along the lines presented in Sect. 90.1.2. The LO-NLO-NNLO predictions of the capture rate are \(\varGamma _{D}^{^1S_0} = 186.3 + 61.0 + 5.5\) s\(^{-1}\), where the second and third terms indicate the NLO and NNLO contributions, respectively, to the LO result (first term). This information leads to an estimated EFT truncation error of 4.6 s\(^{-1}\), with 75% confidence. This is clearly the dominant source of uncertainty.
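The arithmetic behind this truncation error can be sketched as follows. The sketch rests on several assumptions that are not spelled out in the text: a pion mass of 138 MeV; that the NLO and NNLO contributions scale as \(q^2\) and \(q^3\) with the first omitted term at \(q^4\), as in the usual Weinberg counting where no \(q^1\) term appears; that the omitted coefficient is estimated by the largest known coefficient in magnitude; and that the confidence level is \(n/(n+1)\) for \(n\) known coefficients. The function name `truncation_error` is hypothetical.

```python
def truncation_error(contributions, powers, q, next_power):
    """Estimate the first omitted term of O = O_0 * sum_k a_k * q**k.

    contributions[j] is the shift of the observable entering at order
    q**powers[j]; contributions[0] (with powers[0] == 0) sets the scale O_0.
    """
    scale = contributions[0]
    # Dimensionless expansion coefficients a_k extracted order by order.
    coeffs = [c / (scale * q ** p) for c, p in zip(contributions, powers)]
    largest = max(abs(a) for a in coeffs)
    n_known = len(coeffs)
    confidence = n_known / (n_known + 1)
    error = abs(scale) * q ** next_power * largest
    return error, confidence, coeffs


M_PION = 138.0      # MeV, assumed value of the pion mass
BREAKDOWN = 500.0   # MeV, breakdown scale quoted in the text
q = M_PION / BREAKDOWN

# LO, NLO and NNLO contributions to the capture rate in s^-1 (from the text).
error, confidence, coeffs = truncation_error(
    [186.3, 61.0, 5.5], [0, 2, 3], q, next_power=4)
print(f"q = {q:.3f}, coefficients = {[round(a, 2) for a in coeffs]}")
print(f"truncation error ~ {error:.1f} s^-1 "
      f"at {100 * confidence:.0f}% confidence")
```

Under these assumptions the largest coefficient is the NLO one, so the estimate reduces to \(61.0 \times q^2 \approx 4.6\) s\(^{-1}\) with \(3/4 = 75\%\) confidence, consistent with the numbers quoted above. The result is sensitive to the assumed breakdown scale, since it scales as \(\varLambda _b^{-2}\).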

3 From Few to Many

Increasing the number of nucleons in the system under study introduces several new challenges. The presence of multiple scales and the emergence of many-body effects such as collectivity, clusterization, and saturation are not trivial to understand from first principles, not particularly easy to handle when solving the Schrödinger equation, and therefore not straightforward to incorporate when calibrating the interaction. In [36], the LECs of a chiral NNLO interaction were optimized to reproduce few-nucleon data as well as binding energies and radii in \(^{14}\)C and selected oxygen isotopes. This approach to parameter estimation, resulting in the NNLO\(_\mathrm{sat}\) interaction, was facilitated by a novel application of the POUNDERS optimization algorithm [46] coupled to Jacobi-NCSM and CC methods. NNLO\(_\mathrm{sat}\) has enabled accurate predictions of radii and ground-state energies in selected medium-mass nuclei [47].

It should be pointed out that the NNLO\(_\mathrm{sat}\) interaction does not provide an accurate description of NN scattering cross sections, in particular for pp scattering, at relative momenta beyond \(\sim m_{\pi }\). At the same time, it is not obvious how to determine the domains of applicability of an interaction model and exploit this information such that the risk of overfitting is minimized. This and other challenges are intimately related to quantifying truncation errors in \(\chi \)EFT and predictions from ab initio nuclear theory.

3.1 Delta Isobars and Nuclear Saturation

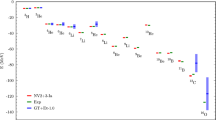

It turns out that the inclusion of the \(\varDelta \) isobar as an explicit low-energy degree of freedom in the effective Lagrangian, in addition to pions and nucleons, plays an important role in accurately reproducing the saturation properties of the nuclear interaction. See [48] for additional details. Figure 90.2 demonstrates the effect of incorporating the \(\varDelta \) up to NNLO in CC calculations of symmetric nuclear matter. Additional advantages of including the \(\varDelta \) were observed in [49,50,51]. Such results are not surprising from an EFT perspective, given that the \(\varDelta -N\) mass splitting is only twice the pion mass and therefore below the expected breakdown scale of \(\chi \)EFT potentials [52]. Thus, the \(\varDelta \)-full chiral interaction provides a valuable starting point for constructing more refined \(\chi \)EFT interactions with improved uncertainty estimates.

Fig. 90.2 CC calculations of the energy per nucleon (in MeV) in symmetric nuclear matter at NNLO in \(\chi \)EFT with (solid line) and without (dashed line) the \(\varDelta \) isobar. Both interactions employ a momentum regulator cutoff \(\varLambda \) = 450 MeV. The shaded areas indicate the estimated EFT truncation errors following the prescription presented in Sect. 90.1.2. The diamonds mark the saturation point and the black rectangle indicates the region \(E/A = -16 \pm 0.5\) MeV and \(\rho = 0.16 \pm 0.01\) fm\(^{-3}\)

4 Discussion and Outlook

It is clear that the computational capabilities in ab initio nuclear physics exceed the accuracy of available chiral interactions. Further progress requires improved statistical analysis and evaluation of interaction models. Hopefully, such efforts will bring us closer to a well-founded and microscopically rooted formulation of the nuclear interaction. There are several interesting challenges ahead of us. We must push the frontier of accurate ab initio methods further towards exotic systems and decays; systematically exploit information from NNN scattering data, decay probabilities, and saturation properties of infinite matter when optimizing the LECs of chiral interactions; demonstrate a connection between EFT(s) applied to nuclei and low-energy QCD, e.g. test PCs for RG invariance; and quantify systematic and statistical uncertainties in theoretical predictions. Continuous development of efficient computer codes to harness high-performance computing resources will hopefully enable detailed Bayesian analyses of ab initio calculations in the near future.

References

Beane, S.R., Detmold, W., Orginos, K., Savage, M.J.: Nuclear physics from lattice QCD. Prog. Part. Nucl. Phys. 66(1), 1–40 (2011)

Weinberg, S.: Effective chiral Lagrangians for nucleon-pion interactions and nuclear forces. Nucl. Phys. B 363, 3–18 (1991)

Chang, C.C., Nicholson, A.N., Rinaldi, E., et al.: A per-cent-level determination of the nucleon axial coupling from quantum chromodynamics. Nature 558(7708), 91–94 (2018)

Bedaque, P.F., Van Kolck, U.: Effective field theory for few-nucleon systems. Annu. Rev. Nucl. Part. Sci. 52(1), 339–396 (2002)

Epelbaum, E., Hammer, H.-W., Meißner, U.-G.: Modern theory of nuclear forces. Rev. Mod. Phys. 81, 1773–1825 (2009)

Machleidt, R., Entem, D.R.: Chiral effective field theory and nuclear forces. Phys. Rep. 503, 1–75 (2011)

Nogga, A., Timmermans, R.G.E., van Kolck, U.: Renormalization of one-pion exchange and power counting. Phys. Rev. C 72, 054006 (2005)

Phillips, D.R.: Recent results in chiral effective field theory for the NN system. PoS CD12, 172 (2013)

Grießhammer, H.W.: Assessing theory uncertainties in EFT power countings from residual cutoff dependence. PoS CD15, 104 (2016)

Epelbaum, E., Meißner, U.-G.: On the renormalization of the one-pion exchange potential and the consistency of Weinberg's power counting. Few Body Syst. 54, 2175–2190 (2013)

Song, Y.-H., Lazauskas, R., van Kolck, U.: Triton binding energy and neutron-deuteron scattering up to next-to-leading order in chiral effective field theory. Phys. Rev. C 96, 024002 (2017)

Ekström, A., Baardsen, G., Forssén, C., et al.: Optimized chiral nucleon-nucleon interaction at next-to-next-to-leading order. Phys. Rev. Lett. 110(19), 192502 (2013)

Carlsson, B.D., Ekström, A., Forssén, C., et al.: Uncertainty analysis and order-by-order optimization of chiral nuclear interactions. Phys. Rev. X 6(1), 011019 (2016)

Reinert, P., Krebs, H., Epelbaum, E.: Semilocal momentum-space regularized chiral two-nucleon potentials up to fifth order. Eur. Phys. J. A 54(5), 86 (2018)

Schindler, M.R., Phillips, D.R.: Bayesian methods for parameter estimation in effective field theories. Ann. Phys. 324(3), 682–708 (2009)

Wesolowski, S., Klco, N., Furnstahl, R.J., Phillips, D.R., Thapaliya, A.: Bayesian parameter estimation for effective field theories. J. Phys. G: Nucl. Part. Phys. 43(7), 074001 (2016)

Dobaczewski, J., Nazarewicz, W., Reinhard, P.-G.: Error estimates of theoretical models: a guide. J. Phys. G: Nucl. Part. Phys. 41(7), 074001 (2014)

Furnstahl, R.J., Klco, N., Phillips, D.R., et al.: Quantifying truncation errors in effective field theory. Phys. Rev. C 92(2), 024005 (2015)

McDonnell, J.D., Schunck, N., Higdon, D., et al.: Uncertainty quantification for nuclear density functional theory and information content of new measurements. Phys. Rev. Lett. 114, 122501 (2015)

Vernon, I., Goldstein, M., Bower, R.G.: Galaxy formation: a Bayesian uncertainty analysis. Bayesian Anal. 5(4), 619–669 (2010)

Neufcourt, L., Cao, Y., Nazarewicz, W., et al.: Neutron drip line in the Ca region from Bayesian model averaging. Phys. Rev. Lett. 122, 062502 (2019)

Ekström, A., Carlsson, B.D., Wendt, K.A., et al.: Statistical uncertainties of a chiral interaction at next-to-next-to leading order. J. Phys. G: Nucl. Part. Phys. 42(3), 034003 (2015)

Pérez, R.N., Amaro, J.E., Arriola, E.R., Maris, P., Vary, J.P.: Statistical error propagation in ab initio no-core full configuration calculations of light nuclei. Phys. Rev. C 92, 064003 (2015)

Barrett, B.R., Navrátil, P., Vary, J.P.: Ab initio no core shell model. Prog. Part. Nucl. Phys. 69, 131–181 (2013)

Hagen, G., Papenbrock, T., Hjorth-Jensen, M., et al.: Coupled-cluster computations of atomic nuclei. Rept. Prog. Phys. 77(9), 096302 (2014)

Hergert, H., Bogner, S.K., Morris, T.D., et al.: The in-medium similarity renormalization group: a novel ab initio method for nuclei. Phys. Rept. 621, 165–222 (2016)

Lee, D.: Lattice simulations for few and many-body systems. Prog. Part. Nucl. Phys. 63, 117–154 (2009)

Hagen, G., Ekström, A., Forssén, C., et al.: Neutron and weak-charge distributions of the \(^{48}\)Ca nucleus. Nat. Phys. 12(2), 186–190 (2016)

Hagen, G., Jansen, G.R., Papenbrock, T.: Structure of \(^{78}\)Ni from first principles computations. Phys. Rev. Lett. 117(17), 172501 (2016)

Morris, T.D., Simonis, J., Stroberg, S.R., et al.: Structure of the lightest tin isotopes. Phys. Rev. Lett. 120, 152503 (2018)

Lapoux, V., Somà, V., Barbieri, C., et al.: Radii and binding energies in oxygen isotopes: a challenge for nuclear forces. Phys. Rev. Lett. 117, 052501 (2016)

Entem, D.R., Machleidt, R.: Accurate charge dependent nucleon nucleon potential at fourth order of chiral perturbation theory. Phys. Rev. C 68, 041001 (2003)

Wiringa, R.B., Stoks, V.G.J., Schiavilla, R.: An accurate nucleon-nucleon potential with charge independence breaking. Phys. Rev. C 51, 38–51 (1995)

Machleidt, R.: The high precision, charge dependent Bonn nucleon-nucleon potential (CD-Bonn). Phys. Rev. C 63, 024001 (2001)

Binder, S., Langhammer, J., Calci, A., et al.: Ab initio path to heavy nuclei. Phys. Lett. B 736(C), 119–123 (2014)

Ekström, A., Jansen, G.R., Wendt, K.A., et al.: Accurate nuclear radii and binding energies from a chiral interaction. Phys. Rev. C 91(5), 051301 (2015)

Drischler, C., Hebeler, K., Schwenk, A.: Chiral interactions up to next-to-next-to-next-to-leading order and nuclear saturation. Phys. Rev. Lett. 122, 042501 (2019)

Stump, D., Pumplin, J., Brock, R., et al.: Uncertainties of predictions from parton distribution functions. I. The Lagrange multiplier method. Phys. Rev. D 65(1), 014012 (2001)

Wesolowski, S., Furnstahl, R., Melendez, J.A., et al.: Exploring Bayesian parameter estimation for chiral effective field theory using nucleon-nucleon phase shifts. J. Phys. G: Nucl. Part. Phys. (2018)

Hernandez, O.J., Ekström, A., Dinur, N.N., et al.: The deuteron-radius puzzle is alive: a new analysis of nuclear structure uncertainties. Phys. Lett. B 778, 377–383 (2018)

Gazda, D., Catena, R., Forssén, C.: Ab initio nuclear response functions for dark matter searches. Phys. Rev. D 95, 103011 (2017)

Epelbaum, E., Krebs, H., Meißner, U.-G.: Improved chiral nucleon-nucleon potential up to next-to-next-to-next-to-leading order. Eur. Phys. J. A 51(5), 53 (2015)

Cacciari, M., Houdeau, N.: Meaningful characterisation of perturbative theoretical uncertainties. J. High Energy Phys. 2011(9), 39 (2011)

Acharya, B., Ekström, A., Platter, L.: Effective-field-theory predictions of the muon-deuteron capture rate. Phys. Rev. C 98, 065506 (2018)

Hoferichter, M., de Elvira, J.R., Kubis, B., Meißner, U.-G.: Roy-Steiner-equation analysis of pion-nucleon scattering. Phys. Rep. 625, 1–88 (2016)

Wild, S.M.: Solving derivative-free nonlinear least squares problems with POUNDERS. In: Terlaky, T., Anjos, M.F., Ahmed, S. (eds.) Advances and trends in optimization with engineering applications, pp. 529–540. SIAM (2017)

Hagen, G., et al.: Neutron and weak-charge distributions of the \(^{48}\)Ca nucleus. Nature Phys. 12(2), 186–190 (2015)

Ekström, A., Hagen, G., Morris, T.D., et al.: \(\Delta \) isobars and nuclear saturation. Phys. Rev. C 97(2), 024332 (2018)

Piarulli, M., Girlanda, L., Schiavilla, R., et al.: Minimally nonlocal nucleon-nucleon potentials with chiral two-pion exchange including \(\Delta \) resonances. Phys. Rev. C 91(2), 024003 (2015)

Piarulli, M., et al.: Light-nuclei spectra from chiral dynamics. Phys. Rev. Lett. 120(5), 052503 (2017)

Logoteta, D., Bombaci, I., Kievsky, A.: Nuclear matter properties from local chiral interactions with \(\rm \Delta \) isobar intermediate states. Phys. Rev. C 94, 064001 (2016)

van Kolck, U.: Few nucleon forces from chiral Lagrangians. Phys. Rev. C 49, 2932–2941 (1994)

Acknowledgements

I would like to thank all my collaborators for sharing their insights during our joint work on the range of topics presented here. This work has received funding from the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme (Grant Agreement No. 758027), the Swedish Research Council under Grant No. 2015-00225, and Marie Skłodowska-Curie Actions, Cofund, Project INCA 600398.


© 2020 Springer Nature Switzerland AG


Ekström, A. (2020). Strong Interactions for Precision Nuclear Physics. In: Orr, N., Ploszajczak, M., Marqués, F., Carbonell, J. (eds) Recent Progress in Few-Body Physics. FB22 2018. Springer Proceedings in Physics, vol 238. Springer, Cham. https://doi.org/10.1007/978-3-030-32357-8_90


DOI: https://doi.org/10.1007/978-3-030-32357-8_90


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32356-1

Online ISBN: 978-3-030-32357-8

eBook Packages: Physics and Astronomy, Physics and Astronomy (R0)