Abstract

Full-field optical measurements like Digital Image Correlation or the Grid Method have brought a paradigm shift in the experimental mechanics community. While inverse identification techniques like Finite Element Model Updating (FEMU) or the Virtual Fields Method (VFM) have been the object of significant developments, current test methods, inherited from the age of strain gauges or LVDTs, are not generally well adapted to the rich information provided by these new measurement tools. This paper provides a short review of the research dealing with the design and optimization of heterogeneous mechanical tests for identification of material parameters from full-field measurements.

Introduction

In the last decade, optical full-field measurements like Digital Image Correlation (DIC, [1]) or the Grid Method (GM, [2]) have profoundly transformed the way researchers and engineers perform mechanical experiments on materials and structures. These measurement technologies have become mature, after intensive international research effort, as reflected by the recently published guide of good practice for DIC [3]. Such measurements make it possible to move from simple statically determinate tests to more complex ones, with a view to getting more data from a single test, thus reducing the testing effort and increasing the quality of the material models. However, in this case, inverse solutions have to be employed to go from heterogeneous kinematic fields to material parameters (and/or unknown loading distributions). This has also been the object of extensive research, with two main techniques having emerged: Finite Element Model Updating (FEMU, [4]) and the Virtual Fields Method (VFM, [5]).

However, there is a third element to this new paradigm in materials testing: the test configuration itself. Most of our mechanical testing toolkit was developed at a time when only strain gauges or other point sensors were available. As a consequence, the test configurations used with full-field measurements are generally strongly inspired by traditional tests developed for a different type of metrological information.

There has been some research effort to look at the problem of designing heterogeneous tests that would be better adapted to full-field measurements and inverse identification for a given type of constitutive model, and this is the object of this short review. However, this is still an underexplored area of research that requires sustained effort to realise the full potential of this new paradigm in materials testing.

The Early Attempts

In the late 1980s and early 1990s, two pioneering research groups started to work on new testing procedures based on full-field measurements and inverse identification. The first one was at the School of Mines in St-Etienne, France, with the PhD of Michel Grédiac under the supervision of Alain Vautrin. They combined a deflectometry set-up and the Virtual Fields Method, first proposed by M. Grédiac in his PhD, to identify the elastic anisotropic stiffness components of thin composite plates [6, 7]. The test configuration was a series of three bending tests realised on the same plate with the loading point moved between tests, to create a database with enough information to identify the six anisotropic bending stiffness components. The second one was the group of Cees Oomens at TU Eindhoven, with a motivation to test biological membranes like skin [8], and later, metals [9]. In the latter, a double asymmetrical notch configuration was selected to create heterogeneity, though little justification was provided for this choice.

Research in this area expanded in the 1990s and 2000s, for anisotropic elasticity [10,11,12,13], elasto-plasticity [14,15,16,17,18], hyperelasticity [19,20,21,22,23], high strain rate behaviour [24,25,26,27,28,29,30] and heterogeneous materials [31,32,33,34,35], to cite but a few references.

Test Design and Optimization

Designs Based on the Strain State

Attempts to optimize test configurations were scarce initially. To the best knowledge of the present author, the first paper on this was published in 1998 [36]. To identify the full set of in-plane elastic parameters for an orthotropic composite material, a tension/bending test on a unidirectional composite specimen in the shape of a ‘T’ was devised (Fig. 16.1). The ends of the horizontal bar of the ‘T’ rest on rollers while the vertical bar is gripped and pulled down. The vertical bar is where the vertical modulus is activated, while the horizontal bar sees bending and shear, activating the horizontal and the shear moduli. The aspect ratio of the specimen was optimized using the native ANSYS APDL language so that the average horizontal strain in area 1, the average vertical strain in area 2 and the average shear strain in area 3 were identical. This was then validated experimentally in [10]. The results were rather good except for the major Poisson’s ratio, but this is likely to come from the manually-defined virtual fields used in that study.
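The balancing criterion described above can be illustrated with a minimal Python sketch. It is not the APDL implementation of [36]: the function name `strain_balance_cost`, the use of the absolute value of each area average, and the coefficient-of-variation form of the cost are all assumptions made here for illustration. The strain arrays would come from a finite element simulation of a candidate geometry.

```python
import numpy as np

def strain_balance_cost(eps_xx_area1, eps_yy_area2, eps_xy_area3):
    """Relative imbalance between the three area-averaged strains.

    Hypothetical cost: it is zero when the three averages have the same
    magnitude and grows as the strain state becomes less balanced.
    """
    means = np.array([
        abs(np.mean(eps_xx_area1)),  # average horizontal strain, area 1
        abs(np.mean(eps_yy_area2)),  # average vertical strain, area 2
        abs(np.mean(eps_xy_area3)),  # average shear strain, area 3
    ])
    # Coefficient of variation of the three averages (assumed form).
    return float(np.std(means) / np.mean(means))
```

An optimizer would then adjust the aspect ratio of the ‘T’ to drive this value towards zero.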

Fig. 16.1 T-shaped specimen, inspired by [36]

In [37], a number of indicators based on strain metrics were developed for elasto-plastic identification. These indicators were then used in a shape optimization problem by the same group [38]. Starting from a circular shape parameterized by cubic splines, the control points of the splines were adjusted with respect to a cost function that combined the indicators from [37]. The result was a ‘butterfly’-shaped specimen, and an experimental validation was published in [39]. Another interesting shape was devised using similar criteria but including the strain rate for visco-plasticity identification [40].
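To make the idea of a spline-parameterized outline concrete, the following sketch builds a closed curve from radial control points, starting from a circle. It is only an illustration: a periodic Catmull-Rom spline (a piecewise cubic) is used here as a stand-in, the function name `closed_spline_outline` is hypothetical, and the actual parameterization in [38] may differ.

```python
import numpy as np

def closed_spline_outline(radii, samples_per_segment=20):
    """Closed outline through control points placed at equally spaced angles.

    radii[i] is the distance of control point i from the centre; these
    radii play the role of the design variables of the shape optimization.
    """
    r = np.asarray(radii, dtype=float)
    n = r.size
    angles = 2.0 * np.pi * np.arange(n) / n
    pts = np.column_stack([r * np.cos(angles), r * np.sin(angles)])
    t = np.linspace(0.0, 1.0, samples_per_segment, endpoint=False)[:, None]
    segments = []
    for i in range(n):
        # Periodic indexing closes the curve.
        p0, p1, p2, p3 = (pts[(i - 1) % n], pts[i],
                          pts[(i + 1) % n], pts[(i + 2) % n])
        # Uniform Catmull-Rom cubic between p1 and p2.
        seg = 0.5 * (2.0 * p1
                     + (-p0 + p2) * t
                     + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t ** 2
                     + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t ** 3)
        segments.append(seg)
    return np.vstack(segments)

# Initial design: 12 control points on a unit circle.
outline = closed_spline_outline(np.ones(12))
```

Changing individual entries of `radii` deforms the outline locally, which is what an optimizer would exploit when minimizing a cost function built on strain indicators.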

Designs Based on Identification Quality

Strain metrics, while easy to compute, are several steps away from the actual identification results and may therefore lead to non-optimal configurations in practice. A step forward consists in using the identification uncertainty as the cost function to optimize test configurations. To the best knowledge of the present author, the first attempt was published in 2007 [41]. A rectangular unidirectional composite test specimen was loaded in a combination of bending and shear using an Iosipescu fixture.

The specimen free length (between supports) and off-axis fibre angle were used as design variables, and a cost function was established to balance the four sensitivity-to-noise coefficients from the noise-optimized VFM [43], one for each individual orthotropic stiffness component. It should be noted that these coefficients decrease as the signal-to-noise ratio increases and therefore, a normalizing procedure had to be established to limit the allowable deformation in the purely elastic simulations. This is an essential step that has to be controlled carefully as it can significantly affect the end result. The other issue is that the length had to be constrained to a maximum value, as the algorithm had a tendency to converge towards much longer specimens that would have been physically impractical because of the loss of spatial resolution. This was one of the motivations for moving to the full identification chain as reported in the next subsection. The problem was solved by driving ANSYS® through Matlab using a text input file for the FE model. An optimal configuration was found and successfully validated experimentally using ESPI [41]. Compared to the standard configuration used in [44] for instance, the improvement was significant. A very similar study was conducted using an Arcan test configuration and DIC to identify the orthotropic stiffness components of a PVC foam [45].
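The two ingredients discussed above, normalization to an allowable deformation and balancing of the sensitivity-to-noise coefficients, can be sketched as follows. This is a hedged illustration and not the cost function of [41]: the function names, the pairwise-difference form of the cost and the assumption that the relative coefficients scale inversely with the applied load in linear elasticity are choices made here.

```python
import numpy as np

def normalised_sensitivities(eta_over_q, max_strain, allowable_strain):
    """Rescale relative sensitivity-to-noise coefficients eta_ij / Q_ij.

    Assumption: in a linear elastic simulation the coefficients are
    inversely proportional to the load, so scaling the load to reach the
    allowable strain divides them by the same factor.
    """
    load_scale = allowable_strain / max_strain
    return np.asarray(eta_over_q, dtype=float) / load_scale

def balance_cost(eta_over_q):
    """Hypothetical cost: squared pairwise differences, normalised.

    Zero when all stiffness components are identified with the same
    relative sensitivity to noise.
    """
    r = np.asarray(eta_over_q, dtype=float)
    i, j = np.triu_indices(r.size, k=1)  # all distinct pairs
    return float(np.sum((r[i] - r[j]) ** 2) / np.sum(r) ** 2)
```

Without the normalization step, two candidate configurations simulated at different strain levels could not be compared on an equal footing, which is why the text stresses that this step must be controlled carefully.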

The same approach was used by Michel Grédiac’s group [42] to optimize the shape of thin plates in bending, to identify the full set of orthotropic bending stiffness components for a unidirectional composite. The only difference is that the set of design variables was much wider: shape, support and loading points, and fibre angle. The shape was parameterized using splines, as in [38]. The final result for the shape and loading points is non-intuitive (Fig. 16.2) but again, it may not be practical because of potential strain concentrations not well reconstructed by the measurement technique, and the loss of pixels as the shape moves further away from the camera aspect ratio. No experimental validation was attempted on this shape.

Fig. 16.2 Optimized bending test, from [42]. Red triangles indicate supports, arrow indicates applied force

Designs Based on Full Identification Chain Simulation

The main limitation of using strains taken directly from finite element simulations to optimize test configurations based on identification quality is that experimental constraints are not taken into account. All experimentalists familiar with DIC or the GM know that spatial resolution and camera noise play an important role in the quality of the results. Moreover, many processing and post-processing parameters have to be selected (subset size, step size, shape functions and strain window, to cite a few in the case of DIC) and this choice does impact the quality of the identification. For instance, a particular test configuration may provide excellent heterogeneities but with strain gradients too large to be faithfully rendered for a given imaging set-up, and this will lead to a large systematic error that the procedures described above will miss.

To circumvent this issue, a programme was developed to simulate the full measurement and identification chain, so that complete uncertainty and error propagation could be studied. This was developed for the VFM, first with the grid method [46] and later extended to DIC [47]. The initial motivation was to evaluate random and systematic errors with a view to providing accurate uncertainty limits. This is an essential point for such tests to be used as standard tools in industry. The work in [45] on PVC foams was refined by optimizing the configuration based on the total identification error, and a different optimal configuration was found [48]. Since the DIC parameters also affect the results, they were optimized in a second step, after a first configuration was found with pre-set DIC parameters. Finally, not only were the four orthotropic stiffness components identified successfully from a single test, but the standard deviations were also predicted to within a factor of 2. This was thought very satisfactory as a starting point, as many experimental issues like variations in light (amplified by reflective hotspots), machine vibrations, specimen alignment and geometrical defects were not taken into account. A similar study was performed on three different test configurations to identify the orthotropic stiffness components of composites with a single test. Each configuration was optimized for geometry and fibre angle, and then for DIC parameters. Interestingly, all cases converged to quadratic shape functions and steps of 1 pixel [49].
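The principle of simulating the whole chain can be conveyed with a deliberately simplified one-dimensional toy, which is not the simulator of [46, 47]. A reference strain field with a local concentration is corrupted by noise, smoothed by a moving average standing in for the strain window, and fed to a least-squares identification of a single modulus. All names and numerical values below are assumptions chosen for illustration.

```python
import numpy as np

def simulate_identification(window, noise_std, n_runs=200, seed=0):
    """Monte Carlo estimate of systematic and random identification errors.

    Returns (systematic, random), both relative to the reference modulus.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    rng = np.random.default_rng(seed)
    e_ref = 70e9                                  # reference modulus [Pa]
    x = np.linspace(-1.0, 1.0, 401)
    # Known stress field with a sharp concentration at x = 0.
    stress = 50e6 * (1.0 + 2.0 * np.exp(-(x / 0.05) ** 2))
    strain_true = stress / e_ref
    kernel = np.ones(window) / window
    half = window // 2
    # Data points lost at the edges by the smoothing window are discarded.
    stress_kept = stress[half:x.size - half]
    identified = np.empty(n_runs)
    for k in range(n_runs):
        noisy = strain_true + rng.normal(0.0, noise_std, x.size)
        measured = np.convolve(noisy, kernel, mode="valid")
        # Least-squares compliance from strain = compliance * stress.
        compliance = np.sum(stress_kept * measured) / np.sum(stress_kept ** 2)
        identified[k] = 1.0 / compliance
    systematic = (identified.mean() - e_ref) / e_ref
    random = identified.std(ddof=1) / e_ref
    return systematic, random

sys_small, rnd_small = simulate_identification(window=3, noise_std=1e-5)
sys_large, rnd_large = simulate_identification(window=41, noise_std=1e-5)
```

In this toy, the wide window flattens the strain concentration and biases the identified modulus, an error that an optimization based on noise-free finite element strains would not see.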

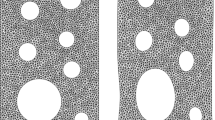

Clearly, the ultimate stage is to combine geometry and measurement parameters simultaneously into a single optimization problem. This was attempted on the T-shaped specimen problem from [36]. Eight design variables were used [50]: three geometrical parameters (b and c as defined in Fig. 16.1, plus the radius of a fillet joining the two bars of the ‘T’; the total height and width were fixed to correspond to the camera sensor aspect ratio), the orthotropy angle, and four DIC parameters (subset size, step size, shape functions (linear or quadratic) and strain window). A genetic algorithm was used to solve the problem. The cost function was the total error, defined as the systematic error plus or minus twice the standard deviation, whichever was further away from the reference. This converged to the configuration shown in Fig. 16.3. It is very interesting to notice how the optimization always tries to minimize the number of lost pixels, which strongly affects the systematic error. It is also worth noting that, again, quadratic shape functions and a step of 1 pixel are obtained at convergence. No experimental validation of this work has been attempted yet, but experimental difficulties may arise from the very thick vertical bar, which may lead to instabilities. This suggests that a buckling load constraint may have to be added to the optimization problem.
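The total error used as the cost function can be written compactly. The sketch below assumes that both the systematic error and the standard deviation are expressed relative to the reference value of one parameter, so that the reference sits at zero; the function name `total_error` is hypothetical, and how the errors of the individual stiffness components are combined in [50] is not specified here.

```python
def total_error(systematic, std):
    """Systematic error +/- twice the standard deviation, taking the
    bound that lies further from the reference (located at zero)."""
    upper = systematic + 2.0 * std
    lower = systematic - 2.0 * std
    return max(abs(upper), abs(lower))
```

With this definition the result equals the magnitude of the systematic error plus twice the standard deviation, so a configuration is penalized both for bias and for scatter.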

Fig. 16.3 Optimal (left) and non-optimal (right) configurations for the T-shaped specimen [50]. Strain transverse to the fibres represented. For optimal configuration: fibre direction 27° from horizontal axis, subset 36, step 1, quadratic shape functions and strain window of 8

Conclusion and Future Work

This short review has shown that although some research effort has been dedicated to the design and/or optimization of new heterogeneous tests for inverse identification from full-field measurements, there is still a long way to go. Most of the examples are on anisotropic elasticity, with a few on anisotropic plasticity and/or visco-plasticity. Also, the vast majority of these efforts have arisen from the VFM community. This is not a surprise considering the large difference in computational efficiency between the VFM and FEMU (the VFM is reported to be 125 times faster than FEMU in [23]). Since test optimization relies on solving many successive identification problems, using FEMU for this purpose quickly becomes computationally prohibitive.

The next stage is to extend the optimization based on the full measurement and identification simulator to anisotropic plasticity, to take the interesting work by Andrade-Campos and Thuillier further. The recent development of the sensitivity-based virtual fields [51, 52] provides the perfect tool for this. Extension to hyperelastic models would also be interesting, though additional constraints may be needed to prevent wrinkling. Finally, once promising tests have been designed that seem fit for purpose, experimental validation of uncertainty bounds, coupled with round-robin testing, will be needed to establish them as the next generation of test standards.

References

M.A. Sutton, J.J. Orteu, H.W. Schreier, Image Correlation for Shape, Motion and Deformation Measurements: Basic Concepts, Theory and Applications (Springer, New York, 2009), pp. 1–321

M. Grédiac, F. Sur, B. Blaysat, The Grid Method for in-plane displacement and strain measurement: a review and analysis. Strain 52(3), 205–243 (2016)

E.M.C. Jones, M.A. Iadicola, A Good Practices Guide for Digital Image Correlation (International Digital Image Correlation Society, Portland, 2018)

S. Avril et al., Overview of identification methods of mechanical parameters based on full-field measurements. Exp. Mech. 48(4), 381–402 (2008)

F. Pierron, M. Grédiac, The Virtual Fields Method. Extracting Constitutive Mechanical Parameters from Full-Field Deformation Measurements (Springer, New York, 2012), p. 517

M. Grédiac, A. Vautrin, A new method for determination of bending rigidities of thin anisotropic plates. J. Appl. Mech. 57(4), 964–968 (1990)

M. Grédiac, Principle of virtual work and identification. C. R. Acad. Sci. Sér. II 309(1), 1–5 (1989)

C.W.J. Oomens et al., A numerical-experimental method for a mechanical characterization of biological materials. J. Biomech. 26(4-5), 617–621 (1993)

M.H.H. Meuwissen et al., Determination of the elasto-plastic properties of aluminium using a mixed numerical–experimental method. J. Mater. Process. Technol. 75(1–3), 204–211 (1998)

M. Grédiac, F. Pierron, Y. Surrel, Novel procedure for complete in-plane composite characterization using a single T-shaped specimen. Exp. Mech. 39(2), 142–149 (1999)

R. Moulart, S. Avril, F. Pierron, Identification of the through-thickness rigidities of a thick laminated composite tube. Compos. Pt. A Appl. Sci. Manuf. 37(2), 326–336 (2006)

C. Gogu et al., Bayesian identification of elastic constants in multi-directional laminate from moiré interferometry displacement fields. Exp. Mech. 53(4), 635–648 (2013)

R. Gras et al., Identification of a set of macroscopic elastic parameters in a 3D woven composite: uncertainty analysis and regularization. Int. J. Solids Struct. 55, 2–16 (2015)

J. Kajberg, G. Lindkvist, Characterisation of materials subjected to large strains by inverse modelling based on in-plane displacement fields. Int. J. Solids Struct. 41(13), 3439–3459 (2004)

L. Robert et al., Identification of hardening parameters using finite element models and full-field measurements: some case studies. J. Strain Anal. Eng. Des. 47(1), 3–17 (2012)

M. Grédiac, F. Pierron, Applying the virtual fields method to the identification of elasto-plastic constitutive parameters. Int. J. Plast. 22(4), 602–627 (2006)

M. Bertin et al., Integrated digital image correlation applied to elastoplastic identification in a biaxial experiment. J. Strain Anal. Eng. Des. 51(2), 118–131 (2016)

J.M.P. Martins, A. Andrade-Campos, S. Thuillier, Comparison of inverse identification strategies for constitutive mechanical models using full-field measurements. Int. J. Mech. Sci. 145, 330–345 (2018)

N. Promma et al., Application of the virtual fields method to mechanical characterization of elastomeric materials. Int. J. Solids Struct. 46(3-4), 698–715 (2009)

S. Avril, P. Badel, A. Duprey, Anisotropic and hyperelastic identification of in vitro human arteries from full-field optical measurements. J. Biomech. 43(15), 2978–2985 (2010)

G. Palmieri et al., Virtual fields method on planar tension tests for hyperelastic materials characterisation. Strain 47(suppl. 2), 196–209 (2011)

L.E. Perotti et al., Method for the unique identification of hyperelastic material properties using full-field measures. Application to the passive myocardium material response. Int. J. Numer. Methods Biomed. Eng. 33(11), e2866 (2017)

L. Zhang et al., Verification of a virtual fields method to extract the mechanical properties of human optic nerve head tissues in vivo. Biomech. Model. Mechanobiol. 16(3), 871–887 (2017)

J. Kajberg, B. Wikman, Viscoplastic parameter estimation by high strain-rate experiments and inverse modelling - speckle measurements and high-speed photography. Int. J. Solids Struct. 44(1), 145–164 (2007)

J. Kajberg et al., High strain-rate tensile testing and viscoplastic parameter identification using microscopic high-speed photography. Int. J. Plast. 20(4-5), 561–575 (2004)

D. Notta-Cuvier et al., Identification of Johnson-Cook’s viscoplastic model parameters using the virtual fields method: application to titanium alloy Ti6Al4V. Strain 49(1), 22–45 (2013)

S.-h. Yoon, I. Giannakopoulos, C.R. Siviour, Application of the Virtual Fields Method to the uniaxial behavior of rubbers at medium strain rates. Int. J. Solids Struct. 69, 553–568 (2015)

B. Koohbor, A. Kidane, W.-Y. Lu, Effect of specimen size, compressibility and inertia on the response of rigid polymer foams subjected to high velocity direct impact loading. Int. J. Impact Eng. 98, 62–74 (2016)

F. Pierron, H. Zhu, C. Siviour, Beyond Hopkinson’s bar. Philos. Trans. R. Soc. London, Ser. A 372(2023), 20130195 (2014)

L. Fletcher, F. Pierron, An image-based inertial impact (IBII) test for tungsten carbide cermets. J. Dyn. Behav. Mater. 4(4), 481–504 (2018)

Y. Sato, S. Arikawa, S. Yoneyama, Identification of heterogeneous elastic material characteristics by virtual fields method, in International Conference on Experimental Mechanics, (SPIE, Bellingham, 2014)

S. Huang, P. Feissel, P. Villon, Modified constitutive relation error: an identification framework dealing with the reliability of information. Comput. Methods Appl. Mech. Eng. 311, 1–17 (2016)

J.M. Considine et al., Smoothly varying in-plane stiffness heterogeneity evaluated under uniaxial tensile stress. Strain 53(5), e12237 (2017)

M.A. Sutton et al., Identification of heterogeneous constitutive parameters in a welded specimen: uniform stress and virtual fields methods for material property estimation. Exp. Mech. 48(4), 451–464 (2008)

G.L. Louëdec et al., Identification of the local elasto-plastic behavior of FSW welds using the virtual fields method. Exp. Mech. 53(5), 849–859 (2013)

M. Grédiac, F. Pierron, A T-shaped specimen for the direct characterization of orthotropic materials. Int. J. Numer. Methods Eng. 41(2), 293–309 (1998)

N. Souto, S. Thuillier, A. Andrade-Campos, Design of an indicator to characterize and classify mechanical tests for sheet metals. Int. J. Mech. Sci. 101, 252–271 (2015)

N. Souto, A. Andrade-Campos, S. Thuillier, Mechanical design of a heterogeneous test for material parameters identification. Int. J. Mater. Form. 10(3), 353–367 (2016)

J. Aquino et al., Experimental validation of a new heterogeneous mechanical test design. AIP Conf. Proc. 1960, 090002 (2018)

E.M.C. Jones et al., Parameter covariance and non-uniqueness in material model calibration using the virtual fields method. Comput. Mater. Sci. 152, 268–290 (2018)

F. Pierron et al., Identification of the orthotropic elastic stiffnesses of composites with the virtual fields method: sensitivity study and experimental validation. Strain 43(3), 250–259 (2007)

K. Syed-Muhammad, E. Toussaint, M. Grédiac, Optimization of a mechanical test on composite plates with the virtual fields method. Struct. Multidiscip. Optim. 38(1), 71–82 (2009)

S. Avril, M. Grédiac, F. Pierron, Sensitivity of the virtual fields method to noisy data. Comput. Mech. 34(6), 439–452 (2004)

H. Chalal et al., Experimental identification of a nonlinear model for composites using the grid technique coupled to the virtual fields method. Compos. Pt. A Appl. Sci. Manuf. 37(2), 315–325 (2006)

P. Wang, F. Pierron, O.T. Thomsen, Identification of material parameters of PVC foams using digital image correlation and the virtual fields method. Exp. Mech. 53(6), 1001–1015 (2013)

M. Rossi, F. Pierron, On the use of simulated experiments in designing tests for material characterization from full-field measurements. Int. J. Solids Struct. 49(3-4), 420–435 (2012)

M. Rossi et al., Effect of DIC spatial resolution, noise and interpolation error on identification results with the VFM. Strain 51(3), 206–222 (2015)

P. Wang et al., Optimised experimental characterisation of polymeric foam material using DIC and the virtual fields method. Strain 52(1), 59–79 (2016)

X. Gu, F. Pierron, Towards the design of a new standard for composite stiffness identification. Compos. Pt. A Appl. Sci. Manuf. 91, 448–460 (2016)

X. Gu, F. Pierron, Full Optimization of the T-Shaped Tensile Test Using Genetic Algorithm (University of Southampton/Shanghai Jiaotong University, Southampton/Shanghai, 2016)

A. Marek, F.M. Davis, F. Pierron, Sensitivity-based virtual fields for the non-linear virtual fields method. Comput. Mech. 60(3), 409–431 (2017)

A. Marek, F.M. Davis, F. Pierron, Sheet metals characterization using the virtual fields method. AIP Conf. Proc. 1960, 170011 (2018)

© 2020 Society for Experimental Mechanics, Inc.

About this paper

Pierron, F. (2020). Test Design for Identification from Full-Field Measurements: A Concise Review. In: Baldi, A., Kramer, S., Pierron, F., Considine, J., Bossuyt, S., Hoefnagels, J. (eds) Residual Stress, Thermomechanics & Infrared Imaging and Inverse Problems, Volume 6. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, Cham. https://doi.org/10.1007/978-3-030-30098-2_16

DOI: https://doi.org/10.1007/978-3-030-30098-2_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30097-5

Online ISBN: 978-3-030-30098-2

eBook Packages: Engineering, Engineering (R0)