Abstract

OpenMP offers directives for offloading computations from CPU hosts to accelerator devices such as GPUs. A key underlying challenge is efficiently managing the movement of data between the host and the accelerator. User experience has shown that memory management in OpenMP programs with offloading capabilities is non-trivial and error-prone.

This paper presents OMPSan (OpenMP Sanitizer), a static analysis-based tool that helps developers detect bugs arising from incorrect usage of the map clause and also suggests potential fixes for them. We have developed an LLVM-based dataflow analysis that validates whether the def-use information of array variables is respected by the mapping constructs in an OpenMP program. We evaluate OMPSan on some standard benchmarks and also show its effectiveness by detecting commonly reported bugs.

Keywords

- OpenMP offloading

- OpenMP target data mapping

- LLVM

- Memory management

- Static analysis

- Verification

- Debugging

1 Introduction

Open Multi-Processing (OpenMP) is a widely used directive-based parallel programming model that supports offloading computations from hosts to accelerator devices such as GPUs. Notable accelerator-related features in OpenMP include unstructured data mapping, asynchronous execution, and runtime routines for device memory management.

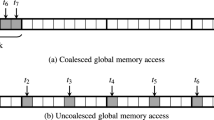

OMP Target Offloading and Data Mapping. OpenMP offers the omp target directive for offloading computations to devices, and the omp target data directive for mapping data between the host and the corresponding device data environment. On heterogeneous systems, managing the movement of data between the host and the device can be challenging, and it is often a major source of performance and correctness bugs. In the OpenMP accelerator model, data movement between device and host is supported either explicitly, via a map clause, or implicitly, through default data-mapping rules. Specifying map clauses optimally, or even correctly, can be non-trivial and error-prone because it requires users to reason about complex dataflow. To ensure that the map clauses are correct, OpenMP programmers need to make sure that variables defined in one data environment and used in another are mapped accordingly across the different device and host data environments. The semantics of a given map construct depends on all the map constructs executed before it for the same variable. Therefore, dataflow analysis of map clauses is necessarily context-sensitive, since the entire call sequence leading up to a specific map construct can affect its behavior.

1.1 OpenMP 5.0 Map Semantics

Figure 1 shows a schematic illustration of the rules used when mapping a host variable to the corresponding list item in the device data environment, as specified in the OpenMP 5.0 standard. The rest of this paper assumes that the accelerator device is a GPU, so that mapping a variable from host to device introduces a host-to-device memory copy, and vice versa. However, the bugs that we identify reflect errors in the OpenMP code regardless of the target device.

The map types supported by OpenMP 5.0 are:

- alloc: allocate on the device, uninitialized

- to: map to the device before kernel execution (host-device memory copy)

- from: map from the device after kernel execution (device-host memory copy)

- tofrom: copy the variable in at entry to the device environment and copy it out at exit.
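As a hedged illustration, the C function below uses all four map types on a single target region. It is our own sketch, not a listing from this paper, and the function and parameter names are hypothetical. The comments describe the transfers on a discrete GPU. If the code is compiled without -fopenmp, or if the runtime falls back to the host, the pragma moves no data and the loop simply runs on the host.

```c
#include <stddef.h>

/* Hypothetical example: one target region using all four map types. */
void map_types_demo(size_t n, float a, const float *x, float *y,
                    float *tmp, float *acc) {
    /* to:     x is copied host -> device before the region
       from:   y is copied device -> host after the region
       alloc:  tmp is allocated on the device but never copied
       tofrom: acc[0] is copied in before and out after the region */
    #pragma omp target map(to: x[0:n]) map(from: y[0:n]) \
                       map(alloc: tmp[0:n]) map(tofrom: acc[0:1])
    for (size_t i = 0; i < n; i++) {
        tmp[i] = a * x[i];        /* device-only scratch value */
        y[i] = tmp[i] + 1.0f;     /* result visible on the host afterwards */
        acc[0] += y[i];           /* running total, read back by the host */
    }
}
```

Because tmp is mapped with alloc, its device copy starts uninitialized. Reading tmp before the write in the loop body would therefore observe garbage on a real device.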

Arrays are implicitly mapped as tofrom, while scalars are implicitly firstprivate in the target region, i.e., the value of the scalar on the host is copied to the corresponding item on the device only at entry to the device environment. As Fig. 1 shows, the OpenMP 5.0 specification uses the reference count of a variable to decide when to introduce a device/host memory copy. A host-to-device memory copy is introduced only when the reference count is incremented from 0 to 1 and the to attribute is present. The reference count is then incremented every time a new device data environment that maps the variable is created. The reference count is decremented on exiting a data environment, for example on encountering a from or release map type. Finally, when the reference count is decremented from 1 to 0 and the from attribute is present, the variable is copied back from the device to the host.
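The reference-count rules above can be modeled for a single variable in a few lines of C. This is a simplified sketch under our own assumptions: it ignores the always modifier and the delete map type, and it tracks only one variable. RefState, ref_enter, and ref_exit are hypothetical names and are not part of the OpenMP runtime.

```c
#include <stdbool.h>

/* Hypothetical model of the reference-count rules for ONE mapped variable. */
typedef struct {
    int refcount;    /* number of active device data environments */
    int copies_in;   /* host-to-device copies performed so far */
    int copies_out;  /* device-to-host copies performed so far */
} RefState;

/* Entering a data environment that maps the variable. */
void ref_enter(RefState *s, bool has_to) {
    if (s->refcount == 0 && has_to)   /* copy in only on the 0 -> 1 transition */
        s->copies_in++;
    s->refcount++;
}

/* Exiting a data environment that maps the variable. */
void ref_exit(RefState *s, bool has_from) {
    if (s->refcount == 0)             /* not present on the device: nothing to do */
        return;
    if (s->refcount == 1 && has_from) /* copy out only on the 1 -> 0 transition */
        s->copies_out++;
    s->refcount--;
}
```

Consider the following sequence: target enter data map(to: A), then a target region with map(tofrom: A), then target exit data map(from: A). The model produces one copy-in at the first step and no transfer at either end of the target region. The single copy-out happens only at the final exit, when the count drops from 1 to 0.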

1.2 The Problem

For target offloading, the map clause is used to map variables from a task’s data environment to the corresponding variables in the device data environment. Incorrect data map clauses can result in the use of stale data in either the host or the device data environment, which may lead to the following kinds of issues:

- A variable read in the device data environment does not contain the updated value of its original variable.

- The original variable, when read, has not been updated with the latest value of the corresponding device environment variable.

1.3 Our Solution

We propose a static analysis tool called OMPSan to perform OpenMP code “sanitization”. OMPSan is a compile-time tool that statically verifies the correctness of data mapping constructs based on a dataflow analysis. The key principle guiding our approach is that an OpenMP program is expected to yield the same result whether its OpenMP constructs are enabled or disabled. Our approach detects errors by comparing the dataflow information (reaching definitions via LLVM’s Memory SSA representation [10]) of the OpenMP code with that of the baseline code. We developed an LLVM-based implementation of our approach and evaluated its effectiveness using several case studies. Our specific contributions include:

- an algorithm to analyze the OpenMP runtime library calls inserted by Clang in the LLVM IR, to infer the host/device memory copies. We expect this algorithm to have applications beyond our OMPSan tool.

- a dataflow analysis to infer memory def-use relations.

- a static analysis technique to validate whether the host/device memory copies respect the original memory def-use relations.

- diagnostic information that helps users understand how the map clause affects the host and device data environments.

Although our algorithm is based on Clang’s OpenMP implementation, it can readily be applied to other approaches, such as using directives to delay OpenMP lowering to a later LLVM pass. The paper is organized as follows. Section 2 provides motivating examples that describe common issues and difficulties in using OpenMP’s data map construct. Section 3 provides the background information used in our analysis. Section 4 presents an overview of our approach to validating the usage of data mapping constructs. Section 5 presents the LLVM implementation details, and Sect. 6 presents the evaluation and some case studies. Subsection 6.3 also lists some limitations of our tool, some of which are common to any static analysis.

2 Motivating Examples

To motivate the utility and applicability of OMPSan, we discuss three potential errors in user code arising from improper usage of the data mapping constructs.

2.1 Default Scalar Mapping

Example 1: Consider the snippet of code in Listing 2.1. The printf on the host (line 8) prints a stale value of sum. The definition of sum on line 5 does not reach line 8 because sum is not explicitly mapped with a map clause, so it is implicitly firstprivate. As Listing 2.2 shows, an explicit map clause with the tofrom attribute is required to copy sum in to and out of the device.
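The fix can be sketched in C in the spirit of Listing 2.2. This is not the paper's listing, and sum_array is a hypothetical name. The loop inside the target region runs sequentially, so explicit mapping is the only concern, not a data race.

```c
/* Hypothetical example: summing an array on the device. */
int sum_array(const int *A, int n) {
    int sum = 0;
    /* Without map(tofrom: sum), sum would be implicitly firstprivate:
       the device would update a private copy, and when the code is
       actually offloaded the host would still see 0 after the region. */
    #pragma omp target map(to: A[0:n]) map(tofrom: sum)
    for (int i = 0; i < n; i++)   /* sequential loop: no race on sum */
        sum += A[i];
    return sum;
}
```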

2.2 Reference Count Issues

Example 2: Listing 2.3 shows an example of a reference count issue. The statement on line 12, which executes on the host, does not read the updated value of A from the device. This is because the from clause on line 5 increments the reference count to 2 on entry and decrements it back to 1 on exit; hence, after line 10, A is not copied out to the host. Listing 2.4 shows how the target update directive can be used to force the copy-out, so that the updated value of A is read on line 15.

This example shows the difficulty of interpreting a map construct in isolation. Especially when dealing with global variables and with map clauses spread across different functions, possibly even across different files, it becomes difficult to understand and identify potential incorrect usages of the map construct.
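The pattern of Example 2 and its fix can be reconstructed as the hedged sketch below. It is not the paper's Listing 2.3 or 2.4. We assume that the enclosing region maps A with to, and the function name is hypothetical. The comments track the reference count of A.

```c
/* Hypothetical reconstruction of the Example 2 pattern, with the fix. */
void double_then_read(int *A, int n, int *host_copy) {
    #pragma omp target data map(to: A[0:n])   /* reference count of A: 0 -> 1 */
    {
        #pragma omp target map(from: A[0:n])  /* 1 -> 2 on entry, 2 -> 1 on exit: no copy out */
        for (int i = 0; i < n; i++)
            A[i] *= 2;

        /* The fix: force a device-to-host copy before the host reads A. */
        #pragma omp target update from(A[0:n])

        for (int i = 0; i < n; i++)
            host_copy[i] = A[i];              /* host read now sees the doubled values */
    }
}
```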

3 Background

OMPSan assumes certain practical use cases; for example, in Listing 2.3, a user would expect the updated value of A on line 12. That said, a skilled “ninja” programmer may very well expect A to remain stale, based on their knowledge and understanding of the complexities of the data mapping rules. Our analysis and error/warning reports are intended primarily for the former case.

3.1 Memory SSA Form

Our analysis is based on LLVM Memory SSA [10, 12], which is an imprecise implementation of Array SSA [7]. Memory SSA is a virtual IR that captures the def-use information for array variables. Every definition is identified by a unique name/number, which is then referenced by the corresponding uses.

The Memory SSA IR has the following kinds of instructions/nodes:

- INIT, a special node that signifies uninitialized or live-on-entry definitions.

- \(N' = MemoryDef(N)\), where \(N'\) is an operation that may modify memory, and N identifies the last write that \(N'\) clobbers.

- MemoryUse(N), an operation that uses the memory written by the definition N and does not modify memory.

- \(MemPhi(N_1, N_2, \ldots)\), an operation associated with a basic block, where each \(N_i\) is one of the may-reaching definitions that could flow into the basic block.
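These node kinds can be captured in a small C data structure, as a minimal sketch. The sketch collects the may-reaching definitions of a MemoryUse by looking through MemPhi nodes and stopping at INIT or MemoryDef nodes. All type and function names are hypothetical, and the code is unrelated to LLVM's actual MemorySSA classes. The test uses the node numbering of the walkthrough in Subsect. 5.2.

```c
#include <stdbool.h>

#define MS_MAX_OPS 4
#define MS_MAX_NODES 32

typedef enum { MS_INIT, MS_DEF, MS_USE, MS_PHI } MsKind;

typedef struct {
    MsKind kind;
    int nops;             /* DEF/USE: 1, PHI: one per incoming edge, INIT: 0 */
    int ops[MS_MAX_OPS];  /* ids of the heap versions this node reads or clobbers */
} MsNode;

/* Append to out[] every INIT/DEF node that may reach `id`, looking through
   MemPhi nodes; `seen` guards against cycles created by loop back edges. */
static int ms_collect(const MsNode *g, int id, bool *seen, int *out, int n) {
    if (seen[id])
        return n;
    seen[id] = true;
    if (g[id].kind == MS_INIT || g[id].kind == MS_DEF) {
        out[n++] = id;    /* a definition: stop here, it clobbers older versions */
        return n;
    }
    for (int k = 0; k < g[id].nops; k++)
        n = ms_collect(g, g[id].ops[k], seen, out, n);
    return n;
}

/* May-reaching definitions of the MemoryUse node `use_id`. */
int ms_reaching_defs(const MsNode *g, int use_id, int *out) {
    bool seen[MS_MAX_NODES] = {false};
    return ms_collect(g, g[use_id].ops[0], seen, out, 0);
}
```

Take a use of heap version 4, where 4 = MemPhi(2,5) and 2 = MemPhi(1,3). That use is reached by INIT (1) and by the two stores (3 and 5).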

We make the following simplifying assumptions to keep the analysis tractable:

- Given an array variable, we can find all the corresponding load and store instructions. Consequently, we cannot handle cases in which pointer analysis fails to disambiguate the memory that a pointer refers to.

- A MemoryDef node clobbers the array associated with its store instruction. As a result, a write to any array location is considered to update the entire array.

- We analyze only the array variables that are mapped to a target region.

3.2 Scalar Evolution Analysis

LLVM’s Scalar Evolution (SCEV) is a powerful technique for analyzing how the values of scalar variables change over the iterations of a loop. We can use SCEV to represent loop induction variables as chains of recurrences. This mathematical representation can then be used to analyze the index expressions of memory operations.

We implemented an array-section analysis that, given a load or store, uses LLVM’s SCEV analysis to compute the minimum and maximum values of the corresponding index of the memory access. If the analysis fails, we default to the maximum array size, which is either known from a static array declaration or can be extracted from the LLVM memory allocation instructions.
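The min/max computation and its fallback can be sketched in plain C. We assume the index has already been summarized as an affine recurrence start + step * k over trip_count iterations, which is the form SCEV's chains of recurrences express for simple loops. IndexRec, Section, and both functions are hypothetical stand-ins and do not belong to the LLVM API.

```c
#include <stdbool.h>
#include <stdint.h>

/* Inclusive range of element indices touched by a load/store. */
typedef struct { int64_t first, last; } Section;

/* Hypothetical summary of an index: idx(k) = start + step * k, k = 0 .. trip_count-1. */
typedef struct {
    bool affine;          /* false if the index is not an affine recurrence */
    int64_t start, step, trip_count;
} IndexRec;

/* Min/max of the index; a non-positive trip count is treated as "unknown"
   here, and unknown cases fall back to the whole array. */
Section access_section(IndexRec r, int64_t array_len) {
    if (!r.affine || r.trip_count <= 0)
        return (Section){0, array_len - 1};
    int64_t end = r.start + r.step * (r.trip_count - 1);  /* value on the last iteration */
    return r.step >= 0 ? (Section){r.start, end} : (Section){end, r.start};
}

/* Does an OpenMP array section A[lb:len] cover every index in s? */
bool section_covered(Section s, int64_t lb, int64_t len) {
    return s.first >= lb && s.last <= lb + len - 1;
}
```

For example, a loop over A[0] through A[99] yields the section [0, 99], which a map of A[0:50] does not cover. This is the kind of partial-section mismatch reported as a warning in Sect. 4.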

4 Our Approach

In this section, we outline the key steps of our approach with the algorithm and show a concrete example to illustrate the algorithm in action.

4.1 Algorithm

Algorithm 1 shows an overview of our data map analysis algorithm. First, we collect all the array variables used in all the map clauses in the entire module. Then, line 5 calls the function ConstructArraySSA, which constructs the Array SSA for each of the mapped array variables. (In this paper, we use “Array SSA” to refer to our extensions to LLVM’s Memory SSA form that leverage the capabilities of the Array SSA form [7].) Next, we call the function InterpretTargetClauses, which modifies the Array SSA graph in accordance with the map semantics of the program. Finally, ValidateDataMap checks reachability on the final graph to validate the map clauses, and generates a diagnostic report with the warnings and errors.

Example. Let us consider the example in Fig. 2a to illustrate our approach for analyzing data mapping clauses. ConstructArraySSA of Algorithm 1 constructs the Memory SSA form for arrays “A” and “C”, as shown in Fig. 2b. Then, InterpretTargetClauses removes the edges between host and device nodes, as shown in Fig. 2c, where host nodes are colored green and device nodes blue. Finally, the loop at line 29 of the function InterpretTargetClauses introduces the host-device/device-host memory copy edges, as shown in Fig. 2d. For example, L1 is connected to S2 with a host-device memory copy for the enter data map pragma with to: A[0:50] on line 5. We also connect the INIT node with L2 to account for alloc: C[0:100], which implies an uninitialized reaching definition in this example.

Lastly, the ValidateDataMap function traverses the graph, resulting in the following observations:

- (Error) Node S4:MemUse(5) is not reachable from its corresponding definition \(L2: 5 = MemPhi(0,6)\).

- (Warning) Only the partial array section A[0:50] is reachable from definition \(L1: 1 = MemPhi(0,2)\) to \(S2: MemUse(1)\langle 0:100 \rangle\).
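The edge-cutting and reachability idea behind InterpretTargetClauses and ValidateDataMap can be sketched in C on a toy graph. This is not Algorithm 1. Nodes are def/use sites tagged as host or device, and the sketch first cuts every edge that crosses environments. Each memory copy implied by a map clause then adds an edge back, and every baseline def-use pair is checked for reachability. All names are hypothetical.

```c
#include <stdbool.h>
#include <stdio.h>

#define MAXN 16

typedef enum { ENV_HOST, ENV_DEVICE } Env;

typedef struct {
    int n;                   /* number of def/use nodes */
    Env env[MAXN];           /* data environment of each node */
    bool edge[MAXN][MAXN];   /* edge[u][v]: value defined at u may flow to v */
} FlowGraph;

/* Drop every host<->device edge: data may only cross through copy edges. */
void cut_cross_env_edges(FlowGraph *g) {
    for (int u = 0; u < g->n; u++)
        for (int v = 0; v < g->n; v++)
            if (g->env[u] != g->env[v])
                g->edge[u][v] = false;
}

/* A map clause that implies a host-device or device-host memory copy. */
void add_copy_edge(FlowGraph *g, int from, int to) {
    g->edge[from][to] = true;
}

/* Iterative DFS: can the definition at src still reach the use at dst? */
bool reaches(const FlowGraph *g, int src, int dst) {
    bool seen[MAXN] = {false};
    int stack[MAXN], top = 0;
    stack[top++] = src;
    seen[src] = true;
    while (top > 0) {
        int u = stack[--top];
        if (u == dst)
            return true;
        for (int v = 0; v < g->n; v++)
            if (g->edge[u][v] && !seen[v]) {
                seen[v] = true;
                stack[top++] = v;
            }
    }
    return false;
}

/* Report every baseline (def, use) pair that the mapped program breaks. */
int validate_pairs(const FlowGraph *g, const int pairs[][2], int npairs) {
    int errors = 0;
    for (int k = 0; k < npairs; k++)
        if (!reaches(g, pairs[k][0], pairs[k][1])) {
            printf("error: use %d not reachable from def %d\n", pairs[k][1], pairs[k][0]);
            errors++;
        }
    return errors;
}
```

In the test below, the toy graph has four nodes: a host definition of A, a device use of A, a device definition of C, and a host use of C. With only map(to: A), the use of C on the host is reported, mirroring the error pattern above. Adding a copy-out edge for C removes the error.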

Section 6 contains other examples of the errors and warnings discovered by our tool.

5 Implementation

We implemented our framework in LLVM 8.0.0. Clang lowers the OpenMP constructs to runtime calls, so the LLVM IR contains only calls to the OpenMP runtime. This approach has several limitations for a high-level analysis like the one OMPSan performs. For example, the region of code to be offloaded to a device is opaque, since it is moved to a separate function that is in turn called from the OpenMP runtime library. As a result, it is challenging to perform a global dataflow analysis of the memory def-use information of the offloaded region. To simplify the analysis, we compile the program with Clang twice.

First, we compile the OpenMP program with the flag that enables parsing of OpenMP constructs (-fopenmp). We then compile it again without the flag, so that Clang ignores the OpenMP constructs and instead generates the baseline LLVM IR for the sequential version. During the OpenMP compilation pass, we run our analysis pass, which parses the runtime library calls and generates a CSV file that records all the user-specified “target map” clauses, as explained in Subsect. 5.1.

Next, we compile the program ignoring the OpenMP pragmas. On the LLVM IR generated for the sequential version, we perform a whole-program, context- and flow-sensitive dataflow analysis that constructs the memory def-use chains, as explained in Subsect. 5.2. This pass then validates whether the “target map” information recorded in the CSV file respects all the memory def-use relations present in the sequential version of the code.

5.1 Interpreting OpenMP Pragmas

Listing 5.1 shows a very simple user program with a target data map clause. Listing 5.2 shows the corresponding LLVM IR in pseudocode, after Clang introduces the runtime calls at line 5. We parse the arguments of this call to interpret the map construct. For example, the 3rd argument to the call at line 6 of Listing 5.2 is 1, which means that there is only one item in the map clause. The value loaded into ArgsBase on line 1 identifies the memory variable being mapped. ArgsSize, on line 3, gives the size of the corresponding array section, which determines where the section ends, starting from ArgsBase. ArgsMapType, on line 4, gives the map attribute used by the programmer, in this case “tofrom”.
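Decoding the map-type argument amounts to testing a few bit flags. In the sketch below, the flag values are an assumption: they are meant to mirror the OMP_TGT_MAPTYPE_* constants of LLVM's libomptarget from that period (to = 0x01, from = 0x02, always = 0x04, delete = 0x08), and should be checked against omptarget.h for a given LLVM version. Higher bits, such as the one Clang sets for kernel parameters, are simply ignored here. The helper names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed to mirror libomptarget's OMP_TGT_MAPTYPE_* bit flags. */
enum {
    MAPTYPE_TO     = 0x01,
    MAPTYPE_FROM   = 0x02,
    MAPTYPE_ALWAYS = 0x04,
    MAPTYPE_DELETE = 0x08,
};

/* Decode one element of the map-types array passed to the runtime call. */
const char *map_type_name(int64_t flags) {
    bool to = (flags & MAPTYPE_TO) != 0;
    bool from = (flags & MAPTYPE_FROM) != 0;
    if (flags & MAPTYPE_DELETE)
        return "delete";
    if (to && from)
        return "tofrom";
    if (to)
        return "to";
    if (from)
        return "from";
    return "alloc/release";   /* no transfer bits: alloc on entry, release on exit */
}

/* True if the 'always' modifier forces a copy regardless of the reference count. */
bool map_type_always(int64_t flags) {
    return (flags & MAPTYPE_ALWAYS) != 0;
}
```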

We wrote an LLVM pass that analyzes every such Runtime Library (RTL) call and tracks the value of each of its arguments, as explained above. Once we obtain this information, we use the rules in Fig. 1 to interpret the data mapping semantics of each clause. The data mapping semantics can be classified into the following categories:

- Copy In: a memory copy is introduced from the host to the corresponding list item in the device environment.

- Copy Out: a memory copy is introduced from the device to the host environment.

- Persistent Out: a device memory variable is not deleted; it persists on the device and is available to subsequent device data environments.

- Persistent In: the memory variable is available on entry to the device data environment from the last device invocation.

The examples in Subsect. 6.2 illustrate the above classification.
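The four categories can be assigned from the reference count alone, as in the following minimal C sketch. We assume that the count before entry or exit is already known, and the always modifier is not modeled. MapEffect, classify_entry, and classify_exit are hypothetical names. An extra NO_TRANSFER value covers a fresh alloc and a final release without from.

```c
#include <stdbool.h>

typedef enum { NO_TRANSFER, COPY_IN, COPY_OUT, PERSISTENT_IN, PERSISTENT_OUT } MapEffect;

/* Effect on entry to a device data environment, given the reference count
   before entry. */
MapEffect classify_entry(int refcount_before, bool has_to) {
    if (refcount_before > 0)
        return PERSISTENT_IN;                  /* reuse the copy left by an earlier device region */
    return has_to ? COPY_IN : NO_TRANSFER;     /* fresh allocation; uninitialized without 'to' */
}

/* Effect on exit, given the reference count before the decrement. */
MapEffect classify_exit(int refcount_before, bool has_from) {
    if (refcount_before == 0)
        return NO_TRANSFER;                    /* not present on the device */
    if (refcount_before > 1)
        return PERSISTENT_OUT;                 /* still referenced: stays on the device */
    return has_from ? COPY_OUT : NO_TRANSFER;  /* 1 -> 0: freed, copied back only with 'from' */
}
```

Under this classification, the inner map(from: A) of Example 2 exits with a count of 2. It is therefore Persistent Out rather than Copy Out, which is exactly why the host never sees the update.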

5.2 Baseline Memory Use Def Analysis

LLVM has an analysis called MemorySSA [10], a relatively cheap analysis that provides an SSA-based form for memory def-use and use-def chains. LLVM MemorySSA is a virtual IR that maps instructions to MemoryAccesses, each of which is one of three kinds: MemoryPhi, MemoryUse, or MemoryDef.

The operands of a MemoryAccess are versions of the heap before that operation. If the access can modify the heap, it produces a value that is the new version of the heap after the operation. Figure 3 shows the LLVM Memory SSA for the OpenMP program in Listing 5.3. The comments in the listing denote the LLVM IR and the corresponding MemoryAccess.

We have simplified this example to make it relevant to our context. LiveonEntry is a special MemoryDef that dominates every MemoryAccess within a function and implies that the memory is either undefined or defined before the function begins. The first node in Fig. 3 is a LiveonEntry node. The \(3=MemoryDef(2)\) node denotes a store instruction that clobbers heap version 2 and generates heap version 3; it represents line 8 of the source code. Whenever more than one heap version can reach a basic block, a MemoryPhi node is needed; for example, \(2=MemoryPhi(1,3)\) corresponds to the for loop on line 4. Two versions of the heap reach this node: heap 1 (\(1 = LiveonEntry\)) and, from the back edge, heap 3 (\(3=MemoryDef(2)\)). The next MemoryAccess, \(4=MemoryPhi(2,5)\), corresponds to the for loop on line 14. Again, the clobbering accesses that reach it are 2, from the previous for loop, and 5, from its loop body. The load of A on line 18 corresponds to MemoryUse(4), which records that the last MemoryAccess that could clobber this read is \(4=MemoryPhi(2,5)\). Then, \(5=MemoryDef(4)\) clobbers the heap to generate heap version 5; this corresponds to the write to array B on line 22. This is an important example of how LLVM deliberately trades off precision for speed. Instead of treating memory variables as disjoint partitions of the heap and disambiguating aliasing between them, it lets both stores/MemoryDefs in this example clobber the same heap partition. Finally, the read of B on line 29 corresponds to MemoryUse(4), with heap version 4 reaching this load. Since this last loop does not update memory, it needs no MemoryPhi node, but we have left the node empty in the graph to denote the loop entry basic block.
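The hypothetical C function below follows the shape the walkthrough describes: one loop writes A, a second loop reads A and writes B, and a third loop only reads B. It is not Listing 5.3. The comments repeat the MemoryAccess numbering from the walkthrough, and the numbering LLVM actually produces for this code may differ.

```c
/* Hypothetical program with the shape described for Listing 5.3. */
int memssa_shape(int *A, int *B, int n) {
    /* 1 = LiveonEntry */
    for (int i = 0; i < n; i++)      /* 2 = MemoryPhi(1, 3) at the loop header */
        A[i] = i;                    /* 3 = MemoryDef(2): store to A */

    for (int i = 0; i < n; i++) {    /* 4 = MemoryPhi(2, 5) at the loop header */
        int t = A[i];                /* MemoryUse(4): load of A */
        B[i] = 2 * t;                /* 5 = MemoryDef(4): store to B clobbers the same heap */
    }

    int sum = 0;
    for (int i = 0; i < n; i++)      /* no MemoryPhi: this loop only reads memory */
        sum += B[i];                 /* MemoryUse(4): load of B */
    return sum;
}
```

The load of B is attached to version 4 because MemorySSA does not separate A and B here. Recovering per-array chains is the job of the analysis described next.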

Now we can see the difference between the LLVM Memory SSA (Fig. 3) and the array def-use chains required for our analysis (Fig. 2). We developed a dataflow analysis that extracts the array def-use chains from the LLVM Memory SSA by disambiguating the array variable that each load/store instruction refers to. For any store instruction, for example the one on line 22 of Listing 5.3, we can analyze the LLVM IR and trace the value that the store refers to. According to the IR comment on line 19, that value is “B”.

We perform an analysis on the LLVM IR that tracks the set of memory variables that each LLVM load/store instruction refers to. It is a context-sensitive and flow-sensitive iterative dataflow analysis that associates each MemoryDef/MemoryUse with a set of memory variables. The result of this analysis is an Array SSA form for each array variable that tracks its def-use chain, similar to the example in Fig. 2.
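The core of "which array does this pointer refer to" can be illustrated with a small iterative fixpoint over a toy IR of array bases, GEPs, and phis. This sketch is deliberately simpler than the analysis described above: it is flow- and context-insensitive, and its value kinds and names are hypothetical. Each value gets a bitmask of the arrays it may point to, and the masks are propagated until nothing changes, which handles pointer phis on loop back edges.

```c
#include <stdbool.h>
#include <stdint.h>

typedef enum { V_ARRAY, V_GEP, V_PHI } VKind;

typedef struct {
    VKind kind;
    int array_id;   /* V_ARRAY: bit index of the array variable */
    int nops;       /* V_GEP: 1 (base pointer), V_PHI: 2 (incoming values) */
    int ops[2];
} Value;

/* pts[v] receives a bitmask of the arrays that value v may point to. */
void points_to(const Value *vals, int n, uint32_t *pts) {
    for (int v = 0; v < n; v++)
        pts[v] = vals[v].kind == V_ARRAY ? (1u << vals[v].array_id) : 0u;
    bool changed = true;
    while (changed) {                 /* iterate until no mask grows */
        changed = false;
        for (int v = 0; v < n; v++) {
            if (vals[v].kind == V_ARRAY)
                continue;
            uint32_t next = pts[v];
            for (int k = 0; k < vals[v].nops; k++)
                next |= pts[vals[v].ops[k]];
            if (next != pts[v]) {
                pts[v] = next;
                changed = true;
            }
        }
    }
}
```

A store whose address operand maps to a single bit can then be attributed to exactly one array. A mask with several bits set corresponds to the case the assumptions in Sect. 3.1 exclude.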

6 Evaluation and Case Studies

To evaluate OMPSan, we use the DRACC [1] suite, a benchmark for data race detection on accelerators that also includes several data mapping errors. Table 1 shows some distinct errors found by our tool in the benchmark [1] and in the examples of Sect. 2. We were able to find all 15 known data mapping errors in the DRACC benchmark.

6.1 Analysis Time

To estimate the overhead of our tool, we also measured the running time of the analysis. Table 2 shows the time to run OMPSan on a few SPEC ACCEL and NAS Parallel Benchmarks. Because of the context- and flow-sensitive dataflow analysis implemented in OMPSan, its analysis time can be significant; however, the analysis time is less than or equal to the -O3 compilation time in all cases.

6.2 Diagnostic Information

Another major use case for OMPSan is to help OpenMP developers understand the data mapping behavior of their source code. For example, Listing 6.5 shows a code fragment from the benchmark “FT” in the NAS suite. Our tool can generate the following diagnostic information for the current version of the data mapping clause.

6.3 Limitations

Since OMPSan is a static analysis tool, it has a few limitations:

- It supports statically and dynamically allocated array variables, but cannot handle dynamic data structures like linked lists. This could be addressed in future work through advanced static analysis techniques such as shape analysis.

- It cannot handle target regions inside recursive functions. This could be addressed in future work by improving our context-sensitive analysis.

- It can only handle compile-time constant array sections and constant loop bounds. Runtime expressions could be handled by adding static analysis support for comparing the equivalence of two symbolic expressions.

- It cannot handle declare target, since that requires analysis across LLVM modules.

- It may report false positives for irregular array accesses. For example, if only a small section of an array is updated, our analysis may assume that the entire array was updated. More expensive analyses, such as symbolic analysis, could be used to improve the precision of the static analysis.

- It may fail if Clang/LLVM introduces bugs while lowering OpenMP pragmas to RTL calls in the LLVM IR.

- It may report false positives if the OpenMP program relies on a dynamic reference count mechanism. A runtime debugging approach would be required to handle such cases.

Interestingly, we did not find any false positives in the benchmarks we evaluated.

7 Related Work and Conclusion

Managing data transfers to and from GPUs has always been an important problem in GPU programming, and several solutions have been proposed to help programmers manage data movement. CGCM [6] was one of the first systems to use static analysis to manage CPU-GPU communication. It was followed by [5], a dynamic tool for automatic CPU-GPU data management. The OpenMPC compiler [9] also proposed a static analysis to insert data transfers automatically. Lee et al. [8] proposed a directive-based approach for specifying CPU-GPU memory transfers, which included compile-time/runtime methods to verify the correctness of the directives and also identified opportunities for performance optimization. Pai et al. [13] proposed a compiler analysis that detects potential stale accesses, together with a runtime that initiates transfers as necessary, for the X10 compiler. Mendonça et al. [11] also infer OpenMP mapping clauses automatically using static analysis. OpenMP has also defined OMPT and OMPD [3, 4], standard APIs for performance analysis and debugging tools. Archer [2] is another important work that combines static and dynamic techniques to identify data races in large OpenMP applications.

In this paper, we have developed OMPSan, a static analysis tool that interprets the semantics of the OpenMP map clause and deduces the data transfers introduced by the clause. Our algorithm tracks the reference count of individual variables to infer the effect of the data mapping clauses on the host and device data environments. We have developed a dataflow analysis on top of LLVM Memory SSA to capture the def-use information of array variables. We use LLVM Scalar Evolution to improve the precision of our analysis by estimating the range of locations accessed by a memory access, which also enables OMPSan to handle array sections. OMPSan then computes how the data mapping clauses modify the def-use chains of the baseline program. It uses this information to validate whether the data mapping in the OpenMP program respects the original def-use chains of the baseline sequential program. Finally, OMPSan reports diagnostics to help developers debug and understand the usage of map clauses in their programs. We believe the analysis presented in this paper can be developed further to support data mapping optimizations as well. We also plan to combine our static analysis with a dynamic debugging tool, which would improve the performance of the dynamic tool and address the limitations of the static analysis.

References

[1] Aachen University: OpenMP Benchmark. https://github.com/RWTH-HPC/DRACC

[2] Atzeni, S., et al.: Archer: effectively spotting data races in large OpenMP applications. In: 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS), pp. 53–62, May 2016. https://doi.org/10.1109/IPDPS.2016.68

[3] Eichenberger, A., et al.: OMPT and OMPD: OpenMP tools application programming interfaces for performance analysis and debugging. In: International Workshop on OpenMP (IWOMP 2013) (2013)

[4] Eichenberger, A.E., et al.: OMPT: an OpenMP tools application programming interface for performance analysis. In: Rendell, A.P., Chapman, B.M., Müller, M.S. (eds.) IWOMP 2013. LNCS, vol. 8122, pp. 171–185. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40698-0_13

[5] Jablin, T.B., Jablin, J.A., Prabhu, P., Liu, F., August, D.I.: Dynamically managed data for CPU-GPU architectures. In: Proceedings of the Tenth International Symposium on Code Generation and Optimization, CGO 2012, pp. 165–174. ACM, New York (2012). https://doi.org/10.1145/2259016.2259038

[6] Jablin, T.B., Prabhu, P., Jablin, J.A., Johnson, N.P., Beard, S.R., August, D.I.: Automatic CPU-GPU communication management and optimization. SIGPLAN Not. 46(6), 142–151 (2011). https://doi.org/10.1145/1993316.1993516

[7] Knobe, K., Sarkar, V.: Array SSA form and its use in parallelization. In: Proceedings of the 25th ACM SIGPLAN-SIGACT Symposium on Principles of Programming Languages, POPL 1998, pp. 107–120. ACM, New York (1998). https://doi.org/10.1145/268946.268956

[8] Lee, S., Li, D., Vetter, J.S.: Interactive program debugging and optimization for directive-based, efficient GPU computing. In: 2014 IEEE 28th International Parallel and Distributed Processing Symposium, pp. 481–490, May 2014. https://doi.org/10.1109/IPDPS.2014.57

[9] Lee, S., Eigenmann, R.: OpenMPC: extended OpenMP programming and tuning for GPUs. In: Proceedings of the 2010 ACM/IEEE International Conference for High Performance Computing, Networking, Storage and Analysis, SC 2010, pp. 1–11. IEEE Computer Society, Washington, DC (2010). https://doi.org/10.1109/SC.2010.36

[10] LLVM: LLVM MemorySSA. https://llvm.org/docs/MemorySSA.html

[11] Mendonça, G., Guimarães, B., Alves, P., Pereira, M., Araújo, G., Pereira, F.M.Q.: DawnCC: automatic annotation for data parallelism and offloading. ACM Trans. Arch. Code Optim. 14(2), 13:1–13:25 (2017). https://doi.org/10.1145/3084540

[12] Novillo, D.: Memory SSA - a unified approach for sparsely representing memory operations. In: Proceedings of the GCC Developers’ Summit (2007)

[13] Pai, S., Govindarajan, R., Thazhuthaveetil, M.J.: Fast and efficient automatic memory management for GPUs using compiler-assisted runtime coherence scheme. In: Proceedings of the 21st International Conference on Parallel Architectures and Compilation Techniques, PACT 2012, pp. 33–42. ACM, New York (2012). https://doi.org/10.1145/2370816.2370824

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Barua, P., Shirako, J., Tsang, W., Paudel, J., Chen, W., Sarkar, V. (2019). OMPSan: Static Verification of OpenMP’s Data Mapping Constructs. In: Fan, X., de Supinski, B., Sinnen, O., Giacaman, N. (eds) OpenMP: Conquering the Full Hardware Spectrum. IWOMP 2019. Lecture Notes in Computer Science, vol 11718. Springer, Cham. https://doi.org/10.1007/978-3-030-28596-8_1

DOI: https://doi.org/10.1007/978-3-030-28596-8_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-28595-1

Online ISBN: 978-3-030-28596-8

eBook Packages: Computer Science, Computer Science (R0)