Abstract

The variance-covariance method makes use of covariances (volatilities and correlations) of the risk factors and the sensitivities of the portfolio values with respect to these risk factors with the goal of approximating the value at risk.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

The variance-covariance method makes use of covariances (volatilities and correlations) of the risk factors and the sensitivities of the portfolio values with respect to these risk factors with the goal of approximating the value at risk. This method leads directly to the final result, i.e., the portfolio’s value at risk, based on the properties of the assumed portfolio value’s probability distribution; no simulation of market data scenarios is involved. The variance-covariance method utilizes linear approximations of the risk factors themselves throughout the entire calculation, often neglecting the drift as well. In view of Eq. 21.25, we have

The main idea characterizing this method, however, is that the portfolio value V is expanded in its Taylor series as a function of its risk factors S i, i = 1, …n, and approximated by breaking off after the first or second order term. Let

denote the vector of risk factors. The Taylor expansion for the change in portfolio value δV (S) up to second order is

In Eq. 22.2, the portfolio value has been approximated by its Taylor expansion. In Eq. 22.3, the risk factor changes were linear approximate according to Eq. 22.1, and finally, in Eq. 22.4, the average returns have been neglected. The last line in 22.4 is referred to as the delta-gamma approximation. Taking the Taylor expansion up to linear order only is called delta approximation correspondingly, resulting in an approximation solely consisting of the first of the two sums appearing in the last equation in 22.4.

The abbreviations Δi and Γij, as usual, denote the sensitivities (at time t) of V with respect to the risk factors

Note here that the mixed partial derivatives arise in the expression for Γij. In the literature, the matrix Γij is sometimes called the Hessian matrix.

We will see below that the sensitivities usually appear in connection with the current levels S i(t) and S j(t). The notation \(\widetilde {\Delta } _{i}\) and \(\widetilde {\Gamma }_{ij}\) will be used to denote these sensitivities multiplied by the current levels:

Using \(\widetilde {\Delta }_{i}\) and \(\widetilde {\Gamma }_{ij}\) will prove to substantially simplify the notation. Interpreting the \(\widetilde {\Delta }_{i}\) as components of a vector \(\widetilde {\boldsymbol {\Delta }}\), and \(\widetilde {\Gamma }_{ij}\) as the elements of a matrix \(\widetilde {\boldsymbol {\Gamma }}\), Eq. 22.4 can be written in vector form as

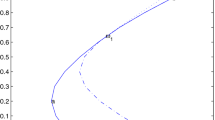

The approximation of V (S) through its Taylor series expansion up to second order is presented in Fig. 22.1 for a straddle (a portfolio made up of a call and a put option) on a risk factor S. The figure has been extracted from the Excel workbook Straddle.xlsm available in the download section [50]. We can recognize that the delta-gamma approximation for a simple payoff profile is quite a good approximation. For somewhat more complicated portfolios, however, the delta-gamma approximation fails to be a reasonable representation of the payoff profile. In such cases, we recommend using one of the simulation methods presented in Chap. 23 instead of the Delta-Gamma method. However, the drawback of the simulation approach is of course the significant increase of numerical computations required.

1 Portfolios vs. Financial Instruments

Although we continually refer to portfolio sensitivities, the same results hold for individual financial instruments as well. In fact, sensitivity of a portfolio composed of financial instruments on the same underlying as described in Sect. 12.4.1 can be obtained by simply adding together the sensitivities of the individual instruments. This is the approach most commonly taken when calculating portfolio sensitivities. For the sake of clarity, we once again present this method explicitly here.

Consider a portfolio with a value V consisting of M different financial instruments with values V k ,k = 1, …M. N k denotes the number of each instrument with value V k held in the portfolio. The total value of the portfolio is naturally the sum of the values of each individual position

The change in value δV (t) of this portfolio is (approximated up to second order)

where the sensitivities of the financial instruments have been introduced in the last step. For example, \(\Delta _{i}^{k}\) is the linear sensitivity of the kth financial instrument in the portfolio with respect to the ith risk factor, etc.:

Simply rearranging the terms makes it clear that the summing over the index k (which denotes the different financial instruments) yields the portfolio sensitivities:

Thus, a portfolio sensitivity like Δi, for example, contains the sensitivities of all instruments in the portfolio (including all the position sizes N k) with respect to the considered risk factor. This then yields (as is intuitively clear) the sensitivities of the entire portfolio as sums over the sensitivities of all instruments in the portfolio:

In practice, this procedure is usually referred to as position mapping. Using the approximation in Eq. 22.1 for the change in risk factor, we finally obtain an expression for the portfolio’s change in value as

using the modified portfolio sensitivities defined as in Eq. 22.5:

Adding the sensitivities of the financial instruments and multiplying by the current levels of the risk factors to obtain these modified portfolio sensitivities is sometimes referred to as VaR mapping.

2 The Delta-Normal Method

In the delta-normal method, the Taylor series 22.4 of the portfolio value is broken off after the linear term.

2.1 The Value at Risk with Respect to a Single Risk Factor

For a single risk factor this means

with the sensitivity Δ := ∂V∕∂S. The change in the portfolio’s value is thus approximated to be a linear function of the change in the underlying risk factor. This corresponds to the situation described in Sect. 21.3. There, the constant of proportionality (the sensitivity) was not Δ but N or − N for a long or short position, respectively. The linear approximation implies intuitively that a portfolio with a linear sensitivity Δ with respect to a risk factor can be interpreted as a portfolio consisting of Δ risk factors. The only subtlety in this argumentation is that in Sect. 21.3, we distinguished between a long and a short position, treating the two cases differently on the basis of whether the proportionality constant N was positive or negative (which leads to the two different VaRs in Eq. 21.16). However, we cannot know a priori whether Δ is greater or less than 0. We do know, however, that V is linear and in consequence, a monotone function of S. Therefore, in the sense of Eq. 21.6, the results following from Eq. 21.16 hold with the correspondence \(\Delta \widehat {=}N\) for Δ > 0, and \(\Delta \widehat {=}-N\) for Δ < 0. Using this fact allows us to write

using the notation \(\widetilde {\Delta }:=S(t)\Delta =S(t)\partial V/\partial S\). As usual, Q 1−c is the (1 − c) percentile of the standard normal distribution.Footnote 1 The maximum function in Eq. 21.6 effects the correct choice for the VaR. If Δ > 0, the lower bound of the confidence interval of the risk factorFootnote 2 is relevant and consequently the VaR function as defined above takes on the value corresponding to a long position in Eq. 21.16. Likewise for Δ < 0, the upper bound of the confidence interval of the risk factorFootnote 3 is relevant and the above defined maximum function takes on the value corresponding to the VaR of the short position in Eq. 21.16.

In all our deliberations up to this point, only the portfolio value has been approximated with Eq. 22.10. The change in the risk factor in Eq. 22.11 is still exact. Approximating this risk factor change with Eq. 22.1, the VaR becomes

This corresponds exactly to Eq. 21.19, since \(\widetilde {\Delta }\widehat {=}N\) for \(\widetilde {\Delta }>0\) and \(\widetilde {\Delta }\widehat {=}-N\) for \(\widetilde {\Delta }<0\).

The common summand \(-\widetilde {\Delta }\mu \delta t\) can now be taken out of the maximum function

In this approximation, the maximum function produces precisely the absolute value of the risk which is caused by the volatility of the risk factor. A positive drift μ of the risk factor reduces the portfolio risk when \(\widetilde {\Delta }>0\) (intuitively, the portfolio then represents a long position). If, on the other hand, the portfolio sensitivity is negative, i.e., \(\widetilde {\Delta }<0\), a positive drift increases the portfolio risk (intuitively, the portfolio represents a short position). The drift’s influence is of course lost if the drift is neglected in the approximation. The value at risk then reduces to

where the absolute value makes it immediately clear that the sign of the portfolio sensitivity no longer plays a role.

2.2 The Value at Risk with Respect to Several Risk Factors

In the previous section, linear approximations enabled us to reduce the VaR with respect to a single risk factor to that of a position consisting of Δ instruments representing the risk factor. We were then able, as was done in Sect. 21.3, to deduce information about the unknown distribution of the portfolio’s value V from the known distribution of the risk factorS. The extension of these results to the case of several risk factors is not trivial even for the delta-normal approximation. Only by using the roughest approximation in Eq. 22.1 for the change in the risk factors, namely δS i(t) ≈ S i(t)δZ i, can we manage to avoid involving the distribution of V in the discussion.

The approximation Eq. 21.26, i.e. δS(t) ≈ S(t)δZ, led to the value at risk Eq. 22.13 with respect to a single risk factor. Squaring both sides of this equation yields

since the approximation in Eq. 21.26 allows the approximation of the variance of δS(t) with \(S(t)^{2} \operatorname *{var}\left [ \delta Z\right ] \). On the other hand, the variance of V can be calculated from Eq. 22.10 simply as

This means that this approximation can be used to express the square of the value at risk in terms of a multiple of the variance of the portfolio:

Now, only the variance of the portfolio’s value needs to be determined for the computation of the VaR and not its distribution or its percentiles.

If several risk factors are involved, Eq. 22.9 can be used to write the portfolio’s change in value, δV , in the approximation given by Eq. 21.26, as a linear combination of normally distributed random variables δZ i (with deterministic coefficients \(\widetilde {\Delta }_{i}\))

The variance of a sum of random variables is equal to the sum of the covariances of these random variable as can be seen from Eq. A.17. The variance of the portfolio value is thus

where the definition of the covariance matrix in Eq. 21.22 was used in the last step. This means that the value at risk can be approximated as

This is the central equation for the delta-normal method. It summarizes all assumptions, approximations, and computation methods of the delta-normal method.Footnote 4

In the linear approximation, the effect of the drifts can be subsequently introduced into the approximation. The expected change in portfolio value is calculated using the deltas and the drifts of the risk factors. Analogously to Eq. 22.12 for a single risk factor, this expected change is then subtracted from the value at risk of the portfolio given in Eq. 22.15:

The delta-normal approach to the calculation of the value at risk can be summarized as follows:

-

Calculate the sensitivities of the portfolio with respect to all risk factors.

-

Multiply the covariance matrix with the sensitivities of the portfolio and the current values of the risk factors as in Eq. 22.14 to obtain the variance of the portfolio. The covariance matrix’s elements consist of the product of volatilities and correlations of the risk factors as defined in Eq. 21.22.

-

Multiply the portfolio variance as in Eq. 22.15 by the liquidation period and the square of the percentile corresponding to the desired confidence interval (for example, − 2.326 for 99% confidence).

-

The square root of the thus obtained number is the value at risk of the entire portfolio, neglecting the effect of the drifts of the risk factors.

-

The effect of the drifts can be taken into account using Eq. 22.17.

For future reference we re-write the final Value at Risk in Eq. 22.17 in terms of the covariances for the logarithmic changes and in terms of the covariances of the returns. According to Eqs. 21.27 and 21.28 the difference is an overall factor δt:

Here, the r i are the historic portfolio returns over holding periods of length δt, each return annualized.

These two forms of the VaR are very important in practice, when one is given historical time series of risk factor prices S i or annualized risk factor returns r i rather then the volatilities and correlations needed in Eq. 22.17, which otherwise would have to be calculated or bought from some market data vendor.

3 The Delta-Gamma Method

The delta-gamma method for calculating the portfolio’s VaR makes use of the Taylor series expansion of the value of the portfolio up to and including the second order terms along with the approximation in Eq. 22.1 for the risk factors. The starting point for the delta-gamma method is thus the last line in Eq. 22.4, which when written in vector notation is given by

where

The right-hand side of Eq. 22.19 can not be written as the sum of the contributions of each risk factor as was the case for the delta-normal method:

The contributions of the individual risk factors can not be considered separately since they are coupled in the above equation by the matrix \(\widetilde {\boldsymbol {\Gamma }}\). Furthermore the random variables δZ j are not independent. They are correlated through the covariance matrix δΣ. Two essential elements of the method presented here areFootnote 5:

-

Using the Cholesky decomposition of the covariance matrix δΣ to transform the δZ j into independent random variables.

-

Diagonalizing the gamma matrix \(\widetilde {\boldsymbol {\Gamma }}\) thereby decoupling the contributions of the individual risk factors.

Also after the Cholesky decompositions, and in contrast to the delta-normal case, it is still not possible in the situation of Eq. 22.19 to reduce the VaR with respect to a risk factor to the VaR of a position consisting of Δ of these risk factors. Thus the (unknown) distribution of the portfolio value can no longer be substituted by the (known) distribution of the individual risk factors, as could still be done in Sect. 21.3. Instead, the distribution of δV must be determined directly in order to calculate the value at risk defined in Eqs. 21.2 or 21.3. A third essential step of the delta-gamma method presented here involves the determination of the distribution of δV .

3.1 Decoupling of the Risk Factors

Motivated by Eq. 21.40, we first introduce a matrix A satisfying the property 21.35. This matrix can be constructed through the Cholesky decomposition of the covariance matrix as described in detail in Sect. 21.5.3. This matrix transforms the correlated δZ i into uncorrelated random variables. With this goal in mind, we rewrite Eq. 22.19, first introducing identity matrices into the equation and then replacing them with AA −1 or (A T)−1A T as follows:

In the penultimate step, the property 21.39 has been used; recall that this holds for every invertible matrix. In the last step, the parentheses are intended to emphasize the fact that δZ only appears in combination with A −1. We have shown before that the components of the vector A −1δZ are iid random variables, see Eq. 21.40. We can therefore write

Thus, the first goal has been accomplished. The δZ i have been transformed into iid random variables δY i.

Because \(\widetilde {\boldsymbol {\Gamma }}\) is by definition a symmetric matrix, i.e., \(\widetilde {\Gamma }_{ij}=\widetilde {\Gamma }_{ji}\), we can show that the newly defined matrix M is symmetric as well:

3.2 Diagonalization of the Gamma Matrix

The next step is to decouple the contributions to δV of the individual random variables in Eq. 22.20. This is accomplished by diagonalizing the gamma matrix, or more precisely, the transformed gamma matrix M introduced in Eq. 22.20. Diagonalizing a matrix is a standard procedure in linearalgebra. We refer the reader to the relevant literature.Footnote 6 We nevertheless take the opportunity to demonstrate the fundamental operations for diagonalizing a matrix here since they contain essential elements of the practical value at risk computations to be performed in the delta-gamma method.

The eigenvectorse i of a matrix M are the non-zero vectors which are mapped by M to the same vector multiplied by a number (called a scalar in algebra):

These scalars λ i are called eigenvalues of the matrix. As known from linear algebra, an equation of this kind has a non-trivial solution e i ≠ 0 if and only if the matrix \(\left (\mathbf {M}-\lambda _{i}\mathbf {1}\right )\) is singular. For this to be the case, the determinant of this matrix must be zero:

The solutions of Eq. 22.22 are the eigenvaluesλ i. Having determined these values, they can be substituted into Eq. 22.21 to determine the eigenvectorse i of the matrix. The eigenvectors have as yet only been defined up to a multiplicative scalar since if e i solves Eq. 22.21 then ce i does as well, for any arbitrary scalar c. The uniqueness of the eigenvalues can be guaranteed by demanding that the eigenvectors have norm 1:

As is known from linear algebra, a symmetric, non-singular n × n matrix has n linearly independent eigenvectors which are orthogonal. This means that the inner product of each pair of different eigenvectors equals zero (graphically: the angle formed by the two vectors is 90 degrees). Together with the normalization the eigenvectors thus have the following property

where δ ij denotes the well-known Kronecker delta. A collection of vectors satisfying this property is called orthonormal. Since we have shown that the n × n matrix M in Eq. 22.20 is symmetric, we can be sure that it indeed has n orthonormal eigenvectors satisfying Eq. 22.24.

To clarify the notation for these eigenvectors: The subscript k and the superscript i of \(e_{k}^{i}\) identify this value as the k-th component of the i-th eigenvector e i.

A matrix O can now be constructed whose column vectors are composed of the eigenvectors of M:

The jth eigenvector e j is in the jth column of the matrix. In the ith row, we find the ith components of all eigenvectors. As we will soon see, this matrix is an indispensable tool for the purpose of the diagonalization. As can be immediately verifiedFootnote 7

and therefore also

Equation 22.26 characterizes a group of matrices known as orthonormal transformations. Applying such a matrix to a vector effects a rotation of the vector.

The eigenvalues of the matrix M can be used to construct a matrix as well, namely the diagonal matrix

From Eq. 22.21, it follows immediately that the relation

holds for the matrices M, O and λ. Such matrix equations can often be verified quite easily by comparing the matrix elements individually:

The decisive step in the above proof is the first equality in the second line where the eigenvector equation 22.21 was used. Multiplying both sides of this equation from the left by the matrix O T and using Eq. 22.26 directly yields the desired diagonalization of M, since λ is a diagonal matrix:

Multiplying both sides of Eq. 22.29from the right by the matrix O T and using Eq. 22.27, also yields a very useful representation of M, namely the spectral representation (also referred to as eigenvectordecomposition)

We are now in a position to introduce the diagonalized matrix O TMO into Eq. 22.20 by inserting identity matrices in Eq. 22.20 and subsequently replacing them by OO T. Equation 22.27 ensures that equality is maintained.

In the last equality, the parentheses are meant to emphasize that δY appears only in combination with O T. In consequence, we can write

The δY i were iid, standard normally distributed random variables. This was accomplished in the previous section by the mapping A −1. Now to achieve the diagonalization of the gamma matrix, the random variables must undergo a further transformation under the mapping O T. The question remains as to whether the accomplishments of the previous section was undone by this new transformation, in other words, whether the transformed random variables have remained independent. We therefore consider the covariance of the new random variables

The covariances have remained invariant under the transformation O T. Thus, the new random variables are also uncorrelated and all have variance 1. Also, the zero expectation does not change under the transformation O T:

Since matrix multiplication is a linear transformation (we are operating in the realm of linear algebra), the form of the distribution remains the same as well. In summary, the new random variables are uncorrelated and all have the same standard-normal distribution. We have argued after Eq. 21.40 that such variables are indeed iid random variables. Summarizing the above deliberations, we can write for the δX i:

We have only used property 22.26, i.e., the iid property of random variables remains invariant under every orthonormal transformation.

If we define a “transformed sensitivity vector” as

(which implies for its transposed \({\mathbf {L}}^{T}=\widetilde {\boldsymbol {\Delta }}^{T}\mathbf {A\mathbf {O}}\)), the portfolio-change Eq. 22.31 can be brought into the following simple form

or, expressed component-wise

The change in the portfolio’s value is now the decoupled sum of the individual contributions of iid random variables as was our original intention.

At this stage, we collect all transformations involved in mapping the original random variables in Eq. 22.19 into the iid random variables in the above expression Eq. 22.32:

From Eq. 22.27 we know that \({\mathbf {O}}^{T}{\mathbf {A}}^{-1}= {\mathbf {O}}^{-1}{\mathbf {A}}^{-1}=\left ( \mathbf {AO}\right ) ^{-1}\). We now recognize that all of these transformations can be represented with a single matrix defined as

With this matrix, the transformations become simply

The matrix Ddirectly diagonalizes the gamma matrix \(\widetilde {\boldsymbol {\Gamma }}\) (as opposed to O, which diagonalizes the matrix M ). In addition, D is by definition, an orthonormal transformation (a “rotation”) of A, the Cholesky decomposition of the covariance matrix. D is likewise a “square root” of the covariance matrix, since the square of a matrix remains invariant under orthonormal transformations of the matrix. Explicitly:

Therefore, the matrix D satisfies both tasks, namely the decoupling of the gamma matrix and the transformation of the correlated random variables into uncorrelated ones.

As a little consistence check, using the matrix D we immediately recognize the equivalence of Eqs. 22.32 and 22.19:

where Eq. 21.39 was again used in verifying this equivalence.

3.3 The Distribution of the Portfolio Value Changes

Having decoupled the individual contributions to δV in Eq. 22.33 into standard normally distributed iid random variables, we can now determine the distribution of the sum. δV is nevertheless not simply the sum of normally distributed random variables alone, since the expression also includes the square of normally distributed random variables. These additional random variables represent the difference in complexity compared to the delta-normal method. According to Sect. A.4.6, the square of a standard normally distributed random variable is χ 2-distributed with one degree of freedom. We can thus write Eq. 22.33 as

However, the \(\widetilde {X}_{i}\) are not independent random variables since we obviously have

We need to re-write δV in such a way that every term appearing is statistically independent of every other term. First note that a δX i is independent of every other term in δV if and only if the corresponding eigenvalue λ i is zero, since in this case the corresponding \(\widetilde {X}_{i}\) does not appear in the sum. We will emphasize this by introducing the index set J which contains only the indices of non-zero eigenvalues:

With this index set we can writeFootnote 8

The first sum in Eq. 22.38 is actually a sum of normally distributed random variables and as such is again a normally distributed random variable which we denote by u 0. The expectation of this random variable can be calculated as

and the variance is

with the components L i of the transformed sensitivity vector L defined in Eq. 22.35. Thus

Consider now the sums over j ∈ J in Eq. 22.38. To combine the dependent random numbers δX j and \(\delta X_{j}^{2}\) in the square brackets of Eq. 22.38 into one single random number, we complete the square for each j ∈ J:

Since δX j is a standard normal random variable, we have

Therefore, according to Eq. A.94 in Sect. A.4.6 \(u_{j}:=\left ( \delta X_{j}+L_{j}/\lambda _{j}\right ) ^{2}\) has a non-centralχ 2-distribution with one degree of freedom and non-central parameter \(L_{j}^{2}/\lambda _{j}^{2}\):

In summary, δV has now become a sum of non-central χ 2-distributed random variables u j plus a normally distributed random variable u 0 (plus a constant), where all the random variables appearing are independent of each other:

The problem now consists in determining the distribution of the sum of independent but differently distributed random variables (or at least its percentiles). According to Eq. 21.4, the value at risk at a specified confidence level is then computed with precisely these percentiles or, equivalently, by inverting the cumulative distribution function of δV .

3.4 Moments of the Portfolio Value Distribution

We begin by calculating the moments of the random variable δV . The first moments, the expectation, the variance, etc. (see Eqs. A.22 and A.23) have intuitive interpretations and their explicit forms provide an intuitive conception of the distribution. In addition, approximations for the distribution (the Johnson approximation) and for the percentiles (the Cornish-Fisher approximation) will later be presented which can be computed with the moments.

The Moment Generating Function is a very useful tool for calculating the moments. The moment generating function (abbreviated as MGF) G x of a random variable x with density function \(\operatorname {pdf}(x)\) is defined in Sect. A.3.1 by

where in the last step the exponential function e sx has been expanded in its Taylor series. The name moment generating function stems from the fact, stated mathematically in Eq. A.27, that the derivatives of the function G x(s) with respect to s evaluated at s = 0 generate the moments of the random variable x

As demonstrated in Sect. A.3.1, the MGF can be explicitly computed for many distributions from their integral representation, Eq. A.25. In particular, according to Eq. A.57, the MGF of u 0, i.e., the MGF of a N\((0,\sum _{i\notin J}L_{i}^{2})\) distributed random variable is given by

while Eq. A.95 gives the MGF of u j, i.e., of a non-central χ 2-distribution with one degree of freedom χ 2(1, (L j∕λ j)2)

This function is well-defined for s < 1∕2, which is sufficient for our needs since as is clear from Eq. 22.41 that we are particularly interested in values of s in a neighborhood of zero.

The usefulness of the MGF in the calculation of the distribution of δV in Eq. 22.39 stems from the fact that according to Eq. A.30 the MGF of the sum of independent random variables x, y is simply the product of the MGFs of each of the random variables:

and that furthermore, from Eq. A.31

for all non-stochastic values a, b and random variables x. The MGF of δV can thus be written as the product of the each of the MGFs appearing in the sum:

The MGFs of each of the individual random variables are given explicitly in Eqs. 22.42 and 22.43. Substituting s by λ js∕2 in Eq. 22.43 yields the required MGF for the argument λ js∕2:

We thus obtain an explicit expression for the moment generating function of the distribution of the portfolio’s value changes:

Using the same denominator in the second \(\exp \)-function finally yields

This can be simplified even further by the following trick: Since λ i = 0 for all i∉J, we can re-write the first \(\exp \)-function in the following way:

Using this form in Eq. 22.46 allows us to write δV very compactly as a product over all indexes j = 1, …, n

This function is well-defined for all \(s<\min _{j\in J}\left ( \frac {1}{2|\lambda _{i}|}\right ) \), which is sufficient for our needs since because of Eq. 22.41, we are only interested in values of s which are close to zero.

Now, using Eq. 22.41, arbitrary moments of δV can be computed. We start the calculation of the first moment by introducing the abbreviation

Application of the well-known product rule yields

The derivative we need to calculate is

For s = 0 almost all terms vanish and we are left with λ j∕2. Thus E[δV ] becomes simply

The expectation of the portfolio’s value changes in the delta-gamma approximation Eq. 22.4 is thus just half the sum of the eigenvalues of the transformed gamma matrix M. This is by definition half the trace of the eigenvalue matrix λ. With Eqs. 22.35 and 22.36 we arrive at the conclusion that the expectation of δV equals half the trace of the product of the gamma matrix and the covariance matrixFootnote 9:

Note that the drifts of all risk factors have been neglected (see Eqs. 22.19 and 22.1). The risk factors are thus all approximated to be drift-free. But then, for a portfolio depending only linearly on the risk factors (or in the linear approximation of the delta-normal method) the expectation (the drift) of the portfolio value changes also equals zero. In Eq. 22.48, the expectation (the drift) of the portfolio changes is not zero because non-linear effects were taken into consideration. It is readily seen that the gamma matrix gives rise to the drift of δV in contrast to the linear sensitivities \(\widetilde {\boldsymbol {\Delta }}\) which do not appear in 22.48.

To find out more about the distribution of δV , we proceed by computing its variance. According to Eq. A.5 the variance is the second central moment which can be calculated via Eq. A.29:

with the abbreviation

The second derivative of this product is quite involved. We nonetheless present it explicitly here to demonstrate how moments of δV can be determined in practice. Such moments are needed quite often, for instance for the Cornish-Fisher expansion. We start by repeatedly applying the product rule to arrive at

Thus, we mainly have to differentiate a j. For ease of notation, we introduce yet another abbreviation, namely

The first derivative with respect to a j is now calculated as

For s = 0 the first two terms in the last line vanish and the last two terms just compensate each other so that ∂a j∕∂s vanishes completely at s = 0:

Therefore only the term involving the second derivative in Eq. 22.50 contributes to Eq. 22.49. Using the result 22.51, this second derivative is explicitly:

Most terms above have s as a factor. They all vanish for s = 0. The only terms remaining are:

Inserting all these results into Eq. 22.49 finally yields

In the first step we used Eq. 22.50 and \(\left . a_{k}\right \vert { }_{s=0}=1\). In the second step the result 22.52 was inserted and in the third step the result 22.53. The sum \(\sum L_{j}^{2}\) is just the square of the transformed sensitivity vector and \(\sum \lambda _{j}^{2}\) is the trace of the square of the matrix of eigenvalues, i.e.,

Finally, making use of the transformations in Eq. 22.35 and applying Eq. 22.36, the variance of the portfolio’s value change in the framework of the delta-gamma method becomes

Note that the first term resulting from the linear portfolio sensitivities \(\widetilde {\boldsymbol {\Delta }}\) is identical to the portfolio variance in the delta-normal method (see Eq. 22.14). The non-linear sensitivities \(\widetilde {\boldsymbol {\Gamma }}\) effect a correction of the linear portfolio variance which has a form similar to the drift correction from the non-linear term in Eq. 22.48. While in Eq. 22.48, the trace of the product of the gamma matrix and the covariance matrix was relevant, now the trace of the square of this product is required for computing the variance.

The variance is the second central moment of the random variable. The central momentsμ i of a random variable are defined in general terms in Eq. A.23 as the “expectation of powers of the deviation from the expectation”:

Analogously to the approach for the first two moments demonstrated above, we can continue to calculate the further moments of δV . The first (central) moments are compiled here:

In this way, a great deal of additional information about the distribution of δV can be generated. For instance skewness and kurtosis of the distribution of δV areFootnote 10

A percentile, however, is needed for the computation of the value at risk as given in Eq. 21.4.

3.4.1 Johnson Transformation

Computation of a percentile necessitates knowledge of the distribution of the random variable directly and not of its moments. In order to be able to proceed, we could assume a particular functional form of the distribution and then establish a relation between the parameters of this functional form and the moments of the random variable via moment matching. Since the moments, as shown above, can be explicitly computed, the parameters of the assumed distribution can thus be determined. For example, if we assume that a random variable is normally or lognormally distributed, we would take Eqs. 22.48 and 22.54 as parameter values. Additional functional forms for approximating the distribution of δV were suggested by Johnson [114]. These Johnson transformations have four parameters which can be determined from the first four moments in Eq. 22.55. They represent a substantially better approximation than, for example a lognormal distribution.

3.4.2 Cornish-Fisher Expansion

One possibility of approximating the percentiles of a distribution from its centralmoments and the percentiles \(Q^{{ }_{\text{N}(0,1)}}\) of the standard normal distribution is the Cornish-Fisher expansion. Since this expansion makes use of the standard normal distribution, we must first transform δV into a centered and normalized random variable \(\widetilde {\delta V}\) with expectation 0 and variance 1. This is accomplished by defining

The percentile of the distribution of \(\widetilde {\delta V}\) can now be approximated with the Cornish-Fisher expansion [41, 196] as follows

where the expansion is taken up to the order, which uses only the first four moments from Eq. 22.55. The probability that \(\widetilde {\delta V}\) is less than a number a is, naturally, the same as the probability that δV is less than \(\mu +\sqrt {\mu _{2}}\,a\). Thus

holds for the percentiles. From Eq. 21.4, the value at risk is thus

where we now can use the approximation 22.56 for \(Q_{1-c} ^{{ }_{\widetilde {\delta V}}}\) since the percentiles of the standard normal distribution for the confidence level (1 − c) are well known (see for instance Eq. 21.13).

3.5 Fourier-Transformation of the Portfolio Value Distribution

While a great deal of information about the distribution function can be gleaned from the computation of the moments with the moment generating function, we are still not able to calculate the distribution itself directly. Characteristic functions (CFs), however, generate the distribution directly (this can at least be accomplished numerically). As defined in Sect. A.3.2, the characteristic function Φx of a random variable x with density function \(\operatorname {pdf}(x)\) isFootnote 11

This is precisely the definition of the Fourier transformation of the density function. As demonstrated in Sect. A.3.2, the CFs of many random variables with density functions can be computed explicitly. In particular, the CF of u 0, i.e., the CF of a normally distributed random variable \({\text N}(0,\sum _{i\notin J}L_{i}^{2})\) is given by

And according to Eq. A.97, the CF of the u j , i.e., of a non-central χ 2-distributed random variable with one degree of freedom χ 2(1, (L j∕λ j)2) is given by

Similarly to the moment generating function, the usefulness of the CF in computing the distribution of δV in Eq. 22.39 stems from the property A.34. The CF of a sum of independent random variables x, y is simply the product of the CFs of each of these variables:

Furthermore, according to Eq. A.35,

holds for all non-stochastic values a, b and random variables x. Thus the CF of δV can be expressed as the product of the CFs of the random variables appearing in the definition of δV :

The CFs of the individual random variables are given explicitly in Eqs. 22.58 and 22.59. We now have all information at our disposal to calculate an explicit expression for the characteristic function of the distribution of δV . The result is of course the same as Eq. 22.46 for the MGF with the obvious substitution s → is:

Since λ i = 0 for i∉J,we can—as we did with the MGF—write the first \(\exp \)-function as

Thus, the characteristic function can be written as a product over all indexes j:

In contrast to the moment generating function, there exists an inverse transformation for the characteristic function, namely the inverse Fourier Transformation (see Sect. A.3.2). From Eq. 22.64 the density function \(\operatorname {pdf}(\delta V)\) can thus be computed (at least numerically).

The cumulative probability function of δV can now be obtained through the (numerical) integration of this probability density

A method which can likewise be applied in practice does not use the Fourier transformation of the density, but the Fourier transformation of the cumulative distribution directly:

This Fourier transformation has the analogous properties to those indicated in Eqs. 22.61 and 22.60. Hence, the Fourier transformation of the cumulative distribution function of the portfolio’s change is (up to a constant)

analogous to Eq. 22.62. Here, the individual Fourier transformations of the cumulative distribution function of a normally distributed random variable (for u 0) and the cumulativedistribution of the non-central χ 2 random variables with one degree of freedom (for the u j) appear. These can only be computed numerically. Then, the results as shown in Eq. 22.66 are multiplied in Fourier space. Finally, the function F δV(s) thus obtained must be transformed back with the inverse Fourier transformation to obtain the cumulative probability function of δV as

The Wiener-Chintchine theorem states that the approach just described is equivalent to taking the convolution of the cumulative distribution functions. The twice computed Fourier transformation is numerically preferable to computing the convolution. The recommended method for numerically performing Fourier transformations (or inverse Fourier transformations like Eq. 22.67) is the fast Fourier transformation (FFT). This method requires significantly fewer computations as compared to other common procedures for numerical integration.Footnote 12

3.6 Monte Carlo Simulation of the Portfolio Value Distribution

Calculating the cumulative distribution of δV with characteristic functions involves complicated numerical procedures. Using moment-generating functions to calculate the moments we need additional assumptions and approximations to establish a relation between those moments and the distribution or the percentiles.Footnote 13 All methods introduced here therefore offer sufficient scope for error to creep into the calculations. Additionally, significant difficulties are often involved in calculating the gamma and covariance matrices. Hence, it is by all means legitimate to apply a simple Monte Carlo simulation, instead of the often complicated methods described above, to generate the distribution of δV. The statistical error in doing so is often no larger than the errors of the above methods assuming of course that a sufficient number of simulation runs have been carried out.

For a Monte Carlo Simulation we proceed directly from Eq. 22.33. n normally distributed random numbers are generated with which the simulated change in the portfolio’s value can be immediately computed with the expression

This procedure is repeated N times (as a rule, several thousand times) thus obtaining N simulated changes in the portfolio’s value from which the distribution of δV can be approximated. The percentiles of this distribution can be approximated by simply sorting the simulated values of δV in increasing order as described in Sect. 23.1 (a detailed discussion of value at risk computations using the Monte Carlo Method can be found in this section).

Here, in contrast to the method described in Sect. 23.1, we do not simulate each single risk factor separately. Instead, the portfolio change is directly calculated based on Eq. 22.33. Therefore, no time consuming revaluation of the portfolio for the each generated scenarios is required. However, before the simulation can be performed, the eigenvalues of the transformed gamma matrix must be calculated by solving Eq. 22.22, and the transformed sensitivities L i need to be determined as well. Because of Eqs. 22.35 and 22.34, both the Cholesky decomposition of the covariance matrix as well as the eigenvectors of the gamma matrix must be computed. The eigenvectors are determined by solving Eq. 22.21.

Notes

- 1.

For all relevant confidence levels, this percentile is a negative number, see Eq. 21.13.

- 2.

This corresponds to the percentile Q 1−c of the standard normal distribution.

- 3.

This corresponds to the percentile − Q 1−c = Q c of the standard normal distribution.

- 4.

If all portfolio sensitivities are non-negative (which is often the case for instance for a private investor’s portfolio containing only long positions), then this equation can be rewritten in an alternative and quite intuitive form. Let VaRi(c) denote the value at risk of the portfolio with respect to a particular risk factor S i. Using Eq. 22.13, we can approximate this by

$$\displaystyle \begin{aligned} \mathrm{VaR}_{i}(c)\approx\left\vert \widetilde{\Delta}_{i}Q_{1-c}\sigma _{i}\sqrt{\delta t}\right\vert\;. \end{aligned}$$Thus, for the special case that none of the portfolio deltas is negative, the VaR with respect to all risk factors can be obtained by computing the square root of the weighted sum of the products of all the VaRs with respect to the individual risk factors. The weights under consideration are the respective correlations between the risk factors:

$$\displaystyle \begin{aligned} \mathrm{VaR}_{V}^{{}}(c)\approx\sqrt{\sum_{i,j=1}^{n}\mathrm{VaR}_{i} (c)\rho_{ij}\mathrm{VaR}_{j}(c)} \ \ \text{falls} \widetilde{\Delta}_{i} \geq0\forall i\in\left\{ 1,\ldots,n\right\}\;.{} \end{aligned} $$(22.16) - 5.

This goes back to a paper by Rouvinez, see [166].

- 6.

The most important results required for the analysis here receive a clear and concise treatment in [78], for example.

- 7.

\(\left ({\mathbf {O}}^{T}\mathbf {O}\right ) _{ij}=\sum _{k}(O^{T})_{ik}O_{kj}=\sum _{k}e_{k}^{i}e_{k}^{j}=\delta _{ij}\;.\)

- 8.

The notation i∉J denotes all indices i with eigenvalue λ i = 0, i.e., the set \(\left \{ 1,\ldots ,n\left \vert \lambda _{i}=0\right . \right \} \).

- 9.

Here the well-known cyclic property of the trace has been used: \(\operatorname {tr}\left ( \mathbf {ABC\,}\right ) =\operatorname {tr} \left ( \mathbf {BCA\,}\right ) \) for arbitrary matrices A,B,C.

- 10.

Recall that a normal distribution has skewness 0 and kurtosis 3, see Eq. A.59.

- 11.

Here, i denotes the imaginary number satisfying the property i 2 = −1, thus intuitively \(i=\sqrt {-1}.\)

- 12.

- 13.

It should not be forgotten that the Delta-Gamma method itself is only an approximation of the portfolio’s value obtained from the second-order Taylor series approximation.

References

M. Abramowitz, I. Stegun, Handbook of Mathematical Functions (Dover Publications, New York, 1972)

C. Alexander (ed.), The Handbook of Risk Management and Analysis (Wiley, Chichester, 1996)

L.B.G. Andersen, R. Brotherton-Ratcliffe, The equity option volatility smile: an implicit finite-difference approach. J. Comput. Finance 1(2), 5–37 (1998)

L.B.G. Andersen, V.V. Piterbarg, Interest Rate Modeling (Atlantic Financial Press, New York, London, 2010)

N. Anderson, F. Breedon, M. Deacon, et al., Estimating and Interpreting the Yield Curve (Wiley, Chichester, 1996)

D.F. Babbel, C.B. Merrill, Valuation of Interest-Sensitive Instruments (Society of Actuaries, Schaumburg, IL, 1996)

L. Bachelier, Théorie de la spéculation. Annales scientifiques de l’École Normale Supérieure 3(17), 21–86 (1900)

Bank for International Settlements, International convergence of capital measurement and capital standards, part 2. http://www.bis.org/publ/bcbs128b.pdf, June 2006

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Deutsch, HP., Beinker, M.W. (2019). The Variance-Covariance Method. In: Derivatives and Internal Models. Finance and Capital Markets Series. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-22899-6_22

Download citation

DOI: https://doi.org/10.1007/978-3-030-22899-6_22

Published:

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-22898-9

Online ISBN: 978-3-030-22899-6

eBook Packages: Economics and FinanceEconomics and Finance (R0)