Abstract

With the development of brain-computer interface (BCI) technology, fast and accurate analysis of electroencephalography (EEG) signals has become possible and has attracted much attention. One emerging application is eye state recognition based on EEG signals. A few schemes, such as the instance-based K* algorithm, have been proposed that achieve high accuracy. Unfortunately, they are generally complex and hence too slow for use in a real-time BCI framework. In this paper, we develop a novel, effective and efficient EEG based eye state recognition system. The proposed system consists of four parts: EEG signal preprocessing, feature extraction, feature selection and classification. First, we use the ‘sym8’ wavelet to decompose the original EEG signal to the 5th level and de-noise it with the heuristic SURE threshold method. Then, we propose a novel feature extraction method that applies an information accumulation algorithm to wavelet-transform features. Using the CfsSubsetEval evaluator with the BestFirst search method for feature selection, we identify the optimal features, i.e., the optimal scalp electrode positions with high correlation to eye states. Finally, we adopt Random Forest as the classifier. Experimental results show that the overall EEG eye state recognition system reaches an accuracy of 99.8% and that the minimum number of training samples can be kept small.

Keywords

- Electroencephalogram (EEG)

- Eye state identification

- Feature extraction

- Wavelet transform

- Information accumulation algorithm

- Random forest

1 Introduction

A brain-computer interface (BCI) [1] is a direct communication system between the human brain and the external world that supports communication with, and control of, external devices without the use of peripheral nerves and muscles. With a BCI, people can express intentions or act on them using brain activity alone. For instance, a BCI can enable disabled patients to communicate with the outside world and control external devices. As a new kind of human-computer interaction, BCI has attracted intensive attention in rehabilitation engineering and biomedical engineering in recent years. EEG based eye state recognition is an important research topic within BCI and has been investigated for many applications, particularly human cognitive state classification. For example, EEG based eye state classification has been applied to fatigue driving detection [2], epileptic seizure detection [3], human eye state detection, infant sleep state recognition [4], classification of bipolar affective disorder [5], and eye blink detection. These applications demonstrate the importance of studying EEG based eye state recognition.

Previous studies on EEG based eye state recognition fall into two categories: those that improve accuracy and those that shorten computing time. In the first category, one of the most representative works is by Rösler and Suendermann [6], who developed a system to detect a person’s eye state from EEG recordings. The authors tested 42 classification algorithms and found that the K* algorithm achieved the highest accuracy, 97.3%. K* is an instance-based learner: it classifies a sample mainly according to the training samples surrounding it. Consequently, when the training set is large, its computing time increases significantly. To address this problem, studies in the second category employ more efficient classifiers to reduce the computing time. For instance, Hamilton, Shahryari, and Rasheed [7] use Boosted Rotational Forests (BRF) to predict eye state with an accuracy of 95.1% at 454.1 instances per second, and Reddy and Behera [8] design an online eye state recognition system with an accuracy of 94.72% at 192 instances per second.

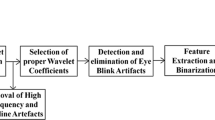

In this paper, we aim to develop an effective and efficient EEG based eye state recognition system. Unlike the above methods, which mostly focus on optimizing the classification algorithm, we design the overall system: EEG signal preprocessing, feature extraction, feature selection, and classification. Specifically, we first decompose the signal and suppress its noise. We then extract features. We argue that feature extraction for dynamic signals such as EEG should take into account the information in adjacent time-domain samples rather than only the information at a single time instant. We therefore propose a novel feature extraction scheme that applies an information accumulation algorithm to wavelet-transform features. After that, we use the CfsSubsetEval evaluator with BestFirst search to select features, and the Random Forest algorithm to perform classification. The overall EEG eye state recognition system achieves a classification accuracy of 99.8% at a speed of 639.5 instances per second. The rest of this paper is organized as follows. Section 2 describes the proposed real-time EEG eye state recognition system in detail. Section 3 presents the experimental results. Section 4 concludes the paper.

2 System Design

2.1 EEG Signal Preprocessing

Unlike ordinary electrical signals, EEG signals are dynamic, random, non-linear bioelectrical signals with high instability. Traditional linear and nonlinear de-noising methods, such as Wiener filtering [9] and median filtering [10], are ill-suited to EEG preprocessing because they cannot clearly describe the entropy and non-stationary characteristics of the signal, nor easily capture its correlation structure. The wavelet transform [11] has a strong de-correlating capability: it concentrates the signal energy in a few large wavelet coefficients, while the noise energy is spread across the entire wavelet domain. Moreover, the noise in EEG signals is usually close to white noise. This paper therefore uses wavelet threshold de-noising [12], which can suppress most of the white noise while preserving the characteristics of the original signal well.

Specifically, wavelet threshold de-noising [13] applies a threshold function to the wavelet coefficients: coefficients whose magnitude is below the threshold are treated as noise and set to zero, the remaining coefficients are kept (hard thresholding) or shrunk toward zero (soft thresholding), and the signal is reconstructed from the modified coefficients. The choice of the threshold function and the determination of the threshold value are the two key problems in designing a wavelet threshold de-noising algorithm, and both directly influence the de-noising result. Threshold functions generally fall into two categories: hard threshold and soft threshold. The four most frequently used threshold selection rules are the fixed threshold [14], the Stein’s unbiased risk estimate (SURE) threshold, the heuristic SURE threshold [15] and the minimax threshold. Because the SURE and minimax thresholds often result in incomplete de-noising, we adopt the heuristic SURE threshold in this paper.
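
The threshold rules above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' MATLAB code: the rule definitions follow those behind MATLAB's `thselect` options ('sqtwolog' for the fixed threshold, 'rigrsure' for SURE, 'heursure' for heuristic SURE), soft thresholding is assumed, and the coefficients are assumed to be rescaled to unit noise variance. The per-band noise estimate via the median absolute deviation in `denoise_detail` is an assumption, since the text does not say how the noise level is estimated. All function names are hypothetical.

```python
import numpy as np


def soft_threshold(c, thr):
    """Soft thresholding: zero coefficients with |c| <= thr, shrink the rest by thr."""
    return np.sign(c) * np.maximum(np.abs(c) - thr, 0.0)


def fixed_threshold(n):
    """Fixed (universal) threshold sqrt(2 ln n), for unit-variance noise."""
    return float(np.sqrt(2.0 * np.log(n)))


def sure_threshold(x):
    """Threshold minimising Stein's unbiased risk estimate for soft thresholding."""
    x = np.asarray(x, dtype=float)
    n = x.size
    sx2 = np.sort(np.abs(x)) ** 2
    i = np.arange(1, n + 1)
    # Estimated risk (per coefficient) when the threshold is the i-th smallest |x|.
    risks = (n - 2 * i + np.cumsum(sx2) + (n - i) * sx2) / n
    return float(np.sqrt(sx2[np.argmin(risks)]))


def heuristic_sure_threshold(x):
    """Heuristic SURE: use the fixed threshold when the data look too sparse for
    SURE to be reliable, otherwise the smaller of the SURE and fixed thresholds."""
    x = np.asarray(x, dtype=float)
    n = x.size
    eta = (np.sum(x ** 2) - n) / n
    crit = np.log2(n) ** 1.5 / np.sqrt(n)
    if eta < crit:
        return fixed_threshold(n)
    return min(sure_threshold(x), fixed_threshold(n))


def denoise_detail(d):
    """Soft-threshold one detail band. The noise level is estimated with the median
    absolute deviation (MAD / 0.6745) so the rule sees unit-variance noise."""
    d = np.asarray(d, dtype=float)
    sigma = np.median(np.abs(d)) / 0.6745
    if sigma == 0:
        return d.copy()
    return soft_threshold(d, heuristic_sure_threshold(d / sigma) * sigma)
```

For pure noise the energy test `eta < crit` usually holds, so the rule falls back to the conservative fixed threshold; only when the band clearly carries signal energy does the (smaller) SURE threshold take over.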

2.2 Feature Extraction

For feature extraction, the fast Fourier transform (FFT), autoregressive (AR) models, the wavelet transform (WT) and the short-time Fourier transform (STFT) are widely used for EEG signals. However, AR models and the FFT cannot capture transient features. Both the STFT and the WT are time-frequency analysis methods that localize a signal in frequency and time simultaneously; the STFT does so with a single fixed window, whereas the WT adapts the window length to the frequency. Studies have shown that combining time-domain and frequency-domain information can improve the classification performance of EEG recognition systems, and that for non-stationary transient signals such as EEG, the WT is more effective than the STFT. We therefore develop a wavelet packet decomposition (WPD) based approach to extract EEG features. The WPD coefficients and the wavelet packet energies of selected sub-bands are taken as the original features.
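
To make the decomposition concrete, the sketch below implements a multi-level discrete wavelet transform with the orthonormal Haar wavelet, a deliberately simple stand-in for db4 (a full wavelet packet decomposition would additionally split the detail bands). Because the transform is orthonormal, the total energy of the coefficients equals that of the signal (Parseval), which is what allows sub-band energies to serve as features. The function names are hypothetical.

```python
import numpy as np


def haar_step(x):
    """One level of the orthonormal Haar DWT: returns (approximation, detail)."""
    x = np.asarray(x, dtype=float)
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def haar_wavedec(x, level):
    """Multi-level Haar DWT returning [A_level, D_level, ..., D_1].

    len(x) must be divisible by 2**level so every level splits evenly.
    """
    if len(x) % (2 ** level):
        raise ValueError("signal length must be divisible by 2**level")
    a = np.asarray(x, dtype=float)
    details = []
    for _ in range(level):
        a, d = haar_step(a)  # keep splitting the low-pass (approximation) part
        details.append(d)    # details are collected as D1, D2, ...
    return [a] + details[::-1]


rng = np.random.default_rng(0)
x = rng.standard_normal(128)
coeffs = haar_wavedec(x, level=5)  # [A5, D5, D4, D3, D2, D1]
print([len(c) for c in coeffs])    # prints [4, 4, 8, 16, 32, 64]
```

With PyWavelets installed, `pywt.wavedec(x, 'db4', level=5)` returns coefficients in the same [A5, D5, ..., D1] order; because of boundary extension its db4 coefficient arrays are somewhat longer and Parseval holds only approximately.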

Note that in the proposed scheme, choosing a suitable wavelet and the number of decomposition levels is critical. In practice, several types of wavelets are usually tested to find the most effective one for a particular application. The smoothness of the db4 wavelet makes it well suited to detecting changes in EEG signals, so we use db4 to compute the wavelet coefficients for feature extraction. The number of decomposition levels is usually chosen according to the main frequency components of the signal. Following previous studies, we set the number of decomposition levels to 5, because the EEG information useful for this task lies below about 30 Hz. The EEG signals are thus decomposed into details D1-D5 and a final approximation A5, of which D3-D5 and A5 are used. Table 1 shows the correspondence between the wavelet components and the frequencies of the EEG signals.
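
The frequency range of each component follows from halving the remaining band at every level. The sketch below computes the nominal band edges of an ideal dyadic DWT; real wavelet filters overlap, so these edges are approximate. The 128 Hz sampling rate in the example is an assumption (the usual output rate of the Emotiv EPOC headset), not a value stated in this section, and the function name is hypothetical.

```python
def dwt_band_edges(fs, level):
    """Nominal (ideal, non-overlapping) bands in Hz of a dyadic DWT at rate fs."""
    bands = {}
    for j in range(1, level + 1):
        # Detail D_j keeps the upper half of the band left after j - 1 splits.
        bands[f"D{j}"] = (fs / 2 ** (j + 1), fs / 2 ** j)
    # The final approximation keeps everything below the last detail band.
    bands[f"A{level}"] = (0.0, fs / 2 ** (level + 1))
    return bands


for name, (lo, hi) in dwt_band_edges(128.0, 5).items():
    print(f"{name}: {lo:g}-{hi:g} Hz")
# D1: 32-64 Hz
# D2: 16-32 Hz
# D3: 8-16 Hz
# D4: 4-8 Hz
# D5: 2-4 Hz
# A5: 0-2 Hz
```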

Furthermore, after analyzing the EEG signal around more than 50 eye state changes, we found that changes in the EEG usually occur before the eye movement, as shown in Fig. 1. A possible reason is that the brain forms the intention before a physiological action is carried out. This process is related to event-related potentials (ERPs) [16], a special kind of evoked potential. Evoked potentials (EPs) [17], also known as evoked responses, are bioelectrical responses of the nervous system (from the receptors to the cerebral cortex) to a specific stimulus or to the processing of information about that stimulus (positive or negative). EPs can be detected in the corresponding part of the nervous system and brain with a relatively fixed latency (time-locked to the stimulus) and a specific phase. Experimental psychologists and neuroscientists have discovered many different stimuli that elicit reliable ERPs from participants, and the timing of these responses provides a measure of the timing of the brain’s communication or information processing. We therefore attempt to improve our algorithm by exploring the ERP that accompanies human eye movement and by determining experimentally the brain’s response time to the intention to move the eyes.
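
A common way to look for such anticipatory activity, as in ERP analysis, is to cut fixed windows around each event and average them. The sketch below locates eye-state transitions in a 0/1 label sequence and averages one channel over windows time-locked to those transitions. The window lengths and function names are hypothetical, and this is not necessarily the analysis behind Fig. 1.

```python
import numpy as np


def transition_indices(labels):
    """Indices t at which labels[t] differs from labels[t - 1] (eye-state changes)."""
    labels = np.asarray(labels)
    return np.flatnonzero(labels[1:] != labels[:-1]) + 1


def event_locked_average(signal, events, pre, post):
    """Average of signal[t - pre : t + post] over every event t whose window fits."""
    signal = np.asarray(signal, dtype=float)
    epochs = [signal[t - pre:t + post] for t in events
              if t - pre >= 0 and t + post <= signal.size]
    if not epochs:
        raise ValueError("no complete window around the given events")
    return np.mean(epochs, axis=0)
```

If the EEG changes before the eyes do, the averaged curve should start to deviate at negative offsets, i.e., in the first `pre` samples of the window.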

Specifically, by Parseval’s theorem, the energy of each component after the wavelet transform can be computed from its coefficients. Let \( f_{x} \) denote the energy of component x. The feature vector f is then \( f = [f_{D3} , f_{D4} , f_{D5} , f_{A5} ]^{T} \). As noted above, the voltage amplitude of the EEG signal starts to rise or fall before the eye state changes. The vector f, however, only captures the energy at a single time and neglects the useful information in adjacent or preceding parts of the signal. We therefore use the information accumulation algorithm to extend f to \( f^{{\prime }} \), which better represents the characteristics of the EEG signal, as in (1) and (2).

Here, T represents a time window whose optimal value can be determined by experiments, and n represents the number of data samples.
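
Since Eqs. (1) and (2) are not reproduced here, the sketch below is only one plausible reading of the information accumulation step, stated as an assumption: each per-sample feature vector is replaced by the sum of the feature vectors inside a window of T samples around it, here 49 samples before and 50 after (the setting reported as best in Section 3.2.2). It also shows the Parseval-based band energy used for f. Function names are hypothetical.

```python
import numpy as np


def band_energies(coeffs):
    """Energy of each sub-band: the sum of its squared wavelet coefficients."""
    return np.array([np.sum(np.square(np.asarray(c, dtype=float))) for c in coeffs])


def accumulate_features(F, pre=49, post=50):
    """HYPOTHETICAL accumulation, not the paper's Eqs. (1)-(2): row t of the result
    is the sum of F[t - pre], ..., F[t + post], with the window clipped at the edges.
    F holds one feature vector per sample, shape (n_samples, n_features)."""
    F = np.asarray(F, dtype=float)
    n = F.shape[0]
    # Prefix sums make every window sum O(1): sum(F[a:b]) = csum[b] - csum[a].
    csum = np.vstack([np.zeros((1, F.shape[1])), np.cumsum(F, axis=0)])
    t = np.arange(n)
    lo = np.clip(t - pre, 0, n)
    hi = np.clip(t + post + 1, 0, n)
    return csum[hi] - csum[lo]
```

A window-mean variant (dividing by the clipped window length) would avoid the smaller sums near the start and end of a recording.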

2.3 Feature Selection

In machine learning, when there are too many features, some may be irrelevant and others interdependent (redundant). It is therefore necessary to select features before classification. This paper uses the CfsSubsetEval evaluator with the BestFirst search method [18] provided in the Weka toolkit [19] to select features.
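
The idea behind CfsSubsetEval is Hall's correlation-based merit, which rewards subsets whose features correlate with the class but not with each other: for k features with mean feature-class correlation r_cf and mean feature-feature correlation r_ff, Merit = k·r_cf / sqrt(k + k(k-1)·r_ff). The sketch below pairs that merit with a forward best-first search that stops after five consecutive non-improving expansions, loosely mirroring Weka's BestFirst search-termination setting. It is a simplified stand-in, not Weka's implementation: it uses absolute Pearson correlation, whereas Weka's evaluator measures correlation differently (e.g., symmetrical uncertainty on discretized attributes for a nominal class). Function names are hypothetical.

```python
import heapq

import numpy as np


def abs_corr(a, b):
    """Absolute Pearson correlation between two 1-D arrays."""
    return abs(float(np.corrcoef(a, b)[0, 1]))


def cfs_merit(X, y, subset):
    """Hall's CFS merit k*r_cf / sqrt(k + k*(k-1)*r_ff) of a feature subset."""
    k = len(subset)
    if k == 0:
        return 0.0
    r_cf = np.mean([abs_corr(X[:, j], y) for j in subset])
    pairs = [(a, b) for i, a in enumerate(subset) for b in subset[i + 1:]]
    r_ff = np.mean([abs_corr(X[:, a], X[:, b]) for a, b in pairs]) if pairs else 0.0
    return float(k * r_cf / np.sqrt(k + k * (k - 1) * r_ff))


def best_first_forward(X, y, max_stale=5):
    """Forward best-first search over feature subsets scored by cfs_merit.

    Repeatedly expands the highest-merit open subset by adding one feature and
    stops after max_stale consecutive expansions that do not improve the best.
    """
    n_features = X.shape[1]
    open_heap = [(0.0, ())]  # (negated merit, subset); heapq is a min-heap
    visited = {()}
    best_subset, best_merit, stale = (), 0.0, 0
    while open_heap and stale < max_stale:
        _, subset = heapq.heappop(open_heap)
        improved = False
        for j in range(n_features):
            if j in subset:
                continue
            child = tuple(sorted(subset + (j,)))
            if child in visited:
                continue
            visited.add(child)
            merit = cfs_merit(X, y, list(child))
            heapq.heappush(open_heap, (-merit, child))
            if merit > best_merit + 1e-12:
                best_subset, best_merit, improved = child, merit, True
        stale = 0 if improved else stale + 1
    return list(best_subset), best_merit
```

The denominator grows with r_ff, so adding a feature that merely duplicates one already selected gains little, while adding an uninformative one lowers the average r_cf and hence the merit.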

2.4 Classification

The Random Forest classifier (RFC) [20] is an ensemble of many decision tree classifiers. A single decision tree classifies an input x on its own; RFC instead combines the votes of many trees as follows. First, it uses bootstrap sampling to draw k sample sets from the original training set. Second, a decision tree is built from each of the k sample sets, giving k classification results. Finally, the overall classification result is obtained by the plurality rule, i.e.,

\( H(x) = \mathop{\arg \max }\limits_{Y} \sum\nolimits_{i = 1}^{k} {I\left( {h_{i} (x) = Y} \right)} \)    (3)

In (3), H(x) is the final classification result, \( h_{i} (x) \) is the classification result of the i-th decision tree, Y ranges over the target classes, and \( I( \bullet ) \) is the indicator function.
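
The two ensemble-specific steps, bootstrap sampling and the plurality vote in (3), can be sketched independently of how the individual trees are trained. In this minimal NumPy illustration the trees' predictions are simply given as an array; the function names are hypothetical.

```python
import numpy as np


def bootstrap_indices(n_samples, k, rng):
    """k bootstrap index sets, each drawn with replacement from range(n_samples)."""
    return [rng.integers(0, n_samples, size=n_samples) for _ in range(k)]


def plurality_vote(tree_predictions):
    """H(x) = argmax_Y sum_i I(h_i(x) = Y) for every sample x.

    tree_predictions: shape (k_trees, m_samples), class labels per tree.
    Ties are broken in favour of the smallest label (np.argmax takes the first max).
    """
    P = np.asarray(tree_predictions)
    classes = np.unique(P)
    # votes[c, s] = number of trees that assign class classes[c] to sample s
    votes = np.stack([(P == c).sum(axis=0) for c in classes])
    return classes[np.argmax(votes, axis=0)]


rng = np.random.default_rng(0)
sets = bootstrap_indices(n_samples=10, k=3, rng=rng)  # one index set per tree
print(plurality_vote([[0, 1, 1], [1, 1, 0], [1, 0, 0]]))  # prints [1 1 0]
```

Note that scikit-learn's `RandomForestClassifier` combines trees by averaging their predicted class probabilities rather than counting hard votes, so its output can occasionally differ from a strict plurality vote.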

3 Experiment Results

3.1 Dataset

This paper uses the EEG Eye State Data Set [21] from the UCI Machine Learning Repository. All EEG signals were recorded with the Emotiv EEG Neuroheadset [22]. Each sample consists of 14 values from 14 electrode positions and a label indicating the eye state (‘1’ indicates the eye-closed state and ‘0’ the eye-open state). The duration of the EEG recording was 117 s.

3.2 Results

3.2.1 EEG Signal Preprocessing Results

Using the SIGNAL toolbox of MATLAB 2010, the signal is decomposed with the ‘sym8’ wavelet, and at the 5th decomposition level the heuristic SURE threshold is used to de-noise it. Fig. 2 compares the signal in channel AF3 before and after de-noising. As Fig. 2 shows, the fluctuation of the waveform is reduced after de-noising and a large proportion of the noise has been removed. Wavelet threshold de-noising is therefore an effective preprocessing method for these EEG signals.

3.2.2 Classification Results

In the experiments, 66% of the instances were used for training and the remaining 34% for testing (Rösler and Suendermann [6] used 10-fold cross-validation). For the feature extraction step, we compare the eight methods listed in Table 2; the corresponding feature selection and classification results are given in Table 3. The classification speed \( v \) is defined as:

\( v = \frac{n}{t} \)    (4)

In (4), n is the number of classified instances and t is the CPU execution time. The measured time does not include data acquisition and transmission in the hardware.
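
A minimal sketch of this evaluation protocol, under stated assumptions: the 66%/34% split is taken to be a random (shuffled) percentage split, and the CPU time t in (4) is measured with Python's `time.process_time()` around the prediction call only, so data loading and transfer are excluded. The `predict` callable and both function names are hypothetical placeholders for whatever classifier is being timed.

```python
import time

import numpy as np


def percentage_split(n_samples, train_fraction=0.66, seed=0):
    """Shuffle the instance indices, then split them into training and test parts."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return idx[:n_train], idx[n_train:]


def classification_speed(predict, X):
    """v = n / t: instances classified per second of CPU time spent in predict(X)."""
    start = time.process_time()
    predict(X)
    t = time.process_time() - start
    return X.shape[0] / t if t > 0 else float("inf")
```

For very fast classifiers the measured CPU time can be close to the timer's resolution, so timing a larger batch (or repeating the call and dividing) gives a more stable estimate of v.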

Taking all aspects into consideration, Table 3 shows that the best approach is No. 5, with an accuracy of 99.8% and a speed of 639.5 instances/s. The best choice of the parameter T is a window covering the 49 points before the current point and the 50 points after it; the information in this period reflects the eye state most effectively. The margin curve of this method is shown in Fig. 6. When the number of training samples is less than 210, the classification accuracy is low and the computational cost is high. Above 210 samples, the accuracy increases rapidly and the computational cost drops greatly, and above 1563 samples the classification result stabilizes and the computational cost is low. The minimum number of training samples is therefore 1563. With Random Forest as the classifier, the proposed method improves the accuracy by 7.9% over using no feature extraction and by 1.9% over using the wavelet transform alone. Compared with the K* algorithm, the accuracy increases by 3.7% and the speed increases tenfold. Moreover, feature selection indicates that AF3(A5), F7(A5), T7(A5), O1(A5) and FC6(D5), covering 5 scalp electrode positions, are highly correlated with eye state. Eye state recognition from EEG therefore needs only the information from these 5 channels and the frequency components of delta and alpha waves.

4 Conclusions

This paper develops a novel, efficient EEG eye state recognition system that achieves higher accuracy and significantly faster classification than the K* algorithm. Its optimal configuration reaches an accuracy of 99.8% at a classification speed of at least 639.5 instances per second, making it suitable for real-time BCI systems. We hope that this study will help more scientists and engineers understand brain activity and develop BCI systems that improve human lives.

References

[1] Kim, Y.-J., Kwak, N.-S., Lee, S.-W.: Classification of motor imagery for Ear-EEG based brain-computer interface. In: 2018 6th International Conference on Brain-Computer Interface (BCI). IEEE (2018)

[2] Hailin, W., Hanhui, L., Zhumei, S.: Driving detection system design based on driving behavior. In: 2010 International Conference on Optoelectronics and Image Processing. IEEE (2010)

[3] Sinha, A.K., Loparo, K.A., Richoux, W.J.: A new system theoretic classifier for detection and prediction of epileptic seizures. In: The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE (2005)

[4] Fraiwan, L., Lweesy, K.: Neonatal sleep state identification using deep learning autoencoders. In: 2017 IEEE 13th International Colloquium on Signal Processing and its Applications (CSPA). IEEE (2017)

[5] Leow, A., et al.: Measuring inter-hemispheric integration in bipolar affective disorder using brain network analyses and HARDI. In: 2012 9th IEEE International Symposium on Biomedical Imaging (ISBI). IEEE (2012)

[6] Rösler, O., Suendermann, D.: A first step towards eye state prediction using EEG. In: AIHLS 2013: The International Conference on Applied Informatics for Health and Life Sciences. IEEE (2013)

[7] Hamilton, C.R., Shahryari, S., Rasheed, K.M.: Eye state prediction from EEG data using boosted rotational forests. In: IEEE International Conference on Machine Learning. IEEE (2016)

[8] Reddy, T.K., Behera, L.: Online eye state recognition from EEG data using deep architectures. In: 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC 2016). IEEE (2016)

[9] Girault, B., Gonçalves, P., Fleury, E., Mor, A.S.: Semisupervised learning for graph to signal mapping: a graph signal Wiener filter interpretation. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1115–1119. IEEE (2014)

[10] Huang, T.S. (ed.): Two-Dimensional Digital Signal Processing II: Transforms and Median Filters. Springer-Verlag, Berlin (1981)

[11] Grossmann, A.: Wavelet transforms and edge detection. In: Blanchard, Ph., Streit, L., Hazewinkel, M. (eds.) Stochastic Processes in Physics and Engineering (to be published)

[12] Jin, Z., Jia-lunl, L., Xiao-ling, L., Wei-quan, W.: ECG signals denoising method based on improved wavelet threshold algorithm. In: Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC). IEEE (2016)

[13] Saxena, S., Khanduga, H.S., Mantri, S.: An efficient denoising method based on SURE-LET and wavelet transform. In: International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT). IEEE (2016)

[14] Movahednasab, M., Soleimanifar, M., Gohari, A.: Adaptive transmission rate with a fixed threshold decoder for diffusion-based molecular communication. IEEE Trans. Commun. 64, 236–248 (2016)

[15] Wang, Y., He, S., Jiang, Z.: Weak GNSS signal acquisition based on wavelet de-noising through lifting scheme and heuristic threshold optimization. In: 2014 International Symposium on Wireless Personal Multimedia Communications (WPMC). IEEE (2015)

[16] Lin, Z., Huang, Z.: Research on event-related potentials in motor imagery BCI. In: 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI). IEEE (2017)

[17] Kidmose, P., Looney, D., Ungstrup, M., Rank, M.L., Mandic, D.P.: A study of evoked potentials from ear-EEG. IEEE Trans. Biomed. Eng. 60, 2824–2830 (2013)

[18] Saad, S., Ishtiyaque, M., Malik, H.: Selection of most relevant input parameters using WEKA for artificial neural network based concrete compressive strength prediction model. In: Power India International Conference (PIICON). IEEE (2016)

[19] Kumar, N., Khatri, S.: Implementing WEKA for medical data classification and early disease prediction. In: 3rd International Conference on Computational Intelligence and Communication Technology (CICT). IEEE (2017)

[20] Kooistra, I., Kuilder, E.T., Mücher, C.A.: Object-based random forest classification for mapping floodplain vegetation structure from nation-wide CIR and LiDAR datasets. In: Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS). IEEE (2017)

[21] Frank, A., Asuncion, A.: UCI Machine Learning Repository (2010). http://archive.ics.uci.edu/ml/

[22] Diego, S., Toscano, B.S., Silva, A.: On the use of the Emotiv EPOC neuroheadset as a low cost alternative for EEG signal acquisition. In: 2016 IEEE Colombian Conference on Communications and Computing (COLCOM). IEEE (2016)

Copyright information

© 2019 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

About this paper

Cite this paper

Zhou, Z., Li, P., Liu, J., Dong, W. (2019). A Novel Real-Time EEG Based Eye State Recognition System. In: Liu, X., Cheng, D., Jinfeng, L. (eds) Communications and Networking. ChinaCom 2018. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 262. Springer, Cham. https://doi.org/10.1007/978-3-030-06161-6_17

DOI: https://doi.org/10.1007/978-3-030-06161-6_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-06160-9

Online ISBN: 978-3-030-06161-6

eBook Packages: Computer Science, Computer Science (R0)