Abstract

This chapter presents a user-centered perspective on the design of effective mid-air gesture interaction with mobile technology for seniors. Starting from the basic characteristics of mid-air gesture interaction, we introduce the main design challenges of this interaction and report on a case study in which we implemented a user-centered design process focused on the engagement of older adults in each design stage. We present a series of studies in which we aimed at involving older users as active creators of the interaction, taking into account their feedback and values in the design process. Finally, we propose a set of recommendations for the design of mid-air gesture interaction for older adults. These recommendations are elaborated combining research on HCI, ageing and ergonomic principles, and the results from the user studies we conducted in the process.

1 From the Compensation Model to Engagement

Within the field of Human-Computer Interaction (HCI), ageing has become a primary research area that is expected to grow even more. However, the dominant research approach of HCI for older people seems to be a step behind with respect to the mainstream trend of HCI, which values the emotional aspects of user experience (UX), the empowerment of users and value-sensitive design (Harrison et al. 2011).

Indeed, a major strand of research in this field has conceptualized ageing as a slow but steady process that eventually leads to functional decline and extended needs. This strand has mainly focused on designing assistive technologies that can compensate for older people’s frailties and disabilities. Consistent with the first wave of HCI, inspired by engineering and human factors and devoted to optimizing the fit between humans and computers (Harrison et al. 2011), this approach serves inclusive design: it accommodates the cognitive and physical decline that occurs with ageing by compensating for it and by focusing on usability and accessibility. However, if taken alone, it is biased and incomplete.

Another important research area involving older adults and HCI has aimed at designing technology to support healthy ageing, independent living and the other needs that are assumed to arise with ageing, fostering an active lifestyle and computer-mediated communication with peers and relatives. These aspects are consistent with the second wave of HCI, along with effective methods to involve older people in the design process, such as participatory design and contextual inquiry (Bødker 2006).

In the third wave of HCI, focused on embodied interaction, empowerment of users and value-sensitive design (Harrison et al. 2011), contexts of use of technology broadened and spread to our homes and everyday lives, by valuing emotional aspects of user experience. However, when designing for older adults, it seems that these aspects are not commonly taken into consideration (Rogers and Marsden 2013) and technological solutions are still designed to compensate for some kind of lack or frailty (Ryan et al. 1992). Nevertheless, research has now widely shown that the ageist stereotype does not generally apply to older adults, who are not intrinsically reluctant towards technology and consider themselves as active actors in society.

New approaches can be adopted to reframe the relationship between ageing and technology, moving beyond the compensation model towards the empowerment of people, the inclusion of pleasure, value-based design and the development of new interaction modalities. Among the latter is mid-air gesture interaction, i.e. interaction based on the automatic recognition of the user’s hand and arm movements around the device. Mid-air gesture interaction has received growing attention in HCI, but so far research has mainly targeted younger users, because this kind of interaction is often described as fun and aimed at the technology-savvy, and is thus often used in gaming and entertainment contexts. Although mid-air gestures can potentially make the interaction not only fun but also easy, intuitive and natural, this novel way of interacting with technology has not yet been targeted at older users. Yet mid-air gesture interaction can not only overcome some accessibility issues that occur with age, but also make the interaction more pleasant and engaging for older users.

In this chapter, we (1) present the main characteristics of mid-air gesture interaction and discuss the most prominent design challenges for older adults, (2) describe how we approached the design of this kind of interaction through a user-centered approach, and finally (3) propose a set of recommendations for the design of mid-air gesture interaction with mobile devices for older adults.

2 Mid-Air Gesture Interaction and Design Challenges

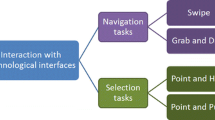

Gestures, classified in touch-based and mid-air gestures, have become one of the main ways to interact with digital tools. In particular, mid-air gestures cover different types of interaction, such as manipulation of digital objects (Cockburn et al. 2011), menu selection (Ni et al. 2011) or text entry (Markussen et al. 2014), and are becoming increasingly popular due to the availability of effective automatic gesture recognition technology.

Mid-air gestures can be categorized into micro and macro gestures. The former involve the movement of a finger or a hand (Wigdor and Wixon 2011), whereas the latter comprise arm or body movement (England 2011). Some activities may be more compatible with either micro gestures (e.g., interaction with mobile devices) or macro gestures (e.g., for health and rehabilitation applications), whereas others are compatible with both (e.g., for entertainment, where micro gestures are suitable for Leap Motion or tablet devices and macro gestures for Kinect devices; Cronin 2014).

Mid-air gestures can also be classified according to their mapping to the intended task (Hurtienne et al. 2010; Ruiz et al. 2011) in: (1) real-world metaphorical gestures, which are representations of everyday-life actions or objects (e.g., the gesture of opening a book for opening the menu of an application), (2) physical/deictic gestures, which refer to spatial information and comprise a direct relation between the gesture and a manipulated object, (3) symbolic gestures, which are highly conventional and require learning and interpretation (e.g., tracing an “M” with the hand to open the Menu), and (4) abstract gestures, characterized by arbitrary mapping (e.g., moving the arm upward for opening the menu). Especially when targeting beginners, occasional users and diverse user groups such as older adults, designing for intuitive use is crucial for an effective interaction. For an effective interaction, the system should allow users to apply prior knowledge (Hurtienne and Blessing 2007). To this end, metaphorical or physical/deictic gestures should be preferred to increase logical functionality and make recall easier. Concerning memorability, Nacenta et al. (2013) also found that user-defined gestures are easier to remember.

Most studies evaluating gestural interfaces for older adults fall within the gaming and physical activity contexts. In these areas, research focusing on the acceptance of mid-air gestures found a generally positive attitude of older adults towards this type of interaction. For example, Gerling et al. (2013) compared two types of interaction (computer mouse and gesture-based) with younger and older adults, and found that older participants used the motion-based controls efficiently and overall enjoyed the interaction, not perceiving it as more exhausting than younger participants. Interestingly, older adults welcomed fatigue to a certain extent, whereas younger participants considered the increased physical effort as a negative aspect of the interaction. Gerling et al. (2012) created four static and four dynamic gestures for a full-body motion-control game in collaboration with a physical therapist in a nursing home, and found that playing the gestural game had a beneficial effect on participants’ mood. However, they also pointed out that recalling gestures was a difficult challenge for seniors. Hassani et al. (2011) developed a robot that helped seniors to perform physical exercises and compared two simple input modalities: touch and gesture-based. To move to the next exercise, participants had to tap a touch device or perform a next gesture. Participants rated the mid-air gesture interaction more positively compared to the touch interaction, suggesting that they found it an easy interaction modality.

When designing gesture interaction, social acceptability has to be considered, above all because politeness conventions for gestural use depend on the cultural context (Vaidyanathan and Rosenberg 2014). For example, Rico and Brewster (2010) examined the social acceptability of eight common body-based and device-based gestures, where the former involved body movements without using a mobile device (e.g., head nodding), while the latter involved touching or moving a mobile device (e.g., shaking a mobile phone). The authors found that both location and audience can significantly affect users’ willingness to perform gestures. In particular, locations providing more privacy and familiar audiences showed higher acceptability rates. Moreover, device-based gestures, which clearly showed that the action was related to the interaction with a mobile device, were more likely to be used than body-based gestures. Although participants in this study were aged from 22 to 55 for the survey-based study, and from 21 to 28 for the field study, these results suggest that gestures may be generally acceptable in public settings if they are clearly device-based. Few studies have investigated gesture acceptance among older adults, and those that did focused mainly on private, indoor settings. For example, Bobeth et al. (2012) used a questionnaire based on the technology acceptance model (TAM; Venkatesh and Bala 2008) to assess the acceptance of freehand gesture-based menu interactions in a private TV-control setting, and found a high level of acceptance in terms of usability, behavioral intention and enjoyment.

Memorability and fatigue (the gorilla arm effect; Boring et al. 2009) also deserve particular attention when designing gestures for seniors. Concerning memorability, Nacenta et al. (2013) found that user-defined gestures are easier to remember. With regard to fatigue, centralized positions of the arms with minimal joint extensions were found to be less tiring (Hincapié-Ramos et al. 2014).

3 The ECOMODE Project: A Series of Studies on Designing Mid-Air Gesture Interaction

Designing effective mid-air gesture interaction is challenging because current technology still lacks the robustness and reliability needed to cope with demanding environmental operating conditions: for example, neither video nor infrared technologies work reliably outdoors. To tackle these limitations, new sensing technology is under development in the ECOMODE project, funded by the EU H2020 programme. The project aims at realizing a new generation of low-power multimodal human-computer interfaces for mobile devices, combining voice commands and mid-air gestures, by exploiting an event-driven compressive (EDC) biology-inspired technology (Camunas-Mesa et al. 2012).

The design of the ECOMODE technology followed an iterative approach based on the user-centered framework (Maguire 2001): it started by investigating users’ needs and desires through reviewing the literature, testing commercial tools and organizing studies with target users (Mana et al. 2017) (see Fig. 6.1—Understand). The findings of this first step guided the interaction design, informing the design of the prototype, which was finally evaluated with experts and end users. The process was then iteratively repeated to further refine the design specifications and improve the technology, until the final version.

Here, we describe a series of studies that we carried out to understand the design space and how older users approach and perceive mid-air gesture interaction (Table 6.1).

3.1 Understanding the Design Space

To reach a global view of the boundaries of our design space, we carried out preliminary research with experts and older users (Ferron et al. 2015). We performed interviews with experts to investigate current practices concerning the use of digital technologies among older adults, their needs and desires, aiming to detect an appropriate use case for the development of multimodal (mid-air gestures and speech) interaction. Experts highlighted that, in their experience, older adults tended to regard the tablet as a means of entertainment and to maintain social connections, showing interest in learning to use the camera and to share pictures with others. We conducted a study involving six older adults, asking them to use smartphones and tablets to take photographs with a traditional touchscreen. In this preliminary study, we aimed at detecting common pitfalls that mid-air and speech interaction could overcome.

Both the interviews and our observations showed that older adults were generally interested in learning to use tablet PCs, especially if they support their needs (e.g., to communicate with relatives). However, experts also highlighted that hardware components of tablet PCs (e.g., on/off button, charging port) may be uncomfortable to use because they are too small or fragile. Moreover, dexterity issues may cause problems for senior citizens performing touch gestures and make it difficult to select small icons, whereas low vision may cause problems in reading small labels.

Finally, we confirmed that gestures designed for older adults should be natural and easy to memorize and perform, in order to make the technology more pleasant, inclusive and acceptable (Jayroe and Wolfram 2012; Pernice and Nielsen 2012).

3.2 Test with Commercial Tools

To investigate the characteristics of existing tools on the market targeted to older adults and employing different interaction modalities, we asked a pool of experts to test nine commercial tools based on touch, mid-air gestures and voice interaction. Four of these were tested on tablet (Eldy, Wave-o-rama, Breezie and Myo Air Gesture Armband), four on smartphone (Apple Siri, Air Gesture Control, Easy Smartphone and Big Launcher), and one on smartwatch (Samsung Gear 2 Neo). Four were specifically designed for elderly people and two of them (Easy Smartphone and Big Launcher) were launchers. Four systems (Myo, Wave-o-rama, Air Gesture Control, and Gear 2 Neo) included mid-air gesture interaction, although three were still limited at the time of the expert evaluation.

Then, in order to better explore UX and ergonomic aspects of mid-air gesture interaction in an entertainment context, we conducted an exploratory study with two participants employing the Myo Air Gesture Armband; it allows for controlling computer applications by a set of touchless gestures. We observed difficulties in remembering the sequence of gestures and in performing the unlock gesture, which participants found uncomfortable. Overall, participants considered the interaction modality interesting, fun and playful, but also not efficient. They suggested alternative applications of touchless interaction, e.g., remote control of applications with dirty hands. Furthermore, the study highlighted the importance of minimal physical effort (e.g., avoiding strong pressure or excessive rotation of the wrist and forearm). Similarly, complex mid-air gestures that are difficult to memorize (e.g., combination of more basic gestures) should be avoided or limited.

3.3 Design of a Gesture Set

We followed a participatory and task-based approach to design a usable and effective set of mid-air gestures (Nielsen et al. 2004). The chosen task was taking pictures, which had to be performed with a tablet held in one hand rather than resting on a surface. Three adults were involved. They worked separately, using the same tablet, a Samsung Galaxy Tab S with a 10.5″ display, and following the same list of subtasks (open the camera app, select a special effect, zoom in or out, take the picture, open the gallery, scroll back and forth). Each person was asked to freely design and then describe a mid-air gesture for each of the subtasks. The resulting mid-air gestures were analyzed by two usability experts, who selected among them and defined the final set (Fig. 6.2).

Some general considerations made by the three participants involved in this study were:

- the tablet PC is heavy (about 500 g with the cover); holding it with a single hand while performing mid-air gestures with the other one is tiring;
- it is fundamental to check the field of view of the camera on the tablet, to be sure that mid-air gestures performed not exactly in front of the tablet are also recognized;
- in order to understand whether a gesture has been recognized, it is crucial to receive feedback.

3.4 The Wizard of Oz Approach

In order to explore the UX of mid-air gesture interaction with mobile technology for elderly users, we conducted a series of studies implementing the Wizard-of-Oz (WoZ) approach (Green and Wei-Haas 1985), which has been shown valuable for developing gesture interfaces (Carbini et al. 2006). WoZ experiments simulate the response of an apparently fully functioning system, whose missing functions are supplemented by a human operator called “wizard”.

This approach offers the advantage of testing new user interface concepts before the technology is mature enough, considering different scenarios. It also facilitates gathering qualitative and quantitative data on user’s preferences and usage patterns, and depending on the study setup, it might enable creative responses. Older adults can contribute at various stages of the design process, for example providing their opinions on the prototypes or discussing features of future technology. The WoZ technique, which supports older adults in the physical exploration of technological prototypes, can also improve their engagement and participation in the design process (Schiavo et al. 2016).

3.5 Satisfaction and Comfort

To explore the perceived satisfaction and comfort of multimodal interaction—gestures + speech—with a tablet device, an exploratory study with WoZ was conducted by involving ten volunteers (5 females; M = 69; SD = 3.62). The participants were asked to perform multimodal interaction with a tablet device while taking pictures by using a set of predefined mid-air gestures and voice commands (Mana et al. 2017). The video recordings of the experimental sessions were analyzed to measure the distance between gesture and tablet device, as well as the distance between user’s face and tablet: most of the participants (8 out of 10) performed the gestures very close to the device (6–15 cm), whereas they held it at about 30–40 cm from the face.

When asked to self-report their satisfaction, participants gave high average ratings for taking photos with the tablet device using multimodal interaction: on a scale from 1 to 5, they reported a mean value of 4.4 (SD = 0.48). Four participants stated that they would use this device during an outdoor trip. On average, holding the tablet with one hand was not perceived as an obstacle (M = 4.10, SD = 0.99) and making gestures while using the tablet was considered quite comfortable (M = 3.75, SD = 1.03).

3.6 Mid-Air Gesture Interaction: Older Adults vs Middle-Aged and Younger Adults

To go deeper into how older adults approach mid-air gesture interaction, we explored with a WoZ study how older (65+), middle-aged (55–65 years old) and younger adults (25–35) used mid-air gestures and voice commands interaction in a common activity, such as taking photos with a tablet device. We proposed the use of both mid-air gestures and vocal commands to create a more natural interaction, even if here we focus specifically on mid-air gestures.

Thirty participants (10 for each age group, 5 females and 5 males for each group) were asked to take some pictures with a tablet by using the set of predefined mid-air gestures and voice commands (Mana et al. 2017). They were video-recorded, observed during the experimental session and interviewed at the end to collect their comments, feelings and preferences.

Results showed that all groups could correctly replicate the gestures presented in the training session, even if they performed the mid-air gestures with a certain variability. Moreover, probably due to their familiarity with touchscreen interfaces, younger participants tended to use only one finger (mostly their index finger) instead of the entire hand, performing small and rapid movements. On the contrary, older adults tended to exaggerate their movements, making wide and ample gestures with the whole hand. We found that participant remarks were generally positive regarding the comfort of performing the mid-air gesture with one hand and holding the tablet with the other. However, it should be taken into account that none of our participants (including older adults) reported any significant physical impairments and that the tablet device used in the study was lighter (272 g) compared to similar models in the market.

3.7 Data Collection of a Mid-Air Gestures Dataset

Within the ECOMODE project, we conducted a data collection aimed at building a dataset of mid-air gestures to be used for training the automatic recognition algorithm, involving 20 older adults (10 females; M = 71 years-old; SD = 8.61) (Ferron et al. 2018). We grouped our participants, according to Gregor and colleagues’ classification (2002), as follow: 13 were “fit older adults” (able to live independently, with no main disabilities), 6 were “frail older adults” (with one or more disabilities, or a general reduction of their functionalities), and 1 was a “disabled older adult” (with long-term disabilities). The prototype used for our data collection consisted of the ECOMODE camera attached to a tablet PC running an application that showed video descriptions of the multimodal gestures to be performed. The experimenter used the Mobizen mirroring application (https://www.mobizen.com/) to control the participant’s device from her notebook PC. Before starting the session, each participant was instructed about the distance to hold the device from the lips (about 30 cm) and about the distance from the camera to make the gesture.

During the data collection, three participants carried out the task sitting on a chair with the tablet PC placed on a table, due to physical problems (see Fig. 6.3). Of the remaining 17, most (13) tended to hold the tablet PC farther away (about 40–45 cm) than the recommended 30 cm, to have space for performing the hand gesture (Fig. 6.4). Moreover, the majority of participants (65%) performed the gestures too close to the camera to be appropriately recorded (see Fig. 6.5). Participants did not find any gesture complex to perform, but a certain variability (in particular in tablet orientation and gesture amplitude) was observed between subjects. About 60% of the elderly participants often performed the gestures partially out of the camera field of view. This issue and the previous one should guide the choice of the optics, and highlight the need to include appropriate feedback and feedforward.
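The relation between hand-to-camera distance and field of view can be made concrete with simple pinhole geometry. The following is a minimal sketch, not the ECOMODE implementation: the function names, the 60° field of view and the 30 cm gesture amplitude are illustrative assumptions, not values reported in the studies.

```python
import math


def visible_width_cm(distance_cm: float, fov_deg: float) -> float:
    """Width of the strip seen by the camera at a given distance.

    Assumes an ideal pinhole camera with a horizontal field of view
    of `fov_deg` degrees (hypothetical value, not the ECOMODE optics).
    """
    return 2.0 * distance_cm * math.tan(math.radians(fov_deg) / 2.0)


def gesture_fits(distance_cm: float, amplitude_cm: float,
                 fov_deg: float, lateral_offset_cm: float = 0.0) -> bool:
    """True if a gesture of the given amplitude stays inside the field of view.

    `lateral_offset_cm` is how far the centre of the gesture is from
    the optical axis of the camera.
    """
    half_width = visible_width_cm(distance_cm, fov_deg) / 2.0
    return abs(lateral_offset_cm) + amplitude_cm / 2.0 <= half_width


# A wide gesture performed very close to the camera is cut off,
# while the same gesture farther away is fully visible.
print(gesture_fits(distance_cm=10, amplitude_cm=30, fov_deg=60))
print(gesture_fits(distance_cm=40, amplitude_cm=30, fov_deg=60))
```

A check of this kind could drive feedforward, for example by prompting the user to move the hand away from the camera when the expected gesture amplitude does not fit in the visible region.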

Some users complained about the difficulty of holding the tablet without touching the screen, or being afraid of dropping it. Indeed, we noticed that they sometimes put the thumb on the screen (see Fig. 6.5), preventing a correct interaction. For this reason, a new case for the ECOMODE camera was designed (see Fig. 6.6).

3.8 Fatigue and Mid-Air Gesture Interaction

To further understand how fatigue increases with interaction time, we investigated with a group of 17 older adults (8 females; M = 69 years-old; SD = 7.3) the fatigue they perceived while performing mid-air gestures in front of the ECOMODE prototype. In particular, we asked participants to carry out a series of predefined interaction tasks. At three intervals of three minutes each, we asked participants to score their perceived exertion on the Italian version of Borg’s CR10 Scale (Borg 1998), which is tailored to physical exertion and maps 10 numeric ratings to verbal cues on a Likert-scale question: “How do you perceive your effort, from 1 to 10 (1 = no effort; 10 = extremely high effort)?” A repeated measures ANOVA showed that the perceived exertion significantly increased between time-points (F(2, 26) = 21.8; p < 0.001; Mean fatigue at minute 3 = 2.5, SD = 1.9; Mean fatigue at minute 6 = 3.6, SD = 2.2; Mean fatigue at minute 9 = 4.8, SD = 3). This suggests that a short continuous multimodal interaction (i.e. < 6 min) could be feasible for older adults. Taking into account that in realistic application contexts a continuous prolonged interaction is unlikely, multimodal interaction can be considered a practical way of interacting with technology for the elderly population.
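For readers unfamiliar with the analysis, the F statistic of a one-way repeated measures ANOVA can be computed as sketched below. This is a minimal illustration using only the standard library; the function name and the toy ratings are invented for the example and are not the data collected in the study.

```python
from typing import List, Tuple


def rm_anova_f(scores: List[List[float]]) -> Tuple[float, int, int]:
    """One-way repeated measures ANOVA.

    `scores[i][j]` is the rating of subject i at time-point j.
    Returns (F, df_condition, df_error).
    """
    n = len(scores)        # number of subjects
    k = len(scores[0])     # number of time-points
    grand = sum(sum(row) for row in scores) / (n * k)

    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Between-subject variability is removed from the error term.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Toy ratings (hypothetical): 4 subjects rated at minutes 3, 6 and 9.
toy = [[1, 2, 3], [2, 3, 5], [1, 3, 4], [2, 2, 4]]
f_value, df1, df2 = rm_anova_f(toy)
print(f"F({df1}, {df2}) = {f_value:.1f}")
```

The p-value would then be read from the F distribution with the returned degrees of freedom, which a statistics package normally provides.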

3.9 Unfolding the Values of Mid-Air Gesture Interaction

In the previous studies, we investigated mid-air gestures for older adults particularly from the interaction perspective. To move a further step toward the design of mid-air gesture interaction, in another study we explored the perceived benefits and values related to mid-air gestures used for accomplishing a task resembling an example of daily activity for older adults, i.e. mobile photography, by implementing the UX Laddering technique (Vanden Abeele and Zaman 2009) with 22 older participants (12 females; M = 70 years-old; SD = 6.83).

UX Laddering builds upon the Means-End Theory proposed by Gutman (1982), according to which people choose a product because its attributes are instrumental to achieving certain consequences and fulfilling personal values. One valuable elicitation method for identifying attributes, consequences and values related to a product is laddering (Reynolds and Gutman 1988), an in-depth, one-to-one interviewing method that comprises both qualitative (interviewing) and quantitative techniques (matrix processing) for data acquisition and analysis. Through UX Laddering it is possible to elicit concrete and abstract attributes, functional and psychosocial consequences, and values related to user experiences. In our study, we investigated mid-air gesture interaction in three consecutive phases: (1) product interaction, in which participants used both touchscreen and mid-air gesture interaction (implemented with the WoZ technique) to take pictures of the surrounding environment; (2) preference ranking, in which participants indicated their preferred interaction modality; and (3) laddering interview, in which participants explained their preferences. In addition, to enhance subsequent recall (Kurtz and Hovland 1953), we asked participants to verbalize their thoughts and sensations during the interaction phase, and we elicited alternative situational contexts using photo prompts during the laddering interview.

A preliminary analysis of the collected data highlighted specific attributes of mid-air gesture interaction that might be particularly valuable for older adults. One of them is the fact that mid-air interaction does not require fine movements, which was connected to higher accessibility and, in particular contexts, to safety (e.g., while driving). Moreover, participants appreciated that mid-air gestures allowed them to interact with the device with unclean hands (e.g., while cooking), which they related to a reduced likelihood of damaging the device. In addition, they valued the fact that mid-air gestures are a cleaner interaction modality than the touchscreen.
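The quantitative side of laddering (matrix processing) typically starts by counting how often one element leads directly to another across all interviews. The sketch below illustrates that counting step under simplifying assumptions: the function name is hypothetical, only direct links are counted, and the example ladders are paraphrased from the attributes described above rather than taken from the actual interview data.

```python
from collections import Counter
from typing import Dict, List, Tuple


def implication_matrix(ladders: List[List[str]]) -> Dict[Tuple[str, str], int]:
    """Count direct links (element -> next element) across all ladders.

    Each ladder is an ordered chain, from attribute through
    consequence(s) to value, as elicited in one interview.
    """
    links: Counter = Counter()
    for ladder in ladders:
        for lower, higher in zip(ladder, ladder[1:]):
            links[(lower, higher)] += 1
    return dict(links)


# Illustrative ladders (hypothetical, not the collected data).
ladders = [
    ["no fine movements", "accessibility", "safety"],
    ["no fine movements", "accessibility"],
    ["touchless", "usable with unclean hands", "less risk of damage"],
]

for (lower, higher), count in implication_matrix(ladders).items():
    print(f"{lower} -> {higher}: {count}")
```

Links that recur across many participants are the ones usually retained when the dominant attribute-consequence-value chains are summarized.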

4 Recommendations for the Design of Mid-Air Gesture Interaction for Older Adults

Based on the user studies and the observation of user interactions, we reviewed the initial gesture set and revised it according to user feedback and ergonomic guidelines. For each gesture (Fig. 6.2), Table 6.2 reports the positive and negative aspects that emerged from the user studies, and how we dealt with these issues for the second release of the gesture set.

Elaborating on the results of our studies, the observations of user interactions and the users’ comments, and building on previous research, we derive the following recommendations for the design of mid-air gestural interfaces for older adults, although we believe they can also be extended to average users.

Prefer Human-Based Over Technology-Based Gesture Sets. One common approach to defining a gesture set is to implement gestures that are easy for the computer’s recognition algorithms to recognize. However, this often results in a number of difficulties for the user, such as fatigue, difficulty in performing the gestures, memorability issues and illogical functionality. Gestures should be designed with a user-centered approach, possibly involving users in participatory design sessions and taking into account relevant characteristics of the users (Kortum 2008). Gestures should be intuitive, metaphorically logical with respect to their function, and easy to remember without hesitation (Hurtienne et al. 2010; Kortum 2008; Schiavo et al. 2017). Also, when designing sequences of gestures (e.g., vertical or horizontal scrolling), different commands should require only small adjustments in order to reduce cognitive load and physical effort.

Respect Ergonomic Principles and Biomechanics of the Hand. From the physical point of view, the design of mid-air gesture interfaces for older adults should take into account poor manual dexterity, reduced eyesight and auditory capabilities, and slow gesturing speed. Gestures should not be physically stressful and should avoid static and dynamic constraints (Eaton 1997; Ferron et al. 2015; Keir et al. 1998), as well as outer positions and excessive force on joints. Recognition algorithms should be tolerant of non-stressful movements, so that users do not have to remain in static positions for long.

Find a Contextually Appropriate Way to Reduce False Positives. It is important for the system to know when to start and stop interpreting gestures. If the goal is a natural immersive experience, the system should be very tolerant of spontaneous gestures. Unfortunately, this is technically difficult. A solution is to adopt an unlock command, or clutch, which puts the device into a tracking state (Kortum 2008). However, our exploratory study with the Myo Air Gesture Armband with a 76-year-old woman (see Sect. 3.2) suggested that it may be hard for seniors to (1) remember to do the mid-air clutch gesture before every command, and (2) perform the sequence “clutch + command” in a timely manner. For these reasons, we suggest the use of a different input channel for the clutch, such as a voice command or a physical button.
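One way to picture a clutch on a separate input channel is as a small state machine that accepts gestures only while tracking is active. The sketch below is an illustrative assumption, not the ECOMODE design: the class name, the 5-second window and the choice to extend the window after each accepted gesture (so that the clutch is not needed before every command) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ClutchedGestureInput:
    """Accept gestures only within a time window opened by a clutch.

    The clutch is assumed to arrive on another channel (voice command
    or physical button); timestamps are passed in as seconds.
    """
    timeout_s: float = 5.0                      # assumed tracking window
    accepted: List[str] = field(default_factory=list)
    _active_since: Optional[float] = field(default=None, init=False)

    def press_clutch(self, now: float) -> None:
        """Enter the tracking state (e.g. on a button press)."""
        self._active_since = now

    def on_gesture(self, name: str, now: float) -> bool:
        """Return True if the gesture is interpreted as a command."""
        if self._active_since is None or now - self._active_since > self.timeout_s:
            self._active_since = None           # window closed: ignore movement
            return False
        self.accepted.append(name)
        self._active_since = now                # extend the window
        return True


inp = ClutchedGestureInput()
print(inp.on_gesture("swipe", now=0.0))   # spontaneous movement, ignored
inp.press_clutch(now=1.0)
print(inp.on_gesture("swipe", now=2.0))   # accepted while tracking
```

Keeping the window open after an accepted gesture is one possible response to the difficulty of performing "clutch + command" in a timely manner; the appropriate timeout would need to be determined with users.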

Feedforward and Feedback. While feedforward helps the user to decide what actions to carry out and informs about the sensor’s field of view, feedback informs the user about the system status (Vermeulen et al. 2013). Consistent with previous research (Cabreira and Hwang 2016), we observed that it can be challenging for older adults to know where to perform the mid-air gestures, which gestures are available and how to perform them (see Sect. 4.6). Even if recent works have proposed different types of feedback and feedforward (e.g. Delamare et al. 2016), more research is needed on this topic.

Allow Personalization. Our studies (Ferron et al. 2018) showed substantial intersubject variability in the performance of mid-air gestures, which is consistent with previous works (Carreira et al. 2016). Since each user has his/her own preferences regarding how to perform a gesture (e.g., amplitude, speed or distance), it is recommended to allow manual or automatic dynamic thresholds that can be individually tailored.
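An individually tailored threshold can be as simple as scaling a detection threshold to what each user typically does. The sketch below assumes a short calibration phase in which the user performs a few sample gestures; the function names, the use of the median and the 0.5 scaling fraction are illustrative choices, not parameters from our studies.

```python
from statistics import median
from typing import Sequence


def personal_threshold(calibration_amplitudes_cm: Sequence[float],
                       fraction: float = 0.5) -> float:
    """Minimum amplitude treated as an intentional gesture for one user.

    The threshold is a fraction of the user's median amplitude during
    calibration, so it adapts to wide or small gesturing styles.
    """
    if not calibration_amplitudes_cm:
        raise ValueError("at least one calibration sample is required")
    return fraction * median(calibration_amplitudes_cm)


def is_intentional(amplitude_cm: float, threshold_cm: float) -> bool:
    """True if a movement is large enough for this user's threshold."""
    return amplitude_cm >= threshold_cm


# Hypothetical users: one makes wide whole-hand gestures,
# the other small finger movements.
wide_user = personal_threshold([20, 30, 40])
small_user = personal_threshold([4, 6, 8])
print(is_intentional(5, wide_user), is_intentional(5, small_user))
```

The same 5 cm movement is ignored for the first user and accepted for the second, which is the behavior a fixed global threshold cannot provide. The same idea extends to speed or distance by calibrating one threshold per dimension.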

Design for Fun, Daily Use and Social Connectivity. Our expert interviews and user studies (Ferron et al. 2015) highlighted older adults’ preference for recreational use of tablet devices, along with initial curiosity and enthusiasm, which, however, give way to accessibility issues and fears of damage after the first interactions. In response to users’ needs, the development of mid-air gesture interaction could be directed towards daily and recreational use of tablet devices, such as photography, social connectivity, news reading and Internet surfing, which has not received much attention yet (Carreira et al. 2016). New interaction modalities that take into account the particular needs of the ageing population and focus on empowering seniors rather than helping them (Rogers and Marsden 2013) are still missing. By overcoming at least some of the accessibility pitfalls of touch and mouse-based interaction, mid-air gesture interaction could sustain mobile technology appropriation and provide additional means for fun.

5 Conclusion

The goal of this chapter was to provide a unique perspective on the design of effective mid-air gesture interaction with mobile devices for older adults, by integrating research on HCI and ergonomic principles with research on ageing, as well as taking into account user-centred design methods to meet older people’s needs and values.

We reported on how we implemented the design of mid-air gesture interaction in ECOMODE, engaging older users as primary actors who provided feedback at each step of the process. We discussed the most prominent design challenges of mid-air gesture interaction and presented a number of user studies with older adults, following a user-centred and value-based design approach. Finally, we proposed a set of recommendations for the design of mid-air gesture interaction for older adults, based on the literature and on our case studies. Mid-air gestures have the potential to make interaction not only fun, but also easy, intuitive and natural for older adults. However, making mobile technology more inclusive requires the active engagement of older people in envisioning the design of such technologies in ways that not only improve accessibility, but also sustain the activities they care about.

References

Bobeth J, Schmehl S, Kruijff E et al (2012) Evaluating performance and acceptance of older adults using freehand gestures for TV menu control. In: Proceedings of the 10th European conference on interactive TV and video. ACM, pp 35–44

Bødker S (2006) When second wave HCI meets third wave challenges. In: Proceedings of the 4th Nordic conference on human-computer interaction: changing roles. ACM, pp 1–8

Borg G (1998) Borg’s perceived exertion and pain scales. Human Kinetics, Champaign

Boring S, Jurmu M, Butz A (2009) Scroll, tilt or move it: using mobile phones to continuously control pointers on large public displays. In: Proceedings of the 21st annual conference of the Australian computer-human interaction special interest group: design: open 24/7. ACM, pp 161–168

Cabreira AT, Hwang F (2016) How do novice older users evaluate and perform mid-air gesture interaction for the first time? Paper presented at the proceedings of the 9th Nordic conference on human-computer interaction, Gothenburg, Sweden

Camunas-Mesa L, Zamarreno-Ramos C, Linares-Barranco A et al (2012) An event-driven multi-kernel convolution processor module for event-driven vision sensors. IEEE J Solid-State Circuits 47:504–517

Carbini S, Delphin-Poulat L, Perron L et al (2006) From a Wizard of Oz experiment to a real time speech and gesture multimodal interface. Sig Process 86:3559–3577

Carreira M, Ting KLH, Csobanka P et al (2016) Evaluation of in-air hand gestures interaction for older people. Univers Access Inf Soc 16:1–20

Chen J, Proctor RW (2013) Response–Effect compatibility defines the natural scrolling direction. Hum Factors 55:1112–1129. https://doi.org/10.1177/0018720813482329

Cockburn A, Quinn P, Gutwin C et al (2011) Air pointing: design and evaluation of spatial target acquisition with and without visual feedback. Int J Hum Comput Stud 69:401–414

Cronin D (2014) Usability of micro- vs. macro-gestures in camera-based gesture interaction. Thesis, California Polytechnic State University, San Luis Obispo

Delamare W, Janssoone T, Coutrix C et al (2016) Designing 3D gesture guidance: visual feedback and feedforward design options. In: Proceedings of the international working conference on advanced visual interfaces. ACM, pp 152–159

Eaton C (1997) Electronic textbook on hand surgery. http://www.eatonhand.com

England D (2011) Whole body interaction: an introduction. In: Whole body interaction. Springer, London, pp 1–5

Ferron M, Mana N, Mich O (2015) Mobile for older adults: towards designing multimodal interaction. In: Proceedings of the 14th international conference on mobile and ubiquitous multimedia. ACM, pp 373–378

Ferron M, Mana N, Mich O et al (2018) Design of multimodal interaction with mobile devices. Challenges for visually impaired and elderly users. In: 3rd international conference on human computer interaction theory and applications (HUCAPP). Funchal, Madeira, pp 140–146

Gerling K, Dergousoff K, Mandryk R (2013) Is movement better? Comparing sedentary and motion-based game controls for older adults. In: Proceedings of graphics interface 2013. Canadian Information Processing Society, pp 133–140

Gerling K, Livingston I, Nacke L et al (2012) Full-body motion-based game interaction for older adults. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, pp 1873–1882

Green P, Wei-Haas L (1985) The rapid development of user interfaces: experience with the wizard of Oz method. In: Proceedings of the human factors society annual meeting. Sage, Los Angeles, CA, pp 470–474

Gutman J (1982) A means-end chain model based on consumer categorization processes. J Mark 46:60–72

Harrison S, Sengers P, Tatar D (2011) Making epistemological trouble: third-paradigm HCI as successor science. Interact Comput 23:385–392

Hassani AZ, Van Dijk B, Ludden G et al (2011) Touch versus in-air hand gestures: evaluating the acceptance by seniors of human-robot interaction. In: International joint conference on ambient intelligence. Springer, pp 309–313

Hincapié-Ramos JD, Guo X, Moghadasian P et al (2014) Consumed endurance: a metric to quantify arm fatigue of mid-air interactions. In: Proceedings of the 32nd annual ACM conference on human factors in computing systems. ACM, pp 1063–1072

Hurtienne J, Blessing L (2007) Design for intuitive use-testing image schema theory for user interface design. In: 16th international conference on engineering design. Citeseer, Paris, France, pp 829–830

Hurtienne J, Stößel C, Sturm C et al (2010) Physical gestures for abstract concepts: Inclusive design with primary metaphors. Interact Comput 22:475–484. https://doi.org/10.1016/j.intcom.2010.08.009

Jayroe TJ, Wolfram D (2012) Internet searching, tablet technology and older adults. Proc Am Soc Inf Sci Technol 49:1–3

Keir PJ, Bach JM, Rempel DM (1998) Effects of finger posture on carpal tunnel pressure during wrist motion. J Hand Surg 23:1004–1009

Kortum P (2008) HCI beyond the GUI: design for haptic, speech, olfactory, and other nontraditional interfaces. Morgan Kaufmann, Burlington

Kurtz KH, Hovland CI (1953) The effect of verbalization during observation of stimulus objects upon accuracy of recognition and recall. J Exp Psychol 45:157

Maguire M (2001) Methods to support human-centred design. Int J Hum Comput Stud 55:587–634

Mana N, Mich O, Ferron M (2017) How to increase older adults’ accessibility to mobile technology? The new ECOMODE camera. Paper presented at ForItAAL-Forum Italiano Ambient Assisted Living, Genoa, Italy

Markussen A, Jakobsen MR, Hornbæk K (2014) Vulture: a mid-air word-gesture keyboard. Paper presented at the proceedings of the 32nd annual ACM conference on human factors in computing systems, Toronto, ON, Canada

Nacenta MA, Kamber Y, Qiang Y et al (2013) Memorability of pre-designed and user-defined gesture sets. Paper presented at the proceedings of the SIGCHI conference on human factors in computing systems, Paris, France

Ni T, Bowman DA, North C et al (2011) Design and evaluation of freehand menu selection interfaces using tilt and pinch gestures. Int J Hum Comput Stud 69:551–562

Nielsen M, Störring M, Moeslund TB et al (2004) A procedure for developing intuitive and ergonomic gesture interfaces for HCI. In: Camurri A, Volpe G (eds) Gesture-based communication in human-computer interaction. Springer, Heidelberg, pp 409–420

Pernice K, Nielsen J (2012) Web usability for senior citizens: design guidelines based on usability studies with people age 65 and older. Nielsen Norman Group

Reynolds TJ, Gutman J (1988) Laddering theory, method, analysis, and interpretation. J Advert Res 28:11–31

Rico J, Brewster S (2010) Usable gestures for mobile interfaces: evaluating social acceptability. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, pp 887–896

Rogers Y, Marsden G (2013) Does he take sugar? Moving beyond the rhetoric of compassion. Interactions 20:48–57

Ruiz J, Li Y, Lank E (2011) User-defined motion gestures for mobile interaction. Paper presented at the proceedings of the SIGCHI conference on human factors in computing systems, Vancouver, BC, Canada

Ryan EB, Szechtman B, Bodkin J (1992) Attitudes toward younger and older adults learning to use computers. J Gerontol 47:P96–P101

Schiavo G, Mich O, Ferron M, Mana N (2017) Mobile multimodal interaction for older and younger users: exploring differences and similarities. In: Proceedings of the 16th international conference on mobile and ubiquitous multimedia. ACM, pp 407–414

Schiavo G, Ferron M, Mich O et al (2016) Wizard of Oz studies with older adults: a methodological note. Int Rep Socio-Inform 13:93–100

Vaidyanathan V, Rosenberg D (2014) “Will use it, because I want to look cool” a comparative study of simple computer interactions using touchscreen and in-air hand gestures. In: International conference on human-computer interaction. Springer, pp 170–181

Vanden Abeele V, Zaman B (2009) Laddering the user experience! In: User experience evaluation methods in product development (UXEM 2009)-workshop

Venkatesh V, Bala H (2008) Technology acceptance model 3 and a research agenda on interventions. Decis Sci 39:273–315

Vermeulen J, Luyten K, van den Hoven E, Coninx K (2013) Crossing the bridge over Norman’s gulf of execution: revealing feedforward’s true identity. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, pp 1931–1940

Wigdor D, Wixon D (2011) Brave NUI world: designing natural user interfaces for touch and gesture. Elsevier, Amsterdam

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ferron, M., Mana, N., Mich, O. (2019). Designing Mid-Air Gesture Interaction with Mobile Devices for Older Adults. In: Sayago, S. (eds) Perspectives on Human-Computer Interaction Research with Older People. Human–Computer Interaction Series. Springer, Cham. https://doi.org/10.1007/978-3-030-06076-3_6

DOI: https://doi.org/10.1007/978-3-030-06076-3_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-06075-6

Online ISBN: 978-3-030-06076-3

eBook Packages: Computer Science (R0)