Abstract

Alzheimer’s disease (AD) currently affects over 36 million people worldwide, without any approved disease-modifying products capable of slowing or arresting the disease, and prevalence expected to triple by 2050. Clinical trial failures attributable to design aspects could be reduced by developing drug-disease-trial (DDT) models suitable for clinical trial simulations (CTSs). To achieve this, noncompetitive sharing of standardized patient-level data is critical. Initiatives such as the Alzheimer’s Disease Neuroimaging Initiative (ADNI) or Critical Path Institute’s Coalition Against Major Diseases (CAMD) database have become foundational to the quantitative understanding of disease progression and clinical trial design aspects in AD. Understanding the performance of cognitive and functional outcome measures is also fundamental to the development of applicable CTS tools; especially under the current state of affairs, in which efforts are now being focused on pre-dementia stages (i.e., mild cognitive impairment, MCI). These CTS tools will likely be more successful under noncompetitive collaborations, and utilizing expertise and learnings from health authorities, sponsors, and academia, the CTS tool builds on key findings and structural elements from previous researchers while providing further refinements. This chapter describes relevant efforts in modeling and simulation utilizing DDT models in AD, focusing on cognition. This includes data considerations and descriptions of relevant public data sources available for AD model developers. It includes a brief description of previous work in the field, along with a description of common elements contained within DDT models currently used for CTS in AD. Examples of applications for study planning and study interpretation among other potential uses are also included. It concludes with a look at potential future applications of CTS in AD and areas for growth.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Alzheimer’s disease

- Clinical pharmacology

- Pharmacometrics

- Clinical trial simulation

- Drug disease trial model

- Drug development

15.1 Introduction

Alzheimer’s disease (AD) affects 35 million patients worldwide, with an expected increase to 150 million within the next generation (World Alzheimer’s Report 2010; Schneider and Sano 2009).

Recent late phase failures for candidate drugs for AD highlights the importance of developing more informative tools to increase the efficiency of the decision-making process (Schneider and Sano 2009). Currently available evidence suggests the initiating event in AD is related to abnormal processing of beta-amyloid (Abeta) peptide, ultimately leading to the formation of Abeta plaques in the brain. Jack et al. have proposed an overarching model that relates disease stage to AD biomarkers in which Abeta biomarkers become abnormal first, before neurodegenerative biomarkers and cognitive symptoms, and neurodegenerative biomarkers become abnormal later, and correlate with clinical symptom severity. This process can begin decades prior to any clinical signs of diminished cognition.

Ideally, a quantitative understanding of the time course of disease progression (cognitive and functional deterioration), and the relevant sources of variability would be the most useful for drug development . While the ability to detect and analyze biomarkers in the cerebrospinal fluid (CSF) related to Abeta and Tau have emerged over the past decade, limited longitudinal data are yet available to completely quantify each of the curves above. In addition, the ability of these biomarkers (Abeta, Tau, and brain structure) to translate into clinical outcome has not yet been determined. Attempts at meta-analytics for these endpoints are further hampered by factors such as interlaboratory assay and imaging algorithm differences. On the other hand, a wealth of information is available for the clinical manifestations, especially memory or cognition. As such, the majority of work involving clinical trial simulation (CTS) on AD has involved the primary clinical outcomes typically measured in these studies—function and cognition. While various tests have been used to measure functional changes across clinical studies, the Alzheimer’s disease assessment scale cognitive sub-scale (ADAS-cog) has been used almost universally in trials of mild and moderate AD patients to measure changes in cognition. As a result, the majority of CTS activities in AD have focused on variants of the ADAS-cog.

Assumptions about disease progression and the time-variant effects of placebo and existing drug treatments for AD form the basis for various decisions made in AD drug development, including decisions relating to trial design and analysis (Rogers et al. 2012). While ad hoc synthesis of estimates from a small number of trials can, in some cases, form sufficient evidence base for such assumptions, it is a generally a more informative and objective approach to concisely summarize all available and relevant data with the aid of a meta-analytic model (Rogers et al. 2012). Such a meta-analytic synthesis is particularly relevant in AD , where extensive historical data are available (Romero et al. 2009, 2011; Sheiner 1997). Moreover, models may be used to interpolate expected results and to simulate data under conditions that have not been previously studied, e.g., when sampling at different time points or when enrolling patients with a different set of covariates (Rogers et al. 2012). Such approaches also allow the incorporation of different sequences of active treatment and placebo (like staggered start or delayed withdrawal designs), while accounting for residual effects for both active treatment and placebo (Rogers et al. 2012; Holford and Peace 1992).

Standard statistical analysis methods (ANOVA, ANCOVA) are typically used for the predefined primary analysis of the results of the active treatment and control arms at the end of the randomized phase of trials in AD (Holford and Peace 1992). These approaches are also used for post-hoc subgroup analyses (mild vs. moderate ApoE4, carrier vs. noncarrier, background therapy vs. no background therapy, etc.) following large late-stage failed trials. In many cases these post-hoc analyses have lead to further development activities in these subgroups, often resulting in further failures. In addition to its role in CTS, a meta-analytic model can provide a useful informed prior consent when attempting to understand such post-hoc analyses.

This chapter describes relevant efforts in modeling and simulation-utilizing drug-disease-trial (DDT) models in AD (Gobburu and Lesko 2009), focusing on cognition. This includes data considerations and descriptions of relevant public data sources available for AD model developers. It includes a brief description of previous work in the field, along with a description of common elements contained within DDT models currently used for CTS in AD. Examples of applications for study planning and study interpretation among other potential uses are also included. It concludes with a look at potential future applications of CTS in AD and areas for growth.

15.2 Data Considerations

The data used to aid in the design of a clinical trial can come from a variety of sources. A team may use past and recent literature to inform them about expected treatment effects and current study designs in use. They may have patient-level data in their organization that informs them about expected intrasubject variability, intersubject variability, and interoccasion variability (Milligan et al. 2013). They often have past clinical trial experiences that they draw from (which varies between individuals). The team designing a clinical trial will attempt to implicitly integrate all of this information to form conclusions about what design is likely to be the best for the stage of development and the compound in question. The broader the data source(s) used with respect to patient types, study durations and designs, and patient inclusion/exclusion criteria, the more “rugged” the final model is likely to be.

Standardized quality data sources remain a significant hurdle to developing and implementing a longitudinal DDT model (Romero et al. 2009, 2011). Often, when pooling across different data sources (different studies, different programs, different sponsor), a significant amount of effort and resources are required to ensure that common standards for data collection and scoring have been adhered to (Romero et al. 2009, 2011). Small changes in something as simple as how missing scores are handled can lead to increased noise within the dataset. Often, an arduous remapping process may need to occur (if item level data are available). In addition, standards between analysts are likely to differ, making it nearly impossible to merge additional relevant datasets, without another lengthy remapping process.

In an ideal scenario, data standards would be applied a priori so that data are collected, scored, and recorded in a standardized form. The field of drug development for AD is at the forefront, being the first to have generated therapeutic area standards in this area, in a form accepted by FDA (Romero et al. 2009, 2011).

15.2.1 Relevant Data Sources for Modeling and Simulation in AD

Researchers aiming to develop a quantitative understanding of AD disease progression and drug effects, often start with data within their own organizations, or other proprietary data that have been made available to them . Often, however, they find that the data they have are limited in one or more ways, such as by limited numbers in subsets of interest (disease severity, genotype, biomarker classification). Generally, developers will utilize one or more of the number of large available data sources in AD, which may provide robust information to inform the different components of the DDT.

15.2.1.1 Literature Data

In the field of AD, a wealth of literature data from many different clinical trials and observational studies are readily available that can contribute to the development of quantitative modeling and simulation tools . While limited in its value for determining impact of individual patient covariates of disease or drug effects, it can provide valuable estimates of drug effects (size, onset, offset), disease progression within a trial, etc.

15.2.1.2 ADNI Studies

The longitudinal Alzheimer’s Disease Neuroimaging Initiative (ADNI; http://www.adni-info.org/) was launched in 2003 by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the US Food and Drug Administration (FDA), private pharmaceutical companies, and nonprofit organizations, initially as a 5-year public–private partnership (Weiner et al. 2012). Since 2005, the longitudinal ADNI has been validating the use of biomarkers including blood tests, tests of CSF, and magnetic resonance imaging–positron emission tomography (MRI–PET) imaging for AD clinical trials and diagnosis . Now in its third phase (ADNI, ADNI GO, and ADNI 2), ADNI 2 is studying the rate of change of cognition, function, brain structure, and biomarkers in 150 elderly controls, 450 subjects with mild cognitive impairment (MCI), 150 with mild-to-moderate AD, and a new group of 100 people with significant, yet subtle, memory complaints, referred to as the significant memory concern cohort. It has also added whole genome sequences (WGS) for 809 ADNI participants. Similar studies have also been launched in other regions, such as Japan (J_ADNI). As such, the ADNI study series will continue to be a rich and complete source of data on the natural history of AD at various stages .

15.2.1.3 The Coalition Against Major Diseases Database

Coalition Against Major Diseases (CAMD) is a formal consortium of pharmaceutical companies, research foundations, and patient advocacy/voluntary health associations, with advisors from government research and regulatory agencies including the FDA, the European Medicines Agency (EMA), the National Institute of Neurological Disorders and Stroke (NINDS), and the NIA. The CAMD is led and managed by the nonprofit Critical Path Institute (C-Path), which is funded by a cooperative agreement with the FDA (Romero et al. 2009, 2011) .

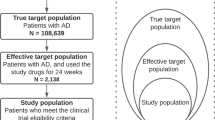

The CAMD database represents patient-level data from the control arms from phase II and III clinical trials in patients with MCI as well as mild and moderate Alzheimer’s dementia. As of September 2014, the CAMD database represents >6500 individual patients. Access to this database can be requested at www.codr.c-path.org. It is a rich source of control-arm data for the model developer.

In addition, CAMD partnered with the Clinical Data Interchange Standards Consortium (CDISC) to develop a standard for data collection in CDISC form. This AD standard represents the first-ever therapeutic area standards in CIDSC form. The intended advantage of such a standard is that it not only serves the purpose of integrating data from legacy clinical trials but it is also suited for prospective data collection in new trials, foreseeing the coming FDA requirement for data to be in CDISC standard form by 2017.

15.3 Summary of Disease Progression Models for ADAS-cog to Date

15.3.1 Historical Progression of AD Models

Various disease progression models for clinical outcomes in AD have been published (Holford and Peace 1992; Chan and Holford 2001) and the methods utilized in early publications laid the groundwork for future modeling work (Mould et al. 2007). Newer work provides further improvement and increased complexity and continues to build on past researchers findings, but incorporates newer, broader data types and sources, and utilizes new modeling methodologies resulting in an evolution of models over time (Table 15.1) .

Early models were based on a limited number of trials of short duration used to evaluate symptomatic agents and did not contain newer key data types such as genotype and biomarker information, now known to be important covariates in understanding the rate of disease progression (Atchison et al. 2007). Later models described utilizing a variety of data types including summary level data from literature sources, data directly from one or more of a related series of controlled clinical trials, or noninterventional natural history studies. Rogers et al. have attempted to integrate all these sources in one analysis.

Historical models primarily described AD disease progression as linear, which was sufficient for simulation of trials of the shorter durations used for the development of symptomatic agents. The Ito literature model identified that the severity of the disease itself influenced the slope, and thus the slope changed over time (introducing nonlinearity). More recent models have directly incorporated nonlinear relationships to describe the course of disease over time .

In addition, these models lacked certain structural features that would improve their use for CTS, such as constraining the limits of the ADAS-cog (0–70), and allowing for variance components to change over time (an essential feature if the model is to be used for CTS of disease progression for AD).

The models described in the literature also improved with respect to all the components typically required for a DDT model. A DDT model that includes all these components would require underlying data that can inform each of the various trial components in the model. For example, natural history data to inform underlying disease progression, placebo arm data to inform about magnitude, onset and offset of placebo response in controlled clinical trials, estimates of various drug effects (magnitude, time to onset, and durability), rate and magnitude of dropouts in the trials, and a rich source of covariates for model building. Over time, more and more of these components have been added in .

A brief description of more recent work is provided below.

15.3.2 Model-Based AD Literature Meta-Analyses

15.3.2.1 Ito (2010)

Ito et al. (2010) applied a model-based meta-analysis to summary level data available in the literature to quantify the dependence of rates of progression on baseline ADAS-cog scores . In this analysis, a systematic literature review from 1990 to 2008 for all available AChE inhibitor studies as well as clinical studies that evaluated the rate of deterioration in AD patients was conducted. From 52 trials, which represented approximately 19,992 patients and more than 84,000 individual observations, a total of 576 mean ADAS-cog change-from-baseline data points were collected. Based on the data available from these articles, a model was developed to describe the longitudinal response in ADAS-cog (change from baseline) in mild-to-moderate severity AD patients. The model described the rate of disease progression, the placebo effect observed, and the symptomatic effect of AChE inhibitors. Baseline ADAS-cog, mini-mental state examination (MMSE), age, and publication year were tested as covariates .

Ito’s model reports that disease progression in mild-to-moderate AD patients across all available and relevant literature sources was estimated at 5.5 ADAS-cog units per year. An Emax-type model best described the symptomatic drug effect for AChE inhibitors. The rate of disease progression (underlying disease progression) was not different between placebo and AChE-inhibitor-treated groups. Unlike previous modeling work, which did not include covariates, Ito’s model identified baseline ADAS-cog as significant covariate on disease progression. Baseline age was also tested as a covariate on the rate of disease progression but the model was not able to describe any effect, likely due to the narrow distribution of mean age (literature-level analysis). There was no significant impact of publication year in the model.

The literature-based meta-analyses provided a useful and complete integration of the estimated natural history of AD and provided estimates of treatment effects for currently available AChE-inhibitor therapies. However, due to the nature of the literature data in that it is only study-level summary data; the model had limited ability to evaluate important individual covariates, such as age and ApoE4 genotype. Also, the meta-analysis model from the literature using study-level data neither provides intersubject variability information nor includes components for increasing variance over time .

15.3.3 Patient-Level Models

15.3.3.1 Ito ADNI Model (2011)

In 2011, Ito et al. published a patient-level model-based meta-analysis to describe the longitudinal response in ADAS-cog obtained from the ADNI (Ito et al. 2011). The model was fit to the longitudinal ADAS-cog scores from 889 patients. Risk factors (age, ApoE4 genotype, sex, family history of AD, and years of education) and baseline severity were tested as covariates. Results indicated that rate of disease progression increased with baseline severity. Age, ApoE4 genotype, and sex were identified as potential covariates influencing disease progression . The rate of disease progression as described by the ADAS-cog in mild-to-moderate AD patients was estimated at approximately 5.5 ADAS-cog units/year, similar to that reported using literature-based analyses .

The authors concluded that a linear disease progression model adequately described the natural decline of ADAS-cog observed in ADNI over 2–3 years within the individual patients. Baseline severity, which is incorporated into the model to explain the nonlinearity of the disease progression, is an important covariate to predict a curvilinear rate of disease progression in normal elderly, mild MCI and patients with Alzheimer’s dementia. Age, ApoE4 genotype, and sex also influenced the rate of disease progression.

15.3.3.2 Samtani ADNI Model (2012)

The objective of the Samtani et al. analysis was to develop a semimechanistic nonlinear disease progression model from the ADNI study, but that used an expanded set of covariates that captured the longitudinal change of ADAS-cog scores (Samtani et al. 2012). The model described the rate of progression and baseline disease severity as a function of influential covariates. The covariates that were tested fell into four categories: (1) imaging volumetric measures, (2) serum biomarkers, (3) demographic and genetic factors, and (4) baseline cognitive tests .

Covariates found to affect baseline disease status were years since disease onset, hippocampal volume, and ventricular volume. Disease progression rate in the model was influenced by age, total serum cholesterol, ApoE4 genotype, trail-making test (part B) score as well as current levels of cognitive impairment as measured by ADAS-cog. Rate of progression was slower for patients with mild and severe AD compared with moderate AD.

15.3.3.3 Faltaos Model (2013)

This research aimed to quantitatively describe the natural progression of AD based on ADAS-cog scores in patients with mild-to-moderate AD utilizing data from ten placebo-controlled clinical trials submitted to the FDA (> 2600 patients) with up to 72 weeks of treatment (William-Faltaos et al. 2013). Different models describing the time course of ADAS-cog were evaluated. Patient characteristics potentially affecting score changes were assessed. Patient-dropout patterns were characterized using parametric survival models. Covariate selection was performed to identify the risk factors associated with a higher dropout rate. In this case, the ADAS-cog time course in mild-to-moderate AD patients receiving placebo was described by a log-linear model, where the intercept represents the log-transformed ADAS-cog score at week 10 , the slope is the disease progression (i.e., natural increase of ADAS-cog score) on the log scale. Covariates influencing the intercept were baseline ADAS-cog score and baseline MMSE score. No covariates were identified that influenced the disease progression slope. A parametric log-normal model fit the dropout data best. Baseline ADAS-cog score and age were found to be significant predictors for dropout.

15.3.4 Integrative Meta-analytic Approaches

15.3.4.1 Rogers Model (2012)

This research aimed to incorporate many of the best elements of the models described above in a beta regression (BR) model (Rogers et al. 2012) . The use of the BR constrained simulations to the 0–70 range of the ADAS-cog, even when residuals were incorporated. In addition, the model described the longitudinal progression of the 11 item ADAS-cog in AD patients in both natural history and randomized clinical trial settings, utilizing both individual patient and summary level literature data. Patient data from the CAMD database (3223 patients), the ADNI study database (186 patients), and summary data from 73 literature references (representing 17,235 patients) were fit to a BR DDT model. Treatment effects for currently available acetyl cholinesterase inhibitors, longitudinal changes in disease severity, dropout rate, placebo effect, and factors influencing these parameters were estimated in the model. Based on predictive checks and external validation, the researchers concluded that an adequate BR meta-analysis model for ADAS-cog using both summary-level and patient-level data was developed. Baseline ADAS-cog was estimated from baseline MMSE score. Disease progression was found to be dependent on time, ApoE4 status, age, and gender. Study dropout was a function of time, baseline age, and baseline MMSE .

The model allowed for simultaneous fitting of summary and patient-level data, allowing for integration of all information available. A further advantage of the BR model was that it constrained values to the range of the original instrument for simulation purposes, in contrast to methodologies that provide appropriate constraints only for conditional expectations.

15.4 Review of Structural Components for Models in AD

Table 15.2 lists the general basic components of a DDT model, as described by Gobburu and Lesko (2009).

15.4.1 Disease Model Components

Understanding both the placebo response and the natural underlying disease progression is crucial to designing and interpreting results from AD clinical trials, given that it is sometimes difficult to differentiate the placebo effect and underlying disease progression in longitudinal studies, resulting in misinterpretation of the study results. Several authors have proposed that the placebo response be assumed to consist of “underlying disease progression” and “placebo effect,” where “underlying disease progression” describes the natural history of the disease, and the “placebo effect” represents a temporal component, i.e., such as psychological effect, or any effect derived from the conduction of and participation in clinical trials.

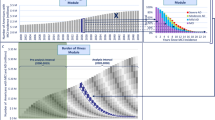

Figure 15.1 illustrates the concept behind the longitudinal diseases progression model, where the overall observed placebo response in a trial (C) is simply the addition of the underlying disease progression (A) and placebo effect (B). Increase in ADAS-cog score indicates cognitive deterioration over time.

In general, the shape described above, is adequate to describe both data reported from the literature and from patient-level data collected in placebo-controlled clinical trials, as shown in Fig. 15.2.

Observed placebo response for change from baseline Alzheimer’s disease assessment scale cognitive sub-scale (ADAS-cog) from literature, 1990–2008. (Top: all data with loess line and model prediction; bottom: CAMD studies; the blue line and gray-shaded area in the figure indicate a lowess fit line with 95 % confidential intervals)

15.4.1.1 Natural Longitudinal Progression

In the case of natural history studies, such as ADNI, a placebo effect is not required, and the time course may be described by the underlying natural history of disease progression (Fig. 15.3).

Historically, the progression rate has been modeled as a linear function. Although the data appear linear over a short duration, given the scale is finite, and that most AD patients reach the maximum as they become more severe (requiring the use of other measures such as the severe impairment battery) the data are better described by a sigmoid-like function restricted between 0 and 70 (the limits of the ADAS-cog score; Rogers et al. 2012; Samtani et al. 2012).

Recently, different researchers (Ito et al. 2010; Samtani et al., 2012; William-Faltaos et al. 2013; Ashford and Schmitt 2001) have provided important insights into the nonlinear progression of AD. Ito et al. (2010) analyzed the ADNI data using a linear AD progression model based on a population-based mixed effects approach (with a function to introduce nonlinearity based on baseline severity), Ashford and Schmitt (2001) applied a logistic model to characterize disease progression, while Samtani et al. (2012) developed a nonlinear mixed effects model. Samtani et al. (2012) proposed a logit function that restricts the ADAS-cog scores to the test’s intrinsic range of 0–70 points. Samtani’s models were in turn captured using a Bayesian meta-analytic approach by Rogers et al. (2012) thus giving a comprehensive aggregation of literature- and patient-based knowledge.

In essence, the disease progression function proposed by Samtani et al. (2012) and Ito et al. (2010) has been integrated by Rogers et al. (2012), and can be described as the following logit function:

Relevant covariates that affect the “intercept” (η pk ) that have been identified by different authors include: baseline disease severity as expressed by ADAS-cog or MMSE, baseline age, and age of onset of disease (which is usually derived from arguably unreliable data from dates of first diagnosis). ADAS ipk denote the observed ADAS-cog score on the ith occasion in the pth patient in the kth study. In turn, t ipk represents the time of the observation relative to the randomization time for that given patient.

In line with current thinking regarding AD pathophysiology, covariates identified by several authors as affecting the rate of progression α pk have included ApoE4 genotype, total blood cholesterol, baseline age, gender, and baseline disease severity (baseline ADAS-cog or baseline MMSE).

15.4.1.2 Placebo Function Components

The onset, offset, and overall extent of placebo effect has been successfully estimated and described in the past by the use of a first order appearance (onset) and a first order disappearance (offset) constant, commonly known as a Bateman-type function (Holford and Peace 1992; Ito et al. 2010):

where β p is a factor defining the magnitude of the placebo effect, Kel p is the rate constant for the offset rate of the placebo effect, and Keq p is the rate constant for the onset rate of the placebo effect.

15.4.2 Drug Model Components

The selection of drug model components and the underlying assumptions around it are highly dependent on the proposed use of the model, the mechanism(s) postulated, and information available on the compound(s) of interest. In the past, models have attempted to describe either symptomatic effects or disease-modifying effects.

15.4.2.1 Symptomatic Effect

Agents thought to have “pure symptomatic” effects can be expressed as a shift in the overall disease progression curve, without a change in the rate (slope) of progression (Holford and Peace 1992; Samtani et al. 2012; Bhattaram et al. 2009). Estimates for magnitude, onset and offset of these symptomatic effects can be estimated from data available for currently approved drugs, or from proprietary data.

It has been proposed that Emax-type models adequately describe the symptomatic drug effect for cholinesterase inhibitors, which are the main currently available symptomatic treatments for AD. For example, Ito et al. (2010, 2013) proposed the following expression:

where D ipk represents the dose administered to a given patient at a given occasion in a given trial, E Δ,d(p) denotes the maximum symptomatic effect at a given dose for a given patient, and ET 50,d(p) expresses the time at which 50 % of the maximum symptomatic effect is achieved for a given patient at a given dose.

15.4.2.2 Disease-Modifying Effect

Agents thought to have “pure disease-modifying” effects can be expressed as a change in the rate of progression (α pk in the model defined above) without a shift in the overall disease progression curve (Holford and Peace 1992; Samtani et al. 2012; Bhattaram et al. 2009). Currently, there are no FDA-approved disease-modifying treatments, which constitute a limitation in terms of available data for modeling purposes.

As mentioned previously, covariates identified by several authors as affecting the rate of progression have included ApoE4 genotype, total blood cholesterol, baseline age, gender, and baseline disease severity (baseline ADAS-cog or baseline MMSE). As such, it is possible to “enrich patient populations using these factors to observe a faster rate of progression, and theoretically, to observe a disease-modifying effect in a treatment arm more easily”. This has led researchers to propose the inclusion of proportional hazard functions to the rate of progression, in which a given percent modification (reduction) in the rate of progression could be incorporated into the disease progression function described before.

15.4.2.3 Dropout Component

Since the described modeling approaches aim at characterizing the complete data distribution, summary statistics based on direct simulation from the model would not correctly mimic the behavior of real summary statistics, as actual summary statistics usually are computed using incomplete data. Even in the unlikely scenario that the true missing data mechanism (MDM) is missing completely at random (MCAR), the real summary means will be based on fewer observations than their simulated counterparts, and the latter will therefore have standard errors that are unrealistically low. Accordingly, for the purpose of model validation, a MDM or “dropout model” is a reasonable component to be incorporated for simulation purposes.

Here, the work of William-Faltaos et al. (2013) constitutes an important example. As explained before, these authors identified the Weibull distribution as the one that would most adequately characterize the evolution of the dropout hazard over time, and the two most important covariates for the dropout hazard were baseline age and baseline disease severity.

As implemented in the Rogers et al. work, the fitted dropout model utilizing these two covariates exhibited a high degree of agreement with the observed dropout rates, as seen in Fig. 15.4. The model adequately captures the dropout rate both by baseline MMSE and by age in these two plots.

Plot of probability (dropout) over time by baseline age (upper panel) and baseline mini-mental state examination (BMMSE, lower panel). (Solid line represents Kaplan–Meier (nonparametric) estimates based on observed data; dashed line represents model prediction; grey region represents 90 % credible interval for model prediction)

It is important to note that such a working model for the MDM is reasonable to employ for the purpose of model validation. However, for the purpose of model fitting, researchers can assume less restrictive conditions that are required for ignorability of the missing data mechanism, implying that posterior distribution for parameters describing the complete data distribution may be computed using the observed response and covariates.

A fully realistic MDM would be fairly complex and correspondingly would require substantial justification. Moreover, since the true MDM is never known, the issue cannot be adequately addressed without considering several MDMs, including various combinations of missingness related to tolerability, missingness related to lack of efficacy, and missingness associated with disease state. While this full treatment would be a desirable research project, currently available work is only able to propose a plausible “working hypothesis” MDM based on observed associations between baseline covariates and dropout.

15.4.3 Meta-analytic Integration of Literature and Patient-Level Data

As explained before, there are a number of relevant databases for modeling and simulation in AD, which represent patient-level and study-level data from both observational studies and clinical trials. These can be combined with additional patient-level data from active treatment arms that may be available to specific researchers. However, the amount of data available from the scientific literature should not be ignored (especially when trying to incorporate drug effects into models). In order to adequately integrate those patient-level data with summary-level information, Gillespie et al. (2009) have proposed a Bayesian implementation, which allows a probabilistically correct synthesis of literature meta-data with patient-level data. Additional contributions have also been made by Rogers et al. (2012), in terms of applying β-distributed residuals in conjunction with a generalized logistic function for expected disease progression (i.e., “BR”), with the defining feature of specifying the residual scores for a given patient as following a β distribution. This results in a predictive distribution that falls entirely within the 0–70 range of the ADAS-cog, which is a valuable feature for the purpose of simulating clinical trials.

Regarding the challenge of integrating patient-level and summary-level information, Gillespie et al. (2009) propose that the summary-level data be modeled by directly specifying likelihoods based on approximate sampling distributions. As has been explained before, the model for individual ADAS-cog scores is nonlinear, and the exact sampling distributions for sample means are not available in analytical form. An elegant solution proposed by Rogers et al. (2012) concentrates on the approximate linearity of the logit function over the range of primary interest to derive the approximate distributions. These approximations can then be employed as the operational likelihoods.

15.5 Example Applications

15.5.1 Planning Prospective Trials

These models described previously have had varying degrees of applicability for CTSs, understanding CTS as a means of estimating relevant operating characteristics for essentially any clinical trial design under any hypothesized parameter configuration for the “true” effects of a drug. It may be used to assess how different trial design and drug factors affect trial performance. These factors may be controllable trial design properties, such as the doses studied, the sampling times, the optimal study duration and sampling times, and use of washouts (Gobburu and Lesko 2009) or uncontrollable factors, such as the drug characteristics (pharmacokinetic or pharmacodynamics; Hennig et al. 2009). Other influencing factors may include the progression of disease over time or subject-specific characteristics that may be related to disease progression or treatment response.

15.5.1.1 “Super Symptomatic” Agents

“Super symptomatic drug effect” is conceptualized as superior clinical efficacy to currently available symptomatic therapies, either as stand-alone or as add-on therapy. Such a super symptomatic drug profile is desired for new symptomatic drugs under development for AD.

As stand-alone therapy, the drug could achieve the super symptomatic effect either by having superior acute cognitive enhancement or by having acute symptomatic benefit similar to marketed agents plus disease-stabilizing attributes. However, for compounds with new mechanisms of action, it remains unclear which of these could occur.

Given the extremely high failure rate in neurodegenerative drug development programs, it is often the goal in early development to obtain an early and cost effective read of efficacy for compounds thought to be predominantly “symptomatic” agents. In this scenario, a crossover design may provide more to detect a difference rapidly, but would underestimate the total treatment effect relative to the longer parallel trial design typically used.

Two drug profiles were studied under this scenario. In this study, the desired super symptomatic drug effect was set as 3.5 points of ADAS-cog better than placebo at week 24. For the first drug profile, it was assumed that the drug had a superior symptomatic drug effect of 3.5 points on ADAS-cog at 24 weeks but similar drug onset compared to donepezil (thus, the first profile is E drug,24week = 3.5 and ET50 = 1.62 week). For the second drug profile, it was assumed that the drug had donepezil-like symptomatic effect (E drug,24week = 2.5) and a moderate disease-stabilizing effect (i.e., 50 % decrease on slope). In both drug scenarios, true drug effect would be 3.5 points on ADAS-cogat 24 weeks, which is the desired effect for the super symptomatic treatment.

The objectives of early studies to advance drug candidates that are expected to have a super symptomatic profile are not only to test whether the drug is better than placebo but also to obtain certain confidence that the estimated drug effect would achieve the “target value.” Typically, this target value is defined by current standard of care, regulatory requirements or other thresholds for evidence of efficacy. The confidence level required is dependent on multiple factors, like the stage of drug development , the overall development plan and the medical need for the drug. The drug candidate in this scenario was considered to be at an early stage of development, so the confidence requirement was not as high as that for a drug in later stages of development. Herein, we defined that we would need at least 25 % confidence the drug is 3.5 points or more, better than placebo on the ADAS-cog scale after completion of the study in order to continue the drug as a potential super symptomatic treatment. Besides the 6-week crossover and 12-week parallel designs as described above (a and b), a third candidate trial design was also evaluated, which was a two-arm parallel design (75 patients per arm) with 24 week treatment duration and assessments at weeks 0, 3, 6, 9, 12, and 24. The primary analysis is based on a linear mixed-effects model with random subject effect and fixed effects for baseline ADAS-cog, visit (nominal scale), treatment, and visit by treatment interaction, with drug effect formulated as the expected difference at week 24.

Figure 15.5 displays the average simulated results for a 6-week crossover design and a 12-week parallel design using a symptomatic drug that was similar to donepezil (2.5 points superior to placebo on ADAS-cog at week 24, ET50 of 1.62 week and washout half-life of 1 week). In the crossover design, under these assumptions, the treatment effect (difference between placebo and treatment) at the end of each 6-week period is independent of the treatment period. Thus, in this context a crossover design has the potential to reduce the sample size while maintaining appropriate power to demonstrate the drug benefit.

The simulation results showed that approximately 89 % power was achieved with 30 patients per arm (60 patients in total) in a 6-week crossover study (Table 15.3). The power of a 12-week parallel design with 75 patients per arm (150 patients in total) was about 82 %. Meanwhile, as expected, the relative bias of the 6-week treatment in the crossover study (− 17.3 %) was higher than the 12-week parallel study (− 7.3 %), both of which would underestimate the true drug effect at week 24, given the achievement of a partial drug effect over the duration of the study. As also shown in Table 15.3, with a slower drug onset (e.g., ET50 of 3 weeks, two times that of donepezil), the power in a 6-week crossover study (81 %) still remained comparable to a 12-week parallel study (79 %), although the difference of the relative bias for 6-week crossover study versus 12-week parallel study significantly increased. Depending on the primary goal of the study, the development team can use these results to determine the trade-off between the increase in bias and the gain in power. For example, when the objective of the study is to test if the drug has any effect rather than to measure the steady-state treatment effect, the crossover design would be favorable due to smaller sample size and higher power.

For super symptomatic drug scenarios, two different types of drug profiles, superior acute symptomatic drug effect (E drug,24week = 3.5) and acute symptomatic benefit plus disease-stabilizing drug effect (Edrug,24week = 2.5 plus 50 % decrease on slope) were assumed and studied. Three study designs of interest (6-week crossover, 12-week parallel and 24-week parallel studies) were simulated and compared for each drug profile and the results are displayed in Table 15.4.

When a drug exhibited the desired super symptomatic efficacy (3.5 points at week 24) on ADAS-cog measures, the power to detect the drug effect was high regardless of the design (≥ 92 %) for the superior symptomatic drug profile and the symptomatic plus disease-stabilizing drug profile. However, as expected, the true drug effect at week 24 would be underestimated in both the 6-week crossover study and, to a lesser extent, the 12-week parallel study. The bias of drug effect estimates increased remarkably for drugs with acute symptomatic plus disease-stabilizing effects compared to a drug with only superior acute symptomatic effect (− 36.7 vs. − 17.8 % in 6-week crossover study and − 19.0 vs. − 7.0 % in 12-week parallel study, respectively).

The probability for a drug to achieve the target value (3.5 points with at least 25 % confidence) in each study design is shown in Table 15.4. For a drug having superior acute symptomatic effect, the probability was 41 % in a 6-week crossover study and increased to 65 % in a 12-week parallel study while the probability was estimated as 73 % for a 24-week parallel study under the assumption that the true effect was 3.5 points at week 24. However, if a drug achieved the super symptomatic profile by having combined symptomatic and disease-stabilizing effects, the probability would be only 11 and 45 % in a 6-week crossover study and a 12-week parallel study, respectively. The probability remained the same (72 %) in a 24-week parallel trial since the true drug effect was still 3.5 points at week 24.

15.5.2 Retrospective Analyses

Given the high attrition, and often late stages of failure of compounds developed for AD, it is not surprising that following a negative trial a large number of post-hoc analyses are completed to determine if a group of responders can be identified. Typically these post-hoc analyses are data cuts done by disease severity (very mild, mild, and moderate), ApoE4 genotype (carriers vs. noncarriers) CSF Abeta or Tau cuts, gender, age, etc. Given the number of analyses involved (often without correction for multiplicity), and the smaller sample sizes, the likelihood of a false positive being identified is high.

Alternatively, a small parallel design POC trial may yield positive results in a select group of study centers or patients, but the results are not replicated in larger, multinational trials in phase III. The question then becomes which study represents the true potential of the new agent?

15.5.2.1 Using Drug Models to Facilitate Interpretation of Study Results

Figure 15.6 (upper panel) shows results from two phase-II clinical trials for two different compounds (drug A and drug B) with similar inclusion criteria. In both cases, based on change from baseline, it appears as though a treatment effect was present as compared with the placebo group in each study. However, when these two clinical trial results are compared against the historical control data overlaid along with model predictions conditioned for baseline severity, it appears that the placebo response in the drug B trial was much worse than what would be predicted (Fig. 15.6, lower panel). Conversely, the treatment arm in trial B appears to be where the expected response for placebo usually falls. Given this result, and without clear rationale for why a difference in placebo response would be observed, the clinical team concluded that the placebo response in the drug B study was not normal and needed more data to confirm the efficacy before taking further action (Ito et al. 2013).

Phase II clinical trial results from different drugs: change from baseline Alzheimer’s disease assessment scale cognitive sub-scale (ADAS-cog, mean ± SE). Upper panels: The typical plot obtained after completion of the clincial trial, compared with the controlled placebo arm within the study. Lower panels: The same data above are overlaid with the historical data (literature) and its model prediction. (Reprinted from Ito et al. 2013, Copyright 2013, with permission from IOS Press)

15.5.2.2 Comparison of Phase II to Phase III Results

In this example, the treatment group from a 52-week, multi-center, placebo-controlled, double-blind phase-II study demonstrated a significant effect in a phase-II study, followed by a 26-week, placebo-controlled, double-blind, multi-center, global (including USA, Latin America, Europe, and Russia) phase-III study (Fig. 15.7, upper panel). The phase-III results appeared markedly different from the phase-II study, in the sense that there was no significant difference between the treatment and the placebo control groups. Unaided, by historical reference, the clinical team questioned the placebo response in the phase-III study, which appeared almost flat (Ito et al. 2013).

Different results between phase-II and phase-III studies: change from baseline Alzheimer’s disease assessment scale cognitive sub-scale (ADAS-cog, mean ± SE). (case study 2). Upper panels: The results obtained after completion of phase II (left) and phase III (right) studies compared with the controlled placebo arm displayed with the range of the treatment duration (52 and 26 weeks for phase II and III, respectively). Lower panels: The same data are overlaid with the historical data (literature) and its model prediction, using the same x- and y-axis range. The size of point is proportional to the number of patients in each treatment group. (Reprinted from Ito et al. 2013, Copyright 2013, with permission from IOS Press)

Phase-II and phase-III clinical trial results were then compared against the historical control data and with model predictions conditioned for baseline severity. In this case, the placebo response in phase II and III could be quantitatively assessed against historical controls and was deemed well within the normal range; it was still within the 90 % prediction intervals when compared with historical placebo response and model prediction (Fig. 15.7, lower panel). It was revealed that the placebo responses in both phase II and phase III were reasonable, and it was the treatment group that appeared different, resulting in a failed phase-III trial. Note that symbol size (points) in Fig. 15.7 (lower panel) is proportional to the sample size of the study, i.e., bigger symbols indicate larger sample studies. This method is useful when visualizing information about the size of the study and comparing different studies, as is also seen in Fig. 15.6.

15.6 Discussion and Future Perspectives

15.6.1 Moving to Early AD: Selection and Modeling of Selective Subscores of the ADAS-cog

With the understanding that AD pathology and irreversible neuronal damage are present decades before presentation of clinical symptoms, researchers are moving to study disease-modifying agents in patients at a much earlier stage of the disease. The trade-off is that in these populations, it becomes harder to measure changes in cognition and function, as the magnitude of impairment and the overall rates of change in early AD are much slower.

Although some success has been noted in modeling longitudinal changes in cognition in MCI and early AD populations with ADAS-cog, it is unlikely that the total ADAS-cog (designed for use in mild and moderate patients) will be sufficiently sensitive to be used in these populations. Other measures have been proposed for early AD Huang et al. (2014), and yet other composites, containing ADAS-cog subscales sensitive in early AD, are under development. For example, using ADNI data, Samtani et al. (2013) also identified the most informative cognitive measures from the ADAS-Cog and other available scales. Informative measures were identified based on standardized mean of 2-year change from baseline and were combined into novel composite endpoints. They assessed performance of the novel endpoints based on sample size requirements for a 2-year clinical trial. Further improvements were achieved by using cognitive-functional composites. Combining the novel composites with an enrichment strategy based on CSF beta-amyloid (A(1–42)) in a 2-year trial yielded gains in power of 20–40 % over ADAS-Cog 11, regardless of the novel measure considered.

15.6.2 Integrating Data Across the Entire AD Spectrum:the IRT Approach

As the number of tools designed for specific parts of the AD continuum continues to grow, the result is a further fragmentation of the tools used to capture the changes in the AD patient’s cognition over time, and likely the need to develop new longitudinal DDT models when sufficient data becomes available.

Ueckert et al. (2014) have proposed an alternative model-based framework that maximizes the precision gained from the existing ADAS-cog assessments in any AD population, utilizing an item response theory (IRT) model for the ADAS-cog assessment. In the framework of IRT, the questions of the ADAS-cog (or any other cognitive measurement instrument now or in the future) can be described through characteristic curves, which describe the probability to answer correctly given a certain cognitive disability. This in essence, allows mapping of other instruments to a common scale. Based on a prior assumption about the distribution of cognitive disability in the population and the characteristic curves of the questions, IRT delivers an estimate of the most likely cognitive disability given a patient’s response. By combining the entire knowledge gained from all questions (right or wrong; easy, hard, harder), a more precise estimate of the patients’ ability can be obtained than that which would be obtained from just the simple summing of all scores typically used.

Using this approach, Ueckert et al. determined the most sensitive test subsets in MCI and mild AD populations using Fisher information. The IRT-based framework would allow use and comparison of data from any cognitive instrument (present or future), to permit instrument-independent assessment of cognition of the patient over the entire span of the disease.

15.6.3 Future

The development of models for CTS in AD have evolved continuously, as the understanding of the disease improves, and as more sophisticated modeling techniques become available. Complete DDT models require a variety of data types that support each of the components (natural progression, placebo effect, drug effects, dropouts, etc.). Obviously, no single study can provide all the relevant information for all components at once. This means that the integration of disparate data sources becomes key. Normally, literature knowledge, public access data (CAMD, ADNI), and data within one’s own organization inform decision making in drug development. Rogers et al. (2012) attempted to integrate all these relevant data sources to inform each component.

While it may be that newer models have made incremental improvements in describing longitudinal changes over a longer duration, added trial execution components, such as dropout, lengthened the duration for which simulations can be completed; the key structural elements and idea behind the work remain similar in all the work reported here. At some point it may be beneficial for the field to adopt one common background model to move forward as a community. CAMD, FDA, and EMA have come together to evaluate the work of Rogers et al. as such a common background model. This CTS tool was put by CAMD through the first regulatory review process of this kind for modeling and simulation tools. As of June 12, 2013, FDA issued a regulatory letter to CAMD, regarding the Agency’s decision to deem this proposed clinical trial simulator as a “fit-for-purpose” drug development tool for AD. In the Agency’s opinion, this tool will assist sponsors in optimizing clinical trial designs for AD therapies. As the first ever stand-alone CTS tool to receive a regulatory decision, this tool represents a milestone in the effort to improve the efficiency and success of future clinical trials by integrating knowledge gained from earlier studies. Moreover, since it was developed through a partnership involving multiple pharmaceutical companies, regulatory agencies, patient groups, academia, and research organizations, the tool demonstrates that stakeholders can come together precompetitively to develop tools that will benefit the entire field. In issuing a positive regulatory decision for the CAMD AD CTS tool, the FDA stated that the model can now be used to aid in the selection of clinical trial design features for mild-to-moderate AD, and that the use of this tool can facilitate the review of new drug protocols. http://www.fda.gov/AboutFDA/CentersOffices/OfficeofMedicalProductsandTobacco/CDER/ucm180485.htm. Accessed 09 Sep 2014. The FDA also recommended that sponsors help update the tool with new information about drugs under development. For example, incorporating clinical and biomarker data from patients at earlier stages of the disease could expand the usefulness of the tool in helping sponsors design appropriate clinical trials to evaluate novel therapeutic candidates.

The EMA has also endorsed the tool in Europe, through the issue of a positive qualification opinion for the CTS tool in AD drug development. http://www.ema.europa.eu/ema/pages/includes/document/open_document.jsp?webContentId=WC500146179. Accessed 09 Sep 2014

References

Ashford JW, Schmitt FA (2001) Modeling the time-course of Alzheimer dementia. Curr Psychiatry Rep 3:20–28

Atchison TB, Massman PJ, Doody RS (2007) Baseline cognitive function predicts rate of decline in basic-care abilities of individuals with dementia of the Alzheimer’s type. Arch Clin Neuropsychol 22(1):99–107

Bhattaram VA, Siddiqui O, Kapcala LP, Gobburu JV (2009) Endpoints and analyses to discern disease-modifying drug effects in early Parkinson’s disease. AAPS J 11:456–464

Chan PLS, Holford NHG (2001) Drug treatment effects on disease progression. Annu Rev Pharmacol Toxicol 41:625–659

Gillespie W (2009) Population dose-response model for ADAS-cog scores in patients with Alzheimer’s disease by meta-analysis of a mixture of summary and individual data. American Conference on Pharmacometrics, Mashantucket, CT, 4–7 October 2009

Gobburu JV, Lesko LJ (2009) Quantitative disease, drug, and trial models. Annu Rev Pharmacol Toxicol 49:291–301

Huang Y, Ito K, Billing CB Jr, Anziano RJ (2014) For the Alzheimer’s Disease Neuroimaging Initiative. Development of a straightforward and sensitive scale for MCI and early AD clinical trials. Alzheimers Dement [Epub ahead of print]

Hennig S, Nyberg J, Hooker AC et al (2009) Trial treatment length optimization with an emphasis on disease progression studies. J Clin Pharmacol 49:323–335

Holford NH, Peace KE (1992) Methodologic aspects of a population pharmacodynamic model for cognitive effects in Alzheimer patients treated with tacrine. Proc Natl Acad Sci U S A 89(23):11466–11470

Ito K, Ahadieh S, Corrigan B, French J, Fullerton T, Tensfeldt T (2010) Disease progression meta-analysis model in Alzheimer’s disease. Alzheimers Dement 6(1):39–53

Ito K, Corrigan B, Zhao Q, French J, Miller R, Soares H, Katz E, Nicholas T, Billing B, Anziano R, Fullerton T (2011) Alzheimer’s Disease Neuroimaging Initiative. Disease progression model for cognitive deterioration from Alzheimer’s Disease Neuroimaging Initiative database. Alzheimers Dement 7(2):151–160

Ito K, Corrigan B, Romero K, Anziano R, Neville J, Stephenson D, Lalonde R (2013) Understanding placebo responses in Alzheimer’s disease clinical trials from the literature meta-data and CAMD database. J Alzheimers Dis 37(1):173–183

Milligan PA, Brown MJ, Marchant B, Martin SW, van der Graaf PH, Benson N, Nucci G, Nichols DJ, Boyd RA, Mandema JW, Krishnaswami S, Zwillich S, Gruben D, Anziano RJ, Stock TC, Lalonde RL (2013) Model-based drug development: a rational approach to efficiently accelerate drug development. Clin Pharmacol Ther [Epub ahead of print]

Mould DR, Denman NG, Duffull S (2007) Using disease progression models as a tool to detect drug effect. Clin Pharmacol Ther 82(1):81–86

Rogers JA, Polhamus D, Gillespie WR, Ito K, Romero K, Qiu R, Stephenson D, Gastonguay MR, Corrigan B (2012) Combining patient-level and summary-level data for Alzheimer’s disease modeling and simulation: a beta regression meta-analysis. J Pharmacokinet Pharmacodyn 39:479–498

Romero K, de Mars M, Frank D, Anthony M, Neville J, Kirby L, Smith K, Woosley RL (2009) The coalition against major diseases: developing tools for an integrated drug development process for Alzheimer’s and Parkinson’s diseases. Clin Pharmacol Ther 86(4):365–367

Romero K, Corrigan B, Neville J, Kopko S, Cantillon M (2011) Striving for an integrated drug development process for neurodegeneration: the coalition against major diseases. Neurodegen Dis Manage 1(5):379–385

Samtani MN, Farnum M, Lobanov V, Yang E, Raghavan N, Dibernardo A, Narayan V (2012) An improved model for disease progression in patients from the Alzheimer’s disease neuroimaging initiative. J Clin Pharmacol 52:629–644

Samtani MN, Raghavan N, Shi Y, Novak G, Farnum M, Lobanov V, Schultz T, Yang E, DiBernardo A, Narayan VA, Alzheimer’s disease Neuroimaging Initiative (2013) Disease progression model in subjects with mild cognitive impairment from the Alzheimer’s disease neuroimaging initiative: CSF biomarkers predict population subtypes. Br J Clin Pharmacol 75(1):146–161

Schneider LS, Sano M (2009) Current Alzheimer’s disease clinical trials: methods and placebo outcomes. Alzheimers Dement 5(5):388–397

Sheiner LB (1997) Learning versus confirming in clinical drug development. Clin Pharmacol Ther 61(3):275–291

Ueckert S, Plan EL, Ito K, Karlsson MO, Corrigan B, Hooker AC (2014) The Alzheimer’s Disease Neuroimaging Initiative. Improved Utilization of ADAS-Cog Assessment Data Through Item Response Theory Based Pharmacometric Modeling.Pharm Res [Epub ahead of print]

Weiner MW, Veitch DP, Aisen PS, Beckett LA, Cairns NJ, Green RC, Harvey D, Jack CR, Jagust W, Liu E, Morris JC, Petersen RC, Saykin AJ, Schmidt ME, Shaw L, Siuciak JA, Soares H, Toga AW, Trojanowski JQ (2012) Alzheimer’s disease neuroimaging initiative. The Alzheimer’s disease neuroimaging initiative: a review of papers published since its inception. Alzheimers Dement 8(1 Suppl):S1–S68

William-Faltaos D, Chen Y, Wang Y, Gobburu J, Zhu H (2013) Quantification of disease progression and dropout for Alzheimer’s disease. Int J Clin Pharmacol Ther 51(2):120–131

World Alzheimer’s Report (2010) The global economic impact of dementia. Alzheimer’s Disease International. http://www.alz.co.uk/research/world-report. Accessed 1 March 2012

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 American Association of Pharmaceutical Scientists

About this chapter

Cite this chapter

Corrigan, B., Ito, K., Rogers, J., Polhamus, D., Stephenson, D., Romero, K. (2014). Clinical Trial Simulation in Alzheimer’s Disease. In: Schmidt, S., Derendorf, H. (eds) Applied Pharmacometrics. AAPS Advances in the Pharmaceutical Sciences Series, vol 14. Springer, New York, NY. https://doi.org/10.1007/978-1-4939-1304-6_15

Download citation

DOI: https://doi.org/10.1007/978-1-4939-1304-6_15

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4939-1303-9

Online ISBN: 978-1-4939-1304-6

eBook Packages: Biomedical and Life SciencesBiomedical and Life Sciences (R0)