Abstract

This paper examines the asymptotic properties of the popular within and GLS estimators and of the Hausman test for panel data models with large numbers of both cross-section (N) and time-series (T) observations. The model we consider includes regressors with deterministic trends in mean as well as time-invariant regressors. Under general circumstances, we find that the within estimator is as efficient as the GLS estimator. Despite this asymptotic equivalence, however, the Hausman statistic, which is essentially a distance measure between the two estimators, is well defined and asymptotically \(\chi^{2}\)-distributed under the random effects assumption. We also find that the power properties of the Hausman test are sensitive to the size of T and to the covariance structure among the regressors and the unobservable individual effects.




7.1 Introduction

Error-components models have been widely used to control for unobservable cross-sectional heterogeneity in panel data with a large number of cross-section units (N) and a small number of time-series observations (T). These models assume that the stochastic error term has two components: an unobservable time-invariant individual effect, which captures the unobservable individual heterogeneity, and the usual random noise. The most popular estimation methods for error-components models are the within and the generalized least squares (GLS) estimators. A merit of the within estimator (least squares on data transformed into deviations from individual means) is that it is consistent even if the regressors are correlated with the individual effect (fixed effects). A drawback, however, is that it cannot estimate the coefficients of time-invariant regressors.Footnote 1 Among the various alternative estimators for the coefficients of time-invariant regressors,Footnote 2 the GLS estimator has been widely used in the literature because of its efficiency. Its consistency, however, requires the strong assumption that no regressor is correlated with the individual effects (random effects). Because this assumption is restrictive, its empirical validity should be tested to justify the use of the GLS estimator. The Hausman (1978) test statistic has been commonly used for this purpose (e.g., Hausman and Taylor 1981; Cornwell and Rupert 1988; Baltagi and Khanti-Akom 1990; Ahn and Low 1996; or Guggenberger 2010).

This paper studies the asymptotic and finite-sample properties of the within and GLS estimators and the Hausman statistic for a general panel data error-components model with both large N and large T. The GLS estimator is known to be asymptotically equivalent to the within estimator when both N and T are infinite (see, for example, Hsiao 1986, Chap. 3; Mátyás and Sevestre 1992, Chap. 4; Baltagi 1995, Chap. 2). This equivalence result has been obtained using a sequential limit method (T → ∞ followed by N → ∞) and some strong assumptions, such as fixed regressors. The result naturally raises several questions. First, does the equivalence hold in more general cases? Second, does it imply that the Hausman statistic, which is essentially a distance measure between the within and GLS estimators, has a degenerate or nonstandard asymptotic distribution under the random effects assumption? Third, does it also imply that the Hausman test has low power to detect violations of the random effects assumption when T is large? This paper addresses these questions.

Panel data with a large number of time-series observations have become increasingly available in recent years in many economic fields, such as international finance, finance, industrial organization, and economic growth. Furthermore, popular panel data sets, such as the Panel Study of Income Dynamics (PSID) and the National Longitudinal Surveys (NLS), contain more and more time-series observations as they are updated regularly over the years. Consistent with this trend, some recent studies have examined the large-N and large-T properties of the within and GLS estimators for error-components models.Footnote 3 For example, Phillips and Moon (1999) and Kao (1999) establish the asymptotic normality of the within estimator when the regressors follow unit root processes. Extending these studies, Choi (1998) considers a general random effects model that contains both unit-root and covariance-stationary regressors, and derives the asymptotic distributions of both the within and GLS estimators for this model.

This paper differs from the previous studies in three respects. First, the model we consider contains both time-varying and time-invariant regressors, and the time-varying regressors may be cross-sectionally heterogeneous or homogeneous. Analyzing this model, we study how cross-sectional heterogeneity, the covariance structure between the time-varying and time-invariant regressors, and time trends in the regressors affect the convergence rates of the panel data estimators. Second, we examine how the large-N and large-T asymptotic equivalence of the within and GLS estimators influences the asymptotic and finite-sample performance of the Hausman test. Ahn and Low (1996) investigated the size and power properties of the Hausman test for the case of large N and small T. In this paper, we reexamine the asymptotic and finite-sample properties of the test in more detail; in particular, we study how the power of the Hausman test depends on the size of T and on the covariance structure among the regressors. Third, and perhaps less importantly, we use the joint limit approach developed by Phillips and Moon (1999).

The main findings of this paper are as follows. First, consistent with the previous studies, we find that the within and GLS estimators of the coefficients of the time-varying regressors are asymptotically equivalent under quite general conditions. However, the convergence rates of the two estimators depend on (i) whether the means of the time-varying regressors are cross-sectionally heterogeneous or homogeneous and (ii) how the time-varying and time-invariant regressors are correlated. Second, if T is large, the GLS estimators of the coefficients of the time-varying regressors are consistent even if the random effects assumption is violated. This finding implies that the choice between the within and GLS estimators is irrelevant for studies focusing on the effects of time-varying regressors; the choice matters for studies focusing on the effects of time-invariant regressors. Third, despite the equivalence between the GLS and within estimators, the Hausman statistic has well-defined asymptotic distributions under the random effects assumption and under its local alternatives. We also find that the power of the Hausman test crucially depends on the covariance structure between the time-varying and time-invariant regressors, the covariance structure between the regressors and the individual effects, and the size of T. The Hausman test has good power to detect nonzero correlation between the individual effects and the permanent (individual-specific and time-invariant) components of the time-varying regressors, even if T is small. In contrast, the power of the test is somewhat limited when the effects are correlated with the time-invariant regressors and/or only with the transitory (time-varying) components of the time-varying regressors. In such cases, a larger T can even reduce the power of the Hausman test.

This paper is organized as follows. Section 7.2 introduces the panel model of interest, and defines the within, between and GLS estimators as well as the Hausman test. For several simple illustrative models, we derive the asymptotic distributions of the panel data estimators and the Hausman test statistic. Section 7.3 reports the results from our Monte Carlo experiments. In Sect. 7.4, we provide our general asymptotic results. Concluding remarks follow in Sect. 7.5. All the technical derivations and proofs are presented in the Appendix and the previous version of this paper.

7.2 Preliminaries

7.2.1 Estimation and Specification Test

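The model under discussion is given by

\[ y_{it} = \zeta + x_{it}^{\prime}\beta + z_{i}^{\prime}\gamma + \varepsilon_{it} = \zeta + w_{it}^{\prime}\delta + \varepsilon_{it}, \qquad \varepsilon_{it} = u_{i} + v_{it}, \qquad (7.1) \]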

where i = 1, …, N denotes cross-sectional (individual) observations, t = 1, …, T denotes time, \(w_{it} ={ \left (x_{it}^{{\prime}},z_{i}^{{\prime}}\right )}^{{\prime}}\), and \(\delta = {{(\beta }^{{\prime}}{,\gamma }^{{\prime}})}^{{\prime}}\). In model (7.1), \(x_{it}\) is a k × 1 vector of time-varying regressors, \(z_{i}\) is a g × 1 vector of time-invariant regressors, ζ is an overall intercept term, and the error \(\varepsilon _{it}\) contains a time-invariant individual effect \(u_{i}\) and random noise \(v_{it}\). We consider cases with large numbers of both individual and time-series observations, so the asymptotic properties of the estimators and statistics for model (7.1) are derived as N, T → ∞. The convergence rates of some estimators can depend on whether or not the model contains an overall intercept term; this issue is addressed later.

We assume that the data are distributed independently (but not necessarily identically) across different i, and that the \(v_{it}\) are independently and identically distributed (i.i.d.) with \(var(v_{it}) =\sigma _{ v}^{2}\). We further assume that \(u_{i}\), \(x_{i1},\ldots,x_{iT}\), and \(z_{i}\) are strictly exogenous with respect to \(v_{it}\); that is, \(E(v_{it}\mid u_{i},x_{i1},\ldots,x_{iT},z_{i}) = 0\) for any i and t. This assumption rules out cases in which the set of regressors includes lagged dependent variables or predetermined regressors. Detailed assumptions about the regressors \(x_{i1},\ldots,x_{iT},z_{i}\) are introduced later.

For convenience, we adopt the following notational rule: for any p × 1 vector \(a_{it}\), we denote \(\overline{a}_{i} = \frac{1}{T}\sum_{t}a_{it}\); \(\tilde{a}_{it} = a_{it} -\overline{a}_{i}\); \(\overline{a} = \frac{1}{N}\sum_{i}\overline{a}_{i}\); \(\tilde{a}_{i} = \overline{a}_{i} -\overline{a}\). Thus, for example, for \(w_{it} = (x_{it}^{\prime},z_{i}^{\prime})^{\prime}\), we have \(\overline{w}_{i} = (\overline{x}_{i}^{\prime},z_{i}^{\prime})^{\prime}\); \(\tilde{w}_{it} = (\tilde{x}_{it}^{\prime},0_{1\times g})^{\prime}\); \(\overline{w} = (\overline{x}^{\prime},\overline{z}^{\prime})^{\prime}\); \(\tilde{w}_{i} = ((\overline{x}_{i} -\overline{x})^{\prime},(z_{i} -\overline{z})^{\prime})^{\prime}\).
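The notational rule maps directly onto array operations. The following is a minimal NumPy sketch, assuming a balanced panel stored as an array of shape (N, T) or (N, T, p); the helper name `panel_decompose` is hypothetical and not taken from the paper. It also checks that a time-invariant regressor has zero within deviations, which is why \(\tilde{w}_{it}\) carries a zero block for \(z_{i}\).

```python
import numpy as np

def panel_decompose(a):
    """Hypothetical helper: split a balanced panel a (shape (N, T) or (N, T, p))
    into the four pieces defined in the notational rule."""
    a = np.asarray(a, dtype=float)
    a_bar_i = a.mean(axis=1)                          # individual means (1/T) sum_t a_it
    a_tilde_it = a - a.mean(axis=1, keepdims=True)    # deviations from individual means
    a_bar = a_bar_i.mean(axis=0)                      # overall mean (1/N) sum_i a_bar_i
    a_tilde_i = a_bar_i - a_bar                       # individual means minus overall mean
    return a_bar_i, a_tilde_it, a_bar, a_tilde_i

rng = np.random.default_rng(0)
N, T = 4, 3
x = rng.normal(size=(N, T))                          # time-varying regressor x_it
z = np.repeat(rng.normal(size=(N, 1)), T, axis=1)    # time-invariant z_i stored as (N, T)

x_bar_i, x_tilde_it, x_bar, x_tilde_i = panel_decompose(x)
z_bar_i, z_tilde_it, z_bar, z_tilde_i = panel_decompose(z)

print(np.allclose(x_tilde_it.sum(axis=1), 0.0))  # within deviations sum to zero over t
print(np.allclose(z_tilde_it, 0.0))              # z_i has no within variation
print(np.isclose(x_tilde_i.sum(), 0.0))          # between deviations sum to zero over i
```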

When the regressors are correlated with the individual effect, the OLS estimator of δ is biased and inconsistent. This problem has been traditionally addressed by the use of the within estimator (OLS on data transformed into deviations from individual means):

Under our assumptions, the variance-covariance matrix of the within estimator is given by:

Although the within method provides a consistent estimate of β, a serious defect is its inability to identify γ, the impact of the time-invariant regressors. A popular treatment of this problem is the random effects (RE) assumption, under which the \(u_{i}\) are random and uncorrelated with the regressors:

Under this assumption, all of the parameters in model (7.1) can be consistently estimated. For example, a simple but consistent estimator is the between estimator (OLS on data transformed into individual means):

However, as Balestra and Nerlove (1966) suggest, under the RE assumption, an efficient estimator is the GLS estimator of the following form:

where \(\theta _{T} = \sqrt{\sigma _{v }^{2 }/(T\sigma _{u }^{2 } +\sigma _{ v }^{2 })}\). The variance-covariance matrix of this estimator is given by:

For notational convenience, we assume that \(\sigma_{u}^{2}\) and \(\sigma_{v}^{2}\) are known, while in practice they must be estimated.Footnote 4
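As a concrete illustration, the following sketch assumes the standard textbook forms of the three estimators: the within estimator as OLS of \(\tilde{y}_{it}\) on \(\tilde{x}_{it}\); the between estimator as OLS of \(\tilde{y}_{i}\) on \(\tilde{w}_{i}\); and the GLS estimator computed, as is common, by OLS on quasi-demeaned data \(y_{it} - (1-\theta_T)\overline{y}_{i}\) and \(w_{it} - (1-\theta_T)\overline{w}_{i}\), with the intercept column quasi-demeaned as well. Like the text, it treats \(\sigma_u^2\) and \(\sigma_v^2\) as known. The function name `panel_estimators` and the simulated design are hypothetical.

```python
import numpy as np

def panel_estimators(y, x, z, sigma_u2, sigma_v2):
    """Hypothetical sketch for a balanced panel.
    y: (N, T); x: (N, T, k) time-varying; z: (N, g) time-invariant.
    Returns (beta_w, delta_b, delta_g, theta_T), with delta = (beta', gamma')'."""
    N, T, k = x.shape
    g = z.shape[1]

    # Within: OLS of y~_it on x~_it; z_i drops out, so gamma is not identified.
    x_w = (x - x.mean(axis=1, keepdims=True)).reshape(N * T, k)
    y_w = (y - y.mean(axis=1, keepdims=True)).reshape(N * T)
    beta_w = np.linalg.lstsq(x_w, y_w, rcond=None)[0]

    # Between: OLS of y~_i on w~_i (individual means minus overall means).
    w_bar = np.column_stack([x.mean(axis=1), z])
    w_b = w_bar - w_bar.mean(axis=0)
    y_bar = y.mean(axis=1)
    delta_b = np.linalg.lstsq(w_b, y_bar - y_bar.mean(), rcond=None)[0]

    # GLS: OLS on quasi-demeaned data, theta_T = sqrt(s_v^2 / (T s_u^2 + s_v^2)).
    theta = np.sqrt(sigma_v2 / (T * sigma_u2 + sigma_v2))
    lam = 1.0 - theta
    x_s = x - lam * x.mean(axis=1, keepdims=True)
    z_s = np.repeat(theta * z[:, None, :], T, axis=1)   # z_i - lam * z_i
    const = np.full((N, T, 1), theta)                    # 1 - lam * 1
    W = np.concatenate([const, x_s, z_s], axis=2).reshape(N * T, 1 + k + g)
    y_s = (y - lam * y.mean(axis=1, keepdims=True)).reshape(N * T)
    delta_g = np.linalg.lstsq(W, y_s, rcond=None)[0][1:]  # drop the intercept
    return beta_w, delta_b, delta_g, theta

# Simulated data satisfying the RE assumption (illustrative design only).
rng = np.random.default_rng(1)
N, T = 1000, 10
x = rng.normal(size=(N, 1, 1)) + rng.normal(size=(N, T, 1))  # heterogeneous means
z = rng.normal(size=(N, 1))
u = rng.normal(size=N)                                       # independent of x and z
y = 2.0 + 0.5 * x[..., 0] + 1.0 * z + u[:, None] + rng.normal(size=(N, T))

beta_w, delta_b, delta_g, theta = panel_estimators(y, x, z, 1.0, 1.0)
print(beta_w, delta_b, delta_g, theta)
```

Because \(\theta_T\) shrinks toward zero as T grows, the quasi-demeaning transform approaches the within transform, which is the mechanical source of the large-T equivalence discussed next.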

An important advantage of the GLS estimator over the within estimator is that it allows researchers to estimate γ. In addition, the GLS estimator of β is more efficient than the within estimator of β, because \([Var(\hat{\beta}_{w}) - Var(\hat{\beta}_{g})]\) is positive definite as long as \(\theta_{T} > 0\). Despite these desirable properties, it is important to note that the consistency of the GLS estimator crucially depends on the RE assumption (7.3). Accordingly, the legitimacy of the RE assumption should be tested to justify the use of GLS. In the literature, the Hausman (1978) test has been widely used for this purpose. The statistic used for this test is a distance measure between the within and GLS estimators of β:

\[ \mathcal{H}\mathcal{M}_{NT} = (\hat{\beta}_{w} - \hat{\beta}_{g})^{\prime}\,[Var(\hat{\beta}_{w}) - Var(\hat{\beta}_{g})]^{-1}\,(\hat{\beta}_{w} - \hat{\beta}_{g}). \]

For the cases in which T is fixed and N → ∞, the RE assumption ensures that the Hausman statistic \(\mathcal{H}\mathcal{M}_{NT}\) is asymptotically \(\chi^{2}\)-distributed with degrees of freedom equal to k. This result follows directly from the fact that, for fixed T, the GLS estimator \(\hat{\beta }_{g}\) is asymptotically more efficient than the within estimator \(\hat{\beta }_{w}\), and that the difference between the two estimators is asymptotically normal; specifically, as N → ∞,

where “⇒” means “converges in distribution.”
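To make the statistic concrete, here is a minimal sketch that computes the quadratic form \((\hat\beta_w-\hat\beta_g)^{\prime}[Var(\hat\beta_w)-Var(\hat\beta_g)]^{-1}(\hat\beta_w-\hat\beta_g)\). It assumes the textbook variance forms \(Var(\hat\beta_w)=\sigma_v^2(\sum_i\sum_t\tilde x_{it}\tilde x_{it}^{\prime})^{-1}\) and, for GLS, the β-block of \(\sigma_v^2(W^{*\prime}W^{*})^{-1}\), where \(W^{*}\) is the quasi-demeaned regressor matrix (the quasi-demeaned errors are i.i.d. with variance \(\sigma_v^2\) under RE). The simulation design, including an alternative in which \(u_i\) loads on the permanent component of \(x_{it}\), is hypothetical.

```python
import numpy as np

def hausman_stat(y, x, z, sigma_u2, sigma_v2):
    """Hypothetical sketch. y: (N, T); x: (N, T, k); z: (N, g)."""
    N, T, k = x.shape
    g = z.shape[1]
    # Within estimator and Var(beta_w) = sigma_v^2 (sum x~ x~')^{-1}.
    x_w = (x - x.mean(axis=1, keepdims=True)).reshape(N * T, k)
    y_w = (y - y.mean(axis=1, keepdims=True)).reshape(N * T)
    sxx = x_w.T @ x_w
    beta_w = np.linalg.solve(sxx, x_w.T @ y_w)
    var_w = sigma_v2 * np.linalg.inv(sxx)
    # GLS via quasi-demeaning; intercept and z_i are scaled by theta_T.
    theta = np.sqrt(sigma_v2 / (T * sigma_u2 + sigma_v2))
    lam = 1.0 - theta
    x_s = x - lam * x.mean(axis=1, keepdims=True)
    z_s = np.repeat(theta * z[:, None, :], T, axis=1)
    W = np.concatenate([np.full((N, T, 1), theta), x_s, z_s], axis=2)
    W = W.reshape(N * T, 1 + k + g)
    y_s = (y - lam * y.mean(axis=1, keepdims=True)).reshape(N * T)
    sww = W.T @ W
    coef_g = np.linalg.solve(sww, W.T @ y_s)
    beta_g = coef_g[1:1 + k]
    var_g = sigma_v2 * np.linalg.inv(sww)[1:1 + k, 1:1 + k]
    d = beta_w - beta_g
    return float(d @ np.linalg.solve(var_w - var_g, d))

def simulate(N, T, c, rng):
    """x_it = mu_i + e_it; u_i = c * mu_i + noise, so c != 0 violates RE."""
    mu = rng.normal(size=N)
    x = (mu[:, None] + rng.normal(size=(N, T)))[..., None]
    z = rng.normal(size=(N, 1))
    u = c * mu + rng.normal(size=N)
    y = 0.5 * x[..., 0] + 1.0 * z + u[:, None] + rng.normal(size=(N, T))
    return y, x, z

rng = np.random.default_rng(2)
hm_null = hausman_stat(*simulate(300, 5, 0.0, rng), sigma_u2=1.0, sigma_v2=1.0)
hm_alt = hausman_stat(*simulate(300, 5, 1.0, rng), sigma_u2=2.0, sigma_v2=1.0)
print(hm_null, hm_alt)
```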

An important condition that guarantees (7.6) is \(\theta_{T} > 0\). If \(\theta_{T} = 0\), then \(\hat{\beta }_{w}\) and \(\hat{\beta }_{g}\) become identical and the Hausman statistic is not defined. Since \(\theta_{T} \to 0\) as T → ∞, we can easily conjecture that \(\hat{\beta }_{w}\) and \(\hat{\beta }_{g}\) should be asymptotically equivalent as T → ∞. This observation raises two issues concerning the asymptotic properties of the Hausman test as T → ∞. First, since the Hausman statistic is asymptotically \(\chi^{2}\)-distributed for any fixed T under the RE assumption, we can conjecture that it remains asymptotically \(\chi^{2}\)-distributed even as T → ∞. We therefore wish to understand the theoretical link between the asymptotic distribution of the Hausman statistic and the equivalence of the within and GLS estimators. Second, it is well known that the GLS estimator \(\hat{\beta }_{g}\) is a weighted average of the within and between estimators \(\hat{\beta }_{w}\) and \(\hat{\beta }_{b}\) (Maddala 1971). Given the form of the Hausman statistic, we can therefore conjecture that the Hausman test should have power to detect any violation of the RE assumption that biases \(\hat{\beta }_{b}\). However, the weight given to \(\hat{\beta }_{b}\) in \(\hat{\beta }_{g}\) decreases with T. We therefore wish to understand how the power of the Hausman test is related to the size of T. We address these two issues in the following sections.
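Maddala's weighted-average representation, and the way the weight on \(\hat\beta_b\) shrinks as T grows, can be checked numerically. For brevity, this sketch assumes a single time-varying regressor and no time-invariant regressor, where the weights are scalars: \(\hat\beta_g = \omega\hat\beta_w + (1-\omega)\hat\beta_b\) with \(\omega = W_{xx}/(W_{xx} + \theta_T^2 T B_{xx})\), \(W_{xx} = \sum_i\sum_t\tilde x_{it}^2\), and \(B_{xx} = \sum_i \tilde x_i^2\). The weighted average is cross-checked against OLS on quasi-demeaned data; the data-generating design is hypothetical.

```python
import numpy as np

def scalar_estimators(y, x, sigma_u2, sigma_v2):
    """One time-varying regressor, no z (illustrative). y, x: (N, T)."""
    N, T = x.shape
    theta2 = sigma_v2 / (T * sigma_u2 + sigma_v2)          # theta_T^2
    xw = x - x.mean(axis=1, keepdims=True)                  # x~_it
    yw = y - y.mean(axis=1, keepdims=True)
    xb = x.mean(axis=1) - x.mean()                          # x~_i
    yb = y.mean(axis=1) - y.mean()
    w_xx, w_xy = np.sum(xw * xw), np.sum(xw * yw)
    b_xx, b_xy = np.sum(xb * xb), np.sum(xb * yb)
    beta_w, beta_b = w_xy / w_xx, b_xy / b_xx
    omega = w_xx / (w_xx + theta2 * T * b_xx)               # weight on beta_w
    beta_g = omega * beta_w + (1.0 - omega) * beta_b        # weighted-average form

    # Independent check: OLS (with intercept) on quasi-demeaned data.
    lam = 1.0 - np.sqrt(theta2)
    xs = (x - lam * x.mean(axis=1, keepdims=True)).ravel()
    ys = (y - lam * y.mean(axis=1, keepdims=True)).ravel()
    xs, ys = xs - xs.mean(), ys - ys.mean()
    beta_g_qd = np.sum(xs * ys) / np.sum(xs * xs)
    return beta_w, beta_b, beta_g, beta_g_qd, omega

rng = np.random.default_rng(3)
gls_pairs, between_weights = [], []
for T in (5, 50, 500):
    mu = rng.normal(size=200)
    x = mu[:, None] + rng.normal(size=(200, T))
    y = 1.0 + 0.5 * x + rng.normal(size=200)[:, None] + rng.normal(size=(200, T))
    b_w, b_b, b_g, b_g_qd, omega = scalar_estimators(y, x, 1.0, 1.0)
    gls_pairs.append((b_g, b_g_qd))
    between_weights.append(1.0 - omega)
    print(T, round(1.0 - omega, 4))   # weight on the between estimator
```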

What complicates the investigation of the asymptotic properties of the Hausman statistic is that its convergence rate crucially depends on the data generating process. The following subsection considers several simple cases to illustrate this point.

7.2.2 Preliminary Results

This subsection considers several simple examples demonstrating that the convergence rate of the Hausman statistic depends on (i) whether or not the time-varying regressors are cross-sectionally heterogeneous and (ii) how the time-varying and time-invariant regressors are correlated.

For model (7.1), we can easily show that

where

Equation (7.10) provides some insight into the convergence rate of the Hausman test statistic. Note that \((\hat{\beta }_{w} -\hat{\beta }_{g})\) depends on both \((\hat{\beta }_{w}-\beta )\) and \((\hat{\beta }_{b}-\beta )\). The between estimator \(\hat{\beta }_{b}\) exploits only the N between-individual variations, while the within estimator \(\hat{\beta }_{w}\) is computed from the N(T − 1) within-individual variations. Accordingly, \((\hat{\beta }_{b}-\beta )\) converges in probability to a zero vector much more slowly than \((\hat{\beta }_{w}-\beta )\) does. We can therefore conjecture that the convergence rate of \((\hat{\beta }_{w} -\hat{\beta }_{g})\) depends on that of \((\hat{\beta }_{b}-\beta )\), not on that of \((\hat{\beta }_{w}-\beta )\). We confirm this conjecture below.

In this subsection, we consider only a simple model that has a single time-varying regressor (\(x_{it}\)) and a single time-invariant regressor (\(z_{i}\)). Accordingly, all of the terms defined in (7.7)–(7.11) are scalars. The within and GLS estimators and the Hausman test are well defined even if there is no time-invariant regressor. However, we consider cases with both time-varying and time-invariant regressors because the correlation between the two regressors plays an important role in determining the convergence rate of the Hausman statistic. Asymptotic results for the case with only a single time-varying regressor can easily be obtained by setting \(C_{NT} = 0\).

We consider asymptotics under the RE assumption (7.3). The power properties of the Hausman test are discussed in the following subsection. To save space, we consider only the estimators of β and the Hausman test; the asymptotic distributions of the estimators of γ are discussed in Sect. 7.4. Throughout the examples below, we assume that the \(z_{i}\) are i.i.d. over different i with \(N(0, \sigma_{z}^{2})\). In addition, we use \(e_{it}\) to denote a white noise component of the time-varying regressor \(x_{it}\). We assume that the \(e_{it}\) are i.i.d. over different i and t with \(N(0, \sigma_{e}^{2})\) and are uncorrelated with the \(z_{i}\).

We consider two different cases separately: the cases in which x it and z i are uncorrelated (CASE A), and the cases in which the regressors are correlated (CASE B).

CASE A:

Here we consider a case in which the time-varying regressor \(x_{it}\) is stationary without trend. Specifically, we assume:

where \(\Theta _{a,i}\) and \(\Psi _{a,t}\) are fixed individual-specific and time-specific effects, respectively. Define \(\overline{\Theta }_{a} = \frac{1}{N}\sum_{i}\Theta _{a,i}\) and \(\overline{\Psi }_{a} = \frac{1}{T}\sum_{t}\Psi _{a,t}\); and let

The effects \(\Theta _{a,i}\) and \(\Psi _{a,t}\) could be allowed to be random without changing our results, but at the cost of additional analytical complexity. We consider two possible cases: one in which the parameters \(\Theta _{a,i}\) are heterogeneous, and one in which they are constant across individuals. Allowing the \(\Theta _{a,i}\) to differ across individuals makes the means of \(x_{it}\) cross-sectionally heterogeneous. In contrast, if the \(\Theta _{a,i}\) are constant over different i, the means of \(x_{it}\) are cross-sectionally homogeneous. As we show below, the convergence rates of the between estimator and the Hausman test statistic differ between the two cases.

To be more specific, consider the three terms \(B_{NT}\), \(C_{NT}\), and \(b_{NT}\) defined below (7.11). Straightforward algebra reveals that

It can be shown that the terms including \((\Theta _{a,i} -\overline{\Theta }_{a})\) are the dominant factors determining the asymptotic properties of \(B_{NT}\), \(C_{NT}\), and \(b_{NT}\). However, if the parameters \(\Theta _{a,i}\) are constant over different individuals, so that \(\Theta _{a,i} -\overline{\Theta }_{a} = 0\), none of \(B_{NT}\), \(C_{NT}\), and \(b_{NT}\) depends on \((\Theta _{a,i} -\overline{\Theta }_{a})\). In this case, the asymptotic properties of the three terms depend on \((\overline{e}_{i} -\overline{e})\). This result indicates that the asymptotic distribution of the between estimator \(\hat{\beta }_{b}\), which is a function of \(B_{NT}\), \(C_{NT}\), and \(b_{NT}\), depends on whether the parameters \(\Theta _{a,i}\) are cross-sectionally heterogeneous or homogeneous.Footnote 5

We now consider the asymptotic distributions of the within, between, and GLS estimators and the Hausman statistic under the two alternative assumptions about the parameters \(\Theta _{a,i}\).

CASE A.1:

Assume that the \(\Theta _{a,i}\) vary across different i; that is, \(p_{a,2} \neq 0\). With this assumption, we can easily show that as (N, T → ∞)Footnote 6:

Three remarks follow. First, consistent with previous studies, we find from (7.15) that the within and GLS estimators, \(\hat{\beta }_{w}\) and \(\hat{\beta }_{g}\), are \(\sqrt{NT}\)-equivalent in the sense that \(\sqrt{NT}(\hat{\beta }_{w} -\hat{\beta }_{g}) = o_{p}(1)\). This is so because the second term on the right-hand side of (7.15) is \(O_{p}(1/\sqrt{T})\). At the same time, (7.15) also implies that \(\sqrt{NT^{2}}(\hat{\beta }_{w} -\hat{\beta }_{g})\) is \(O_{p}(1)\) and asymptotically normal. These results indicate that the within and GLS estimators are equivalent to each other at the order of \(\sqrt{NT}\), but not at the order of \(\sqrt{NT^{2}}\). Second, from (7.15) and (7.16), we can see that the Hausman statistic is asymptotically \(\chi^{2}\)-distributed, with convergence rate \(\sqrt{NT^{2}}\).Footnote 7 In particular, (7.15) indicates that the asymptotic distribution of the Hausman statistic is closely related to the asymptotic distribution of the between estimator \(\hat{\beta }_{b}\).

Finally, the above asymptotic results imply some simplified GLS and Hausman test procedures. From (7.13) and (7.14), it is clear that

Combined with (7.15), this result indicates that the Hausman test based on the difference between \(\hat{\beta }_{w}\) and \(\hat{\beta }_{g}\) is asymptotically equivalent to the Wald test, based on the between estimator \(\hat{\beta }_{b}\), of the hypothesis that the true β equals the within estimator \(\hat{\beta }_{w}\). We obtain this result because \(\hat{\beta }_{w}\) converges faster than \(\hat{\beta }_{b}\). In addition, the GLS estimator \(\hat{\gamma }_{g}\) can be obtained by the between regression that treats \(\hat{\beta }_{w}\) as the true β, that is, the regression based on the model \(\tilde{y}_{i} -\tilde{x}_{i}\hat{\beta }_{w} =\tilde{z}_{i}\gamma + error\). An advantage of these alternative procedures is that GLS estimation and the Hausman test can be conducted without estimating \(\theta_{T}\). The alternative procedures would be particularly useful for the analysis of unbalanced panel data, for which \(\theta_{T}\) differs across cross-sectional units. When the number of time-series observations is sufficiently large for every individual i, we do not need to estimate these different values of \(\theta_{T}\) for GLS. The alternative procedures remain valid for all of the other cases analyzed below.
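The two simplified procedures can be sketched as follows, assuming a balanced panel, known \(\sigma_u^2\) and \(\sigma_v^2\), and a between-regression error variance of \(\sigma_u^2 + \sigma_v^2/T\) under the RE assumption. The function name and the simulated design (a CASE A.1-style regressor with heterogeneous means) are hypothetical. Neither step uses \(\theta_T\).

```python
import numpy as np

def simplified_procedures(y, x, z, sigma_u2, sigma_v2):
    """Hypothetical sketch. y: (N, T); x: (N, T, k); z: (N, g)."""
    N, T, k = x.shape
    # Within estimator of beta.
    x_w = (x - x.mean(axis=1, keepdims=True)).reshape(N * T, k)
    y_w = (y - y.mean(axis=1, keepdims=True)).reshape(N * T)
    beta_w = np.linalg.lstsq(x_w, y_w, rcond=None)[0]

    # Between deviations x~_i, z~_i, y~_i.
    x_t = x.mean(axis=1) - x.mean(axis=(0, 1))
    z_t = z - z.mean(axis=0)
    y_bar = y.mean(axis=1)
    y_t = y_bar - y_bar.mean()

    # gamma: between regression of y~_i - x~_i beta_w on z~_i.
    gamma_hat = np.linalg.lstsq(z_t, y_t - x_t @ beta_w, rcond=None)[0]

    # Wald statistic for H0: beta = beta_w, based on the between estimator.
    w_t = np.column_stack([x_t, z_t])
    sww_inv = np.linalg.inv(w_t.T @ w_t)
    beta_b = (sww_inv @ (w_t.T @ y_t))[:k]
    var_b = (sigma_u2 + sigma_v2 / T) * sww_inv[:k, :k]
    d = beta_b - beta_w
    wald = float(d @ np.linalg.solve(var_b, d))
    return beta_w, gamma_hat, wald

rng = np.random.default_rng(4)
N, T = 500, 50
x = rng.normal(size=(N, 1, 1)) + rng.normal(size=(N, T, 1))
z = rng.normal(size=(N, 1))
y = 0.5 * x[..., 0] + 1.0 * z + rng.normal(size=(N, 1)) + rng.normal(size=(N, T))
beta_w, gamma_hat, wald = simplified_procedures(y, x, z, 1.0, 1.0)
print(beta_w, gamma_hat, wald)
```

For unbalanced panels, the same two steps apply with individual-specific means, which is where avoiding \(\theta_T\) matters most.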

CASE A.2:

Now we consider the case in which the \(\Theta _{a,i}\) are constant over different i (\(\Theta _{a,i} = \Theta _{a}\)); that is, \(p_{a,2} = 0\). It can be shown that the asymptotic distributions of the within and GLS estimators are the same under CASEs A.1 and A.2. However, the asymptotic distributions of the between estimator \(\hat{\beta }_{b}\) and the Hausman statistic differ between CASEs A.1 and A.2.Footnote 8 Specifically, for CASE A.2, we can show that as (N, T → ∞),

Several comments follow. First, observe that, unlike in CASE A.1, the between estimator \(\hat{\beta }_{b}\) is now \(\sqrt{N/T}\)-consistent. An interesting result is obtained when N∕T → c < ∞: in this case, the between estimator is inconsistent, although it is still asymptotically unbiased. This implies that the between estimator is not consistent for cross-sectionally homogeneous panel data unless N is substantially larger than T. Second, the convergence rate of \((\hat{\beta }_{w} -\hat{\beta }_{g})\), as well as that of the Hausman statistic, differs between CASEs A.1 and A.2. Notice that the convergence rate of \((\hat{\beta }_{w} -\hat{\beta }_{g})\) is \(\sqrt{NT^{3}}\) for CASE A.2, while it is \(\sqrt{NT^{2}}\) for CASE A.1. Thus, \((\hat{\beta }_{w} -\hat{\beta }_{g})\) converges in probability to zero much faster in CASE A.2 than in CASE A.1. Nonetheless, the Hausman statistic is asymptotically \(\chi^{2}\)-distributed in both cases, though with different convergence rates.
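A small Monte Carlo sketch can illustrate the contrast between CASEs A.1 and A.2. For this sketch we assume \(x_{it} = \Theta_{a,i} + \Psi_{a,t} + e_{it}\) with the fixed effects drawn once, \(\sigma_e^2 = \sigma_u^2 = \sigma_v^2 = 1\), and we omit \(z_i\) (it is uncorrelated with \(x_{it}\) in CASE A). Under these assumptions the Monte Carlo spread of \(\hat\beta_b\) should be roughly \(\sqrt{T}\) times larger in CASE A.2 than in CASE A.1, while the within estimator's spread is smaller still and the same in both cases. All design choices are illustrative.

```python
import numpy as np

def slopes(y, x):
    """Within and between slopes for one regressor (with intercept)."""
    xw = x - x.mean(axis=1, keepdims=True)
    yw = y - y.mean(axis=1, keepdims=True)
    xb = x.mean(axis=1) - x.mean()
    yb = y.mean(axis=1) - y.mean()
    return np.sum(xw * yw) / np.sum(xw * xw), np.sum(xb * yb) / np.sum(xb * xb)

def monte_carlo(heterogeneous, N=200, T=50, reps=200, beta=0.5, seed=0):
    rng = np.random.default_rng(seed)
    big_theta = rng.normal(size=N) if heterogeneous else np.zeros(N)  # Theta_{a,i}, fixed
    psi = rng.normal(size=T)                                          # Psi_{a,t}, fixed
    b_w, b_b = [], []
    for _ in range(reps):
        x = big_theta[:, None] + psi[None, :] + rng.normal(size=(N, T))   # + e_it
        y = beta * x + rng.normal(size=(N, 1)) + rng.normal(size=(N, T))  # + u_i + v_it
        w, b = slopes(y, x)
        b_w.append(w)
        b_b.append(b)
    return np.std(b_w), np.std(b_b)

sd_w1, sd_b1 = monte_carlo(heterogeneous=True)   # CASE A.1
sd_w2, sd_b2 = monte_carlo(heterogeneous=False)  # CASE A.2
print(f"A.1: sd(within)={sd_w1:.4f}, sd(between)={sd_b1:.4f}")
print(f"A.2: sd(within)={sd_w2:.4f}, sd(between)={sd_b2:.4f}")
```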

Even if the time-varying regressor \(x_{it}\) contains a time trend, we can obtain results similar to those for CASEs A.1 and A.2. For example, consider a case in which the time-varying regressor \(x_{it}\) contains a time trend of order m:

where the parameters \(\Theta _{b,i}\) are fixed.Footnote 9 Not surprisingly, in this case the within and GLS estimators are superconsistent and \(T^{m}\sqrt{NT}\)-equivalent. However, the convergence rates of the between estimator \(\hat{\beta }_{b}\) and the Hausman test statistic crucially depend on whether the parameters \(\Theta _{b,i}\) are heterogeneous or homogeneous. When the parameters \(\Theta _{b,i}\) are heterogeneous over different i, the between estimator \(\hat{\beta }_{b}\) is \(T^{m}\sqrt{N}\)-consistent, while the convergence rate of the Hausman statistic equals \(T^{m}\sqrt{NT^{2}}\). In contrast, and somewhat surprisingly, when the parameters \(\Theta _{b,i}\) are homogeneous over different i, the estimator \(\hat{\beta }_{b}\) is no longer superconsistent. Instead, it is \(\sqrt{N/T}\)-consistent, as in CASE A.2, and the convergence rate of the Hausman statistic changes to \(T^{2m}\sqrt{NT^{3}}\).Footnote 10 This example demonstrates that the convergence rates of the between estimator and the Hausman statistic crucially depend on whether the means of the time-varying regressors are cross-sectionally heterogeneous or not.

CASE B:

So far, we have considered cases in which the time-varying regressor \(x_{it}\) and the time-invariant regressor \(z_{i}\) are uncorrelated. We now examine cases in which this assumption is relaxed. The degree of correlation between \(x_{it}\) and \(z_{i}\) may vary over time. As we demonstrate below, the asymptotic properties of the panel data estimators and the Hausman test statistic depend on how the correlation varies over time. The basic model we consider here is given by

where the \(\Pi _{i}\) are individual-specific fixed parameters, and m is a non-negative real number.Footnote 11 Observe that, because of the presence of the \(\Pi _{i}\), the \(x_{it}\) are not i.i.d. over different i.Footnote 12 The correlation between \(x_{it}\) and \(z_{i}\) decreases over time if m > 0, whereas m = 0 implies that the correlation remains constant over time. For CASE B, the within and GLS estimators are always \(\sqrt{NT}\)-consistent regardless of the size of m. Thus, we report only the asymptotic results for the between estimator \(\hat{\beta }_{b}\) and the Hausman statistic.
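The statement that the correlation between \(x_{it}\) and \(z_i\) fades when m > 0 but stays constant when m = 0 can be checked by simulation. This sketch assumes \(x_{it} = e_{it} + \Pi_i z_i / t^m\), built from the two terms named in the text, with \(\Pi_i\) drawn once from a uniform distribution on [0.5, 1.5] and \(\sigma_e^2 = \sigma_z^2 = 1\); these parameter choices are illustrative only.

```python
import numpy as np

def corr_by_period(m, N=20_000, T=100, periods=(1, 10, 100), seed=0):
    """Cross-sectional corr(x_it, z_i) at selected t, assuming x_it = e_it + Pi_i z_i / t**m."""
    rng = np.random.default_rng(seed)
    pi = rng.uniform(0.5, 1.5, size=N)          # fixed Pi_i (illustrative choice)
    z = rng.normal(size=N)                      # z_i ~ N(0, 1)
    t = np.arange(1, T + 1, dtype=float)
    x = rng.normal(size=(N, T)) + (pi * z)[:, None] / t[None, :] ** m
    return {s: float(np.corrcoef(x[:, s - 1], z)[0, 1]) for s in periods}

for m in (0.0, 0.5, 1.0):
    print(m, {s: round(r, 3) for s, r in corr_by_period(m).items()})
```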

We examine three possible cases: m ∈ (0.5, ∞], m = 0.5, and m ∈ [0, 0.5). We do so because, depending on the size of m, one (or both) of the two terms \(e_{it}\) and \(\Pi _{i}z_{i}/t^{m}\) in \(x_{it}\) becomes the dominating factor in determining the convergence rates of the between estimator \(\hat{\beta }_{b}\) and the Hausman statistic \(\mathcal{H}\mathcal{M}_{NT}\).

CASE B.1:

Assume that m ∈ (0.5, ∞]. In this case, the correlation between \(x_{it}\) and \(z_{i}\) fades away quickly over time. Thus, one could expect that the correlation between \(x_{it}\) and \(z_{i}\) (through the term \(\Pi _{i}z_{i}/t^{m}\)) would not play an important role in the asymptotics. Indeed, straightforward algebra, which is not reported here, confirms this conjecture: the term \(e_{it}\) in \(x_{it}\) dominates \(\Pi _{i}z_{i}/t^{m}\) asymptotically, and this case is therefore essentially the same as CASE A.2.Footnote 13

CASE B.2:

We now assume m = 0.5. For this case, define \(\overline{\Pi } = \frac{1}{N}\sum_{i}\Pi _{i}\);

and \(q_{b} = \lim_{T\rightarrow \infty } \frac{1}{T^{1-m}}\sum_{t=1}^{T}t^{-m} = \int_{0}^{1}r^{-m}dr = \frac{1}{1-m}\) for m ≤ 0.5. With this notation, a little algebra shows that as (N, T → ∞),

Observe that the asymptotic variance of the between estimator \(\hat{\beta }_{b}\) depends on both \(\sigma_{e}^{2}\) and \(p_{b,2}q_{b}^{2}\sigma _{z}^{2}\). That is, both terms \(e_{it}\) and \(\Pi _{i}z_{i}/t^{m}\) in \(x_{it}\) matter for the asymptotics of the between estimator \(\hat{\beta }_{b}\). This implies that the correlation between \(x_{it}\) and \(z_{i}\), when it decreases reasonably slowly over time, matters for the asymptotic distribution of \(\hat{\beta }_{b}\). Nonetheless, the convergence rate of \(\hat{\beta }_{b}\) is the same as in CASEs A.2 and B.1. We can also show

both of which imply that the Hausman statistic is asymptotically \(\chi^{2}\)-distributed.
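The constant \(q_b\) used in CASE B.2 is a Riemann-sum limit: \(T^{-(1-m)}\sum_{t=1}^{T} t^{-m} = T^{-1}\sum_{t=1}^{T}(t/T)^{-m} \to \int_0^1 r^{-m}dr = 1/(1-m)\). A quick numerical check, with T = 10^6 chosen arbitrarily for illustration:

```python
import numpy as np

def q_b_partial(m, T):
    """Finite-T version of q_b: T**-(1 - m) * sum_{t=1}^T t**-m."""
    t = np.arange(1, T + 1, dtype=float)
    return float(np.sum(t ** (-m)) / T ** (1.0 - m))

for m in (0.0, 0.25, 0.5):
    print(m, q_b_partial(m, 10**6), 1.0 / (1.0 - m))
```

Convergence is slowest at m = 0.5, where the finite-T error is of order \(1/\sqrt{T}\).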

CASE B.3:

Finally, we consider the case in which m ∈ [0, 0.5), where the correlation between \(x_{it}\) and \(z_{i}\) decays slowly over time. Note that the correlation remains constant over time if m = 0. We can show

Observe that the asymptotic distribution of \(\hat{\beta }_{b}\) no longer depends on \(\sigma_{e}^{2}\). This implies that the term \(\Pi _{i}z_{i}/t^{m}\) in \(x_{it}\) dominates \(e_{it}\) in the asymptotics of \(\hat{\beta }_{b}\). Furthermore, the convergence rate of \(\hat{\beta }_{b}\) now depends on m: as long as m < 0.5, the convergence rate increases as m decreases. In particular, when the correlation between \(x_{it}\) and \(z_{i}\) remains constant over time (m = 0), the between estimator \(\hat{\beta }_{b}\) is \(\sqrt{N}\)-consistent, as in CASE A.1. Finally, the following results indicate that the convergence rate of the Hausman statistic \(\mathcal{H}\mathcal{M}_{NT}\) also depends on m:

7.2.3 Asymptotic Power Properties of the Hausman Test

In this subsection, we consider the asymptotic power properties of the Hausman test for the special cases discussed in the previous subsection. To do so, we need to specify a sequence of local alternative hypotheses for each case. Among the many possibilities, we consider alternative hypotheses under which the conditional mean of \(u_{i}\) is a linear function of the regressors \(\tilde{x}_{i}\) and \(\tilde{z}_{i}\).

We list in Table 7.1 our local alternatives and asymptotic results for CASEs A.1–B.3. For all of the cases, we assume that \(var(u_{i}\vert \tilde{x}_{i},\tilde{z}_{i}) =\sigma _{ u}^{2}\) for all i. The parameters \(\lambda_{x}\) and \(\lambda_{z}\) are nonzero real numbers. Notice that for CASEs A.2–B.3, we use \(\sqrt{T}\tilde{x}_{i}\) or \(T^{m}\tilde{x}_{i}\) instead of \(\tilde{x}_{i}\), because for those cases \(\mathit{plim}_{T\rightarrow \infty }\tilde{x}_{i} = 0\). The third column gives the convergence rates of the Hausman test, which are the same as those obtained under the RE assumption. Under the local alternatives, the Hausman statistic asymptotically follows a noncentral \(\chi^{2}\) distribution. The noncentrality parameters for the individual cases are listed in the fourth column of Table 7.1.

where \(\lambda_{o}\) is any constant scalar. The asymptotic results remain the same with this replacement.
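Given a noncentrality parameter from Table 7.1, the asymptotic local power of the Hausman test follows from the noncentral \(\chi^{2}\) distribution. For the illustrative models with a single time-varying regressor (k = 1), a noncentral \(\chi^{2}_{1}\) variable with noncentrality \(\kappa\) can be written as \((Z + \sqrt{\kappa})^{2}\) with Z standard normal, which gives a closed form using only the Python standard library. The function name and the symbol \(\kappa\) are our own; the table's values are not reproduced here.

```python
from statistics import NormalDist

def hausman_power_k1(kappa, alpha=0.05):
    """Asymptotic rejection probability of a level-alpha Hausman test with k = 1
    when the statistic is noncentral chi-square(1) with noncentrality kappa."""
    std = NormalDist()
    c = std.inv_cdf(1.0 - alpha / 2.0)   # square root of the chi-square(1) critical value
    s = kappa ** 0.5
    # P((Z + s)^2 > c^2) = P(Z > c - s) + P(Z < -c - s)
    return (1.0 - std.cdf(c - s)) + std.cdf(-c - s)

for kappa in (0.0, 1.0, 4.0, 9.0):
    print(kappa, round(hausman_power_k1(kappa), 3))
```

At \(\kappa = 0\) the function returns the nominal size \(\alpha\), and power rises monotonically with \(\kappa\); this is how a noncentrality parameter that shrinks or grows with T translates into the power patterns discussed below.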

A couple of comments follow. First, although the noncentrality parameter does not depend on \(\lambda_{z}\) in any case reported in Table 7.1, this does not mean that the Hausman test has no power to detect nonzero correlation between the effect \(u_{i}\) and the time-invariant regressor \(z_{i}\). The Hausman test comparing the GLS and within estimators is not designed to detect correlation between the time-invariant regressors and the individual effects directly. Nonetheless, the test has power as long as the individual effect \(u_{i}\) is correlated with the time-varying regressors conditionally on the time-invariant regressors. To see this, consider a model in which \(x_{it}\) and \(z_{i}\) have a common factor \(f_{i}\); that is, \(x_{it} = f_{i} + e_{it}\) and \(z_{i} = f_{i} +\eta _{i}\). (This is the case discussed below in Assumption 5.) Assume \(E(u_{i}\ \vert \ f_{i},\eta _{i},\bar{e}_{i}) = c\eta _{i}/\sqrt{N}\). Also assume that \(f_{i}\), \(\eta_{i}\), and \(e_{it}\) are normal, mutually independent, and i.i.d. over different i and t with zero means and variances \(\sigma _{f}^{2}\), \(\sigma _{\eta }^{2}\), and \(\sigma_{e}^{2}\), respectively. Note that \(x_{it}\) is not correlated with \(u_{i}\), while \(z_{i}\) is. In this case, however, we can show that

where \(d = (\sigma _{f}^{2} +\sigma _{ e}^{2}/T)(\sigma _{f}^{2} +\sigma _{ \eta }^{2}) -\sigma _{f}^{4}\), \(\lambda _{x} = -c\sigma _{f}^{2}\sigma _{\eta }^{2}/d\), and \(\lambda _{z} = c(\sigma _{f}^{2} +\sigma _{ e}^{2}/T)\sigma _{\eta }^{2}/d\). Observe that \(\lambda_{x}\) is functionally related to \(\lambda_{z}\): \(\lambda_{x} = 0\) if and only if \(\lambda_{z} = 0\). In this case, it can be shown that the Hausman test has power to detect nonzero correlation between \(u_{i}\) and \(\tilde{z}_{i}\).
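The expressions for d, \(\lambda_{x}\), and \(\lambda_{z}\) are the coefficients of the linear projection of \(c\eta_{i}\) on \((\bar{x}_{i}, z_{i})\), where \(\bar{x}_{i} = f_{i} + \bar{e}_{i}\) has variance \(\sigma_{f}^{2} + \sigma_{e}^{2}/T\) (the \(1/\sqrt{N}\) scaling is dropped here). A short check of that reading, with arbitrary parameter values chosen for illustration:

```python
import numpy as np

def lambdas_closed_form(c, s_f2, s_e2, s_eta2, T):
    """lambda_x and lambda_z as given in the text (without the 1/sqrt(N) scaling)."""
    d = (s_f2 + s_e2 / T) * (s_f2 + s_eta2) - s_f2 ** 2
    return -c * s_f2 * s_eta2 / d, c * (s_f2 + s_e2 / T) * s_eta2 / d

def lambdas_by_projection(c, s_f2, s_e2, s_eta2, T):
    """Coefficients of the linear projection of c * eta_i on (x_bar_i, z_i)."""
    cov_xz = np.array([[s_f2 + s_e2 / T, s_f2],      # Var(x_bar), Cov(x_bar, z)
                       [s_f2, s_f2 + s_eta2]])       # Cov(z, x_bar), Var(z)
    cov_with_u = np.array([0.0, c * s_eta2])         # Cov(x_bar, c*eta), Cov(z, c*eta)
    return np.linalg.solve(cov_xz, cov_with_u)

params = dict(s_f2=1.5, s_e2=2.0, s_eta2=0.5, T=10)
print(lambdas_closed_form(1.0, **params), lambdas_by_projection(1.0, **params))
```

Although \(x_{it}\) itself is uncorrelated with \(u_i\), its projection coefficient \(\lambda_x\) is nonzero (and negative) whenever c ≠ 0, because conditioning on \(z_i\) makes \(\bar{x}_i\) informative about \(\eta_i\).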

Second and more importantly, the results for CASE A show that the large-T, large-N power properties of the Hausman test may depend on (i) which components of x it are correlated with the effect u i and (ii) whether the mean of x it is cross-sectionally heterogeneous. For CASE A, the time-invariant and time-varying parts of x it , \(\Theta _{a,i}\) and e it , can be viewed as permanent and transitory components, respectively. For fixed T, it does not matter to the Hausman test which of these two components of x it is correlated with the individual effect u i : the test has power to detect any kind of correlation between x it and u i . In contrast, for the cases with large T, the same test can have power against specific correlations only. To see why, observe that for CASE A.1, the noncentral parameter of the Hausman statistic depends only on the variation of the permanent components \(\Theta _{a,i}\), not on that of the transitory components e it . This implies that for CASE A.1 (where the permanent components \(\Theta _{a,i}\) are cross-sectionally heterogeneous), the Hausman test has power against nonzero correlation between the effect u i and the permanent component \(\Theta _{a,i}\), but no power against nonzero correlation between the effect and the transitory component e it . In contrast, for CASE A.2 (where the permanent components \(\Theta _{a,i}\) are the same for all i), the noncentral parameter depends on the variation in e it . That is, for CASE A.2, the Hausman test does have power to detect nonzero correlation between the effect and the transitory component of x it .Footnote 14

Similar results are obtained from the analysis of CASE B. The results reported in the fourth column of Table 7.1 show that when the correlation between x it and z i decays slowly over time (m ≤ 0.5), the Hausman test has power to detect nonzero correlation between the individual effect and the transitory component of time-varying regressors, even if T is large. In contrast, when m > 0.5, the same test has no power to detect such nonzero correlations if T is large. These results indicate that the asymptotic power of the Hausman test can depend on the size of T: Hausman test results based on the entire data set with large T could differ from those based on subsamples with small T. These findings are elaborated further in Sect. 7.3.
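A noncentral χ 2 limit translates directly into asymptotic power. The sketch below uses SciPy and illustrative noncentrality values that are not those of Table 7.1; df is the number of coefficients compared by the Hausman statistic (one in the single-regressor designs of Sect. 7.3). When the noncentral parameter is zero, as it is for correlation with the transitory component in CASE A.1 with large T, the rejection probability collapses to the nominal size.

```python
from scipy.stats import chi2, ncx2


def asymptotic_power(noncentrality: float, df: int, alpha: float = 0.05) -> float:
    """Probability that a chi-square test of nominal size `alpha` rejects when the
    statistic is (asymptotically) noncentral chi-square(df, noncentrality)."""
    if noncentrality < 0:
        raise ValueError("noncentrality must be nonnegative")
    critical_value = chi2.ppf(1.0 - alpha, df)
    if noncentrality == 0.0:
        # Central case: the rejection probability is just the nominal size.
        return float(chi2.sf(critical_value, df))
    return float(ncx2.sf(critical_value, df, noncentrality))


# Illustrative noncentrality values (not taken from Table 7.1).
for nc in (0.0, 1.0, 5.0, 10.0):
    print(f"noncentrality={nc:5.1f}  power={asymptotic_power(nc, df=1):.3f}")
```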

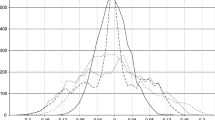

7.3 Monte Carlo Experiments

In this section, we investigate the finite-sample properties of the within and GLS estimators, as well as those of the Hausman test. Consistent with the previous section, we consider a model with one time-varying regressor x it and one time-invariant regressor z i . The foundations of our experiments are CASEs A and B discussed above. For all of our simulations, both the individual effects u i and random errors v it are drawn from N(0, 1).
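The three quantities studied in the experiments can be sketched as follows for one time-varying regressor x it and one time-invariant regressor z i . This is a minimal illustration, not the authors' simulation code: it assumes the model includes an intercept and treats \(\sigma _{u}^{2}\) and \(\sigma _{v}^{2}\) as known (both equal 1 in this design); feasible GLS would replace them with estimates. GLS is computed by quasi-demeaning, and the Hausman statistic is the squared difference of the two estimates of β divided by the difference of their variances.

```python
import numpy as np
from scipy.stats import chi2


def within_gls_hausman(y, x, z, sigma_u2=1.0, sigma_v2=1.0):
    """Within and GLS estimates and the Hausman statistic for
    y_it = alpha + beta x_it + gamma z_i + u_i + v_it.

    y, x: (N, T) arrays; z: (N,) array. sigma_u2 and sigma_v2 are treated as known.
    Returns (beta_w, beta_g, gamma_g, H, p_value).
    """
    N, T = y.shape

    # Within estimator: least squares on deviations from individual means
    # (z_i and the intercept are swept out, so gamma is not identified here).
    x_dm = x - x.mean(axis=1, keepdims=True)
    y_dm = y - y.mean(axis=1, keepdims=True)
    sxx = np.sum(x_dm ** 2)
    beta_w = np.sum(x_dm * y_dm) / sxx
    var_w = sigma_v2 / sxx

    # GLS via quasi-demeaning: subtract theta times the individual mean.
    theta = 1.0 - np.sqrt(sigma_v2 / (sigma_v2 + T * sigma_u2))
    y_star = (y - theta * y.mean(axis=1, keepdims=True)).ravel()
    x_star = (x - theta * x.mean(axis=1, keepdims=True)).ravel()
    const_star = np.full(N * T, 1.0 - theta)
    z_star = np.repeat((1.0 - theta) * z, T)      # matches row-major ravel order (i, t)
    X = np.column_stack([const_star, x_star, z_star])
    xtx_inv = np.linalg.inv(X.T @ X)
    coef = xtx_inv @ (X.T @ y_star)
    beta_g, gamma_g = coef[1], coef[2]
    var_g = sigma_v2 * xtx_inv[1, 1]

    # Hausman statistic for the coefficient on x (one degree of freedom).
    H = (beta_w - beta_g) ** 2 / (var_w - var_g)
    return float(beta_w), float(beta_g), float(gamma_g), float(H), float(chi2.sf(H, df=1))
```

With known variance components, GLS is efficient given the regressors, so the variance difference in the denominator is nonnegative. Under random effects H should usually fall below the 5% critical value of χ 2(1); making x it depend on u i pushes the within and GLS estimates apart and inflates H.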

For the cases in which the two regressors are uncorrelated (CASE A), we generate x it and z i as follows:

where \(\Theta _{i} =\rho _{xu,1}u_{i} + \sqrt{1 -\rho _{ xu,1 }^{2}}\Theta _{i}^{c}\), \(\xi _{i} =\rho _{xu,2}u_{i} + \sqrt{1 -\rho _{ xu,2 }^{2}}\xi _{i}^{c}\), the \(\Theta _{i}^{c}\) and ξ i c are random variables from N(0, 1), the e it and f i are drawn from a uniform distribution in the range (−2, 2). The term \(\xi _{i}\phi _{t} + e_{it}\) is the transitory component of x it . The term ξ i ϕ t is introduced to investigate the cases with non-zero correlations between the individual effect and the transitory component of x it . The degrees of correlations between the individual effects and regressors are controlled by ρ xu, 1, ρ xu, 2 and ρ zu . For each of the simulation results reported below, 5,000 random samples are used.
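The correlated components above are built by mixing the effect with an independent standard normal draw. With u i ∼ N(0, 1), \(\rho u_{i} + \sqrt{1 -\rho ^{2}}c_{i}\) is again N(0, 1) and has correlation exactly ρ with u i , which is how ρ xu, 1 and ρ xu, 2 control the degree of correlation. The sketch below checks this numerically; the full equation for x it in (7.22), including ϕ t , is not reproduced here, and the value ρ = 0.3 is arbitrary.

```python
import numpy as np


def correlated_with_effect(u, rho, rng):
    """Return rho*u + sqrt(1 - rho^2)*c, with c ~ N(0, 1) drawn independently of u.

    If u ~ N(0, 1), the result is N(0, 1) with correlation rho with u.
    """
    c = rng.standard_normal(u.shape)
    return rho * u + np.sqrt(1.0 - rho ** 2) * c


rng = np.random.default_rng(12345)
N = 100_000
u = rng.standard_normal(N)                                  # individual effects u_i
theta = correlated_with_effect(u, rho=0.3, rng=rng)        # Theta_i with rho_xu,1 = 0.3
xi = correlated_with_effect(u, rho=0.0, rng=rng)           # xi_i with rho_xu,2 = 0
print(np.corrcoef(u, theta)[0, 1], theta.var(), np.corrcoef(u, xi)[0, 1])
```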

Table 7.2 reports the simulation results from CASE A.1. When the regressors are uncorrelated with the effect u i , both the GLS and within estimators have only small biases. The Hausman test is reasonably well sized, although it is somewhat oversized when both N and T are small. When the permanent component of x it is correlated with the effect (Panel I), the GLS estimator of β is biased. However, as expected, the bias decreases with T. The bias in the within estimator of β is always small regardless of the sizes of N and T. The Hausman test has high power to detect nonzero correlation between the permanent component of x it and u i regardless of sample size. The power increases with T, while the bias in the GLS estimator of β decreases.

Panel II of Table 7.2 shows the results from the cases in which the effect u i and the transitory component of x it are correlated. Our asymptotic results predict that, when T is large, this type of correlation neither biases the GLS estimates nor is detected by the Hausman test. The results reported in Panel II are consistent with this prediction. Even when T is small, we do not see substantial biases in the GLS estimates. When T is small, the Hausman test has some limited power to detect the nonzero correlation between the effect and the transitory component of the time-varying regressor; however, this power decreases with T.

Table 7.3 reports the results from the cases in which the time-varying regressor does not have a permanent component (CASE A.2). As in Panel II of Table 7.2, there is no sign that nonzero correlation between the effect and the transitory component of x it causes a substantial bias in the GLS estimator. Unlike the results reported in Panel II of Table 7.2, however, the Hausman test now has better power to detect nonzero correlation between the effect and the transitory component of x it . The power increases as either N or T increases.

We now consider the cases in which x it and z i are correlated (CASE B). For these cases, the x it are generated by

where the π i are drawn from a uniform distribution on (0, 1). As in (7.22), the term ξ i ϕ t is introduced to investigate the cases with nonzero correlation between the individual effect and the transitory component of x it . Observe that in (7.24), we use f i , not z i . As discussed in the previous section, the Hausman test would have no power to detect nonzero correlation between z i and u i for the cases with large T if z i instead of f i were used in (7.24). We use f i to investigate the power properties of the Hausman test under more general cases than those considered in the previous section. Under (7.24), the Hausman test can have power to detect nonzero correlation between z i and u i .

Table 7.4 shows our simulation results from the cases in which the time-varying regressor is correlated with the time-invariant regressor. Panel I reports the results when the time-invariant regressor z i is correlated with the effect u i . Regardless of how fast the correlation between x it and z i decays over time, the GLS estimator of γ shows some bias. While the biases reported in Panel I appear only mild, they become substantial if we increase ρ zu further. When the correlation between x it and z i remains constant over time (m = 0), the GLS estimator of β is mildly biased, but the bias becomes smaller as T increases. When the correlation between x it and z i decays over time (m ≥ 0.5), no substantial bias is detected in the GLS estimator of β, even if T is small. The Hausman test has some power to detect nonzero correlation between the time-invariant regressor z i and the effect u i . However, the power appears to be limited in our simulation exercises: it never exceeds 61%. The power increases with T when m ≤ 0.5, but decreases with T when m > 0.5.

Panel II of Table 7.4 reports the results for the cases in which the transitory component of x it is correlated with the effect u i . As in Table 7.3, neither the GLS estimator of β nor that of γ shows signs of significant bias. For the cases in which the correlation between x it and z i decays only mildly over time (m < 0.5), the power of the Hausman test to detect nonzero correlation between the individual effect and the transitory component of the time-varying regressor is extremely low, especially when T is large. In contrast, when m ≥ 0.5, the power of the Hausman test increases with T. These results are consistent with the predictions of the asymptotic results derived in the previous section.

Our simulation results can be summarized as follows. First, the finite-sample properties of the GLS estimators and the Hausman test are generally consistent with their asymptotic properties. Second, even if time-varying or time-invariant regressors are correlated with the unobservable individual effects, the GLS estimators of the coefficients on the time-varying regressors do not suffer from substantial biases if T is large, although the GLS estimators of the coefficients on time-invariant regressors could be biased regardless of the size of T. Third, the Hausman test has high power to detect nonzero correlation between the unobservable individual effects and the permanent components of time-varying regressors. In contrast, it has only limited power to detect nonzero correlation between the effects and the transitory components of the time-varying regressors, and between the effects and time-invariant regressors. The power of the Hausman test crucially depends on both the size of T and the covariance structure among the regressors and the effects.

Both our asymptotic and Monte Carlo results provide empirical researchers with practical guidance. For studies that focus on the effects of time-varying regressors on the dependent variable, the choice between the GLS and within estimators is immaterial when T is as large as N, because both estimators are consistent. For studies that focus on the effects of time-invariant regressors, some caution is required in interpreting Hausman test results. One important reason to prefer GLS to within estimation is that GLS allows estimation of the effects of time-invariant regressors on the dependent variable. For consistent estimation of these effects, however, it is important to test for endogeneity of the regressors. Our results indicate that large-T data do not necessarily improve the power of the Hausman test. When the correlations between time-varying and time-invariant variables decrease quickly over time, the Hausman test generally lacks the power to detect endogeneity of time-invariant regressors. Tests based on subsamples with small T could provide more reliable results. Differing test results from a large-T sample and its small-T subsamples may also provide some information about how the individual effect might be correlated with the time-varying regressors. Rejection with the large-T data but acceptance with the small-T data would indicate that the effect is correlated with the permanent components of the time-varying regressors, but only weakly. In contrast, acceptance with the large-T data but rejection with the small-T data may indicate that the effect is correlated with the transitory components of the time-varying regressors.
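Comparing a large-T result with small-T subsample results requires only splitting the time dimension. The helper below is hypothetical: `statistic` stands for any routine that returns a test statistic for an N × T s subpanel (for example a Hausman routine), the blocks are non-overlapping, and trailing periods that do not fill a block are dropped. The time-invariant z i is passed to every block unchanged.

```python
from typing import Callable, List

import numpy as np


def subsample_statistics(y: np.ndarray, x: np.ndarray, z: np.ndarray, block_length: int,
                         statistic: Callable[[np.ndarray, np.ndarray, np.ndarray], float]
                         ) -> List[float]:
    """Evaluate `statistic` on consecutive non-overlapping blocks of `block_length`
    periods. y and x are (N, T) arrays; z is (N,) and is shared by every block."""
    N, T = y.shape
    results = []
    for start in range(0, T - block_length + 1, block_length):
        stop = start + block_length
        results.append(statistic(y[:, start:stop], x[:, start:stop], z))
    return results
```

Because every block shares the same individual effects u i , the subsample statistics are not independent of one another, so their rejection pattern is best read descriptively, as in the discussion above.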

So far, we have considered several simple cases to demonstrate how the convergence rates of the popular panel data estimators and the Hausman test are sensitive to data generating processes. For these simple cases, all of the relevant asymptotics can be obtained in a straightforward manner. In the following section, we will show that the main results obtained from this section apply to more general cases in which regressors are serially dependent with arbitrary covariance structures.

7.4 General Case

This section derives the asymptotic distributions of the within, between, and GLS estimators and of the Hausman statistic for the general model (7.1). In Sect. 7.2, we separately considered several simple models, each containing regressors of a particular type. The general model we consider in this section contains all of the different types of regressors analyzed in Sect. 7.2. More detailed assumptions are introduced below.

From now on, the following notation is used repeatedly. The expression “ → p ” means “converges in probability,” while “ ⇒ ” means “converges in distribution,” as in Sect. 7.2.2. For any matrix A, the norm \(\left \|A\right \|\) signifies \(\sqrt{ tr(A{A}^{{\prime}})}.\) When B is a random matrix with \(E{\left \|B\right \|}^{p} < \infty \), \(\left \|B\right \|_{p}\) denotes \({\left (E{\left \|B\right \|}^{p}\right )}^{1/p}\). We use \(E_{\mathcal{F}}(\cdot )\) to denote the conditional expectation operator with respect to a sigma field \(\mathcal{F}\). We also define \(\left \|B\right \|_{\mathcal{F},p} ={ \left (E_{\mathcal{F}}{\left \|B\right \|}^{p}\right )}^{1/p}.\) The notation x N ∼ a N indicates that there exist n and positive finite constants d 1 and d 2 such that \(\inf _{N\geq n}\frac{x_{N}} {a_{N}} \geq d_{1}\) and \(\sup _{N\geq n}\frac{x_{N}} {a_{N}} \leq d_{2}.\) We also use the following notation for the relevant sigma fields: \(\mathcal{F}_{x_{i}} =\sigma (x_{i1},\ldots,x_{iT})\); \(\mathcal{F}_{z_{i}} =\sigma \left (z_{i}\right )\); \(\mathcal{F}_{z} =\sigma \left (\mathcal{F}_{z_{1}},\ldots,\mathcal{F}_{z_{N}}\right )\); \(\mathcal{F}_{w_{i}} =\sigma \left (\mathcal{F}_{x_{i}},\mathcal{F}_{z_{i}}\right )\); and \(\mathcal{F}_{w} =\sigma \left (\mathcal{F}_{w_{1}},\ldots,\mathcal{F}_{w_{N}}\right ).\) The x it and z i are now k × 1 and g × 1 vectors, respectively.
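The two norms defined above can be made concrete in a few lines. The sketch computes \(\left \|A\right \| = \sqrt{tr(A{A}^{{\prime}})}\) directly, which coincides with the Frobenius norm, and estimates \(\left \|B\right \|_{p} = {(E{\left \|B\right \|}^{p})}^{1/p}\) by Monte Carlo from draws of a random matrix; the Monte Carlo estimator and the standard normal example are our own illustration.

```python
import numpy as np


def matrix_norm(A):
    """||A|| = sqrt(tr(A A')), i.e., the Frobenius norm."""
    return float(np.sqrt(np.trace(A @ A.T)))


def lp_norm_mc(draws, p):
    """Monte Carlo estimate of ||B||_p = (E ||B||^p)^(1/p) from draws of shape (R, m, n)."""
    norms = np.sqrt(np.einsum("rij,rij->r", draws, draws))   # ||B_r|| for each draw r
    return float(np.mean(norms ** p) ** (1.0 / p))


# For a scalar standard normal B, ||B||_4 = (E B^4)^(1/4) = 3^(1/4).
rng = np.random.default_rng(1)
print(lp_norm_mc(rng.standard_normal((200_000, 1, 1)), 4), 3 ** 0.25)
```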

As in Sect. 7.2, we assume that the regressors \({(x_{i1}^{{\prime}},\ldots,x_{iT}^{{\prime}},z_{i}^{{\prime}})}^{{\prime}}\) are independently distributed across different i. In addition, we make the following assumption about the error components u i and v it :

Assumption 1

(about u i and v it ): For some q > 1,

-

(i)

The u i are independent over different i with \(\sup _{i}E{\left \vert u_{i}\right \vert }^{4q} < \infty \) .

-

(ii)

The v it are i.i.d. with mean zero and variance σ v 2 across different i and t, and are independent of x is , z i and u i , for all i, t, and s. Also, \(\left \|v_{it}\right \|_{4q} \equiv \kappa _{v}\) is finite.

Assumption 1(i) is a standard regularity condition for error-components models. Assumption 1(ii) indicates that all of the regressors and individual effects are strictly exogenous with respect to the error terms v it .Footnote 15

We now state the assumptions about the regressors. We consider three different types of time-varying regressors: we partition the k × 1 vector x it into three subvectors, x 1, it , x 2, it , and x 3, it , which are k 1 × 1, k 2 × 1, and k 3 × 1, respectively. The vector x 1, it consists of the regressors with deterministic trends. We may think of three different types of trends: (i) cross-sectionally heterogeneous nonstochastic trends in mean (but not in variance or covariances); (ii) cross-sectionally homogeneous nonstochastic trends; and (iii) stochastic trends (trends in variance) such as unit-root time series. In Sect. 7.2, we considered the first two types while discussing the cases related to (7.20). Type (ii) is materially similar to CASE A.2, except that the convergence rates of the estimators and test statistics differ between the two cases. Thus, we consider only type (i) here. We do not cover stochastic trends (iii), leaving the analysis of such cases to future study.

The two subvectors x 2, it and x 3, it are random regressors with no trend in mean. They are distinguished by their correlation with z i : the x 2, it are not correlated with z i , while the x 3, it are. In addition, to accommodate CASEs A.1 and A.2, we partition the subvector x 2, it into x 21, it and x 22, it , which are k 21 × 1 and k 22 × 1, respectively. As in CASE A.1, the regressor vector x 21, it is heterogeneous across i, as well as across t, with means \(\Theta _{21,it}\). In contrast, x 22, it is cross-sectionally homogeneous, with mean \(\Theta _{22,t}\) for all i at a given t (as in CASE A.2). We also incorporate CASEs B.1–B.3 into the model by partitioning x 3, it into x 31, it , x 32, it , and x 33, it , which are k 31 × 1, k 32 × 1, and k 33 × 1, respectively, according to how fast their correlations with z i decay over time. More detailed assumptions on the regressors x it and z i follow:

Assumption 2

(about x 1,it ):

-

(i)

For some q > 1, \(\kappa _{_{x_{ 1}}} \equiv \sup _{i,t}\left \|x_{1,it} - Ex_{1,it}\right \|_{4q} < \infty \) .

-

(ii)

Let x h,1,it be the h th element of x 1,it . Then, \(Ex_{h,1,it} \sim {t}^{m_{h,1}}\) for all i and h = 1,…,k 1 , where m h,1 > 0.

Assumption 3

(about x 2,it ): For some q > 1,

-

(i)

\(E(x_{21,it}) = \Theta _{21,it}\) and \(E(x_{22,it}) = \Theta _{22,t}\) , where \(\sup _{i,t}\left \|\Theta _{21,it}\right \|\), \(\sup _{t}\left \|\Theta _{22,t}\right \| < \infty \) , and \(\Theta _{21,it}\neq \Theta _{21,jt}\) if i ≠ j.

-

(ii)

\(\kappa _{x_{2}} \equiv \sup _{i,t}\left \|x_{2,it} - Ex_{2,it}\right \|_{4q} < \infty \) .

Assumption 4

(about x 3,it ): For some q > 1,

-

(i)

\(E\left (x_{3,it}\right ) = \Theta _{3,it},\) where \(\sup _{i,t}\left \|\Theta _{3,it}\right \| < \infty.\)

-

(ii)

\(E\left (\sup _{i,t}\left \|x_{3,it} - E_{\mathcal{F}_{z_{ i}}}x_{3,it}\right \|_{\mathcal{F}_{z_{ i}},4q}^{8q}\right ) < \infty.\)

-

(iii)

Let x h,3k,it be the h th element of x 3k,it , where k = 1,2,3. Then, conditional on z i,

-

(iii.1)

\(\left (E_{\mathcal{F}_{z_{ i}}}x_{h,31,it} - Ex_{h,31,it}\right ) \sim {t}^{-m_{h,31}}\) a.s., where \(\frac{1} {2} < m_{h,31} \leq \infty \) for \(h = 1,\ldots,k_{_{31}}\)

(here, m h,31 = ∞ implies that \(E_{\mathcal{F}_{z_{ i}}}x_{h,31,it} - Ex_{h,31,it} = 0\) a.s.);

-

(iii.2)

\(\left (E_{\mathcal{F}_{z_{ i}}}x_{h,32,it} - Ex_{h,32,it}\right ) \sim {t}^{-\frac{1} {2} }\) a.s. for \(h = 1,\ldots,k_{_{32}}\);

-

(iii.3)

\(\left (E_{\mathcal{F}_{z_{ i}}}x_{h,33,it} - Ex_{h,33,it}\right ) \sim {t}^{-m_{h,33}}\) a.s., where \(0 \leq m_{h,33} < \frac{1} {2}\) for \(h = 1,\ldots,k_{_{33}}\) .


Assumption 5

(about z i ): {z i } i is i.i.d. over i with \(E(z_{i}) = \Theta _{z},\) and \(\left \|z_{i}\right \|_{4q} < \infty \) for some q > 1.

Panel data estimators of individual coefficients have different convergence rates depending on the types of the corresponding regressors. To address these differences, we define:

where

Observe that D 1T , D 2T , and D 3T are conformable to the regressor vectors x 1, it , x 2, it , and x 3, it , respectively, while D x,T and I g are conformable to x it and z i , respectively. The diagonal matrix D T is chosen so that \(\mathit{plim}_{N\rightarrow \infty }\frac{1} {N}\sum _{i}D_{T}\tilde{w}_{i}\tilde{w}_{i}^{{\prime}}D_{ T}\) is well defined and finite. For future use, we also define

so that

Using this notation, we make the following regularity assumptions on the unconditional and conditional means of regressors:

Assumption 6

(convergence as T →∞): Defining t = [Tr], we assume that the following restrictions hold as T →∞.

-

(i)

Let \(\tau _{1}\left (r\right ) = \mathit{diag}\left ({r}^{m_{1,1}},\ldots,{r}^{m_{k_{1},1}}\right )\) , where m h,1 is defined in Assumption 2. Then,

$$\displaystyle{ D_{1T}E\left (x_{1,it}\right ) \rightarrow \tau _{1}\left (r\right )\Theta _{1,i} }$$uniformly in i and r ∈ [0,1], for some \(\Theta _{1,i} ={ \left (\Theta _{1,1,i},\ldots,\Theta _{k_{1},1,i}\right )}^{{\prime}}\) with \(\sup _{i}\left \|\Theta _{1,i}\right \| < \infty \) .

-

(ii)

\(\Theta _{21,it} \rightarrow \Theta _{21,i}\) and \(\Theta _{3,it} \rightarrow \Theta _{3,i}\) uniformly in i with \(\sup _{i}\left \|\Theta _{21,i}\right \| < \infty \) and \(\sup _{i}\left \|\Theta _{3,i}\right \| < \infty \) .

-

(iii)

Uniformly in i and r ∈ [0,1],

-

\(D_{31T}\left (E_{\mathcal{F}_{z_{ i}}}x_{31,it} - Ex_{31,it}\right ) \rightarrow 0_{k_{31}\times 1}\) a.s.;

-

\(D_{32T}\left (E_{\mathcal{F}_{z_{ i}}}x_{32,it} - Ex_{32,it}\right ) \rightarrow \frac{1} {\sqrt{r}}I_{k_{32}}g_{32,i}\left (z_{i}\right )\) a.s.;

-

\(D_{33T}\left (E_{\mathcal{F}_{z_{ i}}}x_{33,it} - Ex_{33,it}\right ) \rightarrow \tau _{33}\left (r\right )g_{33,i}\left (z_{i}\right )\) a.s.,

where

$$\displaystyle{ g_{32,i} ={ \left (g_{1,32,i},\ldots,g_{k_{32},32,i}\right )}^{{\prime}};\text{ }g_{ 33,i} ={ \left (g_{1,33,i},\ldots,g_{k_{33},33,i}\right )}^{{\prime}}, }$$and \(g_{32,i}\left (z_{i}\right )\) and g 33,i (z i ) are zero-mean functions of z i with

$$\displaystyle{ 0 < E\sup _{i}{\left \|g_{3k,i}\left (z_{i}\right )\right \|}^{4q} < \infty \text{, for some }q > 1, }$$and \(g_{3k,i}\neq g_{3k,j}\) for \(i\neq j\), and \(\tau _{33}\left (r\right ) = \mathit{diag}\left ({r}^{-m_{1,33}},\ldots,{r}^{-m_{k_{33},33}}\right )\) .

-

(iv)

There exist \(\tilde{\tau }\left (r\right )\) and \(\tilde{G}_{i}\left (z_{i}\right )\) such that

$$\displaystyle{ \Vert D_{3T}\left (E_{\mathcal{F}_{z_{ i}}}x_{3,it} - Ex_{3,it}\right )\Vert \leq \tilde{\tau } (r)\tilde{G}_{i}(z_{i})\text{,} }$$where \(\int \tilde{\tau }{\left (r\right )}^{4q}dr < \infty \) and \(E\sup _{i}\tilde{G}_{i}{\left (z_{i}\right )}^{4q} < \infty \) for some q > 1.

-

(v)

Uniformly in \(\left (i,j\right )\) and r ∈ [0,1];

-

\(D_{31T}\left (Ex_{31,it} - Ex_{31,jt}\right ) \rightarrow 0_{k_{31}\times 1},\)

-

\(D_{32T}\left (Ex_{32,it} - Ex_{32,jt}\right ) \rightarrow \frac{1} {\sqrt{r}}I_{k_{32}}\left (\mu _{g_{32i}} -\mu _{g_{32j}}\right )\),

-

\(D_{33T}\left (Ex_{33,it} - Ex_{33,jt}\right ) \rightarrow \tau _{33}\left (r\right )\left (\mu _{g_{33i}} -\mu _{g_{33j}}\right ),\)

with \(\sup _{i}\Vert \mu _{g_{32i}}\Vert,\sup _{i}\Vert \mu _{g_{33i}}\Vert < \infty.\)

Some remarks may help in understanding Assumption 6. First, to gain intuition about what the assumption implies, consider, as an illustrative example, the simple model of CASE B in Sect. 7.2.2, in which \(x_{3,it} = \Pi _{i}z_{i}/{t}^{m} + e_{it},\) where e it is independent of z i and i.i.d. across i. For this case,

Thus,

Second, Assumption 6(iii) requires \(E\sup _{i}{\left \|g_{3k,i}\left (z_{i}\right )\right \|}^{4q}\) to be strictly positive, for k = 2, 3. This restriction is made to rule out \(g_{3k,i}\left (z_{i}\right ) = 0\) a.s. If \(g_{3k,i}\left (z_{i}\right ) = 0\) a.s.,Footnote 16 then

and the correlations between x 3, it and z i no longer play any important role in asymptotics. Assumption 6(iii) rules out such cases.
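For the illustrative model \(x_{3,it} = \Pi _{i}z_{i}/{t}^{m} + e_{it}\), the conditional-mean gap is \(E_{\mathcal{F}_{z_{i}}}x_{3,it} - Ex_{3,it} = \Pi _{i}(z_{i} - \Theta _{z})/{t}^{m}\). Assuming, for this sketch only, that the relevant diagonal element of the scaling matrix is \({T}^{m}\) (the definitions of D 32T and D 33T are not reproduced here), the scaled gap at t = [Tr] approaches \({r}^{-m}\Pi _{i}(z_{i} - \Theta _{z})\). This has the form \(\tau _{33}(r)g_{33,i}(z_{i})\) in Assumption 6(iii) with the zero-mean function \(g_{33,i}(z_{i}) = \Pi _{i}(z_{i} - \Theta _{z})\) when 0 ≤ m < 1/2, and the form \(g_{32,i}(z_{i})/\sqrt{r}\) when m = 1/2. The scalar code below checks the convergence numerically.

```python
import numpy as np


def scaled_conditional_mean_gap(T, r, m, pi_i, z_i, theta_z):
    """T^m * (E[x_3,it | z_i] - E[x_3,it]) at t = floor(T r), for the scalar example
    x_3,it = Pi_i z_i / t^m + e_it with E z_i = theta_z."""
    t = int(np.floor(T * r))
    if t < 1:
        raise ValueError("need T * r >= 1 so that t = floor(T r) is a valid period")
    return T ** m * pi_i * (z_i - theta_z) / t ** m


def conditional_mean_gap_limit(r, m, pi_i, z_i, theta_z):
    """Limit r^(-m) * Pi_i * (z_i - theta_z) as T -> infinity."""
    return r ** (-m) * pi_i * (z_i - theta_z)


for T in (10, 1_000, 100_000):
    print(T, scaled_conditional_mean_gap(T, 0.4, 0.3, 2.0, 1.5, 0.5),
          conditional_mean_gap_limit(0.4, 0.3, 2.0, 1.5, 0.5))
```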

Assumption 6 is about the asymptotic properties of means of regressors as T → ∞. We also need additional regularity assumptions on the means of regressors that apply as N → ∞. Define

and

With this notation, we assume the following:

Assumption 7

(convergence as N →∞): Define \(\tilde{\Theta }_{1,i} = \Theta _{1,i} - \frac{1} {N}\mathop{ \sum }\nolimits _{i}\Theta _{1,i}\); \(\tilde{\Theta }_{21,i} = \Theta _{21,i} - \frac{1} {N}\mathop{ \sum }\nolimits _{i}\Theta _{21,i}\); \(\tilde{\mu }_{g_{32,i}} =\mu _{g_{32,i}} - \frac{1} {N}\mathop{ \sum }\nolimits _{i}\mu _{g_{32,i}}\) ; and \(\tilde{\mu }_{g_{33,i}} =\mu _{g_{33,i}} - \frac{1} {N}\mathop{ \sum }\nolimits _{i}\mu _{g_{33,i}}\) . As N →∞,

-

(i)

\(\frac{1} {N}\!\!\sum _{i}\!\!\left (\begin{array}{c} H_{1}\tilde{\Theta }_{1,i} \\ \tilde{\Theta }_{21,i} \\ H_{32}\tilde{\mu }_{g_{32,i}} \\ H_{33}\tilde{\mu }_{g_{33,i}}\end{array} \right )\ \ {\left (\begin{array}{c} H_{1}\tilde{\Theta }_{1,i} \\ \tilde{\Theta }_{21,i} \\ H_{32}\tilde{\mu }_{g_{32,i}} \\ H_{33}\tilde{\mu }_{g_{33,i}}\end{array} \right )}^{{\prime}}\!\!\rightarrow \!\!\left (\begin{array}{cccc} \Gamma _{\Theta _{1},\Theta _{1}} & \Gamma _{\Theta _{1},\Theta _{21}} & \Gamma _{\Theta _{1},\mu _{32}} & \Gamma _{\Theta _{1},\mu _{33}} \\ \Gamma _{\Theta _{1},\Theta _{21}}^{{\prime}}&\Gamma _{\Theta _{21},\Theta _{21}} & \Gamma _{\Theta _{21},\mu _{32}} & \Gamma _{\Theta _{21},\mu _{33}} \\ \Gamma _{\Theta _{1},\mu _{32}}^{{\prime}} & \Gamma _{\Theta _{21},\mu _{32}}^{{\prime}} & \Gamma _{\mu _{32},\mu _{32}} & \Gamma _{\mu _{32},\mu _{33}} \\ \Gamma _{\Theta _{1},\mu _{33}}^{{\prime}} & \Gamma _{\Theta _{21},\mu _{33}}^{{\prime}} &\Gamma _{\mu _{32},\mu _{33}}^{{\prime}}& \Gamma _{\mu _{33},\mu _{33}}\end{array} \right )\!.\)

-

(ii)

\(\frac{1} {N}\!\!\sum _{i}\!\!\left (\begin{array}{lll} \Gamma _{g_{32},g_{32},i} &\Gamma _{g_{32},g_{33},i} &\Gamma _{g_{32},z,i} \\ \Gamma _{g_{32},g_{33},i}^{{\prime}}&\Gamma _{g_{33},g_{33},i}&\Gamma _{g_{33},z,i} \\ \Gamma _{g_{32},z,i}^{{\prime}} &\Gamma _{g_{33},z,i}^{{\prime}} &\Gamma _{z,z,i}\end{array} \right )\!\! \rightarrow \!\!\left (\begin{array}{lll} \Gamma _{g_{32},g_{32}} & \Gamma _{g_{32},g_{33}} & \Gamma _{g_{32},z} \\ \Gamma _{g_{32},g_{33}}^{{\prime}}&\Gamma _{g_{33},g_{33}} & \Gamma _{g_{33},z} \\ \Gamma _{g_{32},z}^{{\prime}} &\Gamma _{g_{33},z}^{{\prime}} &\Gamma _{z,z}\end{array} \right )\!\) .

-

(iii)

The limit of \(\frac{1} {N}\sum _{i}\Theta _{1,i}\Theta _{1,i}^{{\prime}}\) exists.

At first sight, Assumptions 6 and 7 appear to impose sequential convergence of the means of the regressors, as T → ∞ followed by N → ∞. However, this by no means implies that our asymptotic analysis is sequential. Instead, the uniformity conditions in Assumption 6 allow us to obtain our asymptotic results using the joint limit approach, which applies as (N, T → ∞) simultaneously.Footnote 17 Joint limit results can also be obtained under an alternative set of conditions that assume uniform limits of the means of regressors sequentially as N → ∞ followed by T → ∞. Nonetheless, we adopt Assumptions 6 and 7 because they make the trends in the regressors x 1, it and x 3, it much easier to handle in the asymptotics.

The following notation is for conditional or unconditional covariances among time-varying regressors. Define

where \(\Gamma _{jl,i}\left (t,s\right ) = E\left (x_{j,it} - E_{\mathcal{F}_{z_{ i}}}x_{j,it}\right )\left (x_{l,is} - E_{\mathcal{F}_{z_{ i}}}x_{l,is}\right )\), for j, l = 2, 3. Essentially, the \(\Gamma _{i}\) is the unconditional mean of the conditional variance-covariance matrix of \({(x_{2,it}^{{\prime}},x_{3,it}^{{\prime}})}^{{\prime}}\). We also define the unconditional variance-covariance matrix of \({(x_{1,it}^{{\prime}},x_{2,it}^{{\prime}},x_{3,it}^{{\prime}})}^{{\prime}}\) by

where \(\tilde{\Gamma }_{jl,i}\left (t,s\right ) = E\left (x_{j,it} - Ex_{j,it}\right )\left (x_{l,is} - Ex_{l,is}\right )\), for j, l = 1, 2, 3. Observe that \(\Gamma _{22,i}\left (t,s\right ) =\tilde{ \Gamma }_{22,i}\left (t,s\right )\), since x 2, it and z i are independent. With this notation, we make the following assumption on the convergence of variances and covariances:

Assumption 8

(convergence of covariances): As \(\left (N,T \rightarrow \infty \right )\),

-

(i)

\(\frac{1} {N}\mathop{ \sum }\nolimits _{i} \frac{1} {T}\mathop{ \sum }\nolimits _{t}\mathop{ \sum }\nolimits _{s}\left (\begin{array}{ll} \Gamma _{22,i}\left (t,s\right ) &\Gamma _{23,i}\left (t,s\right ) \\ \Gamma _{23,i}^{{\prime}}\left (t,s\right )&\Gamma _{33,i}\left (t,s\right )\end{array} \right ) \rightarrow \left (\begin{array}{ll} \Gamma _{22} & \Gamma _{23} \\ \Gamma _{23}^{{\prime}}&\Gamma _{33}\end{array} \right ).\)

-

(ii)

\(\frac{1} {N}\mathop{ \sum }\nolimits _{i} \frac{1} {T}\mathop{ \sum }\nolimits _{t}\tilde{\Gamma }_{i}\left (t,t\right ) \rightarrow \Phi \) .
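The two parts of Assumption 8 average different objects: part (i) sums autocovariances over all pairs (t, s), giving a long-run variance, while part (ii) uses only the contemporaneous terms (t, t). A worked example, assuming (purely for illustration) that a regressor follows a stationary AR(1), \(x_{t} = a\,x_{t-1} +\varepsilon _{t}\) with \(var(\varepsilon _{t}) = s^{2}\), makes the difference concrete: \(\Gamma (t,s) = s^{2}{a}^{\vert t-s\vert }/(1 - {a}^{2})\), the double sum divided by T converges to \(s^{2}/{(1 - a)}^{2}\), and the contemporaneous variance is \(s^{2}/(1 - {a}^{2})\).

```python
import numpy as np


def average_long_run_variance(T, a, s2):
    """(1/T) * sum_t sum_s Gamma(t, s) for a stationary AR(1) process
    x_t = a x_{t-1} + eps_t with Var(eps_t) = s2 and |a| < 1."""
    idx = np.arange(T)
    lags = np.abs(idx[:, None] - idx[None, :])
    gamma = s2 * a ** lags / (1.0 - a ** 2)     # Gamma(t, s) = s2 a^|t-s| / (1 - a^2)
    return gamma.sum() / T


def contemporaneous_variance(a, s2):
    """Gamma(t, t): the kind of term averaged in Assumption 8(ii)."""
    return s2 / (1.0 - a ** 2)


for T in (10, 100, 1000):
    print(T, average_long_run_variance(T, 0.5, 1.0), "limit:", 1.0 / (1.0 - 0.5) ** 2)
print("Gamma(t, t):", contemporaneous_variance(0.5, 1.0))
```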

Note that the variance matrix \([\Gamma _{jl}]_{j,l=2,3}\) is the cross-sectional average of the long-run variance-covariance matrices of \({\left (x_{2,it}^{{\prime}},x_{3,it}^{{\prime}}\right )}^{{\prime}}\). For future use, we partition the two limits in the assumption conformably to \({(x_{21,it}^{{\prime}},x_{22,it}^{{\prime}},x_{31,it}^{{\prime}},x_{32,it}^{{\prime}},x_{33,it}^{{\prime}})}^{{\prime}}\) as follows:

Assumption 9

Let \(\mathcal{F}_{z}^{\infty } =\sigma \left (z_{1},\ldots,z_{N},\ldots \right ).\) For a generic constant M that is independent of N and T, the following hold:

-

(i)

\(\sup _{i,T}\left \| \frac{1} {T}\sum _{t}\left (x_{1,it} - Ex_{1,it}\right )\right \|_{4} < M,\)

-

(ii)

\(\sup _{i,T}\left \| \frac{1} {\sqrt{T}}\sum _{t}\left (x_{2,it} - Ex_{2,it}\right )\right \|_{4} < M,\)

-

(iii)

\(\sup _{i,T}\left \| \frac{1} {\sqrt{T}}\sum _{t}\left (x_{3,it} - E_{\mathcal{F}_{z_{ i}}}x_{3,it}\right )\right \|_{4} < M.\)

Assumption 9 requires the fourth moments of the normalized sums of the regressors x 1, it , x 2, it , and x 3, it to be uniformly bounded. This assumption is satisfied under mild restrictions on the moments of x it and on the temporal dependence of x 2, it and x 3, it . For sufficient conditions for Assumption 9, refer to Ahn and Moon (2001).

Finally, we formally define the random effects assumption, which is a more rigorous version of (7.3).

Assumption 10

(random effects): Conditional on \(\mathcal{F}_{w},\left \{u_{i}\right \}_{i=1,\ldots,N}\) is i.i.d. with mean zero, variance σ u 2 and finite \(\kappa _{u} \equiv \left \|u_{i}\right \|_{\mathcal{F}_{w},4}\) .

To investigate the power of the Hausman test, we also need to define an alternative hypothesis that specifies a particular direction of model misspecification. Among many possible alternatives, we consider a simple one: an alternative hypothesis under which the conditional mean of u i is a linear function of \(D_{T}\tilde{w}_{i}\). With some abuse of the conventional definition of fixed effects (which indicates nonzero correlation between \(w_{i} = {(x_{it}^{{\prime}},z_{i}^{{\prime}})}^{{\prime}}\) and u i ), we refer to this alternative as the fixed effects assumption:

Assumption 11

(fixed effects): Conditional on \(\mathcal{F}_{w}\) , the \(\left \{u_{i}\right \}_{i=1,\ldots,N}\) is i.i.d. with mean \(\tilde{w}_{i}^{{\prime}}D_{T}\lambda\) and variance σ u 2 , where λ is a (k + g) × 1 nonrandom nonzero vector.

Here, \(D_{T}\tilde{w}_{i} = {[{(D_{x,T}\tilde{x}_{i})}^{{\prime}},\tilde{z}_{i}^{{\prime}}]}^{{\prime}}\) can be viewed as a vector of detrended regressors. Thus, Assumption 11 implies nonzero correlation between the effect u i and the detrended regressors. The term \(\tilde{w}_{i}^{{\prime}}D_{T}\lambda\) can be replaced by \(\lambda _{o} + \overline{w}_{i}^{{\prime}}D_{T}\lambda\), where λ o is any constant scalar. We use the term \(\tilde{w}_{i}^{{\prime}}D_{T}\lambda\) instead of \(\lambda _{o} + \overline{w}_{i}^{{\prime}}D_{T}\lambda\) simply for convenience.

A sequence of local versions of the fixed effects hypothesis is given as follows:

Assumption 12

(local alternatives to random effects): Conditional on \(\mathcal{F}_{w}\) , the sequence \(\left \{u_{i}\right \}_{i=1,\ldots,N}\) is i.i.d. with mean \(\tilde{w}_{i}^{{\prime}}D_{T}\lambda /\sqrt{N}\) , variance σ u 2 , and \(\kappa _{u}^{4} = E_{\mathcal{F}_{w}}{\left (u_{i} - E_{\mathcal{F}_{w}}u_{i}\right )}^{4} < \infty \) , where λ ≠ 0 (k+g)×1 is a nonrandom vector in \({\mathbb{R}}^{k+g}\) .

Under this assumption, \(E\left (D_{T}\tilde{w}_{i}u_{i}\right ) = \frac{1} {\sqrt{N}}E\left (D_{T}\tilde{w}_{i}\tilde{w}_{i}^{{\prime}}D_{T}\right )\lambda \rightarrow 0_{(k+g)\times 1}\) as (N, T → ∞). Observe that these local alternatives are of the form introduced in Table 7.1.
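The vanishing covariance under Assumption 12 can be seen in a scalar, trend-free simulation in which D T reduces to 1 (our simplification, with standard normal regressor and noise chosen for illustration). The sample covariance between \(\tilde{w}_{i}\) and u i shrinks at rate \(1/\sqrt{N}\), while \(\sqrt{N}\) times it stays centered near λ var(w) plus noise of order one, so the misspecification remains detectable without being overwhelming.

```python
import numpy as np


def draw_local_alternative(N, lam, rng):
    """Scalar, trend-free version of Assumption 12 (D_T = 1):
    E(u_i | w_i) = w_tilde_i * lam / sqrt(N), with N(0, 1) noise around it."""
    w = rng.standard_normal(N)
    w_tilde = w - w.mean()                      # deviation from the cross-section mean
    u = w_tilde * lam / np.sqrt(N) + rng.standard_normal(N)
    return w_tilde, u


rng = np.random.default_rng(7)
for N in (100, 10_000, 1_000_000):
    w_tilde, u = draw_local_alternative(N, lam=2.0, rng=rng)
    cov = np.mean(w_tilde * u)
    # cov shrinks like 1/sqrt(N); sqrt(N) * cov stays centered near lam * Var(w) = 2.
    print(N, round(cov, 4), round(np.sqrt(N) * cov, 2))
```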

The following assumption is required for identification of the within and between estimators of β and γ.

Assumption 13

The matrices \(\Psi _{x}\) and \(\Xi \) are positive definite.

Two remarks on this assumption follow. First, this assumption is also sufficient for identification of the GLS estimators. Second, while the positive definiteness of the matrix \(\Xi \) is required for identification of the between estimators, it is not necessary for the asymptotic distribution of the Hausman statistic obtained below. We can obtain the same asymptotic results for the Hausman test even if we alternatively assume that within estimation can identify β (positive definite \(\Psi _{x}\)) and between estimation can identify γ given β (the part of \(\Xi \) corresponding to \(\tilde{z}_{i}\) is positive definite).Footnote 18 Nonetheless, we assume that \(\Xi \) is invertible for convenience.

We now consider the asymptotic distributions of the within, between and GLS estimators of β and γ:

Theorem 1

(asymptotic distribution of the within estimator): Under Assumptions 1–8 and 13, as \(\left (N,T \rightarrow \infty \right )\),

Theorem 2

(asymptotic distribution of the between estimator): Suppose that Assumptions 1–8 and 13 hold. As \(\left (N,T \rightarrow \infty \right )\),

(a) Under Assumption 10 (random effects),

$$\displaystyle{ D_{T}^{-1}\sqrt{N}\left (\begin{array}{l} \hat{\beta }_{b}-\beta \\ \hat{\gamma }_{b}-\gamma \end{array} \right ) = \left (\begin{array}{l} D_{x,T}^{-1}\sqrt{N}\left (\hat{\beta }_{b}-\beta \right ) \\ \sqrt{N}\left (\hat{\gamma }_{b}-\gamma \right )\end{array} \right ) \Rightarrow N\left (0,\sigma _{u}^{2}{\Xi }^{-1}\right ); }$$

(b) Under Assumption 12 (local alternatives to random effects),

$$\displaystyle{ D_{T}^{-1}\sqrt{N}\left (\begin{array}{l} \hat{\beta }_{b}-\beta \\ \hat{\gamma }_{b}-\gamma \end{array} \right ) = \left (\begin{array}{l} D_{x,T}^{-1}\sqrt{N}\left (\hat{\beta }_{b}-\beta \right ) \\ \sqrt{N}\left (\hat{\gamma }_{b}-\gamma \right )\end{array} \right ) \Rightarrow N\left (\lambda,\sigma _{u}^{2}{\Xi }^{-1}\right ). }$$

Theorem 3

(asymptotic distribution of the GLS estimator of β): Suppose that Assumptions 1–8 and 13 hold.

(a) Under Assumption 12 (local alternatives to random effects),

$$\displaystyle{ \sqrt{NT}G_{x,T}^{-1}\left (\hat{\beta }_{ g}-\beta \right ) = \sqrt{NT}G_{x,T}^{-1}\left (\hat{\beta }_{ w}-\beta \right ) + o_{p}\left (1\right ), }$$

as \(\left (N,T \rightarrow \infty \right )\).

(b) Suppose that Assumption 11 (fixed effects) holds. Partition \(\lambda = {(\lambda _{x}^{{\prime}},\lambda _{z}^{{\prime}})}^{{\prime}}\) conformably to the sizes of \(x_{it}\) and \(z_{i}\). Assume that \(\lambda _{x} \neq 0_{k\times 1}\). If \(N/T \rightarrow c < \infty \) and the included regressors are only of the \(x_{22,it}\)- and \(x_{3,it}\)-types (no trends and no cross-sectional heterogeneity in \(x_{it}\)), then

$$\displaystyle{ \sqrt{NT}G_{x,T}^{-1}\left (\hat{\beta }_{ g}-\beta \right ) = \sqrt{NT}G_{x,T}^{-1}\left (\hat{\beta }_{ w}-\beta \right ) + o_{p}\left (1\right ). }$$
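The equivalence of the within and GLS estimators of β can be illustrated numerically. The following is a minimal Python sketch (standard library only) under a hypothetical random-effects design: one scalar time-varying regressor \(x_{it} = a_i + e_{it}\) with a permanent component \(a_i\), only an intercept as time-invariant regressor, an idiosyncratic error \(v_{it}\) with variance \(\sigma_v^2\), and variance components treated as known. GLS is computed in its textbook quasi-demeaned form with \(\theta = 1 - \sqrt{\sigma_v^2/(\sigma_v^2 + T\sigma_u^2)}\). The DGP and the function names `simulate_re_panel`, `within_beta` and `gls_beta` are illustrative assumptions, not the chapter's own code.

```python
import math
import random


def simulate_re_panel(n, t, beta=1.0, sigma_u=1.0, sigma_v=1.0, seed=0):
    """Hypothetical random-effects DGP: y_it = beta * x_it + u_i + v_it.

    x_it = a_i + e_it has a permanent component a_i; the effect u_i is drawn
    independently of x, so the random effects assumption holds.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        a_i = rng.gauss(0.0, 1.0)      # permanent component of x_it
        u_i = rng.gauss(0.0, sigma_u)  # individual effect, independent of x
        x_i = [a_i + rng.gauss(0.0, 1.0) for _ in range(t)]
        y_i = [beta * x + u_i + rng.gauss(0.0, sigma_v) for x in x_i]
        xs.append(x_i)
        ys.append(y_i)
    return xs, ys


def within_beta(xs, ys):
    """Within estimator: OLS on deviations from individual means."""
    num = den = 0.0
    for x_i, y_i in zip(xs, ys):
        xbar, ybar = sum(x_i) / len(x_i), sum(y_i) / len(y_i)
        for x, y in zip(x_i, y_i):
            num += (x - xbar) * (y - ybar)
            den += (x - xbar) ** 2
    return num / den


def gls_beta(xs, ys, sigma_u, sigma_v):
    """Random-effects GLS of beta by quasi-demeaning, variance components known."""
    t = len(xs[0])
    theta = 1.0 - math.sqrt(sigma_v**2 / (sigma_v**2 + t * sigma_u**2))
    c = 1.0 - theta  # quasi-demeaned intercept regressor
    s11 = s12 = s22 = r1 = r2 = 0.0
    for x_i, y_i in zip(xs, ys):
        xbar, ybar = sum(x_i) / t, sum(y_i) / t
        for x, y in zip(x_i, y_i):
            xq, yq = x - theta * xbar, y - theta * ybar
            s11 += c * c
            s12 += c * xq
            s22 += xq * xq
            r1 += c * yq
            r2 += xq * yq
    # Solve the 2x2 normal equations for (intercept, beta) by Cramer's rule.
    det = s11 * s22 - s12 * s12
    return (s11 * r2 - s12 * r1) / det, theta


for t in (5, 50, 200):
    xs, ys = simulate_re_panel(n=200, t=t, seed=1)
    b_w = within_beta(xs, ys)
    b_g, theta = gls_beta(xs, ys, sigma_u=1.0, sigma_v=1.0)
    print(f"T={t:3d} theta={theta:.3f} within={b_w:.4f} GLS={b_g:.4f} gap={abs(b_g - b_w):.2e}")
```

Because \(\theta \rightarrow 1\) as T grows, the quasi-demeaning approaches the within transformation, so one would expect the gap between the two estimates to shrink with T; the exact numbers printed depend on the seed.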

Theorem 4

(asymptotic distribution of the GLS estimator of γ): Suppose that Assumptions 1–8 and 13 hold. Define \(l_{z}^{{\prime}} = \left (0_{g\times k}\vdots I_{g}\right ).\) Then, the following statements hold as \(\left (N,T \rightarrow \infty \right ).\)

(a) Under Assumption 12 (local alternatives to random effects),

$$\displaystyle\begin{array}{rcl} \sqrt{N}\left (\hat{\gamma }_{g}-\gamma \right )& =&{ \left ( \frac{1} {N}\sum _{i}\tilde{z}_{i}\tilde{z}_{i}^{{\prime}}\right )}^{-1}\left ( \frac{1} {\sqrt{N}}\sum _{i}\tilde{z}_{i}\tilde{u}_{i}\right ) + o_{p}\left (1\right ) {}\\ & \Rightarrow & N\left ({\left (l_{z}^{{\prime}}\Xi l_{ z}\right )}^{-1}l_{ z}^{{\prime}}\Xi \lambda,\text{ }\sigma _{ u}^{2}{\left (l_{ z}^{{\prime}}\Xi l_{ z}\right )}^{-1}\right ). {}\\ \end{array}$$

(b) Under Assumption 11 (fixed effects),

$$\displaystyle{ \left (\hat{\gamma }_{g}-\gamma \right ) \rightarrow _{p}{\left (l_{z}^{{\prime}}\Xi l_{ z}\right )}^{-1}l_{ z}^{{\prime}}\Xi \lambda. }$$

Several remarks follow. First, all of the asymptotic results given in Theorems 1–4 except Theorem 3(b) hold as (N, T → ∞) without any particular restriction on the convergence rates of N and T: so long as both N and T are large, their relative size does not matter. Second, one can easily check that the convergence rates of the panel data estimates of the individual β coefficients (on the \(x_{2,it}\)- and \(x_{3,it}\)-type regressors) reported in Theorems 1–4 are consistent with those from Sect. 7.2.2. Third, Theorem 2 shows that under Assumption 10 (random effects), the between estimator of γ, \(\hat{\gamma }_{b}\), is \(\sqrt{ N}\)-consistent regardless of the characteristics of the time-varying regressors. Fourth, the between estimators of both β and γ are asymptotically biased under the sequence of local alternatives (Assumption 12). Fifth, as Theorem 3(a) indicates, the within and GLS estimators of β are asymptotically equivalent not only under the random effects assumption but also under the local alternatives. Furthermore, the GLS estimator of β is asymptotically unbiased under the local alternatives, while the between estimator of β is not. The asymptotic equivalence of the within and GLS estimators under the random effects assumption is not new: previous studies have established it using a naive sequential limit method (T → ∞ followed by N → ∞) and strong assumptions such as fixed regressors. Theorem 3(a) and (b) confirm the same equivalence result with a more rigorous joint limit approach in which \(\left (N,T \rightarrow \infty \right )\) simultaneously. It is also intriguing that the GLS and within estimators are equivalent even under the local alternative hypotheses.

Sixth, somewhat surprisingly, as Theorem 3(b) indicates, even under the fixed effects assumption (Assumption 11) the GLS estimator of β could be asymptotically unbiased (and consistent) and equivalent to its within counterpart if (i) the number of cross-section units (N) does not excessively dominate the number of time-series observations (T) in the limit (N∕T → c < ∞), and (ii) the model does not contain trended or cross-sectionally heterogeneous time-varying regressors. This result indicates that when the two conditions are satisfied, the biases in GLS caused by fixed effects are generally much smaller than those in the between estimator. If at least one of these two conditions is violated, that is, if N∕T → ∞ or if the other types of regressors are included, the limit of \((\hat{\beta }_{g} -\hat{\beta }_{w})\) is determined by how fast N∕T → ∞ and how fast the trends in the regressors increase or decrease.Footnote 19

Finally, Theorem 4(a) indicates that under the local alternative hypotheses, the GLS estimator \(\hat{\gamma }_{g}\) is \(\sqrt{ N}\)-consistent and asymptotically normal, but asymptotically biased. In this case, the limiting distribution of \(\hat{\gamma }_{g}\) is the same as that of the OLS estimator of γ in the panel model in which the coefficients of the time-varying regressors \(x_{it}\) are known (OLS on \(\tilde{y}_{it} - \beta^{\prime}\tilde{x}_{it} = \gamma^{\prime}\tilde{z}_{i} + (u_{i} + \tilde{v}_{it})\)). Comparing its asymptotic variance with that in Theorem 2, the GLS estimator \(\hat{\gamma }_{g}\) is asymptotically more efficient than the between estimator \(\hat{\gamma }_{b}\), since \(l_{z}^{\prime}\Xi^{-1}l_{z} - (l_{z}^{\prime}\Xi l_{z})^{-1}\) is positive semidefinite for positive definite \(\Xi\). On the other hand, under the fixed effects assumption, the GLS estimator \(\hat{\gamma }_{g}\), unlike the GLS estimator of \(\beta,\ \hat{\beta }_{g}\), is not consistent as \(\left (N,T \rightarrow \infty \right )\). Its asymptotic bias is given in Theorem 4(b).
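In the scalar case k = g = 1, the efficiency comparison between \(\hat{\gamma }_{b}\) and \(\hat{\gamma }_{g}\) and the asymptotic bias in Theorem 4(a) reduce to arithmetic on the three distinct entries of Ξ. A minimal Python sketch, assuming hypothetical values for \(\Xi = \left(\begin{array}{cc}\Xi_{xx} & \Xi_{xz}\\ \Xi_{xz} & \Xi_{zz}\end{array}\right)\) and \(\lambda = (\lambda_x,\lambda_z)^{\prime}\); the function name `gamma_moments` is illustrative only. The between variance is the γ-entry of \(\sigma_u^2\Xi^{-1}\) from Theorem 2, and the GLS variance and mean are those of Theorem 4(a).

```python
def gamma_moments(xi_xx, xi_xz, xi_zz, lam_x, lam_z, sigma_u2=1.0):
    """Scalar case k = g = 1 of Theorems 2 and 4(a).

    Xi = [[xi_xx, xi_xz], [xi_xz, xi_zz]] must be positive definite.
    Returns (asymptotic variance of sqrt(N)(gamma_b - gamma),
             asymptotic variance of sqrt(N)(gamma_g - gamma),
             asymptotic mean of sqrt(N)(gamma_g - gamma) under local alternatives).
    """
    det = xi_xx * xi_zz - xi_xz ** 2
    if xi_xx <= 0 or det <= 0:
        raise ValueError("Xi must be positive definite")
    var_between = sigma_u2 * xi_xx / det  # sigma_u^2 [Xi^{-1}]_{zz}
    var_gls = sigma_u2 / xi_zz            # sigma_u^2 (l_z' Xi l_z)^{-1}
    # (l_z' Xi l_z)^{-1} l_z' Xi lambda
    mean_gls = (xi_xz * lam_x + xi_zz * lam_z) / xi_zz
    return var_between, var_gls, mean_gls


print(gamma_moments(2.0, 1.0, 1.0, lam_x=1.0, lam_z=0.0))  # → (2.0, 1.0, 1.0)
```

Here \(\hat{\gamma }_{g}\) has half the asymptotic variance of \(\hat{\gamma }_{b}\), yet its asymptotic mean is nonzero even though \(\lambda_z = 0\): the effect is correlated with the time-varying regressors, which are in turn correlated with \(z_i\) (\(\Xi_{xz} \neq 0\)).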

Lastly, the following theorem gives the asymptotic distribution of the Hausman test statistic under the random effects assumption and under the local alternatives:

Theorem 5

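Suppose that Assumptions 1–8 and 13 hold. Corresponding to the size of \({(\overline{x}_{i}^{{\prime}},z_{i}^{{\prime}})}^{{\prime}}\), partition \(\Xi \) and λ, respectively, as follows:

$$\displaystyle{ \Xi = \left (\begin{array}{cc} \Xi _{xx} & \Xi _{xz} \\ \Xi _{xz}^{{\prime}} & \Xi _{zz} \end{array} \right ),\quad \lambda = \left (\begin{array}{l} \lambda _{x} \\ \lambda _{z} \end{array} \right ). }$$

Then, as \(\left (N,T \rightarrow \infty \right ),\)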

(a) Under Assumption 10 (random effects),

$$\displaystyle{ \mathcal{H}\mathcal{M}_{NT} \Rightarrow \chi _{k}^{2}; }$$

(b) Under Assumption 12 (local alternatives to random effects),

$$\displaystyle{ \mathcal{H}\mathcal{M}_{NT} \Rightarrow \chi _{k}^{2}(\eta ), }$$

where \(\eta =\lambda _{ x}^{{\prime}}(\Xi _{xx} - \Xi _{xz}\Xi _{zz}^{-1}\Xi _{xz}^{{\prime}})\lambda _{x}/\sigma _{u}^{2}\) is the noncentrality parameter.

The implications of the theorem are discussed in Sect. 7.2.3.
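Theorem 5(b) makes the local power of the Hausman test a function of Ξ, \(\lambda_x\) and \(\sigma_u^2\) only. The minimal Python sketch below (standard library only) evaluates η from the partitioned Ξ and the implied asymptotic rejection probability \(P(\chi_k^2(\eta) > c_\alpha)\), where \(c_\alpha\) is the upper-α critical value of \(\chi_k^2\). The noncentral tail uses the standard Poisson-mixture representation of the noncentral χ² distribution; the function names and the numerical values of Ξ and \(\lambda_x\) are hypothetical and chosen only for illustration.

```python
import math


def solve(a, b):
    """Solve A X = B for small dense A (m x m) and B (m x p), given as lists
    of lists, by Gauss-Jordan elimination with partial pivoting."""
    m = len(a)
    aug = [[float(v) for v in a[i]] + [float(v) for v in b[i]] for i in range(m)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(m):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[m:] for row in aug]


def noncentrality(xi_xx, xi_xz, xi_zz, lam_x, sigma_u2=1.0):
    """eta = lam_x' (Xi_xx - Xi_xz Xi_zz^{-1} Xi_xz') lam_x / sigma_u^2."""
    k, g = len(xi_xx), len(xi_zz)
    xi_zx = [list(col) for col in zip(*xi_xz)]  # Xi_xz' (g x k)
    w = solve(xi_zz, xi_zx)                     # Xi_zz^{-1} Xi_xz' (g x k)
    schur = [[xi_xx[i][j] - sum(xi_xz[i][l] * w[l][j] for l in range(g))
              for j in range(k)] for i in range(k)]
    quad = sum(lam_x[i] * schur[i][j] * lam_x[j] for i in range(k) for j in range(k))
    return quad / sigma_u2


def chi2_cdf(x, df):
    """Central chi-square CDF: regularized lower gamma P(df/2, x/2) by its series."""
    if x <= 0:
        return 0.0
    s, h = df / 2.0, x / 2.0
    term = total = 1.0 / s
    n = 0
    while term > total * 1e-16 and n < 100000:
        n += 1
        term *= h / (s + n)
        total += term
    return min(1.0, total * math.exp(s * math.log(h) - h - math.lgamma(s)))


def ncx2_sf(x, df, nc):
    """P(chi2_df(nc) > x) from the Poisson(nc/2) mixture of central chi-squares."""
    half = nc / 2.0
    weight = math.exp(-half)  # Poisson probability of j = 0
    cdf = wsum = 0.0
    j = 0
    while wsum < 1.0 - 1e-12 and j < 10000:
        cdf += weight * chi2_cdf(x, df + 2 * j)
        wsum += weight
        j += 1
        weight *= half / j
    return 1.0 - cdf


def chi2_critical(alpha, df):
    """Upper-alpha critical value of the central chi-square, by bisection."""
    lo, hi = 0.0, max(10.0, 2.0 * df)
    while chi2_cdf(hi, df) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, df) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def hausman_local_power(xi_xx, xi_xz, xi_zz, lam_x, sigma_u2=1.0, alpha=0.05):
    """Return (eta, asymptotic rejection probability) implied by Theorem 5(b)."""
    k = len(xi_xx)
    eta = noncentrality(xi_xx, xi_xz, xi_zz, lam_x, sigma_u2)
    return eta, ncx2_sf(chi2_critical(alpha, k), k, eta)


# Hypothetical k = g = 1 example: a larger Xi_xz lowers eta and hence the power.
for xi_xz in (1.0, 1.4):
    eta, power = hausman_local_power([[2.0]], [[xi_xz]], [[1.0]], [1.0])
    print(f"Xi_xz = {xi_xz}: eta = {eta:.3f}, power = {power:.3f}")
```

In the example, raising \(\Xi_{xz}\) from 1.0 to 1.4 with \(\Xi_{xx} = 2\) and \(\Xi_{zz} = 1\) shrinks \(\Xi_{xx} - \Xi_{xz}^2/\Xi_{zz}\) from 1.0 to 0.04, so η falls from 1.0 to 0.04 and the rejection rate moves close to the nominal 5%: when the time-varying regressors are strongly correlated with the time-invariant ones, the test has little power in the \(\lambda_x\) direction.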

7.5 Conclusion

This paper has considered the large-N and large-T asymptotic properties of the within, between and random effects GLS estimators, as well as those of the Hausman test statistic. The convergence rates of the between estimator and the Hausman test statistic are closely related, and the rates crucially depend on whether regressors are cross-sectionally heterogeneous or homogeneous. Nonetheless, the Hausman test is always asymptotically \(\chi^{2}\)-distributed under the random effects assumption. Our simulation results indicate that our asymptotic results are generally consistent with the finite-sample properties of the estimators and the Hausman test even if N and T are small.

Under certain local alternatives (where the conditional means of unobservable individual effects are linear in the regressors), we have also investigated the asymptotic power properties of the Hausman test. Regardless of the size of T, the Hausman test has power to detect non-zero correlations between unobservable individual effects and the permanent components of time-varying regressors. In contrast, the test has no power to detect non-zero correlations between the effects and the transitory components of time-varying regressors if T is large and the time-varying regressors do have permanent components. The Hausman test has some (although limited) power to detect non-zero correlations between the effects and time-invariant regressors when the correlations between time-varying and time-invariant regressors remain high over time. However, when these correlations decay quickly over time, the test loses its power.

In this paper, we have restricted our attention to the asymptotic and finite-sample properties of the existing estimators and tests when panel data contain both large numbers of cross-section and time-series observations. No new estimator or test is introduced. However, this paper makes several contributions to the literature. First, we have shown that the GLS and within estimators, as well as the Hausman test, can be used without any adjustment for data with large T. Second, for the cases with both large N and T, we provide a theoretical link between the asymptotic equivalence of the within and GLS estimators and the asymptotic distribution of the Hausman test. Third, we have shown that cross-sectional heterogeneity in regressors can play an important role in asymptotics. Previous studies have often assumed that data are cross-sectionally i.i.d.; our findings suggest that future studies should pay attention to cross-sectional heterogeneity. Fourth, we find that the power of the Hausman test depends on T.

Fifth and finally, our results also provide empirical researchers with some useful guidance. Different Hausman test results from large-T and small-T data can provide some information about how the individual effect is correlated with time-varying regressors. Rejection with large-T data but acceptance with small-T data would indicate that the effect is correlated with the permanent components of the time-varying regressors, but that the degrees of the correlations are low. In contrast, acceptance with large-T data but rejection with small-T data may indicate that the effect is correlated with the temporal components of the time-varying regressors. Whether the individual effect is correlated with the temporal or the permanent components of time-varying regressors is important in determining which instruments should be used to estimate the coefficients of time-invariant regressors when the random effects assumption is rejected. For example, as an anonymous referee pointed out, a key identification requirement of the instrumental variables proposed by Breusch et al. (1989) (BMS) is that only the permanent components of the time-varying regressors are correlated with the individual effects. If the Hausman test indicates that the individual effects are only temporally correlated with the time-varying regressors, the BMS instruments need not be used.

Needless to say, the model we have considered is a restrictive one. Extensions of our approach to more general models would be useful future research agendas. First, we have not considered cases with more general errors, e.g., heteroskedastic and/or serially correlated errors. It would be useful to extend our approach to such general cases. Second, we have focused on the large-N and large-T properties of panel data estimators and tests that are designed for models with large N and small T. For models with large N and large T, it may be possible to construct estimators and test methods based on large-N and large-T asymptotics that have better properties than the estimators and tests analyzed here. Developing alternative estimators based on large-T and large-N asymptotics and addressing the issue of unit roots would be important research agendas. Third, another possible extension would be the instrumental variables estimation of Hausman and Taylor (1981), Amemiya and MaCurdy (1986), and Breusch et al. (1989). For an intermediate model between fixed effects and random effects, these studies propose several instrumental variables estimators by which the coefficients on both time-varying and time-invariant regressors can be consistently estimated. It would be interesting to investigate the large-N and large-T properties of these instrumental variables estimators as well as those of the Hausman test and other GMM tests based on these estimators.

Notes

1.

Estimation of the effect of a certain time-invariant variable on a dependent variable could be an important task in a broad range of empirical research. Examples would be the labor studies about the effects of schooling or gender on individual workers’ earnings, and the macroeconomic studies about the effect of a country’s geographic location (e.g., whether the country is located in Europe or Asia) on its economic growth. The within estimator is inappropriate for such studies.

2.