Abstract

Unmanned ground vehicles (UGVs) have been the subject of much research in recent years because of their potential to relieve traffic congestion and improve road safety while being more energy-efficient. In this chapter, Otonobil, the first UGV of Turkey, is introduced, with emphasis on its hardware and software architecture, its perception capabilities, and the decision algorithms used for obstacle avoidance and autonomous goal-oriented docking. Otonobil features a novel heuristic algorithm for avoiding dynamic obstacles, and the vehicle serves as an open test rig for studying intelligent-vehicle technologies such as steer-by-wire, intelligent traction control, and artificial intelligence algorithms for operating in real traffic conditions.

1 Introduction

The unmanned ground vehicle (UGV) Otonobil¹ (Fig. 4.1) is essentially a small urban-concept electric vehicle (EV) that has been converted for autonomous operation by modifying its driver–vehicle interfaces. The hardware conversion is divided into two main parts: mechanical and electrical modifications. The electrical modifications comprise the interface circuit, interface software, additional power system, sensor selection, and computer hardware. The multisensor perception capabilities of the UGV are described in Sect. 4.2, and the decision algorithms that constitute the car's artificial intelligence are described in Sect. 4.3.

Fig. 4.1 Unmanned ground vehicle Otonobil at Istanbul Technical University, Turkey [1]

For autonomous operation, the mechanical modifications are made mainly to the brake and steering systems. Two separate external servo motors, each with a gearbox, actuate the steering column and the brake pedal according to commands sent by the onboard main computer. The modified steering system is shown in Fig. 4.2.

Fig. 4.2 Automatic steering system of Otonobil [2]

Additional mechanical modifications consist of extra components, such as a top-mounted unit carrying the GPS, IMU, and RF unit, and a cage in front of the car supporting the LIDAR units.

2 Multisensor Data Acquisition, Processing, and Sensor Fusion

In this section, the multisensor platform of the UGV is examined together with its data processing and sensor fusion strategies and algorithms. The system architecture is a particularly important design consideration, because the real-time performance of the applications depends heavily on the communication scheme and the overall architecture, for example a distributed versus a centralized structure and the communication protocols used. Another aspect of the multisensor platform is that it can be used both in data acquisition/logging mode and in real-time processing mode.

2.1 System Architecture

The sensors in Otonobil serve mainly localization and state estimation purposes. They are listed in Table 4.1.

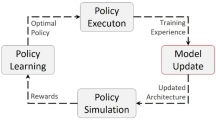

The full system, with its connection schematics and communication paths, is shown in Fig. 4.3. The main computational components are an NI PXI system used for localization, mapping, and path planning; a dSPACE MicroAutoBox used for local trajectory planning and tracking (including low-level control of steering and braking) and for wireless communication; and an IBEO ECU that provides object-level and raw data on the obstacles in front of the car. The corresponding processing hardware and software structure is given in Fig. 4.4. In this structure, several software modules run at their own cycle times, and their results are finally sent to the local controller that operates the vehicle. These modules are mainly image processing, LIDAR processing, vehicle state estimation, local mapping, motion planning, local trajectory planning, trajectory tracking, and wireless communication.
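A minimal Python sketch of this multi-rate arrangement follows. It assumes a single base tick and runs each module whenever the clock reaches a multiple of that module's cycle time, keeping only the latest result of each module for the local controller to read. The class and function names, the module names, and the cycle times are hypothetical illustrations; in Otonobil the modules are distributed over the NI PXI, the MicroAutoBox, and the IBEO ECU rather than run in one loop.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class PeriodicTask:
    name: str
    period_ms: int                   # hypothetical cycle time of the module
    step: Callable[[int], object]    # computes a result from the current time [ms]


def run_multirate(tasks: List[PeriodicTask], base_tick_ms: int,
                  duration_ms: int) -> Tuple[Dict[str, object], Dict[str, int]]:
    """Run every task whose period divides the current time.

    Returns the latest result of each task (what the local controller reads)
    and the number of times each task executed.
    """
    for t in tasks:
        if t.period_ms % base_tick_ms != 0:
            raise ValueError(f"{t.name}: period must be a multiple of the base tick")
    latest: Dict[str, object] = {}
    runs: Dict[str, int] = {t.name: 0 for t in tasks}
    for now in range(0, duration_ms, base_tick_ms):
        for t in tasks:
            if now % t.period_ms == 0:
                latest[t.name] = t.step(now)  # overwrite: only the newest result is kept
                runs[t.name] += 1
        # A local controller would read `latest` here on every base tick.
    return latest, runs
```

With a 10 ms base tick over 200 ms, a 10 ms state estimator runs 20 times, a 20 ms LIDAR module 10 times, and a 100 ms image-processing module twice, and the controller always sees each module's most recent output.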

Fig. 4.4 Processing hardware and software structure of Otonobil [2]

For the digital signal processing applications in Otonobil, an accurate, synchronized dataset logged in real time is essential. Such a dataset makes it possible to run the implemented algorithms offline in simulation and to observe their performance and errors; without it, the vehicle dynamics and kinematics could not be simulated precisely. Sensor acquisition, processing, and logging are performed in the LabVIEW Real-Time module, because these tasks require deterministic real-time performance that general-purpose operating systems cannot guarantee. Since data acquisition and data logging run in different loops, data cannot be passed between them by wiring and must instead be transferred through global variables. However, global variables bypass LabVIEW's strict dataflow structure and can therefore cause race conditions. To avoid this, a functional global variable (FGV) or semaphores can be used. In Otonobil, after the data are acquired and processed, they are transferred via an FGV to the data-logging loop, which runs in parallel with the acquisition and processing loop [3].
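LabVIEW programs are graphical, so the FGV pattern cannot be shown directly as text. The following Python sketch is an analogy, not the Otonobil code: a lock stands in for the non-reentrancy that serializes calls to an FGV, an acquisition loop publishes processed samples through it, and a logging thread running in parallel reads them. The `FunctionalGlobal` class, its "set"/"get" actions, and the sample fields are hypothetical.

```python
import threading
import time
from typing import Any, List, Tuple


class FunctionalGlobal:
    """Textual analogue of a LabVIEW functional global variable (FGV).

    A LabVIEW FGV is a non-reentrant VI that keeps its data in an uninitialized
    shift register, so calls execute one at a time. Here a lock serializes the
    calls instead, and a sequence number lets readers tell fresh data from data
    they have already seen.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value: Any = None
        self._seq = 0

    def call(self, action: str, value: Any = None) -> Tuple[int, Any]:
        with self._lock:  # only one caller at a time, like a non-reentrant VI
            if action == "set":
                self._value = value
                self._seq += 1
            elif action != "get":
                raise ValueError(f"unknown action: {action!r}")
            return self._seq, self._value


def acquisition_loop(fgv: FunctionalGlobal, n_samples: int) -> None:
    """Producer: acquire and process samples, then publish each one via the FGV."""
    for i in range(n_samples):
        sample = {"t": i, "speed": 0.1 * i}  # stand-in for processed sensor data
        fgv.call("set", sample)


def logging_loop(fgv: FunctionalGlobal, stop: threading.Event, log: List[dict]) -> None:
    """Consumer running in parallel: log every sample it has not seen yet."""
    last_seq = 0
    while True:
        stopping = stop.is_set()  # read the flag before reading the data
        seq, sample = fgv.call("get")
        if seq != last_seq:
            log.append(sample)
            last_seq = seq
        elif stopping:
            break  # producer has finished and its final sample is already logged
        else:
            time.sleep(0.001)


def run_demo(n_samples: int = 1000) -> List[dict]:
    """Run acquisition in the main thread and logging in a parallel thread."""
    fgv = FunctionalGlobal()
    log: List[dict] = []
    stop = threading.Event()
    logger = threading.Thread(target=logging_loop, args=(fgv, stop, log))
    logger.start()
    acquisition_loop(fgv, n_samples)
    stop.set()
    logger.join()
    return log
```

Because an FGV of this kind holds only the most recent value, the logging loop in this sketch may skip samples when acquisition runs faster than logging; where every sample must be logged, a queue between the two loops is the usual alternative.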

2.2 Sensor Fusion and Mapping

Sensor fusion and mapping algorithms are crucial in the design of a UGV, because all perception and decision making depend on their results. Path planning, for example, requires an explicit map to be constructed. For intelligent vehicles, onboard obstacle detection is essential: obstacles must be detected correctly and quickly, because they may be dynamic, rapidly changing their positions and velocities relative to the UGV. Here, a sample obstacle detection algorithm using a laser scanner, camera, GPS, gyro, and compass is described. First, the vehicle is modeled as a platform with multiple sensors and embedded in a simulation program (Webots) to simulate real driving scenarios, as shown in Fig. 4.5.

Because none of the UGV's sensors alone is adequate for precisely measuring obstacle locations, a Bayesian inference method that combines their measurements is used to detect and track the obstacles. Simulation results in Fig. 4.6 show the obstacle map being learned with different numbers of sensors.
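The chapter does not spell out the inference model. A common way to combine several imperfect sensors is the binary Bayes filter on an occupancy grid in log-odds form, and the sketch below shows that technique under two assumptions of ours: each sensor supplies an inverse-sensor-model probability P(occupied | reading) for every cell, and the readings are conditionally independent given the cell state. It is not necessarily the formulation behind Fig. 4.6, and the function names are hypothetical.

```python
import math
from typing import Iterable, List


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("probabilities must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def fuse_cell(prior: float, detections: Iterable[float]) -> float:
    """Posterior occupancy of one cell after several independent readings.

    Each reading adds its log-odds and subtracts the prior log-odds once,
    which is the standard binary Bayes filter update.
    """
    l0 = logit(prior)
    l = l0
    for p in detections:
        l += logit(p) - l0
    return 1.0 / (1.0 + math.exp(-l))


def fuse_grid(prior: float, sensor_maps: List[List[List[float]]]) -> List[List[float]]:
    """Fuse per-sensor occupancy maps (same shape) cell by cell."""
    rows, cols = len(sensor_maps[0]), len(sensor_maps[0][0])
    return [[fuse_cell(prior, [m[r][c] for m in sensor_maps]) for c in range(cols)]
            for r in range(rows)]
```

With a neutral prior of 0.5, two sensors that each report 0.7 for a cell raise its occupancy to 49/58 ≈ 0.845, while one sensor at 0.7 and another at 0.3 cancel out; agreement between more sensors therefore sharpens the map.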

2.3 Localization and Navigation

Navigation is a fundamental capability of a UGV. Using information from various sensors, a UGV should be able to determine its kinematic states, plan paths, and calculate the maneuvers needed to move between desired locations. Achieving this with the required reliability makes multisensor data fusion essential. For this purpose, Otonobil is equipped with an IMU, GPS, a motor encoder, a digital compass, and an optical speed sensor, whose information is fused using the extended Kalman filter (EKF) algorithm shown in Fig. 4.7. In addition, a stand-alone orientation estimation algorithm is proposed to accurately transform quantities measured in the vehicle's body frame into the navigation frame. Full details of this work are given in [4].
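To make the fusion step concrete, the following is a minimal EKF sketch in Python with NumPy. It assumes a planar kinematic (unicycle) model with state [x, y, ψ], the speed sensor or motor encoder supplying the speed v, the IMU gyro supplying the yaw rate ω, GPS measuring position in a local metric frame, and the compass measuring heading. The state vector, noise values, and update order are illustrative assumptions; the filter actually used in Otonobil is the one in Fig. 4.7 and [4].

```python
import numpy as np


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi


class VehicleEKF:
    """EKF with state [x, y, psi]: position in a local metric frame and heading."""

    def __init__(self, x0, P0, Q, R_gps, R_compass):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.asarray(P0, dtype=float)
        self.Q = np.asarray(Q, dtype=float)
        self.R_gps = np.asarray(R_gps, dtype=float)
        self.R_compass = np.asarray(R_compass, dtype=float)

    def predict(self, v: float, omega: float, dt: float) -> None:
        """Propagate with speed v (speed sensor/encoder) and yaw rate omega (gyro)."""
        px, py, psi = self.x
        self.x = np.array([px + v * dt * np.cos(psi),
                           py + v * dt * np.sin(psi),
                           wrap_angle(psi + omega * dt)])
        F = np.array([[1.0, 0.0, -v * dt * np.sin(psi)],  # Jacobian of the motion model
                      [0.0, 1.0, v * dt * np.cos(psi)],
                      [0.0, 0.0, 1.0]])
        self.P = F @ self.P @ F.T + self.Q

    def _update(self, z, H, R, heading_row=None) -> None:
        y = z - H @ self.x                       # innovation
        if heading_row is not None:
            y[heading_row] = wrap_angle(y[heading_row])
        S = H @ self.P @ H.T + R
        K = self.P @ H.T @ np.linalg.inv(S)      # Kalman gain
        self.x = self.x + K @ y
        self.x[2] = wrap_angle(self.x[2])
        self.P = (np.eye(3) - K @ H) @ self.P

    def update_gps(self, xy) -> None:
        H = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
        self._update(np.asarray(xy, dtype=float), H, self.R_gps)

    def update_compass(self, psi_meas: float) -> None:
        H = np.array([[0.0, 0.0, 1.0]])
        self._update(np.array([psi_meas], dtype=float), H, self.R_compass, heading_row=0)
```

Each sensor enters through its own measurement model, so sensors with different rates can be fused simply by calling the matching update whenever a reading arrives.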

3 Dynamic Obstacle Avoidance

In the Otonobil project, a novel obstacle avoidance method called "follow the gap" was designed and tested in real scenarios. The method is easy to tune and takes into account practical constraints of real vehicles, such as the limited field of view of the sensors and nonholonomic motion constraints. A brief explanation of the method and its experimental results is given here; full details can be found in [5].

3.1 “Follow the Gap” Method

The method models both the UGV and the obstacles as circles, each with the smallest diameter that covers the full physical extent of the real object. The "follow the gap" method is based on constructing a gap array around the vehicle and calculating the best heading angle, which steers the robot toward the center of the maximum gap while also taking the goal point into account. The algorithm can be divided into three main parts, as illustrated in Fig. 4.8.
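The construction of the gap array and the selection of the maximum gap can be sketched as follows, under simplifying assumptions that are ours rather than the chapter's: each obstacle is given by the distance to its center, its bearing in degrees (positive to the left of the heading), and its radius; each obstacle circle is enlarged by the robot radius so that the vehicle can be treated as a point; and the free angular intervals inside the sensor field of view are the gaps. The function names and the tuple layout are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Obstacle = Tuple[float, float, float]  # (distance to center [m], bearing [deg], radius [m])


def occupied_intervals(obstacles: List[Obstacle], robot_radius: float) -> List[Tuple[float, float]]:
    """Angular interval [deg] blocked by each obstacle enlarged by the robot radius."""
    intervals = []
    for d, bearing, r in obstacles:
        if d <= 0.0:
            raise ValueError("obstacle distance must be positive")
        ratio = min(1.0, (r + robot_radius) / d)  # 1.0 means the circles already touch
        half = math.degrees(math.asin(ratio))
        intervals.append((bearing - half, bearing + half))
    return intervals


def max_gap(obstacles: List[Obstacle], fov_deg: float,
            robot_radius: float) -> Optional[Tuple[float, float]]:
    """Return (start_deg, end_deg) of the widest free gap in the field of view, or None."""
    lo, hi = -fov_deg / 2.0, fov_deg / 2.0
    gaps = []
    cursor = lo  # right-most angle not yet known to be blocked
    for a, b in sorted(occupied_intervals(obstacles, robot_radius)):
        if b <= lo or a >= hi:
            continue  # interval lies entirely outside the field of view
        if a > cursor:
            gaps.append((cursor, a))  # free space between previous blockage and this one
        cursor = max(cursor, b)
    if cursor < hi:
        gaps.append((cursor, hi))
    return max(gaps, key=lambda g: g[1] - g[0]) if gaps else None
```

For example, with a 150° field of view and a single obstacle 5 m ahead at a bearing of 20°, both radii being 0.5 m, the obstacle blocks roughly 8.5° to 31.5°, and the widest gap is the one on the right, from −75° to about 8.5°.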

The maximum gap, the gap center angle, and the goal angle are illustrated in Fig. 4.9.

The gap center angle is calculated from measurable parameters using trigonometric relations, as given in (4.1).

In (4.1), ϕ_gap_c is the gap center angle, d_1 and d_2 are the distances to the obstacles bounding the maximum gap, and ϕ_1 and ϕ_2 are the angles of those obstacles.
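The form of the gap center angle follows from the geometry of Fig. 4.9. The derivation below gives one expression consistent with the definitions above, assuming that ϕ_1 is measured clockwise and ϕ_2 counterclockwise from the vehicle heading (so the two gap borders are ϕ_1 + ϕ_2 apart), that angles are positive counterclockwise, and that the gap center is the direction of the midpoint of the segment joining the two borders; [5] gives the authors' original formulation.

```latex
\begin{aligned}
\mathbf{p}_1 &= d_1\,(\cos\phi_1,\,-\sin\phi_1), \qquad
\mathbf{p}_2 = d_2\,(\cos\phi_2,\,\sin\phi_2),\\[4pt]
\cos\gamma &= \frac{\mathbf{p}_1\cdot(\mathbf{p}_1+\mathbf{p}_2)}{\lVert\mathbf{p}_1\rVert\,\lVert\mathbf{p}_1+\mathbf{p}_2\rVert}
 = \frac{d_1 + d_2\cos(\phi_1+\phi_2)}{\sqrt{d_1^2 + d_2^2 + 2\,d_1 d_2\cos(\phi_1+\phi_2)}},\\[4pt]
\phi_{\mathrm{gap\_c}} &= \gamma - \phi_1
 = \arccos\!\left(\frac{d_1 + d_2\cos(\phi_1+\phi_2)}{\sqrt{d_1^2 + d_2^2 + 2\,d_1 d_2\cos(\phi_1+\phi_2)}}\right) - \phi_1 .
\end{aligned}
```

Here γ is the angle between the right-hand border and the midpoint direction; the expression is valid while ϕ_1 + ϕ_2 < 180°, which holds whenever both borders lie within a 150° field of view. For a symmetric gap (d_1 = d_2, ϕ_1 = ϕ_2), it reduces to ϕ_gap_c = 0, i.e., straight ahead.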

The final heading angle of the vehicle is computed from both the gap center angle and the goal angle, using the fusion function given in (4.2).

In (4.2), ϕ_goal is the goal angle; α is the weight coefficient for the gap; β is the weight coefficient for the goal; n is the number of obstacles; d_n is the distance to the n-th obstacle; and d_min is the minimum of the d_n values.
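The two steps, gap center angle and fusion, can be sketched in Python. The gap center angle uses the midpoint-direction geometry with ϕ_1 measured clockwise and ϕ_2 counterclockwise from the heading. The fusion rule below is an assumption consistent with the variables listed for (4.2): ϕ_final = ((α/d_min)·ϕ_gap_c + β·ϕ_goal) / (α/d_min + β), with d_min = min(d_n); see [5] for the original. The default α = 20 is the value used in the experiments, while β = 1 and the function names are assumptions. Angles are in radians, positive counterclockwise.

```python
import math
from typing import Sequence


def gap_center_angle(d1: float, d2: float, phi1: float, phi2: float) -> float:
    """Gap center angle [rad] from the two gap borders.

    phi1: angle of the right border, measured clockwise from the heading.
    phi2: angle of the left border, measured counterclockwise from the heading.
    """
    c = math.cos(phi1 + phi2)
    ratio = (d1 + d2 * c) / math.sqrt(d1 * d1 + d2 * d2 + 2.0 * d1 * d2 * c)
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding just outside [-1, 1]
    return math.acos(ratio) - phi1


def final_heading(phi_gap_c: float, phi_goal: float, distances: Sequence[float],
                  alpha: float = 20.0, beta: float = 1.0) -> float:
    """Weighted fusion of gap and goal angles; the gap weight grows as obstacles get closer."""
    d_min = min(distances)
    w_gap = alpha / d_min
    return (w_gap * phi_gap_c + beta * phi_goal) / (w_gap + beta)
```

Because the gap weight is α/d_min, the vehicle follows the gap closely when the nearest obstacle is near and turns increasingly toward the goal as the surroundings clear; a larger α makes it more gap-oriented at any given distance.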

The value of α sets the balance between gap-oriented and goal-oriented behavior of the vehicle.

3.2 Experimental Results

The obstacle avoidance algorithm based on the "follow the gap" method is implemented in C, and the real-time code runs on the MicroAutoBox hardware. The only tuning parameter, α, is set to 20 in the experimental tests, as in the simulations. The LIDAR has a 150° field of view, and its measurement range is restricted to 10 m. The first test configuration consists of seven static obstacles and a goal point. In the second test scenario, the "follow the gap" method is tested with dynamic obstacles. The results of the dynamic obstacle tests are shown in Fig. 4.10 as a 3D graph of the time-dependent obstacle locations and their trajectories as perceived by the vehicle.
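Before gaps are computed, the raw LIDAR scan has to be limited to the sensor's usable region. The sketch below applies the 150° field of view and 10 m range stated above and converts the remaining returns to Cartesian points in the vehicle frame (x forward, y to the left). It is written in Python for readability, whereas the experimental code is in C on the MicroAutoBox; the function name, the angle convention, and the treatment of zero ranges as invalid returns are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def preprocess_scan(ranges: Sequence[float], angles_deg: Sequence[float],
                    fov_deg: float = 150.0,
                    max_range_m: float = 10.0) -> List[Tuple[float, float]]:
    """Keep returns inside the field of view and range limit; return (x, y) points [m]."""
    half_fov = fov_deg / 2.0
    points = []
    for r, a in zip(ranges, angles_deg):
        # NaN fails both comparisons and is dropped; 0 is treated as "no return".
        if abs(a) <= half_fov and 0.0 < r <= max_range_m:
            t = math.radians(a)
            points.append((r * math.cos(t), r * math.sin(t)))
    return points
```

Returns beyond 10 m or outside ±75° are discarded, so distant or peripheral objects do not create spurious obstacles in the gap array.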

4 Conclusions

A UGV named Otonobil has been introduced in this chapter, with details of its mechatronic design, perception, and decision algorithms. First, the modifications made to convert an urban EV for autonomous operation were described briefly. Then, the multisensor structure with several processing units was presented to emphasize the data acquisition and real-time processing needs of such applications. Finally, a novel dynamic obstacle avoidance algorithm developed for Otonobil was explained briefly. In future work, the vehicle will be used in similar research projects in the fields of active vehicle safety and intelligent vehicles.

Notes

1. Otonobil is one of the major projects at the Mechatronics Research and Education Centre of Istanbul Technical University and has been supported by the Turkish Ministry of Development since 2008.

References

[1] V. Sezer, C. Dikilitas, Z. Ercan, H. Heceoglu, P. Boyraz, M. Gökaşan, "Hardware and Software Structure of Unmanned Ground Vehicle Otonobil," 5th Biennial Workshop on DSP for In-Vehicle Systems, Kiel, Germany, 2011.

[2] V. Sezer, C. Dikilitas, Z. Ercan, H. Heceoglu, A. Öner, A. Apak, M. Gökasan, A. Mugan, "Conversion of a Conventional Electric Automobile into an Unmanned Ground Vehicle (UGV)," IEEE International Conference on Mechatronics, pp. 564–569, Istanbul, Turkey, 13–15 April 2011.

[3] Z. Ercan, V. Sezer, C. Dikilitas, H. Heceoglu, P. Boyraz, M. Gökaşan, "Multi-sensor Data Acquisition, Processing and Logging using LabVIEW," 5th Biennial Workshop on DSP for In-Vehicle Systems, Kiel, Germany, 2011.

[4] Z. Ercan, V. Sezer, H. Heceoglu, C. Dikilitas, M. Gökasan, A. Mugan, S. Bogosyan, "Multi-sensor Data Fusion of DCM Based Orientation Estimation for Land Vehicles," IEEE International Conference on Mechatronics, pp. 672–677, Istanbul, Turkey, 13–15 April 2011.

[5] V. Sezer, M. Gokasan, "A Novel Obstacle Avoidance Algorithm: 'Follow the Gap Method'," Robotics and Autonomous Systems, vol. 60, no. 9, pp. 1123–1134, 2012.

Acknowledgement

The researchers in Otonobil project would like to thank Prof. Ata Muğan, Director of Mechatronics Education and Research Center, for his invaluable support and constant encouragement during this project.

Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Sezer, V. et al. (2014). Unmanned Ground Vehicle Otonobil: Design, Perception, and Decision Algorithms. In: Schmidt, G., Abut, H., Takeda, K., Hansen, J. (eds) Smart Mobile In-Vehicle Systems. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-9120-0_4

DOI: https://doi.org/10.1007/978-1-4614-9120-0_4

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-9119-4

Online ISBN: 978-1-4614-9120-0

eBook Packages: Engineering (R0)