Abstract

Successful quantitative information extraction and the generation of useful products from hyperspectral imagery (HSI) require the use of computers. Though HSI data sets are stacks of images and may be viewed as images by analysts, harnessing the full power of HSI requires working primarily in the spectral domain. Algorithms with a broad range of sophistication and complexity are required to sift through the immense quantity of spectral signatures comprising even a single modestly sized HSI data set. The discussion in this chapter will focus on the analysis process that generally applies to all HSI data and discuss the methods, approaches, and computational issues associated with analyzing hyperspectral imagery data.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

Successful quantitative information extraction and the generation of useful products from hyperspectral imagery (HSI) require the use of computers. Though HSI data sets are stacks of images and may be viewed as images by analysts (‘literal’ analysis), harnessing the full power of HSI requires working primarily in the spectral domain. And though individual spectral signatures are recognizable, knowable, and interpretable,Footnote 1 algorithms with a broad range of sophistication and complexity are required to sift through the immense quantity of spectral signatures comprising even a single modestly sized HSI data set and to extract information leading to the formation of useful products (‘nonliteral’ analysis).

But first, what is HSI and why acquire and use it? Hyperspectral remote sensing is the collection of hundreds of images of a scene over a wide range of wavelengths in the visible (∼0.40 micrometers or μm) to longwave infrared (LWIR, ∼14.0 μm) region of the electromagnetic spectrum. Each image or band samples a small wavelength interval. The images are acquired simultaneously and are thus coregistered with one another forming a stack or image cube. The majority of hyperspectral images (HSI) are from regions of the spectrum that are outside the range of human vision which is ∼0.40 to ∼0.70 μm. Each HSI image results from the interaction of photons of light with matter: materials reflect (or scatter), absorb, and/or transmit electromagnetic radiation (see, e.g., Hecht 1987; Hapke 1993; Solé et al. 2005; Schaepman-Strub et al. 2006; and Eismann 2012, for detailed discussions of these topics fundamental to HSI). Absorbed energy is later emitted (and at longer wavelengths—as, e.g., thermal emission). The light energy which is received by the sensor forms the imagery. Highly reflecting materials form bright objects in a band or image; absorbing materials (from which less light is reflected) form darker image patches. Ultimately, HSI sensors detect the radiation reflected (or scattered) from objects and materials; those materials that mostly absorb light (and appear dark) are also reflecting (or scattering) some photons back to the sensor. Most HSI sensors are passive; they only record reflected (or scattered) photons of sunlight or photons self-emitted by the materials in a scene; they do not provide their own illumination as is done by, e.g., lidar or radar systems. HSI is an extension of multispectral imagery remote sensing (MSI; see, e.g., Jensen 2007; Campbell 2007; Landgrebe 2003; Richards and Jia 1999). MSI is the collection of tens of bands of the electromagnetic spectrum. Individual MSI bands or images sample the spectrum over larger wavelength intervals than do individual HSI images.

The discussion in this chapter will focus on the analysis process beginning with the best possible calibrated at-aperture radiance data. Collection managers/data consumers/end users are advised to be cognizant of the various figures of merit (FOM) that attempt to provide some measure of data quality; e.g., noise equivalent spectral radiance (NESR), noise equivalent change of reflectance (NEΔρ), noise equivalent change of temperature (NEΔT), and noise equivalent change of emissivity (NEΔε).

What we will discuss generally applies, at some level, to all HSI data: visible/ near-infrared (VNIR) through LWIR. There are procedures that are applied to the midwave infrared (MWIR) and LWIRFootnote 2 that are not applied to VNIR/shortwave infrared (SWIR); e.g., temperature/emissivity separation (TES). Atmospheric compensation (AC) for thermal infrared (TIR) spectral image data is different (and, for the MWIR,Footnote 3 arguably more complicated) than for the VNIR/SWIR. But such differences notwithstanding, the bulk of the information extraction algorithms and methods (e.g., material detection and identification; material mapping)—particularly after AC—apply across the full spectral range from 0.4 μm (signifying the lower end of the visible) to 14 μm (signifying the upper end of the LWIR).

What we won’t discuss (and which require computational resources): all the processes that get the data to the best possible calibrated at-aperture radiance; optical distortion correction (e.g., spectral smile); bad/intermittent pixel correction; saturated pixel(s) masking; “NaN” pixel value masking; etc.

Also, we will not rehash the derivation of algorithm equations; we’ll provide the equations, a description of the terms, brief descriptions that will give the needed context for the scope of this chapter, and one or more references in which the reader will find significantly more detail.

2 Computation for HSI Data Analysis

2.1 The Only Way to Achieve Success in HSI Data Analysis

No amount of computational resources can substitute for practical knowledge of the remote sensing scenario (or problem) for which spectral image (i.e., HSI) data have been acquired. Successful HSI analysis and exploitation are based on the application of several specialized algorithms deeply informed by a detailed understanding of the physical, chemical, and radiative transfer (RT) processes of the scenario for which the imaging spectroscopy data are acquired. Thus, the astute remote sensing data analyst will seek the input of a subject matter expert (SME) knowledgeable of the materials, objects, and events captured in the HSI data. The analyst, culling as many remote sensing and geospatial data sources as possible (e.g., other forms of remote sensing imagery; digital elevation data) should work collaboratively with the SME (who is also culling as many subject matter information sources as possible) through much of the remote sensing exploitation flow―each informing the other about analysis strategies, topics for additional research, and materials/objects/events to be searched for in the data. It behooves the analyst to be a SME; remote sensing is, after all, a tool; one of many today's multi-disciplinary professional should bring to bear on a problem or a question of scientific, technical, or engineering interest.

It s important to state again, no amount of computational resources can substitute for practical knowledge of the problem and its setting for which HSI data have been acquired. Even with today’s desktop computational resources such as multi-core central processing units (CPUs) and graphics processing units (GPUs), brute force attempts to process HSI data without specific subject matter expertise simply lead to poor results faster. Stated alternatively, computational resources should never be considered a substitute (or proxy) for subject matter expertise. With these caveats in mind, let’s now proceed to discussing the role of computation in HSI data analysis and exploitation.

2.2 When Computation Is Needed

2.2.1 The General HSI Data Analysis Flow

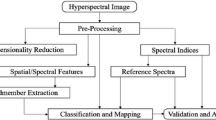

The general HSI data analysis flow is shown in Fig. 1. We will begin our discussion with ‘Look At/Inspect the Data’ (indicated by small arrow in top box). The flow chart from this box downwards is essentially the outline for the bulk of this chapter. The flow reflects the data analyst’s perspective though he/she, as a data end-user, will begin at ‘Data Ingest’ (again assuming one starts with the best possible, highest-quality, calibrated at-aperture radiance data).

Though we’ll follow the flow of Fig. 1, there is, implicitly, a higher-level clustering of the steps in the figure. This shown by the gray boxes subsuming one or more of the steps and which also form a top-down flow; they more succinctly indicate when computational resources are brought to bear in HSI data analysis. For example, ‘Data Ingest’, ‘Look At/Inspect the Data’ and ‘Atmospheric Compensation’ may perhaps logically fall into something labeled ‘Computation Before Full-Scene Data Analysis’. An example of this is the use of stepwise least-squares regression analysisFootnote 4 to select the best bands and/or combination of bands that best map one or more ground-truth parameters such as foliar chemistry derived by field sampling (and laboratory analysis) at the same time an HSI sensor was collecting (Kokaly and Clark 1999). We refer to this as ‘regression remote sensing’; there is a computational burden for the statistical analyses that generate coefficients for one (or more) equations which will then be applied to the remotely sensed HSI data set. The need for computational resources can vary widely in this phase of analysis. The entire pantheon of existing (and steady stream of new) multivariate analysis, optimization, etc., techniques for fitting and for band/band combinations selection may be utilized.

Atmospheric compensation (AC) is another example. There are numerous AC techniques that ultimately require the generation of look-up-tables (LUTs) with RT (radiative transfer) modeling. The RT models are generally tuned to the specifics of the data for which the LUTs will be applied (e.g., sensor altitude, time of day, latitude, longitude, expected ground cover materials); the LUTs may be generated prior to (or at the very beginning of) HSI data analysis.

The second gray box subsumes ‘Algorithms for Information Extraction’ and all subsequent boxes down to (and including) ‘Iteration’ (which isn’t really a process but a reminder that information extraction techniques should be applied numerous times with different settings, with different spatial and spectral subsets, with in-scene and with library signatures, different endmember/basis vector sets, etc.). This box is labeled ‘Computation During Full-Scene Data Analysis’.

The third box covers the remaining steps in the flow and is labeled ‘Computation After Full-Scene Data Analysis’. We won’t have much to say about this phase of HSI analysis beyond a few statements about the need for computational resources for geometric/orthorectification post-processing of HSI-derived results and products.

Experienced HSI practitioners may find fault with the admittedly coarse two-tier flow categorization described above. And indeed, they’d have grounds for argument. For example, a PCA may rightly fall into the first gray box ‘Computation Before Full-Scene Data Analysis’. Calculation of second order statistics for a data cube (see below) and the subsequent generation of a PC-transformed cube for use in data inspection may be accomplished early on (and automatically) in the data analysis process—and not in the middle gray box in Fig. 1. Another example is AC. AC is significantly more than the early-on generation of LUTs. There is the actual process of applying the LUT with an RT expression to the spectra comprising the HSI cube. This processing (requiring band depth mapping, LUT searching, optimization, etc.) is part of the core HSI analysis process and is not merely a ‘simple’ LUT-generation process executed early on. Other AC tools bring to bear different procedures that may also look more like ‘Computation During Full-Scene Data Analysis’ such as finding the scene endmembers (e.g., the QUAC tool; see below).

Nonetheless, a structure is needed to organize our presentation and what’s been outlined above will suffice. We will thus continue our discussion guided by the diagram in Fig. 1. Exemplar algorithms and techniques for each process will be discussed. Ground rules. (1) Acronyms will be used in the interest of space; an acronym table is provided in an appendix. (2) We will only discuss widely recognized, ‘mainstream’ algorithms and tools that have been discussed in the literature and are widely used. References are provided for the reader to find out more about any given algorithm or tool mentioned. (3) Discussions are necessarily brief. Here, too, we assume that the literature citations will serve as starting points for the reader to gather much more information on each topic. A later section lists a few key sources of information commonly used by the growing HSI community of practice.

2.2.2 Computation Before Full-Scene Data Analysis

2.2.2.1 Atmospheric Compensation (AC)

AC is the process of converting calibrated at-aperture radiance data to reflectance, ρ(λ), for the VNIR/SWIR and to ground-leaving radiance data (GLR) for the LWIR. LWIR GLR data are then converted to emissivity, ε(λ), by temperature/emissivity separation (TES).Footnote 5 Though AC is considered primarily the process for getting ρ(λ) and ε(λ), it may also be considered an inversion to obtain the atmospheric state captured in the HSI data. Much has been written about AC for HSI (and MSI). Additionally, AC borrows heavily from atmospheric science—another field with an extensive literature.

AC is accomplished via one of two general approaches. (1) In scene methods such as QUAC (Bernstein et al. 2005) or ELM, both for the VNIR/SWIR; or ISAC (Young et al. 2002) for the LWIR. (2) RT models such as MODTRAN.Footnote 6 In practice, the RT models are used in conjunction with in-scene data such as atmospheric water vapor absorption band-depth to guide LUT search for estimating transmissivity. Tools such as FLAASH (Adler-Golden et al. 2008) are GUI-driven and combine the use of MODTRAN and the interaction with the data to generate reflectance. The process is similar for the LWIR; AAC is an example of this (Gu et al. 2000). It is also possible to build a single RT model based tool to ingest LWIR at-aperture radiance data and generate emissivity that essentially eliminates (actually subsumes) the separate TES process.

In-scene AC methods span the range of computational burden/overhead from low (ELM) to moderate/high (QUAC). RT methods, however, can span the gamut from ‘simple’ LUT generation to increasing the complexity of the RT expressions and numerical analytical techniques used in the model. This is then followed by increasing the complexity of the various interpolation and optimization schemes utilized with the actual remotely sensed data to retrieve reflectance or emissivity. Here, too, when trying to match a physical measurement to modeled data, the entire pantheon of existing and emerging multivariate analysis, optimization, etc., techniques may be utilized.

In a nutshell, quite a bit of AC for HSI is RT-model driven combined with in-scene information. It should also be noted that typical HSI analysis generates one AC solution for each scene. Depending on the spatial dimensions of the scene, its expected statistical variance, or scene-content complexity, one or several solutions may be appropriate. As such, opportunities to expend computational resources utilizing a broad range of algorithmic complexity are many.

2.2.2.2 Regression Remote Sensing

Regression remote sensing was described above and is only briefly recapped here. It is exemplified by the use of stepwise least-squares regression analysis to select the best bands or combination of bands that correlate one (or more) desired parameters from the data. An example would be foliar chemistry derived by field sampling (followed by laboratory analysis) at the same time an HSI sensor was collecting. Coefficients are generally derived using the actual remotely sensed HSI data (and laboratory analyses) but may be derived using ground-truth point spectrometer data (with sampling characteristics comparable to the airborne HSI sensor; see ASD, Inc. 2012). Computation is required for the statistical analyses that generate coefficients for the model (regression) equation (e.g., an nth-degree polynomial) which will then be applied to the remotely sensed HSI data set. The need for computational resources can vary widely. Developers may draw on a large and growing inventory of techniques for multivariate analysis, optimization, etc., techniques for fitting and for feature selection. The ultimate application of the model to the actual HSI data is generally not algorithmically demanding or computationally complex.

2.2.3 Computation During Full-Scene Data Analysis

2.2.3.1 Data Exploration: PCA, MNF, and ICA

Principal components analysis (PCA), minimum noise fraction (MNF; Green et al. 1988), and independent components analysis (ICA; e.g., Comon 1994) are statistical transformations applied to multivariate data sets such as HSI. They are used to: (1) assess data quality and the presence of measurement artifacts; (2) estimate data dimensionality; (3) reduce data dimensionality (see, e.g., the ENVI® Hourglass; ITT Exelis-VIS 2012); (4) separate/highlight unique signatures within the data; and (5) inspect the data in a space different than its native wavelength-basis representation. Interesting color composite images may be built with PCA and MNF results that draw an analyst’s attention to features that would otherwise have been overlooked in the original, untransformed space. Second and higher-order statistics are estimated from the data; an eigendecomposition is applied to the covariance (or correlation) matrix. There is perhaps little frontier left in applying PCA and MNF to HSI. The algorithmic complexity and computational burden of these frequently applied processes is quite low when the appropriate computational method is chosen, such as SVD. A PCA or MNF for a moderately sized HSI data cube completes in under a minute on a typical desktop CPU. ICA is different; it is still an active area of research. Computational burden is very high; on an average workstation, an ICA for a moderately sized HSI data cube could take several hours to complete—depending on the details of the specific implementation of ICA being applied—and data volume.

The second-order statistics (e.g., the covariance matrix and its eigenvectors and eigenvalues) generated by a PCA or an MNF may be used by directed material search algorithms (see below). Thus, these transformations may be applied early on for data inspection/assessment and to generate information that will be used later in the analysis flow.

2.2.3.2 HSI Scene Segmentation/Classification

2.2.3.2.1 HSI Is to MSI as Spectral Mixture Analysis (SMA) Is to ‘Traditional’ MSI Classification

Based on traditional analysis of MSI, it has become customary to classify spectral image data—all types. Traditional scene classification as described in, e.g., Richards and Jia (1999) and Lillesand et al. (2008), is indeed possible with HSI but with caveat. (1) Some of the traditional supervised and unsupervised MSI classification algorithms are unable to take full advantage of the increased information content inherent in the very high dimensional, signature-rich HSI data. They report diminishing returns in terms of classification accuracy after some number of features (bands) is exceeded—absorbing computation time but providing no additional benefit.Footnote 7 (2) For HSI, it is better to use tools based on spectral mixture analysis (SMA; see, e.g., Adams et al. 1993).Footnote 8 SMA attempts to unravel and identify spectral signature information from two or more materials captured in the ground resolution cell that yields a pixel in an HSI data cube. The key to successful application of SMA and/or an SMA-variant is the selection of endmembers. And indeed, this aspect of the problem is the one that has received, in our opinion, the deepest, most creative, and most interesting thinking over the last two decades. Techniques include (but certainly not limited to) PPI (Boardman et al. 1995), N-FINDR (Winter 1999), SMACC (Gruninger et al. 2004; ITT Exelis-VIS 2011), MESMA/Viper Tools (Roberts et al. 1998), and AutoMCU (Asner and Lobell 2000). The need for computational resources varies widely based on the endmember selection method. (3) If you insist on utilizing heritage MSI methods (for which the need for computation also varies according to method utilized), we suggest that you do so to the full range HSI data set, and then repeat with successively smaller spectral subsets and compare results. Indeed, consider simulating numerous MSI sensor data sets with HSI by resampling the HSI down to MSI using the MSI systems’ bandpass/spectral response functions. More directly, simulate an MSI data set using best band selection (e.g., Keshava 2004) based on the signature(s) of the class(es) to be mapped. Some best band selection approaches have tended to be computationally intensive, though not all. Best band selection is a continuing opportunity for the role of computation in spectral image analysis.

Additional opportunities for computation include combining spectral- and object-based scene segmentation/classification by exploiting the high spatial resolution content of ground-based HSI sensors.

2.2.3.3 Directed Material Search

The distinction between HSI and MSI is starkest when considering directed material searching. The higher spectral resolution of HSI, the generation of a spectral signature, the resolution of spectral features, facilitates directed searching for specific materials that may only occur in a few or even one pixel (or even be subpixel in abundance within those pixels). HSI is best suited for searching for—and mapping of—specific materials and this activity is perhaps the most common use of HSI. There is a relationship with traditional MSI scene classification, but there are very important distinctions and a point of departure from MSI to HSI. Traditional classification is indeed material mapping but a family of more capable algorithms can take more advantage of the much higher information content inherent in an HSI spectrum.Footnote 9 The following sections describe the various algorithms.

2.2.3.3.1 Whole Pixel Matching: Spectral Angle and Euclidean Distance

Whole or single pixel matching is the comparison of two spectra. It is a fundamental HSI function; it is fundamental to material identification: the process of matching a remotely sensed spectrum with a spectrum of a known material (generally computer-assisted but also by visual recognition). The two most common methods to accomplish this are spectral angle (θ) mapping (SAM) and minimum Euclidean distance (MED).Footnote 10 Note from the numerator of Eq. 1 that the core of SAM is a dot (or inner) product between two spectra, s1 and s2 (the denominator is a product of vector magnitudes); MED is the Pythagorean theorem in n-dimensional space. There are many other metrics; many other ways to quantify distance or proximity between two points in n-dimensional space, but SAM and MED are the most common and their mathematical structure underpins the more sophisticated and capable statistical signal processing based algorithms.

Whole pixel, in the present context, refers to the process of matching two spectral signatures; a relatively unsophisticated, simple (but powerful) operation. Ancillary information, such as global second-order statistics or some other estimate of background clutter is not utilized (but is in other techniques; see below). Thus, subpixel occurrences of the material being sought may be missed.

There is little algorithmic or computational complexity required for these fundamental operations—even if combined with statistical testing (e.g., the t-test in CCSM of van der Meer and Bakker 1997).

Often, a collection of pixels (spectra) from an HSI data set is assumed to represent the same material (e.g., the soil of an exposed extent of ground). These spectra will not be identical to each other; there will be a range of reflectance values within each band; this variation is physically/chemically real and not due to measurement error. Similarly, rarely is there a single ‘library’ or ‘truth’ spectral signature for a given material (gases within normal earth surface temperature and pressure ranges being the notable exception). Compositional and textural variability and complexity dictate that a suite of spectra best characterizes any given substance. This is also the underlying concept to selecting training areas in MSI for scene segmentation with, e.g., maximum likelihood classification (MLC). Thus, when calculating distance, it is sometimes best to use metrics that incorporate statistics (as MLC does). The statistics attempt to capture the shape of the cloud of points in hyperspace and use this in estimating distance—usually between two such clouds. Two examples are the Jeffries-Matusita (JM) distance and transformed divergence (TD). The reader is referred to Richards and Jia (1999) and Landgrebe (2003) for more on the JM and TD metrics and other distance metrics incorporating statistics. Generally speaking, such metrics require the generation and inversion of covariance matrices. The use of such distance metrics is relatively rare in HSI analysis; they are more commonly applied in MSI analysis.

2.2.3.3.2 Statistical Signal Processing: MF and ACE

Two pillars of HSI analysis are the spectral matched filter (MF; Eq. 2)Footnote 11 and the adaptive coherence/cosine estimator (ACE; Eq. 3) algorithms (see, e.g., Stocker et al. 1990; Manolakis et al. 2003; Manolakis 2005). In Eqs. 2 and 3, μ is the global mean spectrum, t is the desired/sought target spectrum, x is a pixel from the HSI data, and Γ is the covariance matrix (and thus Γ−1 is the matrix inverse). MF and ACE are statistical signal processing based methods that use the data’s second order statistics (i.e., covariance or correlation matrices) calculated either globally or adaptively. In some sense, they are a culmination of the basic spectral image analysis concepts and methods discussed up to this point. They incorporate the Mahalanobis distance (which is related to the Euclidean distance) and spectral angle, and they effectively deal with mixed pixels. They are easily described (and derived) mathematically and are analytically and computationally tractable. They operate quickly and require minimal analyst interaction. They execute best what HSI does best: directed material search. Perhaps their only downside is that they work best when the target material of interest does not constitute a significant fraction of the scene thus skewing the data statistics upon which they are based (a phenomenon sometimes called ‘target leakage’). But even here, at least for the MF, some work-arounds such as reduced rank inversion of the covariance matrix can alleviate this effect (e.g., Resmini et al. 1997). Excellent discussions are provided in Manolakis et al. (2003), Chang (2003), and Schott (2007).Footnote 12

2.2.3.3.3 Spectral Signature Parameterization (Wavelets, Derivative Spectroscopy, SSA, ln(ρ))

HSI algorithms (e.g., SAM, MED, MF, ACE, SMA) may be applied, as appropriate, to radiance, reflectance, GLR, emissivity, etc., data. They may also be applied to data that have been pre-processed to, ideally, enhance desirable information while simultaneously suppressing components that do not contribute to spectral signature separation. The more common pre-processing techniques are wavelets analysis and derivative spectroscopy. Other techniques include single scattering albedo (SSA) transformation (Mustard and Pieters 1987; Resmini 1997), continuum removal, and a natural logarithm transformation of reflectance (Clark and Roush 1984).

Other pre-processing includes quantifying spectral shape such as band depth, width, and asymmetry to incorporate in subsequent matching algorithms and/or in an expert system; see, e.g., Kruse (2008).

2.2.3.3.4 Implementing the Regression Remote Sensing Equations

As mentioned above, applying the model equation, usually an nth-degree polynomial, to the HSI data is not computationally complex or algorithmically demanding. The computational resources and opportunities are invested in the generation of the regression coefficients.

2.2.3.4 Single Pixel/Superpixel Analysis

Often, pixels which break threshold following an application of ACE or MF are subjected to an additional processing step. This is often (and rightly) considered the actual material identification process but is largely driven by the desire to identify and eliminate false alarms generated by ACE and MF (and every other algorithm). Individual pixels or the average of several pixels (i.e., superpixels) which pass threshold are subjected to matching against a spectral library and, generally, quite a large library. This is most rigorously performed with generalized least squares (GLS) thus incorporating the scene second-order statistics. This processing step becomes very computationally intensive based on spectral library size and the selection of the number of spectral library signatures that may be incorporated into the solution. It is, nonetheless, a key process in the HSI analysis and exploitation flow.

2.2.3.5 Anomaly Detection (AD)

We have not said anything to this point about anomaly detection (AD). The definition of anomaly is context-dependent. E.g., a car in a forest clearing is an anomaly; the same car in an urban scene is most likely not anomalous. Nonetheless, the algorithms for AD are similar to those for directed material search; many are based on the second-order statistics (i.e., covariance matrix) calculated from the data. For example, the Mahalanobis distance, an expression with the same mathematical form as the numerator of the matched filter, is an AD algorithm. Indeed, an application of the MF (or ACE) may be viewed as AD particularly if another algorithm will be applied to the pixels that pass a user-defined threshold. The MF, in particular, is known to be sensitive to signatures that are ‘anomalous’ in addition to the signature of the material actually sought. Stated another way, the MF has a reasonably good probability of detection but a relatively high false alarm rate (depending, of course, on threshold applied to the result). This behavior motivated the development of MTMF (Boardman 1998) as well as efforts to combine the output of several algorithms such as MF and ACE. An image of residuals derived from a spectral mixture analysis will also yield anomalies.

Given the similarity of AD methods to techniques already discussed, we will say no more on this subject. The interested reader is referred to Manolakis et al. (2009), and references cited therein, for more information.

2.2.4 Error Analysis

Error propagation through the entire HSI image chain or even through an application of ACE or MF is still an area requiring additional investigation. Though target detection theory (e.g., Neyman-Pearson [NP] theory; see Tu et al. 1997) may be applied to algorithms that utilize statistics, there is a subtle distinctionFootnote 13 between algorithm performance based on target-signal to background-clutter ratio (SCR; and modifying this by using different spatial and spectral subsets with which data statistics are calculated or using other means to manipulate the data covariance matrix) and the impact of sensor noise on the fundamental ability to make a radiometric measurement; i.e., the NESR, and any additional error terms introduced by, e.g., AC (yielding the NEΔρ). NESR impacts minimum detectable quantity (MDQ) of a material, an HSI system (hardware + algorithms) FOM. An interesting assessment of the impact of signature variability on subpixel abundance estimation is given in Sabol et al. (1992) and Adams and Gillespie (2006). See also Kerekes (2008), Brown and Davis (2006), and Fawcett (2006) for detailed discussions on receiver operating characteristic (ROC) curvesFootnote 14—another mechanism used to assess HSI system performance and which also have dependencies on signature variability/target SCR and FOMs such as NESR and NEΔρ.

2.2.5 Computational Scope

Much has been said thus far about the algorithms used in HSI analysis. It is worth pausing to discuss the computational implications of hyperspectral data exploitation and the implementation of the algorithms. A typical hyperspectral imagery cube may be 1,000 lines by 500 samples by 250 bands. That is 500,000 pixels or spectra. And though most HSI sensors are 12 or 14 bit systems, the data are handled as 16-bit information. Thus this example data cube is 2 × 109 bits or 250 megabytes. Today, this data cube is small in comparison to the random access memory (RAM) available in modern computers. If read sequentially from RAM to the CPU this operation may take less than 0.04 s. But this is a naïve assessment as the number of operations that must take place, the order in which the data must be read, programming language applied, and the latencies between storage, memory, cache, and CPU must be considered. Let's take a quick look at the order of operations required for a simple hyperspectral algorithm.

Using the above data cube size as an example, a simple calculation of Euclidean distance requires a subtraction of one pixel vector from a reference vector (250 operations), a square of the elements of the result (250 operations), a sum of the vector (249 operations), and a square root of the total (1 operation). This gives 750 operations for each pixel leading to 375 million operations to calculate Euclidean distance for one reference spectrum. This is on the order of n operations where n = # of pixels x # of bands. This can quickly escalate as the order of operations for more complex algorithms can approach n 2 operations (endmember decomposition) or even n 3 operations (non-linear methods).

In an ideal world with CPUs reporting performance in the 100 GFLOP range, calculation time would appear to be trivial. But simply adding a 1 microsecond delay to any of these operations results in seconds of latency. In assessing expected performance of these algorithms it is insufficient to compare simple CPU or even GPU reported processing performance. Other latencies of the system, memory access and bandwidth, cache misses, memory and storage read and write speeds, all contribute to the problem and must be assessed.

2.2.6 Interim Summary

Successful HSI analysis is based on the application of specialized algorithms deeply informed by a detailed understanding of the physical, chemical, and radiative transfer processes of the scenario for which the imaging spectroscopy data are acquired. HSI data are significantly more than a seemingly indecipherable collection of points in a high dimensional hyperspace to which an endless mish mash of methods from electrical engineering, signal processing, multivariate analysis, and optimization theory may be blindly applied as a substitute for any and all understanding of the underlying nature and structure of the data and of the objects for which the data were acquired. Apply a technique if its underlying assumptions are met by the HSI data and/or the nature and structure of the HSI data and the underpinning physical, chemical, and radiative transfer processes are amenable to the information extraction capabilities of the method.

2.2.7 Miscellaneous Topics

There are many other topics that could be discussed; some commonly applied, others still under development or not yet widely utilized. Topics in the former category include: dimensionality reduction and/or data volume reduction (beyond PCA and MNF); product generation via fusion with lidar and SAR, pan-sharpening, georeferencing, and orthorectification; scene/data modeling and simulation with, e.g., DIRSIG (Schott 2007) and FASSP (Kerekes 2012), and spectral signature modeling. Topics in the later category include: topological methods (Basener et al. 2007); expert systems (Clark et al. 2003; Kruse et al. 1993; Kruse and Lefkoff 1993), genetic algorithms (Harvey et al. 2002), support vector machines (SVMs), Bayesian model averaging (BMA; e.g., Burr and Hengartner 2006); spatial and spectral data analysis (Resmini 2012); parallel processing/multicore processing/high performance computing; computer-assisted/analyst interactive data analysis and exploration, and visual analytics; and scientific databases (“big data”) and data mining. The interested reader may readily find information on these and many other topics in the scientific literature.

3 A Note to Developers and What’s Next

A new technique should be unique, stable, and robust. Its performance should not be easily bested by a skilled, experienced analyst applying the well known, well established toolbox of existing techniques to, say, different spatial and spectral subsets of the HSI data set or after utilizing some simple pre-processing methods (see, e.g., Funk et al. 2001) and/or by simply using the existing workhorse algorithms and tools in sequence and combining the results. Developers are thus urged to: (1) rigorously and honestly compare the performance of their new method with the existing suite of standard tools in the field; (2) apply their new method to a wide diversity of real remotely sensed data and not simply tune algorithm performance for the data set used for development and testing; i.e., honestly probe the technique’s performance bounds; and (3) perhaps most importantly, carefully review (and cite) the literature to avoid reinventing the wheel. And it cannot be stressed too strongly: computation is not a substitute for a deeper understanding of the nature of HSI data and practical knowledge of the problem and its setting for which the data have been acquired. That being said and emphasized, the next several sections discuss how computational resources can be applied.

3.1 Desktop Prototyping and Processing Peril

Many HSI practitioners develop new methods and algorithms out of necessity. Solving unique problems requires development or modification of algorithms for specific needs. The availability of desktop programming and mathematical tools such as Matlab or IDL has increased our productivity tremendously. These commercially available tools abstract complex algorithms into simple function calls for easy implementation. This is not without peril. Although the majority of new algorithm development applies sound fundamentals in regards to phenomenology, there is a need to understand the computational complexities of these approaches. A quick perusal of the help files of desktop prototyping tools such as Matlab or IDL for a simple function such as matrix inverse, will lead to discussion and examples of non-exact solutions and warnings of singular matrices. Since our fundamental problem is that of remote sensing inversion (Twomey 1977), we must expect that our computational results can yield non-exact or non-physical solutions. In addition, a naïve application of these functions may lead to significant computational issues such as rounding and truncation due to machine precision. A simple computational example (b = Ax) for solving a set of linear equations in Matlab is illustrated below.

>> A = magic(3)

A =

8 1 6

3 5 7

4 9 2

>> A(:,1) = zeros(1,3)

A =

0 1 6

0 5 7

0 9 2

>> b = [1;2;5];

>> x = A\b

Warning: Matrix is singular to working precision.

x =

Inf

0.584905660377358

-0.132075471698113

>> x = pinv(A)*b

x =

0

0.531943065210195

-0.015557762330354

>> A*x-b

ans =

-0.561403508771931

0.550810989738495

-0.243627937768954

In this example, we attempt to solve a set of linear equations using both Matlab’s ‘\’ operator (matrix inverse) and ‘pinv’ (pseudoinverse). Both solutions attempt a least squares solution. In the case of matrix inverse the solution goes to infinity and gives a warning of a singular matrix. Attempting a pseudoinverse leads to a solution, but comparison to the original vector b leads to a surprising result. The function did not fail, but the calculation did―and without warning.

The matrix inverse operation is key to many steps of the HSI analysis process and necessitates a check of both data quality and validity of results. This example illustrates sensitivity of a solution to the methods and values applied. As a practical exercise, one may choose to attempt a spectral unmixing method with artifact-laden or poorly calibrated data, e.g., bad/noisy bands, bands of all zeros, etc., and study the stability and physical implications of the unmixing model and its residuals when applying a pseudoinverse method.

Another critical operation to many algorithms is calculation of the covariance matrix. This calculation is a relatively straight forward combination of subtraction operations and array multiplication. While these operations present no inherent computational issues, the choice and quantity of pixels used for covariance estimation are critical. In regards to selection of which pixels to use, an assumption in calculating covariance for the matched filter is that it represents a homogenous background population. Target materials or anomalies present in the covariance estimation significantly degrade performance of the matched filter. In regards to quantity, the size of the background population for covariance estimation can suffer from two pitfalls: (1) the pixels chosen should represent the variance of the background data. Using pixels which are too similar or too varied (i.e., contain target materials or anomalies) will again degrade performance of the algorithm. (2) the quantity of pixels chosen should be sufficient to avoid computational issues of inverting a singular matrix. A good rule of thumb is to estimate the covariance with at least 10-times the number of data dimensions.

Significant effort has been made to ensure the computational accuracy of these methods and their implementation in software packages. Many of the desktop packages utilize the well known BLAS (Basic Linear Algebra Subprograms) and LAPACK (Linear Algebra Package) algorithm libraries first developed in the 1980s and 1990s and continually updated (Anderson 1999). These libraries are highly efficient implementations of numerical linear algebra methods for single and double precision and real and complex calculations. Functions also return flags indicating some measure of validity of the returned result. A basic understanding of these methods and their implementation in desktop computing applications should not be overlooked. This understanding parallels the deeper understanding of HSI data and practical knowledge of the problem as stated previously.

3.2 Automated Processing and Time Critical Applications

Discussions so far have focused on analysis methods suited for manual or analyst-interactive processing of individual HSI data sets. As the number of hyperspectral sensors and thus data increase in both military and civilian applications, the need for automated processing increases. Although much can be said about the complexities of this particular remote sensing problem, the need to automatically process data for anomaly detection or directed material search remains. In general, automated hyperspectral processing is driven by two circumstances: (1) the availability of suitable data analysts; and (2) the need for time critical analysis. In regards to 1, we believe it is safe to propose that the growth of HSI data will always exceed the availability of suitable analysts. Given that, automated processing for a portion, if not for all of HSI analysis, is necessary to support the limited availability of HSI analysts.

In regards to 2, hyperspectral sensors as a reconnaissance and surveillance tool seek to provide information and not just data to the appropriate first responders and decision makers. It would be naïve of us to consider only scientists and engineers as the sole consumers of such information. Because of this, automated processing to discover specific types of information is a necessity. As experts in the methodologies of HSI analysis, it is up to us to develop suitable methods of automated processing for the non-expert user and to thoroughly understand and explain the constraints in which that automated processing is valid. Automated processing is there to support the time critical nature of a specific mission or objective─e.g. military operations or disaster support. Choosing appropriate algorithms, specific target libraries, and providing some method of data/processing quality assurance and confidence is absolutely necessary.

Time critical analysis is driven by a need for information as soon as possible after the data are collected. This can be either in-flight or post-flight. An in-flight scenario requires on-board processing in which there may or may not be analyst on-board; e.g. UAV. In a post-flight scenario, multiple analysts and a mission specific set of computing hardware and software may be available. In both cases algorithm and target library selection remain critical. Typically, automated processes are studied in detail for specific target libraries before implementation in an actual data collection operation. An example process includes the following steps:

-

1.

Data pre-processing: This step generally brings the data from DN to a calibrated radiance. A check of the data for data quality prior to processing is performed. Bad bands and pixels may be removed. Geo-registration may be performed.

-

2.

Atmospheric correction: This step converts at-sensor radiance to reflectance. This may be an in-scene or RT/modeled method.

-

3.

Target Detection: In this step a statistical detector such as MF or ACE is applied using the target spectral library. Individual target detection planes are created.

-

4.

Thresholding: Using the detection planes and a predetermined threshold, pixels above the threshold are selected as possible target materials. Spatial operations are performed to generate discrete regions of interest (ROI).

-

5.

Identification: To confirm results of detection, individual spectra from the ROIs are compared to a larger set of target materials. Comparisons are made using various methods such as SAM, MED, or step-wise linear regression. This is the spectroscopy step of HSI analysis. Score values are then generated for each ROI.

An individual on a desktop computer may take several minutes to analyze a single data set following these steps. Implemented as a fully automated process on a GPU and using a target library of a hundred materials, this can be completed is several seconds (Brown et al. 2012).

This brings us to another scenario which drives time critical analysis and that is real time or near real time processing. There appears to be some misconception that on-board analysis necessitates real time processing of HSI data. The mention of real time processing usually leads to discussion of what real time processing is. In the present context, we use real time and near real time processing interchangeably. We define near real time processing to be automated processing with very low processing latency; e.g., a few seconds. In other words, once an HSI data cube is collected, it is then processed in an amount of time less than or equal to the collection time. Practical experience shows us that the difference between a few seconds or even a few minutes of processing latency is insignificant in most applications where the HSI sensor is the primary or only data collector. The fact that the sensor platform observed a location one or more seconds ago has little bearing on the ability of the sensor or processing algorithm’s ability to confidently perform a directed material search. The critical requirement is that on-board processing keeps pace with the data collection rate of the HSI sensor such that the initial processing latency allows the sensor system (i.e., hardware plus processing) to provide relevant information while it remains in its desired operating area.

A more stringent real time processing requirement occurs when HSI data are combined for data or information fusion with sensors that collect and process data that have temporal relevance such as motion imagery. In this case, the materials of interest may have a persistent signature, but the activities identified in the motion imagery are fleeting. It is now critical to overlay track or cue information onto broadband imagery such that an analyst/operator can associate spectral information with motion based activity. Processing latencies of more than just a few seconds would be unacceptable for real time vehicle tracking that combines spectral and motion imagery.

Real time HSI processing systems and algorithms have been pursued over the past several years with varying success (Stevenson et al. 2005; Chang 2013; Brown et al. 2012). The availability of inexpensive high performance computing hardware (GPU, DSP, FPGA) and their associated development environments facilitate the migration of HSI algorithms to embedded computing applications. In recent years the migration of HSI algorithms to GPUs has been researched and widely published on (e.g., Morgenstern and Zell 2011; Trigueros-Espinosa et al. 2011; Winter and Winter 2011). More complex algorithms to include non-linear methods and HSI georeferencing have also found significant performance improvement on GPUs (Campana-Olivo and Manian 2011; Opsahl et al. 2011). It is simply a matter of time before our most reliable and robust HSI algorithms are operating as ubiquitous automated processors.

3.3 A New Paradigm: Big Data

Up to this point we have largely considered analyst-interactive analysis of individual HSI data sets. This is either a desktop process conducted by an analyst, or possibly a near real time system processing data cubes as they are collected. A new paradigm in data analysis exists that must now be considered for spectral processing and exploitation. To motivate the reader we pose the following questions:

-

1.

Consider the scope of your spectral data holdings. If you had the ability to process and analyze groupings of data or the entire collection/campaign of data in minutes, would you want to?

-

2.

Have you ever considered the temporal or spatial evolution of material signatures, atmospheric effects, data covariance, or any other aspects of your hyperspectral information across years of collected data?

-

3.

Can you now analyze more than one data cube simultaneously and jointly?

-

4.

If Google had access to your data, how would they store, process, analyze, distribute, and study it?

Most of us are familiar with Google and maybe somewhat familiar with cloud computing. What most of us are not familiar with are the concepts of Big Data and the volume of information it represents. Years ago, when we considered the difficulty in processing large hyperspectral data sets, our concepts of big data were limited by our processing ability on a single CPU or possibly across multiple CPUs in a homogenous compute cluster. Today, Big Data represents the vast amount of structured and unstructured digital data that simply exist on computers and servers the world over. Big Data is of such concern to the commercial, business, and defense communities, in March 2012 the Office of the President of the United States announced the “Big Data Research and Development InitiativeFootnote 15”. This initiative funds efforts across the U.S. Government to research and develop techniques and methodologies to process and exploit extremely large data holdings. This includes intelligence, reconnaissance, and surveillance data from DoD, the vast holdings of earth observation and remote sensing data from NASA, and large data holdings across NIH, DOE and many other government agencies.

The first step in approaching the Big Data problem is an understanding of existing tools and methodologies for a distributed computing environment. This begins with Mapreduce developed by Google. Mapreduce is a programming model and implementation for processing large data sets (Dean and Ghemawat 2008). Programs written in the Mapreduce construct are automatically parallelized and can be reliably executed on large distributed heterogeneous systems. Using the Mapreduce model allows simplified development of parallel processing methods across thousands of distributed computers. Mapreduce is the basis of the production indexing system supporting the Google web search (Dean and Ghemawat 2008) and has been found effective in various applications such as machine learning, bioinformatics, astrophysics, and cyber-security (Lin et al. 2010).

Mapreduce has been implemented in the open-source application Hadoop, developed by the Apache Software Foundation.Footnote 16 Hadoop has become the preferred solution for Big Data analytics and is in use by Google, Yahoo, IBM, Facebook, and others (Burlingame 2012). Hadoop implements distributed computing and distributed file system elements with a Java programming interface to allow for the development of distributed computing environments. A Hadoop implementation is available to users of Amazon Web Services as Amazon Elastic MapReduce (EMR).Footnote 17 EMR provides access to a user configurable number of compute nodes and charges a fee based on compute capacity needed. Amazon has effectively and inexpensively provided supercomputer access to any individual, company, or government.

Mapreduce has created a new kind of supercomputer for Big Data analysis (McMillan 2012). In this context, HSI analysis must be viewed no longer in terms of full-scene analysis, but full-campaign analysis, or full-regional analysis, or fully integrated temporal-spatial analysis. It is now up to us to integrate our practical knowledge of HSI analysis with the computational resources available to anyone with access to a computer and the internet.

3.4 Where to Find More Information: The HSI Community of Practice

HSI remote sensing is an established, active field of research and practical application with a large and growing body of literature. Practitioners and would-be contributors have many resources at their disposal for research on previous work and for communication of results. Scientific journals include Remote Sensing of Environment, the International Journal of Remote Sensing, the IEEEFootnote 18 Transactions on Geoscience and Remote Sensing, and the IEEE Geoscience and Remote Sensing Letters. Scientific associations include the Society of Photo-optical Instrumentation Engineers (SPIE), IEEE, the American Society of Photogrammetry and Remote Sensing (ASPRS), and the American Geophysical Union (AGU). Each society has a host of journals, both peer reviewed and non-reviewed, and major symposia at which results are communicated. HSI remote sensing is a vigorous community of practice and one in which government, private sector, and academic institutions participate. A wealth of information about HSI is also available on the World Wide Web.

Notes

- 1.

The analyst is encouraged to study and become familiar with several spectral signatures likely to be found in just about every earth remote sensing data set: vegetation, soils, water, concrete, asphalt, iron oxide (rust), limestone, gypsum, snow, paints, fabrics, etc.

- 2.

The MWIR and LWIR (together or individually) may be referred to as the thermal infrared or TIR.

- 3.

We will no longer mention the MWIR; though the SEBASS sensor (Hackwell et al. 1996) provides MWIR data, very little have been made available. MWIR HSI is an area for research, however. MWIR data acquired during the day time have a reflective and an emissive component which introduces some interesting complexity for AC.

- 4.

Or principal components regression (PCR) or partial least squares regression (PLSR; see, e.g., Feilhauer et al. 2010).

- 5.

Reflectivity and emissivity are related by Kirchhoff’s law: ε(λ) = 1− ρ(λ).

- 6.

MODTRAN (v5) is extremely versatile and may be used for HSI data from the VNIR through the LWIR.

- 7.

This phenomenon has indeed been demonstrated. It is most unfortunate, however, that it has been used to impugn HSI technology when it is really an issue with poor algorithm selection and a lack of understanding of algorithm performance and of the information content inherent in a spectrum.

- 8.

Also known as spectral unmixing/linear spectral unmixing (LSU), subpixel analysis, subpixel abundance estimation, etc. The mixed pixel, and the challenges it presents, is a fundamental concept underlying much of the design of HSI algorithms and tools.

- 9.

These algorithms may also be (and have been) applied to MSI. At some level of abstraction, the multivariate statistical signal processing-based algorithms that form the core HSI processing may be applied to any multivariate data set (e.g., MSI, HSI, U.S. Dept. of Labor statistics/demographic data) of any dimension greater than 1.

- 10.

Sometimes also referred to as simply ‘minimum distance’ (MD).

- 11.

There are various names for this algorithm. Some are reinventions of the same technique; others represent methods that are variations on the basic mathematical structure as described in, e.g., Manolakis et al. (2003).

- 12.

As well as an historical perspective provided by the references cited in these works.

- 13.

And a relationship; i.e., signature variability will have two components contributing to the two probability distribution functions in NP theory: an inherent, real variability of the spectral signatures of materials and the noise in the measurement of those signatures imparted by the sensor.

- 14.

And area under the ROC curve or AUC.

- 15.

- 16.

http://hadoop.apache.org/, last accessed May 8, 2012.

- 17.

- 18.

Institute of Electrical and Electronics Engineers.

References

Adams JB, Gillespie AR (2006) Remote sensing of landscapes with spectral images: a physical modeling approach. Cambridge University Press, Cambridge, 362 p

Adams JB, Smith MO, Gillespie AR (1993) Imaging spectroscopy: interpretation based on spectral mixture analysis. In: Pieters CM, Englert PAJ (eds) Remote geochemical analysis: elemental and mineralogical composition, vol 4, Topics in remote sensing. Cambridge University Press, Cambridge, pp 145–166

Adler-Golden S, Berk A, Bernstein LS, Richtsmeier S, Acharya PK, Matthew MW, Aderson GP, Allred CL, Jeong LS, Chetwynd JH (2008) FLAASH, a MODTRAN4 atmospheric correction package for hyperspectral data retrieval and simulations. ftp://popo.jpl.nasa.gov/pub/docs/workshops/98_docs/2.pdf. Last accessed 29 Jan 2012

Anderson E, Bai Z, Bischof C, Blackford S, Demmel J, Dongarra J, Du Croz J, Greenbaum A, Hammarling S, McKenney A, Sorensen D (1999) LAPACK users' guide, 3rd edn. Society for Industrial and Applied Mathematics, Philadelphia

Asner GP, Lobell DB (2000) A biogeophysical approach for automated SWIR unmixing of soils and vegetation. Remote Sens Environ 74:99–112

Basener B, Ientilucci EJ, Messinger DW (2007) Anomaly detection using topology. In: Proceedings of SPIE, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XIII, vol 6565. Orlando, April 2007

Bernstein LS, Adler-Golden SM, Sundberg RL, Levine RY, Perkins TC, Berk A, Ratkowski AJ, Felde G, Hoke ML (2005) Validation of the QUick atmospheric correction (QUAC) algorithm for VNIR-SWIR multi- and hyperspectral imagery. In: Shen SS, Lewis PE (eds) Proceedings of the SPIE, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XI, vol 5806. Orlando, 28 Mar–1 Apr 2005, pp 668–678

Boardman JW (1998) Leveraging the high dimensionality of AVIRIS data for improved subpixel target unmixing and rejection of false positives: mixture tuned matched filtering. In: Green RO (ed) Proceedings of the 7th JPL geoscience workshop, NASA Jet Propulsion Laboratory, pp 55–56

Boardman JW, Kruse FA, Green RO (1995) Mapping target signatures via partial unmixing of AVIRIS data. In: Summaries, fifth JPL airborne earth science workshop, NASA Jet Propulsion Laboratory Publication 95–1, vol 1, pp 23–26

Brown CD, Davis HT (2006) Receiver operating characteristics curves and related decision measures: a tutorial. Chemometr Intell Lab Syst 80:24–38. doi:10.1016/j.chemolab.2005.05.004

Brown MS, Glaser E, Grassinger S, Slone A, Salvador M (2012) Proceeding of SPIE 8390. Algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVIII 839018, 8 May 2012. doi:10.1117/12.918667

Burlingame N (2012) The little book of big data. New Street Communications, LLC., Wickford, 590 p

Burr T, Hengartner N (2006) Overview of physical models and statistical approaches for weak gaseous plume detection using passive infrared hyperspectral imagery. Sensors 6: 1721–1750 (http://www.mdpi.org/sensors)

Campana-Olivo R, Manian V (2011) Parallel implementation of nonlinear dimensionality reduction methods applied in object segmentation using CUDA in GPU. In: Proceedings of SPIE 8048, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVII, 80480R, 20 May 2011, doi:10.1117/12.884767

Campbell JB (2007) Introduction to remote sensing, 4th edn. The Guilford Press, New York, 626 p

Chang C-I (2003) Hyperspectral imaging. Techniques for spectral detection and classification. Kluwer/Plenum Publishers, New York, 370 p

Chang C-I (2013) Real time hyperspectral image processing: algorithm architecture and implementation. Springer (in press) ISBN 978-1-4419-6186-0, 490 p

Clark RN, Roush TL (1984) Reflectance spectroscopy: quantitative analysis techniques for remote sensing applications. J Geophys Res 89(B7):6329–6340

Clark RN, Swayze GA, Live KE, Kokaly RF, Sutley SJ, Dalton JB, McDougal RR, Gent CA (2003) Imaging spectroscopy: earth and planetary remote sensing with the USGS Tetracorder and expert systems. J Geophys Res 108(E12):5131. doi:10.1029/2002JE001847

Comon P (1994) Independent component analysis, a new concept? Signal Process 36:287–314

Dean J, Ghemawat S (2008) Mapreduce: simplified data processing on large clusters. Comm ACM 51(1):107–113

Eismann MT (2012) Hyperspectral remote sensing. SPIE Press, Bellingham, 725 p

ITT Exelis-VIS (2011) ENVI tutorial: using SMACC to extract endmembers. www.exelisvis.com/portals/0/tutorials/envi/SMACC.pdf. Last accessed 12 Feb 2012

ITT Exelis-VIS (2012) http://www.exelisvis.com/language/en-us/productsservices/envi.aspx. Last accessed 17 Oct 2012

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn Lett 27:861–874. doi:10.1016/j.patrec.2005.10.010

Feilhauer H, Asner GP, Martin RE, Schmidtlein S (2010) Brightness-normalized partial least squares regression for hyperspectral data. J Quant Spectrosc Radiat Transfer 111:1947–1957

FLAASH http://www.spectral.com/remotesense.shtml#FLAASH. Last accessed 29 Jan 2012

Funk CC, Theiler J, Roberts DA, Borel CC (2001) Clustering to improve matched filter detection of weak gas plumes in hyperspectral thermal imagery. IEEE T Geosci Remote Sens 39(7): 1410–1420

Green AA, Berman M, Switzer P, Craig MD (1988) A transformation for ordering multispectral data in terms of image quality with implications for noise removal. IEEE T Geosci Remote Sens 26(1):65–74

Gruninger J, Ratkowski AJ, Hoke ML (2004) The Sequential Maximum Angle Convex Cone (SMACC) endmember model. In: Shen SS, Lewis PE (eds) Proceedings of the SPIE, algorithms for multispectral and hyper-spectral and ultraspectral imagery, vol 5425–1. Orlando, April 2004

Gu D, Gillespie AR, Kahle AB, Palluconi FD (2000) Autonomous atmospheric compensation (AAC) of high-resolution hyperspectral thermal infrared remote-sensing imagery. IEEE T Geosci Remote Sens 38(6):2557–2570

Hackwell JA, Warren DW, Bongiovi RP, Hansel SJ, Hayhurst TL, Mabry DJ, Sivjee MG, Skinner JW (1996) LWIR/MWIR imaging hyperspectral sensor for airborne and ground-based remote sensing. In: Proceedings of the SPIE, vol 2819, pp 102–107

Hapke B (1993) Theory of reflectance and emittance spectroscopy. Cambridge University Press, Cambridge, 455 p

Harvey NR, Theiler J, Brumby SP, Perkins S, Szymanski JJ, Bloch JJ, Porter RB, Galassi M, Young AC (2002) Comparison of GENIE and conventional supervised classifiers for multispectral image feature extraction. IEEE T Geosci Remote Sens 40(2):393–404

Hecht E (1987) Optics, 2nd edn. Addison-Wesley Publishing Company, Reading, 676 p

ASD Inc. (2012) http://www.asdi.com/. Last accessed 29 Jan 2012

Jensen JR (2007) Remote sensing of the environment: an earth resource perspective, 2nd edn. Prentice Hall Series in Geographic Information Science, Upper Saddle River, 608 p

Kerekes JP (2008) Receiver operating characteristic curve confidence intervals and regions. IEEE Geosci Remote Sens Lett 5(2):251–255

Kerekes JP (2012) http://www.cis.rit.edu/people/faculty/kerekes/fassp.html. Last accessed 2 Feb 2012

Keshava N (2004) Distance metrics and band selection in hyperspectral processing with application to material identification and spectral libraries. IEEE T Geosci Remote Sens 42(7):1552–1565

Kokaly RF, Clark RN (1999) Spectroscopic determination of leaf biochemistry using band-depth analysis of absorption features and stepwise multiple linear regression. Remote Sens Environ 67:267–287

Kruse FA (2008) Expert system analysis of hyperspectral data. In: Shen SS, Lewis PE (eds) Proceedings of the SPIE, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XIV, vol 6966, doi:10.1117/12.767554

Kruse FA, Lefkoff AB (1993) Knowledge-based geologic mapping with imaging spectrometers: remote sensing reviews, special issue on NASA Innovative Research Program (IRP) results, vol 8, pp 3–28. http://www.hgimaging.com/FAK_Pubs.htm. Last accessed 29 Jan 2012

Kruse FA, Lefkoff AB, Dietz JB (1993) Expert system-based mineral mapping in northern Death Valley, California/Nevada using the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS): remote sensing of environment, special issue on AVIRIS, May–June 1993, vol 44, pp 309–336. http://www.hgimaging.com/FAK_Pubs.htm. Last accessed 29 Jan 2012

Landgrebe DA (2003) Signal theory methods in multispectral remote sensing. Wiley-Interscience/Wiley, Hoboken, 508 p

Lillesand TM, Kiefer RW, Chipman JW (2008) Remote sensing and image interpretation, 6th edn. Wiley, New York, 756 p

Lin H, Archuleta J, Ma X, Feng W, Zhang Z, Gardner M, (2010) MOON: MapReduce on opportunistic environments. In: Proceedings of the 19th ACM international symposium on high performance distributed computing, ACM, New York

Manolakis D (2005) Taxonomy of detection algorithms for hyperspectral imaging applications. Opt Eng 44(6):1–11

Manolakis D, Marden D, Shaw GA (2003) Hyperspectral image processing for automatic target detection applications. MIT Lincoln Lab J 14(1):79–116

Manolakis D, Lockwood R, Cooley T, Jacobson J (2009) Is there a best hyperspectral detection algorithm? In: Shen SS, Lewis PE (eds) Algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XV, vol 7334. Orlando, doi:http://dx.doi.org/10.1117/12.816917, 16 p

McMillan R (2012) Project moon: one small step for a PC, one giant leap for data. http://www.wired.com/wiredenterprise/2012/05/project_moon/. Last accessed 8 May 2012

MODTRAN5 http://www.modtran.org/. Last accessed 29 Jan 2012

Morgenstern J, Zell B (2011) GPGPU-based real-time conditional dilation for adaptive thresholding for target detection. In: Proceedings of SPIE 8048, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVII, 80480P, 20 May 2011, doi:10.1117/12.890851

Mustard JF, Pieters CM (1987) Abundance and distribution of ultramafic microbreccia in moses rock dike: quantitative application of mapping spectroscopy. J Geophys Res 92(B10): 10376–10390

Opsahl T, Haavardsholm TV, Winjum I (2011) Real-time georeferencing for an airborne hyperspectral imaging system. In: Proceedings of SPIE 8048, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVII, 80480S, 20 May 2011, doi:10.1117/12.885069

Resmini RG (1997) Enhanced detection of objects in shade using a single-scattering albedo transformation applied to airborne imaging spectrometer data. The international symposium on spectral sensing research, CD-ROM, San Diego, 7 p

Resmini RG (2012) Simultaneous spectral/spatial detection of edges for hyperspectral imagery: the HySPADE algorithm revisited. In: Shen SS, Lewis PE (eds) Proceedings of the SPIE, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVIII, vol 8390. Baltimore, 23–27 April 2012, doi:http://dx.doi.org/10.1117/12.918751, 12 p

Resmini RG, Kappus ME, Aldrich WS, Harsanyi JC, Anderson ME (1997) Mineral mapping with Hyperspectral Digital Imagery Collection Experiment (HYDICE) sensor data at Cuprite, Nevada, U.S.A. Int J Remote Sens 18(7):1553–1570. doi:10.1080/014311697218278

Richards JA, Jia X (1999) Remote sensing digital image analysis, an introduction, 3rd, revised and enlarged edition. Springer, Berlin, 363 p

Roberts DA, Gardner M, Church R, Ustin S, Scheer G, Green RO (1998) Mapping Chaparral in the Santa Monica Mountains using multiple endmember spectral mixture models. Remote Sens Environ 65:267–279

Sabol DE, Adams JB, Smith MO (1992) Quantitative subpixel spectral detection of targets in multispectral images. J Geophys Res 97:2659–2672

Schaepman-Strub G, Schaepman ME, Painter TH, Dangel S, Martonchik JV (2006) Reflectance quantities in optical remote sensing—definitions and case studies. Remote Sens Environ 103:27–42

Schott JR (2007) Remote sensing: the image chain approach, 2nd edn. Oxford University Press, New York, 666 p

Solé JG, Bausá LE, Jaque D (2005) An introduction to the optical spectroscopy of inorganic solids. Wiley, Hoboken, 283 p

Stevenson B, O’Connor R, Kendall W, Stocker A, Schaff W, Alexa D, Salvador J, Eismann M, Barnard K, Kershenstein J (2005) Design and performance of the civil air patrol ARCHER hyperspectral processing system. In: Proceedings of SPIE, vol 5806, p 731

Stocker AD, Reed IS, Yu X (1990) Multi-dimensional signal processing for electro-optical target detection. In: Signal and data processing of small targets 1990, Proceedings of the SPIE, vol 1305, pp 218–231

Trigueros-Espinosa B, Vélez-Reyes M, Santiago-Santiago NG, Rosario-Torres S (2011) Evaluation of the GPU architecture for the implementation of target detection algorithms for hyperspectral imagery. In: Proceedings of SPIE 8048, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVII, 80480Q, May 20 2011, doi:10.1117/12.885621

Tu TM, Chen C-H, Chang C-I (1997) A least squares orthogonal subspace projection approach to desired signature extraction and detection. IEEE T Geosci Remote Sens 35(1):127–139

Twomey S (1977) Introduction to the mathematics of inversion and indirect measurements. Development in geomathematics, no. 3. Elsevier Scientific Publishing, Amsterdam, (republished by Dover Publ., 1996), 243 p

van Der Meer F, Bakker W (1997) CCSM: cross correlogram spectral matching. Int J Remote Sens 18(5):1197–1201. doi:10.1080/014311697218674

Winter ME (1999) N-FINDR: an algorithm for fast autonomous spectral end-member determination in hyperspectral data. In: Descour MR, Shen SS (eds) Proceedings of the SPIE, imaging spectrometry V, vol 3753. Denver, 18 July 1999, pp 266–277, doi:10.1117/12.366289

Winter ME, Winter EM (2011) Hyperspectral processing in graphical processing units. In: Proceedings of SPIE 8048, algorithms and technologies for multispectral, hyperspectral, and ultraspectral imagery XVII, 80480O, 20 May 2011, doi:10.1117/12.884668

Young SJ, Johnson RB, Hackwell JA (2002) An in-scene method for atmospheric compensation of thermal hyperspectral data. J Geophys Res 107(D24):4774. doi:10.1029/2001JD001266, 20 p

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix: Acronyms, Symbols, and Abbreviations Table

Appendix: Acronyms, Symbols, and Abbreviations Table

Rights and permissions

Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Salvador, M., Resmini, R. (2014). Computation in Hyperspectral Imagery (HSI) Data Analysis: Role and Opportunities. In: Cervone, G., Lin, J., Waters, N. (eds) Data Mining for Geoinformatics. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-7669-6_1

Download citation

DOI: https://doi.org/10.1007/978-1-4614-7669-6_1

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-7668-9

Online ISBN: 978-1-4614-7669-6

eBook Packages: Computer ScienceComputer Science (R0)