Abstract

Shared random parameter (SRP) models provide a framework for analyzing longitudinal data with missingness. We discuss the basic framework and review the most relevant literature for the case of a single outcome followed longitudinally. We discuss estimation approaches, including an approximate approach which is relatively simple to implement. We then discuss three applications of this framework in novel settings. First, we show how SRP models can be used to make inference about pooled or batched longitudinal data subject to non-ignorable dropout. Second, we show how one of the estimation approaches can be extended for estimating high dimensional longitudinal data subject to dropout. Third, we show how to use jointly model complex menstrual cycle length data and time to pregnancy in order to study the evolution of menstrual cycle length accounting for non-ignorable dropout due to becoming pregnant and to develop a predictor of time-to-pregnancy from repeated menstrual cycle length measurements. These three examples demonstrate the richness of this class of models in applications.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Longitudinal Measurement

- Menstrual Cycle Length

- Random Effect Distribution

- Pattern Mixture Model

- Informative Dropout

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Modeling longitudinal data subject to missingness has been an active area of research in the last few decades. The missing-data mechanism is said to be missing completely at random if the probability of missing is independent of both the observed and unobserved data. Further, the mechanism is not missing at random (NMAR) if the probability of missingness depends on the unobserved data (Rubin 1976; Little and Rubin 1987). It is well known that naive methods that do not account for NMAR can lead to biased estimation. The use of shared (random) parameter models has been been one approach that accounts for non-random missing data. In this formulation, a model for the longitudinal response measurements is linked with a model for the missing-data mechanism through a set of random effects that are shared between the two processes. Wu and Carroll (1988) proposed a model whereby the response process, which was modeled with a linear mixed model with a random intercept and slope was linked with the censoring process by including an individual’s random slope as a covariate in a probit model for the censoring process. When the probit regression coefficient for the random slope is not zero, there is a dependence between the response and missing-data processes. Failure to account for this dependence can lead to biased estimation of important model parameters. Shared-parameter models (Follmann and Wu 1995) induce a type of non-randomly missing-data mechanism that has been called “informative missingness” (Wu and Carroll 1988). For a review and comparison with other methods, see Little (1995), Hogan and Laird (1997), and Vonesh et al. (2006). More recently Molenberghs et al. (2012) have discussed a fundamental non-identifiability of shared random effects models. Specifically, these models make non-verifiable assumptions about data not seen and there are multiple models in a wide class that can equally explain the observed data. Thus, shared random parameter (SRP) models make implicit assumptions that need to be justified from on a scientific basis and cannot be completely verified empirically.

This article discusses some applications of SRPs to some interesting novel applications. In Sect. 2, we set up the general model formulation and show how this mechanism induces a special type of nonignorable missingness. We also discuss both a full maximum-likelihood approach and conditional approach for parameter estimation that is easier to implement. We discuss some examples where a single longitudinal measurement is subject to non-ignorable dropout. Section 3 shows an example of batched laboratory data and how a SRP model can be used to account for the apparent non-ignorable missingness. In Sect. 4 we provide an example of the joint modeling of multiple or high dimensional longitudinal biomarker and time-to-event data. Section 5 shows an example of jointly modeling complex menstrual cycle data and time-to-pregnancy using a SRP approach. Lastly, we present a discussion in Sect. 6.

2 Model Formulation and Estimation

Let \(\mathbf{Y}_{i} = (Y _{i1},Y _{i2},\ldots,Y _{iJ})^\prime\) be a vector of longitudinal outcomes for the ith subject (i = 1, 2, …, I) observed on J occasions t 1, t 2, …, t J , and let \(R_{i} = (R_{i1},R_{i2},\ldots,R_{iJ})^\prime\) be a vector of random variables reflecting the missing data status (e.g., R ij = 0 denoting a missed visit). Further, let \(b_{i} = (b_{i1},b_{i2},\ldots,b_{iL})^\prime\) be an L-element vector of random effects for the ith subject which can be shared between the response and missing data mechanism. We assume that b i is multivariate normal with mean vector 0 and covariance matrix Σ b . Covariates \(X_{ij}\) are also measured which can influence both Y ij and R ij .

The joint distribution of \(Y _{i},R_{i},b_{i}\) can be written as

We make the assumption that conditional on the random effects, the responses do not depend on the missing data status, thus \(g(y_{i}\vert b_{i},r_{i}) = g(y_{i}\vert b_{i})\). Furthermore, the elements of Y i are conditionally independent given b i . By conditional independence, the density for the response vector Y i conditional on b i , \(g(y_{i}\vert b_{i})\) can be decomposed into the product of the densities for the observed and unobserved values of Y i . Namely, \(g(y_{i}\vert b_{i}) = g(y_{i}^{o}\vert b_{i})g(y_{i}^{m}\vert b_{i})\), where \(y_{i}^{o}\) and \(y_{i}^{m}\) are vectors of observed and missing data responses, respectively, for the ith subject. The density of the observed random variables can be expressed as

Although the conditional independence of \(Y _{i}\vert b_{i}\) is easy to verify when there is no missing data, it is difficult to verify for SRP models. Serial correlation could be incorporated (conditional on the random effects) using autoregressive or lagged responses (see, Zeger and Qaqish 1988; Albert 2000; Sutradhar and Mallick 2010). These lag-response modeling components can be formulated with the addition of a shared random effect that links the response and missing data mechanism together. Alternatively, Albert et al. (2002) link together the response and missing data mechanism with a shared latent process where the subject-specific random effect b is replaced by a random process \(b_{i} = (b_{i1},b_{i2},\ldots,b_{iJ})^\prime\). They consider a random process that follows a continuous-time exponential correlation structure since observations are not equally spaced. Although the shared latent processes model is an attractive approach, it requires computationally intensive techniques such as Monte-Carlo EM for parameter estimation. In the remainder of this article, we focus on the SRP rather than the shared latent process model.

Tsiaits and Davidian (2004) provide a concise discussion of how the joint density is obtained for the case where missingness is monotone (i.e., patients only drop out of the study) and measured in continuous time.

The choice of a density function g depends on the type of longitudinal response data being analyzed. For Gaussian longitudinal data, g can be specified as a Gaussian distribution, and the model formulation can be specified as a linear mixed model (Laird and Ware 1982). A simple linear mixed model which can be used as an illustration is

where X i is a subject-specific covariate such as treatment group, b i is a random effect which is often assumed normally distributed, and ε ij is an error term which is assumed normally distributed. Alternatively, for discrete or dichotomous longitudinal responses, g can be formulated as a generalized linear mixed model (Follmann and Wu 1995; Ten Have et al. 1998; Albert and Follmann 2000).

The choice of the density for the missing data indicators, m, depends on the type of missing data being incorporated. When missing data is a discrete time to dropout, a monotone missing data mechanism, then a geometric distribution is often used for m (Mori et al. 1994). For example, the probability of dropping out is

Various authors have proposed shared random effects models for the case in which dropout is a continuous event time (Schluchter 1992; Schluchter et al. 2001; DeGruttola and Tu 1994; Tsiatis et al. 1995; Wulfson and Tsiatis 1997; Tsiatis and Davidian 2001; Vonesh et al. 2006). When missing data includes only intermittently missed observations without dropout, then the product of Bernoulli densities across each of the potential observations may be a suitable density function for g. Alternatively, when multiple types of missing data such as both intermittent missingness and dropout need to be incorporated, a multinomial density function for g can be incorporated (Albert et al. 2002).

The shared random effects model accounts for a MNAR data mechanism, which can be seen with the following argument. Suppressing the index i for notational simplicity, suppose that the random effect b is a scalar with R j indicating whether Y j is observed. MAR implies that the conditional density of R j given the complete data Y does not depend on Y m, while a MNAR implies that this conditional density depends on Y m. The conditional density of R j given \(Y = ({Y }^{o},{Y }^{m})\) is

A MNAR data mechanism follows since the conditional density depends on y m and since \(h(b\vert {y}^{o},{y}^{m})\) depends on y m. It is interesting to note that for models (2) and (3) when the residual variance is very small, error is very small, \(h(r_{j}\vert {y}^{m},{y}^{o}) \approx h(r_{j}\vert {y}^{o})\) since \(h(b\vert {y}^{m},{y}^{o}) \approx h(b\vert {y}^{o})\). In this situation, the missing data mechanism will be close to MAR, so simply fitting a likelihood-based model for y o will result in valid inference.

Albert and Follmann (2007) discuss various SRP modeling formulation for analyzing binary longitudinal data with applications to an opiates clinical trial.

There are various approaches for parameter estimation. First, maximization of the likelihood \(L = \Pi _{i=1}^{I}f(y_{i}^{o},r_{i})\), where f is given by (1) can be used to obtain the maximum-likelihood estimates (MLEs). Maximizing the likelihood may be computationally intensive since it involves integrating over the random effects distribution. For a high dimensional random effects distribution, this involves the numerically difficult evaluation of a high dimensional integral. Approaches such as Monte-Carlo EM or Laplace approximations of the likelihood (Gao 2004) may be good alternatives to direct evaluation of the integral. Fortunately, many applications involve only one or two shared random effects where the integral can be evaluated more straightforwardly with Gaussian quadrature, adaptive Gaussian quadrature, or other numerical integration techniques. Various statistical software packages can be used to fit these models including procedures in SAS and specialized code in R.

An alternative approach for parameter estimation, which conditions on R i , has been proposed (Wu and Carroll 1988; Follmann and Wu 1995; Albert and Follmann 2000). In developing this approach, first note that the joint distribution of \((Y _{i}^{o},R_{i},b_{i})\) can be re-written as

Thus, the conditional likelihood for \(y_{i}^{o}\vert r_{i}\) is given by \(L = \Pi _{i=1}^{I}\int g(y_{i}^{o}\vert b_{i})h(b_{i}\vert r_{i})db_{i}\). Note that this approximate conditional model can be directly viewed as a pattern mixture model as

Little (1993).

For illustration, we can estimate the treatment effect β 1 in the non-random dropout model (2) and (3) by noting that \(b_{i}\vert d_{i}\) can be approximated by a normal distribution with mean \(\omega _{0} +\omega _{1}d_{i}\). The conditional model can then be characterized by a linear mixed model of the form \(Y _{ij} =\beta _{ 0}^{{\ast}} +\omega _{1}d_{i} +\beta _{ 1}^{{\ast}} + b_{i} +\epsilon _{ij}\). An important point is that the parameters of this model are conditional on the dropout time d i and are easily interpretable. What is of interest are inferences on the marginal distribution of Y ij . To estimate β 1 in model (2), we need to marginalize over the dropout time distribution. Specifically, \(E(Y \vert x) = E(E(Y \vert d,x)) =\beta _{ 0}^{{\ast}} +\beta _{ 1}^{{\ast}}x +\omega _{1}E(d\vert x)\), where E(d | x) is the conditional distribution of dropout given the covariate x. We can estimate this conditional distribution in a two group comparison with \(\hat{E}(d\vert x) = \overline{d}_{x}\). Thus, \(\beta _{1} = E(Y \vert x = 1) - E(Y \vert x = 0) =\beta _{ 1}^{{\ast}} +\omega _{1}(\overline{d}_{1} -\overline{d}_{0})\). Variance estimation for the MLE approach can be obtained through standard asymptotic techniques (i.e., inverting the observed Fisher information matrix). For the conditional modeling approach, the simplest approach is to perform a bootstrap procedure (Efron and Tibshirani 1993) where all measurements on a chosen individual are sampled with replacement.

3 An Analysis of Longitudinal Batched Gaussian Data Subject to Non-random Dropout

Due to cost or feasibility, longitudinal data may be measured in pools or batches whereby samples are combined or averaged across individuals at a given time point. In these studies, interest may be on comparing the longitudinal measurements across groups. A complicating factor may be that subjects are subject to dropout from the study. An example of this type of data structure is a large mouse study examining the effect of an experimental antioxidant on the weight profiles over time in mice. It was suspected that animals receiving the treatment would reach a lower adult weight than control animals and that the decline in weight among treated animals would be less than that for control animals. Ninety-five genetically identical animals were enrolled into the treatment and control groups, respectively (190 total animals). Within a group, five animals were placed in each of the 19 cages at birth. Due to the difficulty in repeatedly weighting each animal separately, the average weight per cage was recorded at approximately bi-weekly intervals over the life span of the animals (2–3 years). At each follow-up time, average batch weight was measured as the total batch weight divided by the number of animals alive in that batch.

Albert and Shih (2011) proposed a SRP model for each of the two groups separately. Initially, we present the model when individual longitudinal data are observed and then develop the model for batched longitudinal data. Denote Y ij as the jth longitudinal observation at time t j for the ith subject. As described in Sect. 2, for a dropout process where an individual dies between the (d − 1)th and the dth time point, \(R_{i1} = R_{i2} =\ldots = R_{id-1} = 1\) and R i d = 0. The dropout time for the ith subject is denoted as d i .

We assume a linear mixed model in each group of the form

where \(b_{i} = (b_{0i},b_{1i}) \sim N(0,\Sigma _{b})\) and \(\epsilon _{ij} \sim N(0,\sigma _{\epsilon }^{2})\) is independent of b i . Further, we denote

Model (4) can be made more general by including a change point or additional polynomial functions of time to the fixed and random effects. Similar to (2) and (3), the dropout mechanism can be modeled with a geometric distribution in which, conditional on the random intercept b 0i and random slope b 1i , \(R_{ij}\vert (R_{ij-1} = 1,b_{0i},b_{1i})\) is Bernoulli with probability

where α(t j ) is a function of follow-up time t j . As discussed in Sect. 2, incorporating SRPs between the response and dropout process induces a non-ignorable dropout mechanism.

For the longitudinal animal study, interest is on estimating changes in the longitudinal process over time while accounting for potential informative dropout. For batched samples, we do not observe the actual Y ij , but rather the average measurement in each batch. At the beginning of the study, subjects are placed into batches, and these batches are maintained throughout. Since subjects are dying over time and the batch structure is maintained, there may be very few subjects in a batch as the study draws to an end. Define B l j as the set of subjects who are alive in the lth batch at the jth time point. Define n l j as the number of subjects contained in B l j . Further, define \(X_{lj} = \frac{1} {n_{lj}}\sum \limits _{i\in B_{lj}}y_{ij}\), where in each group, l = 1, 2, …, L, and where L is the number of batches in that group. Animals are grouped into batches of five animals that are repeatedly weighed in the same cage. In this study, there are 19 batches in each group (L = 19 in each of the two groups).

When individual longitudinal measurements Y ij ’s are observed, maximum-likelihood estimation is relatively simple as described earlier in Sect. 2. Estimation is much more difficult when longitudinal measurements are collected in batches. In principle, we can obtain MLEs of the parameters in model (4)–(5), denoted by η, by directly maximizing the joint likelihood, where the individual contribution of the likelihood for the lth batch is

where \(X_{l} = (X_{l1},X_{l2},\ldots,X_{lJ_{l}})^\prime\), J l is the last observed time-point immediately before the last subject in the lth batch dies (n l j = 0 for j > J l ) and b l and d l are a vectors of all the random effects and dropout times, respectively, for individuals in the lth batch. In the application considered here, b l is a vector contain ten random effects and d l is a vector containing the dropout times for a batch size of five mice per cage. In (6), \(f(X_{l}\vert b_{l})\) \(= \Pi _{j=1}^{J_{l}}f(X_{ lj}\vert b_{l})\), where \(f(X_{lj}\vert b_{l})\) is a univariate normal density with mean given by \(\beta _{0} +\beta _{1}t_{j} + \frac{1} {n_{lj}}\sum \limits _{i\in B_{lj}}(b_{0i} + b_{1i}t_{j})\) (see (4)) and variance \(\sigma _{\epsilon }^{2}/n_{lj}\). Further, f(b i ) is a multivariate normal with block diagonal matrix (under an ordering where random effects on the same subjects are grouped together) and \(f(d_{l}\vert b_{l})\) is the product of geometric probabilities.

One approach to maximize the likelihood is to use the E–M algorithm. In the E-step we compute the expected value of the complete-data log likelihood (the log-likelihood we would have if we observed b l ) given the observed data X l and d l , and in the M-step, we maximize the resulting expectation. Specifically,

where η is a vector of all parameters of the shared parameter model and \(logL(X_{l},b_{l}, d_{l};\eta )\) is the log of the complete data likelihood for the lth batch. The standard E–M algorithm is implemented by iterating between an E- and an M-step, whereby the expectation in (7) is evaluated in the E-step and the parameters are updated through the maximization of (7) in the M-step. Unfortunately, the E-step is difficult to implement in closed form. As an alternative Albert and Shih (2011) proposed a Monte-Carlo (MC) EM algorithm where the E-step is evaluated using the Metropolis–Hastings algorithm; the details are included in this paper.

Although the shared parameter modeling approach is feasible, it can be computational intensive due to the Monte-Carlo Sampling. An alternative approach that is simpler to implement for the practitioner is the conditional model discussed in Sect. 2. The conditional approach can easily be adapted for approximate parameter estimation for the shared random effects model with batched longitudinal data.

The approximate conditional model approach discussed for unbatched longitudinal data can be applied to the batched data (i.e., observing X l j ’s rather than Y ij ’s). Since \(Y _{i}\vert d_{i}\) in (4) is multivariate normal, \(X_{l}\vert d_{l}\) is also multivariate normal. Denote

The conditional distribution of \(X_{l}\vert d_{l}\) is multivariate normal with means and covariance matrix given by

for j≠j ′, and

The multivariate normal likelihood with mean and variance given by (8)–(10) can be maximized using a quasi-Newton Raphson algorithm. This has been implemented in R using the optimum function. Once the MLEs of the conditional model are computed, inference about the average intercept and slope can be performed by marginalizing over the dropout times. Similar to what was described for un-batched longitudinal data in Sect. 2, the average intercept and slope can be estimated by \(\hat{\omega }_{o} +\hat{\omega } _{2}\overline{d}_{1}\) and \(\hat{\omega }_{1} +\hat{\omega } _{3}\overline{d}_{2}\), respectively. Similar to variance estimation for the SRP model, standard errors for the estimated mean intercept and slope can be estimated using the bootstrap by re-sampling cage-specific data.

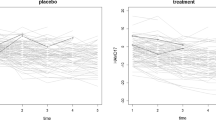

We examined the statistical properties of the maximum-likelihood and the approximate conditional approach using simulations. First, we simulated data according to the SRP model (4) and (5) and fit the correctly specified SRP model, the approximate conditional model (APM), and an ignorable model (IM) that simply fit (4) without regard to the dropout process. Data are simulated under model (4) and (5) with \(\sigma _{b0} =\sigma _{b1} =\sigma _{\epsilon } = 1\), \(\sigma _{b0b1} = 0\), α(t j ) = − 1, \(\theta _{1} =\theta _{2} = 0.25\), and an intercept and slope of 0 and 1, respectively. The average estimated slopes under the SRP model, ACM, and the IM were 0.99 (SD = 0.12), 1.07 (0.11), and 0.83 (0.08), respectively. Not surprisingly, the SRP model is unbiased under the correct specification and the IM is highly biased. The ACM is approximately unbiased which is consistent with our previous theoretical discussions. Second, we simulated data according to the ACM. In this case, the ACM is unbiased, but both the SRP model and IM are severely biased. These simulations suggest that the ACM model may be more robust (under different model formulations) than the SRP model.

A detailed analysis of these data is presented in Albert and Shih (2011). We will summarize the analysis here. Scientific interest was on estimating and comparing the weight in animals at full growth (15 months) and subsequently the decline in weight in older age (slope) between the treatment group (an agent called Tempol) and a control group. Table 1 shows estimates and standard errors for the IM (simply fit the longitudinal model and discard the relationship between the two processes), SRP, and conditional approximation approaches. All methods show that Tempol treated animals have a statistically significant lower early adult weight (intercept) and a slower decline in weight into later adulthood (slope) as compared with genetically identical control animals.

4 Jointly Modeling Multivariate Longitudinal Measurements and Discrete Time-to-Event Data

An exciting area in biomedical research is investigating the relationship between biomarker measurements and time-to-event. For example, developing a predictor of the risk of pre-term birth from biomarker data is an important goal in obstetrical medicine. SRP models that link the two processes provides a nice way to do this. Unfortunately, this is problematic in relatively high dimensions.

Denote \(Y _{1i}=(Y _{1i1},Y _{1i2},\ldots,Y _{1iJ_{i}})^\prime\), \(Y _{2i}=(Y _{2i1},Y _{2i2},\ldots,Y _{2iJ_{i}})^\prime\), …\(Y _{Pi}=(Y _{Pi1},Y _{Pi2},\!\ldots, Y _{PiJ_{i}})^\prime\) as the P biomarkers measured repeatedly at j = 1, 2, . . , J i time points. Further, define \(Y _{pi}^{{\ast}} = (Y _{pi1}^{{\ast}},Y _{pi2}^{{\ast}},\ldots,Y _{piJ_{i}}^{{\ast}})^\prime\) as the longitudinal measurements without measurement error for the pth biomarker and \(Y _{i}^{{\ast}} = (Y _{1i}^{{\ast}},Y _{2i}^{{\ast}},\ldots,Y _{Pi}^{{\ast}})\). We consider a joint model for multivariate longitudinal and discrete time-to-event data in which the discrete event time distribution is modeled as a linear function of previous true values of the biomarkers without measurement error on the probit scale. Specifically,

where i = 1, 2, …, I, j = 1, 2, …, J i , R i0 is taken as 1, α 0j governs the baseline discrete event time distribution and α p measures the effect of the pth biomarker (p = 1, 2, …, P) at time t j − 1 on survival at time t j .

The longitudinal data is modeled assuming that the fixed and random effect trajectories are linear. Specifically, the multivariate longitudinal biomarkers can be modeled as

where

where β p0 and β p1 are the fixed effect intercept and slope for the pth biomarker, and γ p i0 and γ p i1 are the random effect intercept and slope for the pth biomarker on the ith individual. Denote

and

We assume that b i is normally distributed with mean 0 and variance Σ b , where Σ b is a 2P × 2P dimensional variance matrix and ε p i j are independent error terms which are assumed to be normally distributed with mean 0 and variance \(\sigma _{p\epsilon }^{2}\) (p = 1, 2, …, P).

Albert and Shih (2010b) proposed a two-stage regression calibration approach for estimation, which can be described as follows. In the first stage, multivariate linear mixed models can be used to model the longitudinal data. In the second stage, the time-to-event model is estimated by replacing the random effects with corresponding empirical Bayes estimates. There are three problems with directly applying this approach. First, estimation in the first stage is complicated by the fact that simply fitting multivariate linear mixed models results in bias due to informative dropout; this is demonstrated by Albert and Shih (2010a) for the case of P = 1. Second, parameter estimation for multivariate linear mixed models can be computationally difficult when the number of longitudinal measurements (P) is even moderately large. Third, calibration error in the empirical Bayes estimation needs to be accounted for in the time-to-event model. The proposed approach will deal with all three of these problems.

The bias from informative dropout is a result of differential follow-up whereby the longitudinal process is related to the length of follow-up. That is, in (13), patients with large values of \(Y _{pij}^{{\ast}}\) are more likely to have an early event when α p > 0 for p = 1, 2, …, P. There would be no bias if all J follow-up measurements were observed on all patients. As proposed by Albert and Shih (2010a) for univariate longitudinal data, we can avoid this bias by generating complete data from the conditional distribution of Y i = \((Y _{1i},Y _{2i},\ldots,Y _{Pi})\) given d i , denoted as \(Y _{i}\vert d_{i}\). Since \(Y _{i}\vert d_{i}\) under model (11–12) does not have a tractable form, we propose a simple approximation for this conditional distribution. The distribution of \(Y _{i}\vert d_{i}\) can be expressed as

Since d i and the values of Y i are conditional independent given b i , \(h(Y _{i}\vert b_{i},d_{i}) = h(Y _{i}\vert b_{i})\), where \(h(Y _{i}\vert b_{i})\) \(= \Pi _{p=1}^{P}h(Y _{pi}\vert b_{pi0},b_{pi1})\). The distribution of \(Y _{i}\vert d_{i}\) can be expressed as a multivariate linear mixed model if we approximate \(g(b_{i}\vert d_{i})\) by a normal distribution. Under the assumption that \(g(b_{i}\vert d_{i})\) is normally distributed with mean \(\mu _{d_{i}} = (\mu _{01d_{i}},\mu _{11d_{i}}\), \(\mu _{02d_{i}},\mu _{12d_{i}},\ldots\), \(\mu _{0Pd_{i}},\mu _{1Pd_{i}})^\prime\) and variance \(\Sigma _{bd_{i}}^{{\ast}}\), and by re-arranging mean structure parameters in the integrand of (14) so that the random effects have mean zero, \(Y _{i}\vert d_{i}\) corresponds to the following multivariate linear mixed model

where i = 1, 2, …, I, j = 1, 2, …, J i , and p = 1, 2, …, P. The parameters \(\beta _{p0d_{i}}^{{\ast}}\) and \(\beta _{p1d_{i}}^{{\ast}}\) are intercept and slope parameters for the pth longitudinal measurement and for patients who have an event time at time d i or who are censored at time t J . In addition, the associated random effects \(b_{id_{i}}^{{\ast}} = (b_{i10d_{i}}^{{\ast}},b_{i11d_{i}}^{{\ast}},b_{i20d_{i}}^{{\ast}}, b_{i21d_{i}}^{{\ast}},\ldots,b_{iP0d_{i}}^{{\ast}},b_{iP1d_{i}}^{{\ast}})^\prime\) are multivariate normal with mean 0 and variance \(\Sigma _{bd_{i}}^{{\ast}}\), and the residuals ε p i j ∗ are assumed to have an independent normal distribution with mean zero and variance \(\sigma _{\epsilon p}^{{\ast}2}\). Thus, this conditional model involves estimating separate fixed effect intercept and slope parameters for each potential event-time and for subjects who are censored at time t J . Likewise, separate random effects distributions are estimated for each of these discrete time points. For example, the intercept and slope fixed-effect parameters for the pth biomarker for those patients who have an event at time \(d_{i} = t_{3}\) is \(\beta _{p0t_{3}}^{{\ast}}\) and \(\beta _{p1t_{3}}^{{\ast}}\), respectively. Further, the intercept and slope random effects for all P biomarkers on those patients who have an event at time \(d_{i} = t_{3}\), \(b_{it_{3}}^{{\ast}}\), is multivariate normal with mean 0 and variance \(\Sigma _{bt_{3}}^{{\ast}}\). A similar approximation has been proposed by Albert and Shih (2010a) for univariate longitudinal data (P = 1).

Recall that by generating complete data from (15) we are able to avoid the bias due to informative dropout. However, when P is large, direct estimation of model (15) is difficult since the number of elements in \(b_{id_{i}}^{{\ast}}\) grows exponentially with P. For example, the dimension of the variance matrix \(\Sigma _{bd_{i}}^{{\ast}}\) is 2P by 2P for P longitudinal biomarkers. Fieuws and Verbeke (2005) proposed estimating the parameters of multivariate linear mixed models by formulating bivariate linear mixed models on all possible pairwise combinations of longitudinal measurements. In the simplest approach, they proposed fitting bivariate linear mixed models on all \(\left ({ P \atop 2} \right )\) combinations of longitudinal biomarkers and averaging “overlapping” or duplicate parameter estimates. Thus, we estimate the parameters in the fully specified model (15) by fitting \(\left ({ P \atop 2} \right )\) bivariate longitudinal models that only include pairs of longitudinal markers. Fitting these bivariate models is computationally feasible since only four correlated random effects are contained in each model. (i.e., \(\Sigma _{bd_{i}}^{{\ast}}\) is a four-by-four dimensional matrix for each discrete event-time d i .) Duplicate estimates of fixed effects and random-effect variances from all pairwise bivariate models are averaged to obtain final parameter estimates of the fully specified model (15). For example, when P = 4 there are (P − 1) = 3 estimates of \(\beta _{p0d_{i}}^{{\ast}}\), \(\beta _{p1d_{i}}^{{\ast}}\), \(\sigma _{\epsilon p}^{{\ast}2}\) for the pth longitudinal biomarker that need to be averaged.

Model (15) is then used to construct complete longitudinal pseudo data sets which in turn are used to estimate the mean of the posterior distribution of an individual’s random effects given the data. Specifically, multiple complete longitudinal data sets can be constructed by simulating Y p i j values from the approximation to the distribution of \(Y _{i}\vert d_{i}\) given by (15) where the parameters are replaced by their estimated values. Since the simulated data sets have complete follow-up on each individual, the bias in estimating the posterior mean of b i caused by informative dropout will be much reduced.

The posterior mean of distribution b i given the data can be estimated by fitting (11)–(13) to the generated complete longitudinal pseudo data. However, similar to fitting the conditional model (15), fitting model (11)–(13) is difficult due to the high dimension of Σ b . Thus, we again use the pairwise estimation approach of Fieuws and Verbeke (2005), whereby we estimate the parameters of (2)–(3) by fitting all pairwise bivariate models and averaging duplicate parameter estimates to obtain final parameter estimates. For each generated complete longitudinal pseudo data set, the estimate of the posterior mean, denoted as \(\hat{b}_{i}\)= \((\hat{b}_{1i0},\hat{b}_{1i1},\ldots,\hat{b}_{Pi0},\hat{b}_{Pi1})^\prime\) can be calculated as

where Z i is a P J × 2P design matrix corresponding to the fixed and random effects in (11)–(13), where \(Z_{i} = diag \underbrace{(\mathop{A^\prime,A^\prime,\ldots,A^\prime})}_{P\,\,\mathrm{Times}}\),

and V i is the variance of X i . Estimates of \(Y _{pij}^{{\ast}}\), denoted as \(\hat{Y }_{pij}^{{\ast}}\), are obtained by substituting \((\hat{\beta }_{p0},\hat{\beta }_{p1},\hat{b}_{pi0},\hat{b}_{pi1})\) for \((\beta _{p0},\beta _{p1},b_{pi0},b_{pi1})\) in (13).

To account for the measurement error in using \(\hat{b_{i}}\) as compared with using b i in (11), we note that

where \(V ar\Big\{\sum _{p=1}^{P}\omega _{p}(\hat{Y }_{pi(j-1)}^{{\ast}}- Y _{pi(j-1)}^{{\ast}})\Big\} = C^\prime_{ij}V ar(\hat{b}_{i} - b_{i})C_{ij}\), \(C_{ij} = (\omega _{1},\omega _{1}t_{j-1}, \omega _{2},\omega _{2}t_{j-1}\) \(,\ldots,\omega _{p},\omega _{p}t_{j-1})\), \(V ar(\hat{b}_{i} - b_{i}) = \Sigma _{b} - \Sigma _{b}Z_{i}^\prime\{V _{i}^{-1} - V _{i}^{-1}Z_{i}QZ_{i}^\prime V _{i}^{-1}\}Z_{i}\Sigma _{b}\), and where \(Q =\sum _{ i=1}^{I}{(Z_{i}^\prime V _{i}^{-1}Z_{i})}^{-1}\) (Laird and Ware 1982). Expression (17) follows from the fact that \(E[\Phi (a + V )] = \Phi \big[(a+\mu )/\sqrt{1 {+\tau }^{2}}\big]\), where V ∼ N(μ, τ 2).

In the second stage, α 0j (j = 1, 2, …, J) and α p (p = 1, 2, …, P) can be estimated by maximizing the likelihood

where \(P(R_{ij} = 0\vert R_{i(j-1)} = 1,\hat{Y }_{i}^{{\ast}})\) is given by (17). Thus, we propose the following algorithm for estimating α 0j and α p (p = 1, 2, …, P).

-

1.

Estimate the parameters of model (15) by fitting \(\left ({ P \atop 2} \right )\) bivariate models to each of the pairwise combinations of longitudinal measurements and averaging duplicate parameter estimates. The bivariate models can be fit in R using code presented in Doran and Lockwood (2006).

-

2.

Simulate complete longitudinal pseudo measurements (i.e., Y p i j for p = 1, 2, …, P, i = 1, 2, …, I, j = 1, 2, …, J) from model (15) with model parameters estimated from step 1.

-

3.

Estimate the parameters in model (12)–(13) without regard to the event time distribution from complete longitudinal pseudo measurements (simulated in step 2) by fitting all possible \(\left ({ P \atop 2} \right )\) bivariate longitudinal models and averaging duplicate model parameter estimates.

-

4.

Calculate \(\hat{b}_{i}\) using (7) and \(\hat{Y }_{pij}^{{\ast}}\) using (13) with b i replaced by \(\hat{b}_{i}\) and β being replaced by \(\hat{\beta }\) estimated in step 3.

-

5.

Estimate α 0j (j = 1, 2, . . , J) and α p (p = 1, 2, …, P) using (17) and (18).

-

6.

Repeat steps 2 to 5 M times and average \(\hat{\alpha }_{0j}\) and \(\hat{\alpha }_{p}\) to get final estimates.

We choose M = 10 in the simulations and data analysis since this was shown to be sufficiently large for univariate longitudinal modeling discussed in Albert and Shih (2010a). Asymptotic standard errors of \(\hat{\alpha }_{0j}\) and \(\hat{\alpha }_{p}\) cannot be used for inference since they fail to account for the missing data uncertainty in our procedure. The bootstrap (Efron and Tibshirani 1993) can be used for valid standard error estimation.

This approach is most useful in situations where the number of longitudinal measurements is very large (e.g., panels of cytokine measurements followed longitudinally). For computational simplicity, we focus on a simulated example where three biomarkers are measured longitudinally at five time points on 300 individuals. Table 2 shows the results of these simulations. The results show that simply using the observed longitudinal data will result in severely biased estimation. The proposed approach results in unbiased estimation for the parameters of the joint model. The table also includes estimates for the situation in which we observe the biomarkers without measurement error (only possible to do in simulations). This strawman case simply shows us that we could do better in terms of efficiency if the biomarkers could be assessed with less measurement error.

5 Jointly Modeling of Menstrual Cycle Length and Time-to-Pregnancy

An important scientific problem in reproductive epidemiology is both to characterize the menstrual cycle patterns in women who are attempting pregnancy and to develop predictive models for time-to-pregnancy. A SRP model that links together the complex menstrual cycle pattern with time-to-pregnancy is important for valid statistical analysis in both problems. Specifically, when interest focuses on characterizing longitudinal changes in the menstrual cycle, it is important to account for the dependence between the two processes since failure to do so results in informative dropout in the longitudinal process. Further, incorporating dependence between the two processes is important for developing a flexible class of prediction models of time-to-pregnancy.

The menstrual cycle pattern is complex since it is well known to be long tailed with a proportion of cycles being unusually long while a majority appear within normal ranges. Various authors (e.g., Guo et al. 2006 and references within) have proposed two component mixture models with one component reflecting a distribution with a long right tail and the other reflecting a normal distribution. McLain et al. (2012) propose a class a mixture model with a normal distribution for “normal” cycles and a long tailed distribution (reflecting the possibility of both extremely long and short irregular cycles). Further, McLain et al. introduce random effects which are shared between the two models as well as with a discrete time survival model characterizing time-to-pregnancy.

For illustration, we present a simplified version of McLain et al.’s modeling approach without external covariates. The menstrual cycle is modeled as a mixture of two components. First, for normal cycles

where \(\sigma _{i}^{2} =\sigma _{ 0}^{2}exp(b + b_{S,i})\). For abnormal cycles, the following representation is assumed

where E V D(0, η) denotes an extreme-value type I distribution with location 0 and scale η. Further, for implementation it was assumed that \(b_{A,ij} =\epsilon b_{N,ij}\), that the between subject heterogeneity is a scalar shift between the normal and abnormal cycles. The distribution of the menstrual cycle length Y ij is completed through the specification of the mixture, \(Y _{ij} = g_{ij}Y _{N,ij} + (1 - g_{ij})Y _{A,ij}\), where g ij is an indicator that the ith women’s jth cycle is normal, and p = P(g ij = 1). Finally, time-to-pregnancy is specified with a discrete-time survival model as in (5) and (11) with a different link function,

where V ij is an indicator of whether the ith subject had sexual intercourse during the fertile window (period in which they can conceive) during the jth cycle. The parameters ϕ μ and ϕ σ characterize the dependence between time-to-pregnancy and the mean structure and variance structure (among normal cycles), respectively. Negative values of these parameters characterize positive associations between the two processes in terms of mean and variance of the longitudinal process.

Parameter estimation was conducted by maximizing the joint likelihood as described in their manuscript. The major complication was evaluating the bi-variate integral in the joint likelihood (integrating over the bi-variate random effects). McLain et al. used Gaussian quadrature (Abramowitz and Stegun 1972) for this numerical integration.

McLain et al. (2012) fit this model to interesting time-to-pregnancy cohort data. Of interest is that they found estimates of ϕ μ which were positive and estimates of ϕ σ which were negative (ϕ σ estimates were significantly different from zero), reflecting that women with shorter cycles and more normal cycle variability had a longer time to pregnancy.

6 Discussion

This paper presents a summary of methodology and approaches for using SRP models to analyze longitudinal data subject to missingness. The basic approach which started with Wu and Carroll (1988) has been expanded in many directions. In this paper we reviewed some of these expansions focusing on making inference in batched or pooled longitudinal data subject to missingness, joint modeling of high dimensional longitudinal data and time-to-event data, and in the joint modeling of time-to-pregnancy and complex menstrual cycle patterns.

We discuss both a direct maximum-likelihood approaches and approximating approaches for fitting SRP models. The direct modeling approaches, although feasible for univariate longitudinal responses, can be very computationally intensive for either batched or high-dimensional longitudinal data. In fact, direct maximum-likelihood approach would be infeasible for joint modeling of high-dimensional and time-to-event data with all known approaches. The approximate conditional model, although not ideal in certain cases, provides a feasible solution to this difficult problem.

Most SRP models assume that the random effects are normally distributed. Various authors have investigated the robustness of inferences for different settings. Davidian et al. have shown that for a joint model of longitudinal and survival inferences on joint model parameters are relatively insensitive to random effects misspecification, particularly when the number of longitudinal measurements is relatively large.

Selection models where the non-ignorable missing data mechanism is incorporated by modeling the probability of missing as a direct function of the missed observation had it been observed are alternatives to SRP models. Pattern mixture modeling, another commonly used technique for analyzing longitudinal data with missingness, is one where we condition on the missing data pattern and make inference marginalized over the missing data pattern. In spirt, the pattern mixture model is the similar to the conditional model which is proposed as an approximation to the SRP model.

We recommend that model adequacy be examined in traditional ways such as the examination of residuals and fitted values as well as through goodness of fit tests. However, as pointed out by Molenberghs et al. (2012), SRP models (as well as other models for missing data) require assumptions about underlying mechanism that are impossible to fully verify empirically. Knowledge about the subject at hand needs to be incorporated in model development for proper inference.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions. Dover, New York (1972)

Albert, P.S.: A transition model for longitudinal binary data subject to nonignorable missing data. Biometrics 56, 602–608 (2000)

Albert, P.S., Follmann, D.A.: Modeling repeated count data subject to informative dropout. Biometrics 56, 667–677 (2000)

Albert, P.S., Follmann, D.A.: Random effects and latent processes approaches for analyzing binary longitudinal data with missingness: a comparison of approaches using opiate clinical trial data. Stat. Methods Med. Res. 16, 417–439 (2007)

Albert, P.S, Follmann, D.A., Wang, S.A., Suh, E.B.: A latent autoregressive model for longitudinal binary data subject to informative missingness. Biometrics 58, 631–642 (2002)

Albert, P.S., Shih, J.H.: On estimating the relationship between longitudinal measurements and time-to-event data using a simple two-stage procedure. Biometrics 66, 983–991 (2010a)

Albert, P.S., Shih, J.H.: An approach for jointly modeling multivariate longitudinal measurements and discrete time-to-event data. Ann. Appl. Stat. 4, 1517–1532 (2010b)

Albert, P.S., Shih, J.H.: Modelling batched Gaussian longitudinal weight data in mice subject to informative dropout. Published on-line before print. Stat. Methods Med. Res. (2011)

DeGruttola, V.T., Tu, X.M.: Modeling progression of CD4-lymphocyte count and its relationship to survival time. Biometrics 50, 1003–1014 (1994)

Doran, H.C., Lockwood, J.R.: Fitting value-added models in R. J. Educ. Behav. Stat. 31, 205–230 (2006)

Efron, B., Tibshirani, R.J.: An Introduction to the Bootstrap. Chapman and Hall, New York (1993)

Fieuws, S., Verbeke, G.: Pairwise fitting of mixed models for the joint modelling of multivariate longitudinal profiles. Biometrics 62, 424–431 (2005)

Follmann, D., Wu, M.: An approximate generalized linear model with random effects for informative missing data. Biometrics 51, 151–168 (1995)

Gao, S.: A shared random effect parameter approach for longitudinal dementia data with non-ignorable missing data. Stat. Med. 23, 211–219 (2004)

Guo, Y., Manatunga, A.K., Chen, S., Marcus, M.: Modeling menstrual cycle length using a mixture distribution. Biostatistics 7, 151–168 (2006)

Hogan, J.W., Laird, N.M.: Model-based approaches to analyzing incomplete longitudinal and failure time data. Stat. Med. 16, 259–272 (1997)

Laird, N.M., Ware, J.H.: Random -effects models for longitudinal data. Biometrics 38, 963–978 (1982)

Little, R.J.A.: Pattern-Mixture models for multivariate incomplete data. J. Am. Stat. Assoc. 88, 125–134 (1993)

Little, R.J.A.: Modeling the drop-out mechanism in repeated-measures studies. J. Am. Stat. Assoc. 90, 1112–1121 (1995)

Little, R.J.A., Rubin, D.B.: Statistical Analysis with Missing Data. Wiley, New York (1987)

McLain, A., Lum, K.J., Sundaram, R.: A joint mixed effects dispersion model for menstrual cycle length and time-to-pregnancy. Biometrics 68, 648–656 (2012)

Molenberghs, G., Njeru, E., Kenward, M.G., Verbeke, G.: Enriched-data problems and essential non-identifiability. Int. J. Stat. Med. Res. 1, 16–44 (2012)

Mori, M., Woolson, R.F., Woodworth, G.G.: Slope estimation in the presence of informative censoring: modeling the number of observations as a geometric random variable. Biometrics 50, 39–50 (1994)

Rubin, D.B.: Inference and missing data. Biometrika 63, 581–592 (1976)

Schluchter, M.D.: Methods for the analysis of informative censored longitudinal data. Stat. Med. 11, 1861–1870 (1992)

Schluchter, M.D., Greene, T., Beck, G.J.: Analysis of change in the presence of informative censoring: application to a longitudinal clinical trial of progressive renal disease. Stat. Med. 20, 989–1007 (2001)

Sutradhar, B.C., Mallick, T.S.: Modified weights based on generalized inferences in incomplete longitudinal binary models. Can. J. Stat. 38, 217–231 (2010)

Ten Have, T.R., Pulkstenis, E., Kunselman, A., Landis, J.R.: Mixed effects logistic regression models for longitudinal binary response data with informative dropout. Biometrics 54, 367–383 (1998)

Tsiaits, A.A., Davidian, M.: Joint modeling of longitudinal and time-to-event data: an overview. Stat. Sin. 14, 809–834 (2004)

Tsiatis, A.A., Davidian, M.: A semiparametric estimator for the proportional hazards model with longitudinal covariates measured with error. Biometrika 88, 447–459 (2001)

Tsiatis, A.A., DeGruttola, V., Wulfsohn, M.S.: Modeling the relationship of survival to longitudinal data measured with error. Applications to survival and CD4 counts in patients with AIDS. J. Am. Stat. Assoc. 90, 27–37 (1995)

Vonesh, E.F., Greene, T., Schluchter, M.D.: Shared parameter models for the joint analysis of longitudinal data with event times. Stat. Med. 25, 143–163 (2006)

Wu, M.C., Carroll, R.J.: Estimation and comparison of changes in the presence of informative right censoring by modeling the censoring process. Biometrics 44, 175–188 (1988)

Wulfson, M.S., Tsiatis, A.A.: A joint model for survival and longitudinal data measured with error. Biometrics 53, 330–339 (1997)

Zeger, S.L., Qaqish, B.: Markov regression models for time series: a quasilikelihood approach. Biometrics 44, 1019–1031 (1988)

Acknowledgments

We thank the referee and editor for their thoughtful and constructive comments which lead to an improved manuscript. We also thank the audience of the International Symposium in Statistics (ISS) on Longitudinal Data Analysis Subject to Outliers, Measurement Errors, and/or Missing Values. This research was supported by the Intramural Research Program of the Eunice Kennedy Shriver National Institute of Child Health and Human Development.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer Science+Business Media New York

About this paper

Cite this paper

Albert, P.S., Sundaram, R., McLain, A.C. (2013). Innovative Applications of Shared Random Parameter Models for Analyzing Longitudinal Data Subject to Dropout. In: Sutradhar, B. (eds) ISS-2012 Proceedings Volume On Longitudinal Data Analysis Subject to Measurement Errors, Missing Values, and/or Outliers. Lecture Notes in Statistics(), vol 211. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6871-4_7

Download citation

DOI: https://doi.org/10.1007/978-1-4614-6871-4_7

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-6870-7

Online ISBN: 978-1-4614-6871-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)