Abstract

Ordinary logistic regression (OLR) models the probability of a binary outcome. A logistic regression tree (LRT) is a machine learning method that partitions the data and fits an OLR model in each partition. This chapter motivates LRT by highlighting the challenges of OLR with respect to model selection, interpretation, and visualization on a completely observed dataset. Because it is nonparametric, an LRT model typically has higher prediction accuracy than OLR for large datasets. Further, because model complexity is shared between the tree structure and the OLR node models, the node models can be kept simple for easier interpretation and visualization.

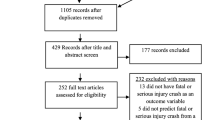

OLR is more challenging if there are missing values in the predictor variables, because imputation must be carried out first. The second part of the chapter reviews the GUIDE method of constructing LRT models. A strength of GUIDE is its ability to handle large numbers of variables without the need to impute missing values. This is demonstrated on a vehicle crash-test dataset for which imputation is difficult because of missing values and other problems.


Keywords

- Classification and regression trees

- Imputation

- Logistic regression

- Machine learning

- Missing data

- Visualization

1 Introduction

Ordinary logistic regression (OLR) is a technique for modeling the probability of a binary outcome in terms of one or more predictor variables. Consider, for example, a dataset on tree damage caused by a severe thunderstorm over 477,000 acres of the Boundary Waters Canoe Area Wilderness in northeastern Minnesota on July 4, 1999 (R package alr4 [1]). For each of 3666 trees, the data record whether it was blown down (Y = 1) or not (Y = 0), its trunk diameter D in centimeters, its species S, and the local intensity L of the storm, as measured by the fraction of damaged trees in its vicinity.

Let p = P(Y = 1) denote the probability that a tree is blown down. OLR approximates the logit function \(\mbox{logit}(p) = \log (p/(1-p))\) by a function of the predictor variables that is linear in the unknown parameters. A simple linear OLR model has the form \(\mbox{logit}(p) = \log (p/(1-p)) = \beta _0 + \beta _1 X\), where X is the only predictor variable. Solving for p yields the p-function

$$\displaystyle p = \frac{\exp(\beta_0 + \beta_1 X)}{1 + \exp(\beta_0 + \beta_1 X)}. $$

In general, if there are k predictor variables, \(X_1, \ldots, X_k\), a multiple linear OLR model has the form \(\mbox{logit}(p) = \beta _0 + \sum _{j=1}^k \beta _j X_j\). The parameters \(\beta_0, \beta_1, \ldots, \beta_k\) are typically estimated by maximizing the likelihood function. Let n denote the sample size, and let \((x_{i1}, \ldots, x_{ik}, y_i)\) denote the values of \((X_1, \ldots, X_k, Y)\) for the ith observation (i = 1, …, n). Treating each \(y_i\) as the outcome of an independent Bernoulli random variable with success probability \(p_i\), where \(\mbox{logit}(p_i) = \beta_0 + \sum_{j=1}^k \beta_j x_{ij}\), the likelihood function is

$$\displaystyle \prod_{i=1}^n p_i^{y_i} (1-p_i)^{1-y_i}. $$

The maximum likelihood estimates are the values of (β0, β1, …, βk) that maximize this function.
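The maximization generally has no closed form, so it is carried out numerically. As a hedged illustration (not the software used in this chapter), the Python sketch below applies Newton-Raphson to the simple linear model \(\mbox{logit}(p) = \beta_0 + \beta_1 X\) and also computes the residual deviance, i.e., \(-2\) times the maximized log-likelihood for 0/1 responses, which Section 2 uses to compare models. The function names `sigmoid`, `fit_simple_logistic`, and `residual_deviance` are hypothetical, the data are made up, and there is no safeguard against complete separation, where the estimates diverge.

```python
import math


def sigmoid(z):
    """Numerically stable inverse logit: exp(z) / (1 + exp(z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_simple_logistic(x, y, max_iter=50, tol=1e-10):
    """Maximize the Bernoulli likelihood of logit(p) = b0 + b1*x by Newton-Raphson."""
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        # Score vector (g0, g1) and information matrix [[h00, h01], [h01, h11]]
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = sigmoid(b0 + b1 * xi)
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step = inverse(information) @ score, with the 2x2 inverse written out
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + d0, b1 + d1
        if abs(d0) + abs(d1) < tol:
            break
    return b0, b1


def residual_deviance(b0, b1, x, y):
    """-2 times the log-likelihood at (b0, b1) for 0/1 responses."""
    ll = 0.0
    for xi, yi in zip(x, y):
        p = sigmoid(b0 + b1 * xi)
        ll += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return -2.0 * ll


# Made-up toy data: X = 0 for four units, X = 1 for four units
x = [0, 0, 0, 0, 1, 1, 1, 1]
y = [1, 0, 0, 0, 1, 1, 1, 0]
b0, b1 = fit_simple_logistic(x, y)
dev = residual_deviance(b0, b1, x, y)
print(round(b0, 4), round(b1, 4), round(dev, 4))
```

For a binary X the fitted probabilities reproduce the two group proportions, which gives an easy check: with proportions 1/4 and 3/4, the estimates are \(\hat\beta_0 = -\log 3\) and \(\hat\beta_1 = 2\log 3\).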

2 Fitting OLR Models

Fitting a simple linear OLR model to the tree damage data using L yields

with estimated p-function shown in Fig. 30.1. The equation implies that the stronger the local storm intensity, the higher the chance that a tree is blown down. The boxplots in Fig. 30.2 show that the distributions of D are skewed. To reduce the skewness, Cook and Weisberg [2] transformed D to \(\log (D)\) and obtained the model

which suggests that trees with larger diameters are less likely to survive the thunderstorm than thinner ones. If both \(\log (D)\) and L are used, the model becomes

The relative stability of the coefficients of L and \(\log (D)\) in Eqs. (30.1)–(30.3) is due to the weak correlation of 0.168 between the two variables. If the interaction \(L \log (D)\) is included, the model changes to

and the coefficients of \(\log (D)\) and L change more dramatically.

So far, species S has been excluded from the models. As in linear regression, a categorical variable having m distinct values may be represented by (m − 1) indicator variables, U1, …, Um−1, each taking value 0 or 1. The variables for species are shown in Table 30.1, which uses the “set-to-zero constraint” that sets all the indicator variables to 0 for the first species (aspen). A model that assumes the same slope coefficients for all species but that gives each a different intercept term is

How well do models (30.1)–(30.5) fit the data? One popular way to assess fit is by means of significance tests based on the residual deviance and its degrees of freedom (df)—see, e.g., [3, p. 96] for the definitions. The residual deviance is analogous to the residual sum of squares in linear regression. For model (30.5), the residual deviance is 3259 with 3655 df. We can evaluate the fit of this model by comparing its residual deviance against that of a larger one, such as the 27-parameter model

that allows the coefficients of \(\log (D)\) and L to vary with species. It has a residual deviance of 3163 with 3639 df. If model (30.5) fits the data well, the difference between its residual deviance and that of model (30.6) is approximately distributed as a chi-squared random variable with df equal to the difference in df of the two models. The difference in deviance is 3259 − 3163 = 96, which is improbably large for a chi-squared random variable with 3655 − 3639 = 16 df.

Rejection of model (30.5) does not necessarily imply that model (30.6) is satisfactory. To find out, it may be compared with a larger one, such as the 28-parameter model

that includes an interaction between L and \(\log (D)\). This has a residual deviance of 3121 with 3638 df. Therefore model (30.6) is rejected because its residual deviance differs from that of (30.7) by 42 but their dfs differ only by 1. With this procedure, each of models (30.1) through (30.6) is rejected when compared against the next larger model in the sequence.
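The two comparisons above can be reproduced from the quoted deviances. The sketch below is a hedged Python illustration using only the standard library; `chi2_sf` and `deviance_test` are hypothetical helper names, and the chi-squared upper-tail probability is computed with a textbook recurrence for integer degrees of freedom rather than with a statistics package.

```python
import math


def chi2_sf(x, df):
    """P(chi-squared with df degrees of freedom > x), for a positive integer df.

    Starts from the closed forms for df = 1 (erfc) or df = 2 (exponential) and
    adds two degrees of freedom at a time using
    Q(k + 2, x) = Q(k, x) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    """
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        q, k = math.exp(-half), 2
    else:
        q, k = math.erfc(math.sqrt(half)), 1
    while k < df:
        q += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return q


def deviance_test(dev_small, df_small, dev_large, df_large):
    """Nested-model test: the drop in residual deviance is referred to a
    chi-squared distribution with df equal to the drop in residual df."""
    stat = dev_small - dev_large
    ddf = df_small - df_large
    return stat, ddf, chi2_sf(stat, ddf)


# Residual deviances and df quoted in the text
print(deviance_test(3259, 3655, 3163, 3639))  # (30.5) vs (30.6): 96 on 16 df
print(deviance_test(3163, 3639, 3121, 3638))  # (30.6) vs (30.7): 42 on 1 df
```

Both tail probabilities are far below any conventional significance level, which is what "improbably large" means in the text.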

Another way to select a model employs a criterion such as AIC, which is the residual deviance plus two times the number of estimated parameters. AIC tries to balance deviance against model complexity (see, e.g., [4, p. 234]), but it tends to over-fit the data, that is, to choose large models. In this dataset, if we apply AIC to the set of all models up to third order, it chooses the largest, namely, the three-factor interaction model

which has 36 parameters.
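As a small worked example of the AIC definition above, the Python sketch below scores the three models whose residual deviances are quoted earlier. The parameter count 11 for model (30.5) is inferred from n − df = 3666 − 3655; the counts 27 and 28 are stated in the text. The deviance of model (30.8) is not given in this chapter, so the comparison covers only these three candidates; the names are illustrative.

```python
# (label, residual deviance, number of estimated parameters), as quoted in the text
candidates = [
    ("(30.5)", 3259, 11),
    ("(30.6)", 3163, 27),
    ("(30.7)", 3121, 28),
]


def aic(deviance, n_params):
    """AIC as defined in the text: residual deviance + 2 * (number of parameters)."""
    return deviance + 2 * n_params


scores = {label: aic(dev, k) for label, dev, k in candidates}
best = min(scores, key=scores.get)
print(scores)  # {'(30.5)': 3281, '(30.6)': 3217, '(30.7)': 3177}
print(best)    # (30.7)
```

Among these three, the largest model has the smallest AIC, in line with the tendency of AIC to favor large models.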

Models (30.7) and (30.8) are hard to graph: the estimated p-function cannot be plotted as in Fig. 30.1 if a model has more than one predictor variable. This problem is exacerbated by the tendency of model complexity to grow with the sample size and the number of predictors. Interpreting the estimated coefficients is then futile, because they often change from one model to another owing to multicollinearity among the terms. For example, the coefficient for L is 4.424, −1.482, and 4.629 in models (30.3), (30.4), and (30.5), respectively.

To deal with this problem, [2] used a “partial one-dimensional model” (POD) that employs a linear function of \(\log (D)\) and L as the predictor variable. They found that if the observations for balsam fir (BF) and black spruce (BS) are excluded, the model \(\mbox{logit}(p) = \beta_0 + Z + \sum_j \gamma_j U_j\), with \(Z = 0.78 \log (D) + 4.1 L\), fits the remaining data quite well. Now the estimated p-function can be plotted as shown in Fig. 30.3, but the graph is not as simple to interpret as that in Fig. 30.1 because Z is a linear combination of two variables. To include species BF and BS, [2] settled on the larger model

which contains separate coefficients \((\theta_j, \phi_j)\) for BF and BS. Here I(⋅) denotes the indicator function, i.e., \(I_A = 1\) if the species is A, and \(I_A = 0\) otherwise. The model cannot be displayed graphically for species BF and BS because it is a function of three predictor variables.

Fig. 30.3 Estimated probability of blowdown for seven species, excluding balsam fir (BF) and black spruce (BS), according to model (30.9)

3 Logistic Regression Trees

A logistic regression tree (LRT) model is a machine learning solution that simultaneously retains the graphical advantage of simple models and the prediction accuracy of more complex ones. It recursively partitions the dataset and fits a simple or multiple linear OLR model in each partition. As a result, the partitions can be displayed as a decision tree [5] such as Fig. 30.4, which shows a simple linear LRT model fitted to the tree damage data by the GUIDE algorithm [6, 7]. A terminal node represents a partition, and an OLR model with a single linear predictor is fitted in each one. Beside each intermediate node is a condition stating that an observation goes to the left subnode if and only if the condition is satisfied. Below each terminal node are the sample size (in italics), the proportion of blown-down trees, and the name of the best linear predictor variable. The split at the root node (labeled “1”) sends observations to node 2 if and only if S is A, BS, JP, or RP. (Node labels employ the convention that a node with label k has left and right child nodes labeled 2k and 2k + 1, respectively.) Node 5, consisting of the JP and RP species, has the highest proportion of blown-down trees, at 0.82. Node 9, which consists of species A and BS trees with diameters greater than 9.75 cm, has the second highest proportion, at 0.67. Variable L is the best linear predictor in all terminal nodes except nodes 13 and 15, where D is the best linear predictor. The main advantage of using one linear predictor in each node is that the fitted p-functions can be displayed graphically, as shown in Fig. 30.5. It is not necessary to transform D to \(\log (D)\) in the LRT.

Fig. 30.4 GUIDE simple linear LRT model for P(blowdown). At each split, an observation goes to the left branch if and only if the condition is satisfied. Sample size (in italics), proportion of blowdowns, and name of regressor variable are printed beneath each terminal node. Green and yellow terminal nodes have L and D, respectively, as best linear predictor

Fig. 30.5 Estimated p-functions in terminal nodes of the tree in Fig. 30.4
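Because the children of node k are labeled 2k and 2k + 1, a node's label encodes its position in the tree. The Python helpers below, with hypothetical names, recover a node's parent, its root-to-node path, and the sequence of left and right turns from the label alone; for example, node 9 is the right child of node 4, which is the left child of node 2.

```python
def children(k):
    """Left and right child labels of node k under the 2k / 2k + 1 convention."""
    return 2 * k, 2 * k + 1


def parent(k):
    """Parent label of a non-root node; integer division undoes 2k or 2k + 1."""
    if k <= 1:
        raise ValueError("the root node 1 has no parent")
    return k // 2


def path_from_root(k):
    """Labels of the nodes visited from the root (node 1) down to node k."""
    path = [k]
    while k > 1:
        k //= 2
        path.append(k)
    return path[::-1]


def branch_directions(k):
    """Turns taken from the root to node k: the binary digits of k after the
    leading 1 encode them, with 0 = left ('L') and 1 = right ('R')."""
    return ["L" if bit == "0" else "R" for bit in bin(k)[3:]]


print(path_from_root(9))     # [1, 2, 4, 9]
print(branch_directions(9))  # ['L', 'L', 'R']
```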

The LRT model in Fig. 30.4 may be considered a different kind of POD model from that proposed in [2]. In the POD model of [2], the word “partial” refers to model (30.9) being one-dimensional when restricted to certain parts of the data (species in this example); in an LRT, it refers to partitions of the predictor space. In addition, whereas “one-dimensional” refers to Z being a linear combination of \(\log (D)\) and L in (30.9), the OLR predictor in each node of an LRT is trivially one-dimensional because it is an original variable.

GUIDE is a classification and regression tree algorithm with origins in the FACT [8], SUPPORT [9], QUEST [10], CRUISE [11, 12], and LOTUS [13] methods; see [14]. All of them split a dataset recursively, choosing a single X variable to split each node. If X is an ordinal variable, the split typically has the form s = {X ≤ c}, where c is a constant. If X is a categorical variable, the split has the form s = {X ∈ ω}, where ω is a subset of the values taken by X. For linear regression trees, algorithms such as AID [15], CART [16], and M5 [17] choose s to minimize the total sum of squared residuals of the regression models fitted to the two data subsets formed by s. Though seemingly innocuous, this approach is flawed because it is biased toward choosing X variables that allow more splits. To see this, suppose that X is an ordinal variable having m distinct values. Then there are (m − 1) ways to split the data along the X axis, with each split s = {X ≤ c} being such that c is the midpoint between two consecutively ordered distinct values of X. This creates a selection bias toward X variables with large values of m. In the current example, variable L has 709 unique values but D has only 87. Hence L has about eight times as many opportunities as D to split the data (708 versus 86). The bias is worse for categorical variables with many levels, because a categorical variable having m categorical values permits \((2^{m-1} - 1)\) splits of the form s = {X ∈ ω}. For example, variable S permits \((2^{9-1} - 1) = 255\) splits, which is almost three times as many splits as D allows. The earliest warning of the potential for this bias to produce misleading conclusions seems to be [18].
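The split counts quoted above can be checked by enumeration. In this Python sketch the function names are hypothetical, the level names "A" to "I" are placeholders for the nine species codes, and `range(...)` stands in for a variable with 709 or 87 distinct values, since only the number of distinct values matters.

```python
from itertools import combinations


def ordinal_split_points(values):
    """Cut points c for splits {X <= c}: midpoints between consecutive
    distinct observed values, so m distinct values give m - 1 splits."""
    u = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(u, u[1:])]


def categorical_splits(levels):
    """Distinct splits {X in omega} for a categorical X with m levels.

    A split and its complement define the same partition, so omega is forced
    to contain the first level; the full set of levels is excluded because it
    leaves the right subnode empty. This yields 2**(m - 1) - 1 splits."""
    levels = sorted(set(levels))
    first, rest = levels[0], levels[1:]
    splits = []
    for r in range(len(rest) + 1):
        for combo in combinations(rest, r):
            omega = frozenset((first,) + combo)
            if len(omega) < len(levels):
                splits.append(omega)
    return splits


print(len(ordinal_split_points(range(709))),       # 708
      len(ordinal_split_points(range(87))),        # 86
      len(categorical_splits(list("ABCDEFGHI"))))  # 255
```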

GUIDE avoids the bias by using a two-step approach to split selection. First, it uses significance tests to select the X variable. Then it searches for the split point c or the subset ω for the selected X. For linear regression trees, the first step is achieved by fitting a linear model to the data in the node and using a contingency table chi-squared test of the association between grouped values of each predictor variable and the signs of the residuals. If X is ordinal, the groups are intervals between certain order statistics. If X is categorical, the groups are the categorical levels. The X variable having the smallest chi-squared p-value is selected. Repeating this procedure recursively produces a large binary tree that is pruned to minimize a cross-validation estimate of prediction mean squared error [16].
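The grouping-and-testing idea in this paragraph can be sketched in Python as follows; the same routine applies to steps (d) and (e) of the logistic procedure described later in this section, with the signs of \(\tilde{p}(x) - \hat{p}(x)\) in place of residual signs. This is an illustration under assumptions, not GUIDE's code: the function names are hypothetical, quartiles use Python's default `statistics.quantiles` method (which may differ from GUIDE's definition), and nonpositive residuals are counted as "-". Converting the statistic to a p-value is a separate step not shown here.

```python
import statistics
from collections import Counter


def quartile_groups(x):
    """Group an ordinal variable by its sample quartiles q1 <= q2 <= q3.

    Each value maps to the number of quartiles it exceeds (0 to 3);
    missing values (None) get their own 'NA' group."""
    observed = [v for v in x if v is not None]
    q = statistics.quantiles(observed, n=4)  # default 'exclusive' method
    return ["NA" if v is None else sum(v > qj for qj in q) for v in x]


def pearson_chi2(rows, cols):
    """Pearson chi-squared statistic and df for the rows-by-cols contingency table."""
    n = len(rows)
    row_tot, col_tot = Counter(rows), Counter(cols)
    cell = Counter(zip(rows, cols))
    stat = 0.0
    for r in row_tot:
        for c in col_tot:
            expected = row_tot[r] * col_tot[c] / n
            stat += (cell[(r, c)] - expected) ** 2 / expected
    df = (len(row_tot) - 1) * (len(col_tot) - 1)
    return stat, df


def residual_sign_test(residuals, x, categorical=False):
    """Chi-squared test of association between residual signs and grouped x."""
    signs = ["+" if r > 0 else "-" for r in residuals]
    groups = ["NA" if v is None else v for v in x] if categorical else quartile_groups(x)
    return pearson_chi2(signs, groups)


print(residual_sign_test([-1, -1, -1, -1, 1, 1, 1, 1], [1, 2, 3, 4, 5, 6, 7, 8]))  # (8.0, 3)
```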

Let \(\hat {p}(x)\) denote the estimated value of p(x) = P(Y = 1 | X = x). The preceding split variable selection method needs modification for logistic regression, because the residual \(y-\hat {p}(x)\) is positive if y = 1 and negative if y = 0, irrespective of the value of \(\hat {p}(x)\). Consequently, the residual signs provide no information on the adequacy of \(\hat {p}(x)\). A first attempt at a solution was proposed in [19], where the residuals \(y\,{-}\,\hat {p}(x)\) are replaced with “pseudo-residuals” \(\bar {p}(x) - \hat {p}(x)\), with \(\bar {p}(x)\) being a weighted average of the y values in a neighborhood of x. Its weaknesses are sensitivity to the choice of weights and neighborhoods, and difficulty in specifying the neighborhoods if the predictor space is high-dimensional or if there are missing values. LOTUS uses a trend-adjusted chi-squared test [20, 21] that effectively replaces \(\bar {p}(x)\) with a linear estimate.

For logistic regression, GUIDE uses the average from an ensemble of least-squares GUIDE regression trees (called a “GUIDE forest”) to form the pseudo-residuals for variable selection. The main steps are as follows:

1. Fit a least-squares GUIDE forest [22] to the data to obtain a preliminary estimate \(\tilde {p}(x)\) of p(x) for each observed x. (Random forest [23] cannot substitute for GUIDE forest if the data contain missing values.)

2. Beginning with the root node, carry out the following steps on the data in each node, stopping only if the number of observations is below a pre-specified threshold or if all the values of the predictor variables or the Y values are constant:

   (a) For each X variable to be used in fitting an OLR model in the node, temporarily impute its missing values with its node sample mean.

   (b) Fit a simple or multiple linear OLR model to the imputed data in the node. If a simple linear OLR model is desired, fit one to each linear predictor variable in turn, and choose the one with the smallest residual deviance. Let \(\hat {p}(x)\) denote the estimated value of p(x) from the fitted model.

   (c) Revert the values imputed in step (2a) to their original missing state.

   (d) For each ordinal X variable, let \(q_1 \le q_2 \le q_3\) denote its sample quartiles at the node, and define the categorical variable \(V = \sum _{j=1}^3 I(X > q_j)\). If X is a categorical variable, define V = X. Add an extra “missing” category to V if X has missing values.

   (e) Form a contingency table for each X variable using the signs of \(\tilde {p}(x)-\hat {p}(x)\) as rows and the values of V as columns. Find the chi-squared statistic \(\chi ^2_{\nu }\) for the test of independence between rows and columns.

   (f) Let \(G_{\nu}(x)\) denote the distribution function of a chi-squared variable with ν df, and let \(\epsilon = 2 \times 10^{-6}\). Convert each \(\chi ^2_{\nu }\) to its equivalent one-df \(\chi ^2_1\) value as follows:

      i. If \(\epsilon < G_{\nu }(\chi ^2_{\nu }) < 1-\epsilon \), define \(\chi ^2_1 = G_{1}^{-1}(G_{\nu }(\chi ^2_{\nu }))\).

      ii. Otherwise, to avoid dealing with very small or large p-values, use the following dual application of the Wilson-Hilferty approximation [24]. Define

      $$\displaystyle \begin{aligned} W_1 &= \left\{ \sqrt{2\chi^2_{\nu}} - \sqrt{2\nu-1} + 1 \right\}^2/2, \\ W_2 &= \max \left(0, \left[ \frac{7}{9} + \sqrt{\nu} \left\{ \left( \frac{\chi^2_{\nu}}{\nu} \right)^{1/3} - 1 + \frac{2}{9\nu} \right\} \right]^3 \right). \end{aligned} $$

      Approximate the one-df chi-squared value with

      $$\displaystyle \chi^2_1 = \left\{ \begin{array}{ll} W_2 & \mbox{if }\chi^2_{\nu} < \nu + 10 \sqrt{2\nu}, \\ (W_1+W_2)/2 & \mbox{if }\chi^2_{\nu} \geq \nu + 10 \sqrt{2\nu}\mbox{ and } W_2 < \chi^2_{\nu}, \\ W_1 & \mbox{otherwise.} \end{array} \right. $$

   An earlier one-step approximation is used in [7]. Tables 30.2 and 30.3 show the contingency tables and corresponding chi-squared statistics for Species, Intensity, and Diameter at the root node of the tree in Fig. 30.4.

   Table 30.2 Chi-squared test for Species with Wilson-Hilferty \(\chi ^2_1\) value

   Table 30.3 Chi-squared tests for Intensity and Diameter with quartile intervals Q1, Q2, Q3, Q4 and Wilson-Hilferty \(\chi ^2_1\) values

   (g) Let \(X^*\) be the variable with the largest value of \(\chi ^2_1\), and let NA denote the missing value code:

      i. If \(X^*\) is ordinal, let s be a split of the form \(\{X^* = \mbox{NA}\}\), \(\{X^* \le c\} \cup \{X^* = \mbox{NA}\}\), or \(\{X^* \le c\} \cap \{X^* \ne \mbox{NA}\}\).

      ii. If \(X^*\) is categorical, let s be a split of the form \(\{X^* \in \omega\}\), where ω is a proper subset of the values (including NA) of \(X^*\).

   (h) For each split s, apply steps (2a) and (2b) to the data in the left and right subnodes induced by s, and let \(d_L(s)\) and \(d_R(s)\) be their respective residual deviances.

   (i) Select the split s that minimizes \(d_L(s) + d_R(s)\).

3. After splitting stops, prune the tree with the CART cost-complexity method [16] to obtain a nested sequence of subtrees.

4. Use the CART cross-validation method to estimate the prediction deviance of each subtree.

5. Select the smallest subtree whose estimated prediction deviance is within half a standard error of the minimum.
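Step (f) can be sketched in Python as below. It is a hedged illustration rather than GUIDE's code: `chi2_sf`, `wilson_hilferty_1df`, and `one_df_equivalent` are hypothetical names; \(G_\nu\) is computed with a standard recurrence that is valid for integer ν (contingency-table degrees of freedom are integers); and \(G_1^{-1}(u)\) is evaluated as the square of the standard normal quantile at \((1+u)/2\).

```python
import math
from statistics import NormalDist

EPS = 2e-6  # the epsilon of step (f)


def chi2_sf(x, df):
    """P(chi-squared with df degrees of freedom > x) for a positive integer df."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        q, k = math.exp(-half), 2
    else:
        q, k = math.erfc(math.sqrt(half)), 1
    while k < df:
        # Q(k + 2, x) = Q(k, x) + (x/2)^(k/2) exp(-x/2) / Gamma(k/2 + 1)
        q += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return q


def wilson_hilferty_1df(chisq, nu):
    """Step (f)ii: combine the W1 and W2 approximations as in the text."""
    w1 = (math.sqrt(2.0 * chisq) - math.sqrt(2.0 * nu - 1.0) + 1.0) ** 2 / 2.0
    inner = 7.0 / 9.0 + math.sqrt(nu) * ((chisq / nu) ** (1.0 / 3.0) - 1.0 + 2.0 / (9.0 * nu))
    w2 = max(0.0, inner ** 3)
    if chisq < nu + 10.0 * math.sqrt(2.0 * nu):
        return w2
    if w2 < chisq:
        return (w1 + w2) / 2.0
    return w1


def one_df_equivalent(chisq, nu):
    """Step (f): convert a chi-squared statistic on nu df to a one-df value."""
    g = 1.0 - chi2_sf(chisq, nu)  # G_nu(chisq)
    if EPS < g < 1.0 - EPS:
        # Step (f)i: G_1^{-1}(g) is the squared normal quantile at (1 + g) / 2
        z = NormalDist().inv_cdf((1.0 + g) / 2.0)
        return z * z
    return wilson_hilferty_1df(chisq, nu)


# A moderate statistic (exact branch) and an extreme one (approximation branch)
print(one_df_equivalent(12.0, 3), one_df_equivalent(500.0, 10))
```

For ν = 1, both \(W_1\) and \(W_2\) reduce to \(\chi^2_\nu\) itself, which is a quick sanity check of the formulas.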

Figure 30.6 shows the LOTUS tree for the current data. MOB [25] is another algorithm that can construct an LRT, but for simple linear LRT models, it requires the linear predictor to be pre-specified and to be the same in all terminal nodes. Figure 30.7 shows the MOB tree with L as the common linear predictor. Figure 30.8 compares the values of \(\hat {p}(x)\) from a GUIDE forest of 500 trees, model (30.9), and the simple linear GUIDE, LOTUS, and MOB LRT models. Although there are clear differences in the values of \(\hat {p}(x)\) between GUIDE, LOTUS, and MOB, all three appear to agree about equally well with model (30.9) and the GUIDE forest. Figure 30.9 shows the corresponding results where LOTUS fits the multiple linear LRT model \(\mbox{logit}(p) = \beta_0 + \beta_1 D + \beta_2 L\) and GUIDE and MOB fit \(\mbox{logit}(p) = \beta _0 + \beta _1 D + \beta _2 L + \sum _{j=1}^8 \gamma _j U_j\) in each terminal node. (LOTUS does not convert categorical variables to indicator variables to serve as regressors.) The correlations among the \(\hat {p}(x)\) values are much higher than for the simple linear models.

Fig. 30.6 LOTUS simple linear LRT model for P(blowdown). At each split, an observation goes to the left branch if and only if the condition is satisfied. Sample size (in italics), proportion of blowdowns, and name of regressor variable (if any) are printed below nodes. Green and yellow terminal nodes have L and D, respectively, as best linear predictor

Fig. 30.8 Comparison of fitted values \(\hat {p}\) of Cook-Weisberg model (30.9) and GUIDE forest versus simple linear LRT models

Fig. 30.9 Comparison of fitted values \(\hat {p}\) of Cook-Weisberg model (30.9) and GUIDE forest versus multiple linear LRT models

4 Missing Values and Cyclic Variables

The US National Highway Traffic Safety Administration has been evaluating vehicle safety by performing crash tests with dummy occupants since 1972 (ftp://www.nhtsa.dot.gov/ges). We use data from 3310 crash tests where the test dummy is in the driver’s seat to show how GUIDE deals with missing values and cyclic variables. Each test gives the severity of head injury (HIC) sustained by the dummy and the values of about 100 variables describing the vehicle, test environment, and the test dummy. The response variable is Y = 1 if HIC > 1000 (threshold for severe head injury) and Y = 0 otherwise. About half of the predictor variables are ordinal, six are cyclic, and the rest are categorical.

Three features in the data make model building particularly challenging. The first is missing data. Missing values in categorical variables are not problematic, as they can be assigned a “missing” category. Missing values in other variables, however, need to be imputed before application of OLR. This can be extraordinarily difficult if there are many missing values and the missingness patterns are complex [22, 26]. All ordinal and cyclic variables here have missing values. Table 30.4 gives the names and numbers of missing values of some of them (see [27] for the others). For example, IMPANG, the angle between the axis of a vehicle and the axis of another vehicle or barrier, is undefined for a rollover crash test, where there is no barrier and only one vehicle is involved. In such cases, the value of IMPANG is recorded as missing, and imputing it with a number is inappropriate. The situation is worse for variable CARANG, which has 991 missing values. Given that the crash tests are carefully monitored and have been performed for years, it is unlikely that so many values are missing by chance.

For split selection, GUIDE sends all missing values in the selected ordinal or cyclic variable either to the left or to the right subnode, depending on which split gives a smaller sum of residual deviances in the two subnodes. Hence no imputation is carried out in this step. To fit an OLR model to a node, GUIDE imputes missing values in the selected predictor variable with its node mean.
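The rule for missing values at a split can be sketched as follows. To keep the Python example short it makes a simplifying assumption: each subnode is scored by the deviance of an intercept-only logistic model (a constant probability), whereas GUIDE fits an OLR model in each subnode (step (h) in Section 3). The function names and the data are hypothetical.

```python
import math


def binomial_deviance(y):
    """Residual deviance of an intercept-only logistic model for 0/1 responses y."""
    n, k = len(y), sum(y)
    if k == 0 or k == n:
        return 0.0
    p = k / n
    return -2.0 * (k * math.log(p) + (n - k) * math.log(1.0 - p))


def best_missing_direction(x, y, c):
    """For the split {X <= c}, send all missing x (None) to the side that gives
    the smaller total deviance; returns ('left' or 'right', total deviance)."""
    left = [yi for xi, yi in zip(x, y) if xi is not None and xi <= c]
    right = [yi for xi, yi in zip(x, y) if xi is not None and xi > c]
    miss = [yi for xi, yi in zip(x, y) if xi is None]
    dev_if_left = binomial_deviance(left + miss) + binomial_deviance(right)
    dev_if_right = binomial_deviance(left) + binomial_deviance(right + miss)
    if dev_if_left <= dev_if_right:
        return "left", dev_if_left
    return "right", dev_if_right


# Made-up data: the missing cases behave like the large-x cases
x = [1, 2, 3, 10, 11, 12, None, None]
y = [0, 0, 0, 1, 1, 1, 1, 1]
print(best_missing_direction(x, y, c=5))  # ('right', 0.0)
```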

A second challenging feature is the presence of cyclic variables that are angles with periods of 360 degrees. These variables are traditionally transformed to sines and cosines, but splits on one of them at a time are not as meaningful as splits on the angles themselves. The problem is more difficult if the variable has missing values. Should we impute the angles and then compute the sines and cosines of the imputed values, or should we impute the sines and cosines directly? GUIDE avoids imputation entirely by using cyclic variables only to split the nodes. If a cyclic variable is selected, the split takes the form of a sector “X ∈ [θ1, θ2],” where θ1 and θ2 are angles, and missing values are sent to the left or right subnode in the same fashion as for noncyclic variables.
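A sector split on a cyclic variable can be tested as below. This is a minimal Python sketch under stated assumptions: angles are in degrees, the sector [θ1, θ2] runs in the direction of increasing angle and may wrap past 360/0 (for example, [350, 10]), and missing angles follow a flag chosen as for noncyclic variables. The chapter does not describe GUIDE's internal representation of sectors, and the function names are hypothetical.

```python
def in_sector(angle, theta1, theta2):
    """True if angle (degrees) lies in the closed sector from theta1 to theta2,
    taken in the direction of increasing angle. The sector may wrap past 360/0,
    so [350, 10] contains 355 and 5 but not 180 (an assumed convention)."""
    a, t1, t2 = angle % 360.0, theta1 % 360.0, theta2 % 360.0
    if t1 <= t2:
        return t1 <= a <= t2
    return a >= t1 or a <= t2


def goes_left(angle, theta1, theta2, missing_left):
    """Route an observation at a sector split; a missing angle (None)
    goes left if and only if missing_left is True."""
    if angle is None:
        return missing_left
    return in_sector(angle, theta1, theta2)


# The node-6 split in the crash-test example uses the sector [284, 286]
print(goes_left(285.0, 284, 286, missing_left=False))  # True
```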

The third challenging feature is that, apparently by design, high-speed crash tests are more often carried out on deformable barriers and low-speed tests more often on rigid barriers. This is evident from the boxplots of CLSSPD by BARRIG in Fig. 30.10: half of the tests with deformable barriers have closing speeds above 60 km/h, but fewer than one quarter of those with rigid barriers do. Presumably, crashes into rigid barriers are not performed at high speeds because the outcomes are predictable, but this confounds the effects of CLSSPD and BARRIG in an OLR model.

We say that X is an “s” variable if it can be used to split the nodes and an “f” variable if it can be used to fit OLR models in the nodes. To limit the amount of imputation in this example, we restrict ordinal variables with more than 20 percent missing values to serve as s variables only. Cyclic and categorical variables are also restricted to splitting nodes.
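The role restriction just described amounts to a simple rule, sketched here in Python. The function name, the string labels for variable kinds, and the treatment of exactly 20 percent missing (still allowed to fit, since the text says "more than 20 percent") are illustrative assumptions; this is not GUIDE's input-file syntax.

```python
def allowed_roles(kind, missing_fraction, max_missing_for_fit=0.20):
    """Roles for a predictor under the restriction described in the text:
    's' = may split nodes, 'f' = may be used to fit node OLR models."""
    if kind in ("cyclic", "categorical"):
        return {"s"}
    if kind == "ordinal":
        return {"s"} if missing_fraction > max_missing_for_fit else {"s", "f"}
    raise ValueError(f"unknown variable kind: {kind!r}")


print(sorted(allowed_roles("ordinal", 0.05)), sorted(allowed_roles("ordinal", 0.30)))
# ['f', 's'] ['s']
```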

Figure 30.11 shows the LRT where a simple linear OLR model is fitted in each node. The root node is split on COLMEC, the steering wheel collapse mechanism. Observations with COLMEC equal to BWU (behind wheel unit), EMB (embedded ball), EXA (extruded absorber), NON (none), or OTH (other) go to node 2. Otherwise, if COLMEC is CON (convoluted tube), CYL (cylindrical mesh tube), NAP (not applicable), UNK (unknown), or missing, observations go to node 3. At node 2, observations go to node 4 if BX2 ≤ 3496.5 or missing (the asterisk beside the inequality sign in the figure indicates that missing values go to the left node). At node 3, observations go to node 6 if BARSHP is LCB (load cell barrier), POL (pole), US2, or US3 (different barrier types). Node 6 is split on impact angle IMPANG, where 0 degrees indicates a head-on impact. If an observation has IMPANG between 284 and 286 degrees inclusive (i.e., driver side), it goes to node 12. The 2-degree range may seem narrow, but there are 67 observations in the node, suggesting that tests at these angles were conducted by design. Below each terminal node are the sample size (in italics), the proportion of Y = 1, and the selected OLR predictor variable, with the sign of its estimated coefficient.

Fig. 30.11 GUIDE piecewise simple linear LRT for crash-test data. At each split, an observation goes to the left branch if and only if the condition is satisfied. The symbol “≤∗” stands for “≤ or missing.” Set S1 = {BWU, EMB, EXA, NON, OTH}. Set S2 = {LCB, POL, US2, US3}. Sample size (in italics), proportion of cases with Y = 1, and sign and name of regressor variable printed below nodes. Terminal nodes with proportions of Y = 1 above and below value of 0.08 at root node are colored yellow and green, respectively
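The first two levels of splits described above can be written as a small routing function. This Python sketch stops at nodes 4 to 7, because the text does not spell out the deeper splits fully (for instance, where a missing IMPANG goes at node 6). The function name is hypothetical, and sending a missing BARSHP to node 7 is inferred from the split form {X ∈ ω} in step (g)ii, since NA is not listed in S2.

```python
S1 = {"BWU", "EMB", "EXA", "NON", "OTH"}  # COLMEC values sent to node 2
S2 = {"LCB", "POL", "US2", "US3"}         # BARSHP values sent to node 6


def route_two_levels(obs):
    """Return node 4, 5, 6 or 7 for a test described by obs, a dict of
    variable name -> value in which None (or an absent key) means missing."""
    if obs.get("COLMEC") in S1:  # a missing COLMEC is not in S1, so it goes to node 3
        bx2 = obs.get("BX2")
        # Node 2 uses '<=*', i.e. '<= or missing', so a missing BX2 goes left
        return 4 if bx2 is None or bx2 <= 3496.5 else 5
    # Node 3: a missing BARSHP is not in S2, so it goes right (inferred)
    return 6 if obs.get("BARSHP") in S2 else 7


print(route_two_levels({"COLMEC": "EMB", "BX2": None}))     # 4
print(route_two_levels({"COLMEC": None, "BARSHP": "POL"}))  # 6
```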

The tree shows that nodes 5, 9, and 34 have the highest proportions of severe head injury, at 34, 39, and 44%, respectively. Vehicles in these nodes have certain steering wheel collapse mechanisms, and they tend to be longer (BX2 > 3496.5 or BX5 > 82.5) or heavy (VEHTWT > 1368.5) and narrow (VEHWID ≤ 1846). Figure 30.12 shows the fitted logistic regression curves in the terminal nodes. The proportion of tests with severe head injury is indicated by a dotted line in each plot.

Fig. 30.12 Fitted logistic regression curves in terminal nodes of Fig. 30.11; horizontal dotted lines indicate proportion of severe injury in the node

5 Conclusion

Logistic regression is a technique for estimating the probability of an event in terms of the values of one or more predictor variables. If there are missing values among the predictor variables, they need to be imputed first. Otherwise, the observations or variables containing the missing values would need to be deleted. Neither solution is attractive. In practice, finding a logistic regression model with good prediction accuracy is seldom automatic; it usually requires trial-and-error selection of variables, choice of transformations, and estimation of the accuracy of numerous models. Even when a model with good estimated accuracy is found, interpretation of the regression coefficients is not straightforward if there are two or more predictor variables.

A logistic regression tree is a piecewise logistic regression model, with the pieces obtained by recursively partitioning the space of predictor variables. Consequently, if there is no over-fitting, it may be expected to possess higher prediction accuracy than a one-piece logistic regression model. Recursive partitioning has two advantages over a search of all partitions: it is computationally efficient and it allows the partitions to be displayed as a decision tree. At a minimum, a logistic regression tree can serve as an informal goodness of fit test of whether a one-piece logistic model is adequate for the whole sample. A nontrivial pruned tree would indicate that a one-piece logistic model has lower prediction accuracy, possibly due to unaccounted interactions or nonlinearities among the variables. Ideally, an effective tree-growing and pruning algorithm would automatically account for the overlooked effects, making it unnecessary to specify interaction and higher-order terms. It would also allow the models in the terminal nodes to be as simple as desired (such as fitting a single linear predictor in each node).

Tree pruning is very important for prediction accuracy. Many methods adopt the AIC-type approach of selecting the tree that minimizes the sum of the residual deviance and a multiple, K, of the number of terminal nodes. Because no single value of K works for all datasets [16], the main advantage of this approach is computational speed. Our experience indicates that it is inferior to a pruning approach that uses cross-validation to estimate prediction accuracy.
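The final selection in the GUIDE procedure of Section 3 keeps the smallest subtree whose cross-validated deviance is within half a standard error of the minimum. The Python sketch below shows only that selection rule: the cross-validation results are made-up numbers, `select_subtree` is a hypothetical name, and using the standard error of the minimizing subtree follows the usual CART convention, which is an assumption here. Cost-complexity pruning and the cross-validation itself are not shown.

```python
def select_subtree(cv_results, se_multiplier=0.5):
    """cv_results: (number of terminal nodes, CV deviance, standard error) for
    each pruned subtree. Returns the smallest subtree whose CV deviance is within
    se_multiplier standard errors (of the minimizing subtree) of the minimum."""
    _, best_dev, best_se = min(cv_results, key=lambda t: t[1])
    threshold = best_dev + se_multiplier * best_se
    eligible = [t for t in cv_results if t[1] <= threshold]
    return min(eligible, key=lambda t: t[0])


# Made-up cross-validation results for four nested subtrees
example = [(1, 100.0, 2.0), (3, 90.0, 2.0), (5, 88.0, 3.0), (9, 87.5, 2.5)]
print(select_subtree(example))                     # (5, 88.0, 3.0)
print(select_subtree(example, se_multiplier=1.0))  # (3, 90.0, 2.0)
```

Setting `se_multiplier=1.0` gives the familiar CART one-standard-error rule, and `se_multiplier=0.0` simply picks the subtree with the minimum estimated deviance.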

Although a binary decision tree is intuitive to interpret, a poor split selection method can yield misleading conclusions. A common cause is selection bias. The greedy approach used by CART and many other algorithms is known to prefer variables that permit more splits of the data. Consequently, it is hard to know whether a variable is chosen for its predictive power or because it has more ways to partition the data. LOTUS and GUIDE avoid the bias by selecting variables with chi-squared tests. At the time of completion of this article, GUIDE is the only tree algorithm that can deal with cyclic variables and with two or more missing value codes [22]. The GUIDE software and manual may be obtained from www.stat.wisc.edu/~loh/guide.html.

References

[1] Weisberg, S.: Applied Linear Regression, 4th edn. Wiley, Hoboken, NJ (2014)

[2] Cook, R.D., Weisberg, S.: Partial one-dimensional regression models. Am. Stat. 58, 110–116 (2004)

[3] Agresti, A.: An Introduction to Categorical Data Analysis. Wiley, New York (1996)

[4] Chambers, J.M., Hastie, T.J.: Statistical Models in S. Wadsworth, Pacific Grove (1992)

[5] Loh, W.-Y.: Classification and regression trees. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 1, 14–23 (2011). https://doi.org/10.1002/widm.8

[6] Loh, W.-Y.: Regression trees with unbiased variable selection and interaction detection. Statistica Sinica 12, 361–386 (2002)

[7] Loh, W.-Y.: Improving the precision of classification trees. Ann. Appl. Stat. 3, 1710–1737 (2009)

[8] Loh, W.-Y., Vanichsetakul, N.: Tree-structured classification via generalized discriminant analysis (with discussion). J. Am. Stat. Assoc. 83, 715–728 (1988)

[9] Chaudhuri, P., Huang, M.-C., Loh, W.-Y., Yao, R.: Piecewise-polynomial regression trees. Statistica Sinica 4, 143–167 (1994)

[10] Loh, W.-Y., Shih, Y.-S.: Split selection methods for classification trees. Statistica Sinica 7, 815–840 (1997)

[11] Kim, H., Loh, W.-Y.: Classification trees with unbiased multiway splits. J. Am. Stat. Assoc. 96, 589–604 (2001)

[12] Kim, H., Loh, W.-Y.: Classification trees with bivariate linear discriminant node models. J. Comput. Graph. Stat. 12, 512–530 (2003)

[13] Chan, K.-Y., Loh, W.-Y.: LOTUS: An algorithm for building accurate and comprehensible logistic regression trees. J. Comput. Graph. Stat. 13, 826–852 (2004)

[14] Loh, W.-Y.: Fifty years of classification and regression trees (with discussion). Int. Stat. Rev. 82, 329–370 (2014)

[15] Morgan, J.N., Sonquist, J.A.: Problems in the analysis of survey data, and a proposal. J. Am. Stat. Assoc. 58, 415–434 (1963)

[16] Breiman, L., Friedman, J.H., Olshen, R.A., Stone, C.J.: Classification and Regression Trees. Wadsworth, Belmont, CA, USA (1984)

[17] Quinlan, J.R.: Learning with continuous classes. In: Proceedings of AI’92 Australian National Conference on Artificial Intelligence, pp. 343–348. World Scientific, Singapore (1992)

[18] Doyle, P.: The use of Automatic Interaction Detector and similar search procedures. Oper. Res. Q. 24, 465–467 (1973)

[19] Chaudhuri, P., Lo, W.-D., Loh, W.-Y., Yang, C.-C.: Generalized regression trees. Statistica Sinica 5, 641–666 (1995)

[20] Cochran, W.G.: Some methods of strengthening the common χ2 tests. Biometrics 10, 417–451 (1954)

[21] Armitage, P.: Tests for linear trends in proportions and frequencies. Biometrics 11, 375–386 (1955)

[22] Loh, W.-Y., Eltinge, J., Cho, M.J., Li, Y.: Classification and regression trees and forests for incomplete data from sample surveys. Statistica Sinica 29, 431–453 (2019)

[23] Breiman, L.: Random forests. Machine Learning 45(1), 5–32 (2001)

[24] Wilson, E.B., Hilferty, M.M.: The distribution of chi-square. Proc. Natl. Acad. Sci. USA 17, 684–688 (1931)

[25] Zeileis, A., Hothorn, T., Hornik, K.: Model-based recursive partitioning. J. Comput. Graph. Stat. 17, 492–514 (2008)

[26] Loh, W.-Y., Zhang, Q., Zhang, W., Zhou, P.: Missing data, imputation and regression trees. Statistica Sinica 30, 1697–1722 (2020)

[27] NHTSA: Test Reference Guide Version 5, https://one.nhtsa.gov/Research/Databases-and-Software/NHTSA-Test-Reference-Guides, 1 (2014)

Acknowledgements

Part of this work was done in the summer of 2019 during the author’s visit to the National Chung Cheng and National Tsing Hua Universities, Taiwan, under the auspices of the National Center for Theoretical Sciences.


Copyright information

© 2023 Springer-Verlag London Ltd., part of Springer Nature

About this chapter

Cite this chapter

Loh, WY. (2023). Logistic Regression Tree Analysis. In: Pham, H. (eds) Springer Handbook of Engineering Statistics. Springer Handbooks. Springer, London. https://doi.org/10.1007/978-1-4471-7503-2_30


DOI: https://doi.org/10.1007/978-1-4471-7503-2_30


Publisher Name: Springer, London

Print ISBN: 978-1-4471-7502-5

Online ISBN: 978-1-4471-7503-2

eBook Packages: Mathematics and Statistics (R0)