Abstract

This chapter deals with the perception of compliance of objects with rigid surfaces when vision is present. Compliance (or its inverse, stiffness) is one of a number of properties that can be called “higher-order,” in the sense that it is computed as a combination of components that are physically independent. For objects having rigid surfaces, the components of compliance are position and force. We consider how each component is conveyed by different sensory modalities used for the interaction, vision and touch. This analysis highlights in particular that vision predominantly contributes to the sensing of position, whereas haptics (active touch) contributes to force sensing. We will further discuss integration of information across the senses; in particular, when such integration occurs in relation to the combination of the components of compliance. Finally, we describe applications of research on multi-modal compliance perception.

1 Analysis of Softness as a Higher-Order Property

Traditional psychophysical methods are so-called because some physical dimension is carefully manipulated while people make judgments of its psychological impact (see Chap. 1). Typical psychophysical measures are: the detection threshold (minimum stimulus intensity required for conscious perception), the discrimination threshold or Just Noticeable Difference (JND; minimum difference in intensity required for discrimination), and parameters of the function relating perceived intensity to physical intensity across a range of values on the target dimension. Early attempts to measure these psychophysical variables tended to focus on univariate quantities. Thus, for example, one can find values for the threshold intensity of physical dimensions such as length, brightness, or weight, as well as other quantitative dimensions with less obvious physical interpretations such as salt dilution or voltage of a current applied to the skin (see Woodworth and Schlosberg 1960). One can also find, from so-called magnitude estimation tasks, that perceived stimulus magnitude tends to be related to physical signal intensity in the form of a logarithmic function (Fechner 1860) or a power function, the exponent of which provides a summary measure of the perceptual transduction output for a given dimension (Stevens 1975).

The present chapter focuses on stiffness, the mathematical inverse of compliance. Stiffness is inherently a higher-order property, in the sense that it is computed from the relation between two underlying quantities. By Hooke’s Law, stiffness (denoted k) is the relation between displacement (d, change in position or length) and the force that produces that displacement (F), as specified by the equation, \({F=kd}\). Given that stiffness is defined by the ratio of two physical variables, force and position, a psychophysical characterisation means examining first how the individual variables and the ratio are perceived. This chapter is concerned with an additional source of complexity, namely, the contributions of vision and haptic perception to the perception of stiffness, as illustrated in Fig. 2.1.
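As a minimal sketch of the definition above, the following Python snippet computes stiffness and its inverse, compliance, from a single force-displacement pair. The function names and the example values (24 N, 2 mm) are illustrative assumptions and are not taken from any study cited in this chapter.

```python
def stiffness(force, displacement):
    """Hooke's Law rearranged: k = F / d."""
    if displacement == 0:
        raise ValueError("stiffness is undefined for zero displacement")
    return force / displacement


def compliance(force, displacement):
    """Compliance is the inverse of stiffness: c = d / F."""
    if force == 0:
        raise ValueError("compliance is undefined for zero force")
    return displacement / force


# Hypothetical example: 24 N of force produces 2 mm of displacement.
k = stiffness(24.0, 2.0)    # stiffness in N/mm
c = compliance(24.0, 2.0)   # compliance in mm/N
print(k, c)
```

Because the quantity is a ratio, an error in either perceived force or perceived displacement carries over into the stiffness estimate, which is why the component percepts are examined first.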

1.1 The Components of Stiffness Through Vision and Haptics

In this section we briefly consider how displacement and force are perceived through vision and haptic perception in isolation. There are, then, four cases to consider, resulting from the combination of two properties and two modalities.

The first case is the perception of displacement by the visual modality. Some experiments have shown that visual judgments of length in near space are proportional to physical length, as indicated by power-function exponents of 1.0 (Seizova-Cajic 1998; Teghtsoonian and Teghtsoonian 1965). Linear relations, however, can still show bias; for example, the slope relating judged length to physical length was shown in one experiment to be on the order of 0.9, introducing systematic under-estimation bias (Keyson 2000).

Next, consider the perception of displacement by touch. For haptic judgments of length of a line, power-function exponents extracted from magnitude estimation vary between 0.8 and 1.2, suggesting greater distortion than is generally found for vision. The exponent depends on the stimulus range (a usual phenomenon in scaling experiments), orientation of the stimulus (Lanca and Bryant 1995), and circumstances of exploration. For example, tracing the length of the line with the fingertip leads to relatively low error (Stanley 1966), but the length of a stimulus felt through a pinch grasp is overestimated (Seizova-Cajic 1998; Teghtsoonian and Teghtsoonian 1965, 1970).

Force, the second component of stiffness, is sensed haptically through cutaneous deformation and mechanoreceptors in muscles, tendons, and joints. Characterizations of haptic force perception have been widely variable (see Jones 1986, for review), depending on the range of forces tested and the experimental procedures. For example, an exponent has been reported of 1.7 for hand-gripping forces (Stevens and Mack 1959), 0.8 for lifted weights (Curtis et al. 1968—in which case force is derived from the relation to mass and gravitational acceleration), and 1.0 for tangential forces applied to the fingertip (Paré et al. 2002).
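To make these exponents concrete, the sketch below evaluates a power function of the form perceived magnitude = c · intensity^n for the three values quoted above. The scaling constant c = 1 and the choice to compare a doubling of physical force are assumptions made only for illustration.

```python
def power_law(intensity, exponent, scale=1.0):
    """Stevens' power function: perceived magnitude = scale * intensity ** exponent."""
    return scale * intensity ** exponent


# Exponents reported in the text for different force tasks.
exponents = {
    "hand grip (Stevens and Mack 1959)": 1.7,
    "lifted weights (Curtis et al. 1968)": 0.8,
    "tangential fingertip force (Paré et al. 2002)": 1.0,
}

for task, n in exponents.items():
    # Ratio of perceived magnitudes when the physical force doubles.
    growth = power_law(2.0, n) / power_law(1.0, n)
    print(f"{task}: doubling the force multiplies perceived force by {growth:.2f}")
```

An exponent above 1 means that perceived force grows faster than physical force, an exponent below 1 means that it grows more slowly, and an exponent of 1 means proportional growth.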

Visual cues to force are minimal and essentially heuristic. Surface deformation patterns offer cues to compressive force (Wang et al. 2001), although generally without metric scaling. An interesting approach was offered by Sun et al. (2008), who analysed camera images of the fingernail and nail bed, then used changes in colour to predict the applied force. Beyond these direct cues, people may exhibit what Bayesian modeling refers to as “priors”—expectations based on past experience (see Chap. 5 for a discussion about Bayesian models). Visually based priors come into play because our experiences of force generally occur in the context of visual experience. It has been suggested that the sensation of force through mechanical interaction and the corresponding displacements perceived by vision become associated in long-term memory, and thus kinematic features in a visual percept can be matched to stored haptic experiences to infer force (White 2012). Michaels and De Vries (1998) asked their participants to judge the force exerted by a videotaped puller who gripped a handle and made pulls to specified target forces without moving their feet. Their results showed high correlations between visual judgments and target forces.

1.2 Multi-Modal Perception of Stiffness Components

Even if we were to know, for each modality, the psychophysical functions relating perceived force and displacement to their physical values, we would not necessarily be able to quantitatively predict the perceived stiffness. For one thing, internalized quantities may not behave like ratio scales, so the simplicity of Hooke’s Law is questionable here. More importantly, the presence of two modalities tends to lead to perceptual outcomes that differ from the simple effects of either component in isolation. Here we consider interactions between vision and haptics in the estimate of the component properties of stiffness, displacement and force.

Consider first the multisensory integration of spatial cues in displacement perception. Relative displacement might be computed from comparing successive representations of the effector location. In relevant work, it has been found that when a person’s hand is localized in space using proprioceptive and visual information, the two types of cues are averaged in a weighted linear manner, so that the reciprocal of the bimodal variance is equal to the sum of the reciprocals of the two unimodal variances (van Beers et al. 1999). Relative displacement can also be computed by comparing simultaneous positions of effectors. In a classic study, Ernst and Banks (2002) demonstrated that the integration of visual and haptic information concerning distance between the fingers follows a Maximum-Likelihood Estimation model (MLE). The perceptual outcome was a weighted linear combination of estimates from each modality, where the weights were inversely related to the estimates’ variability. That is, the more reliable estimates received more weight.
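The weighting rule described above can be sketched in a few lines of Python. The function name, argument names, and numerical values are hypothetical; the sketch assumes independent, unbiased noise in each modality, which is the usual assumption behind the MLE model.

```python
def mle_integrate(est_visual, var_visual, est_haptic, var_haptic):
    """Reliability-weighted average of two unimodal estimates.

    Each weight is proportional to the inverse variance (reliability)
    of its modality, and the weights sum to 1.
    """
    rel_visual = 1.0 / var_visual
    rel_haptic = 1.0 / var_haptic
    w_visual = rel_visual / (rel_visual + rel_haptic)
    w_haptic = 1.0 - w_visual
    combined = w_visual * est_visual + w_haptic * est_haptic
    # Reciprocal of the bimodal variance = sum of the unimodal reciprocals.
    combined_var = 1.0 / (rel_visual + rel_haptic)
    return combined, combined_var, w_visual, w_haptic


# Hypothetical finger-separation estimates in mm: vision is the more reliable cue.
estimate, variance, w_v, w_h = mle_integrate(50.0, 1.0, 56.0, 4.0)
print(estimate, variance, w_v, w_h)
```

In this example the combined estimate lies closer to the visual value, and the combined variance is smaller than either unimodal variance, which is the reliability benefit that the model predicts.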

Another way to compute displacement is to compare the position of a surface indented by an effector to a surrounding surface. One of our studies found that visual and haptic cues combine in a linear fashion in the perception of surface displacement (Wu et al. 2008). Participants were asked to indent a probe into a soft surface until it “bottomed out” against a barrier; they then attempted to estimate the extent of the indentation. Visual cues were present from the indentation per se and also from deformation of a grid pattern painted on the surface. Haptic feedback was experimentally manipulated by varying stiffness of an underlying membrane. We found that resisting forces arising from surface indentation heightened the perception of deformation. For a constant physical indentation, higher force led to greater perceived surface indentation. A regression analysis found that the data could be described by a weighted linear combination of visual and haptic cues, although the optimality of the weighting was not tested.

We next turn from multi-modal perception of displacement to multi-modal perception of force. The haptic perception of force direction, for example, can be significantly enhanced with congruent visual cues. Barbagli et al. (2006) found that the threshold of force-direction discrimination was reduced from \(25.6^{\circ }\) in the haptic-only condition to \(18.4^{\circ }\) when congruent visual cues were introduced, but increased to \(31.9^{\circ }\) when the haptic and visual inputs were incongruent. The size/weight illusion (large objects are perceived as lighter than smaller objects of equal weight) might be the most extensively studied phenomenon with respect to visual influence on the perception of force magnitude. Ellis and Lederman (1993) showed that visual cues alone could yield the illusion, but with a lower magnitude than that observed in haptic-only or bimodal conditions. Valdez and Amazeen (2008) suggested that vision could augment the basic haptic illusion by virtue of visual facilitation of size perception.

Multi-modal interactions occur for higher-order dimensions as well as their unitary components. We have argued that stiffness perception cannot be directly predicted from force and displacement in a single modality, and the same is true for multi-modal stiffness perception, considered next.

2 Visual-Haptic Stiffness Perception

In this section we turn from multi-modal interactions affecting the perception of the components of stiffness, to the higher-order percept itself, which requires combining the components of force and position.

2.1 Integration of Visual and Haptic Cues in Stiffness Perception

Multi-modal contributions to stiffness perception were examined by Varadharajan et al. (2008). Using a high-fidelity haptic force-feedback device along with a visual display, they created a simulation of 3D virtual springs that can buckle and tilt in any direction as force is applied. In a stiffness magnitude-estimation experiment, participants freely explored a set of 12 randomly ordered springs with rigidity ranging from 12.0 to 48.0 N/mm. After having interacted with a spring, participants rated perceived stiffness using any number, with the rule that higher numbers meant that the spring felt stiffer. A monotonic relationship between the judged and rendered stiffness was evident. More importantly for present purposes, participants were tested in haptic-only and haptic-visual conditions, and no significant contribution of vision to the perception of stiffness magnitude was found.

Differential threshold for discriminating spring stiffness was reduced with visual feedback (adapted from Varadharajan et al. 2008)

In contrast, the same authors found that stiffness discrimination performance was improved by adding visual rendering of the interaction. When vision was excluded in the haptic-only condition, the JND increased by over 20 % relative to the haptic-visual condition, as shown in Fig. 2.2. Thus while vision failed to change the relation between perceived and physical stiffness magnitude, it did provide greater sensitivity in the discrimination of stiffness. Presumably this arose from the kinematics of interaction and changes in size and shape of the spring, although the quantitative weight given to visual versus haptic cues cannot be determined from this study.

The MLE model suggests that the relative weight given to each modality in a multi-modal situation should depend on the relative reliability of the estimates (i.e. inverse variance). In this way, there will always be an advantage for integrated estimates in terms of reliability. This prediction does not appear to be confirmed in a study by Srinivasan et al. (1996). They investigated the impact of visual information over kinaesthetic information in a comparison of two springs rendered in a virtual environment with a 3 DOF haptic interface. The relationship between visual and haptic stiffness ranged from consistent to reversed (i.e. visual deformation for a hard spring at a particular force level was depicted in conjunction with a haptic rendering of a soft spring at that force level). They found that visual cues essentially dominated kinaesthetic cues. Another effect of vision may be to mitigate biases induced under haptic perception. Along these lines, Wu et al. (1999) found that vision reduced a haptic bias to feel more distant objects as softer.

2.2 Models of Visual-Haptic Integration in Stiffness Perception

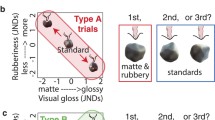

Higher-order properties require multiple component properties to be combined. In the case of stiffness, a ratio is computed between force and displacement. Multi-modal inputs add to the processing burden by imposing the need to integrate information from the multiple modalities. This raises an interesting question: When does cue combination occur in relation to integration, or correspondingly, what is integrated/combined? Are individual unidimensional properties (force, displacement) estimated by each modality, integrated across modalities, and then combined? Or does each modality independently combine the components into a higher-order property (stiffness), followed by integration of the multiple estimates of the higher-order property that ensue? The models are illustrated in Fig. 2.3.

Two processing models have been proposed to explain the visual-haptic integration in stiffness perception (Kuschel et al. 2010). Model (a) suggests that individual properties (i.e., force and deformation) are estimated multimodally and then used in the estimation of stiffness. In contrast, Model (b) suggests cross-modal integration follows the process of cue combination within each modality. That is, stiffness is estimated from unimodal information obtained through vision or touch, followed by a process of cross-modal integration.

This question was addressed by Kuschel et al. (2010) using a visual-haptic stiffness display. Participants pinched a virtual spring between two fingers, each attached to a robot, while viewing the consequences in the form of a deformable virtual cube. As the thumb moved toward the index finger, the robots produced resisting forces depending on the spring model, and the cube’s shape was visibly deformed by two spheres representing the squeezing digits. Thus in the normal active mode, sufficient visual and haptic cues were provided to participants for estimating force and deformation. Visually, deformation could be judged from the distance between spheres, and force from the cube’s curvature. By touch, finger displacement could be felt through kinaesthetic sensing, and force through both cutaneous and kinaesthetic sensing.

The test of which operation occurs first, combination or integration, was conducted by implementing a reduced-cue condition that eliminated the weaker of the two sources for each component property—the visual force cue and the haptic displacement cue. To eliminate visual force, the curvature deformation was eliminated, and the cube only grew thinner as it was indented. To eliminate haptic displacement, the fingers were held apart, and a force from the robot, representing resistance from the spring, was pressed into the passive thumb. Thus, in the reduced-cue condition, the subject saw one side of the cube moving in and out along a sinusoidal trajectory, while feeling corresponding forces on the fixed thumb.

The reasoning behind such a test is as follows: If combination within each modality into a higher-order property precedes integration, the reduced-cue condition should essentially preclude stiffness from being perceived. That is because both displacement and force are needed to compute the within-modality stiffness estimate; lacking even the weaker cue, the task cannot be done: In the reduced-cue condition, visual cues are available for deformation but not for force, while haptic feedback provides only information of force but no finger displacement. In contrast, if integration precedes combination, the reduced-cue condition should have little negative impact on performance. The weaker cue of each modality can be discarded and the stronger ones (visual displacement and haptic force) can be combined to produce a stiffness estimate. Essentially, there is no integration in this case, because each component property is computed by a single modality, using its stronger cue.
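The logic of this test can be illustrated with a toy Python sketch of the two processing orders. Everything here is a simplifying assumption: cues are single numbers, an eliminated cue is represented by `None`, and cross-modal integration is an unweighted mean rather than a reliability-weighted one. The function names are hypothetical and do not come from Kuschel et al. (2010).

```python
def mean_of_available(*cues):
    """Unweighted mean of the cues that are present (None marks an eliminated cue)."""
    present = [c for c in cues if c is not None]
    return sum(present) / len(present) if present else None


def integrate_then_combine(vis_force, vis_disp, hap_force, hap_disp):
    """Model (a): integrate each component across modalities, then form the ratio."""
    force = mean_of_available(vis_force, hap_force)
    disp = mean_of_available(vis_disp, hap_disp)
    if force is None or disp is None:
        return None
    return force / disp


def combine_then_integrate(vis_force, vis_disp, hap_force, hap_disp):
    """Model (b): form a stiffness ratio within each modality, then integrate."""
    def within_modality(force, disp):
        if force is None or disp is None:
            return None  # a modality missing either component yields no estimate
        return force / disp

    return mean_of_available(
        within_modality(vis_force, vis_disp),
        within_modality(hap_force, hap_disp),
    )


# Full-cue condition: both modalities provide force and displacement.
full = (4.0, 2.0, 4.0, 2.0)
# Reduced-cue condition: no visual force cue, no haptic displacement cue.
reduced = (None, 2.0, 4.0, None)

print(integrate_then_combine(*full), combine_then_integrate(*full))
print(integrate_then_combine(*reduced), combine_then_integrate(*reduced))
```

With full cues both orders return the same value. With reduced cues, the integrate-first order still returns an estimate from visual displacement and haptic force, whereas the combine-first order returns nothing, which corresponds to the predicted loss of performance.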

The results of the study supported combination before integration: When the JND was computed from a standard psychophysical task, the value was 0.29 in the active condition and 0.83 in the reduced-cue condition, nearly three times as large (an increase of roughly 190 %). Thus it appears that each modality combines cues to arrive at an estimate of stiffness, and an integration process transforms those estimates into a multi-modal value.

Given that unimodal stiffness estimates are integrated, a natural question to follow is: Does the integration process follow the MLE rule? To answer this question, Kuschel et al. (2010) distorted the visual display in relation to the haptic, so that the relative ratio of visual:haptic displacement was 2:1, 1:1, or 0.5:1. Under the MLE rule, the perceived estimate should move toward the visual value. For example, if the visual display is seen to move twice as much for a given haptic value, the perceived stiffness should become less. These patterns were observed and were quantitatively consistent with the model.
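The direction of the predicted shift can be sketched as follows. The sketch assumes that, for a given force, the visually specified stiffness scales inversely with the displayed displacement, and it uses a hypothetical visual weight of 0.5; neither the weight nor the unit haptic stiffness is a value reported by Kuschel et al. (2010).

```python
def predicted_stiffness(k_haptic, visual_to_haptic_ratio, w_visual):
    """Weighted linear combination of visual and haptic stiffness estimates.

    If the display shows `visual_to_haptic_ratio` times the haptic displacement
    for the same force, the visually specified stiffness is k_haptic / ratio.
    """
    k_visual = k_haptic / visual_to_haptic_ratio
    return w_visual * k_visual + (1.0 - w_visual) * k_haptic


# The three distortion levels described in the text, with an assumed weight.
for ratio in (2.0, 1.0, 0.5):
    print(ratio, predicted_stiffness(1.0, ratio, w_visual=0.5))
```

Under these assumptions a 2:1 display lowers the predicted stiffness below the haptic value, a 1:1 display leaves it unchanged, and a 0.5:1 display raises it.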

Another prediction of the MLE model, however, failed. The prediction was that the standard deviation for every visual-haptic condition should be less than that of the haptic alone, and this was violated for the 2:1 visual:haptic distortion. Quantitative analysis of the data showed that the weights observed in the no-distortion condition, which were optimal only for that condition, did not change even when the visual input was distorted. Drewing et al. (2009) reported a similar phenomenon. When estimating the compliance of soft rubber specimens under a visual-haptic condition, participants’ judgments were shifted halfway from haptic-only estimates towards vision-only estimates. However, the reliability of judgments, as measured by the standard deviations of individuals’ estimates, was not improved by the addition of visual information. Further evidence for non-optimal integration of visual and haptic softness was found by Cellini et al. (2013). These studies collectively suggest that visual and haptic softness cues may not be integrated optimally, particularly when the two inputs are not in congruence.
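One way to see how fixed weights can violate the variance prediction is the following sketch. It assumes independent noise in the two modalities and, purely for illustration, that distortion makes the visual estimate noisier; the variances are invented numbers and not data from any of the studies cited above.

```python
def optimal_visual_weight(var_visual, var_haptic):
    """MLE weight for vision: its reliability divided by the summed reliabilities."""
    rel_visual = 1.0 / var_visual
    rel_haptic = 1.0 / var_haptic
    return rel_visual / (rel_visual + rel_haptic)


def bimodal_variance(w_visual, var_visual, var_haptic):
    """Variance of a weighted sum of two independent estimates."""
    w_haptic = 1.0 - w_visual
    return w_visual ** 2 * var_visual + w_haptic ** 2 * var_haptic


var_haptic = 4.0

# Hypothetical undistorted condition: equal reliabilities, so the optimal weight is 0.5.
w_fixed = optimal_visual_weight(4.0, var_haptic)
print(bimodal_variance(w_fixed, 4.0, var_haptic))   # below the haptic variance

# Hypothetical distorted condition: visual variance rises, weights stay fixed.
print(bimodal_variance(w_fixed, 16.0, var_haptic))  # now above the haptic variance

# Re-optimised weights would keep the bimodal variance below the haptic variance.
w_new = optimal_visual_weight(16.0, var_haptic)
print(bimodal_variance(w_new, 16.0, var_haptic))
```

The bimodal estimate is guaranteed to be at least as reliable as the better single modality only when the weights track the current reliabilities; weights carried over from another condition offer no such guarantee.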

3 Applications of Research on Visual-Haptic Stiffness Perception

A practical problem, particularly in medicine, is how to help people better perceive stiffness through effective augmentation of visual and haptic cues. For example, when performing ultrasound examinations for breast cancer, radiologists compress the target area with the ultrasound probe, observe the ultrasound video, and feel the resisting force to detect possible tumours as changes in tissue stiffness. Research has shown that the human ability to distinguish stiffness is limited. Jones and Hunter (1990) reported an average JND of 23 % for participants haptically comparing the stiffness of simulated springs using a contralateral limb matching procedure. Tan et al. (1995) found a low JND of 8 % for compliance discrimination in a fixed-displacement condition and a significantly higher JND (22 %) when the displacement was varied across the stimuli. For visual discrimination of stiffness, Wu et al. (2012) presented to their participants simulated ultrasound with different levels of speckle noise and structural regularity, and reported JNDs ranging from 12 % to 17 %. In clinical practice, such perceptual limits are reflected in the limited sensitivity of palpation screening. The reported detection rate is only 39–59 % for breast cancer examination (Shen and Zelen 2001).
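To give a feel for what these JND values imply, the sketch below treats the JND as a Weber fraction and asks whether a comparison stiffness differs enough from a reference to be told apart. This is a deliberate simplification: a JND is a statistical threshold rather than a hard cut-off, and the reference and comparison values are hypothetical.

```python
def is_discriminable(reference, comparison, weber_fraction):
    """True if the relative difference reaches the JND expressed as a Weber fraction."""
    return abs(comparison - reference) / reference >= weber_fraction


# JND of 23 % (Jones and Hunter 1990): a 20 % difference falls short, 30 % does not.
print(is_discriminable(1.0, 1.2, 0.23))
print(is_discriminable(1.0, 1.3, 0.23))

# JND of 8 % (Tan et al. 1995, fixed displacement): the same 20 % difference suffices.
print(is_discriminable(1.0, 1.2, 0.08))
```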

Engineering platforms have been developed to enhance stiffness perception by the augmentation of visual, haptic, or both types of cues. Based on the results of Kuschel et al. (2010), one approach to augmentation is to facilitate the within-modality perception of stiffness, for example, to augment visual force cues for improved visual perception of stiffness. In an effort toward this goal, Bethea et al. (2004) evaluated a visual force feedback system developed for the da Vinci Surgical System, in which the intensity of the force at the tip of the surgical instrument was indicated using a colour bar varying from green to yellow to red. Their results showed that with such visual force feedback, surgeons could perform robot-assisted surgical knot tying with more consistency, precision, and greater tension to suture materials without breakage. Similarly, Horeman et al. (2012) augmented the display of their laparoscopic training platform with an arrow that continuously informed the trainee about the magnitude and direction of applied force. Their experimental results demonstrated that such a visual representation led to significant improvements in the control of tissue-handling force, not only during the training sessions but also in the post-training tests. Although these studies did not directly assess the perception of stiffness, one would expect that more precise control of force might be associated with enhanced perception of tissue mechanical properties, including stiffness.

Alternatively, haptic cues can be augmented (for an in-depth discussion of haptic augmentation, see Chap. 12). Many tasks require a steady hand, particularly in delicate surgical procedures like microsurgical operations, and therefore the goal of engineering intervention is often to reduce, rather than enhance, the haptic force cues arising from action. The opposite situation also arises, however, when the interaction forces of surgery are imperceptible, and access to force information could be useful to the surgeon. Accordingly, considerable engineering effort has been devoted to developing devices to augment force, in order to help the user better perceive it and hence the inherent stiffness of the interaction. One such example is a hand-held force magnification device developed by Stetten et al. (2011), as shown in Fig. 2.4. The device includes a handle held by the user, a sensor and an actuator placed at two ends of the handle, a brace for mounting the device on the user’s hand, and a control system. A sensor is mounted on the tip of the handle to measure the pushing/pulling force of the interaction. At the other end, a stack of permanent rare-earth magnets is placed inside the handle and inserted into a custom solenoid. The solenoid is powered by an electrical current from the control box, inducing a Lorentz force on the magnets/handle that is proportional to and in the same direction as the input force. The entire device is mounted to the back of the user’s right hand for high portability and manipulation capacities. After careful calibration, the device could amplify the sensed force up to 5.8 times. Jeon and Choi (2009) developed a stiffness-modulation system with a similar goal. Their system could alter the subjective stiffness of soft objects to a specific value by measuring the deformation and then accordingly applying additional forces to the user’s hand.

In contrast to the amount of research on design and implementation of augmentation devices, relatively little research has examined the perceptual effectiveness of such augmentation and investigated the factors that may influence the utility of augmented haptic and visual feedback. Consider first the augmentation of force cues by visual feedback. While it is technically easy to use colour scales or arrows to present force information, the perceptual effectiveness of such feedback is limited, because interpretation requires cognitive mediation. Furthermore, given humans’ poor ability to make absolute judgments of colour and length, colour scales or arrows provide the user with little more than a heuristic to estimate the force intensity. An additional consideration is that in order to avoid occlusion of the field of surgery, visual force feedback is often shown at a displaced location, and such spatial displacement could hinder the integration of visual and haptic cues (Gepshtein et al. 2005; Klatzky et al. 2010).

An example of a force augmentation device. The Hand-Held Force Magnifier (Stetten et al. 2011) uses a sensor to measure force F at the tip of the handle, which is amplified to produce \({F^* = gF }\) in the same direction on the handle using a solenoid mounted on the back of the hand. The total force felt by the user is then (\({F+F^*}\)) with an amplification of (\({1+g}\)). (Adapted from Stetten et al. 2011)
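The relation in the caption can be written out as a short Python sketch. The function names and the gain value used in the example are hypothetical; the sketch does not assume which gain setting corresponds to the amplification figures reported for the actual device.

```python
def felt_force(tip_force, gain):
    """Total force at the hand: sensed force F plus augmented force F* = g * F."""
    augmented = gain * tip_force
    return tip_force + augmented


def amplification(gain):
    """Ratio of felt force to sensed force, (F + gF) / F = 1 + g."""
    return 1.0 + gain


# Hypothetical example: a 0.5 N tip force with a gain of g = 2.
print(felt_force(0.5, 2.0), amplification(2.0))
```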

Haptic augmentation of force has the advantage that the augmented signal occurs in the natural perceptual modality, but it is also limited by the sensory and perceptual capacities inherent in the sense of touch. Stetten et al. (2011) characterized their haptic AR system and assessed its effectiveness in judgments of stiffness. Their results showed that the force augmentation induced by the device was well perceived by their participants, leading to significantly higher subjective estimation of stiffness, as shown in Fig. 2.5a. The augmentation effectiveness, however, was found to be greatest for the softest stimuli and to decline gradually with stiffness. The subjective estimates, if related to an up-scaling of the stiffness stimuli by the magnifier’s actual power, fell below the control curve and diverged more from it as the stimuli increased (Fig. 2.5b). The change in the augmentation effectiveness may be partly accounted for by a shift in utilization of haptic cues. Small forces are sensed mostly through the deformation of the skin, whereas the perception of large forces relies more on the information from receptors in muscles, tendons, and joints that have relatively lower sensitivity. Jeon and Choi (2009) also conducted an experiment to evaluate the perceptual performance of their stiffness modulation system and found the modulation was felt to be stiffer than the desired value. The authors attributed such error to a lag in haptic rendering. Although these results necessarily reflect the limitations of the devices used, they provide data more generally relevant to the mechanisms underlying stiffness perception.

Perceptual effectiveness of the Hand-Held Force Magnifier (Stetten et al. 2011) in stiffness estimation. a Mean estimates of perceived stiffness without the device (the control condition) or with the device turned on or off. b Re-plot of the magnifier-on data versus the prediction given by the augmented force feedback. The magnifier-on curve fell below the control curve and diverged more from it as the stimuli increased, indicating a gradual decline in effectiveness.

4 Conclusion

Stiffness, or its inverse compliance, is one of a number of properties that can be called “higher-order”, in the sense that it is computed from more than one physically independent component. Not only does stiffness perception require the combination of these components, but when signals arise from multiple sense modalities, it also requires integration across channels with very different specializations. Here we have briefly reviewed research directed at understanding how visual and haptic cues to the component properties are conveyed and how they are integrated or combined into the percept of stiffness. Many issues remain to be addressed.

One of the least understood issues is whether and how humans make use of all the available sensory channels, including those that provide unreliable or biased cues to component properties, as is the case for visual cues to force. An understanding of how prior experience shapes the visual perception of force would inform the design of virtual and augmented reality environments that support stiffness perception with multimodal cues. Given the importance of application domains involving interaction with compliant objects, it is clear that further research in this direction would have both basic and applied value.

References

Barbagli F, Salisbury K, Ho C, Spence C, Tan HZ (2006) Haptic discrimination of force direction and the influence of visual information. ACM Trans Appl Percept 3:125–135

Bethea BT, Okamura AM, Kitagawa M, Fitton TP, Cattaneo SM, Gott VL, Baumgartner WA, Yuh DD (2004) Application of haptic feedback to robotic surgery. J Laparoendosc Adv Surg Tech 14(3):191–195

Cellini C, Kaim L, Drewing K (2013) Visual and haptic integration in the estimation of softness of deformable objects. i-Perception 4(8):516–531

Curtis DW, Attneave F, Harrington TL (1968) A test of a two-stage model for magnitude estimation. Attention Percept Psychophys 3:25–31

Drewing K, Ramisch A, Bayer F (2009) Haptic, visual and visuo-haptic softness judgments for objects with deformable surfaces. In: Proceedings of world haptics 2009, third joint EuroHaptics conference and symposium on haptic interfaces for virtual environment and teleoperator systems. Piscataway, NJ, IEEE, pp 640–645

Ellis RR, Lederman SJ (1993) The role of haptic versus visual volume cues in the size-weight illusion. Attention Percept Psychophys 55(3):315–324

Ernst MO, Banks MS (2002) Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415:429–433

Fechner GT (1860) Elemente der Psychophysik. Breitkopf and Härtel, Leipzig, Germany

Gepshtein S, Burge J, Ernst MO, Banks MS (2005) The combination of vision and touch depends on spatial proximity. J Vis 5(11):1013–1023

Horeman T, Rodrigues SP, van den Dobbelsteen JJ, Jansen FW, Dankelman J (2012) Visual force feedback in laparoscopic training. Surg Endosc 26(1):242–248

Jeon S, Choi S (2009) Haptic augmented reality: taxonomy and an example of stiffness modulation. Presence Teleoperators Virtual Environ 18:387–408

Jones LA (1986) Perception of force and weight: theory and research. Psychol Bull 100:29–42

Jones LA, Hunter IW (1990) A perceptual analysis of stiffness. Exp Brain Res 79:150–156

Keyson DV (2000) Estimation of virtually perceived length. Presence Teleoperators Virtual Environ 9:394–398

Klatzky RL, Wu B, Stetten G (2010) The disembodied eye: consequences of displacing perception from action. Vis Res 50:2618–2626

Kuschel M, Di Luca M, Buss M, Klatzky RL (2010) Combination and integration in the perception of visual-haptic compliance information. IEEE Trans Haptics 3:234–244

Lanca M, Bryant D (1995) Effect of orientation in haptic reproduction of line length. Percept Mot Skills 80:1291–1298

Michaels CF, De Vries MM (1998) Higher and lower order variables in the visual perception of relative pulling force. J Exp Psychol Hum Percept Perform 24:526–546

Paré M, Carnahan H, Smith AM (2002) Magnitude estimation of tangential force applied to the fingerpad. Exp Brain Res 142:342–348

Seizova-Cajic T (1998) Size perception by vision and kinesthesia. Attention Percept Psychophys 60:705–718

Shen Y, Zelen M (2001) Screening sensitivity and sojourn time from breast cancer early detection clinical trials: mammograms and physical examinations. J Clin Oncol 19:3490–3499

Srinivasan MA, Beauregard GL, Brock DO (1996) The impact of visual information on haptic perception of stiffness in virtual environments. ASME Dyn Syst Control Div 58:555–559

Stanley G (1966) Haptic and kinesthetic estimates of length. Psychon Sci 5:377–378

Stetten G, Wu B, Klatzky R, Galeotti J, Siegel M, Lee R, Mah F, Eller A, Schuman J, Hollis R (2011) Hand-held force magnifier for surgical instruments. In: Information processing in computer-assisted interventions. Lecture notes in computer science, vol 6689. Springer, Berlin, pp 90–100

Stevens JC, Mack JD (1959) Scales of apparent force. J Exp Psychol 58:405–413

Stevens SS (1975) Psychophysics: introduction to its perceptual, neural, and social prospects. Wiley, New York

Sun Y, Hollerbach JM, Mascaro SA (2008) Predicting fingertip forces by imaging coloration changes in the fingernail and surrounding skin. IEEE Trans Biomed Eng 55:2363–2371

Tan HZ, Durlach NI, Beauregard GL, Srinivasan MA (1995) Manual discrimination of compliance using active pinch grasp: the roles of force and work cues. Attention Percept Psychophys 57:495–510

Teghtsoonian M, Teghtsoonian R (1965) Seen and felt length. Psychon Sci 3:465–466

Teghtsoonian M, Teghtsoonian R (1970) Two varieties of perceived length. Attention Percept Psychophys 8:389–392

Valdez AB, Amazeen EL (2008) Sensory and perceptual interactions in weight perception. Attention Percept Psychophys 70:647–657

van Beers RJ, Sittig AC (1999) Integration of proprioceptive and visual position information: an experimentally supported model. J Neurophysiol 81:1355–1364

Varadharajan V, Klatzky R, Unger B, Swendsen R, Hollis R (2008) Haptic rendering and psychophysical evaluation of a virtual three-dimensional helical spring. In: Proceedings of the 16th symposium on haptic interfaces for virtual environments and teleoperator systems. IEEE, Piscataway, NJ, pp 57–64

Wang X, Ananthasuresh GK, Ostrowski JP (2001) Vision-based sensing of forces in elastic objects. Sens Actuators A Phys 94:142–156

White PA (2012) The experience of force: the role of haptic experience of forces in visual perception of object motion and interactions, mental simulation, and motion-related judgments. Psychol Bull 138:589–615

Woodworth R, Schlossberg H (1960) Experimental psychology, Revised edn. Henry Holt, New York

Wu B, Klatzky RL, Shelton D, Stetten G (2008) Mental concatenation of perceptually and cognitively specified depth to represent locations in near space. Exp Brain Res 184:295–305

Wu B, Klatzky RL, Hollis R, Stetten G (2012) Visual perception of viscoelasticity in virtual materials. Presented at the 53rd annual meeting of the Psychonomic Society, Minneapolis, Minnesota, Nov 2012

Wu W, Basdogan C, Srinivasan MA (1999) Visual, haptic, and bimodal perception of size and stiffness in virtual environments. ASME Dyn Syst Control Div 67:19–26

Copyright information

© 2014 Springer-Verlag London

About this chapter

Cite this chapter

Klatzky, R.L., Wu, B. (2014). Visual-Haptic Compliance Perception. In: Di Luca, M. (eds) Multisensory Softness. Springer Series on Touch and Haptic Systems. Springer, London. https://doi.org/10.1007/978-1-4471-6533-0_2

DOI: https://doi.org/10.1007/978-1-4471-6533-0_2

Publisher Name: Springer, London

Print ISBN: 978-1-4471-6532-3

Online ISBN: 978-1-4471-6533-0

eBook Packages: Computer Science, Computer Science (R0)