Abstract

The notion of a modular form is introduced. The dimension of the space of modular forms is determined, and the Fourier expansions of Eisenstein series are computed, leading to the first number-theoretical applications. The Fourier coefficients of a modular form are fed into a Dirichlet series, thus forming the associated L-function, which is shown to extend to an entire function. Hecke operators acting on modular forms are introduced and it is shown that L-functions of Hecke eigenforms admit Euler products. Finally, non-holomorphic Eisenstein series and Maaß wave forms, the real-analytic counterparts of modular forms, and their L-functions are introduced.


In this chapter we introduce the notion of a modular form and its L-function. We determine the space of modular forms by giving an explicit basis. We define Hecke operators and we show that the L-function of a Hecke eigenform admits an Euler product.

2.1 The Modular Group

Recall the notion of an action of a group G on a set X. This is a map G×X→X, written (g,x)↦gx, such that 1x=x and g(hx)=(gh)x, where x∈X and g,h∈G are arbitrary elements and 1 is the neutral element of the group G.

Two points x,y∈X are called conjugate modulo G, if there exists a g∈G with y=gx. The orbit of a point x∈X is the set Gx of all gx, where g∈G, so the orbit is the set of all points conjugate to x. We write G∖X or X/G for the set of all G-orbits.

Example 2.1.1

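Let G be the group of all complex numbers of absolute value one, also known as the circle group

$$\mathbb{T}=\{z\in\mathbb{C}:|z|=1\}. $$

The group G acts on the set ℂ by multiplication. The map

$$[0,\infty)\to G\backslash\mathbb{C},\qquad x\mapsto Gx, $$

is a bijection.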

An action of a group is said to be transitive if there is only one orbit, i.e. if any two elements are conjugate.

This is the usual notion of a group action from the left, or left action. Later, in Lemma 2.2.2, we shall also define a group action from the right.

For given g∈G the map x↦gx is invertible, as its inverse is \(x\mapsto g^{-1}x\).

The group \(\operatorname{GL}_{2}(\mathbb{C})\) acts on the set ℂ²∖{0} by matrix multiplication. Since this action is by linear maps, the group also acts on the projective space ℙ¹(ℂ), which we define as the set of all one-dimensional subspaces of the vector space ℂ². Every non-zero vector in ℂ² spans such a subspace, and two vectors give the same subspace if and only if one is a multiple of the other, which means that they are in the same ℂ×-orbit. So we have a canonical bijection

$$\mathbb{P}^{1}(\mathbb{C})\cong\bigl(\mathbb{C}^{2}\smallsetminus\{0\}\bigr)/\mathbb{C}^{\times}. $$

We write the elements of ℙ¹(ℂ) in the form [z,w], where (z,w)∈ℂ²∖{0} and

$$[z,w]=[z',w']\quad\Longleftrightarrow\quad\exists\,\lambda\in\mathbb{C}^{\times}:\ (z',w')=(\lambda z,\lambda w). $$

For w≠0 there exists exactly one representative of the form [z,1], and the map z↦[z,1] is an injection ℂ↪ℙ¹(ℂ), so that we can view ℂ as a subset of ℙ¹(ℂ). The complement of ℂ in ℙ¹(ℂ) is a single point ∞=[1,0], so that ℙ¹(ℂ) is the one-point compactification \(\widehat{\mathbb{C}}\) of ℂ, the Riemann sphere. We consider the action of \(\operatorname{GL}_{2}(\mathbb{C})\) given by \(g.(z,w)=(z,w)g^{t}\); then with \(g=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\) we have

$$g.[z,1]=[az+b,cz+d]=\biggl[\frac{az+b}{cz+d},1\biggr], $$

if cz+d≠0. The rational function \(\frac{az+b}{cz+d}\) has exactly one pole in the set \(\widehat{\mathbb{C}}\), so we define an action of \(\operatorname{GL}_{2}(\mathbb{C})\) on the Riemann sphere by

$$g.z=\frac{az+b}{cz+d}\in\widehat{\mathbb{C}}, $$

if z∈ℂ. Note that cz+d and az+b cannot both be zero (Exercise 2.1). We finalize the definition of this action with

$$g.\infty=\frac{a}{c}, $$

which is read as ∞ if c=0.

Any matrix of the form \(\left(\begin{smallmatrix}\lambda&\\ &\lambda\end{smallmatrix}\right)\) with λ≠0 acts trivially, so it suffices to consider the action of the subgroup \(\mathrm{SL}_{2}(\mathbb{C})=\{g\in\operatorname{GL}_{2}(\mathbb {C}):\det(g)=1\}\).
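The action by linear fractional transformations can be tried out numerically. The following is a minimal sketch, not part of the original text: the names `moebius`, `matmul` and the sentinel `INFINITY` are our own illustrative choices, and matrices are represented as nested tuples.

```python
INFINITY = "inf"  # sentinel for the point ∞ = [1,0] (our own convention)


def moebius(g, z):
    """Apply g = ((a, b), (c, d)) to a point z of the Riemann sphere."""
    (a, b), (c, d) = g
    if z == INFINITY:
        # g.∞ = a/c, read as ∞ when c = 0
        return INFINITY if c == 0 else a / c
    denom = c * z + d
    if denom == 0:
        # the pole of (az+b)/(cz+d) is sent to ∞
        return INFINITY
    return (a * z + b) / denom


def matmul(g, h):
    """Product of two 2x2 matrices given as nested tuples."""
    (a, b), (c, d) = g
    (p, q), (r, s) = h
    return ((a * p + b * r, a * q + b * s), (c * p + d * r, c * q + d * s))


z = 0.3 + 0.7j
g = ((1, 2), (3, 4))
h = ((0, -1), (1, 0))
# (gh).z and g.(h.z) agree, as required of a group action
print(moebius(matmul(g, h), z), moebius(g, moebius(h, z)))
# scalar matrices act trivially
print(moebius(((2, 0), (0, 2)), z))
```

Floating-point arithmetic only approximates the action, so the two printed values in the first line agree up to rounding.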

Lemma 2.1.2

The group SL₂(ℂ) acts transitively on the Riemann sphere \(\widehat {\mathbb{C}}\). The element \(-1=\left(\begin{smallmatrix}-1&\\ &-1\end{smallmatrix}\right)\) acts trivially. If we restrict the action to the subgroup G=SL₂(ℝ), the set \(\widehat{\mathbb{C}}\) decomposes into three orbits: ℍ and −ℍ, as well as the set \(\widehat{\mathbb{R}}=\mathbb{R}\cup\{\infty\}\).

Proof

For given z∈ℂ one has \(\left(\begin{smallmatrix}z&z-1\\ 1&1\end{smallmatrix}\right).\infty=z\), so the action is transitive. In particular it follows that \(\widehat{\mathbb{R}}\) lies in the G-orbit of the point ∞.

For \(g=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in G\) and z∈ℂ one computes

$$\operatorname{Im}(g.z)=\frac{\operatorname{Im}(z)}{|cz+d|^{2}}. $$

This implies that G leaves the three sets mentioned invariant. We have \(\left(\begin{smallmatrix}1&x\\ 0&1\end{smallmatrix}\right).0=x\) for x∈ℝ and \(\left(\begin{smallmatrix}0&-1\\ 1&0\end{smallmatrix}\right).0=\infty\), therefore \(\widehat{\mathbb{R}}\) is one G-orbit. We show that G acts transitively on ℍ. For a given z=x+iy∈ℍ one has

$$z=\begin{pmatrix}\sqrt{y}&x/\sqrt{y}\\ 0&1/\sqrt{y}\end{pmatrix}.i. $$

□
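Both computations in this proof are easy to check numerically. The sketch below assumes matrices given as nested tuples of floats; the helper names `act` and `matrix_sending_i_to` are hypothetical and chosen only for this illustration.

```python
import math


def act(g, z):
    """Linear fractional action of g = ((a, b), (c, d)) on a complex number z."""
    (a, b), (c, d) = g
    return (a * z + b) / (c * z + d)


def matrix_sending_i_to(z):
    """A matrix in SL2(R) mapping i to z = x + iy with y > 0."""
    x, y = z.real, z.imag
    r = math.sqrt(y)
    return ((r, x / r), (0.0, 1.0 / r))


g = ((2.0, 1.0), (3.0, 2.0))      # determinant 2*2 - 1*3 = 1
w = 0.4 + 1.3j
# Im(g.w) and Im(w)/|cw+d|^2 coincide up to rounding
print(act(g, w).imag, w.imag / abs(3.0 * w + 2.0) ** 2)

z = -1.5 + 0.25j
print(act(matrix_sending_i_to(z), 1j))   # approximately z
```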

Definition 2.1.3

We denote by LATT the set of all lattices in ℂ. Let \(\operatorname{BAS}\) be the set of all ℝ-bases of ℂ, i.e. the set of all pairs (z,w)∈ℂ² which are linearly independent over ℝ. Let \(\operatorname{BAS}^{+}\) be the subset of all bases that are clockwise-oriented, i.e. the set of all \((z,w)\in\operatorname{BAS}\) with \(\operatorname {Im}(z/w)>0\). There is a natural map

$$\varPsi:\operatorname{BAS}^{+}\to\operatorname{LATT}, $$

defined by

$$\varPsi(z,w)=\mathbb{Z}z+\mathbb{Z}w. $$

This map is surjective but not injective, since for example Ψ(z+w,w)=Ψ(z,w). The group \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) acts on \(\operatorname{BAS}^{+}\) by \(\gamma.(z,w)=(z,w)\gamma^{t}=(az+bw,cz+dw)\) if \(\gamma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\). Here we remind the reader that an invertible real matrix preserves the orientation of a basis if and only if the determinant of the matrix is positive.

The group \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) is called the modular group.

Lemma 2.1.4

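Two bases are mapped to the same lattice under Ψ if and only if they lie in the same \(\varGamma_{0}\)-orbit. So Ψ induces a bijection

$$\varPsi:\varGamma_{0}\backslash\operatorname{BAS}^{+}\ \stackrel{\cong}{\longrightarrow}\ \operatorname{LATT}. $$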

Proof

Let (z,w) and (z′,w′) be two clockwise-oriented bases such that Ψ(z,w)=Λ=Ψ(z′,w′). Since z′,w′ are elements of the lattice generated by z and w, there are a,b,c,d∈ℤ with \(\left(\begin{smallmatrix}z'\\ w'\end{smallmatrix}\right)=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\left(\begin{smallmatrix}z\\ w\end{smallmatrix}\right)\). Since, on the other hand, z and w lie in the lattice generated by z′ and w′, there are α,β,γ,δ∈ℤ with \(\left(\begin{smallmatrix}z\\ w\end{smallmatrix}\right)=\left(\begin{smallmatrix}\alpha&\beta\\ \gamma&\delta\end{smallmatrix}\right)\left(\begin{smallmatrix}z'\\ w'\end{smallmatrix}\right)\), so \(\left(\begin{smallmatrix}\alpha&\beta\\ \gamma&\delta\end{smallmatrix}\right)\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\left(\begin{smallmatrix}z\\ w\end{smallmatrix}\right)=\left(\begin{smallmatrix}z\\ w\end{smallmatrix}\right)\). As z and w are linearly independent over ℝ, it follows that \(\left(\begin{smallmatrix}\alpha&\beta\\ \gamma&\delta\end{smallmatrix}\right)\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)=\left(\begin{smallmatrix}1&\\ &1\end{smallmatrix}\right)\) and so \(g=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\) is an element of \(\operatorname{GL}_{2}(\mathbb{Z})\). In particular one gets det(g)=±1. Since g maps the clockwise-oriented basis (z,w) to the clockwise-oriented basis (z′,w′), one concludes det(g)>0, i.e. det(g)=1 and so \(g\in\varGamma_{0}\), which means that the two bases are in the same \(\varGamma_{0}\)-orbit. The converse direction is trivial. □

The set \(\operatorname{BAS}^{+}\) is a bit unwieldy, so one divides out the action of the group ℂ×. This action of ℂ× on the set \(\operatorname{BAS}^{+}\) is defined by ξ(a,b)=(ξa,ξb). One has (a,b)=b(a/b,1), so every ℂ×-orbit contains exactly one element of the form (z,1) with z∈ℍ. The action of ℂ× commutes with the action of \(\varGamma_{0}\), so ℂ× acts on \(\varGamma_{0}\backslash \operatorname{BAS}^{+}\). On the other hand, ℂ× acts on LATT by multiplication and the map Ψ translates one action into the other, which means Ψ(λ(z,w))=λΨ(z,w). As Ψ is bijective on \(\varGamma_{0}\backslash \operatorname{BAS}^{+}\), the two ℂ×-actions are isomorphic and Ψ maps orbits bijectively to orbits, thus giving a bijection

$$\varPsi:\varGamma_{0}\backslash\operatorname{BAS}^{+}/\mathbb{C}^{\times}\ \stackrel{\cong}{\longrightarrow}\ \operatorname{LATT}/\mathbb{C}^{\times}. $$

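Now let z∈ℍ. Then \((z,1)\in\operatorname{BAS}^{+}\). For \(\gamma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in\varGamma_{0}\) one has, modulo the ℂ×-action:

$$\gamma.(z,1)=(az+b,cz+d)=(cz+d)\biggl(\frac{az+b}{cz+d},1\biggr)\equiv(\gamma.z,1). $$

Letting \(\varGamma_{0}\) act on ℍ by linear fractional transformations, the map z↦(z,1)ℂ× is thus equivariant with respect to the actions of \(\varGamma_{0}\).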

Theorem 2.1.5

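The map z↦ℤz+ℤ induces a bijection

$$\varGamma_{0}\backslash\mathbb{H}\ \stackrel{\cong}{\longrightarrow}\ \operatorname{LATT}/\mathbb{C}^{\times}. $$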

Proof

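The map is a composition of the maps

$$\varGamma_{0}\backslash\mathbb{H}\ \stackrel{\varphi}{\longrightarrow}\ \varGamma_{0}\backslash\operatorname{BAS}^{+}/\mathbb{C}^{\times}\ \stackrel{\varPsi}{\longrightarrow}\ \operatorname{LATT}/\mathbb{C}^{\times}, $$

where φ is induced by z↦(z,1)ℂ×, so it is well defined. We have to show that φ is bijective.

To show surjectivity, let \((v,w)\in\operatorname{BAS}^{+}\). Then (v,w)ℂ×=(v/w,1)ℂ× and v/w∈ℍ, so φ is surjective. For injectivity, assume \(\varphi(\varGamma_{0}z)=\varphi(\varGamma_{0}w)\). This means \(\varGamma_{0}(z,1)\mathbb{C}^{\times}=\varGamma_{0}(w,1)\mathbb{C}^{\times}\), so there are \(\gamma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in\varGamma_{0}\) and λ∈ℂ× with (w,1)=γ(z,1)λ. The right-hand side is

$$\gamma(z,1)\lambda=\bigl((az+b)\lambda,(cz+d)\lambda\bigr). $$

Comparing the second coordinates, we get \(\lambda=(cz+d)^{-1}\) and so \(w=\frac{az+b}{cz+d}=\gamma.z\), as claimed. □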

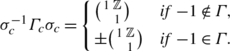

The element \(-1=\left(\begin{smallmatrix}-1&\\ &-1\end{smallmatrix}\right)\) acts trivially on the upper half plane ℍ. This motivates the following definition.

Definition 2.1.6

Let \(\overline{\varGamma }_{0}=\varGamma_{0}/\pm1\). For a subgroup Γ of \(\varGamma_{0}\) let \(\overline{\varGamma }\) be the image of Γ in \(\overline{\varGamma }_{0}\). Then we have

$$\overline{\varGamma}\cong\varGamma/(\varGamma\cap\{\pm1\}). $$

Let

$$S=\begin{pmatrix}0&-1\\ 1&0\end{pmatrix},\qquad T=\begin{pmatrix}1&1\\ 0&1\end{pmatrix}. $$

One has

$$Sz=-\frac{1}{z},\qquad Tz=z+1, $$

as well as \(S^{2}=-1=(ST)^{3}\). Denote by D the set of all z∈ℍ with \(|\operatorname{Re}(z)|<\frac{1}{2}\) and |z|>1, as depicted in the next figure. Let \(\overline{D}\) be the closure of D in ℍ. The set D is a so-called fundamental domain for the group SL₂(ℤ); see Definition 2.5.17.

Theorem 2.1.7

(a) For every z∈ℍ there exists a \(\gamma\in\varGamma_{0}\) with \(\gamma z\in\overline{D}\).

(b) If \(z,w\in\overline{D}\), with z≠w, lie in the same \(\varGamma_{0}\)-orbit, then we have \(\operatorname{Re}(z)=\pm\frac{1}{2}\) and z=w±1, or |z|=1 and w=−1/z. In any case the two points lie on the boundary of D.

(c) For z∈ℍ let \(\varGamma_{0,z}\) be the stabilizer of z in \(\varGamma_{0}\). For \(z\in\overline{D}\) we have \(\varGamma_{0,z}=\{\pm1\}\) except when

- z=i, then \(\varGamma_{0,z}\) is a group of order four, generated by S,
- \(z=\rho=e^{2\pi i/3}\), then \(\varGamma_{0,z}\) is of order six, generated by ST,
- \(z=-\overline{\rho}=e^{\pi i/3}\), then \(\varGamma_{0,z}\) is of order six, generated by TS.

(d) The group \(\varGamma_{0}\) is generated by S and T.

Proof

Let Γ′ be the subgroup of \(\varGamma_{0}\) generated by S and T. We show that for every z∈ℍ there is a γ′∈Γ′ with \(\gamma'z\in\overline{D}\). So let \(\gamma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\) in Γ′. For z∈ℍ one has

$$\operatorname{Im}(\gamma z)=\frac{\operatorname{Im}(z)}{|cz+d|^{2}}. $$

Since c and d are integers, for every M>0 the set of all pairs (c,d) with |cz+d|<M is finite. Therefore there exists γ∈Γ′ such that \(\operatorname {Im}(\gamma z)\) is maximal. Choose an integer n such that \(T^{n}\gamma z\) has real part in [−1/2,1/2]. We claim that the element \(w=T^{n}\gamma z\) lies in \(\overline{D}\). It suffices to show that |w|≥1. Assuming |w|<1, we conclude that the element −1/w=Sw has imaginary part strictly bigger than \(\operatorname {Im}(w)\), which contradicts our choices. So indeed we get \(w=T^{n}\gamma z\) in \(\overline{D}\) and part (a) is proven.

We now show parts (b) and (c). Let \(z\in\overline{D}\) and let \(\gamma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in\varGamma_{0}\) with \(\gamma z\in\overline{D}\). Replacing the pair (z,γ) by \((\gamma z,\gamma^{-1})\), if necessary, we assume \(\operatorname{Im}(\gamma z)\ge\operatorname{Im}(z)\), so |cz+d|≤1. This cannot hold for |c|≥2, since then \(|cz+d|\ge|c|\operatorname{Im}(z)\ge\sqrt{3}\), so we have the cases c=0,1,−1.

- If c=0, then d=±1 and we can assume d=1. Then γz=z+b. If b≠0, then, since the real parts of both numbers lie in [−1/2,1/2], it follows that b=±1 and \(\operatorname{Re}(z)=\pm1/2\).
- If c=1, then the assertion |z+d|≤1 implies d=0, except if \(z=\rho, -\overline{\rho}\), in which case we can also have d=1,−1.
  - If d=0, then |z|=1 and ad−bc=1 implies b=−1, so γz=a−1/z and we conclude a=0, except if \(\operatorname{Re}(z)=\pm \frac{1}{2}\), so \(z=\rho,-\overline{\rho}\).
  - If z=ρ and d=1, then a−b=1 and γρ=a−1/(1+ρ)=a+ρ, so a=0,1. The case \(z=-\overline{\rho}\) is treated similarly.
- If c=−1, one can replace the whole matrix with its negative and thus can apply the case c=1.

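Finally, we must show that \(\varGamma_{0}=\varGamma'\). For this let \(\gamma\in\varGamma_{0}\) and z∈D. By the first part of the proof there is γ′∈Γ′ with \(\gamma'\gamma z\in\overline{D}\). As z lies in the interior D, part (b) gives γ′γz=z and part (c) gives γ′γ=±1. Since \(-1=S^{2}\in\varGamma'\), we conclude \(\gamma=\pm\gamma'^{-1}\in\varGamma'\). □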
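The proof of part (a) suggests a reduction procedure: translate by a power of T until the real part lies in [−1/2,1/2), and apply S as long as the point lies inside the unit circle. The following sketch implements this iterative variant (rather than the maximality argument used above) and keeps track of the matrix as a word in S and T. The function name and its return convention are our own assumptions.

```python
import math


def reduce_to_fundamental_domain(z, max_steps=10_000):
    """Return (w, gamma) with gamma in SL2(Z), gamma.z = w and w in the closure of D."""
    if z.imag <= 0:
        raise ValueError("z must lie in the upper half plane")
    a, b, c, d = 1, 0, 0, 1            # gamma starts as the identity matrix
    for _ in range(max_steps):
        n = math.floor(z.real + 0.5)   # shift so that Re(z) lands in [-1/2, 1/2)
        z -= n
        a, b = a - n * c, b - n * d    # gamma <- T^(-n) * gamma
        if abs(z) >= 1:
            return z, ((a, b), (c, d))
        z = -1 / z                     # apply S; this raises Im(z) because |z| < 1
        a, b, c, d = -c, -d, a, b      # gamma <- S * gamma
    raise RuntimeError("reduction did not terminate within max_steps")


z0 = 3.7 + 0.05j
w, ((a, b), (c, d)) = reduce_to_fundamental_domain(z0)
print(w, (a, b, c, d))
print((a * z0 + b) / (c * z0 + d))   # agrees with w up to rounding
```

Since every application of S strictly increases the imaginary part, and only finitely many values of Im(γz) exceed a given bound, the loop terminates in exact arithmetic; with floating-point numbers, points very close to the boundary of D may be placed on either side of it.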

2.2 Modular Forms

In this section we introduce the protagonists of this chapter. Before that, we start with weakly modular functions.

Definition 2.2.1

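Let k∈ℤ. A meromorphic function f on the upper half plane ℍ is called weakly modular of weight k if

$$f\biggl(\frac{az+b}{cz+d}\biggr)=(cz+d)^{k}f(z) $$

holds for every z∈ℍ at which f is defined and every \(\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in\mathrm{SL}_{2}(\mathbb{Z})\).

Note: for such a function f≠0 to exist, k must be even, since the matrix \(\left(\begin{smallmatrix}-1&\\ &-1\end{smallmatrix}\right)\) lies in SL₂(ℤ).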

For \(\sigma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in G=\mathrm{SL}_{2}(\mathbb{R})\) we denote the induced map \(z\mapsto\sigma z=\frac{az+b}{cz+d}\) again by σ. Then

$$\frac{d(\sigma z)}{dz}=\frac{1}{(cz+d)^{2}}. $$

We deduce from this that a holomorphic function f is weakly modular of weight 2 if and only if the differential form ω=f(z) dz on ℍ is invariant under \(\varGamma_{0}\), i.e. if \(\gamma^{*}\omega=\omega\) holds for every \(\gamma\in\varGamma_{0}\), where \(\gamma^{*}\omega\) is the pullback of the form ω under the map γ:ℍ→ℍ.

More generally, we define for k∈ℤ and f:ℍ→ℂ:

$$f|_{k}\sigma(z)=(cz+d)^{-k}f\biggl(\frac{az+b}{cz+d}\biggr), $$

where \(\sigma=\left(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\right)\in G\). If k is fixed, we occasionally leave the index out, i.e. we write \(f|\sigma=f|_{k}\sigma\).

Lemma 2.2.2

The maps f↦f|σ define a linear (right-)action of the group G on the space of functions f:ℍ→ℂ, i.e.

- for every σ∈G the map f↦f|σ is linear,
- one has f|1=f and f|(σσ′)=(f|σ)|σ′ for all σ,σ′∈G.

Every right-action can be made into a left-action by inversion, i.e. one defines \(\sigma f=f|\sigma^{-1}\) and one then gets (σσ′)f=σ(σ′f).

Proof

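The only non-trivial assertion is f|(σσ′)=(f|σ)|σ′. For k=0 this is simply:

$$f|_{0}(\sigma\sigma')(z)=f(\sigma\sigma'z)=(f|_{0}\sigma)(\sigma'z)=(f|_{0}\sigma)|_{0}\sigma'(z). $$

Let j(σ,z)=(cz+d). One verifies that this ‘factor of automorphy’ satisfies a so-called cocycle relation:

$$j(\sigma\sigma',z)=j(\sigma,\sigma'z)\,j(\sigma',z). $$

As \(f|_{k}\sigma(z)=j(\sigma,z)^{-k}f|_{0}\sigma(z)\), we conclude

$$f|_{k}(\sigma\sigma')(z)=j(\sigma\sigma',z)^{-k}f|_{0}(\sigma\sigma')(z)=j(\sigma',z)^{-k}\,j(\sigma,\sigma'z)^{-k}(f|_{0}\sigma)(\sigma'z)=(f|_{k}\sigma)|_{k}\sigma'(z). $$

□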
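The cocycle relation and the resulting right-action property can be spot-checked numerically. The sketch below uses hypothetical helper names (`act`, `j`, `slash`, `matmul`) and an arbitrary test function f; it illustrates the identities and proves nothing.

```python
def act(sigma, z):
    """Linear fractional action of sigma = ((a, b), (c, d)) on z."""
    (a, b), (c, d) = sigma
    return (a * z + b) / (c * z + d)


def j(sigma, z):
    """Factor of automorphy j(sigma, z) = cz + d."""
    (_, _), (c, d) = sigma
    return c * z + d


def matmul(s, t):
    """Product of two 2x2 matrices given as nested tuples."""
    (a, b), (c, d) = s
    (p, q), (r, u) = t
    return ((a * p + b * r, a * q + b * u), (c * p + d * r, c * q + d * u))


def slash(f, k, sigma):
    """Return the function f|_k sigma."""
    return lambda z: j(sigma, z) ** (-k) * f(act(sigma, z))


sigma1 = ((2, 1), (3, 2))      # determinant 1
sigma2 = ((1, -1), (2, -1))    # determinant 1
z = 0.2 + 1.1j
f = lambda w: w ** 3 + 1 / (w + 1j)   # arbitrary function on the upper half plane

# cocycle relation j(s1 s2, z) = j(s1, s2 z) j(s2, z)
print(j(matmul(sigma1, sigma2), z), j(sigma1, act(sigma2, z)) * j(sigma2, z))
# right action: f|(s1 s2) = (f|s1)|s2, here for k = 4
print(slash(f, 4, matmul(sigma1, sigma2))(z), slash(slash(f, 4, sigma1), 4, sigma2)(z))
```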

Lemma 2.2.3

Let k∈2ℤ. A meromorphic function f on ℍ is weakly modular of weight k if and only if for every z∈ℍ one has

$$f(z+1)=f(z)\quad\text{and}\quad f(-1/z)=z^{k}f(z). $$

Proof

By definition, f is weakly modular if and only if \(f|_{k}\gamma=f\) for every \(\gamma\in\varGamma_{0}\), which means that f is invariant under the group action of \(\varGamma_{0}\). It suffices to check invariance on the two generators S and T of the group. □

We now give the definition of a modular function. Let f be a weakly modular function. The map \(q:z\mapsto e^{2\pi iz}\) maps the upper half plane surjectively onto the pointed unit disk \(\mathbb{D}^{*}=\{z\in\mathbb{C}:0<|z|<1\}\). Two points z,w in ℍ have the same image under q if and only if there is m∈ℤ such that w=z+m. So q induces a bijection \(q:\mathbb{Z}\backslash \mathbb{H}\to \mathbb{D}^{*}\). In particular, for every weakly modular function f on ℍ there is a function \(\tilde{f}\) on \(\mathbb {D}^{*}\smallsetminus q(\{\mbox {poles}\})\) with

$$f(z)=\tilde{f}\bigl(q(z)\bigr). $$

This means that for \(w\in\mathbb{D}^{*}\) we have

$$\tilde{f}(w)=f\biggl(\frac{\log w}{2\pi i}\biggr), $$

where log w is an arbitrary branch of the holomorphic logarithm, defined in a neighborhood of w. Then \(\tilde{f}\) is a meromorphic function on the pointed unit disk.

Definition 2.2.4

A weakly modular function f of weight k is called a modular function of weight k if the induced function \(\tilde{f}\) is meromorphic on the entire unit disk \(\mathbb{D}=\{z\in\mathbb{C}:|z|<1\}\).

Suggestively, in this case one also says that f is ‘meromorphic at infinity’. This means that \(\tilde{f}(q)\) has at most a pole at q=0. It follows that poles of \(\tilde{f}\) in \(\mathbb{D}^{*}\) cannot accumulate at q=0, because that would imply an essential singularity at q=0. For the function f it means that there exists a bound \(T=T_{f}>0\) such that f has no poles in the region \(\{ z\in\mathbb{H}: \operatorname {Im}(z)>T\}\).

The Fourier expansion of the function f is of particular importance. Next we show that the Fourier series converges uniformly. In the next lemma we write \(C^{\infty}(\mathbb{R}/\mathbb{Z})\) for the set of all infinitely often differentiable functions g:ℝ→ℂ which are periodic of period 1, which means that one has g(x+1)=g(x) for every x∈ℝ.

Definition 2.2.5

Let D⊂ℝ be an unbounded subset. A function f:D→ℂ is said to be rapidly decreasing if for every N∈ℕ the function \(x^{N}f(x)\) is bounded on D.

For D=ℕ one gets the special case of a rapidly decreasing sequence.

Examples 2.2.6

- For D=ℕ the sequence \(a_{k}=\frac{1}{k!}\) is rapidly decreasing.
- For D=[0,∞) the function \(f(x)=e^{-x}\) is rapidly decreasing.
- For D=ℝ the function \(f(x)=e^{-x^{2}}\) is rapidly decreasing.

Proposition 2.2.7

(Fourier series)

If g is in \(C^{\infty}(\mathbb{R}/\mathbb{Z})\), then for every x∈ℝ one has

$$g(x)=\sum_{k\in\mathbb{Z}}c_{k}(g)e^{2\pi ikx}, $$

where \(c_{k}(g)=\int_{0}^{1}g(t) e^{-2\pi ikt}\,dt\) and the sum converges uniformly. The Fourier coefficients \(c_{k}=c_{k}(g)\) are rapidly decreasing as functions in k∈ℤ.

The Fourier coefficients \(c_{k}(g)\) are uniquely determined in the following sense: Let \((a_{k})_{k\in\mathbb{Z}}\) be a family of complex numbers such that for every x∈ℝ the identity

$$g(x)=\sum_{k\in\mathbb{Z}}a_{k}e^{2\pi ikx} $$

holds with locally uniform convergence of the series. Then it follows that \(a_{k}=c_{k}(g)\) for every k∈ℤ.

Proof

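Using integration by parts repeatedly, we get for k≠0 and every n∈ℕ,

$$|c_{k}(g)|=\biggl|\int_{0}^{1}g(t)e^{-2\pi ikt}\,dt\biggr|=\biggl|\frac{1}{2\pi ik}\int_{0}^{1}g'(t)e^{-2\pi ikt}\,dt\biggr|=\cdots\le\frac{1}{(2\pi|k|)^{n}}\int_{0}^{1}\bigl|g^{(n)}(t)\bigr|\,dt. $$

So the sequence \((c_{k}(g))\) is rapidly decreasing. Consequently, the sum \(\sum_{k\in\mathbb{Z}}|c_{k}(g)|\) converges, so the series \(\sum_{k\in\mathbb{Z}}c_{k}(g)e^{2\pi ikx}\) converges uniformly. We only have to show that it converges to g. It suffices to do that at the point x=0, since, assuming we have this convergence at x=0, we can set \(g_{x}(t)=g(x+t)\) and we see

$$\sum_{k\in\mathbb{Z}}c_{k}(g_{x})=g_{x}(0)=g(x). $$

By \(c_{k}(g_{x})=\int_{0}^{1}g(t+x)e^{-2\pi ikt}\,dt = e^{2\pi ikx}c_{k}(g)\) we get the claim. So we only have to show \(g(0)=\sum_{k}c_{k}(g)\). Replacing g(x) with g(x)−g(0), we can assume g(0)=0, in which case we have to show that \(\sum_{k}c_{k}(g)=0\). Let

$$h(x)=\frac{g(x)}{e^{2\pi ix}-1}. $$

As g(0)=0, it follows that \(h\in C^{\infty}(\mathbb{R}/\mathbb{Z})\) and we have

$$c_{k}(g)=\int_{0}^{1}h(x)\bigl(e^{2\pi ix}-1\bigr)e^{-2\pi ikx}\,dx=c_{k-1}(h)-c_{k}(h). $$

Since \(h\in C^{\infty}(\mathbb{R}/\mathbb{Z})\), the series \(\sum_{k}c_{k}(h)\) converges absolutely as well and \(\sum_{k}c_{k}(g)=\sum_{k}(c_{k-1}(h)-c_{k}(h))=0\).

Now for the uniqueness of the Fourier coefficients. Let \((a_{k})_{k\in\mathbb{Z}}\) be as in the proposition. By locally uniform convergence the following interchange of integration and summation is justified. For l∈ℤ we have

$$c_{l}(g)=\int_{0}^{1}g(t)e^{-2\pi ilt}\,dt=\int_{0}^{1}\sum_{k\in\mathbb{Z}}a_{k}e^{2\pi ikt}e^{-2\pi ilt}\,dt=\sum_{k\in\mathbb{Z}}a_{k}\int_{0}^{1}e^{2\pi i(k-l)t}\,dt. $$

One has

$$\int_{0}^{1}e^{2\pi i(k-l)t}\,dt=\begin{cases}1&\text{if }k=l,\\ 0&\text{otherwise}.\end{cases} $$

This implies \(c_{l}(g)=a_{l}\). □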

This nice proof of the convergence of Fourier series is, to the author’s knowledge, due to H. Jacquet.

Let f be a weakly modular function of weight k. As f(z)=f(z+1) and f is infinitely differentiable (except at the poles), one can write it as a Fourier series:

$$f(x+iy)=\sum_{n=-\infty}^{\infty}c_{n}(y)e^{2\pi inx}, $$

if there is no pole of f on the line \(\operatorname{Im}(w)=y\), which holds true for all but countably many values of y>0. For such y the sequence \((c_{n}(y))_{n\in\mathbb{Z}}\) is rapidly decreasing.

Lemma 2.2.8

Let f be a modular function on the upper half plane ℍ and let T>0 be such that f has no poles in the set \(\{\operatorname{Im}(z)>T\}\). For every n∈ℤ and y>T one has \(c_{n}(y)=a_{n}e^{-2\pi ny}\) for a constant \(a_{n}\). Then

$$f(z)=\sum_{n=-N}^{\infty}a_{n}e^{2\pi inz}, $$

where −N is the order of the induced meromorphic function \(\tilde{f}\) at q=0. For every y>0, the sequence \(a_{n}e^{-yn}\) is rapidly decreasing.

Proof

The induced function \(\tilde{f}\) with \(f(z)=\tilde{f}(q(z))\) or \(\tilde{f}(q)=f(\frac{\log q}{2\pi i})\) is meromorphic around q=0. In a pointed neighborhood of zero, the function \(\tilde{f}\) therefore has a Laurent expansion

$$\tilde{f}(w)=\sum_{n=-N}^{\infty}a_{n}w^{n}. $$

Replacing w by q(z), one gets

$$f(z)=\sum_{n=-N}^{\infty}a_{n}e^{2\pi inz}. $$

The claim follows from the uniqueness of the Fourier coefficients. □

Note, in particular, that the Fourier expansion of a modular function f equals the Laurent expansion of the induced function \(\tilde{f}\).

Definition 2.2.9

A modular function f is called a modular form if it is holomorphic in the upper half plane ℍ and holomorphic at ∞, i.e. \(a_{n}=0\) holds for every n<0.

A modular form f is called a cusp form if additionally \(a_{0}=0\). In that case one says that f vanishes at ∞.

As an example, consider the Eisenstein series \(G_{k}\) for k≥4. Write \(q=e^{2\pi iz}\).

Proposition 2.2.10

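For even k≥4 we have

$$G_{k}(z)=2\zeta(k)+2\frac{(2\pi i)^{k}}{(k-1)!}\sum_{n=1}^{\infty}\sigma_{k-1}(n)q^{n}, $$

where \(\sigma_{k}(n)=\sum_{d|n}d^{k}\) is the kth divisor sum.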

Proof

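On the one hand we have the partial fraction expansion of the cotangent function

$$\pi\cot(\pi z)=\frac{1}{z}+\sum_{m=1}^{\infty}\biggl(\frac{1}{z+m}+\frac{1}{z-m}\biggr), $$

and on the other

$$\pi\cot(\pi z)=\pi\frac{\cos(\pi z)}{\sin(\pi z)}=i\pi\frac{q+1}{q-1}=i\pi-\frac{2\pi i}{1-q}=i\pi-2\pi i\sum_{n=0}^{\infty}q^{n}. $$

So

$$\frac{1}{z}+\sum_{m=1}^{\infty}\biggl(\frac{1}{z+m}+\frac{1}{z-m}\biggr)=i\pi-2\pi i\sum_{n=0}^{\infty}q^{n}. $$

We repeatedly differentiate both sides to get for k≥4,

$$\sum_{m\in\mathbb{Z}}\frac{1}{(z+m)^{k}}=\frac{(-2\pi i)^{k}}{(k-1)!}\sum_{n=1}^{\infty}n^{k-1}q^{n}. $$

The Eisenstein series is

$$G_{k}(z)=\sum_{(n,m)\ne(0,0)}\frac{1}{(nz+m)^{k}}=2\zeta(k)+2\sum_{n=1}^{\infty}\sum_{m\in\mathbb{Z}}\frac{1}{(nz+m)^{k}}=2\zeta(k)+2\frac{(2\pi i)^{k}}{(k-1)!}\sum_{d=1}^{\infty}\sum_{a=1}^{\infty}d^{k-1}q^{ad}, $$

where we have used that k is even. Collecting the terms with ad=n gives the coefficient \(\sigma_{k-1}(n)\) of \(q^{n}\). The proposition is proven. □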
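The key step of this proof is the identity \(\sum_{m\in\mathbb{Z}}(z+m)^{-k}=\frac{(-2\pi i)^{k}}{(k-1)!}\sum_{n\ge1}n^{k-1}q^{n}\) obtained by differentiating the cotangent expansion. As a plausibility check, the following sketch compares truncations of both sides at a sample point. The truncation bounds `M` and `N` are ad hoc choices, so the two values agree only up to the truncation error (roughly \(M^{-(k-1)}\) for the left-hand side).

```python
import cmath
import math


def lattice_line_sum(z, k, M=2000):
    """Truncation of the sum over m in Z of (z + m)^(-k) to |m| <= M."""
    return sum((z + m) ** (-k) for m in range(-M, M + 1))


def q_series_side(z, k, N=60):
    """Truncation of (-2*pi*i)^k / (k-1)! * sum_{n>=1} n^(k-1) q^n to n <= N."""
    q = cmath.exp(2j * cmath.pi * z)
    partial = sum(n ** (k - 1) * q ** n for n in range(1, N + 1))
    return (-2j * cmath.pi) ** k / math.factorial(k - 1) * partial


z = 0.3 + 0.8j
print(lattice_line_sum(z, 4))
print(q_series_side(z, 4))
```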

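Let f be a modular function of weight k. For \(\gamma\in\varGamma_{0}\) the formula \(f(\gamma z)=(cz+d)^{k}f(z)\) shows that the orders of vanishing of f at the points z and γz agree. So the order \(\operatorname{ord}_{z} f\) depends only on the image of z in \(\varGamma_{0}\backslash\mathbb{H}\).

We further define \(\operatorname{ord}_{\infty}(f)\) as the order of vanishing of \(\tilde{f}(q)\) at q=0, where \(\tilde{f}(e^{2\pi i z})=f(z)\). Finally, for z∈ℍ let \(2e_{z}\) be the order of the stabilizer group of z in \(\varGamma_{0}\), so \(e_{z}=\frac{|\varGamma_{0,z}|}{2}\). Then

$$e_{z}=\begin{cases}1&\text{if }z\notin\varGamma_{0}i\cup\varGamma_{0}\rho,\\ 2&\text{if }z\in\varGamma_{0}i,\\ 3&\text{if }z\in\varGamma_{0}\rho.\end{cases} $$

Here we recall that the orbit of an element w∈ℍ is defined as

$$\varGamma_{0}w=\{\gamma.w:\gamma\in\varGamma_{0}\}. $$

Theorem 2.2.11

Let f≠0 be a modular function of weight k. Then

$$\operatorname{ord}_{\infty}(f)+\sum_{z\in\varGamma_{0}\backslash\mathbb{H}}\frac{1}{e_{z}}\operatorname{ord}_{z}(f)=\frac{k}{12}. $$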

Proof

Note first that the sum is finite, as f has only finitely many zeros and poles modulo \(\varGamma_{0}\). Indeed, in \(\varGamma_{0}\backslash\mathbb{H}\) these cannot accumulate, by the identity theorem. Also at ∞ they cannot accumulate, as f is meromorphic at ∞ as well.

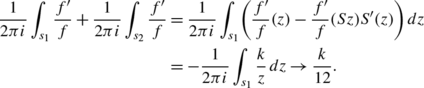

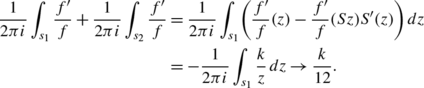

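We write the claim as

$$\operatorname{ord}_{\infty}(f)+\frac{1}{2}\operatorname{ord}_{i}(f)+\frac{1}{3}\operatorname{ord}_{\rho}(f)+\sum_{\substack{z\in\varGamma_{0}\backslash\mathbb{H}\\ z\ne i,\rho}}\operatorname{ord}_{z}(f)=\frac{k}{12}. $$

Let D be the fundamental domain of \(\varGamma_{0}\) as in Sect. 2.1. We integrate the function \(\frac{1}{2\pi i}\frac{f'}{f}\) along the positively oriented boundary of D, as in the following figure.

Assume first that f has neither a zero nor a pole on the boundary of D, with the possible exception of i or \(\rho,-\overline{\rho}\). Let C be the positively oriented boundary of D, except for \(i,\rho ,-\overline{\rho}\), which we circumvent by circular segments as in the figure. Further, we cut off the domain D at \(\operatorname{Im}(z)=T\) for some T>0 which is bigger than the imaginary part of any zero or pole of f. By the residue theorem we get

$$\frac{1}{2\pi i}\int_{C}\frac{f'}{f}=\sum_{\substack{z\in\varGamma_{0}\backslash\mathbb{H}\\ z\ne i,\rho}}\operatorname{ord}_{z}(f). $$

On the other hand: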

(a) Substituting \(q=e^{2\pi iz}\) we transform the line \(\frac{1}{2}+iT,-\frac{1}{2}+iT\) into a circle ω around q=0 of negative orientation. So

$$\frac{1}{2\pi i} \int_{\frac{1}{2}+iT}^{-\frac{1}{2}+iT}\frac{f'}{f} = \frac{1}{2\pi i}\int_\omega\frac{\tilde{f}'}{\tilde{f}} = -\operatorname {ord}_\infty(f). $$

(b) The circular segment k(ρ) around ρ has angle \(\frac {2\pi}{6}\). By Exercise 1.11 we conclude:

$$\frac{1}{2\pi i}\int_{k(\rho)}\frac{f'}{f} \to -\frac{1}{6} \operatorname{ord}_\rho(f), $$

as the radius of the circular segment tends to zero. Analogously, one treats the circular segments k(i) and \(k(-\overline{\rho})\),

$$\frac{1}{2\pi i}\int_{k(i)}\frac{f'}{f} \to -\frac{1}{2} \operatorname {ord}_i(f),\qquad \frac{1}{2\pi i}\int _{k(-\overline{\rho})}\frac{f'}{f} \to -\frac{1}{6}\operatorname{ord}_\rho(f). $$

(c) The vertical path integrals add up to zero.

(d) The two segments \(s_{1},s_{2}\) of the unit circle map to each other under the transform \(z\mapsto Sz=-z^{-1}\). One has

$$\frac{f'}{f}(Sz)S'(z)=\frac{k}{z}+ \frac{f'}{f}(z). $$

So

$$\frac{1}{2\pi i}\int_{s_{1}}\frac{f'}{f}+\frac{1}{2\pi i}\int_{s_{2}}\frac{f'}{f}=\frac{1}{2\pi i}\int_{s_{1}}\biggl(\frac{f'}{f}(z)-\frac{f'}{f}(Sz)S'(z)\biggr)dz=-\frac{k}{2\pi i}\int_{s_{1}}\frac{dz}{z}\ \to\ \frac{k}{12}. $$

Comparing these two expressions for the integral, letting the radii of the small circular segments shrink to zero, one obtains the result.

If f has more poles or zeros on the boundary, the path of integration may be modified so as to circumvent these, as shown in the figure. □

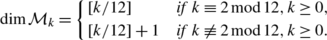

Let \(\mathcal{M}_{k}=\mathcal{M}_{k}(\varGamma_{0})\) be the complex vector space of all modular forms of weight k and let \(S_{k}\) be the space of cusp forms of weight k. Then \(S_{k}\subset\mathcal{M}_{k}\) is the kernel of the linear map f↦f(i∞). By definition, it follows that

$$\mathcal{M}_{k}\mathcal{M}_{l}\subset\mathcal{M}_{k+l}, $$

which means that if \(f\in\mathcal{M}_{k}\) and \(g\in\mathcal{M}_{l}\), then \(fg\in\mathcal{M}_{k+l}\).

Note that a holomorphic function f on ℍ with \(f|_{k}\gamma=f\) for every \(\gamma\in\varGamma_{0}\) lies in \(\mathcal{M}_{k}\) if and only if the limit

$$f(i\infty)=\lim_{y\to\infty}f(iy) $$

exists.

The differential equation of the Weierstrass function ℘ features the coefficients

$$g_{4}(z)=60\,G_{4}(z),\qquad g_{6}(z)=140\,G_{6}(z). $$

It follows that \(g_{4}(i\infty)=120\zeta(4)\) and \(g_{6}(i\infty)=280\zeta(6)\). By Proposition 1.5.2 we have

$$\zeta(4)=\frac{\pi^{4}}{90},\qquad\zeta(6)=\frac{\pi^{6}}{945}. $$

So with

$$\varDelta=g_{4}^{3}-27g_{6}^{2} $$

it follows that Δ(i∞)=0, i.e. Δ is a cusp form of weight 12.
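The vanishing of Δ at i∞ is a short piece of exact arithmetic. The sketch below assumes the standard values ζ(4)=π⁴/90 and ζ(6)=π⁶/945 and the discriminant \(\varDelta=g_{4}^{3}-27g_{6}^{2}\); it keeps track only of the rational coefficient of π¹² and uses exact fractions.

```python
from fractions import Fraction

zeta4_over_pi4 = Fraction(1, 90)     # zeta(4) / pi^4 (standard value, assumed here)
zeta6_over_pi6 = Fraction(1, 945)    # zeta(6) / pi^6 (standard value, assumed here)

g4_at_infinity = 120 * zeta4_over_pi4   # coefficient of pi^4 in g_4(i∞)
g6_at_infinity = 280 * zeta6_over_pi6   # coefficient of pi^6 in g_6(i∞)

# coefficient of pi^12 in Delta(i∞) = g_4(i∞)^3 - 27 g_6(i∞)^2
delta_at_infinity = g4_at_infinity ** 3 - 27 * g6_at_infinity ** 2
print(g4_at_infinity, g6_at_infinity, delta_at_infinity)
```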

Theorem 2.2.12

Let k be an even integer.

(a) If k<0 or k=2, then \(\mathcal{M}_{k}=0\).

(b) If k=0,4,6,8,10, then \(\mathcal{M}_{k}\) is a one-dimensional vector space spanned by 1, \(G_{4}\), \(G_{6}\), \(G_{8}\), \(G_{10}\), respectively. In these cases the space \(S_{k}\) is zero.

(c) Multiplication by Δ defines an isomorphism

$$\mathcal{M}_{k-12}\stackrel{\cong}{\rightarrow} S_{k}. $$

Proof

Take a non-zero element \(f\in\mathcal{M}_{k}\). All terms on the left of the equation

$$\operatorname{ord}_{\infty}(f)+\frac{1}{2}\operatorname{ord}_{i}(f)+\frac{1}{3}\operatorname{ord}_{\rho}(f)+\sum_{\substack{z\in\varGamma_{0}\backslash\mathbb{H}\\ z\ne i,\rho}}\operatorname{ord}_{z}(f)=\frac{k}{12} $$

are ≥0. Therefore k≥0 and also k≠2, as 1/6 cannot be written in the form a+b/2+c/3 with a,b,c∈ℕ₀. This proves (a).

If 0≤k<12, then \(\operatorname{ord}_{\infty}(f)=0\), and therefore \(S_{k}=0\) and \(\dim\mathcal{M}_{k}\le1\). This implies (b).

The function Δ has weight 12, so k=12. It is a cusp form, so \(\operatorname{ord}_{\infty}(\varDelta )>0\). The formula implies \(\operatorname{ord}_{\infty}(\varDelta )=1\) and that Δ has no further zeros. The multiplication with Δ gives an injective map \(\mathcal{M}_{k-12}\to S_{k}\) and for \(0\ne f\in S_{k}\) we have \(f/\varDelta \in\mathcal{M}_{k-12}\), so the multiplication with Δ is surjective, too. □

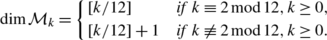

Corollary 2.2.13

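(a) For every even integer k one has

$$\dim\mathcal{M}_{k}=\begin{cases}0&\text{if }k<0,\\ [k/12]&\text{if }k\ge0,\ k\equiv2\bmod12,\\ [k/12]+1&\text{if }k\ge0,\ k\not\equiv2\bmod12,\end{cases} $$

where [x] denotes the integer part of x.

(b) The space \(\mathcal{M}_{k}\) has a basis consisting of all monomials \(G_{4}^{m}G_{6}^{n}\) with m,n∈ℕ₀ and 4m+6n=k.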

Proof

(a) follows from Theorem 2.2.12. For (b) we show that these monomials span the space \(\mathcal{M}_{k}\). For k≤6, this is contained in Theorem 2.2.12. For k≥8 we use induction. Choose m,n∈ℕ0 such that 4m+6n=k. The modular form \(g=G_{4}^{m}G_{6}^{n}\) satisfies g(∞)≠0. Therefore, for given \(f\in\mathcal{M}_{k}\) there is λ∈ℂ such that f−λg is a cusp form, i.e. equal to Δh for some \(h\in\mathcal{M}_{k-12}\). By the induction hypothesis the function h lies in the span of the monomials indicated, and so does f.

It remains to show the linear independence of the monomials. Assume the contrary. Then a linear equation among these monomials of a fixed weight would lead to a polynomial equation satisfied by the function \(G_{4}^{3}/G_{6}^{2}\), which would mean that this function is constant. This, however, is impossible, as the formula of Theorem 2.2.11 shows that \(G_{4}\) vanishes at ρ, but \(G_{6}\) does not. □
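The two parts of the corollary can be compared by a small computation: for even k the number of pairs (m,n) with 4m+6n=k should equal the dimension, which is [k/12] if k≡2 mod 12 and [k/12]+1 otherwise (and 0 for k<0). The function names below are our own; the sketch only counts and makes no claim about the forms themselves.

```python
def dim_modular_forms(k):
    """Dimension of M_k for the full modular group, by the case formula (0 for odd k)."""
    if k < 0 or k % 2 != 0:
        return 0
    return k // 12 if k % 12 == 2 else k // 12 + 1


def monomial_exponents(k):
    """All pairs (m, n) of non-negative integers with 4m + 6n = k."""
    return [(m, n)
            for m in range(k // 4 + 1)
            for n in range(k // 6 + 1)
            if 4 * m + 6 * n == k]


for k in (0, 2, 4, 12, 14, 24, 26):
    print(k, dim_modular_forms(k), monomial_exponents(k))
```

For example, weight 12 gives the two monomials \(G_{4}^{3}\) and \(G_{6}^{2}\), in accordance with \(\mathcal{M}_{12}=\mathbb{C}G_{12}\oplus\mathbb{C}\varDelta\).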

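Let \(M=\bigoplus_{k=0}^{\infty}\mathcal{M}_{k}\) be the graded algebra of all modular forms. One can formulate the corollary by saying that the map

$$\mathbb{C}[X,Y]\to M,\qquad X\mapsto G_{4},\quad Y\mapsto G_{6}, $$

is an isomorphism of ℂ-algebras.

We have seen that

$$G_{k}(z)=2\zeta(k)+2\frac{(2\pi i)^{k}}{(k-1)!}\sum_{n=1}^{\infty}\sigma_{k-1}(n)q^{n}, $$

where \(\sigma_{k}(n)=\sum_{d|n}d^{k}\). Denote the normalized Eisenstein series by \(E_{k}(z)=G_{k}(z)/(2\zeta(k))\). With \(\gamma_{k}=(-1)^{k/2}\frac {2k}{B_{k/2}}\) we then have

$$E_{k}(z)=1+\gamma_{k}\sum_{n=1}^{\infty}\sigma_{k-1}(n)q^{n}. $$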

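Examples

$$\begin{aligned}E_{4}&=1+240\sum_{n=1}^{\infty}\sigma_{3}(n)q^{n},&E_{6}&=1-504\sum_{n=1}^{\infty}\sigma_{5}(n)q^{n},\\ E_{8}&=1+480\sum_{n=1}^{\infty}\sigma_{7}(n)q^{n},&E_{10}&=1-264\sum_{n=1}^{\infty}\sigma_{9}(n)q^{n},\\ E_{12}&=1+\frac{65520}{691}\sum_{n=1}^{\infty}\sigma_{11}(n)q^{n}.\end{aligned} $$

Remark

As the spaces of modular forms of weights 8 and 10 are one-dimensional, we immediately get

$$E_{4}^{2}=E_{8},\qquad E_{4}E_{6}=E_{10}. $$

These formulae are equivalent to

$$\sigma_{7}(n)=\sigma_{3}(n)+120\sum_{m=1}^{n-1}\sigma_{3}(m)\sigma_{3}(n-m) $$

and

$$11\sigma_{9}(n)=21\sigma_{5}(n)-10\sigma_{3}(n)+5040\sum_{m=1}^{n-1}\sigma_{3}(m)\sigma_{5}(n-m). $$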

It is quite a non-trivial task to find proofs of these number-theoretical statements without using analysis!
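While a proof without analysis is hard, a numerical check is easy. Comparing coefficients in \(E_{4}^{2}=E_{8}\) and \(E_{4}E_{6}=E_{10}\) gives the convolution identities \(\sigma_{7}(n)=\sigma_{3}(n)+120\sum_{m=1}^{n-1}\sigma_{3}(m)\sigma_{3}(n-m)\) and \(11\sigma_{9}(n)=21\sigma_{5}(n)-10\sigma_{3}(n)+5040\sum_{m=1}^{n-1}\sigma_{3}(m)\sigma_{5}(n-m)\). The sketch below tests them in exact integer arithmetic for small n; the function names are illustrative.

```python
def sigma(k, n):
    """Divisor sum: the sum of d^k over all positive divisors d of n."""
    return sum(d ** k for d in range(1, n + 1) if n % d == 0)


def check_weight_8(n):
    """Coefficient comparison in E_4^2 = E_8."""
    conv = sum(sigma(3, m) * sigma(3, n - m) for m in range(1, n))
    return sigma(7, n) == sigma(3, n) + 120 * conv


def check_weight_10(n):
    """Coefficient comparison in E_4 E_6 = E_10."""
    conv = sum(sigma(3, m) * sigma(5, n - m) for m in range(1, n))
    return 11 * sigma(9, n) == 21 * sigma(5, n) - 10 * sigma(3, n) + 5040 * conv


print(all(check_weight_8(n) and check_weight_10(n) for n in range(1, 41)))
```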

2.3 Estimating Fourier Coefficients

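Our goal is to attach so-called L-functions to modular forms by feeding their Fourier coefficients into Dirichlet series. In order to show convergence of these Dirichlet series, we must give growth estimates for the Fourier coefficients. Let

$$f(z)=\sum_{n=0}^{\infty}a_{n}q^{n},\qquad q=e^{2\pi iz}, $$

be a modular form of weight k≥4.

Proposition 2.3.1

If \(f=G_{k}\), then the Fourier coefficients \(a_{n}\) grow like \(n^{k-1}\). More precisely: there are constants A,B>0 with

$$An^{k-1}\le|a_{n}|\le Bn^{k-1}\qquad(n\ge1). $$

Proof

There is a positive number A>0 such that for n≥1 we have \(|a_{n}|=A\sigma_{k-1}(n)\ge An^{k-1}\). On the other hand,

$$\frac{|a_{n}|}{n^{k-1}}=A\sum_{d|n}\frac{1}{d^{k-1}}\le A\sum_{d=1}^{\infty}\frac{1}{d^{k-1}}=A\zeta(k-1)<\infty. $$

□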

Theorem 2.3.2

(Hecke)

The Fourier coefficients \(a_{n}\) of a cusp form f of weight k≥4 satisfy

$$a_{n}=O\bigl(n^{k/2}\bigr). $$

The O-notation means that there is a constant C>0 such that

$$|a_{n}|\le Cn^{k/2}\quad\text{for every }n\in\mathbb{N}. $$

Proof

Since f is a cusp form, it satisfies the estimate \(f(z)=O(q)=O(e^{-2\pi y})\) for q→0 or y→∞. Let \(\phi(z)=y^{k/2}|f(z)|\). The function ϕ is invariant under the group \(\varGamma_{0}\). Furthermore, it is continuous and ϕ(z) tends to 0 for y→∞. So ϕ is bounded on the fundamental domain D of Sect. 2.1, and hence on all of ℍ. This means that there exists a constant C>0 with \(|f(z)|\le Cy^{-k/2}\) for every z∈ℍ. By definition, \(a_{n} = \int_{0}^{1} f(x+iy)q^{-n}\,dx\), so that \(|a_{n}|\le Cy^{-k/2}e^{2\pi ny}\), and this estimate holds for every y>0. For y=1/n one gets \(|a_{n}|\le e^{2\pi}Cn^{k/2}\). □

Remark

It is possible to improve the exponent. Deligne has shown that the Fourier coefficients of a cusp form satisfy

$$a_{n}=O\bigl(n^{\frac{k-1}{2}+\varepsilon}\bigr) $$

for every ε>0.
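For a concrete impression of these bounds one can look at the cusp form of weight 12. The sketch below rests on an assumption not stated in this section: the standard normalization \((E_{4}^{3}-E_{6}^{2})/1728=\sum_{n\ge1}\tau(n)q^{n}\), a scalar multiple of Δ with integer coefficients (Ramanujan's τ-function). It computes τ(n) by exact power-series arithmetic and compares |τ(n)| with Hecke's order of magnitude n⁶ and with the sharper bound d(n)n^{11/2}, where d(n) is the number of divisors.

```python
def sigma(k, n):
    """Divisor sum: the sum of d^k over all positive divisors d of n."""
    return sum(d ** k for d in range(1, n + 1) if n % d == 0)


def multiply(f, g):
    """Product of two truncated q-series given as coefficient lists of equal length."""
    return [sum(f[i] * g[n - i] for i in range(n + 1)) for n in range(len(f))]


def tau_coefficients(N):
    """Coefficients tau(0), ..., tau(N) of (E_4^3 - E_6^2) / 1728."""
    E4 = [1] + [240 * sigma(3, n) for n in range(1, N + 1)]
    E6 = [1] + [-504 * sigma(5, n) for n in range(1, N + 1)]
    E4_cubed = multiply(multiply(E4, E4), E4)
    E6_squared = multiply(E6, E6)
    return [(x - y) // 1728 for x, y in zip(E4_cubed, E6_squared)]


def divisor_count(n):
    return sum(1 for d in range(1, n + 1) if n % d == 0)


tau = tau_coefficients(30)
for n in range(1, 11):
    print(n, tau[n], abs(tau[n]) / n ** 6, abs(tau[n]) / (divisor_count(n) * n ** 5.5))
```

Both printed ratios stay bounded in this range, the second one by 1.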

Corollary 2.3.3

For every \(f\in\mathcal{M}_{k}(\varGamma_{0})\) with Fourier expansion

$$f(z)=\sum_{n=0}^{\infty}a_{n}e^{2\pi inz} $$

we have the estimate

$$a_{n}=O\bigl(n^{k-1}\bigr). $$

Proof

This follows from \(\mathcal{M}_{k}= S_{k}+\mathbb{C}G_{k}\), as well as Proposition 2.3.1 and Theorem 2.3.2. □

2.4 L-Functions

In this section we encounter the question of why modular forms are so important for number theory. To each modular form f we attach an L-function L(f,s). These L-functions are conjectured to be universal in the sense that L-functions defined in entirely different settings are equal to modular L-functions. In the example of L-functions of (certain) elliptic curves this has been shown by Andrew Wiles, who used it to prove Fermat’s Last Theorem [Wil95].

Definition 2.4.1

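For a cusp form f of weight k with Fourier expansion

$$f(z)=\sum_{n=1}^{\infty}a_{n}e^{2\pi inz} $$

we define its L-series or L-function by

$$L(f,s)=\sum_{n=1}^{\infty}\frac{a_{n}}{n^{s}},\qquad s\in\mathbb{C}. $$

Lemma 2.4.2

The series L(f,s) converges locally uniformly in the region \(\operatorname{Re}(s)>\frac{k}{2}+1\).

Proof

From \(a_{n}=O(n^{k/2})\), as in Theorem 2.3.2, it follows that

$$a_{n}n^{-s}=O\bigl(n^{\frac{k}{2}-\operatorname{Re}(s)}\bigr), $$

which implies the claim. □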

For the functional equation of the L-function we need the Gamma function, the definition of which we now recall.

Definition 2.4.3

The Gamma function is defined for \(\operatorname{Re} (z)>0\) by the integral

$$\varGamma(z)=\int_{0}^{\infty}e^{-t}t^{z-1}\,dt. $$

Lemma 2.4.4

The Gamma integral converges locally uniformly absolutely in the right half plane \(\operatorname{Re}(z)>0\) and defines a holomorphic function there. It satisfies the functional equation

$$\varGamma(z+1)=z\varGamma(z). $$

The Gamma function can be extended to a meromorphic function on ℂ, with simple poles at z=−n, n∈ℕ0 and holomorphic otherwise. The residue at z=−n is \(\frac{(-1)^{n}}{n!}\).

Proof

The function \(e^{-t}\) decreases faster at +∞ than any power of t. Therefore the integral \(\int_{1}^{\infty}e^{-t} t^{z-1}\,dt\) converges absolutely for every z∈ℂ and the convergence is locally uniform in z. For 0<t<1 the integrand is bounded in absolute value by \(t^{\operatorname{Re}(z)-1}\), so the integral \(\int_{0}^{1} e^{-t}t^{z-1}\,dt\) converges locally uniformly for \(\operatorname{Re}(z)>0\). As \(zt^{z-1}\) is the derivative of \(t^{z}\), we can use integration by parts to compute

$$z\varGamma(z)=\int_{0}^{\infty}e^{-t}zt^{z-1}\,dt=\bigl[e^{-t}t^{z}\bigr]_{0}^{\infty}+\int_{0}^{\infty}e^{-t}t^{z}\,dt=\varGamma(z+1). $$

The function Γ(z) is holomorphic in \(\operatorname{Re}(z)>0\). Using the formula

$$\varGamma(z)=\frac{\varGamma(z+1)}{z}, $$

we can extend the Gamma function to the region \(\operatorname {Re}(z)>-1\) with a simple pole at z=0 of residue equal to \(\varGamma (1)=\int_{0}^{\infty}e^{-t}\,dt=1\). This argument can be iterated to get the meromorphic continuation to all of ℂ. □
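The functional equation and the residues \(\frac{(-1)^{n}}{n!}\) can be observed numerically. The sketch below uses `math.gamma` from the Python standard library, which is restricted to real arguments, so it only probes the real axis. The residue is approximated by h·Γ(−n+h) for a small h, an ad hoc choice that is accurate to roughly order h.

```python
import math


def functional_equation_gap(z):
    """|Gamma(z+1) - z*Gamma(z)| for a real z that is not a pole."""
    return abs(math.gamma(z + 1) - z * math.gamma(z))


def residue_estimate(n, h=1e-7):
    """Approximate the residue of Gamma at z = -n by h * Gamma(-n + h)."""
    return h * math.gamma(-n + h)


print(functional_equation_gap(0.5), functional_equation_gap(-1.5))
for n in range(6):
    print(n, residue_estimate(n), (-1) ** n / math.factorial(n))
```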

Theorem 2.4.5

Let f be a cusp form of weight k. Then the L-function L(f,s), initially holomorphic for \(\operatorname {Re}(s)>\frac{k}{2}+1\), has an analytic continuation to an entire function. The extended function

$$\varLambda(f,s)=(2\pi)^{-s}\varGamma(s)L(f,s) $$

is entire as well and satisfies the functional equation

$$\varLambda(f,s)=(-1)^{k/2}\varLambda(f,k-s). $$

The function Λ(f,s) is bounded on every vertical strip, i.e. for every T>0 there exists \(C_{T}>0\) such that \(|\varLambda(f,s)|\le C_{T}\) for every s∈ℂ with \(|\operatorname{Re}(s)|\le T\).

Proof

Let \(f(z)=\sum_{n=1}^{\infty}a_{n}q^{n}\) with q=e 2πiz be the Fourier expansion. According to Theorem 2.3.2 there is a constant C>0 such that |a n |≤Cn k/2 holds for every n∈ℕ. So for given ε>0 we have for all y≥ε,

with \(D=C\sum_{n=1}^{\infty}n^{k/2}e^{-\varepsilon \pi n}<\infty\). So the function f(iy) is rapidly decreasing as y→∞. The same estimate holds for the function \(y\mapsto\sum_{n=1}^{\infty}|a_{n}|e^{-2\pi yn}\). Consequently, for every s∈ℂ we have

Hence we are allowed to interchange sums and integrals in the following computation due to absolute convergence:

For \(\operatorname{Re}(s)>\frac{k}{2}+1\) the right-hand side converges to

as ε tends to zero. On the other hand, \(f(i\frac{1}{y})=f(-\frac{1}{iy}) =(yi)^{k}f(iy)\), so that f(i/y) is also rapidly decreasing, and the left-hand side converges to \(\int_{0}^{\infty}f(iy)y^{s-1}\,dy\), as ε→0. Together, for \(\operatorname{Re}(s)>\frac{k}{2}+1\) we get

We write this integral as the sum \(\int_{0}^{1}+\int_{1}^{\infty}\). As f(iy) is rapidly decreasing, the integral \(\varLambda_{1}(f,s)=\int_{1}^{\infty}f(iy)y^{s-1}\,dy\) converges for every s∈ℂ and defines an entire function.

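Because of

$$|\varLambda_{1}(f,s)|\le\int_{1}^{\infty}|f(iy)|\,y^{\operatorname{Re}(s)-1}\,dy,$$

the function \(\varLambda_{1}(f,s)\) is bounded on every vertical strip.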

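For the second integral we have

$$\varLambda_{2}(f,s)=\int_{0}^{1}f(iy)y^{s-1}\,dy=\int_{1}^{\infty}f\Bigl(\frac{i}{y}\Bigr)y^{-s-1}\,dy=\int_{1}^{\infty}(iy)^{k}f(iy)y^{-s-1}\,dy=i^{k}\int_{1}^{\infty}f(iy)y^{k-s-1}\,dy,$$

which means \(\varLambda_{2}(f,s)=(-1)^{k/2}\varLambda_{1}(f,k-s)\), so the claim follows. □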

Generally, a series of the form

$$L(s)=\sum_{n=1}^{\infty}\frac{a_{n}}{n^{s}}$$

for s∈ℂ, convergent or not, is called a Dirichlet series. The following typical convergence behavior of a Dirichlet series will be needed in the sequel.

Lemma 2.4.6

Let \((a_{n})\) be a sequence of complex numbers. If for a given \(s_{0}\in\mathbb{C}\) the sequence \(\frac{a_{n}}{n^{s_{0}}}\) is bounded, then the Dirichlet series \(L(s)=\sum_{n=1}^{\infty}\frac {a_{n}}{n^{s}}\) converges absolutely uniformly on every set of the form

$$\bigl\{s\in\mathbb{C}:\operatorname{Re}(s)\ge\operatorname{Re}(s_{0})+1+\varepsilon\bigr\},$$

where ε>0.

This lemma reminds us of the convergence behavior of a power series. This is by no means an accident, as the power series with coefficients (a n ) and the corresponding Dirichlet series are linked via the Mellin transform, as we shall see below.

Proof

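Suppose that \(|a_{n}n^{-s_{0}}|\le M\) for some M>0 and every n∈ℕ. Let ε>0 be given and let s∈ℂ with \(\operatorname{Re}(s)\ge\operatorname{Re}(s_{0})+1+\varepsilon \). Then \(s=s_{0}+\alpha\) with \(\operatorname{Re}(\alpha )\ge 1+\varepsilon \), and so

$$\Bigl|\frac{a_{n}}{n^{s}}\Bigr|=\Bigl|\frac{a_{n}}{n^{s_{0}}}\Bigr|\,\frac{1}{n^{\operatorname{Re}(\alpha)}}\le\frac{M}{n^{1+\varepsilon}}.$$

As the series over \(1/n^{1+\varepsilon}\) converges, the lemma follows. □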

Theorem 2.4.7

(Hecke’s converse theorem)

Let \(a_{n}\) be a sequence in ℂ, such that the Dirichlet series \(L(s)=\sum_{n=1}^{\infty}a_{n} n^{-s}\) converges in the region \(\{ \operatorname{Re} (s)>C\}\) for some C∈ℝ. If the function \(\varLambda(s)=(2\pi)^{-s}\varGamma(s)L(s)\) extends to an entire function, which satisfies the functional equation

$$\varLambda(s)=(-1)^{k/2}\varLambda(k-s),$$

then there exists a cusp form \(f\in S_{k}\) with L(s)=L(f,s).

Proof

We use the inversion formula of the Fourier transform: For \(f\in L^{1}(\mathbb{R})\) let

$$\hat{f}(y)=\int_{\mathbb{R}}f(x)e^{-2\pi ixy}\,dx.$$

Suppose that f is two times continuously differentiable and that the functions f,f′,f″ are all in \(L^{1}(\mathbb{R})\). Then \(\hat{f}(y)=O((1+|y|)^{-2})\), so that \(\hat{f}\in L^{1}(\mathbb{R})\). Under these conditions, we have the Fourier inversion formula:

$$f(x)=\int_{\mathbb{R}}\hat{f}(y)e^{2\pi ixy}\,dy.$$

A proof of this fact can be found in any of the books [Dei05, Rud87, SW71]. We use this formula here for the proof of the Mellin inversion formula.

Theorem 2.4.8

(Mellin inversion formula)

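Suppose that the function g is two times continuously differentiable on the interval (0,∞) and for some c∈ℝ the functions

$$x^{c}g(x),\qquad x^{c+1}g'(x),\qquad x^{c+2}g''(x)$$

are all in \(L^{1}(\mathbb{R}_{+},\frac{dx}{x})\). Then the Mellin transform

$$\mathcal{M}g(s)=\int_{0}^{\infty}x^{s}g(x)\,\frac{dx}{x}$$

exists for \(\operatorname{Re}(s)=c\), and satisfies the growth estimate \(\mathcal{M} g(c+it)=O((1+|t|)^{-2})\). Finally, for every x∈(0,∞) one has the inversion formula:

$$g(x)=\frac{1}{2\pi i}\int_{c-i\infty}^{c+i\infty}x^{-s}\mathcal{M}g(s)\,ds.$$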

Proof

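A given s∈ℂ with \(\operatorname{Re}(s)=c\) can be written as s=c−2πiy for a unique y∈ℝ. The substitution \(x=e^{t}\) gives

$$\mathcal{M}g(s)=\int_{\mathbb{R}}e^{st}g(e^{t})\,dt=\int_{\mathbb{R}}F(t)e^{-2\pi iyt}\,dt$$

with \(F(t)=e^{ct}g(e^{t})\). The conditions imply that F is two times continuously differentiable and that F,F′,F″ are all in \(L^{1}(\mathbb{R})\). Further, one has \(\hat{F}(y)=\mathcal{M}g(c-2\pi iy)\). By the Fourier inversion formula we deduce, with \(x=e^{t}\),

$$g(x)=e^{-ct}F(t)=\int_{\mathbb{R}}\mathcal{M}g(c-2\pi iy)\,x^{-(c-2\pi iy)}\,dy=\frac{1}{2\pi i}\int_{c-i\infty}^{c+i\infty}x^{-s}\mathcal{M}g(s)\,ds.$$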

The theorem is proven. □

We now show Hecke’s converse theorem. Let \(a_{n}\) be a sequence in ℂ, such that the Dirichlet series \(L(s)=\sum_{n=1}^{\infty}a_{n} n^{-s}\) converges in the region \(\{ \operatorname{Re} (s)> C\}\) for a given C∈ℝ. We define

$$f(z)=\sum_{n=1}^{\infty}a_{n}e^{2\pi inz}.$$

According to Lemma 2.4.6 there is a natural number N∈ℕ such that the Dirichlet series L(s) converges absolutely for \(\operatorname{Re}(s)\ge N\). Therefore one has \(a_{n}=O(n^{N})\), so the series f(z) converges locally uniformly on the upper half plane ℍ and defines a holomorphic function there. We intend to show that it is a cusp form of weight k. Since the group Γ is generated by the elements S and T, it suffices to show that \(f(-1/z)=z^{k}f(z)\). As f is holomorphic, it suffices to show that \(f(i/y)=(iy)^{k}f(iy)\) for y>0.

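We first show that the Mellin transform of the function g(y)=f(iy) exists and that the Mellin inversion formula holds for g. As \(a_{n}=O(n^{N})\), there is a constant \(C_{0}>0\) such that

$$|f(iy)|\le\sum_{n=1}^{\infty}|a_{n}|e^{-2\pi ny}\le C_{0}\sum_{n=1}^{\infty}n^{N}e^{-2\pi ny}.$$

Denote \(g_{N}(y)=\sum_{n=1}^{\infty}n^{N} e^{-2\pi ny}\). The geometric series gives

$$g_{0}(y)=\sum_{n=1}^{\infty}e^{-2\pi ny}=\frac{1}{e^{2\pi y}-1}=\frac{1}{2\pi y}+h(y)$$

for some function h which is holomorphic in y=0. Then

$$g_{N}(y)=\Bigl(\frac{-1}{2\pi}\Bigr)^{N}g_{0}^{(N)}(y)=\frac{N!}{(2\pi y)^{N+1}}+\Bigl(\frac{-1}{2\pi}\Bigr)^{N}h^{(N)}(y),$$

so \(|g_{N}(y)|\le\frac{C}{y^{N+1}}\) for y→0. The same estimate holds for f(iy). For y>1 the function |f(iy)| is less than a constant times

$$\sum_{n=1}^{\infty}n^{N}e^{-2\pi ny}\le e^{-\pi y}\sum_{n=1}^{\infty}n^{N}e^{-\pi n}.$$

So the function f(iy) is rapidly decreasing for y→∞. The same estimates hold for every derivative of f, increasing N if necessary. So the Mellin integral \(\mathcal{M}g(s)\) converges for \(\operatorname{Re}(s)> N+1\) and since f(iy) is rapidly decreasing for y→∞, the conditions for the Mellin inversion formula are satisfied. Hence by Theorem 2.4.8 we have for every c>N+1,

$$f(iy)=\frac{1}{2\pi i}\int_{c-i\infty}^{c+i\infty}y^{-s}\mathcal{M}g(s)\,ds=\frac{1}{2\pi i}\int_{c-i\infty}^{c+i\infty}y^{-s}\varLambda(s)\,ds,$$

where \(\mathcal{M}g(s)=\varLambda(s)\) by the computation in the proof of Theorem 2.4.5.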

We next use a classical result of complex analysis, which itself follows from the maximum principle.

Lemma 2.4.9

(Phragmén–Lindelöf principle)

Let ϕ(s) be holomorphic in the strip \(a\le\operatorname{Re}(s)\le b\) for some real numbers a<b. Assume there is α>0, such that for every a≤σ≤b we have \(\phi(\sigma+it)=O(e^{|t|^{\alpha}})\). Suppose there is M∈ℝ with \(\phi(\sigma+it)=O((1+|t|)^{M})\) for σ=a and σ=b. Then we have \(\phi(\sigma+it)=O((1+|t|)^{M})\) uniformly for all σ∈[a,b].

Proof

See for instance [Con78], Chap. VI, or [Haz01, SS03]. □

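We apply this principle to the case ϕ=Λ and a=k−c as well as b=c. We move the path of integration to \(\operatorname{Re}(s)=c'=k-c\), where the integral also converges, according to the functional equation. This move of the integration path is possible by the Phragmén–Lindelöf principle. We infer that

$$f(iy)=\frac{1}{2\pi i}\int_{k-c-i\infty}^{k-c+i\infty}y^{-s}\varLambda(s)\,ds=\frac{(-1)^{k/2}}{2\pi i}\int_{k-c-i\infty}^{k-c+i\infty}y^{-s}\varLambda(k-s)\,ds=\frac{(-1)^{k/2}}{2\pi i}\int_{c-i\infty}^{c+i\infty}y^{s-k}\varLambda(s)\,ds=(-1)^{k/2}y^{-k}f\Bigl(\frac{i}{y}\Bigr).$$

Since \((-1)^{k/2}=\pm1\) and \((iy)^{k}=(-1)^{k/2}y^{k}\), this is the desired identity \(f(i/y)=(iy)^{k}f(iy)\). □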

2.5 Hecke Operators

We introduce Hecke operators, which are given by summation over cosets of matrices of fixed determinant. In later chapters, we shall encounter a reinterpretation of these operators in the adelic setting.

For given n∈ℕ let \(M_{n}\) denote the set of all matrices in \(\mathrm{M}_{2}(\mathbb{Z})\) of determinant n. The group \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) acts on \(M_{n}\) by multiplication from the left.

Lemma 2.5.1

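The set \(M_{n}\) decomposes into finitely many \(\varGamma_{0}\)-orbits under multiplication from the left. More precisely, the set

$$R_{n}=\left\{\begin{pmatrix}a&b\\ &d\end{pmatrix}: a,d\in\mathbb{N},\ ad=n,\ 0\le b<d\right\}$$

is a set of representatives of \(\varGamma_{0}\backslash M_{n}\).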

Notation

Here and for the rest of the book we use the convention that a zero entry of a matrix may be left out, so \(\bigl(\begin{smallmatrix}a&b\\ &d\end{smallmatrix}\bigr)\) stands for the matrix \(\bigl(\begin{smallmatrix}a&b\\ 0&d\end{smallmatrix}\bigr)\).

Proof

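We have to show that every \(\varGamma_{0}\)-orbit meets the set \(R_{n}\) in exactly one element. For this let \(\bigl(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\bigr)\in M_{n}\). For x∈ℤ we have

$$\begin{pmatrix}1& \\ x&1\end{pmatrix}\begin{pmatrix}a&b\\ c&d\end{pmatrix}=\begin{pmatrix}a&b\\ c+ax&d+bx\end{pmatrix}.$$

This implies that, modulo \(\varGamma_{0}\), we can assume 0≤c<|a|. By the identity

$$\begin{pmatrix} &-1\\ 1& \end{pmatrix}\begin{pmatrix}a&b\\ c&d\end{pmatrix}=\begin{pmatrix}-c&-d\\ a&b\end{pmatrix}$$

one can interchange a and c, then reduce again by the first step and iterate this process until one gets c=0, which implies that every \(\varGamma_{0}\)-orbit contains an element of the form \(\bigl(\begin{smallmatrix}a&b\\ &d\end{smallmatrix}\bigr)\). Then ad=det=n, and since \(-1\in\varGamma_{0}\) one can assume a,d∈ℕ. By

$$\begin{pmatrix}1&x\\ &1\end{pmatrix}\begin{pmatrix}a&b\\ &d\end{pmatrix}=\begin{pmatrix}a&b+dx\\ &d\end{pmatrix}$$

one can finally reduce to 0≤b<d, so every \(\varGamma_{0}\)-orbit meets the set \(R_{n}\).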

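In order to show that \(R_{n}\) is a proper set of representatives, it remains to show that two elements in \(R_{n}\), which lie in the same \(\varGamma_{0}\)-orbit, are equal. For this let \(\bigl(\begin{smallmatrix}a&b\\ &d\end{smallmatrix}\bigr),\bigl(\begin{smallmatrix}a'&b'\\ &d'\end{smallmatrix}\bigr)\in R_{n}\) be in the same \(\varGamma_{0}\)-orbit. This means that there is \(\bigl(\begin{smallmatrix}x&y\\ z&w\end{smallmatrix}\bigr)\in\varGamma_{0}\) with

$$\begin{pmatrix}a'&b'\\ &d'\end{pmatrix}=\begin{pmatrix}x&y\\ z&w\end{pmatrix}\begin{pmatrix}a&b\\ &d\end{pmatrix}.$$

The right-hand side is of the form \(\bigl(\begin{smallmatrix}ax&*\\ az&*\end{smallmatrix}\bigr)\). Since a≠0, we infer that z=0. Then xw=1, so x=w=±1. Because of

$$\begin{pmatrix}a'&b'\\ &d'\end{pmatrix}=\begin{pmatrix}x&y\\ &w\end{pmatrix}\begin{pmatrix}a&b\\ &d\end{pmatrix}=\begin{pmatrix}ax&bx+dy\\ &dw\end{pmatrix}$$

one has a′=ax>0, so x>0 and therefore x=1=w, so a′=a and d′=d. It follows that

$$\begin{pmatrix}a&b'\\ &d\end{pmatrix}=\begin{pmatrix}1&y\\ &1\end{pmatrix}\begin{pmatrix}a&b\\ &d\end{pmatrix}=\begin{pmatrix}a&b+dy\\ &d\end{pmatrix},$$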

so that the condition 0≤b,b′<d finally forces b=b′. □
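The reduction used in this proof is effectively an algorithm. The Python sketch below lists the set of representatives described in Lemma 2.5.1 and reduces an arbitrary integer matrix of positive determinant to its representative, using only left multiplication by elements of SL2(ℤ). The function names and the encoding of an upper triangular matrix as a triple `(a, b, d)` are conventions chosen here for illustration.

```python
def hecke_representatives(n):
    """All triples (a, b, d), standing for [[a, b], [0, d]], with ad = n and 0 <= b < d."""
    reps = []
    for d in range(1, n + 1):
        if n % d == 0:
            a = n // d
            for b in range(d):
                reps.append((a, b, d))
    return reps


def reduce_to_representative(a, b, c, d):
    """Reduce [[a, b], [c, d]] (positive determinant) by left SL_2(Z)-multiplication."""
    if a * d - b * c <= 0:
        raise ValueError("determinant must be positive")
    while c != 0:
        q = a // c
        a, b = a - q * c, b - q * d    # left-multiply by [[1, -q], [0, 1]]
        a, b, c, d = c, d, -a, -b      # left-multiply by [[0, 1], [-1, 0]]
    if a < 0:
        a, b, d = -a, -b, -d           # left-multiply by -1
    return (a, b % d, d)               # left-multiply by [[1, x], [0, 1]]


print(len(hecke_representatives(6)))          # number of representatives for n = 6
print(reduce_to_representative(2, 1, 4, 5))   # a matrix of determinant 6
```

The loop is the Euclidean algorithm on the first column, so it terminates; the number of representatives is the divisor sum of n, since each divisor d of n contributes d values of b.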

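Let \(\operatorname{GL}_{2}(\mathbb{R})^{+}\) be the set of all \(g\in \operatorname{GL}_{2}(\mathbb{R})\) of positive determinant. The group \(\operatorname{GL}_{2}(\mathbb{R})^{+}\) acts on the upper half plane ℍ by

$$\begin{pmatrix}a&b\\ c&d\end{pmatrix}z=\frac{az+b}{cz+d}.$$

The center \(\bigl\{\bigl(\begin{smallmatrix}\lambda& \\ &\lambda\end{smallmatrix}\bigr):\lambda\in\mathbb{R}^{\times}\bigr\}\) acts trivially.

For k∈2ℤ, a function f on ℍ and \(\gamma=\bigl(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\bigr)\in\operatorname{GL}_{2}(\mathbb{R})^{+}\) we write

$$f|_{k}\gamma(z)=\det(\gamma)^{k/2}(cz+d)^{-k}f\Bigl(\frac{az+b}{cz+d}\Bigr).$$

If k is fixed, we also use the simpler notation f|γ(z). Note that the power k/2 of the determinant factor has been chosen so that the center of \(\operatorname{GL}_{2}(\mathbb{R})^{+}\) acts trivially.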

We write Γ 0=SL2(ℤ). For n∈ℕ define the Hecke operator T n as follows.

Definition 2.5.2

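Denote by V the vector space of all functions f:ℍ→ℂ with f|γ=f for every \(\gamma\in\varGamma_{0}\). Define \(T_{n}:V\to V\) by

$$T_{n}f=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}f|y,$$

where the colon means that the sum runs over an arbitrary set of representatives of \(\varGamma_{0}\backslash M_{n}\) in \(M_{n}\). The factor \(n^{\frac{k}{2}-1}\) is for normalization only. The sum is well defined and finite, as f|γ=f for every \(\gamma\in\varGamma_{0}\) and \(\varGamma_{0}\backslash M_{n}\) is finite. In order to show that \(T_{n}f\) indeed lies in the space V, we compute for \(\gamma\in\varGamma_{0}\),

$$(T_{n}f)|\gamma=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}f|y\gamma=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}f|y=T_{n}f,$$

since with y also yγ runs through a set of representatives of \(\varGamma_{0}\backslash M_{n}\). Using Lemma 2.5.1 we can write

$$T_{n}f(z)=n^{k-1}\sum_{\substack{a,d\in\mathbb{N}\\ ad=n}}\ \sum_{0\le b<d}d^{-k}f\Bigl(\frac{az+b}{d}\Bigr).$$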

Lemma 2.5.3

The Hecke operator T n preserves the spaces \(\mathcal{M}_{k}(\varGamma_{0})\) and S k (Γ 0).

Proof

We have just shown that for a given \(f\in\mathcal{M}_{k}(\varGamma_{0})\) the function \(T_{n}f\) is invariant under the action of \(\varGamma_{0}\). Being a finite sum of holomorphic functions, the function \(T_{n}f\) is holomorphic on ℍ. To show that \(T_{n}f\) is a modular form, we write

$$T_{n}f(z)=n^{k-1}\sum_{\substack{a,d\in\mathbb{N}\\ ad=n}}\ \sum_{0\le b<d}d^{-k}f\Bigl(\frac{az+b}{d}\Bigr).$$

This formula shows that \(T_{n}f(z)\) converges as \(\operatorname {Im}(z)\to\infty\), since f(z) does. This means that \(T_{n}f\in\mathcal{M}_{k}(\varGamma_{0})\). If f is a cusp form, the limit is zero and the same holds for \(T_{n}f\). □

Proposition 2.5.4

The Hecke operators satisfy the equations

- \(T_{1}=\operatorname{Id}\),

- \(T_{mn}=T_{m}T_{n}\), if \(\operatorname{gcd}(m,n)=1\),

- for every prime number p and every n∈ℕ one has \(T_{p}T_{p^{n}} = T_{p^{n+1}}+p^{k-1}T_{p^{n-1}}\).

Together these equations imply that \(T_{n}T_{m}=T_{m}T_{n}\) always, i.e. all Hecke operators commute with each other.

Proof

The first assertion is trivial. For the second note

If m,n∈ℕ are coprime, then it follows that \(|R_{mn}|=|R_{m}||R_{n}|\). To ease the presentation we will, in the following calculations, in an integer matrix \(\bigl(\begin{smallmatrix}a&b\\ &d\end{smallmatrix}\bigr)\), consider the number b only modulo d. Under this proviso, we show that the map

is a bijection, where we still assume that m and n are coprime. As both sets have the same cardinality, it suffices to show injectivity. So let

Then aa′=αα′ and since (m,n)=1, it follows that a=α and a′=α′. Analogously for d and δ. So we have

Reduction modulo d′ gives

Being a divisor of n, the number a is coprime to d′, so \(b'\equiv \beta' \bmod d'\). In the same way we get \(b\equiv\beta \bmod d\). Hence \(R_{m}R_{n}=R_{mn}\) and so

For the last point note

as well as

It follows that

The second set, together with \(\bigl(\begin{smallmatrix}p^{n+1}& \\ &1\end{smallmatrix}\bigr)\), is a set of representatives \(R_{p^{n+1}}\). The sum over this gives the term \(T_{p^{n+1}}\). The first set minus \(\bigl(\begin{smallmatrix}p^{n+1}& \\ &1\end{smallmatrix}\bigr)\) is

Denote this last set by S. Since the central p acts trivially, one gets

□

We now want to see how the application of a Hecke operator changes the Fourier expansion of a modular form.

Proposition 2.5.5

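For a given form \(f(z)=\sum_{m\ge0}c(m)q^{m}\in\mathcal{M}_{k}\) and n∈ℕ the Fourier expansion of \(T_{n}f\) is

$$T_{n}f(z)=\sum_{m\ge0}\gamma(m)q^{m}$$

with

$$\gamma(m)=\sum_{\substack{a\ge1\\ a\,|\,\operatorname{gcd}(m,n)}}a^{k-1}c\Bigl(\frac{mn}{a^{2}}\Bigr).$$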

Proof

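By definition we have

$$T_{n}f(z)=n^{k-1}\sum_{ad=n}\ \sum_{0\le b<d}d^{-k}\sum_{m\ge0}c(m)e^{2\pi im\frac{az+b}{d}}.$$

The sum \(\sum_{0\le b<d}e^{2\pi ibm/d}\) equals d if d|m and 0 otherwise. Setting m′=m/d one gets

$$T_{n}f(z)=n^{k-1}\sum_{ad=n}d^{1-k}\sum_{m'\ge0}c(dm')q^{am'}=\sum_{ad=n}a^{k-1}\sum_{m'\ge0}c(dm')q^{am'}.$$

Sorting this by powers of q results in

$$T_{n}f(z)=\sum_{\mu\ge0}q^{\mu}\sum_{a\,|\,\operatorname{gcd}(n,\mu)}a^{k-1}c\Bigl(\frac{n\mu}{a^{2}}\Bigr).$$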

The proposition is proven. □
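The action on Fourier coefficients can be tried out on a concrete form. The Python sketch below assumes the standard coefficient formula \(\gamma(m)=\sum_{a|\gcd(m,n)}a^{k-1}c(mn/a^{2})\) and tests it on the discriminant form of weight 12, whose coefficients are generated from the product expansion \(\Delta=q\prod_{n\ge1}(1-q^{n})^{24}\). That product expansion is a classical fact not derived in this section, and the function names are chosen here for illustration.

```python
from math import gcd


def delta_coefficients(num_terms):
    """Coefficients of q^0 .. q^(num_terms-1) of Delta = q * prod_{n>=1} (1 - q^n)^24."""
    poly = [0] * num_terms
    poly[0] = 1
    for n in range(1, num_terms):
        for _ in range(24):
            # multiply the truncated series in place by (1 - q^n)
            for i in range(num_terms - 1, n - 1, -1):
                poly[i] -= poly[i - n]
    return [0] + poly[:num_terms - 1]   # the extra factor q shifts all exponents by one


def hecke_coefficients(c, n, k, num_terms):
    """First num_terms Fourier coefficients of T_n f, where c lists the coefficients of f."""
    if len(c) <= (num_terms - 1) * n:
        raise ValueError("not enough coefficients of f")
    result = []
    for m in range(num_terms):
        g = gcd(m, n)                   # gcd(0, n) = n
        total = 0
        for a in range(1, g + 1):
            if g % a == 0:
                total += a ** (k - 1) * c[m * n // (a * a)]
        result.append(total)
    return result


tau = delta_coefficients(40)
print(tau[:7])
print(hecke_coefficients(tau, 2, 12, 7))
```

Since the space of cusp forms of weight 12 is one-dimensional, the second line printed should be the first one multiplied by the coefficient of q², which is what the check below confirms.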

The following two corollaries are simple consequences of the proposition.

Corollary 2.5.6

One has \(\gamma(0)=\sigma_{k-1}(n)c(0)\) and γ(1)=c(n).

Corollary 2.5.7

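If p is a prime number, then the Fourier coefficients γ(m) of \(T_{p}f\) are

$$\gamma(m)=\begin{cases}c(pm)&\text{if }p\nmid m,\\ c(pm)+p^{k-1}c(m/p)&\text{if }p\mid m.\end{cases}$$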

In Proposition 2.5.4 we have shown that Hecke operators commute with each other. We next show that they can be diagonalized simultaneously.

Lemma 2.5.8

A set of commuting self-adjoint operators on a finite-dimensional unitary space can be simultaneously diagonalized.

We elaborate the formulation of this lemma as follows: let V be a finite-dimensional complex vector space equipped with an inner product 〈.,.〉 and let \(E\subset\operatorname{End}(V)\) be a set of self-adjoint operators on V. Suppose that any two elements S,T∈E commute, i.e. ST=TS. Then there exists a basis of V such that all elements of E are represented by diagonal matrices with respect to that basis. More precisely, this basis, say \(v_{1},\dots,v_{n}\), consists of simultaneous eigenvectors, so for each 1≤j≤n there exists a map \(\chi_{j}:E\to\mathbb{C}\) such that

$$Tv_{j}=\chi_{j}(T)v_{j}$$

holds for every T∈E.

Proof

We prove the lemma by induction on the dimension of V. If dim(V)=1, then there is nothing to show. So suppose dim(V)>1 and that the claim is proven for all spaces of smaller dimension. If all T∈E are multiples of the identity, i.e. \(T=\lambda \operatorname{Id}\) for some λ=λ(T)∈ℂ, then the claim follows. So assume there exists a T∈E not a multiple of the identity. Since T is self-adjoint, it is diagonalizable, so V is the direct sum of the eigenspaces of T, and each eigenspace is of dimension strictly smaller than dim(V). Let S∈E and let \(V_{\lambda}\) be the T-eigenspace for the eigenvalue λ. We claim that \(S(V_{\lambda})\subset V_{\lambda}\). For a given \(v\in V_{\lambda}\) we have

$$T(S(v))=S(T(v))=S(\lambda v)=\lambda S(v),$$

i.e. \(S(v)\in V_{\lambda}\). So the space \(V_{\lambda}\) is stable under all S∈E and, by the induction hypothesis, \(V_{\lambda}\) has a basis of simultaneous eigenvectors. As this holds for all eigenvalues of T, the entire space V has such a basis. □

Definition 2.5.9

Let E be as in the lemma. Then V has a basis \(v_{1},\dots,v_{n}\) such that for every S∈E,

$$Sv_{j}=\chi_{j}(S)v_{j}$$

for a scalar \(\chi_{j}(S)\in\mathbb{C}\). We say that the \(v_{j}\) are simultaneous eigenvectors of E.

Recall the notion of a complex algebra. This is a ℂ-vector space A with a bilinear map A×A→A written (a,b)↦ab, which is associative, i.e. one has

$$(ab)c=a(bc)$$

for all a,b,c∈A.

Examples 2.5.10

- The set \(\mathrm{M}_{n}(\mathbb{C})\) of complex n×n matrices is a complex algebra which is isomorphic to the algebra \(\operatorname{End}(V)\) of linear endomorphisms of a complex vector space of dimension n. Giving an isomorphism \(\operatorname{End}(V)\cong\mathrm {M}_{n}(\mathbb{C})\) is equivalent to choosing a basis of V.

- The set \(\mathcal{B}(V)\) of bounded linear operators on a Banach space V is a complex algebra.

- Let \(\emptyset\ne E\subset\operatorname{End}(V)\) for a vector space V. The algebra generated by E is the set of all linear combinations of operators of the form \(S_{1}\cdots S_{n}\), where \(S_{1},\dots,S_{n}\in E\). It is the smallest algebra which contains E.

Denote by \(\mathcal{A}\) the algebra generated by E. Then the \(v_{j}\) are simultaneous eigenvectors for the whole of \(\mathcal{A}\), and the maps \(\chi_{j}\) can be extended to maps \(\chi_{j}:\mathcal{A}\to\mathbb{C}\), such that for every operator \(T\in\mathcal{A}\) the eigen-equation \(Tv_{j}=\chi_{j}(T)v_{j}\) holds. Note that for \(S,T\in\mathcal{A}\) one has

$$\chi_{j}(S+T)v_{j}=(S+T)v_{j}=Sv_{j}+Tv_{j}=\bigl(\chi_{j}(S)+\chi_{j}(T)\bigr)v_{j},$$

so \(\chi_{j}(S+T)=\chi_{j}(S)+\chi_{j}(T)\). Further \(\chi_{j}(\lambda T)=\lambda\chi_{j}(T)\) for every λ∈ℂ; this means that each \(\chi_{j}\) is a linear map. More than that, one has

$$\chi_{j}(ST)v_{j}=STv_{j}=S\bigl(\chi_{j}(T)v_{j}\bigr)=\chi_{j}(T)Sv_{j}=\chi_{j}(S)\chi_{j}(T)v_{j},$$

so it even follows that \(\chi_{j}(ST)=\chi_{j}(S)\chi_{j}(T)\), i.e. the map \(\chi_{j}\) is multiplicative. Together this means: every \(\chi_{j}\) is an algebra homomorphism of the algebra \(\mathcal{A}\) to ℂ.

In the sequel, we shall need the following theorem, known as the Elementary Divisor Theorem.

Theorem 2.5.11

(Elementary Divisor Theorem)

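For a given integer matrix \(A\in\mathrm{M}_{n}(\mathbb{Z})\) with det(A)≠0 there exist invertible matrices \(S,T\in\operatorname{GL}_{n}(\mathbb{Z})\) and natural numbers \(d_{1},d_{2},\dots,d_{n}\) with \(d_{j}\,|\,d_{j+1}\) such that

$$A=S\begin{pmatrix}d_{1}& & \\ &\ddots& \\ & &d_{n}\end{pmatrix}T.$$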

The numbers d 1,…,d n are uniquely determined by A and are called the elementary divisors of A.

Proof

For example in [HH80]. □
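In the 2×2 case, which is the only one needed below, the elementary divisors can be read off directly: \(d_{1}\) is the greatest common divisor of the four entries and \(d_{1}d_{2}=|\det(A)|\). The following Python sketch relies on this standard description, which is not proved in the text; the function name is chosen here for illustration.

```python
from math import gcd


def elementary_divisors_2x2(a, b, c, d):
    """Elementary divisors (d1, d2) of the integer matrix [[a, b], [c, d]], det != 0."""
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix must have non-zero determinant")
    d1 = gcd(gcd(a, b), gcd(c, d))   # gcd of all entries
    d2 = abs(det) // d1              # d1 * d2 = |det|
    return d1, d2


print(elementary_divisors_2x2(4, 0, 0, 6))
```

Since every entry is divisible by \(d_{1}\), the determinant is divisible by \(d_{1}^{2}\), so \(d_{1}\) divides \(d_{2}\) as the theorem requires.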

Definition 2.5.12

Denote by \(\operatorname{GL}_{2}(\mathbb{Q})^{+}\) the set of all matrices \(g\in\operatorname{GL}_{2}(\mathbb{Q})\) with det(g)>0. This is a subgroup of the group \(\operatorname{GL}_{2}(\mathbb{Q})\) of index 2.

Proposition 2.5.13

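We continue to write \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\). A complete set of representatives of the double quotient

$$\varGamma_{0}\backslash\operatorname{GL}_{2}(\mathbb{Q})^{+}/\varGamma_{0}$$

is given by the set of all diagonal matrices \(\bigl(\begin{smallmatrix}a& \\ &an\end{smallmatrix}\bigr)\), where a∈ℚ, a>0, and n∈ℕ.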

Proof

For a given \(\alpha \in\operatorname{GL}_{2}(\mathbb{Q})^{+}\) there exists N∈ℕ, such that Nα is an integer matrix. By the Elementary Divisor Theorem there are \(S,T\in\operatorname{GL}_{2}(\mathbb{Z})\) such that Nα=SDT, where \(D=\bigl(\begin{smallmatrix}d_{1}& \\ &d_{1}n\end{smallmatrix}\bigr)\) with \(d_{1},n\in\mathbb{N}\). If necessary, one can multiply S and T with the matrix \(\bigl(\begin{smallmatrix}-1& \\ &1\end{smallmatrix}\bigr)\), which commutes with D, so that \(S,T\in\mathrm{SL}_{2}(\mathbb{Z})\) can be assumed. Therefore we find \(\alpha\in\varGamma_{0}\bigl(\begin{smallmatrix}a& \\ &an\end{smallmatrix}\bigr)\varGamma_{0}\) with \(a=d_{1}/N\). The uniqueness of the representative follows from the Elementary Divisor Theorem, if one chooses N as the unique smallest N∈ℕ making Nα an integer matrix. □

Corollary 2.5.14

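For given \(g\in\operatorname{GL}_{2}(\mathbb{Q})^{+}\) and \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) one has

$$\varGamma_{0}\,g\,\varGamma_{0}=\varGamma_{0}\det(g)g^{-1}\varGamma_{0}.$$

Proof

By the proposition we can assume that g is a diagonal matrix \(\bigl(\begin{smallmatrix}a& \\ &an\end{smallmatrix}\bigr)\). Then \(\det(g)g^{-1}=\bigl(\begin{smallmatrix}an& \\ &a\end{smallmatrix}\bigr)\) and this last matrix lies in the same double \(\varGamma_{0}\)-coset as g, since

$$\begin{pmatrix}an& \\ &a\end{pmatrix}=\begin{pmatrix} &-1\\ 1& \end{pmatrix}\begin{pmatrix}a& \\ &an\end{pmatrix}\begin{pmatrix} &1\\ -1& \end{pmatrix},$$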

so the corollary is proven. □

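We have seen that the group \(G=\mathrm{SL}_{2}(\mathbb{R})\) acts on the upper half plane ℍ via

$$\begin{pmatrix}a&b\\ c&d\end{pmatrix}z=\frac{az+b}{cz+d}.$$

Lemma 2.5.15

The measure \(d\mu=\frac{dx\,dy}{y^{2}}\) on ℍ is invariant under the action of G, i.e. we have

$$\int_{\mathbb{H}}f(gz)\,d\mu(z)=\int_{\mathbb{H}}f(z)\,d\mu(z)$$

for every integrable function f and every g∈G.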

Proof

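Every \(g=\bigl(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\bigr)\in G\) defines a holomorphic map z↦gz on ℍ. We compute its differential as

$$\frac{d(gz)}{dz}=\frac{d}{dz}\,\frac{az+b}{cz+d}=\frac{a(cz+d)-c(az+b)}{(cz+d)^{2}}=\frac{1}{(cz+d)^{2}}.$$

This is equivalent to the identity of differential forms

$$d(gz)=\frac{dz}{(cz+d)^{2}},$$

where dz=dx+idy and d(gz) is the pullback of dz under g. Applying complex conjugation yields \(\overline{dz}=dx-idy\), so \(dz\wedge\overline{dz}=-2i (dx\wedge dy)\). Further, by the above,

$$d(gz)\wedge\overline{d(gz)}=\frac{dz\wedge\overline{dz}}{|cz+d|^{4}},$$

or, since \(\operatorname{Im}(gz)=\operatorname{Im}(z)/|cz+d|^{2}\),

$$\frac{d(gz)\wedge\overline{d(gz)}}{\operatorname{Im}(gz)^{2}}=\frac{dz\wedge\overline{dz}}{\operatorname{Im}(z)^{2}},$$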

which is to say that the differential form \(\frac{dz\wedge\overline {dz}}{\operatorname{Im}(z)^{2}}\) is invariant under G. This implies the claim. □
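The invariance can be checked numerically at individual points. The Python sketch below evaluates, for a real matrix of determinant 1, the ratio between the density of the transformed measure and that of the original one, using the derivative \(1/(cz+d)^{2}\) of z↦gz; the lemma predicts the value 1. The function names and the encoding of a matrix as a tuple `(a, b, c, d)` are illustrative choices.

```python
def moebius(g, z):
    """Apply the matrix g = (a, b, c, d) to the point z by z -> (az + b) / (cz + d)."""
    a, b, c, d = g
    return (a * z + b) / (c * z + d)


def density_ratio(g, z):
    """Return |d(gz)/dz|^2 * Im(z)^2 / Im(gz)^2 for g of determinant 1."""
    a, b, c, d = g
    jacobian = abs(1.0 / (c * z + d) ** 2) ** 2   # real Jacobian of a holomorphic map
    w = moebius(g, z)
    return jacobian * z.imag ** 2 / w.imag ** 2


print(density_ratio((2.0, 1.0, 1.0, 1.0), 0.3 + 0.7j))
```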

This lemma can also be proved without the use of differential forms; see Exercise 2.8.

Theorem 2.5.16

The spaces \(\mathcal{M}_{k}\) and S k have bases consisting of simultaneous eigenvectors of all Hecke operators.

Proof

We want to apply Lemma 2.5.8 with \(E=\{T_{n}:n\in\mathbb{N}\}\). For this we have to define an inner product on \(\mathcal{M}_{k}\). For given \(f,g\in\mathcal{M}_{k}\) the function \(f(z)\overline{g(z)}y^{k}\) is invariant under the group \(\varGamma_{0}\). It is a continuous, hence measurable, function on the quotient \(\varGamma_{0}\backslash\mathbb{H}\). The measure \(\frac{dx\,dy}{y^{2}}\) is \(\varGamma_{0}\)-invariant as well, and hence defines a measure μ on \(\varGamma_{0}\backslash\mathbb{H}\). This is an important point, so we will explain it a bit further. One way to view this measure on the quotient \(\varGamma_{0}\backslash\mathbb{H}\) is to identify \(\varGamma_{0}\backslash\mathbb{H}\) with a measurable set of representatives R with \(D\subset R\subset \overline{D}\), where D is the standard fundamental domain of Definition 2.1.6. Then any measurable subset \(A\subset\varGamma_{0}\backslash\mathbb{H}\) can be viewed as a subset of R⊂ℍ and the measure \(\frac{dx\,dy}{y^{2}}\) can be applied. Interestingly, the measure μ on \(\varGamma_{0}\backslash\mathbb{H}\) is a finite measure, i.e.

$$\mu(\varGamma_{0}\backslash\mathbb{H})<\infty,$$

as the \(\frac{dx\, dy}{y^{2}}\)-measure of \(\overline{D}\) is finite by Exercise 2.9. According to Exercise 2.15 the integral

$$\langle f,g\rangle_{\operatorname{Pet}}=\int_{\varGamma_{0}\backslash\mathbb{H}}f(z)\overline{g(z)}\,y^{k}\,d\mu(z)$$

exists if one of the two functions f,g is a cusp form. This integral defines an inner product on the space \(S_{k}\), which is called the Petersson inner product. We show that \(\langle T_{n}f,g\rangle_{\operatorname{Pet}}=\langle f,T_{n}g\rangle_{\operatorname{Pet}}\), so the \(T_{n}\) are self-adjoint on the space \(S_{k}\). This implies the claim on \(S_{k}\). The space \(S_{k}^{\perp}=\{ f\in\mathcal{M}_{k}:\langle f,g\rangle_{\operatorname{Pet}}=0\ \forall g\in S_{k}\}\) is one-dimensional if \(\mathcal{M}_{k}\ne0\). By the self-adjointness of the Hecke operators, this space is \(T_{n}\)-invariant as well, so, being one-dimensional, it is a simultaneous eigenspace. It only remains to show the claimed self-adjointness.

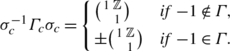

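We do this by extending the Petersson inner product to functions which are not necessarily invariant under \(\varGamma_{0}\), but only under a subgroup of finite index in \(\varGamma_{0}\). We first consider the case k=0. Take two continuous and bounded functions f,g on ℍ, which are invariant under \(\varGamma_{0}\), so they satisfy f(γz)=f(z) for every z∈ℍ and every \(\gamma\in\varGamma_{0}\), and the same for the function g. Then we define

$$\langle f,g\rangle=\int_{\varGamma_{0}\backslash\mathbb{H}}f(z)\overline{g(z)}\,d\mu(z),$$

where μ is the measure \(\frac{dx\,dy}{y^{2}}\). The integral exists, since f and g are bounded and \(\varGamma_{0}\backslash\mathbb{H}\) has finite measure, as we have seen above. We now make a crucial observation: If \(\varGamma\subset\varGamma_{0}\) is a subgroup of finite index, then

$$\int_{\varGamma_{0}\backslash\mathbb{H}}f(z)\overline{g(z)}\,d\mu(z)=\frac{1}{[\overline{\varGamma}_{0}:\overline{\varGamma}]}\int_{\varGamma\backslash\mathbb{H}}f(z)\overline{g(z)}\,d\mu(z),$$

where, as in Definition 2.1.6, the group \(\overline{\varGamma }_{0}\) is \(\varGamma_{0}/\pm1\) and \(\overline {\varGamma }\) is the image of Γ in \(\overline{\varGamma }_{0}\). If the functions f and g are continuous and bounded, but only invariant under Γ and no longer invariant under \(\varGamma_{0}\), then the last expression still makes sense. This means that we can define 〈f,g〉 in this more general situation by the expression

$$\langle f,g\rangle=\frac{1}{[\overline{\varGamma}_{0}:\overline{\varGamma}]}\int_{\varGamma\backslash\mathbb{H}}f(z)\overline{g(z)}\,d\mu(z).$$

In this way we extend the definition of the Petersson inner product in the case k=0. In the case k>0 we consider two continuous functions f,g with \(f|_{k}\sigma=f\) for every σ∈Γ, and the same for g. We assume that the Γ-invariant function \(|f(z)y^{k/2}|\) is bounded on the upper half plane ℍ and the same for g. We then define

$$\langle f,g\rangle_{k}=\frac{1}{[\overline{\varGamma}_{0}:\overline{\varGamma}]}\int_{\varGamma\backslash\mathbb{H}}f(z)\overline{g(z)}\,y^{k}\,d\mu(z).$$

We claim that for a given \(\alpha \in\operatorname{GL}_{2}(\mathbb {Q})^{+}\) the group \(\varGamma=\alpha^{-1}\varGamma_{0}\alpha\cap\varGamma_{0}\) is a subgroup of \(\varGamma_{0}\) of finite index.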

Proof of This Claim

By Proposition 2.5.13 we can assume \(\alpha=\bigl(\begin{smallmatrix}r& \\ &rn\end{smallmatrix}\bigr)\) with r∈ℚ and n∈ℕ. Then

$$\alpha^{-1}\begin{pmatrix}a&b\\ c&d\end{pmatrix}\alpha=\begin{pmatrix}a&bn\\ c/n&d\end{pmatrix}.$$

So for a given \(\gamma=\bigl(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\bigr)\in\varGamma_{0}\) the conjugate \(\alpha^{-1}\gamma\alpha\) lies in Γ if and only if c/n∈ℤ, i.e. if n divides c. Therefore the group Γ contains the group Γ(n) of all matrices \(\gamma\in\mathrm{SL}_{2}(\mathbb{Z})\) with \(\gamma\equiv\bigl(\begin{smallmatrix}1& \\ &1\end{smallmatrix}\bigr) \bmod n\). This group is by definition the kernel of the group homomorphism \(\mathrm{SL}_{2}(\mathbb{Z})\to\mathrm{SL}_{2}(\mathbb{Z}/n\mathbb{Z})\), which comes from the reduction homomorphism ℤ→ℤ/nℤ. As the group \(\mathrm{SL}_{2}(\mathbb{Z}/n\mathbb{Z})\) is finite, the group Γ has finite index in \(\varGamma_{0}\).

Definition 2.5.17

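Let \(\varGamma\subset\mathrm{SL}_{2}(\mathbb{Z})\) be a subgroup. A fundamental domain for Γ is an open subset F⊂ℍ, such that there is a set R⊂ℍ of representatives for Γ∖ℍ with

$$F\subset R\subset\overline{F}\quad\text{and}\quad\mu(\overline{F}\smallsetminus F)=0,$$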

where μ is the measure \(\frac{dx\,dy}{y^{2}}\).

In particular, if F is a fundamental domain for Γ, then \(\bigcup_{\sigma\in\varGamma }\sigma\overline{F} = \mathbb{H}\), so every point in ℍ lies in a Γ-translate of \(\overline{F}\).

Lemma 2.5.18

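Let F⊂ℍ be a fundamental domain for the group \(\varGamma\subset\mathrm{SL}_{2}(\mathbb{Z})\). For every measurable, Γ-invariant function f on ℍ one has

$$\int_{\varGamma\backslash\mathbb{H}}f(z)\,d\mu(z)=\int_{F}f(z)\,d\mu(z),$$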

where \(\mu=\frac{dx\,dy}{y^{2}}\) is the invariant measure. So in particular, the first integral exists if and only if the second does.

Proof

The projection p:ℍ→Γ∖ℍ maps F injectively onto a subset, whose complement is of measure zero. Therefore ∫ Γ∖ℍ f(z) dμ(z)=∫ p(F) f(z) dμ(z). Since the measure on the quotient is defined by the measure on ℍ, the bijection p:F→p(F) preserves measures. This implies the claim. □

Lemma 2.5.19

- (a) D is a fundamental domain for \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\).

- (b) If Γ is a subgroup of \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) of finite index and S is a set of representatives of \(\overline{\varGamma }\backslash \overline{\varGamma }_{0}\), then

$$SD = \bigcup_{\gamma\in S}\gamma D $$

is a fundamental domain for the group Γ. The set \(S\subset\overline{\varGamma }_{0}\) is uniquely determined by the fundamental domain SD.

Proof

Part (a) follows from Theorem 2.1.7.

(b) The set S is finite, as Γ has finite index in Γ 0. Hence it follows that \(\overline{SD}=\bigcup_{\gamma\in S}\gamma \overline{D}\). Now let \(R_{\varGamma _{0}}\) be a set of representatives of Γ 0∖ℍ with \(D\subset R_{\varGamma _{0}}\subset\overline{D}\). Then \(R_{\varGamma}=\bigcup_{\gamma\in S}\gamma R_{\varGamma _{0}}\) is a set of representatives of Γ∖ℍ with \(SD\subset R_{\varGamma}\subset\overline{SD}\). Further one has

The last assertion follows from the fact that for γ≠τ in \(\overline{\varGamma }_{0}\) the translates γD and τD are disjoint. □

The points \(\gamma\infty\in\widehat{\mathbb{R}}\) for γ∈S are called the cusps of the fundamental domain SD. These lie in \(\widehat{\mathbb{Q}}=\mathbb{Q}\cup\{\infty\}\). The wording becomes clearer when one considers the unit disk instead of the upper half plane. So let \(\mathbb{E}=\{ z\in\mathbb{C}: |z|<1\}\) be the open unit disk. The Cayley map

$$\tau(z)=\frac{z-i}{z+i}$$

is a bijection from ℍ to \(\mathbb{E}\) such that τ as well as its inverse \(\tau^{-1}\) are both holomorphic. Transporting the fundamental domain SD into \(\mathbb{E}\) by means of the map τ, the cusps are the points where the fundamental domain touches the boundary of the disk, i.e. the unit circle. Each cusp is the endpoint of two circles which lie inside \(\mathbb{E}\) and are orthogonal to the unit circle, so they are tangential at the cusp, i.e. the cusp is ‘infinitesimally sharp’, which explains the name ‘cusp’. The next figure shows a fundamental domain F with one cusp.

As the specific choice of a set of representatives S is not important, we frequently write D Γ for the fundamental domain SD.

Lemma 2.5.20

The Petersson inner product is invariant under \(\operatorname {GL}_{2}(\mathbb{Q})^{+}\), which means the following: For given \(f,g\in\mathcal{M}_{k}\), one of them in \(S_{k}\), and for each \(\alpha \in\operatorname{GL}_{2}(\mathbb{Q})^{+}\), the inner product \(\langle f|\alpha,g|\alpha\rangle_{k}\) is defined in the above sense with \(\varGamma=\alpha\varGamma_{0}\alpha^{-1}\cap\varGamma_{0}\), and it holds that

$$\langle f|\alpha,g|\alpha\rangle_{k}=\langle f,g\rangle_{k}.$$

Proof

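Let \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) and \(\varGamma=\alpha\varGamma_{0}\alpha^{-1}\cap\varGamma_{0}\), as well as \(\varGamma'=\alpha^{-1}\varGamma\alpha=\alpha^{-1}\varGamma_{0}\alpha\cap\varGamma_{0}\). For given \(f\in\mathcal{M}_{k}\) the function h=f|α has the property that h|σ=h for every σ∈Γ′, since \(\sigma=\alpha^{-1}\gamma\alpha\) for some \(\gamma\in\varGamma_{0}\), so

$$h|\sigma=f|\alpha\alpha^{-1}\gamma\alpha=f|\gamma\alpha=f|\alpha=h.$$

The same holds for g, so the inner product 〈f|α,g|α〉 is well defined. Note that for \(\alpha=\bigl(\begin{smallmatrix}a&b\\ c&d\end{smallmatrix}\bigr)\in\operatorname{GL}_{2}(\mathbb{Q})^{+}\) we have

$$\operatorname{Im}(\alpha z)=\frac{\det(\alpha)\operatorname{Im}(z)}{|cz+d|^{2}}.$$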

In the following calculation we use the \(\operatorname{GL}_{2}(\mathbb{Q})^{+}\)-invariance of the measure μ together with the fact that we may replace integration over Γ∖ℍ with integration over a fundamental domain according to Lemma 2.5.18. We further use that α −1 D Γ is a fundamental domain for Γ′ to get

Finally we have \([\overline{\varGamma }_{0}:\overline{\varGamma }]=[\overline{\varGamma }_{0}:\overline{\varGamma }']\), since \([\overline{\varGamma }_{0}:\overline{\varGamma }] = \mu(D_{\varGamma})/\mu (D) = \mu(\alpha^{-1}D_{\varGamma})/\mu(D) = [\overline{\varGamma }_{0}:\overline{\varGamma }']\). □

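The lemma implies for \(y\in\operatorname{GL}_{2}(\mathbb{Q})^{+}\),

$$\langle f|y,g\rangle=\langle f|y,g|y^{-1}y\rangle=\langle f,g|y^{-1}\rangle,$$

hence,

$$\langle T_{n}f,g\rangle=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}\langle f|y,g\rangle=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}\langle f,g|y^{-1}\rangle.$$

As f and g are both invariant under \(\varGamma_{0}\), the expression \(\langle f,g|y^{-1}\rangle\) depends only on the double coset \(\varGamma_{0}y^{-1}\varGamma_{0}\). By Corollary 2.5.14, this double coset equals \(\varGamma_{0}\frac{1}{\det (y)}y\varGamma_{0}\). The center acting trivially on \(\mathcal{M}_{k}\), this matrix acts like y. Therefore,

$$\langle T_{n}f,g\rangle=n^{\frac{k}{2}-1}\sum_{y:\varGamma_{0}\backslash M_{n}}\langle f,g|y\rangle=\langle f,T_{n}g\rangle.$$

It follows that there are bases of \(\mathcal{M}_{k}\) and \(S_{k}\) consisting of simultaneous eigenvectors of all Hecke operators. Theorem 2.5.16 follows. □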

Theorem 2.5.21

Let \(f(z)=\sum_{n=0}^{\infty}c(n)q^{n}\) be a non-constant simultaneous eigenfunction of all Hecke operators, i.e. for every n∈ℕ there is a number λ(n)∈ℂ such that \(T_{n}f=\lambda(n)f\).

- (a) The coefficient c(1) is not zero.

- (b) If c(1)=1, which can be reached by scaling f, then c(n)=λ(n) for every n∈ℕ.

Proof

By Corollary 2.5.6 the coefficient of q in T n f equals c(n). On the other hand, this coefficient equals λ(n)c(1). Therefore, c(1)=0 would lead to c(n)=0 for all n, hence f=0. Both claims follow. □

A Hecke eigenform \(f\in\mathcal{M}_{k}\) is called normalized if the coefficient c(1) is equal to 1.

Corollary 2.5.22

Let k>0. Two normalized Hecke eigenforms, which share the same Hecke eigenvalues, coincide.

Proof

Let \(f,g\in\mathcal{M}_{k}\) with T n f=λ(n)f and T n g=λ(n)g for every n∈ℕ. By the theorem, all coefficients of the q-expansions of f and g coincide, with the possible exception of the zeroth coefficients. This means that f−g is constant. As k>0, there are no constant modular forms of weight k other than zero. We conclude f=g. □

Corollary 2.5.23

For a normalized Hecke eigenform \(f(z) = \sum_{n=0}^{\infty}c(n)q^{n}\) we have

- c(mn)=c(m)c(n) if \(\operatorname{gcd}(m,n)=1\),

- \(c(p)c(p^{n})=c(p^{n+1})+p^{k-1}c(p^{n-1})\) for every prime p and n≥1.

Proof

The assertion follows from the corresponding relations for Hecke operators in Proposition 2.5.4. □

Definition 2.5.24

We say that a Dirichlet series \(L(s)=\sum_{n=1}^{\infty}a_{n}n^{-s}\), which converges in some half plane \(\{\operatorname{Re}(s)>a\}\), has an Euler product of degree k∈ℕ, if for every prime p there is a polynomial

$$Q_{p}(x)=1+a_{p,1}x+\dots+a_{p,k}x^{k}$$

such that in the domain \(\operatorname{Re}(s)>a\) one has

$$L(s)=\prod_{p}\frac{1}{Q_{p}(p^{-s})}.$$

Example 2.5.25

The Riemann zeta function \(\zeta(s)=\sum_{n=1}^{\infty}n^{-s}\), convergent for \(\operatorname{Re}(s)>1\), has the Euler product

$$\zeta(s)=\prod_{p}\frac{1}{1-p^{-s}},$$

see Exercise 1.5.

Corollary 2.5.26

The L-function \(L(f,s)=\sum_{n=1}^{\infty}c(n) n^{-s}\) of a normalized Hecke eigenform \(f(z)=\sum_{n=0}^{\infty}c(n)q^{n}\in\mathcal{M}_{k}\) has an Euler product:

$$L(f,s)=\prod_{p}\frac{1}{1-c(p)p^{-s}+p^{k-1-2s}},$$

which converges locally uniformly absolutely for \(\operatorname{Re}(s)>k\).

Proof

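By Corollary 2.3.3 the coefficients grow at most like \(c(n)=O(n^{k-1})\). So the L-series converges locally uniformly absolutely for \(\operatorname{Re}(s)>k\). The partial sum

$$\sum_{n=0}^{\infty}c(p^{n})p^{-ns}$$

also converges absolutely. Denote by \(\prod_{p\le N}\) the finite product over all primes p≤N for a given N∈ℕ. For coprime m,n∈ℕ we have c(mn)=c(m)c(n), so that

$$\prod_{p\le N}\ \sum_{n=0}^{\infty}c(p^{n})p^{-ns}=\sum_{\substack{n\in\mathbb{N}\\ p\,|\,n\Rightarrow p\le N}}c(n)n^{-s},$$

where the sum on the right-hand side runs over all natural numbers whose prime divisors are all ≤N. As the L-series converges absolutely, the right-hand side converges to L(f,s) for N→∞, and we have

$$L(f,s)=\prod_{p}\ \sum_{n=0}^{\infty}c(p^{n})p^{-ns}.$$

It remains to show

$$\Biggl(\sum_{n=0}^{\infty}c(p^{n})p^{-ns}\Biggr)\bigl(1-c(p)p^{-s}+p^{k-1-2s}\bigr)=1.$$

We expand the left-hand side and use \(c(p)c(p^{n})=c(p^{n+1})+p^{k-1}c(p^{n-1})\) from Corollary 2.5.23:

$$\sum_{n=0}^{\infty}c(p^{n})p^{-ns}-\sum_{n=0}^{\infty}c(p)c(p^{n})p^{-(n+1)s}+\sum_{n=0}^{\infty}p^{k-1}c(p^{n})p^{-(n+2)s}=1+\sum_{n=1}^{\infty}\bigl(c(p^{n+1})-c(p)c(p^{n})+p^{k-1}c(p^{n-1})\bigr)p^{-(n+1)s}=1.$$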

□
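The Euler product says that a normalized Hecke eigenform is determined by its coefficients at the primes. The Python sketch below rebuilds c(n) from the values c(p), using only the two relations of Corollary 2.5.23. As a test case it uses the first prime coefficients −24, 252, 4830, −16744 of the weight 12 discriminant form, which are classical values quoted here as an assumption; the function names are illustrative.

```python
def smallest_prime_factor(n):
    """Smallest prime dividing n >= 2, found by trial division."""
    p = 2
    while p * p <= n:
        if n % p == 0:
            return p
        p += 1
    return n


def coefficients_from_primes(prime_values, k, limit):
    """Return [c(0) placeholder, c(1), ..., c(limit)] built from the values c(p)."""
    c = [0] * (limit + 1)
    c[1] = 1
    for n in range(2, limit + 1):
        p = smallest_prime_factor(n)
        prime_power, rest, exponent = 1, n, 0
        while rest % p == 0:            # split n = p^exponent * rest with p not dividing rest
            rest //= p
            prime_power *= p
            exponent += 1
        if rest > 1:
            c[n] = c[prime_power] * c[rest]          # multiplicativity for coprime factors
        elif exponent == 1:
            c[n] = prime_values[p]
        else:
            # c(p^(r+1)) = c(p) c(p^r) - p^(k-1) c(p^(r-1))
            c[n] = (prime_values[p] * c[prime_power // p]
                    - p ** (k - 1) * c[prime_power // (p * p)])
    return c


tau_at_primes = {2: -24, 3: 252, 5: 4830, 7: -16744}
print(coefficients_from_primes(tau_at_primes, 12, 10)[1:])
```

The entry at index 0 is only a placeholder, since the constant term does not enter the L-function.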

2.6 Congruence Subgroups

In the theory of automorphic forms one also considers functions which satisfy the modularity condition not for the full modular group SL2(ℤ), but only for subgroups of finite index. The most important subgroups are the congruence subgroups.

Definition 2.6.1

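Fix a natural number N. The reduction map ℤ→ℤ/Nℤ is a ring homomorphism and it induces a group homomorphism \(\mathrm{SL}_{2}(\mathbb{Z})\to\mathrm{SL}_{2}(\mathbb{Z}/N\mathbb{Z})\). The group \(\varGamma(N)=\ker(\mathrm{SL}_{2}(\mathbb{Z})\to\mathrm{SL}_{2}(\mathbb{Z}/N\mathbb{Z}))\) is called the principal congruence subgroup of \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\) of level N. So we have

$$\varGamma(N)=\left\{\begin{pmatrix}a&b\\ c&d\end{pmatrix}\in\mathrm{SL}_{2}(\mathbb{Z}): a\equiv d\equiv1 \bmod N,\ b\equiv c\equiv0 \bmod N\right\}.$$

A subgroup \(\varGamma\subset\mathrm{SL}_{2}(\mathbb{Z})\) is called a congruence subgroup if it contains a principal congruence subgroup, i.e. if there is a natural number N∈ℕ with Γ(N)⊂Γ.

Note the special case

$$\varGamma(1)=\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z}).$$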

Note that for N≥3 the group Γ(N) does not contain the element −1. Therefore, for such a group Γ there can exist non-zero modular forms of odd weight.
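For small levels, both the size of the finite group SL2(ℤ/Nℤ) and the remark about −1 can be verified by brute force. The Python sketch below counts the residue matrices of determinant 1 and tests whether −1 is congruent to the identity modulo N. That the count equals the index of Γ(N) in SL2(ℤ) relies on the surjectivity of the reduction map, a standard fact not proved in this section; the function names are illustrative.

```python
def sl2_order_mod(N):
    """Number of 2x2 matrices over Z/NZ with determinant 1, by exhaustive search."""
    target = 1 % N   # equals 0 for N = 1, where the ring is trivial
    return sum(
        1
        for a in range(N)
        for b in range(N)
        for c in range(N)
        for d in range(N)
        if (a * d - b * c) % N == target
    )


def contains_minus_identity(N):
    """True if -1 lies in Gamma(N), i.e. if -1 is congruent to 1 modulo N."""
    return (-1 - 1) % N == 0


print([sl2_order_mod(N) for N in range(1, 7)])
print([N for N in range(1, 7) if contains_minus_identity(N)])
```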

Lemma 2.6.2

- (a) The intersection of two congruence groups is a congruence group.

- (b) Let Γ be a congruence subgroup and let \(\alpha \in \operatorname{GL}_{2}(\mathbb{Q})\). Then \(\varGamma\cap\alpha\varGamma\alpha^{-1}\) is also a congruence subgroup.

Proof

(a) Let Γ,Γ′⊂Γ 0 be congruence subgroups. By definition, there are M,N∈ℕ with Γ(M)⊂Γ, Γ(N)⊂Γ′. Then Γ(MN)⊂(Γ(M)∩Γ(N))⊂(Γ∩Γ′).

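(b) Fix N≥2 such that Γ(N)⊂Γ. There are natural numbers \(M_{1},M_{2}\) such that \(M_{1}\alpha,M_{2}\alpha^{-1}\in\mathrm{M}_{2}(\mathbb{Z})\). Set \(M=M_{1}M_{2}N\). We claim that \(\varGamma(M)\subset\alpha\varGamma\alpha^{-1}\) or equivalently \(\alpha^{-1}\varGamma(M)\alpha\subset\varGamma\). For γ∈Γ(M) we write γ=I+Mg with \(g\in\mathrm{M}_{2}(\mathbb{Z})\). It follows that \(\alpha^{-1}\gamma\alpha=I+N(M_{2}\alpha^{-1})g(M_{1}\alpha)\in\varGamma(N)\subset\varGamma\). As N divides M, we also have Γ(M)⊂Γ(N)⊂Γ, so \(\varGamma(M)\subset\varGamma\cap\alpha\varGamma\alpha^{-1}\). □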

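Let \(D_{\varGamma}\) be a fundamental domain for the congruence subgroup Γ as constructed in Lemma 2.5.19. The cusps of the fundamental domain \(D_{\varGamma}\) lie in the set

$$\widehat{\mathbb{Q}}=\mathbb{Q}\cup\{\infty\}.$$

The stabilizer group \(\varGamma(1)_{\infty}\) of the point ∞ in Γ(1) is \(\pm\bigl(\begin{smallmatrix}1&\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\).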

Lemma 2.6.3

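Let Γ be a subgroup of finite index in \(\varGamma_{0}=\mathrm{SL}_{2}(\mathbb{Z})\). For every c∈ℚ∪{∞} there exists a \(\sigma_{c}\in\operatorname{GL}_{2}(\mathbb{Q})^{+}\) such that

- \(\sigma_{c}\infty=c\) and

- \(\sigma_{c}^{-1}\varGamma_{c}\sigma_{c}\) is equal to \(\pm\bigl(\begin{smallmatrix}1&\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\) or to \(\bigl(\begin{smallmatrix}1&\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\), where \(\varGamma_{c}\) denotes the stabilizer of c in Γ.

The element \(\sigma_{c}\) is uniquely determined up to multiplication from the right by a matrix of the form \(\bigl(\begin{smallmatrix}a&x\\ &a\end{smallmatrix}\bigr)\) with an x∈ℚ and \(a\in\mathbb{Q}^{\times}\).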

Proof

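A given c∈ℚ can be written as c=α/γ with coprime integers α and γ. There then exist β,δ∈ℤ with αδ−βγ=1, so \(\sigma=\bigl(\begin{smallmatrix}\alpha&\beta\\ \gamma&\delta\end{smallmatrix}\bigr)\in\mathrm{SL}_{2}(\mathbb{Z})\). It follows that σ∞=c. Replacing Γ with the group \(\sigma^{-1}\varGamma\sigma\) we reduce the claim to the case c=∞.

So we can assume c=∞. Since Γ has finite index in Γ(1), there exists n∈ℕ with \(\bigl(\begin{smallmatrix}1&n\\ &1\end{smallmatrix}\bigr)\in\varGamma\), so \(\bigl(\begin{smallmatrix}1&n\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\subset\varGamma_{\infty}\). Let n∈ℕ be the smallest with this property. This means \(\varGamma_{\infty}=\pm\bigl(\begin{smallmatrix}1&n\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\) or \(\varGamma_{\infty}=\bigl(\begin{smallmatrix}1&n\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\), so the claim follows with \(\sigma_{\infty}=\bigl(\begin{smallmatrix}n& \\ &1\end{smallmatrix}\bigr)\).

For the uniqueness of \(\sigma_{c}\), let \(\sigma_{c}'\) be another element of \(\operatorname{GL}_{2}^{+}(\mathbb{Q})\) with the same properties. Let \(g=\sigma_{c}^{-1}\sigma_{c}'\), so \(\sigma_{c}'=\sigma_{c}g\). The first property implies g∞=∞, so \(g=\bigl(\begin{smallmatrix}a&x\\ &d\end{smallmatrix}\bigr)\) is an upper triangular matrix. Consider the case −1∉Γ. The second property implies \(g^{-1}\bigl(\begin{smallmatrix}1&\mathbb{Z}\\ &1\end{smallmatrix}\bigr)g=\bigl(\begin{smallmatrix}1&\mathbb{Z}\\ &1\end{smallmatrix}\bigr)\). In particular, one gets \(g^{-1}\bigl(\begin{smallmatrix}1&1\\ &1\end{smallmatrix}\bigr)g=\bigl(\begin{smallmatrix}1&d/a\\ &1\end{smallmatrix}\bigr)\) with d/a=±1, hence d=a because det(g)>0, which implies the claim. The case −1∈Γ is similar. □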

Definition 2.6.4

Let Γ be a subgroup of finite index in SL2(ℤ). A meromorphic function f on ℍ is called weakly modular of weight k with respect to Γ, if f| k γ=f holds for every γ∈Γ.

A weakly modular function f is called modular if for every cusp c∈ℚ∪{∞} there exists T c >0 and some N c ∈ℕ such that

$$f|_{k}\sigma_{c}(z) = \sum_{n\ge-N_{c}}a_{c,n}e^{2\pi inz} $$

holds for every z∈ℍ with \(\operatorname{Im}(z)>T_{c}\). In other words this means that the Fourier expansion is bounded below at every cusp. One also expresses this by saying that f is meromorphic at every cusp. By Lemma 2.6.3 this condition does not depend on the choice of the element σ c , whereas the Fourier coefficients do depend on this choice.

The function f is called a modular form of weight k for the group Γ, if f is modular and holomorphic everywhere, including the cusps, which means that a c,n =0 for n<0 at every cusp c. A modular form is called a cusp form if the zeroth Fourier coefficients a c,0 vanish for all cusps c. The vector spaces of modular forms and cusp forms are denoted by \(\mathcal{M}_{k}(\varGamma )\) and S k (Γ).

As already mentioned in the proof of Theorem 2.5.16, the Petersson inner product can be defined for cusp forms of any congruence group Γ as follows: for f,g∈S k (Γ) one sets

2.7 Non-holomorphic Eisenstein Series

In the theory of automorphic forms one also considers non-holomorphic functions of the upper half plane, besides the holomorphic ones. These so-called Maaß wave forms will be introduced properly in the next section. In this section, we start with a special example, the non-holomorphic Eisenstein series. We introduce a fact, known as the Rankin–Selberg method, which says that the inner product of a non-holomorphic Eisenstein series and a Γ 0-automorphic function equals the Mellin integral transform of the zeroth Fourier coefficient of the automorphic function. This in particular implies that the Eisenstein series is orthogonal to the space of cusp forms, a fact of central importance in the spectral theory of automorphic forms.

Definition 2.7.1

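The non-holomorphic Eisenstein series for Γ 0=SL2(ℤ) is for z=x+iy∈ℍ and s∈ℂ defined by

$$E(z,s) = \pi^{-s}\varGamma (s)\frac{1}{2}\sum_{(m,n)\in\mathbb{Z}^{2}\smallsetminus\{(0,0)\}}\frac{y^{s}}{|mz+n|^{2s}}. $$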

By Lemma 1.2.1 the series E(z,s) converges locally uniformly in \(\mathbb{H}\times\{\operatorname{Re}(s)>1\}\). Therefore the Eisenstein series is a continuous function, holomorphic in s, by the convergence theorem of Weierstrass.

Definition 2.7.2

By a smooth function we mean an infinitely often differentiable function.

Lemma 2.7.3

For fixed s with \(\operatorname{Re}(s)>2\) the Eisenstein series E(z,s) is a smooth function in z∈ℍ.

Proof

We divide the sum that defines E(z,s) into two parts. One part with m=0 and the other with m≠0. For m=0 the sum does not depend on z, so the claim follows trivially. Consider the case m≠0 and let log be the principal branch of the logarithm, i.e. it is defined on ℂ∖(−∞,0] by log(re iθ)=log(r)+iθ, if r>0 and −π<θ<π. For z∈ℍ and \(w\in\overline{\mathbb{H}}\), the lower half plane, we have

For m≠0, n∈ℤ, and z∈ℍ, one of the two complex numbers mz+n, \(m\bar{z}+n\) is in ℍ, the other in \(\overline{\mathbb{H}}\). Hence

Write log(mz+n)=log(|mz+n|)+iθ for some |θ|<π. Then

so that

For z∈ℍ and \(w\in\overline{\mathbb{H}}\) define

Keep w fixed and estimate the summand of the series F(z,w,s) as follows

with a constant C>0, which depends on w. According to Lemma 1.2.1, the series F(z,w,s) converges locally uniformly in z, for fixed w and s with \(\operatorname{Re}(s)>2\). As the summands are holomorphic, the function F(z,w,s) is holomorphic in z. The same argument shows that F is holomorphic in w for fixed z. By Exercise 2.20 the function F(z,w,s) can locally be written as a power series in z and w simultaneously, which means that F(z,w,s) is a smooth function in (z,w) for fixed s with \(\operatorname{Re} (s)>2\). Therefore \(F(z,\overline{z},s) = E(z,s)\) is a smooth function, too. □

Lemma 2.7.4

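Let Γ 0=SL2(ℤ) and let Γ 0,∞ be the stabilizer group of ∞, so \(\varGamma _{0,\infty}=\pm\bigl(\begin{smallmatrix}1&\mathbb{Z}\\0&1\end{smallmatrix}\bigr)\). Then the map

$$\varGamma _{0,\infty}\backslash \varGamma _{0}\to\bigl\{(c,d)\in\mathbb{Z}^{2}: \gcd(c,d)=1\bigr\}/\pm1,\qquad \varGamma _{0,\infty}\begin{pmatrix}a&b\\c&d\end{pmatrix}\mapsto\pm(c,d), $$

is a bijection.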

Proof

If c,d∈ℤ are coprime, then there exist a,b∈ℤ such that ad−bc=1. If (a,b) is one such pair, then every other is of the form (a+cx,b+dx) for some x∈ℤ. (Idea of proof: Assume 1<c≤d. After division with remainder there is 0≤r<c with d=r+cq. Then divide c by r with remainder and so on. This algorithm will stop. Plugging in the solutions backwards gives a pair (a,b).)

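For \(\gamma=\bigl(\begin{smallmatrix}a&b\\c&d\end{smallmatrix}\bigr)\in\varGamma _{0}\) and \(\pm\bigl(\begin{smallmatrix}1&x\\0&1\end{smallmatrix}\bigr)\in\varGamma _{0,\infty}\) one has

$$\pm\begin{pmatrix}1&x\\0&1\end{pmatrix}\begin{pmatrix}a&b\\c&d\end{pmatrix}=\pm\begin{pmatrix}a+cx&b+dx\\c&d\end{pmatrix}. $$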

This implies the lemma. □
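The well-definedness of the map can be illustrated numerically. The following is a minimal sketch: it multiplies a sample matrix from the left by elements ±(1 x; 0 1) of the stabilizer and confirms that the bottom row is unchanged up to sign. The normalisation used in `bottom_row_class` (choosing the representative with c>0, or c=0 and d>0) is an assumption made only for this illustration.

```python
def bottom_row_class(gamma):
    """Bottom row (c, d) up to sign, normalised so that c > 0, or c == 0 and d > 0."""
    (_, _), (c, d) = gamma
    if c < 0 or (c == 0 and d < 0):
        c, d = -c, -d
    return (c, d)

def left_translate(sign, x, gamma):
    """Return sign * (1 x; 0 1) * gamma for sign in {+1, -1} and an integer x."""
    (a, b), (c, d) = gamma
    return ((sign * (a + c * x), sign * (b + d * x)), (sign * c, sign * d))

def determinant(gamma):
    (a, b), (c, d) = gamma
    return a * d - b * c

sample = ((2, 1), (5, 3))  # determinant 2*3 - 1*5 = 1
translates = [left_translate(sign, x, sample)
              for sign in (1, -1) for x in range(-3, 4)]
classes = {bottom_row_class(g) for g in translates}
```

All translates lie in the same coset of the stabilizer, and `classes` collects their normalised bottom rows, so it should contain a single pair.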

Definition 2.7.5

An automorphic function on ℍ with respect to the congruence subgroup Γ⊂SL2(ℤ) is a function ϕ:ℍ→ℂ, which is invariant under the operation of Γ, so that ϕ(γz)=ϕ(z) holds for every γ∈Γ.

Proposition 2.7.6

(a) The series \(\tilde{E}(z,s) = \sum_{\gamma:\varGamma _{\infty}\backslash \varGamma }\operatorname{Im}(\gamma z)^{s}\) converges for \(\operatorname {Re}(s)>1\) and we have

$$E(z,s) = \pi^{-s}\varGamma (s)\zeta(2s)\tilde{E}(z,s), $$

where ζ(s) is the Riemann zeta function.

(b) The functions E(z,s) and \(\tilde{E}(z,s)\) are automorphic under Γ=SL2(ℤ), i.e. we have

$$E(\gamma z,s) = E(z,s) $$

for every γ∈Γ. The same holds for \(\tilde{E}\).

Proof

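(a) With \(\gamma=\bigl(\begin{smallmatrix}a&b\\c&d\end{smallmatrix}\bigr)\) we have \(\operatorname {Im}(\gamma z)=\operatorname{Im}(z)/|cz+d|^{2}\). According to Lemma 2.7.4 it holds that

$$\tilde{E}(z,s)=\frac{1}{2}\sum_{\substack{c,d\in\mathbb{Z}\\ \gcd(c,d)=1}}\frac{y^{s}}{|cz+d|^{2s}}. $$

Hence we get convergence with E(z,s) as a majorant. We conclude

$$E(z,s)=\pi^{-s}\varGamma (s)\frac{1}{2}\sum_{k=1}^{\infty}\sum_{\substack{c,d\in\mathbb{Z}\\ \gcd(c,d)=1}}\frac{y^{s}}{|kcz+kd|^{2s}}=\pi^{-s}\varGamma (s)\zeta(2s)\tilde{E}(z,s). $$

(b) It suffices to show the claim for \(\tilde{E}\). We compute

$$\tilde{E}(\gamma z,s)=\sum_{\tau:\varGamma _{\infty}\backslash \varGamma }\operatorname{Im}(\tau\gamma z)^{s}=\sum_{\tau:\varGamma _{\infty}\backslash \varGamma }\operatorname{Im}(\tau z)^{s}=\tilde{E}(z,s), $$

since if τ runs through a set of representatives for Γ ∞∖Γ, then so does τγ. □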
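Both ingredients of this proof can be tested numerically. The following is a minimal sketch, assuming a truncation of the sum over coprime pairs (c,d) to the box |c|,|d|≤`bound`; the function names are hypothetical. The truncated sum is not exactly invariant under all of Γ, but the box is symmetric under (c,d)↦(d,−c), so invariance under z↦−1/z holds up to rounding.

```python
from math import gcd

def moebius(gamma, z):
    """Action of ((a, b), (c, d)) on a point z of the upper half plane."""
    (a, b), (c, d) = gamma
    return (a * z + b) / (c * z + d)

def eisenstein_tilde_truncated(z, s, bound):
    """Half the sum of y^s / |cz + d|^(2s) over coprime (c, d) with |c|, |d| <= bound."""
    y = z.imag
    total = 0.0
    for c in range(-bound, bound + 1):
        for d in range(-bound, bound + 1):
            if gcd(c, d) == 1:  # gcd(0, 0) == 0, so the pair (0, 0) is skipped
                total += y ** s / abs(c * z + d) ** (2 * s)
    return 0.5 * total

z = complex(0.3, 1.1)
gamma = ((2, 1), (5, 3))
lhs = moebius(gamma, z).imag          # Im(gamma z)
rhs = z.imag / abs(5 * z + 3) ** 2    # Im(z) / |cz + d|^2
value_at_z = eisenstein_tilde_truncated(z, 2.0, 30)
value_at_inverse = eisenstein_tilde_truncated(-1 / z, 2.0, 30)
```

Comparing `lhs` with `rhs` checks the formula for the imaginary part, and comparing `value_at_z` with `value_at_inverse` checks the invariance under the inversion.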

In particular it follows that

$$E(z+1,s)=E(z,s). $$

It follows that for \(\operatorname{Re}(s)>2\) the smooth function E(z,s) has a Fourier expansion in z. We will examine this Fourier expansion more closely.

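The integral

$$K_{s}(y)=\frac{1}{2}\int_{0}^{\infty}e^{-y(t+t^{-1})/2}t^{s}\frac{dt}{t} $$

converges locally uniformly absolutely for y>0 and s∈ℂ. The function K s so defined is called the K-Bessel function. It satisfies the estimate

$$\bigl|K_{s}(y)\bigr|\le e^{-y/2}K_{\operatorname{Re}(s)}(2),\quad\text{if } y>4. $$

Proof

For two real numbers a,b we have

$$ab>a+b\quad\Leftrightarrow\quad(a-1) (b-1)>1. $$

The last assertion is symmetric in a and b, so it holds for all a,b>2. Therefore one has e −ab<e −a e −b. Applying this to a=y/2>2 and b=t+t −1 and integrating along t gives

$$\bigl|K_{s}(y)\bigr|\le\frac{1}{2}\int_{0}^{\infty}e^{-y(t+t^{-1})/2}t^{\operatorname{Re}(s)}\frac{dt}{t}<e^{-y/2}\frac{1}{2}\int_{0}^{\infty}e^{-(t+t^{-1})}t^{\operatorname{Re}(s)}\frac{dt}{t}=e^{-y/2}K_{\operatorname{Re}(s)}(2). $$

We also note that the integrand in the Bessel integral is invariant under t↦t −1, s↦−s, so that

$$K_{-s}(y)=K_{s}(y). $$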

Theorem 2.7.7

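The Eisenstein series E(z,s) has a Fourier expansion

$$E(z,s)=\sum_{r=-\infty}^{\infty}a_{r}(y,s)e^{2\pi irx}, $$

where

$$a_{0}(y,s)=\pi^{-s}\varGamma (s)\zeta(2s)y^{s}+\pi^{s-1}\varGamma (1-s)\zeta(2-2s)y^{1-s} $$

and for r≠0,

$$a_{r}(y,s)=2|r|^{s-\frac{1}{2}}\sigma_{1-2s}\bigl(|r|\bigr)\sqrt{y}K_{s-\frac{1}{2}}\bigl(2\pi|r|y\bigr). $$

One reads off that the Eisenstein series E(z,s), as a function in s, has a meromorphic continuation to all of ℂ. It is holomorphic except for simple poles at s=0,1. It is a smooth function in z for all s≠0,1. Every derivative in z is holomorphic in s≠0,1. The residue at s=1 is constant in z and takes the value 1/2. The Eisenstein series satisfies the functional equation

$$E(z,s)=E(z,1-s). $$

Locally uniformly in x∈ℝ one has

$$E(x+iy,s)=O\bigl(y^{\sigma}\bigr)\quad\text{as } y\to\infty, $$

where \(\sigma=\max(\operatorname{Re}(s),1-\operatorname{Re}(s))\).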

Proof

The claims all follow from the explicit Fourier expansion, which remains to be shown.
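The K-Bessel function that enters the Fourier coefficients can be evaluated numerically. The following is a minimal sketch, assuming the standard normalisation \(K_{s}(y)=\frac{1}{2}\int_{0}^{\infty}e^{-y(t+t^{-1})/2}t^{s}\,dt/t\) and real s; after the substitution t=e^u this becomes the integral of e^{−y cosh u}cosh(su) over u≥0, which is approximated by the trapezoidal rule. The cut-off `upper` and the step count are illustrative choices, and the name `k_bessel` is hypothetical.

```python
import math

def k_bessel(s, y, upper=12.0, steps=20000):
    """Trapezoidal approximation of K_s(y) for real s of moderate size and y > 0.

    Uses K_s(y) = integral over u >= 0 of exp(-y*cosh(u)) * cosh(s*u) du,
    truncated at u = upper, where the integrand is negligible for y >= 0.5.
    """
    h = upper / steps

    def integrand(u):
        return math.exp(-y * math.cosh(u)) * math.cosh(s * u)

    total = 0.5 * (integrand(0.0) + integrand(upper))
    for j in range(1, steps):
        total += integrand(j * h)
    return h * total

# Classical closed form for s = 1/2: K_{1/2}(y) = sqrt(pi / (2y)) * exp(-y).
closed_form = math.sqrt(math.pi / 2) * math.exp(-1.0)
approximation = k_bessel(0.5, 1.0)
```

The symmetry K −s =K s is visible in this form of the integral, since cosh is an even function.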

Definition 2.7.8

A function f:ℝ→ℂ is called a Schwartz function if f is infinitely differentiable and every derivative f (k), k≥0 is rapidly decreasing. Let \(\mathcal{S}(\mathbb{R})\) denote the vector space of all Schwartz functions on ℝ. If f is in \(\mathcal{S}(\mathbb{R})\), then its Fourier transform \(\hat{f}\) also lies in \(\mathcal{S}(\mathbb{R})\); see [Dei05, Rud87, SW71].

Lemma 2.7.9

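If \(\operatorname{Re}(s)>\frac{1}{2}\) and r∈ℝ, then

$$\pi^{-s}\varGamma (s)\int_{\mathbb{R}} \biggl(\frac{y}{x^{2}+y^{2}}\biggr)^{s}e^{2\pi irx}\,dx= \begin{cases}\pi^{-s+\frac{1}{2}}\varGamma (s-\frac{1}{2})y^{1-s}&\text{if } r=0,\\ 2|r|^{s-\frac{1}{2}}\sqrt{y}K_{s-\frac{1}{2}}(2\pi|r|y)&\text{if } r\ne0. \end{cases} $$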

Proof

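We plug in the Γ-integral on the left-hand side to get

$$\int_{\mathbb{R}}\int_{0}^{\infty}e^{-\pi t(x^{2}+y^{2})/y}t^{s}\frac{dt}{t}e^{2\pi irx}\,dx=\int_{0}^{\infty}e^{-\pi ty} \biggl(\int_{\mathbb{R}}e^{-\pi tx^{2}/y}e^{2\pi irx}\,dx \biggr)t^{s}\frac{dt}{t}, $$

where we have substituted t↦πt(x 2+y 2)/y. The function \(f(x)=e^{-\pi x^{2}}\) is its own Fourier transform: \(\hat{f}=f\). To see this, note that f is, up to scaling, uniquely determined as the solution of the differential equation

$$f'(x)=-2\pi xf(x). $$

By induction one shows that for every natural n there is a polynomial p n (x), such that \(f^{(n)}(x)=p_{n}(x)e^{-\pi x^{2}}\). So f lies in the Schwartz space \(\mathcal{S}(\mathbb{R})\) and so does its Fourier transform \(\hat{f}\), and one computes

$$(\hat{f})'(y)=\int_{\mathbb{R}}(-2\pi ix)f(x)e^{-2\pi ixy}\,dx=i\int_{\mathbb{R}}f'(x)e^{-2\pi ixy}\,dx=-2\pi y\hat{f}(y). $$

Therefore \(\hat{f}=c f\) and \(\skew{7}\hat{\hat{f}}=c\hat{f}= c^{2} f\). Since, on the other hand, \(\skew{7}\hat{\hat{f}}(x)=f(-x)=f(x)\), we infer that c 2=1, so c=±1. By \(\hat{f}(0)=\int_{\mathbb{R}}e^{-\pi x^{2}}\,dx>0\) it follows that c=1.

By a simple substitution one gets from this

$$\int_{\mathbb{R}}e^{-\pi tx^{2}/y}e^{2\pi irx}\,dx=\sqrt{\frac{y}{t}}e^{-\pi r^{2}y/t}. $$

We see that the left-hand side of the lemma equals

$$\sqrt{y}\int_{0}^{\infty}e^{-\pi y(t+r^{2}/t)}t^{s-\frac{1}{2}}\frac{dt}{t}, $$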

which gives the claim. □
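The self-duality of the Gaussian used in this proof, together with its rescaled form, can be checked by direct quadrature. The following is a minimal sketch, assuming the classical formula that the integral of exp(−πax²)e^{2πirx} over ℝ equals a^{−1/2}exp(−πr²/a) for a>0; the integration window and step count are illustrative choices, and the function name is hypothetical.

```python
import math

def gaussian_fourier_integral(r, a=1.0, steps=4000):
    """Trapezoidal approximation of the integral of exp(-pi*a*x^2) * exp(2*pi*i*r*x) over R.

    Only the cosine part is summed; the sine part vanishes because the
    Gaussian is an even function.
    """
    half_width = 8.0 / math.sqrt(a)  # the integrand is negligible outside this window
    h = 2 * half_width / steps
    total = 0.0
    for j in range(steps + 1):
        x = -half_width + j * h
        weight = 0.5 if j in (0, steps) else 1.0
        total += weight * math.exp(-math.pi * a * x * x) * math.cos(2 * math.pi * r * x)
    return h * total

def gaussian_fourier_closed_form(r, a=1.0):
    """Assumed closed form a^(-1/2) * exp(-pi * r^2 / a)."""
    return math.exp(-math.pi * r * r / a) / math.sqrt(a)
```

With a=1 this is the statement that f equals its own Fourier transform; with a=t/y it is the substitution step of the proof.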

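We now compute the Fourier expansion of the Eisenstein series E(z,s). The coefficients are given by

$$a_{r}(y,s)=\int_{0}^{1}E(x+iy,s)e^{-2\pi irx}\,dx=\pi^{-s}\varGamma (s)\frac{y^{s}}{2}\sum_{(m,n)\ne(0,0)}\int_{0}^{1}\frac{e^{-2\pi irx}}{|m(x+iy)+n|^{2s}}\,dx. $$

The summands with m=0 only give a contribution in the case r=0. This contribution is

$$\pi^{-s}\varGamma (s)\frac{y^{s}}{2}\sum_{n\ne0}|n|^{-2s}=\pi^{-s}\varGamma (s)\zeta(2s)y^{s}. $$

For m≠0 note that the contributions for (m,n) and (−m,−n) are equal. Therefore it suffices to sum over m>0. The contribution to a r is

$$\pi^{-s}\varGamma (s)y^{s}\sum_{m=1}^{\infty}m^{-2s}\sum_{n\in\mathbb{Z}}\int_{0}^{1} \biggl( \biggl(x+\frac{n}{m} \biggr)^{2}+y^{2} \biggr)^{-s}e^{-2\pi irx}\,dx. $$

The substitution x↦x−n/m yields

$$\pi^{-s}\varGamma (s)y^{s}\sum_{m=1}^{\infty}m^{-2s}\sum_{n \bmod m}e^{2\pi irn/m}\int_{\mathbb{R}}\bigl(x^{2}+y^{2}\bigr)^{-s}e^{-2\pi irx}\,dx. $$

Because of

$$\sum_{n \bmod m}e^{2\pi irn/m}= \begin{cases}m&\text{if } m\mid r,\\ 0&\text{otherwise}, \end{cases} $$

the contribution is

$$\pi^{-s}\varGamma (s)\sum_{\substack{m\mid r\\ m>0}}m^{1-2s}\int_{\mathbb{R}} \biggl(\frac{y}{x^{2}+y^{2}} \biggr)^{s}e^{-2\pi irx}\,dx. $$

There are two cases. Firstly, if r=0, the condition m|r is vacuous and we get

$$\zeta(2s-1)\pi^{-s}\varGamma (s)\int_{\mathbb{R}} \biggl(\frac{y}{x^{2}+y^{2}} \biggr)^{s}\,dx=\pi^{-s+\frac{1}{2}}\varGamma \biggl(s-\frac{1}{2} \biggr)\zeta(2s-1)y^{1-s}=\hat{\zeta}(2s-1)y^{1-s}, $$

where we have used Lemma 2.7.9. The Riemann zeta function satisfies the functional equation

$$\hat{\zeta}(s)=\hat{\zeta}(1-s) $$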

with \(\hat{\zeta}(s)=\pi^{-s/2}\varGamma (s/2)\zeta(s)\), as is shown in Theorem 6.1.3. Therefore the zeroth term a 0 is as claimed. Secondly, in the case r≠0 we get the claim again by Lemma 2.7.9. □
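Two elementary sums drive this computation: the sum of e^{2πirn/m} over n modulo m, which singles out the divisors m of r, and the divisor power sum that results from summing m^{1−2s} over those divisors. The following is a minimal sketch of both; the function names are hypothetical and the divisor sum is written for a general exponent ν.

```python
import cmath

def additive_character_sum(r, m):
    """Sum of exp(2*pi*i*r*n/m) over n = 0, ..., m-1 for integers r and m >= 1."""
    return sum(cmath.exp(2j * cmath.pi * r * n / m) for n in range(m))

def divisor_power_sum(nu, r):
    """Sum of d**nu over the positive divisors d of the non-zero integer r."""
    r = abs(r)
    return sum(d ** nu for d in range(1, r + 1) if r % d == 0)
```

For example, `additive_character_sum(6, 3)` is close to 3 because 3 divides 6, while `additive_character_sum(5, 3)` is close to 0; floating point rounding means the values are only approximately integral.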

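We now explain the Rankin–Selberg method. Let Γ=SL2(ℤ) and let ϕ:ℍ→ℂ be a smooth, Γ-automorphic function. We assume that ϕ is rapidly decreasing at the cusp ∞, i.e. that

$$\phi(x+iy)=O\bigl(y^{-N}\bigr)\quad\text{as } y\to\infty $$

holds for every N∈ℕ. Because of ϕ(z+1)=ϕ(z) the function ϕ has a Fourier expansion

$$\phi(x+iy)=\sum_{n\in\mathbb{Z}}\phi_{n}(y)e^{2\pi inx} $$

with \(\phi_{n}(y)=\int_{0}^{1}\phi(x+iy)\,e^{-2\pi inx}\,dx\). The term ϕ 0 is called the constant term of the Fourier expansion. Let

$$\mathcal{M}\phi_{0}(s)=\int_{0}^{\infty}\phi_{0}(y)y^{s}\frac{dy}{y} $$

be the Mellin transform of the zeroth term. We shall show that this integral converges for \(\operatorname{Re}(s)>0\). Put

$$\varLambda(s)=\pi^{-s}\varGamma (s)\zeta(2s)\mathcal{M}\phi_{0}(s-1). $$

Proposition 2.7.10

(Rankin–Selberg method)

The integral \(\mathcal{M}\phi_{0}(s)\) converges locally uniformly absolutely in the domain \(\operatorname{Re}(s)>0\). One has

$$\varLambda(s)=\int_{\varGamma \backslash\mathbb{H}}E(z,s)\phi(z)\,d\mu(z). $$

The function Λ(s), defined for \(\operatorname{Re}(s)>1\), extends to a meromorphic function on ℂ with at most simple poles at s=0 and s=1. It satisfies the functional equation

$$\varLambda(s)=\varLambda(1-s). $$

The residue at s=1 equals

$$\operatorname{Res}_{s=1}\varLambda(s)=\frac{1}{2}\int_{\varGamma \backslash\mathbb{H}}\phi(z)\,d\mu(z). $$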

Proof

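The proof relies on an unfolding trick as follows

$$\begin{aligned} \int_{\varGamma \backslash\mathbb{H}}E(z,s)\phi(z)\,d\mu(z) &=\pi^{-s}\varGamma (s)\zeta(2s)\int_{\varGamma \backslash\mathbb{H}}\sum_{\gamma:\varGamma _{\infty}\backslash \varGamma }\operatorname{Im}(\gamma z)^{s}\phi(\gamma z)\,d\mu(z) \\ &=\pi^{-s}\varGamma (s)\zeta(2s)\int_{\varGamma _{\infty}\backslash\mathbb{H}}\operatorname{Im}(z)^{s}\phi(z)\,d\mu(z) \\ &=\pi^{-s}\varGamma (s)\zeta(2s)\int_{0}^{\infty}\int_{0}^{1}\phi(x+iy)\,dx\,y^{s}\frac{dy}{y^{2}} \\ &=\pi^{-s}\varGamma (s)\zeta(2s)\mathcal{M}\phi_{0}(s-1)=\varLambda(s). \end{aligned} $$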

The claims now follow from Theorem 2.7.7. □

We apply the Rankin–Selberg method to show that the Rankin–Selberg convolution of modular L-functions is meromorphic. Let k∈2ℕ0 and let \(f,g\in\mathcal{M}_{k}\) be normalized Hecke eigenforms. Denote the Fourier coefficients of f and g by a n and b n for n≥0, respectively. We define the Rankin–Selberg convolution of L(f,s) and L(g,s) by

$$L(f\times g,s)=\sum_{n=1}^{\infty}a_{n}b_{n}n^{-s}. $$

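By Proposition 2.3.1 and Theorem 2.3.2 one has a n ,b n =O(n k−1). Therefore the series L(f×g,s) converges absolutely for \(\operatorname{Re}(s)>2k-1\). We put

$$\varLambda(f\times g,s)=(2\pi)^{-2s}\varGamma (s)\varGamma (s-k+1)\zeta(2s-2k+2)L(f\times g,s). $$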

Theorem 2.7.11

Suppose that one of the functions f,g is a cusp form. Then Λ(f×g,s) extends to a meromorphic function on ℂ. It is holomorphic except for possible simple poles at s=k and s=k−1. It satisfies the functional equation

$$\varLambda(f\times g,s)=\varLambda(f\times g,2k-1-s). $$

The residue at s=k is \(\frac{1}{2}\pi^{1-k}{\langle f,g\rangle}_{k}\).

Proof

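We apply Proposition 2.7.10 to the function \(\phi(z)=f(z)\overline{g(z)}y^{k}\). Then

$$\phi_{0}(y)=\int_{0}^{1}f(x+iy)\overline{g(x+iy)}y^{k}\,dx=\sum_{n,m\ge0}a_{n}\overline{b_{m}}e^{-2\pi(n+m)y}y^{k}\int_{0}^{1}e^{2\pi i(n-m)x}\,dx. $$

Since \(\int_{0}^{1}e^{2\pi i(n-m)x}\,dx=0\) except for n=m, we get \(\phi_{0}(y) = \sum_{n=1}^{\infty}a_{n}\overline{b_{n}}e^{-4\pi ny}y^{k}\). So

$$\mathcal{M}\phi_{0}(s)=\sum_{n=1}^{\infty}a_{n}\overline{b_{n}}\int_{0}^{\infty}e^{-4\pi ny}y^{s+k}\frac{dy}{y}=(4\pi)^{-(s+k)}\varGamma (s+k)\sum_{n=1}^{\infty}a_{n}\overline{b_{n}}n^{-(s+k)}. $$

The number b n is the eigenvalue of the Hecke operator T n . As T n is self-adjoint, b n is real. Therefore,

$$\mathcal{M}\phi_{0}(s)=(4\pi)^{-(s+k)}\varGamma (s+k)L(f\times g,s+k). $$

Let Λ(s) be as in Proposition 2.7.10. It follows that

$$\varLambda(s)=\pi^{-s}\varGamma (s)\zeta(2s) (4\pi)^{-(s+k-1)}\varGamma (s+k-1)L(f\times g,s+k-1)=\pi^{k-1}\varLambda(f\times g,s+k-1), $$

or

$$\varLambda(f\times g,s)=\pi^{1-k}\varLambda(s+1-k). $$

By Proposition 2.7.10 one has Λ(s+1−k)=Λ(1−(s+1−k)), which implies the claimed functional equation. Finally one has

$$\operatorname{Res}_{s=k}\varLambda(f\times g,s)=\pi^{1-k}\operatorname{Res}_{s=1}\varLambda(s)=\frac{\pi^{1-k}}{2}\int_{\varGamma \backslash\mathbb{H}}f(z)\overline{g(z)}y^{k}\,d\mu(z)=\frac{1}{2}\pi^{1-k}{\langle f,g\rangle}_{k}. $$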

□

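Next we show that the L-function L(f×g,s) has an Euler product. For every prime p we factorize the polynomials

$$1-a_{p}X+p^{k-1}X^{2}=\bigl(1-\alpha_{1}(p)X\bigr) \bigl(1-\alpha_{2}(p)X\bigr),\qquad 1-b_{p}X+p^{k-1}X^{2}=\bigl(1-\beta_{1}(p)X\bigr) \bigl(1-\beta_{2}(p)X\bigr). $$

Theorem 2.7.12

Let k∈2ℕ0 and let \(f,g\in\mathcal{M}_{k}\) be normalized Hecke eigenforms. The Rankin–Selberg L-function has the Euler product expansion

$$L(f\times g,s)=\zeta(2s-2k+2)^{-1}\prod_{p}\prod_{i,j=1}^{2}\bigl(1-\alpha_{i}(p)\beta_{j}(p)p^{-s}\bigr)^{-1}. $$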

Proof

This is a consequence of the following lemma.

Lemma 2.7.13

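Let α 1,α 2,β 1,β 2 be complex numbers with α 1 α 2 β 1 β 2≠0 and suppose that the equalities

$$\sum_{r=0}^{\infty}a_{r}z^{r}=\frac{1}{(1-\alpha_{1}z)(1-\alpha_{2}z)},\qquad \sum_{r=0}^{\infty}b_{r}z^{r}=\frac{1}{(1-\beta_{1}z)(1-\beta_{2}z)} $$

hold for small complex numbers z. Then for small z one has

$$\sum_{r=0}^{\infty}a_{r}b_{r}z^{r}=\frac{1-\alpha_{1}\alpha_{2}\beta_{1}\beta_{2}z^{2}}{\prod_{i,j=1}^{2}(1-\alpha_{i}\beta_{j}z)}. $$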
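The identity of this lemma, in its classical form Σ a r b r z^r = (1−α 1 α 2 β 1 β 2 z²)/Π(1−α i β j z), can be sanity-checked coefficient by coefficient with exact rational arithmetic. The following is a minimal sketch, assuming that a r and b r are the coefficients of 1/((1−α 1 z)(1−α 2 z)) and 1/((1−β 1 z)(1−β 2 z)); it multiplies the truncated series by the four linear factors and compares with the numerator. All function names are hypothetical.

```python
from fractions import Fraction

def series_coefficient(r, x, y):
    """Coefficient of z^r in 1 / ((1 - x*z) * (1 - y*z))."""
    return sum(x ** i * y ** (r - i) for i in range(r + 1))

def multiply_truncated(p, q, degree):
    """Product of two coefficient lists, keeping terms up to z^degree."""
    out = [Fraction(0)] * (degree + 1)
    for i, p_i in enumerate(p):
        for j, q_j in enumerate(q):
            if i + j <= degree:
                out[i + j] += p_i * q_j
    return out

def identity_holds(a1, a2, b1, b2, degree=8):
    """Check the identity up to z^degree (degree >= 2) for Fraction inputs."""
    series = [series_coefficient(r, a1, a2) * series_coefficient(r, b1, b2)
              for r in range(degree + 1)]
    denominator = [Fraction(1)]
    for x in (a1, a2):
        for y in (b1, b2):
            denominator = multiply_truncated(denominator, [Fraction(1), -x * y], degree)
    left = multiply_truncated(series, denominator, degree)
    right = [Fraction(1), Fraction(0), -a1 * a2 * b1 * b2] + [Fraction(0)] * (degree - 2)
    return left == right
```

Such a check only tests finitely many coefficients for particular values and does not replace the proof.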

Proof