Abstract

This chapter presents the results of simulations performed with various computationally efficient model predictive control (MPC) strategies applied to the state-space model of an active vibration control (AVC) demonstration device. A numerical study of the minimal prediction horizon necessary to steer the system state into equilibrium for the AVC demonstrator model suggests very long horizons, even for relatively small outside disturbances. This test assumes infinite horizon cost dual-mode quadratic programming based MPC (QPMPC) with stability guarantees. Under that assumption, an online implementation with a horizon several hundred steps long is unlikely to be feasible due to excessive computation times. Through the computation of offline optimization times, numbers of regions and controller sizes, it is demonstrated here that the computational requirements of multi-parametric programming based MPC (MPMPC) also make it an unlikely candidate for the AVC of lightly damped systems. From the viewpoint of computational complexity, Newton–Raphson MPC (NRMPC) is certainly a good choice for AVC; however, it is not without drawbacks. As the simulation results presented here suggest, the invariance of the target set, and therefore the constraints, can be violated due to numerical imprecision at the offline computational stage. Moreover, the suboptimality of this approach becomes troubling with increasing problem dimensionality. As the numerical tests demonstrate, the offline computational drawbacks can be partly remedied by performance bounds and proper solver settings, while optimality can be somewhat improved by a suitable choice of input penalty. The chapter concludes with a simulation comparison of the QPMPC, MPMPC and NRMPC algorithms, which shows input and output sequences in agreement with theoretical expectations.

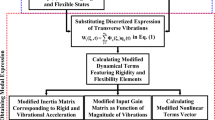

This chapter presents the results of simulations performed with various model predictive control (MPC) strategies applied to the state-space model of the active vibration control (AVC) demonstration device, which represents a class of lightly damped mechanical systems. The simulations were aimed at evaluating the implementation properties of MPC algorithms. They also investigate issues caused by the lightly damped nature of the controlled plant, numerical limitations, optimality problems and others.

The active vibration suppression of lightly damped flexible mechanical systems is a uniquely difficult control task when model predictive control with stability and feasibility guarantees is considered. As the numerical study in Sect. 11.1 implies, this is caused by the long horizons necessary to create a sufficiently large region of attraction for the control law. Using the state-space model of the AVC demonstrator, minimal necessary horizons are compared with the largest achievable deflections at the tip under stabilized infinite horizon dual-mode quadratic programming-based MPC (QPMPC). Driving the system state from an initial state caused by a large deformation requires prediction horizons several hundred steps long. For this reason, the application of classical QPMPC to lightly damped mechanical systems with fast sampling is very unlikely. Although multi-parametric MPC (MPMPC) is computationally more efficient online than QPMPC, the long prediction horizons may prohibit practical implementation because of intractable offline computation times. Among other properties, Sect. 11.2 relates the time required to evaluate an MPMPC controller to the achieved horizon length. That section also evaluates the number of polyhedral regions in the controller and the size of the executable to be loaded onto the hardware running the online MPMPC routine.

Simulation evaluation of the Newton–Raphson MPC (NRMPC) algorithm sheds light on some serious numerical problems that may occur in real-time implementations. As demonstrated in Sect. 11.3, imprecision at the offline optimization stage caused a violation of the invariance condition and thereby, indirectly, a violation of the system constraints. In addition to exposing the nature of this difficulty, the section evaluates the effects of imposing a prediction performance bound. As the simulation results imply, the invariance condition violation is clearly caused by numerical obstacles and can be partly remedied by increasing solver precision.

Suboptimality is a natural drawback of the NRMPC approach. However, Sect. 11.4 clearly illustrates that simulations performed with higher order lightly damped state-space models are suboptimal beyond all expectations. Both the evolution of the controller outputs in the time domain and the state trajectories point out the deficiencies of NRMPC in comparison with optimal methods. As the trials in Sect. 11.4 suggest, lowering the controller output penalization may slightly improve the situation and allow the actuators to use their full potential. A certain penalization value is justified for the second order models of the vibration attenuation example in this work. It is then compared to the much lower settings suitable for higher order examples.

To examine the optimality improvement promised by the discussion in Sect. 8.1.2.1, simulation trials were performed with the alternative NRMPC extensions. Closed-loop costs are compared for a simple example in Sect. 11.5. Despite their drawbacks, the several-steps-ahead extensions show a significant improvement in process optimality for certain problem classes. The evolution of the perturbation scaling variables, obtained by evaluating the future membership and feasibility conditions, is also shown. Although the extensions are promising for certain applications, the simulation carried out with a fourth order model of the vibrating system shows no significant improvement in closed-loop cost and performance. The use of the alternative NRMPC extensions is therefore not justified for the problem considered in this work.

Finally, Sect. 11.6 compares the vibration damping performance of quadratic programming, multi-parametric programming and Newton–Raphson-based MPC strategies. The results of this trial reveal nothing that could not have been deduced from the theoretical discussion. The NRMPC algorithm respects constraints and behaves as expected, while the QPMPC- and MPMPC-based controllers provide a faster and better damped response to the same initial condition.

1 On the Horizon Length of Stable MPC

A simple second order prediction model of the experimental demonstration device described in Chap. 5, sampled at 100 Hz, would be suitable for use with a quadratic programming based MPC controller. The optimization task is likely to be tractable, as higher sampling rates have already been successfully implemented for vibration control [71, 72]. Neither the model order nor the sampling rate imposes special algorithmic or hardware requirements. However, this is only true if constraints are considered but no guarantees on stability or feasibility are given.

If system constraints are considered, the set of allowable states or initial conditions is generally limited to a subset of the state space given by those constraints, for example box constraints on the state. The use of an MPC formulation with an a priori stability guarantee further limits the size (volume) of this set of allowable states. This limited subset of the state space is called the region of attraction. If a disturbance takes the system state outside this region, the optimization is simply infeasible. Therefore, the volume of the region of attraction should be made as large as possible.

Among other factors, the size or volume of the region of attraction depends on the given MPC strategy. For an optimal strategy such as dual-mode stabilized QPMPC or optimal MPMPC, the region is a polyhedral set in the state space. For a suboptimal MPC strategy such as NRMPC, or when target sets like the ones in Sect. 7.5 or 7.6 are used, the region of attraction is only a subset of the maximal possible region of attraction. The volume of the region of attraction also depends on three further factors: the choice of the state and input penalization matrices \({\mathbf{Q}}\) and \({\mathbf{R}}\), the character of the controlled system itself and the length of the prediction horizon.

The dynamic properties of the controlled system have a great influence on the relative size of the region of attraction and the target set. For certain systems with very light damping, such as the AVC demonstrator example, the effect of the actuators is very small compared to the range of disturbances one might reasonably expect. Consequently, a large region of attraction has to be computed to allow the state to migrate into the target set by the end of the prediction horizon. In this light, the necessary horizon length for stabilized MPC may alternatively be understood as the number of steps necessary to drive the system state from a given initial condition into the terminal set.

This concept is graphically illustrated in Fig. 11.1. The slowly decaying waveform suggests the lightly damped behavior of the beam tip, or of other systems where the settling time is very long compared with the sampling period. Such systems have been discussed earlier in Sect. 5.1.1. There it was suggested that a class of engineering problems may have dynamic behavior very similar to the simple vibrating cantilever from the viewpoint of predictive control. This class includes manipulators [8, 19, 26, 42, 61, 63, 73, 74, 76], helicopter rotors [7, 40, 43], wing surfaces [1, 14, 15] and space structures [20, 39, 44, 51, 57].

The initial condition of these systems has to be contained within the region of attraction, shown conceptually as the large polyhedron. At a certain point in the course of the response, the state of the system enters the given terminal set, illustrated as the smaller polyhedron in the figure. The minimal necessary prediction horizon for stable MPC with constraint feasibility guarantees is then the time \(t_m\) in samples. Note that the invariant sets are shown here only for illustration purposes, and the concepts of state space and control output course in the time domain are mixed purely for demonstration.

This suggests that all systems where the effect of the control action is limited in comparison with the effort necessary to drive the trajectory into equilibrium will require lengthy control horizons. When piezoelectric actuators are used for the vibration suppression of very flexible structures, their displacement effect is small compared to the range they need to cover. This effect is not limited to piezoelectric actuators. Active vibration attenuation through electrostrictive or magnetostrictive materials [4, 9, 49, 56, 69], electrochemical materials [2, 30, 46, 58] and possibly other actuators would create dynamic models with similar properties and issues for MPC implementation.

The number of samples required to settle the system from its initial condition indicates extremely long control horizons. Consider the laboratory demonstration model running saturated linear quadratic (LQ) control. The time necessary to reach near equilibrium from an initial deflection of 15 mm is approximately 2 s. Dividing this by the 0.01 s sampling period (100 Hz) gives a necessary minimal prediction horizon of 200 steps if dual-mode QPMPC with polytopic terminal sets is used as the controller. The issue is the same with MPMPC, except that the implementation difficulties are transferred from the online computation to the offline controller computation stage. The majority of the MPC implementations available in the literature considered no constraints, which neither limits the available state space nor creates computational issues [16, 19, 50, 59, 75]. Other researchers considered constrained MPC that requires online optimization, thus creating certain implementation issues, but did not address the question of stability [8, 13, 21, 27, 48, 71, 72]. Unlike these cases, the authors of this book considered MPC implementations with stability guarantees in preliminary works such as [64–67]. This will also be the assumption throughout the simulations and experiments presented in this and the upcoming chapter.
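
As a worked check of this estimate, assume the horizon is simply the settling time expressed in samples. The symbols \(t_{set}\) (settling time) and \(f_s\) (sampling frequency) are introduced here only for illustration:

\[ n_c \approx \frac{t_{set}}{T_s} = t_{set}\, f_s = 2\ \mathrm{s} \times 100\ \mathrm{Hz} = 200 \]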

Let us return to the case of vibrating systems and estimate the necessary prediction horizon from the settling time of the system. If the damping is assumed to cause an exponential decay of the vibration amplitudes, the prediction horizon necessary to ensure a feasible and stable MPC run is easily approximated. If the system vibrates freely from a given initial condition, the amplitude \(d_t\) at a given time can be approximated by the following relation [6, 17, 23]:

\[ d_t = d_0\, e^{-\zeta \omega_{n} t} \tag{11.1} \]

where \(d_0\) is the initial deflection, \(t\) is the time in seconds since the initial conditions affected the system, \(\zeta\) is the damping ratio and \(\omega_{n}\) is the first or dominant natural frequency of the vibrating system. If MPC control of the system with guaranteed stability is assumed, there must be an amplitude level below which the system enters the target set. Denoting this level by \(d_{ts}\) and using relation (11.1), the minimal prediction horizon \(n_{min}\) for initial deflections of \(d_0\) and smaller can be approximated by:

\[ n_{min} \approx \frac{1}{2\pi \zeta f_0 T_s} \ln \frac{d_0}{d_{ts}} \tag{11.2} \]

where \(f_0\) is the first or dominant mechanical eigenfrequency (so that \(\omega_n = 2\pi f_0\)), \(T_s\) is the sampling period considered for control and the remaining variables are as defined for (11.1).
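
The estimate can be evaluated directly. The sketch below assumes the exponential envelope \(d_0 e^{-\zeta\omega_n t}\) with \(\omega_n = 2\pi f_0\) and \(t = n T_s\). Rounding up to whole samples is an assumption, since the text only states an approximation. The function name and parameter values are illustrative and are not taken from the demonstrator.

```python
import math


def min_prediction_horizon(d0: float, d_ts: float, zeta: float,
                           f0: float, ts: float) -> int:
    """Samples needed for the free-vibration envelope d0*exp(-zeta*2*pi*f0*t)
    to decay to the target-set amplitude level d_ts.

    d0, d_ts: amplitudes in the same unit; zeta: damping ratio;
    f0: dominant eigenfrequency [Hz]; ts: sampling period [s].
    """
    if d0 <= d_ts:
        return 0                                  # already below the target-set level
    t_m = math.log(d0 / d_ts) / (2.0 * math.pi * zeta * f0)   # time in seconds
    return math.ceil(t_m / ts)                    # round up to whole samples
```

Because the horizon grows only with \(\ln(d_0/d_{ts})\) but inversely with \(\zeta\), light damping, not large deflection, is what drives the horizon into the hundreds of steps.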

1.1 Simulating Necessary Horizon Lengths

Two simulation algorithms have been designed to determine the relationship between the maximal allowable deflection at the beam tip and the minimal associated prediction horizon. The first algorithm is based on the traditional dual-mode QPMPC formulation [3, 45, 60, 70], with stability and feasibility guarantees ensured by terminal constraints [12, 47]. The mathematical model used in the simulation and its settings corresponded to the physical laboratory device. The implementation followed the general workflow introduced earlier in Sect. 10.1.

To find the minimal control horizon for a given deflection, the online quadratic programming problem was evaluated. If the QP problem was infeasible at any of the steps, the horizon was increased until a fully successful run was achieved. This was repeated for increasing values of tip deflection and the corresponding states. The algorithm is summarized as follows:

Algorithm 11.1

1. Increase the initial deflection \(d\) and the corresponding state \(x_k\).
2. Perform the minimization \(\min_{{\mathbf{u}}} J({\mathbf{u}}_k,x_k)\) subject to input and terminal constraints.
3. If the minimization is infeasible, increase the prediction horizon \(n_c\) and repeat the algorithm from step 2.
4. Otherwise, record the deflection value \(d\) and the corresponding horizon \(n_c\), and repeat the algorithm from step 1.

This method is inexact, though it is time efficient compared to the computationally more intensive search for the extreme points of the maximal admissible set given the expected horizon lengths.
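
The search loop of Algorithm 11.1 can be sketched as follows. Several assumptions differ from the text and should be read as such:

- The model is a hypothetical single lightly damped mode in rotation (modal) form, with made-up parameters rather than the identified demonstrator.
- The QP in step 2 is replaced by an exact feasibility test, because only feasibility decides whether the horizon is increased.
- The terminal set is the origin (a terminal equality constraint \(x_{n_c} = 0\)). This is a special, more conservative choice than the invariant terminal sets used in this work.

The test checks whether \(-\mathbf{A}^{n_c} x_0\) lies in the zonotope spanned by the input generators \(\mathbf{A}^j \mathbf{B} u_{max}\).

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]
Mat = Tuple[Vec, Vec]

# Hypothetical single-mode model (illustrative values, NOT the demonstrator).
F_N, ZETA, TS = 8.0, 0.01, 0.01   # natural freq. [Hz], damping ratio, period [s]
B_GAIN, U_MAX = 0.001, 120.0      # input gain [mm/V per sample], input bound [V]


def model() -> Tuple[Mat, Vec]:
    wn = 2.0 * math.pi * F_N
    r = math.exp(-ZETA * wn * TS)                 # amplitude decay per sample
    th = wn * math.sqrt(1.0 - ZETA ** 2) * TS     # phase advance per sample
    A = ((r * math.cos(th), -r * math.sin(th)),
         (r * math.sin(th), r * math.cos(th)))
    return A, (0.0, B_GAIN)


def mul(A: Mat, v: Vec) -> Vec:
    return (A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1])


def feasible(A: Mat, x0: Vec, gens: Sequence[Vec]) -> bool:
    """Stand-in for step 2: can |u_k| <= U_MAX steer x0 to x_N = 0, N = len(gens)?

    x_N = A^N x0 + sum_j A^j B u_j, so x_N = 0 is reachable iff -A^N x0 lies in
    the zonotope spanned by the generators A^j B U_MAX, j < N.
    """
    free = x0
    for _ in gens:
        free = mul(A, free)                       # free response A^N x0
    if not gens:
        return free == (0.0, 0.0)
    # Edges of a 2-D zonotope are parallel to its generators, so the facet
    # normals are the perpendiculars; the generators cover the flat case.
    for n in [(-g[1], g[0]) for g in gens] + list(gens):
        support = sum(abs(n[0] * g[0] + n[1] * g[1]) for g in gens)
        if abs(n[0] * free[0] + n[1] * free[1]) > support + 1e-12:
            return False
    return True


def minimal_horizons(deflections: Sequence[float],
                     max_horizon: int = 5000) -> List[Tuple[float, int]]:
    """Algorithm 11.1 sketch: (deflection, minimal horizon) pairs."""
    A, B = model()
    gens: List[Vec] = []           # current horizon n_c = len(gens)
    table: List[Tuple[float, int]] = []
    # The horizon is never reset: inputs can be scaled with the initial state,
    # so the minimal horizon is nondecreasing in the deflection d.
    for d in sorted(deflections):                 # step 1: increase deflection
        x0 = (d, 0.0)                             # tip deflected by d, at rest
        while not feasible(A, x0, gens):          # step 2: feasibility check
            if len(gens) >= max_horizon:
                raise RuntimeError("no feasible horizon up to max_horizon")
            # step 3: lengthen the horizon by one sample
            gens.append(mul(A, gens[-1]) if gens else (B[0] * U_MAX, B[1] * U_MAX))
        table.append((d, len(gens)))              # step 4: record (d, n_c)
    return table
```

Calling `minimal_horizons([0.5, 2.0, 10.0])` returns a nondecreasing staircase of horizons. Under these assumed parameters, the 10 mm case needs at least 70 samples. This follows from the necessary condition \(r^{n_c} d \le u_{max} b \sum_{j<n_c} r^j\).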

An alternative algorithm determines the minimal horizon that allows a given beam tip deflection. It is based on successive computations of multi-parametric MPC controllers with varying deflection constraints. The multi-parametric controllers have been iteratively computed using the MPT Toolbox [31, 34, 35]. This method is slightly more computationally intensive than Algorithm 11.1, but also more accurate:

Algorithm 11.2

1. Increase the initial deflection \(d\) and set the deflection constraints to \(\overline{y}=-\underline {y}=d\).
2. Compute the minimum time multi-parametric MPC controller.
3. If the computed horizon \(n_c\) is equal to the one in the previous step, repeat from step 1.
4. Otherwise, record the prediction horizon \(n_c\) and the corresponding deflection \(d\), then repeat from step 1.
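
The bookkeeping of Algorithm 11.2 records a deflection only when the minimum-time horizon changes, which yields the breakpoints of a staircase curve. In the sketch below, `min_time_horizon` is a hypothetical placeholder for the minimum-time explicit controller computation (performed in this work with the MPT Toolbox). It is not a real toolbox function.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def horizon_breakpoints(deflections: Sequence[float],
                        min_time_horizon: Callable[[float], int]
                        ) -> List[Tuple[float, int]]:
    """Algorithm 11.2 outline: keep (d, n_c) only where n_c changes."""
    table: List[Tuple[float, int]] = []
    previous: Optional[int] = None
    for d in sorted(deflections):       # step 1: increase d, impose |y| <= d
        n_c = min_time_horizon(d)       # step 2: hypothetical min-time controller
        if n_c == previous:             # step 3: same horizon, try a larger d
            continue
        table.append((d, n_c))          # step 4: record the new horizon
        previous = n_c
    return table
```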

Results of simulating the minimal prediction horizon lengths using Algorithms 11.1 and 11.2 are shown in Fig. 11.2. As is evident from this analysis, even a fairly small deflection measured at the beam end requires a very long prediction horizon. Consider the second order prediction model with 100 Hz sampling and a modest maximal allowable deflection of \(y_{max} = 15\) mm: the necessary horizon approaches \(n_c = 150\) steps. If one includes an excess range reserve in order to prevent infeasible states from entering the optimization procedure, this value is even higher. A higher order prediction model, which allows the control of faster dynamics, requires a faster sampling rate. As an example, we demonstrate that a 500 Hz sampled model of the beam would require a prediction horizon of approximately \(n_c = 500\) steps to include states resulting from the same deflection.

1.2 NRMPC and Horizon Length

The original development of the NRMPC algorithm presented by Kouvaritakis et al. in [28] and [29] assumes fixed matrices \({\mathbf{T}}\) (the shift matrix) and \({\mathbf{E}}\). The role of \({\mathbf{E}}\) is merely to select the first perturbation value from the vector of perturbations. This development of NRMPC assumes a fixed, preset prediction horizon. Naturally, enlarging the horizon here implies a larger region of attraction, too.

While it is possible to use NRMPC in its original formulation on certain systems, this may not be the case with the given active vibration attenuation example and possibly other under-damped physical systems. The size of the region of attraction is very small for typical prediction horizons, in fact beyond the point of practical use. This is clearly illustrated in Fig. 11.3, where a second order model of the physical system has been used to plot the region of attraction of the NRMPC law for different horizons. The intersection of the augmented ellipsoid with the state space, the target set, is shown as the shaded area. Footnote 1 The projection of the augmented set, which is actually the region of attraction, is shown as ellipse outlines of growing volume. The innermost ellipse shows the projection assuming a 4-step-ahead prediction. The horizon length was then repeatedly doubled up to 32 steps, shown as the outermost ellipse. Even the relatively large 32-step horizon would only allow a maximal initial beam tip deflection of \(\pm0.5\) mm, which is unreasonably small.

In the case of NRMPC, the region of attraction can only approximate the polytopic set created by the exact QPMPC control law, so the volume of the set of stabilizable states will be smaller than that of QPMPC. As demonstrated in Sect. 11.1.1, very long horizons are needed to include the necessary range of deflections in the vibration control problem, and this is also true for the NRMPC controller.

The volume of the region of attraction can be maximized by optimizing the prediction dynamics, as suggested by Cannon and Kouvaritakis in [11] and, in a slightly different approach, by Imsland et al. in [22]. In this method, also introduced in Sect. 8.1.3, the matrices \({\mathbf{T}}\) and \({\mathbf{E}}\) are full and become unknown variables in the offline optimization problem, while the horizon is fixed and equal to the system order. However, this approach may cause numerical problems when calculating the ellipsoids that act as parameters for the online algorithm. This effect has been noted when using under-damped systems, such as the model of the experimental device.

2 Properties of MPMPC for Active Vibration Control

A promising stable and computationally efficient MPC strategy for vibrating systems is the proven and actively researched MPMPC method [5], briefly introduced in Sect. 8.2.1. In MPMPC, the controller is formulated as a set of regions in the state space, each with an associated precomputed affine control law stored in a lookup table [53]. However, the simulation results presented in the previous Sect. 11.1 suggest that the region of attraction necessary for this application may require very lengthy prediction horizons. In MPMPC, the computational burden is transferred to the offline computation of the controller regions. Systems with more than four states and horizons in the range of ten steps therefore generally cannot be recommended for MPMPC. Numerous efforts have been made to limit the size of the online lookup table and to shorten search times [24, 36]. However, the offline computational effort required by the simplification procedures themselves may be prohibitive in some cases.
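
To make the lookup-table idea concrete, the sketch below shows online evaluation by direct (sequential) search. Each region \(\{x : \mathbf{H}x \le \mathbf{K}\}\) carries an affine law \(u = \mathbf{F}x + g\). The region data format and the three-region toy controller (a saturated law \(u = \mathrm{clip}(-x, -1, 1)\) on \(|x| \le 5\)) are hypothetical illustrations, not MPT's data structures. Every region may have to be tested, which is why the search time grows with the number of regions.

```python
from typing import List, Optional, Sequence, Tuple

# One region of a hypothetical explicit controller: {x : H x <= K}
# together with the affine law u = F x + g that is valid inside it.
Region = Tuple[List[List[float]], List[float], List[List[float]], List[float]]


def dot(row: Sequence[float], x: Sequence[float]) -> float:
    return sum(r * xi for r, xi in zip(row, x))


def explicit_mpc(x: Sequence[float], regions: List[Region],
                 tol: float = 1e-9) -> Optional[List[float]]:
    """Direct search: return u from the first region that contains x."""
    for H, K, F, g in regions:
        if all(dot(h, x) <= k + tol for h, k in zip(H, K)):
            return [dot(f, x) + gi for f, gi in zip(F, g)]
    return None  # x lies outside the region of attraction: no control law


# Toy 1-D controller u = clip(-x, -1, 1) on |x| <= 5, split into 3 regions.
REGIONS: List[Region] = [
    ([[1.0], [-1.0]], [-1.0, 5.0], [[0.0]], [1.0]),   # -5 <= x <= -1: u = 1
    ([[1.0], [-1.0]], [1.0, 1.0], [[-1.0]], [0.0]),   # -1 <= x <= 1:  u = -x
    ([[1.0], [-1.0]], [5.0, -1.0], [[0.0]], [-1.0]),  #  1 <= x <= 5:  u = -1
]
```

For example, `explicit_mpc([0.5], REGIONS)` returns `[-0.5]`. A state outside \(|x| \le 5\) returns `None`, mirroring an infeasible initial condition.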

MPMPC is a good controller choice under narrow-band excitation and when the discrepancy between the capabilities of the actuators and the disturbance is small. Nevertheless, one must not forget that lightly damped vibrating systems with weak static actuation are very different. Polóni et al. proposed MPMPC for a vibrating cantilever in [55], without stability guarantees. This work was later extended to include a priori stability guarantees in [65], shedding light on the inconvenient offline properties of MPMPC with lightly damped systems.

It has long been known that the main drawback of the MPMPC approach is its extensive offline computational need [31, 52]. In this section, several simulation trials using the MPMPC approach are introduced to evaluate the offline computational properties of the algorithm. Instead of generic systems with “nice” dynamics, we assume the characteristic dynamics of lightly damped, underactuated vibrating systems. This work is focused on the application of efficient and stable MPC to vibration suppression. Practical questions were therefore analyzed, such as whether the offline calculation time is tractable on current computer hardware. Offline calculation times are a very important issue. Beyond them, this section investigates the number of regions and the controller executable sizes necessary to deploy stable MPMPC on a real system subject to narrow-band excitation.

2.1 MPMPC Computation Time

As implied in Sect. 11.1, covering a broad range of deformations on a flexible structure still requires the same long horizons with the computationally efficient MPMPC formulation as with QPMPC. The MPMPC formulation significantly reduces the online computational time, but long horizons are prohibitive for other reasons. Prediction horizons several hundred steps long make the calculation of the controller intractable, especially with higher order models. Real-life applications eventually necessitate system reconfiguration, and changes in the constraints also call for repeated and extensive controller recomputation.

Simulations have been carried out to determine the properties of MPMPC control using second and fourth order state-space models of the laboratory device. Controller parameters and requirements were identical to the QPMPC case presented in Sect. 11.1. Most importantly, the region of attraction of MPMPC matched the QPMPC set. In other words, the controllers were required to cover the same range and produce the same online response, but with a different method of algorithmic implementation.

Explicit controllers with growing prediction horizons were evaluated using the MPT Toolbox [31–33] on a personal computer conforming to current hardware configuration standards. Footnote 2 The maximal prediction horizon that could be reliably computed for a second order system in the offline procedure was 162 steps. Beyond this horizon, the solver ran into problems, most likely due to memory issues. The offline computational times required to perform various tasks in MPMPC controller evaluation are shown in Fig. 11.4. A real-time implementation requires both the calculation of the controller and its compilation from source code, so the minimal realization time is the sum of the two. Extrapolating from the simulation data, evaluating an explicit controller that allows maximal deflections would take approximately 7 days.
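
The text does not state which model was used for these extrapolations. The sketch below assumes that computation time grows exponentially with the horizon, \(t(n_c) \approx a\, e^{b n_c}\), which is consistent with the exponential growth in the number of regions reported in Sect. 11.2.2. It fits \(a\) and \(b\) by least squares on \(\ln t\). The function names and any data passed to them are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_exponential(horizons: Sequence[float],
                    seconds: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of ln t = ln a + b * n_c, i.e. t(n_c) = a * exp(b * n_c)."""
    ys = [math.log(t) for t in seconds]
    n = len(horizons)
    mx, my = sum(horizons) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in horizons)
    sxy = sum((x - mx) * (y - my) for x, y in zip(horizons, ys))
    b = sxy / sxx
    return math.exp(my - b * mx), b


def extrapolate(a: float, b: float, horizon: float) -> float:
    """Predicted computation time [s] at a horizon beyond the measured range."""
    return a * math.exp(b * horizon)
```

Extrapolating an exponential far outside the measured range amplifies any error in \(b\) by the horizon length, so such estimates indicate orders of magnitude rather than precise durations.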

Figure 11.4 also shows the time necessary to perform two non-essential tasks aimed at complexity reduction in MPMPC. One of them is merging, used to simplify the controller by reducing the number of regions, thereby lowering file size and search times. This merging procedure is implemented in the MPC Toolbox [38] based on the optimal region merging method of Geyer et al. [18]. It is essentially a complexity reduction procedure in which the regions defining the same control law are unified. Extrapolating the simulation results to higher initial deflections, the time to simplify the regions for a maximal allowable deflection of \(\pm30\) mm is estimated at 500 days. In fact, it is well known that optimal region merging performed as a post-processing operation is prohibitive for systems with state dimensions above \(n_x=2\) due to excessive offline computational demands [35, 37].

The other non-essential task is to use a binary search tree instead of direct region searching algorithms [25]. This approach has the advantage of decreasing online search times, although it requires additional memory to store the precompiled binary search tree and data structure. As pointed out previously, the main drawback in this case is the significant offline computational load, not the online performance. The MPT Toolbox utilizes the method of Tøndel et al. [68] to generate the binary search tree. The calculation of this tree proved to be the most computationally intensive task. It was therefore evaluated only up to a horizon of \(n_c=46\) steps ahead, which took over 70 h to complete. Figure 11.4 also illustrates the time necessary to generate this search tree for different horizons and the corresponding maximal deflections. As with merging, extrapolation suggests extreme calculation times to cover the whole operating region.
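
The online side of the binary search tree can be sketched as follows. Each internal node tests one hyperplane \(a^T x \le b\), and each leaf stores the affine law of the region reached. The search therefore visits one node per tree level instead of testing every region. Constructing the tree (the offline part, done in MPT with the method of Tøndel et al.) is not shown. The classes and the toy tree, which encodes the saturated law \(u = \mathrm{clip}(-x, -1, 1)\), are hypothetical illustrations. Unlike a complete implementation, this toy tree does not reject states outside the region of attraction.

```python
from dataclasses import dataclass
from typing import List, Sequence, Union


@dataclass
class Leaf:
    F: List[List[float]]          # affine law u = F x + g valid in this leaf
    g: List[float]


@dataclass
class Node:
    a: List[float]                # separating hyperplane a^T x <= b
    b: float
    left: "Union[Leaf, Node]"     # branch taken when a^T x <= b
    right: "Union[Leaf, Node]"    # branch taken otherwise


def evaluate_tree(tree: Union[Leaf, Node], x: Sequence[float]) -> List[float]:
    """Descend one hyperplane test per level, then apply the leaf's affine law."""
    node = tree
    while isinstance(node, Node):
        s = sum(ai * xi for ai, xi in zip(node.a, x))
        node = node.left if s <= node.b else node.right
    return [sum(f * xi for f, xi in zip(row, x)) + gi
            for row, gi in zip(node.F, node.g)]


# Toy tree for u = clip(-x, -1, 1): test x <= -1 first, then x <= 1.
TREE = Node([1.0], -1.0, Leaf([[0.0]], [1.0]),
            Node([1.0], 1.0, Leaf([[-1.0]], [0.0]), Leaf([[0.0]], [-1.0])))
```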

When a fourth order system has been considered, the multi-parametric calculation of the controller regions failed when the horizon exceeded a mere \(n_c\,{=}\,16\) steps forward. With this relatively short horizon and low model order, the controller computational time combined with the executable compilation time approaches 20 h. An MPMPC controller is clearly intractable for model predictive vibration attenuation applications with broadband excitation.

2.2 MPMPC Regions

The explicitly computed MPC controller will contain a significant number of polytopic regions, which implies issues with the memory requirements of the controller hardware and increased search times. Region simplification (merging) reduces the number of regions and the controller executable size, but also adds to the computational time.

Figure 11.5 relates the control horizon, and thus the maximal allowable tip deflection, to the number of controller regions, which increases at an exponential rate. As illustrated in the figure, the number of regions increases even more rapidly when a fourth order system is used. Merging reduces the number of polytopic controller regions; however, with increasing horizons the computation times become intractable, as illustrated before.

If the controller is to cover the second eigenfrequency, the model order changes to four, and at the same time the sampling rate has to increase to include the higher frequency dynamics. Faster sampling requires even longer horizons, which exposes the drawbacks of MPMPC in this and similar applications.

Simulations performed using the fourth order state-space model of the laboratory device confirmed this. The computation of the controller already fails beyond \(n_c\,{=}\,16\) due to a solver crash attributed to memory issues. Yet this horizon yields a largest allowable deflection of only a little over 1 mm. Allowing \(\pm20\) mm deflections requires a 400-step-ahead horizon, which is clearly intractable under the given conditions.

2.3 MPMPC Controller Size

The quantity of regions has a direct effect on the controller size as well. The size of the file containing the raw controller and the C source code of the search tables is indicated in Fig. 11.6 for a second and fourth order system. When considering a fourth order system with a horizon length of \(n_c\,{=}\,16\) steps, the executable size grows up to 60 MBytes.

3 Issues with NRMPC Invariance

The dual-mode control law assumes that a fixed feedback law will steer the state into equilibrium beyond the prediction horizon. Both the feasibility of the process constraints and, indirectly, the stability of the process are guaranteed by enforcing the process constraints beyond the horizon. Enforcing the process constraints beyond the horizon creates a target set that is by nature invariant, or self-contracting.

In simple terms, invariance of the target set in an MPC strategy with guaranteed stability means that once the system state enters the target set, it cannot leave it again. This assumes that the state is steered into equilibrium without further outside disturbance. Naturally, in a real MPC implementation the state may leave the target set, since disturbances may occur at any point of the control course. The controller strategy therefore never permanently switches to the LQ mode. Provided that the formulation of the MPC strategy and its implementation are correct, a violation of the invariance condition in simulation may indicate numerical errors in the implementation. In the state space, these problems appear as the state trajectory entering and subsequently leaving the target set. In the input sequences, and possibly the outputs, they may materialize as a violation of the system constraints.

Violation of both the invariance condition and the process constraints has been observed in numerous simulations involving the NRMPC algorithm with optimized prediction dynamics. Figure 11.7 shows the evolution of the control outputs for a second order linear time-invariant state-space model of the active vibrating beam. Here too, the constraints were set to the physical limits of the transducers, \(\pm120\) V. Input penalization was set to \({\mathbf{R}} = r = 10^{-4}\), based on simulations involving simple LQ controllers. The state penalty was fixed as \({\mathbf{Q}} = {\mathbf{C}}^T{\mathbf{C}}\). An initial condition emulating the deflection of the beam was set as a disturbance at the beginning of the simulation. No further disturbance was assumed in this test.

To better illustrate the issues with invariance, this NRMPC implementation was slightly atypical. Normally, the algorithm decides between engaging the NRMPC portion or the pure LQ code according to whether the current state at sample \(k\) is part of the target set Footnote 3 or not. Here, however, following the essential principles of invariance, the controller switched permanently to LQ mode once the state entered the target set. This approach assumes that the simulations involve only initial conditions and that no other disturbances are present during the control course. Naturally, this assumption is unacceptable in an experimental implementation, although it serves a clear diagnostic purpose if invariance is questionable.
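
The two switching policies can be contrasted in a few lines. The sketch below is an illustration under assumptions, not the authors' code. The selector and its string labels are hypothetical, and the target-set membership test is assumed to be supplied by the caller as a boolean per sample.

```python
from typing import Callable


def make_mode_selector(latch: bool) -> Callable[[bool], str]:
    """Per-sample choice between the NRMPC routine and the LQ law.

    latch=False: normal operation, LQ whenever x_k lies in the target set.
    latch=True:  diagnostic mode, the first entry switches to LQ for good.
    """
    switched = False

    def select(in_target_set: bool) -> str:
        nonlocal switched
        if in_target_set:
            switched = True
        if in_target_set or (latch and switched):
            return "LQ"
        return "NRMPC"

    return select
```

With the membership sequence inside/outside/inside written as `[False, True, False]`, the diagnostic selector yields `LQ` from the first entry onward. The normal selector returns to `NRMPC` when the state leaves the set. In the diagnostic mode, an LQ output that exceeds the constraints therefore exposes the invariance violation directly.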

The evolution of the outputs in Fig. 11.7 seems normal until a certain point, where the lower process constraint is clearly violated. After this, the output diagram seems ordinary again. However, the LQ controller produced outputs exceeding the preset limits, which suggests serious issues. The constraint violation occurs after the LQ controller is engaged, but for some reason the states do not remain in the target set, where a pure linear-quadratic controller could take care of the rest of the control course.

Figure 11.8 gives a clearer picture of the nature of the problem. Here the state-space is depicted in two dimensions along with the evolution of the state trajectory. The states spiral towards the origin from the initial condition, which is how the oscillations at the output are represented in terms of the state variables. At a certain point, the state trajectory enters the target set, depicted as the gray shaded ellipse in the middle of the figure. There is a problem, though: after entering the target set, the state leaves it again, which is a clear violation of the invariance condition.

This behavior has only been observed when the dynamics optimization principle presented by Cannon and Kouvaritakis in [11] is implemented in the NRMPC offline optimization algorithm. When the original NRMPC formulation is considered, none of these peculiar problems occur. Many signs indicate that the problem is numerical in nature and can be solved by modifying the offline optimization algorithm or the solver settings. The variables of the offline NRMPC optimization contain both extremely large and extremely small numbers, so even simple matrix multiplications are prone to numerical error. The optimization process itself may also cause issues with invariance.
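For an ellipsoidal target set, the invariance condition violated in Figs. 11.7 and 11.8 can be checked numerically. A minimal sketch, assuming the target set is written as \(\{x : x^T P x \le 1\}\) and the LQ-controlled system as \(x_{k+1} = \Phi x_k\) (`P` and `Phi` are placeholder names, not the notation of this work): the set is invariant exactly when \(P - \Phi^T P \Phi\) is positive semidefinite, so its smallest eigenvalue acts as an invariance margin. The check covers invariance only, not constraint satisfaction.

```python
import numpy as np


def invariance_margin(P: np.ndarray, Phi: np.ndarray) -> float:
    """Smallest eigenvalue of P - Phi^T P Phi.

    The ellipsoid {x : x^T P x <= 1} is invariant under x_{k+1} = Phi x_k
    if and only if P - Phi^T P Phi is positive semidefinite, i.e. when the
    returned margin is >= 0. A negative margin (even a tiny one caused by
    round-off) means some states inside the set are mapped outside it.
    """
    M = P - Phi.T @ P @ Phi
    M = 0.5 * (M + M.T)  # symmetrize to remove round-off asymmetry
    return float(np.linalg.eigvalsh(M).min())


if __name__ == "__main__":
    theta = 0.3
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    P = np.eye(2)
    print(invariance_margin(P, 0.99 * rot))  # lightly damped spiral: small positive margin
    print(invariance_margin(P, 1.01 * rot))  # slightly unstable spiral: negative margin
```

Because lightly damped closed loops have margins close to zero, the sign of this quantity is exactly where the mixed large and small magnitudes mentioned above can do damage.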

3.1 Performance Bounds

Optimized prediction dynamics in NRMPC make it possible to recover the maximal region of attraction under any given fixed feedback law, while requiring only a horizon equal to the model order [11, 22]. While this is theoretically possible, the previous example demonstrates the practical limits of the approach.

A partial remedy to invariance violation is to place a bound on the cost function value [11]. Unfortunately, this also directly affects the size of the projection, thus limiting the region of attraction. The performance bound, or cost limit, redefines the invariance condition according to (8.57). Footnote 4 The bound on the predicted cost is set by \(\gamma,\) which is enforced for all initial conditions of the autonomous system in \(E_z.\)

Sacrificing the size of the region of attraction may not be an issue for certain model classes. However, simulations performed using the LTI model of the vibration damping application showed that the range of allowable vibration deflections was severely compromised in the interest of numerical stability. A performance bound low enough to ensure the numerical integrity of the optimization limited the deflection range to under ∼10 mm. This clearly defeats the purpose of optimizing prediction dynamics and makes the practical use of NRMPC in this application questionable.

Using a second order model of the physical system, several tests were performed to approximate the ideal compromise between the size of the augmented ellipsoidal set projection and numerical stability. For a given performance bound \(\gamma,\) these tests varied the initial condition, changing the first element of the state vector from 0 to increasingly negative values according to \(x_0=[x_{11}\quad0]^T.\) For each initial condition, the evolution of the controller outputs was calculated and its maximal value plotted against the given starting state. The tests used the penalties \({\mathbf{R}}=r=\)1E-4 and \({\mathbf{Q}}={\mathbf{C}}^T{\mathbf{C}},\) and the controller output was constrained to \(\overline{u}=-\underline {u}=120\) V.

As visible in Fig. 11.9, the unrestrained cost bound and maximal region of attraction produce erroneous results for certain initial conditions, while for others the maximal controller output stays within the constraints. This approach is a rough estimate and has obvious limitations. It does not imply that a given \(\gamma\) ensures a numerically stable controller for all initial conditions, but only along the given search direction. The resolution of the sweep is a further weak point: an invariance violation could occur for an initial condition lying between two tested values.

Despite these shortcomings, Fig. 11.9 shows that the given controller is numerically adequate below a performance bound of \(\gamma=\)1E5, and suggests that the optimal value might lie somewhere around \(\gamma=\)7E5, which provides a control process that preserves invariance while still maximizing the range of allowable initial conditions. Therefore, there must be a maximal performance bound \(\gamma,\) above which the numerical integrity of the offline process is compromised.

To estimate this level approximately while ensuring a large region of attraction, consider the following algorithm:

Algorithm 11.3

To estimate the maximal level of the performance bound \(\gamma\) at which the offline NRMPC optimization remains numerically viable, perform the following steps:

- Create a prediction model and evaluate the offline NRMPC problem for the current performance bound \(\gamma.\) Determine the bounds of the set defining the region of attraction.

- For a given direction in state-space, choose an initial condition and run a simulation. If no constraint violation is detected, repeat with the next initial condition at the given resolution until the edge of the region of attraction is reached.

- If a constraint violation is detected, decrease \(\gamma\) and start over; otherwise increase \(\gamma.\) Stop when a predefined resolution is reached.
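Algorithm 11.3 can be sketched as a search over \(\gamma\) wrapped around a sweep of initial conditions. This is a minimal sketch under stated assumptions: the "increase or decrease \(\gamma\)" rule is implemented as a bisection on \(\log_{10}\gamma\) between a bound assumed safe and a bound assumed to violate the constraints, and the callbacks `edge_of_roa` and `max_abs_input`, which would wrap the offline NRMPC optimization and a closed-loop simulation, are hypothetical placeholders rather than functions of any toolbox.

```python
import math
from typing import Callable, Sequence

# Hypothetical callbacks (placeholders, not from the original text):
#   edge_of_roa(gamma)      -> distance from the origin to the edge of the region
#                              of attraction along the search direction
#   max_abs_input(gamma, x) -> peak |u_k| of a closed-loop simulation started at x
EdgeFn = Callable[[float], float]
SimFn = Callable[[float, Sequence[float]], float]


def is_gamma_safe(gamma: float, direction: Sequence[float], edge_of_roa: EdgeFn,
                  max_abs_input: SimFn, u_max: float, n_points: int = 50) -> bool:
    """Sweep initial conditions along `direction` up to the edge of the RoA."""
    r_edge = edge_of_roa(gamma)
    for i in range(1, n_points + 1):
        x0 = [r_edge * i / n_points * d for d in direction]
        if max_abs_input(gamma, x0) > u_max:
            return False  # constraint violation: invariance is suspect
    return True


def estimate_max_gamma(gamma_safe: float, gamma_unsafe: float,
                       is_safe: Callable[[float], bool], rel_tol: float = 0.05) -> float:
    """Bisection on log10(gamma) between a safe and an unsafe bound."""
    if not 0.0 < gamma_safe < gamma_unsafe:
        raise ValueError("need 0 < gamma_safe < gamma_unsafe")
    lo, hi = math.log10(gamma_safe), math.log10(gamma_unsafe)
    while hi - lo > math.log10(1.0 + rel_tol):
        mid = 0.5 * (lo + hi)
        if is_safe(10.0 ** mid):
            lo = mid  # no violation: try a larger bound
        else:
            hi = mid  # violation: decrease the bound
    return 10.0 ** lo


if __name__ == "__main__":
    # Toy stand-ins only: violations appear once gamma exceeds 7E5.
    def toy_edge(g: float) -> float:
        return 1e-3 * math.sqrt(g)

    def toy_sim(g: float, x0: Sequence[float]) -> float:
        return 150.0 if g > 7e5 else 100.0

    def safe(g: float) -> bool:
        return is_gamma_safe(g, [-1.0, 0.0], toy_edge, toy_sim, u_max=120.0)

    print(estimate_max_gamma(1e3, 1e8, safe))
```

The bisection is only one way to realize the stopping rule "stop when a predefined resolution is reached"; it inherits the algorithm's weakness that safety is tested along a single direction at a finite resolution.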

In this case, invariance problems were detected with the previously introduced method: by checking for constraint violation during a given simulation run. A more sophisticated technique would be to determine whether the state trajectory leaves the target set after entering it. Despite the approximate nature of the algorithm, a good estimate of the performance bound ensuring the maximal safe region of attraction may be computed.
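The more sophisticated check mentioned above, detecting a trajectory that leaves the target set after having entered it, needs only the recorded states. A minimal sketch, assuming the target set is available as an ellipsoid \(\{x : x^T P x \le 1\}\) with a placeholder matrix `P`, and a small tolerance `tol` chosen by us to absorb round-off:

```python
from typing import Iterable, Optional

import numpy as np


def first_exit_after_entry(states: Iterable[np.ndarray], P: np.ndarray,
                           tol: float = 1e-9) -> Optional[int]:
    """Index of the first sample outside {x : x^T P x <= 1} after the trajectory
    has entered the set, or None if it never leaves once inside (or never enters)."""
    entered = False
    for k, x in enumerate(states):
        x = np.asarray(x, dtype=float)
        inside = float(x @ P @ x) <= 1.0 + tol
        if inside:
            entered = True
        elif entered:
            return k  # invariance violated at sample k
    return None
```

Unlike the constraint-violation test, this flags an invariance failure even when the trajectory happens to stay within the input limits after leaving the set.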

3.2 Solver Precision and Invariance

The violation of the invariance condition experienced throughout the simulation trials of the NRMPC algorithm clearly has a numerical character. This suggests an opportunity to fine-tune the SDP solver parameters in order to increase precision. Although several SDP solvers were considered for the implementation of the offline NRMPC problem in Sect. 10.3.1, none of them except SeDuMi was deemed suitable Footnote 5 for this application.

Some of the default solver parameters in SeDuMi can be redefined [62]. There are three variables controlling numerical tolerance, although their exact role is not documented in the manual and customizing them is not recommended [54]. The desired solver accuracy is set through the pars.eps field of the parameter structure; a smaller value requests higher accuracy. The default accuracy is \(eps=\)1E-9; when this value is reached, the optimization terminates. A smaller value means more precision, although the optimization will take longer. Fortunately, given the typical problem dimensionality in NRMPC, this is not an issue. Setting eps to 0 means that the optimization continues as long as the solver can make progress.

Figures 11.10 and 11.11 show the results of simulations investigating the connection between solver precision settings and the size of the region of attraction. The simulations were performed with the NRMPC algorithm, using a second order LTI model of the laboratory device. The input penalty was maintained at \({\mathbf{R}}=r=\)1E-4 and the state penalty was set to \({\mathbf{Q}}={\mathbf{C}}^T{\mathbf{C}}.\) Inputs were constrained to \(\overline{u}=-\underline {u}=120\) V, which agrees with the physical limits of the piezoelectric transducers. Algorithm 11.3 was used to approximate the maximal possible performance bound and the corresponding volume of the region of attraction before violation of the invariance condition occurs.

Solver precision is plotted against the approximate maximal safe performance bound \(\gamma\) in Fig. 11.10, and the corresponding volume of the region of attraction for the given example and settings is shown in Fig. 11.11. The default precision is indicated in both figures by the vertical line at the 1E-9 mark. Overriding the default tolerance settings and raising the algorithm precision to the maximum obtainable in SeDuMi increased the performance bound \(\gamma\) by more than two orders of magnitude. The volume of the region of attraction grew similarly, by more than two orders of magnitude. In terms of the practical application, the allowable deflection range increased by about an order of magnitude. A maximal tip deflection of \(\pm3\) mm on the laboratory device is hardly exploitable; increasing it to \(\pm30\) mm, however, allows the controller to perform its task in any mechanically viable situation.
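A back-of-the-envelope check of this scaling: if the two-dimensional region of attraction is idealized as a level set \(\{x : x^T P x \le \gamma\}\) of a fixed quadratic form (an assumption; the actual NRMPC region is the projection of an augmented ellipsoid whose shape also changes with the solver settings), its area grows linearly with \(\gamma\), while its extent along any direction grows only with \(\sqrt{\gamma}\). A hundredfold increase in area then corresponds to roughly a tenfold increase in allowable deflection, in line with the change from \(\pm3\) mm to \(\pm30\) mm. The matrix `P` and the two \(\gamma\) values below are arbitrary illustrative choices.

```python
import numpy as np


def ellipse_area(P: np.ndarray, gamma: float) -> float:
    """Area of {x in R^2 : x^T P x <= gamma} = pi * gamma / sqrt(det P)."""
    return float(np.pi * gamma / np.sqrt(np.linalg.det(P)))


def max_first_state(P: np.ndarray, gamma: float) -> float:
    """Largest x_1 in {x : x^T P x <= gamma}, i.e. sqrt(gamma * (P^{-1})_{11})."""
    return float(np.sqrt(gamma * np.linalg.inv(P)[0, 0]))


if __name__ == "__main__":
    P = np.array([[4.0, 1.0], [1.0, 2.0]])  # arbitrary illustrative shape
    g_default, g_tuned = 1e5, 1e7  # two orders of magnitude apart
    print(ellipse_area(P, g_tuned) / ellipse_area(P, g_default))  # area ratio, about 100
    print(max_first_state(P, g_tuned) / max_first_state(P, g_default))  # extent ratio, about 10
```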

These simulations and findings refer to the second order model of the vibrating beam. We have to note that for other examples, especially higher order models, the improvement was not as significant: to preserve invariance and prevent numerical problems, the size of the initial stabilizable set had to be sacrificed significantly.

4 Issues with NRMPC Optimality

Simulations performed using a second order mathematical model of the vibrating structure showed the viability of NRMPC for the model predictive vibration control of lightly damped structures. The optimality gap with respect to QPMPC, experienced both in simulations and experiments, proved small enough to produce an indistinguishable vibratory response. In fact, the Monte Carlo simulations described by Kouvaritakis et al. in [29] showed no more than a \(2\%\) increase in closed-loop cost compared to QPMPC. The paper reports that for randomly generated second and fifth order examples with the NRMPC extension implemented, the closed-loop cost remained within \(1\%\) of the optimum in \(97\%\) of the cases.

Unfortunately, the lightly damped example used in this work proves to be a more difficult case for NRMPC. This is especially evident if the model order is increased above \(n_x=2.\) Although from a practical engineering standpoint prediction dynamics and a controller based on a second order model are satisfactory, one might argue that a more complex prediction model could also explicitly include higher order dynamics. This is valid in particular for controllers covering more than one vibration mode and broadband excitation.

A fourth order model considered for the vibrating beam could explicitly include the first and second mode dynamics, thus covering a bandwidth of approximately 0–80 Hz. Such a model has been prepared using the experimental identification method described in Sect. 5.2. Simulations examining optimality were performed with this model at 250 Hz sampling; it is characterized by the following linear time-invariant state-space system:

and with \({\mathbf{D}}=0.\)

The problems caused by the suboptimality of the NRMPC method are clearly illustrated in Fig. 11.12, where the ENRMPC and NRMPC controller outputs \(u_k\) are plotted in the time domain and compared to the truly optimal QPMPC controlled system. Here an initial condition \(x_0=\left[0.75\ 0\ 0\ 0\right]^T\) has been considered, which is equivalent to an initial deflection of 1.5 mm at the beam tip. Every effort was made to create similar circumstances for both controllers. The prediction horizon of the QPMPC controller was set to \(n_c=36\) steps, the smallest possible for the considered initial condition, which allowed the constraints to be engaged without excessively lengthy computations. The input penalty was set to \({\mathbf{R}}=r=\)1E-4, and this time the state penalty \({\mathbf{Q}}\) was the identity matrix of conforming dimensions for both the QPMPC and NRMPC controllers. Process constraints were engaged only on the inputs, restricting them to \(\pm120\) V. Simulations showed no significant improvement when using the extension with the NRMPC controller; neither did optimized prediction dynamics greatly affect the controller outputs.

The QPMPC controller output exhibits the expected switching behavior, while the NRMPC outputs resemble a smoother sinusoidal curve. More importantly, the NRMPC controller outputs stay far from the constraints and thus do not exploit the full potential of the actuators. This is also noticeable in the damping performance of the controller, although the tip vibrations are not shown here. Using the extension (ENRMPC) suggested by Kouvaritakis et al. in [29] does not provide a satisfactory improvement either. This simulation did not make use of optimized prediction dynamics; however, they would not significantly affect the outcome. Due to the minimal difference between the ENRMPC and NRMPC controller outputs, the following state trajectories do not differentiate between them. The trajectory marked as NRMPC uses the extension, thus presenting the slightly better case.

Figure 11.13 illustrates the projection of the control trajectory in state-space onto the two-dimensional plane defined by \(x_1,\) \(x_2\) and \(x_3=0,\) \(x_4=0.\) Here the cut of the NRMPC target set is shown as a shaded ellipse, and the cut of the QPMPC target set as the larger polyhedral region. The intersection of the constraint hyperplanes with this coordinate plane is also depicted. As suggested by the evolution of the controller outputs in Fig. 11.12, the QPMPC control trajectory spirals toward the desired equilibrium at a much faster pace than NRMPC. Furthermore, it is important to note that the NRMPC target set is significantly smaller than that of QPMPC. In addition, the NRMPC set does not extend close enough to the region bounded by the half-spaces defined by the constraints.

Given the difficulty of visualizing state behavior in a multidimensional system, projections of the trajectories and cuts of the target sets defined by the remaining state coordinates are also depicted in Fig. 11.14. The volume difference between the QPMPC and NRMPC target sets is significant in each view. It is worth noting that states \(x_1\) and \(x_2\) are the most dominant in the trajectory, while the remaining components play a less vital role in its overall outcome. This also indicates the dominance of the first vibration mode in the overall dynamic response.

4.1 Penalization and Optimality

The majority of simulations and experiments in this work assume the same input penalty \({\mathbf{R}}.\) In most cases this tuning parameter is chosen as \({\mathbf{R}}=r=\)1E-4, based on the behavior of the physical system model under an LQ controller. A simple simulation Footnote 6 has been designed to determine the ideal input penalization value \({\mathbf{R}},\) using a fourth order model with 250 Hz sampling. The state was penalized by the identity matrix of conforming dimension, and the initial condition was set to the equivalent of a 1.5 mm deflection at the beam tip. The physical limits of the piezoelectric transducers were kept in mind when determining a suitable \({\mathbf{R}}.\)

Figure 11.15 shows the evolution of controller outputs using LQ controllers with different input penalization values. Fixing \({\mathbf{R}}\) at very low values, for example \({\mathbf{R}}=r=\)1E-7, produces a very aggressive controller, with output voltages exceeding 2500 V. For clarity, this is not shown in full; only the range of \(\pm300\) V is indicated. Setting \({\mathbf{R}}=r=\)1E-4 exceeds the constraints, but with a saturated controller it produces a reasonably lively output. On the other hand, an input penalty of \({\mathbf{R}}=r=\)7E-4 does not even reach the constraints, resulting in a conservative and slow controller.

Taking the previous simulation and these considerations into account, \({\mathbf{R}}=r=\)1E-4 appears to be an ideal setting for the MPC control of this particular system. With a generic constrained MPC controller, the same simulation run would hit the upper, the lower and again the upper constraint, then avoid the constraints for the rest of the run. This implies an ideal setting that does not sacrifice performance yet maintains a reasonable level of aggressiveness.
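The qualitative trade-off behind Fig. 11.15 can be reproduced with a standard discrete-time LQ design. The sketch below is illustrative only: it uses a hypothetical single-mode oscillator (8 Hz, 1% damping, unit input gain, 100 Hz sampling) instead of the identified fourth order beam model, keeps the identity state penalty used in the text, and relies on SciPy's `solve_discrete_are` and `solve_discrete_lyapunov`. For each penalty \(r\) it returns the infinite-horizon input energy \(\sum_k u_k^2,\) the peak \(|u_k|\) of a simulated run, and the closed-loop spectral radius.

```python
import numpy as np
from scipy.linalg import expm, solve_discrete_are, solve_discrete_lyapunov

# Hypothetical single-mode model (NOT the identified beam model of the text):
# states x = [deflection, velocity], continuous dynamics x' = Ac x + Bc u.
F_N, ZETA, GAIN, TS = 8.0, 0.01, 1.0, 0.01  # Hz, -, input gain, s
W_N = 2.0 * np.pi * F_N
Ac = np.array([[0.0, 1.0], [-W_N**2, -2.0 * ZETA * W_N]])
Bc = np.array([[0.0], [GAIN]])

# Exact zero-order-hold discretization via the augmented matrix exponential.
Md = expm(np.block([[Ac, Bc], [np.zeros((1, 3))]]) * TS)
A, B = Md[:2, :2], Md[:2, 2:]
Q = np.eye(2)  # identity state penalty, as in the text


def lq_tradeoff(r: float, x0: np.ndarray, n_steps: int = 1000):
    """Return (input energy, peak |u|, closed-loop spectral radius) for penalty r."""
    R = np.array([[r]])
    P = solve_discrete_are(A, B, Q, R)
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)  # u_k = -K x_k
    Acl = A - B @ K
    # sum_k u_k^2 = x0^T W x0 with W = Acl^T W Acl + K^T K (discrete Lyapunov eq.)
    W = solve_discrete_lyapunov(Acl.T, K.T @ K)
    energy = float(x0 @ W @ x0)
    x, peak = x0.copy(), 0.0
    for _ in range(n_steps):
        peak = max(peak, float(abs((K @ x)[0])))
        x = Acl @ x
    rho = float(np.max(np.abs(np.linalg.eigvals(Acl))))
    return energy, peak, rho


if __name__ == "__main__":
    x0 = np.array([1.0, 0.0])  # unit initial deflection (arbitrary units)
    for r in (1e-7, 1e-4, 7e-4):
        print(r, lq_tradeoff(r, x0))
```

The input energy is guaranteed not to increase with \(r\) (a general property of weighted-sum optimal control), whereas the absolute voltage levels depend entirely on the hypothetical model and should not be compared with the figure.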

Controller output penalization has many other implications for both constrained and unconstrained MPC, although its most visible effect is still on performance. Among others, if constrained stable MPC is considered, the penalty settings directly influence the volumes of the region of attraction and of the target set. In the case of NRMPC, these volumes are determined by the volumes of the multi-dimensional augmented ellipsoid projections and intersections with the original state-space.

To illustrate this, Fig. 11.16 shows the relationship between the input penalty \({\mathbf{R}}\) and the volumes of the region of attraction and the target set. The most noteworthy feature of the diagram is that the two volumes converge to the same value once a certain penalization level is exceeded. Beyond this point, the size of the region of attraction is limited to the size of the target set, and the NRMPC controller becomes a simple LQ controller.
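The convergence of the two volumes follows from elementary ellipsoid geometry. A minimal sketch, assuming the augmented invariant ellipsoid is written as \(E_z=\{z : z^T Q_z^{-1} z \le 1\}\) with \(z=[x^T\ c^T]^T\) (the block labels `Q11`, `Q12`, `Q22` are our own): the projection onto \(x\)-space is \(\{x : x^T Q_{11}^{-1} x \le 1\},\) while the intersection with \(c=0\) is \(\{x : x^T (Q_{11}-Q_{12}Q_{22}^{-1}Q_{21})^{-1} x \le 1\}.\) The two volumes coincide exactly when the coupling block \(Q_{12}\) vanishes, that is, when the perturbations \(c\) no longer enlarge the region beyond the LQ target set.

```python
import math

import numpy as np


def ellipsoid_volume(M: np.ndarray) -> float:
    """Volume of {x : x^T M x <= 1} for a symmetric positive definite M."""
    n = M.shape[0]
    unit_ball = math.pi ** (n / 2) / math.gamma(n / 2 + 1)
    return unit_ball / math.sqrt(np.linalg.det(M))


def roa_and_target_volumes(Qz: np.ndarray, nx: int):
    """Volumes of the projection of E_z onto x (region of attraction) and of
    its intersection with c = 0 (target set), for E_z = {z : z^T Qz^{-1} z <= 1}."""
    Q11 = Qz[:nx, :nx]
    Qinv11 = np.linalg.inv(Qz)[:nx, :nx]  # equals (Q11 - Q12 Q22^{-1} Q21)^{-1}
    vol_projection = ellipsoid_volume(np.linalg.inv(Q11))
    vol_intersection = ellipsoid_volume(Qinv11)
    return vol_projection, vol_intersection
```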

As experienced during numerous trials with the NRMPC controller, the level of the input penalty \({\mathbf{R}}\) has a surprisingly large effect on the optimality and general usability of NRMPC with a fourth order model of the vibrating system. In the following trials the initial conditions were identical in all cases, emulating a tip deflection of 1.5 mm. To minimize the chance of numerical problems, the performance bound was set to \(\gamma=\)0.5E5 in all cases. States were penalized by the identity matrix, and the actuator limits were set to the typical \(\overline{u}=-\underline {u}=\)120 V.

Figure 11.17 shows the evolution of the inputs \(u_k\) for simulations using the NRMPC algorithm with different settings of \({\mathbf{R}}.\) This simulation uses a fourth order prediction model to demonstrate the connection between the suboptimality of the controller and penalization. For the fourth order model of the physical system, the seemingly ideal \({\mathbf{R}}=r=\)1E-4 produces particularly suboptimal outputs: the constraints are not even invoked, and the controller does not make use of the full potential of the actuators. Decreasing \({\mathbf{R}}\), however, brings an improvement, and at a certain point even the constraints are invoked.

Contrary to the earlier simulation results with LQ controllers, the results in Fig. 11.17 imply that much smaller penalization values are required for higher order examples. The penalization \({\mathbf{R}}=r=\)1E-4 produces a remarkably abnormal output, while the seemingly overly aggressive small penalization at least makes use of the full potential of the transducers. However, the built-in suboptimality of NRMPC remains significant; further lowering \({\mathbf{R}}\) does not significantly decrease the closed-loop cost.

5 Alternate NRMPC Extension

The extension of the NRMPC algorithm introduced in Sect. 8.1.2.1, building on the optimality improvement of Kouvaritakis et al. in [29], took the concept further by using several-steps-ahead extrapolations of the augmented state in the hope of a performance improvement. The aim of the NRMPC extension proposed by Li et al. in [41] is similar: to improve the optimality and thus the performance of the algorithm. Instead of iterating the augmented state \({\mathbf{z}}_{k+1}=\varPsi {\mathbf{z}}_k\) several steps forward and constraining it to the invariant set \(E_z,\) Li et al. chose a one-step-forward iteration of the state \({\mathbf{x}}_k,\) which was then constrained to the \(x\)-subspace projection \(E_{xz}\) of the invariant ellipsoid \(E_z\).
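The two membership tests contrasted above can be written in a few lines. A sketch under the assumption \(E_z=\{z : z^T W_z z \le 1\}\) with \(W_z=Q_z^{-1}\) (`Psi`, `Wz` and the function names are placeholders): the extension of [29] and its several-steps-ahead variants test \(\varPsi^i z_k \in E_z,\) whereas the approach of Li et al. tests a state against the \(x\)-subspace projection \(E_{xz},\) which is generally larger than the \(c=0\) cut of \(E_z.\) How these tests enter the online optimization is not reproduced here.

```python
import numpy as np


def in_ellipsoid(v: np.ndarray, W: np.ndarray, tol: float = 0.0) -> bool:
    """True if v^T W v <= 1 (+ tol)."""
    return float(v @ W @ v) <= 1.0 + tol


def member_after_i_steps(z: np.ndarray, Psi: np.ndarray, Wz: np.ndarray, i: int) -> bool:
    """Check z_{k+i} = Psi^i z_k in E_z (iteration of the augmented state)."""
    z_i = np.linalg.matrix_power(Psi, i) @ z
    return in_ellipsoid(z_i, Wz)


def member_of_projection(x: np.ndarray, Wz: np.ndarray) -> bool:
    """Check x in the x-subspace projection E_xz of E_z = {z : z^T Wz z <= 1}."""
    nx = x.shape[0]
    Q11 = np.linalg.inv(Wz)[:nx, :nx]  # leading block of Q_z = Wz^{-1}
    return in_ellipsoid(x, np.linalg.inv(Q11))
```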

Simulations have been performed to assess the optimality of the NRMPC algorithm using the modified extension, which applies several-steps-forward iterations of the augmented state \({\mathbf{z}}_k\) constrained to the augmented invariant ellipsoidal set \(E_z.\) A simple second order state-space model has been assumed for each simulation, having the following structure [10, 28]:

States were penalized by the matrix \({\mathbf{Q}},\) set equal to the identity matrix of conforming dimensions, and controller outputs by \({\mathbf{R}}=r=\)1E-4. The prediction horizon of the QPMPC-based controller was set to \(n_c=4.\) The initial condition \(x_0=[-0.5\quad0]^T\) was located on the boundary of the region of attraction. For NRMPC with fixed prediction matrices, the same requirement calls for a horizon of \(n_c=25\) steps. State constraints were not considered, and controller outputs were limited to \(|u|\leq1.\) To make sure that the invariance condition is not violated due to numerical difficulties, the performance bound was set to \(\gamma=\)1E5.

Figure 11.18 shows the evolution of controller outputs for different versions of the NRMPC controller compared to the truly optimal QPMPC. All simulations shown here use algorithms with optimized prediction dynamics. Understandably, QPMPC produces the best result, along with the smallest closed-loop cost. The worst evolution of outputs is obtained with the original NRMPC code, since the constraints are not even reached. The simulation marked as \({\it NRMPC}_{k+1}\) is in fact an implementation of ENRMPC, the original extension introduced by Kouvaritakis et al. in [29]. It provides an improvement relative to NRMPC without the extension, but there is still room to approach optimal QPMPC more closely.

The remaining simulations indicated in Fig. 11.18 implement extensions to the NRMPC algorithm where membership of the augmented state \({\mathbf{z}}\) is assumed not at the next step \((k+1),\) but at steps \((k+2)\) and \((k+3).\) The improvement in the evolution of controller outputs is visually distinguishable, and \((k+3)\) produces nearly the same output as the optimal QPMPC.

To quantify the level of suboptimality relative to QPMPC better, closed-loop costs are listed in Table 11.1. The costs for the different adaptations of NRMPC were calculated using the previously introduced example under the same conditions. The truly optimal closed-loop cost obtained with QPMPC is \(J_{\it QPMPC}=97.00;\) the NRMPC costs should ideally be as close to this as possible. NRMPC algorithms were evaluated with and without optimized dynamics, where OD marks the use of optimized prediction dynamics at the offline stage. The results imply that optimizing prediction dynamics not only enlarges the region of attraction but also improves optimality. The original formulation of NRMPC [28] produced the worst results. With the extension and its several-steps-ahead variations, the costs gradually improve. In fact, the \((k+4)\) steps extension achieves the same cost as the QPMPC controller up to numerical precision.

Scalers \(\mu\) resulting from the different membership and feasibility conditions are plotted for the previously discussed example in Fig. 11.19. The original extension calculates scalers from the membership condition at \((k+1)\) and from the feasibility of constraints at step \((k),\) and for each step the higher of the two scaler values is selected. Much less conservative scalers \(\mu\) are computed for the several-steps-ahead alternative extensions. Membership at the intermediate steps is not considered; however, the algorithm has to check the feasibility of constraints for each step ahead. Thus, for an extension assuming membership at \((k+i),\) scalers are compared for membership and for feasibility from \((k)\) up to \((k+i-1),\) and the highest value is used to scale the perturbation vector.

The optimal QPMPC controller output has been compared to the original and alternate NRMPC extensions for a vibration suppression example. Higher order models provide better conditions for assessing optimality differences, so a fourth order state-space model of the vibrating beam was considered in each trial. Figure 11.20 shows the results of these simulations. According to the findings presented in Sect. 11.4, a very low input penalty was used for the NRMPC controllers. The controller output was constrained to \(\pm120\) V.

As the figure shows, the alternate extensions of the NRMPC algorithm do not provide a significant improvement compared to the original one. The two- and three-steps-ahead alternate extensions \((k+2)\) and \((k+3)\) produce slightly higher output voltages and are theoretically better than their original counterpart, but the improvement is visually indistinguishable. For this reason, the system output, in this case the beam tip vibration, is not presented here. Closed-loop costs quantify the minor improvements in process optimality: the strictly optimal QPMPC cost is \(J=133.48,\) the original extended NRMPC produces a cost of \(J_{(k+1)}=195.76,\) the two-steps-ahead modification \(J_{(k+2)}=191.09,\) while three steps lower the cost to \(J_{(k+3)}=187.66.\) The two-steps-ahead extension improves optimality by only \(2.4\%\) compared to the original extension and still remains far from ideal. Even the three-steps-ahead alternate extension produces a closed-loop cost about 40% higher than QPMPC. Footnote 7
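The percentages quoted above follow directly from the reported closed-loop costs; a quick check using only the numbers given in the text:

```python
# Closed-loop costs reported for the fourth order beam example.
J_QPMPC = 133.48
costs = {"k+1": 195.76, "k+2": 191.09, "k+3": 187.66}


def relative_improvement(reference: float, new: float) -> float:
    """Percent decrease of `new` with respect to `reference`."""
    return 100.0 * (reference - new) / reference


def suboptimality(optimal: float, cost: float) -> float:
    """Percent excess of `cost` over the optimal cost."""
    return 100.0 * (cost - optimal) / optimal


print(f"{relative_improvement(costs['k+1'], costs['k+2']):.1f}")  # 2.4
print(f"{suboptimality(J_QPMPC, costs['k+3']):.1f}")  # 40.6
for name, J in costs.items():
    print(name, f"{suboptimality(J_QPMPC, J):.1f} % above QPMPC")
```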

Considering these simulation results, we may state that for the typical models used in active vibration suppression, alternative extensions to the original NRMPC problem do not present a viable method of optimality enhancement. The gain in optimality does not justify the questionable invariance properties and the likely issues connected with model uncertainty. Although the decrease in speed is slight and the algorithm remains computationally efficient, several-steps-ahead extensions do require more computational time because of the additional feasibility conditions.

Alternate NRMPC extensions may not be suitable for improving optimality in vibration suppression; however, it is possible to imagine models and applications where even a small optimality increase is advantageous. The simulation results presented by Li et al. in [41] suggest a similar conclusion from the viewpoint of the AVC of lightly damped systems: the optimality improvement is mainly significant for models where the effect of actuation is large, that is, those with large input penalties \({\mathbf{R}}.\) Although the extension of Li et al. brings the optimality of the modified NRMPC algorithm to within one percent of QP-based MPC for the majority of randomly generated models, these results are valid for large \({\mathbf{R}}.\) Neither the iterated augmented state \(\varPsi z_k\) nor the iterated state-based algorithm of Li et al. was subsequently considered for the active vibration attenuation trial on the demonstrator hardware.

6 Comparison of QPMPC, MPMPC and NRMPC in Simulation

Beam tip vibration suppression performance with various MPC strategies has been compared in simulation and contrasted with the free response. The strategies were QPMPC, optimal MPMPC and NRMPC, all with a priori stability guarantees. For this simulation study, an initial deflection of 5 mm was considered to allow a tractable computation of the MPMPC controller structure and shorter QPMPC simulation times. This simulation pointed out latent issues with the implementation of QPMPC and MPMPC on the real system, while validating the functionality of the NRMPC algorithm. All necessary steps were taken to create conditions as identical as possible for all three controllers.

A second order model of the vibrating system, sampled at 100 Hz, was assumed to generate predictions; this sampling sufficiently covers the first vibration mode and exceeds the requirements of Shannon’s theorem [23]. All simulations started from the same initial condition \(x_0=[-7\quad-1.6073]^T,\) emulating a 5 mm deflection at the beam tip. The system response was simulated with the same state-space model, thus not considering model uncertainty. To avoid numerical difficulties, the performance of the NRMPC controller was bounded by \(\gamma=\)1E5, which affects the size of the region of attraction. Inputs were penalized by \({\mathbf{R}}\,{=}\,r\,{=}\,\)1E-4, while states used the identity matrix for \({\mathbf{Q}}.\) System constraints were set to the typical physical limit of the transducers, a maximal \(\pm120\) V. No other process constraints were engaged. Including the given initial condition in the region of attraction of the QP-based stable MPC required a prediction horizon of at least \(n_c=40\) steps. The same horizon was also required by the MPMPC controller, implemented as described in Chap. 10.2.

As expected, and as illustrated by the example in Fig. 11.21, the best response is ensured by QPMPC control. The controller drives the actuators hard into saturation, as assumed from the physical properties of the system. QPMPC provides a strictly optimal control run whose closed-loop cost serves as the ideal lowest value for comparison with the suboptimal NRMPC. The QPMPC controller assumes the same quadratic cost function as the other controllers and safeguards constraint feasibility using a constraint checking horizon. Despite the best possible response, the lengthy simulation times foreshadow issues with the real-time implementation.

With MPMPC control, the tip vibration and the controller output are nearly indistinguishable from those of QPMPC. Essentially both controllers produce the same output, since MPMPC differs from QPMPC only in the method of implementation. The response is very favorable, driving the actuators to saturation as expected. Online simulation times are surprisingly short; however, the offline precomputation of the controller was somewhat lengthy even for the limited region of attraction. Details of this example are shown in Fig. 11.22, which illustrates the input and output responses between samples 10 and 30 (0.1–0.3 s).

Due to the built-in suboptimality of its formulation, NRMPC performs slightly worse than the former two. This is expected behavior and is visible in both figures. The increase in cost function value for MPMPC is insignificant and only due to numerical effects, while NRMPC shows a quite significant cost increase of \(\sim\!\!15\%\) compared to both QPMPC and MPMPC. As discussed in Sect. 11.4, higher order models perform worse, and the optimality difference can reach \(40\%\) even with the NRMPC extension Footnote 8 implemented and enabled.

Although there are some minor differences in performance, all the investigated MPC methods decrease the settling time of the beam into equilibrium significantly, ultimately improving the natural damping properties of the structure. The simulation results also suggest that the piezoceramic actuators, which contribute only very modest deflections in the static mode, considerably increase the natural damping near resonance.

Notes

- 1.

Simulations show that the intersection (target set) size shrinks with increasing prediction horizon, although in this case at a visually indistinguishable rate, which is why it is not shown in the figure.

- 2.

AMD Athlon X2 DualCore 4400+ @ 2.00 GHz, 2.93 GB of RAM.

- 3.

Coincident with the intersection of the augmented ellipsoid with the original state-space.

- 4.

For a detailed mathematical description, please refer to [11] or to the relevant section of this work, Sect. 8.1.3.

- 5.

See B.3.3 for details.

- 6.

See Sect. 12.1 for an experiment with different input penalty values \({\mathbf{R}}.\)

- 7.

This is quite a significant suboptimality, especially since [29] states that for randomly generated examples the error never rose above 2% and remained under 1% for 97% of the examples.

- 8.

See Sect. 8.1.2 for more details.

References

Amer Y, Bauomy H (2009) Vibration reduction in a 2DOF twin-tail system to parametric excitations. Commun Nonlinear Sci Numer Simul 14(2):560–573. doi:10.1016/j.cnsns.2007.10.005, http://www.sciencedirect.com/science/article/B6X3D-4PYP723-2/2/b9d5375168fadb0b4e67857e92948bfc

Bandopadhya D, Bhogadi D, Bhattacharya B, Dutta A (2006) Active vibration suppression of a flexible link using ionic polymer metal composite. In: 2006 IEEE conference on robotics, automation and mechatronics, pp 1–6. doi:10.1109/RAMECH.2006.252638

Baocang D (2009) Modern predictive control, 1st edn. Chapman and Hall / CRC, Boca Raton

Bartlett P, Eaton S, Gore J, Metheringham W, Jenner A (2001) High-power, low frequency magnetostrictive actuation for anti-vibration applications. Sens Actuators A 91(1–2):133–136. (Third European conference on magnetic sensors & actuators). doi:10.1016/S0924-4247(01)00475-7, http://www.sciencedirect.com/science/article/B6THG-4313YT1-14/2/851ac15043a568a17313eef3042d685f

Bemporad A, Morari M, Dua V, Pistikopoulos EN (2002) The explicit linear quadratic regulator for constrained systems. Automatica 38(1):3–20. doi:10.1016/S0005-1098(01)00174-1, http://www.sciencedirect.com/science/article/B6V21-44B8B5J-2/2/2a3176155886f92d43afdf1dccd128a6

Benaroya H, Nagurka ML (2010) Mechanical vibration: analysis, uncertainties and control, 3rd edn. CRC Press, Taylor & Francis Group, Boca Raton

Bittanti S, Cuzzola FA (2002) Periodic active control of vibrations in helicopters: a gain-scheduled multi-objective approach. Control Eng Pract 10(10):1043–1057. doi:10.1016/S0967-0661(02)00052-7, http://www.sciencedirect.com/science/article/B6V2H-45KSPJJ-3/2/9647861ce849d131c7d4b90cdb964751

Boscariol P, Gasparetto A, Zanotto V (2010) Model predictive control of a flexible links mechanism. J Intell Rob Syst 58:125–147. doi:10.1007/s10846-009-9347-5

Braghin F, Cinquemani S, Resta F (2010) A model of magnetostrictive actuators for active vibration control. Sens Actuators A (in press). doi:10.1016/j.sna.2010.10.019, http://www.sciencedirect.com/science/article/B6THG-51F25N5-4/2/f5cf46980d38877c74a3c4d34fbd894d

Cannon M (2005) Model predictive control, lecture notes. Michaelmas Term 2005 (4 Lectures), Course code 4ME44. University of Oxford, Oxford

Cannon M, Kouvaritakis B (2005) Optimizing prediction dynamics for robust MPC. IEEE Trans Autom Control 50(11):1892–1897. doi:10.1109/TAC.2005.858679

Chen H, Allgöwer F (1998) A quasi-infinite horizon nonlinear model predictive control scheme with guaranteed stability. Automatica 34(10):1205–1217

Cychowski M, Szabat K (2010) Efficient real-time model predictive control of the drive system with elastic transmission. IET Control Theory Appl 4(1):37–49. doi:10.1049/iet-cta.2008.0358

Eissa M, Bauomy H, Amer Y (2007) Active control of an aircraft tail subject to harmonic excitation. Acta Mech Sin 23:451–462. doi:10.1007/s10409-007-0077-2

El-Badawy AA, Nayfeh AH (2001) Control of a directly excited structural dynamic model of an F-15 tail section. J Franklin Inst 338(2–3):133–147. doi:10.1016/S0016-0032(00)00075-2, http://www.sciencedirect.com/science/article/B6V04-42HNMDV-3/2/e3bf6f797834c8e8638324be88fb78f7

Eure KW (1998) Adaptive predictive feedback techniques for vibration control. Doctoral dissertation, Virginia Polytechnic Institute and State University, Blacksburg

Fuller CR, Elliott SJ, Nelson PA (1996) Active control of vibration, 1st edn. Academic Press, San Francisco

Geyer T, Torrisi FD, Morari M (2008) Optimal complexity reduction of polyhedral piecewise affine systems. Automatica 44(7):1728–1740. doi:10.1016/j.automatica.2007.11.027, http://www.sciencedirect.com/science/article/pii/S0005109807004906

Hassan M, Dubay R, Li C, Wang R (2007) Active vibration control of a flexible one-link manipulator using a multivariable predictive controller. Mechatronics 17(1):311–323

Hu Q (2009) A composite control scheme for attitude maneuvering and elastic mode stabilization of flexible spacecraft with measurable output feedback. Aerosp Sci Technol 13(2–3):81–91. doi:10.1016/j.ast.2007.06.007, http://www.sciencedirect.com/science/article/B6VK2-4P96269-2/2/5fbc47249fdd3f1963c5ba856f071c55

Huang K, Yu F, Zhang Y (2010) Model predictive controller design for a developed electromagnetic suspension actuator based on experimental data. In: 2010 WASE international conference on information engineering (ICIE), vol 4, pp 152–156. doi:10.1109/ICIE.2010.327

Imsland L, Bar N, Foss BA (2005) More efficient predictive control. Automatica 41(8):1395–1403. doi:10.1016/j.automatica.2005.03.010, http://www.sciencedirect.com/science/article/B6V21-4G7NT35-1/2/52a9590bfe1ccc2a9561165c3fbdf872

Inman DJ (2007) Engineering vibrations, 3rd edn. Pearson International Education (Prentice Hall), Upper Saddle River

Johansen T, Grancharova A (2003) Approximate explicit constrained linear model predictive control via orthogonal search tree. IEEE Trans Autom Control 48(5):810–815

Johansen TA, Jackson W, Schreiber R, Tøndel P (2007) Hardware synthesis of explicit model predictive controllers. IEEE Trans Control Syst Technol 15(1):191–197

Kang B, Mills JK (2005) Vibration control of a planar parallel manipulator using piezoelectric actuators. J Intell Rob Syst 42:51–70. doi:10.1007/s10846-004-3028-1

Kok J, van Heck J, Huisman R, Muijderman J, Veldpaus F (1997) Active and semi-active control of suspension systems for commercial vehicles based on preview. In: Proceedings of the 1997 American control conference, vol 5, pp 2992–2996. doi:10.1109/ACC.1997.612006

Kouvaritakis B, Rossiter J, Schuurmans J (2000) Efficient robust predictive control. IEEE Trans Autom Control 45(8):1545–1549. doi:10.1109/9.871769

Kouvaritakis B, Cannon M, Rossiter J (2002) Who needs QP for linear MPC anyway? Automatica 38:879–884. doi:10.1016/S0005-1098(01)00263-1, http://www.sciencedirect.com/science/article/pii/S0005109801002631

Krishen K (2009) Space applications for ionic polymer-metal composite sensors, actuators, and artificial muscles. Acta Astronaut 64(11–12):1160–1166. doi:10.1016/j.actaastro.2009.01.008, http://www.sciencedirect.com/science/article/B6V1N-4VM2K65-3/2/f8b0b2d64f274154a5eb59da52fbf524

Kvasnica M (2009) Real-time model predictive control via multi-parametric programming: theory and tools, 1st edn. VDM Verlag, Saarbrücken

Kvasnica M (2011) Multi-parametric toolbox (MPT). In: Selected topics on constrained and nonlinear control, STU Bratislava–NTNU Trondheim, pp 101–170

Kvasnica M, Grieder P, Baotić M (2004) Multi-parametric toolbox (MPT). http://control.ee.ethz.ch/

Kvasnica M, Grieder P, Baotić M, Morari M (2004) Multi-parametric toolbox (MPT). In: Alur R, Pappas GJ (eds) Hybrid systems: computation and control, Lecture notes in computer science, vol 2993, Springer, Berlin / Heidelberg, pp 121–124

Kvasnica M, Grieder P, Baotić M, Christophersen FJ (2006) Multi-parametric toolbox (MPT). Extended documentation, Zürich. http://control.ee.ethz.ch/~mpt/docs/

Kvasnica M, Christophersen FJ, Herceg M, Fikar M (2008) Polynomial approximation of closed-form MPC for piecewise affine systems. In: Proceedings of the 17th world congress of the international federation of automatic control, Seoul, pp 3877–3882

Kvasnica M, Fikar M, Čirka L’, Herceg M (2011) Complexity reduction in explicit model predictive control. In: Selected topics on constrained and nonlinear control, STU Bratislava–NTNU Trondheim, Bratislava, pp 241–288

Kvasnica M, Rauová I, Fikar M (2011) Real-time implementation of model predictive control using automatic code generation. In: Selected topics on constrained and nonlinear control. Preprints, STU Bratislava–NTNU Trondheim, pp 311–316

Kwak MK, Heo S (2007) Active vibration control of smart grid structure by multiinput and multioutput positive position feedback controller. J Sound Vib 304(1–2):230–245. doi:10.1016/j.jsv.2007.02.021, http://www.sciencedirect.com/science/article/B6WM3-4NH6N96-2/2/ca7b43602b9d052e388f4b2a28f1ebae

Lau K, Zhou L, Tao X (2002) Control of natural frequencies of a clamped–clamped composite beam with embedded shape memory alloy wires. Compos Struct 58(1):39–47. doi:10.1016/S0263-8223(02)00042-9, http://www.sciencedirect.com/science/article/B6TWP-45XTP9W-N/2/07b9a065ac866d8869a4240deb918851

Li S, Kouvaritakis B, Cannon M (2010) Improvements in the efficiency of linear MPC. Automatica 46(1):226–229. doi:10.1016/j.automatica.2009.10.010, http://www.sciencedirect.com/science/article/B6V21-4XGCHXB-3/2/20a93fa6dd4fb88469638ac3bc2fe729

Lin LC, Lee TE (1997) Integrated PID-type learning and fuzzy control for flexible-joint manipulators. J Intell Rob Syst 18:47–66. doi:10.1023/A:1007942528058

Lu H, Meng G (2006) An experimental and analytical investigation of the dynamic characteristics of a flexible sandwich plate filled with electrorheological fluid. Int J Adv Manuf Technol 28:1049–1055. doi:10.1007/s00170-004-2433-8

Luo T, Hu Y (2002) Vibration suppression techniques for optical inter-satellite communications. In: IEEE 2002 international conference on communications, circuits and systems and west sino expositions, vol 1, pp 585–589. doi:10.1109/ICCCAS.2002.1180687

Maciejowski JM (2000) Predictive control with constraints, 1st edn. Prentice Hall, Upper Saddle River

Malinauskas A (2001) Chemical deposition of conducting polymers. Polymer 42(9):3957–3972. doi:10.1016/S0032-3861(00)00800-4, http://www.sciencedirect.com/science/article/B6TXW-42C0RR9-1/2/e8084cbb0f228b86a5cc9d061a340e22

Mayne DQ, Rawlings JB, Rao CV, Scokaert POM (2000) Constrained model predictive control: stability and optimality. Automatica 36(6):789–814

Mehra R, Amin J, Hedrick K, Osorio C, Gopalasamy S (1997) Active suspension using preview information and model predictive control. In: Proceedings of the 1997 IEEE international conference on control applications, pp 860–865. doi:10.1109/CCA.1997.627769

Moon SJ, Lim CW, Kim BH, Park Y (2007) Structural vibration control using linear magnetostrictive actuators. J Sound Vib 302(4–5):875–891. doi:10.1016/j.jsv.2006.12.023, http://www.sciencedirect.com/science/article/B6WM3-4N2M6HH-5/2/417522adfca8640acfa76e890ae0533c

Moon SM, Cole DG, Clark RL (2006) Real-time implementation of adaptive feedback and feedforward generalized predictive control algorithm. J Sound Vib 294(1–2):82–96. doi:10.1016/j.jsv.2005.10.017, http://www.sciencedirect.com/science/article/B6WM3-4HYMY76-1/2/50d98047187533ebe9d3ea8310446e77

Neat G, Melody J, Lurie B (1998) Vibration attenuation approach for spaceborne optical interferometers. IEEE Trans Control Syst Technol 6(6):689–700. doi:10.1109/87.726529

Pistikopoulos EN, Georgiadis MC, Dua V (eds) (2007) Multi-parametric model-based control, vol 2, 1st edn. Wiley-VCH Verlag GmbH & Co., Weinheim

Pistikopoulos EN, Georgiadis MC, Dua V (eds) (2007) Multi-parametric programming, vol 1, 1st edn. Wiley-VCH Verlag GmbH & Co., Weinheim

Pólik I (2005) Addendum to the SeDuMi user guide version 1.1. Technical report, Advanced Optimization Lab, McMaster University, Hamilton. http://sedumi.ie.lehigh.edu/

Polóni T, Takács G, Kvasnica M, Rohal’-Ilkiv B (2009) System identification and explicit control of cantilever lateral vibrations. In: Proceedings of the 17th international conference on process control

Pradhan S (2005) Vibration suppression of FGM shells using embedded magnetostrictive layers. Int J Solids Struct 42(9–10):2465–2488. doi:10.1016/j.ijsolstr.2004.09.049, http://www.sciencedirect.com/science/article/B6VJS-4F6SSGN-1/2/b6f9e2e6ffc65bfc0c4af5083e37df0b

Preumont A (2002) Vibration control of active structures, 2nd edn. Kluwer Academic Publishers, Dordrecht

Rajoria H, Jalili N (2005) Passive vibration damping enhancement using carbon nanotube-epoxy reinforced composites. Compos Sci Technol 65(14):2079–2093. doi:10.1016/j.compscitech.2005.05.015, http://www.sciencedirect.com/science/article/B6TWT-4GHBPN0-5/2/a67d954050aac7829a56e3e4302c8ef6

Richelot J, Bordeneuve-Guibe J, Pommier-Budinger V (2004) Active control of a clamped beam equipped with piezoelectric actuator and sensor using generalized predictive control. In: 2004 IEEE international symposium on industrial electronics, vol 1, pp 583–588. doi:10.1109/ISIE.2004.1571872

Rossiter JA (2003) Model-based predictive control: a practical approach, 1st edn. CRC Press, Boca Raton

Shan J, Liu HT, Sun D (2005) Slewing and vibration control of a single-link flexible manipulator by positive position feedback (PPF). Mechatronics 15(4):487–503. doi:10.1016/j.mechatronics.2004.10.003, http://www.sciencedirect.com/science/article/B6V43-4DR87K7-4/2/2dd311fdd61308e1415cd45c1edc3076

Sturm JF (2001) SeDuMi 1.05 R5 user’s guide. Technical report, Department of Economics, Tilburg University, Tilburg. http://sedumi.ie.lehigh.edu/

Sun D, Mills JK, Shan J, Tso SK (2004) A PZT actuator control of a single-link flexible manipulator based on linear velocity feedback and actuator placement. Mechatronics 14(4):381–401. doi:10.1016/S0957-4158(03)00066-7, http://www.sciencedirect.com/science/article/B6V43-49DN5K4-1/2/fa21df547f182ad568cefb2ddf3a6352

Takács G, Rohal’-Ilkiv B (2009) Implementation of the Newton–Raphson MPC algorithm in active vibration control applications. In: Mace BR, Ferguson NS, Rustighi E (eds) Proceedings of the 3rd international conference on noise and vibration: emerging methods, Oxford

Takács G, Rohal’-Ilkiv B (2009) MPC with guaranteed stability and constraint feasibility on flexible vibrating active structures: a comparative study. In: Hu H (ed) Proceedings of the eleventh IASTED international conference on control and applications, Cambridge

Takács G, Rohal’-Ilkiv B (2009) Newton–Raphson based efficient model predictive control applied on active vibrating structures. In: Proceedings of the European control conference, Budapest

Takács G, Rohal’-Ilkiv B (2009) Newton–Raphson MPC controlled active vibration attenuation. In: Hangos KM (ed) Proceedings of the 28th IASTED international conference on modeling, identification and control, Innsbruck

Tøndel P, Johansen TA, Bemporad A (2003) Evaluation of piecewise affine control via binary search tree. Automatica 39(5):945–950. doi:10.1016/S0005-1098(02)00308-4, http://www.sciencedirect.com/science/article/pii/S0005109802003084