Abstract

Mesoscopic brain dynamics, typically studied with electro- and magnetoencephalography (EEG and MEG), display a rich complexity of oscillatory and chaotic-like states, including many different frequencies, amplitudes and phases. Presumably, these different dynamical states correspond to different mental states and functions; studying transitions between such states could provide valuable insights into brain—mind relations that should also be of clinical interest. We use computational methods to investigate these transitions, with the objective of finding relations between structure, dynamics, and function. In particular, we have developed models of paleo- and neocortical structures, in order to study their mesoscopic neurodynamics, as a link between the microscopic neuronal and macroscopic mental events and processes. In this chapter, we describe several types of models that emphasize network connectivity and structure, but we also include molecular and cellular properties at varying detail, depending on the particular problem and experimental data available. We use these models to study how phase transitions can be induced in the mesoscopic neurodynamics of cortical networks by internal (natural) and external (artificial) factors. We discuss the models, and relate the simulation results to macroscopic phenomena, such as arousal, attention, anesthesia, learning, and mental disorders.


Keywords

- cortical network models

- mesoscopic neurodynamics

- oscillations

- chaos

- noise

- EEG

- neuromodulation

- electrical stimulation

- anesthetics

7.1 Introduction

Brain structures are characterized by their complexity in terms of organization and dynamics. This complexity appears at many different spatial and temporal scales which, in relative terms, can be considered micro, meso, and macro scales. The corresponding dynamics may range from ion-channel kinetics, to spike trains of single neurons, to the neurodynamics of cortical networks and areas [6, 10]. The high complexity of neural systems is partly a result of the web of nonlinear interrelations between levels and parts with positive and negative feedback loops. This in turn introduces thresholds, lags and discontinuities in the dynamics, often leading to unpredictable and nonintuitive system behaviors [68].

Typical for complex systems in general, and for the nervous system in particular, is that different phenomena appear at different levels of spatial (and temporal) aggregation. New and unpredictable qualities emerge at every level, qualities that cannot be reduced to the properties of the components at the underlying level. In some cases, there is a hierarchical structure of a simple kind, where higher macro levels “control” lower ones (cf. the so-called enslaving principle of Haken [43]). However, there could also be a more “bottom-up” interpretation of systems, where indeed the micro phenomena, through various mechanisms, set the frame for phenomena at higher structural levels. This interplay between micro and macro levels is part of what frames the dynamics of systems. Of special interest is the meso level, i.e., the level in between the micro and the macro, as this is where bottom-up meets top-down [30, 31, 68].

The activity of neural systems often seems to depend on nonlinear threshold phenomena; for example, microscopic fluctuations may cause rapid and large macroscopic effects. There is a dynamical region between order and pure randomness that involves a high degree of complexity, and which seems characteristic for neural processes. This dynamics is very unstable and shifts from one state to another within a few hundred milliseconds or less, as is typical of chaotic systems. (It may actually be more appropriate to refer to this behavior as “pseudo-chaotic”, since “true chaos”, as defined mathematically, requires “infinite” time for its development.)

Despite at least a century of study, the functional significance of the neural dynamics at different levels is still not clear, nor is much known about the relation between activities at the different levels. However, it is reasonable to assume that different dynamical states correlate with different functional or mental states. This principle guides our research, and will be discussed further in the final section.

By studying transitions in brain dynamics, we may reveal fundamental properties of the brain and its constituents that relate to mental processes and transitions. Such transitions could, for example, involve various cognitive levels and conscious states that would be of interest not only to neuroscience, but also to psychology, psychiatry, and medicine.

In this chapter, we present a range of computational models with which we investigate relations between structure, dynamics, and function of neural systems. Our focus is on phase transitions in mesoscopic brain dynamics, since this type of dynamics constitutes a well-studied bridge between neural and mental processes [31]. These transitions can be induced by internal causes (noise and neuromodulation), but also by external causes (electric shocks and anesthetics). The functional significance of the model results is discussed in the concluding section.

7.1.1 Mesoscopic brain dynamics

In our description, mesoscopic brain dynamics refers to the neural activity or dynamics at intermediate scales of the nervous system, at levels between neurons and the entire brain. It relates to the dynamics of cortical neural networks, typically on the spatial order of a few millimetres to centimetres, and temporally on the order of milliseconds to seconds. This type of dynamics can be measured by methods such as ECoG (electrocorticography), EEG (electroencephalography), or MEG (magnetoencephalography).

We consider processes and structures studied with a microscope or microelectrodes as defining a microscopic scale of the nervous system; thus the micro scale could, for example, refer to ion channels or single neurons. The macroscopic scale, in this picture, corresponds to the largest measurable extent of brain activity. Typically, this could concern the dynamics of maps and areas, usually measured with PET or fMRI, or other brain-imaging techniques.

Mesoscopic brain dynamics, with its transitions, is partly a result of thresholds and the summed activity of a large number of elements interconnected with positive and negative feedback. It is also a result of the dynamic balance between opposing processes, influx and efflux of ions, inhibition and excitation, etc. Such interplay between opposing processes often results in (transient or continuous) oscillatory and chaotic-like behaviour [6, 32, 44, 78].

The mesoscopic neurodynamics is naturally influenced and shaped by the activity at other scales. For example, it is often mixed with noise that is generated at a microscopic level by spontaneous activity of neurons and ion channels. It is also affected by macroscopic activity, such as slow rhythms generated by cortico-thalamic circuits or neuromodulatory influx from different brain regions. Transitions at these other levels could also be of relevance to the mesoscopic level. For example, at the microscopic level of ion channels, the kinetics assumes stochastic transitions between a limited number of static states. In spite of this, the kinetics can be given a deterministic, dynamic interpretation at a population level. Similarly, at the cellular level, there is regular or irregular spiking, or bursts of spikes, which form the basis for most mesoscopic and macroscopic descriptions of nerve activity. While the causal relations may be difficult to establish, transitions between different states of arousal, attention, or mood, could be seen as a top-down interaction from macroscopic activity to mesoscopic neurodynamics.

7.1.2 Computational methods

Computational approaches complement experimental methods in understanding the complexity of neural systems and processes. Computational methods have long been used in neuroscience, perhaps most successfully for the description of action potentials [49]. When investigating interactions between different neural levels, computational models are essential, and in some cases, may be the only method we have. (For an overview, see Refs. [3, 4, 30, 73]). In recent years, there has also been a growing interest in applying computational methods to problems in clinical neuroscience, with implications for psychology and psychiatry [29, 30, 36, 39, 42, 52, 53, 64, 74, 79, 80, 87, 88].

In our research, we use a computational approach to address questions regarding relations between structure, dynamics, and function of neural systems. Here, the focus is on understanding how transitions between different dynamical states can be implemented and interpreted. For this purpose, we present different kinds of computational models, at different scales and levels of detail, depending on the particular issues addressed.

In almost all cases, the emphasis is on network connectivity and hence there is, in general, a greater level of realism and detail for the network structures than for node characteristics. However, when microscopic details are important, or when model simulations are to be compared with data at molecular and cellular scales, such details need to be incorporated in the model, sometimes at the expense of details at the network level. Our aim is to use a level of description appropriate for the problem we address.

The first examples consider phase transitions in network dynamics arising from noise and neuromodulation. In this case, we use a three-layered paleocortical model with simple network nodes of Hopfield type [50, 51]. Simulation results with this model are compared with LFP (local field potential) and EEG data from the olfactory cortex. For transitions due to attention, we want to compare our results with experimental data on spike trains, so we use a neocortical model with spiking neurons of Hodgkin—Huxley type [49].

In the case of electrical stimulation, we first use our paleocortical model, since we again compare with EEG data from experiments on animal olfactory cortex. The measured response is the summed activity of a very large number of neurons which will drown out single spikes, so there is no need for spiking neurons here.

When modeling and analyzing EEG related to electroconvulsive therapy, we use a neocortical network model with spiking neurons of FitzHugh—Nagumo type [25] (a simplification of the Hodgkin—Huxley description) to enable comparison against previous simulations with such model neurons [35].

In our final example, we investigate the mechanisms of anesthetics that block certain ion channels. We employ a network of Frankenhaeuser—Huxley neurons [54] because of their accurate description of ion-channel currents in cortical neurons. This microscopically detailed model allows us to compare our network results with those from single-neuron simulations for varying ion-channel composition [8].

7.2 Internally-induced phase transitions

The complex neurodynamics of the brain can be regulated by various neuromodulators, and presumably also by intrinsic noise levels, governed by thresholds for spontaneous activity. In addition, the state of arousal or attention may also change the cortical neurodynamics considerably, and even induce phase transitions that could affect the functional efficiency of cognitive processes. Such transitions may also be related to noncognitive mental processes and disorders, but that is beyond the scope of this discussion. In the following three sections, we will look at different possibilities for how intrinsic noise, neuromodulation, and attention may induce phase transitions in cortical structures.

7.2.1 Noise-induced transitions

Spontaneous activity, or neuronal noise, is normally seen as a naturally occurring side phenomenon without any functional role. However, it is becoming increasingly clear that stochastic processes play a fundamental role in the nervous system, at least for keeping a baseline activity, but presumably also for increasing the efficiency in system performance (see e.g., Ref. [5], and Sect. 7.4 Discussion).

Noise appears primarily at the microscopic (subcellular and cellular) levels, but it is uncertain to what degree this noise normally affects the meso- and macroscopic levels (networks and systems). Under certain circumstances, microscopic noise can induce effects on mesoscopic and macroscopic levels, but the role of these effects is still unclear. Evidence suggests that even single channel openings can cause intrinsic spontaneous impulse generation in a subset of small hippocampal neurons [54].

In addition to the microscopic noise, irregular chaotic-like behavior, which may be indistinguishable from noise, could be generated by the interplay of neural excitatory and inhibitory activity at the network level. However, in contrast to a chaotic dynamics, where the dynamics can be controlled and easily shifted into an oscillatory or other state, stochastic noise is not equally controllable, and cannot shift into a completely different dynamics (even though its amplitude and frequency might vary as a result of neuromodulatory control).

7.2.1.1 A paleocortical network model

When studying how the neurodynamics of a cortical structure depends on various internal factors, including neuromodulation and intrinsic noise from spontaneously firing neurons, we use our previously constructed model of the olfactory cortex [60]. (With a few modifications, this model can also be used for the hippocampus, which has a similar structure). Paleocortex, primarily consisting of the olfactory cortex and hippocampus, is more primitive and simpler than neocortical structures, such as the visual cortex. It has a three-layered structure and a distributed connectivity pattern with extensive short- and long-range connections within a layer. Due to its simpler structure and well-studied neurodynamics, the olfactory cortex can be regarded as a suitable model system for the study of mesoscopic brain dynamics.

Our paleocortical model has network nodes with a continuous input—output relation, the output corresponding to the average firing frequency of neural populations [50, 51]. Three different types of nodes (neural populations) are organized in three layers, as seen in Fig. 7.1. The top layer consists of inhibitory feedforward interneurons, which receive inputs from the olfactory bulb, via the lateral olfactory tract (LOT), and from the excitatory pyramidal cells in the middle layer. The bottom layer consists of inhibitory feedback interneurons, receiving inputs only from the pyramidal cells and projecting back to those. The two sets of inhibitory cells are characterized by their different time-constants. In addition to the feedback from inhibitory cells, the pyramidal cells receive extensive inputs from each other and from the olfactory bulb, via the LOT. All connections are modeled with distance-dependent time-delays for signal propagation, corresponding to the geometry and fiber characteristics of the real cortex.

Fig. 7.1 Schematic of our model neural network that mimics the structure of the olfactory cortex. One layer of excitatory nodes, corresponding to populations of pyramidal cells (large circles in middle layer), is sandwiched between two layers of inhibitory nodes, corresponding to two different types of interneurons (smaller circles, top and bottom layers). External input (from “the olfactory bulb”) projects onto the two top layers in a fan-like fashion.

The time-evolution for a network of N network nodes (neural populations) is given by a set of coupled nonlinear first-order differential-delay equations for all the N internal states, \(u_i\) (corresponding to the mean membrane potential of population i). With external input \(I(t)\), characteristic time constant \(\tau_i\), and connection weight \(w_{ij}\) between nodes i and j, separated by a time-delay \(\delta_{ij}\), the activity \(u_i\) of each node evolves according to Eqn. (7.1).
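Collecting the terms named above, together with the noise term \(\xi(t)\) that enters last in Eqn. (7.1), the node equation can be sketched as follows. The arrangement of terms, the per-node input \(I_i(t)\), and the exclusion of self-coupling (\(j \ne i\)) are assumptions reconstructed from the surrounding definitions, not a verbatim copy of the published equation; Ref. [60] remains the authoritative source.

\[
\frac{du_i}{dt} = -\frac{u_i}{\tau_i} + \sum_{j \ne i}^{N} w_{ij}\, g_j\!\left[u_j(t - \delta_{ij})\right] + I_i(t) + \xi(t)
\]

Read term by term: the first term is a leak that relaxes \(u_i\) with time constant \(\tau_i\); the sum collects the delayed, weighted outputs of the other nodes; and the last two terms are the external input and the spontaneous (noise) activity.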

The input—output function, \(g_i(u_i)\), is a continuous sigmoid function, experimentally determined by Freeman [28]:

The gain parameter \(Q_i\) determines the slope, threshold and amplitude of the input-output curve for node i. This gain parameter is associated with the level of arousal, which in turn may be linked to the level of a neuromodulator, such as acetylcholine (ACh). C is a normalisation constant.
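To make the role of the gain concrete, the sketch below evaluates one commonly quoted form of Freeman's sigmoid, \(g(u) = C\,Q\,\{1 - \exp[-(e^{u} - 1)/Q]\}\). This functional form is an assumption on our part (the displayed equation is not reproduced here), and the function names are hypothetical. Under that assumption, the upper bound of the curve is \(C\,Q\) and the steepest point lies at \(u = \ln Q\), so a single parameter shifts amplitude, threshold and slope together.

```python
import math


def freeman_sigmoid(u, Q, C=1.0):
    """Assumed sigmoid: g(u) = C*Q*(1 - exp(-(exp(u) - 1)/Q))."""
    return C * Q * (1.0 - math.exp(-(math.exp(u) - 1.0) / Q))


def steepest_point(Q, C=1.0):
    """Return (u, slope) at the steepest point of the assumed curve.

    Differentiating gives g'(u) = C*exp(u)*exp(-(exp(u) - 1)/Q),
    which is maximal where exp(u) = Q, i.e. at u = ln(Q).
    """
    u_star = math.log(Q)
    slope = C * Q * math.exp(-(Q - 1.0) / Q)
    return u_star, slope


if __name__ == "__main__":
    for Q in (2.0, 5.0, 10.0):
        u_star, slope = steepest_point(Q)
        upper = freeman_sigmoid(20.0, Q)  # far into saturation
        print(f"Q={Q:4.1f}  upper bound={upper:6.3f}  "
              f"steepest at u={u_star:5.3f}  slope there={slope:5.3f}")
```

Note that the curve passes through zero at \(u = 0\) for every Q, so raising the gain does not add a constant offset; it stretches the excitatory branch of the curve.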

The connection weights \(w_{ij}\) are initially set and constrained by the general connectivity principles for the olfactory cortex, but to allow for learning, the weights can be incrementally changed according to a learning rule of Hebbian type [61]. However, learning is not explicitly considered here, although it may well relate to the functional significance of phase transitions in cortical neurodynamics. (Our olfactory/hippocampal model has previously been used for studying the effects of neuromodulation and noise on the efficiency of information processing [61, 63, 69]).

Neuromodulatory effects are simulated by changing the Q-values for primarily the excitatory nodes. When neuromodulatory effects on synaptic transmission are included, we change separately a weight-constant that multiplies all connection strengths, \(w_{ij}\). (Another way to implement neuromodulatory effects is by multiplying the input—output function, g, with an exponential-decay function, representing neuronal adaptation, as has been described elsewhere [67]).

Noise, or spontaneous neural activity, is added in the last term of Eqn. (7.1) via a Gaussian noise function, \(\xi(t)\), such that \(\langle \xi(t) \rangle = 0\), and \(\langle \xi(t) \xi(s) \rangle = 2A\delta(t-s)\). We have studied noise effects by increasing the level A. In some of the simulations, the noise level is changed equally for all network nodes, whereas in other simulations, the change takes place in only some of the network nodes.
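The noise prescription above translates directly into a numerical scheme. The following is a minimal sketch, not the simulation code used for the results in this chapter: it integrates a small delayed network with the Euler-Maruyama method, where the correlation \(\langle \xi(t)\xi(s) \rangle = 2A\delta(t-s)\) implies a per-step noise increment with standard deviation \(\sqrt{2A\,\Delta t}\). The sigmoid form, the quiescent initial history, the rounding of delays to whole time steps, and all names and parameter values are assumptions made for illustration.

```python
import math
import random


def sigmoid(u, Q, C=1.0):
    """Assumed Freeman-type sigmoid; the argument is clipped to avoid overflow."""
    return C * Q * (1.0 - math.exp(-(math.exp(min(u, 50.0)) - 1.0) / Q))


def simulate(w, delay_steps, tau, Q, A, I_ext, dt, n_steps, seed=0):
    """Euler-Maruyama integration of N delayed, noisy rate nodes.

    w[i][j]            weight of the connection from node j to node i
    delay_steps[i][j]  delay delta_ij expressed in whole time steps (>= 0)
    tau[i], Q[i], A[i] per-node time constant, gain, and noise level
    I_ext(t, i)        external input to node i at time t
    Returns the list of state vectors, one per time point (n_steps + 1 entries).
    """
    rng = random.Random(seed)
    n = len(w)
    history = [[0.0] * n]  # assumption: network is quiescent for t <= 0
    for step in range(n_steps):
        u = history[-1]
        t = step * dt
        new_u = []
        for i in range(n):
            coupling = 0.0
            for j in range(n):
                if j == i or w[i][j] == 0.0:
                    continue
                # Look up the presynaptic state delta_ij steps in the past.
                k = max(0, len(history) - 1 - delay_steps[i][j])
                coupling += w[i][j] * sigmoid(history[k][j], Q[j])
            drift = -u[i] / tau[i] + coupling + I_ext(t, i)
            # <xi(t) xi(s)> = 2 A delta(t - s)  =>  increment std = sqrt(2 A dt)
            noise = math.sqrt(2.0 * A[i] * dt) * rng.gauss(0.0, 1.0)
            new_u.append(u[i] + drift * dt + noise)
        history.append(new_u)
    return history


if __name__ == "__main__":
    w = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
    delays = [[1] * 3 for _ in range(3)]
    # Only node 0 is noisy; the other nodes start silent.
    trace = simulate(w, delays, [0.01] * 3, [5.0] * 3, [0.5, 0.0, 0.0],
                     lambda t, i: 0.0, dt=0.001, n_steps=200, seed=1)
    print("final state:", trace[-1])
```

Because `A` is given per node, the same routine covers both cases described above: a uniform noise level for all nodes, or noise confined to a chosen subset.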

7.2.1.2 Simulating noise-induced phase transitions

Simulations with our three-layered paleocortical model display a range of dynamic properties found in olfactory cortex and hippocampus. For example, the model accurately reproduces response patterns associated with a continuous random input signal, and with shock pulses applied to the cortex; see Figs. 7.2 and 7.7 [60].

For a constant, low-amplitude random input (noise), the network is able to oscillate with two separate frequencies simultaneously, around 5 Hz (theta rhythm) and 40 Hz (gamma rhythm). Under certain conditions, such as for high Q-values, the system can also display chaotic-like behaviour, similar to that seen in EEG traces (see Fig. 7.2). In associative memory tasks, the network may initially display a chaotic-like dynamics, which then converges to a near limit-cycle attractor when storing or retrieving a memory (activity pattern) [61, 69].

Simulations with various noise levels show that spontaneously active neurons can induce global, synchronized oscillations with a frequency in the gamma range (30–70 Hz) [62]. Even if only a few network nodes are noisy (i.e., have an increased intrinsic random activity), and the rest are quiescent, coherent oscillatory activity can be induced in the entire network if connection weights are large enough [7, 62, 65]. The onset of global oscillatory activity depends on, for example, connectivity, noise level, number of noisy nodes, and duration of the noise activity [15]. The location and spatial distribution of these nodes in the network is also important for the onset and character of the global activity. For example, as the number or activity of noisy nodes is increased, or if the distance between them increases, the oscillations tend to change into irregular patterns. In Fig. 7.3, we show that global network activity can be induced if only five out of 1024 network nodes are noisy, and the rest are silent. After a short transient period of collective irregular activity, the entire network begins to oscillate, and collective activity waves move across the network. Even if there is only a short burst of noisy activity, this may be enough to induce global oscillations [15].

Fig. 7.3 [Color plate] Spatiotemporal activity of the excitatory layer of a three-layered paleocortical model, presented as snapshots of network activity (as mean membrane potential of neural populations) at 50-ms intervals. Five centrally-located noisy network nodes can induce collective waves of activity across the entire network. Simulations were made with a 32\(\times\)32 grid of network nodes in each network layer, corresponding to a 10 \(\times\) 10 mm square of rat olfactory cortex. Activity is color-coded on a scale ranging from negative (blue) to positive (red).

We have also studied the effects of spontaneously active feedforward inhibitory interneurons in the top layer, motivated by the experimental finding that single inhibitory neurons can synchronize the activity of up to 1000 pyramidal cells [21]. Our simulations demonstrated that even a single noisy network node in the feedforward layer could induce periods of synchronous oscillations in the excitatory layer with a frequency in the gamma range, interrupted by periods of irregular activity [15].

From the simulations, it is apparent that internal noise can cause various phase transitions in the network dynamics. An increased noise level in just a few network nodes can result in a transition from a stationary to an oscillatory state, or from an oscillatory to a chaotic state, or alternatively, a shift between two different oscillatory states [56, 69]. (A more thorough investigation—in which we studied the effects of varying the density of noisy nodes, the noise duration, and the noise level—is reported in [15].)

All of these phenomena depend critically on network structure, in particular on the feedforward and feedback inhibitory loops, and the long-range excitatory connections, modeled with distance-dependent time delays. In this model, details concerning neuron structure or spiking activity are not necessary for the neurodynamics under study. Instead, a balance between inhibition and excitation, in terms of connection strength and timing of events, is essential for coherent frequency and phase of the oscillating neural nodes.

7.2.2 Neuromodulatory-induced phase transitions

Brain activity is constantly changing due to sensory input, internal fluctuations, and neuromodulation. Neuromodulators, such as acetylcholine (ACh) and serotonin (5-HT), can change the excitability of a large number of neurons simultaneously, or the synaptic transmission between them [18], thus dramatically influencing brain dynamics. ACh can increase excitability by suppressing neuronal adaptation, an effect similar to that of increasing the gain in general. The concentration of these neuromodulators seems to be directly related to the level of arousal or motivation of the individual, and can have profound effects on the neural dynamics (e.g., an increased oscillatory activity), and on cognitive functions, such as associative memory [30].

We use the paleocortical model described in Sect. 7.2.1.1 to investigate how network dynamics can be regulated by neuromodulators, implemented in the model as a varied excitability of the network nodes, and modified connection strengths [67]. The frequencies of the network oscillations depend primarily on intrinsic time-constants and delays, whereas the amplitudes depend predominantly on connection weights and gains, which are under neuromodulatory control. Implementation of these neuromodulatory effects in the model causes dynamical changes analogous to those seen in physiological experiments.

In particular, a “cholinergic” increase in excitability together with suppression of synaptic transmission could induce theta (and/or gamma) rhythm oscillations within the model, even when starting from an initially quiescent state with no oscillatory activity. Fig. 7.4 shows how different oscillatory modes can be induced by neuromodulatory effects: increasing gain and decreasing connection weights. The activity evolution of one arbitrarily chosen excitatory network node is shown for three different levels of “ACh”. For example, if \(Q = 10.0\) and \(w_\text{exc} = w_\text{inh} = 1.0\) (i.e., no suppression of synaptic transmission; \(w_\text{exc}\) and \(w_\text{inh}\) are excitatory and inhibitory connection-weight factors respectively), we can get an oscillatory mode with two different frequencies (\(\sim\)5 Hz and 40 Hz) present simultaneously. This is shown in trace (a) of Fig. 7.4. If Q is kept constant (= 10) while \(w_\text{exc}\) and \(w_\text{inh}\) are reduced successively, the high-frequency component weakens and eventually can be totally eliminated. In trace (b), the connection strengths were decreased by 40% for all excitatory nodes (i.e., \(w_\text{exc} = 0.6\)), and by 60% for all inhibitory nodes (\(w_\text{inh} = 0.4\)). Trace (c) shows the result for \(w_\text{exc} = 0.4\) and \(w_\text{inh} = 0.2\). In the latter case, only the low-frequency component remains. If the excitatory connection strengths are decreased further, i.e. if \(w_\text{exc} \le 0.3\), oscillations disappear.
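The three parameter settings quoted above can be collected in a small table and applied to a weight matrix as sketched below. The values of Q, \(w_\text{exc}\) and \(w_\text{inh}\) are those given in the text; everything else is an assumption for illustration. In particular, we assume that each factor multiplies the connections originating from nodes of the corresponding type (presynaptic scaling), and the names `ACH_LEVELS` and `modulate_weights` are hypothetical.

```python
# Parameter sets for traces (a), (b) and (c); values taken from the text.
ACH_LEVELS = {
    "a": {"Q": 10.0, "w_exc": 1.0, "w_inh": 1.0},  # no synaptic suppression
    "b": {"Q": 10.0, "w_exc": 0.6, "w_inh": 0.4},  # 40% / 60% reduction
    "c": {"Q": 10.0, "w_exc": 0.4, "w_inh": 0.2},  # only slow component left
}


def modulate_weights(w, is_excitatory, w_exc, w_inh):
    """Scale every connection w[i][j] by the factor of its source node j.

    Assumption: the weight factor follows the presynaptic node type, so
    excitatory projections are scaled by w_exc and inhibitory ones by w_inh.
    """
    return [
        [w_ij * (w_exc if is_excitatory[j] else w_inh)
         for j, w_ij in enumerate(row)]
        for row in w
    ]


if __name__ == "__main__":
    # Toy network: nodes 0 and 1 excitatory, node 2 inhibitory (negative weights).
    base = [[0.0, 2.0, -1.0],
            [1.0, 0.0, -1.0],
            [1.0, 1.0, 0.0]]
    kinds = [True, True, False]
    for label, p in ACH_LEVELS.items():
        scaled = modulate_weights(base, kinds, p["w_exc"], p["w_inh"])
        print(label, "Q =", p["Q"], scaled)
```

Keeping the gain and the two weight factors in one record per condition makes it straightforward to sweep between the settings and locate where the fast component vanishes.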

Fig. 7.4 Different oscillatory modes can be induced by cholinergic neuromodulatory effects that increase gain and decrease connection strengths. The activity evolution of one particular (arbitrarily chosen) excitatory network node is shown for three different levels of “cholinergic” action: (a) low; (b) intermediate; and (c) high.

7.2.3 Attention-induced transitions

Related to the level of arousal, and apparently also under neuromodulatory control, is the phenomenon of attention, which plays a key role in perception, action selection, object recognition, and memory [46]. The main effect of visual attentional selection appears to be a modulation of the underlying competitive interaction between stimuli in the visual field. Studies of cortical areas V2 and V4 indicate that attention modulates the suppressive interaction between two or more stimuli presented simultaneously within the receptive field [22]. Visual attention also modulates cortical oscillations, in terms of changes in firing rate [72] and in gamma and beta coherence [34].

In selective-attention tasks, after the cue onset and before the stimulus onset, there was a delay period during which a monkey’s attention was directed to the place where the stimulus would appear [34]. During the delay, the dynamics was dominated by frequencies around 17 Hz, but with attention, this low-frequency synchronization decreased. During the stimulus period, there were two distinct bands in the power spectrum, one below 10 Hz and another at 35–60 Hz (gamma). With attention, there was a reduction in low-frequency synchronization and an increase in gamma-frequency synchronization.

At a meso-scale, each area of the visual cortex is conventionally divided into six layers, some of which can be further divided into several sub-layers, based on their detailed functional roles in visual information processing (such as orientation and retinotopic position).

The inter-scale network interactions of various excitatory and inhibitory neurons in the visual cortex generate oscillatory signals with complex patterns of frequencies associated with particular states of the brain. Synchronous activity at an intermediate and lower-frequency range (theta, delta, and alpha) between distant areas has been observed during perception of stimuli with varying behavioral significance [76, 84]. Rhythms in the beta (12–30 Hz) and the gamma (30–80 Hz) ranges are also found in the visual cortex, and are often associated with attention, perception, cognition and conscious awareness [23, 24, 34, 37, 38]. Data suggest that gamma rhythms are associated with relatively local computations, whereas beta rhythms are associated with higher-level interactions. Generally, it is believed that lower-frequency bands are generated by global circuits, while higher-frequency bands are derived from local connections.

7.2.3.1 A neocortical network model

In order to investigate how attentional neuromodulation can affect cortical neurodynamics, and cause the observed phase shifts discussed above, we use a neural network model of the visual cortex, based on known anatomy and physiology [41].

Although neocortex consists of six layers, in contrast to paleocortex with its three layers, we lump some of the neocortical layers together for simplicity. Thus, our model has three functional layers, representing layer 2/3, layer 4 and layer 5/6 of the visual cortex. Each layer contains 20\(\times\)20 excitatory model neurons (pyramidal neurons in layer 2/3 and layer 5/6, and spiny stellate neurons in layer 4) in a square lattice with lattice distance 0.2 mm. For each excitatory layer, there are also 10\(\times\)10 inhibitory neurons in a square lattice with lattice distance 0.4 mm. Thus, 20% of the neurons are inhibitory, which roughly corresponds to the observed cortical distribution.
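The lattice layout described above can be written down in a few lines, which also makes the 20% figure easy to check. This is a sketch under stated assumptions: the two lattices are taken to share a common origin (the text does not specify their relative offset), and the names `square_lattice`, `build_network` and `inhibitory_fraction` are hypothetical.

```python
LAYERS = ("2/3", "4", "5/6")


def square_lattice(n_side, spacing_mm):
    """Return (x, y) positions in mm for an n_side x n_side square lattice."""
    return [(ix * spacing_mm, iy * spacing_mm)
            for iy in range(n_side) for ix in range(n_side)]


def build_network():
    """Neuron positions for each functional layer of the model."""
    network = {}
    for name in LAYERS:
        network[name] = {
            "excitatory": square_lattice(20, 0.2),  # 400 cells, 0.2 mm apart
            "inhibitory": square_lattice(10, 0.4),  # 100 cells, 0.4 mm apart
        }
    return network


def inhibitory_fraction(layer):
    """Share of inhibitory cells among all cells of one layer."""
    n_exc = len(layer["excitatory"])
    n_inh = len(layer["inhibitory"])
    return n_inh / (n_exc + n_inh)


if __name__ == "__main__":
    net = build_network()
    for name, layer in net.items():
        print(f"layer {name}: {len(layer['excitatory'])} excitatory, "
              f"{len(layer['inhibitory'])} inhibitory, "
              f"fraction inhibitory = {inhibitory_fraction(layer):.2f}")
```

With 400 excitatory and 100 inhibitory cells per layer, the inhibitory share is 100/500, and both lattices span roughly the same patch of cortex (about 3.8 mm and 3.6 mm across, respectively).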

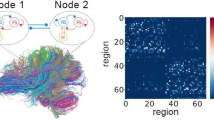

Figure 7.5 shows the schematic diagram of the network topology. The inhibitory neurons in each layer have interactions within their own layer only, while excitatory neurons have interactions within their own layer as well as between layers and areas. The within-layer connections between excitatory and inhibitory neurons are of “Mexican hat” shape, with an on-center and an off-surround lateral synaptic input for each neuron, i.e., excitatory at short distance and inhibitory at long distance (see Ref. [41] for details).

Fig. 7.5 Schematic diagram of the model architecture. Small triangles in layers 2/3 and 5/6 represent pyramidal neurons; small open circles in layer 4 are spiny stellate neurons; small filled circles in each layer are inhibitory neurons. Arrows show connection patterns between different layers and signal flows from other areas. Large solid open circles represent lateral excitatory connection radius; large dashed open circles represent inhibitory connection radius; dotted open circles in layers 2/3 and 5/6 denote the top-down attention modulation radius \(R_\text{modu}\).

Since we wish to compare model results against observed data from visual cortex—in particular, spike-triggered averages of local field potentials—we need to use spiking model neurons; this is in contrast to the paleocortical model, which uses network nodes corresponding to populations of neurons, resulting in a continuous non-spiking output. For the present case, all excitatory model neurons satisfy Hodgkin—Huxley equations of the form,

where V is the membrane potential in mV; \(C = 1 \mu\)F is the membrane capacitance; \(g_\text{L}\) is the leak conductance; \(g_\text{Na} = 20\) mS and \(g_\text{K} = 10\) mS are the maximal sodium and potassium conductances, respectively; and \(g_\text{AHP}\) is the maximal slow potassium conductance of the after-hyperpolarization (AHP) current. This conductance varies from 0 to 1.0 mS, depending on the attentional state: in an idle state, \(g_\text{AHP} = 1.0\) mS; with attention, \(g_\text{AHP} \le 1.0\) mS. The variables m, h, n and w are calculated in a conventional way, and are described more thoroughly in Ref. [41].

The inhibitory neurons obey the same equations, except that there is no AHP current. The synaptic input current, \(I^\text{syn}\), of the pyramidal, stellate, and inhibitory neurons is described below.
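To illustrate how the AHP conductance enters the membrane equation, the sketch below evaluates a conventional conductance-based current balance. Only C, \(g_\text{Na}\), \(g_\text{K}\) and the range of \(g_\text{AHP}\) come from the text. The overall form (with the usual \(m^3 h\) and \(n^4\) gating), the leak conductance, and all reversal potentials are placeholder assumptions chosen for illustration; the gating kinetics are not modelled here, and Ref. [41] should be consulted for the actual equations and values.

```python
def membrane_dVdt(V, m, h, n, w, I_syn, g_ahp,
                  C=1.0, g_na=20.0, g_k=10.0,
                  g_l=0.1, E_l=-67.0, E_na=50.0, E_k=-100.0):
    """Rate of change of the membrane potential (mV per ms).

    C, g_na, g_k follow the text; g_l and the reversal potentials
    E_l, E_na, E_k are hypothetical placeholders. The gating variables
    m, h, n, w are supplied by the caller (values between 0 and 1).
    """
    I_leak = g_l * (V - E_l)
    I_na = g_na * m ** 3 * h * (V - E_na)
    I_k = g_k * n ** 4 * (V - E_k)
    I_ahp = g_ahp * w * (V - E_k)  # slow potassium (AHP) current
    return (-I_leak - I_na - I_k - I_ahp + I_syn) / C


if __name__ == "__main__":
    state = dict(V=-60.0, m=0.05, h=0.6, n=0.3, w=0.5, I_syn=0.0)
    for label, g_ahp in (("idle", 1.0), ("attended", 0.2), ("inhibitory", 0.0)):
        print(f"{label:10s} g_AHP={g_ahp:3.1f}  dV/dt={membrane_dVdt(g_ahp=g_ahp, **state):8.3f}")
```

Lowering `g_ahp` removes a hyperpolarizing current whenever the membrane sits above the potassium reversal potential, which is the sense in which attention raises excitability in this description; setting it to zero corresponds to the inhibitory neurons.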

In each layer j (where \(j = \)2/3, 4, and 5/6) of the local-area network, there are four types of interactions: (i) lateral excitatory—excitatory, (ii) excitatory—inhibitory, (iii) inhibitory—excitatory, and (iv) inhibitory—inhibitory, with corresponding connection strengths, \(C^{ee}_{j,kl}\), \(C^{ie}_{j,kl}\), \(C^{ei}_{j,kl}\), and \(C^{ii}_{j,kl}\), which vary with distance between neurons k and l.

The synaptic input current, \(I^\textrm{syn}_{4s,k}(t)\), of the \(k^\text{th}\) stellate neuron in layer 4 at time t is composed of the ascending input from the pyramidal neurons in layer 5/6, descending input from the pyramidal neurons in layer 2/3, and lateral excitatory inputs from the on-centre neighboring stellate neurons in layer 4. It also includes lateral inhibitory inputs from the off-surround neighboring inhibitory neurons in the same layer, resulting in,

The synaptic input current, \(I^\text{syn}_{4i,k}(t)\), of the \(k^\text{th}\) inhibitory neuron in layer 4 is composed of the lateral excitatory inputs from neighboring stellate neurons and lateral inhibitory inputs from neighboring inhibitory neurons,

The synaptic input currents for the other layers 2/3 and 5/6 are calculated in a similar way (see Ref. [41] for details). In addition, each neuron of the network receives an internal background noise current.

The excitatory and inhibitory presynaptic outputs in Eqs. (7.4) and (7.5) satisfy first-order differential equations (7.6) and (7.7), respectively:

where j refers to the layer, and l to the presynaptic neuron. \(V_{j,l}\) corresponds to the membrane potential of presynaptic neuron l in layer j.

7.2.3.2 Simulating neurodynamical effects of visual attention

Our simulations are based on the visual attention experiment by Fries et al. [34]. Thus, in each of the three layers, we have groups of “attended-in” neurons, \(A_\text{in}\) (where attention is directed to a stimulus location inside the receptive field (RF) of these neurons), and groups of “attended-out” neurons, \(A_\text{out}\) (where attention is directed to a stimulus location outside the RF of these neurons). During a stimulus period, two identical stimuli are presented: one appears at a location inside the RF of the \(A_\text{in}\) neurons and the other appears at a location inside the RF of the \(A_\text{out}\) neurons. The top-down modulation radius \(R_\text{modu}\) is taken as 0.6 mm, which is larger than the lateral excitatory connection radius of 0.5 mm, in each layer. In addition, each neuron of the network receives an internal background-noise input current.

When analyzing the simulated spike trains, we calculate power spectra of spike-triggered averages (STAs) of the local field potential (LFP), representing the oscillatory synchronization between spikes and LFP. We investigate the dynamics and the effects of attention (cholinergic modulation) in an idle state, during stimulation, and during a delay period, as described in more detail below.
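The STA analysis can be sketched as follows. This is a generic illustration, not the analysis code used for the simulations: the window length, the sampling step, the naive discrete Fourier transform, and the synthetic 40-Hz test signal are all assumptions chosen to keep the example self-contained.

```python
import cmath
import math

def spike_triggered_average(lfp, spike_indices, half_window):
    """Average LFP segments of length 2*half_window+1 centred on each spike."""
    segments = [lfp[s - half_window: s + half_window + 1]
                for s in spike_indices
                if s - half_window >= 0 and s + half_window < len(lfp)]
    if not segments:
        raise ValueError("no spike has a complete window")
    return [sum(col) / len(segments) for col in zip(*segments)]

def power_spectrum(signal, dt):
    """Naive DFT power at non-negative frequencies; returns (freqs in Hz, power)."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC component
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k / (n * dt))
        power.append(abs(coeff) ** 2 / n)
    return freqs, power

# Synthetic check: 40-Hz "LFP" sampled at 1 kHz, spikes locked to one phase
dt = 0.001
lfp = [math.sin(2 * math.pi * 40 * t * dt) for t in range(2000)]
spikes = list(range(100, 1900, 25))  # one spike per 25-ms cycle
sta = spike_triggered_average(lfp, spikes, half_window=50)
freqs, power = power_spectrum(sta, dt)
peak_freq = freqs[max(range(len(power)), key=power.__getitem__)]
```

Because the spikes are phase-locked to the oscillation, the averaged segments do not cancel, and the STA power spectrum peaks near the LFP frequency; spikes at random phases would average towards zero.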

When attention is directed to a certain place, the prefrontal lobe sends cholinergic input signals via top-down pathways to layers 2/3 and 5/6 of the visual cortex, as shown in Fig. 7.5. To test various hypotheses about the mechanisms of attention modulation, we assume that the top-down signals may have three different effects on the pyramidal neurons, and on the local and global network connections in our simulations: (i) facilitation of extracortical top-down excitatory synaptic inputs to the pyramidal neurons (global connections); (ii) inhibition of certain intracortical excitatory and inhibitory synaptic conductances (local connections) [58, 59]; and (iii) modulation of the slow AHP current by decreasing the K-conductance, \(g_\textsc{ahp}\), thus increasing excitability [19].

We simulated the attentional modulation effect of inhibition of intracortical excitatory and inhibitory synaptic inputs by decreasing the lateral excitatory and inhibitory conductances to zero (i.e., \(g_j^{ee} = g_j^{ei} = 0\) mS) for the pyramidal neurons of the \(A_\text{in}\) group within \(R_\text{modu}\) in layers 2/3 and 5/6.
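A minimal sketch of this modulation step is given below. The dictionary layout, the field names, and the function `apply_attention` are hypothetical; only the radius of 0.6 mm, the affected layers, and the zeroing of the two conductances come from the text.

```python
import math

R_MODU_MM = 0.6  # top-down modulation radius given in the text

def apply_attention(neurons, focus_xy, radius_mm=R_MODU_MM):
    """Return a copy in which pyramidal cells in layers 2/3 and 5/6 that lie
    within radius_mm of the attended location have g_ee and g_ei set to zero."""
    modulated = []
    for neuron in neurons:
        neuron = dict(neuron)  # leave the input list untouched
        if (neuron["type"] == "pyramidal"
                and neuron["layer"] in ("2/3", "5/6")
                and math.dist(neuron["xy"], focus_xy) <= radius_mm):
            neuron["g_ee"] = 0.0
            neuron["g_ei"] = 0.0
        modulated.append(neuron)
    return modulated

# Hypothetical toy population (conductance values are placeholders)
neurons = [
    {"type": "pyramidal", "layer": "2/3", "xy": (0.1, 0.0), "g_ee": 1.0, "g_ei": 1.0},
    {"type": "pyramidal", "layer": "5/6", "xy": (2.0, 0.0), "g_ee": 1.0, "g_ei": 1.0},
    {"type": "stellate",  "layer": "4",   "xy": (0.1, 0.0), "g_ee": 1.0, "g_ei": 1.0},
]
modulated = apply_attention(neurons, focus_xy=(0.0, 0.0))
```

Only the first neuron is affected: the second lies outside the modulation radius, and the third is a layer-4 stellate cell, which receives no top-down modulation in this scheme.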

To simulate the dynamics during a stimulus period, we applied a pair of bottom-up sensory stimulation currents: a stronger current of \(25 \mu\)A, and a weaker current of \(5 \mu\)A. The stronger current was directly applied to layer-4 stellate neurons in both the \(A_\text{in}\) and the \(A_\text{out}\) groups. The weaker current was applied to layer-5/6 pyramidal neurons in both groups. In addition, top-down attention modulation was applied to the system.

Figure 7.6 shows the effects of attentional modulation on neuronal spikes, LFP, STA, and STA-power in a delay period (Fig. 7.6(a)), and in a stimulation period (Fig. 7.6(b)). The top traces show the LFP of \(A_\text{in}\) and \(A_\text{out}\) neurons, respectively. Below the LFP traces are the spike trains of a pyramidal cell in each of the \(A_\text{in}\) and \(A_\text{out}\) groups. The computed STA and STA-power of the corresponding neurons in layer 2/3 are shown in the middle and bottom of the figure.

Attentional modulation effects during (a) a delay period, and (b) a stimulus period. LFP (local field potential), spikes, STA (spike-triggered averages) and STA power of attended-in and attended-out groups, calculated for the superficial layer, when the excitatory connections and inhibitory connections to each pyramidal neuron in the attended-in group within \(R_\text{modu}\) in layers 2/3 and 5/6 are reduced to zero.

The simulation results show reduced beta synchronization with attention during a delay period (under certain modulation situations, see Fig. 7.6(a)), and enhanced gamma synchronization due to attention during a stimulation period (Fig. 7.6(b)). In comparison with an idle state for which the dominant frequencies are around 17 Hz, the bottom panel of Fig. 7.6(a) shows that the dominant frequency of the oscillatory synchronization and its STA power in the \(A_\text{in}\) group is decreased by inhibition of the intracortical synaptic inputs. This result agrees qualitatively with experimental findings that low-frequency synchronization is reduced during attention. In comparison with Fig. 7.6(a), the dominant frequency of the STA power spectrum of both \(A_\text{in}\) and \(A_\text{out}\) groups in Fig. 7.6(b) is shifted towards the gamma band due to the stimulation inputs. The STA power of the dominant frequency of the \(A_\text{in}\) group is higher than that of the \(A_\text{out}\) group.

It is apparent that many factors play important roles in the network neurodynamics. These include (i) the interplay of ion channel dynamics and neuromodulation at a micro-scale; (ii) the lateral connection patterns within each layer; (iii) the feedforward and feedback connections between different layers at a meso-scale; and (iv) the top-down and bottom-up circuitries at a macro-scale. The top-down attention modulation, together with the lateral short-distance excitatory and long-range inhibitory interactions, contributes to the beta synchronization decrease during the delay period, and to the gamma synchronization enhancement during the stimulation period in the \(A_\text{in}\) group. The top-down cholinergic modulation tends to enhance the excitability of the \(A_\text{in}\) group neurons. The Mexican-hat lateral interactions mediate the competition between \(A_\text{in}\) and \(A_\text{out}\) groups.

Other simulation results (not shown) demonstrate that the top-down attentional or cholinergic effects on individual neurons, and on local and global network connections, are quite different. The effect of facilitating global extracortical connections results in a slight shift of the dominant frequency in the STA power spectrum to higher beta in both the \(A_\text{in}\) and the \(A_\text{out}\) groups. In particular, the higher beta synchronization of the \(A_\text{in}\) group is much stronger than that of the \(A_\text{out}\) group.

7.3 Externally-induced phase transitions

In addition to various internal (natural) causes of phase transitions, there are a number of ways to induce neural phase transitions externally (artificially). Here, we will exemplify this by electrical stimulation and by application of anesthetics. Applying such external inputs may give a further clue to the dynamical features of the neural system under study, in much the same way as the response of any system to an external signal may reveal important system properties.

7.3.1 Electrical stimulation

By the 18\(^\text{th}\) century, when the Italian physicists Galvani and Volta examined electrical properties of living tissues of frogs, it had become clear that nerves and muscles could respond to electrical stimulation. Since then, electricity has been used both to stimulate and to measure nerve activity in the body, and also in the brain itself. The possibility of measuring the electrical component of brain activity with external electrodes was discovered by Berger in the early 20\(^\text{th}\) century [16], and it was not difficult to see that direct electrical stimulation also could affect brain activity. A variety of electric stimulations have been used not only for investigating brain response, but also to treat mental disorders such as depression [17, 42], schizophrenia [83], and neurological disorders such as Parkinson’s disease [82]. In the following, we will give an example of how electrical stimulation can be used to study the relation between structure, dynamics, and function in a mammalian brain. A second example will illustrate how electrical stimulation is used in psychiatry.

7.3.1.1 Electrical pulses to olfactory cortex

When studying the dynamical properties of the olfactory cortex, Freeman and co-workers stimulated the lateral olfactory tract (LOT) of cats and rodents with electric shock pulses of varying amplitude and duration, then recorded the neural response via EEG [26, 27]. A strong pulse gives a biphasic response with a single fast wave moving across the surface, whereas a weak pulse results in an oscillatory response, showing up as a series of waves with diminishing amplitude. When a short pulse is applied to the LOT input corner of the network model, waves of activity move across the model cortex, consistent with corresponding global dynamic behavior of the functioning cortex. In Fig. 7.7, the experimentally measured responses are shown in the upper traces, and the model simulations are shown in the lower traces.

7.3.1.2 Electroconvulsive therapy

A more dramatic example of electrical stimulation comes from psychiatry, where electroconvulsive therapy (ECT) is one of the most successful treatments for depression and other mental disorders [17]. Despite its widespread use and successful results, it is still not known how ECT affects the brain neurologically. It has been suggested that it causes changes in the connectivity of cortical networks, either negatively, by destroying cells and/or synapses, or positively, by stimulating nerve-cell growth and sprouting [1, 86].

Clinical data show that the EEG of patients treated with ECT changes qualitatively over the treatment session, and displays some characteristic behaviors [42]. Due to the complexity of these time-series, analytical work has been difficult and scarce, and the anatomical and physiological basis for the dynamical patterns of post-ECT EEG remains to be elucidated.

In general, the EEG after ECT stimulation exhibits a specific pattern of seizures (see Fig. 7.8), but there are individual differences depending on seizure threshold, stimulus doses, and sub-diagnosis [39, 40, 42, 85]. Apparently, ECT stimulation can induce synchronous oscillations of neuronal populations over large parts of the brain where the oscillatory patterns depend on intrinsic properties, the external input and the treatment procedure. The dynamics of a recorded post-ECT, ictal, EEG time-series shifts between several phases [85]. Generally, in the clinical data one can find a sequence of phases such as preictal, polyspike (tonic), polyspike and slow-wave (clonic), termination, and postictal, respectively [17].

We apply computational methods to address the problem of how ECT might affect cortical structures and their dynamics. We have developed models of neocortical structures to investigate and suggest possible mechanisms underlying the EEG signal, and in particular, how ECT-like input might influence the dynamics of the system. We are able to simulate qualitatively certain ECT EEG patterns [39, 40]. Considering the characteristics of the dynamical shifts between several phases of ECT EEG, we assume that the phase shifts are related to intrinsic local and global network properties, physiological parameters of the cortex, and external ECT stimulus.

We use various versions of a neocortical model similar to that of Sect. 7.2.3.1, but with differently modeled neurons, since we want to compare our results with previous simulations of ECT EEG by Giannakopoulos et al. [35]. Network connectivity is varied in terms of cell types, number of neurons, and short- and long-distance connections. In particular, we investigate how a variation in the balance between excitation and inhibition affects the network dynamics. The guiding idea is that ECT primarily acts on network connectivity by stimulating nerve cell growth and sprouting [1, 86].

The model uses, as far as possible, physiological parameter values, and the same equations for describing the dynamics in all of the model variants. The network dynamics is described by Eq. (7.8), and the neurons are modeled as continuous output units of FitzHugh-Nagumo type, as described by Eqs. (7.9) and (7.10). The equations and parameter values are essentially the same as in Ref. [35], but in Eq. (7.8), we also include inputs from inhibitory neurons to other inhibitory and excitatory neurons.

Here, \(u_i\) is the postsynaptic potential of neuron i; \(v_i\) is the membrane potential at the axon initial segment; \(w_i\) is an auxiliary variable; A, b, and C are appropriate positive constants which guarantee the existence of the oscillation interval; and \(e_i^\text{ex(in)}\) is the external signal. The nonlinear function \(g(v)\) describes the relation between the pre- and postsynaptic potential of the neurons, and is monotonically increasing (\(\alpha > 0\)) and nonnegative (\(0 \le m_g < M_g\)). The elements of the connection matrix, \(c_{ik}\), describe the topology of the network, and \(p^+\) and \(p^-\) are the excitatory and inhibitory connection strengths, respectively. The neurons have time-constants \(\tau^\text{ex}\) and \(\tau^\text{in}\). The total time-delay, \(T_{ik}\), consists of a synaptic delay, \(T^\sigma\), and the dendritic and axonal propagation time from neuron k to neuron i. The synaptic membrane conductance of neuron i is denoted by \(\gamma_i\). The EEG signal is calculated as the mean membrane potential over all (excitatory) neurons.
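To make the stated properties of \(g(v)\) concrete, the sketch below uses a logistic function bounded by \(m_g\) and \(M_g\) with slope parameter \(\alpha\). The logistic form, the default parameter values, and the midpoint `v_half` are assumptions for illustration; the text only requires that \(g\) be monotonically increasing and nonnegative. The helper `eeg` implements the mean membrane potential described above.

```python
import math

def g(v, alpha=1.0, m_g=0.0, M_g=1.0, v_half=0.0):
    """Assumed logistic transfer function, bounded below by m_g and above by M_g."""
    if not (alpha > 0 and 0 <= m_g < M_g):
        raise ValueError("need alpha > 0 and 0 <= m_g < M_g")
    return m_g + (M_g - m_g) / (1.0 + math.exp(-alpha * (v - v_half)))

def eeg(membrane_potentials):
    """Model EEG: mean membrane potential over the (excitatory) neurons."""
    return sum(membrane_potentials) / len(membrane_potentials)

# Quick look at the transfer function over a moderate range of potentials
samples = [g(v) for v in (-5.0, -1.0, 0.0, 1.0, 5.0)]
```

Any other bounded, increasing function would satisfy the same constraints; the logistic form is chosen here only because it makes the roles of \(\alpha\), \(m_g\), and \(M_g\) easy to see.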

The network connectivity mimics that of the six-layered neocortex, with columns connected via long-range lateral connections, and with a circuitry inspired by Szentágothai and others [75, 77, 81]. In our simulations, we use 100 neurons, of which 80 are excitatory of two types (pyramidal and spiny stellate neurons), and 20 are inhibitory of two types (large basket neurons and short-distance inhibitory interneurons). Each layer consists of 4\(\times\)4 excitatory neurons in a square lattice with lattice spacing 0.2 mm, and four randomly distributed inhibitory neurons. The distance between layers is 0.4 mm. The “regional” network connects four columns by long-distance excitatory connections in layers 2 and 3, with a distance between columns of 4 mm. (A more thorough description of the model is given in Ref. [39].)
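The geometry of one such column can be laid out as in the sketch below, which uses the lattice spacing, layer distance, and cell counts given above. The coordinate convention, the data layout, and the uniform random placement of inhibitory cells within the lattice footprint are assumptions for this example.

```python
import random

LAYERS = [2, 3, 4, 5, 6]   # layer 1 is treated as fibers only
SPACING_MM = 0.2           # lattice spacing within a layer
LAYER_GAP_MM = 0.4         # distance between layers

def build_column(seed=0):
    """One column: per layer, a 4x4 excitatory lattice plus four inhibitory cells."""
    rng = random.Random(seed)
    side = 3 * SPACING_MM  # extent of the 4x4 lattice
    neurons = []
    for depth, layer in enumerate(LAYERS):
        z = -depth * LAYER_GAP_MM
        for ix in range(4):
            for iy in range(4):
                neurons.append({"layer": layer, "kind": "excitatory",
                                "pos": (ix * SPACING_MM, iy * SPACING_MM, z)})
        for _ in range(4):
            # assumed: inhibitory cells placed uniformly at random in the layer
            neurons.append({"layer": layer, "kind": "inhibitory",
                            "pos": (rng.uniform(0, side), rng.uniform(0, side), z)})
    return neurons

column = build_column()
n_exc = sum(1 for n in column if n["kind"] == "excitatory")
n_inh = sum(1 for n in column if n["kind"] == "inhibitory")
```

Five layers with 16 excitatory and four inhibitory cells each give the 80 excitatory and 20 inhibitory neurons stated in the text.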

In Fig. 7.9, the mean activity of simulated excitatory neurons in layers 2 to 6 is shown, going from top to bottom (layer 1 is considered to consist of fibers only). The duration of the ECT-like input is 200 ms. As seen from the figure, the neurons in each layer begin to oscillate synchronously during the ECT stimulation, but the collective oscillatory patterns vary from layer to layer, depending on the difference in connectivity.

Network response to simulated 200-ms ECT stimulus. From top to bottom, panels show mean membrane potential of excitatory neurons in layers 2–6 respectively. (a) Effect of density and long-distance inhibitory connections; (b) effect of long-distance excitatory lateral connections. Scale for y-axis is arbitrary.

In the left-hand traces of Fig. 7.9, the simulation shows the neural dynamics resulting from long-range inhibitory connections between basket cells in layer 3 of the four columns. In these traces, the mean membrane potential shows rather strong phase shifts in layers 2 and 3 due to the long-distance inhibitory connections in layer 3. In layer 4, mean membrane potential decreased abruptly, long before the ECT stimulus had ended. After the ECT input had ended, oscillations died out immediately in this layer, due to the lack of lateral excitatory connections here. The synchronous oscillations are comparatively strong in layers 5 and 6 due to a reduced neuronal density in these layers.

In the right-hand side of Fig. 7.9, we have replaced the long-range inhibitory connections by long-range excitatory lateral connections between the four pyramidal neurons in the centers of each column within layers 2 and 3. After the ECT stimulation, the synchronous oscillations in layers 2 and 3 show fewer phase shifts between high and low amplitude due to the long-distance excitatory connections. The activity in layer 4 is almost the same as for the case of long-range inhibition. In layers 5 and 6, the mean membrane potential shows more prominent phase shifts between synchronous and desynchronous oscillation after the ECT stimulus has ended.

When we decrease the excitatory connection strength of the network, the synchronous oscillations decrease in each layer. (The network dynamics can also change dramatically if, for example, the time-constants of excitatory and/or inhibitory neurons are changed slightly.)

These studies demonstrate that the collective network dynamics varies with connection topology, neuron density in different layers, the balance between excitatory and inhibitory strength, neuronal intrinsic oscillatory properties, and external input.

Clinical EEG data from a series of six consecutive treatments [17] show a transition from large amplitude oscillatory activity with apparent phase shifts, to low amplitude oscillations with fewer phase shifts. Comparing the model results of Fig. 7.9 with these findings, we may assume that the ECT stimuli could form new long-distance excitatory connections, as these lead to fewer phase shifts, while long-distance inhibitory connections induce more phase shifts. These results support the notion that ECT stimuli can induce regeneration of neurons and the formation of new connections.

7.3.2 Anesthetic-induced phase transitions

Another way of artificially inducing phase transitions in cortical neurodynamics is by using neuroactive drugs, such as certain kinds of anesthetics and anti-epileptics, which clearly can induce transitions between mental states. An important principle in the action of these drugs is the selective blocking or activation of ion channels, which will have differing effects on the neurodynamics depending on the relative selectivity and intrinsic network activity [9, 48, 80]. Likewise, up-regulation of Na and K channels will induce different activity patterns, depending on their relative densities in the cell membrane.

The permeability constants, \(P^*_\text{Na}\) and \(P^*_\text{K}\) (defined as the permeability values for fully open ion channels), depend on the density of ion channels in the cell membrane, so they will be referred to as channel densities here. It has been shown that different combinations of these densities cause different oscillatory behaviors in single-cell dynamics at constant stimulation [8]. There are also combinations of Na and K channel density (\(P^*_\text{Na}/P^*_\text{K}\)) for which there are no oscillations at all.

If the stimulus applied to a given neuron is too strong, the potential cannot drop to the resting potential, and the neuron is not able to maintain an oscillatory activity; whereas if the stimulus is too weak, the neuron cannot be driven above the oscillation threshold. Both the upper and lower limit of the stimulus interval for which a neuron oscillates depend on the \(P^*_\text{Na}/P^*_\text{K}\) ratio.

By constructing computational network models of neurons with different \(P^*_\text{Na}/P^*_\text{K}\) values, we investigate how the network dynamics depend on the density of ion channels at the single-neuron level, thus relating microscopic properties of single neurons to mesoscopic brain dynamics. This is based on the notion that general anesthetics function by blocking specific K channels, thus shifting the affected neurons towards a larger Na:K permeability ratio [9, 33, 47].

7.3.2.1 Neural network model with spiking neurons

In this study, we use a neural network model with spiking neurons described by Frankenhaeuser-Huxley (FH) equations [8]; these deviate slightly from the classical Hodgkin-Huxley formalism, but are more accurate for cortical neurons and better for our purpose here. In our simulations, the only free parameters for the neuronal model are the permeability values (channel densities) \(P^*_\text{Na}\) and \(P^*_\text{K}\). Using this model, we may study the effects of changes in ion-channel composition on the network dynamics as an assumed effect of certain anesthetics.

As a global activity measure (comparable to EEG), we use the arithmetic-mean field potential. Our network model here consists of 6\(\times\)6 neurons, arranged in a square lattice and connected in an all-to-all manner. We use a distance-dependent connectivity, with the connection strength decreasing with distance as \(w \sim 1/r\).

Six of the 36 network neurons are inhibitory and are homogeneously distributed over the lattice (with periodic boundary conditions). This is motivated by the fact that about 20% of the neocortical neurons in the mammalian brain are inhibitory (as in the previous models described above).
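The network layout described above can be sketched as follows. The unit lattice spacing, the base weight `W0`, the particular placement of the six inhibitory neurons, and the use of wrap-around (torus) distances as the reading of "periodic boundary conditions" are assumptions for this example; only the 6\(\times\)6 size, the all-to-all coupling, the \(1/r\) falloff, and the number of inhibitory neurons come from the text.

```python
import math

N_SIDE = 6
W0 = 1.0  # hypothetical base weight at unit distance

def torus_distance(a, b, n=N_SIDE):
    """Lattice distance with wrap-around (assumed periodic boundaries)."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    return math.hypot(min(dx, n - dx), min(dy, n - dy))

sites = [(x, y) for x in range(N_SIDE) for y in range(N_SIDE)]

# Assumed even placement: one inhibitory neuron per row, shifted two columns per row
inhibitory = {(x, (2 * x) % N_SIDE) for x in range(N_SIDE)}

def weight(post, pre):
    """All-to-all coupling with strength ~ 1/r; sign set by the presynaptic cell."""
    if post == pre:
        return 0.0
    sign = -1.0 if pre in inhibitory else 1.0
    return sign * W0 / torus_distance(post, pre)

w = [[weight(post, pre) for pre in sites] for post in sites]
```

Under the wrap-around assumption every neuron sees the same distribution of distances to its 35 partners, so no neuron sits at an "edge" of the lattice.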

The synaptic input enters the single neuron model [8] as an additional input current, \(I_i(t)\):

where \(w_{ij}\) is the synaptic weight between the neurons i and j, \(t_\text{syn}\) (1 ms) is the synaptic delay, and \(\tau_s\) is the synaptic (membrane) time-constant (30 ms). The time \(t^{(f)}\) refers to the arrival of an action potential.
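One common way to realize such a synaptic input is to sum a decaying kernel over all delayed presynaptic spikes. The sketch below uses the delay and time-constant given above, but the exponential kernel shape and the function name are assumptions; the chapter's own expression may use a different kernel.

```python
import math

T_SYN_MS = 1.0   # synaptic delay given in the text
TAU_S_MS = 30.0  # synaptic time-constant given in the text

def synaptic_current(t_ms, weights_i, spike_times):
    """Sum over presynaptic neurons j and their spike times t_f of
    w_ij * kernel(t - t_f - t_syn), with an ASSUMED exponential kernel."""
    total = 0.0
    for w_ij, times_j in zip(weights_i, spike_times):
        for t_f in times_j:
            s = t_ms - t_f - T_SYN_MS
            if s >= 0.0:  # a spike has no effect before the delay has elapsed
                total += w_ij * math.exp(-s / TAU_S_MS)
    return total

# One presynaptic neuron with weight 2.0 that fired at t = 0 ms
before_delay = synaptic_current(0.5, [2.0], [[0.0]])
at_arrival = synaptic_current(1.0, [2.0], [[0.0]])
one_tau_later = synaptic_current(31.0, [2.0], [[0.0]])
```

In this form a negative weight gives an inhibitory contribution, so the same function covers both excitatory and inhibitory presynaptic neurons.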

Thus, in a network, the state equation for a neuron, with membrane potential v and capacitance \(C_M\), becomes a sum of various currents:

Here, \(I_S\) is the stimulation current, \(I_G\) is Gaussian noise, \(I_\text{Na}\) is the initial transient current through Na channels, \(I_\text{K}\) is the delayed sustained current through K channels, and \(I_\text{L}\) is the leak current. \(P^*_\text{Na}\) and \(P^*_\text{K}\) enter in the expressions for \(I_\text{Na}\) and \(I_\text{K}\), respectively. (For more details of the model, see Ref. [45].)

7.3.2.2 Variation of network dynamics with channel-density composition

The network dynamics depends on the subcellular densities of Na and K channels (\(P^*_\text{Na}\) and \(P^*_\text{K}\)), and on the synaptic weight factor (w) at the network level; these are the only free parameters in our analysis. All neurons have the same initial conditions, but the spatial homogeneity is broken by the random component in the input. (For every run, the stimulus \(I_S\) is given a value close to the oscillation threshold in each particular case.)

The network consists of inhibitory and excitatory neurons with different \(P^*_\text{Na}/P^*_\text{K}\) ratios. Keeping the excitatory neurons fixed at the channel density values, \(P^*_\text{Na}/P^*_\text{K} = 15/7.5\), we vary the K-channel density in the inhibitory neurons. We model the effect of anesthetic by assuming that it blocks specific K channels, primarily in the inhibitory neurons. The arithmetic mean of the transmembrane potential, taken over all neurons, is used as a measure of the collective network dynamics (the “EEG”).

The strength of the stimulus required to make a single neuron oscillate varies depending on the \(P^*_\text{Na}\) and \(P^*_\text{K}\) values for that neuron. There is a general trend that oscillation frequency increases with stimulus, and that neurons with low \(P^*_\text{K}\) values have a low oscillation threshold, but are also more sensitive to over-stimulation than neurons with high \(P^*_\text{K}\) values. (Here, we want to study the effect that these findings have at a network level, where the stimulus varies over time due to synaptic interactions.)

Since K channels are important regulators of firing patterns, and since K channels have been suggested to be the main targets for general anesthetics and anti-epileptics [33, 47], we explored the neurodynamical effects of reducing the K-channel density, in particular for the inhibitory neurons. In order to limit the number of simulations, we keep \(P^*_\text{Na}\) constant (at 15), varying only \(P^*_\text{K}\). Fig. 7.10 shows a time series for a network, where the excitatory neurons have a low K-channel density (\(P^*_\text{K} = 3.0\)). The inhibitory neurons initially have a high density of K channels (\(P^*_\text{K} = 12.5\)), but the K channels were blocked in steps every 1000 ms, by decreasing \(P^*_\text{K}\) and shifting the inhibitory neurons from \(P^*_\text{K} = 12.5\), to \(P^*_\text{K} = 7.5\), and finally to \(P^*_\text{K} = 3.0\). When the inhibitory neurons (middle trace) reach \(P^*_\text{K} = 3.0\), both inhibitory and excitatory neurons alternate between periods of high amplitude activity, and periods with over-stimulation and potential drop. The mean network dynamics (bottom trace) is shifted towards a qualitatively different dynamical pattern. In this case, it is clear that the blocking of K channels in inhibitory neurons transforms unsynchronized, high-frequency oscillatory activity to an enveloped and steady slow-wave oscillation, qualitatively mimicking the transformation of EEG-patterns when applying general anesthetics [55].

[Color plate] Model response to stepped reductions in K-channel density in inhibitory neurons. For excitatory neurons, the densities of Na and K channels are kept fixed at the constant ratio \(P^*_\text{Na}/P^*_\text{K} = 15/3\), while for inhibitory neurons the ratio is stepped consecutively from \(P^*_\text{Na}/P^*_\text{K} = 15/12.5\), to \(15/7.5\), and finally to \(15/3\), by decreasing \(P^*_\text{K}\) every 1000 ms. The two upper time-series show the activity of (a) an excitatory neuron (red trace), and (b) an inhibitory neuron (blue trace); (c) lower trace (black) shows the network mean.
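The stepping protocol for the inhibitory neurons can be written as a simple piecewise-constant schedule. The function and constant names below are hypothetical, and the sketch only encodes the parameter schedule (values and 1000-ms step length from the text), not the neuron model itself.

```python
P_NA = 15.0                    # Na-channel density, held constant
P_K_STEPS = [12.5, 7.5, 3.0]   # K-channel densities of the inhibitory neurons
STEP_MS = 1000.0               # duration of each step

def p_k_inhibitory(t_ms):
    """K-channel density of the inhibitory neurons at simulation time t_ms."""
    step = int(t_ms // STEP_MS)
    step = max(0, min(step, len(P_K_STEPS) - 1))  # hold first/last value outside range
    return P_K_STEPS[step]

def na_k_ratio(t_ms):
    """Na:K permeability ratio of the inhibitory neurons at time t_ms."""
    return P_NA / p_k_inhibitory(t_ms)

schedule = [(t, p_k_inhibitory(t)) for t in (0.0, 999.0, 1000.0, 2000.0, 2999.0)]
```

Each step lowers \(P^*_\text{K}\) and therefore raises the Na:K ratio, from 1.2 to 2 to 5, which is the direction of change attributed to K-channel block in the text.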

These simulations show that the mesoscopic network dynamics can be shifted into, or out of, different oscillatory states by small changes in the ion-channel densities, even for single neurons. Similar effects can also be obtained by changing connection strengths in the network model, which we have shown elsewhere [45]. Both of these phenomena are of pharmacological interest, since some drugs can affect the permeability of ion channels also in the synapses [48]. Our simulations demonstrate that the blocking of specific K channels, as a possible effect of some anesthetics, can change the global activity from high-frequency (awake) states to low-frequency (anesthetized) states, as apparent in recorded and simulated EEG.

7.4 Discussion

In this chapter, I have given a few examples of how computational models can be used to study phase transitions in mesoscopic brain dynamics. As examples of internally/naturally induced phase transitions, I have presented some models with intrinsic noise, neuromodulation, and attention, which in fact, may all be related. In particular, neuromodulation seems to be closely linked to the level of arousal and attention. It may also affect the internal noise level, e.g., by varying the threshold for firing. As examples of externally/artificially induced phase transitions, I have discussed electrical stimulation—both as electric shocks applied directly onto the olfactory bulb and cortex in an experimental setting with animals, and as electroconvulsive therapy applied in a clinical situation in treatment of psychiatric disorders. The final example was a network model testing how certain anesthetics may act on the brain dynamics through selective blocking of ion channels.

In all cases, the mesoscopic scale of cortical networks has been in focus, with an emphasis on network connectivity. The objective has been to investigate how structure is related to dynamics, and how the dynamics at one scale is related to that at another. Other than in passing, we have not discussed how structure and dynamics are related to function, since this is beyond the scope of this chapter, but the general notion is that mesoscopic brain dynamics reflects mental states and processes.

Our model systems have been paleocortical structures, the olfactory cortex and hippocampus, as well as neocortical structures, exemplified by the visual cortex. These structures display a complex dynamics with prominent oscillations in certain frequency bands, often interrupted by irregular, chaotic-like activity. In many cases, it seems that the collective cortical dynamics after external stimulation results from some kind of “resonance” between network connectivity (with negative and positive feedback loops), neuronal oscillators, and external input.

While our models are often aimed at mimicking specific cortical structures and network circuitry at a mesoscopic level, in some cases there is less realism in the connectivity than in the microscopic level of single neurons. The reason for this is that the objective in those cases has been to link the neuronal spiking activity with the collective activity of inter-connected neurons, irrespective of the detailed network structure. Model simulations then need to be compared with spike trains of single neurons, as captured with microelectrodes or patch-clamp techniques. In cases where the network connectivity is in focus, the network nodes may represent large populations of neurons, and their spiking activity is represented by a collective continuous output, more related to LFP or EEG activity.

Models should always be adapted to the problem they are supposed to address, with an appropriate level of detail at the spatial and temporal scales considered. In general, it could be wise to apply Occam’s razor in the modeling process, aiming at a model as simple as possible, and with few (unspecified) parameters. For the brain, due to its great complexity and our still rather fragmented knowledge, it is particularly hard to find an appropriate level of description and to decide which details to include. For example, different models may address the problem of neural computation at different levels, from the single-neuron level [57] to cortical networks and areas [30, 74, 79, 89]. Even though the emphasis may be put at different levels, the different models can often be regarded as complementary descriptions, rather than mutually exclusive. At this stage, it is in general not possible to say which models give the best description, for example when trying to link neural and mental processes, in particular with regard to the significance of phase transitions.

Even though attempts have been made, it is a struggle to include several levels of description in a single model, relating the activity at the different levels to each other [4, 10, 30, 31, 74, 88, 89]. In fact, relating different spatial and temporal scales in the nervous system, and linking them to mental processes, can be seen as among the greatest challenges to modern neuroscience.

In the present work, I have focused on how to model phase transitions in mesoscopic brain dynamics, relating the presentation to anatomical and physiological properties, and I have not so much discussed the functional significance of such transitions, which has been done more thoroughly elsewhere [41, 45, 60, 63, 64]. Below, I will just briefly discuss some of these ideas.

The main question concerns the functional significance of the complex cortical neurodynamics described and simulated above, and in particular, the significance of the phase transitions between various oscillatory states and chaotic or noisy states. The electrical activity of the brain, as captured with EEG, is considered by many to be an epiphenomenon, without any information content or functional significance, but this view is challenged by the bulk of research presented, referenced, and discussed here.

Our computer simulations support the view that the complex dynamics makes the neural information processing more efficient, providing a fast and accurate response to external situations. For example, with an initial chaotic-like state, sensitive to small variations in the input signal, the system can rapidly converge to a limit-cycle attractor memory state [61, 62, 90]. Perhaps the most direct effect of cortical oscillations could be to enhance weak signals and speed up information processing, but they may also reflect collective, synchronous activity associated with various cognitive functions, including segmentation of sensory input, learning, perception, and attention.

In addition, a “recruitment” of neurons in oscillatory activity can eliminate the negative effects of noise in the input, by cancelling out the fluctuations of individual neurons. However, noise can also have a positive effect on system performance, as will be discussed briefly below. Finally, from an energy point of view, oscillations in the neuronal activity should be more efficient than if a static neuronal output (from large populations of neurons) were required.
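The noise-cancelling effect of recruitment can be made explicit under a simplifying assumption that is not part of the models discussed here: each of the N recruited neurons carries the same signal s plus an independent fluctuation ξ_i with zero mean and variance σ². The symbols s, ξ_i, σ and N are introduced only for this illustration.

```latex
\[
\bar{x} = \frac{1}{N}\sum_{i=1}^{N}\bigl(s + \xi_i\bigr),
\qquad
\operatorname{Var}(\bar{x})
  = \frac{1}{N^{2}}\sum_{i=1}^{N}\operatorname{Var}(\xi_i)
  = \frac{\sigma^{2}}{N}.
\]
```

Under this assumption the standard deviation of the population average falls as σ/√N, so recruiting more neurons reduces the fluctuations of the collective output. If the fluctuations are correlated across neurons, the reduction is weaker than this estimate suggests.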

The intrinsic noise found in all neural systems seems inevitable, but it may also have a functional role, being advantageous to the system. What, then, could be the functional role of the microscopic noise on the meso- and macroscopic dynamics? What, if any, could be the role of spontaneous activity in the brain? A traditional answer is that it generates baseline activity necessary for neural survival, and that it perhaps also brings the system closer to threshold for transitions between different neurodynamical states. It has also been suggested that spontaneous activity shapes synaptic plasticity during ontogeny (see references in Ref. [54]), and it has even been argued that spontaneous activity plays a role for conscious processes [7, 11, 12, 70].

Internal, system-generated fluctuations can apparently create state transitions, breaking down one kind of order and replacing it with a new kind of order. Externally generated fluctuations can cause increased sensitivity in certain (receptor) cells through the phenomenon of stochastic resonance (SR) [2, 10, 20, 66, 69, 71]. The typical example of this is when a subthreshold signal, with the addition of noise, overcomes a threshold, which results in an increased signal-to-noise ratio.
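The threshold example of stochastic resonance can be illustrated with a minimal sketch. It is not the cortical network model used in this chapter: it is a single threshold unit driven by a subthreshold sine wave plus Gaussian noise, and every name and parameter value (`threshold_response`, the amplitude 0.8, the threshold 1.0, the period of 100 steps) is an assumption chosen for illustration. The correlation between the signal and the unit's binary output serves as a simple stand-in for a signal-to-noise measure.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0.0 or syy == 0.0:
        return 0.0
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sxx * syy)


def threshold_response(noise_sd, n_steps=20000, amplitude=0.8,
                       threshold=1.0, period=100, seed=0):
    """Correlation between a subthreshold sine and the output of a threshold unit.

    The sine alone never reaches the threshold (amplitude < threshold),
    so any output is produced by signal plus noise.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    signal = [amplitude * math.sin(2.0 * math.pi * t / period)
              for t in range(n_steps)]
    # The unit emits 1 whenever signal plus noise exceeds the threshold.
    output = [1.0 if s + rng.gauss(0.0, noise_sd) > threshold else 0.0
              for s in signal]
    return pearson(signal, output)


if __name__ == "__main__":
    for sd in (0.0, 0.1, 0.3, 1.0, 5.0):
        print(f"noise sd = {sd:4.1f}  correlation = {threshold_response(sd):.3f}")
```

Without noise the unit never fires and the correlation is zero; with very strong noise the output is dominated by the noise and the correlation is low. In between, a moderate noise level lets the output follow the signal best, which is the non-monotonic dependence characteristic of SR.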

The computer simulations we have described above demonstrate that “microscopic” noise can indeed induce global synchronous oscillations in cortical networks and shift the system dynamics from one dynamical state to another. This in turn can change the efficiency of the information processing of the system. Thus, in addition to the (pseudo-)chaotic network dynamics, the noise produced by a few (or many) neurons could make the system more flexible, increasing its responsiveness and preventing it from getting stuck in an undesired oscillatory mode. In particular, we have shown that spontaneous activity can facilitate learning and associative memory. Indeed, simulations with our paleocortical model demonstrated that an increased neuronal noise level can reduce recall time in associative memory tasks, i.e., the time it takes for the system to recognize a distorted input pattern as one of the stored patterns. Consonant with SR theory [2, 20, 71], we found optimal noise values for which the recall time reached a minimum [61, 62, 69].

Our simulations also show that neuromodulatory control can be used to regulate the accuracy or rate of the recognition process, depending on current demands. Apparently, the complex dynamics of the brain can be regulated by neuromodulators, and perhaps also by noise. By such control, the neural system could be put into an appropriate state for the right response or action, depending on environmental demands. Operating with a complex neurodynamics, shifting between various oscillatory and (pseudo-)chaotic states, the brain seems to balance between stability and flexibility, increasing performance efficiency and survival probability for the individual.

The kind of phase transitions discussed in this work may reflect transitions between different cognitive and mental levels or states, for example corresponding to various stages of sleep, anesthesia, or wake states with different levels of arousal, which in turn may affect the efficiency and rate of information processing. In some of our previous work, we have also added gap junctions to the ordinary synaptic connections in our paleocortical model, causing rapid synchronization of the network dynamics, and thus further improving neural information processing in associative memory tasks [13, 14].

Even though we are still at an early stage, I believe a combination of computational analysis and modeling methods of the kind discussed here can serve as an essential complement to experimental and clinical methods in furthering our understanding of neural and mental processes. In particular, when concerned with the inter-relation between structure, dynamics and function of the brain and its cognitive functions, this method may be the best way to make progress. The study of phase transitions in the brain dynamics seems to be one of the most fruitful approaches in this respect.

References

Altar, C.A., Laeng, P., Jurata, L.W., Brockman, J.A., Lemire, A., Bullard, J., Bukhman, Y.V., Young, T.A., Charles, V., Palfreyman, M.G.: Electroconvulsive seizures regulate gene expression of distinct neurotrophic signaling pathways. J. Neurosci. 24, 2667–2677 (2004), doi:10.1523/jneurosci.5377-03.2004

Anishchenko, V.S., Neiman, A.B., Safonova, M.A.: Stochastic resonance in chaotic systems. J. Stat. Phys. 70, 183–196 (1993), doi:10.1007/bf01053962

Arbib, M.A. (ed.): The Handbook of Brain Theory and Neural Networks. MIT Press, Cambridge, Mass. (1995)

Arbib, M.A., Érdi, P., Szentágothai, J.: Neural Organization: Structure, Function, and Dynamics. MIT Press, Cambridge, Mass. (1998)

Århem, P., Blomberg, C., Liljenström, H. (eds.): Disorder Versus Order in Brain Function. World Scientific, London (2000)

Århem, P., Braun, H., Huber, M., Liljenström, H.: Nonlinear state transitions in neural systems: From ion channels to networks. In: H. Liljenström, U. Svedin (eds.), Micro - Meso - Macro: Addressing Complex Systems Couplings, pp. 37–72, World Scientific, London (2005)

Århem, P., Johansson, S.: Spontaneous signalling in small central neurons: Mechanisms and roles of spike-amplitude and spike-interval fluctuations. Int. J. Neural Syst. 7, 369–376 (1996), doi:10.1142/s0129065796000336

Århem, P., Klement, G., Blomberg, C.: Channel density regulation of firing patterns in a cortical neuron model. Biophys. J. 90, 4392–4404 (2006)

Århem, P., Klement, G., Nilsson, J.: Mechanisms of anesthesia: Towards integrating network, cellular and molecular modeling. Neuropsychopharmacology 28, S40–S47 (2003), doi:10.1038/sj.npp.1300142

Århem, P., Liljenström, H.: Fluctuations in neural systems: From subcellular to network levels. In: F. Moss, S. Gielen (eds.), Handbook of Biological Physics, vol. 4, pp. 83–129, Elsevier, Amsterdam (2001)

Århem, P., Liljenström, H.: Beyond cognition - on consciousness transitions. In: H. Liljenström, P. Århem (eds.), Consciousness Transitions - Phylogenetic, Ontogenetic and Physiological Aspects, pp. 1–25, Elsevier, Amsterdam (2007)

Århem, P., Lindahl, B.I.B.: Neuroscience and the problem of consciousness: Theoretical and empirical approaches - an introduction. Theor. Med. 14, 77–88 (1993), doi:10.1007/bf00997268

Aronsson, P., Liljenström, H.: Non-synaptic modulation of cortical network dynamics. Neurocomputing 32-33, 285–290 (2000), doi:10.1016/s0925-2312(00)00176-4

Aronsson, P., Liljenström, H.: Effects of non-synaptic neuronal interaction in cortex on synchronization and learning. Biosystems 63, 43–56 (2001), doi:10.1016/s0303-2647(01)00146-0

Basu, S., Liljenström, H.: Spontaneously active cells induce state transitions in a model of olfactory cortex. Biosystems 63, 57–69 (2001)

Berger, H.: Über das Elektrenkephalogramm des Menschen. Arch. Psychiatr. Nervenkrankh. 87, 527–570 (1929)

Beyer, J.L., Weiner, R.D., Glenn, M.D.: Electroconvulsive Therapy. American Psychiatric Press, London (1998)

Biedenbach, M.A.: Effects of anesthetics and cholinergic drugs on prepyriform electrical activity in cats. Exp. Neurol. 16, 464–479 (1966), doi:10.1016/0014-4886(66)90110-5

Börgers, C., Epstein, S., Kopell, N.J.: Background gamma rhythmicity and attention in cortical local circuits: A computational study. Proc. Natl. Acad. Sci. USA 102(19), 7002–7007 (2005), doi:10.1073/pnas.0502366102

Bulsara, A., Jacobs, E.W., Zhou, T., Moss, F., Kiss, L.: Stochastic resonance in a single neuron model: Theory and analog simulation. J. Theor. Biol. 152, 531–555 (1991), doi:10.1016/s0022-5193(05)80396-0

Cobb, S.R., Buhl, E.H., Halasy, K., Paulsen, O., Somogyi, P.: Synchronization of neuronal activity in hippocampus by individual GABAergic interneurons. Nature 378, 75–78 (1995), doi:10.1038/378075a0

Corchs, S., Deco, G.: Large-scale neural model for visual attention: Integration of experimental single-cell and fMRI data. Cerebr. Cortex 12, 339–348 (2002)

Crick, F., Koch, C.: Towards a neurobiological theory of consciousness. Semin. Neurosci. 2, 263–275 (1990)

Eckhorn, R., Bauer, R., Jordan, W., Brosch, M., Kruse, W., Munk, M., Reitboeck, H.J.: Coherent oscillations: A mechanism of feature linking in the visual cortex? Biol. Cybern. 60, 121–130 (1988), doi:10.1007/bf00202899

FitzHugh, R.: Mathematical models of threshold phenomena in the nerve membrane. Bull. Math. Biophys. 17, 257–278 (1955), doi:10.1007/bf02477753

Freeman, W.J.: Distribution in time and space of prepyriform electrical activity. J. Neurophysiol. 22, 644–665 (1959)

Freeman, W.J.: Linear models of impulse inputs and linear basis functions for measuring impulse responses. Exp. Neurol. 10, 475–492 (1964), doi:10.1016/0014-4886(64)90046-9

Freeman, W.J.: Nonlinear gain mediating cortical stimulus-response relations. Biol. Cybern. 33, 237–247 (1979), doi:10.1007/bf00337412

Freeman, W.J.: Societies of Brains - A Study in the Neuroscience of Love and Hate. Lawrence Erlbaum, Hillsdale, NJ (1995)

Freeman, W.J.: Neurodynamics: An Exploration in Mesoscopic Brain Dynamics. Springer, Berlin (2000)

Freeman, W.J.: The necessity for mesoscopic organization to connect neural function to brain function. In: H. Liljenström, U. Svedin (eds.), Micro - Meso - Macro: Addressing Complex Systems Couplings, pp. 25–36, World Scientific, London (2005)

Freeman, W.J.: Mass Action in the Nervous System. Academic Press, New York (1975)

Friedrich, P., Urban, B.W.: Interaction of intravenous anesthetics with human neuronal potassium currents in relation to clinical concentrations. Anesthesiology 91, 1853–1860 (1999)

Fries, P., Reynolds, J.H., Rorie, A.E., Desimone, R.: Modulation of oscillatory neuronal synchronization by selective visual attention. Science 291, 1560–1563 (2001), doi:10.1126/science.1055465

Giannakopoulos, F., Bihler, U., Hauptmann, C., Luhmann, H.: Epileptiform activity in a neo-cortical network: a mathematical model. Biol. Cybern. 85, 257–268 (2001), doi:10.1007/s004220100257

Gordon, E. (ed.): Integrative Neuroscience: Bringing Together Biological, Psychological and Clinical Models of the Human Brain. Harwood Academic Press, New York (2000)

Gray, C.M., König, P., Engel, A.K., Singer, W.: Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature 338, 334–337 (1989), doi:10.1038/338334a0

Gray, C.M., Singer, W.: Stimulus-specific neuronal oscillations in orientation columns of cat visual cortex. Proc. Natl. Acad. Sci. USA 86, 1698–1702 (1989)

Gu, Y., Halnes, G., Liljenström, H., von Rosen, D., Wahlund, B., Liang, H.: Modelling ECT effects by connectivity changes in cortical neural networks. Neurocomputing 69, 1341–1347 (2006), doi:10.1016/j.neucom.2005.12.104

Gu, Y., Halnes, G., Liljenström, H., Wahlund, B.: A cortical network model for clinical EEG data analysis. Neurocomputing 58-60, 1187–1196 (2004), doi:10.1016/j.neucom.2004.01.184

Gu, Y., Liljenström, H.: A neural network model of attention-modulated neurodynamics. Cognitive Neurodynamics 1, 275–285 (2007), doi:10.1007/s11571-007-9028-7

Gu, Y., Wahlund, B., Liljenström, H., von Rosen, D., Liang, H.: Analysis of phase shifts in clinical EEG evoked by ECT. Neurocomputing 65, 475–483 (2005), doi:10.1016/j.neucom.2004.11.004

Haken, H.: Synergetics: An Introduction. Springer-Verlag, Berlin (1983)

Haken, H.: Principles of Brain Functioning. Springer, Berlin (1996)

Halnes, G., Liljenström, H., Århem, P.: Density dependent neurodynamics. Biosystems 89, 126–134 (2007), doi:10.1016/j.biosystems.2006.06.010

Hamker, F.H.: A dynamic model of how feature cues guide spatial attention. Vision Res. 44, 501–521 (2004), doi:10.1016/j.visres.2003.09.033

Harris, T., Shahidullah, M., Ellingson, J., Covarrubias, M.: General anesthetic action at an internal protein site involving the S4-S5 cytoplasmic loop of a neuronal K+ channel. J. Biol. Chem. 275, 4928–4936 (2000)

Hille, B.: Ion Channels of Excitable Membranes. Sinauer, Sunderland, Mass., 3rd edn. (2001)

Hodgkin, A.L., Huxley, A.F.: A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500–544 (1952)

Hopfield, J.J.: Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 79, 2554–2558 (1982)

Hopfield, J.J.: Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. USA 81, 3088–3092 (1984)

Huber, M.T., Braun, H.A., Krieg, J.C.: Consequences of deterministic and random dynamics for the course of affective disorders. Biol. Psychiatr. 46, 256–262 (1999), doi:10.1016/s0006-3223(98)00311-4

Huber, M.T., Braun, H.A., Krieg, J.C.: Effects of noise on different disease states of recurrent affective disorders. Biol. Psychiatr. 47, 634–642 (2000), doi:10.1016/s0006-3223(99)00174-2

Johansson, S., Århem, P.: Single-channel currents trigger action potentials in small cultured hippocampal neurons. Proc. Natl. Acad. Sci. USA 91, 1761–1765 (1994)

John, E.R., Prichep, L.S.: The anesthetic cascade: A theory of how anesthesia suppresses consciousness. Anesthesiology 102, 447–471 (2005)