Abstract

Earlier, in Chaps. 3 and 7, several types of models for lifetime data were discussed through their quantile functions. These are candidate distributions in specific situations. The choice among them, or of a new model, is dictated by how well the model explains the data-generating mechanism and how well it satisfies other criteria such as goodness of fit. Once an initial model has been chosen, its adequacy must be tested against the observed data. This is done by first estimating the parameters of the model and then carrying out a goodness-of-fit test. This chapter addresses the problem of estimation as well as some other modelling aspects.

In choosing the estimates, our basic objective is to obtain values that are as close as possible to the true values of the model parameters. One method is to seek estimates that match the basic characteristics of the model with those of the sample. This includes the method of percentiles and the method of moments based on conventional moments, L-moments and probability weighted moments. These methods of estimation are explained, along with a discussion of the properties of the resulting estimates. In the quantile form of analysis, the method of maximum likelihood can also be employed, and we describe how it proceeds when there is no tractable distribution function. Many functions required in reliability analysis are estimated by nonparametric methods. These include the quantile function itself and other functions such as the quantile density function, hazard quantile function and percentile residual quantile function. We review some important results for these cases that give the asymptotic distributions of the estimates and the proximity of the proposed estimates to the true values.


9.1 Introduction

In Chaps. 3 and 7, we have seen several types of models, specified by their quantile functions, that can provide adequate representations of lifetime data. These are candidate distributions in specific real situations. The choice among them, or of a new model, is dictated by how well the model explains the data-generating mechanism and how well it satisfies other criteria such as goodness of fit. Perhaps the most important requirement in any modelling problem is that the chosen lifetime distribution captures the failure patterns inherent in the empirical data. Often, the features of the failure mechanism are assessed from the data with the aid of the ageing concepts discussed earlier in Chap. 5. For instance, the relevant feature could be the shape of the hazard quantile function or of the mean residual quantile function, assessed from a plot based on the observed failure times. Based on this preliminary knowledge, the choice of distribution can be restricted to the corresponding ageing class discussed in Chap. 4. Once a suitable model has been chosen as an initial candidate, the next problem is to test its adequacy against the observed failure times. This is done by first estimating the parameters of the distribution and then carrying out a goodness-of-fit test. Alternatively, nonparametric methods can be employed to infer various reliability characteristics. In this chapter, we address both general parametric methods and nonparametric procedures from a quantile-based perspective.

Our basic objective in estimation is to find estimates that are as close as possible to the true values of the model parameters. Different criteria ensure proximity between the estimate and the true parameter value, and accordingly different approaches can be prescribed to meet the desired criterion. One approach is to seek estimates by matching basic characteristics of the chosen model, such as location, dispersion, skewness, kurtosis and tail behaviour, with those of the sample. This includes the method of percentiles and the method of moments based on conventional moments, L-moments and probability weighted moments. A second category of estimation procedures is governed by optimality conditions that make the difference between the fitted model and the observed data as small as possible, or that yield the estimates under which the observed data are most probable. In the following sections, we describe various methods of estimation as well as the properties of the resulting estimates.

9.2 Method of Percentiles

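Recall from Chap. 1 that the pth percentile of a set of observations is the value that has 100p % of the values below it and 100(1 − p) % of the values above it. Let \(X_{1},X_{2},\ldots,X_{n}\) be a random sample from a population with distribution function F(x; Θ), or equivalently quantile function Q(u; Θ), where Θ is a vector of parameters consisting of one or more elements. The sample observations are arranged in order of magnitude, with \(X_{r:n}\) being the rth order statistic. Then, the sample (empirical) distribution function is defined as

$$\displaystyle{F_{n}(x) = \left \{\begin{array}{@{}l@{\quad }l@{}} 0, \quad &x < X_{1:n} \\ \frac{r} {n},\quad &X_{r:n} \leq x < X_{r+1:n},\;r = 1,2,\ldots,n - 1 \\ 1, \quad &x \geq X_{n:n}.\end{array} \right.}$$

Obviously, \(F_{n}(x)\) is the fraction of the sample observations that do not exceed x. The empirical (sample) quantile function then becomes

$$\displaystyle{\xi _{p} = Q_{n}(p) =\inf \{x : F_{n}(x) \geq p\} = X_{r:n},\quad \frac{r - 1} {n} < p \leq \frac{r} {n},\;r = 1,2,\ldots,n,\qquad (9.1)}$$

which is a step function that is constant on intervals of length \(\frac{1} {n}\). Notice that (9.1) can be interpreted as a function \(\xi _{p}\) such that the number of observations \(\leq \xi _{p}\) is \(\geq [np]\) and the number of observations \(\geq \xi _{p}\) is \(\geq [n(1 - p)]\). Thus, e.g.,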

In practice, some other methods of calculating \(\xi _{p}\) are as follows:

(i) Set \(p(n + 1) = k + a\), n being the sample size, k an integer, and 0 ≤ a < 1. Then,

$$\displaystyle{\xi _{p} = \left \{\begin{array}{@{}l@{\quad }l@{}} X_{k:n} + a(X_{k+1:n} - X_{k:n}),\quad &0 < k < n \\ X_{1:n}, \quad &k = 0 \\ X_{n:n}, \quad &k = n\end{array} \right.}$$(see Sect. 3.2.1);

(ii) In some software packages, the setting is \(1 + p(n - 1) = k + a\);

(iii) Calculate np. If it is not an integer, round it up to the next higher integer k and take \(X_{k:n}\) as the value. If np is an integer k, take

$$\displaystyle{\xi _{p} = \frac{1} {2}[X_{k:n} + X_{k+1:n}].}$$

The value \(X_{[np]+1:n}\) is popular as it assures the monotonic nature of \(\xi _{p}\), in the sense that if x is the p-quantile and y is the q-quantile with p < q, then x ≤ y.
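The rules above can be compared on a small sample. The sketch below is plain Python with hypothetical function names. To our understanding, method (ii) is also the default ('linear') rule of numpy.quantile, although the text does not say which packages it has in mind.

```python
import math


def _sorted(x):
    xs = sorted(x)
    if not xs:
        raise ValueError("empty sample")
    return xs


def q_empirical(x, p):
    """Empirical quantile (9.1): X_{k:n} with (k-1)/n < p <= k/n, i.e. k = ceil(np)."""
    xs = _sorted(x)
    n = len(xs)
    # the small tolerance keeps rounding noise such as n*p = 5.000000001 from giving k = 6
    k = min(n, max(1, math.ceil(n * p - 1e-9)))
    return xs[k - 1]


def q_interpolated(x, p, rule="p(n+1)"):
    """Methods (i) and (ii): write h = k + a and interpolate between X_{k:n} and X_{k+1:n}."""
    xs = _sorted(x)
    n = len(xs)
    h = p * (n + 1) if rule == "p(n+1)" else 1 + p * (n - 1)
    k = math.floor(h)
    a = h - k
    if k <= 0:          # below the first order statistic
        return xs[0]
    if k >= n:          # beyond the last order statistic
        return xs[-1]
    return xs[k - 1] + a * (xs[k] - xs[k - 1])


def q_averaged(x, p):
    """Method (iii): round np up if it is not an integer, otherwise average two order statistics."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie in (0, 1)")
    xs = _sorted(x)
    n = len(xs)
    np_ = n * p
    k = round(np_)
    if abs(np_ - k) < 1e-9 and 1 <= k <= n - 1:   # np is an integer k
        return 0.5 * (xs[k - 1] + xs[k])
    return xs[math.ceil(np_) - 1]


sample = [3, 1, 4, 1, 5, 9, 2, 6]
for p in (0.25, 0.5, 0.9):
    print(p, q_empirical(sample, p), q_interpolated(sample, p),
          q_interpolated(sample, p, rule="1+p(n-1)"), q_averaged(sample, p))
```

On such a small sample the four rules can differ noticeably; the differences shrink as n grows.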

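Some properties of \(\xi _{p}\) as an estimate of Q(p) are described in the following theorems.

Theorem 9.1.

If Q(p) is the unique value of x satisfying

$$\displaystyle{F(x-) \leq p \leq F(x),}$$

where F(x−) denotes the left-hand limit of F at x, then \(\xi _{p} \rightarrow Q(p)\) as \(n \rightarrow \infty \) with probability 1.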

Theorem 9.2.

Let F(x) have a continuous density f(x). If Q(p) is unique and f(Q(p)) > 0, then

(i) \(\sqrt{n}(\xi _{p} - Q(p)) = {n}^{\frac{1} {2} }\{p - F_{n}(Q(p))\}{[f(Q(p))]}^{-1} + O({n}^{-\frac{1} {4} }{(\log n)}^{\frac{3} {4} })\);

(ii) \(\xi _{p}\) is asymptotically distributed as

$$\displaystyle{N\left (Q(p), \frac{p(1 - p)} {n{[f(Q(p))]}^{2}}\right ),}$$

that is, \(\sqrt{n}(\xi _{p} - Q(p))\) converges in distribution to \(N\big(0,p(1 - p){[f(Q(p))]}^{-2}\big)\).

In particular, the asymptotic distribution of the sample median is normal with mean equal to the population median and variance \(\frac{{[f(M)]}^{-2}} {4n}\), where \(M = Q(\frac{1} {2})\) is the population median. For proofs of the above theorems and further results on the asymptotic behaviour of \(\xi _{p}\), one may refer to Bahadur [44], Kiefer [324], Serfling [527] and Csorgo and Csorgo [160]. The following bound is sometimes useful in evaluating the error involved in estimating Q(p) by \(\xi _{p}\). For 0 < p < 1 and unique Q(p), for all n and every ε > 0,

$$\displaystyle{P(\vert \xi _{p} - Q(p)\vert >\epsilon ) \leq 2{e}^{-2n{\delta }^{2}},}$$

where \(\delta =\min (F(Q(p)+\epsilon ) - p,p - F(Q(p)-\epsilon ))\).

A multivariate generalization of Theorem 9.2 is as follows; see Serfling [527].

Theorem 9.3.

Let \(0 < p_{1} <\ldots < p_{k} < 1\). Suppose F has a density f in the neighbourhood of \(Q(p_{1}),\ldots,Q(p_{k})\) and f is positive and continuous at \(Q(p_{1}),\ldots,Q(p_{k})\). Then, \((\xi _{p_{1}},\xi _{p_{2}},\ldots,\xi _{p_{k}})\) is asymptotically normal with mean vector \((Q(p_{1}),\ldots,Q(p_{k}))\) and covariance matrix \(\frac{1} {n}[\sigma _{ij}]\), where

$$\displaystyle{\sigma _{ij} = \frac{p_{i}(1 - p_{j})} {f(Q(p_{i}))f(Q(p_{j}))},\quad i \leq j,}$$

and \(\sigma _{ij} =\sigma _{ji}\) for i > j.
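Theorems 9.2 and 9.3 translate into an explicit covariance matrix. The sketch below (NumPy assumed; the function name is ours) builds \(\frac{1}{n}[\sigma_{ij}]\) from the probabilities \(p_i\) and the density values \(f(Q(p_i))\). It then checks the result by simulation for the standard exponential distribution, where \(Q(p) = -\log(1-p)\) and \(f(Q(p)) = 1-p\).

```python
import numpy as np


def quantile_asymptotic_cov(ps, f_at_q, n):
    """Asymptotic covariance of (xi_{p_1}, ..., xi_{p_k}) as in Theorem 9.3.

    ps     : increasing probabilities p_1 < ... < p_k
    f_at_q : density values f(Q(p_1)), ..., f(Q(p_k))
    n      : sample size
    Entry (i, j) is p_i (1 - p_j) / (f(Q(p_i)) f(Q(p_j)) n) for i <= j; the diagonal
    reproduces the variance p (1 - p) / (n [f(Q(p))]^2) of Theorem 9.2.
    """
    ps = np.asarray(ps, dtype=float)
    fq = np.asarray(f_at_q, dtype=float)
    p_small = np.minimum.outer(ps, ps)   # p_i when i <= j
    p_large = np.maximum.outer(ps, ps)   # p_j when i <= j
    return p_small * (1.0 - p_large) / np.outer(fq, fq) / n


# Monte Carlo check for the standard exponential distribution.
rng = np.random.default_rng(2024)
n, reps = 400, 4000
ps = np.array([0.25, 0.5])
theory = quantile_asymptotic_cov(ps, 1.0 - ps, n)

samples = np.sort(rng.exponential(size=(reps, n)), axis=1)
idx = np.ceil(n * ps).astype(int) - 1        # xi_p = X_{k:n} with k = ceil(np), as in (9.1)
simulated = np.cov(samples[:, idx], rowvar=False)

print(np.round(n * theory, 3))
print(np.round(n * simulated, 3))
```

The two printed matrices should agree to within Monte Carlo error. For the median, the scaled variance is close to 1, matching \({[f(M)]}^{-2}/4\) with f(M) = 1/2.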

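Since the order statistics \(X_{1:n},\ldots,X_{n:n}\) are equivalent to the sample distribution function \(F_{n}(x)\), the sample quantile may be expressed as

$$\displaystyle{\xi _{p} = \left \{\begin{array}{@{}l@{\quad }l@{}} X_{np:n}, \quad &\text{if }np\text{ is an integer} \\ X_{[np]+1:n},\quad &\text{otherwise.}\end{array} \right.}$$

By inverting this relation, we get

$$\displaystyle{X_{k:n} =\xi _{\frac{k} {n}},\quad k = 1,2,\ldots,n,}$$

and so any discussion of order statistics can be carried out in terms of sample quantiles and vice versa.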

Bahadur [44] has given representations for sample quantiles and order statistics. His results with subsequent modifications are of the following form:

1. If F is twice differentiable at Q(p), 0 < p < 1, with q(p) > 0, then

$$\displaystyle{\xi _{p} = Q(p) + [p - F_{n}(Q(p))]q(p) + R_{n},}$$where, with probability 1,

$$\displaystyle{R_{n} = O({n}^{-\frac{3} {4} }{(\log n)}^{\frac{3} {4} }),\quad n \rightarrow \infty.}$$Alternatively,

$$\displaystyle{{n}^{\frac{1} {2} }(\xi _{p} - Q(p)) = {n}^{\frac{1} {2} }\{p - F_{n}(Q(p))\}q(p) + O({n}^{-\frac{1} {4} }{(\log n)}^{\frac{3} {4} }),\quad n \rightarrow \infty;}$$

2. \({n}^{\frac{1} {2} }(\xi _{p} - Q(p))\) and \({n}^{\frac{1} {2} }[p - F_{n}(Q(p))]q(p)\) each converge in distribution to \(N(0,p(1 - p){q}^{2}(p))\);

3. Writing \(Y _{i} = Q(p) + [p - I(X_{i} \leq Q(p))]q(p)\), \(i = 1,2,\ldots\), we have, with probability 1,

$$\displaystyle{\xi _{p} = \frac{1} {n}\sum _{i=1}^{n}Y _{ i} + O({n}^{-\frac{3} {4} }{(\log n)}^{\frac{3} {4} }),\quad n \rightarrow \infty,}$$

so that \(\xi _{p}\) is asymptotically the mean of the first n values of \(Y _{i}\). Thus, we have a representation of the sample quantile as a sample mean. Consider a sequence of order statistics \(X_{k_{n}:n}\) for which \(\frac{k_{n}} {n}\) has a limit. Provided

$$\displaystyle{\frac{k_{n}} {n} = p + \frac{k} {{n}^{\frac{1} {2} }} + o\left ( \frac{1} {{n}^{\frac{1} {2} }} \right ),\quad n \rightarrow \infty,}$$

\({n}^{\frac{1} {2} }(X_{k_{n}:n} -\xi _{p})\) converges to kq(p) with probability 1, and \({n}^{\frac{1} {2} }(X_{k_{n}:n} - Q(p))\) converges in distribution to \(N(kq(p),p(1 - p){q}^{2}(p))\).

In other words, \(X_{k_{n}:n}\) and \(\xi _{p}\) are roughly equivalent as estimates of Q(p). Rojo [510] considered the problem of estimating a quantile function when it is more dispersed than a given distribution function, based on complete and censored samples. Rojo [511] subsequently developed an estimator of the quantile function under the assumption that the distribution has an increasing hazard rate on average (IHRA), and also gave the corresponding estimator for censored samples. He showed that these estimators of Q(u) are uniformly strongly consistent.
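Item 3 of the Bahadur representation can be checked numerically. The sketch below assumes standard exponential data (NumPy assumed), for which \(Q(p) = -\log(1-p)\) and \(q(p) = 1/(1-p)\) are known. It compares \(\xi_p\) with the sample mean of the \(Y_i\). The remainder \(R_n\) should be much smaller than the sampling error of \(\xi_p\) itself.

```python
import numpy as np

rng = np.random.default_rng(7)
n, p = 10_000, 0.5
Q_p = -np.log1p(-p)          # Q(p) for the standard exponential
q_p = 1.0 / (1.0 - p)        # q(p) = 1 / f(Q(p))

x = rng.exponential(size=n)
xi_p = np.sort(x)[int(np.ceil(n * p)) - 1]     # sample quantile (9.1)

indicator = (x <= Q_p).astype(float)           # I(X_i <= Q(p))
Y = Q_p + (p - indicator) * q_p                # the Y_i of item 3
remainder = xi_p - Y.mean()                    # Bahadur remainder R_n

print(f"xi_p - Q(p)             = {xi_p - Q_p:+.5f}")
print(f"mean(Y) - Q(p)          = {Y.mean() - Q_p:+.5f}")
print(f"remainder R_n           = {remainder:+.5f}")
print(f"asymptotic sd of xi_p   = {np.sqrt(p * (1 - p) * q_p ** 2 / n):.5f}")
```

Both \(\xi_p - Q(p)\) and \(\bar{Y} - Q(p)\) are of order \(n^{-1/2}\). Their difference, the remainder, is of the much smaller order \(n^{-3/4}\) up to logarithmic factors.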

The percentiles of the population are, in general, functions of the parameter Θ of the model. In the percentile method of estimation, we choose as many percentile points as there are model parameters. Equating these population percentiles with the corresponding sample percentiles and solving the resulting equations, we obtain the estimate of Θ. This method ensures that the model fits exactly at the chosen points. Since the method does not specify which percentiles should be chosen in a practical situation, some judgement is necessary in choosing the percentile points. Considerations such as the interpretation of the model parameters and the purpose for which the model is constructed can serve as guidelines. Shapiro and Gross [535] pointed out that it is good practice to choose percentiles where inferences are to be drawn, and not to estimate a percentile that requires interpolation between the two highest or the two lowest values. As a general practice, they recommended using p = 0.05 and p = 0.95 for moderate samples and p = 0.01 and p = 0.99 for somewhat larger samples. Their recommendations are as follows: one of these two pairs when there are two parameters; the same pair augmented by the median p = 0.50 when there are three parameters; and the two extreme points together with the quartiles p = 0.25 and p = 0.75 when there are four parameters. Percentile estimators are generally biased and are less sensitive to outliers, and they do not guarantee that the mean and variance of the fitted distribution agree with the sample values.
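As an illustration of the percentile method with two parameters, the sketch below fits a loglogistic model by matching the 5th and 95th percentiles. It assumes the parametrization \(Q(u) = \alpha^{-1}\{u/(1-u)\}^{1/\beta}\). This is our assumption, and the form used in Example 9.1 below may differ. Under it, \(\log Q(u) = -\log\alpha + \beta^{-1}\log\{u/(1-u)\}\) is linear in the two unknowns, so two percentiles determine them. With p = 0.05 and 0.95 this gives \(\hat\alpha = (X_{5:100}X_{95:100})^{-1/2}\) and \(\hat\beta = 2\log 19/\log(X_{95:100}/X_{5:100})\). The function names are hypothetical.

```python
import math
import random


def loglogistic_quantile(u, alpha, beta):
    # Assumed parametrization: Q(u) = (1/alpha) * (u / (1 - u)) ** (1 / beta)
    return (u / (1.0 - u)) ** (1.0 / beta) / alpha


def logit(p):
    return math.log(p / (1.0 - p))


def loglogistic_from_percentiles(x1, x2, p1, p2):
    """Solve log x_i = -log(alpha) + logit(p_i) / beta, i = 1, 2, for (alpha, beta)."""
    inv_beta = (math.log(x2) - math.log(x1)) / (logit(p2) - logit(p1))
    log_alpha = inv_beta * logit(p1) - math.log(x1)
    return math.exp(log_alpha), 1.0 / inv_beta


def percentile_fit(sample, p1=0.05, p2=0.95):
    xs = sorted(sample)
    n = len(xs)
    # sample percentiles from (9.1): X_{k:n} with k = ceil(np); for n = 100 these are X_{5:100}, X_{95:100}
    k1 = max(1, math.ceil(n * p1 - 1e-9))
    k2 = max(1, math.ceil(n * p2 - 1e-9))
    return loglogistic_from_percentiles(xs[k1 - 1], xs[k2 - 1], p1, p2)


random.seed(1)
data = [loglogistic_quantile(random.random(), 2.0, 3.0) for _ in range(100)]
alpha_hat, beta_hat = percentile_fit(data)
print(f"alpha_hat = {alpha_hat:.3f}, beta_hat = {beta_hat:.3f}")
```

Because the model fits exactly at the two chosen percentiles, feeding in the population values \(Q(0.05)\) and \(Q(0.95)\) returns the true parameters.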

Example 9.1.

Suppose we have a sample of 100 observations from the loglogistic distribution with

Choosing the values p = 0.05 and p = 0.95 for matching the population and sample quantiles, we look at the order statistics \(X_{5:100}\) and \(X_{95:100}\). Then, we have the equations

yielding

Upon substituting \(\hat{\alpha }\) in either (9.2) or (9.3) and solving for β, we obtain the estimate of β as

Instead of using percentiles as such, various quantile-based descriptors of the distribution, such as the median (M), interquartile range (IQR) and measures of skewness (S) and kurtosis (T) mentioned earlier in Sect. 1.4, may also be matched with the corresponding measures in the sample. The idea is that the fitted distribution then has approximately the same distributional characteristics as the observed one. The number of equations should equal the number of parameters, and the characteristics should be chosen so that all the parameters are represented. If there is a parameter representing location (scale), it is a good idea to equate it to the median M (the IQR). Some results concerning the asymptotic distributions of these sample statistics are relevant in this context. See Sects. 1.4 and 1.5 for the definitions of the various measures and Chap. 3, in which the percentile method is applied to various quantile functions.
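A sketch of the sample descriptors used for matching is given below. It assumes the usual quantile-based forms: Galton's skewness \(S = \{Q(\frac34)+Q(\frac14)-2M\}/\{Q(\frac34)-Q(\frac14)\}\) and Moors' kurtosis \(T = \{Q(\frac78)-Q(\frac58)+Q(\frac38)-Q(\frac18)\}/\{Q(\frac34)-Q(\frac14)\}\), both evaluated with the empirical quantile function (9.1). The exact normalizations of Sect. 1.4 may differ. In particular, Theorem 9.4 below takes IQR to be half of \(Q(\frac34)-Q(\frac14)\). Function names are ours.

```python
import math


def empirical_quantile(xs_sorted, u):
    # (9.1): X_{k:n} with k = ceil(n u); the tolerance absorbs floating-point noise in n*u
    n = len(xs_sorted)
    k = min(n, max(1, math.ceil(n * u - 1e-9)))
    return xs_sorted[k - 1]


def quantile_descriptors(sample):
    """Return (median, quartile difference, Galton skewness, Moors kurtosis)."""
    xs = sorted(sample)

    def Q(u):
        return empirical_quantile(xs, u)

    m = Q(0.5)
    spread = Q(0.75) - Q(0.25)          # full quartile difference; halve it for IQR as in Theorem 9.4
    s = (Q(0.75) + Q(0.25) - 2.0 * m) / spread
    t = (Q(7 / 8) - Q(5 / 8) + Q(3 / 8) - Q(1 / 8)) / spread
    return m, spread, s, t


print(quantile_descriptors([1, 2, 3, 4, 5, 6, 7, 8]))                      # symmetric sample
print(quantile_descriptors([-math.log(1 - i / 9) for i in range(1, 9)]))   # right-skewed sample
```

Matching these four sample values with their population counterparts gives up to four equations, one for each parameter.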

Theorem 9.4.

The sample interquartile range

is asymptotically normal

Note that IQR \(= \frac{1} {2}\Big(Q(\frac{3} {4}) - Q(\frac{1} {4})\Big)\), and that iqr is strongly consistent for IQR.

Theorem 9.5.

The sample skewness s and the sample Moors' kurtosis t possess the following properties:

and

with mean \({(0,0)}^{{\ast}}\) and dispersion matrix \({\phi }^{\prime}(c)A{({\phi }^{\prime}(c))}^{{\ast}}\), where

and ∗ denotes the transpose.

Some other nonparametric estimators of Q(u) have been suggested in the literature. Kaigh and Lachenbruch [308] considered subsamples of size k drawn without replacement from a complete sample of size n. By defining the estimator of Q(u) for the total sample as the average of the subsample estimators over all possible subsamples of size k, they arrived at

where

The choice of k should be such that the extreme order statistics receive negligible weight. A somewhat different estimator, proposed by Harrell and Davis [262], is of the form

$$\displaystyle{\hat{Q}_{2}(p) =\sum _{i=1}^{n}W_{n,i}X_{i:n},}$$

where

$$\displaystyle{W_{n,i} = I_{\frac{i} {n}}(p(n + 1),(1 - p)(n + 1)) - I_{\frac{i-1} {n}}(p(n + 1),(1 - p)(n + 1)),}$$

with \(I_{x}(a,b)\) being the (regularized) incomplete beta function. Both \(\hat{Q}_{1}(p)\) and \(\hat{Q}_{2}(p)\) are asymptotically normally distributed. Specific cases of estimation of the exponential and Weibull quantile functions have been discussed by Lawless [378] and Mann and Fertig [410].
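The Harrell–Davis estimator is a weighted average of all the order statistics, with beta-distribution weights concentrated near rank np. The sketch below assumes SciPy, whose `scipy.special.betainc(a, b, x)` returns the regularized incomplete beta function \(I_x(a,b)\). The function name `harrell_davis` is ours.

```python
import numpy as np
from scipy.special import betainc


def harrell_davis(sample, p):
    """Harrell-Davis estimate of Q(p) together with its weights W_{n,i}, i = 1, ..., n."""
    xs = np.sort(np.asarray(sample, dtype=float))
    n = xs.size
    a, b = p * (n + 1), (1.0 - p) * (n + 1)
    grid = np.arange(n + 1) / n            # 0, 1/n, ..., 1
    cdf = betainc(a, b, grid)              # I_{i/n}(p(n+1), (1-p)(n+1))
    weights = np.diff(cdf)                 # W_{n,i} = I_{i/n} - I_{(i-1)/n}
    return float(weights @ xs), weights


rng = np.random.default_rng(0)
data = rng.exponential(size=50)
for p in (0.1, 0.5, 0.9):
    est, w = harrell_davis(data, p)
    print(p, round(est, 4), "true:", round(-np.log1p(-p), 4))
```

Unlike \(\xi_p\), every observation receives some weight, which smooths the estimate in small samples.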

9.3 Method of Moments

The method of moments is also a procedure that matches sample and population characteristics. We consider three such characteristics here, viz., the conventional moments, L-moments and probability weighted moments.

9.3.1 Conventional Moments

In this case, either the raw moments \(\mu _{r}^{\prime} = E({X}^{r})\) or the central moments \(\mu _{r} = E{(X-\mu )}^{r}\) are equated to the same type of sample moments. When \(\mu _{r}^{\prime}\) is used, we construct the equations

$$\displaystyle{\mu _{r}^{\prime} = \frac{1} {n}\sum _{i=1}^{n}X_{ i}^{r},\quad r = 1,2,\ldots,}$$

where \(X_{1},X_{2},\ldots,X_{n}\) are independent and identically distributed, and the number of such equations is the same as the number of parameters in the distribution. The sample moments

$$\displaystyle{m_{r}^{\prime} = \frac{1} {n}\sum _{i=1}^{n}X_{ i}^{r}}$$

as estimates of \(\mu _{r}^{\prime}\) have the following properties:

(i) \(m_{r}^{\prime}\) is strongly consistent for \(\mu _{r}^{\prime}\);

(ii) \(E(m_{r}^{\prime}) =\mu _{r}^{\prime}\), i.e., the estimates are unbiased;

(iii) \(V (m_{r}^{\prime}) = \frac{\mu _{2r}^{\prime}-{\mu _{r}^{\prime}}^{2}} {n}\);

(iv) If \(\mu _{2r}^{\prime} < \infty \), the random vector \({n}^{\frac{1} {2} }(m_{1}^{\prime} -\mu _{1}^{\prime},m_{2}^{\prime} -\mu _{2}^{\prime},\ldots,m_{r}^{\prime} -\mu _{r}^{\prime})\) converges in distribution to an r-variate normal distribution with mean vector \((0,0,\ldots,0)\) and covariance matrix [σ ij ], \(i,j = 1,2,\ldots,r\), where \(\sigma _{ij} =\mu _{i+j}^{\prime} -\mu _{i}^{\prime}\mu _{j}^{\prime}\).

On the other hand, if we use the central moments, the equations to be considered become

The statistic \(m_{r} = \frac{1} {n}\sum _{i=1}^{n}{(X_{i} -\bar{X})}^{r}\) estimates \(\mu _{r}\) with the following properties:

(a) \(m_{r}\) is strongly consistent for \(\mu _{r}\);

(b) \(E(m_{r}) =\mu _{r} + \frac{\frac{1} {2} r(r-1)\mu _{r-2}\mu _{2}-r\mu _{r}} {n} + O({n}^{-2});\)

(c) \(V (m_{r}) = \frac{1} {n}(\mu _{2r} -\mu _{r}^{2} - 2r\mu _{ r-1}\mu _{r+1} + {r}^{2}\mu _{ 2}\mu _{r-1}^{2}) + O( \frac{1} {{n}^{2}} )\);

(d) If \(\mu _{2r} < \infty \), the random vector \({n}^{\frac{1} {2} }(m_{2} -\mu _{2},\ldots,m_{r} -\mu _{r})\) converges in distribution to an (r − 1)-dimensional normal distribution with mean vector \((0,0,\ldots,0)\) and covariance matrix [σ ij ], \(i,j = 2,3,\ldots,r\), where

$$\displaystyle{\sigma _{ij} =\mu _{i+j} -\mu _{i}\mu _{j} - i\mu _{i-1}\mu _{j+1} - j\mu _{i+1}\mu _{j-1} + ij\mu _{i-1}\mu _{j-1}\mu _{2}.}$$

In general, \(m_{r}\) is a biased estimator of \(\mu _{r}\). The correction factors required to make these estimators unbiased, and the corresponding statistics, are

Occasionally, the parameters are also estimated by matching \(\mu _{1}^{\prime}\), \(\mu _{2}\), \(\beta _{1}\) and \(\beta _{2}\) with the corresponding sample values. For example, the parameters of the lambda distributions are often estimated in this manner.
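For instance, for the generalized Pareto model of Example 9.2 below, suppose (this is our assumption about the form in Table 1.1) that \(Q(u) = \frac{b}{a}\{(1-u)^{-a/(a+1)} - 1\}\), with −1 < a < 1 and a ≠ 0. Then \(\mu_1' = b\) and \(\mu_2' = 2b^2/(1-a)\), so matching the first two raw moments gives \(\hat b = m_1'\) and \(\hat a = 1 - 2m_1'^2/m_2'\). The sketch below (NumPy assumed; names are ours) implements this and checks it on data simulated by the inverse transform X = Q(U).

```python
import numpy as np


def gpd_quantile(u, a, b):
    # Assumed form of the generalized Pareto quantile function (a != 0, -1 < a < 1)
    return (b / a) * ((1.0 - u) ** (-a / (a + 1.0)) - 1.0)


def gpd_from_raw_moments(m1, m2):
    """Solve m1 = b and m2 = 2 b^2 / (1 - a) for (a, b)."""
    return 1.0 - 2.0 * m1 ** 2 / m2, m1


def gpd_moment_fit(sample):
    x = np.asarray(sample, dtype=float)
    return gpd_from_raw_moments(x.mean(), np.mean(x ** 2))   # sample raw moments m_1', m_2'


rng = np.random.default_rng(3)
x = gpd_quantile(rng.random(200_000), a=0.1, b=1.0)   # inverse-transform sampling
a_hat, b_hat = gpd_moment_fit(x)
print(f"a_hat = {a_hat:.4f}, b_hat = {b_hat:.4f}")
```

The restriction a < 1 keeps the variance finite. Otherwise \(m_2'\) does not settle down and the moment equations become unreliable.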

Example 9.2.

Consider the generalized Pareto model (Table 1.1) with

In this case, the first two moments are

and

Hence, we form the equations

and solve them to obtain the moment estimates of a and b as

9.3.2 L-Moments

In the method of L-moments, the logic is the same as in the case of usual moments except that we equate the population L-moments with those of the sample and then solve for the parameters. Here again, the number of equations to be considered is the same as the number of parameters to be estimated. Thus, we consider r equations

where

and

with

when the model to be fitted contains r parameters. The expressions for the first four L-moments \(L_{1}\) through \(L_{4}\) are given in (1.34)–(1.37) (or equivalently (1.38)–(1.41)). Next, we have the sample counterparts as

Regarding the properties of \(l_{r}\) as an estimate of \(L_{r}\), we note that \(l_{r}\) is unbiased, consistent and asymptotically normal (Hosking [276]). Elamir and Seheult [187] have obtained expressions for the exact variances of the sample L-moments. They have used an equivalent representation of (9.4) in the form

where

and

For a sample of size n, (9.5) is also expressible in the vector form

with \(l = (l_{1},l_{2},\ldots,l_{k})\) and \(b = (b_{0},b_{1},\ldots,b_{k-1})\), where C is a triangular matrix with entries \(p_{r-1,k}^{{\ast}}\). So,

As special cases, we have

where \({\sigma }^{2}\) is the population variance and

In the case of the first four sample moments,

along with

were used to derive the expressions in (9.7) and (9.8). Furthermore,

and

where G(y) = v and F(x) = u. Also, if we define

then

is a distribution-free unbiased estimator of (9.9). In the above discussion, \(Y_{r:n}\) denotes the conceptual order statistics of the population. The expression for \(V (l_{2})\) is equivalent to the estimate of Nair [455]. In finding the variance of the ratio of two sample L-moments, the approximation

is useful. Thus, approximate variances of the sample L-skewness and kurtosis can be obtained. The sample L-moment ratios are consistent but not unbiased.
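In practice, sample L-moments are usually computed from the unbiased probability weighted moments \(b_k = n^{-1}\sum_{i} \frac{(i-1)\cdots(i-k)}{(n-1)\cdots(n-k)}X_{i:n}\) and the shifted Legendre coefficients \(p^{*}_{r,k} = (-1)^{r-k}\binom{r}{k}\binom{r+k}{k}\), through \(l_{r+1} = \sum_{k=0}^{r}p^{*}_{r,k}b_k\). The sketch below (NumPy assumed, names ours) follows this standard computational route. It is an illustration, not the implementation of any particular package.

```python
import numpy as np
from math import comb


def unbiased_pwms(sample, nmom):
    """b_0, ..., b_{nmom-1}: unbiased estimates of beta_k = E[X F(X)^k]."""
    xs = np.sort(np.asarray(sample, dtype=float))
    n = xs.size
    if n < nmom:
        raise ValueError("need at least nmom observations")
    i = np.arange(1, n + 1)
    b = np.empty(nmom)
    for k in range(nmom):
        w = np.ones(n)
        for j in range(1, k + 1):
            w *= (i - j) / (n - j)       # builds (i-1)...(i-k) / ((n-1)...(n-k))
        b[k] = np.mean(w * xs)
    return b


def sample_lmoments(sample, nmom=4):
    """l_1, ..., l_nmom from l_{r+1} = sum_k p*_{r,k} b_k."""
    b = unbiased_pwms(sample, nmom)
    l = np.zeros(nmom)
    for r in range(nmom):
        for k in range(r + 1):
            l[r] += (-1) ** (r - k) * comb(r, k) * comb(r + k, k) * b[k]
    return l


rng = np.random.default_rng(1)
l1, l2, l3, l4 = sample_lmoments(rng.exponential(size=100_000))
# L-moment ratios (consistent but not unbiased); for the exponential tau_3 = 1/3 and tau_4 = 1/6
print(l1, l2, l3 / l2, l4 / l2)
```

For r = 1, 2, 3 the coefficients reproduce the familiar forms \(l_2 = 2b_1 - b_0\), \(l_3 = 6b_2 - 6b_1 + b_0\) and \(l_4 = 20b_3 - 30b_2 + 12b_1 - b_0\).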

A more general sampling scheme involving censored observations has been discussed recently. Let \(T_{1},T_{2},\ldots,T_{n}\) be independent and identically distributed lifetimes with distribution function F(x). Assume that the lifetimes are censored on the right by independent and identically distributed random variables \(Y _{1},Y _{2},\ldots,Y _{n}\) with common distribution function H(x), and that the \(Y _{i}\)'s are independent of the \(T_{i}\)'s. Thus, we observe only right-censored data of the form \(X_{i} =\min (T_{i},Y _{i})\). Define the indicator variables

$$\displaystyle{\Delta _{i} = \left \{\begin{array}{@{}l@{\quad }l@{}} 1,\quad &T_{i} \leq Y _{i} \\ 0,\quad &T_{i} > Y _{i},\end{array} \right.}$$

so that \(\Delta _{i} = 1\) indicates that \(T_{i}\) is uncensored and \(\Delta _{i} = 0\) indicates that \(T_{i}\) is censored. The distribution function of each \(X_{i}\) is then

$$\displaystyle{P(X_{i} \leq x) = 1 - [1 - F(x)][1 - H(x)].}$$

To estimate the distribution function from the censored sample \((X_{i},\Delta _{i})\), \(i = 1,\ldots,n\), the Kaplan–Meier [311] product-limit estimator is popular.

The estimator of the survival function is

$$\displaystyle{\hat{S}(x) =\prod _{j:X_{(j)}\leq x}{\left (\frac{n - j} {n - j + 1}\right )}^{\Delta _{(j)}},}$$

where \(X_{(1)} \leq \ldots \leq X_{(n)}\) are the ordered \(X_{i}\)'s and \(\Delta _{(j)}\) is the censoring status corresponding to \(X_{(j)}\).
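The product-limit estimator and the quantile function it induces, \(\hat{Q}(u) = \inf\{x : 1 - \hat{S}(x) \geq u\}\), can be sketched as follows (NumPy assumed, hypothetical names). The code assumes there are no tied observations. With all \(\Delta_{(j)} = 1\) it reduces to the empirical distribution function and to (9.1).

```python
import numpy as np


def kaplan_meier(times, events):
    """Product-limit estimate S_hat at each ordered observation (no ties assumed).

    times  : observed X_i = min(T_i, Y_i)
    events : Delta_i = 1 if T_i was observed (uncensored), 0 if censored
    """
    t = np.asarray(times, dtype=float)
    d = np.asarray(events, dtype=int)
    order = np.argsort(t, kind="stable")
    t, d = t[order], d[order]
    n = t.size
    j = np.arange(1, n + 1)
    factors = ((n - j) / (n - j + 1.0)) ** d     # a censored point contributes a factor of 1
    return t, np.cumprod(factors)


def km_quantile(times, events, u):
    """Q_hat(u) = inf{x : F_hat(x) >= u} with F_hat = 1 - S_hat; None if F_hat never reaches u."""
    t, s = kaplan_meier(times, events)
    hit = np.nonzero(1.0 - s >= u - 1e-12)[0]
    return float(t[hit[0]]) if hit.size else None


times = [3.0, 1.0, 4.0, 2.0]
events = [1, 1, 1, 0]          # the observation at 2.0 is censored
print(kaplan_meier(times, events))
print(km_quantile(times, events, 0.5))
```

When the largest observation is censored, \(\hat{S}\) never reaches zero. Upper quantiles are then not estimable, which is why `km_quantile` can return None.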

The estimator of the rth L-moment for right censored data (Wang et al. [576]) is

where

with \(X_{(0)} = 0\), \(p = r - k\) and \(q = k + 1\).

Now, let T = min(X, C), where X denotes the failure time and C denotes the noninformative censoring time. For a constant \(0 \leq u_{0} < 1\) with \(Q(u_{0}) < {T}^{{\ast}}\), where \({T}^{{\ast}}\) is the minimum of the uppermost support points of the failure time X and the censoring time C, suppose that \({F}^{\prime}(x)\) is bounded on \([0,Q(u_{0}) + \Delta ]\), Δ > 0, and \(\inf _{0\leq u\leq u_{0}}f(Q(u)) > 0\). Then (Cheng [145]),

(i) with probability one,

$$\displaystyle{\sup _{0\leq u\leq u_{0}}\vert \hat{Q}(u) - Q(u)\vert = O({n}^{-\frac{1} {2} }{(\log \log n)}^{\frac{1} {2} });}$$

(ii) $$\displaystyle{\sup _{0\leq u\leq u_{0}}\vert {n}^{\frac{1} {2} }f(Q(u))(\hat{Q}(u) - Q(u)) - G_{n}(u)\vert = O({n}^{-\frac{1} {3} }{(\log n)}^{\frac{3} {2} }),}$$

where \(G_{n}(u)\) is a sequence of identically distributed Gaussian processes with zero mean and covariance function

$$\displaystyle{\text{Cov}\,(G_{n}(u_{1}),G_{n}(u_{2})) = (1 - u_{1})(1 - u_{2})\int _{0}^{u_{1}} \frac{dt} {{(1 - t)}^{2}[1 - H({F}^{-1}(t))]},\quad u_{1} \leq u_{2},}$$

with \(H(x) = 1 - (1 - F(x))(1 - G(x))\), where G(x) is the distribution function of the censoring time C.

Under the above regularity conditions, Wang et al. [576] have shown that, as n → ∞,

(i) \(\hat{L}_{r} = L_{r} + o({n}^{-\frac{1} {2} }{(\log \log n)}^{\frac{1} {2} })\);

(ii) \({n}^{\frac{1} {2} }(\hat{L}_{1} - L_{1},\ldots,\hat{L}_{m} - L_{m})\) converges in distribution to the multivariate normal distribution N(0, Σ), where the elements of Σ are

$$\displaystyle{\Sigma _{rs} =\iint \limits _{x\leq y}\frac{P_{r-1}^{{\ast}}(x)P_{s-1}^{{\ast}}(y) + P_{s-1}^{{\ast}}(x)P_{r-1}^{{\ast}}(y)} {f(Q(x))f(Q(y))} \text{Cov}(G_{n}(x),G_{n}(y))dxdy,}$$where

$$\displaystyle{P_{r-1}^{{\ast}}(u) =\sum _{ k=0}^{r-1}{(-1)}^{r-1-k}\binom{r - 1}{k}\binom{r + k - 1}{k}{u}^{k}}$$is the (r − 1)th shifted Legendre polynomial;

(iii) the vector

$$\displaystyle{{n}^{\frac{1} {2} }(\hat{L}_{1} - L_{1},\hat{L}_{2} - L_{2},\hat{\tau }_{3} -\tau _{3},\ldots,\hat{\tau }_{m} -\tau _{m})}$$converges in distribution to the multivariate normal distribution N(0, Λ), where \(\tau _{r} = L_{r}/L_{2}\) and the elements of Λ are

$$\displaystyle{\Lambda _{rs} = \left \{\begin{array}{@{}l@{\quad }l@{}} \Sigma _{rs}, \quad &r \leq 2,\;s \leq 2 \\ \frac{\Sigma _{rs}-\tau _{r}\Sigma _{2s}} {L_{2}}, \quad &r \geq 3,\;s \leq 2 \\ \frac{\Sigma _{rs}-\tau _{r}\Sigma _{2s}-\tau _{s}\Sigma _{2r}+\tau _{r}\tau _{s}\Sigma _{22}} {L_{2}^{2}},\quad &r \geq 3,\,s \geq 3,\end{array} \right.}$$

with \(\Lambda _{rs} = \Lambda _{sr}\) for r ≤ 2, s ≥ 3.

There are several papers that deal with L-moments of specific distributions and comparison of the method of L-moments with other methods of estimation. Reference may be made, e.g., to Hosking [277], Pearson [488], Guttman [256], Gingras and Adamowski [217], Hosking [278], Sankarasubramonian and Sreenivasan [517], Chadjiconstantinidis and Antzoulakos [131], Hosking [280], Karvanen [312], Ciumara [150], Abdul-Moniem [3], Asquith [40] and Delicade and Goria [169]. Illustration of the method of L-moments with some real data can be seen in Sect. 3.6 for different models.

9.3.3 Probability Weighted Moments

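Earlier in Sect. 1.4, we defined the probability weighted moment (PWM) of order (p, r, s) as

$$\displaystyle{M(p,r,s) = E\{{X}^{p}{F}^{r}(X){(1 - F(X))}^{s}\},}$$

which is the same as

$$\displaystyle{M(p,r,s) =\int _{ 0}^{1}{Q}^{p}(u){u}^{r}{(1 - u)}^{s}du,}$$

provided that \(E(\vert X{\vert }^{p}) < \infty \). Commonly used quantities are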

and

which are the special cases M(1, r, 0) and M(1, 0, r). Since

characterization of a distribution with finite mean by \(\alpha _{r}\) or \(\beta _{r}\) is interchangeable. A natural estimate of \(\alpha _{r}\) (also called the nonparametric maximum likelihood estimate) based on the ordered observations \(X_{1:n},X_{2:n},\ldots,X_{n:n}\) is

The asymptotic covariance of the estimator is

Similarly, the estimate of \(\beta _{r}\) is

with asymptotic covariance

Landwehr and Matalas [373] have shown that

is an unbiased estimator of \(\beta _{r}\). Similarly, for \(\alpha _{r}\), we have the estimator

Hosking [278] has developed estimates based on censored samples. In Type I censoring of a sample of size n, m values are observed and the remaining n − m are censored above a known threshold T, so that m is a random variable with a binomial distribution. The estimate based on the uncensored sample of m values is (9.10) with m replacing n, and when the n − m censored values are replaced by T, the estimate is given by

Assume that F(T) = v. Conditional on the achieved value of m, the uncensored values are a random sample of size m from the distribution with quantile function Q(uv), 0 < u < 1. The population PWMs for this distribution are

On the other hand, the completed sample is of size n from the distribution with quantile function

and hence its PWMs are

The asymptotic distributions in this case are derived as in the case of the usual PWM’s. Furrer and Naveau [205] have examined the small-sample properties of probability weighted moments.

As in the case of the other two moments, we equate the sample and population probability weighted moments for the estimation of the parameters. In such cases, it is useful to adopt the formulas

and

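with \(p_{(i)}\) as suitably chosen ordered probabilities. Gilchrist [215] has prescribed the choice of \(p_{(i)}\) as \(\frac{i} {n+1}\), or the inverse of the incomplete beta function evaluated at (0.5, i, n − i + 1), that is, the median of the beta distribution with parameters i and n − i + 1, or \(p_{(i)} = \frac{i-0.5} {n}\).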
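The sketch below shows PWM estimation with plotting positions (NumPy and SciPy assumed; names are ours). It assumes that the formulas above take the usual forms \(\hat\alpha_r = n^{-1}\sum_i (1-p_{(i)})^r X_{i:n}\) and \(\hat\beta_r = n^{-1}\sum_i p_{(i)}^r X_{i:n}\). The matching step is illustrated on the Govindarajulu quantile function \(Q(u) = \sigma\{(\gamma+1)u^\gamma - \gamma u^{\gamma+1}\}\) with zero location. That choice is our assumption, and the shape parameter is written as γ here to avoid a clash with the PWMs \(\beta_r\). For this form \(\beta_0 = 2\sigma/(\gamma+2)\) and \(\beta_1 = \sigma(2\gamma+3)/\{(\gamma+2)(\gamma+3)\}\). The ratio \(\rho = \beta_1/\beta_0 = (2\gamma+3)/\{2(\gamma+3)\}\) then gives \(\hat\gamma = (6\rho-3)/(2-2\rho)\), and \(\hat\sigma = \hat\beta_0(\hat\gamma+2)/2\). `scipy.special.betaincinv` supplies the beta-median plotting positions.

```python
import numpy as np
from scipy.special import betaincinv


def plotting_positions(n, kind="i/(n+1)"):
    i = np.arange(1, n + 1)
    if kind == "i/(n+1)":
        return i / (n + 1.0)
    if kind == "(i-0.5)/n":
        return (i - 0.5) / n
    if kind == "beta-median":
        # median of Beta(i, n - i + 1): inverse regularized incomplete beta evaluated at 0.5
        return betaincinv(i, n - i + 1, 0.5)
    raise ValueError(f"unknown plotting position {kind!r}")


def pwm_estimates(sample, r, kind="i/(n+1)"):
    """Plotting-position estimates of alpha_r = E[X (1-F)^r] and beta_r = E[X F^r]."""
    xs = np.sort(np.asarray(sample, dtype=float))
    p = plotting_positions(xs.size, kind)
    return np.mean((1.0 - p) ** r * xs), np.mean(p ** r * xs)


def govindarajulu_quantile(u, sigma, gamma):
    # Assumed form with zero location: Q(u) = sigma * ((gamma + 1) u^gamma - gamma u^(gamma + 1))
    return sigma * ((gamma + 1.0) * u ** gamma - gamma * u ** (gamma + 1.0))


def govindarajulu_from_pwms(beta0, beta1):
    """Invert beta0 = 2 sigma / (gamma + 2) and beta1 / beta0 = (2 gamma + 3) / (2 (gamma + 3))."""
    ratio = beta1 / beta0                      # must lie in (1/2, 1)
    gamma = (6.0 * ratio - 3.0) / (2.0 - 2.0 * ratio)
    return beta0 * (gamma + 2.0) / 2.0, gamma


rng = np.random.default_rng(5)
x = govindarajulu_quantile(rng.random(50_000), sigma=2.0, gamma=1.5)
b0 = pwm_estimates(x, 0)[1]
b1 = pwm_estimates(x, 1, kind="beta-median")[1]
print(govindarajulu_from_pwms(b0, b1))
```

The three plotting positions give nearly identical estimates in large samples. Their differences matter mainly for small n.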

Example 9.3.

Consider the Govindarajulu distribution with

Since we have only two parameters in this case to estimate, the equations to be considered are

or

and

Upon solving these equations, we obtain the estimates

9.4 Method of Maximum Likelihood

We proceed by writing the likelihood function based on a random sample \(x_{1},\ldots,x_{n}\) as

$$\displaystyle{L(\theta ) =\prod _{ i=1}^{n}f(x_{ i};\theta ).}$$

Taking \(x_{i} = Q(u_{i};\theta )\) and using \(f(Q(u;\theta );\theta ) = {[q(u;\theta )]}^{-1}\), we then have

$$\displaystyle{L(\theta ) =\prod _{ i=1}^{n}{[q(u_{ i};\theta )]}^{-1}.}$$

The estimate of θ is the value that maximizes L(θ); equivalently, we have to solve the equation

$$\displaystyle{\frac{\partial \log L(\theta )} {\partial \theta } = 0}$$

for θ. Notice that, in practice, the calculation of L(θ) requires the derivation of \(u_{i}\) from the equation \(x_{i} = Q(u_{i};\theta )\). If \(u_{i} = F(x_{i};\theta )\) is explicitly available, the \(u_{i}\)'s can be obtained directly from the observed \(x_{i}\) using the fitted F(x), after substituting the estimated values of the parameters. Otherwise, one has to use some numerical method to extract the \(u_{i}\). The observations are ordered. When U is a uniform random variable, X and Q(U) have identical distributions. Let \(\hat{Q}(u)\) be a fitted quantile function and \(x_{(r)} = Q(u_{(r)})\). If \(\psi _{0}\) is an initial estimate of u for a given x value, then, using the first two terms of the Taylor expansion, we have

$$\displaystyle{x \approx Q(\psi _{0}) + (u -\psi _{0})q(\psi _{0})}$$

as an approximation. Solving for u, we get

$$\displaystyle{u =\psi _{0} + \frac{x - Q(\psi _{0})} {q(\psi _{0})}.\qquad (9.11)}$$

In practical problems, the initial value could be \(u_{(r)} = \frac{r} {n+1}\). With this value, (9.11) is used iteratively until x differs from Q(u) by less than ε, a small pre-set tolerance value. Gilchrist [215] has provided a detailed discussion of the subject, together with an example of the estimation of the parameters of the generalized lambda distribution and a layout for the calculations. The properties of maximum likelihood estimates, though widely known, are included here for the sake of completeness.

Theorem 9.6.

Let \(X_{1},X_{2},\ldots,X_{n}\) be independent and identically distributed with distribution function F(x; θ), where θ belongs to an open interval Θ in R, and suppose that the following conditions are satisfied:

(a) the derivatives

$$\displaystyle{\frac{\partial \log f(x;\theta )} {\partial \theta },\; \frac{{\partial }^{2}\log f(x;\theta )} {{\partial \theta }^{2}},\; \frac{{\partial }^{3}\log f(x;\theta )} {{\partial \theta }^{3}} }$$

exist for all x;

(b) for each \(\theta _{0} \in \Theta \), there exist functions \(g_{i}(x)\) such that, in a neighbourhood of \(\theta _{0}\),

$$\displaystyle{\left \vert \frac{\partial f} {\partial \theta } \right \vert \leq g_{1}(x),\;\;\left \vert \frac{{\partial }^{2}f} {{\partial \theta }^{2}} \right \vert \leq g_{2}(x),\quad \left \vert \frac{{\partial }^{3}\log f} {{\partial \theta }^{3}} \right \vert \leq g_{3}(x)}$$for all x, and

$$\displaystyle{\int g_{1}(x)dx < \infty,\;\;\int g_{2}(x)dx < \infty,\;\;E\,g_{3}(X) < \infty;}$$

(c) \(0 < E{\Big(\frac{\partial \log f(X;\theta )} {\partial \theta } \Big)}^{2} < M < \infty \) for each θ.

Then, with probability 1, the likelihood equation

$$\displaystyle{\frac{\partial L} {\partial \theta } = 0}$$admits a sequence of solutions \(\{\hat{\theta }_{n}\}\) with the following properties:

(i) \(\hat{\theta }_{n}\) is strongly consistent for θ;

(ii) \(\hat{\theta }_{n}\) is asymptotically distributed as

$$\displaystyle{N\left (\theta, \dfrac{1} {nE{\Big(\frac{\partial \log f(X;\theta )} {\partial \theta } \Big)}^{2}}\right ).}$$

When θ contains more than one element, the sequence of vector estimates \(\hat{\theta }_{n}\) is likewise consistent and asymptotically normal with mean vector θ and covariance matrix \({[nI(\theta )]}^{-1}\), where I(θ), called the information matrix, has elements

$$\displaystyle{E\left (\frac{\partial \log f(X;\theta )} {\partial \theta _{i}} \cdot \frac{\partial \log f(X;\theta )} {\partial \theta _{j}} \right ),\quad i,j = 1,\ldots,K,}$$where \(\theta = (\theta _{1},\ldots,\theta _{K})\) and I(θ) is of order K × K.
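The quantile-based likelihood of Sect. 9.4 can be sketched as follows (NumPy and SciPy assumed). To keep the check verifiable, the model is a two-parameter Weibull handled only through \(Q(u) = \sigma\{-\log(1-u)\}^{1/\beta}\) and its quantile density q(u), even though its distribution function is in fact explicit. The \(u_i\) are found from (9.11), starting at \(r/(n+1)\). The bisection safeguard, used whenever a Newton step leaves the current bracket, is our addition. The negative log-likelihood \(\sum_i \log q(u_i;\theta)\) is minimized with the Nelder–Mead method of `scipy.optimize.minimize`. All names are ours, and this is an illustration rather than Gilchrist's layout.

```python
import numpy as np
from scipy.optimize import minimize


def Q(u, sigma, beta):
    return sigma * (-np.log1p(-u)) ** (1.0 / beta)


def q(u, sigma, beta):
    # quantile density q(u) = dQ/du
    h = -np.log1p(-u)
    return sigma / beta * h ** (1.0 / beta - 1.0) / (1.0 - u)


def invert_Q(x, sigma, beta, tol=1e-10, max_iter=200):
    """Solve Q(u_i) = x_i by the Newton update (9.11), safeguarded by bisection."""
    x = np.asarray(x, dtype=float)
    n = x.size
    u = np.empty(n)
    u[np.argsort(x)] = np.arange(1, n + 1) / (n + 1.0)    # initial values r/(n+1)
    lo, hi = np.zeros(n), np.ones(n)
    with np.errstate(all="ignore"):
        for _ in range(max_iter):
            err = Q(u, sigma, beta) - x
            lo = np.where(err < 0, u, lo)      # Q is increasing, so the root stays in (lo, hi)
            hi = np.where(err > 0, u, hi)
            if np.all(np.abs(err) <= tol * (1.0 + np.abs(x))):
                break
            u_new = u - err / q(u, sigma, beta)          # (9.11) with psi_0 = current u
            bad = ~np.isfinite(u_new) | (u_new <= lo) | (u_new >= hi)
            u = np.where(bad, 0.5 * (lo + hi), u_new)
    return u


def neg_log_lik(log_params, x):
    sigma, beta = np.exp(log_params)          # the log scale keeps both parameters positive
    u = invert_Q(x, sigma, beta)
    with np.errstate(all="ignore"):
        value = np.sum(np.log(q(u, sigma, beta)))    # -log L = sum log q(u_i), since f(Q(u)) = 1/q(u)
    return value if np.isfinite(value) else np.inf


rng = np.random.default_rng(11)
x = Q(rng.random(2000), 2.0, 1.5)
start = [np.log(np.median(x)), 0.0]
fit = minimize(neg_log_lik, start, args=(x,), method="Nelder-Mead")
sigma_hat, beta_hat = np.exp(fit.x)
print(f"sigma_hat = {sigma_hat:.3f}, beta_hat = {beta_hat:.3f}")
```

Because the Weibull F is known here, the numerically recovered \(u_i\) and the log-likelihood can be compared with their closed forms. For a model defined only through Q, the same code applies after replacing `Q` and `q`.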


9.5 Estimation of the Quantile Density Function

The quantile density function q(u) is a vital component in the definitions of reliability concepts like hazard quantile function, mean residual quantile function and total time on test transforms. Moreover, it appears in the asymptotic variances of different quantile-based statistics. Babu [43] has pointed out that the estimate of the bootstrap variance of the sample median needs consistent estimates of q(u).

Assume that q(p) ≥ 0. Then,

$$\displaystyle{\frac{Q(v) - Q(p)} {v - p} \rightarrow q(p)}$$

as v → p. Thus, to get an approximation for q(p), it is enough to consider Q(v) − Q(p) for v ≥ p near p. As Q is not known, it is replaced by \(Q_{n}\). Since all the ratios \(\frac{Q_{n}(v)-Q_{n}(p)} {v-p}\) for such v are close to q(p), a linear combination of these ratios will also be near q(p). With this as the motivation, Babu [43] has provided the following results.

Let h be a function on the positive real line such that \(h(y){e}^{y}\) is a polynomial of degree not exceeding k (k ≥ 2 is an integer) and

Then,

and

Defining \(J = (p-\epsilon,p+\epsilon ) \subset (0,1)\), for independent variables \(X_{1},\ldots,X_{n}\) with common distribution function F(x), we have the following two results.

Theorem 9.7.

If F(x) is k times continuously differentiable at Q(p) for p ∈ J, with f(Q(p)) > 0, and \(E({X}^{2}) < \infty \), then uniformly in x > 0,

where

Theorem 9.8.

Let \(f_{i}(x) =\int _{x}^{\infty }{y}^{i}h(y)dy\), and suppose that \(f_{j}\) and \(f_{1}\) do not have common positive roots for any 2 ≤ j ≤ k − 1. If the jth derivative of Q at p is non-zero for 2 ≤ j ≤ k − 1, then

and equality occurs at x = log n. As a result, D(log n, n) is an efficient estimator of q(p) in the mean square sense among the class of estimators {D(x, n) | x > 0}. Also, \({n}^{\beta }\big(D(\log n,n)/q(p) - 1\big)\) is asymptotically distributed as \(N(0,{\sigma }^{2})\).

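A histogram-type estimator of the form

$$\displaystyle{\hat{q}_{n}(p) = \frac{Q_{n}(p +\alpha _{n}) - Q_{n}(p -\alpha _{n})} {2\alpha _{n}},}$$

where \(\alpha _{n}\) is a bandwidth tending to zero, has been discussed by Bloch and Gastwirth [108] and Bofinger [113]. Its asymptotic distribution is presented in the following theorem.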

Theorem 9.9 (Falk [193]).

Let \(0 < p_{1} <\ldots < p_{r} < 1\), and let Q be twice differentiable near \(p_{j}\) with bounded second derivative, \(j = 1,2,\ldots,r\). Then, if \(\alpha _{jn} \rightarrow 0\) and \(n\alpha _{jn} \rightarrow \infty \), \(j = 1,2,\ldots,r\),

where

converges in distribution to \(\prod _{j=1}^{r}N(0,{q}^{2}(p_{j}))\) , with Π denoting the product measure.

In the above result, if we further assume that \(n\alpha _{jn}^{3} \rightarrow 0\), then \(\frac{Q(p_{j}+\alpha _{jn})-Q(p_{j}-\alpha _{jn})} {2\alpha _{jn}}\) can be replaced by \(q(p_{j})\). Moreover, if Q is three times differentiable near p with a bounded third derivative that is continuous at p, an optimal bandwidth \(\alpha _{n}^{{\ast}}\) in the sense of mean squared error is

Falk [193] considered a kernel estimator of q(p) defined by

where h is a real-valued kernel function with bounded support and \(\int h(x)dx = 0\). Notice that \(k_{n}(p)\) is a linear combination of order statistics of the form \(\sum _{i=1}^{n}C_{in}X_{i:n}\), where

Some key properties of the above kernel estimator are presented in the following theorem.

Theorem 9.10.

Let \(0 < p_{1} <\ldots < p_{r} < 1\) and let Q be twice differentiable near \(p_{j}\) with bounded second derivative, \(j = 1,2,\ldots,r\). Then, if each \(h_{j}\) has the properties of h given above and \(\alpha_{jn} \to 0\), \(n\alpha_{jn}^{2} \to \infty\),

converges in distribution to \(\prod_{j=1}^{r}N\big(0,\,q^{2}(p_{j})\int H_{j}^{2}(y)\,dy\big)\), where \(\prod\) denotes the product measure,

and

Under the additional conditions \(\int xh(x)\,dx = -1\) and \(n\alpha_{n}^{3} \to 0\), \(k_{n}(p)\) in Theorem 9.10 can be replaced by q(p). Further, if Q is (m + 1) times differentiable with bounded derivatives that are continuous at p, and \(n\alpha_{n}^{3m+1} \to 0\), the approximate bias of \(\hat{k}_{n}(p)\) becomes

and the optimal bandwidth that minimizes the mean squared error \(E{(\hat{k}_{n}(p) - q(p))}^{2}\) is

Jones [305] compares the mean squared error of the kernel quantile density estimator with that of the estimator \(\tilde{g}(u)\), where \(\tilde{g}(u)\) is the reciprocal of the kernel density estimator given by

It is shown that the former estimator has smaller mean squared error than the latter.

Estimation of q(p) in a more general framework and for different sampling strategies has been discussed by Xiang [590], Zhou and Yip [603], Cheng [143] and Buhamra et al. [123].
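The histogram-type and kernel estimators of q(p) discussed in this section can be illustrated with a short sketch. Since the displayed definitions are not reproduced above, the histogram-type form used here, \([Q_{n}(p+\alpha) - Q_{n}(p-\alpha)]/(2\alpha)\), is an assumption taken from the centring term in the remark after Theorem 9.9. The kernel version differentiates the kernel-smoothed empirical quantile function; this appears to correspond to choosing h = K′ (which satisfies \(\int h(x)\,dx = 0\) and \(\int xh(x)\,dx = -1\)), but it is an illustration, not a transcription of Falk's \(k_{n}(p)\). The Epanechnikov kernel and all function names are our own choices.

```python
import math


def empirical_quantile(sorted_x, u):
    """Left-continuous empirical quantile Q_n(u) = X_{ceil(n u):n} for 0 < u <= 1."""
    n = len(sorted_x)
    k = min(max(math.ceil(n * u), 1), n)
    return sorted_x[k - 1]


def histogram_qd(sorted_x, p, alpha):
    """Difference quotient [Q_n(p + alpha) - Q_n(p - alpha)] / (2 alpha)."""
    if not (0 < p - alpha and p + alpha <= 1):
        raise ValueError("need 0 < p - alpha and p + alpha <= 1")
    upper = empirical_quantile(sorted_x, p + alpha)
    lower = empirical_quantile(sorted_x, p - alpha)
    return (upper - lower) / (2 * alpha)


def epanechnikov(t):
    """Epanechnikov density on [-1, 1] (an illustrative choice of K)."""
    return 0.75 * (1.0 - t * t) if abs(t) <= 1 else 0.0


def kernel_qd(sorted_x, p, h):
    """d/dp of the kernel-smoothed empirical quantile function at an interior p.

    Equals sum_{i=1}^{n-1} (X_{i+1:n} - X_{i:n}) K_h(p - i/n) when h < min(p, 1 - p),
    because the boundary terms then vanish for a kernel supported on [-1, 1].
    """
    if not (0 < h < min(p, 1 - p)):
        raise ValueError("need 0 < h < min(p, 1 - p)")
    n = len(sorted_x)
    total = 0.0
    for i in range(1, n):
        spacing = sorted_x[i] - sorted_x[i - 1]          # X_{i+1:n} - X_{i:n}
        total += spacing * epanechnikov((p - i / n) / h) / h
    return total


# Idealised exponential "sample" x_i = Q(i/(n+1)), Q(u) = -log(1 - u), so q(0.5) = 2.
n = 1000
xs = [-math.log(1 - i / (n + 1)) for i in range(1, n + 1)]
print(histogram_qd(xs, 0.5, 0.05), kernel_qd(xs, 0.5, 0.05))
```

On this idealised sample both estimates should be close to the true value q(0.5) = 2; with real data the bandwidth choice drives the bias-variance trade-off described in the theorems above.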

9.6 Estimation of the Hazard Quantile Function

The hazard quantile function, as described in the preceding chapters, plays a key role in reliability analysis in describing failure patterns and in model selection. Sankaran and Nair [515] developed a methodology for the nonparametric estimation of the hazard quantile function by proposing two estimators, one based on the empirical quantile density function and the other on a kernel density approach. They also studied the properties of the kernel-based estimator and compared the two estimators. Recall that the hazard quantile function is defined as

Suppose that the lifetime X is censored by a non-negative random variable Z. We observe (T, Δ), where T = min(X, Z) and Δ = I(X ≤ Z), with

Let G(x) and L(x) denote the distribution functions of Z and T, respectively. Under the assumption that Z and X are independent, we have

$$\displaystyle{1 - L(x) = [1 - F(x)][1 - G(x)].}$$

Let \((T_{i},\Delta_{i})\), \(i = 1,2,\ldots,n\), be independent and identically distributed, each with the same distribution as (T, Δ). This framework includes time-censored observations if all the \(Z_{i}\)'s are fixed constants, Type I censoring when all the \(Z_{i}\)'s are the same constant, and Type II censoring if \(Z_{i} = X_{r:n}\) for all i. The first estimator proposed by Sankaran and Nair [515] is

where

and \(T_{0:n} \equiv 0\). From Parzen [486], it follows that \(q_{n}(p)\) is asymptotically exponential with mean q(p). Since this limiting distribution does not degenerate at q(p), \(q_{n}(p)\) is not a consistent estimator of q(p), and neither is \(\hat{H}(p)\) of H(p).
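To see why an asymptotically exponential limit rules out consistency, the sketch below takes the uncensored special case, in which we assume \(q_{n}(p)\) reduces to n times the order-statistic spacing that straddles p, with \(X_{0:n} = 0\) (the censored definition is not reproduced above). For uniform data q(p) = 1, yet the variance of \(q_{n}(p)\) stays near 1 instead of shrinking as n grows. Function names are hypothetical.

```python
import math
import random
import statistics


def raw_quantile_density(sorted_x, p):
    """Uncensored q_n(p) = n (X_{i:n} - X_{i-1:n}) for (i-1)/n < p <= i/n, X_{0:n} = 0."""
    n = len(sorted_x)
    i = min(max(math.ceil(n * p), 1), n)
    previous = sorted_x[i - 2] if i >= 2 else 0.0
    return n * (sorted_x[i - 1] - previous)


def replicate(n, p, reps, seed=0):
    """Mean and variance of q_n(p) over `reps` U(0, 1) samples, for which q(p) = 1."""
    rng = random.Random(seed)
    values = []
    for _ in range(reps):
        xs = sorted(rng.random() for _ in range(n))
        values.append(raw_quantile_density(xs, p))
    return statistics.fmean(values), statistics.variance(values)


for n in (50, 500):
    mean, var = replicate(n, 0.5, 500)
    print(f"n={n}: mean={mean:.3f}, variance={var:.3f}")
```

For uniform data, \(n(U_{i:n} - U_{i-1:n})\) is n times a Beta(1, n) variable, whose distribution approaches the unit exponential, so the spread persists for every n.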

A second estimator has been proposed using a real-valued function K(·) such that

(i) \(K(x) \geq 0\) for all x and \(\int K(x)\,dx = 1\),

(ii) K(x) has finite support, i.e., K(x) = 0 for \(\vert x\vert > c\) for some constant c > 0,

(iii) K(x) is symmetric about zero,

(iv) K(x) satisfies the Lipschitz condition

$$\displaystyle{\vert K(x) - K(y)\vert \leq M\vert x - y\vert }$$

for some constant M.

Further, let \(\{h_{n}\}\) be a sequence of positive numbers such that \(h_{n} \to 0\) as \(n \to \infty\). Define a new estimator as

$$\displaystyle\begin{array}{rcl} H_{n}(p)& =& \frac{1} {h_{n}}\int _{0}^{1} \frac{1} {[1 - F_{n}(Q_{n}(t))]\,q_{n}(t)}K\Big(\frac{t - p} {h_{n}} \Big)dt \qquad (9.12)\\ & =& \frac{1} {h_{n}}\sum _{i=1}^{n} \frac{1} {{[1 - F_{n}(T_{i:n})]}^{n}[T_{i:n} - T_{i-1:n}]}\int _{S_{i-1:n}}^{S_{i:n} }K\Big(\frac{t - p} {h_{n}} \Big)dt, \qquad (9.13)\end{array}$$

where

$$\displaystyle{S_{i:n} = \left \{\begin{array}{@{}l@{\quad }l@{}} 0, \quad &i = 0 \\ F_{n}(T_{i:n}),\quad &i = 1,2,\ldots,n - 1 \\ 1, \quad &i = n.\end{array} \right.}$$

When \(S_{i:n} - S_{i-1:n}\) is small, by the first mean value theorem, (9.13) is approximately equal to

When no censoring is present, \(S_{i:n} - S_{i-1:n} = \frac{1}{n}\) for all i. When heavy censoring is present, \(S_{n:n} - S_{n-1:n}\) can be large, so that \(H_{n}^{*}(p)\) need not be a good approximation to \(H_{n}(p)\).
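Assuming, as is usual in the random censorship model, that \(F_{n}\) is the Kaplan–Meier product-limit estimator, the points \(S_{i:n}\) can be computed as in the sketch below (the function name is hypothetical). The two calls contrast an uncensored sample, where every increment equals 1/n, with a heavily censored one, where most of the probability mass ends up in the final increment.

```python
def km_jump_points(times, deltas):
    """Return S_{0:n}, ..., S_{n:n} with S_0 = 0, S_i = F_n(T_{i:n}) for 1 <= i <= n - 1, S_n = 1.

    F_n is taken to be the Kaplan-Meier estimator; deltas[j] = 1 marks a failure and 0 a
    censored time. At tied times failures are processed first, one at a time, so the value
    after the last tied failure equals the product-limit estimate at that time.
    """
    n = len(times)
    order = sorted(range(n), key=lambda j: (times[j], -deltas[j]))
    survival = 1.0
    s = [0.0]
    for rank, j in enumerate(order):
        at_risk = n - rank
        if deltas[j] == 1:
            survival *= 1.0 - 1.0 / at_risk
        s.append(1.0 - survival)
    s[n] = 1.0  # convention S_{n:n} = 1
    return s


no_censoring = km_jump_points([3.0, 1.0, 4.0, 2.0, 5.0], [1, 1, 1, 1, 1])
heavy = km_jump_points([1.0, 2.0, 3.0, 4.0, 5.0], [1, 0, 0, 0, 0])
print([round(b - a, 3) for a, b in zip(no_censoring, no_censoring[1:])])
print([round(b - a, 3) for a, b in zip(heavy, heavy[1:])])
```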

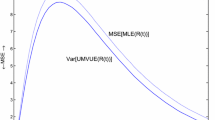

When F is continuous and K(·) satisfies Conditions (i)–(iv) given above, the estimator \(H_{n}(p)\) is uniformly strongly consistent, and, for 0 < p < 1, \(\sqrt{n}\,(H_{n}(p) - H(p))\) is asymptotically normal with mean zero and variance

A simulation study was carried out to compare \(H_{n}(p)\) and \(\hat{H}(p)\) in small samples in terms of mean squared error. The random censorship model with \(F(t) = 1 - e^{-\lambda t}\) was used for various values of λ. Observations were censored using the uniform distribution U(0, 1) with probability 0.3, so that 30% of the observations were censored. As the kernel function, the triangular density

was used. The ratios of the mean squared error of \(\hat{H}(p)\) to that of H n (p) were compared in the study, which revealed the following points:

(a) \(H_{n}(p)\) gave reasonable performance for \(h_{n} \leq 0.50\);

(b) when \(0.05 < h_{n} < 0.50\), for each value of p there is a range of bandwidths \(h_{n}\) for which \(H_{n}(p)\) has smaller mean squared error; for large values of \(h_{n}\), the choice \(h_{n} = 0.15\) gives the smallest discrepancy between the two estimators;

(c) the two estimators \(\hat{H}(p)\) and \(H_{n}(p)\) do not perform well when p is large.

The method of estimation has also been illustrated with real data by Sankaran and Nair [515].

9.7 Estimation of Percentile Residual Life

As we have seen in the preceding chapters, the residual life distribution plays a fundamental role in inferring the remaining lifetime of a device, given that it has survived a fixed time in operation. The percentiles of the residual life distribution give the percentile residual life function, which is defined, together with its quantile form, in (2.19). Classes of lifetime distributions based on monotone percentile residual life functions have been discussed in Sect. 4.3. According to the definition in (2.19), the (1 − p)th percentile residual life function is

As before, assume that \(X_{1:n} \leq \ldots \leq X_{n:n}\) are the ordered observations in a random sample of size n from the distribution with quantile function Q(p). The sample analogue of (9.14) is then

Csorgo and Csorgo [160] have discussed the asymptotic distribution of \(p_{n}(x)\) in three cases: (a) as a stochastic process in x for fixed 0 < p < 1, (b) as a stochastic process in p for fixed x > 0, and (c) as a two-parameter process in (p, x). Using the density function of X, define

where B is a Brownian bridge over (0, 1). For a fixed x > 0, H(x) is distributed as \(N(0,p(1 - p)(1 - F(x)))\). For a fixed p, provided q is positive and continuous at \(1 - p(1 - F(x))\), \(r_{n}(x)\) is asymptotically \(N(0,p(1 - p)(1 - F(x)))\). In addition, if f(x) > 0 on (Q(1 − p), ∞) and, for some r > 0,

and f(x) is ultimately non-increasing as x → ∞, then almost surely

with

A smooth version of the empirical estimator has been studied by Feng and Kulasekera [196]. Building on this, Alam and Kulasekera [33] established that, under the above assumptions and as n → ∞,

is asymptotically normal as

and also

almost surely. Since

and

the asymptotic normal distribution has mean zero and variance \(v_{\lambda}(x)\). Here K(·) denotes the kernel, which the authors assume to be a probability density function centred at the origin. The efficiency of Csorgo's estimator \(r_{n}(x)\) relative to \(\bar{r}_{n}(x)\) is now

The term μ(u, w) occurring in (9.16) is

Assuming that

we have

and

as n → ∞. In the above results,

and

The asymptotic value of the normalized difference between \(\bar{r}_{n}(x)\) and the empirical estimator is given by

where

Alam and Kulasekera [33] have also presented a Monte Carlo study with exponential and Weibull underlying distributions, using the uniform density over [−1, 1] as the kernel. The study showed that the kernel estimator gives better results for moderate sample sizes and the chosen values of λ.

Further properties of \(P_{n}(x)\) have been given by Csorgo and Mason [162], Aly [34] and Csorgo and Viharos [163].

Pereira et al. [491] have studied properties of the class of distributions with decreasing percentile residual life (DPRL). They introduced a nonparametric estimator of P(x) based on the fact that

Thus, the estimator of P(x) is given by

where \(I_{(x,\infty)}\) denotes the indicator function of the interval (x, ∞). Note that \(\tilde{P}(x)\) is the largest decreasing function that lies below the empirical \(P_{n}(x)\). In practice, \(\tilde{P}(x)\) can be computed easily as follows. Let \(X_{1:n} \leq \ldots \leq X_{n:n}\) be the ordered observations in a random sample of size n from F(x), let k be the number of distinct values in the sample, and let \(Y_{1} <\ldots < Y_{k}\) be these distinct values in increasing order. Then, the estimate \(\tilde{P}(x)\) is given by

The strong uniform consistency of the estimator \(\tilde{P}(x)\) is presented in the following theorem.

Theorem 9.11.

Let X be a random variable having the DPRL property. If the distribution function F(x) of X has a continuous positive density function f(x) such that \(\inf_{0 \leq p \leq 1} f(Q(p)) > 0\), then \(\tilde{P}(x)\) is a strongly uniformly consistent estimator of P(x).

Similarly, to estimate P(x) under the constraint that it is increasing, a suitable modification of the estimator in (9.15) can be used; this estimator is also strongly uniformly consistent.
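The sketch below assumes, consistently with the argument \(1 - p(1 - F(x))\) in the Csorgo and Csorgo result above, that the (1 − p)th percentile residual life is \(P(x) = Q(1 - p(1 - F(x))) - x\), and that \(P_{n}(x)\) replaces Q and F by their empirical versions. The monotone estimate is computed directly as the running infimum \(\inf_{0 \leq y \leq x} P_{n}(y)\), i.e. the largest non-increasing function lying below \(P_{n}\) on [0, ∞). This restates the description above rather than transcribing the formula of Pereira et al. [491], and the function names are hypothetical.

```python
import bisect
import math


def empirical_prl(x, sorted_x, p):
    """P_n(x) = Q_n(1 - p (1 - F_n(x))) - x with empirical F_n and Q_n (assumed form)."""
    n = len(sorted_x)
    fn = bisect.bisect_right(sorted_x, x) / n          # F_n(x)
    u = 1.0 - p * (1.0 - fn)
    k = min(max(math.ceil(n * u), 1), n)               # Q_n(u) = X_{ceil(n u):n}
    return sorted_x[k - 1] - x


def decreasing_prl(x, sorted_x, p):
    """inf_{0 <= y <= x} P_n(y): the largest non-increasing function below P_n (x >= 0)."""
    best = empirical_prl(x, sorted_x, p)
    left = 0.0
    for y in sorted(set(sorted_x)):                    # distinct values Y_1 < ... < Y_k
        if y > x:
            break
        # Between jumps P_n(t) = c - t, so on [left, y) its infimum is the
        # left-hand limit c - y at the next distinct observation y.
        c = empirical_prl(left, sorted_x, p) + left
        best = min(best, c - y)
        left = y
    return best


xs = sorted([1.0, 2.0, 3.0, 4.0])
print([round(decreasing_prl(t / 2, xs, 0.5), 2) for t in range(9)])
```

Because \(P_{n}\) decreases with slope −1 between observations and jumps up at them, only the left-hand limits at the distinct observations and the current value need to be compared, which is why the loop runs over \(Y_{1}, \ldots, Y_{k}\).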

9.8 Modelling Failure Time Data

In this and the subsequent sections, we consider various aspects of modelling lifetime data using distributions. As a problem-solving activity, statistical concepts expressed in terms of quantile functions offer new perspectives that are not generally available in the distribution function approach, or at least provide an alternative approach with possibly different interpretations and almost equivalent results. Several factors have to be considered while constructing a model. Generally, the model builder will have some information about the phenomenon under consideration or will be able to extract some features from a preliminary assessment of the observations. The background information about the variables and possible distributions, along with the level of detail required for the analysis, are crucial points. The choice of an appropriate model also depends on the data available to ensure its adequacy and on the method of estimation of the parameters. Finally, parsimony favours a simpler model over a more complex one. For example, models with fewer parameters, or with functional forms of simpler structure (such as constant or linear rather than nonlinear), are easier to build and analyse. Qualities such as tractability, ease of analysis and ease of interpretation are often prime considerations, but they should be consistent with the ability of the model to represent the essential features of the life distribution inherent in the observations. In practice, model building involves three essential steps: identification of an appropriate model, fitting the model, and checking its adequacy.

9.9 Model Identification

Model choice proceeds by trying out possible candidates and choosing the best among them. We have seen in previous chapters (see Table 1.1 and the review of bathtub models in Chap. 7) that a plethora of lifetime distributions have been proposed in the distribution function approach, which complicates the initial choice. A generalized family may fit in many practical situations, but more parsimonious alternatives that allow easier analysis and interpretation may exist. The problem is less severe when quantile functions are used: the generalized lambda distribution (Sect. 3.2.1) and the generalized Tukey lambda family (Sect. 3.2.2) can handle a wide variety of practical problems, since they provide reasonable approximations to many continuous distributions (see the discussion of their structural properties in Chap. 3). The reliability properties of these models, methods of estimating their parameters and examples of fitting them (Sect. 3.6) have been described in detail in Chap. 3. When several models fit the data, the one that more closely captures the observed features of the relevant reliability characteristic may be preferred; this characteristic may be the hazard quantile function, the mean residual quantile function or any other function for which the fitted model is intended. We can also use models from the distribution function approach that have tractable quantile functions. Checking the admissible range of skewness and kurtosis values of a proposed distribution indicates whether it covers the distributional shape of the observations: the sample skewness and kurtosis coefficients should lie within the ranges attainable by the chosen model.
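As one quantile-friendly way of making the skewness and kurtosis check, the sketch below computes the sample L-moments and the L-moment ratios τ3 (L-skewness) and τ4 (L-kurtosis) from the unbiased probability weighted moments \(b_{0}, \ldots, b_{3}\), using Hosking's standard relations. These are one possible choice of shape measures, not necessarily the ones intended in the text, and the admissible ranges for particular models are not reproduced here. The function name is hypothetical.

```python
import math


def sample_l_moments(data):
    """Return (l1, l2, tau3, tau4) from the unbiased probability weighted moments b_0..b_3."""
    x = sorted(data)
    n = len(x)
    if n < 4:
        raise ValueError("need at least four observations")
    b0 = b1 = b2 = b3 = 0.0
    for i, xi in enumerate(x):              # xi is the (i+1)th order statistic
        w1 = i / (n - 1)                    # (j-1)/(n-1) with j = i + 1
        w2 = w1 * (i - 1) / (n - 2)         # (j-1)(j-2)/((n-1)(n-2))
        w3 = w2 * (i - 2) / (n - 3)         # (j-1)(j-2)(j-3)/((n-1)(n-2)(n-3))
        b0 += xi
        b1 += w1 * xi
        b2 += w2 * xi
        b3 += w3 * xi
    b0, b1, b2, b3 = b0 / n, b1 / n, b2 / n, b3 / n
    l1 = b0
    l2 = 2 * b1 - b0
    l3 = 6 * b2 - 6 * b1 + b0
    l4 = 20 * b3 - 30 * b2 + 12 * b1 - b0
    return l1, l2, l3 / l2, l4 / l2


# Idealised exponential sample x_i = Q(i/(n+1)) with Q(u) = -log(1 - u).
n = 2000
xs = [-math.log(1 - i / (n + 1)) for i in range(1, n + 1)]
print(sample_l_moments(xs))
```

For the exponential distribution the population values are τ3 = 1/3 and τ4 = 1/6, so the printed ratios give a quick sanity check before the sample values are compared with a candidate model's attainable region.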

Another useful way to arrive at a realistic model is to compare one of the basic reliability functions that uniquely determine the life distribution with its sample counterpart. The hazard quantile function, the mean residual quantile function, etc., can be used for this purpose. To use the hazard quantile function, recall its definition

Let \(X_{1:n} \leq X_{2:n} \leq \ldots \leq X_{n:n}\) be an ordered set of observations on failure times. Then, the quantile function of the distribution of \(X_{r:n}\) is (see (1.26))

If U is uniformly distributed over (0, 1), then X = Q(U) has quantile function Q(u). Hence, the ordered values \(u_{(r)}\) of a uniform sample lead to \(x_{r:n} = Q(u_{(r)})\). So, as an approximation to \(u_{(r)}\), either the mean

or the median

or equivalently

can be used. Gilchrist [215] refers to the function \(I^{-1}\) as BETAINV and points out that it is a standard function in most spreadsheets and statistical software. The empirical quantile density function \(\hat{q}(u)\) can then be obtained from the data and the median probabilities \(u_{r}^{*}\). Thus, from the above formula, \(\hat{q}(u_{r}^{*})\) can be plotted against \(u_{r}^{*}\). Once a graph of

is obtained, its functional form can be identified by comparing the plot with known forms of the hazard quantile function, such as those in Table 2.4 and in Chaps. 3 and 7.
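The identification steps above can be sketched as follows. Median ranks \(u_{r}^{*} = I^{-1}_{1/2}(r, n - r + 1)\) are found by bisection, using the identity that the Beta(r, n − r + 1) distribution function at u equals the probability that a Binomial(n, u) variable is at least r, so only the standard library is needed (a BETAINV-type routine would serve equally well). The quantile density is then approximated by forward differences of the ordered data against the median ranks, and \(H(u) = 1/((1 - u)q(u))\) is evaluated at each \(u_{r}^{*}\). The finite-difference choice and the function names are our own assumptions.

```python
import math


def median_rank(r, n, tol=1e-12):
    """u_r^* solving I_u(r, n - r + 1) = 1/2, i.e. P(Binomial(n, u) >= r) = 1/2."""
    def beta_cdf(u):
        return sum(math.comb(n, k) * u ** k * (1 - u) ** (n - k) for k in range(r, n + 1))

    lo, hi = 0.0, 1.0
    while hi - lo > tol:                  # beta_cdf is increasing in u
        mid = 0.5 * (lo + hi)
        if beta_cdf(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def empirical_hazard_quantile(data):
    """Pairs (u_r^*, H_r), r = 1..n-1, with H_r = 1 / ((1 - u_r^*) qhat_r), where
    qhat_r = (x_{r+1:n} - x_{r:n}) / (u_{r+1}^* - u_r^*) is a forward difference."""
    x = sorted(data)
    n = len(x)
    u = [median_rank(r, n) for r in range(1, n + 1)]
    pairs = []
    for r in range(n - 1):
        dx = x[r + 1] - x[r]
        if dx <= 0:
            continue                      # tied observations give no finite slope
        qhat = dx / (u[r + 1] - u[r])
        pairs.append((u[r], 1.0 / ((1.0 - u[r]) * qhat)))
    return pairs


# Idealised exponential sample placed at its median ranks: H(u) should hover near 1.
n = 20
sample = [-math.log(1 - median_rank(r, n)) for r in range(1, n + 1)]
for u_r, h_r in empirical_hazard_quantile(sample):
    print(f"{u_r:.3f}  {h_r:.3f}")
```

Plotting the printed pairs and comparing the shape (constant, increasing, bathtub, and so on) with the catalogued hazard quantile forms is the identification step described in the text.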

A third approach to model identification is to start with a simple model and then modify it to accommodate the features of the data. The properties of quantile functions described in Sect. 1.2 can assist in this regard. For example, the power distribution has an increasing hazard quantile function H(u), while the Pareto II has a decreasing one. The product of their quantile functions gives the power-Pareto distribution discussed in Chap. 3, which has a highly flexible hazard quantile function. Various transformations can also be used to arrive at new models from the initially assumed one. An excellent discussion of these methods, with many illustrations, is available in Gilchrist [215].
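Assuming the power-Pareto parameterization \(Q(u) = Cu^{\lambda_{1}}(1 - u)^{-\lambda_{2}}\) (the boundary cases \(\lambda_{2} = 0\) and \(\lambda_{1} = 0\) isolate the power and Pareto-type factors), the hazard quantile function follows from \(q(u) = Q(u)\left(\frac{\lambda_{1}}{u} + \frac{\lambda_{2}}{1 - u}\right)\) and \(H(u) = 1/((1 - u)q(u))\). The sketch evaluates it on a grid so that the shapes produced by each factor, and by their product, can be compared; the function names are hypothetical.

```python
def power_pareto_quantile(u, C, lam1, lam2):
    """Q(u) = C u^lam1 (1 - u)^(-lam2), 0 < u < 1."""
    return C * u ** lam1 * (1 - u) ** (-lam2)


def power_pareto_hazard_quantile(u, C, lam1, lam2):
    """H(u) = 1 / ((1 - u) q(u)), with q(u) = Q(u) (lam1 / u + lam2 / (1 - u))."""
    q = power_pareto_quantile(u, C, lam1, lam2) * (lam1 / u + lam2 / (1 - u))
    return 1.0 / ((1 - u) * q)


grid = [k / 10 for k in range(1, 10)]
for lam1, lam2 in [(0.5, 0.0), (0.0, 0.5), (0.5, 0.5)]:
    values = [power_pareto_hazard_quantile(u, 1.0, lam1, lam2) for u in grid]
    print(lam1, lam2, [round(v, 3) for v in values])
```

With C = 1 and \(\lambda_{1} = \lambda_{2} = 0.5\), H(u) simplifies algebraically to \(2\sqrt{u(1 - u)}\), which first rises and then falls, a shape that neither factor produces on its own.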

9.10 Model Fitting and Validation

Once the data is collected and a specific model form is assumed, the next goal is to estimate the parameters, using one of the methods discussed in the preceding sections of this chapter. The remaining step in the model-building process is to ascertain whether the model with the estimated parameters describes the data adequately; this is called model validation. Since the parameters have been estimated from the data using some optimality criterion, the reproducibility of the model is enhanced if it is validated on another data set, when one exists. When the data set is large, part of it can be used for identification and fitting and the remainder for validation. In cross-validation, the roles of the fitting part and the validation part are interchanged and the two steps are repeated.
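A minimal sketch of split-sample validation with one swap (two-fold cross-validation) follows. For illustration only, it assumes an exponential model fitted by maximum likelihood and measures fit by the mean absolute gap between the ordered validation observations and the fitted quantiles at the plotting positions r/(n + 1); the model, the discrepancy measure, the alternating split and the function names are all our own choices.

```python
import math
import statistics


def fit_exponential_mean(sample):
    """Maximum likelihood estimate of the exponential mean, i.e. the sample mean."""
    return statistics.fmean(sample)


def qq_discrepancy(sample, theta):
    """Mean |x_(r) - Q(r/(n+1))| for the fitted exponential Q(u) = -theta log(1 - u)."""
    x = sorted(sample)
    n = len(x)
    gaps = []
    for r in range(n):
        fitted = -theta * math.log1p(-(r + 1) / (n + 1))
        gaps.append(abs(x[r] - fitted))
    return statistics.fmean(gaps)


def two_fold_validation(data):
    """Fit on one half, validate on the other, then swap the roles of the halves."""
    half_a, half_b = data[0::2], data[1::2]   # alternating split (an arbitrary choice)
    return [qq_discrepancy(half_b, fit_exponential_mean(half_a)),
            qq_discrepancy(half_a, fit_exponential_mean(half_b))]


# Idealised exponential sample used only to exercise the functions.
data = [-math.log(1 - i / 21) for i in range(1, 21)]
print(two_fold_validation(data))
```

Similar discrepancies in the two folds suggest that the fitted model is not merely reproducing the particular half of the data it was estimated from.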

There are graphical methods to assess goodness of fit. One is the Q-Q plot and the other is the box plot mentioned in Chap. 1. Q-Q plots were illustrated in the modelling of real data using the lambda, power-Pareto and Govindarajulu distributions in Chap. 3; see, for example, Figs. 3.7–3.9. An advantage of the Q-Q plot is that it can be used specifically to compare the tail areas. For example, we can compare \(x_{(r)}\) with the fitted quantiles at levels such as 0.05, 0.90 or 0.95, using the median rankits

The recent work of Balakrishnan et al. [52], which constructs optimal plotting points based on Pitman closeness and compares their performance in goodness-of-fit assessment with that of other plotting points, is of special interest here. A second method is to apply a goodness-of-fit test such as the chi-square test. Suppose the n observations are divided into m groups of equal expected frequency. Take \(u_{r} = \frac{r}{m}\), \(r = 0,1,\ldots,m\), so that \(u_{0} = 0\) and \(u_{m} = 1\). If \(p_{r} =\hat{ Q}(u_{r})\) and \(f_{r}\) is the frequency of observations in \((p_{r-1},p_{r}]\), \(r = 1,\ldots,m\), then the expected value of \(f_{r}\) is \(\frac{n}{m}\) for every r. Hence, the statistic

has approximately a chi-square distribution with m − 1 degrees of freedom. This scheme is easier to apply than the conventional chi-square procedure. For elaborate details on chi-squared tests and their power properties, one may refer to the recent book by Voinov et al. [575]. The general references on different forms of goodness-of-fit tests by D’Agostino and Stephens [165] and Huber-Carol et al. [289] also provide valuable information in this regard. See also Gilchrist [214, 215] for methods of estimation when quantile functions are used in modelling statistical data.
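The equal-probability grouping described above can be sketched as follows, assuming the statistic takes the usual Pearson form \(\sum_{r}(f_{r} - n/m)^{2}/(n/m)\), since the display is not reproduced above. Here `fitted_Q` stands for whatever fitted quantile function \(\hat{Q}\) is being checked; the exponential quantile in the example and the function names are illustrative assumptions.

```python
import bisect
import math


def equal_prob_chi_square(data, fitted_Q, m):
    """Pearson statistic over m cells (p_{r-1}, p_r] with p_r = fitted_Q(r/m).

    Every cell has expected count n/m, so only the m - 1 interior cut points
    p_1, ..., p_{m-1} need to be evaluated.
    """
    n = len(data)
    cuts = [fitted_Q(r / m) for r in range(1, m)]
    counts = [0] * m
    for x in data:
        counts[bisect.bisect_left(cuts, x)] += 1    # x <= p_1 goes to the first cell, etc.
    expected = n / m
    stat = sum((f - expected) ** 2 for f in counts) / expected
    return stat, counts


def exponential_quantile(u):
    """Illustrative fitted quantile function Q(u) = -log(1 - u)."""
    return -math.log(1 - u)


sample = [exponential_quantile(i / 101) for i in range(1, 101)]
print(equal_prob_chi_square(sample, exponential_quantile, 5))
```

Because the cut points come from \(\hat{Q}\) itself, no distribution function has to be inverted, which is what makes this scheme convenient when a model is specified only through its quantile function.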

References

Abdul-Moniem, I.B.: L-moments and TL-moments estimation of the exponential distribution. Far East J. Theor. Stat. 23, 51–61 (2007)

Alam, K., Kulasekera, K.B.: Estimation of the quantile function of residual lifetime distribution. J. Stat. Plann. Infer. 37, 327–337 (1993)

Aly, E.E.A.A.: On some confidence bands for percentile residual life function. J. Nonparametr. Stat. 2, 59–70 (1992)

Asquith, W.H.: L-moments and TL-moments of the generalized lambda distribution. Comput. Stat. Data Anal. 51, 4484–4496 (2007)

Babu, G.J.: Efficient estimation of the reciprocal of the density quantile function at a point. Stat. Probab. Lett. 4, 133–139 (1986)

Bahadur, R.R.: A note on quantiles in large samples. Ann. Math. Stat. 37, 577–580 (1966)

Balakrishnan, N., Davies, K., Keating, J.P., Mason, R.L.: Computation of optimal plotting points based on Pitman closeness with an application to goodness-of-fit for location-scale families. Comput. Stat. Data Anal. 56, 2637–2649 (2012)

Bloch, D.A., Gastwirth, J.L.: On a simple estimate of the reciprocal of the density function. Ann. Math. Stat. 39, 1083–1085 (1968)

Bofinger, E.: Estimation of a density function using order statistics. Aust. J. Stat. 17, 1–7 (1975)

Buhamra, S.S., Al-Kandari, N.M., Ahmed, S.E.: Nonparametric inference strategies for the quantile functions under left truncation and right censoring. J. Nonparametr. Stat. 21, 1–10 (2007)

Chadjiconstantinidis, S., Antzoulakos, D.L.: Moments of compound mixed Poisson distribution. Scand. Actuarial J. 3, 138–161 (2002)

Cheng, C.: Almost sure uniform error bounds of general smooth estimator of quantile density functions. Stat. Probab. Lett. 59, 183–194 (2002)

Cheng, K.F.: On almost sure representation for quantiles of the product limit estimator with applications. Sankhyā 46, 426–443 (1984)

Ciumara, R.: L-moment evaluation of identically and nonidentically Weibull distributed random variables. In: Proceedings of the Romanian Academy of Sciences, vol. A-8 (2007)

Csorgo, M., Csorgo, S.: Estimation of percentile residual life. Oper. Res. 35, 598–606 (1987)

Csorgo, S., Mason, D.M.: Bootstrapping empirical functions. Ann. Stat. 17, 1447–1471 (1989)

Csorgo, S., Viharos, L.: Confidence bands for percentile residual lifetimes. J. Stat. Plann. Infer. 30, 327–337 (1992)

D’Agostino, R.B., Stephens, M.A.: Goodness-of-Fit Techniques. Marcel Dekker, New York (1986)

Delicado, P., Goria, M.N.: A small sample comparison of maximum likelihood, moments and L-moments methods for the asymmetric exponential power distribution. Comput. Stat. Data Anal. 52, 1661–1673 (2008)

Elamir, E.A.H., Seheult, A.H.: Trimmed L-moments. Comput. Stat. Data Anal. 43, 299–314 (2003)

Falk, M.: On the estimation of the quantile density function. Stat. Probab. Lett. 4, 69–73 (1986)

Feng, Z., Kulasekera, K.B.: Nonparametric estimation of the percentile residual life function. Comm. Stat. Theor. Meth. 20, 87–105 (1991)

Furrer, R., Naveau, P.: Probability weighted moments properties for small samples. Stat. Probab. Lett. 77, 190–195 (2007)

Gilchrist, W.G.: Modelling with quantile functions. J. Appl. Stat. 24, 113–122 (1997)

Gilchrist, W.G.: Statistical Modelling with Quantile Functions. Chapman and Hall/CRC Press, Boca Raton (2000)

Gingras, D., Adamowski, K.: Performance of flood frequency analysis. Can. J. Civ. Eng. 21, 856–862 (1994)

Guttman, N.B.: The use of L-moments in the determination of regional precipitation climates. J. Clim. 6, 2309–2325 (1993)

Harrell, F.E., Davis, D.E.: A new distribution free quantile estimator. Biometrika 69, 635–640 (1982)

Hosking, J.R.M.: L-moments: analysis and estimation of distributions using linear combinations of order statistics. J. Roy. Stat. Soc. B 52, 105–124 (1990)

Hosking, J.R.M.: Moments or L-moments? An example comparing two measures of distributional shape. The Am. Stat. 46, 186–189 (1992)

Hosking, J.R.M.: The use of L-moments in the analysis of censored data. In: Balakrishnan, N. (ed.) Recent Advances in Life Testing and Reliability, pp. 545–564. CRC Press, Boca Raton (1995)

Hosking, J.R.M.: On the characterization of distributions by their L-moments. J. Stat. Plann. Infer. 136, 193–198 (2006)

Huber-Carol, C., Balakrishnan, N., Nikulin, M.S., Mesbah, M. (eds.): Goodness-of-Fit Tests and Model Validity. Birkhäuser, Boston (2002)

Jones, M.C.: Estimating densities, quantiles, quantile densities and density quantiles. Ann. Inst. Stat. Math. 44, 721–727 (1992)

Kaigh, W.D., Lachenbruch, P.A.: A generalized quantile estimator. Comm. Stat. Theor. Meth. 11, 2217–2238 (1982)

Kaplan, E.L., Meier, P.: Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 53, 457–481 (1958)

Karvanen, J.: Estimation of quantile mixture via L-moments and trimmed L-moments. Comput. Stat. Data Anal. 51, 947–959 (2006)

Kiefer, J.: On Bahadur’s representation of sample quantiles. Ann. Math. Stat. 38, 1323–1342 (1967)

Landwehr, J.M., Matalas, N.C.: Probability weighted moments compared with some traditional techniques in estimating Gumbel parameters and quantiles. Water Resour. Res. 15, 1055–1064 (1979)

Lawless, J.F.: Construction of tolerance bounds for the extreme-value and Weibull distributions. Technometrics 17, 255–261 (1975)

Mann, N.R., Fertig, K.W.: Efficient unbiased quantile estimators for moderate-size complete samples from extreme-value and Weibull distributions; confidence bounds and tolerance and prediction intervals. Technometrics 19, 87–93 (1977)

Nair, U.S.: The standard error of Gini’s mean difference. Biometrika 34, 151–155 (1936)

Parzen, E.: Concrete statistics. In: Ghosh, S., Schucany, W.R., Smith, W.B. (eds.) Statistics of Quality. Marcel Dekker, New York (1997)

Pearson, C.P.: Application of L-moments to maximum river flows. New Zeal. Stat. 28, 2–10 (1993)

Pereira, A.M.F., Lillo, R.E., Shaked, M.: The decreasing percentile residual life ageing notion. Statistics 46, 1–17 (2011)

Rojo, J.: Nonparametric quantile estimators under order constraints. J. Nonparametr. Stat. 5, 185–200 (1995)

Rojo, J.: Estimation of the quantile function of an IFRA distribution. Scand. J. Stat. 25, 293–310 (1998)

Sankaran, P.G., Nair, N.U.: Nonparametric estimation of the hazard quantile function. J. Nonparametr. Stat. 21, 757–767 (2009)

Sankarasubramonian, A., Sreenivasan, K.: Investigation and comparison of L-moments and conventional moments. J. Hydrol. 218, 13–34 (1999)

Serfling, R.J.: Approximation Theorems of Mathematical Statistics. Wiley, New York (1980)

Shapiro, S.S., Gross, A.J.: Statistical Modelling Techniques. Marcel Dekker, New York (1981)

Voinov, V., Nikulin, M.S., Balakrishnan, N.: Chi-Squared Goodness-of-Fit Tests with Applications. Academic, Boston (2013)

Wang, D., Hutson, A.D., Miecznikowski, J.C.: L-moment estimation for parametric survival models. Stat. Methodol. 7, 655–667 (2010)

Xiang, X.: A law of the logarithm for kernel quantile density estimators. Ann. Probab. 22, 1078–1091 (1994)

Zhou, Y., Yip, P.S.F.: Nonparametric estimation of quantile density functions for truncated and censored data. J. Nonparametr. Stat. 12, 17–39 (1999)


© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Nair, N.U., Sankaran, P.G., Balakrishnan, N. (2013). Estimation and Modelling. In: Quantile-Based Reliability Analysis. Statistics for Industry and Technology. Birkhäuser, New York, NY. https://doi.org/10.1007/978-0-8176-8361-0_9


DOI: https://doi.org/10.1007/978-0-8176-8361-0_9


Publisher Name: Birkhäuser, New York, NY

Print ISBN: 978-0-8176-8360-3

Online ISBN: 978-0-8176-8361-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)