Abstract

The total time on test transform is essentially a quantile-based concept developed in the early 1970s. Apart from its applications in reliability problems, it has also been found useful in other areas like stochastic modelling, maintenance scheduling, risk assessment of strategies and energy sales. When several units are tested for studying their life lengths, some of the units would fail while others may survive the test period. The sum of all observed and incomplete life lengths is the total time on test statistic. As the number of units on test tends to infinity, the limit of this statistic is called the total time on test transform (TTT). The definitions and properties of these two concepts are discussed and the functional forms of TTT for several life distributions are presented in Table 5.1. We discuss the Lorenz curve, Bonferroni curve and the Leimkuhler curve, which are closely related to the TTT. Identities connecting various curves, characterizations of distributions in terms of these curves and their relationships with various reliability functions are detailed subsequently. In view of the ageing classes in the quantile set-up introduced in Chap. 4, it is possible to characterize these classes in terms of TTT. Accordingly, we give necessary and sufficient conditions for IHR, IHRA, DMRL, NBU, NBUE, HNBUE, NBUHR, NBUHRA, IHRA*\(t_0\), UBAE, DMRLHA, DVRL, and NBU-\(t_0\) classes in terms of the total time on test transform. Another interesting property of the TTT is that it uniquely determines the lifetime distribution. There have been several generalizations of the TTT. We discuss these extensions and their properties, with special reference to the TTT of order n. Relationships between the reliability functions of the baseline model and those of the TTT of order n (which is also a quantile function) are described and then utilized to describe the pattern of ageing of the transformed distributions. Some life distributions are characterized.
The discussion of the applications of TTT in modelling includes derivation of the L-moments and other descriptive measures of the original distribution. Some of the areas in reliability engineering that widely use TTT are preventive maintenance, availability, replacement problems and burn-in strategies.


5.1 Introduction

The concept of total time on test transform (TTT) was studied in the early 1970s; see, e.g., Barlow and Doksum [67] and Barlow et al. [65]. When several units are tested for studying their life lengths, some of the units would fail while others may survive the test duration. The sum of all observed and incomplete life lengths is generally visualized as the total time on test statistic. When the number of items placed on test tends to infinity, the limit of this statistic is called the total time on test transform. A formal definition of these two concepts will be introduced in the next section. The TTT is essentially a quantile-based concept, although it is discussed often in the literature in terms of F(x).

Many papers on TTT concentrate on reliability and its engineering applications. These include the analysis of life lengths and new classes of ageing; see Abouammoh and Khalique [9], Ahmad et al. [25] and Kayid [318]. A special characteristic of TTT is that the basic ageing properties can be interpreted and determined through it. The works of Barlow and Campo [66], Bergman [89], Klefsjö [334], Abouammoh and Khalique [9] and Perez-Ocon et al. [492] are all of this nature. Properties of TTT were used for construction of bathtub-shaped distributions by Haupt and Schabe [266] and Nair et al. [447]. Much of the literature has focused on developing test procedures, most of which are for testing exponentiality against alternatives like IHR, IHRA, NBUE, DMRL and HNBUE. For this, one may refer to Bergman [90], Klefsjö [335, 336], Kochar and Deshpande [348], Aarset [1], Xie [592, 593], Bergman and Klefsjö [96], Wei [579] and Ahmad et al. [25].

Applications of TTT can be found in a variety of fields. Of these, the role of TTT in reliability engineering will be taken up separately in Sect. 5.5. The optimal quantum of energy that may be sold under long-term contracts using TTT is discussed in Campo [125] and risk assessment of strategies in Zhao et al. [601]. TTT plotting of censored data (Westberg and Klefsjö [578]), problem of repairable limits (Dohi et al. [180]), normalized TTT plots and spacings (Ebrahimi and Spizzichino [183]), maintenance scheduling (Kumar and Westberg [357], Klefsjö and Westberg [340]), estimation in stationary observations (Csorgo and Yu [161]) and stochastic modelling (Vera and Lynch [573]) are some of the other topics discussed in the context of total time on test.

5.2 Definitions and Properties

We now give formal definitions of various concepts based on total time on test.

Definition 5.1.

Suppose n items are under test and successive failures are observed at \(X_{1:n} \leq X_{2:n} \leq \ldots \leq X_{n:n}\), and let \(X_{r:n} < t \leq X_{r+1:n}\), where the \(X_{r:n}\)'s are order statistics from the distribution of a lifetime random variable X with absolutely continuous distribution function F(x). Then, the total time on test statistic during (0, t) is defined as

$$\displaystyle{ \tau (t) = nX_{1:n} + (n - 1)(X_{2:n} - X_{1:n}) + \cdots + (n - r + 1)(X_{r:n} - X_{r-1:n}) + (n - r)(t - X_{r:n}). }$$(5.1)

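The above expression is arrived at by noting that the test time observed between 0 and \(X_{1:n}\) is \(nX_{1:n}\), that between \(X_{1:n}\) and \(X_{2:n}\) is \((n - 1)(X_{2:n} - X_{1:n})\) and so on, and finally that between \(X_{r:n}\) and t is \((n - r)(t - X_{r:n})\). Also, the total time up to the rth failure is

$$\displaystyle{ \tau (X_{r:n}) = nX_{1:n} + (n - 1)(X_{2:n} - X_{1:n}) + \cdots + (n - r + 1)(X_{r:n} - X_{r-1:n}). }$$

It may also be noted that (5.1) is equivalent to

$$\displaystyle{ \tau (t) =\sum _{ j=1}^{r}X_{j:n} + (n - r)t. }$$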

Definition 5.2.

The quantity

$$\displaystyle{ \phi _{r:n} = \frac{\tau (X_{r:n})} {\tau (X_{n:n})} }$$

is called the scaled total time on test statistic (scaled TTT statistic).
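As a concrete illustration of Definitions 5.1 and 5.2, the following Python sketch evaluates the total time on test at each failure and its scaled version for a small complete sample. It uses the telescoped form \(\tau (X_{r:n}) =\sum _{j\leq r}X_{j:n} + (n - r)X_{r:n}\) of the test-time pieces described above and divides by the sample total \(n\bar{X}_{n}\). The function name `ttt_statistics` and the data are hypothetical and serve only as an example.

```python
def ttt_statistics(sample):
    """Return (tau, phi): total time on test at each failure and its scaled version."""
    x = sorted(sample)              # order statistics X_{1:n} <= ... <= X_{n:n}
    n = len(x)
    total = sum(x)                  # equals n times the sample mean
    tau, phi = [], []
    running = 0.0                   # sum of the first r order statistics
    for r, xr in enumerate(x, start=1):
        running += xr
        # failed units contribute their lifetimes, the n - r survivors contribute X_{r:n}
        t = running + (n - r) * xr
        tau.append(t)
        phi.append(t / total)
    return tau, phi


tau, phi = ttt_statistics([5.0, 1.0, 3.0, 2.0])   # hypothetical lifetimes
print(tau)   # total time on test at the successive failures
print(phi)   # scaled values; the last one is 1 by construction
```

Plotting the pairs (r/n, phi[r-1]) and joining them by straight lines gives the TTT-plot for the sample.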

Noting that \(\bar{X}_{n} = \frac{1} {n}(X_{1:n} +\ldots +X_{n:n})\) is the sample mean of the n order statistics, we have \(\phi _{r:n} = \frac{\tau (X_{r:n})} {n\bar{X}_{n}}\). The empirical distribution function defined in terms of the order statistics is

If there exists an inverse function

we can verify that

and

uniformly in u belonging to [0, 1]. The expression on the right side of (5.4), viz.,

is called the total time on test transform. Accordingly we have, with a slightly different notation T(u) for \(H_{F}^{-1}(u)\), the following definition.

Definition 5.3.

The TTT of a lifetime random variable X is defined as

$$\displaystyle{ T(u) =\int _{ 0}^{u}(1 - p)q(p)dp. }$$(5.6)

Example 5.1.

The linear hazard quantile function family of distributions specified by

(see Chap. 2) has

and so, from (5.6), we find

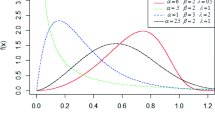

The expressions for TTT for some specific life distributions are presented in Table 5.1.
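The transform can also be evaluated numerically when a closed form is not at hand. The sketch below approximates \(T(u) =\int _{0}^{u}(1 - p)q(p)dp\) by the midpoint rule and applies it to the exponential distribution, for which \(q(p) = {[\lambda (1 - p)]}^{-1}\) and hence T(u) = u∕λ. The helper name `ttt_transform`, the rate value and the step count are assumptions made for illustration.

```python
def ttt_transform(q, u, steps=20000):
    """Approximate T(u) = integral_0^u (1 - p) q(p) dp with the midpoint rule."""
    h = u / steps
    return h * sum((1.0 - (i + 0.5) * h) * q((i + 0.5) * h) for i in range(steps))


lam = 2.0                                      # hypothetical exponential rate


def q_exp(p):
    """Quantile density of the exponential distribution with rate lam."""
    return 1.0 / (lam * (1.0 - p))


for u in (0.25, 0.5, 0.9):
    # numerical value next to the closed form u / lam
    print(u, ttt_transform(q_exp, u), u / lam)
```

Dividing by the mean 1∕λ gives the scaled value u, that is, the diagonal of the unit square.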

Some important properties of the TTT in (5.6) are the following:

1. T(0) = 0, T(1) = μ. T(u) is an increasing function if and only if F is continuous. In this case, T(u) is a quantile function and the corresponding distribution is called the transformed distribution;

2. The baseline distribution F is uniquely determined by T(u). To see this, we differentiate (5.6) to get

$$\displaystyle{ T^{\prime}(u) = (1 - u)q(u), }$$(5.7)

and thence

$$\displaystyle{Q(u) =\int _{ 0}^{u} \frac{T^{\prime}(p)} {1 - p}dp;}$$

3. From Table 5.1, we see that the graph of the TTT of the exponential distribution is the diagonal line in the unit square;

4. Many identities exist between T(u) and the basic reliability functions introduced earlier in Sects. 2.3–2.6. Directly from (5.7) and (2.30), we have

$$\displaystyle{ T^{\prime}(u) = \frac{1} {H(u)}. }$$(5.8)

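Again from (2.35), we find

$$\displaystyle{ (1 - u)M(u) =\int _{ u}^{1}(1 - p)q(p)dp =\mu -T(u) }$$

and consequently

$$\displaystyle{ T(u) =\mu -(1 - u)M(u), }$$(5.9)

which relates TTT and the mean residual quantile function. On the other hand, from (2.46), we find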

and hence

or equivalently

Next, with regard to functions in reversed time, we have

or

and so

Also from (2.50), the reversed mean residual quantile function satisfies

and

and consequently

Finally, we use the reversed variance quantile function

to write

These relationships are used in the next section to characterize the ageing properties in terms of total time on test transform.
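Property 2 above can be checked numerically. The sketch below recovers Q(u) from the derivative of the transform through \(Q(u) =\int _{0}^{u}T^{\prime}(p){(1 - p)}^{-1}dp\), using the exponential case T(u) = u∕λ, for which the exact answer is \(Q(u) = {-\lambda }^{-1}\log (1 - u)\). The function name `quantile_from_ttt` and the rate value are hypothetical.

```python
import math


def quantile_from_ttt(t_prime, u, steps=20000):
    """Approximate Q(u) = integral_0^u T'(p) / (1 - p) dp with the midpoint rule."""
    h = u / steps
    mids = ((i + 0.5) * h for i in range(steps))
    return h * sum(t_prime(p) / (1.0 - p) for p in mids)


lam = 2.0                                  # hypothetical exponential rate


def t_prime(p):
    """Derivative of T(u) = u / lam, which is constant in p."""
    return 1.0 / lam


u = 0.5
recovered = quantile_from_ttt(t_prime, u)
exact = -math.log(1.0 - u) / lam           # exponential quantile function
print(recovered, exact)
```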

Definition 5.4.

We say that

$$\displaystyle{ \phi (u) = \frac{T(u)} {\mu } }$$

is the scaled total time on test transform, or scaled transform in short, of the random variable X.

Definition 5.5.

The plot of the points \(( \frac{r} {n},\phi _{r:n})\), \(r = 1,2,\ldots,n\), when connected by consecutive straight lines, is called the TTT-plot.

The statistic \(\frac{1} {n}\tau (X_{r:n})\) converges uniformly in u to the TTT as n → ∞ and \(\frac{r} {n} \rightarrow u\). Now, we present the asymptotic distribution, which is due to Barlow and Campo [66]. Let \(\phi _{r,n} = \left \{\phi _{n}(p) = \frac{H_{n}^{-1}(p)} {H_{n}^{-1}(1)},\ 0 \leq p \leq 1\right \}\) be the scaled TTT process. Define

for \(\frac{j-1} {n} \leq p \leq \frac{j} {n}\) and 1 ≤ j ≤ n, with \(S_{n}(0) = S_{n}(1) = 0\). Upon using

we see that

converges to

with probability one and uniformly in 0 ≤ u ≤ 1 as n → ∞, where \(\nu _{n}(u)\) puts mass \(\frac{1} {n}\) at \(u = \frac{j} {n}\). Next,

where [t] denotes the greatest integer contained in t. Then,

with

and

In the above, \(\{A(u),0 \leq u \leq 1\}\) is the Brownian bridge process.

5.3 Relationships with Other Curves

The similarity between the Lorenz curve used in economics and the TTT and the corresponding results have been discussed by Chandra and Singpurwalla [134] and Pham and Turkkan [493]. If X is a non-negative random variable with finite mean, the Lorenz curve is defined as

$$\displaystyle{ L(u) = \frac{1} {\mu } \int _{0}^{u}Q(p)dp,\quad 0 \leq u \leq 1, }$$(5.15)

which is itself a continuous distribution function with L(0) = 0 and L(1) = 1. It is a bow-shaped curve below the diagonal of the unit square. It is used as a measure of inequality in economics: the more the bow is bent, the greater the inequality. Also, L(u) is convex, increasing and is such that L(u) ≤ u, 0 ≤ u ≤ 1. The Lorenz curve determines the distribution F up to a scale factor. Two well-known measures of inequality that are related to the Lorenz curve are the Gini index and the Pietra index. There are many analytic expressions for calculating the Gini index, including

$$\displaystyle{ G = 1 - 2\int _{0}^{1}L(u)du = 2\int _{ 0}^{1}(u - L(u))du. }$$

In addition,

$$\displaystyle{ G = 1 -\frac{E(X_{1:2})} {\mu }, }$$

where \(X_{1:2}\) is the smaller of the two observations in a sample of size 2 from the population.

Next, the Pietra index is obtained from the maximum vertical deviation between L(u) and the line L(u) = u, given by

$$\displaystyle{ P =\max _{0\leq u\leq 1}\{u - L(u)\}. }$$

It can be seen that P is \(\frac{1} {2\mu }\int _{0}^{\infty }\vert x -\mu \vert f(x)dx\), half the relative mean deviation. A detailed account of the results concerning L(u) and G can be found in Kleiber and Kotz [341].
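The Lorenz curve and the two indices can be approximated directly from a quantile function. The sketch below assumes the standard forms \(L(u) {=\mu }^{-1}\int _{0}^{u}Q(p)dp\), \(G = 1 - 2\int _{0}^{1}L(u)du\) and \(P =\max _{u}\{u - L(u)\}\), and applies them to the unit exponential distribution, for which \(G = \frac{1} {2}\) and \(P = {e}^{-1}\). The function name `lorenz_curve` and the grid size are assumptions for illustration.

```python
import math


def lorenz_curve(Q, m=100000):
    """Return grid points u_k = k/m and midpoint-rule values of L(u_k)."""
    h = 1.0 / m
    cum = [0.0]                                # running integral of Q over [0, u_k]
    for i in range(m):
        cum.append(cum[-1] + h * Q((i + 0.5) * h))
    mu = cum[-1]                               # numerical mean, integral of Q over [0, 1]
    us = [k * h for k in range(m + 1)]
    return us, [c / mu for c in cum]


def q_unit_exponential(p):
    """Quantile function of the exponential distribution with mean 1."""
    return -math.log(1.0 - p)


us, L = lorenz_curve(q_unit_exponential)
h = us[1] - us[0]
# trapezoid rule for the area under L, then G = 1 - 2 * area
gini = 1.0 - 2.0 * h * (sum(L) - 0.5 * (L[0] + L[-1]))
# largest vertical gap between the diagonal and L on the grid
pietra = max(u - l for u, l in zip(us, L))
print(gini, pietra)
```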

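The cumulative Lorenz curve of X is given by

$$\displaystyle{ CL(u) =\int _{ 0}^{1}L(p)dp = \frac{1} {\mu } \int _{0}^{1}\left \{\int _{ 0}^{u}Q(p)dp\right \}du. }$$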

Chandra and Singpurwalla [133] observed that both L(u) and \({L}^{-1}(u)\) are distribution functions, L is convex, \({L}^{-1}\) is concave, and that L(u) is related to the mean residual life function m(x). In the quantile set-up, the Lorenz curve can be related to all the basic reliability functions. For example, we have from (2.34) and (5.15) that

and so

Now, H(u) is recovered from (2.37) and V (u) from (2.46), after substituting for M(u). A much simpler expression results for the reversed mean residual quantile function R(u) as

Also,

and

Example 5.2.

The Pareto distribution is one of the basic distributions used in modelling income data and it plays a role similar to the exponential distribution in reliability. Its quantile function is (Table 1.1)

and so we obtain the following expressions:

The functions Λ(u), R(u) and D(u) can be found similarly.

Chandra and Singpurwalla [134] obtained the following relationships between T(u), L(u) and the sample analogs corresponding to them:

-

(a)

$$\displaystyle{ T(u) = (1 - u)Q(u) +\mu L(u). }$$(5.19)

Equation (5.19) is obtained by integrating by parts the right-hand side of (5.6) and then using (5.15). Since Q(u) = μ L ′(u), (5.19) has the alternative form

$$\displaystyle{T(u) =\mu [(1 - u)L^{\prime}(u) + L(u)],}$$or equivalently

$$\displaystyle{\phi (u) = (1 - u)L^{\prime}(u) + L(u).}$$Now, upon treating the last relationship as a linear differential equation in u and solving it, we obtain an integral expression for L(u) as

$$\displaystyle{L(u) = (1 - u)\int _{0}^{u} \frac{\phi (p)} {{(1 - p)}^{2}}dp.}$$ -

(b)

We also have

$$\displaystyle{C\phi (u) = 2CL(u),}$$where \(C\phi (u) =\int _{ 0}^{1}\phi (p)dp = \frac{1} {\mu } \int _{0}^{1}T(p)\,dp\) is called the cumulative total time on test transform. To establish the above assertion, we note that

$$\displaystyle\begin{array}{rcl} \int _{0}^{1}\int _{ 0}^{u}Q(p)\,dp\,du& =& -\int _{ 0}^{1}\left ( \frac{d} {du}(1 - u)\right )\left (\int _{0}^{u}Q(p)dp\right )du {}\\ & =& \int _{0}^{1}(1 - u)Q(u)du\text{ (by partial integration),} {}\\ \int _{0}^{u}(1 - p)q(p)dp& =& (1 - u)Q(u) +\int _{ 0}^{u}Q(p)dp. {}\\ \end{array}$$Thus, we get

$$\displaystyle\begin{array}{rcl} C\phi (u)& =& \frac{1} {\mu } \int _{0}^{1}\int _{ 0}^{u}(1 - p)q(p)dpdu {}\\ & =& \frac{1} {\mu } \int _{0}^{1}\left \{(1 - u)Q(u) +\int _{ 0}^{u}Q(p)dp\right \}du {}\\ & =& \frac{1} {\mu } \int _{0}^{1}\left \{\int _{ 0}^{u}Q(p)dp\right \}du + \frac{1} {\mu } \int _{0}^{1}\left \{\int _{ 0}^{u}Q(p)dp\right \}du {}\\ & =& 2CL(u), {}\\ \end{array}$$as required.

-

(c)

\(G = 1 - C\phi (u)\), which is seen as follows:

$$\displaystyle\begin{array}{rcl} G& =& 1 - {2\mu }^{-1}\int _{ 0}^{1}\left \{\int _{ 0}^{u}Q(p)dp\right \}du {}\\ & =& 1 - 2CL(u) = 1 - C\phi (u)\text{ (by using (b)).} {}\\ \end{array}$$If we denote the sample Lorenz curve and the sample Gini index by

$$\displaystyle{L_{n}(u) = \frac{\sum _{r=1}^{[nu]}X_{r:n}} {\sum _{r=1}^{n}X_{r:n}},}$$and

$$\displaystyle{G_{n} = \frac{\sum _{r=1}^{n-1}r(n - r)(X_{r+1:n} - X_{r:n})} {(n - 1)\sum _{r=1}^{n}X_{r:n}},}$$respectively, and the cumulative total time on test statistic by

$$\displaystyle{V _{n} = \frac{1} {n - 1}\sum _{r=1}^{n-1}\phi _{ r:n},}$$then we have

$$\displaystyle{\phi _{r:n} = L_{n}\left ( \frac{r} {n}\right ) + \frac{(n - r)X_{r:n}} {\sum _{j=1}^{n}X_{j:n}} }$$and

$$\displaystyle{V _{n} = 1 - G_{n}.}$$Chandra and Singpurwalla [134] also pointed out the potential of the Lorenz curve in comparing the heterogeneity in survival data and also in characterizing the extremes of life distributions. The latter aspect is illustrated by the following theorem.

Theorem 5.1.

If X is IHR with mean μ, then

and if X is DHR with mean μ, then

Here, F and G are the distribution functions of X and exponential variable with same mean μ, respectively, and D is the distribution degenerate at μ.

The distribution which is degenerate at μ has h(x) = ∞ at μ and so \(L_{D}(u) = u\) characterizes distributions which are most IHR. Likewise, distributions with L(u) = 0 for u < 1 and L(u) = 1 for u = 1 are the most DHR.
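The sample identity \(V _{n} = 1 - G_{n}\) given earlier in this section is exact for every sample and is easy to confirm numerically. The following sketch computes the scaled TTT statistics \(\phi _{r:n}\), the sample Gini index \(G_{n}\) and the cumulative total time on test statistic \(V _{n}\) from their definitions; the function name `sample_identities` and the data are hypothetical.

```python
def sample_identities(sample):
    """Return (phi, gini, v) for a complete sample of lifetimes."""
    x = sorted(sample)                         # order statistics
    n = len(x)
    total = sum(x)
    # scaled TTT statistic phi_{r:n}, r = 1, ..., n
    phi, running = [], 0.0
    for r, xr in enumerate(x, start=1):
        running += xr
        phi.append((running + (n - r) * xr) / total)
    # sample Gini index G_n; x[r] is X_{r+1:n} and x[r - 1] is X_{r:n}
    gini = sum(r * (n - r) * (x[r] - x[r - 1]) for r in range(1, n)) / ((n - 1) * total)
    # cumulative total time on test statistic V_n (average of phi_{1:n}, ..., phi_{n-1:n})
    v = sum(phi[:n - 1]) / (n - 1)
    return phi, gini, v


phi, gini, v = sample_identities([5.0, 1.0, 3.0, 2.0])   # hypothetical lifetimes
print(gini, v, 1.0 - gini)
```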

Pham and Turkkan [493] established more results in this direction. They pointed out that ϕ(u) strictly increases in the unit square with ϕ(0) = 0 and ϕ(1) = 1. Moreover,

-

(a)

\(\phi (F(\mu )) = 1 -\frac{E(\vert X -\mu \vert )} {2\mu }\);

-

(b)

\(\phi (\text{Med}\,X) = \frac{1} {2} + \frac{(\text{Med}\,X-E\vert X-\text{Med}\,X\vert )} {2\mu }\);

-

(c)

In the unit square, the area between ϕ(u) and L(u) equals the area below L(u). The area above ϕ(u) is G;

-

(d)

\(L(u) = (1 - u)\int _{0}^{u} \frac{\phi (p)} {{(1 - p)}^{2}}dp\);

-

(e)

If X is NBUE, then the Pietra index is less than the reliability at μ and E( | X − Med X | ) < Med X;

-

(f)

When \(0 \leq G < \frac{1} {2}\) \((\frac{1} {2} < G \leq 1)\) and F(x) is a family of IHR (DHR) distributions with common mean μ, F(x) becomes more IHR (DHR) when L(u) gets closer to the diagonal and ϕ(u) gets closer to the upper (lower) side. Further, when \(G = \frac{1} {2}\), F(x) is exponential. When \(0 \leq P < {e}^{-1}\), X is IHR and the closer P is to zero, the more IHR X becomes. X is exponential when \(P = {e}^{-1}\). Also, \({e}^{-1} < P < 1\) provides DHR, with P → 1 corresponding to the most DHR.

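Another curve that has been used in the context of income inequality is the Bonferroni curve. For a non-negative random variable X, the first moment distribution of X is defined by the distribution function

$$\displaystyle{ F_{1}(x) = \frac{1} {\mu } \int _{0}^{x}tf(t)dt. }$$

The Bonferroni curve is defined in the orthogonal plane as \((F(x),B_{1}(x))\) within the unit square, where

$$\displaystyle{ B_{1}(x) = \frac{F_{1}(x)} {F(x)}. }$$

In terms of quantile functions, we have

$$\displaystyle{ B(u) = \frac{1} {\mu u}\int _{0}^{u}Q(p)dp = \frac{L(u)} {u}. }$$(5.20)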

One may refer to Giorgi [218], Giorgi and Crescenzi [219] and Pundir et al. [498] and the references therein for a study of (5.20) and its properties. As u → 0, B(u) has the indeterminate form \(\frac{0} {0}\) and hence the curve does not begin from the origin. It is strictly increasing but can be convex or concave in parts of the plane. Several results concerning B 1(x) have been given by Pundir et al. [498]. We now make a comparative study of B(u) with L(u) and ϕ(u). First, we note that B(u) characterizes the distribution of X through

Also,

and

or equivalently

Solving (5.22) as a linear differential equation, we get

relating scaled TTT and the Bonferroni curve. Equation (5.20) verifies

and hence

by virtue of (5.21). Rewriting the above equation as

and solving it, we see that B(u) is uniquely determined by M(u) as

A more concise relationship exists between B(u) and the reversed mean residual quantile function R(u) in the form

As in the case of L(u), all other reliability functions can be derived using the relations they have with M(u) and R(u). Pundir et al. [498] showed that the Bonferroni index

is such that

The Leimkuhler curve, which is closely related to the Lorenz curve, has also been discussed recently for its relationships with the reliability functions. It is used in economics as a plot of cumulative proportion of productivity against cumulative proportion of sources and is also used in studying concentration of bibliometric distributions in information sciences. A general definition of the curve is given in Sarabia [518] and methods of generating such curves have been detailed in Sarabia et al. [519]. Balakrishnan et al. [60] have pointed out the relationships between reliability functions and the Leimkuhler curve. The Leimkuhler curve is defined in terms of quantile function as

$$\displaystyle{ K(u) = \frac{1} {\mu } \int _{1-u}^{1}Q(p)dp. }$$

Evidently,

and so K(u) characterizes the distribution of X. The relation in (5.23) gives

Similarly, from

and the definition of R(u), we obtain

Since

upon combining the expressions, we obtain

Regarding the geometric properties, it is seen from the definition that K(u) is continuous, concave and increasing with K(0) = 0 and K(1) = 1. The main difference between the Lorenz curve and the Leimkuhler curve K(u) is that in the Lorenz curve the sources are arranged in increasing order of productivity, while in the Leimkuhler curve the sources are arranged in decreasing order. The expressions of B(u), L(u) and K(u) for some distributions are presented in Table 5.2.
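For a quick comparison of the three curves, the following sketch derives the Bonferroni and Leimkuhler curves from a given Lorenz curve through the standard quantile forms B(u) = L(u)∕u and \(K(u) = 1 - L(1 - u)\). It uses the uniform distribution on (0, 1), where Q(u) = u, \(\mu = \frac{1} {2}\) and \(L(u) = {u}^{2}\), so that B(u) = u and \(K(u) = 2u - {u}^{2}\). The function names are hypothetical.

```python
def bonferroni(L, u):
    """B(u) = L(u) / u for 0 < u <= 1."""
    return L(u) / u


def leimkuhler(L, u):
    """K(u) = 1 - L(1 - u): sources are taken in decreasing order of productivity."""
    return 1.0 - L(1.0 - u)


def lorenz_uniform(u):
    """Lorenz curve of the uniform distribution on (0, 1)."""
    return u * u


for u in (0.1, 0.3, 0.7):
    # L lies below the diagonal, K lies above it
    print(u, lorenz_uniform(u), bonferroni(lorenz_uniform, u), leimkuhler(lorenz_uniform, u))
```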

5.4 Characterizations of Ageing Concepts

In this section, we discuss the role of TTT in detecting different ageing properties. In this regard, the new definitions offered below in terms of TTT provide alternative ways of interpreting and analysing lifetime data. The proofs given here assume that F is continuous and strictly increasing.

Theorem 5.2 (Barlow and Campo [66]).

A lifetime random variable X is IHR (DHR) if and only if the scaled transform ϕ(u) is concave (convex) for 0 ≤ u ≤ 1.

From (5.8), we have

$$\displaystyle{ T^{\prime}(u) = \frac{1} {H(u)} }$$

and so

$$\displaystyle{ T^{\prime\prime}(u) = -\frac{H^{\prime}(u)} {{H}^{2}(u)}. }$$

Thus, H′(u) is positive (negative), that is, X is IHR (DHR), if and only if T″(u) is negative (positive). This is equivalent to the concavity (convexity) of T(u) or ϕ(u). It now follows that if ϕ(u) has an inflexion point \(u_{0}\) such that \(0 < u_{0} < 1\) and ϕ(u) is convex (concave) on \([0,u_{0}]\), and concave (convex) on \([u_{0},1]\), then X has a bathtub (upside-down bathtub)-shaped hazard quantile function. This can be used for constructing life distributions with BT (UBT) hazard quantile functions.
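Theorem 5.2 suggests a simple numerical check of the ageing behaviour. The sketch below tabulates the scaled transform on a grid by the midpoint rule and inspects the signs of its second differences for two Weibull distributions with unit scale, \(Q(u) = {(-\log (1 - u))}^{1/\lambda }\): shape 2 (IHR, so ϕ should be concave) and shape 0.5 (DHR, so ϕ should be convex). The function names, grid size and tolerance are assumptions for illustration, not part of the original treatment.

```python
import math


def scaled_ttt_grid(q, m=2000):
    """Midpoint-rule values of phi(k/m), k = 0, ..., m, for quantile density q."""
    h = 1.0 / m
    T = [0.0]
    for i in range(m):
        p = (i + 0.5) * h
        T.append(T[-1] + h * (1.0 - p) * q(p))   # T'(p) = (1 - p) q(p)
    return [t / T[-1] for t in T]                # divide by the numerical mean T(1)


def weibull_q(shape):
    """Quantile density of the unit-scale Weibull distribution with the given shape."""
    return lambda p: (-math.log(1.0 - p)) ** (1.0 / shape - 1.0) / (shape * (1.0 - p))


def second_differences(v):
    return [v[i + 1] - 2.0 * v[i] + v[i - 1] for i in range(1, len(v) - 1)]


# concave scaled transform for shape 2, convex for shape 0.5
concave = all(d <= 1e-12 for d in second_differences(scaled_ttt_grid(weibull_q(2.0))))
convex = all(d >= -1e-12 for d in second_differences(scaled_ttt_grid(weibull_q(0.5))))
print(concave, convex)
```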

Barlow and Campo [66] have also shown that if X is IHRA (DHRA), then \(\frac{\phi (u)} {u}\) is decreasing (increasing) in 0 < u < 1. This condition is not sufficient as seen from the following life distribution (Barlow [64]) which is not IHRA, but at the same time \(\frac{\phi (u)} {u}\) is decreasing:

In this regard, we have the following results.

Theorem 5.3 (Asha and Nair [39]).

A necessary and sufficient condition for X to be DMTTF (IMTTF) is that \(\frac{\phi (u)} {u}\) is decreasing (increasing).

Theorem 5.4.

A necessary and sufficient condition for X to be IHRA (DHRA) is that

where t(u) = T′(u).

The proof follows from (5.7), (5.8) and the definition of IHRA distributions.

Remark 5.1.

Since T(u) is the quantile function of the transformed distribution, t(u) is the corresponding quantile density function. From (5.7), \(t(u) = (1 - u)q(u)\) and so (5.24) is equivalent to

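Bergman [89] has proved that X is NBUE (NWUE) if and only if ϕ(u) ≥ u (ϕ(u) ≤ u). Since \((1 - u)M(u) =\mu -T(u)\), this follows from

$$\displaystyle{ M(u) \leq \mu \;\Longleftrightarrow\;\mu -T(u) \leq \mu (1 - u)\;\Longleftrightarrow\;\phi (u) \geq u. }$$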

The proof in the case of NWUE involves simply reversing the inequalities.

Theorem 5.5 (Klefsjö [333]).

A lifetime random variable X is

-

(a)

DMRL (IMRL) if and only if \(\frac{1-\phi (u)} {1-u}\) is decreasing (increasing) in u;

-

(b)

HNBUE (HNWUE) if and only if

$$\displaystyle{\phi (u) \geq (\leq )1 -\exp \left [-\frac{Q(u)} {\mu } \right ],\quad 0 \leq u \leq 1.}$$

These results are direct consequences of (5.9) and the definition of HNBUE (HNWUE).

In view of the definitions of ageing concepts in the quantile set-up in Chap. 4 and the identities between T(u), Q(u), H(u) and M(u), more ageing classes can be characterized in terms of T(u) or ϕ(u) as follows.

Theorem 5.6.

A lifetime random variable X is

-

(a)

NBUHR (NWUHR) if and only if t(u) ≤ (≥)t(0);

-

(b)

NBUHRA (NWUHRA) if and only if \(-\frac{\log (1-u)} {Q(u)} \leq (\geq )t(0)\);

-

(c)

IHRA*\(t_{0}\) if and only if

$$\displaystyle{\int _{0}^{u} \frac{t(p)} {(1 - p)}dp \geq \frac{Q(u_{0})} {\log (1 - u_{0})}\log (1 - u)\text{ for all }u \geq u_{0};}$$ -

(d)

UBAE (UWAE) if and only if \(T(u) \leq (\geq )\mu - (1 - u)M(1)\), where \(M(1) =\lim _{u\rightarrow 1-}M(u)\) is finite;

-

(e)

DMRLHA (IMRLHA) if and only if

$$\displaystyle{- \frac{1} {Q(u)}\log (1 -\phi (u))}$$is increasing (decreasing) in u;

-

(f)

DVRL (IVRL) if and only if

$$\displaystyle{\int _{u}^{1}{\left (\frac{1 -\phi (p)} {1 - p} \right )}^{2}dp \leq (\geq )\frac{{(1 -\phi (u))}^{2}} {1 - u};}$$ -

(g)

NBU (NWU) if and only if

$$\displaystyle{\int _{0}^{u+v-uv}\frac{t(p)dp} {1 - p} \leq (\geq )Q(u) + Q(v),\;0 < u,v < 1,\;u + v - uv < 1;}$$ -

(h)

NBU-\(t_{0}\) (NWU-\(t_{0}\)) if and only if

$$\displaystyle{\int _{0}^{u+u_{0}-uu_{0}}\frac{t(p)dp} {1 - p} \leq (\geq )Q(u) + Q(u_{0})}$$for some \(0 < u_{0} < 1\) and all u;

-

(i)

NBU*u 0 (NWU*u 0 ) if and only if

$$\displaystyle{\int _{0}^{u+v-uv} \frac{t(p)} {1 - p}dp \leq (\geq )Q(u) + Q(v)}$$for some \(v \geq u_{0}\) and all u.

Note that in (g)–(i), Q(s) is evaluated as \(\int _{0}^{s}\frac{t(p)dp} {1-p}\).

Ahmad et al. [25] defined a new ageing class of life distributions called the new better than used in total time on test transform order (NBUT). They defined the class as distributions for which the inequality

is satisfied. It was proved that the NBUT class has the following preservation properties:

-

(i)

Let \(X_{1},X_{2},\ldots,X_{N}\) be a sequence of independent and identically distributed random variables and N be independent of the \(X_{i}\)'s. If the \(X_{i}\)'s are NBUT, so is \(\min (X_{1},X_{2},\ldots,X_{N})\);

-

(ii)

The NBUT class is preserved under the formation of series systems provided that the constituent lifetime variables are independent and identically distributed;

-

(iii)

If X 1, X 2 and X 3 are independent and identically distributed, then

$$\displaystyle{E\min (X_{1},X_{2},X_{3}) \geq \frac{2} {3}E\min (X_{1},X_{2}).}$$

This result is used to test exponentiality against non-exponential NBUT alternatives.
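The inequality in (iii) can be examined numerically through the quantile function, since the minimum of k independent copies of X has survival function \({(1 - F(x))}^{k}\) and hence \(E\min (X_{1},\ldots,X_{k}) =\int _{ 0}^{1}Q(u)k{(1 - u)}^{k-1}du\). The sketch below evaluates the ratio \(E\min (X_{1},X_{2},X_{3})/E\min (X_{1},X_{2})\) for the unit exponential distribution, where it equals \(\frac{2} {3}\) exactly, and for a Weibull distribution with shape 2 (an IHR case chosen only as an example), where the ratio is \(\sqrt{2/3}\). The function name and grid size are hypothetical.

```python
import math


def expected_minimum(Q, k, m=50000):
    """E min(X_1, ..., X_k) = integral_0^1 Q(u) k (1 - u)**(k - 1) du (midpoint rule)."""
    h = 1.0 / m
    total = 0.0
    for i in range(m):
        u = (i + 0.5) * h
        total += Q(u) * k * (1.0 - u) ** (k - 1)
    return h * total


def q_exponential(u):
    """Quantile function of the unit exponential distribution."""
    return -math.log(1.0 - u)


def q_weibull2(u):
    """Quantile function of the unit-scale Weibull distribution with shape 2."""
    return math.sqrt(-math.log(1.0 - u))


ratio_exponential = expected_minimum(q_exponential, 3) / expected_minimum(q_exponential, 2)
ratio_weibull = expected_minimum(q_weibull2, 3) / expected_minimum(q_weibull2, 2)
print(ratio_exponential, ratio_weibull)
```

Equality at the exponential distribution is what makes the difference between the two sides a natural basis for a test statistic.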

5.5 Some Generalizations

Several generalizations of the TTT have been proposed in the literature. The earliest one is that of Barlow and Doksum [67]. If F and G are absolutely continuous distribution functions with positive right continuous densities f and g, respectively, then the generalized total time on test transform is defined as

As before, \(H_{F}(\cdot )\) is a distribution function and \(H_{G}^{-1}(u) = u\), 0 ≤ u ≤ 1.

The generalized version can also be shown to possess properties similar to T(u). For instance, the density \(C_{F}\) of \(H_{F}\) is such that

where

is referred to as the generalized failure rate function. Further, if \(S_{n}(\cdot )\) is the empirical distribution function based on a sample of size n from life distribution F, then \(H_{F}^{-1}\) is estimated as

and so

for \(r = 1,2,\ldots,n\). Neath and Samaniego [468] proved that if G is exponential and F is IFRA, then \(\frac{H_{F}^{-1}(u)} {u}\) is decreasing in u. Many reliability properties of the generalized transform, like those of T(u), are still open problems. For a study of the order relations of the general form, we refer to Bartoszewicz [73]. Yet another extension due to Li and Shaked [388] is of the form

where h(u) is positive on (0, 1) and zero elsewhere. The usual TTT results when \(h(p) = 1 - p\). While the main focus of Li and Shaked [388] is on stochastic orders, they also point out some applications of the order considered by them in reliability context. Various results regarding orderings can be seen in Bartoszewicz [74, 75] and Bartoszewicz and Benduch [76].

In a slightly different direction, Nair et al. [447] studied higher order TTT by applying Definition 5.3, recursively, to the transformed distributions.

Definition 5.6.

The TTT transform of order n (TTT-n) of the random variable X is defined recursively as

$$\displaystyle{ T_{n}(u) =\int _{ 0}^{u}(1 - p)t_{n-1}(p)dp,\quad n = 1,2,\ldots, }$$(5.26)

where \(T_{0}(u) = Q(u)\) and \(t_{n}(u) = \frac{dT_{n}(u)} {du}\), provided that \(\mu _{n-1} =\int _{ 0}^{1}T_{n-1}(p)dp < \infty \).

The primary reasons for defining the above generalization are (i) the hierarchy of distributions generated by the iterative process reveals more clearly the reliability characteristics of the transformed models than that of T(u) and (ii) the results obtained from (5.26) subsume those for \(T(u) = T_{1}(u)\) and will generate new models and properties. We denote by \(Y_{n}\) the random variable with quantile function \(T_{n}(u)\), mean \(\mu _{n}\), hazard quantile function \(H_{n}(u)\), and mean residual quantile function \(M_{n}(u)\). Recall that T(u), the transform of order one, is a quantile function and consequently the successive transforms \(T_{n}\), \(n = 2,3,\ldots\), are also quantile functions with support \((0,\mu _{n-1})\), since \(T_{n}(1) =\mu _{n-1}\). Differentiating (5.26), we obtain the quantile density function of \(Y_{n}\) as

$$\displaystyle{ t_{n}(u) = (1 - u)t_{n-1}(u) = {(1 - u)}^{n}q(u) }$$

and hence

$$\displaystyle{ H_{n}(u) = \frac{1} {(1 - u)t_{n}(u)} = {(1 - u)}^{-n}H(u), }$$

where H(u) is the hazard quantile function of X = Y 0. Thus, we have an identity connecting the hazard quantile function of the baseline distribution F(x) of X and that of Y n in the form

Using (5.9), we have

or equivalently

This, along with \(t_{n+1}(u) = {(1 - u)}^{n}t_{1}(u)\) and

yields a relationship between the mean residual quantile functions of X and Y n as

Incidentally, the definition in (5.26) is also true for negative integers, since Q(u) can be thought of as a transform of \(T_{-1}(u)\) and so on. Thus,

and

A remarkable feature of the recurrent transform \(T_{n}(u)\) is that the sequence \(\langle H_{n}(u)\rangle\) increases for positive n and decreases for negative n. Thus, \(Y_{n}\) provides a life distribution whose failure rate is larger (smaller) than that of \(Y_{n-1}\) when n is positive (negative). It is therefore of interest to know and compare the ageing patterns of \(Y_{n}\) and \(Y_{n-1}\).
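The recursion can be made concrete with a short numerical sketch. Since \(t_{n+1}(u) = {(1 - u)}^{n}t_{1}(u)\) and \(t_{1}(u) = (1 - u)q(u)\), the transform of order n is \(T_{n}(u) =\int _{ 0}^{u}{(1 - p)}^{n}q(p)dp\) and the hazard quantile function of the transformed distribution is \({[(1 - u)t_{n}(u)]}^{-1}\). For the exponential distribution with rate λ this gives \(T_{n}(u) = {(n\lambda )}^{-1}[1 - {(1 - u)}^{n}]\). The function names and the rate value below are hypothetical.

```python
def ttt_order_n(q, n, u, steps=20000):
    """Approximate T_n(u) = integral_0^u (1 - p)**n q(p) dp (midpoint rule), n >= 0."""
    h = u / steps
    return h * sum((1.0 - (i + 0.5) * h) ** n * q((i + 0.5) * h) for i in range(steps))


def hazard_order_n(q, n, u):
    """Hazard quantile function of the order-n transform: 1 / ((1 - u) t_n(u))."""
    return 1.0 / ((1.0 - u) * (1.0 - u) ** n * q(u))


lam = 2.0                                      # hypothetical exponential rate


def q_exp(p):
    """Quantile density of the exponential distribution with rate lam."""
    return 1.0 / (lam * (1.0 - p))


u = 0.5
value = ttt_order_n(q_exp, 3, u)
closed_form = (1.0 - (1.0 - u) ** 3) / (3 * lam)
hazards = [hazard_order_n(q_exp, n, u) for n in range(4)]   # n = 0 is the baseline
print(value, closed_form, hazards)
```

The printed hazard values grow with n at the fixed point u, in line with the monotonicity of the sequence of hazard quantile functions noted above.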

Theorem 5.7.

(i) If X is IHR, then \(Y_{n}\) is IHR for all n;

(ii) If X is DHR, then \(Y_{n}\) is DHR (IHR) if \(Q(u) \geq (\leq )Q_{L}(k, \frac{1} {n})\) and is bathtub shaped if there exists a \(u_{0}\) for which \(Q(u) \geq Q_{L}(k, \frac{1} {n})\) in \([0,u_{0}]\) and \(Q(u) \leq Q_{L}(k, \frac{1} {n})\) in \([u_{0},1]\), where \(Q_{L}(\alpha,C)\) is the quantile function of the Lomax distribution (see Table 1.1).

Proof.

Since \(t_{n+1}(u) = {(1 - u)}^{n}t_{1}(u)\), we have

Thus,

Similarly, when X is DHR, T 1(u) is convex and accordingly

The last part follows from the definition of bathtub-shaped hazard quantile function in Chap. 4.

In a similar manner, by backward iteration of \(Q(u) = T_{0}(u)\) and using

we get the following result.

Theorem 5.8.

(i) If \(Y_{n}\) is DHR, then X is DHR;

(ii) If \(Y_{n}\) is IHR, then X is IHR (DHR) if \(T_{n}(u) \leq (\geq )Q_{B}(k{(n + 1)}^{-1},{(n + 1)}^{-1})\), and is upside-down bathtub shaped if there exists a \(u_{0}\) for which \(T_{n}(u) \leq Q_{B}(k{(n + 1)}^{-1},{(n + 1)}^{-1})\) in \([0,u_{0}]\) and \(T_{n}(u) \geq Q_{B}(k{(n + 1)}^{-1},{(n + 1)}^{-1})\) in \([u_{0},1]\). Here, \(Q_{B}(R,C)\) denotes the quantile function of the rescaled beta distribution.

Using Theorems 5.7 and 5.8, it is possible to construct BT and UBT distributions with finite range. Generation of BT distributions is facilitated by the choice of DHR distributions for which \(t_{n+1}(u)\) has a point of inflexion. On the other hand, IHR distributions can provide UBT models provided \(t_{n+1}(u)\) has an inflexion point for negative integers n. The following examples illustrate the procedure.

Example 5.3.

Consider the Weibull distribution with

In this case, we have

and

Hence,

Thus, when 0 < λ ≤ 1, \(T_{n+1}(u)\) is convex in \([0,u_{0}]\) and concave in \([u_{0},1]\), where

It follows that \(Y_{n}\) has BT hazard quantile function for n ≥ 1. Notice that with increasing values of n, the change point \(u_{0}\) becomes larger. For λ ≥ 1 and every n, \(Y_{n}\) is IHR.

Example 5.4.

The Burr distribution with k = 1 (see Table 1.1) has

and

Therefore, \(u_{0} = \frac{\frac{1} {\lambda } -1} {n-1}\) is a point of inflexion when nλ > 1. Thus, \(Y_{n}\) is BT in this case.

Theorem 5.9.

(i) X is DMRL implies that \(Y_{n}\) is DMRL;

(ii) \(Y_{n}\) is IMRL implies that X is IMRL.

Proof.

By Theorem 5.5, a necessary and sufficient condition for X to be DMRL is that \({(1 - u)}^{-1}(\mu -T_{1}(u))\) is decreasing in u. This condition is equivalent to

Further,

where

This gives

Hence, \(Y_{n}\) is DMRL according to (5.29). This proves (i) and the proof of (ii) follows similarly by taking n as a negative integer.

Theorem 5.10.

(i) X is IHRA implies that \(Y_{n}\) is IHRA;

(ii) \(Y_{n}\) is DHRA implies that X is DHRA.

Proof.

We prove only (i) since the proof of (ii) follows on the same lines. In view of Theorem 5.2, X is IHRA if and only if \({u}^{-1}T_{1}(u)\) is decreasing, or equivalently

Considering \(T_{n}(u)\), we can write

Result in (i) now follows by using (5.31).

Theorem 5.11.

(i) X is NBUE implies that \(Y_{n}\) is NBUE;

(ii) \(Y_{n}\) is NWUE implies that X is NWUE.

Proof.

Recall that X is NBUE if and only if \({\mu }^{-1}T_{1}(u) \geq u\) for all u. Hence,

which implies that \(Y_{n}\) is NBUE. Part (ii) follows similarly.

From the above theorems, it is evident that when X is ageing positively, the successive transforms are also ageing positively. Similar results can also be established in the case of other ageing concepts discussed in Chap. 4. It is important to mention that the converses of the above theorems need not be true (see next section).

5.6 Characterizations of Distributions

Various identities between the hazard quantile function, mean residual quantile function and the density quantile function of X and \(Y_{n}\) enable us to mutually characterize the distributions of X and \(Y_{n}\). A preliminary result is that \(T_{n}(u)\) characterizes the distribution of X. This follows from

and

The following theorems have been proved by Nair et al. [447].

Theorem 5.12.

The random variable \(Y_{n}\), \(n = 1,2,\ldots\), has rescaled beta distribution

if and only if X is distributed as either exponential, Lomax or rescaled beta.

Proof.

To prove the if part, we observe that in the exponential case

and

which is the quantile function of the rescaled beta distribution with parameters \(({(\lambda n)}^{-1},{n}^{-1})\) in the support \((0, \frac{1} {n\lambda })\). Similar calculations show that when X is Lomax, \(Y_{n}\) is rescaled beta \((\alpha {(nC - 1)}^{-1},C{(nC - 1)}^{-1})\) with support \((0,\alpha {(nC - 1)}^{-1})\), and when X is rescaled beta (R, C), \(Y_{n}\) has the same distribution with parameters \((R{(1 + nC)}^{-1},C{(1 + nC)}^{-1})\). Conversely, if we now assume that \(Y_{n}\) is rescaled beta, its quantile function has the form

for some constants \(R_{n}\) and \(C_{n} > 0\). This gives

The last equation means that \((1 - u)^{n}\) is a factor of the left-hand side, and so

for some real \(k_{n}\). Thus,

Since q(u) is independent of n, taking n = 1, we have

Thus, for \(k_{1} > 0\), X follows the rescaled beta distribution \((0,R_{1}k_{1}^{-1}(k_{1} + 1))\), the Lomax law for \(-1 < k_{1} < 0\), and the exponential distribution as \(k_{1} \rightarrow 0\). Hence, the theorem.
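The exponential case of the "if" part can be checked numerically. The sketch below is illustrative only: it assumes that the TTT of order n has derivative \((1 - u)^{n}q(u)\), so that \(T_{n}(u) =\int _{0}^{u}{(1 - p)}^{n}q(p)dp\), and that the rescaled beta quantile function is \(R[1 - {(1 - u)}^{1/C}]\). The function names and the value of λ are hypothetical choices made for the illustration.

```python
def ttt_order_n(q, n, u, steps=20000):
    """Midpoint-rule approximation of T_n(u) = int_0^u (1 - p)^n q(p) dp.

    Assumption of this sketch: the TTT of order n has derivative
    (1 - u)^n q(u), where q is the quantile density of X.
    """
    h = u / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * h
        total += (1.0 - p) ** n * q(p)
    return total * h


def rescaled_beta_quantile(u, R, C):
    """Assumed rescaled beta quantile function R * (1 - (1 - u)^(1/C))."""
    return R * (1.0 - (1.0 - u) ** (1.0 / C))


def exponential_quantile_density(lam):
    """Quantile density q(u) = 1 / (lam * (1 - u)) of the exponential law."""
    return lambda p: 1.0 / (lam * (1.0 - p))


if __name__ == "__main__":
    lam = 2.0  # hypothetical hazard rate, for illustration only
    q = exponential_quantile_density(lam)
    for n in (1, 2, 3):
        numeric = ttt_order_n(q, n, 0.5)
        closed = rescaled_beta_quantile(0.5, 1.0 / (n * lam), 1.0 / n)
        print(n, numeric, closed)
```

For each n the two printed values should agree to within the integration error, in line with the parameters \(({(\lambda n)}^{-1},{n}^{-1})\) stated in the proof.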

Theorem 5.13.

The random variable X follows the generalized Pareto distribution with quantile function (see Table 1.1 )

if and only if, for all \(n = 0,1,2,\ldots\) and 0 < u < 1,

Proof.

Assuming (5.33) to hold, we have

and then using the identity (5.29), we get

The above equation simplifies to

solving which we get

Noting that \(M(0) =\mu = b\), we have K = b. Since the mean residual quantile function determines the distribution uniquely, we see from (2.48) that X has a generalized Pareto distribution with parameters (a, b). Next, we assume that X has the specified generalized Pareto distribution. Then,

and

and so

Using the expression (see Table 2.5)

the relationship in (5.33) is easily verified. Hence, the theorem.

There are other directions in which characterizations can be established. For instance, the relationship T(u) has with any reliability function is a characteristic property. It is easy to see that the simple identity

holds true if and only if X follows the linear hazard quantile distribution. Recall that T(u) is also a quantile function representing some distribution. Thus, when X has a life distribution, the corresponding T(u) may also be the quantile function of a known life distribution. As an example, X follows the power distribution if and only if the associated T(u) corresponds to the Govindarajulu distribution.
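The "only if" direction of the last example can be seen from a short calculation. It is a sketch under two assumptions: the power distribution is taken with quantile function \(Q(u) =\alpha {u}^{b}\), \(b = 1/\beta\), and \(T(u) =\int _{0}^{u}(1 - p)q(p)dp\). The symbol b is introduced here only for convenience.

$$\displaystyle{T(u) =\int _{0}^{u}(1 - p)\,\alpha b\,{p}^{b-1}dp =\alpha {u}^{b} - \frac{\alpha b} {b + 1}{u}^{b+1} = \frac{\alpha } {b + 1}\left [(b + 1){u}^{b} - b{u}^{b+1}\right ]}$$

The right-hand side has the form \(\sigma [(b + 1){u}^{b} - b{u}^{b+1}]\) with \(\sigma =\alpha {(b + 1)}^{-1}\), which is the Govindarajulu quantile function with zero location parameter, assuming the usual parametrization of that family.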

5.7 Some Applications

A direct way to apply the TTT in data analysis is through model selection for observed data. One can either derive a model based on physical conditions or postulate one that gives a reasonable fit. The TTT can then be derived and the data analysed from it. An alternative approach is to start with a functional form of the TTT and then choose the parameter values that give a satisfactory fit to the observations. The main point here is that the functional form should be flexible enough to represent different data situations. Since many of the quantile functions discussed in Chap. 3 provide great flexibility, their TTTs are natural candidates for this purpose. In such cases, to compute the descriptive measures of the distribution, one need not convert the TTT back to the corresponding quantile function. We show that the descriptors can be obtained directly from T(u) and its derivative t(u).

For this purpose, we recall (1.38)–(1.41) and the identity \(t(u) = (1 - u)q(u)\). Then, the first four L-moments are as follows:

Example 5.5.

The quantile function of the generalized Pareto distribution (see Table 1.1) yields

Then, direct calculations using the above formulas result in

With these L-moments, descriptive measures like L-skewness and L-kurtosis can be readily derived from the formulas presented in Chap. 1.
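The computation of L-moments directly from t(u) can be sketched numerically. The weights used below are this sketch's own rearrangement of (1.38)–(1.41), obtained by integration by parts under the assumptions Q(0) = 0 and \((1 - u)Q(u) \rightarrow 0\) as u → 1: \(L_{1} =\int _{0}^{1}t(u)du\), \(L_{2} =\int _{0}^{1}u\,t(u)du\), \(L_{3} =\int _{0}^{1}u(2u - 1)t(u)du\) and \(L_{4} =\int _{0}^{1}u(5{u}^{2} - 5u + 1)t(u)du\). They are not necessarily written in the same form as the displayed formulas of the text, and the function name is hypothetical.

```python
def l_moments_from_t(t, steps=20000):
    """Approximate the first four L-moments from t(u) = (1 - u) q(u).

    Uses the midpoint rule on (0, 1) with the weights
    1, u, u(2u - 1) and u(5u^2 - 5u + 1), which follow from
    integrating the usual L-moment formulas by parts (assuming Q(0) = 0).
    """
    weights = (
        lambda u: 1.0,
        lambda u: u,
        lambda u: u * (2.0 * u - 1.0),
        lambda u: u * (5.0 * u * u - 5.0 * u + 1.0),
    )
    h = 1.0 / steps
    sums = [0.0, 0.0, 0.0, 0.0]
    for i in range(steps):
        u = (i + 0.5) * h
        tu = t(u)
        for k, w in enumerate(weights):
            sums[k] += w(u) * tu
    return tuple(s * h for s in sums)


if __name__ == "__main__":
    lam = 2.0  # hypothetical exponential hazard rate
    l1, l2, l3, l4 = l_moments_from_t(lambda u: 1.0 / lam)
    print("L-moments:", l1, l2, l3, l4)
    print("L-skewness:", l3 / l2, "L-kurtosis:", l4 / l2)
```

For the exponential distribution t(u) is the constant 1/λ, so the sketch should return values close to 1/λ, 1/(2λ), 1/(6λ) and 1/(12λ), giving L-skewness 1/3 and L-kurtosis 1/6.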

In preventive maintenance policies, the TTT has an effective role to play. At time x = 0, a unit starts functioning and is replaced upon reaching age T or upon failure, whichever occurs first, with respective costs \(C_{1}\) and \(C_{2}\), where \(C_{1} < C_{2}\). If the unit lifetime is X, the first renewal occurs at Z = min(X, T) and

$$\displaystyle{E(Z) =\int _{0}^{T}\bar{F}(t)dt.}$$

The mean cost for one renewal period is

$$\displaystyle{C_{1}\bar{F}(T) + C_{2}F(T),}$$

and so the cost per unit time under the age replacement model is

$$\displaystyle{C(T) = \frac{C_{1}\bar{F}(T) + C_{2}F(T)} {\int _{0}^{T}\bar{F}(t)dt}.}$$

This is equivalent to

$$\displaystyle{C(T) = \frac{C_{1} + KF(T)} {\int _{0}^{T}\bar{F}(t)dt},}\tag{5.34}$$

where \(K = C_{2} - C_{1}\). The simple replacement problem is to find an optimal interval \(T = T^{*}\) that minimizes (5.34). In practice, one may not know the life distribution but only some observations, and so the optimal age replacement interval has to be estimated from the data. Assuming K = 1, without loss of generality, a value \(u^{*}\) determined by \(u^{*} = F(T^{*})\) maximizes

or one that maximizes

Bergman [89] and Bergman and Klefsjö [95] provide a nonparametric estimation procedure for age replacement policies. Let \((X_{1:n},X_{2:n},\ldots,X_{n:n})\) be an ordered sample from an absolutely continuous distribution. For estimating ϕ(u), we use

and determine

where v is such that

Then,

(i) \(C(\hat{T}_{n})\) tends with probability one to \(C(T^{*})\) as n → ∞;

(ii) the optimal cost \(C(T^{*})\) may be estimated by \(C_{n}(\hat{T}_{n})\), where

$$\displaystyle{C_{n}(X_{r:n}) = \frac{C_{1} + F_{n}(X_{r:n})} {\int _{0}^{X_{r:n}}\bar{F}_{n}(t)dt} }$$

which is strongly consistent.

If a unique optimal age replacement interval exists, then \(\hat{T}_{n}\) is strongly consistent. Bergman [89] explains a graphical method of determining \(T^{*}\): draw the line passing through \((-\frac{C_{1}} {K},0)\) which touches the scaled transform ϕ(u) and has the largest slope. The abscissa of the point of contact is \(u^{*}\). One important advantage of the graphical method is that it is convenient for performing sensitivity analysis. For example, \(T^{*}\) may be compared for different combinations of K and \(C_{1}\). Suppose that instead of age replacement at \(T^{*}\), replacement can be considered only at \(T_{1}\) or \(T_{2}\) satisfying \(T_{1} < {T}^{{\ast}} < T_{2}\). The question of which of these ages gives the lower cost per unit time can also be addressed with the help of the TTT (Bergman [91]).
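The graphical procedure has a direct numerical counterpart. The following is a minimal sketch, assuming that ϕ at r/n is estimated by the scaled total time on test statistic \(\sum _{j=1}^{r}(n - j + 1)(X_{j:n} - X_{j-1:n})/\sum _{j=1}^{n}X_{j:n}\) with \(X_{0:n} = 0\), and that the estimated optimum is the order statistic whose TTT-plot point gives the steepest line from \((-\frac{C_{1}} {K},0)\). The function names and the sample values are hypothetical.

```python
def scaled_ttt(sample):
    """Return the ordered sample and the scaled TTT values phi_n(r/n), r = 0..n."""
    x = sorted(sample)
    n = len(x)
    ttt = [0.0]
    previous = 0.0
    for j, xj in enumerate(x, start=1):
        # n - j + 1 units are still on test between X_{j-1:n} and X_{j:n}
        ttt.append(ttt[-1] + (n - j + 1) * (xj - previous))
        previous = xj
    total = ttt[-1]  # equals the sum of all observations
    return x, [value / total for value in ttt]


def estimate_replacement_age(sample, c1, k=1.0):
    """Pick r maximizing phi_n(r/n) / (r/n + c1/k); return (r, X_{r:n}).

    The ratio is the slope of the line joining (-c1/k, 0) to the
    TTT-plot point (r/n, phi_n(r/n)).
    """
    x, phi = scaled_ttt(sample)
    n = len(x)
    offset = c1 / k
    best_r = max(range(1, n + 1), key=lambda r: phi[r] / (r / n + offset))
    return best_r, x[best_r - 1]


if __name__ == "__main__":
    lifetimes = [3.0, 1.0, 4.0, 2.0]  # hypothetical failure times
    print(estimate_replacement_age(lifetimes, c1=0.1))
    print(estimate_replacement_age(lifetimes, c1=10.0))
```

With the small preventive cost the steepest line touches the plot at an interior point, while with the large one the maximum is attained at r = n, that is, at the point (1, 1) of the TTT plot.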

The term availability refers to the probability that a system is performing satisfactorily at a given time and is equal to the reliability if no repair takes place. A second optimality criterion is to replace the unit at age T for which the asymptotic availability is maximized. This is equivalent to minimizing

where \(m_{1}\) is the mean time of preventive maintenance and \(m_{2}\) is the mean time of repair (Chan and Downs [132]). Since this expression is similar to (5.34), the same method of analysis can be adopted here as well.

Klefsjö [338] discusses the age replacement problem with discounted costs, minimal repair and replacements to extend system life. When costs have to be discounted at a constant rate α, the problem reduces to minimizing

see Bergman and Klefsjö [92] for details. The above expression has a minimum at the same value of T as

which is of the same form as (5.34) with \(\bar{F}(t)\) replaced by \(\bar{G}(t) = {e}^{-\alpha t}\bar{F}(t)\). Consequently, the optimization problem permits the usual analysis with ϕ(u) for \(\bar{G}\). The estimation problem is dealt with likewise by minimizing

where

\(X_{r:n} \leq t \leq X_{r+1:n}\), for \(r = 0,1,\ldots,n - 1\).

The condition of replacement that the unit replacing the older one is as good as new is not always tenable. We assume a milder condition that the replacement is done by a new unit with probability p and a minimal repair is accomplished with probability (1 − p). In other words, the unit is repaired to the same state with the same hazard rate as just before failure.

If \(C^{*}\) denotes the average repair cost, the long run average cost per unit time is (Cleroux et al. [151])

Using the transform of \(F_{p}\), the above expression can also be brought to the standard form in (5.34). When the costs are discounted, the same kind of analysis is available in this case also.

Assume that the main objective is to extend system life, where the system has a vital component for which n spares are available. When the vital component fails, the system fails. Derman et al. [171] and Bergman and Klefsjö [93] discussed the schedule of replacements of the vital component such that the system life is as long as possible. If \(v_{n}\) is the expected life when an optimal schedule is used, they showed that \(v_{0} =\mu\) and

Draw a line touching the ϕ(u) curve which is parallel to the line \(y = \frac{v_{n-1}} {\mu }\). If the point of contact is \((u_{n},\phi (u_{n}))\), then the optimal replacement age is \(x_{n}\), obtained by solving \(F(x_{n}) = u_{n}\).

It is customary to test certain devices, which have high initial hazard rates under conditions of field operation, to eliminate or reduce such early failures before sending them to the customers. Such an operation of screening equipment is called burn-in. If the burn-in is excessive, it will result in a loss to the manufacturer in terms of several kinds of costs. On the other hand, if burn-in is on a reduced scale, the problem of early failures may still persist among a percentage of products, thus resulting in a return cost. So, an important problem in conducting the test is the determination of the optimal time point up to which the test has to be carried out. Test procedures based on hazard rate, mean residual life, coefficient of variation of residual life and so on have been proposed in the literature.

Consider the case when a non-repairable component is scrapped if it fails during the burn-in period. Our problem is to determine the length \(T_{0}\) of the burn-in period for which C(T), the expected long run cost per unit time of useful operation, is minimized. Let b be the fixed cost per unit and d be the cost per unit time of burn-in. A unit which fails in useful operation after the burn-in results in a cost \(C_{1}\). Then, Bergman and Klefsjö [94] have shown that

which is minimized for the same value of T as

where \(\alpha = (1 + b + d\mu + C_{1})C_{1}^{-1}\). Hence, \(T_{0}\) is obtained by first graphically determining the value of u, say \(u_{0}\), for which

is minimized and then solving \(F(T_{0}) = u_{0}\); see Klefsjö [339]. Klefsjö and Westberg [340] point out that if the life distribution F is not known, it has to be estimated from the data. For complete samples, the empirical distribution function is the estimate of F. If the data are censored, i.e., in a set of n observations, k parts are observed to fail and n − k are withdrawn from observation, then the Kaplan–Meier estimator

where j runs through the integer values for which \(t_{j:n} \leq t\) and \(t_{j:n}\) is an observed failure time, could be used. The optimal replacement age is found by (1) drawing the TTT plot based on times to failure, (2) drawing a line from \((-\frac{C_{1}} {K},0)\) which touches the TTT plot and has the largest possible slope, and (3) taking the optimum replacement age as the failure time corresponding to the optimal point of contact. If the point of contact is (1,1), no preventive maintenance is necessary.

Another major aspect of the analysis of failure data for repairable systems is the possible trend in inter-failure times. Kvaloy and Lindqvist [365] used some tests based on TTT for this purpose. Some test statistics have also been proposed for testing exponentiality against IFRA alternative (Bergman [89]), for testing whether one distribution is more IFR than another (Wie [580]) and for testing exponentiality against IFR (DFR) alternative (Wie [579]).
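For censored data, the survival estimate mentioned above can be computed as follows. This is a minimal sketch of the standard Kaplan–Meier product-limit form, in which the estimate is multiplied by (1 − d/m) at each observed failure time, d being the number of failures at that time and m the number of units still under observation just before it. The function name and the data are hypothetical, and the sketch is not claimed to reproduce the exact notation of the cited authors.

```python
def kaplan_meier(times, failed):
    """Product-limit estimate of the survival function.

    times  : observation times (failure or withdrawal)
    failed : True where the unit was observed to fail, False if withdrawn
    Returns a list of (failure time, estimated survival just after it).
    """
    data = sorted(zip(times, failed))
    n = len(data)
    at_risk = n
    survival = 1.0
    curve = []
    i = 0
    while i < n:
        t = data[i][0]
        failures = 0
        leaving = 0
        # group all observations that share the same time point
        while i < n and data[i][0] == t:
            if data[i][1]:
                failures += 1
            leaving += 1
            i += 1
        if failures:
            survival *= 1.0 - failures / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


if __name__ == "__main__":
    # hypothetical data: the unit observed at time 2 was withdrawn
    print(kaplan_meier([1.0, 2.0, 3.0, 4.0], [True, False, True, True]))
```

When no observation is withdrawn, the estimate coincides with one minus the empirical distribution function, so the complete-sample case is recovered.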

References

Aarset, M.V.: The null distribution of a test of constant versus bathtub failure rate. Scand. J. Stat. 12, 55–62 (1985)

Abouammoh, A.M., Khalique, A.: Some tests for mean residual life criteria based on the total time on test transform. Reliab. Eng. 19, 85–101 (1987)

Ahmad, I.A., Li, X., Kayid, M.: The NBUT class of life distributions. IEEE Trans. Reliab. 54, 396–401 (2005)

Asha, G., Nair, N.U.: Reliability properties of mean time to failure in age replacement models. Int. J. Reliab. Qual. Saf. Eng. 17, 15–26 (2010)

Balakrishnan, N., Sarabia, J.M., Kolev, N.: A simple relation between the Leimkuhler curve and the mean residual life function. J. Informetrics 4, 602–607 (2010)

Barlow, R.E.: Geometry of the total time on test transforms. Nav. Res. Logist. Q. 26, 393–402 (1979)

Barlow, R.E., Bartholomew, D.J., Bremner, J.M., Brunk, H.D.: Statistical Inference Under Order Restrictions. Wiley, New York (1972)

Barlow, R.E., Campo, R.: Total time on test process and applications to fault tree analysis. In: Reliability and Fault Tree Analysis, pp. 451–481. SIAM, Philadelphia (1975)

Barlow, R.E., Doksum, K.A.: Isotonic tests for convex orderings. In: The Sixth Berkeley Symposium in Mathematical Statistics and Probability I, pp. 293–323. Statistical Laboratory of the University of California, Berkeley (1972)

Bartoszewicz, J.: Stochastic order relations and the total time on test transforms. Stat. Probab. Lett. 22, 103–110 (1995)

Bartoszewicz, J.: Tail orderings and the total time on test transforms. Applicationes Mathematicae 24, 77–86 (1996)

Bartoszewicz, J.: Application of a general composition theorem to the star order of distributions. Stat. Probab. Lett. 38, 1–9 (1998)

Bartoszewicz, J., Benduch, M.: Some properties of the generalized TTT transform. J. Stat. Plann. Infer. 139, 2208–2217 (2009)

Bergman, B.: Crossings in the total time on test plot. Scand. J. Stat. 4, 171–177 (1977)

Bergman, B.: On age replacement and total time on test concept. Scand. J. Stat. 6, 161–168 (1979)

Bergman, B.: On the decision to replace a unit early or late: A graphical solution. Microelectron. Reliab. 20, 895–896 (1980)

Bergman, B., Klefsjö, B.: The TTT-transforms and age replacements with discounted costs. Nav. Res. Logist. Q. 30, 631–639 (1983)

Bergman, B., Klefsjö, B.: The total time on test and its uses in reliability theory. Oper. Res. 32, 596–606 (1984)

Bergman, B., Klefsjö, B.: Burn-in models and TTT-transforms. Qual. Reliab. Eng. Int. 1, 125–130 (1985)

Bergman, B., Klefsjö, B.: The TTT-concept and replacements to extend system life. Eur. J. Oper. Res. 28, 302–307 (1987)

Bergman, B., Klefsjö, B.: A family of test statistics for detecting monotone mean residual life. J. Stat. Plann. Infer. 21, 161–178 (1989)

Campo, R.A.: Probabilistic optimality in long-term energy sales. IEEE Trans. Power Syst. 17, 237–242 (2002)

Chan, P.K.W., Downs, T.: Two criteria for preventive maintenance. IEEE Trans. Reliab. 27, 272–273 (1978)

Chandra, M., Singpurwalla, N.D.: The Gini index, Lorenz curve and the total time on test transform. Technical Report, George Washington University, Washington, DC (1978)

Chandra, M., Singpurwalla, N.D.: Relationships between some notions which are common to reliability theory and economics. Math. Oper. Res. 6, 113–121 (1981)

Cleroux, P., Dubuc, S., Tilquini, C.: The age replacement problem with minimal repair costs. Oper. Res. 27, 1158–1167 (1979)

Csorgo, M., Yu, H.: Estimation of total time on test transforms for stationary observations. Stoch. Proc. Appl. 68, 229–253 (1997)

Derman, C., Lieberman, G.J., Ross, S.M.: On the use of replacements to extend system life. Oper. Res. 32, 616–627 (1984)

Dohi, T., Kiao, N., Osaki, S.: Solving problems of a repairable limit using TTT concept. IMA J. Math. Appl. Bus. Ind. 6, 101–115 (1995)

Ebrahimi, N., Spizzichino, F.: Some results on normalised total time on test and spacings. Stat. Probab. Lett. 36, 231–243 (1997)

Giorgi, G.M.: Concentration index, Bonferroni. In: Encyclopedia of Statistical Sciences, vol. 2, pp. 141–146. Wiley, New York (1998)

Giorgi, G.M., Crescenzi, M.: A look at the Bonferroni inequality measure in a reliability framework. Statistica 61(4), 571–583 (2001)

Haupt, E., Schäbe, H.: The TTT transformation and a new bathtub distribution model. J. Stat. Plann. Infer. 60, 229–240 (1997)

Kayid, M.: A general family of NBU class of life distributions. Stat. Meth. 4, 1895–1905 (2007)

Klefsjö, B.: HNBUE and HNWUE classes of life distributions. Nav. Res. Logist. Q. 29, 615–626 (1982)

Klefsjö, B.: On ageing properties and total time on test transforms. Scand. J. Stat. 9, 37–41 (1982)

Klefsjö, B.: Some tests against ageing based on the total time on test transform. Comm. Stat. Theor. Meth. 12, 907–927 (1983)

Klefsjö, B.: Testing exponentiality against HNBUE. Scand. J. Stat. 10, 67–75 (1983)

Klefsjö, B.: TTT-transforms: A useful tool when analysing different reliability problems. Reliab. Eng. 15, 231–241 (1986)

Klefsjö, B.: TTT-plotting: A tool for both theoretical and practical problems. J. Stat. Plann. Infer. 29, 99–110 (1991)

Klefsjö, B., Westberg, U.: TTT plotting and maintenance policies. Qual. Eng. 9, 229–235 (1996–1997)

Kleiber, C., Kotz, S.: Statistical Size Distributions in Economics and Actuarial Sciences. Wiley, Hoboken (2003)

Kochar, S.C., Deshpande, J.V.: On exponential scores for testing against positive ageing. Stat. Probab. Lett. 3, 71–73 (1985)

Kumar, D., Westberg, U.: Maintenance scheduling under age replacement policy using proportional hazards model and TTT-plotting. Eur. J. Oper. Res. 99, 507–515 (1997)

Kvaloy, J.T., Lindqvist, B.H.: TTT based tests for trend in repairable systems data. Reliab. Eng. Syst. Saf. 60, 13–28 (1998)

Li, H., Shaked, M.: A general family of univariate stochastic orders. J. Stat. Plann. Infer. 137, 3601–3610 (2007)

Nair, N.U., Sankaran, P.G., Vineshkumar, B.: Total time on test transforms and their implications in reliability analysis. J. Appl. Probab. 45, 1126–1139 (2008)

Neath, A.A., Samaniego, F.J.: On the total time on test transform of an IFRA distribution. Stat. Probab. Lett. 14, 289–291 (1992)

Perez-Ocon, R., Gamiz-Perez, M.L., Ruiz-Castro, J.E.: A study of different ageing classes via total time on test transform and Lorenz curves. Appl. Stoch. Model. Data Anal. 13, 241–248 (1998)

Pham, T.G., Turkkan, M.: The Lorenz and the scaled total-time-on-test transform curves, A unified approach. IEEE Trans. Reliab. 43, 76–84 (1994)

Pundir, S., Arora, S., Jain, K.: Bonferroni curve and the related statistical inference. Stat. Probab. Lett. 75, 140–150 (2005)

Sarabia, J.M.: A general definition of Leimkuhler curves. J. Informetrics 2, 156–163 (2008)

Sarabia, J.M., Prieto, F., Sarabia, M.: Revisiting a functional form for the Lorenz curve. Econ. Lett. 105, 61–63 (2010)

Vera, F., Lynch, J.: K-Mart stochastic modelling using iterated total time on test transforms. In: Modern Statistical and Mathematical Methods in Reliability, pp. 395–409. World Scientific, Singapore (2005)

Westberg, U., Klefsjö, B.: TTT plotting for censored data based on piece-wise exponential estimator. Int. J. Reliab. Qual. Saf. Eng. 1, 1–13 (1994)

Wie, X.: Test of exponentiality against a monotone hazards function alternative based on TTT transformations. Microelectron. Reliab. 32, 607–610 (1992)

Wie, X.: Testing whether one distribution is more IFR than another. Microelectron. Reliab. 32, 271–273 (1992)

Xie, M.: Testing constant failure rate against some partially monotone alternatives. Microelectron. Reliab. 27, 557–565 (1987)

Xie, M.: Some total time on test quantities useful for testing constant against bathtub shaped failure rate distributions. Scand. J. Stat. 16, 137–144 (1989)

Zhao, N., Song, Y.H., Lu, H.: Risk assessment strategies using total time on test transforms. IEEE (2006). doi: 10.1109/PES.2006.1709062

© 2013 Springer Science+Business Media New York

Nair, N.U., Sankaran, P.G., Balakrishnan, N. (2013). Total Time on Test Transforms. In: Quantile-Based Reliability Analysis. Statistics for Industry and Technology. Birkhäuser, New York, NY. https://doi.org/10.1007/978-0-8176-8361-0_5

Publisher Name: Birkhäuser, New York, NY

Print ISBN: 978-0-8176-8360-3

Online ISBN: 978-0-8176-8361-0

eBook Packages: Mathematics and Statistics (R0)