Abstract

One of the objectives of quantile-based reliability analysis is to make use of quantile functions as models in lifetime data analysis. Accordingly, in this chapter, we discuss the characteristics of certain quantile functions known in the literature. The models considered are the generalized lambda distribution of Ramberg and Schmeiser, the generalized Tukey lambda family of Freimer, Kollia, Mudholkar and Lin, the four-parameter distribution of van Staden and Loots, the five-parameter lambda family and the power-Pareto model of Gilchrist, the Govindarajulu distribution and the generalized Weibull family of Mudholkar and Kollia.

The shapes of the different systems and their descriptive measures of location, dispersion, skewness and kurtosis in terms of conventional moments, L-moments and percentiles are provided. Various methods of estimation based on moments, percentiles, L-moments, least squares and maximum likelihood are reviewed. Also included are the starship method, the discretized approach specifically introduced for the estimation of parameters in the quantile functions and details of the packages and tables that facilitate the estimation process.

In analysing the reliability aspects, one also needs various functions that describe the ageing phenomenon. The expressions for the hazard quantile function, mean residual quantile function, variance residual quantile function, percentile residual life function and their counter parts in reversed time given in the preceding chapters provide the necessary tools in this direction. Some characterization theorems show the relationships between reliability functions unique to various distributions. Applications of selected models and the estimation procedures are also demonstrated by fitting them to some data on failure times.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

3.1 Introduction

Probability distributions facilitate characterization of the uncertainty prevailing in a data set by identifying the patterns of variation. By summarizing the observations into a mathematical form that contains a few parameters, distributions also provide means to analyse the basic structure that generates the observations. In finding appropriate distributions that adequately describe a data set, there are in general two approaches. One is to make assumptions about the physical characteristics that govern the data generating mechanism and then to find a model that satisfies such assumptions. This can be done either by deriving the model from the basic assumptions and relations or by adapting one of the conventional models from other disciplines, such as physical, biological or social sciences with appropriate conceptual interpretations. These theoretical models are later tested against the observations by the use of a goodness-of-fit test, for example. A second approach to modeling is entirely data dependent. Models derived in this manner are called empirical or black box models. In situations wherein there is a lack of understanding of the data generating process, the objective is limited to finding the best approximation to the data or because of the complexity of the model involved, a distribution is selected to fit the data. The usual procedure in such cases is to first make a preliminary assessment of the features of the available observations and then decide upon a mathematical formulation of the distribution that can approximate it. Empirical modelling problems usually focus attention on flexible families of distributions with enough parameters capable of producing different shapes and characteristics. The Pearson family, Johnson system, Burr family of distributions, and some others, which include several commonly occurring distributions, provide important aids in this regard. In this chapter, we discuss some families of distributions specified by their quantile functions that can be utilized for modelling lifetime data. Various quantile-based properties of distributions and concepts in reliability presented in the last two chapters form the background material for the ensuing discussion. The main distributions discussed here are the lambda distributions, power-Pareto model, Govindarajulu distribution and the generalized Weibull family. We also demonstrate that these models can be used as lifetime distributions while modelling real lifetime data.

3.2 Lambda Distributions

A brief historical account of developments on the lambda distributions was provided in Sect. 1.1. During the past 60 years, considerable efforts were made to generalize the basic model of Hastings et al. [ 264 ] and Tukey [ 567 ] and also to find new applications and inferential procedures. In general, the applications of different versions span a variety of fields such as inventory control (Silver [ 540 ]), logistic regression (Pregibon [ 497 ]), meteorology (Osturk and Dale [ 476 ]), survival analysis (Lefante Jr. [ 380 ]), queueing theory (Robinson and Chan [ 508 ]), random variate generation and goodness-of-fit tests (Cao and Lugosi [ 128 ]), fatigue studies (Bigerelle et al. [ 100 ]) process control (Fournier et al. [ 200 ]), biochemistry (Ramos-Fernandez et al. [ 505 ]), economics (Haritha et al. [ 260 ]), corrosion (Najjar et al. [ 456 ]) and reliability analysis (Nair and Vineshkumar [ 452 ]).

The basic model from which all other generalizations originate is the Tukey lambda distribution with quantile function

defined for all non-zero lambda values. As λ → 0, we have

corresponding to the logistic distribution. van Dyke [ 571 ] compared a normalized version of (3.1) with the t-distribution. Model (3.1) was studied by Filliben [ 197 ] who used it to approximate symmetric distributions with varying tail weights. Joiner and Rosenblatt [ 304 ] studied the sample range and Ramberg and Schmeiser [ 503 ] discussed the application of the distribution in generating symmetric random variables. For λ = 1 and λ = 2, it is easy to verify that (3.1) becomes uniform over ( − 1, 1) and \((-\frac{1} {2}, \frac{1} {2})\), respectively. The density functions are U shaped for 1 < λ < 2 and unimodal for λ < 1 or λ > 2. With (3.1) being symmetric and having range for negative values of X, it has limited use as a lifetime model.

Remark 3.1.

The Tukey lambda distribution defined in (3.1) is an extremal distribution that gets characterized by means of largest order statistics. To see this, let \(X_{1:n} < \cdots < X_{n:n}\) be the order statistics from a random sample of size n from a symmetric distribution F with mean 0 and variance σ 2. Then, due to the symmetry of the distribution, we have \(E(X_{n:n}) = -E(X_{1:n})\), and so we can write from (1.23) and (1.24) that

By applying Cauchy–Schwarz inequality to (3.2), we readily find

where B(a, b) = Γ(a)Γ(b) ∕ Γ(a + b), a, b > 0, is the complete beta function. Note that, from (3.3), by setting n = 2 and n = 3, we obtain the bounds

The bound in (3.3) was established originally by Hartley and David [ 263 ] and Gumbel [ 229 ]. It is useful to note that the bound in (3.3), derived from (3.2), is attained if and only if

When n = 2 and 3, we thus find \(Q(u) \propto 2u - 1\), which corresponds to the uniform distribution; see Balakrishnan and Balasubramanian [ 50 ] for some additional insight into this characterization result. Thus, we observe from (3.2) that the Tukey lambda distribution with integral values of λ is an extremal distribution and is characterized by the mean of the largest order statistic in (3.3). The same goes for the Tukey lambda distribution in (3.1) for positive real values in terms of fractional order statistics, in view of Remark 1.1.

3.2.1 Generalized Lambda Distribution

Asymmetric versions of (3.1) in various forms such as

and

were studied subsequently (Joiner and Rosenblatt [ 304 ], Shapiro and Wilk [ 536 ]). All such versions are subsumed in the more general form

introduced by Ramberg and Schmeiser [ 503 ], which is called the generalized lambda distribution. This is the most discussed member of the various lambda distributions, because of its versatility and special properties. In (3.4), λ 1 is a location parameter, λ 2 is a scale parameter, while λ 3 and λ 4 determine the shape. The distribution takes on different supports depending on the parameters λ 2, λ 3 and λ 4, while λ 1, being the location parameter, can take values on the real line in all cases (Table 3.1).

As a life distribution, the required constraint on the parameters is

The quantile density function is

and accordingly the density quantile function is

which has to remain non-negative for (3.4) to represent a proper distribution. This places constraints on the parameter space. A special feature of (3.4) is that it is a valid distribution only in the regions (λ 3 ≤ − 1, λ 4 ≥ 1), (λ 3 ≥ 1, λ 4 ≤ − 1), (λ 3 ≥ 0, λ 4 ≥ 0), (λ 3 ≤ 0, λ 4 ≤ 1), and for values in ( − 1 < λ 3 < 0, λ 4 > 0) for which

and values in \((\lambda _{3} > 1,-1 <\lambda _{4} < 0)\) for which

see Karian and Dudewicz [ 314 ] for a detailed study in this respect. Since

from (1.30), the mean is simply

Since λ 1 is not present in the central moments, we set λ 1 = 0. Ramberg et al. [ 502 ] find that

from which we obtain the following central moments:

where

and

The rth moment exists only if \(-\frac{1} {r} <\min (\lambda _{3},\lambda _{4})\). When \(\lambda _{3} =\lambda _{4}\), it is verified that μ 3 = 0 and the generalized lambda distribution is symmetric in this case. A detailed study of the skewness and kurtosis for different values of λ 3 and λ 4 is given in Karian and Dudewicz [ 315 ]. The \((\beta _{1},\beta _{2})\) diagram includes the skewness values corresponding to the uniform, t, F, normal, Weibull, lognormal and some beta distributions. One limitation that needs to be mentioned regarding skewness is that the generalized lambda family does not cover the entire area as some other systems (like the Pearson system) do; but, it also covers some new areas that are not covered by others. This four-parameter distribution includes a wide range of shapes for its density function; see Fig. 3.1 for some selection shapes.

Density plots of the generalized lambda distribution (Ramberg and Schmeiser [ 503 ] model) for different choices of \((\lambda _{1},\lambda _{2},\lambda _{3},\lambda _{4})\). (a) (1,0.2,0.13,0.13); (b) (1,0.6,1.5,-1.5); (c) (1,0.6,1.75,1.2); (d) (1,0.2,0.13,0.013); (e) (1,0.2,0.0013,0.13); (f) (1,1,0.5,4)

The basic characteristics of the distribution can also be expressed in terms of the percentiles. Using (1.6)–(1.9), we have the following:

- the median:

-

$$\displaystyle{ M =\lambda _{1} + \frac{1} {\lambda _{2}} \left [{\left (\frac{1} {2}\right )}^{\lambda _{3}} -{\left (\frac{1} {2}\right )}^{\lambda _{4}}\right ], }$$(3.11)

- the interquantile range:

-

$$\displaystyle{ \text{IQR} = \frac{1} {\lambda _{2}} \left [\frac{{3}^{\lambda _{3}} - 1} {{4}^{\lambda _{3}}} + \frac{{3}^{\lambda _{4}} - 1} {{4}^{\lambda _{4}}} \right ], }$$(3.12)

- Galton’s measure of skewness:

-

$$\displaystyle{ S = \frac{{4}^{-\lambda _{3}}({3}^{\lambda _{3}} - {2}^{\lambda _{3}+1} - 1) - {4}^{\lambda _{4}}(1 + {3}^{\lambda _{4}} - {2}^{\lambda _{4}+1})} {\frac{{3}^{\lambda _{3}}-1} {{4}^{\lambda _{3}}} + \frac{{3}^{\lambda _{4}}-1} {{4}^{\lambda _{4}}} } \, }$$(3.13)

- and Moors’ measure of kurtosis:

-

$$\displaystyle{ T = \frac{{8}^{-\lambda _{3}}(1 + {3}^{\lambda _{3}} + {5}^{\lambda _{3}} + {7}^{\lambda _{3}}) - {8}^{-\lambda _{4}}(1 + {3}^{\lambda _{4}} + {5}^{\lambda _{4}} + {7}^{\lambda _{4}})} {{4}^{-\lambda _{3}}({3}^{\lambda _{3}} - 1) + {4}^{-\lambda _{4}}({3}^{\lambda _{4}} - 1)}. }$$(3.14)

For this distribution, the L-moments have comparatively simpler expressions than the conventional moments. One can use (1.34)–(1.37) to calculate these. To simplify their expressions, we employ the notation

and

to denote the ascending and descending factorials, respectively. Then, the first four L-moments are as follows (Asquith [ 40 ]):

Thus, the L-skewness and L-kurtosis become

and

All the L-moments exist for every \(\lambda _{3},\lambda _{4} > -1\). On the other hand, the conventional moments require \(\lambda _{3},\lambda _{4} > -\frac{1} {4}\) for the evaluation of Pearson’s skewness β 1 and kurtosis β 2. Thus, L-skewness and kurtosis permit a larger range of values in the parameter space. The problem of characterizing the generalized lambda distribution has been considered in Karvanen and Nuutinen [ 313 ]. For the symmetric case, they have derived the boundaries analytically and in the general case, numerical methods have been used. They found that with an exception of the smallest values of τ 4, the family (3.4) covers all possible \((\tau _{3},\tau _{4})\) pairs and often there are two or more distributions sharing the same τ 3 and τ 4. A wider set of generalized lambda distributions can be characterized when L-moments are used than by the conventional moments. This is an important advantage in the context of data analysis while seeking appropriate models.

The moments of order statistics have closed forms as well. For example, the expectation of order statistics from a random sample of size n is obtained from (1.28) as

In particular, from (3.21), we obtain

Also, the distributions of X 1: n and X n: n are given by

Since there exist members of generalized lambda family with support on the positive real line, its scope as a lifetime model is apparent. However, this fact has not been exploited much. The hazard quantile function (2.30) has the simple form

Similarly, the mean residual quantile function is obtained from (2.43) as

Note that, in this case,

or

The above expression is a general condition to be used whenever the left end of the support is greater than zero. The variance residual quantile function is calculated as

where

and \(B_{x}(m,n) =\int _{ 0}^{x}{t}^{m-1}{(1 - t)}^{n-1}dt\) is the incomplete beta function.

The term

is of interest in reliability analysis, being the quantile version of E(X | X > x). It is called the conditional mean life or the vitality function. One may refer to Kupka and Loo [ 363 ] for a detailed exposition of the properties of the vitality function and its role in explaining the ageing process. We see that from (3.23), Q(u) can be recovered up to an additive constant as

and therefore functional forms of μ(u) will enable us to identify the life distribution. Thus, a generalized lambda distribution is determined as

if the conditional mean quantile function μ(u) satisfies

for real a, b, c and d for which Q(0) ≥ 0.

The αth percentile residual life is calculated from (2.50) as

Various functions in reversed time presented in (2.50), (2.51) and (2.53) yield

where

and

Like the function μ(u), one can also consider

which is the quantile formulation of E(X | X ≤ x). This latter function’s relationship with reversed hazard function has been used in Nair and Sudheesh [ 451 ] to characterize distributions. It has applications in several other fields like economics and risk analysis. For example, when X is interpreted as the income and x is the poverty level, the above expectation denotes the average income of the poor people and is an essential component for the evaluation of poverty index and income inequality. The form of (3.24) is convenient in identifying models, like

determining the generalized lambda distribution. The formula for calculating Q(u) from θ(u) is

Finally, the reversed percentile residual life function is (2.50)

There is no conflict of opinion regarding the potential of the generalized lambda family in empirical data modelling because of its flexibility to represent different kinds of data situations. However, the difficulties experienced in the estimation problem, especially on the computational front, have stimulated extensive research on various methods, conventional as well as new. A popular approach for estimation of parameters of quantile functions is the method of moments, in which the first four moments of the generalized lambda distribution are matched with the corresponding moments of the sample. Instead of choosing the first four moments directly, Ramberg and Schmeiser [ 504 ] opted for the equations

where μ and σ 2 are as given in (3.7) and (3.8), \(\gamma _{1} ={ \frac{\mu _{3}} {\sigma }^{3}}\) and \(\gamma _{2} ={ \frac{\mu _{4}} {\sigma }^{4}}\) with values for μ 3 and μ 4 as in (3.9) and (3.10). Since γ 1 and γ 2 contain only λ 3 and λ 4, the solutions of (3.28) and (3.29) give λ 3 and λ 4. From the remaining two equations, λ 1 and λ 2 can be readily found. Even though theoretically the method looks simple, in practice, one has to apply numerical methods to solve the equations as they are nonlinear. Dudewicz and Karian [ 181 ] have provided extensive tables from which the parameters can be determined for a given choice of skewness and kurtosis of the data. They also describe an algorithm that summarizes the steps in the calculation. A second method to obtain a best solution is to use computer programs that ensure the solutions of (3.26)–(3.29) to satisfy

for some prefixed tolerance ε > 0. This is accomplished by starting with a good set of initial values for the parameters. Then search is made through algorithms that satisfy (3.30). However, there is no guarantee that a given set of initial values necessarily end up resulting in a solution nor that it improves upon the value of ε in each iteration. See Karian and Dudewicz [ 315 ] for such a computational program. In both the methods described above, the region specified by \(1 +\gamma _{ 1}^{2} <\gamma _{2} < 1.8 + 1.7\gamma _{1}^{2}\) is not attained and one may not arrive at a set of lambda values that satisfy a goodness-of-fit test. These and other problems are explained in Karian and Dudewicz [ 315, 317 ].

A similar logic applies to the method of L-moments prescribed in Asquith [ 40 ] and Karian and Dudewicz [ 315 ]. The equations to be solved in the latter work are

where \(L_{1},L_{2},\tau _{3}\) and τ 4 have the expressions in (3.15), (3.16), (3.19) and (3.20), where

where

Clearly, (3.32) and (3.33) do not contain λ 1 and λ 2 and are therefore solvable for λ 3 and λ 4. The other two parameters are then found from (3.31) by using the estimates of λ 3 and λ 4.

In the work of Asquith [ 40 ], estimates of λ 3 and λ 4 are values that minimize

where \(\hat{\tau }_{i}\) (i = 3, 4) is the estimated value of τ i . After choosing initial values of λ 3 and λ 4, we arrive at the optimal value according to (3.34) and then check whether the solutions obtained meet the requirements − 1 < τ 3 < 1 and \(\frac{1} {4}(5\tau _{3}^{2} - 1) \leq \tau _{ 4} < 1\). If not, we need to choose another set of initial values and repeat the above steps. After solving for λ 2 from (3.31), compute \(\hat{\tau }_{5}\) using the expression

and seek the values that minimize \((t_{5} -\hat{\tau }_{5})\). Finally, we need to substitute it into (3.31) to find \(\hat{\lambda }_{1}\).

A third method is to match the percentiles of the distribution with those of the data. As a first step, the sample percentiles are computed as

where \((n + 1)p = r + \frac{a} {b}\) in which r is a positive integer and \(0 < \frac{a} {b} < 1\). Karian and Dudewicz [ 315 ] considered the following four equations:

Solving the above system of equations, we obtain the percentile-based estimates. For this purpose, either numerical methods have to be resorted to or refer to the tables in Appendix D of Karian and Dudewicz [ 315 ] which gives the values of λ 1, λ 2, λ 3 and λ 4 based on the sample values for the LHS of the above four equations.

In all the three methods discussed so far, the question of more than one set of lambda values in the admissible regions may be possible. The choice of the appropriate set depends on the data and some goodness-of-fit procedure. Karian and Dudewicz [ 314 ] compared the relative merits of the two-moment approaches and the percentile method. Using the p-values of the chi-square goodness-of-fit test, the quality of fit was ascertained. They noted that, in general, percentile and L-moment methods gave better fits more frequently. Further, in terms of the L 2-norm, which measures the discrepancy between two functions f(x) and g(x) by

the method of percentiles was found to be better than the method of moments over a broad range of values in the \((r_{1},r_{2})\) space in samples of size 1,000.

Another useful estimation procedure based on the least-square approach was proposed by Osturk and Dale [ 477 ]. Let X r: n (\(r = 1,\ldots,n\)) denote the order statistics of the data and U r: n the order statistics of the corresponding uniformly distributed random variable F(X) for \(r = 1,2,\ldots,n\). The least-square method is to find λ i such that the sum of squared differences between the observed and expected order statistics is minimum. This is achieved by minimizing

From the density function of uniform order statistics given by (Arnold et al. [ 37 ])

we have

and similarly

Owing to the difficulties in simultaneously minimizing (3.35) with respect to the four parameters, first minimize (3.35) with respect to λ 1 and λ 2 by treating λ 3 and λ 4 as constants. As in the case of simple linear regression, setting the derivatives of (3.35) to zero, we can solve for λ 1 and λ 2 as

and

where \(\nu _{r} = M_{r} - N_{r}\) and \(\bar{\nu }= \frac{1} {n}\sum \nu _{r}\). Then, upon substituting (3.37) and (3.38) in (3.35), we get

Thus, λ 3 and λ 4 are found by minimizing

Finally, the solutions from (3.39), when substituted into (3.37) and (3.38), give \(\hat{\lambda }_{1}\) and \(\hat{\lambda }_{2}\).

A second version of percentile method in Karian and Dudewicz [ 314 ] proposes equating the population median M, the interdecile range

the tail weight ratio

and the tail weight factor

with the corresponding sample quantities. These give rise to the equations

Since (3.42) and (3.43) involve only λ 3 and λ 4, they are solved first and then insert these values in (3.40) and (3.41) to estimate λ 1 and λ 2. All the equations involve u and therefore a choice of u lying between 0 and \(\frac{1} {4}\) is suggested by Karian and Dudewicz [ 314 ]. They also provide a table of

as pairs of values and the corresponding solutions, the algorithm and illustrations of how to use the tables.

King and MacGillivray [ 326 ] have introduced a new procedure called the starship method, which involves estimation of the parameters along with a goodness-of-fit test. Laying a four-dimensional grid over a region in the four-dimensional space that covers the range of the parameter values, a goodness of fit is performed over the points in the grid. If the fit is not satisfied with one point, another is selected and so on, with the procedure terminating with parameter values that have the best measure of fit. Lakhany and Mausser [ 371 ] and Fournier et al. [ 201 ] have pointed out that the starship method is quite time consuming especially for large samples.

In practice, in most of the methods described above, the parameters obtained need not produce an adequate model. There can also be cases where multiple solutions exist and the solutions do not span the entire data set. So, goodness-of-fit tests have to be carried out separately after estimation or such a test must be embedded in the procedure as with the starship method. There have been several attempts to device procedures that automate the restart of the algorithms and also do the necessary tests. Lakhany and Mausser [ 371 ] devised a modification to the starship method. Instead of using a full four-dimensional grid, they used successive simplex from random starting points until the goodness of fit does not reject the distribution. It cannot, however, be said that always the best fit is realized. The GLIDEX package provides fitting methods using discretized and numerical maximum likelihood approach (Su [ 549 ]) and the starship methods. King and MacGillvray [ 327 ] have suggested a method of estimation with the aid of location and scale free shape functionals

and

by minimizing the distance between the sample and population values of the functionals. Fournier et al. [ 201 ] proposed another method that minimizes the \(D =\max \vert S_{n}(x) - F(x)\vert \), where S n (x) is the empirical distribution function in a two-dimensional grid representing the \((\lambda _{3},\lambda _{4})\) space. Two other works in this context are the estimation of parameters for grouped data (Tarsitano [ 564 ]) and for censored data (Mercy and Kumaran [ 416 ]). Karian and Dudewicz [ 316 ] discuss the computational difficulties encountered in the estimation procedure of the generalized lambda distribution.

3.2.2 Generalized Tukey Lambda Family

A major limitation of the generalized lambda family discussed above is that the distribution is valid only for certain regions in the parameter space. Freimer et al. [ 203 ] introduced a modified generalized lambda distribution defined by

which is well defined for the values of the shape parameters λ 3 and λ 4 over the entire two-dimensional space. The quantile density function has the simple form

Since our interest in (3.44) is as a life distribution, we should have

in which case the support becomes \((\lambda _{1} - \frac{1} {\lambda _{2}\lambda _{3}},\lambda _{1} + \frac{1} {\lambda _{2}\lambda _{4}} )\) whenever \(\lambda _{3} >\lambda _{4} > 0\) and \((\lambda _{1} - \frac{1} {\lambda _{2}\lambda _{3}},\infty )\) if λ 3 > 0 and λ 4 ≤ 0. This is a crucial point to be verified when the distribution is used to model data pertaining to non-negative random variables. The exponential distribution is a particular case of the family as \(\lambda _{3} \rightarrow \infty \) and \(\lambda _{4} \rightarrow 0\). All the approximation that are valid for the modified generalized lambda family are valid in (3.44) as well.

The first four raw moments of this distribution are as follows:

In order to have a finite moment of order k, it is necessary that \(\min (\lambda _{3},\lambda _{4}) > -\frac{1} {k}\). An elaborate discussion on the skewness and kurtosis has been carried out in Freimer et al. [ 203 ]. The family completely covers the β 1 values with two disjoint curves corresponding to any \(\sqrt{\beta _{ 1}}\) except zero. As one of the parameters is held fixed, the behaviour of skewness is as follows. At \(\lambda _{3} = -\frac{1} {3}\), \(\sqrt{\beta _{ 1}} = -\infty \) then increases monotonically to zero for λ 3 in \((-\frac{1} {3},1)\) and then tends to ∞ as λ 3 → ∞. Similarly, as λ 2 increases from \(-\frac{1} {3}\) to 1 to ∞, \(\sqrt{\beta _{ 1}}\) decreases from ∞ to 0 and to − ∞. The family attains symmetry at \(\lambda _{3} =\lambda _{4}\), but \(\sqrt{\beta _{1}}\) may be zero even if \(\lambda _{3}\neq \lambda _{4}\). Considerable richness is seen in density shapes, there being members that are unimodal, U-shaped, J-shaped and monotone, which are symmetric or skew with short, medium and long tails; see, e.g., Fig. 3.2. Also, there are members with arbitrarily large values for kurtosis, though it does not contain the lowest possible β 2 for a given β 1. There can be more than one set of \((\lambda _{3},\lambda _{4})\) corresponding to a given \((\beta _{1},\beta _{2})\).

Compared to the conventional central moments, the L-moments have much simpler expressions:

The measures of location, spread, skewness and kurtosis based on percentiles are as follows:

It could be seen that when λ 3 = 1, \(\lambda _{4} \rightarrow \infty \) and also when \(\lambda _{3} \rightarrow \infty \) and λ 4 = 1, we have S = 0. The expected value of the rth order statistic X r: n is

Setting r = 1 and n, we get

and

The distributions of X 1: n and X n: n are given by

and

Various reliability functions of the model have closed-form algebraic expressions, except for the variances which contain beta functions. The hazard quantile function is

Mean residual quantile function simplifies to

The variance residual quantile function is

where

and

Percentile residual life function becomes

Expression for the reversed hazard quantile function is

The reversed mean residual quantile function is

the reversed percentile residual life function is

and the reversed variance residual quantile function is

where

and

Although the problem of estimating the parameters of (3.44) is quite similar and all the methods described earlier for the generalized lambda distribution are applicable in this case also, there is comparatively less literature available on this subject. The moment matching method and the least-square approach were discussed by Lakhany and Massuer [ 371 ]. Since these methods involved only replacement of the corresponding expressions for (3.44) in the previous section, the details are not presented here for the sake of brevity. Su [ 550 ] discussed two new approaches—the discretized approach and the method of maximum likelihood for the estimation problem, by tackling it on two fronts: (a) finding suitable initial values and (b) selecting the best fit through an optimization scheme. For the distribution in (3.44), the initial values of λ 3 and λ 4 consist of low discrepancy quasi-random numbers ranging from − 0. 25 to 1.5. After generating these random values, they were used to derive λ 1 and λ 2 by the method of moments as in Lakhany and Massuer [ 371 ]. From these initial values, the GLDEX package (Su [ 551 ]) is employed to find the best set of initial values for the optimization process. In the discretized approach, the range of the data is divided into equally spaced classes, and after arranging the observations in ascending order of magnitude, the proportion falling in each class is ascertained. Then, the differences between the observed (d i ) and theoretical (t i ) proportions are minimized through either

where k is the number of classes.

In the maximum likelihood method, the u i values corresponding to each x i in the data are to be computed first using Q(u). A numerical method such as the Newton-Raphson can be employed for this purpose. Then, with the help of Nelder–Simplex algorithm, the log likelihood function

is maximized to get the final estimates. The GLDEX package provides diagnostic tests that assess the quality of the fit through the Kolmogorov–Smirnov test, quantile plots and agreement between the measures of location, spread, skewness and kurtosis of the data with those of the model fitted to the observations.

Haritha et al. [ 260 ] adopted a percentile method in which they matched the measures of location (median, M), spread (interquartile range), skewness (Galton’s coefficient, S) and kurtosis (Moors’ measure, T) of the population in (3.49)–(3.52) and the data. Among the solutions of the resulting equations, they chose the parameter values that gave

for the smallest ε.

3.2.3 van Staden–Loots Model

A four-parameter distribution that belongs to lambda family proposed by van Staden and Loots [ 572 ], but different from the two versions discussed in Sects. 3.2.1 and 3.2.2, will be studied in this section. The distribution is generated by considering the generalized Pareto model in the form

and its reflection

A weighted sum of these two quantile functions with respective weights λ 3 and 1 − λ 3, 0 ≤ λ 3 ≤ 1, along with the introduction of a location parameter λ 1 and a scale parameter λ 2, provide the new form. Thus, the quantile function of this model is

Equation (3.55) includes the exponential, logistic and uniform distributions as special cases. The support of this distribution is as follows for different choices of λ 3 and λ 4:

For (3.55) to be a life distribution, one must have \(\lambda _{1} -\lambda _{2}(1 -\lambda _{3})\lambda _{4}^{-1} \geq 0\). This gives members with both finite and infinite support, depending on whether λ 4 is positive or negative.

As for descriptive measures, the mean and variance are given by

and

One attractive feature of this family is that its L-moments have very simple forms, and they exist for all λ 4 > − 1, and are as follows:

where S = 1 when r is odd and S = 0 when r is even. These values give L-skewness and L-kurtosis to be

and

respectively. van Staden and Loots [ 572 ] note that, as in the case of the two four-parameter lambda families discussed in the last two sections, there is no unique \((\lambda _{3},\lambda _{4})\) pair for a given value of \((\tau _{3},\tau _{4})\). When \(\lambda _{3} = -\frac{1} {2}\), the distribution is symmetric.

L-skewness covers the entire permissible span ( − 1, 1) and the kurtosis is independent of λ 3 with a minimum attained at \(\lambda _{4} = \sqrt{6} - 1\). The percentile-based measures also have simple explicit forms and are given by

The quantile density function is

and so the density quantile function is

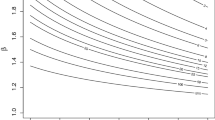

Figure 3.3 displays some shapes of the density function.

Density plots of the GLD proposed by van Staden and Loots [ 572 ] for varying \((\lambda _{1},\lambda _{2},\lambda _{3},\lambda _{4})\). (a) (1,1,0.5,2); (b) (2,1,0.5,3); (c) (3,2,0.25,0.5); (d) (1,2,0.1, − 1)

The expectations of the order statistics from (3.55) are as follows:

Since there are members of the family with support on the positive real line, the model will be useful for describing lifetime data. In this context, the hazard quantile function is

Similarly, the mean residual quantile function is

the reversed hazard quantile function is

and the reversed mean residual quantile function is

Further, the form

determines the quantile function in (3.55) as

where θ(u) is as defined in (3.24).

van Staden and Loots [ 572 ] prescribed the method of L-moments for the estimation of the parameters. With the aid of

where t 4 is the sample L-kurtosis, λ 4 can be estimated. Using \(\hat{\lambda }_{4}\), the estimate \(\hat{\lambda }_{3}\) of \(\lambda _{3}\) can be determined from

where t 3 is the sample L-skewness. The other two-parameter estimates are computed as

with l 1 and l 2 being the usual first two sample L-moments.

The method of percentiles can also be applied for parameter estimation. In fact,

provides λ 4, t and s being the Moors and Galton measures, respectively, evaluated from the data. This is used in (3.59) to find \(\hat{\lambda }_{3}\), and then \(\hat{\lambda }_{2}\) and \(\hat{\lambda }_{1}\) are determined from (3.56) and (3.58) by equating IQR and M with iqr and m, respectively.

3.2.4 Five-Parameter Lambda Family

Gilchrist [ 215 ] proposed a five-parameter family of distributions with quantile function

as an extension to the Freimer et al. [ 203 ] model in (3.44). Tarsitano [ 564 ] studied this model and evaluated various estimation methods for this family. The family has its quantile density function as

In (3.63), λ 1 controls the location parameter though not exclusively, λ 2 ≥ 0 is a scale parameter and λ 3, λ 4 and λ 5 are shape parameters. It is evident that the generalized Tukey lambda family in (3.44) is a special case when λ 3 = 0. The support of the distribution is given by

In the case of non-negative random variables, the condition

would become necessary. The density function may be unimodal with or without truncated tails, U-shaped, S-shaped or monotone. The family also includes the exponential distribution when \(\lambda _{4} \rightarrow \infty \) and \(\lambda _{5} \rightarrow 0\), the generalized Pareto distribution when \(\lambda _{4} \rightarrow \infty \) and \(\vert \lambda _{5}\vert < \infty \), and the power distribution when \(\lambda _{5} \rightarrow 0\) and \(\vert \lambda _{4}\vert < \infty \). Some typical shapes of the distribution are displayed in Fig. 3.4. Tarsitano [ 564 ] has provided close approximations to various symmetric and asymmetric distributions using (3.63) and went on to recommend the usage of the model when a particular distributional form cannot be suggested from the physical situation under consideration. Setting \(Z = \frac{2(X-\lambda _{1})} {\lambda _{2}}\), Tarsitano [ 564 ] expressed the raw moments in the form

provided λ 4 and λ 5 are greater than \(\frac{1} {r}\), where B(⋅,⋅) is the complete beta function, as before. The mean and variance are deduced from the above expression as

The L-moments take on simpler expressions in this case, and the first four are as follows:

Percentile-based measures of location, spread, skewness and kurtosis can also be presented, but they involve rather cumbersome expressions. For example, the median is given by

The means of order statistics are as follows:

Tarsitano [ 564 ] discussed the estimation problem through nonlinear least-squares and least absolute deviation approaches. For a random sample \(X_{1},X_{2},\ldots,X_{n}\) of size n from (3.59), under the least-squares approach, we consider

and then seek the parameter values that minimize

In terms of expectations of order statistics in (3.60), realize that X r: n is an estimate of the expectation in (3.64), which incidentally is linear in λ 1, λ 2 and λ 3 and nonlinear in λ 4 and λ 5. So, as in the case of Osturk and Dale method discussed earlier, we may fix \((\lambda _{4},\lambda _{5})\) and determine \(\lambda _{1},\lambda _{2}\) and λ 3. Then, \((\lambda _{4},\lambda _{5})\) can be found such that (3.65) is minimized. In the least absolute deviation procedure, the objective function to be minimized is

where

3.3 Power-Pareto Distribution

As seen earlier in Table 1.1, the quantile function of the power distribution is of the form

while that of the Pareto distribution is

A new quantile function can then be formed by taking the product of these two as

where C > 0, \(\lambda _{1},\lambda _{2} > 0\) and one of the λ’s may be equal to zero. The distribution of a random variable X with (3.66) as its quantile function is called the power-Pareto distribution. Gilchrist [ 215 ] and Hankin and Lee [ 259 ] have studied the properties of this distribution. It has the quantile density function as

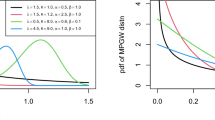

In (3.66), C is a scale parameter, λ 1 and λ 2 are shape parameters, with λ 1 controlling the left tail and λ 2 the right tail. The shape of the density function is displayed in Fig. 3.5 for some parameter values when the scale parameter C = 1.

Conventional moments of (3.66) are given by

which exists whenever \(\lambda _{2} < \frac{1} {r}\). From this, the mean and variance are obtained as

and

The range of skewness and kurtosis is evaluated over the range λ 1 > 0, \(0 \leq \lambda _{2} < \frac{1} {4}\). Minimum skewness and minimum kurtosis are both attained at λ 2 = 0, and both these measures show increasing trend with respect to λ 1 and λ 2. Kurtosis is also seen to be as an increasing function of skewness. Hankin and Lee [ 259 ] have mentioned that the distribution is more suitable for positively skewed data and can provide good approximations to gamma, Weibull and lognormal distributions.

Percentile-based measures are simpler and are given by

Further, the first four L-moments are as follows:

where B(⋅,⋅) is the complete beta function. From these expressions, we readily obtain the L-skewness and L-kurtosis measures as

and

The expected value of the rth order statistic is

while the quantile functions of X 1: n and X n: n are given by

and

It is easily seen that the hazard quantile function is

the mean residual quantile function is

the reversed hazard quantile function is

and the reversed mean residual quantile function is

Next, upon denoting the quantile function of the distribution by \(Q(u;C,\lambda _{1},\lambda _{2})\), we have the following characterization results for this family of distributions (Nair and Sankaran [ 443 ]).

Theorem 3.1.

A non-negative variable X is distributed as \(Q(u;C,\lambda _{1},0)\) if and only if

-

(i)

\(H(u) = k_{1}u{[(1 - u)Q(u)]}^{-1}\) , k 1 > 0;

-

(ii)

\(M(u) = k_{1}{[Q(u)]}^{-1}\) ;

-

(iii)

R(u) = k 2 Q(u), k 2 < 1;

-

(iv)

Λ(u)R(u) = k 3, k 2 < 1,

where k i ’s are constants.

Theorem 3.2.

A non-negative random variable X is distributed as Q 1 (u;C,0,λ 2 ) if and only if

-

1.

\(H(u) = A_{1}{[Q_{1}(u)]}^{-1}\) , A 1 > 0;

-

2.

\(\Lambda (u) = A_{1}(1 - u){[uQ_{1}(u)]}^{-1}\) ;

-

3.

\(M(u) = A_{2}Q_{1}(u)\) ;

-

4.

M(u)H(u) = A 3, A 3 > 0,

where A 1, A 2 and A 3 are constants.

An interesting special case of (3.66) arises when \(\lambda _{1} =\lambda _{2} =\lambda > 0\) in which case it becomes the loglogistic distribution (see Table 1.1). A detailed analysis of theloglogistic model and its applications in reliability studies have been made by Cox and Oakes [ 158 ] and Gupta et al. [ 237 ]. In this case, we deduce the following characterizations from the above.

Theorem 3.3.

A non-negative random variable X has loglogistic distribution with

if and only if one of the following conditions hold:

-

(i)

\(H(u) = \frac{ku} {Q(u)}\) ;

-

(ii)

\(\Lambda (u) = \frac{k(1-u)} {Q(u)}\) .

Hankin and Lee [ 259 ] proposed two methods of estimation—the least-squares and the maximum likelihood. In the least-squares method, they use

since logX r: n and logQ(U r: n ) have the same distribution, where U r: n is the rth order statistic from a sample of size n from the uniform distribution. Thus, from (3.36), we have

and

Then, the model parameters estimated by minimizing

Substituting (3.74) and (3.75) into the expression of E(logX r: n ) in (3.73), we have an ordinary linear regression problem and is solved by standard programs available for the purpose. Maximum likelihood estimates are calculated as described earlier in Sect. 3.2.2 by following the steps described in Hankin and Lee [ 259 ]. In a comparison of the two methods by means of simulated variances, Hankin and Lee [ 259 ] found the least-squares method to be better for small samples when the parameters λ 1 and λ 2 are roughly equal and the maximum likelihood method to be better otherwise.

3.4 Govindarajulu’s Distribution

Govindarajulu’s [ 224 ] model is the earliest attempt to introduce a quantile function, not having an explicit form of distribution function, for modelling data on failure times. He considered the quantile function

He used it to model the data on the failure times of a set of 20 refrigerators that were run to destruction under advanced stress conditions. Even though the validity of the model and its application to nonparametric inference were studied by him, the properties of the distribution were not explored. We now present a detailed study of its properties and applications.

The support of the distribution in (3.76) is (θ, θ + σ). Since we treat it as a lifetime model, θ is set to be zero so that (3.76) reduces to

Note that there is no loss of generality in studying the properties of this distribution based on (3.77) since the transformation Y = X + θ, where X has its quantile function Q(u) as in (3.77), will provide the corresponding results for (3.76). From (3.77), the quantile density function is

Equation (3.78) yields the density function of X as

Thus, this model belongs to the class of distributions, possessing density function explicitly in terms of the distribution function, discussed by Jones [ 307 ]. Further, by differentiating (3.78), we get

from which we observe that the density function is monotone decreasing for β ≤ 1, and q′(u) = 0 gives \(u_{0} {=\beta }^{-1}(\beta -1)\). Thus, when β > 1, there is an antimode at u 0. Figure 3.6 shows the shapes of the density function for some choices of β.

The raw moments are given by

In particular, the mean and variance are

Moreover, we have the median as

the interquartile range as

the skewness as

and the kurtosis as

as percentile-based descriptive measures. Much simpler expressions are available for the L-moments as follows:

Consequently, we have

With τ 3 being an increasing function of β, its limits are obtained as β → 0 and β → ∞. These limits show that τ 3 lies between \((-\frac{1} {2},1)\), and so it does not cover the entire range ( − 1, 1). But the distribution has negatively skewed, symmetric (at β = 2) and positively skewed members. The L-kurtosis τ 4 is nonmonotone, decreasing initially, reaching a minimum in the symmetric case, and then increasing to unity.

A particularly interesting property of Govindarajulu’s distribution is the distribution of its order statistics. The density function of X r: n is

upon using (3.79). So, we have

In particular,

and

The quantile functions of X 1: n and X n: n are

and

All the reliability functions also have tractable forms. The hazard quantile function is given by

and the mean residual quantile function is

From the expression of the quantile function, it is evident that the parameter β largely controls the left tail and therefore facilitates in modelling reliability concepts in reversed time. Accordingly, the reversed hazard and reversed mean residual quantile functions are given by

respectively. The reversed variance residual quantile function has the expression

We further note that the function

is a homographic function of u.

Characterization problems of life distributions by the relationship between the reversed hazard rate and the reversed mean residual life in the distribution function approach have been discussed in literature; see Chandra and Roy [ 135 ]. In this spirit, from (3.82), we have the following theorem.

Theorem 3.4.

For a non-negative random variable X, the relationship

holds for all 0 < u < 1 if and only if

provided that a and b are real numbers satisfying

Proof.

Suppose (3.83) holds. Then, we have

Equation (3.86) simplifies to

Upon integrating the above equation, we obtain

or

with the use of (3.85). By differentiation, we then obtain

Integrating the last expression from 0 to u and setting Q(0) = 0, we get (3.84). The converse part follows from the equations

and

Remark 3.2.

Govindarajulu’s distribution is secured when \(a = {(1+\beta )}^{-1}\). The condition imposed on a and b in (3.85) can be relaxed to provide a more general family of quantile functions.

Regarding the estimation of the parameters σ and β, all the conventional methods like method of moments, percentiles, least-squares and maximum likelihood can be applied to the distribution quite easily. For example, in the method of moments, equating the mean and variance, we obtain

Thus, we get

which may be solved to get β. Then, by substituting it in (3.87), the estimate of σ can be found. There may be more than one solution for β and in this case a goodness of fit may then be applied to locate the best solution. The method of L-moments and some results comparing the different methods are presented in Sect. 3.6. Compared to the more flexible quantile functions discussed in the earlier sections, the estimation problem is easily resolvable in this case with no computational complexities. One of the major limitations of Govindarajulu’s model, as mentioned earlier, is that it cannot cover the entire skewness range. In the admissible range, however, it provides good approximations to other distributions as will be seen in Sect. 3.6.

3.5 Generalized Weibull Family

This particular family of distributions is different from the distributions discussed so far in this chapter in the sense that it has a closed-form expression for the distribution function. So, all conventional methods of analysis are possible in this case. As a generalization of the Weibull distribution, the generalized Weibull family is defined by Mudholkar et al. [ 428 ] as

for α, σ > 0 and real λ. The corresponding distribution function is

with support (0, ∞) for λ ≤ 0 and \((0,{ \frac{\sigma } {\lambda }^{\alpha }})\) for λ > 0. The quantile density function has the form

The density function has a wide variety of shapes that include U-shaped, unimodal and monotone increasing or decreasing shapes. The raw moments are given by

Moments of all orders exist for α > 0, λ > 0. If λ < 0, then E(X r) exists if α λ > − r − 1. The expressions for the percentile-based descriptive measures are as follows:

For the calculation of L-moments, we use the result

in (1.34)–(1.37). Various reliability characteristics are determined as follows:

The parameters of the model are estimated by the method of maximum likelihood as discussed in Mudholkar et al. [ 428 ]. Due to the variety of shapes that the hazard functions can assume (see Chap. 4 for details), it is a useful model for survival data. This distribution has also appeared in some other discussions including assessment of tests of exponentiality (Mudholkar and Kollia [ 425 ]), approximations to sampling distributions, analysis of censored survival data (Mudholkar et al. [ 428 ]), and generating samples and approximating other distributions. Chi-squared goodness-of-fit tests for this family of distributions have been discussed by Voinov et al. [ 575 ].

3.6 Applications to Lifetime Data

In order to emphasize the applications of quantile function in reliability analysis, we demonstrate here that some of the models discussed in the preceding sections can serve as useful lifetime distributions. The conditions in the parameters that make the underlying random variables non-negative have been obtained. We now fit these models for some real data on failure times. Three representative models, one each from the lambda family, the power-Pareto and Govindarajulu’s distributions, will be considered for this purpose. The first two examples have been discussed in Nair and Vineshkumar [ 452 ].

The four-parameter lambda distribution in (3.44) is applied to the data of 100 observations on failure times of aluminum coupons (data source: Birnbaum and Saunders [ 104 ] and quoted in Lai and Xie [ 368 ]). The last observation in the data is excluded from the analysis to extract equal frequencies in the bins. Distribute the data into ten classes, each containing ten observations in ascending order of magnitude. For estimating the parameters, we use the method of L-moments. The first four sample L-moments are l 1 = 1, 391. 79, l 2 = 215. 683, l 3 = 3. 570 and l 4 = 20. 7676. Thus, the model parameters need to be solved from

Among the solutions, the best fitting one, determined by the chi-square test (i.e., the parameter estimates that gave the least chi-square value), is

Further, the upper limit of the support is 2,750.7, and thus the estimated support (256.28, 2,750.7) covers the range of observations (370, 2,240) in the data. Using (3.44) for \(u = \frac{1} {10}, \frac{2} {10},\cdots \), and the fact that if U has a uniform distribution on [0, 1] then X and Q(u) have identical distributions, we find the observed frequencies in the classes to be 10, 10, 9, 12, 8, 11, 8, 12 and 10. Of course, under the uniform model, the expected frequencies are 10 in each class. Thus, the optimized chi-square value for the fit is χ 2 = 1. 8 which does not lead to rejection of the model in (3.44) for the given data. The Q-Q plot corresponding to the model is presented in Fig. 3.7.

The second example concerns the power-Pareto distribution in (3.66). To ascertain the potential of the model, we fit it to the data on the times to first failure of 20 electric carts, presented by Zimmer et al. [ 604 ], and also quoted in Lai and Xie [ 368 ]. Here again, the method of L-moments is adopted. The sample L-moments are l 1 = 14. 675, l 2 = 7. 335 and l 3 = 2. 4678. Equating the population L-moments L 1, L 2 and L 3 presented in Sect. 3.3 to l 1, l 2 and l 3 and solving the resulting equations, we obtain

The corresponding Q-Q plot is presented in Fig. 3.8.

Govindarajulu’s distribution has already been shown as a suitable model for failure times in the original paper of Govindarajulu [ 224 ]. We reinforce this by fitting it to the data on the failure times of 50 devices, reported in Lai and Xie [ 368 ]. Equating the first two L-moments with those of the sample, the estimates of the model parameters are obtained as

Dividing the data into five groups of ten observations each, we find by proceeding as in the first example that the chi-square value is 1.8, which does not lead to the rejection of the considered model. Figure 3.9 presents the Q-Q plot of the fit obtained.

The objectives of these illustrations were limited to the purpose of demonstrating the use of quantile function models in reliability analysis. A full theoretical analysis and demonstration to real data situations of all the reliability functions vis-a-vis their ageing properties will be taken up subsequently in Chap. 4.

References

Arnold, B.C., Balakrishnan, N., Nagaraja, H.N.: A First Course in Order Statistics. Wiley, New York (1992)

Asquith, W.H.: L-moments and TL-moments of the generalized lambda distribution. Comput. Stat. Data Anal. 51, 4484–4496 (2007)

Balakrishnan, N., Balasubramanian, K.: Equivalence of Hartley-David-Gumbel and Papathanasiou bounds and some further remarks. Stat. Probab. Lett. 16, 39–41 (1993)

Bigerelle, M., Najjar, D., Fournier, B., Rupin, N., Iost, A.: Application of lambda distributions and bootstrap analysis to the prediction of fatigue lifetime and confidence intervals. Int. J. Fatig. 28, 233–236 (2005)

Birnbaum, Z.W., Saunders, S.C.: A statistical model for life length of materials. J. Am. Stat. Assoc. 53, 151–160 (1958)

Cao, R., Lugosi, G.: Goodness of fit tests based on the kernel density estimator. Scand. J. Stat. 32, 599–616 (2005)

Chandra, N.K., Roy, D.: Some results on reversed hazard rates. Probab. Eng. Inform. Sci. 15, 95–102 (2001)

Cox, D.R., Oakes, D.: Analysis of Survival Data. Chapman and Hall, London (1984)

Dudewicz, E.J., Karian, A.: The extended generalized lambda distribution (EGLD) system for fitting distribution to data with moments, II: Tables. Am. J. Math. Manag. Sci. 19, 1–73 (1996)

Filliben, J.J.: Simple and robust linear estimation of the location parameter of a symmetric distribution. Ph.D. thesis, Princeton University, Princeton (1969)

Fournier, B., Rupin, N., Bigerelle, M., Najjar, D., Iost, A.: Application of the generalized lambda distributions in a statistical process control methodology. J. Process Contr. 16, 1087–1098 (2006)

Fournier, B., Rupin, N., Bigerelle, M., Najjar, D., Iost, A., Wilcox, R.: Estimating the parameters of a generalized lambda distribution. Comput. Stat. Data Anal. 51, 2813–2835 (2007)

Freimer, M., Mudholkar, G.S., Kollia, G., Lin, C.T.: A study of the generalised Tukey lambda family. Comm. Stat. Theor. Meth. 17, 3547–3567 (1988)

Gilchrist, W.G.: Statistical Modelling with Quantile Functions. Chapman and Hall/CRC Press, Boca Raton (2000)

Govindarajulu, Z.: A class of distributions useful in life testing and reliability with applications to nonparametric testing. In: Tsokos, C.P., Shimi, I.N. (eds.) Theory and Applications of Reliability, vol. 1, pp. 109–130. Academic, New York (1977)

Gumbel, E.J.: The maxima of the mean largest value and of the range. Ann Math. Stat. 25, 76–84 (1954)

Gupta, R.C., Akman, H.O., Lvin, S.: A study of log-logistic model in survival analysis. Biometrical J. 41, 431–433 (1999)

Hankin, R.K.S., Lee, A.: A new family of non-negative distributions. Aust. New Zeal. J. Stat. 48, 67–78 (2006)

Haritha, N.H., Nair, N.U., Nair, K.R.M.: Modelling incomes using generalized lambda distributions. J. Income Distrib. 17, 37–51 (2008)

Hartley, H.O., David, H.A.: Universal bounds for mean range and extreme observations. Ann. Math. Stat. 25, 85–99 (1954)

Hastings, C., Mosteller, F., Tukey, J.W., Winsor, C.P.: Low moments for small samples: A comparative study of statistics. Ann. Math. Stat. 18, 413–426 (1947)

Joiner, B.L., Rosenblatt, J.R.: Some properties of the range of samples from Tukey’s symmetric lambda distribution. J. Am. Stat. Assoc. 66, 394–399 (1971)

Jones, M.C.: On a class of distributions defined by the relationship between their density and distribution functions. Comm. Stat. Theor. Meth. 36, 1835–1843 (2007)

Karvanen, J., Nuutinen, A.: Characterizing the generalized lambda distribution by L-moments. Comput. Stat. Data Anal. 52, 1971–1983 (2008)

Karian, A., Dudewicz, E.J.: Fitting Statistical Distributions, the Generalized Lambda Distribution and Generalized Bootstrap Methods. Chapman and Hall/CRC Press, Boca Raton (2000)

Karian, A., Dudewicz, E.J.: Comparison of GLD fitting methods, superiority of percentile fits to moments in L 2 norm. J. Iran. Stat. Soc. 2, 171–187 (2003)

Karian, A., Dudewicz, E.J.: Computational issues in fitting statistical distributions to data. Am. J. Math. Manag. Sci. 27, 319–349 (2007)

Karian, A., Dudewicz, E.J.: Handbook of Fitting Statistical Distributions with R. CRC Press, Boca Raton (2011)

King, R.A.R., MacGillivray, H.L.: A starship estimation method for the generalized λ distributions. Aust. New Zeal. J. Stat. 41, 353–374 (1999)

King, R.A.R., MacGillivray, H.L.: Fitting the generalized lambda distribution with location and scale-free shape functionals. Am. J. Math. Manag. Sci. 27, 441–460 (2007)

Kupka, J., Loo, S.: The hazard and vitality measures of ageing. J. Appl. Probab. 26, 532–542 (1989)

Lai, C.D., Xie, M.: Stochastic Ageing and Dependence for Reliability. Springer, New York (2006)

Lakhany, A., Mausser, H.: Estimating parameters of the generalized lambda distribution. Algo Res. Q. 3, 47–58 (2000)

Lefante, J.J., Jr.: The generalized single hit model. Math. Biosci. 83, 167–177 (1987)

Mercy, J., Kumaran, M.: Estimation of the generalized lambda distribution from censored data. Braz. J. Probab. Stat. 24, 42–56 (2010)

Mudholkar, G.S., Kollia, G.D.: The isotones of the test of exponentiality. In: ASA Proceedings, Statistical Graphics, Alexandria (1990)

Mudholkar, G.S., Srivastava, D.K., Kollia, G.D.: A generalization of the Weibull distribution with applications to the analysis of survival data. J. Am. Stat. Assoc. 91, 1575–1583 (1996)

Nair, N.U., Sankaran, P.G.: Characterization of multivariate life distributions. J. Multivariate Anal. 99, 2096–2107 (2008)

Nair, N.U., Sudheesh, K.K.: Characterization of continuous distributions by properties of conditional variance. Stat. Methodol. 7, 30–40 (2010)

Nair, N.U., Vineshkumar, B.: L-moments of residual life. J. Stat. Plann. Infer. 140, 2618–2631 (2010)

Najjar, D., Bigerelle, M., Lefevre, C., Iost, A.: A new approach to predict the pit depth extreme value of a localized corrosion process. ISIJ Int. 43, 720–725 (2003)

Osturk, A., Dale, R.F.: A study of fitting the generalized lambda distribution to solar radiation data. J. Appl. Meteorol. 12, 995–1004 (1982)

Osturk, A., Dale, R.F.: Least squares estimation of the parameters of the generalized lambda distribution. Technometrics 27, 81–84 (1985)

Pregibon, D.: Goodness of link tests for generalized linear models. Appl. Stat. 29, 15–24 (1980)

Ramberg, J.S., Dudewicz, E., Tadikamalla, P., Mykytka, E.: A probability distribution and its uses in fitting data. Technometrics 21, 210–214 (1979)

Ramberg, J.S., Schmeiser, B.W.: An approximate method for generating symmetric random variables. Comm. Assoc. Comput. Mach. 15, 987–990 (1972)

Ramberg, J.S., Schmeiser, B.W.: An approximate method for generating asymmetric random variables. Comm. Assoc. Comput. Mach. 17, 78–82 (1974)

Ramos-Fernandez, A., Paradela, A., Narajas, R., Albar, J.P.: Generalized method for probability based peptitude and protein identification from tandem mass spectrometry data and sequence data base searching. Mol. Cell. Proteomics 7, 1745–1754 (2008)

Robinson, L.W., Chan, R.R.: Scheduling doctor’s appointment, optimal and empirically based heuristic policies. IIE Trans. 35, 295–307 (2003)

Shapiro, S.S., Wilk, M.B.: An analysis of variance test for normality. Biometrika 52, 591–611 (1965)

Silver, E.A.: A safety factor approximation based upon Tukey’s lambda distribution. Oper. Res. Q. 28, 743–746 (1977)

Su, S.: A discretised approach to flexibly fit generalized lambda distributions to data. J. Mod. Appl. Stat. Meth. 4, 408–424 (2005)

Su, S.: Numerical maximum log likelihood estimation for generalized lambda distributions. Comput. Stat. Data Anal. 51, 3983–3998 (2007)

Su, S.: Fitting single and mixture of the generalized lambda distributions via discretized and maximum likelihood methods: GILDEX in (r). J. Stat. Software 21, 1–22 (2010)

Tarsitano, A.: Comparing estimation methods for the FPLD. J. Probab. Stat. 1–16 (2010)

Tukey, J.W.: The practical relationship between the common transformations of percentages of count and of amount. Technical Report 36, Princeton University, Princeton (1960)

van Dyke, J.: Numerical investigation of the random variable y = c(u λ − (1 − u)λ). Unpublished working paper. National Bureau of Standards, Statistical Engineering Laboratory (1961)

van Staden, P.J., Loots, M.T.: L-moment estimation for the generalized lambda distribution. In: Third Annual ASEARC Conference, New Castle, Australia (2009)

Voinov, V., Nikulin, M.S., Balakrishnan, N.: Chi-Squared Goodness-of-Fit Tests with Applications. Academic, Boston (2013)

Zimmer, W., Keats, J.B., Wang, F.K.: The Burr XII distribution in reliability analysis. J. Qual. Technol. 20, 386–394 (1998)

Author information

Authors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Nair, N.U., Sankaran, P.G., Balakrishnan, N. (2013). Quantile Function Models. In: Quantile-Based Reliability Analysis. Statistics for Industry and Technology. Birkhäuser, New York, NY. https://doi.org/10.1007/978-0-8176-8361-0_3

Download citation

DOI: https://doi.org/10.1007/978-0-8176-8361-0_3

Published:

Publisher Name: Birkhäuser, New York, NY

Print ISBN: 978-0-8176-8360-3

Online ISBN: 978-0-8176-8361-0

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)