Abstract

A probability distribution can be specified either in terms of the distribution function or by the quantile function. This chapter addresses the problem of describing the various characteristics of a distribution through its quantile function. We give a brief summary of the important milestones in the development of this area of research. The definition and properties of the quantile function with examples are presented. In Table 1.1, quantile functions of various life distributions, representing different data situations, are included. Descriptive measures of the distributions such as location, dispersion and skewness are traditionally expressed in terms of the moments. The limitations of such measures are pointed out and some alternative quantile-based measures are discussed. Order statistics play an important role in statistical analysis. Distributions of order statistics in quantile forms, their properties and role in reliability analysis form the next topic in the chapter. There are many problems associated with the use of conventional moments in modelling and analysis. After exploring these, we present the definition, properties and application of L-moments as an alternative way of describing a distribution. Finally, certain graphical representations such as the Q-Q plot, box-plot and leaf-plot are shown to be useful tools for a preliminary analysis of the data.


1.1 Introduction

As mentioned earlier, a probability distribution can be specified either in terms of the distribution function or by the quantile function. Although both convey the same information about the distribution, with different interpretations, the concepts and methodologies based on distribution functions are traditionally employed in most forms of statistical theory and practice. One reason for this is that quantile-based studies were carried out mostly when the traditional approach either is difficult or fails to provide desired results. Except in a few isolated areas, there have been no systematic parallel developments aimed at replacing distribution functions in modelling and analysis by quantile functions. However, the feeling that through an appropriate choice of the domain of observations, a better understanding of a chance phenomenon can be achieved by the use of quantile functions, is fast gaining acceptance.

Historically, many facts about the potential of quantiles in data analysis were known even before the nineteenth century. It appears that the Belgian sociologist Quetelet [499] initiated the use of quantiles in statistical analysis in the form of the present-day inter-quantile range. A formal representation of a distribution through a quantile function was introduced by Galton (1822–1911) [206], who also initiated the ordering of observations along with the concepts of median, quartiles and interquartile range. Subsequently, research on quantiles was directed towards estimation problems with the aid of sample quantiles, their large-sample behaviour and limiting distributions (Galton [207, 208]). A major development in using quantile functions to represent distributions is the work of Hastings et al. [264], who introduced a family of distributions by a quantile function. This was refined later by Tukey [568]. The symmetric distribution of Tukey [568] and his articulation of exploratory data analysis sparked considerable interest in quantile functional forms that continues to this day. Various aspects of the Tukey family and generalizations thereof were studied by a number of authors including Hogben [273], Shapiro and Wilk [536], Filliben [197], Joiner and Rosenblatt [304], Ramberg and Schmeiser [504], Ramberg [501], Ramberg et al. [502], MacGillivray [407], Freimer et al. [203], Gilchrist [215] and Tarsitano [563]. We will discuss all these models in Chap. 3. Another turning point in the development of quantile functions is the seminal paper by Parzen [484], in which he emphasized the description of a distribution in terms of the quantile function and its role in data modelling. Parzen [485–487] presents a sequential development of the theory and application of quantile functions in different areas and also as a tool for unifying various approaches.

Quantile functions have several interesting properties that are not shared by distribution functions, which make them more convenient for analysis. For example, the sum of two quantile functions is again a quantile function. The quantile functions of order statistics also have explicit general distributional forms. In Sect. 1.2, we mention these and some other properties. Moreover, random numbers from any distribution can be generated using appropriate quantile functions, a purpose for which lambda distributions were originally conceived.

The moments in different forms such as raw, central, absolute and factorial have been used effectively in specifying the model, describing the basic characteristics of distributions, and in inferential procedures. Some of the methods of estimation like least squares, maximum likelihood and method of moments often provide estimators and/or their standard errors in terms of moments. Outliers have a significant effect on the estimates so derived. For example, in the case of samples from the normal distribution, all the above methods give the sample mean as the estimate of the population mean, and its value changes significantly in the presence of an outlying observation. Asymptotic efficiency of the sample moments is rather poor for heavy-tailed distributions, since the asymptotic variances are mainly in terms of higher-order moments that tend to be large in this case. In reliability analysis, a single long-term survivor can have a marked effect on mean life, especially in the case of heavy-tailed models, which are commonly encountered for lifetime data. In such cases, quantile-based estimates are generally found to be more precise and robust against outliers. Another advantage of choosing quantiles is that in life testing experiments, one need not wait until all the items on test have failed; the failures of just a portion of them suffice for proposing useful estimates.

Thus, there is a case for adopting quantile functions as lifetime models and basing their analysis on functions derived from them. Many other facets of the quantile approach will become more explicit in the sequel in the form of alternative methodology, new opportunities and unique cases where there are no corresponding results if one adopts the distribution function approach.

1.2 Definitions and Properties

In this section, we define the quantile function and discuss some of its general properties. The random variable considered here has the real line as its support, but the results are equally valid for lifetime random variables, which take on only non-negative values.

Definition 1.1.

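Let X be a real valued continuous random variable with distribution function F(x) which is continuous from the right. Then, the quantile function Q(u) of X is defined as

$$\displaystyle{Q(u) = {F}^{-1}(u) =\inf \{ x: F(x) \geq u\},\quad 0 \leq u \leq 1.}\tag{1.1}$$

For every − ∞ < x < ∞ and 0 < u < 1, we have

$$\displaystyle{F(x) \geq u\quad \text{if and only if}\quad Q(u) \leq x.}$$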

Thus, if there exists an x such that F(x) = u, then F(Q(u)) = u and Q(u) is the smallest value of x satisfying F(x) = u. Further, if F(x) is continuous and strictly increasing, Q(u) is the unique value x such that F(x) = u, and so by solving the equation F(x) = u, we can find x in terms of u which is the quantile function of X. Most of the distributions we consider in this work are of this form and nature.
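When F(x) is continuous and strictly increasing but the equation F(x) = u has no closed-form solution, the infimum in Definition 1.1 can still be approximated numerically. The following is a minimal Python sketch using bisection; the function name `quantile_from_cdf`, the user-supplied bracket `[lo, hi]` and the exponential test case are illustrative assumptions, not part of the text.

```python
import math


def quantile_from_cdf(F, u, lo, hi, tol=1e-12, max_iter=200):
    """Approximate Q(u) = inf{x : F(x) >= u} for a non-decreasing F by bisection.

    Assumes the bracket satisfies F(lo) < u <= F(hi).
    """
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie in (0, 1)")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if F(mid) >= u:
            hi = mid  # mid belongs to {x : F(x) >= u}, so the infimum is at most mid
        else:
            lo = mid  # F(mid) < u, so the infimum lies to the right of mid
        if hi - lo < tol:
            break
    return hi


def exponential_cdf(x, lam=2.0):
    """F(x) = 1 - exp(-lam * x) for x > 0 (illustrative choice of lam)."""
    return 1.0 - math.exp(-lam * x) if x > 0 else 0.0


# The closed-form answer is -log(1 - u) / lam; bisection should agree closely.
approx = quantile_from_cdf(exponential_cdf, 0.9, 0.0, 100.0)
exact = -math.log(1.0 - 0.9) / 2.0
```

Returning `hi` rather than the midpoint keeps the answer inside the set {x : F(x) ≥ u}, which matches the "smallest x" reading of the definition.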

Definition 1.2.

If f(x) is the probability density function of X, then f(Q(u)) is called the density quantile function. The derivative of Q(u), i.e.,

$$\displaystyle{q(u) = {Q}^{{\prime}}(u),}$$

is known as the quantile density function of X. By differentiating F(Q(u)) = u, we find

$$\displaystyle{q(u)f(Q(u)) = 1.}\tag{1.2}$$

Some important properties of quantile functions required in the sequel are as follows.

1. From the definition of Q(u) for a general distribution function, we see that

(a) Q(u) is non-decreasing on (0, 1) with Q(F(x)) ≤ x for all − ∞ < x < ∞ for which 0 < F(x) < 1;

(b) F(Q(u)) ≥ u for any 0 < u < 1;

(c) Q(u) is continuous from the left, i.e., Q(u−) = Q(u);

(d) \(Q(u+) =\inf \{ x: F(x)> u\}\), so that Q(u) has limits from above;

(e) any jumps of F(x) are flat points of Q(u), and flat points of F(x) are jumps of Q(u).

2. If U is a uniform random variable over [0, 1], then X = Q(U) has distribution function F(x). This follows from the fact that

$$\displaystyle{P(Q(U) \leq x) = P(U \leq F(x)) = F(x).}$$

This property enables us to conceive of a given data set as arising from the uniform distribution transformed by the quantile function Q(u).
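Property 2 is the basis of inverse-transform random number generation. The following minimal Python sketch draws from an exponential distribution by feeding uniform variates into its quantile function; the rate `LAM = 0.5`, the seed and the helper name `sample_via_quantile` are illustrative assumptions.

```python
import math
import random


def sample_via_quantile(Q, n, rng):
    """Property 2: if U ~ Uniform[0, 1), then X = Q(U) has distribution function F."""
    return [Q(rng.random()) for _ in range(n)]


LAM = 0.5  # illustrative exponential rate; the mean is 1 / LAM = 2


def exponential_Q(u):
    return -math.log(1.0 - u) / LAM


rng = random.Random(2024)  # fixed seed so the run is reproducible
xs = sample_via_quantile(exponential_Q, 50_000, rng)
median = exponential_Q(0.5)
frac_below_median = sum(x <= median for x in xs) / len(xs)
sample_mean = sum(xs) / len(xs)
```

About half of the simulated values should fall below Q(0.5), and the sample mean should be close to 2.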

3. If T(x) is a non-decreasing function of x, then T(Q(u)) is a quantile function. Gilchrist [215] refers to this as the Q-transformation rule. On the other hand, if T(x) is non-increasing, then T(Q(1 − u)) is also a quantile function.

Example 1.1.

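Let X be a random variable with the Pareto type II (also called Lomax) distribution with

$$\displaystyle{F(x) = 1 -{\alpha }^{c}{(x + \alpha )}^{-c},\quad x> 0;\ \alpha,c> 0.}$$

Since F(x) is strictly increasing, setting F(x) = u and solving for x, we obtain

$$\displaystyle{Q(u) =\alpha \left [{(1 - u)}^{-\frac{1} {c} } - 1\right ].}$$

Taking \(T(X) = {X}^{\beta }\), β > 0, we have a non-decreasing transformation which results in

$$\displaystyle{T(Q(u)) = {\alpha }^{\beta }{\left [{(1 - u)}^{-\frac{1} {c} } - 1\right ]}^{\beta }.}$$

When T(Q(u)) = y, we obtain, on solving for u,

$$\displaystyle{u = G(y) = 1 -{\left (1 + \frac{{y}^{\frac{1} {\beta } }} {\alpha } \right )}^{-c},\quad y> 0,}$$

which is a Burr type XII distribution with T(Q(u)) being the corresponding quantile function.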
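The Q-transformation in Example 1.1 can be checked numerically: applying the Burr XII distribution function G to T(Q(u)) should return u. A minimal Python sketch, assuming the Lomax parameterization F(x) = 1 − α^c (x + α)^{−c} used above and illustrative values α = 2, c = 3, β = 1.5:

```python
def lomax_Q(u, alpha, c):
    """Q(u) = alpha * ((1 - u)^(-1/c) - 1) for F(x) = 1 - alpha^c (x + alpha)^(-c)."""
    return alpha * ((1.0 - u) ** (-1.0 / c) - 1.0)


def burr12_Q(u, alpha, c, beta):
    """Q-transformation rule with T(x) = x**beta, which is non-decreasing for x >= 0."""
    return lomax_Q(u, alpha, c) ** beta


def burr12_F(y, alpha, c, beta):
    """G(y) = 1 - (1 + y^(1/beta) / alpha)^(-c), from solving T(Q(u)) = y for u."""
    return 1.0 - (1.0 + y ** (1.0 / beta) / alpha) ** (-c)


alpha, c, beta = 2.0, 3.0, 1.5  # illustrative parameter values
for u in (0.1, 0.5, 0.9):
    y = burr12_Q(u, alpha, c, beta)
    print(f"u={u:.1f}  Q(u)={y:.4f}  G(Q(u))={burr12_F(y, alpha, c, beta):.4f}")
```

The last printed column should reproduce u, confirming that T(Q(u)) is the quantile function of G.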

Example 1.2.

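Assume X has the Pareto type I distribution with

$$\displaystyle{F(x) = 1 -{\left (\frac{\sigma } {x}\right )}^{\alpha },\quad x>\sigma;\ \alpha,\sigma> 0.}$$

Then, working as in the previous example, we see that

$$\displaystyle{Q(u) =\sigma {(1 - u)}^{-\frac{1} {\alpha } }.}$$

Applying the transformation \(T(X) = Y = {X}^{-1}\), which is non-increasing, we have

$$\displaystyle{T(Q(1 - u)) = {\sigma }^{-1}{u}^{\frac{1} {\alpha } },}$$

and equating this to y and solving, we get

$$\displaystyle{u = G(y) = {(\sigma y)}^{\alpha },\quad 0 <y <{\sigma }^{-1}.}$$

G(y) is the distribution function of a power distribution with T(Q(1 − u)) being the corresponding quantile function.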

4. If Q(u) is the quantile function of X with continuous distribution function F(x) and T(u) is a non-decreasing function satisfying the boundary conditions T(0) = 0 and T(1) = 1, then Q(T(u)) is a quantile function of a random variable with the same support as X.

Example 1.3.

Consider a non-negative random variable with continuous distribution function F(x) and quantile function Q(u). Taking \(T(u) = {u}^{\frac{1} {\theta } }\), for θ > 0, we have T(0) = 0 and T(1) = 1. Then,

$$\displaystyle{Q_{1}(u) = Q(T(u)) = Q({u}^{\frac{1} {\theta } })}$$

is a quantile function. Further, if y = Q_1(u), then \({u}^{\frac{1} {\theta } } = F(y)\), and so the distribution function corresponding to Q_1(u) is

$$\displaystyle{G(x) = {F}^{\theta }(x).}$$

The random variable Y with distribution function G(x) is called the proportional reversed hazards model of X. There is considerable literature on such models in reliability and survival analysis. If we take X to be exponential with

$$\displaystyle{F(x) = 1 - {e}^{-\lambda x},\quad x> 0;\ \lambda> 0,}$$

so that

$$\displaystyle{Q(u) = -{\lambda }^{-1}\log (1 - u),}$$

then

$$\displaystyle{Q_{1}(u) = -{\lambda }^{-1}\log (1 - {u}^{\frac{1} {\theta } })}$$

provides

$$\displaystyle{G(x) = {(1 - {e}^{-\lambda x})}^{\theta },\quad x> 0,}$$

the generalized or exponentiated exponential law (Gupta and Kundu [250]). In a similar manner, Mudholkar and Srivastava [429] take the baseline distribution as Weibull. For some recent results and a survey of such models, we refer readers to Gupta and Gupta [240]. In Chap. 3, we will come across several quantile functions that represent families of distributions containing some life distributions as special cases. They are highly flexible empirical models capable of approximating many continuous distributions. Applying the above transformation to these models generates new proportional reversed hazards models of a general form. The analysis of lifetime data employing such models seems to be an open issue.
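Property 4 with T(u) = u^{1/θ} can be written as a higher-order function that turns any baseline quantile function into its proportional reversed hazards version. The following minimal Python sketch, with illustrative values λ = 1.5 and θ = 2.5 and the hypothetical helper name `proportional_reversed_hazards`, checks that the generalized exponential distribution function maps Q_1(u) back to u.

```python
import math


def proportional_reversed_hazards(Q, theta):
    """Return Q1(u) = Q(u**(1/theta)), the quantile function of G(x) = F(x)**theta."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    return lambda u: Q(u ** (1.0 / theta))


LAM, THETA = 1.5, 2.5  # illustrative values


def exponential_Q(u):
    return -math.log(1.0 - u) / LAM


def generalized_exponential_cdf(x):
    """G(x) = (1 - exp(-LAM x))**THETA."""
    return (1.0 - math.exp(-LAM * x)) ** THETA


Q1 = proportional_reversed_hazards(exponential_Q, THETA)
```

Because the construction only needs a callable Q, the same helper applies unchanged to a Weibull or any other baseline quantile function.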

Remark 1.1.

From the form of G(x) above, it is clear that for positive integral values of θ, it is simply the distribution function of the maximum of a random sample of size θ from the exponential population with distribution function F(x) above. Thus, G(x) may be simply regarded as the distribution function of the maximum from a random sample of real size θ (instead of an integer). This viewpoint was discussed by Stigler [547] under the general idea of ‘fractional order statistics’; see also Rohatgi and Saleh [509].

Remark 1.2.

Just as G(x) can be regarded as the distribution function of the maximum from a random sample of (real) size θ from the population with distribution function F(x), we can consider \({G}^{{\ast}}(x) = 1 - {(1 - F(x))}^{\theta }\) as a generalized form corresponding to the minimum of a random sample of (real) size θ. The model \({G}^{{\ast}}(x)\) is, of course, the familiar proportional hazards model. It is important to mention here that these two models are precisely the ones introduced by Lehmann [382], as early as 1953, as stochastically ordered alternatives for nonparametric tests of equality of distributions.

Remark 1.3.

It is useful to bear in mind that for distributions closed under minima such as exponential and Weibull (i.e., the distributions for which the minima have the same form of the distribution but with different parameters), the distribution function G(x) would provide a natural generalization while, for distributions closed under maxima such as power and inverse Weibull (i.e., the distributions for which the maxima have the same form of the distribution but with different parameters), the distribution function \({G}^{{\ast}}(x)\) would provide a natural generalization.

5. The sum of two quantile functions is again a quantile function. Likewise, two quantile density functions, when added, produce another quantile density function.

6. The product of two positive quantile functions is a quantile function. The positivity condition cannot be relaxed, since the product of quantile functions that take negative values need not be non-decreasing. Since we are dealing primarily with lifetimes, the required condition will be automatically satisfied.

7. If X is a positive random variable with quantile function Q(u), then \(\frac{1} {X}\) has quantile function 1 ∕ Q(1 − u).

Remark 1.4.

Property 7 is illustrated in Example 1.2. Chapter 3 contains some examples wherein quantile functions are generated as sums and products of quantile functions of known distributions. It becomes evident from Properties 3–7 that they can be used to produce new distributions from the existing ones. Thus, in our approach, a few basic forms are sufficient to begin with since new forms can always be evolved from them that match our requirements and specifications. This is in direct contrast to the abundance of probability density functions built up, each to satisfy a particular data form in the distribution function approach. In data analysis, the crucial advantage is that if one quantile function is not an appropriate model, the features that produce lack of fit can be ascertained and rectification can be made to the original model itself. This avoids the question of choice of an altogether new model and the repetition of all inferential procedures for the new one as is done in most conventional analyses.
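Properties 5–7 translate directly into small combinators on quantile functions. The following minimal Python sketch (all helper names are hypothetical) builds sums, products and reciprocals, checks monotonicity on a grid, shows why positivity matters in Property 6, and recovers the power quantile function of Example 1.2 from the Pareto type I form with illustrative σ = 1, α = 2.

```python
import math


def q_sum(Q1, Q2):
    """Property 5: u -> Q1(u) + Q2(u)."""
    return lambda u: Q1(u) + Q2(u)


def q_product(Q1, Q2):
    """Property 6: u -> Q1(u) * Q2(u); a quantile function only if both factors are positive."""
    return lambda u: Q1(u) * Q2(u)


def q_reciprocal(Q):
    """Property 7: quantile function of 1/X for a positive X, u -> 1 / Q(1 - u)."""
    return lambda u: 1.0 / Q(1.0 - u)


def is_non_decreasing(Q, grid):
    values = [Q(u) for u in grid]
    return all(a <= b for a, b in zip(values, values[1:]))


def exponential_Q(u):
    return -math.log(1.0 - u)


def pareto1_Q(u, sigma=1.0, alpha=2.0):
    """Pareto type I: Q(u) = sigma (1 - u)^(-1/alpha) (illustrative sigma, alpha)."""
    return sigma * (1.0 - u) ** (-1.0 / alpha)


grid = [i / 1000 for i in range(1, 1000)]  # interior points of (0, 1)
sum_ok = is_non_decreasing(q_sum(exponential_Q, pareto1_Q), grid)
product_ok = is_non_decreasing(q_product(exponential_Q, pareto1_Q), grid)
# Without positivity the rule fails: Q(u) = u - 1 is negative and (u - 1)^2 decreases.
negative_product_ok = is_non_decreasing(q_product(lambda u: u - 1.0, lambda u: u - 1.0), grid)
power_Q = q_reciprocal(pareto1_Q)  # should equal u**(1/alpha) / sigma, as in Example 1.2
```

A grid check of monotonicity is only a sanity test, not a proof, but it makes the role of the positivity condition concrete.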

8. The concept of residual life is of special interest in reliability theory. It represents the lifetime remaining in a unit after it has attained age t. Thus, if X is the original lifetime with quantile function Q(u), the associated residual life is the random variable \(X_{t} = (X - t\,\vert \,X> t)\). Using the definition of conditional probability, the survival function of \(X_{t}\) is

$$\displaystyle{\bar{F}_{t}(x) = P(X_{t}> x) = \dfrac{\bar{F}(x + t)} {\bar{F}(t)},}$$

where \(\bar{F}(x) = P(X> x) = 1 - F(x)\) is the survival function. Thus, we have

$$\displaystyle{ F_{t}(x) = \frac{F(x + t) - F(t)} {1 - F(t)}. }\tag{1.3}$$

Let \(F(t) = u_{0}\), \(F(x + t) = v\) and \(F_{t}(x) = u\). Then, with

$$\displaystyle{x + t = Q(v),\quad x = Q_{1}(u),\text{ say,}}$$

we have

$$\displaystyle{Q_{1}(u) = Q(v) - Q(u_{0})}$$

and consequently, from (1.3),

$$\displaystyle{u(1 - u_{0}) = v - u_{0}}$$

or

$$\displaystyle{v = u_{0} + (1 - u_{0})u.}$$

Thus, the quantile function of the residual life \(X_{t}\) becomes

$$\displaystyle{ Q_{1}(u) = Q(u_{0} + (1 - u_{0})u) - Q(u_{0}). }\tag{1.4}$$

Equation (1.4) will be made use of later in defining the mean residual quantile function in Chap. 2.
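Equation (1.4) needs only Q and the value u_0 = F(t). The following minimal Python sketch (the helper `residual_life_quantile` and the parameter values are illustrative assumptions) checks two consequences. The exponential residual life has the same quantile function as the original lifetime, which is the memoryless property. A Weibull with shape 2, which has an increasing failure rate, has a residual median at age 1 that is smaller than its unconditional median.

```python
import math


def residual_life_quantile(Q, F, t):
    """Equation (1.4): quantile function of X_t = (X - t | X > t)."""
    u0 = F(t)
    if not u0 < 1.0:
        raise ValueError("need F(t) < 1")
    q0 = Q(u0)  # equals t when F is continuous and strictly increasing
    return lambda u: Q(u0 + (1.0 - u0) * u) - q0


LAM = 0.7  # illustrative exponential rate


def exp_Q(u):
    return -math.log(1.0 - u) / LAM


def exp_F(x):
    return 1.0 - math.exp(-LAM * x)


def weibull2_Q(u):
    """Weibull with shape 2 and unit scale: F(x) = 1 - exp(-x^2) (illustrative)."""
    return math.sqrt(-math.log(1.0 - u))


def weibull2_F(x):
    return 1.0 - math.exp(-x * x)


exp_residual = residual_life_quantile(exp_Q, exp_F, t=3.0)
weib_residual = residual_life_quantile(weibull2_Q, weibull2_F, t=1.0)
```

For the Weibull case, the residual median works out to sqrt(1 + log 2) − 1, about 0.30, compared with sqrt(log 2), about 0.83, at age 0.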

9. In some reliability and quality control situations, truncated forms of lifetime models arise naturally, and the truncation may be on the right, on the left or on both sides. Suppose F(x) is the underlying distribution function and Q(u) is the corresponding quantile function. Then, if the distribution is truncated on the right at x = U (i.e., the observations beyond U cannot be observed), the corresponding distribution function is

$$\displaystyle{F_{RT}(x) = \frac{F(x)} {F(U)},\quad 0 \leq x \leq U,}$$

and its quantile function is

$$\displaystyle{Q_{RT}(u) = Q(u{Q}^{-1}(U)).}$$

Similarly, if the distribution is truncated on the left at x = L (i.e., the observations below L cannot be observed), then the corresponding distribution function is

$$\displaystyle{F_{LT}(x) = \frac{F(x) - F(L)} {1 - F(L)},\quad x \geq L,}$$

and its quantile function is

$$\displaystyle{Q_{LT}(u) = Q(u + (1 - u){Q}^{-1}(L)).}$$

Finally, if the distribution is truncated on the left at x = L and also on the right at x = U, then the corresponding distribution function is

$$\displaystyle{F_{DT}(x) = \frac{F(x) - F(L)} {F(U) - F(L)},\quad L \leq x \leq U,}$$

and its quantile function is

$$\displaystyle{Q_{DT}(u) = Q(u{Q}^{-1}(U) + (1 - u){Q}^{-1}(L)).}$$

In each case, \({Q}^{-1}(\cdot ) = F(\cdot )\), and the quantile function follows by solving the truncated distribution function equal to u for x.

Example 1.4.

Suppose the underlying distribution is logistic with distribution function \(F(x) = 1/(1 + {e}^{-x})\) on the whole real line \(\mathbb{R}\). It is easily seen that the corresponding quantile function is \(Q(u) =\log \left ( \frac{u} {1-u}\right )\). Further, suppose we consider the distribution truncated on the left at 0, i.e., L = 0, for proposing a lifetime model. Then, from the expression above and the fact that \({Q}^{-1}(0) = \frac{1} {2}\), we arrive at the quantile function

$$\displaystyle{Q_{LT}(u) = Q\left (\frac{1 + u} {2} \right ) =\log \left (\frac{1 + u} {1 - u}\right ),\quad 0 \leq u <1,}$$

corresponding to the half-logistic distribution of Balakrishnan [47, 48]; see Table 1.1.
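The three truncation formulas of Property 9 differ only in the argument passed to Q. The following minimal Python sketch (the helper names are hypothetical) implements them with F playing the role of Q^{-1}, and checks Example 1.4: left-truncating the logistic at 0 reproduces the half-logistic quantile function.

```python
import math


def right_truncated_Q(Q, F, U):
    FU = F(U)
    return lambda u: Q(u * FU)


def left_truncated_Q(Q, F, L):
    FL = F(L)
    return lambda u: Q(u + (1.0 - u) * FL)


def doubly_truncated_Q(Q, F, L, U):
    FL, FU = F(L), F(U)
    return lambda u: Q(u * FU + (1.0 - u) * FL)


def logistic_Q(u):
    return math.log(u / (1.0 - u))


def logistic_F(x):
    return 1.0 / (1.0 + math.exp(-x))


half_logistic = left_truncated_Q(logistic_Q, logistic_F, 0.0)
doubly = doubly_truncated_Q(logistic_Q, logistic_F, 0.0, 2.0)  # illustrative L = 0, U = 2
right = right_truncated_Q(logistic_Q, logistic_F, 1.0)  # illustrative U = 1
```

A quick sanity check on any truncated quantile function is that it maps the ends of the unit interval to the truncation points, as the doubly truncated version does with L = 0 and U = 2.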

1.3 Quantile Functions of Life Distributions

As mentioned earlier, we concentrate here on distributions of non-negative random variables representing the lifetime of a component or unit. The distribution function of such random variables is such that F(0−) = 0. Often, it is more convenient to work with

$$\displaystyle{\bar{F}(x) = 1 - F(x) = P(X> x),}$$

which is the probability that the unit survives beyond time x (referred to as the age of the unit). It is also called the reliability or survival function since it expresses the probability that the unit is still reliable at age x.

In the previous section, some examples of quantile functions and a few methods of obtaining them were explained. We now present in Table 1.1 quantile functions of many distributions considered in the literature as lifetime models. The properties of these distributions are discussed in the references cited below each of them. Models like gamma, lognormal and inverse Gaussian do not find a place in the list as their quantile functions are not in a tractable form. However, in the next chapter, we will see quantile forms that provide good approximations to them.

1.4 Descriptive Quantile Measures

The advent of the Pearson family of distributions was a major turning point in data modelling using distribution functions. The fact that members of the family can be characterized by the first four moments gave an impetus to the extensive use of moments in describing the properties of distributions and in fitting them to observed data. A familiar pattern of summary measures took the form of the mean for location, the variance for dispersion, and Pearson's coefficients \(\beta _{1} = \frac{\mu _{3}^{2}} {\mu _{2}^{3}}\) for skewness and \(\beta _{2} = \frac{\mu _{4}} {\mu _{2}^{2}}\) for kurtosis. While the mean and variance claimed universal acceptance, several limitations of \(\beta _{1}\) and \(\beta _{2}\) were subsequently exposed. Some of the concerns with regard to \(\beta _{1}\) are: (1) it can become arbitrarily large or even infinite, which makes comparison and interpretation difficult, as relatively small changes in parameters produce abrupt changes; (2) it does not reflect the sign of the difference (mean − median), which is a traditional basis for defining skewness; (3) there exist asymmetric distributions with \(\beta _{1} = 0\); and (4) the sample estimate of \(\beta _{1}\) is unstable, so that it may match the population value poorly. Similarly, for a standardized variable X, the relationship

$$\displaystyle{\beta _{2} = 1 + \mathrm{Var}({X}^{2})}\tag{1.5}$$

would mean that the interpretation of kurtosis depends on the concentration of the probabilities near μ ± σ as well as in the tails of the distribution.

The specification of a distribution through its quantile function takes away the need to describe a distribution through its moments. Alternative measures in terms of quantiles that reduce the shortcomings of the moment-based ones can be thought of. A measure of location is the median defined by

$$\displaystyle{M = Q(0.5).}\tag{1.6}$$

Dispersion is measured by the interquartile range

$$\displaystyle{\mathrm{IQR} = Q_{3} - Q_{1},}\tag{1.7}$$

where \(Q_{3} = Q(0.75)\) and \(Q_{1} = Q(0.25)\).

Skewness is measured by Galton's coefficient

$$\displaystyle{S = \frac{Q_{1} + Q_{3} - 2M} {Q_{3} - Q_{1}}.}\tag{1.8}$$

Note that in the case of extreme positive skewness, \(Q_{1} \rightarrow M\), while in the case of extreme negative skewness, \(Q_{3} \rightarrow M\), so that S lies between − 1 and + 1. When the distribution is symmetric, \(M = \frac{Q_{1}+Q_{3}} {2}\) and hence S = 0. Due to the relation in (1.5), kurtosis can be large when the probability mass is concentrated near the mean or in the tails. For this reason, Moors [421] proposed

$$\displaystyle{T = \frac{Q(0.875) - Q(0.625) + Q(0.375) - Q(0.125)} {\mathrm{IQR}}}\tag{1.9}$$

as a measure of kurtosis. As an index, T is justified on the grounds that the differences Q(0.875) − Q(0.625) and Q(0.375) − Q(0.125) become large (small) if relatively small (large) probability mass is concentrated around \(Q_{3}\) and \(Q_{1}\), corresponding to large (small) dispersion in the vicinity of μ ± σ.

Given the form of Q(u), the calculation of all these coefficients is very simple, as we need only substitute the appropriate fractions for u. On the other hand, calculating moments from the distribution function involves integration, which occasionally may not even yield closed-form expressions.

Example 1.5.

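Let X follow the Weibull distribution with (see Table 1.1)

$$\displaystyle{Q(u) =\sigma {(-\log (1 - u))}^{\frac{1} {\lambda } },\quad \sigma,\lambda> 0.}$$

Then, we have

$$\displaystyle{M =\sigma {(\log 2)}^{\frac{1} {\lambda } },\quad \mathrm{IQR} =\sigma \left [{(\log 4)}^{\frac{1} {\lambda } } -{\left (\log \frac{4} {3}\right )}^{\frac{1} {\lambda } }\right ],}$$

$$\displaystyle{S = \frac{{(\log \frac{4} {3})}^{\frac{1} {\lambda } } + {(\log 4)}^{\frac{1} {\lambda } } - 2{(\log 2)}^{\frac{1} {\lambda } }} {{(\log 4)}^{\frac{1} {\lambda } } -{(\log \frac{4} {3})}^{\frac{1} {\lambda } }}}$$

and

$$\displaystyle{T = \frac{{(\log 8)}^{\frac{1} {\lambda } } -{(\log \frac{8} {3})}^{\frac{1} {\lambda } } + {(\log \frac{8} {5})}^{\frac{1} {\lambda } } -{(\log \frac{8} {7})}^{\frac{1} {\lambda } }} {{(\log 4)}^{\frac{1} {\lambda } } -{(\log \frac{4} {3})}^{\frac{1} {\lambda } }}.}$$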

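The effect of a change of origin and scale on Q(u) and on the above four measures is of interest in later studies. Let X and Y be two random variables such that Y = aX + b, with a > 0. Then,

$$\displaystyle{F_{Y}(y) = P(Y \leq y) = P\left (X \leq \frac{y - b} {a} \right ) = F_{X}\left (\frac{y - b} {a} \right ).}$$

If \(Q_{X}(u)\) and \(Q_{Y}(u)\) denote the quantile functions of X and Y, respectively,

$$\displaystyle{F_{X}\left (\frac{Q_{Y}(u) - b} {a} \right ) = u}$$

or

$$\displaystyle{\frac{Q_{Y}(u) - b} {a} = Q_{X}(u).}$$

So, we simply have

$$\displaystyle{Q_{Y}(u) = aQ_{X}(u) + b\quad \text{and hence}\quad M_{Y} = aM_{X} + b.}$$

Similar calculations using (1.7), (1.8) and (1.9) yield

$$\displaystyle{\mathrm{IQR}_{Y} = a\,\mathrm{IQR}_{X},\quad S_{Y} = S_{X},\quad T_{Y} = T_{X}.}$$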
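Since each descriptive measure is a few evaluations of Q(u), they can be computed generically. The following minimal Python sketch assumes the Weibull form Q(u) = σ(−log(1 − u))^{1/λ} and uses illustrative parameters. It evaluates M, IQR, S and T, and checks the origin-and-scale rules for Y = 3X − 1. For λ = 1 (the unit exponential), S = log(4/3)/log 3 and T = log(21/5)/log 3.

```python
import math


def quantile_measures(Q):
    """Median, interquartile range, Galton's skewness S and Moors' kurtosis T from Q(u)."""
    M = Q(0.5)
    q1, q3 = Q(0.25), Q(0.75)
    iqr = q3 - q1
    S = (q1 + q3 - 2.0 * M) / iqr
    T = (Q(0.875) - Q(0.625) + Q(0.375) - Q(0.125)) / iqr
    return {"M": M, "IQR": iqr, "S": S, "T": T}


def weibull_Q(sigma, lam):
    """Assumed parameterization Q(u) = sigma * (-log(1 - u))**(1/lam)."""
    return lambda u: sigma * (-math.log(1.0 - u)) ** (1.0 / lam)


def affine(Q, a, b):
    """Quantile function of Y = aX + b with a > 0."""
    return lambda u: a * Q(u) + b


base = quantile_measures(weibull_Q(1.0, 1.0))  # lam = 1 is the unit exponential
shifted = quantile_measures(affine(weibull_Q(1.0, 1.0), 3.0, -1.0))
```

The shifted measures follow the stated rules: the median and IQR change with location and scale, while S and T are unchanged.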

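Other quantile-based measures have also been suggested for quantifying spread, skewness and kurtosis. One measure of spread, similar to the mean deviation in the distribution function approach, is the median of absolute deviations from the median, viz.,

$$\displaystyle{\delta =\mathrm{Median}(\vert X - M\vert ).}\tag{1.10}$$

For further details and justifications for (1.10), we refer to Falk [194]. A second popular measure that has received wide attention in economics is Gini's mean difference, defined as

$$\displaystyle{\Delta = E\vert X_{1} - X_{2}\vert =\int _{-\infty }^{\infty }\int _{-\infty }^{\infty }\vert x - y\vert f(x)f(y)\,dx\,dy = 2\int _{-\infty }^{\infty }F(x)(1 - F(x))\,dx,}\tag{1.11}$$

where \(X_{1}\) and \(X_{2}\) are independent copies of X and f(x) is the probability density function of X. Setting F(x) = u in (1.11), we have

$$\displaystyle{\Delta = 2\int _{0}^{1}u(1 - u)q(u)\,du}\tag{1.12}$$

$$\displaystyle{\Delta = 2\int _{0}^{1}(2u - 1)Q(u)\,du.}\tag{1.13}$$

The expression in (1.13) follows from (1.12) by integration by parts. One may use (1.12) or (1.13) depending on whether q(u) or Q(u) is specified. Gini's mean difference will be further discussed in the context of reliability in Chap. 4.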

Example 1.6.

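The generalized Pareto distribution with (see Table 1.1)

$$\displaystyle{Q(u) = \frac{b} {a}\left [{(1 - u)}^{-\frac{a} {a+1} } - 1\right ],\quad a> -1,\ a\neq 0,\ b> 0,}$$

has its quantile density function as

$$\displaystyle{q(u) = \frac{b} {a + 1}{(1 - u)}^{-\frac{2a+1} {a+1} }.}$$

Then, from (1.12), we obtain

$$\displaystyle{\Delta = \frac{2b} {a + 1}\int _{0}^{1}u{(1 - u)}^{-\frac{a} {a+1} }du = \frac{2b} {a + 1}B\left (2, \frac{1} {a + 1}\right ),}$$

where \(B(m,n) =\int _{ 0}^{1}{t}^{m-1}{(1 - t)}^{n-1}dt\) is the complete beta function. Thus, we obtain the simplified expression

$$\displaystyle{\Delta = \frac{2b(a + 1)} {a + 2}.}$$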
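Formula (1.12) also lends itself to direct numerical evaluation when only q(u) is available. The following minimal Python sketch uses a midpoint rule, which is an illustrative choice rather than a method from the text. It assumes the generalized Pareto quantile density q(u) = b(a + 1)^{−1}(1 − u)^{−(2a+1)/(a+1)} and compares the result with the closed form 2b(a + 1)/(a + 2). The case a = 0 is included because q(u) reduces to the exponential quantile density with mean b, for which Δ = b.

```python
def gini_mean_difference(q, n=200_000):
    """Equation (1.12): Delta = 2 * integral over (0, 1) of u (1 - u) q(u), midpoint rule."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        total += u * (1.0 - u) * q(u)
    return 2.0 * total * h


def gpd_quantile_density(a, b):
    """q(u) = b / (a + 1) * (1 - u)^(-(2a + 1)/(a + 1)) for the generalized Pareto form."""
    def q(u):
        return b / (a + 1.0) * (1.0 - u) ** (-(2.0 * a + 1.0) / (a + 1.0))
    return q


def gpd_gini_closed_form(a, b):
    return 2.0 * b * (a + 1.0) / (a + 2.0)
```

The midpoint rule never evaluates q at u = 1, so the integrable singularity of q for a > 0 causes no division error, only a small loss of accuracy.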

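Hinkley [271] proposed a generalization of Galton's measure of skewness of the form

$$\displaystyle{S_{1}(u) = \frac{Q(u) + Q(1 - u) - 2M} {Q(u) - Q(1 - u)},\quad \frac{1} {2} <u <1.}\tag{1.14}$$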

Obviously, (1.14) reduces to Galton’s measure when u = 0. 75. Since (1.14) is a function of u and u is arbitrary, an overall measure of skewness can be provided as

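Groeneveld and Meeden [227] suggested that the numerator and denominator in (1.14) be integrated with respect to u to arrive at the measure

$$\displaystyle{S_{3} = \frac{\int _{\frac{1} {2} }^{1}[Q(u) + Q(1 - u) - 2M]\,du} {\int _{\frac{1} {2} }^{1}[Q(u) - Q(1 - u)]\,du}.}$$

Now, in terms of expectations, we have

$$\displaystyle{\int _{\frac{1} {2} }^{1}[Q(u) + Q(1 - u) - 2M]\,du =\int _{0}^{1}Q(u)\,du - M =\mu -M}$$

and

$$\displaystyle{\int _{\frac{1} {2} }^{1}[Q(u) - Q(1 - u)]\,du =\int _{\frac{1} {2} }^{1}Q(u)\,du -\int _{0}^{\frac{1} {2} }Q(u)\,du = E\vert X - M\vert,}$$

and thus

$$\displaystyle{S_{3} = \frac{\mu -M} {E\vert X - M\vert }.}\tag{1.15}$$

The numerator of (1.15) is the traditional term (being the difference between the mean and the median) indicating skewness, and the denominator is a measure of spread used for standardizing \(S_{3}\). Hence, (1.15) can be thought of as an index of skewness in the usual sense. If we replace the denominator by the standard deviation σ of X, the classical measure of skewness will result.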

Example 1.7.

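Consider the half-logistic distribution with (see Table 1.1)

$$\displaystyle{Q(u) =\sigma \log \left (\frac{1 + u} {1 - u}\right ),\quad \sigma> 0.}$$

Then

$$\displaystyle{\mu = 2\sigma \log 2,\quad M =\sigma \log 3,\quad E\vert X - M\vert =\sigma \log \left (\frac{64} {27}\right ),}$$

and hence \(S_{3} =\log (\frac{4} {3})/\log (\frac{64} {27})\), which equals 1/3 since \(\frac{64} {27} ={ \left (\frac{4} {3}\right )}^{3}\).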
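Because μ, M and E|X − M| are all integrals or values of Q(u), the Groeneveld–Meeden index can be evaluated numerically for any quantile function. The following minimal Python sketch uses a midpoint rule (an illustrative choice) with σ = 1, which does not affect S_3, and reproduces S_3 = 1/3 for the half-logistic case. It also checks that Hinkley's S_1(u) vanishes for the symmetric logistic distribution.

```python
import math


def midpoint_integral(g, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of g over (a, b)."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


def groeneveld_meeden_s3(Q, n=100_000):
    """S_3 = (mean - M) / E|X - M|, with every ingredient written in terms of Q(u)."""
    mean = midpoint_integral(Q, 0.0, 1.0, n)
    M = Q(0.5)
    # E|X - M| = integral of Q over (1/2, 1) minus integral of Q over (0, 1/2)
    mad = midpoint_integral(Q, 0.5, 1.0, n) - midpoint_integral(Q, 0.0, 0.5, n)
    return (mean - M) / mad


def hinkley_s1(Q, u):
    """Hinkley's generalization of Galton's coefficient at a given 1/2 < u < 1."""
    M = Q(0.5)
    return (Q(u) + Q(1.0 - u) - 2.0 * M) / (Q(u) - Q(1.0 - u))


def half_logistic_Q(u, sigma=1.0):
    return sigma * math.log((1.0 + u) / (1.0 - u))


def logistic_Q(u):
    return math.log(u / (1.0 - u))
```

Setting u = 0.75 in `hinkley_s1` gives Galton's coefficient.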

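Instead of using quantiles, one can also use percentiles to define skewness. Galton [206] in fact used the middle 50 % of observations, while Kelly's measure is based on the 10th and 90th percentiles, that is, on the middle 80 % of observations:

$$\displaystyle{S_{K} = \frac{Q(0.9) + Q(0.1) - 2M} {Q(0.9) - Q(0.1)}.}$$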

For further discussion of alternative measures of skewness and kurtosis, a review of the literature and comparative studies, we refer to Balanda and MacGillivray [63], Tajuddin [559], Joannes and Gill [299], Suleswki [552] and Kotz and Seier [355].

1.5 Order Statistics

In life testing experiments, a number of units, say n, are placed on test and the quantity of interest is their failure times, which are assumed to follow a distribution F(x). The failure times \(X_{1},X_{2},\ldots,X_{n}\) of the n units constitute a random sample of size n from the population with distribution function F(x) if \(X_{1},X_{2},\ldots,X_{n}\) are independent and identically distributed as F(x). Suppose the realization of \(X_{i}\) in an experiment is denoted by \(x_{i}\). Then, the order statistics of the random sample \((X_{1},X_{2},\ldots,X_{n})\) are the sample values placed in ascending order of magnitude, denoted by \(X_{1:n} \leq X_{2:n} \leq \cdots \leq X_{n:n}\), so that \(X_{1:n} =\min _{1\leq i\leq n}X_{i}\) and \(X_{n:n} =\max _{1\leq i\leq n}X_{i}\). The sample median, denoted by m, is the value for which approximately 50 % of the observations are less than m and 50 % are more than m. Thus

$$\displaystyle{m = \left \{\begin{array}{ll} X_{\frac{n+1} {2} :n} & \text{if }n\text{ is odd,} \\ \frac{1} {2}\left (X_{\frac{n} {2} :n} + X_{\frac{n} {2} +1:n}\right )&\text{if }n\text{ is even.} \end{array} \right .}$$

Generalizing, we have the percentiles. The 100p-th percentile, denoted by \(x_{p}\), in the sample corresponds to the value for which approximately np observations are smaller than this value and n(1 − p) observations are larger. In terms of order statistics we have

where the symbol [t] is defined as [t] = r whenever r − 0.5 ≤ t < r + 0.5, for all positive integers r. We note that in the above definition, if \(x_{p}\) is the ith smallest observation, then the ith largest observation is \(x_{1-p}\). Obviously, the median m is the 50th percentile, and the lower quartile \(q_{1}\) and the upper quartile \(q_{3}\) of the sample are, respectively, the 25th and 75th percentiles. The sample interquartile range is

$$\displaystyle{\mathrm{iqr} = q_{3} - q_{1}.}\tag{1.18}$$

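All the sample descriptive measures are defined in terms of the sample median, quartiles and percentiles analogous to the population measures introduced in Sect. 1.4. Thus, iqr in (1.18) describes the spread, while

$$\displaystyle{s = \frac{q_{1} + q_{3} - 2m} {q_{3} - q_{1}}}$$

and

$$\displaystyle{t = \frac{e_{7} - e_{5} + e_{3} - e_{1}} {\mathrm{iqr}},}$$

where \(e_{i} = x_{\frac{i} {8}}\), i = 1, 3, 5, 7, describe the skewness and kurtosis, respectively.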

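Parzen [484] introduced the empirical quantile function

$$\displaystyle{\bar{Q}(u) = F_{n}^{-1}(u) =\inf \{ x: F_{n}(x) \geq u\},}$$

where \(F_{n}(x)\) is the proportion of \(X_{1},X_{2},\ldots,X_{n}\) that are at most x. In other words,

$$\displaystyle{\bar{Q}(u) = X_{r:n},\quad \frac{r - 1} {n} <u \leq \frac{r} {n},\ r = 1,2,\ldots,n,}$$

which is a step function whose steps have width \(\frac{1} {n}\). For u = 0, \(\bar{Q}(u)\) is taken as \(X_{1:n}\) or a natural minimum if one is available. In the case of lifetime variables, the natural minimum is 0, so that \(\bar{Q}(0) = 0\). When a smooth function is required for \(\bar{Q}(u)\), Parzen [484] suggested the use of

$$\displaystyle{\tilde{Q}(u) = n\left (\frac{r} {n} - u\right )X_{r-1:n} + n\left (u -\frac{r - 1} {n} \right )X_{r:n}}$$

for \(\frac{r-1} {n} \leq u \leq \frac{r} {n}\), r = 1, 2, …, n, where \(X_{0:n}\) denotes the natural minimum. The corresponding empirical quantile density function is

$$\displaystyle{\tilde{q}(u) = n(X_{r:n} - X_{r-1:n}),\quad \frac{r - 1} {n} <u <\frac{r} {n}.}$$

In this set-up, we have \(q_{i} =\bar{ Q}( \frac{i} {4})\), i = 1, 3 and \(e_{i} =\bar{ Q}( \frac{i} {8})\), i = 1, 3, 5, 7.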
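Both empirical versions are easy to compute from a sorted sample. The following minimal Python sketch is an illustration, not Parzen's code. It implements the step function, the piecewise-linear smoothing with X_{0:n} equal to a user-supplied natural minimum (default 0, for lifetimes), and the corresponding quantile density. The sample values and helper names are hypothetical, and the 1e-12 guard is an assumption added to protect against floating-point rounding at the jump points r/n.

```python
import math


def empirical_quantile(data, u, natural_min=None):
    """Step-function empirical quantile: X_{r:n} for (r - 1)/n < u <= r/n."""
    xs = sorted(data)
    n = len(xs)
    if u <= 0.0:
        return xs[0] if natural_min is None else natural_min
    r = math.ceil(n * u - 1e-12)  # small guard against rounding at the jump points r/n
    return xs[min(max(r, 1), n) - 1]


def _cell(n, u):
    """Index r with (r - 1)/n <= u <= r/n, clipped to 1..n."""
    return min(max(math.ceil(n * u - 1e-12), 1), n)


def smoothed_quantile(data, u, natural_min=0.0):
    """Linear interpolation of X_{r-1:n} and X_{r:n} on [(r - 1)/n, r/n], X_{0:n} = natural_min."""
    xs = [natural_min] + sorted(data)
    n = len(data)
    r = _cell(n, u)
    w = n * u - (r - 1)  # position within the r-th cell, between 0 and 1
    return (1.0 - w) * xs[r - 1] + w * xs[r]


def smoothed_quantile_density(data, u, natural_min=0.0):
    """Slope of the interpolated quantile function: n (X_{r:n} - X_{r-1:n})."""
    xs = [natural_min] + sorted(data)
    n = len(data)
    r = _cell(n, u)
    return n * (xs[r] - xs[r - 1])


lifetimes = [4.0, 1.0, 3.0, 5.0, 2.0]  # hypothetical sample
q1_hat = empirical_quantile(lifetimes, 0.25)  # sample lower quartile in this set-up
```

The weight w = nu − (r − 1) is an algebraic rewrite of the two coefficients n(r/n − u) and n(u − (r − 1)/n), which avoids some floating-point cancellation.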

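It is well known that the distribution of the rth order statistic \(X_{r:n}\) is given by (Arnold et al. [37])

$$\displaystyle{F_{r:n}(x) = P(X_{r:n} \leq x) =\sum _{k=r}^{n}\binom{n}{k}{F}^{k}(x){(1 - F(x))}^{n-k}.}\tag{1.22}$$

In particular, \(X_{n:n}\) and \(X_{1:n}\) have their distributions as

$$\displaystyle{F_{n:n}(x) = {F}^{n}(x)}$$

and

$$\displaystyle{F_{1:n}(x) = 1 -{(1 - F(x))}^{n}.}$$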

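Recalling the definitions of the beta function

$$\displaystyle{B(m,n) =\int _{0}^{1}{t}^{m-1}{(1 - t)}^{n-1}dt,\quad m,n> 0,}$$

and the incomplete beta function ratio

$$\displaystyle{I_{x}(m,n) = \frac{B_{x}(m,n)} {B(m,n)},}$$

where

$$\displaystyle{B_{x}(m,n) =\int _{0}^{x}{t}^{m-1}{(1 - t)}^{n-1}dt,\quad 0 \leq x \leq 1,}$$

we have the upper tail of the binomial distribution and the incomplete beta function ratio to be related as (Abramowitz and Stegun [15])

$$\displaystyle{\sum _{k=r}^{n}\binom{n}{k}{p}^{k}{(1 - p)}^{n-k} = I_{p}(r,n - r + 1).}\tag{1.25}$$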

Comparing (1.22) and (1.25), we see that, if a sample of n observations from a distribution with quantile function Q(u) is ordered, then the quantile function of the rth order statistic is given by

$$\displaystyle{Q_{r}(u_{r}) = Q\left (I_{u_{r}}^{-1}(r,n - r + 1)\right ),}$$

where

$$\displaystyle{u_{r} = I_{u}(r,n - r + 1)}$$

and \({I}^{-1}\) is the inverse of the incomplete beta function ratio I. Thus, the quantile function of the rth order statistic has an explicit distributional form, unlike the expression for the distribution function in (1.22). However, the expression for \(Q_{r}(u_{r})\) is not explicit in terms of Q(u). This is not a serious handicap, as the \(I_{u}(\cdot,\cdot )\) function is tabulated for various values of n and r (Pearson [489]) and is also available in all statistical software for easy computation. The distributions of \(X_{n:n}\) and \(X_{1:n}\) have simple quantile forms

$$\displaystyle{Q_{n}(u) = Q({u}^{\frac{1} {n} })}$$

and

$$\displaystyle{Q_{1}(u) = Q(1 - {(1 - u)}^{\frac{1} {n} }).}$$
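The quantile of an order statistic can be computed without a special-function library by inverting the binomial upper tail in (1.25) numerically. The following minimal Python sketch uses bisection, an illustrative choice since statistical software would call an inverse incomplete beta routine directly. It checks the result against the simple forms for the maximum and the minimum of an exponential sample.

```python
import math


def binomial_upper_tail(p, r, n):
    """P(at least r successes in n Bernoulli(p) trials), equal to I_p(r, n - r + 1) by (1.25)."""
    return sum(math.comb(n, k) * p**k * (1.0 - p) ** (n - k) for k in range(r, n + 1))


def order_statistic_quantile(Q, r, n, u, iters=100):
    """Quantile of X_{r:n} at u: Q(p), where p solves I_p(r, n - r + 1) = u."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):  # bisection; the binomial upper tail increases in p
        mid = 0.5 * (lo + hi)
        if binomial_upper_tail(mid, r, n) < u:
            lo = mid
        else:
            hi = mid
    return Q(0.5 * (lo + hi))


def exponential_Q(u):
    return -math.log(1.0 - u)
```

With r = n and r = 1, the result should match Q(u^{1/n}) and Q(1 − (1 − u)^{1/n}), respectively. For the sample median of an odd-sized sample, u = 1/2 returns Q(1/2), by symmetry of I_p(r, r).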

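The probability density function of \(X_{r:n}\) becomes

$$\displaystyle{f_{r:n}(x) = \frac{1} {B(r,n - r + 1)}{F}^{r-1}(x){(1 - F(x))}^{n-r}f(x),}$$

and so

$$\displaystyle{\mu _{r:n} = E(X_{r:n}) = \frac{1} {B(r,n - r + 1)}\int _{0}^{1}Q(u){u}^{r-1}{(1 - u)}^{n-r}du.}\tag{1.28}$$

This mean value is referred to as the rth mean rankit of X. For reasons explained earlier with reference to the use of moments, the median rankit

$$\displaystyle{Q_{r}(0.5) = Q\left (I_{0.5}^{-1}(r,n - r + 1)\right ),}$$

which is robust, is often preferred over the mean rankit.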
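The mean rankit in (1.28) is a single integral of Q(u) against a beta density, so a simple quadrature suffices. The following minimal Python sketch uses a midpoint rule (an illustrative choice). It checks the known exponential result E(X_{r:n}) = 1/n + 1/(n − 1) + ⋯ + 1/(n − r + 1) for n = 5, and the identity that the mean rankits sum to n E(X).

```python
import math


def mean_rankit(Q, r, n, m=100_000):
    """E(X_{r:n}) = integral over (0, 1) of Q(u) u^(r-1) (1-u)^(n-r) du / B(r, n-r+1)."""
    inv_beta = n * math.comb(n - 1, r - 1)  # 1 / B(r, n-r+1) = n! / ((r-1)! (n-r)!)
    h = 1.0 / m
    total = 0.0
    for i in range(m):
        u = (i + 0.5) * h
        total += Q(u) * u ** (r - 1) * (1.0 - u) ** (n - r)
    return inv_beta * total * h


def exponential_Q(u):
    return -math.log(1.0 - u)


# For the unit exponential, E(X_{r:n}) = 1/n + 1/(n-1) + ... + 1/(n-r+1).
exact = [sum(1.0 / (5 - j) for j in range(r)) for r in range(1, 6)]
approx = [mean_rankit(exponential_Q, r, 5) for r in range(1, 6)]
```

The median rankit, by contrast, requires inverting the incomplete beta function ratio at 1/2 rather than integrating.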

The importance and role of order statistics in the study of quantile functions become clear from the discussions in this section. Added to this, there are several topics in reliability analysis in which order statistics appear quite naturally. One of them is system reliability. We consider a system consisting of n components whose lifetimes \(X_{1},X_{2},\ldots,X_{n}\) are independent and identically distributed. The system is said to have a series structure if it functions only when all the components are functioning, and the lifetime of this system is the smallest among the \(X_{i}\)'s, namely \(X_{1:n}\). In the parallel structure, on the other hand, the system functions if and only if at least one of the components works, so that the system life is \(X_{n:n}\). These two structures are embedded in what is called a k-out-of-n system, which functions if and only if at least k of the components function. The lifetime of such a system is obviously \(X_{n-k+1:n}\).

In life testing experiments, when n identical units are put on test to ascertain their lengths of life, there are sampling schemes wherein the experimenter need not wait until all units fail. The experimenter may choose to observe only a prefixed number of failures, say n − r, and terminate the experiment as soon as the (n − r)th unit fails. Thus, the lifetimes of the r units that are still working get censored. This sampling scheme is known as type II censoring. The data consist of realizations of \(X_{1:n},X_{2:n},\ldots,X_{n-r:n}\). Another sampling scheme is to prefix a time \({T}^{{\ast}}\) and observe only those failures that occur up to time \({T}^{{\ast}}\). This scheme is known as type I censoring, and in this case the number of failures to be observed is random. One may refer to Balakrishnan and Cohen [51] and Cohen [154] for various methods of inference for type I and type II censored samples from a wide array of lifetime distributions. Yet another sampling scheme is to prefix the number of failures at n − r and also a time \({T}^{{\ast}}\). If (n − r) failures occur before time \({T}^{{\ast}}\), then the experiment is terminated; otherwise, all failures until time \({T}^{{\ast}}\) are observed. Thus, the time of termination of the experiment is now \(\min ({T}^{{\ast}},X_{n-r:n})\). This is referred to as type I hybrid censoring; see Balakrishnan and Kundu [53] for an overview of various developments on this and many other forms of hybrid censoring schemes. A third important application of order statistics is in the construction of tests regarding the nature of ageing of a device; see Lai and Xie [368]. For an encyclopedic treatment of the theory, methods and applications of order statistics, one may refer to Balakrishnan and Rao [56, 57].
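The system lifetimes and censoring schemes described in the two paragraphs above are all simple functions of the sorted sample. The following minimal Python sketch illustrates them with a hypothetical sample of five lifetimes. The helper names are illustrative, and the sketch assumes r < n, so that at least one failure is observed.

```python
def k_out_of_n_lifetime(component_lifetimes, k):
    """A k-out-of-n system fails at the (n - k + 1)th component failure: X_{n-k+1:n}."""
    xs = sorted(component_lifetimes)
    n = len(xs)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    return xs[n - k]  # 0-based index of X_{n-k+1:n}


def type2_censored(lifetimes, r):
    """Observe X_{1:n}, ..., X_{n-r:n}; the r longest-lived units are censored."""
    return sorted(lifetimes)[: len(lifetimes) - r]


def type1_censored(lifetimes, t_star):
    """Observe only the failures occurring by the fixed time T*."""
    return [x for x in sorted(lifetimes) if x <= t_star]


def type1_hybrid_censored(lifetimes, r, t_star):
    """Stop at min(T*, X_{n-r:n}); return the observed failures and the stopping time."""
    xs = sorted(lifetimes)
    stop = min(t_star, xs[len(xs) - r - 1])  # X_{n-r:n} has 0-based index n - r - 1
    return [x for x in xs if x <= stop], stop


sample = [5.0, 2.0, 9.0, 1.0, 7.0]  # hypothetical component lifetimes
```

A series system corresponds to k = n, and a parallel system corresponds to k = 1.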

1.6 Moments

The emphasis given to quantiles in describing the basic properties of a distribution does not in any way minimize the importance of moments in model specification and inferential problems. In this section, we look at various types of moments through quantile functions. The conventional moments

$$\displaystyle{\mu _{r}^{{\prime}} = E({X}^{r}) =\int _{-\infty }^{\infty }{x}^{r}f(x)\,dx}$$

are readily expressible in terms of quantile functions, by the substitution x = Q(u), as

$$\displaystyle{\mu _{r}^{{\prime}} =\int _{0}^{1}{(Q(u))}^{r}du.}\tag{1.30}$$

In particular, as already seen, the mean is

$$\displaystyle{\mu =\int _{0}^{1}Q(u)\,du.}$$

The central moments and other quantities based on them are obtained through the well-known relationships they have with the raw moments \(\mu _{r}^{{\prime}}\) in (1.30).
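The quantile form of the raw moments replaces an integral over the support with an integral over (0, 1), which is convenient numerically. The following minimal Python sketch uses a midpoint rule (an illustrative choice) with an exponential of rate 2. The exact values are μ'_1 = 1/2 and μ'_2 = 2/λ² = 1/2, so the variance is 1/4.

```python
import math


def raw_moment(Q, r, m=200_000):
    """mu'_r = integral over (0, 1) of Q(u)**r, approximated by the midpoint rule."""
    h = 1.0 / m
    return h * sum(Q((i + 0.5) * h) ** r for i in range(m))


LAM = 2.0  # illustrative exponential rate


def exponential_Q(u):
    return -math.log(1.0 - u) / LAM


mean = raw_moment(exponential_Q, 1)
second = raw_moment(exponential_Q, 2)
variance = second - mean**2  # central moment from the raw moments
```

For heavy-tailed Q(u), the integrand grows quickly near u = 1, and a plain midpoint rule may then converge slowly or not at all. This mirrors the sensitivity of moments to the tails discussed earlier.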

Some of the difficulties experienced while employing the moments in descriptive measures as well as in inferential problems have been mentioned in the previous sections. The L-moments considered next provide a competing alternative to the conventional moments. By definition, they are expected values of linear functions of order statistics. They generally have lower sampling variances and are also robust against outliers. Like the conventional moments, L-moments can be used as summary measures (statistics) of probability distributions (samples), to identify distributions and to fit models to data. The origin of L-moments can be traced back to the work on linear combinations of order statistics in Sillitto [537] and Greenwood et al. [226]. It was Hosking [276] who presented a unified theory of L-moments and made a systematic study of their properties and role in statistical analysis. See also Hosking [277, 279, 280] and Hosking and Wallis [282] for more elaborate details on this topic.

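The rth L-moment is defined as

$$\displaystyle{ L_{r} = \frac{1} {r}\sum _{k=0}^{r-1}{(-1)}^{k}\binom{r - 1}{k}E(X_{r-k:r}),\quad r = 1,2,\ldots. }$$

Using (1.28), we can write

$$\displaystyle{ L_{r} =\int _{ 0}^{1}\sum _{k=0}^{r-1}{(-1)}^{k}{\binom{r - 1}{k}}^{2}{u}^{r-k-1}{(1 - u)}^{k}Q(u)du. }$$

Expanding \({(1 - u)}^{k}\) in powers of u using the binomial theorem and combining powers of u, we get

$$\displaystyle{ L_{r} =\int _{ 0}^{1}\sum _{k=0}^{r-1}{(-1)}^{r-1-k}\binom{r - 1}{k}\binom{r - 1 + k}{k}{u}^{k}Q(u)du. }$$

Jones [306] has given an alternative method of establishing the last relationship. In particular, we obtain:

$$\displaystyle{ L_{1} =\int _{ 0}^{1}Q(u)du, }$$(1.34)

$$\displaystyle{ L_{2} =\int _{ 0}^{1}(2u - 1)Q(u)du, }$$(1.35)

$$\displaystyle{ L_{3} =\int _{ 0}^{1}(6{u}^{2} - 6u + 1)Q(u)du, }$$(1.36)

$$\displaystyle{ L_{4} =\int _{ 0}^{1}(20{u}^{3} - 30{u}^{2} + 12u - 1)Q(u)du. }$$(1.37)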

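Sometimes, it is convenient (to avoid integration by parts while computing the integrals in (1.34)–(1.37)) to work with the equivalent formulas

$$\displaystyle{ L_{1} =\int _{ 0}^{1}(1 - u)q(u)du, }$$(1.38)

$$\displaystyle{ L_{2} =\int _{ 0}^{1}(u - {u}^{2})q(u)du, }$$(1.39)

$$\displaystyle{ L_{3} =\int _{ 0}^{1}(3{u}^{2} - 2{u}^{3} - u)q(u)du, }$$(1.40)

$$\displaystyle{ L_{4} =\int _{ 0}^{1}(u - 6{u}^{2} + 10{u}^{3} - 5{u}^{4})q(u)du, }$$(1.41)

where q(u) = Q′(u) is the quantile density function; the form (1.38) presumes Q(0) = 0, as for a lifetime distribution whose support starts at zero.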

Example 1.8.

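For the exponential distribution with parameter λ, that is, \(F(x) = 1 - {e}^{-\lambda x}\), x > 0, we have

$$\displaystyle{ Q(u) = -{\lambda }^{-1}\log (1 - u)\quad \text{and}\quad q(u) = \frac{1} {\lambda (1 - u)}. }$$

Hence, using (1.38)–(1.41), we obtain

$$\displaystyle{ L_{1} = \frac{1} {\lambda },\quad L_{2} = \frac{1} {2\lambda },\quad L_{3} = \frac{1} {6\lambda },\quad L_{4} = \frac{1} {12\lambda }. }$$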

More examples are presented in Chap. 3 when properties of various distributions are studied.
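The shifted-Legendre form of the L-moments, \(L_{r} =\int _{0}^{1}\sum _{k=0}^{r-1}c_{r,k}{u}^{k}Q(u)du\) with \(c_{r,k} = {(-1)}^{r-1-k}\binom{r-1}{k}\binom{r-1+k}{k}\), lends itself to direct numerical evaluation. The Python sketch below is one way to do it, assuming a plain midpoint rule is accurate enough for a smooth Q; the function names are hypothetical. For the uniform distribution, Q(u) = u, the integrals give \(L_{1} = 1/2\), \(L_{2} = 1/6\) and \(L_{3} = L_{4} = 0\), which serves as a check.

```python
from math import comb


def shifted_legendre_coeffs(r):
    """c_{r,k}, k = 0..r-1, such that L_r = int_0^1 sum_k c_{r,k} u^k Q(u) du."""
    m = r - 1
    return [(-1) ** (m - k) * comb(m, k) * comb(m + k, k) for k in range(r)]


def l_moment(Q, r, n=20_000):
    """Midpoint-rule approximation of the rth L-moment of a quantile function Q."""
    coeffs = shifted_legendre_coeffs(r)
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        weight = 0.0
        for c in reversed(coeffs):     # Horner evaluation of the weight polynomial
            weight = weight * u + c
        total += weight * Q(u)
    return total * h


print(shifted_legendre_coeffs(4))      # [-1, 12, -30, 20]
print([l_moment(lambda u: u, r) for r in (1, 2, 3, 4)])
```

The coefficients for r = 2, 3, 4 reproduce the polynomials 2u − 1, 6u² − 6u + 1 and 20u³ − 30u² + 12u − 1 that multiply Q(u) in the explicit formulas for the first four L-moments.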

The L-moments have the following properties that distinguish them from the usual moments:

1. The L-moments exist whenever E(X) is finite, while additional restrictions may be required for the conventional moments to be finite for many distributions;

2. A distribution whose mean exists is characterized by \((L_{r}: r = 1,2,\ldots)\). This result can be compared with the moment problem discussed in probability theory. However, any set that contains all L-moments except one is not sufficient to characterize a distribution. For details, see Hosking [279, 280];

3. From (1.12), we see that \(L_{2} = \frac{1} {2}\Delta\), and so \(L_{2}\) is a measure of spread. Thus, the first (being the mean) and second L-moments provide measures of location and spread. In a recent comparative study of the relative merits of the variance and the mean difference Δ, Yitzhaki [596] noted that the mean difference is more informative than the variance in deriving properties of distributions that depart from normality. He also compared the algebraic structures of the variance and Δ and examined the relative superiority of the latter from the stochastic dominance, exchangeability and stratification viewpoints. For further comments on these aspects and some others in the reliability context, see Chap. 7;

4. Forming the ratios \(\tau _{r} = \frac{L_{r}} {L_{2}}\), r = 3, 4, …, we have \(\vert \tau _{r}\vert < 1\) for any non-degenerate X with μ < ∞. Hence, the ratios \(\tau _{r}\) are dimensionless and bounded;

5. The skewness and kurtosis of a distribution can be ascertained through the L-moment ratios. The L-coefficient of skewness is

$$\displaystyle{ \tau _{3} = \frac{L_{3}} {L_{2}} }$$(1.42)

and the L-coefficient of kurtosis is

$$\displaystyle{ \tau _{4} = \frac{L_{4}} {L_{2}}. }$$(1.43)

These two measures satisfy the criteria presented for coefficients of skewness and kurtosis in terms of order relations. The range of \(\tau _{3}\) is (−1, 1), while that of \(\tau _{4}\) is \(\frac{1} {4}(5\tau _{3}^{2} - 1) \leq \tau _{4} < 1\). These results are proved in Hosking [279] and Jones [306] using different approaches. It may be observed that both \(\tau _{3}\) and \(\tau _{4}\) are bounded and, unlike \(\beta _{1}\), cannot take arbitrarily large values (for example, \(\beta _{1}\) is infinite for \(F(x) = 1 - {x}^{-3}\), x > 1, whose third moment does not exist);

6. The ratio

$$\displaystyle{ \tau _{2} = \frac{L_{2}} {L_{1}} }$$(1.44)

is called the L-coefficient of variation. Since X is non-negative in our case, \(L_{1} > 0\), \(L_{2} > 0\) and further

$$\displaystyle{L_{2} =\int _{ 0}^{1}u(1 - u)q(u)du <\int _{ 0}^{1}(1 - u)q(u)du = L_{ 1}}$$

so that \(0 < \tau _{2} < 1\).

The above properties of L-moments have made them popular in diverse applications, especially in hydrology, civil engineering and meteorology. Several empirical studies (such as that of Sankarasubramonian and Sreenivasan [517]) comparing L-moments and the usual moments reveal that estimates based on the former are less sensitive to outliers. Just as parameters can be estimated by matching population and sample moments, the same approach (the method of L-moments) can be applied with L-moments. Asymptotic approximations to sampling distributions are also generally more accurate for L-moments. An added advantage is that the standard errors of sample L-moments exist whenever the underlying distribution has a finite variance, whereas the standard error of the rth sample moment requires a finite population moment of order 2r.
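Sample L-moments are usually computed from the unbiased sample PWMs \(b_{r} = {n}^{-1}\sum _{j=1}^{n}\frac{(j-1)(j-2)\cdots (j-r)} {(n-1)(n-2)\cdots (n-r)}x_{j:n}\) used in the L-moment literature (see Hosking [276]), which are then combined linearly (\(l_{1} = b_{0}\), \(l_{2} = 2b_{1} - b_{0}\), and so on). The Python sketch below assumes that estimator; it is applied to the failure times of Example 1.12 only for illustration, and it also checks that \(l_{2}\) equals half the sample mean difference, mirroring \(L_{2} = \frac{1}{2}\Delta\). The function names are hypothetical.

```python
from itertools import combinations


def sample_pwms(data, rmax=3):
    """Unbiased sample PWMs b_0..b_rmax (estimators of beta_{1,r})."""
    x = sorted(data)
    n = len(x)
    b = []
    for r in range(rmax + 1):
        total = 0.0
        for j in range(1, n + 1):              # j is the rank of x[j - 1]
            w = 1.0
            for i in range(1, r + 1):
                w *= (j - i) / (n - i)         # (j-1)...(j-r) / ((n-1)...(n-r))
            total += w * x[j - 1]
        b.append(total / n)
    return b


def sample_l_moments(data):
    """Sample L-moments l_1..l_4 (requires at least 4 observations)."""
    b0, b1, b2, b3 = sample_pwms(data, 3)
    return (b0,
            2 * b1 - b0,
            6 * b2 - 6 * b1 + b0,
            20 * b3 - 30 * b2 + 12 * b1 - b0)


times = [16, 34, 53, 75, 93, 120, 150, 191, 240, 390]   # data of Example 1.12
l1, l2, l3, l4 = sample_l_moments(times)
n_pairs = len(times) * (len(times) - 1) / 2
gini = sum(abs(a - b) for a, b in combinations(times, 2)) / n_pairs
print(l1, l2, l3 / l2, l4 / l2, gini / 2)   # gini / 2 should equal l2
```

Matching \(l_{1}, l_{2}, \ldots\) to the population L-moments of a candidate model is the method of L-moments mentioned above.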

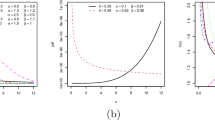

When dealing with the conventional moments, the \((\beta _{1},\beta _{2})\) plot is used as a preliminary tool to discriminate between candidate distributions for the data. For example, if one wishes to choose a model from the Pearson family, \((\beta _{1},\beta _{2})\) provides an exclusive classification of the members of this family. Distributions with no shape parameters are represented by points in the \(\beta _{1}\)-\(\beta _{2}\) plane, those with a single shape parameter have their \((\beta _{1},\beta _{2})\) values lying on the line \(2\beta _{2} - 3\beta _{1} - 6 = 0\), while for distributions with two shape parameters, \((\beta _{1},\beta _{2})\) falls in a region between the lines \(2\beta _{2} - 3\beta _{1} - 6 = 0\) and \(\beta _{2} -\beta _{1} - 1 = 0\). These cases are illustrated, respectively, by the exponential distribution (the single point \((\beta _{1},\beta _{2}) = (4,9)\)), the gamma family and the beta family; see Johnson et al. [302] for details. In a similar manner, one can construct \((\tau _{2},\tau _{3})\)-plots or \((\tau _{3},\tau _{4})\)-plots for distribution functions or quantile functions to give a visual indication of which distribution can be expected to fit a given set of observations. Vogel and Fennessey [574] articulate the need for such diagrams and provide several examples of how to construct them. Some refinements of L-moments have also been studied under the names trimmed L-moments (Elamir and Seheult [187], Hosking [281]) and LQ-moments (Mudholkar and Hutson [424]).
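The Pearson-family statements can be checked exactly with rational arithmetic. The sketch below uses the standard expressions \(\beta _{1} = 4/k\), \(\beta _{2} = 3 + 6/k\) for a gamma distribution with shape k, and the usual squared-skewness and excess-kurtosis formulas for a beta distribution with shapes p and q; the shape values tried are arbitrary, and the function names are hypothetical.

```python
from fractions import Fraction


def gamma_b1_b2(k):
    """(beta_1, beta_2) for a gamma distribution with shape k > 0."""
    k = Fraction(k)
    return 4 / k, 3 + 6 / k


def beta_b1_b2(p, q):
    """(beta_1, beta_2) for a beta distribution with shapes p, q > 0."""
    p, q = Fraction(p), Fraction(q)
    s = p + q
    b1 = 4 * (q - p) ** 2 * (s + 1) / ((s + 2) ** 2 * p * q)
    excess = 6 * ((p - q) ** 2 * (s + 1) - p * q * (s + 2)) / (p * q * (s + 2) * (s + 3))
    return b1, 3 + excess


print(gamma_b1_b2(1))                        # exponential point: beta_1 = 4, beta_2 = 9
for k in (Fraction(1, 2), 1, 3, 10):
    b1, b2 = gamma_b1_b2(k)
    print(k, 2 * b2 - 3 * b1 - 6)            # zero: on the gamma line
for p, q in ((1, 1), (2, 5), (Fraction(1, 2), 3)):
    b1, b2 = beta_b1_b2(p, q)
    print(p, q, b1 + 1 < b2 < (3 * b1 + 6) / 2)   # strictly inside the beta region
```

Using `Fraction` makes the "lies exactly on the line" statement an exact equality rather than a floating-point approximation.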

Example 1.9.

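The L-moments of the exponential distribution were calculated earlier in Example 1.8. Applying the formulas for \(\tau _{2}\), \(\tau _{3}\) and \(\tau _{4}\) in (1.44), (1.42) and (1.43), we have

$$\displaystyle{ \tau _{2} = \frac{1} {2},\quad \tau _{3} = \frac{1} {3}\quad \text{and}\quad \tau _{4} = \frac{1} {6}. }$$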

Thus, \((\tau _{2},\tau _{3}) = (\frac{1} {2}, \frac{1} {3})\) and \((\tau _{3},\tau _{4}) = (\frac{1} {3}, \frac{1} {6})\) are points in the τ 2-τ 3 and τ 3-τ 4 planes, respectively.
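The exponential values of Examples 1.8 and 1.9 can be checked numerically from the density-quantile forms (1.38)–(1.41). In this sketch, \(q(u) = 1/\{\lambda (1 - u)\}\) is the exponential with rate λ (an assumed parametrization), λ = 2 is an arbitrary choice, and a midpoint rule is adequate because each weight contains the factor (1 − u), which cancels the singularity of q(u) at u = 1. The function name is hypothetical.

```python
def l_moments_q_form(q, n=50_000):
    """L_1..L_4 from the density-quantile forms (1.38)-(1.41), midpoint rule."""
    weights = (
        lambda u: 1 - u,                                       # (1.38)
        lambda u: u - u ** 2,                                  # (1.39)
        lambda u: 3 * u ** 2 - 2 * u ** 3 - u,                 # (1.40)
        lambda u: u - 6 * u ** 2 + 10 * u ** 3 - 5 * u ** 4,   # (1.41)
    )
    h = 1.0 / n
    totals = [0.0] * 4
    for i in range(n):
        u = (i + 0.5) * h
        qu = q(u)
        for j, w in enumerate(weights):
            totals[j] += w(u) * qu
    return [h * t for t in totals]


lam = 2.0                                      # hypothetical rate
L1, L2, L3, L4 = l_moments_q_form(lambda u: 1.0 / (lam * (1.0 - u)))
tau2, tau3, tau4 = L2 / L1, L3 / L2, L4 / L2
print(L1, L2, L3, L4)     # close to 1/lam, 1/(2 lam), 1/(6 lam), 1/(12 lam)
print(tau2, tau3, tau4)   # close to 1/2, 1/3, 1/6 whatever the value of lam
```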

Example 1.10.

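The random variable X has the generalized Pareto distribution with

$$\displaystyle{ Q(u) = \frac{b} {a}\left [{(1 - u)}^{-\frac{a} {a+1} } - 1\right ],\quad b > 0,\ a > -1,\ a\neq 0. }$$

Then, straightforward calculations using (1.38)–(1.40) yield

$$\displaystyle{ L_{1} = b,\quad L_{2} = \frac{b(a + 1)} {a + 2},\quad L_{3} = \frac{b(a + 1)(2a + 1)} {(a + 2)(2a + 3)}, }$$

so that

$$\displaystyle{ \tau _{2} = \frac{a + 1} {a + 2}\quad \text{and}\quad \tau _{3} = \frac{2a + 1} {2a + 3}. }$$

Then, eliminating a between \(\tau _{2}\) and \(\tau _{3}\), we obtain

$$\displaystyle{ \tau _{3} = \frac{3\tau _{2} - 1} {\tau _{2} + 1}. }$$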

Thus, the points \((\tau _{2},\tau _{3})\) for all values of a and b lie on the curve \((\tau _{2} + 1)(3 -\tau _{3}) = 4\). Note that the exponential point \((\frac{1} {2}, \frac{1} {3})\), which corresponds to the limiting case a → 0, lies on this curve. The estimation and other related inferential problems are discussed in Chap. 7.
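Because the integrals (1.38)–(1.40) for the generalized Pareto distribution reduce to beta functions, the curve \((\tau _{2} + 1)(3 -\tau _{3}) = 4\) can be verified numerically. This sketch assumes the parametrization \(Q(u) = \frac{b}{a}[{(1 - u)}^{-a/(a+1)} - 1]\), b > 0, a > −1, for which \(q(u) = \frac{b}{a+1}{(1 - u)}^{-k-1}\) with k = a/(a + 1); the parametrization and the helper names are assumptions made for illustration.

```python
from math import gamma


def beta_fn(x, y):
    """Euler beta function B(x, y) computed via the gamma function."""
    return gamma(x) * gamma(y) / gamma(x + y)


def gpd_l_moments(a, b):
    """L_1, L_2, L_3 of the assumed GPD Q(u) = (b/a)[(1-u)^(-a/(a+1)) - 1], a > -1.

    The weights of (1.38)-(1.40) times q(u) give integrals of the form
    int u^m (1-u)^(-k) du = B(m+1, 1-k); a = 0 yields the exponential limit.
    """
    k = a / (a + 1)
    c = b / (a + 1)
    l1 = c * beta_fn(1, 1 - k)                            # int (1-u) q(u) du
    l2 = c * beta_fn(2, 1 - k)                            # int u(1-u) q(u) du
    l3 = c * (2 * beta_fn(3, 1 - k) - beta_fn(2, 1 - k))  # int u(2u-1)(1-u) q(u) du
    return l1, l2, l3


for a in (-0.5, 0.0, 0.5, 2.0):
    l1, l2, l3 = gpd_l_moments(a, b=1.0)
    t2, t3 = l2 / l1, l3 / l2
    print(a, t2, t3, (t2 + 1) * (3 - t3))   # last column is 4 up to rounding
```

Because the code works with q(u) rather than Q(u), the case a = 0 needs no special treatment.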

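We now present probability weighted moments (PWMs), which are forerunners of L-moments. Introduced by Greenwood et al. [226], PWMs are of considerable interest when the distribution is expressed in quantile form. The PWMs are defined as

$$\displaystyle{ M_{p,r,s} = E\left [{X}^{p}{\{F(X)\}}^{r}{\{1 - F(X)\}}^{s}\right ] =\int _{ 0}^{1}{(Q(u))}^{p}{u}^{r}{(1 - u)}^{s}du, }$$(1.45)

where p, r, s are non-negative real numbers and \(E\vert X{\vert }^{p} < \infty\). Two special cases of (1.45) in general use are

$$\displaystyle{ \beta _{p,r} = M_{p,r,0} =\int _{ 0}^{1}{(Q(u))}^{p}{u}^{r}du }$$(1.46)

and

$$\displaystyle{ \alpha _{p,s} = M_{p,0,s} =\int _{ 0}^{1}{(Q(u))}^{p}{(1 - u)}^{s}du. }$$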

Like L-moments, PWMs are more robust to outliers in the data than the conventional moments. Their estimators have small bias even for small samples and converge rapidly to asymptotic normality.
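The definition (1.45) can be evaluated directly by quadrature. The Python sketch below approximates \(M_{p,r,s}\) with a midpoint rule and, as a check, uses the uniform distribution Q(u) = u, for which \(M_{1,r,0} = 1/(r + 2)\), \(M_{1,0,s} = 1/\{(s + 1)(s + 2)\}\) and \(M_{2,0,0} = E({X}^{2}) = 1/3\). The function names and the choice of test distribution are illustrative assumptions.

```python
def pwm(Q, p, r, s, n=20_000):
    """Midpoint-rule approximation of M_{p,r,s} = int_0^1 Q(u)^p u^r (1-u)^s du."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        total += Q(u) ** p * u ** r * (1.0 - u) ** s
    return total * h


def uniform_q(u):
    """Quantile function of the uniform distribution on (0, 1)."""
    return u


beta_1r = [pwm(uniform_q, 1, r, 0) for r in range(4)]    # M_{1,r,0}
alpha_1s = [pwm(uniform_q, 1, 0, s) for s in range(4)]   # M_{1,0,s}
second_moment = pwm(uniform_q, 2, 0, 0)                  # conventional moment E(X^2)
print(beta_1r, alpha_1s, second_moment)
```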

Example 1.11.

The PWMs of the Pareto distribution with (see Table1.1)

are

Similarly, for the power distribution with (see Table 1.1)

we have

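Further specializing (1.46) for p = 1, we see that the L-moments are linear combinations of the PWMs. The relationships are

$$\displaystyle{\begin{array}{rcl} L_{1}& =&\beta _{1,0}, \\ L_{2}& =&2\beta _{1,1} -\beta _{1,0}, \\ L_{3}& =&6\beta _{1,2} - 6\beta _{1,1} +\beta _{1,0}, \\ L_{4}& =&20\beta _{1,3} - 30\beta _{1,2} + 12\beta _{1,1} -\beta _{1,0}\end{array} }$$

in the first four cases. Generally, we have the relationship

$$\displaystyle{ L_{r+1} =\sum _{k=0}^{r}{(-1)}^{r-k}\binom{r}{k}\binom{r + k}{k}\beta _{1,k},\quad r = 0,1,2,\ldots. }$$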

The conventional moments can also be deduced as \(M_{p,0,0}\), \(\beta _{p,0}\) or \(\alpha _{p,0}\). The role of PWMs in reliability analysis will be taken up in the subsequent chapters. In spite of their advantages, Chen and Balakrishnan [140] have pointed out some infeasibility problems in PWM estimation: when the parameters of some distributions, such as generalized forms of the Pareto, are estimated, the fitted distribution may have an upper or lower bound with one or more of the observed values lying outside this bound.
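The general relationship between L-moments and PWMs, \(L_{r+1} =\sum _{k=0}^{r}{(-1)}^{r-k}\binom{r}{k}\binom{r+k}{k}\beta _{1,k}\) with \(\beta _{1,k} =\int _{0}^{1}Q(u){u}^{k}du\), can be checked exactly with rational arithmetic. For the unit exponential, Q(u) = −log(1 − u), the series \(-\log (1 - u) =\sum _{m\geq 1}{u}^{m}/m\) gives \(\beta _{1,k} = H_{k+1}/(k + 1)\), where \(H_{m} = 1 + \frac{1}{2} + \cdots + \frac{1}{m}\); the sketch below should then reproduce the values 1, 1/2, 1/6, 1/12 of Example 1.8 with λ = 1. The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb


def l_from_pwm(beta):
    """L_1..L_m from beta_{1,0}..beta_{1,m-1} via
    L_{r+1} = sum_k (-1)^(r-k) C(r,k) C(r+k,k) beta_{1,k}."""
    return [sum((-1) ** (r - k) * comb(r, k) * comb(r + k, k) * beta[k]
                for k in range(r + 1))
            for r in range(len(beta))]


def exp_pwm(k):
    """beta_{1,k} for the unit exponential: H_{k+1} / (k + 1), exactly."""
    harmonic = sum(Fraction(1, j) for j in range(1, k + 2))
    return harmonic / (k + 1)


betas = [exp_pwm(k) for k in range(4)]
print(betas)               # 1, 3/4, 11/18, 25/48 as Fractions
print(l_from_pwm(betas))   # 1, 1/2, 1/6, 1/12 as Fractions
```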

1.7 Diagrammatic Representations

In this section, we demonstrate a few graphical methods other than the conventional ones. The primary goal is the choice of a model, represented by a quantile function, for the data. An important tool in this category is the Q-Q plot. The Q-Q plot is the plot of the points \((Q(u_{r}),x_{r:n})\), r = 1, 2, …, n, where \(u_{r} = \frac{r-0.5} {n}\) (see Note 1). For application purposes, we may replace \(Q(u_{r})\) by the fitted quantile function. One use of this plot is to ascertain whether the sample could have arisen from the target population Q(u). Since we are plotting sample quantiles against population quantiles, in the ideal case the graph should be the straight line y = x, which bisects the angle between the axes. However, since the sample is random and fitted values of Q(u) are used, points lying approximately along this line indicate that the model is adequate. The points in the Q-Q plot are always non-decreasing when viewed from left to right.

The Q-Q plot can also be used for comparing two competing models by plotting the rth quantile of one against the rth quantile of the other. When the two distributions are similar, the points on the graph should lie approximately on the straight line y = x. A general trend in the plot steeper (flatter) than y = x means that the distribution plotted on the y-axis (x-axis) is more dispersed. On the other hand, S-shaped plots often suggest that one of the distributions exhibits more skewness or tail-heaviness. It should also be noted that the Q-Q plot is linear when the two distributions are linearly related, that is, when one is a location-scale transformation of the other. This procedure is direct when the data sets from the two distributions contain the same number of observations. Otherwise, it is necessary to use interpolated quantile estimates in the shorter set to equal the number in the larger set. Q-Q plots are often found to be more powerful and informative than histogram comparisons.
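The text does not fix an interpolation rule for samples of unequal size. The sketch below assumes linear interpolation between order statistics placed at the plotting positions (r − 0.5)/n, clamped to the sample extremes, and, following the text, interpolates the shorter sample at the plotting positions of the longer one. The function names are hypothetical.

```python
def interp_quantile(sorted_x, u):
    """Linear interpolation between order statistics placed at (r - 0.5)/n,
    clamped to the sample extremes outside [0.5/n, 1 - 0.5/n]."""
    n = len(sorted_x)
    pos = u * n - 0.5                  # 0-based fractional rank
    if pos <= 0:
        return sorted_x[0]
    if pos >= n - 1:
        return sorted_x[-1]
    lo = int(pos)
    frac = pos - lo
    return sorted_x[lo] + frac * (sorted_x[lo + 1] - sorted_x[lo])


def two_sample_qq(x, y):
    """Quantile pairs for a two-sample Q-Q plot, evaluated at the plotting
    positions of the larger sample; the smaller sample is interpolated."""
    xs, ys = sorted(x), sorted(y)
    m = max(len(xs), len(ys))
    us = [(r - 0.5) / m for r in range(1, m + 1)]
    return [(interp_quantile(xs, u), interp_quantile(ys, u)) for u in us]


print(two_sample_qq([1, 2, 3, 4], [0, 10]))
```

For the larger sample the plotting positions fall exactly on its order statistics, so only the shorter sample is actually interpolated. If y is a location-scale transform of x, the pairs lie on a straight line, as noted above.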

Example 1.12.

The times to failure of a set of 10 units are given as 16, 34, 53, 75, 93, 120, 150, 191, 240 and 390 h (Kececioglu [322]). A Weibull distribution with quantile function

is proposed for the data. The parameters of the model were estimated by the method of maximum likelihood as \(\hat{\sigma }= 146.2445\) and \(\hat{\lambda }= 1.973\). The Q-Q plot pertaining to the model is presented in Fig. 1.1. The figure suggests that the model is adequate.
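The Q-Q points of Example 1.12 can be computed as follows. The chapter's exact form of the Weibull quantile function is not reproduced here; purely to exercise the code, the sketch assumes \(Q(u) =\sigma {(-\log (1 - u))}^{1/\lambda }\) with the quoted estimates, so the fitted values it prints should not be read as those behind Fig. 1.1. The function names are hypothetical.

```python
import math


def qq_points(data, Q):
    """Points (Q(u_r), x_{r:n}) with plotting positions u_r = (r - 0.5)/n."""
    x = sorted(data)
    n = len(x)
    return [(Q((r - 0.5) / n), x[r - 1]) for r in range(1, n + 1)]


SIGMA_HAT, LAMBDA_HAT = 146.2445, 1.973   # estimates quoted in Example 1.12


def weibull_q(u, sigma=SIGMA_HAT, lam=LAMBDA_HAT):
    # ASSUMED parametrization sigma * (-log(1 - u))**(1/lam), for illustration only
    return sigma * (-math.log(1.0 - u)) ** (1.0 / lam)


times = [16, 34, 53, 75, 93, 120, 150, 191, 240, 390]
for fitted, observed in qq_points(times, weibull_q):
    print(f"{fitted:8.1f}  {observed:4d}")
```

Any fitted quantile function can be passed as `Q`, so the same helper serves for other candidate models.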

A second useful graphical representation is the box plot introduced by Tukey [569]. It depicts numerical data through a five-number summary consisting of the extremes, the quartiles and the median. The steps required for constructing a box plot are as follows (Parzen [484]):

(i) compute the median \(m =\bar{ Q}(0.50)\), the lower quartile \(q_{1} =\bar{ Q}(0.25)\) and the upper quartile \(q_{3} =\bar{ Q}(0.75)\);

(ii) draw a vertical box of arbitrary width and length equal to \(q_{3} - q_{1}\);

(iii) mark a solid line within the box at a distance \(m - q_{1}\) above the lower end of the box. Dashed lines are extended from the lower and upper ends of the box over distances \(q_{1} - x_{1:n}\) and \(x_{n:n} - q_{3}\), respectively. This constitutes the H-plot, H standing for hinges or quartiles. Instead, one can use \(e_{1} =\bar{ Q}(0.125)\) and \(e_{7} =\bar{ Q}(0.875)\), resulting in E-box plots. Similarly, the quantiles \(\bar{Q}(0.0625)\) and \(\bar{Q}(0.9375)\) constitute the D-box plots;

(iv) a quantile box plot consists of the graph of \(\bar{Q}(u)\) on [0, 1] with the three boxes in (iii) superimposed on it.

Parzen [484] suggested the following information that can be drawn from the plot. A confidence interval for the median, \(m \pm {n}^{-\frac{1} {2} }(q_{3} - q_{1})\), is obtained by drawing, at the midpoint of the median line, a line perpendicular to it extending a distance \({n}^{-\frac{1} {2} }(q_{3} - q_{1})\) on either side. Sharp rises in the graph of \(x =\bar{ Q}(u)\) suggest a density with more than one mode. If such points lie inside the H-box, the presence of several distinct populations generating the data is to be suspected, while if they lie outside the D-box, the presence of outliers is indicated. Horizontal segments in the graph may result from discreteness of the distribution. By calculating

for various values of u, one can get a feel for skewness, a value near zero suggesting symmetry. Parzen [484] also suggested some measures of tail classification.
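The box-plot ingredients of steps (i)–(iii), together with the median interval \(m \pm {n}^{-1/2}(q_{3} - q_{1})\) (our reading of Parzen's suggestion), can be sketched in a few lines. The empirical quantile convention \(\bar{Q}(u) = x_{\lceil nu\rceil :n}\) is an assumption (other conventions give slightly different quartiles and medians), the dictionary keys are hypothetical names, and the data are those of Example 1.12.

```python
import math


def q_bar(sorted_x, u):
    """Empirical quantile x_{ceil(n u):n}; the small offset guards against float error."""
    n = len(sorted_x)
    idx = min(n, max(1, math.ceil(n * u - 1e-9)))
    return sorted_x[idx - 1]


def box_plot_summary(data):
    x = sorted(data)
    n = len(x)
    m, q1, q3 = q_bar(x, 0.50), q_bar(x, 0.25), q_bar(x, 0.75)
    half = (q3 - q1) / math.sqrt(n)           # assumed form of Parzen's interval
    return {
        "median": m,
        "q1": q1,
        "q3": q3,
        "box_length": q3 - q1,                # step (ii)
        "median_above_q1": m - q1,            # step (iii): position of the median line
        "lower_whisker": q1 - x[0],           # dashed line below the box
        "upper_whisker": x[-1] - q3,          # dashed line above the box
        "E_box": (q_bar(x, 0.125), q_bar(x, 0.875)),
        "D_box": (q_bar(x, 0.0625), q_bar(x, 0.9375)),
        "median_ci": (m - half, m + half),
    }


times = [16, 34, 53, 75, 93, 120, 150, 191, 240, 390]   # Example 1.12
for key, value in box_plot_summary(times).items():
    print(key, value)
```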

Example 1.13.

The box plot corresponding to the data in Example 1.12 is exhibited in Fig. 1.2. It may be noticed that the observation 390 is a likely outlier.

A stem-leaf plot can also be informative about some meaningful characteristics of the data. To obtain such a plot, we first arrange the observations in ascending order. The leaf is the last digit of a number, and the stem contains all the other digits. (When the data consist of very large numbers, values rounded to a particular place, such as hundreds or thousands, are used as stems and leaves.) The plot has two columns: the first represents the stems and is separated by a line from the second, which represents the leaves. Each stem is listed only once, and its leaves are entered in a row. The plot helps in understanding the relative density of the observations as well as the shape of the distribution. The mode is easily displayed along with potential outliers. Finally, descriptive statistics can be easily worked out from the diagram.

Example 1.14.

We illustrate the stem-leaf plot for a small data set: 36, 57, 52, 44, 47, 51, 46, 63, 59, 68, 66, 68, 72, 73, 75, 81, 84, 106, 76, 88, 91, 41, 84, 68, 34, 38, 54.
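A stem-leaf display of the Example 1.14 data can be produced with the short sketch below. It assumes non-negative integers, takes the last digit as the leaf and the remaining digits as the stem, and keeps empty stems so that gaps stay visible; the function name is hypothetical.

```python
from collections import defaultdict


def stem_leaf(data):
    """Return a stem-leaf display ('stem | leaves') for non-negative integers."""
    groups = defaultdict(list)
    for value in sorted(data):
        groups[value // 10].append(value % 10)   # stem = all digits but the last
    lines = []
    for stem in range(min(groups), max(groups) + 1):
        leaves = "".join(str(leaf) for leaf in groups.get(stem, []))
        lines.append(f"{stem:>3} | {leaves}")
    return "\n".join(lines)


data = [36, 57, 52, 44, 47, 51, 46, 63, 59, 68, 66, 68, 72, 73, 75, 81, 84,
        106, 76, 88, 91, 41, 84, 68, 34, 38, 54]
print(stem_leaf(data))
# Output:
#   3 | 468
#   4 | 1467
#   5 | 12479
#   6 | 36888
#   7 | 2356
#   8 | 1448
#   9 | 1
#  10 | 6
```

The rows for stems 5 and 6 are the longest, the repeated leaf 8 in stem 6 marks the most frequent value 68, and 106 stands apart at the top.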

Notes

1. There are different choices for these plotting points; recently, Balakrishnan et al. [52] discussed the determination of optimal plotting points by the use of the Pitman closeness criterion.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions: Formulas, Graphs and Mathematical Tables. Applied Mathematics Series, vol. 55. National Bureau of Standards, Washington, DC (1964)

Adamidis, K., Loukas, S.: A lifetime distribution with decreasing failure rate. Stat. Probab. Lett. 39, 35–42 (1998)

Arnold, B.C., Balakrishnan, N., Nagaraja, H.N.: A First Course in Order Statistics. Wiley, New York (1992)

Avinadav, T., Raz, T.: A new inverted hazard rate function. IEEE Trans. Reliab. 57, 32–40 (2008)

Balakrishnan, N.: Order statistics from the half logistic distribution. J. Stat. Comput. Simulat. 20, 287–309 (1985)

Balakrishnan, N. (ed.): Handbook of the Logistic Distribution. Marcel Dekker, New York (1992)

Balakrishnan, N., Aggarwala, R.: Relationships for moments of order statistics from the right-truncated generalized half logistic distribution. Ann. Inst. Stat. Math. 48, 519–534 (1996)

Balakrishnan, N., Cohen, A.C.: Order Statistics and Inference: Estimation Methods. Academic, Boston (1991)

Balakrishnan, N., Davies, K., Keating, J.P., Mason, R.L.: Computation of optimal plotting points based on Pitman closeness with an application to goodness-of-fit for location-scale families. Comput. Stat. Data Anal. 56, 2637–2649 (2012)

Balakrishnan, N., Kundu, D.: Hybrid censoring: Models, inferential results and applications (with discussions). Comput. Stat. Data Anal. 57, 166–209 (2013)

Balakrishnan, N., Rao, C.R.: Order Statistics: Theory and Methods. Handbook of Statistics, vol. 16. North-Holland, Amsterdam (1998)

Balakrishnan, N., Rao, C.R.: Order Statistics - Applications. Handbook of Statistics, vol. 17. North-Holland, Amsterdam (1998)

Balakrishnan, N., Sandhu, R.: Recurrence relations for single and product moments of order statistics from a generalized half logistic distribution, with applications to inference. J. Stat. Comput. Simulat. 52, 385–398 (1995)

Balakrishnan, N., Wong, K.H.T.: Approximate MLEs for the location and scale parameters of the half-logistic distribution with Type-II right-censoring. IEEE Trans. Reliab. 40, 140–145 (1991)

Balanda, K.P., MacGillivray, H.L.: Kurtosis: a critical review. Am. Stat. 42, 111–119 (1988)

Chen, G., Balakrishnan, N.: The infeasibility of probability weighted moments estimation of some generalized distributions. In: Balakrishnan, N. (ed.) Recent Advances in Life-Testing and Reliability, pp. 565–573. CRC Press, Boca Raton (1995)

Cohen, A.C.: Truncated and Censored Samples: Theory and Applications. Marcel Dekker, New York (1991)

Dimitrakopoulou, T., Adamidis, K., Loukas, S.: A life distribution with an upside down bathtub-shaped hazard function. IEEE Trans. Reliab. 56, 308–311 (2007)

Elamir, E.A.H., Seheult, A.H.: Trimmed L-moments. Comput. Stat. Data Anal. 43, 299–314 (2003)

Erto, P.: Genesis, properties and identification of the inverse Weibull lifetime model. Statistica Applicata 1, 117–128 (1989)

Falk, M.: On MAD and comedians. Ann. Inst. Stat. Math. 45, 615–644 (1997)

Filliben, J.J.: Simple and robust linear estimation of the location parameter of a symmetric distribution. Ph.D. thesis, Princeton University, Princeton (1969)

Freimer, M., Mudholkar, G.S., Kollia, G., Lin, C.T.: A study of the generalised Tukey lambda family. Comm. Stat. Theor. Meth. 17, 3547–3567 (1988)

Fry, T.R.L.: Univariate and multivariate Burr distributions. Pakistan J. Stat. 9, 1–24 (1993)

Galton, F.: Statistics by inter-comparison with remarks on the law of frequency error. Phil. Mag. 49, 33–46 (1875)

Galton, F.: Enquiries into Human Faculty and Its Development. MacMillan, London (1883)

Galton, F.: Natural Inheritance. MacMillan, London (1889)

Gilchrist, W.G.: Statistical Modelling with Quantile Functions. Chapman and Hall/CRC Press, Boca Raton (2000)

Greenwich, M.: A unimodal hazard rate function and its failure distribution. Statistische Hefte 33, 187–202 (1992)

Greenwood, J.A., Landwehr, J.M., Matalas, N.C., Wallis, J.R.: Probability weighted moments. Water Resour. Res. 15, 1049–1054 (1979)

Groeneveld, R.A., Meeden, G.: Measuring skewness and kurtosis. The Statistician 33, 391–393 (1984)

Gupta, R.C., Akman, H.O., Lvin, S.: A study of log-logistic model in survival analysis. Biometrical J. 41, 431–433 (1999)

Gupta, R.C., Gupta, P.L., Gupta, R.D.: Modelling failure time data with Lehmann alternative. Comm. Stat. Theor. Meth. 27, 887–904 (1998)

Gupta, R.C., Gupta, R.D.: Proportional reversed hazards model and its applications. J. Stat. Plann. Infer. 137, 3525–3536 (2007)

Gupta, R.D., Kundu, D.: Generalized exponential distribution. Aust. New Zeal. J. Stat. 41, 173–178 (1999)

Hahn, G.J., Shapiro, S.S.: Statistical Models in Engineering. Wiley, New York (1967)

Hastings, C., Mosteller, F., Tukey, J.W., Winsor, C.P.: Low moments for small samples: A comparative study of statistics. Ann. Math. Stat. 18, 413–426 (1947)

Hinkley, D.V.: On power transformations to symmetry. Biometrika 62, 101–111 (1975)

Hogben, D.: Some properties of Tukey’s test for non-additivity. Ph.D. thesis, The State University of New Jersey, New Jersey (1963)

Hosking, J.R.M.: L-moments: analysis and estimation of distributions using linear combinations of order statistics. J. Roy. Stat. Soc. B 52, 105–124 (1990)

Hosking, J.R.M.: Moments or L-moments? An example comparing two measures of distributional shape. The Am. Stat. 46, 186–189 (1992)

Hosking, J.R.M.: Some theoretical results concerning L-moments. Research Report, RC 14492. IBM Research Division, Yorktown Heights, New York (1996)

Hosking, J.R.M.: On the characterization of distributions by their L-moments. J. Stat. Plann. Infer. 136, 193–198 (2006)

Hosking, J.R.M.: Some theory and practical uses of trimmed L-moments. J. Stat. Plann. Infer. 137, 3024–3029 (2007)

Hosking, J.R.M., Wallis, J.R.: Regional Frequency Analysis: An Approach based on L-Moments. Cambridge University Press, Cambridge (1997)

Joanes, D.N., Gill, C.A.: Comparing measures of sample skewness and kurtosis. The Statistician 47, 183–189 (1998)

Johnson, N.L., Kotz, S., Balakrishnan, N.: Continuous Univariate Distributions, vol. 2, 2nd edn. Wiley, New York (1995)

Joiner, B.L., Rosenblatt, J.R.: Some properties of the range of samples from Tukey’s symmetric lambda distribution. J. Am. Stat. Assoc. 66, 394–399 (1971)

Jones, M.C.: On some expressions for variance, covariance, skewness and L-moments. J. Stat. Plann. Infer. 126, 97–108 (2004)

Kececioglu, D.B.: Reliability and Lifetesting Handbook, vol. 1. DEStech Publications, Lancaster (2002)

Kotz, S., Seier, E.: An analysis of quantile measures of kurtosis, center and tails. Stat. Paper. 50, 553–568 (2009)

Kus, C.: A new lifetime distribution. Comput. Stat. Data Anal. 51, 4497–4509 (2007)

Lai, C.D., Xie, M.: Stochastic Ageing and Dependence for Reliability. Springer, New York (2006)

Lan, Y., Leemis, L.M.: Logistic exponential survival function. Nav. Res. Logist. 55, 252–264 (2008)

Lehmann, E.L.: The power of rank tests. Ann. Math. Stat. 24, 23–42 (1953)

MacGillivray, H.L.: Skewness properties of asymmetric forms of Tukey-lambda distribution. Comm. Stat. Theor. Meth. 11, 2239–2248 (1982)

Marshall, A.W., Olkin, I.: A new method of adding a parameter to a family of distributions with application to exponential and Weibull families. Biometrika 84, 641–652 (1997)

Marshall, A.W., Olkin, I.: Life Distributions. Springer, New York (2007)

Moors, J.J.A.: A quantile alternative for kurtosis. The Statistician 37, 25–32 (1988)

Mudholkar, G.S., Hutson, A.D.: Analogues of L-moments. J. Stat. Plann. Infer. 71, 191–208 (1998)

Mudholkar, G.S., Kollia, G.D.: Generalized Weibull family–a structural analysis. Comm. Stat. Theor. Meth. 23, 1149–1171 (1994)

Mudholkar, G.S., Srivastava, D.K., Freimer, M.: The exponentiated Weibull family: A reanalysis of bus motor failure data. Technometrics 37, 436–445 (1995)

Mudholkar, G.S., Srivastava, D.K.: Exponentiated Weibull family for analysing bathtub failure data. IEEE Trans. Reliab. 42, 299–302 (1993)

Murthy, D.N.P., Xie, M., Jiang, R.: Weibull Models. Wiley, Hoboken (2003)

Paranjpe, S.A., Rajarshi, M.B., Gore, A.P.: On a model for failure rates. Biometrical J. 27, 913–917 (1985)

Parzen, E.: Nonparametric statistical data modelling. J. Am. Stat. Assoc. 74, 105–122 (1979)

Parzen, E.: Unifications of statistical methods for continuous and discrete data. In: Page, C., Lepage, R. (eds.) Proceedings of Computer Science-Statistics. INTERFACE 1990, pp. 235–242. Springer, New York (1991)

Parzen, E.: Quantile probability and statistical data modelling. Stat. Sci. 19, 652–662 (2004)

Pearson, K.: Tables of Incomplete Beta Function, 2nd edn. Cambridge University Press, Cambridge (1968)

Quetelet, L.A.J.: Letters Addressed to HRH the Grand Duke of Saxe Coburg and Gotha in the Theory of Probability. Charles and Edwin Layton, London (1846). Translated by Olinthus Gregory Downes

Ramberg, J.S.: A probability distribution with applications to Monte Carlo simulation studies. In: Patil, G.P., Kotz, S., Ord, J.K. (eds.) Model Building and Model Selection. Statistical Distributions in Scientific Work, vol. 2. D. Reidel, Dordrecht (1975)

Ramberg, J.S., Dudewicz, E., Tadikamalla, P., Mykytka, E.: A probability distribution and its uses in fitting data. Technometrics 21, 210–214 (1979)

Ramberg, J.S., Schmeiser, B.W.: An approximate method for generating asymmetric random variables. Comm. Assoc. Comput. Mach. 17, 78–82 (1974)

Rohatgi, V.K., Saleh, A.K.Md.E.: A class of distributions connected to order statistics with nonintegral sample size. Comm. Stat. Theor. Meth. 17, 2005–2012 (1988)

Sankarasubramonian, A., Sreenivasan, K.: Investigation and comparison of L-moments and conventional moments. J. Hydrol. 218, 13–34 (1999)

Shapiro, S.S., Wilk, M.B.: An analysis of variance test for normality. Biometrika 52, 591–611 (1965)

Sillitto, G.P.: Derivation of approximants to the inverse distribution function of a continuous univariate population from the order statistics of a sample. Biometrika 56, 641–650 (1969)

Stigler, S.M.: Fractional order statistics with applications. J. Am. Stat. Assoc. 72, 544–550 (1977)

Sulewski, P.: On differently defined skewness. Comput. Meth. Sci. Technol. 14, 39–46 (2008)

Tajuddin, I.H.: A simple measure of skewness. Stat. Neerl. 50, 362–366 (1996)

Tarsitano, A.: Estimation of the generalised lambda distributions parameter for grouped data. Comm. Stat. Theor. Meth. 34, 1689–1709 (2005)

Tukey, J.W.: The future of data analysis. Ann. Math. Stat. 33, 1–67 (1962)

Tukey, J.W.: Exploratory Data Analysis. Addison-Wesley, Reading (1977)

Vogel, R.M., Fennessey, N.M.: L-moment diagrams should replace product moment diagrams. Water Resour. Res. 29, 1745–1752 (1993)

Xie, M., Tang, Y., Goh, T.N.: A modified Weibull extension with bathtub-shaped failure rate function. Reliab. Eng. Syst. Saf. 76, 279–285 (2002)

Yitzhaki, S.: Gini’s mean difference: A superior measure of variability for nonnormal distributions. Metron 61, 285–316 (2003)

Zimmer, W., Keats, J.B., Wang, F.K.: The Burr XII distribution in reliability analysis. J. Qual. Technol. 20, 386–394 (1998)


Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Nair, N.U., Sankaran, P.G., Balakrishnan, N. (2013). Quantile Functions. In: Quantile-Based Reliability Analysis. Statistics for Industry and Technology. Birkhäuser, New York, NY. https://doi.org/10.1007/978-0-8176-8361-0_1


DOI: https://doi.org/10.1007/978-0-8176-8361-0_1


Publisher Name: Birkhäuser, New York, NY

Print ISBN: 978-0-8176-8360-3

Online ISBN: 978-0-8176-8361-0

eBook Packages: Mathematics and Statistics (R0)