Abstract

This note shows that a semi-Markov process with Borel state space is regular under a fairly weak condition on the mean sojourn or holding times and assuming that the embedded Markov chain satisfies one of the following conditions: (a) it is Harris recurrent; (b) it is recurrent and the “recurrent part” of the state space is reached with probability one for every initial state; (c) it has a unique invariant probability measure. Under the latter condition, the regularity property is only ensured for almost all initial states with respect to the invariant probability measure.

18.1 Introduction

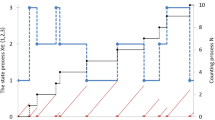

A semi-Markov process (SMP) combines the probabilistic structure of a Markov chain and a renewal process as follows: it makes transitions according to a Markov chain, but the times spent between successive transitions are random variables whose distribution functions depend on the “present” state of the system. Observe that a continuous-time Markov chain is an SMP with exponentially distributed transition times. As for continuous-time Markov chains, this raises the question of whether the SMP undergoes finitely or infinitely many transitions in bounded time intervals. If the former property holds, the SMP is said to be regular (or nonexplosive), and irregular (or explosive) otherwise.

A natural way to obtain the regularity property is to impose conditions guaranteeing that transitions do not occur too quickly; the most popular such condition is the one used by Ross [7, Proposition 5.1, p. 88] and Çinlar [2, Chap. 10, Proposition 3.19, p. 327]. Roughly speaking, this condition requires the transition times to exceed some γ > 0 with probability at least ε > 0, where γ and ε do not depend on the present state of the system [see (18.6) below]. Under this condition, both authors prove the regularity of the SMP only for countable state spaces, but using a key remark of Bhattacharya and Majumdar [1] (see Remark 18.3.1 below), the result can also be proved for Borel spaces (see Theorem 18.3.1). It is worth mentioning that Çinlar’s proof [2, Chap. 10, Proposition 3.19, p. 327] also extends directly to Borel spaces.

Moreover, for the countable state space case, Ross [7, Proposition 5.1, p. 88] and Çinlar [2, Chap. 10, Corollary 3.17, p. 327] prove that the regularity property holds whenever the “embedded” Markov chain reaches a recurrent state with probability one for every initial state. Thus, in particular, the regularity property holds if the embedded Markov chain is recurrent. However, their proofs cannot be extended, or at least not directly, to the case of Borel state space because they rely on the renewal process formed by the successive times at which a recurrent state is visited, which typically involves events of probability zero if the state space is uncountable. In fact, to the best of our knowledge, there is no counterpart of these results for Borel spaces.

The aim of this note is to fill this gap by extending these results to SMPs with Borel state spaces. More precisely, under a fairly weak condition on the sojourn or holding time distribution, we show that the regularity property holds under each of the following conditions: (a) the embedded Markov chain is Harris recurrent; (b) the embedded Markov chain is recurrent and the “recurrent part” of the state space is reached with probability one from every initial state; (c) the embedded Markov chain has a unique invariant probability measure. Under the latter condition, the regularity property is only ensured for almost all initial states with respect to the invariant probability measure.

18.2 Preliminary Concepts

This section briefly introduces SMPs; the reader is referred to Limnios and Oprişan [5] for a rigorous and detailed description. We first fix some notation used throughout the note. Let \((\mathbb{X},\mathcal{B})\) be a measurable space, where \(\mathbb{X}\) is a Borel space and \(\mathcal{B}\) is its Borel σ-algebra. We denote by \(\mathbb{R}_{+}\) and \(\mathbb{N}_{0}\) the sets of nonnegative real numbers and nonnegative integers, respectively, while \(\mathbb{N}\) stands for the positive integers. Set \(\Omega := {(\mathbb{X} \times {\mathbb{R}}_{+})}^{\infty }\) and denote by \(\mathcal{F}\) the corresponding product σ-algebra.

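Consider a fixed stochastic kernel \(Q(\cdot,\cdot\,|\,\cdot)\) on \(\mathbb{X} \times \mathbb{R}_{+}\) given \(\mathbb{X}\). Then, for each “initial” state \(x \in \mathbb{X}\), there exist a probability measure \(\mathbb{P}_x\) and a Markov chain \(\{(X_n, \delta_{n+1}) : n \in \mathbb{N}_0\}\) defined on the canonical measurable space \((\Omega, \mathcal{F})\) such that \(\mathbb{P}_x[X_0 = x] = 1\) and

$$\mathbb{P}_x\left[X_{n+1} \in B,\ \delta_{n+1} \le t \,\middle|\, X_0, \delta_1, \ldots, \delta_n, X_n = y\right] = Q(B, t\,|\,y),\quad n \in \mathbb{N}_0, \tag{18.1}$$

for all \(B\in \mathcal{B},t \in {\mathbb{R}}_{+},y \in \mathbb{X}.\)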

The process \(\{(X_n, \delta_{n+1}) : n \in \mathbb{N}_0\}\) is called a Markov renewal process and is usually thought of as a model of a stochastic system evolving as follows: it is observed at time t = 0 in some initial state \({X}_{0} = x \in \mathbb{X}\), in which it remains up to a (nonnegative) random time \(\delta_1\). The distribution function of \(\delta_1\) is given by

$$F(t\,|\,x) := Q(\mathbb{X}, t\,|\,x) = \mathbb{P}_x[\delta_1 \le t],\quad t \in \mathbb{R}_{+},$$

which is called the sojourn or holding time distribution in the state x. Accordingly, the mean sojourn or holding time function is defined as

$$\tau(x) := \int_0^\infty t\, F(\mathrm{d}t\,|\,x),\quad x \in \mathbb{X}.$$

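Next, at time \(\delta_1\), the system jumps to a new state, say \({X}_{1} = y \in \mathbb{X}\), according to the probability measure

$$P(B\,|\,x) := \lim_{t\to\infty} Q(B, t\,|\,x) = \mathbb{P}_x[X_1 \in B],\quad B \in \mathcal{B}. \tag{18.2}$$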

Once the transition occurs, the system remains in the new state \(X_1 = y\) up to a (nonnegative) random time \(\delta_2\), and so on.

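The state of the system is tracked in continuous time by the process

$$Z_t := X_n \quad \text{if } T_n \le t < T_{n+1},\ n \in \mathbb{N}_0,$$

where

$$T_0 := 0, \qquad T_n := \delta_1 + \cdots + \delta_n,\ n \in \mathbb{N}.$$

Note that \(Z_t\) is thereby defined only for \(t < \lim_{n} T_n\); this is precisely why the regularity property matters. The continuous-time process \(\{Z_t : t \in \mathbb{R}_{+}\}\) is called a semi-Markov process (SMP) with (semi-Markov) kernel \(Q(\cdot,\cdot\,|\,\cdot)\).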
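The construction of \(Z_t\) from the Markov renewal process can be sketched numerically. The Python code below is a minimal illustration under assumptions that the note does not make: a finite state space \(\{0, \ldots, m-1\}\), a row-stochastic matrix `P` playing the role of \(P(\cdot\,|\,\cdot)\), and a user-supplied sampler for \(G(\cdot\,|\,x,y)\). The helper names `simulate_mrp` and `smp_state`, and the exponential holding times in the usage lines, are hypothetical.

```python
import numpy as np


def simulate_mrp(P, sample_holding, x0, n_steps, rng):
    """Simulate X_0, ..., X_N and delta_1, ..., delta_N (N = n_steps) on a finite state space.

    P: (m, m) row-stochastic matrix of the embedded chain, P[x, y] = P({y} | x).
    sample_holding(x, y, rng): draws a holding time from G(. | x, y).
    """
    states = [x0]
    holds = []
    x = x0
    for _ in range(n_steps):
        y = int(rng.choice(len(P), p=P[x]))        # X_{n+1} ~ P(. | X_n)
        holds.append(sample_holding(x, y, rng))    # delta_{n+1} ~ G(. | X_n, X_{n+1})
        states.append(y)
        x = y
    return np.array(states), np.array(holds)


def smp_state(t, states, holds):
    """Return Z_t = X_n for T_n <= t < T_{n+1}, or None if t >= T_N (beyond the simulation)."""
    if t < 0:
        raise ValueError("t must be nonnegative")
    T = np.concatenate(([0.0], np.cumsum(holds)))     # T_0 = 0, T_n = delta_1 + ... + delta_n
    n = int(np.searchsorted(T, t, side="right")) - 1  # largest n with T_n <= t
    if n >= len(holds):
        return None
    return int(states[n])


# Usage with hypothetical exponential holding times whose rate depends on (x, y).
rng = np.random.default_rng(0)
P = np.array([[0.0, 1.0],
              [0.5, 0.5]])
rates = np.array([[1.0, 2.0],
                  [3.0, 0.5]])
states, holds = simulate_mrp(P, lambda x, y, r: r.exponential(1.0 / rates[x, y]), 0, 50, rng)
print(smp_state(0.0, states, holds))  # Z_0 = X_0 = 0
```

`smp_state` returns `None` rather than guessing a state after the last simulated transition: if the times \(T_N\) stayed bounded as N grows, the path would not determine \(Z_t\) for large t, which is exactly the situation that regularity rules out.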

Note from (18.2) that the process \(\{X_n : n \in \mathbb{N}_0\}\) is a Markov chain on \(\mathbb{X}\) with one-step transition probability \(P(\cdot\,|\,\cdot)\); it is called the embedded Markov chain of the SMP \(\{Z_t : t \in \mathbb{R}_{+}\}\).

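Now observe that the kernel \(Q(\cdot,\cdot\,|\,\cdot)\) can be “disintegrated” as

$$Q(B, t\,|\,x) = \int_B G(t\,|\,x, y)\, P(\mathrm{d}y\,|\,x),\quad B \in \mathcal{B},\ t \in \mathbb{R}_{+},\ x \in \mathbb{X}, \tag{18.3}$$

where \(G(\cdot\,|\,x, y)\) is a distribution function on \(\mathbb{R}_{+}\) for all \(x,y \in \mathbb{X}\), while \(G(t\,|\,\cdot,\cdot)\) is a measurable function on \(\mathbb{X} \times \mathbb{X}\) for each \(t \in \mathbb{R}_{+}\). Thus

$$F(t\,|\,x) = \int_{\mathbb{X}} G(t\,|\,x, y)\, P(\mathrm{d}y\,|\,x),\quad t \in \mathbb{R}_{+},\ x \in \mathbb{X}.$$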

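Then, using the Markov property of the Markov renewal process and (18.3), it is easy to prove that the random variables \(\{{\delta }_{n} : n \in \mathbb{N}\}\) are (conditionally) independent given the state process \(\{{X}_{n} : n \in {\mathbb{N}}_{0}\}\), and also that

$$\mathbb{P}_x\left[\delta_{n+1} \le t \,\middle|\, X_0, X_1, \ldots\right] = G(t\,|\,X_n, X_{n+1}) \quad \mathbb{P}_x\text{-a.s.},\quad n \in \mathbb{N}_0,\ t \in \mathbb{R}_{+}. \tag{18.4}$$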

18.3 The Regularity Property, Recurrence and Invariant Measures

Let \(\{(X_n, \delta_{n+1}) : n \in \mathbb{N}_0\}\) be a Markov renewal process with stochastic kernel \(Q(\cdot,\cdot\,|\,\cdot)\) on \(\mathbb{X} \times {\mathbb{R}}_{+}\) given \(\mathbb{X}\).

Definition 18.3.1.

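A state \(x \in \mathbb{X}\) is said to be regular if

$$\mathbb{P}_x\left[\lim_{n\to\infty} T_n = \infty\right] = 1,$$

that is, if \(\mathbb{P}_x\)-almost surely only finitely many transitions occur in every bounded time interval.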

The SMP is said to be regular if every state \(x \in \mathbb{X}\) is regular.

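Define

$$\Delta(x) := \int_0^\infty e^{-t}\, F(\mathrm{d}t\,|\,x) = \mathbb{E}_x\left[e^{-\delta_1}\right],\quad x \in \mathbb{X},$$

and observe that \(0 < \Delta(\cdot) \le 1\). Also note that

$$\Delta(x) = 1 \iff F(0\,|\,x) = 1 \iff \tau(x) = 0, \quad x \in \mathbb{X}; \tag{18.5}$$

that is, \(\Delta(x) = 1\) exactly when the holding time in state x is zero almost surely.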
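For orientation, Δ can be computed in closed form for a few holding-time distributions; these are illustrative choices only, not assumptions of the note. If the holding time in state x is exponential with rate \(q(x) > 0\), or deterministic and equal to \(d(x) \ge 0\), then, respectively,

$$\Delta(x) = \int_0^\infty e^{-t}\, q(x)\, e^{-q(x)t}\, \mathrm{d}t = \frac{q(x)}{1 + q(x)} < 1, \qquad \Delta(x) = e^{-d(x)},$$

so in the deterministic case \(\Delta(x) = 1\) exactly when \(d(x) = 0\), i.e., when the transition out of x is instantaneous.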

Clearly, to guarantee the regularity property, this degenerate case must be excluded for all or “almost all” states. The most popular way to do this is by means of the following assumption: there exist positive constants γ and ε < 1 such that

$$1 - F(\gamma\,|\,x) \ge \varepsilon \quad \forall x \in \mathbb{X}. \tag{18.6}$$

Ross [7, Proposition 5.1, p. 88] and Çinlar [2, Chap. 10, Proposition 3.19, p. 327] prove that the SMP is regular under condition (18.6). Here, for the sake of completeness, we provide another proof based on the following remark due to Bhattacharya and Majumdar [1].

Remark 18.3.1.

It follows from the conditional independence of the random variables \(\{{\delta }_{n} : n \in \mathbb{N}\}\) and (18.4) that

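Hence,

$$x \in \mathbb{X} \text{ is regular} \iff \prod_{k=0}^{\infty} \Delta(X_k) = 0 \quad \mathbb{P}_x\text{-a.s.} \tag{18.8}$$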

This follows directly from (18.7) after noting that \({Z}_{n} :=\exp (-{T}_{n})\) and \(W_n := \Delta(X_0)\cdots\Delta(X_n)\), \(n \in \mathbb{N}\), are bounded and nonincreasing sequences.

Theorem 18.3.1.

If condition (18.6) holds, then the SMP is regular.

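Proof of Theorem 18.3.1. This follows directly from (18.8) after noting that condition (18.6) implies that

$$\Delta(x) \le F(\gamma\,|\,x) + e^{-\gamma}\left[1 - F(\gamma\,|\,x)\right] = 1 - (1 - e^{-\gamma})\left[1 - F(\gamma\,|\,x)\right] \le 1 - \varepsilon + \varepsilon e^{-\gamma} =: \alpha < 1 \quad \forall x \in \mathbb{X},$$

and hence \(\prod_{k=0}^{n} \Delta(X_k) \le \alpha^{n+1} \to 0\) as \(n \to \infty\).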

□
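As an illustration (a standard special case, not treated in the note), consider a continuous-time Markov chain viewed as an SMP: the holding time in state x is exponential with rate \(q(x) > 0\), so that \(F(t\,|\,x) = 1 - e^{-q(x)t}\) and \(\Delta(x) = q(x)/(1 + q(x))\). If the rates are bounded, \(q(x) \le \bar{q}\), then (18.6) holds for any γ > 0 with, e.g., \(\varepsilon := e^{-\bar{q}\gamma}/2\), since \(1 - F(\gamma\,|\,x) = e^{-q(x)\gamma} \ge e^{-\bar{q}\gamma}\). Without boundedness, criterion (18.8) still applies and reduces to the classical nonexplosion condition

$$\prod_{k=0}^{\infty}\Delta(X_k) = \prod_{k=0}^{\infty}\left(1 + \frac{1}{q(X_k)}\right)^{-1} = 0 \iff \sum_{k=0}^{\infty}\frac{1}{q(X_k)} = \infty,$$

where the equivalence uses the elementary fact that, for nonnegative \(a_k\), \(\prod_k (1 + a_k) = \infty\) if and only if \(\sum_k a_k = \infty\).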

Regularity can also be guaranteed by requiring condition (18.6) only for states in a proper subset \(C \subset \mathbb{X}\), provided it is accompanied by an appropriate “recurrence” property [see Remark 18.3.6(b) below].

Next, we prove that the SMP is regular under some “recurrence” conditions which appear to be the weakest possible. To state these assumptions, we need several concepts and results from Markov chain theory, collected from Hernández-Lerma and Lasserre [3] and Meyn and Tweedie [6].

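A Markov chain \(\{Y_n : n \in \mathbb{N}_0\}\) with state space \(\mathbb{X}\) is said to be irreducible if there exists a nontrivial σ-finite measure \(\nu(\cdot)\) on \((\mathbb{X},\mathcal{B})\) such that

$$\mathbb{P}_y\left[Y_n \in B \text{ for some } n \in \mathbb{N}\right] > 0 \quad \forall y \in \mathbb{X}$$

whenever \(\nu(B) > 0\), \(B \in \mathcal{B}\); in this case, \(\nu(\cdot)\) is called an irreducibility measure. If the Markov chain \(\{Y_n : n \in \mathbb{N}_0\}\) is irreducible, there exists a maximal irreducibility measure \(\psi(\cdot)\), which means that \(\psi(\cdot)\) is an irreducibility measure and that any other irreducibility measure \(\nu(\cdot)\) is absolutely continuous with respect to \(\psi(\cdot)\). Moreover, if \(\psi(B) = 0\), then

$$\psi\left(\left\{y \in \mathbb{X} : \mathbb{P}_y\left[Y_n \in B \text{ for some } n \in \mathbb{N}_0\right] > 0\right\}\right) = 0, \tag{18.9}$$

which means that the set of initial states from which the Markov chain enters a \(\psi\)-null set with positive probability is also a \(\psi\)-null set [6, Proposition 4.2.2, p. 88].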

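Let \(\{Y_n : n \in \mathbb{N}_0\}\) be an irreducible Markov chain and \(\psi(\cdot)\) a maximal irreducibility measure. The Markov chain \(\{Y_n : n \in \mathbb{N}_0\}\) is said to be recurrent if

$$\sum_{n=1}^{\infty}\mathbb{P}_y\left[Y_n \in B\right] = \infty \quad \forall y \in \mathbb{X},\ B \in \mathcal{B}^{+}, \tag{18.10}$$

where \({\mathcal{B}}^{+} :=\{ B \in \mathcal{B} : \psi (B) > 0\}\). Note that \(\mathcal{B}^{+}\) is well defined because all maximal irreducibility measures are equivalent. If instead of condition (18.10) we have

$$\mathbb{P}_y\left[Y_n \in B \text{ for some } n \in \mathbb{N}\right] = 1 \quad \forall y \in \mathbb{X},\ B \in \mathcal{B}^{+},$$

then the Markov chain is said to be Harris recurrent. It is proved in Meyn and Tweedie [6, Theorem 9.1.4, p. 204] that a Harris recurrent Markov chain satisfies the (apparently) stronger condition

$$\mathbb{P}_y\left[Y_n \in B \text{ infinitely often}\right] = 1 \quad \forall y \in \mathbb{X},\ B \in \mathcal{B}^{+}.$$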

We now come back to the discussion of the regularity property with the following remark.

Remark 18.3.2.

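Suppose the embedded Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) is irreducible. If the SMP is regular then, due to property (18.8), the Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) visits the set

$$L := \{x \in \mathbb{X} : \Delta(x) < 1\}$$

infinitely often \(\mathbb{P}_x\)-a.s. for every initial state \(x \in \mathbb{X}\); otherwise \(\Delta(X_k) = 1\) for all large k and, since \(\Delta(\cdot) > 0\), the infinite product in (18.8) would be strictly positive. Moreover, the set L belongs to \(\mathcal{B}^{+}\); otherwise, by (18.9),

$$\psi(\mathbb{X}) = \psi\left(\left\{x \in \mathbb{X} : \mathbb{P}_x\left[X_n \in L \text{ for some } n \in \mathbb{N}_0\right] > 0\right\}\right) = 0,$$

which contradicts the nontriviality of \(\psi(\cdot)\).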

Remark 18.3.3.

Suppose the embedded Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) is irreducible. Then \(L \in \mathcal{B}^{+}\) if and only if \({B}_{\alpha } :=\{ x \in \mathbb{X} : \Delta (x) \leq \alpha \} \in {\mathcal{B}}^{+}\) for some \(\alpha \in (0, 1)\). This claim follows by noting that \(L= {\cup }_{n=1}^{\infty }{B}_{n}\), where \({B}_{n} :=\{ x \in \mathbb{X} : \Delta (x) \leq {\alpha }_{n}\}\) and \(\alpha_n \uparrow 1\), so that \(\psi(L) = \lim_{n} \psi(B_n)\).

We now state the first result of this note.

Theorem 18.3.2.

Suppose the embedded Markov chain is Harris recurrent. Then the SMP is regular if and only if \(L \in \mathcal{B}^{+}\).

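Proof of Theorem 18.3.2. Note that the “only if” part is proved in Remark 18.3.2. To prove the other part, take \(B_\alpha \in \mathcal{B}^{+}\) as in Remark 18.3.3 and for each \(n \in \mathbb{N}\) define

$$S_n := \sum_{k=0}^{n} \mathbb{I}_{B_\alpha}(X_k),$$

the number of visits of the embedded chain to \(B_\alpha\) up to the nth transition (\(\mathbb{I}_{B_\alpha}\) denotes the indicator function of \(B_\alpha\)), and recall that \(W_n = \Delta(X_0)\cdots\Delta(X_n)\). Now observe that

$$W_n \le \alpha^{S_n},$$

because \(\Delta(X_k) \le \alpha\) whenever \(X_k \in B_\alpha\) and \(\Delta(\cdot) \le 1\) everywhere. Thus, since the embedded Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) is Harris recurrent and \(\psi(B_\alpha) > 0\), \(S_n \to \infty\) \(\mathbb{P}_x\)-a.s. for all \(x \in \mathbb{X}\); hence

$$\lim_{n\to\infty} W_n = 0 \quad \mathbb{P}_x\text{-a.s.}\ \forall x \in \mathbb{X},$$

which, by (18.8), proves that the process is regular. □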
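The mechanism of this proof, \(W_n \le \alpha^{S_n}\) combined with infinitely many visits to \(B_\alpha\), can be checked numerically on a toy example. The sketch below assumes a hypothetical three-state irreducible embedded chain (hence Harris recurrent) in which state 0 has zero holding time, so that \(\Delta(0) = 1\) and condition (18.6) fails, while states 1 and 2 have exponential holding times with rates 2 and 5, so that \(\Delta = 2/3\) and \(5/6\) there. With \(\alpha = 5/6\) this gives \(B_\alpha = \{1, 2\}\). The names `P`, `Delta` and `path_products` are illustrative only.

```python
import numpy as np

# Hypothetical embedded chain on {0, 1, 2}; irreducible, hence Harris recurrent.
P = np.array([[0.2, 0.4, 0.4],
              [0.5, 0.0, 0.5],
              [0.6, 0.4, 0.0]])
# Delta(x) = E[exp(-delta_1) | X_0 = x]: state 0 is instantaneous (Delta = 1),
# states 1 and 2 have Exp(2) and Exp(5) holding times (Delta = q / (1 + q)).
Delta = np.array([1.0, 2.0 / 3.0, 5.0 / 6.0])


def path_products(P, Delta, x0, n_steps, alpha, rng):
    """Return W_n = Delta(X_0)...Delta(X_n) and S_n = #{k <= n : X_k in B_alpha}, n = 0..n_steps."""
    x, w, s = x0, 1.0, 0
    W, S = [], []
    for _ in range(n_steps + 1):
        w *= Delta[x]
        s += int(Delta[x] <= alpha)   # X_k belongs to B_alpha = {Delta <= alpha}
        W.append(w)
        S.append(s)
        x = int(rng.choice(len(P), p=P[x]))
    return np.array(W), np.array(S)


alpha = 5.0 / 6.0
W, S = path_products(P, Delta, 0, 200, alpha, np.random.default_rng(1))
# The proof's inequality W_n <= alpha**S_n (tiny relative slack for floating-point rounding).
assert np.all(W <= alpha ** S * (1 + 1e-12))
print(S[-1], W[-1])
```

Even though transitions out of state 0 are instantaneous, the path keeps returning to \(B_\alpha\), so \(W_n\) decays geometrically in the number of such visits.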

The regularity property of the SMP can also be obtained assuming that the embedded Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) is recurrent. However, as in Ross [7, Proposition 5.1, p. 88] and Çinlar [2, Chap. 10, Corollary 3.17, p. 327], we need to assume additionally that the “recurrent part” of the state space is reached with probability one from every initial state. To state this condition precisely, we require the following important result (see, e.g., Hernández-Lerma and Lasserre [3, Proposition 4.2.12, p. 50] or Meyn and Tweedie [6, Theorem 9.0.1, p. 201]).

Remark 18.3.4.

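If the embedded Markov chain \(\{X_n : n \in \mathbb{N}_0\}\) is recurrent, then

$$\mathbb{X} = H \cup N, \qquad H \cap N = \emptyset,$$

where the measurable set H is full and absorbing (i.e., \(\psi(N) = 0\) and \(P(H\,|\,x) = 1\) for all \(x \in H\), respectively). Moreover, the Markov chain restricted to H is Harris recurrent, that is,

$$\mathbb{P}_x\left[X_n \in B \text{ infinitely often}\right] = 1 \quad \forall x \in H,\ B \in \mathcal{B}^{+}.$$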

Theorem 18.3.3.

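If the embedded Markov chain is recurrent, \(L \in \mathcal{B}^{+}\), and

$$\mathbb{P}_x\left[X_n \in H \text{ for some } n \in \mathbb{N}_0\right] = 1 \quad \forall x \in \mathbb{X},$$

then the SMP is regular.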

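Proof of Theorem 18.3.3. The proof follows the same arguments given in the proof of Theorem 18.3.2, but considering \({\overline{B}}_{\alpha } := {B}_{\alpha } \cap H\) instead of the set \(B_\alpha\). Note that \(\psi(\overline{B}_\alpha) = \psi(B_\alpha) > 0\) because \(\psi(N) = 0\), and that, since H is absorbing and reached with probability one, after its first entrance time to H the chain visits \(\overline{B}_\alpha\) infinitely often by the strong Markov property. □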

Note that Theorems 18.3.2 and 18.3.3 state that the regularity property holds for all initial states \(x \in \mathbb{X}\) under a recurrence condition, regardless of whether the embedded Markov chain admits an invariant probability measure \(\mu(\cdot)\), that is, a probability measure satisfying the condition

$$\mu(B) = \int_{\mathbb{X}} P(B\,|\,x)\, \mu(\mathrm{d}x) \quad \forall B \in \mathcal{B}.$$

Recurrence (and hence Harris recurrence) may be dispensed with if one assumes the existence of a unique invariant probability measure, at the cost that the regularity property is then ensured only for almost all initial states (see Theorem 18.3.4 below). The proof uses a pathwise ergodic theorem borrowed from Hernández-Lerma and Lasserre [3, Corollary 2.5.2]. To state this result, we need the following notation: for a measurable function \(v(\cdot)\) and a measure \(\lambda(\cdot)\) on \((\mathbb{X},\mathcal{B})\), let

$$\lambda(v) := \int_{\mathbb{X}} v(x)\, \lambda(\mathrm{d}x)$$

whenever the integral is well defined. Moreover, denote by \(L^1(\lambda)\) the class of measurable functions \(v(\cdot)\) on \(\mathbb{X}\) such that \(\lambda(|v|) < \infty\).

Remark 18.3.5.

(a) Suppose that \(\{X_n : n \in \mathbb{N}_0\}\) has a unique invariant probability measure \(\mu(\cdot)\). Then, for each function \(v \in L^1(\mu)\), there exists a set \(B_v \in \mathcal{B}\), with \(\mu(B_v) = 1\), such that

$$\frac{1}{n}\sum_{k=0}^{n-1} v(X_k) \rightarrow \mu(v) \quad \mathbb{P}_x\text{-a.s.}\ \forall x \in B_v. \tag{18.11}$$

(b) If in addition the Markov chain is Harris recurrent, then (18.11) holds for all \(x \in \mathbb{X}\) (see Hernández-Lerma and Lasserre [3, Theorem 4.2.13, p. 51]).
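For a finite irreducible chain, the pathwise ergodic theorem (18.11) can be observed numerically. The sketch below is only an illustration under assumptions of our own: a hypothetical three-state transition matrix `P`, a hypothetical bounded function `v`, and the invariant probability vector obtained by solving \(\mu P = \mu\), \(\sum_x \mu(x) = 1\) with a least-squares solve. Since a finite irreducible chain is Harris recurrent, part (b) predicts convergence from every initial state.

```python
import numpy as np


def stationary_distribution(P):
    """Solve mu P = mu with sum(mu) = 1 for a finite irreducible chain."""
    m = P.shape[0]
    A = np.vstack([P.T - np.eye(m), np.ones((1, m))])
    b = np.concatenate([np.zeros(m), [1.0]])
    mu, *_ = np.linalg.lstsq(A, b, rcond=None)
    return mu


def ergodic_average(P, v, x0, n, rng):
    """Return (1/n) * sum_{k=0}^{n-1} v(X_k) along one simulated path with X_0 = x0."""
    x, total = x0, 0.0
    for _ in range(n):
        total += v[x]
        x = int(rng.choice(len(P), p=P[x]))
    return total / n


P = np.array([[0.2, 0.4, 0.4],
              [0.5, 0.0, 0.5],
              [0.6, 0.4, 0.0]])
v = np.array([1.0, 2.0 / 3.0, 5.0 / 6.0])   # any bounded v belongs to L^1(mu)
mu = stationary_distribution(P)
rng = np.random.default_rng(2)
for x0 in range(3):
    print(x0, ergodic_average(P, v, x0, 20_000, rng), mu @ v)
```

The least-squares formulation simply stacks the balance equations with the normalization constraint; for an irreducible finite chain this overdetermined system is consistent and has a unique solution.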

Theorem 18.3.4.

Suppose the following conditions hold: (a) the embedded Markov chain has a unique invariant probability measure \(\mu(\cdot)\); (b) \(\mu(\Delta) = \int_{\mathbb{X}} \Delta(x)\, \mu(\mathrm{d}x) < 1\). Then the SMP is regular for \(\mu\)-almost all \(x \in \mathbb{X}\). If in addition the embedded Markov chain is Harris recurrent, then the regularity property holds for all \(x \in \mathbb{X}\).

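Proof of Theorem 18.3.4. Observe that, by the inequality between the geometric and arithmetic means,

$$W_n = \prod_{k=0}^{n}\Delta(X_k) \le \left(\frac{1}{n+1}\sum_{k=0}^{n}\Delta(X_k)\right)^{n+1}, \quad n \in \mathbb{N}.$$

Thus, since \(\Delta \in L^1(\mu)\) (because \(0 < \Delta(\cdot) \le 1\)), condition (a) and Remark 18.3.5(a) yield a set \(B_\Delta \in \mathcal{B}\) such that

$$\frac{1}{n}\sum_{k=0}^{n-1}\Delta(X_k) \to \mu(\Delta) < 1 \quad \mathbb{P}_x\text{-a.s.}\ \forall x \in B_\Delta,$$

with \(\mu(B_\Delta) = 1\). Therefore, fixing \(\beta \in (\mu(\Delta), 1)\), for each \(x \in B_\Delta\) we have \(W_n \le \beta^{n+1}\) for all n large enough, \(\mathbb{P}_x\)-a.s., so that

$$\lim_{n\to\infty} W_n = 0 \quad \mathbb{P}_x\text{-a.s.}\ \forall x \in B_\Delta,$$

which, by (18.8), shows that every \(x \in B_\Delta\) is regular. The second statement of the theorem follows from Theorem 18.3.2 because the property \(\mu(\Delta) < 1\) implies \(\mu(L) > 0\), and hence \(L \in \mathcal{B}^{+}\), since the invariant probability measure of a recurrent chain is equivalent to \(\psi\). □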

Remark 18.3.6.

(a) Let μ be a probability measure on \((\mathbb{X},\mathcal{B})\). Observe that (18.5) implies that \(\{x \in \mathbb{X} : \tau (x) > 0\} =\{ x \in \mathbb{X} : \Delta (x) < 1\}.\) Then

$$\mu (\tau ) > 0 \Leftrightarrow \mu (\Delta ) < 1.$$

Thus, the conclusions in Theorem 18.3.4 remain valid if condition (b) is replaced by the condition μ(τ) > 0.

(b) Schäl [8] and Jaśkiewicz [4] considered the following weakened version of condition (18.6): there exist positive constants γ, ε < 1, and a subset C ∈ ℬ such that

$$1 - F(\gamma \vert x) > \varepsilon \quad \forall x \in C.$$

This condition by itself does not imply the regularity of the SMP (see the example in Ross [7, p. 87]); however, it does imply regularity provided that \(C \in \mathcal{B}^{+}\) and a suitable recurrence condition holds, e.g., the embedded Markov chain is Harris recurrent. To see this, note that

$$\sup_{x\in C}\Delta (x) \leq (1 - \varepsilon ) + \varepsilon \exp (-\gamma ) < 1,$$

which implies that \(C \subset L =\{ x \in \mathbb{X} : \Delta (x) < 1\}\), and hence \(L \in {\mathcal{B}}^{+}\). Therefore, by Theorem 18.3.2, the SMP is regular.
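The bound on \(\sup_{x\in C}\Delta(x)\) can be sanity-checked numerically for a concrete holding law. The Python sketch below assumes, purely for illustration, uniform holding times on \([0, b]\), for which \(\Delta = (1 - e^{-b})/b\); with \(\gamma = b/2\) one has \(1 - F(\gamma\,|\,x) = 1/2\), so any \(\varepsilon < 1/2\) satisfies the weakened condition. The function names are hypothetical.

```python
import math


def delta_uniform(b):
    """Delta = E[exp(-delta)] for a holding time delta ~ Uniform(0, b), b > 0."""
    return (1.0 - math.exp(-b)) / b


def bound(gamma, eps):
    """Upper bound (1 - eps) + eps * exp(-gamma) on Delta(x) for x in C."""
    return (1.0 - eps) + eps * math.exp(-gamma)


for b in (0.5, 1.0, 2.0, 10.0):
    gamma = b / 2.0   # hypothetical choice: P(delta > gamma) = 1/2
    eps = 0.49        # so 1 - F(gamma | x) = 0.5 > eps, as in the weakened condition
    print(b, delta_uniform(b), bound(gamma, eps))
    assert delta_uniform(b) <= bound(gamma, eps) < 1.0
```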

References

Bhattacharya, R.N., Majumdar, M. (1989), Controlled semi-Markov models – The discounted case, J. Statist. Plann. Inf. 21, 365–381.

Çinlar, E. (1975), Introduction to Stochastic Processes, Prentice-Hall, Englewood Cliffs, New Jersey.

Hernández-Lerma, O., Lasserre, J.B. (2003), Markov Chains and Invariant Probabilities, Birkhäuser, Basel.

Jaśkiewicz, A. (2004), On the equivalence of two expected average cost criteria for semi-Markov control processes, Math. Oper. Research 29, 326–338.

Limnios, N., Oprişan, G. (2001), Semi-Markov Processes and Reliability, Birkhäuser, Boston.

Meyn, S.P., Tweedie, R.L. (1993), Markov Chains and Stochastic Stability, Springer-Verlag, London.

Ross, S.M. (1970), Applied Probability Models with Optimization Applications, Holden-Day, San Francisco.

Schäl, M. (1992), On the second optimality equation for semi-Markov decision models, Math. Oper. Research 17, 470–486.

Acknowledgements

The author takes this opportunity to thank Professor Onésimo Hernández-Lerma for his constant encouragement and support during the author’s academic career and, in particular, for his valuable comments on an early version of the present work. Thanks are also due to the referees for their useful observations, which improved the writing and organization of this note.

Copyright information

© 2012 Springer Science+Business Media, LLC

About this chapter

Cite this chapter

Vega-Amaya, Ó. (2012). On the Regularity Property of Semi-Markov Processes with Borel State Spaces. In: Hernández-Hernández, D., Minjárez-Sosa, J. (eds) Optimization, Control, and Applications of Stochastic Systems. Systems & Control: Foundations & Applications. Birkhäuser, Boston. https://doi.org/10.1007/978-0-8176-8337-5_18

DOI: https://doi.org/10.1007/978-0-8176-8337-5_18

Publisher Name: Birkhäuser, Boston

Print ISBN: 978-0-8176-8336-8

Online ISBN: 978-0-8176-8337-5

eBook Packages: Mathematics and Statistics (R0)