Abstract

The goal of this contribution is threefold:

1. To highlight the richness of fluorescence observables and parameters that make fluorescent molecules (or the fluorescent groups of biomacromolecules) important reporters in biological studies.

2. To provide a brief overview of current instrumental techniques and methods for fluorescence spectroscopy, from steady-state measurements to high time resolution, in bulk as well as in single-molecule studies.

3. Based on (1) and (2), to provide a “helping hand” to molecular biologists and biochemists deciding whether and how to apply fluorescence spectroscopy to a given research task.


Keywords

- Anisotropy

- Fluorescence decay

- Nonlinear time-resolved techniques

- Photon counting and timing

- Polarization

- Time-resolved fluorescence

1 Basics and a Bit of History

A mysterious light emitted by some materials (solid or soft matter, inorganic or organic, biological tissue, or living organisms) has been known, and has attracted attention, since ancient times. Aristotle already knew about the luminescence of dead fish and flesh, as well as that of fungi. The emanation of light is described in Chinese, Japanese, and Indian mythologies and ancient histories. Natural examples such as glow-worms, luminescent wood, and even living creatures such as fireflies were also known then.

We could consider the year 1603 to be the beginning, albeit unintended, of the modern study of luminescent materials. In that year, the alchemist Vincenzo Cascariolo ground the natural mineral barite (BaSO4) found near Bologna and heated it under reducing conditions in the hope of obtaining gold. Instead, he noticed the persistent luminescence of the product, which has since been called the Bologna stone. It is not known which dopants were responsible for its luminescence, but the Bologna stone became the first scientifically documented material exhibiting persistent luminescence. In 1640, Fortunius Licetus published Litheosphorus Sive de Lapide Bononiensi, devoted to the luminescence of the Bologna stone.

The term “luminescence” (as opposed to incandescence) was first used in 1888 by Eilhard Wiedemann, who classified six kinds of luminescence according to the method of excitation. This classification (photoluminescence, electroluminescence, thermoluminescence, chemiluminescence, triboluminescence, and crystalloluminescence) is still used today.

In 1845, John F. W. Herschel discovered that UV light can excite a quinine solution (e.g., tonic water) to emit blue light. This kind of luminescence came to be known as fluorescence.

A comprehensive history of luminescence is described, e.g., in A History of Luminescence from the Earliest Times Until 1900 by E. Newton Harvey [1].

1.1 Nobel Prizes Related to Fluorescence

Over the last 100 years, fluorescence has grown in importance and become a key tool in biophysics, as evidenced by several Nobel Prizes awarded for research related to fluorescence. The first of these recognized efforts to increase time resolution in order to follow the dynamics of chemical processes in real time. For their fundamental studies “of extremely fast chemical reactions, effected by disturbing the equilibrium by means of very short pulses of energy,” Manfred Eigen, Ronald George Wreyford Norrish, and George Porter were awarded the Nobel Prize in Chemistry in 1967 [2,3,4].

They established nanosecond flash photolysis as a very effective way to study intermediates of fast photoinitiated processes (then on the ns time scale). Soon afterward, in 1974, G. Porter, E. S. Reid, and C. J. Tredwell used a mode-locked Nd3+ glass laser with a CS2 cell for optical sampling and published fluorescence lifetimes of fluorescein (3.6 ns), eosin (900 ps), and erythrosine (110 ps), the first reproducible experimental data of this kind on the picosecond (ps) time scale [5].

This breakthrough into the ps region made it possible to follow ever faster photoinitiated processes in real time. New femtosecond (fs) linear and nonlinear spectroscopic techniques were developed, e.g., by Graham Fleming and his group, to elucidate key features of natural photosynthesis. In 1999, the Nobel Prize in Chemistry was awarded to Ahmed H. Zewail “for his studies of the transition states of chemical reactions using femtosecond spectroscopy” [6].

The synergy between new laser techniques, which yielded formerly inaccessible experimental data, and advances in quantum-chemical calculations launched the new field of femtochemistry and opened new possibilities in molecular biology, where a molecule in a new role, such as a fluorophore acting as a reporter in a biomolecular superstructure, could be fully utilized. Subsequently, the Nobel Prize in Chemistry was awarded to Roger Y. Tsien, Osamu Shimomura, and Martin Chalfie “for the discovery and development of the green fluorescent protein” in 2008 [7].

When the study of fs processes in real time, the “ultimate time scale” for chemistry, became feasible, new research fields emerged: single-molecule studies and spectroscopic techniques with high spatial resolution, i.e., the combination of advanced time-resolved (TR) spectroscopy with microscopy. Once again, the Nobel Prize in Chemistry was awarded for significant advances in this new field, to Eric Betzig, Stefan W. Hell, and William E. Moerner “for the development of super-resolved fluorescence microscopy” in 2014 [8].

1.2 Fluorescence as a Tool of Growing Importance

1.2.1 Jabloński Diagram and Stokes Shift

Alexander Jabloński used the Franck-Condon principle to explain the main features of luminescence from molecular liquid solutions. A simple diagram he developed in 1933 explains the main spectral and kinetic features of this emission. Subsequent work by Jabloński, Kasha, Lewis, and Terenin established the singlet and triplet character of the energy levels involved in emission, and the specific terms fluorescence, phosphorescence, and delayed fluorescence came into use (Fig. 1).

This textbook-style energy level diagram illustrates the most typical photophysical processes during the excitation and de-excitation of fluorophores used in biology. At normal laboratory (or physiological) temperature, practically all fluorophore molecules in a biological sample are in the lowest vibrational level (v = 0) of the electronic ground state, S0. Absorption of a photon with enough energy to promote the molecule to a higher electronic state usually leaves it vibrationally excited as well. The excess vibrational energy is dissipated by very fast vibrational relaxation, typically through molecular collisions. (These are non-radiative transitions.) By emitting a photon (i.e., fluorescence), the molecule loses most of the absorbed energy and usually returns to a vibrationally excited level of its electronic ground state. Again, fast vibrational relaxation follows, restoring the vibrationally relaxed electronic ground state. In fluorescence spectroscopy, however, the observables are the energies of the absorbed and emitted photons or, more precisely, the distributions of those energies: the transition probabilities for absorbing and emitting photons of given energies. In a Jabloński diagram, the lengths of the straight (non-wavy) arrows are proportional to the absorbed or emitted photon energy. Because part of the absorbed energy is lost non-radiatively, a typical fluorescence emission spectrum is red-shifted (shifted to lower energies) relative to the absorption spectrum. This spectral shift, called the Stokes shift, is one of the important practical consequences of the non-radiative relaxations involved in this sequence of events. Vibrational relaxation is not the only source of the Stokes shift. In fluid media, solvent relaxation around an excited molecule (in general, relaxation of its surrounding molecular environment) lowers the energy of the excited state and contributes significantly to the Stokes shift [9, 10].
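Because photon energy is proportional to wavenumber (E = hc/λ) rather than to wavelength, Stokes shifts are best compared in cm⁻¹ or eV. The short Python sketch below does that conversion; the absorption and emission maxima (490 and 520 nm) are hypothetical values chosen for illustration, not measured data, and the helper names are our own.

```python
# h*c expressed in eV*nm, so that E[eV] = HC_EV_NM / wavelength[nm].
HC_EV_NM = 1239.84198


def wavenumber_cm1(wavelength_nm: float) -> float:
    """Convert a wavelength in nm to a wavenumber in cm^-1 (1 cm = 1e7 nm)."""
    return 1e7 / wavelength_nm


def stokes_shift(abs_max_nm: float, em_max_nm: float) -> tuple[float, float]:
    """Return the Stokes shift between absorption and emission maxima as (cm^-1, eV)."""
    d_cm1 = wavenumber_cm1(abs_max_nm) - wavenumber_cm1(em_max_nm)
    d_ev = HC_EV_NM / abs_max_nm - HC_EV_NM / em_max_nm
    return d_cm1, d_ev


# Hypothetical maxima, for illustration only.
shift_cm1, shift_ev = stokes_shift(490.0, 520.0)
print(f"Stokes shift: {shift_cm1:.0f} cm^-1 = {shift_ev:.3f} eV")
```

The same 30 nm gap would correspond to a smaller energy shift further in the red, which is why wavelength differences alone can be misleading.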

1.2.2 Fluorescence as a Reporter of Molecular Properties and Interactions

The fluorescence of a molecule is the light emitted spontaneously upon the transition from an excited singlet state (usually S1) to the various vibrational levels of the electronic ground state, i.e., S1,0 → S0,v. For many years, only the main parameters of fluorescence were studied: the spectral distribution of the emission intensity (i.e., the emission spectrum), the fluorescence quantum yield, the mirror-image symmetry between the excitation and emission spectra, and the Stokes shift. This line of research was later followed by studies of fluorescence lifetimes.

Initially, fluorescence spectroscopy was used for systematic studies of organic molecules and biomolecules, aimed at obtaining new information about the molecule itself, e.g., the energies of its electronic states and its transition rate constants. The rapid development of instrumental techniques, both steady-state and time-resolved spectrofluorometers, moved the field toward studies of the interactions between the fluorescent molecule and its molecular surroundings, and of the ways these interactions influence many observable properties of fluorescence. In such studies, the fluorescent molecule reports on its environment rather than being the main target of study. This shift of emphasis drew increased attention to those parameters of the excitation and of the emitted fluorescence that carry information on the interactions between a fluorescent molecule and its molecular surroundings, whether a solvent or other subunits of a complex biomolecular structure of which the fluorescent subunit is just one part.

1.3 Basic Characteristics of Excitation and Emission

Current advanced experimental techniques allow control and/or analysis of many parameters of the excitation and the emission during a fluorescence study. The information sought determines which parameters need to be controlled in a particular study. The following experimental parameters and modes can currently be investigated.

| Category | Conditions | Parameter or mode |
|---|---|---|
| Excitation | | Intensity Iexc at the given wavelength |
| | | Excitation spectral profile, Iexc = Iexc(λ) |
| | | Polarization (often linear, sometimes circular, sometimes undefined) |
| | | CW or pulsed, constant or modulated |
| | | Pulse time profile and pulse repetition rate |
| | | Single-photon excitation (the most common modality) |
| | | Two-photon excitation and entangled two-photon excitation |
| | | Excitation by structured multi-mode light |
| | | Excitation beam geometry, mode structure |
| | | Excited sample volume: bulk (e.g., in a cuvette) or confined to a diffraction-limited spot (volume) |
| | | Fixed position or scanning |
| Fluorescence | Fixed excitation intensity and excitation wavelength | Total fluorescence intensity within a given emission wavelength region |
| | | Total anisotropy |
| | | Emission spectrum, Iem = Iem(λ) |
| | | Emission polarization or anisotropy spectrum |
| | Variable excitation wavelength at constant excitation intensity | Excitation spectrum at constant λem: emission intensity as a function of excitation wavelength, Iem = Iem(λexc) |
| | | Fluorescence quantum yield |
| | | Excitation polarization or anisotropy spectrum |
| | Pulsed or modulated excitation | Fluorescence intensity decay |
| | | Fluorescence anisotropy decay (depolarization) |
| | | Various fluorescence decay parameters, e.g., multi-exponential, energy transfer model |
| | With spatial resolution | Emission intensity map |
| | | Emission spectral map |
| | | Intensity decay (lifetime) map, FLIM |

The list above makes it clear that the instrumentation required for a fluorescence measurement can be either simple or very sophisticated, depending on which fluorescence parameter is expected to provide the new information we seek. Today, the fluorescent molecule (or a fluorescent subunit of a molecular structure) is mostly used as a reporter of intra- and/or intermolecular interactions, which means that the fluorescence kinetic parameters are typically the most important. Consequently, sophisticated setups with high time resolution are spreading even into traditional biochemistry and molecular biology laboratories.

1.4 Kinetics of Fluorescence

Once the steady-state fluorescence excitation and emission spectra have been measured, the most important molecular fluorescence parameter is the fluorescence decay kinetics. In the simplest case, we assume that excited-state relaxation is governed by mutually independent, spontaneous processes, each characterized by a pseudo-first-order rate constant k. The simplified Jabloński diagram in Fig. 2 labels the individual relaxation processes with the relevant rate constants.

Rate constants in the Jabloński diagram. kF – fluorescence rate constant, kNR – sum of the rate constants of various non-radiative decay processes, typically internal conversion and intersystem crossing, kQ – quenching rate constant, accounting for unavoidable quenching mechanisms induced by the molecular environment of the fluorophore

If the assumption of spontaneity holds, then the fluorescence decay is exponential and the one parameter to be extracted from such a measurement is the rate constant of this decay. In practice, this means measurement of the fluorescence lifetime.

Spontaneous fluorescence decay is an example of a first-order process. A fundamental molecular parameter, unique to each fluorophore, is the fluorescence (radiative) rate constant, kF. If fluorescence were the only process depopulating the relaxed excited S1 state, the measured excited-state lifetime would simply be τF = 1/kF, and the intensity decay I(t) would be described by a single exponential function:

$$I(t) = I(0)\,\exp\left(-\frac{t}{\tau_F}\right) = I(0)\,\exp(-k_F t)$$

where I(0) is the initial intensity. In this particular case, τF would be called the radiative lifetime.

However, as the Jabloński diagram in Fig. 2 shows, non-radiative processes compete with fluorescence in depopulating the excited singlet state. These parallel processes produce an overall decay rate constant equal to the sum of all active rate constants. Let us denote this sum by:

$$k_{\mathrm{Decay}} = k_F + k_{NR} + k_Q$$

The observable lifetime is then:

$$\tau_{\mathrm{Decay}} = \frac{1}{k_{\mathrm{Decay}}} = \frac{1}{k_F + k_{NR} + k_Q}$$

and the experimentally observable decay curve takes the following form:

$$I(t) = I(0)\,\exp\left(-\frac{t}{\tau_{\mathrm{Decay}}}\right)$$
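As a numerical illustration of how competing processes shorten the observed lifetime, the Python sketch below sums the rate constants and evaluates the mono-exponential decay. The values of kF, kNR, and kQ are hypothetical, chosen only to give round numbers.

```python
import math


def observed_lifetime(k_f: float, k_nr: float, k_q: float) -> float:
    """tau_Decay = 1 / (kF + kNR + kQ); rates in s^-1, result in s."""
    return 1.0 / (k_f + k_nr + k_q)


def intensity(t: float, i0: float, tau: float) -> float:
    """Mono-exponential decay I(t) = I(0) * exp(-t / tau)."""
    return i0 * math.exp(-t / tau)


# Hypothetical rate constants in s^-1 (illustration only).
k_f, k_nr, k_q = 2.5e8, 1.0e8, 0.5e8

tau_f = 1.0 / k_f                               # radiative lifetime, 4 ns
tau_decay = observed_lifetime(k_f, k_nr, k_q)   # observed lifetime, 2.5 ns
print(f"tau_F = {tau_f * 1e9:.2f} ns, tau_Decay = {tau_decay * 1e9:.2f} ns")

# After one observed lifetime the intensity has fallen to 1/e of I(0).
print(f"I(tau_Decay)/I(0) = {intensity(tau_decay, 1.0, tau_decay):.4f}")
```

Any added quenching channel (larger kQ) shortens τDecay, while τF, set by kF alone, stays the same.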

It is important to realize that only the radiative rate constant, kF (and thus τF), is unique to a particular fluorophore, and this fundamental molecular parameter cannot be determined directly. In fluorescence decay measurements, also known as lifetime measurements, τDecay is the observable parameter. Both kNR and kQ usually depend on the molecular environment of the dissolved fluorescent dye. For example, solvent polarity, pH, dissolved oxygen content, the presence of ions, and hydrogen bonding can strongly affect τDecay. This is why the decay time of a fluorophore should always be specified together with the relevant environment.

Consider fluorescein, a widely used fluorophore. A single-exponential decay with a lifetime of 4.1 ns can be observed from fluorescein only in aqueous solution at pH above 10. Under these conditions, fluorescein, which carries a carboxylic and a phenolic acid group, is completely dissociated into its dianionic form. When the dye is dissolved in a slightly acidic buffer, the observed decay curve is a poorly defined mixture of emissions from three different forms of fluorescein. Although the sensitivity of the decay rate to the environment sometimes complicates analyses, it also enables probing of a fluorescent molecule’s microscopic environment in a biological sample.

The Jabloński diagram is also useful for understanding the concept of fluorescence quantum yield, QF. The definition of QF is intuitive: it is the ratio of the number of emitted photons to the number of absorbed excitation photons. If fluorescence were the only process depopulating the excited S1 state, all excitation photons would be converted to fluorescence and QF would equal 1. Owing to the competing non-radiative decay processes mentioned above, a fraction of the excited-state population returns to the ground state via alternative pathways, without photon emission, and observed quantum yields are often much less than 1. Using the corresponding rate constants, QF can be expressed as:

$$Q_F = \frac{k_F}{k_F + k_{NR} + k_Q} = k_F\,\tau_{\mathrm{Decay}}$$

The significance of the last equation becomes obvious after rearranging it as:

$$k_F = \frac{Q_F}{\tau_{\mathrm{Decay}}}$$

and realizing that QF and τDecay are experimental observables. Both quantities must be measured in order to calculate the fundamental fluorescence rate constant of the dye, kF.
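The rearrangement above amounts to a two-line calculation. The Python sketch below recovers kF, the radiative lifetime τF, and the combined non-radiative rate kNR + kQ from a measured quantum yield and lifetime; the input values (QF = 0.90, τDecay = 4.0 ns) are illustrative only, not data for a specific dye.

```python
def rate_constants(q_f: float, tau_decay_ns: float) -> tuple[float, float]:
    """Return (kF, kNR + kQ) in ns^-1 from the quantum yield and observed lifetime.

    Uses QF = kF * tau_Decay and 1/tau_Decay = kF + kNR + kQ.
    """
    k_total = 1.0 / tau_decay_ns
    k_f = q_f * k_total
    return k_f, k_total - k_f


# Illustrative inputs only: QF = 0.90, tau_Decay = 4.0 ns.
k_f, k_nonrad = rate_constants(0.90, 4.0)
print(f"kF = {k_f:.3f} ns^-1 (tau_F = {1 / k_f:.2f} ns), kNR + kQ = {k_nonrad:.3f} ns^-1")
```

Note that τF = τDecay/QF is always at least as long as the observed lifetime.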

So far we have assumed the simplest possible case: a mono-exponential decay initiated by an infinitely short light pulse. The technical reality of time-domain lifetime measurements is different. Laser pulses have a finite duration, so time zero, “the moment of excitation,” is not precisely defined. Proper data analysis has to take into account the duration and time profile of the excitation, since it modifies the measured decay curve, especially at the very beginning of the decay. An instrument’s electronic components also have finite response times, and their overall effect has to be accounted for as well.

Figure 3 shows a real example: a fluorescence decay measured from a mixture of Oxazin1 and Oxazin4 (two red-emitting laser dyes) dissolved in ethanol, collected with a time-correlated single-photon counting (TCSPC) instrument. The excitation source was a laser diode emitting 635 nm (red) pulses of approximately 80 ps duration. Fluorescence with λ > 650 nm was collected. In Fig. 3a, the data are plotted on a linear intensity scale. At first sight, the decay curve (blue) indeed resembles a standard exponential decay. The red curve is the so-called instrument response function (IRF hereafter), obtained by measuring scattered laser photons with the same instrument shortly after the fluorescence decay measurement. The IRF indeed looks like a sharp, short pulse.

An example decay and instrument response function (IRF) obtained by time-correlated single-photon counting (TCSPC) method. (a) Plotted on a linear intensity scale. (b) Plotted on a logarithmic intensity scale, also showing the fitted theoretical model decay function. The plot of weighted residuals at the bottom of the graph shows the deviation of experimental data from the fitted model decay

Figure 3b shows the same data plotted on a logarithmic intensity scale, which is the proper way to present and analyze TCSPC decay measurements. The logarithmic plot reveals many useful details. The decay curve is clearly not single exponential: if it were, the fluorescence plot would be a straight line, whereas here the blue curve bends. This is consistent with the expected double-exponential decay, since Oxazin1 and Oxazin4 dissolved in ethanol have lifetimes of 1 and 3.5 ns, respectively. We also clearly see a shoulder in the IRF (red curve); the excitation pulse shape is not symmetric. Both the IRF and the decay curve have a small background (offset) due to unavoidable noise, the so-called detector dark counts, collected during data acquisition. Although the laser pulses had approximately 80 ps optical duration, the collected IRF is considerably broader: its full width at half maximum exceeds 300 ps. This broadening is caused by the detector and instrument electronics, and the fluorescence decay curve is subject to the same broadening. This is why both the fluorescence and IRF curves are needed to evaluate the parameters of the fitted decay model.

The measured fluorescence decay curve is analyzed by assuming it is a convolution of a theoretical decay function (e.g., single or double exponential) with the shape of the IRF. Our goal is to obtain the parameters of the theoretical decay function. The most common data analysis method for TCSPC data is called iterative re-convolution: decay curves are generated as a convolution of the IRF with a selected model of the fluorescence decay, and the result is compared with the experimentally obtained decay data. The adjustable model parameters are iterated until the calculated decay matches the experimental one.
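The re-convolution idea can be sketched in Python with NumPy on simulated data. This is a simplified stand-in, not the algorithm of any particular TCSPC software: instead of an iterative nonlinear optimizer (commonly Levenberg-Marquardt), it scans the two lifetimes on a grid, and at each grid point solves for the amplitudes and background, which enter the model linearly, by weighted least squares. The Gaussian IRF (300 ps FWHM), the 25 ps bin width, the count levels, and the 1.0 and 3.5 ns components are assumptions for the simulation; periodic-excitation effects and IRF shifts are ignored.

```python
import numpy as np

DT = 0.025                      # assumed bin width: 25 ps per TCSPC channel (ns)
T = np.arange(1024) * DT        # time axis in ns


def gaussian_irf(t0: float = 2.0, fwhm: float = 0.3) -> np.ndarray:
    """Hypothetical IRF: a Gaussian with the given FWHM (ns), normalized to unit sum."""
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    irf = np.exp(-0.5 * ((T - t0) / sigma) ** 2)
    return irf / irf.sum()


def convolve_decay(irf: np.ndarray, tau: float) -> np.ndarray:
    """Discrete convolution of the IRF with a unit-amplitude exp(-t/tau)."""
    return np.convolve(irf, np.exp(-T / tau))[: T.size]


def fit_biexponential(counts, irf, taus=np.round(np.arange(0.2, 6.0001, 0.05), 3)):
    """Fit a1*exp(-t/tau1) + a2*exp(-t/tau2), convolved with the IRF, plus background.

    Lifetimes are scanned on a grid; for each (tau1, tau2) pair the model is linear
    in (a1, a2, background), solved by weighted linear least squares with
    approximate Poisson weights 1/sqrt(max(counts, 1)).
    """
    w = 1.0 / np.sqrt(np.maximum(counts, 1.0))
    basis = [convolve_decay(irf, tau) for tau in taus]   # computed once per lifetime
    ones = np.ones_like(T)
    best = None
    for i in range(len(taus)):
        for j in range(i + 1, len(taus)):
            A = np.column_stack([basis[i], basis[j], ones])
            p, *_ = np.linalg.lstsq(A * w[:, None], counts * w, rcond=None)
            chi2 = float(np.sum((w * (A @ p - counts)) ** 2))
            if best is None or chi2 < best[0]:
                best = (chi2, taus[i], taus[j], p, A @ p)
    chi2, tau1, tau2, p, fit = best
    dof = counts.size - 5                                  # 2 lifetimes + 3 linear parameters
    residuals = w * (counts - fit)                         # weighted residuals
    return float(tau1), float(tau2), p, chi2 / dof, residuals


# Simulated measurement (not the data of Fig. 3).
irf = gaussian_irf()
expected = 5000 * convolve_decay(irf, 1.0) + 5000 * convolve_decay(irf, 3.5) + 20
counts = np.random.default_rng(1).poisson(expected).astype(float)

tau1, tau2, amps, chi2_red, residuals = fit_biexponential(counts, irf)
print(f"tau1 = {tau1:.2f} ns, tau2 = {tau2:.2f} ns, reduced chi^2 = {chi2_red:.2f}")
```

The grid search is chosen here only for brevity and robustness; a real analysis would refine the lifetimes continuously and report parameter uncertainties.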

Figure 3b shows such a re-convoluted, fitted decay model, plotted in black. The bottom panel of Fig. 3b is the plot of weighted residuals, which shows the deviation of the experimental data points from the fitted theoretical decay curve. A good fit yields residuals randomly distributed around zero, with no obvious trends.

But how do we know which decay model is appropriate to fit to the data? For an unknown sample, the appropriate decay model is also unknown. In the above example of a binary mixture of dyes, where each features a single-exponential decay, the choice of a double-exponential function was easy and logical. Observing anything else would be a surprise, demanding an explanation.

It is common to start with the simplest possible model, the mono-exponential decay. If that model does not fit, exponential terms are added one at a time. However, the model has to be kept as simple as possible. Practically any decay curve can be described very well with five exponential terms, but this does not mean that such a model is correct. A decay curve can be non-exponential, for example, and there are also cases with a continuous distribution of decay times instead of several discrete lifetimes.
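A quick way to see why a mono-exponential model fails for a two-component decay is to fit a straight line to ln I(t), which is exactly the “straight line on a log plot” test. The Python sketch below uses noise-free synthetic curves and ignores the IRF, so it only illustrates the idea; with real TCSPC data, one would instead compare reduced χ² values and residual plots from re-convolution fits with increasing numbers of terms. The lifetimes and equal amplitudes are assumptions for the illustration.

```python
import numpy as np

t = np.linspace(0.0, 10.0, 201)                        # ns; noise-free, IRF ignored
mono = np.exp(-t / 3.5)                                # single exponential
bi = 0.5 * np.exp(-t / 1.0) + 0.5 * np.exp(-t / 3.5)   # two components


def straight_line_misfit(decay: np.ndarray) -> float:
    """Fit ln(I) with a straight line (a mono-exponential model on a log scale)
    and return the largest absolute residual; ~0 means the model is adequate."""
    log_i = np.log(decay)
    slope, intercept = np.polyfit(t, log_i, 1)
    return float(np.max(np.abs(log_i - (slope * t + intercept))))


print(f"mono: {straight_line_misfit(mono):.2e}, bi: {straight_line_misfit(bi):.2f}")
```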

What can cause a multi-exponential, or even more complicated, decay curve for a single fluorophore (probe or label)? One trivial reason is the presence of a fluorescent impurity in the sample. Another reason, very common in biology, is heterogeneity of the emitter’s environment. (As mentioned above, the environment usually has a strong effect on the observed lifetime.)

Yet another possible reason is Förster resonance energy transfer (FRET), a process used in biology to measure molecular distances. Because of the popularity of this method, let us briefly explain the basic effects of FRET on the decay curves involved. FRET is the non-radiative transfer of excitation energy from an excited molecule (the donor) to a second molecule (the acceptor). When the donor’s fluorescence is detected, FRET acts as a quenching process and shortens the donor’s lifetime. Two things must be kept in mind: (1) Not all donor molecules are close enough to an acceptor to undergo FRET; thus, a fraction of donors emits with the original, unquenched lifetime. (2) The rate and efficiency of FRET are strongly distance dependent, so a distribution of donor–acceptor distances produces a distribution of observable donor lifetimes.
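The distance dependence mentioned in point (2) follows the standard Förster relations, which the text does not spell out: the transfer rate is kT = (1/τD)(R0/r)⁶, the quenched donor lifetime is τDA = 1/(1/τD + kT), and the efficiency is E = 1/(1 + (r/R0)⁶) = 1 − τDA/τD. The Python sketch below evaluates them for a single, fixed donor–acceptor distance; the donor lifetime (4 ns) and Förster radius R0 (5 nm) are hypothetical values. Point (1) is captured by the last helper, which assumes only two donor populations (with and without an acceptor).

```python
import math


def fret_efficiency(r_nm: float, r0_nm: float) -> float:
    """Förster efficiency E = 1 / (1 + (r/R0)^6) for a single fixed distance."""
    return 1.0 / (1.0 + (r_nm / r0_nm) ** 6)


def quenched_donor_lifetime(tau_d_ns: float, r_nm: float, r0_nm: float) -> float:
    """Donor lifetime with FRET: 1/tau_DA = 1/tau_D + k_T, with k_T = (1/tau_D)(R0/r)^6."""
    k_t = (1.0 / tau_d_ns) * (r0_nm / r_nm) ** 6
    return 1.0 / (1.0 / tau_d_ns + k_t)


def donor_decay(t_ns: float, f_fret: float, tau_d_ns: float, tau_da_ns: float) -> float:
    """Donor decay when a fraction f_fret of donors has an acceptor (others unquenched)."""
    return (1.0 - f_fret) * math.exp(-t_ns / tau_d_ns) + f_fret * math.exp(-t_ns / tau_da_ns)


# Hypothetical donor: tau_D = 4 ns, Förster radius R0 = 5 nm.
for r in (3.0, 5.0, 7.0):
    tau_da = quenched_donor_lifetime(4.0, r, 5.0)
    print(f"r = {r} nm: E = {fret_efficiency(r, 5.0):.3f}, tau_DA = {tau_da:.2f} ns")
```

A distribution of distances would replace the single τDA term by a distribution of lifetimes, as described in the text.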

When acceptor fluorescence is monitored after selective excitation of the donor, FRET can be regarded as a relayed excitation of the acceptor. The result is a rising component in the acceptor’s fluorescence decay curve, which requires an additional exponential term with a negative amplitude.
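The rising component can be derived from a two-state kinetic scheme: after a short pulse excites only the donor, dD/dt = −D/τDA and dA/dt = kT·D − A/τA, with D(0) = 1 and A(0) = 0. The solution is a difference of two exponentials with equal and opposite amplitudes, so one term necessarily has a negative amplitude. The Python sketch below evaluates it; the lifetimes and transfer rate are hypothetical, and direct acceptor excitation and IRF broadening are neglected.

```python
import math


def acceptor_population(t_ns: float, tau_da_ns: float, tau_a_ns: float, k_t_per_ns: float) -> float:
    """Excited acceptor population after delta-pulse excitation of the donor only.

    Solves dD/dt = -D/tau_DA, dA/dt = k_T*D - A/tau_A with D(0) = 1, A(0) = 0:
    A(t) = k_T / (1/tau_DA - 1/tau_A) * (exp(-t/tau_A) - exp(-t/tau_DA)).
    The two amplitudes are equal and opposite; the negative one produces the rise.
    """
    pre = k_t_per_ns / (1.0 / tau_da_ns - 1.0 / tau_a_ns)
    return pre * (math.exp(-t_ns / tau_a_ns) - math.exp(-t_ns / tau_da_ns))


# Hypothetical values: tau_DA = 2 ns, tau_A = 4 ns, k_T = 0.25 ns^-1.
tau_da, tau_a, k_t = 2.0, 4.0, 0.25
t_peak = math.log(tau_a / tau_da) / (1 / tau_da - 1 / tau_a)   # where dA/dt = 0
print(f"A(0) = {acceptor_population(0.0, tau_da, tau_a, k_t):.3f}")
print(f"peak at {t_peak:.2f} ns, A = {acceptor_population(t_peak, tau_da, tau_a, k_t):.3f}")
```

The acceptor fluorescence is proportional to A(t), so it starts from zero and rises before decaying, which is the negative-amplitude term described above.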

Fluorescence kinetics is a vast topic, and an in-depth introduction is beyond the scope of this chapter. The reader is referred to the classic textbooks by J. R. Lakowicz [11], J. N. Demas [12], and B. Valeur [13]. A shorter introduction can be found, e.g., in [14]. For an overview of photon counting data analysis, see [15,16,17].

1.5 Time-Resolved Fluorescence Anisotropy

Together with wavelength and intensity, the polarization of the exciting and emitted light is a potentially informative parameter of a fluorescence measurement (see Sect. 1.3). When the polarization of the excitation beam is controlled and specified, analysis of the polarization of the emitted fluorescence provides useful information about the fluorophore molecule, its mobility, and its interactions.

Every electronic transition (i.e., photon absorption and emission) has an associated transition moment vector. (This is a simplified, but usually entirely sufficient, approximation.) The different electronic absorption bands in a molecule have transition moment vectors of different magnitudes and orientations. The orientation of these vectors relative to the structure of the fluorophore is fixed and characteristic for each fluorescent molecule.

Let us look at what is special about excitation with linearly polarized light. For simplicity, consider a homogeneous fluid solution at room temperature. Due to rotational Brownian motion, the orientation of the molecules is random and fluctuating. At the instant the exciting light passes through the solution, molecules whose absorption transition moment is close to parallel with the light’s polarization orientation (i.e., the direction of its electric field) are preferentially excited: the absorption probability is proportional to cos² of the angle between the transition moment and the electric field. Linearly polarized excitation thus selects molecules by orientation, a process called photoselection. As a result, the initial population of excited molecules has a well-defined set of alignments (Fig. 4).
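Photoselection alone already limits how polarized the emission can be. The Monte Carlo sketch below (Python with NumPy) draws random transition-moment orientations, weights each by the cos² absorption probability for z-polarized excitation, and computes the anisotropy of the emission detected through ideal parallel (z) and perpendicular (x) polarizers. It assumes parallel absorption and emission moments (β = 0), no rotation during the excited-state lifetime, and ideal detection optics; under these assumptions the result approaches 0.4 rather than 1.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 400_000

# Random transition-moment orientations, uniform on the unit sphere.
mu = rng.normal(size=(n, 3))
mu /= np.linalg.norm(mu, axis=1, keepdims=True)

# Excitation polarized along z: absorption probability proportional to cos^2(angle to z).
weight = mu[:, 2] ** 2

# Emission moment parallel to the absorption moment (beta = 0), no rotation.
# The parallel channel detects the z-component, the perpendicular channel the x-component.
i_par = np.sum(weight * mu[:, 2] ** 2)
i_perp = np.sum(weight * mu[:, 0] ** 2)

r = (i_par - i_perp) / (i_par + 2 * i_perp)
print(f"simulated anisotropy = {r:.3f} (expected for beta = 0: 0.400)")
```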

This initial ensemble of excited, aligned fluorophores then starts to decay to the electronic ground state. Simultaneously, the excited molecules undergo random rotations due to Brownian motion, which randomizes their initial alignment. The time between an excited state’s formation and its emission of a photon is random. (The decay kinetics discussed in Sect. 1.4 describe the statistical behavior of an ensemble, not of individual excited states.) The polarization of an emitted photon depends on the orientation of the fluorophore’s emission transition moment at the instant of emission. The orientation of molecules that decay immediately after excitation is well defined by the absorption process (the molecule had no time to rotate). The more time elapses between excitation and photon emission, the more opportunity molecular rotation has to randomize the orientations of the remaining excited molecules, and this increasingly random orientation is reported by the polarization of the emitted light. In other words, the distribution of polarization orientations in the detected emission changes, becoming increasingly random, during the decay. Simply put, at the beginning of a decay the emission is strongly polarized; toward the end of the decay of the whole ensemble, the detected emission is usually almost depolarized. Measuring the rate and kinetics of this depolarization is the subject of time-resolved fluorescence anisotropy. By analyzing the fluorescence intensity in orthogonal polarization planes as the decay proceeds, we can obtain important new information on the structure and dynamics of the fluorophore molecule, as well as on its environment.

Note that standard fluorescence spectrometers use the so-called L-geometry: the emission is detected in a direction perpendicular to the excitation. The directions of these two beams (excitation and emission) thus define a plane, the detection plane. In standard anisotropy measurements, the excitation beam polarization is oriented vertically relative to the detection plane, as illustrated in Fig. 4; this vertical polarization orientation defines 0°. The perpendicular (90°) polarization orientation is therefore horizontal, i.e., parallel to the detection plane. In fluorescence spectrometer software and manuals, I∥(t) and I⊥(t) are often written as IVV(t) and IVH(t), respectively. In the following text, we use the notation I∥(t) and I⊥(t).

Time-resolved fluorescence anisotropy is defined as [11, 18, 19]:

$$r(t) = \frac{I_{\parallel}(t) - I_{\perp}(t)}{I_{\parallel}(t) + 2\,I_{\perp}(t)}$$

I∥(t) and I⊥(t) are experimental observables: fluorescence intensity decay curves recorded with the emission polarizer set parallel and perpendicular, respectively, to the polarization orientation of the exciting light, which defines 0°.

The parallel and perpendicular polarized decay curves must be recorded under identical conditions (other than the detection polarizer orientation), because our main interest is the small difference represented by the numerator. Note that this difference is divided by a special sum of decays. It can be shown that this sum in the denominator is equivalent to the total fluorescence intensity decay, IT(t), which is independent of molecular motion and polarization effects. (Such a polarization-independent decay curve, directly proportional to IT(t), is of primary interest in standard lifetime measurements. With a time-resolved fluorimeter, the conditions for standard lifetime measurements are usually achieved with an emission polarizer set to the so-called magic angle, 54.7°, relative to the polarization plane of excitation.)

Since both the numerator and denominator have a physical dimension of intensity, r(t) is a dimensionless quantity. Owing to the intensity normalization (denominator), anisotropy is independent of total emission intensity, which is a great advantage. Figure 5 shows an example of input data (I∥(t) and I⊥(t) decay curves), as well as an anisotropy decay function r(t) calculated directly, according to the formula presented above.

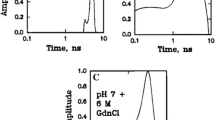

Polarized intensity decay curves and calculated anisotropy decay of Coumarin6 dissolved in ethylene glycol at room temperature. (a) Parallel and perpendicular polarized intensity decay curves, as primary observables. The IRF is also shown for comparison, plotted as a dotted line. (b) Anisotropy decay curve calculated point by point. A single-exponential decay model was fitted to an appropriate portion of r(t)

Figure 5 illustrates a fluorescent system in which the anisotropy decay is clearly visible in the I∥(t) and I⊥(t) decay curves. The high viscosity of ethylene glycol at room temperature greatly slows the rotation of Coumarin6 molecules. The different shapes of I∥(t) and I⊥(t) in Fig. 5a are apparent during the first few nanoseconds, but at later times the two decay curves are identical, entirely dominated by the spontaneous fluorescence decay of Coumarin6, with τDecay = 2.3 ns in this sample. Substituting these decay curves into the definition of r(t) yields the anisotropy decay depicted in Fig. 5b, which displays the kinetics of the diminishing difference between I∥(t) and I⊥(t). Remarkably, a simple single-exponential decay with an apparent decay time of 1.6 ns can be fitted to r(t):

$$r(t) = r_0\,\exp\left(-\frac{t}{\theta}\right)$$

where the initial value of the decay, r0, is called the fundamental anisotropy (of the Coumarin6 molecule in this particular case) and θ is the molecule’s rotational correlation time.
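The point-by-point calculation and the single-exponential fit can be sketched in Python with NumPy. Noise-free polarized decays are generated from the values quoted above for Coumarin6 (τDecay = 2.3 ns, r0 = 0.4, θ = 1.6 ns) using I∥ = IT(1 + 2r)/3 and I⊥ = IT(1 − r)/3, which satisfy I∥ + 2I⊥ = IT. The sketch also checks the magic-angle statement: I∥cos²α + I⊥sin²α is proportional to IT when cos²α = 1/3. It assumes ideal polarizers, no IRF, no noise, and no instrumental polarization bias (a G-factor correction, not discussed in the text, is taken as 1).

```python
import numpy as np

t = np.arange(0.0, 10.0, 0.05)                  # ns
tau_decay, r0, theta = 2.3, 0.4, 1.6            # values quoted for Coumarin6 (Fig. 5)

# Ideal polarized decays built from the total decay I_T(t) and the anisotropy r(t).
i_total = np.exp(-t / tau_decay)
r_true = r0 * np.exp(-t / theta)
i_par = i_total * (1 + 2 * r_true) / 3
i_perp = i_total * (1 - r_true) / 3

# Point-by-point anisotropy, exactly as in the definition of r(t).
r_calc = (i_par - i_perp) / (i_par + 2 * i_perp)

# Emission polarizer at the magic angle: I(alpha) = I_par cos^2 + I_perp sin^2 = I_T / 3.
alpha = np.arccos(np.sqrt(1 / 3))               # about 54.74 degrees
i_magic = i_par * np.cos(alpha) ** 2 + i_perp * np.sin(alpha) ** 2

# Single-exponential fit of r(t) as a straight line through ln r(t).
slope, intercept = np.polyfit(t, np.log(r_calc), 1)
print(f"magic angle = {np.degrees(alpha):.2f} deg, "
      f"fitted r0 = {np.exp(intercept):.3f}, theta = {-1 / slope:.2f} ns")
```

With real, noisy data the logarithm of r(t) becomes unreliable at late times, which is why the fit in Fig. 5b is restricted to an appropriate portion of r(t).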

More viscous solvents or more rigid molecular environments (e.g., a membrane or cellular environment) produce longer rotational correlation times and a slower decay of fluorescence anisotropy. In the above example, r(t) approaches zero as time proceeds. This is expected, because the rotation of dye molecules is not restricted in a fluid solvent, leading to complete randomization of molecular orientation. In biological samples, we sometimes encounter restricted molecular motion leading to hindered rotation. Examples are a rod-like probe molecule embedded in a lipid bilayer and a fluorescent label covalently attached to a protein. In such cases, r(t) may not reach 0 even at long times. A non-zero residual anisotropy, r∞, is a sign of hindered rotation. In this case:

$$r(t) = (r_0 - r_\infty)\,\exp\left(-\frac{t}{\theta}\right) + r_\infty$$

In theory, only molecules that behave as spherical rotors exhibit a single-exponential r(t). However, for small polar molecules dissolved in polar solvents, this model is a remarkably good approximation. A general r(t) decay function can have up to five exponential terms, but resolving (observing) more than two exponentials places extraordinary demands on the quality of the input data I∥(t) and I⊥(t). A typical r(t) of a covalently labeled protein features a fast but hindered decay component corresponding to the “wagging” motion of the label and another, slower one due to local mobility of the labeled protein segment. The “rigid” rotational motion of an entire large protein molecule is typically too slow to create a measurable effect on I∥(t) and I⊥(t).

The fundamental anisotropy, r0, has a characteristic value for each fluorophore. It is determined by the angle, β, between the molecule’s absorption transition moment vector at the exciting wavelength and its emission transition moment vector at the detection wavelength, and it is independent of the environment:

$$r_0 = \frac{3\cos^2\beta - 1}{5}$$

This formula is valid for single-photon excitation, the most common case. It follows that the limiting values of r0 are 0.4 and −0.2, for β = 0° (the molecule’s transition vectors are parallel) and for β = 90° (the transition vectors are perpendicular), respectively. If the solution were frozen, blocking rotation (θ → ∞), no kinetics of r(t) would be observable; only a constant value r(t) = r0 would be measured. In the example depicted in Fig. 5b, the estimated r0 value is very close to 0.4, indicating that the two transition vectors are nearly parallel in the Coumarin6 molecule (λExc = 420–470 nm, λEm = 500–550 nm). This makes the dye a very good probe for anisotropy dynamics studies. An example of a fluorophore with β = 90° is pyrene excited into its S2 state (structured absorption band between 300 and 340 nm) while its normal S1 → S0 fluorescence (λ > 370 nm) is observed. The r(t) of pyrene in a fluid solvent would show a rise (note: not a “decay”) from −0.2 to zero. A hypothetical fluorophore whose absorption and emission transition vectors were oriented at exactly the “magic angle” (β = 54.7°) would show zero anisotropy, i.e., depolarized emission, regardless of its environment.
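The relation between β and r0 is easy to evaluate numerically. This sketch assumes the standard one-photon relation r0 = (3cos²β − 1)/5; the inversion helper `angle_from_r0` and the example value r0 = 0.38 are illustrative only.

```python
import math


def fundamental_anisotropy(beta_deg):
    """r0 = (3 cos^2(beta) - 1) / 5 for one-photon excitation."""
    c = math.cos(math.radians(beta_deg))
    return (3.0 * c * c - 1.0) / 5.0


def angle_from_r0(r0):
    """Invert the relation: angle beta (degrees) for a measured r0 in [-0.2, 0.4]."""
    cos2 = (5.0 * r0 + 1.0) / 3.0
    cos2 = min(max(cos2, 0.0), 1.0)      # guard against rounding just outside [0, 1]
    return math.degrees(math.acos(math.sqrt(cos2)))


MAGIC_ANGLE = math.degrees(math.acos(1.0 / math.sqrt(3.0)))   # about 54.7 degrees

for beta in (0.0, MAGIC_ANGLE, 90.0):
    print(f"beta = {beta:5.1f} deg -> r0 = {fundamental_anisotropy(beta):+.3f}")
print(f"r0 = 0.38 -> beta = {angle_from_r0(0.38):.1f} deg")
```

The steep dependence of r0 near β = 0° means that a measured r0 slightly below 0.4 still corresponds to nearly parallel transition moments.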

The steady-state anisotropy value at a given observation wavelength, r, of a sample of interest in biology is usually easily measured using standard fluorescence spectrometers equipped with polarizers. The steady-state value r and the parameters θ, r0, and τDecay, which are accessible from time-resolved fluorescence measurements, are related to each other. A thorough derivation and explanation of the underlying principles is beyond the scope of this introductory text. Nevertheless, let us discuss two useful relations:

$$r = \frac{r_0}{1 + \tau_{\mathrm{Decay}}/\theta}$$

and

$$\theta = \frac{\eta V}{R T}$$

where η, V, R, and T are the viscosity of the environment, the molar volume of the rotating particle, the universal gas constant, and the thermodynamic temperature, respectively.

The first equation, one form of the Perrin equation, links several observables together. It is derived assuming single-exponential IT(t) and r(t) decays; for more complex decays it is only an approximation. When combined with the second formula, it explains why the measured steady-state anisotropy r increases and approaches r0 when:

(a) The sample is cooled down. The increase of θ is caused not only by the decreased T in the denominator but also by the increased viscosity η at lower T.

(b) The sample is made more viscous, for example, by adding sucrose to the solution.

(c) The size of the rotating fluorophore (or assembly) is increased, for example, by complexation with proteins.

(d) The fluorophore used as a reporter has a very short τDecay. Such a dye can report only on the early stages of rotational diffusion after excitation, when the anisotropy is still high.
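The trends (a) to (d) can be reproduced numerically. The sketch below, assuming single-exponential IT(t) and r(t), checks the Perrin form r = r0/(1 + τDecay/θ) against a direct intensity-weighted average of r(t), and evaluates θ = ηV/(RT) in SI units with V taken as a molar volume. Parameter values are illustrative, loosely based on the Coumarin6 example, and the helper names are hypothetical.

```python
import numpy as np

R_GAS = 8.314462618   # universal gas constant, J mol^-1 K^-1


def rotational_correlation_time(eta, v_molar, temperature):
    """theta = eta * V / (R * T); eta in Pa s, V in m^3/mol, T in K -> seconds."""
    return eta * v_molar / (R_GAS * temperature)


def perrin_anisotropy(r0, tau, theta):
    """Steady-state anisotropy r = r0 / (1 + tau / theta)."""
    return r0 / (1.0 + tau / theta)


def trapezoid(y, x):
    """Trapezoidal rule, written out to avoid NumPy-version differences."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def steady_state_by_integration(r0, tau, theta, n=200_001):
    """Intensity-weighted average of r(t) for single-exponential I_T(t) and r(t)."""
    t = np.linspace(0.0, 50.0 * max(tau, theta), n)
    i_t = np.exp(-t / tau)
    r_t = r0 * np.exp(-t / theta)
    return trapezoid(r_t * i_t, t) / trapezoid(i_t, t)


r0, tau = 0.4, 2.3                      # ns, from the Coumarin6 example
for theta in (0.8, 1.6, 3.2, 6.4):      # e.g., theta doubles when viscosity doubles
    print(f"theta = {theta:4.1f} ns -> r = {perrin_anisotropy(r0, tau, theta):.3f}")
```

Longer θ (cooling, higher viscosity, larger rotating unit) or shorter τDecay both push r toward r0, as stated in (a) to (d).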

It should be obvious that time-resolved anisotropy measurements provide a much more detailed picture of molecular motion than steady-state anisotropy measurements. However, an r(t) function often cannot be obtained in the neat form depicted in Fig. 5b. Anisotropy can be evaluated only where there is sufficient fluorescence intensity; as the I∥(t) and I⊥(t) intensities vanish at long times, the directly calculated r(t) becomes noisy. Another problem arises at the beginning of r(t). Due to the finite duration of the IRF, the beginning of the I∥(t) and I⊥(t) decays is strongly affected by convolution effects, as explained previously in Sect. 1.4. These convolution effects blur the region around t = 0 and, thus, distort the apparent value of r0 and the decay shape of any fast component of r(t). The rotational correlation time of Coumarin6 in ethylene glycol was found to be θ = 1.6 ns, which is much longer than the IRF of the instrument; this allowed us to neglect the convolution effects in r(t). However, Coumarin6 in less viscous solvents, such as ethanol or acetone at room temperature, rotates much more rapidly: θ is then on the order of hundreds of picoseconds. As a rule of thumb, when the rotational correlation time is shorter than the width of the IRF, a simple direct analysis of r(t) (as depicted in Fig. 5b) becomes impossible. Note that neither deconvolution of the IRF nor re-convolution fitting can be applied to a directly calculated r(t), because r(t) is a ratio of two convolved signals rather than itself a convolution of the IRF with a decay function.

The state-of-the-art solution is to perform global (simultaneous) fitting of the I∥(t) and I⊥(t) decays instead of directly calculating r(t) [20]. The shapes of the I∥(t) and I⊥(t) decay curves, the experimental observables illustrated in Fig. 5a, are not independent of each other. They are linked by the same r(t) function (illustrated in Fig. 5b) whose parameters we seek to evaluate. They are further constrained by the pure, polarization-independent fluorescence intensity decay, IT(t), of the probe. It can be shown that:

$$I_\parallel(t) = \frac{1}{3}\, I_T(t)\,\bigl[1 + 2r(t)\bigr], \qquad I_\perp(t) = \frac{1}{3}\, I_T(t)\,\bigl[1 - r(t)\bigr]$$

regardless of the particular mathematical models used to describe r(t) and IT(t) [21, 22].

Global analysis in this context means the following: we have two input data sets, I∥(t) and I⊥(t). Based on prior knowledge about the fluorophore and expected behavior of rotation, we choose appropriate model functions for the anisotropy decay r(t) and the fluorescence intensity decay IT(t). As an example, let us consider the simplest case of spherically symmetric rotor (molecule) with single-exponential fluorescence decay. The adjustable parameters of r(t) are r0 and θ. The adjustable parameters of IT(t) are τDecay and I(0). These four parameters are the same in both I∥(t) and I⊥(t) decay curves. Global analysis means simultaneous fitting of two fairly constrained decay models to I∥(t) and I⊥(t) functions, respectively.

Substituting the simplest possible functional forms of r(t) and IT(t) (single-exponential functions) into the formulas above, one obtains the resulting I∥(t) and I⊥(t) that are both double-exponential decays with identical component lifetimes. In both decay curves, one exponential component has a lifetime of τDecay. The other exponential term has an apparent lifetime of τ = (τDecay ∙ θ)/(τDecay + θ). The relative amplitudes of these exponentials are linked by the value of r0.
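This bookkeeping is easy to verify numerically. The sketch assumes the relations I∥ = IT(1 + 2r)/3 and I⊥ = IT(1 − r)/3 with single-exponential IT(t) and r(t), and compares the direct product form with the explicit double-exponential form that uses the shared lifetimes τDecay and τDecay·θ/(τDecay + θ). Parameter values are hypothetical.

```python
import numpy as np


def polarized_decays(t, i0, tau, r0, theta):
    """I_par and I_perp from I_T(t) = i0*exp(-t/tau) and r(t) = r0*exp(-t/theta)."""
    i_t = i0 * np.exp(-t / tau)
    r_t = r0 * np.exp(-t / theta)
    return i_t * (1.0 + 2.0 * r_t) / 3.0, i_t * (1.0 - r_t) / 3.0


def polarized_decays_biexp(t, i0, tau, r0, theta):
    """The same curves written as two exponentials with shared lifetimes."""
    tau_app = tau * theta / (tau + theta)            # apparent lifetime of the fast term
    e_slow, e_fast = np.exp(-t / tau), np.exp(-t / tau_app)
    i_par = (i0 / 3.0) * (e_slow + 2.0 * r0 * e_fast)   # amplitudes in ratio 1 : 2 r0
    i_perp = (i0 / 3.0) * (e_slow - r0 * e_fast)        # amplitudes in ratio 1 : -r0
    return i_par, i_perp


t = np.linspace(0.0, 20.0, 2001)                      # ns, hypothetical grid
params = dict(i0=1.0, tau=2.3, r0=0.4, theta=1.6)
a_par, a_perp = polarized_decays(t, **params)
b_par, b_perp = polarized_decays_biexp(t, **params)
print(np.allclose(a_par, b_par), np.allclose(a_perp, b_perp))   # True True
```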

This global analysis strategy is very robust, and the formulas are relatively easy to extend to more complicated models of r(t) and IT(t). Its main advantage is that the analysis involves iterative re-convolution with the IRF, so that short decay or correlation times, as well as r0, can be resolved. However, the complexity of the fitted model increases rapidly. A double-exponential r(t) combined with a single-exponential IT(t) leads to a triple-exponential model, while a double-exponential r(t) combined with a double-exponential IT(t) produces a highly complex six-exponential model (two terms from IT(t) alone plus four cross terms).
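A compact sketch of such a global fit on synthetic data. Instead of the iterative nonlinear optimizer normally used, it swaps in a coarse grid search over τDecay and θ; at each grid point the two shared amplitudes, a = I(0)/3 and b = r0·I(0)/3, are obtained by linear least squares from both decays stacked together (a variable-projection shortcut). The Gaussian IRF, the grids, and the unweighted residuals are assumptions; real TCSPC fits normally weight residuals by the counting statistics and include noise.

```python
import numpy as np


def convolved_exp(t, irf, lifetime):
    """IRF convolved with exp(-t / lifetime) on a uniform grid starting at t = 0."""
    dt = t[1] - t[0]
    return np.convolve(irf, np.exp(-t / lifetime))[: t.size] * dt


def design_matrix(t, irf, tau, theta):
    """Columns for the shared amplitudes a = I(0)/3 and b = r0*I(0)/3:
    I_par = a*c1 + 2b*c2 and I_perp = a*c1 - b*c2 (data stacked as [I_par, I_perp])."""
    c1 = convolved_exp(t, irf, tau)
    c2 = convolved_exp(t, irf, tau * theta / (tau + theta))
    return np.column_stack([np.concatenate([c1, c1]),
                            np.concatenate([2.0 * c2, -c2])])


def global_fit(t, irf, i_par, i_perp, taus, thetas):
    """Simultaneous fit of both decays: grid over (tau, theta), linear least squares
    for (a, b) at each grid point; returns (tau, theta, r0) with the lowest residual."""
    y = np.concatenate([i_par, i_perp])
    best = (np.inf, None, None, None)
    for tau in taus:
        for theta in thetas:
            design = design_matrix(t, irf, tau, theta)
            coef, *_ = np.linalg.lstsq(design, y, rcond=None)
            chi2 = float(np.sum((design @ coef - y) ** 2))
            if chi2 < best[0]:
                best = (chi2, tau, theta, coef[1] / coef[0])   # r0 = b / a
    return best[1:]


# Synthetic, noise-free test data (all settings hypothetical).
t = np.arange(0.0, 10.0, 0.02)                         # ns, 20 ps bins
irf = np.exp(-0.5 * ((t - 1.0) / 0.1) ** 2)            # Gaussian IRF centred at 1 ns
irf /= irf.sum() * (t[1] - t[0])                       # unit area
i0, true_r0 = 3000.0, 0.4
y = design_matrix(t, irf, 2.3, 1.6) @ np.array([i0 / 3.0, true_r0 * i0 / 3.0])
i_par, i_perp = y[: t.size], y[t.size:]

tau_fit, theta_fit, r0_fit = global_fit(t, irf, i_par, i_perp,
                                        taus=np.arange(2.0, 2.65, 0.05),
                                        thetas=np.arange(1.0, 2.25, 0.05))
print(f"tau = {tau_fit:.2f} ns, theta = {theta_fit:.2f} ns, r0 = {r0_fit:.3f}")
```

Because r0 enters only through the ratio b/a, it is recovered without ever forming the ratio function r(t), which is what makes re-convolution possible here.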

Technical remarks:

- For the sake of simplicity, the discussion above assumed equal detection sensitivity for all emission polarizations. In a typical fluorescence spectrometer, however, the detection efficiency may vary as the detection polarization plane changes. This has to be taken into account: the so-called G-factor is a correction factor that expresses the ratio of the detection sensitivities for the parallel and perpendicular polarized signal components. Incorporating this correction into the definition formula of anisotropy is straightforward; it simply involves multiplying I⊥(t) by the experimentally determined G-factor:

  $$r(t) = \frac{I_\parallel(t) - G\, I_\perp(t)}{I_\parallel(t) + 2G\, I_\perp(t)}$$

  where G is the detection sensitivity for I∥(t) divided by the sensitivity for I⊥(t).

- In spectrometers using L-geometry observation, this sensitivity ratio is relatively easy to determine. It is enough to rotate the excitation polarization plane by 90°, that is, to horizontal. This leads to a photo-selection that is isotropic when viewed from the detector’s side; in other words, the emitted fluorescence shows no anisotropy. Under these conditions, any change of the detected signal intensity when the emission polarizer is rotated from the position corresponding to I∥ to that corresponding to I⊥ directly reflects the ratio of detection sensitivities for these two principal polarization planes.

- Performing quantitative anisotropy measurements with microscopes is considerably more complicated. The detection geometry is co-linear: the microscope objective both delivers the excitation light and collects the emission. This makes it impossible to obtain the isotropic photo-selection needed for G-factor determination by rotating the polarization plane of the excitation. Another problem is that the excitation beam enters the focal region (and the emission is collected from it) as a light cone spanning considerable angles, which mixes different polarization components. The problem is more serious for high-magnification objectives with high numerical apertures [23,24,25].
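A minimal sketch of the G-factor correction for an L-geometry spectrometer, assuming vertical excitation for the anisotropy measurement (I∥ = I_VV, I⊥ = I_VH) and horizontal excitation for the calibration, so that G = I_HV/I_HH. The sensitivities and intensities are hypothetical numbers chosen so that the true anisotropy is 0.15.

```python
import numpy as np


def g_factor(i_hv, i_hh):
    """G = I_HV / I_HH measured with horizontally polarized excitation (L-geometry):
    sensitivity for vertically (parallel) over horizontally (perpendicular) polarized emission."""
    return np.asarray(i_hv, dtype=float) / np.asarray(i_hh, dtype=float)


def corrected_anisotropy(i_vv, i_vh, g):
    """r = (I_VV - G*I_VH) / (I_VV + 2*G*I_VH); works for single values or decay arrays."""
    i_vv = np.asarray(i_vv, dtype=float)
    i_vh = np.asarray(i_vh, dtype=float)
    return (i_vv - g * i_vh) / (i_vv + 2.0 * g * i_vh)


# Hypothetical detector that is only 80 % as sensitive to horizontally polarized light.
s_v, s_h = 1.0, 0.8
i_vv, i_vh = 1.30 * s_v, 0.85 * s_h     # vertical excitation; true I_par = 1.30, I_perp = 0.85
i_hv, i_hh = 1.00 * s_v, 1.00 * s_h     # horizontal excitation: equal true intensities
g = g_factor(i_hv, i_hh)
print(f"G = {g:.3f}, uncorrected r = {corrected_anisotropy(i_vv, i_vh, 1.0):.3f}, "
      f"corrected r = {corrected_anisotropy(i_vv, i_vh, g):.3f}")
```

Even a modest 20 % sensitivity difference shifts the apparent anisotropy from 0.15 to about 0.23 here, which is why G must be determined for each instrument configuration.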

2 Time-Resolved Spectroscopy and Microscopy

2.1 Time-Resolved Techniques and Their Development

Time-resolved fluorescence spectra and fluorescence kinetics parameters provide important, detailed information on a fluorescing molecule and its interactions with its environment. A variety of technical challenges arise in fluorescence measurements with high (ns–ps–fs) time resolution. For many years, the achievable time resolution was mostly limited by the properties of photodetectors: the enormous development of pulsed lasers in the last quarter of the twentieth century made short excitation pulses available before sufficiently fast photodetectors existed. Today, suitable pulsed lasers and/or LEDs exist for practically any fluorescence spectroscopy need, and improvements have focused mainly on detection with high time resolution, high dynamic range, and high measurement speed. The following list briefly summarizes the main development stages of time-resolved fluorescence instrumentation.

Direct Measurement

The fluorescence decay is recorded directly with a photomultiplier tube (PMT) and an oscilloscope. Depending on the instrumentation used, the time resolution was typically in the ns region. Such direct measurement was simple but had a low dynamic range, and the cost of the instrumentation was mostly determined by the price of the oscilloscope. Today, fast digitizer boards, 10 GHz oscilloscopes, and fast photodetectors together with data analysis software provide 10 ps resolution. This technique is therefore used in research laboratories where the cost of a fast oscilloscope and the complexity of the data analysis are not a problem.

Indirect Measurement with a Box-Car Integrator

Faster electronic switching circuits made it possible to cut off (gate) the fluorescence decay signal at any chosen time. By integrating the remainder of the decay signal after the switching moment, and repeating this step by step through the whole fluorescence decay period, one can reconstruct the decay profile. Compact instruments working on this principle had ns resolution and a slightly better dynamic range than the direct measurement described above. This technique is rarely used today, except for special purposes.

Time-Correlated Single-Photon Counting (TCSPC)

If, after each excitation pulse, at most one photon from the whole fluorescence response is detected, time-to-amplitude conversion makes it possible to build a histogram of excitation–emission time differences that corresponds to the fluorescence decay. The switching electronics and time-to-amplitude conversion units available in the 1970s allowed such a measurement system to be built with ns resolution, and it proved to have many advantages, among them a high dynamic range and high accuracy. The implementation of TCSPC detection thus represented a breakthrough in time-resolved fluorescence spectroscopy. Today, after many improvements in switching and time-tagging electronics and the introduction of ultrafast solid-state detectors, modern versions of TCSPC with ps resolution are top-class techniques for accurate fluorescence decay measurement. For that reason, Sect. 2.2.1, Time-Resolved Photon Counting, is devoted entirely to the description of TCSPC and some new variants of photon counting-based detection.

Phase and Amplitude Modulation Technique

This technique uses excitation light that is amplitude-modulated at various frequencies and measures the phase shift (and demodulation) of the resulting modulated fluorescence emission intensity. Time resolution down to ps can be achieved, but the technique does not record the decay curve directly; the character (exponential/non-exponential) of the fluorescence decay can only be inferred from measurements at several modulation frequencies. Phase/amplitude modulation methods are now commercially available mainly in set-ups for FLIM microscopy (see below), or they are used in a wide-field camera detection format. The key advantage of frequency-domain FLIM is its fast lifetime image acquisition, making it suitable for dynamic applications such as live-cell research. The entire field of view is excited semi-continuously (using relatively broad excitation pulses) and read out simultaneously. Hence, frequency-domain lifetime imaging can be nearly instantaneous.
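For a single-exponential decay, the phase shift φ and the modulation ratio m measured at angular modulation frequency ω give the same lifetime through τφ = tan φ/ω and τm = √(1/m² − 1)/ω; for a multi-exponential decay the two apparent lifetimes differ (τφ < τm), which is how decay heterogeneity shows up in frequency-domain data. The sketch below uses the standard sine/cosine-transform expressions for a sum of exponentials; the 80 MHz frequency and the lifetimes are hypothetical.

```python
import math


def phase_and_modulation(omega, amplitudes, lifetimes):
    """Phase shift (rad) and modulation ratio of the emission for a decay
    sum_i a_i * exp(-t / tau_i) under sinusoidal excitation at angular frequency omega."""
    pairs = list(zip(amplitudes, lifetimes))
    norm = sum(a * tau for a, tau in pairs)
    n = sum(a * omega * tau**2 / (1.0 + (omega * tau) ** 2) for a, tau in pairs) / norm
    d = sum(a * tau / (1.0 + (omega * tau) ** 2) for a, tau in pairs) / norm
    return math.atan2(n, d), math.hypot(n, d)


def apparent_lifetimes(omega, phase, modulation):
    """Phase lifetime tan(phi)/omega and modulation lifetime sqrt(1/m^2 - 1)/omega."""
    return math.tan(phase) / omega, math.sqrt(1.0 / modulation**2 - 1.0) / omega


omega = 2.0 * math.pi * 0.080   # rad/ns, i.e., a hypothetical 80 MHz modulation
for amps, taus in (([1.0], [2.3]), ([0.5, 0.5], [0.5, 4.0])):
    phi, m = phase_and_modulation(omega, amps, taus)
    tau_phi, tau_m = apparent_lifetimes(omega, phi, m)
    print(f"lifetimes {taus} ns: tau_phase = {tau_phi:.2f} ns, tau_mod = {tau_m:.2f} ns")
```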

Streak-Camera Detection

The most advanced commercial streak cameras have fs time resolution and can operate in a single-shot regime. This type of streak camera is extremely expensive and provides only a limited dynamic range. Currently, such cameras are used in femtosecond and attosecond plasma research and in XUV and X-ray imaging studies.

Descriptions of the techniques and instrumentation used in time-resolved fluorescence/spectroscopic studies can be found in many fluorescence-related textbooks, e.g., [11,12,13]. To follow the development of top-class techniques through each historical stage, see, e.g., [26], as well as the relevant chapters in each of the 19 volumes of the Springer Chemical Physics series Ultrafast Phenomena, published between 1978 and 2014.

2.2 State-of-the-Art Techniques for ps–fs Time Resolution

2.2.1 Time-Resolved Photon Counting

Arguably, this is the most widely used technique today. A fundamental advantage of the method is that it records the true shape of the decay curve (time evolution of emission intensity) without any assumption about the particular decay model. Another advantage is its inherent sensitivity. Counting and timing photons, one by one, means working at the quantum limit of light detection.

The basic idea is as follows. A fluorescence decay initiated by a short excitation pulse is a very fast process, typically completed within a few tens of nanoseconds. Moreover, such a single decay is very weak in terms of signal intensity, because there are practical limits on the maximum excitation pulse energy. (Today, the limitation is typically not the available power of suitable sources but comes from the sample: bleaching, phototoxicity, local heating, etc., all of which must be avoided.) The solution is to excite the sample repetitively, many times, with low-energy pulses. Instead of attempting to obtain a decay curve (shape) from a single excitation pulse, at most one fluorescence photon is detected after each excitation pulse. High-performance timing electronics determine the time elapsed between excitation of the sample and detection of an emitted photon. An approximation of the true decay curve is obtained by constructing a histogram of these elapsed times (measured on ps–ns or longer time ranges) from many excitation–emission cycles. If the excitation pulse rate is high enough (kHz in the past, tens of MHz today), a reasonable approximation of the decay curve (i.e., the histogram) with a sufficient signal-to-background ratio can be acquired relatively quickly.

The general term “time-resolved photon counting” includes the well-known methods of time-correlated single-photon counting (TCSPC) and multi-channel scaling (MCS). The former was the breakthrough method of the twentieth century for reaching the time resolution necessary for fluorescence studies. MCS is another traditional pulse-counting method, typically used on microsecond and longer time scales. Both are popular and still widely used today, and thus deserve a brief description here.

One can imagine the simplest, generic TCSPC data acquisition as a repeated stopwatch measurement. An excitation pulse starts the watch and the first detected photon stops it. The watch has a finite maximum time range divided into, say, thousands of time bins, i.e., very short discrete time intervals. Thus, the digitized readout of a time-delay measurement is an integer ranging from 0 up to the number of time bins. Let us have a linear array of memory cells corresponding to these possible integer readout values, all empty at the beginning. When the stopwatch returns a digital value, the content of the corresponding memory cell is incremented by one. Repeating this process many times, one finally obtains a histogram: the distribution of time intervals between the excitation pulse and the subsequent photon arrival. As explained in the classic book [27], this distribution reproduces the shape of the decay curve we are after, provided the detected photons are really “rare events”: so rare that in the stopwatch measurement described above we encounter at most a single photon within the whole fluorescence response to one excitation pulse, and certainly no “later” photons arriving in the same excitation–emission cycle. This requirement is easy to understand if one considers the unavoidable time needed to process such a precise timing measurement, called the dead time of the electronics, which in the past lasted from tens of nanoseconds up to microseconds. If more photons arrived after the start of the stopwatch, the first (earliest) one would always be timed, counted, and histogrammed, but the later ones would always be lost. Such a bias toward the first detected photon would systematically distort the recorded histogram: early photons arriving at the detector would be over-represented.

This is traditionally called the “pile-up effect” [27,28,29], and it can (and must) be avoided by limiting the photon counting rate. Considering the Poisson statistics relevant for the detection of rare events, it can be shown [27] that if the average photon counting rate is just a few percent of the excitation pulse rate, then the probability of encountering more than one photon in a single excitation–emission (start–stop) cycle is negligible. In simple terms, the fluorescence signal must be so weak that, on average, dozens or even hundreds of excitation pulses have to be sent to the sample to obtain a single detected photon. This may sound like a very inefficient approach, but in practice it is not a real limitation: usually the low quantum yield of the sample, the low overall detection efficiency, or the limited available (or allowable) excitation intensity ensures these conditions anyway.
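The stopwatch picture and the pile-up bias can be simulated in a few lines. This sketch assumes Poisson-distributed photon numbers per pulse, a mono-exponential decay, and a dead time long enough that only the first photon of each cycle is timed; it uses the fact that the earliest of n independent exponential delays is itself exponential with lifetime τ/n. The mean delay serves as a crude lifetime estimate, and all parameter values are hypothetical.

```python
import numpy as np


def first_photon_delays(mean_photons_per_pulse, tau, n_pulses, rng):
    """Classic start-stop TCSPC: only the first photon after each pulse is timed.

    If n photons with independent exponential(tau) delays arrive, the earliest one is
    exponential(tau / n); later photons are assumed lost in the dead time.
    """
    n = rng.poisson(mean_photons_per_pulse, size=n_pulses)
    n = n[n > 0]                      # cycles without a detected photon never stop the clock
    return rng.exponential(tau / n)   # one timed delay per productive cycle


def multi_photon_fraction(mu):
    """Fraction of productive cycles that actually contained two or more photons."""
    p0 = np.exp(-mu)
    return (1.0 - p0 - mu * p0) / (1.0 - p0)


rng = np.random.default_rng(seed=1)
tau = 2.3                                   # ns, hypothetical lifetime
edges = np.linspace(0.0, 20.0, 1001)        # 1000 time bins over a 20 ns range
for mu in (0.02, 2.0):                      # about 2 % vs. 86 % of pulses yield a photon
    delays = first_photon_delays(mu, tau, 1_000_000, rng)
    histogram, _ = np.histogram(delays, bins=edges)   # the "memory cell" increments
    print(f"mu = {mu}: mean delay {delays.mean():.2f} ns (true {tau} ns), "
          f"multi-photon cycles {multi_photon_fraction(mu):.1%}")
```

At about 2 % detection probability per pulse, only about 1 % of the recorded cycles contain a second photon and the mean delay stays close to τ; at about two photons per pulse the histogram is strongly biased toward early times.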

The TCSPC process described above was the standard until approximately the 1980s, when common excitation sources had relatively low pulse repetition rates. It is important to realize that starting the stopwatch with every excitation pulse, knowing that most pulses will not lead to photon detection, is not economical. The logical solution is to abandon this nicely causal measurement sequence and reverse the roles of the start and stop signals: start the stopwatch with the inherently rare detected photon and stop it with the subsequent laser pulse, which is guaranteed to always be there. The recorded (histogrammed) time intervals convey the same information; only the histogram appears reversed in time. Reversing the time axis back to the natural one is trivial arithmetic, assuming that the time interval between excitation pulses is constant, which is normally the case. In contemporary TCSPC electronics, the start pulses come from the photon detectors, and the stop pulses are derived from the pulsed excitation source (the so-called SYNC signal). The common term for this modern scenario is therefore “reversed start–stop mode.”
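The reversal arithmetic is a one-liner. This sketch assumes a constant SYNC period (a hypothetical 40 MHz repetition rate, i.e., 25 ns) and that each photon is stopped by the next excitation pulse; the function name is illustrative.

```python
import numpy as np


def reversed_to_forward(reversed_intervals, sync_period):
    """Reversed start-stop: the photon starts the clock and the next SYNC pulse stops it.
    For a constant pulse period, delay after excitation = period - measured interval."""
    return sync_period - np.asarray(reversed_intervals, dtype=float)


rng = np.random.default_rng(seed=3)
period = 25.0                                   # ns (hypothetical 40 MHz pulse train)
forward = rng.exponential(2.3, size=10_000)     # true delays after the exciting pulse
forward = forward[forward < period]             # a photon must precede the next pulse
measured = period - forward                     # what the reversed electronics record
recovered = reversed_to_forward(measured, period)
print(np.allclose(recovered, forward))          # True
```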

In contrast to the classic TCSPC described above, multi-channel scaling (MCS) is designed to detect more photons per excitation cycle. Consequently, more than one photon can be sorted into the same time bin in the same measurement cycle, and the simple stopwatch analogy no longer applies. In MCS, an excitation pulse starts a so-called sweep, during which detected photons are counted and sorted into successive time bins. Imagine it as a fast counting process in a fast-moving time window that lasts only as long as the width of one time bin. The number of photons counted during that window is stored in the corresponding memory cell. The counter is then reset to zero as the window moves on and continues counting for the next time bin, and so on, until the end of the sweep. The timing resolution of MCS cannot compete with that of TCSPC, but MCS is more efficient in terms of photons collected per excitation pulse.
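For comparison, a minimal MCS sketch: in each sweep, every detected photon is counted in its time bin, so several photons per sweep (and per bin) are kept. The Poisson photon numbers, the microsecond-scale lifetime, and the bin settings are hypothetical.

```python
import numpy as np


def mcs_sweeps(n_sweeps, mean_photons_per_sweep, tau, bin_width, n_bins, rng):
    """Multi-channel scaling: in every sweep, each photon is counted in its time bin,
    so several photons per sweep (even per bin) are all recorded."""
    counts = np.zeros(n_bins, dtype=np.int64)
    generated = 0
    for _ in range(n_sweeps):
        n = rng.poisson(mean_photons_per_sweep)
        bins = (rng.exponential(tau, size=n) // bin_width).astype(np.int64)
        bins = bins[bins < n_bins]                  # photons after the sweep ends are missed
        counts += np.bincount(bins, minlength=n_bins)
        generated += n
    return counts, generated


rng = np.random.default_rng(seed=4)
# Hypothetical microsecond-scale emitter: tau = 2 us, 100 ns bins, 20 us sweep.
counts, generated = mcs_sweeps(2000, 50.0, 2.0, 0.1, 200, rng)
print(f"{counts.sum()} of {generated} photons counted, {counts.sum() / 2000:.1f} per sweep")
```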

As TCSPC instrumentation evolved, the electrical time resolution improved (down to a few ps) and the size of the necessary electronics shrank. Contemporary instruments are often computer boards, whereas they were as large as a cabinet around 1980, when TCSPC became widespread in photophysics. There is a growing need for multi-channel devices, that is, for timing electronics that can process signals from multiple detectors simultaneously. Multiplying the number of TCSPC devices was (and still is) very expensive, and their mutual synchronization is far from trivial. A viable solution was a so-called router unit that accepted inputs from several detectors. Their pulses were routed to a single TCSPC timing unit that processed them one by one as they came. Using the routing information, the results were sorted into several histograms, one for each detector. The basic principle of TCSPC limits the maximum signal counting rate anyway, and this kind of statistical time-sharing of the TCSPC processing unit works very efficiently as long as coincident detector signals (pulses) remain rare [30, 31] (Fig. 6).

Simplified block diagram of classical TCSPC electronics (top) and its extension with a router (bottom). Top: Start and stop signals are detector pulses and synchronization pulses from a pulsed light source, respectively. TAC is time-to-amplitude converter. It is based on a fast voltage ramp, started and stopped by corresponding input signals. Its output is a voltage value (amplitude). ADC is analog (amplitude) to digital converter, which converts the TAC output to a digital number. MCA, multi-channel analyzer, sorts these digital values into a histogram. Bottom: A router unit is able to collect signals from multiple detectors. Whenever an input pulse is received from any of the detectors, this signal is forwarded to the TAC as a start signal and the event is processed as usual. However, the router also generates routing information that is fed to the MCA. Based on this routing information, for each detector a separate histogram is collected
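The routing scheme of Fig. 6 amounts to indexing a two-dimensional histogram by (detector, time bin). This sketch, with hypothetical detection probabilities and bin settings, simulates four detectors sharing one timing unit and discards cycles in which more than one detector fired, since such coincidences cannot be routed.

```python
import numpy as np


def route(detector_ids, delay_bins, n_detectors, n_bins):
    """Sort (routing information, delay bin) pairs into one histogram per detector."""
    hist = np.zeros((n_detectors, n_bins), dtype=np.int64)
    np.add.at(hist, (detector_ids, delay_bins), 1)
    return hist


rng = np.random.default_rng(seed=5)
n_cycles, n_detectors, n_bins, bin_width = 200_000, 4, 256, 0.05   # hypothetical (ns)
fired = rng.random((n_cycles, n_detectors)) < 0.01   # 1 % per detector and cycle
n_fired = fired.sum(axis=1)
single = n_fired == 1                     # coincident cycles cannot be routed: discarded
detector_ids = np.argmax(fired[single], axis=1)
delay_bins = (rng.exponential(2.3, size=detector_ids.size) // bin_width).astype(np.int64)
keep = delay_bins < n_bins                # delays beyond the time range are dropped
hist = route(detector_ids[keep], delay_bins[keep], n_detectors, n_bins)
print(f"discarded coincident cycles: {(n_fired > 1).sum()}, "
      f"events per detector: {hist.sum(axis=1)}")
```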

However, the demand for truly parallel processing of multi-channel detection kept increasing. Capturing coincident photons (or the lack of their coincidence) is a basic requirement for studying phenomena such as photon antibunching, bunching, and cross-correlation in fluorescence correlation spectroscopy. Using a router unit with single-channel TCSPC electronics does not allow the detection of photon coincidences: such events have to be discarded, because they cannot be reliably routed. Another disadvantage of the complex timing process involving the cascade of TAC, ADC, and MCA units is the relatively long dead time of this kind of instrument. Even in fairly modern instruments, the dead time is on the order of hundreds of nanoseconds, seriously limiting the maximum total photon detection rate.

The latest generation of TCSPC electronics uses time-to-digital converters. The jobs of the TAC (time-to-amplitude converter) and the ADC (analog-amplitude-to-digital converter) are performed by a single integrated circuit, the TDC (time-to-digital converter). TDCs are not only much faster; they also cost significantly less than high-performance TAC- and ADC-based solutions [32, 33]. The role of the MCA, i.e., sorting the measured start–stop times into histograms, can today be performed by very fast FPGAs (field-programmable gate arrays) [34]. This new technology made it possible to develop devices capable of truly parallel, simultaneous timing on several input channels [35,36,37].

Yet another important development step was the inclusion of a global clock in TCSPC electronics, measuring the absolute time of photon detection (counted from the start of data acquisition) on a macroscopic time scale [29, 32,33,34]. This is different from the fundamental TCSPC process of picosecond-precise time-delay measurement (laser pulse to first photon). When the goal is to obtain a simple TCSPC histogram, i.e., the shape of a decay curve, only the picosecond delays of the photons measured from the laser pulses matter. Histogramming is a kind of online data reduction scheme in which the order of photon arrival is irrelevant and is therefore not preserved. However, there are many experimental scenarios (e.g., single-molecule detection, fluorescence correlation spectroscopy (FCS), and, as we shall see later, fluorescence lifetime imaging (FLIM)) where the global detection time of a photon is as important as its ps delay.

Thus, modern TCSPC solutions became ultrafast time taggers [36, 37]. Instead of just histogramming the delay times of special event pairs (laser pulse to photon detection), they attach a time tag to each individual detected event, so the full information content of the measurement is preserved (Fig. 7).

Detector (photon) pulses are treated in basically the same way as synchronization pulses from the laser and any other timing-relevant signals received from other devices. All these inputs generate “events” that are precisely time-tagged. The overall event rate may be very high; therefore, the time tags enter a high-speed first-in-first-out (FIFO) event buffer that can absorb large momentary event rates. The recorded events are pre-processed in high-speed programmable hardware. Finally, they are output in digital form, typically as an ordered list of events containing the kind of signal (identification number of the input channel) and its precise time tag.

The most generic data output is then a list of various kinds of events, each with its time-tag. Each entry of the list contains the identification number of the input channel where the event was detected. The pulsed laser’s synchronization signal is treated as one of the possible events. Certain input channels can be dedicated to special events, e.g., frame, line, or pixel markers in the case of image scanning.

Classical TCSPC decay curve measurement can then be regarded as a special, very simple case of processing the available event time tags: the time tag of a SYNC event (which marks the moment of excitation by a laser pulse) is subtracted from the time tag of a detected fluorescence photon. The result is equivalent to the photon delay time measured, e.g., by old-style TAC-ADC-based electronics. These delays are histogrammed in post-processing of the original list of events. The advantage of obtaining a decay curve in this way is that the overall measurement time can be arbitrarily divided into shorter periods, and a dedicated histogram can be calculated for each such period. This is a very important feature with many applications.
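The subtraction and per-period histogramming can be sketched on a list of (channel, time tag) events. The channel numbers, the picosecond units, and the tiny hand-made event list are hypothetical; real time-tagger output formats are vendor-specific and are not reproduced here.

```python
import numpy as np

SYNC, PHOTON = 0, 1   # hypothetical input-channel numbers


def delays_from_events(channels, time_tags):
    """Delay of each photon after the most recent SYNC event.

    Events must be sorted by time tag; photons recorded before the first SYNC are skipped.
    Returns (photon time tags, photon delays) in the units of the time tags.
    """
    channels = np.asarray(channels)
    time_tags = np.asarray(time_tags)
    idx = np.arange(channels.size)
    # index of the latest SYNC at or before each event (-1 if there is none yet)
    last_sync = np.maximum.accumulate(np.where(channels == SYNC, idx, -1))
    is_photon = (channels == PHOTON) & (last_sync >= 0)
    delays = time_tags[is_photon] - time_tags[last_sync[is_photon]]
    return time_tags[is_photon], delays


def histograms_per_period(photon_tags, delays, period, bin_edges):
    """One decay histogram per macroscopic time period (assumes at least one photon)."""
    which = photon_tags // period
    return [np.histogram(delays[which == k], bins=bin_edges)[0]
            for k in range(int(which.max()) + 1)]


# Tiny hand-made event list; time tags in ps, 25 ns between SYNC pulses.
channels = [SYNC, PHOTON, SYNC, PHOTON, PHOTON, SYNC]
tags = [0, 3_000, 25_000, 26_500, 29_000, 50_000]
photon_tags, delays = delays_from_events(channels, tags)
print(delays)   # [3000 1500 4000]
hists = histograms_per_period(photon_tags, delays, 20_000, np.arange(0, 25_001, 500))
```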

Consider, for instance, a single molecule undergoing reversible conformational changes on a millisecond time scale, observed with a confocal microscope. If the conformation affects its fluorescence lifetime, the lifetime fluctuations can be recorded and studied in detail. Both intensity traces and lifetime traces can be calculated, revealing the kinetics of those changes.
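As a sketch of such traces, the code below bins photons by their macroscopic time tags and uses the mean photon delay in each window as a lifetime estimate. That estimator is an assumption valid only for a mono-exponential decay with negligible IRF width and background; the switching molecule (1.5 ns and 3.5 ns states alternating every 10 ms) is synthetic.

```python
import numpy as np


def intensity_and_lifetime_trace(photon_times, delays, window):
    """Photons per time window (intensity trace) and mean delay per window (lifetime trace).
    Empty windows give NaN in the lifetime trace."""
    which = (np.asarray(photon_times) // window).astype(np.int64)
    counts = np.bincount(which)
    sums = np.bincount(which, weights=delays)
    with np.errstate(invalid="ignore", divide="ignore"):
        return counts, sums / counts


# Synthetic molecule switching every 10 ms between 1.5 ns and 3.5 ns lifetimes (hypothetical).
rng = np.random.default_rng(seed=6)
photon_times = rng.uniform(0.0, 100.0, size=20_000)          # macroscopic time tags, ms
state = (photon_times // 10.0).astype(np.int64) % 2
delays = rng.exponential(np.where(state == 0, 1.5, 3.5))     # ps-resolved delays, in ns
counts, lifetimes = intensity_and_lifetime_trace(photon_times, delays, 10.0)
print(np.round(lifetimes, 2))
```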

The same basic idea is involved in fluorescence lifetime imaging microscopy (FLIM). A FLIM image is usually presented as a composite image consisting of an intensity (grayscale) image overlaid with a false-color layer, where the colors are mapped to different lifetime values. The most common way of obtaining a FLIM image with time-resolved photon counting involves scanning. (There are several scanning modalities: e.g., the sample or the microscope objective is moved with piezo-actuators, or the laser beam is deflected, as in laser scanning confocal microscopes.) The goal is to obtain a lifetime value (and thus a color) for every pixel of the image. This is not complicated at all if the motion of the scanner can be mapped in time. Recording, time-tagging, and storing special marker events, namely line-start and line-stop markers, in the list of “events” makes it possible to reconstruct the FLIM image in post-processing. It is enough to realize that the time tag of a photon identifies its spatial origin, since we know the moments at which every line starts and stops during scanning. Assuming regular scanner motion, every photon in the event list can be unambiguously mapped to an image pixel by comparing its time tag with those of the line markers. Once the photons are sorted into pixels, histogramming their delay times measured from the SYNC pulses is straightforward. Analysis of these histograms, one per pixel, yields the lifetime value that is then mapped to a color.
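The marker-based pixel assignment can be sketched as follows, assuming constant scanner speed along each line and sorted line-start/line-stop marker time tags; photons recorded between a line stop and the next line start (fly-back) are rejected. Function names and the toy numbers are hypothetical.

```python
import numpy as np


def photons_to_pixels(photon_tags, line_starts, line_stops, n_pixels):
    """Assign each photon to (line, pixel) from line-start/stop marker time tags,
    assuming constant scanner speed; photons outside any line get (-1, -1)."""
    photon_tags = np.asarray(photon_tags, dtype=float)
    line_starts = np.asarray(line_starts, dtype=float)
    line_stops = np.asarray(line_stops, dtype=float)
    line = np.searchsorted(line_starts, photon_tags, side="right") - 1   # latest line start
    safe = np.clip(line, 0, None)
    valid = (line >= 0) & (photon_tags < line_stops[safe])
    frac = (photon_tags - line_starts[safe]) / (line_stops[safe] - line_starts[safe])
    pixel = np.floor(frac * n_pixels).astype(np.int64)
    return np.where(valid, line, -1), np.where(valid, pixel, -1)


def pixel_histograms(lines, pixels, delays, n_lines, n_pixels, bin_edges):
    """Per-pixel decay histograms (a FLIM data cube) for photons with valid pixels."""
    ok = lines >= 0
    b = np.searchsorted(bin_edges, np.asarray(delays, dtype=float)[ok], side="right") - 1
    inside = (b >= 0) & (b < len(bin_edges) - 1)
    cube = np.zeros((n_lines, n_pixels, len(bin_edges) - 1), dtype=np.int64)
    np.add.at(cube, (lines[ok][inside], pixels[ok][inside], b[inside]), 1)
    return cube


# Two scan lines of 4 pixels each; marker and photon time tags in arbitrary units.
lines, pixels = photons_to_pixels([10.0, 85.0, 150.0, -5.0], [0.0, 100.0], [80.0, 180.0], 4)
print(lines, pixels)
cube = pixel_histograms(lines, pixels, [1.2, 0.7, 2.9, 0.1], 2, 4, np.linspace(0.0, 10.0, 51))
```

Fitting or otherwise analyzing each `cube[line, pixel]` histogram then yields the per-pixel lifetime that is mapped to a color.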

The FLIM concept described above is the most common these days and is typically implemented using a confocal microscope. However, there are alternative devices that achieve a time-domain photon counting-based FLIM image without scanning, in wide-field observation mode. These are basically multi-pixel photon counting detectors with integrated timing electronics [38,39,40,41].

2.2.2 Fluorescence Up-Conversion (Decay Sampling)

Fluorescence decay sampling is a nonlinear optical technique. A schematic representation of how the up-conversion technique works is shown in Fig. 8a, taken from [42]. Fluorescence is excited by a short UV pump pulse, and the emission is focused into a nonlinear BBO crystal. Simultaneously, a fs 800 nm gate pulse, delayed by a chosen Δt after the pump pulse, overlaps with the fluorescence signal in the BBO crystal. The up-converted (sum-frequency) signal appears at the output of the BBO crystal only while the fluorescence and the gate pulse overlap, and it is detected by a PMT. The time delay between the pump pulse that excites fluorescence and the gate pulse is set by the optical delay stage (see Fig. 8b). Thus, by varying the delay Δt while measuring the intensity of the up-converted signal, one can record a fluorescence decay profile. The time resolution of this technique is given by the profile (duration) of the gate pulse, which today can easily be tens of fs or shorter (although an expensive laser setup is needed for that). A pulse response function typical of current routine instruments is illustrated in Fig. 9.

(a) Scheme of fs-resolved fluorescence up-conversion and (b) the corresponding experimental setup. Reprinted from [42] published under an open access Creative Commons CC BY license

A typical pulse response function of an up-conversion spectrofluorometer. Reprinted from [42] published under an open access Creative Commons CC BY license
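A few numbers behind the up-conversion scheme can be sketched as follows: photon-energy conservation fixes the sum-frequency wavelength, the delay stage converts travel into delay (the factor 2 assumes a retro-reflector), and the detected signal is modelled as the overlap integral of the fluorescence decay with the gate-pulse intensity. The 520 nm emission wavelength, the decay time, and the gate width are hypothetical, and the pump pulse is treated as instantaneous.

```python
import numpy as np

C_UM_PER_FS = 0.299792458   # speed of light in micrometres per femtosecond


def sum_frequency_wavelength(lambda_fluor_nm, lambda_gate_nm):
    """Photon-energy conservation: 1/lambda_sum = 1/lambda_fluor + 1/lambda_gate."""
    return 1.0 / (1.0 / lambda_fluor_nm + 1.0 / lambda_gate_nm)


def stage_delay_fs(stage_travel_um, passes=2):
    """Extra delay for a stage displacement; passes=2 assumes a retro-reflector (double pass)."""
    return passes * stage_travel_um / C_UM_PER_FS


def upconverted_signal(delays_fs, tau_fs, gate_fwhm_fs, dt_fs=1.0):
    """Sum-frequency signal vs. gate delay: overlap integral of an exponential decay
    (instantaneous excitation at t = 0) with a Gaussian gate intensity centred at the delay."""
    t = np.arange(0.0, 10.0 * tau_fs, dt_fs)
    fluorescence = np.exp(-t / tau_fs)
    sigma = gate_fwhm_fs / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.array([np.sum(fluorescence * np.exp(-0.5 * ((t - d) / sigma) ** 2)) * dt_fs
                     for d in delays_fs])


print(f"{sum_frequency_wavelength(520.0, 800.0):.1f} nm")      # 315.2 nm
print(f"{stage_delay_fs(1.0):.2f} fs per micrometre of travel")  # 6.67 fs
signal = upconverted_signal([-200.0, 0.0, 500.0, 1500.0], tau_fs=1000.0, gate_fwhm_fs=100.0)
```

For delays well beyond the gate width, the ratio of signals at two delays reproduces the decay itself, while near Δt = 0 the response is blurred over the gate duration; this is why the gate pulse sets the time resolution.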

Recently, the fluorescence decay sampling technique has been combined with other nonlinear optical spectroscopies. Such combined spectroscopy provides extremely detailed information on molecular dynamics [43].

Recent progress in instrumentation and technology resulted in compact spectrofluorometers that combine femtosecond fluorescence up-conversion and TCSPC in one module, even with automated switching between those two techniques – e.g., HARPIA TF from Light Conversion [44].

3 Some Nonlinear Techniques and New Trends

3.1 Multi-photon Excitation Techniques

Multi-photon excitation is a widely used nonlinear technique for both fluorescence spectroscopy and microscopy.

When the excitation is done by a single focused beam, the quadratic dependence of two-photon absorption on the excitation light intensity confines the excitation to a much smaller volume within the sample. When two laser beams are used for excitation, the probability of two-photon absorption depends on both the spatial and the temporal overlap of the incident beams. Two-photon and even three-photon excited FLIM are now relatively common [45, 46].
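Why the quadratic intensity dependence confines two-photon excitation to the focus can be shown with a short calculation. For a focused Gaussian beam of power P and radius w(z) = w0·sqrt(1 + (z/z_R)²), the one-photon signal generated in a plane at depth z is proportional to the plane integral of the intensity, which equals P at every z; the two-photon signal is proportional to the plane integral of the squared intensity, P²/(π w(z)²), which decays away from the focus. The Python sketch below (NumPy) compares how much of each signal comes from within one Rayleigh range z_R of the focus in a thick, non-absorbing, non-scattering sample. The beam parameters are hypothetical, and the resulting fractions do not depend on w0.

```python
import numpy as np


def per_plane_signals(z_um, z_rayleigh_um, w0_um=0.3, power=1.0):
    """Relative fluorescence generated in each plane z of a focused Gaussian beam.

    One-photon: integral of I over the plane = P (independent of z).
    Two-photon: integral of I**2 over the plane = P**2 / (pi * w(z)**2).
    """
    w = w0_um * np.sqrt(1.0 + (z_um / z_rayleigh_um) ** 2)  # beam radius w(z)
    one_photon = np.full_like(z_um, power)
    two_photon = power**2 / (np.pi * w**2)
    return one_photon, two_photon


z_r_um = 1.0                                # hypothetical Rayleigh range
z_um = np.linspace(-200.0, 200.0, 400_001)  # 400 um thick sample, focus at z = 0
one, two = per_plane_signals(z_um, z_r_um)
near_focus = np.abs(z_um) <= z_r_um
frac_one = one[near_focus].sum() / one.sum()
frac_two = two[near_focus].sum() / two.sum()
print(f"fraction from |z| <= z_R: one-photon {frac_one:.3f}, two-photon {frac_two:.3f}")
```

For an infinitely thick sample the two-photon fraction tends to exactly 1/2, because the integral of 1/(1 + (z/z_R)²) over |z| ≤ z_R is half of its integral over all z, whereas the one-photon fraction tends to zero. This is the origin of the intrinsic optical sectioning of two-photon excitation.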

Using quantum light (an entangled photon pair being the simplest case) as a powerful spectroscopic tool to reveal novel information about complex molecules is a newly emerging field [47].

Considerable attention has already been devoted to absorption/excitation with entangled photon pairs, i.e., entangled two-photon absorption (E2PA), at low photon flux, with the expectation that entangled photon pairs could bring (possibly large) quantum advantages. A comprehensive comparison of the experimental reports published so far, together with a discussion of the critical experimental features that influence each measurement of the E2PA cross-section, is given in [48, 49].
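Why low photon flux is the regime of interest can be illustrated with the rate model commonly used in the E2PA literature, in which the excitation rate per molecule is R = σ_e φ + δ φ², where φ is the photon flux, σ_e the entangled two-photon absorption cross-section, and δ the classical two-photon cross-section. The entangled term grows linearly and the classical term quadratically with flux, so the entangled contribution can dominate only below the crossover flux φ_c = σ_e/δ. The Python sketch below uses placeholder values of σ_e and δ chosen only for illustration; the actual magnitude of σ_e is precisely what the comparisons in [48, 49] scrutinize.

```python
GM_CM4_S = 1e-50  # 1 Goeppert-Mayer unit in cm^4 s per photon


def e2pa_rates(flux, sigma_e_cm2, delta_cm4_s):
    """Entangled (linear) and classical (quadratic) excitation rates per molecule.

    flux: photon flux in photons cm^-2 s^-1; returns rates in s^-1.
    """
    entangled = sigma_e_cm2 * flux
    classical = delta_cm4_s * flux**2
    return entangled, classical


def crossover_flux(sigma_e_cm2, delta_cm4_s):
    """Flux at which the entangled and classical terms are equal."""
    return sigma_e_cm2 / delta_cm4_s


# Placeholder parameters, for illustration only
sigma_e = 1e-21            # cm^2, hypothetical entangled cross-section
delta = 10.0 * GM_CM4_S    # 10 GM, hypothetical classical cross-section
phi_c = crossover_flux(sigma_e, delta)
for phi in (phi_c * 1e-3, phi_c, phi_c * 1e3):
    ent, cla = e2pa_rates(phi, sigma_e, delta)
    print(f"flux {phi:.1e}: entangled/classical = {ent / cla:.1e}")
```

Because the ratio of the two terms is simply φ_c/φ, every decade of reduced flux gains a decade of relative entangled contribution, at the price of a proportionally weaker absolute signal.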

Despite current ambiguities about the expected quantum advantages, and despite both the experimental complexity and the advanced theory needed to interpret such spectroscopic results correctly, there is no doubt that using quantum light in nonlinear optical spectroscopies will bring qualitatively new information, in particular about biomacromolecules and biofunctional molecular aggregates.

Remarkably, recent results show that the time-energy entanglement of near-IR photons is preserved through thick biological media even at room temperature [49]. This raises hope for entanglement-enhanced fluorescence imaging.

3.2 Emerging New Techniques: Excitation by Structured Photons

Interesting light patterns can be achieved by cleverly combining different electromagnetic modes. Such modal superposition can occur in the spatial and/or temporal domain. The resulting light patterns, called structured light or structured photons, provide new possibilities for many fields, including spectroscopy and microscopy. A comprehensive overview of light-shaping techniques, together with some applications of structured light in microscopy, is presented, e.g., in “Roadmap on multimode light shaping” [50].

3.3 Combined Nonlinear Optical and Fluorescence Spectroscopies and Microscopy

Since femtosecond nonlinear 2D optical spectroscopies (comprising, e.g., four- and six-wave mixing and stimulated transitions) have become something of an “advanced research laboratory standard,” some such research setups use fluorescence emission as a “reporter” on the complex molecular excited states generated by the preceding nonlinear processes. Currently, this approach demands complex and extensive instrumentation in combination with advanced theory to interpret the results correctly. However, its potential to provide very detailed information on the excited states of complex molecular systems is obvious, and rapid advances in instrumentation are promising.

A recent example of this approach is the study of one-exciton and bi-exciton properties of molecular systems using coherently detected and fluorescence-detected two-dimensional electronic spectra of increasing order of nonlinearity, for example, four-wave and six-wave mixing [51]. In addition to comparing fluorescence- and coherent-detection properties [52], the authors suggest a general relation between the nonlinear fluorescence-detected signal and the order of the nonlinear wave-mixing scheme.

The increasing number of studies that use (nonlinear) fluorescence signals as reporters on the properties of transient electronic and vibrational states of complex and biomolecular systems indicates a new field for further development of fluorescence spectroscopy.

Related nonlinear vibrational, electronic, or vibrational-electronic spectroscopy can now be carried out with multiple mode-locked lasers with highly stabilized repetition frequencies. Such an arrangement allows extremely fast automatic delay scanning, i.e., asynchronous optical sampling, to investigate molecular relaxation processes in one sweep. An advanced overview of coherent nonlinear spectroscopy techniques with multiple mode-locked lasers is given in [53].
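The delay scanning in asynchronous optical sampling follows from simple arithmetic. If laser A runs at repetition rate f and laser B at f + Δf, then pulse pair number k is separated by k(1/f − 1/(f + Δf)) = kΔf/(f(f + Δf)). The delay therefore grows in steps of Δf/(f(f + Δf)) and sweeps the whole interval 1/f between pulses in a time 1/Δf, without any moving parts. The Python sketch below evaluates these quantities for the hypothetical values f = 100 MHz and Δf = 100 Hz; it ignores timing jitter, which in practice is why the repetition frequencies must be highly stabilized.

```python
def asops_delays(f_rep_hz, delta_f_hz, n_pairs):
    """Delay (s) by which pulse k of laser A (rate f) lags pulse k of laser B (rate f + df).

    Both lasers are assumed to emit pulse 0 simultaneously and to have no timing jitter.
    """
    fa, fb = f_rep_hz, f_rep_hz + delta_f_hz
    return [k * (fb - fa) / (fa * fb) for k in range(n_pairs)]


def asops_summary(f_rep_hz, delta_f_hz):
    """Delay step per pulse pair, scanned delay window, and time for one full sweep."""
    step_s = delta_f_hz / (f_rep_hz * (f_rep_hz + delta_f_hz))
    window_s = 1.0 / f_rep_hz
    sweep_time_s = 1.0 / delta_f_hz
    return step_s, window_s, sweep_time_s


f_rep, df = 100e6, 100.0  # hypothetical: 100 MHz lasers offset by 100 Hz
step, window, sweep = asops_summary(f_rep, df)
print(f"delay step {step * 1e15:.3f} fs, window {window * 1e9:.1f} ns, "
      f"sweep time {sweep * 1e3:.1f} ms")
print([f"{d * 1e15:.2f} fs" for d in asops_delays(f_rep, df, 4)])
```

The offset Δf sets the trade-off: a smaller Δf gives a finer delay step but a slower sweep.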

A very promising new approach that combines fluorescence and interferometric microscopy is described in [54].

The authors demonstrate that fluorescence microscopy combined with interferometric scattering (iSCAT) microscopy, a high-speed imaging technique with practically unlimited observation time, can uncover the real-time dynamics of nanoscopic processes in living cells, such as single nascent adhesions of cells onto the extracellular matrix. Their instrumental setup is shown in Fig. 10.

Schematic representation of the combined setup of iSCAT and epifluorescence microscopy. The laser beam (red) steered by the two-axis AOD impinges on the sample surface through the 100× objective lens. The scanning beam is collimated by a set of telecentric lenses (T1, T2). The reflected and scattered lights are collected back through the objective lens, split with a 50R:50T beam splitter (BS), and imaged onto the sCMOS camera via a tube lens (TL1). The optical bandpass filter (F1) only transmits light in the blue range out of the broad spectrum of the white LED to excite GFP. The emission filter (F2) only transmits light in the emission spectrum of GFP. The fluorescent signal is projected onto the EMCCD camera via a tube lens (TL2). Reprinted (adapted) with permission from Park J-S, Lee I-B, Moon H-M, Ryu J-S, Kong S-Y, Hong S-C, et al. Fluorescence-Combined Interferometric Scattering Imaging Reveals Nanoscale Dynamic Events of Single Nascent Adhesions in Living Cells. J Phys Chem Lett 2020 11(23):10233–41. Available from: https://pubs.acs.org/doi/10.1021/acs.jpclett.0c02103. Copyright 2020 American Chemical Society
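The sensitivity of iSCAT to nanoscopic objects follows from the interference at the camera between a reference field E_r (here, the light reflected at the sample surface) and the weak field E_s scattered by the object: I = |E_r|² + |E_s|² + 2|E_r||E_s|cos φ, where φ is their phase difference. Normalized to the reference, the contrast is s² + 2s cos φ with s = |E_s|/|E_r|. For particles much smaller than the wavelength, the scattered amplitude scales with particle volume, i.e., with the cube of the diameter d, so the interference term falls off as d³ while pure scattering falls off as d⁶. The Python sketch below compares the two terms; the amplitude ratio assumed for a 40 nm particle and the phase are hypothetical.

```python
import math


def iscat_terms(amplitude_ratio, phase_rad):
    """Interference and pure-scattering parts of the normalized iSCAT contrast.

    contrast = (I - |E_r|^2) / |E_r|^2 = 2 s cos(phi) + s^2, with s = |E_s| / |E_r|.
    """
    s = amplitude_ratio
    return 2.0 * s * math.cos(phase_rad), s * s


def amplitude_ratio_for_size(d_nm, d_ref_nm=40.0, s_ref=1e-2):
    """Hypothetical Rayleigh scaling: scattered amplitude proportional to volume (d**3)."""
    return s_ref * (d_nm / d_ref_nm) ** 3


for d in (40.0, 20.0, 10.0, 4.0):
    interference, scattering = iscat_terms(amplitude_ratio_for_size(d), phase_rad=0.0)
    print(f"d = {d:4.0f} nm: interference {interference:.1e}, pure scattering {scattering:.1e}")
```

Shrinking the particle tenfold reduces the interference term a thousandfold but the pure scattering term a millionfold, which is why the interferometric term dominates for nanoscopic objects. The sign of the contrast depends on φ, which varies with the axial position of the object.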

4 Conclusions

After a brief introduction providing a historical view of fluorescence and its measurement, we have shown in this chapter that currently available methods and the corresponding instrumentation for time-resolved fluorescence spectroscopy cover the whole time range of the dynamics of biological processes, from steady state through real-time molecular dynamics down to the femtosecond region.

Currently, the most universal techniques for time-resolved fluorescence studies are based on the time-correlated single-photon counting approach, greatly improved and extended by extremely fast single-photon timing and tagging together with tunable short-pulse excitation. However, regardless of the high quality of the data provided by such instrumentation (time resolution, dynamic range, reproducibility, etc.), the data can be misinterpreted if the technique is applied without a reasonable understanding of both the technique's limitations and critical aspects and the complexity of the photophysics of the sample under investigation.

Thanks to the quality and broad scope of fluorescence parameters that can be reliably measured with current instrumentation, fluorescent molecules, native or purposely incorporated into a functional biomolecular complex, have become efficient reporters on the structure, intra- and intermolecular interactions, and/or biological functions of the molecular complex studied. It is possible to study the dynamics of biomolecular functions in real time or to study just one molecule at a time.

Tremendous instrumental and technological development in recent years has allowed new combinations of fluorescence with other nonlinear optical spectroscopic techniques with extremely high time resolution (in the fs region), as well as combinations of time-resolved nonlinear optical spectroscopies with microscopy down to single-molecule resolution. Just a small illustration of such combined techniques (far from a systematic overview!) is given in Sect. 3 of this chapter. We can certainly expect explosive development in this direction in the years to come.

References

Bernard CI, Newton HE (1958) A history of luminescence from the earliest times until 1900. Am Hist Rev 63(4):937. https://www.jstor.org/stable/10.2307/1848952?origin=crossref

NobelPrize.org (1967) Manfred Eigen – biographical. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/1967/eigen/biographical/

NobelPrize.org (1967) Ronald G.W. Norrish – biographical. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/1967/norrish/biographical/

NobelPrize.org (1967) George Porter – biographical. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/1967/porter/biographical/

Porter G, Reid ES, Tredwell CJ (1974) Time resolved fluorescence in the picosecond region. Chem Phys Lett 29(3):469–472. https://linkinghub.elsevier.com/retrieve/pii/000926147485147X

NobelPrize.org (1999) Press release. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/1999/press-release/

NobelPrize.org (2008) Press release. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/2008/press-release/

NobelPrize.org (2014) Press release. Nobel Prize Outreach AB 2022. https://www.nobelprize.org/prizes/chemistry/2014/press-release/

Horng ML, Gardecki JA, Papazyan A, Maroncelli M (1995) Subpicosecond measurements of polar solvation dynamics: Coumarin 153 revisited. J Phys Chem 99(48):17311–17337. https://pubs.acs.org/doi/abs/10.1021/j100048a004

Sýkora J, Kapusta P, Fidler V, Hof M (2002) On what time scale does solvent relaxation in phospholipid bilayers happen? Langmuir 18(3):571–574. https://pubs.acs.org/doi/10.1021/la011337x

Lakowicz JR (2006) Principles of fluorescence spectroscopy, 3rd edn. Springer, Boston. https://doi.org/10.1007/978-0-387-46312-4

Demas JN (1983) Excited state lifetime measurements, 1st edn. Academic Press, New York

Valeur B, Berberan-Santos MN (2013) Molecular fluorescence: principles and applications, 2nd edn. Wiley

Birch DJS, Chen Y, Rolinski OJ (2015) Fluorescence. In: Photonics, biomedical photonics, spectroscopy, and microscopy IV. Wiley, pp 1–58. https://onlinelibrary.wiley.com/doi/10.1002/9781119011804.ch1

Patting M (2008) Evaluation of time-resolved fluorescence data: typical methods and problems. In: Standardization and quality assurance in fluorescence measurements I. Springer, Berlin, pp 233–258. https://doi.org/10.1007/4243_2008_020

Bevington PR (1992) Data reduction and error analysis for the physical sciences. McGraw-Hill

O’Connor DV, Ware WR, Andre JC (1979) Deconvolution of fluorescence decay curves. A critical comparison of techniques. J Phys Chem 83(10):1333–1343. https://pubs.acs.org/doi/abs/10.1021/j100473a019

Birch DJS, Imhof RE (1991) Time-domain fluorescence spectroscopy using time-correlated single-photon counting. In: Lakowicz JR (ed) Topics in fluorescence spectroscopy, vol 1. Springer, pp 64–71

Steiner RF (2002) Fluorescence anisotropy, theory and applications. In: Lakowicz JR (ed) Topics in fluorescence spectroscopy, vol 2, pp 1–51

Crutzen M, Ameloot M, Boens N, Negri RM, De Schryver FC (1993) Global analysis of unmatched polarized fluorescence decay curves. J Phys Chem 97(31):8133–8145. https://pubs.acs.org/doi/abs/10.1021/j100133a005

Kapusta P, Erdmann R, Ortmann U, Wahl M (2003) Time-resolved fluorescence anisotropy measurements made simple. J Fluoresc 13:179–183

Smith TA, Ghiggino KP (2015) A review of the analysis of complex time-resolved fluorescence anisotropy data. Methods Appl Fluoresc 3(2):022001. https://iopscience.iop.org/article/10.1088/2050-6120/3/2/022001

Koshioka M, Sasaki K, Masuhara H (1995) Time-dependent fluorescence depolarization analysis in three-dimensional microspectroscopy. Appl Spectrosc 49(2):224–228. http://journals.sagepub.com/doi/10.1366/0003702953963652

Schaffer J, Volkmer A, Eggeling C, Subramaniam V, Striker G, Seidel CAM (1999) Identification of single molecules in aqueous solution by time-resolved fluorescence anisotropy. J Phys Chem A 103(3):331–336. https://pubs.acs.org/doi/10.1021/jp9833597

Devauges V, Marquer C, Lécart S, Cossec J-C, Potier M-C, Fort E et al (2012) Homodimerization of amyloid precursor protein at the plasma membrane: a homoFRET study by time-resolved fluorescence anisotropy imaging. PLoS One 7(9):e44434. https://dx.plos.org/10.1371/journal.pone.0044434

Fleming GR (1986) Chemical applications of ultrafast spectroscopy. Oxford University Press

O’Connor DV, Phillips D (1984) Time-correlated single photon counting. Academic Press. https://linkinghub.elsevier.com/retrieve/pii/B9780125241403X50011

Patting M, Wahl M, Kapusta P, Erdmann R (2007) Dead-time effects in TCSPC data analysis. In: Prochazka I, Migdall AL, Pauchard A, Dusek M, Hillery MS, Schleich WP (eds) Photon counting applications, quantum optics, and quantum cryptography, vol 6583, pp 72–81. http://proceedings.spiedigitallibrary.org/proceeding.aspx?doi=10.1117/12.722804

Becker W (2005) Advanced time-correlated single photon counting techniques. In: Castleman AW, Toennies JP, Zinth W (eds) Springer series in chemical physics, vol 81. Springer, Berlin. https://doi.org/10.1007/3-540-28882-1

Birch DJS, McLoskey D, Sanderson A, Suhling K, Holmes AS (1994) Multiplexed time-correlated single-photon counting. J Fluoresc 4(1):91–102. https://doi.org/10.1007/BF01876663