Abstract

Crystal structure prediction is a long-standing challenge in the physical sciences. In recent years, considerable practical success has been achieved by framing it as a global optimization problem, leveraging increasingly robust and accurate free energy calculations. This optimization problem is often solved using evolutionary algorithms (EAs). However, many choices are possible when designing an EA for structure prediction, and innovation in the field is ongoing. We review the current state of evolutionary algorithms for crystal structure and composition prediction and discuss the details of methodological and algorithmic choices. Finally, we review the application of these algorithms to many systems of practical and fundamental scientific interest.

Keywords

- Structure prediction

- Genetic algorithm

- Crystal structure

- Energy landscape

- Heuristic optimization

- Phase diagram

1 Introduction

Many of the most crucial technological challenges today are essentially materials problems. Materials with specific properties are needed but unknown, and new materials must be found or designed. In some cases experiments can be performed to search for and characterize new materials [1], but these methods can be expensive and difficult. Thus, computational approaches can be complementary or advantageous. Theoretical prediction of many materials properties is possible once the atomic structure of a material is known, but structure prediction remains a challenge. However, a number of new methods have been proposed in recent years to address this problem [2–8]. These techniques are often faster and less expensive than experimental work, they dispense with the need to work with sometimes toxic chemicals, and they can be used to explore materials systems under conditions that are still inaccessible to experiment, such as very high pressures.

Unless kinetically constrained, materials tend to form structures that are in thermodynamic equilibrium, i.e., have the lowest Gibbs free energy. Thus, in order to predict a material's structure, we must find the arrangement of atoms that minimizes the Gibbs free energy, given by

$$G = U + pV - TS.$$

Here, U is the internal energy, p the pressure, V the volume, T the temperature, and S the entropy. The entropy has three contributions: electronic, vibrational, and configurational. The vibrational and configurational components are expensive to calculate, and much of the error introduced by neglecting the entropy vanishes when taking energy differences [9, 10]. For these reasons, the entropy is often neglected, effectively constraining the search to the T = 0 regime. That is, the enthalpy H = U + pV is frequently used to approximate the Gibbs free energy. Finite temperature effects can be included as a post-processing step once particularly promising structures have been identified. We note that, at high temperatures, anharmonic contributions to the vibrational entropy can stabilize phases that are mechanically or dynamically unstable at low temperature [11]. However, in order to search for stable materials at low temperature and fixed composition, the function we need to minimize, known as the objective function, is the enthalpy per atom.

A thermodynamic potential is not always used as the objective function. Bush et al. devised an objective function based on Pauling's valence rules and only performed energy calculations on the best structures identified thereby [2, 12]. Although this approach is computationally efficient, it is limited to ionic materials and is not as reliable as a direct search over the correct thermodynamic quantity.

1.1 Potential Energy Landscape

Given the atomic structure, there exist efficient methods for approximating the enthalpy. A complete description of a crystal structure includes six lattice parameters and 3N − 3 atomic coordinates, where N is the number of atoms in the unit cell. Thus, the function we seek to minimize can be thought of as a surface in a 3N + 3-dimensional space. These surfaces are referred to as energy landscapes. The lowest enthalpy structure is located at the deepest, or global, minimum of the energy landscape. In this way, the physical problem of predicting a material's atomistic structure is expressed as a mathematical optimization problem. In order to understand the search for the global minimum of an energy landscape, it is helpful to examine some general properties of energy landscapes of materials, as follows:

- Much of the configuration space corresponds to structures with unphysically small interatomic distances. These areas of the configuration space can be neglected.

- The energy landscape is effectively partitioned into basins of attraction by the use of a local optimization routine. The local optimizer takes any two structures in the same basin into the local minimum located at the bottom of the basin.

- The number of local minima on the energy landscape scales exponentially with the dimensionality of the search space, i.e., with the number of atoms in the cell [13]. Venkatesh et al. calculated the number of local minima as a function of system size for clusters containing up to 14 Lennard–Jones particles, illustrating this exponential trend [14].

- Deeper basins tend to occupy larger volumes in the multidimensional space. Specifically, a power law distribution describes the relationship between the depth of a basin and its hyper-volume. Combined with our capability for local minimization, this greatly simplifies the search for the optimum structure [15].

- The barrier to reach a neighboring basin is usually low if that basin has a deeper minimum than the current basin. This is a consequence of the Bell–Evans–Polanyi principle [7].

- Low-energy minima in the landscape usually correspond to symmetrical structures [7].

- Low-lying minima are usually located near each other on the energy landscape. This tendency gives the landscape an overall structure that can be exploited while searching for the global minimum [16].

No analytical form exists for the enthalpy as a function of atomic configuration. We can only sample the enthalpy and its derivatives at discrete points on the energy landscape using methods such as density functional theory (DFT). Thus, one often resorts to heuristic search methods. One such class of methods that has proven successful is the evolutionary algorithm. This approach draws inspiration from biological evolution. Efficient local optimization utilizes the derivative information and is very beneficial in the solution of the optimization problem (see Sect. 4). Figure 1 illustrates how local optimization transforms the continuous potential energy landscape into a discrete set of basins of attraction, which dramatically simplifies the search space.
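The effect of local optimization on the search space can be illustrated with a toy example. The sketch below is not taken from any of the cited codes: the one-dimensional function `energy`, the steepest-descent routine `relax`, and all parameter values are assumptions chosen only for illustration. It relaxes a grid of starting points and collects the distinct minima they reach, showing how a continuous landscape collapses into a handful of basins.

```python
import math

def energy(x):
    """Toy 1D 'energy landscape' with several local minima (illustrative only)."""
    return math.sin(3.0 * x) + 0.1 * x * x

def gradient(x):
    """Analytical derivative of the toy energy."""
    return 3.0 * math.cos(3.0 * x) + 0.2 * x

def relax(x, step=0.01, tol=1e-8, max_iter=100_000):
    """Steepest-descent local optimizer: follow the negative gradient downhill."""
    for _ in range(max_iter):
        g = gradient(x)
        if abs(g) < tol:
            break
        x -= step * g
    return x

# Relax a grid of starting points; each one slides to the bottom of its basin.
starts = [-4.0 + 0.25 * i for i in range(33)]
minima = sorted({round(relax(x0), 3) for x0 in starts})

print("distinct local minima:", minima)
print("lowest minimum found :", min(minima, key=energy))
```

Many starting points map onto each minimum, so a global search only needs to choose among basins rather than among individual points.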

1.2 Evolutionary Algorithms

In nature, genetic information is carried in organisms. It is maintained in a population's gene pool if it is passed on from parents to offspring. New information can be introduced through mutation events, but these are rare (and usually lethal). The success that an organism has in passing on its genes is called the organism's fitness.

The fitness of an organism is not universal but depends on its environment. Many species which are very successful in their native habitats would do poorly in other environments. More subtly, there is variance of traits within a single species. In some cases these differences can lead to a difference in the organisms' fitness. The genes of low-fitness individuals are less likely to be passed on, so traits of the high-fitness individuals are likely to be more common in subsequent generations. In this way, populations (but not individuals) evolve to be well suited to their environment. This assumes, of course, that relevant traits are passed on, to varying degrees, from parents to offspring. The correlation between a trait in a parent and that in an offspring is known as the heritability of a trait. In order for environmental pressure to cause quick evolution of a trait, that trait must have high heritability.

Evolutionary algorithms leverage the power of this process to “evolve” solutions to optimization problems. Initial efforts to apply evolutionary algorithms to the structure prediction problem were aimed at finding the lowest energy conformation of large organic molecules [17–21]. Evolutionary search techniques were also successfully applied to atomic clusters [22, 23], and soon the method was extended to 3D periodic systems [2, 12, 24].

It has been observed that evolutionary algorithms (EAs) are well suited to the structure prediction problem for several reasons [25]. First, they can efficiently find the global minimum of multidimensional functions. Second, EAs require little information and few assumptions about the lowest energy structure, which is advantageous when searching for structures about which little is known a priori. Finally, if designed correctly, an EA can take advantage of the structure of the energy landscape discussed in Sect. 1.1.

The evolutionary approach to structure prediction is modeled after the natural process. Each crystal structure is analogous to a single organism. In nature, the fitness of an organism is based on how well its phenotype is suited to its environment and, in particular, how successful it is in reproducing. In an evolutionary algorithm, fitnesses are assigned to the organisms based on their objective function values, and they are allowed to reproduce based on those fitnesses. Pressures analogous to those which force species to adapt to their environments will thus lead to crystal structures with lower energies.

Organisms in an EA are often grouped into generations. The algorithm proceeds by creating successive generations. The methods by which an offspring generation is made from parents are called variation operations or variations and include operations that are analogous to genetic mutation and crossover. A single offspring organism can be created from either one or two parents, depending on which variation is used. Every offspring organism must meet some minimum standards to be considered viable, analogous to the “growing up” process in nature. This is known as the development process. The algorithm terminates when some user-defined stopping criteria are met (see Sect. 2.13).

Improvements are made to the biological analogy when possible. In particular, we would rather not let the optimal solution worsen from one algorithmic iteration to the next. To prevent this, a promotion operator is used to advance some of the best organisms from one generation directly to the next. Also, mutations in nature are usually detrimental. When searching for structures, one might try to use mutation variations that are likely to introduce valuable new information to the gene pool.

Figure 2 outlines how a typical evolutionary algorithm for structure prediction proceeds, such as that implemented by Tipton et al. [26, 27]. The EA starts by creating an initial population (Sect. 2.2) and calculating the fitness of each organism in it (Sect. 2.3). Organisms are then selected to act as parents (Sect. 2.4) or to be promoted to the next generation (Sect. 2.5). The parents create offspring structures via mating (Sect. 2.6) or mutation (Sect. 2.7). The offspring are developed (Sect. 2.9) and the energy of each offspring organism is calculated using some external energy code, followed by a post-evaluation development step. If successful, the offspring structures are then added to the next generation, and their fitnesses are calculated. Unless the EA has converged (Sect. 2.13), the current children become parents in the next generation.
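The control flow just described can be summarized in a short skeleton. This is a minimal sketch under strong simplifying assumptions, not the implementation of Tipton et al.: an "organism" is a plain list of numbers, the objective `sphere` stands in for an energy calculation, the stopping criterion is a fixed number of generations, and all names and parameter values are hypothetical.

```python
import random

def sphere(x):
    """Toy objective standing in for the enthalpy per atom."""
    return sum(v * v for v in x)

def run_ea(objective, n_dim=3, pop_size=20, n_promote=2, n_gen=60, seed=0):
    rng = random.Random(seed)
    lo, hi = -5.0, 5.0  # hard constraints on every coordinate
    # Initial population: random organisms inside the constraints.
    pop = [[rng.uniform(lo, hi) for _ in range(n_dim)] for _ in range(pop_size)]
    history = []
    for _ in range(n_gen):
        pop.sort(key=objective)                  # lowest objective first
        history.append(objective(pop[0]))
        next_gen = [ind[:] for ind in pop[:n_promote]]   # promotion
        parents = pop[: pop_size // 2]           # elitist selection of parents
        while len(next_gen) < pop_size:
            if n_dim > 1 and rng.random() < 0.7:
                # Mating: splice the coordinates of two distinct parents.
                a, b = rng.sample(parents, 2)
                cut = rng.randrange(1, n_dim)
                child = a[:cut] + b[cut:]
            else:
                # Mutation: randomly perturb a single parent.
                child = [v + rng.gauss(0.0, 0.3) for v in rng.choice(parents)]
            # Development: discard offspring that violate the constraints.
            if all(lo <= v <= hi for v in child):
                next_gen.append(child)
        pop = next_gen
    best = min(pop, key=objective)
    history.append(objective(best))
    return best, history

best, history = run_ea(sphere)
print("best objective per generation never increases:",
      all(b <= a for a, b in zip(history, history[1:])))
```

Because the best organisms are promoted unchanged, the recorded best objective value cannot worsen from one generation to the next.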

2 Details of the Method

2.1 Representation of Structures

A total of 3N + 3 dimensions describe the atomic coordinates and lattice vectors of a crystal structure. Additionally, the number of atoms, N, itself must be determined for ab initio structure predictions. However, these degrees of freedom are not all truly independent. Alternate choices of lattice vectors provide infinitely many ways to represent the same crystal structure, as illustrated in Fig. 3. Additionally, for molecular crystals, the dimensionality of the search space is effectively reduced since the molecular units typically stay intact in these crystal structures. This is due to the separation of energy scales, with strong intramolecular covalent interactions and much weaker intermolecular van der Waals interactions. In this case, structure search algorithms can take advantage of this trait by treating complete molecules, instead of individual atoms, as indivisible structural building blocks [28, 29]. Since the solution space is somewhat more complicated than it has to be, the task of searching that space is also more complicated than necessary. This difficulty may be addressed by attempting to standardize the way structures are represented in the computer.

2.1.1 Standardization of Representation

Two techniques are employed to standardize the representation of structures. The first and most widely used method is to impose hard constraints on the structures. These constraints include minimum interatomic distances and lattice parameter magnitudes. Limits on the maximum interatomic distances and lattice vector magnitudes are sometimes enforced as well [26, 27, 30, 31]. In addition, most authors constrain the range of angles between the lattice vectors [26, 27, 30–33]. If the algorithm varies the number of atoms per cell, this value is also constrained [27]. A restriction on the total volume of the cell is an additional possibility [30, 31, 34].

Physical considerations must be taken into account when choosing the constraints. For the constraint of the minimum interatomic distances, choosing 80% of the typical bond length or of the sum of the covalent radii of the two atoms under consideration has been proposed [30, 33]. The minimum lattice length has been chosen by adding the typical bond length and the diameter of the largest atom in the system [30, 32, 33]. Bahmann et al. set the maximum lattice vector length to the sum of the covalent diameters of all atoms in the cell [30]. Several authors limit angles between lattice vectors to lie between 60° and 120° [31, 32], although a more liberal range of 45–135° has also been used [30, 33]. Ji et al. fix the volume of the cell during the search [34], and Lonie et al.'s algorithm can be set to use either a fixed cell volume or to constrain the volume to a user-specified range [31]. In the work of Bahmann et al. the cell volume is constrained to the range defined by the volume of the close-packed structure and four times that value [30].
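A constraint check of this kind might look as follows. This is a hedged sketch: the function names, the use of a single radius per atom, and the restriction to the 26 neighboring periodic images (adequate only for reasonably compact cells) are assumptions made for illustration. The 80% scale factor and the 60–120° angle range are the values quoted above.

```python
import itertools
import math

def cell_angles(lattice):
    """Angles in degrees between lattice vectors: (b,c), (a,c), (a,b)."""
    def angle(u, v):
        dot = sum(x * y for x, y in zip(u, v))
        cos = dot / (math.hypot(*u) * math.hypot(*v))
        return math.degrees(math.acos(max(-1.0, min(1.0, cos))))
    a, b, c = lattice
    return angle(b, c), angle(a, c), angle(a, b)

def min_distance_ok(lattice, frac_coords, radii, scale=0.8):
    """True if no pair of atoms (including periodic images in the 26
    neighboring cells) is closer than scale * (r_i + r_j)."""
    def to_cart(f):
        return [sum(f[i] * lattice[i][k] for i in range(3)) for k in range(3)]
    n = len(frac_coords)
    for i in range(n):
        for j in range(i, n):
            for shift in itertools.product((-1, 0, 1), repeat=3):
                if i == j and shift == (0, 0, 0):
                    continue  # skip the distance of an atom to itself
                image = [frac_coords[j][k] + shift[k] for k in range(3)]
                d = math.dist(to_cart(frac_coords[i]), to_cart(image))
                if d < scale * (radii[i] + radii[j]):
                    return False
    return True

def satisfies_constraints(lattice, frac_coords, radii, angle_range=(60.0, 120.0)):
    """Combine the lattice-angle and minimum-distance constraints."""
    lo, hi = angle_range
    if not all(lo <= ang <= hi for ang in cell_angles(lattice)):
        return False
    return min_distance_ok(lattice, frac_coords, radii)
```

Limits on lattice vector lengths and cell volume could be added to `satisfies_constraints` in the same way.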

Additional constraints may be used when one wishes to limit the search to a particular geometry. For example, Bahmann et al. restrict the allowable atomic positions and increase the maximum allowable lattice length in one direction to facilitate a search for two-dimensional structures [30]. Woodley et al. use an EA to search for nanoporous materials by incorporating “exclusion zones,” or regions in which atoms are forbidden to reside [35].

Several advantages are gained by using these constraints. Many energy models behave poorly when faced with geometries with very small interatomic distances, so enforcing this constraint from the start helps prevent failed structural relaxations and energy calculations. As mentioned in Sect. 1.1, large regions of the potential energy surface correspond to unphysical structures, and constraints help limit the search to regions that do contain physical minima.

Additionally, they help to ensure that structures are represented similarly. Removing as much redundancy as possible from the space of solutions makes the problem easier without limiting our set of possible answers or introducing any a priori assumptions as to the form of the solution. On the other hand, it is more dangerous to remove merely unlikely regions of the space from consideration, since doing so would bring into question both the validity of results and the claim to first-principles structure prediction.

The second method used to help standardize structure representation involves transforming the cells of all organisms to a unique and physically compact representation when possible. One way to do this is the Niggli cell reduction [27, 36]. Every lattice has a Niggli cell, which is unique and has the shortest possible lattice vector lengths. Figure 3 illustrates the representation problem and the Niggli cell reduction. A similar transformation is used by Lonie et al. and Oganov et al. [31, 37]. In addition to simplifying the space that must be searched, removing redundancy by standardizing the representation of structures usually helps to increase the quality of the offspring produced by the mating variation, as is discussed in Sect. 2.6.

2.2 Initial Population

If no experimental data are available for the system under study, then the organisms in the initial population are generated randomly, subject to the constraints discussed above. The initial population generated in this way should sample the entire potential energy landscape within the constraints. If experimental data are available, such as from X-ray diffraction analysis, they can be used to seed the initial population with likely organisms. If one is predicting an entire phase diagram (see Sect. 3), the correct elemental and binary phases may already be known experimentally and can be used in the initial population. The use of pre-existing knowledge has the potential to decrease significantly the time needed to find the global minimum.

When searching for molecular crystals, one typically places coherent molecular units instead of individual atoms into the structure [28]. Zhu et al. made an additional modification to the generation of the initial population to facilitate their study of molecular solids [29]. Instead of placing molecules at completely random locations within the cell, structures are built from randomly selected space groups. The authors found that this provides the algorithm with a more diverse initial population and improves the success of the search.

2.3 Fitness

The fitness of an organism is the property on which evolutionary pressure acts, and it depends on the value of the objective function. It is defined so that better solutions have higher fitness, and thus minimizing the energy means maximizing the fitness. It is usually defined as a linear function of the objective function, relative to the other organisms in the population. Exponential and hyperbolic fitness functions have also been used as an alternative way to introduce more flexibility into the selection algorithm (see Sect. 2.4) [38, 39]. In one frequently applied scheme, an organism with a formation energy per atom $E_f$ is assigned a fitness

$$f = \frac{E_f^{\max} - E_f}{E_f^{\max} - E_f^{\min}},$$

where $E_f^{\max}$ and $E_f^{\min}$ are the highest and lowest formation energies per atom, respectively, in the generation [26, 27, 31, 38]. In this case, the organism with the lowest energy in the generation is assigned a fitness of 1, and the organism with the highest energy has a fitness of 0. An alternative approach is to rank the organisms within a generation by their objective function values. The fitness of an organism is then defined as its rank [32, 34]. For cases when the stoichiometry and number of atoms in the cell are fixed, the fitness can simply be defined as the negative of the energy of the organism [30, 33].
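The linear and rank-based fitness definitions can be written in a few lines. The sketch below assumes a plain list of formation energies per atom; the function names, the ranking convention (worst organism gets rank 0), and the handling of a generation in which all energies are equal are assumptions, since the text does not specify them.

```python
def linear_fitness(energies):
    """Linear fitness: lowest energy maps to 1, highest energy maps to 0."""
    e_min, e_max = min(energies), max(energies)
    if e_max == e_min:
        # Degenerate generation (assumption): treat all organisms as equally fit.
        return [1.0 for _ in energies]
    return [(e_max - e) / (e_max - e_min) for e in energies]

def rank_fitness(energies):
    """Rank-based fitness: the worst organism gets 0, the best gets n - 1."""
    order = sorted(range(len(energies)), key=lambda i: energies[i], reverse=True)
    fitness = [0] * len(energies)
    for rank, i in enumerate(order):
        fitness[i] = rank
    return fitness

energies = [-3.0, -1.0, -2.0]  # formation energies per atom, arbitrary units
print(linear_fitness(energies))
print(rank_fitness(energies))
```

Rank-based fitness depends only on the ordering of the energies, so it is insensitive to a single very high-energy outlier that would compress the linear fitness values of all other organisms toward 1.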

2.4 Selection

The selection method determines which organisms will act as parents. Generally, structures with higher fitnesses are more likely to reproduce. The selection method is a crucial component of the search because it is the only way that the algorithm applies pressure on the population to improve towards the global minimum. Three commonly used strategies are elitist (or truncated) selection, roulette wheel selection, and tournament selection. In elitist selection the top several organisms are allowed to reproduce with equal probability while the rest are prevented from mating [39]. In roulette wheel selection, a random number d between the fitnesses of the best and worst organisms is generated for each organism, and if d is less than the fitness of the organism, it is allowed to reproduce [38]. In this way, it is possible for any organism except the worst one to reproduce, but it is more likely for organisms of higher fitness. Finally, in tournament selection, all of the organisms in the parent generation are randomly divided into small groups, usually pairs, and the best member of each group is allowed to reproduce.
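The three strategies can be sketched as follows, operating on a list of fitness values and returning the indices of the organisms allowed to reproduce. The function names and signatures are hypothetical, and the roulette wheel variant follows the per-organism description given above rather than any particular published code.

```python
import random

def elitist_selection(fitnesses, n_keep):
    """Indices of the n_keep fittest organisms, best first."""
    order = sorted(range(len(fitnesses)), key=lambda i: fitnesses[i], reverse=True)
    return order[:n_keep]

def roulette_selection(fitnesses, rng):
    """Draw d between the worst and best fitness for each organism and keep
    the organism if d is below its fitness. The worst organism can never
    pass, because d is never smaller than the worst fitness."""
    lo, hi = min(fitnesses), max(fitnesses)
    return [i for i, f in enumerate(fitnesses) if rng.uniform(lo, hi) < f]

def tournament_selection(fitnesses, rng, group_size=2):
    """Randomly partition the organisms into groups; the best of each group wins."""
    idx = list(range(len(fitnesses)))
    rng.shuffle(idx)
    groups = [idx[k:k + group_size] for k in range(0, len(idx), group_size)]
    return [max(g, key=lambda i: fitnesses[i]) for g in groups]

rng = random.Random(0)
fitnesses = [0.1, 0.9, 0.5, 0.0]
print(elitist_selection(fitnesses, 2))
print(roulette_selection(fitnesses, rng))
print(tournament_selection(fitnesses, rng))
```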

Tipton et al. employ another approach to selection which is essentially a generalization of the three outlined above. Organisms are selected on the basis of a probability distribution over their fitnesses [26, 27]. Two parameters are used to describe the distribution: the number of potential parents and an exponent which determines the shape of the probability distribution. The number of parents specifies how many of the best organisms in the current generation have nonzero probabilities of acting as parents. The exponent describes a power law. This method allows fine-grained control over the trade-off between convergence speed and the probability of finding the ground state. An aggressive distribution that puts a lot of pressure on the population to improve leads to faster convergence, but the algorithm is more likely to converge to only a local minimum. On the other hand, a less aggressive distribution will probably take more time to converge, but the algorithm has a better chance of finding the global minimum because a higher degree of diversity is maintained in the population. Several choices of selection probability distribution are illustrated in Fig. 4.
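A two-parameter distribution of this type might be sketched as below. The exact functional form used by Tipton et al. is not given in the text, so the choice here (selection probability proportional to fitness raised to a power, restricted to the best `n_parents` organisms) is an assumption, as are the function and parameter names.

```python
import random

def selection_probabilities(fitnesses, n_parents, power):
    """Probability of acting as a parent for each organism.

    Only the n_parents fittest organisms get nonzero probability, and
    their weights follow fitness ** power (assumed form)."""
    order = sorted(range(len(fitnesses)), key=lambda i: fitnesses[i], reverse=True)
    top = order[:n_parents]
    weights = [0.0] * len(fitnesses)
    for i in top:
        weights[i] = fitnesses[i] ** power
    total = sum(weights)
    if total == 0.0:
        # All candidates have zero weight: fall back to a uniform choice.
        for i in top:
            weights[i] = 1.0
        total = float(len(top))
    return [w / total for w in weights]

fitnesses = [1.0, 0.5, 0.25, 0.0]
rng = random.Random(0)
for power in (0.0, 1.0, 8.0):
    probs = selection_probabilities(fitnesses, n_parents=3, power=power)
    parents = rng.choices(range(len(fitnesses)), weights=probs, k=2)
    print(power, [round(p, 3) for p in probs], parents)
```

With a power of 0 the scheme reduces to elitist selection with equal probabilities among the top organisms, while a large power concentrates nearly all probability on the best organism, which corresponds to the aggressive end of the trade-off described above.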

Authors employ these strategies in a variety of ways. One approach is to use elitist selection to remove some fraction of the parent generation, and then grow the resulting group back to its original size by creating offspring organisms from the remaining parent organisms with equal probability [30, 33, 34] or with a linear or quadratic probability distribution over their fitnesses [32]. Abraham et al. use roulette wheel selection. When the number of offspring organisms equals the number of parent organisms, either roulette wheel or elitist selection is used on the combined pool of structures to determine which organisms will make up the next generation. Elitist selection was found to be preferable in the final step [38]. Lonie et al. employ a linear probability distribution over the fitnesses to select organisms to act as parents. A continuous workflow is used instead of a generational scheme, so that offspring organisms are immediately added to the breeding population when they are created [31].

2.5 Promotion

A new generation is created from the structures in the previous one by applying selection in conjunction with promotion and variation. The promotion operation places some of the organisms in the old generation directly into the new generation without undergoing any changes. This is used to ensure that good genetic material is maintained in the population. Many authors use elitist selection to choose which organisms to promote. Lyakhov et al. refined selecting for promotion by only promoting structures whose fingerprints were significantly different (see Sect. 2.11 for fingerprinting) [40]. This was done to prevent loss of population diversity due to promoting similar organisms.

2.6 Mating

The goal of the mating variation is to combine two parents and preserve their structural characteristics in a single offspring organism. In its most basic form, mating consists of slicing parent organisms (cells) into two sections each and then combining one from each parent to produce an offspring organism. This is illustrated in Fig. 5.

It is important that the mating operation be designed so that traits which are important to the energy minimization problem have high heritability. The most energetically-important interactions in materials come from species located close to one another. This suggests that there is some amount of spatial separability in the energy-minimization problem, with the energy depending primarily on the local structure. The mating variation works by exploiting this feature of the problem. The slicing mating variation maintains much of the local structure of each parent in a very direct way, and we will now detail this operation.

2.6.1 Slice Plane Location and Orientation

After two parent organisms have been selected, the next step is choosing the planes along which to slice them. In order to mate organisms that do not have identical lattices, a fractional space representation is used. The positions of atoms in a cell are expressed in the coordinate system of the cell's lattice vectors. As a result, the fractional coordinates of all atoms within the cell have values within the interval [0, 1).

Authors have used various techniques to choose the orientation and location of the slice plane. In one method, a lattice vector A and a fractional coordinate s along A are randomly chosen [31, 32]. All atoms in one of the parent organisms with a fractional coordinate greater than s along A and all atoms in the second parent with fractional coordinate less than or equal to s are copied to the new child. Restricting the range of allowed values of s can be used to specify the minimum contribution by each parent to the offspring organism [31].
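The single-plane slicing operation can be sketched as follows. The representation of a parent as a list of `(species, fractional_coordinates)` tuples, the function name, and the default limits on s are assumptions made for this illustration.

```python
import random

def slice_mate(parent_a, parent_b, rng, s_min=0.25, s_max=0.75):
    """Single-plane slicing in fractional coordinates.

    Atoms of parent_a with coordinate > s along the chosen lattice vector
    and atoms of parent_b with coordinate <= s are copied to the child.
    Restricting s to [s_min, s_max] sets a minimum contribution per parent."""
    axis = rng.randrange(3)          # randomly chosen lattice vector A
    s = rng.uniform(s_min, s_max)    # fractional position of the slice plane
    child = [atom for atom in parent_a if atom[1][axis] > s]
    child += [atom for atom in parent_b if atom[1][axis] <= s]
    return child, axis, s

rng = random.Random(1)
parent_a = [("A", (rng.random(), rng.random(), rng.random())) for _ in range(8)]
parent_b = [("B", (rng.random(), rng.random(), rng.random())) for _ in range(8)]
child, axis, s = slice_mate(parent_a, parent_b, rng)
print(axis, round(s, 3), [species for species, _ in child])
```

Note that the child generally does not contain the same number of atoms as either parent, an issue taken up in Sect. 2.6.2.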

Alternatively, one may randomly select two planes that are parallel to a randomly chosen facet of the cell. Atoms that lie between these planes are then exchanged between the two parents. By “exchanged” we mean that each atom in the offspring has the same species-type and fractional coordinates as in the corresponding parent. This approach is equivalent to performing a translation operation on the atoms in the cell and then using the single slice plane method outlined above. Both of these methods choose slice planes that are parallel in real space to one of the cell facets of the parent structures [33, 34, 38]. Tipton et al. select the two slice planes slightly differently: the fractional coordinate corresponding to the center of the sandwiched slab is randomly selected. The width of the sandwiched slab is then randomly chosen from a Gaussian distribution, and the two slice planes are placed accordingly [26, 27]. Another approach is simply to fix the locations of the two slice planes. For example, Abraham et al. specify that the two cuts be made at fractional coordinates of 1/4 and 3/4 along the chosen lattice vector [38].
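A two-plane variant with a randomly centered slab of Gaussian-distributed width might look like the sketch below. The mean and standard deviation of the width, the clamping of the width to [0, 1], and the periodic wrapping of the slab across the cell boundary are assumptions; the text does not specify how the published implementation handles these details.

```python
import random

def slab_mate(parent_a, parent_b, rng, mean_width=0.5, sigma=0.1):
    """Two-plane slicing: the slab comes from parent_a, the rest from parent_b.

    Parents are lists of (species, fractional_coordinates) tuples."""
    axis = rng.randrange(3)
    center = rng.random()                                   # slab center
    width = min(max(rng.gauss(mean_width, sigma), 0.0), 1.0)

    def in_slab(x):
        d = abs(x - center)
        d = min(d, 1.0 - d)          # periodic distance to the slab center
        return d <= width / 2.0

    child = [atom for atom in parent_a if in_slab(atom[1][axis])]
    child += [atom for atom in parent_b if not in_slab(atom[1][axis])]
    return child

rng = random.Random(2)
coords = [(rng.random(), rng.random(), rng.random()) for _ in range(10)]
parent_a = [("A", c) for c in coords]
parent_b = [("B", c) for c in coords]
print([species for species, _ in slab_mate(parent_a, parent_b, rng)])
```

Fixing `center` and `width` instead of drawing them randomly reproduces the fixed-plane choice, for example cuts at 1/4 and 3/4 for a center of 0.5 and a width of 0.5.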

Abraham et al. introduced a periodic slicing operation [38]. In this case, the value s described above becomes a cell-periodic function of the fractional coordinates along the cell lattice vectors other than A. A sine curve is often used, with the amplitude and wavelength drawn from uniform distributions. The wavelength is commonly constrained to be larger than the typical interatomic distance and smaller than the dimensions of the cell. The amplitude should also be small enough to ensure that no portion of the slice exceeds the boundaries of the cell. Abraham et al. found that periodic slicing improved the mean convergence time of their algorithm over planar slicing [38].
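Periodic slicing can be sketched by replacing the constant s with a sine function of another fractional coordinate. For brevity this illustration lets the cut depend on only one of the two remaining lattice directions and uses an integer number of periods so that the cut is cell-periodic; these simplifications, together with all names and parameter ranges, are assumptions rather than details of the published method.

```python
import math
import random

def periodic_slice_mate(parent_a, parent_b, rng, max_periods=3):
    """Slicing along a sinusoidal surface instead of a flat plane.

    Parents are lists of (species, fractional_coordinates) tuples."""
    axis = rng.randrange(3)
    other = rng.choice([k for k in range(3) if k != axis])
    s0 = rng.uniform(0.25, 0.75)
    # Keep the amplitude small enough that the cut stays inside the cell.
    amplitude = rng.uniform(0.0, min(s0, 1.0 - s0))
    n_periods = rng.randint(1, max_periods)   # integer => cell-periodic cut

    def cut(frac):
        return s0 + amplitude * math.sin(2.0 * math.pi * n_periods * frac[other])

    child = [a for a in parent_a if a[1][axis] > cut(a[1])]
    child += [a for a in parent_b if a[1][axis] <= cut(a[1])]
    return child

rng = random.Random(3)
coords = [(rng.random(), rng.random(), rng.random()) for _ in range(10)]
parent_a = [("A", c) for c in coords]
parent_b = [("B", c) for c in coords]
print([species for species, _ in periodic_slice_mate(parent_a, parent_b, rng)])
```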

Constraining the degree of contribution of each parent by, for example, stipulating a minimum parental contribution can help prevent the mating operation from reproducing one or other of the parent structures essentially unchanged. Once the contribution of each parent has been determined, lattice vectors must be chosen for the offspring structure. Frequently, a randomly weighted average of the parents' lattice vectors is assigned to the offspring [31–33]. Simply averaging the lattice vectors of the parent organisms, i.e., fixing the weight at 0.5, is another common choice [26, 27, 34].

2.6.2 Number of Atoms and Stoichiometry of Offspring

An offspring organism produced via mating as described so far may have a different number of atoms or a different composition than its parents. This presents a difficulty if one wishes to perform a search with a fixed cell size or at a single composition. The simplest way to deal with these issues is simply to reject all offspring organisms that do not meet the desired constraints [33, 38]. Alternatively, nonconforming offspring can be made acceptable by the addition or removal of atoms. It may be best to add and remove atoms from locations near the slice plane [34]. These corrections minimize disruption to the structure transmitted from the parent organisms. Glass et al. use a slightly different approach: atoms to be removed or added are selected randomly from the discarded fragments of one of the parent organisms [31, 32]. Atomic order parameters have also been used to decide which excess atoms to remove (see Sect. 2.11) [40]. Those with the lower degrees of local order are more likely to be removed.
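The rejection test and one possible repair step can be sketched as follows. Only the removal of surplus atoms is shown; the function names, the dictionary format of the target composition, and the use of the plain (non-periodic) distance to the slice plane are assumptions for illustration.

```python
from collections import Counter

def matches_composition(atoms, target):
    """True if the atoms have exactly the target count of each species."""
    return Counter(species for species, _ in atoms) == Counter(target)

def remove_excess_near_plane(atoms, target, axis, s):
    """Remove surplus atoms of each species, closest to the slice plane first.

    atoms  : list of (species, fractional_coordinates) tuples
    target : dict mapping species to the desired number of atoms
    axis, s: lattice direction and fractional position of the slice plane"""
    counts = Counter(species for species, _ in atoms)
    kept = list(atoms)
    for species, n in counts.items():
        surplus = n - target.get(species, 0)
        if surplus <= 0:
            continue
        candidates = sorted((a for a in kept if a[0] == species),
                            key=lambda a: abs(a[1][axis] - s))
        for atom in candidates[:surplus]:
            kept.remove(atom)
    return kept

child = [("Si", (0.10, 0.0, 0.0)), ("Si", (0.48, 0.0, 0.0)),
         ("Si", (0.90, 0.0, 0.0)), ("O", (0.30, 0.0, 0.0))]
target = {"Si": 2, "O": 1}
repaired = remove_excess_near_plane(child, target, axis=0, s=0.5)
print(matches_composition(child, target), matches_composition(repaired, target))
```

Removing atoms near the slice plane leaves the interior of each parental fragment intact, which is the motivation given above for this choice.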

2.6.3 Modifications to the Mating Variation

Several additional modifications of the mating variation have been explored. The first involves shifting all the atoms in a cell by the same amount before mating [32, 41]. These shifts may happen with different probabilities along the axis where the cut is made and an additional random axis. This removes any bias caused by the implicit correlation between the coordinate s on the axis A in one crystal with the coordinate s on the axis A in the other. A similar effect may be obtained by selecting a random vector and shifting all atoms by this vector prior to making the cut [31]. In practice, these shifts help repeat good local structures to other parts of the cell.
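The random-vector shift can be sketched in a few lines. The function names and the representation of atoms as `(species, fractional_coordinates)` tuples are assumptions; coordinates are wrapped back into the interval [0, 1) after shifting.

```python
import random

def shift_atoms(atoms, shift):
    """Translate all atoms by the same fractional vector, wrapping into [0, 1)."""
    return [(species, tuple((x + d) % 1.0 for x, d in zip(frac, shift)))
            for species, frac in atoms]

def random_shift(atoms, rng):
    """Apply a uniformly random fractional translation prior to mating."""
    shift = tuple(rng.random() for _ in range(3))
    return shift_atoms(atoms, shift)

atoms = [("Si", (0.75, 0.5, 0.0)), ("O", (0.1, 0.2, 0.3))]
print(shift_atoms(atoms, (0.5, 0.25, 0.0)))
print(random_shift(atoms, random.Random(0)))
```

Because the crystal is periodic, the shifted cell describes the same structure, but a fixed slice plane now cuts through a different part of it.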

In a further innovation, the parent organisms are subjected to random rotations and reflections prior to mating. This procedure removes bias toward any given orientation [31]. Additionally, an order parameter may be used to inform the choice of contribution from each parent (see Sect. 2.11). Several trial slabs of equal thickness are cut from the parents at random locations, and the slabs with the highest degree of order are passed to the offspring [40, 41].

The simple slicing mating operator is not appropriate for molecular crystals, since it does not respect the integrity of the molecular units. Zhu et al. adapted the mating variation to search for molecular crystals [29]. In their scheme, each molecule is treated as an indivisible unit, and the location of the geometric center of a molecule is used to determine its location for the purposes of the mating operator.

2.6.4 Shortcomings of the Slicing Mating Variation

The mating variation acts directly on the particular representation of a structure in the computer. Since it is performed in fractional space, the mating variation can be applied to any two parent organisms, regardless of their cell shapes. However, the offspring structure may not always be successful. An offspring organism that has little in common with either parent can be produced if their representations (in particular the lattice parameters or number of atoms in the cells) are sufficiently different. As a result, the offspring will often have low fitness. Thus the mating variation is most successful when the parents are represented similarly because this increases the heritability of important traits.

The constraints and cell transformations discussed in Sect. 2.1 combat this issue through standardization of structure representation. Another method to increase the similarity of representation prior to mating is to use a supercell of one of the parent structures during mating [26, 27]. If one of the parent structures contains more than twice as many atoms as the other, a supercell of the smaller parent is used in the mating process. This technique ensures that both parent organisms are approximately the same size before mating, which aids in the creation of successful offspring.
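The supercell step can be sketched as follows. The text only states that a supercell of the smaller parent is used; the rule applied here (repeatedly doubling the cell along its shortest lattice vector until the larger parent no longer has more than twice as many atoms) is an assumption, as are the function names and data layout.

```python
import math

def double_cell(lattice, atoms, axis):
    """Double the cell along one lattice vector and replicate the atoms."""
    new_lattice = [list(v) for v in lattice]
    new_lattice[axis] = [2.0 * x for x in lattice[axis]]
    new_atoms = []
    for species, frac in atoms:
        for image in (0, 1):
            f = list(frac)
            f[axis] = (frac[axis] + image) / 2.0   # rescale to the new cell
            new_atoms.append((species, tuple(f)))
    return new_lattice, new_atoms

def match_sizes(small, large):
    """Grow `small` until `large` has at most twice as many atoms.

    Each structure is a (lattice, atoms) pair."""
    lattice, atoms = small
    while len(large[1]) > 2 * len(atoms):
        axis = min(range(3), key=lambda k: math.hypot(*lattice[k]))
        lattice, atoms = double_cell(lattice, atoms, axis)
    return lattice, atoms

small = ([[3.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 3.0]],
         [("Cu", (0.0, 0.0, 0.0))])
large = ([[6.0, 0.0, 0.0], [0.0, 6.0, 0.0], [0.0, 0.0, 6.0]],
         [("Cu", (0.1 * i, 0.0, 0.0)) for i in range(5)])
lattice, atoms = match_sizes(small, large)
print(len(atoms), lattice)
```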

Lyakhov et al. use an additional technique to help increase the viability of the offspring. If the distance between the parent organisms' fingerprints (see Sect. 2.10) exceeds a user-specified value, the would-be parents are not allowed to mate. The rationale behind this stipulation is that if two parents are from different funnels in the energy landscape, then their offspring would likely be located somewhere between those funnels and therefore have low fitness [40, 41].

2.6.5 Other Mating Operations

Not all evolutionary algorithms employ the previously described slicing method for mating. Bahmann et al. use a general recombination operation instead, where an offspring structure is produced by combining the lattice vectors and atomic positions of the two parent organisms [30]. This can be done in two ways: intermediate recombination takes a weighted average of the parents' values, and discrete recombination takes some values from each parent without changing them. Smith et al. used binary strings to represent structures on a fixed lattice, with each character in the string indicating the type of atom at a point on the lattice [24]. Mating was carried out by splicing together the strings of two parent structures. Jóhannesson et al. used a similar approach to search for stable alloys of 32 different metals [117]. Although all of these methods combine traits from each of the parents, they may not be as successful in passing the important local structural motifs of parents to the offspring.
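The two recombination modes can be illustrated with a minimal Python sketch. It assumes that each parent has been flattened to a list of real-valued parameters (lattice components and coordinates); the function names, the `weight` argument, and the sample values are hypothetical and are not taken from Bahmann et al. [30].

```python
import random

def intermediate_recombination(a, b, weight=0.5):
    """Weighted average of two equal-length parameter lists."""
    return [weight * x + (1.0 - weight) * y for x, y in zip(a, b)]

def discrete_recombination(a, b, rng):
    """Copy each value unchanged from one parent or the other."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]

# Hypothetical parents: three lattice lengths each.
parent_a = [4.0, 4.0, 6.0]
parent_b = [5.0, 3.0, 6.5]

child_avg = intermediate_recombination(parent_a, parent_b, weight=0.25)
child_mix = discrete_recombination(parent_a, parent_b, random.Random(0))
```

In this sketch the intermediate child lies between the parents in every parameter, whereas the discrete child inherits each parameter exactly from one parent.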

2.7 Mutation

The goal of the mutation operation is to introduce new genetic material into the population. Its utility lies in its ability to explore the immediate vicinity of promising regions of the potential energy surface that have been found via the mating variation. The most common mutation entails randomly perturbing the atomic positions or lattice vectors of a single parent organism to produce an offspring organism. Some approaches call for mutating both the lattice vectors and the atomic positions [26, 27, 33], while others affect only one type of variable. To apply a mutation to the lattice vectors, they are subjected to a randomly generated symmetric strain matrix of the form

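$$
\mathbf{I} + \boldsymbol{\epsilon} =
\begin{pmatrix}
1 + e_1 & e_6/2 & e_5/2 \\
e_6/2 & 1 + e_2 & e_4/2 \\
e_5/2 & e_4/2 & 1 + e_3
\end{pmatrix}
$$

where the components $e_i$ are taken from uniform or Gaussian distributions [31–33].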
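A lattice mutation of this kind might be sketched as follows. The sketch assumes a Voigt-style layout for the symmetric strain matrix (diagonal entries 1 + e1, 1 + e2, 1 + e3 and off-diagonal entries e4/2, e5/2, e6/2) and Gaussian components with a user-chosen standard deviation `sigma`; the function names are hypothetical.

```python
import random

def random_strain_matrix(sigma, rng):
    """Return a symmetric 3x3 matrix I + strain (assumed Voigt-style layout)."""
    e1, e2, e3, e4, e5, e6 = (rng.gauss(0.0, sigma) for _ in range(6))
    return [
        [1.0 + e1, e6 / 2.0, e5 / 2.0],
        [e6 / 2.0, 1.0 + e2, e4 / 2.0],
        [e5 / 2.0, e4 / 2.0, 1.0 + e3],
    ]

def strain_lattice(lattice, strain):
    """Multiply every lattice vector (a row of `lattice`) by the strain matrix."""
    return [
        [sum(strain[i][j] * vec[j] for j in range(3)) for i in range(3)]
        for vec in lattice
    ]

rng = random.Random(1)
cubic = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 4.0]]
strain = random_strain_matrix(sigma=0.05, rng=rng)
mutated = strain_lattice(cubic, strain)

# With sigma = 0 the strain matrix is the identity and the cell is unchanged.
unchanged = strain_lattice(cubic, random_strain_matrix(sigma=0.0, rng=rng))
```

Because the atoms are stored in fractional coordinates, straining the lattice vectors moves the atoms with the cell without any further bookkeeping.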

Mutations of the atomic positions are achieved in a similar fashion. Each of the three spatial atomic coordinates is perturbed by a random amount, often obtained from a uniform or Gaussian distribution [26, 27]. To keep the size of these perturbations reasonable, either an allowed range or a standard deviation is set by the user. Most formulations do not mutate every atom in the cell but instead specify a probability that any given atom in the cell will be displaced. The approach of Abraham et al. combines mutation with the mating variation, perturbing atomic positions after mating has been performed [38]. However, most authors treat mutation as a separate operation.
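A position mutation with a per-atom displacement probability could look like the sketch below. It assumes Cartesian coordinates and Gaussian displacements; `displace_prob` and `sigma` stand for the user-set probability and standard deviation mentioned above, and the names are hypothetical.

```python
import random

def mutate_positions(positions, displace_prob, sigma, rng):
    """Displace each atom with probability `displace_prob`.

    A displaced atom receives an independent Gaussian shift on each coordinate.
    """
    mutated = []
    for pos in positions:
        if rng.random() < displace_prob:
            pos = [x + rng.gauss(0.0, sigma) for x in pos]
        mutated.append(list(pos))
    return mutated

# Hypothetical Cartesian positions in angstrom.
atoms = [[0.0, 0.0, 0.0], [1.5, 1.5, 1.5], [3.0, 0.0, 1.5]]
none_moved = mutate_positions(atoms, displace_prob=0.0, sigma=0.2, rng=random.Random(2))
all_moved = mutate_positions(atoms, displace_prob=1.0, sigma=0.2, rng=random.Random(2))
```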

Glass et al. claim that randomly mutating atomic positions is not necessary because enough unintentional change occurs during mating and local optimization to make it mostly redundant [32]. However, in later work, Lyakhov et al. use a “smart” mutation operation where atoms with low-order local environments (see Sect. 2.11) are shifted more [40, 41]. A further refinement to mutation has been made by shifting all the atoms along the eigenvector of the softest phonon mode [40, 41].

Permutation is another mutation-type operation for multi-component systems that swaps the positions of different types of atoms in the cell. Generally, the user specifies which types of atoms can be exchanged, and the algorithm performs a certain number of these exchanges each time the permutation variation is used on a parent organism [26, 27, 37]. The extent to which exchanging atomic positions affects the energy is strongly system-dependent. For ionic systems, exchanging an anion with a cation is likely to result in a much larger energy change than exchanges between two different types of cations or anions. In metals, on the other hand, the change in energy under permutation corresponding to anti-site defects is generally small. It is often helpful to use a permutation variation when studying these systems in order to find the minimum among several competing low energy configurations.

The number of swaps carried out can be random within a specified range, or can be pulled from a user-specified distribution. Randomly exchanging all types of atoms has the drawback that many energetically unfavorable exchanges may be performed, especially in ionic systems. If the number of atoms in the cell is small, Trimarchi et al. do an exhaustive search of all possible ways to place the atoms on the atomic sites [33].
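The permutation variation can be sketched as a sequence of species swaps restricted to user-approved pairs. In this illustration the atomic sites stay fixed and only the species labels are exchanged, which is equivalent to swapping the positions of the two atoms; the function name and the `allowed_pairs` format are assumptions.

```python
import random

def permute_species(species, n_swaps, allowed_pairs, rng):
    """Swap species labels between sites, using only the allowed species pairs."""
    species = list(species)
    for _ in range(n_swaps):
        candidates = [
            (i, j)
            for i in range(len(species))
            for j in range(i + 1, len(species))
            if frozenset((species[i], species[j])) in allowed_pairs
        ]
        if not candidates:
            break  # no exchangeable pair exists in this cell
        i, j = rng.choice(candidates)
        species[i], species[j] = species[j], species[i]
    return species

# Hypothetical ionic cell: cations may be exchanged with each other, anions may not.
sites = ["Mg", "Ca", "O", "O"]
allowed = {frozenset(("Mg", "Ca"))}
swapped = permute_species(sites, n_swaps=1, allowed_pairs=allowed, rng=random.Random(0))
```

Restricting the allowed pairs in this way avoids the energetically unfavorable cation-anion exchanges discussed above.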

Lonie et al. employ a “ripple” variation, in which all atoms in the cell are shifted by varying amounts [31]. First, one of the three lattice vectors is randomly chosen and then atomic displacements are made parallel to this axis. The amount by which each atom is shifted is sinusoidal with respect to the atom's fractional coordinates along the other two lattice vectors. This produces a ripple effect through the cell. Lonie et al. argue that this variation makes sense because many materials display ripple-like structural motifs. Combining the ripple variation with other variations such as the lattice vector mutation and the permutation leads to hybrid variations that can improve the performance of the EA by reducing the number of redundant structures encountered in the search [31].
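A ripple-type displacement might be sketched as follows. The product-of-cosines form, the random phases, and the `waves` argument (number of periods along each of the other two axes) are assumptions made for illustration and may differ in detail from the implementation of Lonie et al. [31].

```python
import math
import random

def ripple(frac_coords, axis, amplitude, rng, waves=(1, 1)):
    """Shift atoms along `axis` by an amount that varies sinusoidally
    with their fractional coordinates along the other two axes."""
    others = [k for k in range(3) if k != axis]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in others]
    rippled = []
    for pos in frac_coords:
        shift = amplitude
        for k, n, phase in zip(others, waves, phases):
            shift *= math.cos(2.0 * math.pi * n * pos[k] + phase)
        new_pos = list(pos)
        new_pos[axis] = (pos[axis] + shift) % 1.0  # wrap back into the cell
        rippled.append(new_pos)
    return rippled

cell = [[0.1, 0.2, 0.3], [0.6, 0.7, 0.8]]  # hypothetical fractional coordinates
rippled = ripple(cell, axis=2, amplitude=0.1, rng=random.Random(3))
```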

Zhu et al. employ an additional mutation when searching for molecular solids. Since molecules are not usually spherically symmetric, a rotational mutation operator was introduced, in which a randomly selected molecule is rotated by a random angle [29].

2.8 System Size

The number of atoms per cell, N, is an important parameter that needs to be considered. If N is fixed to a value which is not a multiple of the size of the ground state primitive cell of the material, the search cannot identify the correct global minimum. However, N is a difficult parameter to search over. In the case of other degrees of freedom for the solution, such as interatomic distances and cell volume, the local optimization performed by the energy code helps to find the best values. No analogous operation is possible in the case of N. Furthermore, the energy hypersurface is not particularly well behaved with respect to this parameter. It is likely that values of N surrounding the optimum will lead to structures quite high in energy while values of N further from the ideal may lead to closer-to-ideal structures.

Several approaches exist to search over this parameter. The first is simply to “guess” the correct value of N [31–33]. Guessing N can make it easy to miss the global minimum, especially for systems about which little is known a priori. To increase the chances of finding the right number, searches can be performed at several different values of N, but this is inefficient. A second technique is to allow the cell size of candidate solutions to vary during the search. This can be done passively through the mating variation by not enforcing a constraint on the number of atoms in the offspring structure [27, 34, 38]. Incorporating a mutation-type variation specifically designed for varying N is an additional option.

Another way to aid the search for the correct number of atoms per cell is to use large cells. Large supercells effectively allow several possible primitive cell sizes to be searched at once because the cells can be supercells of multiple smaller cells. For example, a search with a 50-atom supercell is capable of finding ground state structures with primitive unit cells containing 1, 2, 5, 10, 25, and 50 atoms. However, because the number of local minima of the energy landscape increases exponentially with N [13], and because individual energy calculations are much more expensive for larger structures, efficiency suffers.
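The primitive cell sizes that a supercell of N atoms can represent are simply the divisors of N, as the short sketch below shows. The function name is hypothetical, and the sketch ignores the additional restriction that each candidate size must also be compatible with the stoichiometry.

```python
def compatible_primitive_sizes(n_atoms):
    """Cell sizes whose integer multiples can fill a supercell of n_atoms."""
    return [d for d in range(1, n_atoms + 1) if n_atoms % d == 0]

sizes_50 = compatible_primitive_sizes(50)
sizes_48 = compatible_primitive_sizes(48)
```

A 48-atom supercell covers ten primitive cell sizes compared with six for the 50-atom cell, which suggests that highly composite values of N are the more economical choice when this strategy is used.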

Lyakhov et al. describe another difficulty with the large supercell approach that arises when generating the initial population. Randomly generated large cells almost always have quite poor formation energies, and disordered glass-like structures dominate. This observation implies that there exists an upper limit to the size of randomly generated structures that can provide a useful starting point for the search. An evolutionary algorithm that starts from a low-diversity initial population composed of low-fitness structures has little chance of finding the global minimum [40, 41]. To obtain reasonably good large cells for the initial population, Lyakhov et al. generate smaller random cells of 15–20 atoms, and then take supercells of these [40, 41]. In this way, the organisms in the initial population can still contain many atoms, but they possess some degree of order and therefore tend to be more successful.

An alternative approach is to start with smaller supercells and encourage them to grow through the course of the search [26, 27]. This is achieved by occasionally doubling the cell size of one of the parents prior to performing the mating variation. The speed of cell growth in the population can be controlled through the frequency of the random doubling. The advantage of this technique is that it searches over N while still gaining (eventually) the benefits of large supercells. In addition, considering smaller structures first ensures that the quicker energy calculations are performed early in the search, and the more expensive energy calculations required for larger cells are only carried out once the algorithm has already gained some knowledge about what makes good structures.

2.9 Development and Screening

After a new organism has been created by one of the variations, it is checked against the constraints described in Sect. 2.1 and tested for redundancy (see Sect. 2.10). At this stage, many EAs scale the atomic density of the new organisms using an estimate of the optimal density [26, 27, 32]. Starting from an initial guess of the optimal density $\rho_0$, the density estimate is updated each generation by taking a weighted average of the old best guess $\rho_i$ and the average density of the best few structures in the most recent generation $\rho_{\mathrm{ave}}$:

$$
\rho_{i+1} = w\,\rho_{\mathrm{ave}} + (1 - w)\,\rho_i
$$

where $w$ is the density weighting factor. Any time a new organism is made, it is scaled to this atomic density before local relaxation. The primary reason for the density scaling is a practical one. Many minimization algorithms are quite time consuming if the initial solution is far from a minimum, and this scaling is an easy first pass at moving solutions towards a minimum. Because density scaling alters the interatomic distances and other constrained quantities, the constraint checks are performed after the density has been scaled.
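The density update and the subsequent rescaling can be sketched as follows. The sketch assumes that the weight w multiplies the recent average (so the old estimate receives 1 - w) and that the cell is scaled isotropically; the function names and numerical values are hypothetical.

```python
def update_density(rho_old, rho_ave, w):
    """Weighted average of the previous estimate and the recent best-structure average."""
    return w * rho_ave + (1.0 - w) * rho_old

def cell_volume(lattice):
    """Volume of the cell spanned by three lattice vectors (rows of `lattice`)."""
    (ax, ay, az), (bx, by, bz), (cx, cy, cz) = lattice
    return abs(ax * (by * cz - bz * cy)
               - ay * (bx * cz - bz * cx)
               + az * (bx * cy - by * cx))

def scale_to_density(lattice, n_atoms, rho_target):
    """Scale the cell isotropically so that n_atoms / volume equals rho_target."""
    factor = (n_atoms / (rho_target * cell_volume(lattice))) ** (1.0 / 3.0)
    return [[factor * x for x in vec] for vec in lattice]

# Densities in atoms per cubic angstrom (hypothetical values).
rho_new = update_density(rho_old=0.05, rho_ave=0.07, w=0.5)
cell = [[5.0, 0.0, 0.0], [0.0, 5.0, 0.0], [0.0, 0.0, 5.0]]
scaled = scale_to_density(cell, n_atoms=8, rho_target=rho_new)
```

Since the atoms are stored in fractional coordinates, only the lattice vectors need to be rescaled.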

2.10 Maintaining Diversity in the Population

As the evolutionary algorithm searches the potential energy surface, equivalent structures sometimes occur in the population. If a pair of structures mates more than once, they are likely to create similar offspring. If the set of best structures does not change from generation to generation due to promotion, the set of parents, and thus the resulting set of children, can also be very similar. In addition, as the population as a whole converges to the global minimum, all the organisms are likely to become more similar. Worse, once a few low-energy, frequently selected organisms are in the population, they reproduce repeatedly, and similar structures effectively fill up the following generations.

Duplicate structures hinder progress for several reasons. The most computationally expensive part of the algorithm is the energy calculations, and performing multiple energy calculations on the same structure is wasteful. However, this is exactly what happens if duplicate structures are not identified and removed from the population. Furthermore, low diversity in the population makes it difficult for the algorithm to escape local minima and to explore neighboring regions of the potential energy surface. This leads to premature convergence which is in practice indistinguishable from convergence to the correct global minimum. For these reasons, it is desirable to maintain the diversity of the population by identifying and removing equivalent structures. This is not a trivial task because, as discussed in Sect. 2.1, there exist infinitely many ways to represent a structure. Numerical noise adds to the difficulty of identifying equivalent structures.

Some authors directly compare atomic positions to determine whether two structures are identical. Lonie et al. developed an algorithm for this purpose, and it correctly identified duplicate structures that had been randomly rotated, reflected, or translated, and had random cell axes [42].

Tipton et al. also use a direct comparison of structures, with a slight modification [26, 27]. During the search, two lists of previously-observed structures are maintained. The first contains all the structures, relaxed and unrelaxed, that the algorithm has seen. If a new unrelaxed offspring structure matches one of the structures in the list, it is discarded. The assumption is that if it was good enough to keep the first time, it was promoted, and if not, there is no reason to spend more effort on it. A second list contains the relaxed structures of all the organisms in the current generation. If a new relaxed offspring structure matches one of the structures in this list, it is discarded to avoid having duplicate structures in the generation. This approach both minimizes the number of redundant calculations performed and prevents the population from stagnating.
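The two-list bookkeeping can be sketched as follows. The structure comparison itself is delegated to a user-supplied `same_structure` function, which is a placeholder here; the class and method names are hypothetical and do not reproduce the code of Tipton et al. [26, 27].

```python
class RedundancyGuard:
    """Keep one list of all structures seen and one of the current generation."""

    def __init__(self, same_structure):
        self.same_structure = same_structure
        self.seen = []        # every structure encountered, relaxed or unrelaxed
        self.generation = []  # relaxed structures in the current generation

    def accept_unrelaxed(self, structure):
        """Reject an unrelaxed offspring that matches anything seen before."""
        if any(self.same_structure(structure, s) for s in self.seen):
            return False
        self.seen.append(structure)
        return True

    def accept_relaxed(self, structure):
        """Reject a relaxed offspring that duplicates a member of this generation."""
        if any(self.same_structure(structure, s) for s in self.generation):
            return False
        self.seen.append(structure)
        self.generation.append(structure)
        return True

# Toy stand-in: a "structure" is a tuple of numbers compared within a tolerance.
def close(a, b):
    return all(abs(x - y) < 1e-2 for x, y in zip(a, b))

guard = RedundancyGuard(close)
first = guard.accept_unrelaxed((1.0, 2.0))
repeat = guard.accept_unrelaxed((1.0, 2.0))
relaxed = guard.accept_relaxed((1.1, 2.1))
relaxed_dup = guard.accept_relaxed((1.1004, 2.1))
```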

Wang et al. employ a bond characterization matrix to identify duplicate structures in the population [8]. The components of the matrix are based on bond lengths and orientations, and the types of atoms participating in the bond. Bahmann et al. identify duplicate structures by choosing a central atom in each organism and comparing the bond lengths between the central atom and the other atoms in a supercell [30]. These authors introduced an additional technique to help prevent the population from stagnating by stipulating an organism age limit. If an organism survives unchanged (via promotion) for a user-specified number of generations, it is removed from the population. This feature is meant to prevent a small number of good organisms from dominating the population and reducing its diversity.

Another method involves defining a fingerprint function which describes essential characteristics of a structure. When two organisms are found to have the same fingerprints, they are likely identical, and one is discarded. Several fingerprint functions have been used. The simplest is just the energy [22, 39]. The logic is that if two structures are in fact identical, they should have the same energies to within numerical noise. An interval is chosen to account for the noise. However, the size of the interval is fairly arbitrary and system-dependent, and this method is prone to false positives. Eliminating good unique organisms from the population can be even more detrimental to the search than not removing any organisms at all [42]. Lonie et al. expanded the fingerprint function to include three parameters: energy, space group, and the volume of the cell [31]. Again, intervals were set on the volume and energy. This is an improvement over simply using the energy as a fingerprint, but it can still occasionally fail, especially for low-symmetry structures or when atoms are displaced slightly from their ideal positions. Lonie et al. found that their direct comparison algorithm outperformed their fingerprint function at identifying duplicate structures [42].
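A three-parameter fingerprint comparison of the kind described above might be sketched as follows. The dictionary keys and the tolerance values are hypothetical and system-dependent; the space group is assumed to have been determined beforehand by a symmetry-finding tool.

```python
def same_fingerprint(a, b, energy_tol=1e-3, volume_tol=0.05):
    """Treat two structures as duplicates if the space groups agree and the
    energies and volumes agree to within the chosen intervals."""
    return (
        a["spacegroup"] == b["spacegroup"]
        and abs(a["energy"] - b["energy"]) <= energy_tol
        and abs(a["volume"] - b["volume"]) <= volume_tol
    )

# Hypothetical fingerprints (energy in eV/atom, volume in cubic angstrom/atom).
s1 = {"energy": -10.0002, "spacegroup": 225, "volume": 64.01}
s2 = {"energy": -10.0000, "spacegroup": 225, "volume": 64.00}
s3 = {"energy": -10.0001, "spacegroup": 221, "volume": 64.00}

dup_12 = same_fingerprint(s1, s2)
dup_13 = same_fingerprint(s1, s3)
```

The sketch also makes the failure mode visible: a slightly distorted copy of a structure can be assigned a different space group and thereby escape detection.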

Valle et al. employ a fingerprint function that is based on the distributions of the distances between different pairs of atom types in an extended cell [41, 43, 44]. For example, a binary system contains three interatomic distance distributions. The fingerprint function takes all three distributions into account. They used this fingerprint function to define an order parameter (see Sect. 2.11). Zhu et al. modified this fingerprint function slightly when searching for molecular solids; since distances between atoms within molecules do not change significantly, these distances are not considered when calculating structures' fingerprints [29].

Discarding duplicate structures from the population is not the only method employed to maintain diversity. Abraham et al. use a fingerprint function to determine how similar all the structures in a generation are to the lowest energy structure in the generation. Instead of simply removing similar structures, a modified fitness function is used which penalizes organisms based on their similarity to this best structure [45].

2.11 Order Parameters

Order parameters give a measure of the degree of order of an entire structure and also of the local environment surrounding individual atoms. Since energy is often correlated with local order, this can be a useful tool. Valle et al. extended their fingerprint function by using it to define an order parameter [40, 41, 44]. They used it to guide the algorithm at two points: to choose the slabs passed to the offspring during mating (Sect. 2.6) and to decide which atoms are shifted most in the “smart” mutation (Sect. 2.7).

2.12 Frequency of Promotion and Variations

The user-specified parameters of an evolutionary algorithm affect its performance. However, running hundreds or thousands of structure searches to optimize these parameters can be prohibitively expensive, especially if an ab initio energy model is used. Furthermore, optimal values depend on the system under study. Physical and chemical intuition can be used to specify some of the parameters, such as the minimum interatomic distance constraint, but there exists no clear way to determine many of the others without performing enough searches to obtain reliable statistics.

Many authors arbitrarily choose how much each variation contributes to the next generation [32–34]. Lonie et al. performed thousands of searches for the structure of TiO2 using empirical potentials to determine the best set of parameters for their algorithm [31]. They found that the relative frequency of the different variations did not significantly affect the success rate of the algorithm. However, the parameters associated with each variation did. For example, the lattice mutation variation was found to produce more duplicate structures when the magnitude of the mutation was small. This is likely to be due to structures relaxing back to their previous local minima when only slightly perturbed.

There is an important distinction between the relative frequency with which a given variation is called by the algorithm and the actual proportion of organisms in the next generation that are produced by that variation. The difference arises because not all the variations have the same likelihood of creating viable offspring. For example, mating is more likely to give good offspring structures than mutation of atomic positions because the latter will more frequently produce offspring that violate the minimum interatomic distance constraint. For this reason, the researcher's intention may be more clearly communicated if the proportion of offspring created by each variation is specified rather than the frequency that each variation is called by the algorithm.

Sometimes a situation arises in which it is not possible for one of the variations to produce a viable offspring organism. This could happen, for example, if the variation increases the size of the structures in the system, but all the potential parent organisms are already close to the maximum allowed cell size. In this case, the search will stop unless there is some way for the algorithm to get around the user-specified requirement that a certain percentage of the offspring come from this variation. Setting an upper limit on the number of failed attempts per variation is one way to achieve this [26, 27].

2.13 Convergence Criteria: Have We Found the Global Minimum?

When searching for an unknown structure, there is no known criterion that guarantees that the best structure encountered by the evolutionary algorithm is in fact the global minimum. One common technique to analyze the success rate of a heuristic search algorithm was given by Hartke [46]. In this method, many independent structure searches are performed on the same system, using the same set of parameters for the algorithm. For each search the energy of the best structure in each generation is recorded. These values are then used to create a plot of the energy vs generation number (or the total number of energy evaluations) that contains three curves: the energy of the highest-energy best structure, the energy of the lowest-energy best structure, and the average energy of the best structures. One shortcoming of the Hartke plot is that the lowest and highest best energies encountered are outliers, and in practice they depend strongly on the choice of the number of independent structure searches.

Tipton et al. employ a statistically more robust approach to quantifying an EA's performance, in which the median, the 10th percentile, and the 90th percentile energies of the best structures are plotted [27]. The 10th and 90th percentiles characterize the distribution of results better than the extremes do, and they are less sensitive both to outliers and to the number of independent structure searches performed.
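The percentile curves can be computed from the per-generation best energies of many independent runs, as in the sketch below. It assumes that all runs have the same number of generations and uses a simple nearest-rank percentile; the function names and the sample data are hypothetical.

```python
import math

def running_best(energies):
    """Best (lowest) energy found up to and including each generation."""
    best, out = float("inf"), []
    for e in energies:
        best = min(best, e)
        out.append(best)
    return out

def percentile(values, q):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100.0 * len(ordered)))
    return ordered[rank - 1]

def performance_curves(runs):
    """10th percentile, median, and 90th percentile of the running best energy."""
    best = [running_best(r) for r in runs]
    curves = {"p10": [], "median": [], "p90": []}
    for generation in zip(*best):
        curves["p10"].append(percentile(generation, 10))
        curves["median"].append(percentile(generation, 50))
        curves["p90"].append(percentile(generation, 90))
    return curves

# Five hypothetical runs, three generations each (best energy per generation).
runs = [
    [-1.0, -1.2, -1.1],
    [-0.8, -0.9, -1.5],
    [-1.1, -1.1, -1.3],
    [-0.5, -1.0, -1.0],
    [-0.9, -1.4, -1.4],
]
curves = performance_curves(runs)
```

Because lower energies are better, the 10th percentile curve tracks the more successful runs and the 90th percentile curve the less successful ones.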

Figure 6 shows an example of a performance distribution plot for Zr2Cu2Al that was obtained by performing 100 independent runs of an EA with an embedded atom model potential [27]. These plots provide insight into the expected performance of the algorithm for the given material system and parameter settings and enable statistical comparisons of the performance of different methodologies or parameterizations of an EA. Of course, the strength of these conclusions depends on how many searches were used to construct the performance distribution plot, and the algorithm must be tested on systems with known ground state structures to be certain when the search was successful.

Fig. 6 Performance distribution plot for 100 structure searches at fixed composition for Zr2Cu2Al using an embedded atom model potential [27]. The energy of the 90th percentile best structure is shown in red, the 10th percentile best structure in blue, and the median best structure in black

Lonie et al. showed that a decaying exponential fits the average-best energy curve of a Hartke plot well for a system with a known ground state structure [31]. The half-life of the exponential fit provides a measure of how fast the algorithm converges and can be used to determine a stopping criterion for the search. However, not all searches find the global minimum, so allowing a search to run for many half-lives increases confidence in the result but does not guarantee that the global minimum will be found.
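One simple way to extract such a half-life is a linear least-squares fit to the logarithm of the energy above the known ground state, as sketched below. This fitting procedure is an assumption made for illustration and is not necessarily the one used by Lonie et al. [31]; the function name and the synthetic data are hypothetical.

```python
import math

def fit_half_life(avg_best, e_ground):
    """Fit ln(E_g - e_ground) versus generation g with a straight line
    and convert the decay rate into a half-life in generations."""
    pts = [(g, math.log(e - e_ground)) for g, e in enumerate(avg_best) if e > e_ground]
    n = len(pts)
    mean_g = sum(g for g, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    slope = (sum((g - mean_g) * (y - mean_y) for g, y in pts)
             / sum((g - mean_g) ** 2 for g, _ in pts))
    return math.log(2.0) / -slope

# Synthetic average-best curve that halves its excess energy every 4 generations.
e_ground = -5.0
avg_best = [e_ground + 2.0 * 0.5 ** (g / 4.0) for g in range(12)]
half_life = fit_half_life(avg_best, e_ground)
```

A stopping rule could then be phrased as a multiple of this half-life, with the caveat stated above that no such multiple guarantees success.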

A more common approach is to stop the search after a user-specified number of generations has elapsed without improvement of the best organism [26, 27, 30, 31]. Stopping once an allocated amount of computational resources has been expended is a popular alternative. Bahmann et al. declare convergence when the population diversity falls below a certain threshold or when all the organisms have very similar energies [30].
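The first of these criteria can be sketched in a few lines. The names `patience` (number of generations without improvement) and `tol` (minimum energy decrease that counts as an improvement) are hypothetical.

```python
def should_stop(best_energies, patience, tol=1e-6):
    """Return True if the best energy has not improved by more than `tol`
    during the last `patience` generations."""
    if len(best_energies) <= patience:
        return False
    earlier_best = min(best_energies[:-patience])
    recent_best = min(best_energies[-patience:])
    return recent_best > earlier_best - tol

stalled = should_stop([-1.0, -1.2, -1.2, -1.2, -1.2], patience=3)
improving = should_stop([-1.0, -1.2, -1.2, -1.3], patience=3)
too_short = should_stop([-1.0, -1.2], patience=3)
```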

Although most authors use one of the fairly simple convergence criteria mentioned above, a quantitative statistical approach has been proposed by Venkatesh et al. [14]. Using Bayesian analysis, they determined the distribution of local minima based on the number found by a random search. This distribution was then used to calculate how many attempts would be required to find the global minimum with a specified probability.

3 Phase Diagram Searching

Even when one can say with a reasonable degree of confidence that the evolutionary algorithm has converged to the global minimum of the potential energy landscape, the result might still not represent the lowest energy structure that would be observed in nature. Skepticism is justified for several reasons [47]. First, as discussed in Sect. 2.8, unless the number of atoms in the cell is correctly guessed or allowed to vary, the EA cannot find the global minimum. Second, the structure identified as the global minimum might not be mechanically or dynamically stable, which would be reflected in energy-lowering imaginary phonon modes for the proposed global minimum crystal structure. Third, the reported global minimum might actually represent a metastable phase that decomposes into two or more structures with different stoichiometries. To determine whether this is the case, a phase diagram search must be performed. In addition to predicting the decomposition of structures into phases of other stoichiometries, phase diagrams are of great interest for many practical applications.

In order to perform a phase diagram search, we make use of the convex hull construction [9]. The formation energies of all structures with respect to the elemental constituents are plotted vs the composition. To determine the elemental references, one can either refer to the literature or perform preliminary searches. The smallest convex surface bounding these points is the convex hull, and the lowest energy facet for each composition is of physical interest. Thus, the convex hull is a graphical representation of the lowest energy a system can attain at each composition, and the points that lie on the convex hull correspond to stable structures. Figure 7 is an example of a convex hull for the Li–Si binary system [48].

Fig. 7 A phase diagram search of the Li–Si binary system by Tipton et al. [48] using the method of Trimarchi et al. [49] showed that a search for relatively small unit cell structures could approximate the structural and energetic characteristics of the known very large experimental structures and thus be used to predict the voltage characteristics of a Li–Si battery anode. The search also identified a previously unknown member of the low-temperature phase diagram with composition Li5Si2

Two approaches have been used to construct the convex hull. The first is to perform fixed-stoichiometry searches at many compositions [9, 50]. The lowest energy structure found in each search is then placed on an energy vs composition plot and the convex hull is constructed. However, this method is computationally expensive because it requires many separate searches to adequately sample the composition space [27].

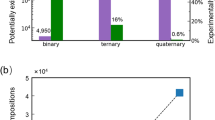

The second approach entails modifying the evolutionary algorithm to search over composition space in the course of a single run. This requires two changes to the standard algorithm. The first is that the stoichiometry of structures the algorithm considers must be allowed to vary. This can be achieved simply by giving the initial population random stoichiometries and removing stoichiometry constraints on offspring structures [49]. The second modification involves the objective function. The algorithm constructs a current convex hull for each generation of structures, and a structure's objective function is defined as its distance from the current convex hull [26, 27, 49]. In this way, structures that lie on the current convex hull have the highest fitness, and those above the convex hull have lower fitnesses. Selection then acts on this value in the standard way described in Sect. 2.4. As the search progresses, the true convex hull of the system is approached.
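For a binary system, the convex hull and the distance of a structure above it can be sketched as follows. The sketch assumes that each structure is reduced to a pair (composition fraction, formation energy per atom) and that the elemental references at compositions 0 and 1 are included; the function names and sample values are hypothetical.

```python
def _cross(o, a, b):
    """Cross product of the vectors o->a and o->b (positive for a left turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def lower_hull(points):
    """Lower convex hull of (composition, formation energy) points."""
    lowest = {}
    for x, e in points:  # keep only the lowest energy at each composition
        lowest[x] = min(e, lowest.get(x, e))
    hull = []
    for p in sorted(lowest.items()):
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) <= 0:
            hull.pop()
        hull.append(p)
    return hull

def energy_above_hull(point, hull):
    """Vertical distance from a point to the hull at the same composition."""
    x, e = point
    for (x0, e0), (x1, e1) in zip(hull, hull[1:]):
        if x0 <= x <= x1:
            return e - (e0 + (e1 - e0) * (x - x0) / (x1 - x0))
    raise ValueError("composition lies outside the range of the hull")

structures = [(0.0, 0.0), (0.25, -0.1), (0.5, -0.4), (0.75, -0.1), (1.0, 0.0)]
hull = lower_hull(structures)
distance = energy_above_hull((0.25, -0.1), hull)
```

In a phase diagram search the hull would be rebuilt every generation, and structures with the smallest distance above it would receive the highest fitness.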

As discussed in Sect. 2.8, the global minimum cannot be found if the cell does not contain an integer multiple of the correct number of atoms. Since the structures lying on the convex hull often do not contain the same numbers of atoms, allowing the number of atoms to vary (Sect. 2.8) during the phase diagram search helps the algorithm find the correct convex hull. An alternative approach is to perform several composition searches with different, fixed system sizes. Each search generates a convex hull, and these hulls can be overlaid to obtain the overall lowest convex hull [49]. It should also be noted that the stoichiometries accessible to the algorithm are constrained by the number of atoms in the cell. For example, a cell containing four atoms in a binary A–B system provides the algorithm with only five possible compositions (0, 25, 50, 75, and 100% A). The use of larger system sizes may be necessary for the algorithm to find the correct convex hull.
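The compositions accessible to a binary cell of fixed size can be enumerated directly, as the following sketch shows. The function name is hypothetical, and exact fractions are used to avoid rounding issues.

```python
from fractions import Fraction

def accessible_compositions(n_atoms):
    """Fractions of species A that a binary cell with n_atoms atoms can realize."""
    return [Fraction(k, n_atoms) for k in range(n_atoms + 1)]

four_atom = [float(x) for x in accessible_compositions(4)]
two_fifths_in_4 = Fraction(2, 5) in accessible_compositions(4)
two_fifths_in_10 = Fraction(2, 5) in accessible_compositions(10)
```

A composition of 40% A, for example, cannot be realized in a four-atom cell but becomes accessible with ten atoms.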

Another difficulty with phase diagram searches is inadequate sampling of the entire composition range. Mating between parents with different stoichiometries tends to produce offspring structures of intermediate composition. Because of this, over time the population as a whole may drift toward the middle region of the composition range, making it difficult to sufficiently sample more extreme compositions. Two solutions to this problem have been proposed [26, 27]. The first is to modify the selection criteria in such a way that mating between parents with similar compositions is encouraged. The second is to divide the composition range into sections and perform separate searches over each section. Agglomerating the results from all the sections gives the overall convex hull.

4 Energy Calculations and Local Relaxation

The potential energy landscape over which an evolutionary algorithm searches is defined by the code used for the energy calculations. These energy codes approximate the true potential energy landscape of the system, so the global minimum found by an EA will only represent the true global minimum of the system insofar as the approximate Hamiltonian accurately represents the physics of the system.

As discussed in Sect. 1.1, the potential energy surface is divided into basins of attraction by the local structure optimization or relaxation available in most energy codes (see Fig. 1). In order to find the global minimum, we need only sample a structure that resides in its basin of attraction, and the local optimizer will do the rest. This tremendously reduces the effective size of the space that must be searched; a relatively sparse sampling of a region can identify most of the local minima [7]. Local optimization is therefore crucial to the success of the search. Although the method depends on the energy code used, the local optimization problem is relatively well understood and its solutions are generally stable.

Glass et al. observed that the energies of a relaxed and unrelaxed structure are only weakly correlated [32]. This implies that the energy of an unrelaxed structure is not a reliable indicator of how close that structure is to a minimum in the potential energy landscape. Although omitting local optimization is computationally cheaper, an evolutionary search performed this way is unlikely to be successful. Woodley et al. compared the performance of an evolutionary algorithm with and without local relaxation and found that locally relaxing every structure greatly improved the efficiency and success rate of the algorithm [51].

Both empirical and ab initio energy codes have successfully been used in evolutionary algorithms to perform energy calculations and local relaxations. Due to their approximate nature, empirical potential energy landscapes often contain unphysical minima [52]. In addition, the cut-off distances imposed in many interatomic potentials leave discontinuities in the energy landscape, which can impede local relaxation. Although they mimic the true potential energy landscape more accurately than empirical potentials, density functional theory (DFT) calculations are also capable of misguiding the search if care is not taken. Pickard et al. found that insufficiently dense k-point sampling can lead to false minima, and for calculations at high pressures, pseudopotentials with small enough core radii must be used to give accurate results [7].

Many EA implementations are interfaced with multiple energy codes, and more than one type of energy calculation may even be used in a single search. Ji et al. employed both empirical potentials and DFT calculations when searching for structures of ice at high pressures [53]. Lennard-Jones potentials were used for most energy calculations, but ab initio calculations were performed periodically and the parameters of the Lennard-Jones potentials were fitted to the DFT results. In this way, the empirical potential improved as the search progressed, and fewer computational resources were consumed than if ab initio methods alone had been used.
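The idea of refitting an empirical potential to periodic ab initio results can be sketched as follows. This is not the fitting procedure of Ji et al., which is not described here; it is a minimal example under stated assumptions. The pair energy is written as E(r) = A/r^12 − B/r^6, which is linear in A and B, so an ordinary least-squares fit reduces to a 2×2 system. The reference energies are synthetic stand-ins for DFT data, and the function names are hypothetical.

```python
def lj_energy(r, epsilon, sigma):
    """Lennard-Jones pair energy 4*eps*[(sigma/r)^12 - (sigma/r)^6]."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)

def fit_lj(distances, energies):
    """Least-squares fit of E(r) = A/r^12 - B/r^6; returns (epsilon, sigma)."""
    s11 = s12 = s22 = s1y = s2y = 0.0
    for r, e in zip(distances, energies):
        x1 = r ** -12          # basis function multiplying A
        x2 = -(r ** -6)        # basis function multiplying B
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        s1y += x1 * e
        s2y += x2 * e
    # Solve the 2x2 normal equations by Cramer's rule.
    det = s11 * s22 - s12 * s12
    a = (s1y * s22 - s12 * s2y) / det
    b = (s11 * s2y - s12 * s1y) / det
    # Convert A = 4*eps*sigma^12 and B = 4*eps*sigma^6 back to (eps, sigma).
    sigma = (a / b) ** (1.0 / 6.0)
    epsilon = b * b / (4.0 * a)
    return epsilon, sigma

# Synthetic "reference" energies standing in for ab initio results (assumed values).
distances = [1.0 + 0.1 * i for i in range(11)]
reference = [lj_energy(r, 0.8, 1.1) for r in distances]

epsilon_fit, sigma_fit = fit_lj(distances, reference)
print(f"fitted epsilon = {epsilon_fit:.4f}, sigma = {sigma_fit:.4f}")
```

For a crystal, the total energy is a sum of such pair terms and remains linear in A and B, so the same fit applies with the basis functions replaced by lattice sums. In a search, the fit would be repeated each time new ab initio energies become available.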

5 Summary of Methods

Tables 1 and 2 list the salient details of several implementations of evolutionary algorithms for structure prediction. The codes listed in Table 1 are production codes available to other users; the codes in Table 2 are research codes. In the following we summarize some of the distinguishing features of these evolutionary algorithms.

The Genetic Algorithm for Structure Prediction (GASP) is interfaced with VASP, GULP, LAMMPS, and MOPAC and has phase diagram searching capability [26, 27]. In addition, GASP can perform searches with a variable number of atoms in the cell, and it implements a highly tunable probability distribution for selecting organisms for mutation, mating, and promotion. The Open-Source Evolutionary Algorithm for Crystal Structure Prediction (XTALOPT) is interfaced with VASP, PWSCF, and GULP. It incorporates a unique ripple mutation, as well as hybrid mutations. It does not use a generational scheme but rather allows offspring structures to act as parents as soon as they are created [31]. The Universal Structure Predictor: Evolutionary Xtallography (USPEX) code is interfaced with VASP, SIESTA, PWSCF, GULP, DMACRYS, and CP2K. It incorporates a unique order parameter, both for cells and individual atoms, that is used to help guide the search [32, 40]. The Evolutionary Algorithm for Crystal Structure Prediction (EVO) is interfaced with PWSCF and GULP. It applies an age limit to structures encountered in the search, and it also employs a mating operation that is different from the cut-and-splice technique used in most other evolutionary structure searches [30]. Finally, the Module for Ab Initio Structure Evolution (MAISE) is interfaced with VASP. Both planar and periodic slices can be used during mating, and it has the option to perform mating and mutation in a single variation [54].

Trimarchi et al. developed an evolutionary algorithm for structure prediction that is interfaced with VASP [33]. They later extended the algorithm to include phase diagram searching [49]. Abraham et al. designed an evolutionary algorithm with several unique features, including periodic slicing during the mating operation and mutation of atomic positions only after mating [38]. The algorithm also accepts offspring structures with different numbers of atoms than the parents. It is interfaced with CASTEP. The evolutionary algorithm of Ji et al. is interfaced with VASP, and it constrains the structures it considers to a constant volume [34]. Bush et al. developed an evolutionary algorithm that incorporates a surrogate objective function [2].

6 Applications

Evolutionary algorithms have been used to solve the structures of many types of systems including molecules, clusters, surfaces, nanowires, and nanoporous materials [39, 55–57]. Here we focus on applications of EAs to bulk, 3D periodic systems. Within this constraint, we have made an effort to provide a comprehensive review of prior applications. We grouped the applications into six categories based on the type of material studied: pure elements, hydrogen-containing compounds, intermetallics, minerals, molecular solids, and other inorganic compounds. Tables 3, 4, 5, 6, 7, and 8 correspond to these categories and list the applications of the method. For each study, we indicate the system studied, the number of atoms in the configuration space searched over, the energy code used, and the lead author.

6.1 Elemental Solids

Table 3 describes searches for elemental solids. Some elemental phase diagrams are still not fully characterized, especially under extreme conditions such as high pressure. Several elements have been predicted to display unusual properties at high pressure, such as superconductivity. Ma and Oganov studied several different elements under pressure. They found a new phase of boron with 28 atoms in the unit cell that is predicted to be stable in the pressure range 19–89 GPa [58]. A search of carbon under high pressures led to the prediction that the bc8 structure is more stable than diamond above 1 TPa [25]. Oganov et al. predicted several new superconducting phases of calcium at pressures up to 120 GPa [61]. A study of hydrogen at pressures up to 600 GPa predicted that it remains a molecular solid throughout this pressure range [25]. The interesting case of hydrogen under pressure will be further described below in the discussion of hydrogen-containing compounds. Ma et al. predicted that potassium and rubidium follow the same sequence of phase transitions under pressure (40–300 GPa) observed experimentally for cesium, but predicted a new cubic phase of lithium above 300 GPa [63]. Ma et al. also studied nitrogen under pressure, predicting new polymeric insulating phases above 188 GPa, and studied sodium at pressures up to 1 TPa, predicting a new optically transparent, insulating phase above 320 GPa [65]. They have also reported a monoclinic, metallic, molecular phase of oxygen in the range of 100–250 GPa whose calculated XRD pattern is in agreement with experiment [66].

Bi et al. searched for phases of europium at pressures up to 100 GPa and predicted several nearly degenerate structures in the range 16–45 GPa, which may help explain the mixed phase structure observed experimentally in this pressure regime [62] and the occurrence of superconductivity and magnetism in these phases [109]. In a novel application of evolutionary algorithms, Park et al. used an EA to verify that a new modified embedded atom potential for molybdenum accurately reproduced the energy landscape of molybdenum [52].

6.2 Hydrogen-Containing Compounds

Table 4 summarizes EA structure searches performed on hydrogen-containing compounds. Ashcroft suggested in 1968 that hydrogen could become a high-temperature superconductor under pressure [110] and in 2006 that doping hydrogen to form chemically precompressed hydrogen-rich materials could be a potential route to reduce the pressure required for superconductivity [111].

Hooper, Lonie, and Zurek have performed several studies on polyhydrides of alkali and alkaline earth metals under pressure. A metallic phase of LiH6 was predicted to be stable above 110 GPa [50], and a stable phase of NaH9 was predicted to metallize at 250 GPa [68]. Stable rubidium polyhydride phases were predicted to metallize at pressures above 200 GPa [70]. A stable, superconducting phase of MgH12 was identified under pressure, with a predicted Tc of 47–60 K at 140 GPa [73]. Lonie et al. also identified a new phase of BeH2 above 150 GPa, as well as a stable superconducting phase of BaH6, with a Tc of 30–38 K at 100 GPa [72].

Several studies have been performed on the group IV hydrides ranging from methane to plumbane. Gao et al. searched for methane structures under pressure and predicted that it dissociates into ethane and hydrogen at 95 GPa, butane and hydrogen at 158 GPa, and finally carbon and hydrogen at 287 GPa [75]. Martinez et al. looked at silane under pressure and predicted two new phases, one stable from 25 to 50 GPa, and the other from 220 to 250 GPa. The latter was predicted to be superconducting, with a Tc of 16 K at 220 GPa [76]. Gao et al. searched for germane and stannane under pressure. Germane was predicted to be stable with respect to decomposition into pure germanium and hydrogen above 196 GPa, and it was predicted to be superconducting, with a Tc of 64 K at 220 GPa [77]. Two stannane isomers were predicted – one stable from 96 to 180 GPa, and the other occurring above 180 GPa. Both phases were calculated to be superconductors [78]. Zaleski et al. performed evolutionary structure searches for plumbane (PbH4) under pressure and predicted that it forms a stable non-molecular solid at pressures greater than 132 GPa [79]. Wen et al. predicted five low energy three-dimensional structures of graphane in the pressure range 0–300 GPa, and each was either semiconducting or insulating [80].

Zhou et al. investigated platinum hydrides under pressure and predicted a superconducting hexagonal phase of PtH to be stable above 113 GPa [81]. Hu et al. searched for LiBeH3 at pressures up to 530 GPa and predicted two new insulating phases [83]. Zhou et al. found two new tetragonal structures of Mg(BH4)2 under pressure whose densities, bulk moduli, and XRD patterns match experimentally measured values [88].

6.3 Intermetallic Compounds

Table 5 summarizes searches for intermetallic compounds. Trimarchi et al. have studied many intermetallics. Their algorithm identified the correct lattice of Au2Pd, which is known to be fcc, but it failed to find the lowest energy atomic configuration [33] because the system exhibits several nearly degenerate structures. In another study by these authors, a new phase of IrN2 was discovered [89]. They performed a phase diagram search on the Al–Sc system, which exhibits several crystal structures across the composition range, and successfully identified the experimentally known ground state phases [49]. Xie et al. discovered two new superconducting phases of CaLi2, one stable from 35 to 54 GPa and the other stable from 54 to 105 GPa. Furthermore, they predicted that CaLi2 is unstable with respect to dissociation into the constituents at pressures greater than 105 GPa [90].

Sometimes the underlying lattices for these systems are known empirically, and the search reduces to finding the lowest energy arrangement of atoms on the lattice. For these cases, an efficient method to search over permutations of atomic positions is crucial. D'Avezac et al. employed a virtual atom technique, in which the species type of an atom is “relaxed” to determine if exchanging atom types at that site would likely lead to a lower energy configuration [112]. In contrast to real space mating operations, these authors also employed a reciprocal space mating scheme [112]. With this technique, the structure factors of two parent organisms are combined to form the offspring organism's structure factor, which is then transformed to real space, giving the offspring organism. A cluster expansion fitted to DFT calculations was leveraged to perform energy calculations.
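A reciprocal space mating operation of this general kind can be sketched on a toy problem. The example below is not the scheme of D'Avezac et al., whose details are not given here. It assumes a one-dimensional periodic binary lattice with occupations +1 and −1, takes the long-wavelength structure-factor components from one parent and the remainder from the other, and maps the result back onto ±1 occupations while preserving the composition of the first parent. The function names, the cutoff rule, and the rounding step are all assumptions.

```python
import cmath

def structure_factor(occ):
    """Discrete Fourier transform of the site occupations (+1 or -1)."""
    n = len(occ)
    return [sum(s * cmath.exp(-2j * cmath.pi * j * k / n) for j, s in enumerate(occ))
            for k in range(n)]

def reciprocal_mate(parent_a, parent_b, k_cut):
    """Combine two parents in reciprocal space and return an offspring occupation list."""
    n = len(parent_a)
    sf_a = structure_factor(parent_a)
    sf_b = structure_factor(parent_b)
    # Components with |k| <= k_cut come from parent A, the rest from parent B.
    # The rule is symmetric in k and n - k, so the inverse transform is real.
    child_sf = [sf_a[k] if min(k, n - k) <= k_cut else sf_b[k] for k in range(n)]
    # Inverse transform to a real-valued "density" on the lattice sites.
    density = [sum(child_sf[k] * cmath.exp(2j * cmath.pi * j * k / n)
                   for k in range(n)).real / n
               for j in range(n)]
    # Assign +1 to the sites with the largest density, keeping parent A's composition.
    n_up = sum(1 for s in parent_a if s == 1)
    ranked = sorted(range(n), key=lambda j: density[j], reverse=True)
    child = [-1] * n
    for j in ranked[:n_up]:
        child[j] = 1
    return child

parent_a = [1, 1, -1, -1, 1, -1, 1, -1]
parent_b = [1, -1, 1, -1, -1, 1, -1, 1]
print(reciprocal_mate(parent_a, parent_b, k_cut=1))
```

Because the offspring inherits whole Fourier components, ordering patterns that extend across the cell can be passed on intact, which a real space cut would tend to break up.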

6.4 Minerals

Table 6 contains a summary of EA searches for the structures of several minerals. Many of these studies were carried out at high pressure in order to simulate the conditions in planetary interiors. Oganov et al. found two new phases of MgCO3 under pressure. In addition, a new phase of CaCO3 was reported to be stable above 137 GPa [37], and the structure of the post-aragonite phase of CaCO3, stable from 42 to 137 GPa, was solved [16]. Iron carbides of various compositions were explored under pressure, and it was predicted that the cementite structure of Fe3C is unstable at pressures above 310 GPa, indicating that this phase does not exist in the Earth's inner core [82]. Zhang et al. searched for iron silicide structures under pressure and predicted that only FeSi with a cesium chloride structure is stable at pressures greater than 20 GPa. Ono et al. investigated the structure of FeS under pressure and reported several new phases up to 135 GPa [92]. Wu et al. predicted a new low temperature post-perovskite phase of SiO2 with the Fe2P-type structure [96].

6.5 Molecular Crystals

Table 7 summarizes searches for molecular crystals. Applications of molecular solids include high-energy materials, pharmaceuticals, pigments, and metal-organic frameworks [113–115]. Molecular solids are not always in their thermodynamic ground states. Instead, the system can be kinetically trapped, so that the molecular units are preserved. Zhu et al. made several changes to the standard EA to facilitate searching for molecular crystals, and they applied their algorithm to search for structures of ice, methane, ammonia, carbon dioxide, benzene, glycine, and butane-1,4-diammonium dibromide. Experimentally known structures were recovered by the algorithm [29]. Ji et al. searched for ice at terapascal pressures and predicted three new phases [53]. Lennard-Jones potentials were used to model the system, but ab initio calculations were periodically performed and the results used to fit the empirical potentials. Hermann et al. performed evolutionary searches for high-pressure phases of ice and predicted that ice becomes metallic at 4.8 TPa [116]. Oganov et al. predicted that the β-cristobalite structure is the most stable for CO2 between 19 and 150 GPa [37].

6.6 Inorganic Compounds

Table 8 summarizes searches for inorganic compounds. Many of these studies aimed to clarify regions of various phase diagrams. Others sought to identify phases with desirable properties, such as superconductivity.

Tipton et al. applied an evolutionary algorithm to investigate Li–Si battery anode materials and carried out a phase diagram search on the Li–Si system. They discovered a new stable phase with composition Li5Si2 [48]. Hermann et al. searched for structures in the Li–B system under pressure and found several stable structures. LiB was found to become increasingly stable as the pressure was increased beyond 300 GPa [99, 100]. Hermann et al. also predicted a new stable phase of LiBeB at ambient pressure and several additional phases under pressures up to 320 GPa [108]. Kolmogorov et al. searched for Fe–B structures at several different compositions and reported new phases with compositions FeB4 and FeB2.

Xu et al. discovered a new orthorhombic phase of CsI that is predicted to be stable from 42 GPa up to at least 300 GPa [98]. This material is predicted to metallize at 100 GPa and to become superconducting at 180 GPa. Zhu et al. searched for xenon oxides under pressure and found three stable compounds: XeO above 83 GPa, XeO2 above 102 GPa, and XeO3 above 114 GPa [104]. Bush et al. solved the structure of Li3RuO4 at zero pressure. They used an alternative fitness function in their EA and only calculated energies at the end of the search [2]. Li et al. resolved the structure of superhard BC2N and found it to have a rhombohedral lattice [107].

7 Conclusions