Abstract

The idea that there is a self-controlled learning advantage, where individuals demonstrate improved motor learning after exercising choice over an aspect of practice compared to no-choice groups, has different causal explanations according to the OPTIMAL theory or an information-processing perspective. Within OPTIMAL theory, giving learners choice is considered an autonomy-supportive manipulation that enhances expectations for success and intrinsic motivation. In the information-processing view, choice allows learners to engage in performance-dependent strategies that reduce uncertainty about task outcomes. To disentangle these potential explanations, we provided participants in choice and yoked groups with error or graded feedback (Experiment 1) and binary feedback (Experiment 2) while learning a novel motor task with spatial and timing goals. Across both experiments (N = 228 participants), we did not find any evidence to support a self-controlled learning advantage. Exercising choice during practice did not increase perceptions of autonomy, competence, or intrinsic motivation, nor did it lead to more accurate error estimation skills. Both error and graded feedback facilitated skill acquisition and learning, whereas no improvements from pre-test performance were found with binary feedback. Finally, the impact of graded and binary feedback on perceived competence highlights a potential dissociation of motivational and informational roles of feedback. Although our results regarding self-controlled practice conditions are difficult to reconcile with either the OPTIMAL theory or the information-processing perspective, they are consistent with a growing body of evidence that strongly suggests self-controlled conditions are not an effective approach to enhance motor performance and learning.

The underlying source of errors in skilled actions is often ambiguous and difficult to assign as the learner must rely on noisy and delayed sensory information. Feedback from an external source, such as a coach or computer display, can facilitate or augment this process (Sigrist et al., 2013). Knowledge of results feedback (Salmoni et al., 1984) can provide varying amounts of information to learners depending on its characteristics. Error feedback provides precise information about the magnitude and direction of the error (e.g., –42 cm), graded feedback provides coarse information about either the magnitude or direction of the error (e.g., “too far”), and binary feedback indicates only success or failure information (e.g., “miss”) (Luft, 2014). When to provide this feedback is often decided by an external agent; however, this feedback decision can also be made by the learner, a form of self-controlled learning. These self-controlled feedback schedules have typically enhanced motor skill learning compared to yoked feedback schedules, wherein learners experience the feedback schedule created by a self-controlled counterpart, but without any choice (see Sanli et al., 2013; Ste-Marie et al., 2020 for reviews).

Why self-controlled learning advantages emerge has garnered considerable attention in the motor skill-learning literature. Within their OPTIMAL (Optimizing performance through intrinsic motivation and attentional learning) theory of motor learning, Wulf and Lewthwaite (2016) have argued that providing participants the opportunity to exercise choice, as in a self-controlled group, creates a virtuous cycle. Specifically, choice leads to increased (perceived) autonomy, leading to enhanced expectancies (e.g., perceived competence) and increased (intrinsic) motivation. These motivational influences lead to improved motor performance, creating a positive feedback loop that ultimately enhances motor learning compared to those not given the same choice opportunities. Support for this view has been drawn from experimental work where participants exercise choice over task-irrelevant or incidental choices. Exercising choice over the color of golf balls to putt (Lewthwaite et al., 2015 Experiment 1) or the mat underneath a target (Wulf et al., 2018 Experiment 1), which picture to hang in a lab (Lewthwaite et al., 2015 Experiment 2), hand order in a maximal force production task (Iwatsuki et al., 2017), which photos to look at while running (Iwatsuki et al., 2018), and the order of exercises to perform (Wulf et al., 2014) have been suggested to improve motor performance or learning. Other research, however, has failed to replicate this benefit of task-irrelevant or incidental choices on motor performance or learning (Carter & Ste-Marie, 2017a; Grand et al., 2017; McKay & Ste-Marie, 2020, 2022).

Rather than a motivational account, others have forwarded an information-processing explanation. From this perspective, exercising choice allows learners to tailor practice to their individual needs (Chiviacowsky & Wulf, 2002, 2005) by engaging in performance-contingent strategies (Carter et al., 2014, 2016; Laughlin et al., 2015; Pathania et al., 2019) to reduce uncertainty about movement outcomes (Barros et al., 2019; Carter et al., 2014; Carter & Ste-Marie, 2017a, 2017b; Grand et al., 2015). Evidence for this view has come from experiments that showed the timing of the feedback decision relative to task performance matters (Carter et al., 2014; Chiviacowsky & Wulf, 2005), that task-relevant choices are more effective than task-irrelevant choices (Carter & Ste-Marie, 2017a; cf. Wulf et al., 2018 Experiment 2), that interfering with information-processing activities during (Couvillion et al., 2020; Woodard & Fairbrother, 2020) or after (Carter & Ste-Marie, 2017b; Woodard & Fairbrother, 2020) task performance eliminates self-controlled learning benefits, and that the ability to accurately estimate one’s performance is enhanced in choice compared to yoked groups (Carter et al., 2014; Carter & Patterson, 2012). Thus, further investigation is required to test predictions from these two explanations to better understand why exercising choice during practice confers an advantage for motor skill learning.

To dissociate between the motivational and information-processing accounts of the self-controlled learning advantage, we manipulated the amount of information participants in choice and yoked (i.e., no-choice) groups experienced with their feedback schedule during acquisition of a novel motor task. In Experiment 1, participants received error or graded feedback to assess how high and moderate levels of informational value impact the self-controlled learning advantage. Given both error and graded feedback provide salient information about how to correct one’s behavior relative to the task goal (i.e., both generate an error signal), in Experiment 2 we provided participants with binary feedback. As binary feedback is devoid of information about the necessary change to improve one’s behavior (i.e., does not generate an error signal), we could better isolate the motivational nature of choice to test between the two explanations for the self-controlled learning advantage. Motor learning was assessed using delayed (~24 hours) retention and transfer tests. If the OPTIMAL theory is correct, we hypothesized that the characteristics of one’s feedback schedule would not matter for the self-controlled learning advantage as this advantage arises from the opportunity for choice, a common feature of all choice groups. Thus, we predicted that all choice groups would demonstrate superior performance and learning compared to the yoked groups. Alternatively, if the information-processing account is correct, we hypothesized that the characteristics of one’s feedback schedule would matter for the self-controlled learning advantage as feedback with greater informational value would be more effective for reducing uncertainties about movement outcomes. Thus, we predicted that choice over an error feedback schedule would be the most effective pairing for performance and learning. We also included self-report measures of perceptions of autonomy, competence, and intrinsic motivation, and assessments of error estimation abilities to respectively test auxiliary assumptions of the OPTIMAL theory and information-processing explanations.

Methods

We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures in the study (Simmons et al., 2012). All data and R scripts can be accessed here: https://github.com/cartermaclab/expt_sc-feedback-characteristics.

Participants

Experiment 1

One hundred and fifty-two right-handed (Oldfield, 1971), healthy adults participated in Experiment 1 (Mage = 20.64 years, SDage = 2.45, 88 females). Sample size was determined from an a priori power analysis using the ANOVA: fixed effects, main effects and interactions option in G*Power (Faul et al., 2009) with the following parameters: α = .05, β = .20, f = .23, numerator = 1, and groups = 4. This revealed a required sample of 151 participants. Our chosen effect size was based on a meta-analytic estimate (f = .32) by McKay et al. (2014); however, we used a more conservative estimate given the uncertainty of how choice would interact with our feedback characteristic manipulation. Participants were compensated $15 CAD or with course-credit for their time. All participants gave written informed consent and the experiment was approved by McMaster University’s Research Ethics Board.

Experiment 2

A new sample of 76 right-handed (Oldfield, 1971), healthy adults participated in Experiment 2 (Mage = 20.18 years, SDage = 3.18, 47 females). Sample size was selected so group size matched that used in Experiment 1. Participants were compensated $15 CAD or with course-credit for their time. All participants gave written informed consent and the experiment was approved by McMaster University’s Research Ethics Board.

Task

In Experiments 1 and 2, participants sat in a chair facing a monitor (1920 × 1080 resolution) with their left arm in a custom manipulandum that restricted movement to the horizontal plane. Their elbow was bent at approximately 90° and they grasped a vertical handle with their left hand. Handle position was adjusted as needed to ensure the central axis of rotation was about the elbow. The task required a rapid “out-and-back” movement such that the reversal happened at 40° (in pre-test, acquisition, and retention) or 60° (in transfer). The starting point for all trials was 0°. Participants were instructed to make a smooth movement to the reversal and back without hesitating when reversing their movement. The movement time goal to the reversal was always 225 ms. The task and instructions were similar to those used by Sherwood (1996, 2009). Vision of the manipulandum and limb were occluded during all phases of the experiment. Angular displacement for the elbow was collected via a potentiometer attached to the axis of rotation of the custom manipulandum. Potentiometer data were digitally sampled at 1000 Hz (National Instruments PCIe-6321) using a custom LabVIEW program and stored for offline analysis.

Procedure

Experiment 1

The first 76 participants were randomly assigned to either the Choice+Error-Feedback group (n = 38; Mage = 20.24 years, SDage = 2.37, 22 females) or the Choice+Graded-Feedback group (n = 38; Mage = 20.76 years, SDage = 3.02, 26 females). This is typical in the self-controlled learning literature as the self-controlled participants’ self-selected feedback schedules are required for providing feedback to the participants in the yoked (i.e., control) groups. The remaining 76 participants were randomly assigned to either the Yoked+Error-Feedback group (n = 38; Mage = 20.53 years, SDage = 2.13, 23 females) or the Yoked+Graded-Feedback group (n = 38; Mage = 21.03 years, SDage = 2.32, 22 females).

Data collection consisted of two sessions separated by approximately 24 h. Session one included a pre-test (12 trials) and an acquisition phase (72 trials). Session two included the delayed retention (12 trials) and transfer (12 trials) tests. No feedback about motor performance was provided in the pre-test, retention, or transfer. Prior to the pre-test, all participants received instructions about the task and its associated spatial and timing goals. Additionally, half of the participants in each group were randomly selected to verbally estimate their performance on the spatial and timing goals after each trial in the pre-test. Only a subset of participants were asked to estimate their performance in pre-test to mitigate the potential that doing so would prompt participants to adopt this strategy during the experiment as error estimation has been suggested (e.g., Chiviacowsky & Wulf, 2005) to be adopted spontaneously by participants controlling their feedback schedule. However, asking participants to estimate their performance during pre-test is necessary to be able to assess how this skill develops as a function of one’s practice condition.

Participants were reminded of the instructions about the task and its associated goals at the start of the acquisition phase. Group-specific instructions regarding feedback were also provided. Participants in the Choice+Error-Feedback group and the Choice+Graded-Feedback group were told they could choose their feedback schedule, with the restriction that they must select feedback on 24 of the 72 acquisition trials. They were informed that if the number of remaining feedback requests equaled the number of remaining acquisition trials, these trials would default to feedback trials. This feedback restriction was implemented to ensure that the relative frequency of feedback was equated across all groups. Similar restrictions have been used in past research involving multiple choice groups (e.g., Chiviacowsky & Wulf, 2005). Participants in the Yoked+Error-Feedback group and the Yoked+Graded-Feedback group were told they may or may not receive feedback following a trial based on a predetermined schedule. Thus, participants in these groups were not aware that their feedback schedule was actually created by a participant in a corresponding choice group. While this yoking procedure ensures that the total number of feedback trials and their relative placement during acquisition are identical, the content of the feedback reflected each participant’s own performance. Error feedback for the spatial and timing goals was provided as the difference between the participant’s actual performance and the task goal (i.e., constant error). Graded feedback for the spatial goal was provided as “too short” if performance was < 40 degrees (or 60 degrees in transfer), “hit” if exactly 40 degrees, and “too far” if > 40 degrees. For the timing goal, graded feedback was provided as “too fast” when performance was < 225 ms, “hit” if exactly 225 ms, and “too slow” if > 225 ms. All participants were shown a sample feedback display that corresponded to their experimental group and were asked to interpret it aloud for the researcher to verify understanding.
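The scheduling rule and the two feedback types described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the LabVIEW program used for data collection: the function names are hypothetical, and we assume that no further feedback is available once the 24 requests have been used.

```python
from typing import List


def realized_schedule(requests: List[bool], total_trials: int = 72,
                      feedback_quota: int = 24) -> List[bool]:
    """Return the feedback schedule a choice participant actually experiences.

    `requests[i]` is the learner's yes/no answer on trial i; it only matters
    while the learner still has a genuine choice.
    """
    schedule = []
    remaining_feedback = feedback_quota
    for trial in range(total_trials):
        remaining_trials = total_trials - trial
        if remaining_feedback == 0:
            gets_feedback = False   # assumption: quota exhausted, no more feedback
        elif remaining_feedback == remaining_trials:
            gets_feedback = True    # remaining trials default to feedback trials
        else:
            gets_feedback = requests[trial]
        if gets_feedback:
            remaining_feedback -= 1
        schedule.append(gets_feedback)
    return schedule


def error_feedback(score: float, goal: float) -> float:
    """Signed difference between performance and goal (constant error)."""
    return score - goal


def graded_feedback(score: float, goal: float,
                    low_label: str, high_label: str) -> str:
    """Coarse direction-only feedback, with "hit" reserved for an exact match."""
    if score < goal:
        return low_label
    if score > goal:
        return high_label
    return "hit"


# A learner who never asks for feedback is forced onto the last 24 trials.
choice_schedule = realized_schedule([False] * 72)

# Yoking: the counterpart receives the same trial-by-trial schedule,
# but the feedback content is computed from their own performance.
yoked_schedule = list(choice_schedule)
spatial_message = graded_feedback(37.5, 40.0, "too short", "too far")
timing_message = graded_feedback(240.0, 225.0, "too fast", "too slow")
```

Under this rule every choice participant ends acquisition with exactly 24 feedback trials, which is what allows the relative feedback frequency to be equated across groups.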

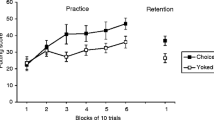

A typical acquisition trial (see Fig. 1) began with the current trial number displayed (500 ms), followed by a visual “Get Ready!” and a visual go-signal (800 ms apart). Participants were free to begin their movement when ready following the visual go-signal (i.e., green circle) as this was not a reaction time task. The computer screen was blank while participants made their movement. When participants returned to the starting position, a red circle was displayed on the monitor. Following a 2000-ms feedback delay interval, the feedback decision prompt was presented for the self-controlled groups. The number of remaining feedback trials was also displayed during this feedback delay interval. If feedback was not selected, a blank screen was displayed for 3000 ms. If feedback was selected via verbal response (or imposed on the yoked groups), it was also displayed for 3000 ms.

Fig. 1. Overview of a typical acquisition trial for the choice groups, showing the sequence of events these participants experienced during the acquisition phase. Trials began by displaying the current acquisition trial number (500 ms). Shortly after, the text “Get Ready!” appeared on the screen and 800 ms later, a visual go-signal was presented in the form of a green circle in the center of the screen. Participants began their movement when ready after seeing the visual go-signal, as we were not interested in reaction time. While participants completed their rapid out-and-back movement, the computer screen was blank. Upon returning to the starting position, a red circle appeared in the center of the screen. A 2000-ms feedback delay interval was used and this interval was followed by the feedback prompt. The feedback prompt also displayed an updated counter representing the number of feedback trials they had left. If the number of remaining feedback trials matched the number of acquisition trials left, these trials automatically defaulted to feedback trials. On trials where feedback was not requested, a blank screen (a) was shown for 3000 ms. When feedback was selected via verbal response, feedback was provided for both the spatial and timing goals according to their experimental group. The error feedback group (b) saw their constant error, the graded feedback group (c) saw either “too far” or “too short” for the spatial goal and “too fast” or “too slow” for the timing goal, and the binary feedback group (d) saw either “hit” or “miss” for the task goals. The sequence of events was the same for the yoked groups with the exception that they did not see a feedback prompt. The sequence of events was similar in pre-test, retention, and transfer except all trials were no-feedback trials.

Before the retention and transfer tests, participants were reminded about the task and its associated goals. All participants were asked to verbally estimate their performance after each trial in retention and transfer. After the pre-test, trials 12 and 72 in acquisition, and before the delayed retention test, participants verbally answered a series of questions pertaining to perceived competence, task interest and enjoyment, and perceived autonomy. The perceived competence and task interest and enjoyment questions were from the Intrinsic Motivation Inventory (McAuley et al., 1989; Ryan, 1982) and the perceived autonomy questions were used in earlier work (Barros et al., 2019; Carter & Ste-Marie, 2017a; St. Germain et al., 2022). Cronbach’s alpha values for each questionnaire at each time point are reported in Table 1.

Experiment 2

Similar to Experiment 1, the first half of participants were assigned to the Choice+Binary-Feedback group (n = 38; Mage = 22.37 years, SDage = 3.13, 19 females) and the remaining participants were assigned to the Yoked+Binary-Feedback group (n = 38; Mage = 18.00 years, SDage = 0.93, 28 females). Binary feedback for the spatial goal was provided as “hit” if performance was exactly 40 degrees and as “miss” for everything else. For the timing goal, binary feedback was provided as “hit” when performance was exactly 225 ms and as “miss” for everything else. Data collection was identical to that of Experiment 1, except in the acquisition instructions participants in both groups were shown a sample binary feedback display and were asked to interpret it aloud for the researcher to verify understanding.
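To illustrate why binary feedback carries no information about the direction of the required correction, the sketch below applies the hit/miss rule to an overshoot and an undershoot of the same size. The function name and the `tolerance` argument are hypothetical; the default of zero mirrors the "exactly 40 degrees" and "exactly 225 ms" criteria, although how exact equality was evaluated given the measurement resolution is not stated here.

```python
def binary_feedback(score: float, goal: float, tolerance: float = 0.0) -> str:
    """Return "hit" only when the score matches the goal, otherwise "miss"."""
    return "hit" if abs(score - goal) <= tolerance else "miss"


# Two spatial errors in opposite directions produce the same message,
# so the learner cannot tell which way to adjust the next movement.
overshoot_message = binary_feedback(46.0, 40.0)
undershoot_message = binary_feedback(34.0, 40.0)
```

Graded feedback would have distinguished these two trials ("too far" versus "too short"), whereas the binary display is identical for both.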

Data analysis

Movement trajectories for all trials were visually inspected by a researcher and trials with errors (e.g., technical issues, moving before the “go” signal) were removed. A total of 4.03% (662/16416) and 3.73% (306/8208) of trials for Experiments 1 and 2 were removed, respectively. Trials were aggregated into blocks of 12 trials, resulting in one block of trials for pre-test, retention, and transfer, and six blocks of trials for acquisition. Our primary performance outcome variable was total error (E) (Henry, 1974, 1975) and was computed using the equation:

\[E = \sqrt{\frac{\sum_{i=1}^{n} (x_i - T)^2}{n}}\]

where \(x_i\) is the score on the \(i\)th trial, \(T\) is the target goal, and \(n\) is the number of trials in a block.
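As a minimal sketch of this computation, assuming total error is the root-mean-square deviation of trial scores from the target, the Python functions below split a phase into blocks of 12 trials and compute E for each block. The function names are hypothetical, and representing removed trials as `None` and dropping them within their block is our assumption for illustration; the analysis scripts in the linked repository are the authoritative implementation.

```python
import math
from typing import List, Optional, Sequence


def total_error(scores: Sequence[float], target: float) -> float:
    """Root-mean-square deviation of the trial scores from the target goal."""
    n = len(scores)
    if n == 0:
        raise ValueError("a block needs at least one valid trial")
    return math.sqrt(sum((x - target) ** 2 for x in scores) / n)


def block_total_errors(trials: Sequence[Optional[float]], target: float,
                       block_size: int = 12) -> List[float]:
    """Compute total error per block, skipping removed trials (None)."""
    errors = []
    for start in range(0, len(trials), block_size):
        block = [x for x in trials[start:start + block_size] if x is not None]
        errors.append(total_error(block, target))
    return errors


# Hypothetical reversal angles (degrees) for a 40-degree spatial goal.
example_block = [43.0, 37.0, 40.0, 40.0]
example_error = total_error(example_block, 40.0)
```

Because each deviation is squared before averaging, E reflects both bias and variability around the target, so overshoots and undershoots cannot cancel out.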

To test for performance differences in pre-test, retention, and transfer, total error for the spatial and timing goals was analyzed in separate mixed ANOVAs (Experiment 1: 2 Choice x 2 Feedback x 3 Test; Experiment 2: 2 Choice x 3 Test). To test for performance differences during acquisition, total error for the spatial and timing goals during acquisition was analyzed in separate mixed ANOVAs (Experiment 1: 2 Choice x 2 Feedback x 6 Block; Experiment 2: 2 Choice x 6 Block). Model diagnostics of total error for the spatial and timing goals revealed skewed distributions. We therefore conducted sensitivity analyses using the shift function, which is a robust statistical method well suited for skewed distributions (Rousselet & Wilcox, 2020; Wilcox, 2021). The results of these analyses (see Supplementary A) were consistent with those of the mixed ANOVAs, which we report below. Our primary psychological outcome variables were intrinsic motivation (i.e., interest/enjoyment), perceived competence, and perceived autonomy. The mean score of the responses for these constructs at each time point was calculated for each participant and analyzed in separate mixed ANOVAs (Experiment 1: 2 Choice x 2 Feedback x 4 Time; Experiment 2: 2 Choice x 4 Time). Of secondary interest, error estimation abilities were assessed as total error between a participant’s estimation and actual performance in pre-test (50% of the participants in each group in Experiments 1 and 2), retention, and transfer (see Supplementary B).

Alpha was set to .05 for all statistical analyses. Corrected degrees of freedom using the Greenhouse–Geisser technique are always reported for repeated measures with more than two levels. Generalized eta squared (\(\eta^2_G\)) is provided as an effect size statistic (Bakeman, 2005; Lakens, 2013; Olejnik & Algina, 2003) for all omnibus tests. Post hoc comparisons were Holm–Bonferroni corrected to control for multiple comparisons. Statistical tests were conducted using R (Version 4.1.2, R Core Team, 2021). The R packages afex (Version 1.1.1, Singmann et al., 2021), compute.es (Re, 2013), ggResidpanel (Version 0.3.0.9000, Goode and Rey 2022), kableExtra (Version 1.3.4, Zhu 2021), metafor (Version 3.4.0, Viechtbauer 2010), papaja (Version 0.1.0.9999, Aust and Barth 2020), patchwork (Version 1.1.0.9000, Pedersen 2020), rogme (Version 0.2.1, Rousselet et al., 2017), tidyverse (Version 1.3.1, Wickham et al., 2019), and tinylabels (Version 0.2.3, Barth 2022) were used in this project.
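For readers unfamiliar with the Holm–Bonferroni correction, the following Python sketch shows the standard step-down adjustment. It is not taken from the analysis scripts (which were written in R); the function name and the p-values are hypothetical and serve only to illustrate the procedure.

```python
from typing import List, Sequence


def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Return Holm-adjusted p-values in the original order.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    a running maximum keeps the adjusted values monotone, and values are
    capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical unadjusted p-values from three pairwise comparisons.
raw_p = [0.01, 0.04, 0.03]
adjusted_p = holm_adjust(raw_p)
significant = [p < 0.05 for p in adjusted_p]
```

In this made-up example only the first comparison remains below .05 after adjustment, although all three unadjusted p-values were below .05.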

Results

Pre-test, retention, and transfer

Experiment 1

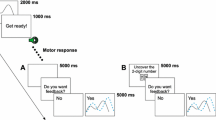

Spatial (Fig. 2a) and timing (Fig. 2b) error decreased from the pre-test to the retention and transfer tests. There was a main effect of test for spatial error, F(1.33,196.52) = 40.20, p < .001, \(\eta^2_G\) = .138, where performance was less errorful in retention and transfer than pre-test (p’s < .001) and performance in retention was better than transfer (p < .001). A main effect of test was also found for timing error, F(1.08,160.23) = 81.21, p < .001, \(\eta^2_G\) = .245, with pre-test performance more errorful than both retention and transfer (p’s < .001), and retention was less errorful than transfer (p < .001). The main effect of choice was not significant for either spatial, F(1,148) = .52, p = .471, \(\eta^2_G\) = .001, or timing, F(1,148) = .32, p = .547, \(\eta^2_G\) < .001, error.

Fig. 2. Experiment 1 data. The choice with error feedback (Choice+Error) group is shown in dark blue circles, the choice with graded feedback (Choice+Graded) group is shown in light blue squares, the yoked with error feedback (Yoked+Error) group is shown in red triangles, and the yoked with graded feedback (Yoked+Graded) group is shown in yellow crosses. Error bars denote 95% bootstrapped confidence intervals. (a) Spatial total error (degrees) and (b) timing total error (ms) averaged across blocks and participants within each group. Dotted vertical lines denote the different experimental phases. Pre-test and acquisition occurred on day 1 and retention and transfer occurred approximately 24 h later on day 2. Self-reported scores for perceived autonomy (c), perceived competence (d), and intrinsic motivation (e) after the pre-test and after blocks 1 and 6 of acquisition on day 1, and before the retention test on day 2. Scores could range on a Likert scale from 1 (Strongly disagree) to 7 (Strongly agree). Dots represent individual data points.

Experiment 2

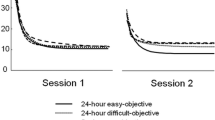

Spatial (Fig. 3a) and timing (Fig. 3b) error did not change considerably from the pre-test to the retention and transfer tests. The main effect of choice was not significant for either spatial, F(1,74) = .23, p = .631, \(\eta^2_G\) = .002, or timing, F(1,74) = .11, p = .738, \(\eta^2_G\) = .001, error. All other main effects and interactions were also not significant.

Fig. 3. Experiment 2 data. The choice with binary feedback (Choice+Binary) group is shown in green circles and the yoked with binary feedback (Yoked+Binary) group is shown in purple squares. Error bars denote 95% bootstrapped confidence intervals. (a) Spatial total error (degrees) and (b) timing total error (ms) averaged across blocks and participants within each group. Dotted vertical lines denote the different experimental phases. Pre-test and acquisition occurred on day 1 and retention and transfer occurred approximately 24 h later on day 2. Self-reported scores for perceived autonomy (c), perceived competence (d), and intrinsic motivation (e) after the pre-test and after blocks 1 and 6 of acquisition on day 1, and before the retention test on day 2. Scores could range on a Likert scale from 1 (Strongly disagree) to 7 (Strongly agree). Dots represent individual data points.

Acquisition

Experiment 1

All groups of participants improved their performance of the spatial goal during the acquisition phase (Fig. 2a). This was supported by a significant main effect of block, F(2.41,357.13) = 60.18, p < .001, \(\eta^2_G\) = .130, where block 1 was less accurate than all other blocks (p’s < .001), block 2 was less accurate than all subsequent blocks (p’s ≤ .021), and blocks 3 and 4 were more errorful than block 6 (p’s ≤ .015). The main effect of choice was not significant, F(1,148) = .06, p = .813, \(\eta^2_G\) < .001. Timing error also decreased during the acquisition period (Fig. 2b). The significant main effect of block, F(1.75,259.59) = 55.44, p < .001, \(\eta^2_G\) = .138, was superseded by a significant Feedback x Block interaction, F(1.75,259.59) = 3.56, p = .035, \(\eta^2_G\) = .010. Post hoc comparisons showed that timing error for those receiving error feedback was reduced from block 1 in all subsequent blocks (p’s < .001), but performance plateaued from block 2 onward in acquisition (p’s ≥ .257). Timing error for the participants that received graded feedback was also reduced from block 1 in all subsequent blocks (p’s < .001); however, these participants continued to improve across acquisition blocks as block 2 was more errorful than blocks 3 to 6 (p’s ≤ .028). The main effect of choice was not significant, F(1,148) = .54, p = .465, \(\eta^2_G\) = .002. Descriptives for the number of “hit” trials for each group are provided in Table 2.

Experiment 2

Spatial (Fig. 3a) and timing (Fig. 3b) error remained relatively flat from block 1 to block 6 in the acquisition period. The main effect of choice was not significant for either the spatial, F(1,74) = .08, p = .776, \(\eta^2_G\) < .001, or the timing, F(1,74) = .37, p = .542, \(\eta^2_G\) = .004, goal. All other main effects and interactions for both task goals were not significant. Descriptives for the number of “hit” trials for each group are provided in Table 2.

Psychological variables

Experiment 1

Perceptions of autonomy (Fig. 2c) showed a slight decrease across time points, supported by a main effect of time, F(2.25,332.95) = 3.69, p = .022, \(\eta^2_G\) = .003. Perceived autonomy was higher after block 1 of acquisition compared to self-reported ratings prior to completing the retention test (p = .031). The main effect of choice was not significant, F(1,148) = 2.38, p = .125, \(\eta^2_G\) = .014. Self-ratings for perceived competence (Fig. 2d) were similar across groups after the pre-test, but then began to diverge after block 1 based on feedback characteristic. Main effects of time, F(1.92,283.47) = 3.43, p = .036, \(\eta^2_G\) = .006, and feedback, F(1,148) = 47.36, p < .001, \(\eta^2_G\) = .188, were superseded by a Feedback x Time interaction, F(1.92,283.47) = 28.04, p < .001, \(\eta^2_G\) = .050. Perceived competence scores were not significantly different after the pre-test (p = .232); however, perceptions of competence were significantly lower in those participants receiving graded feedback compared to error feedback at all other time points (p’s < .001). The main effect of choice was not significant, F(1,148) = 0.03, p = .862, \(\eta^2_G\) < .001. Self-reported scores for intrinsic motivation (Fig. 2e) generally decreased after block 1, which was supported by a main effect of time, F(2.40,355.90) = 14.69, p < .001, \(\eta^2_G\) = .012. Intrinsic motivation scores initially increased following the pre-test to after block 1 (p = .003); however, scores after block 1 of acquisition were greater than those reported at the end of acquisition (i.e., block 6) and before retention (p’s < .001). Self-reported ratings were also lower before retention compared to after the pre-test (p = .043). The main effect of choice was not significant, F(1,148) = 1.69, p = .195, \(\eta^2_G\) = .010.

Experiment 2

Self-reported scores for perceived autonomy (Fig. 3c) were similar across all time points. The main effect of choice was not significant, F(1,74) = 0.07, p = .792, \(\eta^2_G\) < .001. All other main effects and interactions were also not significant. Perceptions of competence (Fig. 3d) showed a considerable decrease after the pre-test, F(1.85,136.91) = 106.10, p < .001, \(\eta^2_G\) = .298, where scores were significantly greater after the pre-test compared to all other time points (p’s < .001), and were higher after block 1 of acquisition than before retention (p = .004). The main effect of choice was not significant, F(1,74) = 0.25, p = .620, \(\eta^2_G\) = .002. Self-ratings for intrinsic motivation (Fig. 3e) generally decreased across time points, which was supported by a main effect of time, F(2.37,175.55) = 15.31, p < .001, \(\eta^2_G\) = .018. Intrinsic motivation was higher after the pre-test than after block 6 of acquisition and before retention (p’s < .001), and higher after block 1 than after block 6 and before retention (p’s < .026). The main effect of choice was not significant, F(1,74) = 1.04, p = .312, \(\eta^2_G\) = .013.

Equivalence analysis

Our main comparison of interest was between choice and yoked (i.e., no-choice) groups. To evaluate the self-controlled learning effect, Hedges’ g values for the spatial and timing goals were aggregated within each experiment while accounting for within-subject dependencies (see Supplementary C for the psychological data). Next, random effects meta-analyses were conducted on the retention test data to generate a summary point estimate and 90% confidence intervals (CI) with Experiments 1 and 2 combined and also separate. The overall estimated effect when combining both experiments was g = .05 (favoring self-controlled) and 90% CI [-.12, .23]. The overall estimated effect for Experiment 1 was g = .03 (favoring self-controlled) and 90% CI [-.19, .25]. For Experiment 2, it was g = .09 (favoring self-controlled) and 90% CI [-.19, .37].

Equivalence tests can be conducted to evaluate whether the observed differences are significantly smaller than a pre-determined smallest effect size of interest (see Harms & Lakens, 2018 for a discussion). Typically, a two one-sided tests (TOST) procedure is used to compare the observed effect to upper and lower equivalence bounds, and if the effect is significantly smaller than both bounds then the hypothesis that the effect is large enough to be of interest is rejected (Lakens, 2017; Schuirmann, 1987). However, we did not pre-specify a smallest effect of interest, so instead we report the 90% confidence intervals (see above). All effect sizes outside this interval would be rejected by the TOST procedure while all values inside the interval would not. Based on the combined overall estimate the present experiments can be considered inconsistent with all effects larger than g = ±.23.
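The correspondence between a 90% confidence interval and the TOST procedure at α = .05 can be made concrete with a short Python sketch. The function names are hypothetical, and the sketch assumes symmetric equivalence bounds of ±δ; the intervals are those reported above for the retention test.

```python
from typing import Tuple


def excluded_by_tost(effect: float, ci_90: Tuple[float, float]) -> bool:
    """True when a candidate effect lies outside the 90% CI,
    i.e., when a one-sided test at alpha = .05 would reject it."""
    low, high = ci_90
    return effect < low or effect > high


def smallest_symmetric_bound(ci_90: Tuple[float, float]) -> float:
    """Smallest delta such that the whole CI falls within [-delta, +delta]."""
    low, high = ci_90
    return max(abs(low), abs(high))


combined_ci = (-0.12, 0.23)       # Experiments 1 and 2 combined
experiment_1_ci = (-0.19, 0.25)
experiment_2_ci = (-0.19, 0.37)

combined_bound = smallest_symmetric_bound(combined_ci)
```

Any symmetric smallest effect size of interest larger in magnitude than this bound would lead to a conclusion of equivalence, whereas a smaller one would leave the question open.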

Discussion

The purpose of the present experiments was to test between motivational and information-processing accounts of the putative self-controlled learning advantage (see Ste-Marie et al., 2020 for a review). According to the OPTIMAL theory (Wulf & Lewthwaite, 2016), self-controlled practice or choice conditions are advantageous because the provision of choice increases perceptions of autonomy and competence, which increase intrinsic motivation and ultimately both motor performance and learning. Conversely, others have argued that self-controlled feedback is effective because it provides the opportunity to request feedback in a performance-dependent way that reduces uncertainty about movement outcomes relative to task goals (Carter et al., 2014; Carter & Ste-Marie, 2017b; Grand et al., 2015) to enhance error detection and correction abilities (Barros et al., 2019; Carter et al., 2014; Chiviacowsky & Wulf, 2005). In contrast to these predictions, we did not find evidence that providing learners with choice over their feedback schedule was beneficial for motor learning, despite collecting a much larger sample (N = 228 across both Experiments) than those commonly used in self-controlled learning experiments (median sample size N = 36 in a meta-analysis by McKay et al., 2022) and motor learning experiments in general (median n/group = 11 in a review by Lohse et al., 2016). Further, exercising choice in practice did not enhance perceptions of autonomy, competence, or intrinsic motivation, and also did not result in more accurate performance estimations in delayed tests of motor learning. Overall, we found no support for the OPTIMAL theory or information-processing perspective. Our results challenge the prevailing view that the self-controlled learning benefit is a robust effect.

The failed replication of a self-controlled learning advantage was surprising given the dominant view for the past 25 years has been that it is a robust effect and one that should be recommended to coaches and practitioners (Sanli et al., 2013; Ste-Marie et al., 2020; Wulf & Lewthwaite, 2016). Our findings are, however, consistent with a growing list of relatively large (often pre-registered) experiments that have not found self-controlled learning benefits (Bacelar et al., 2022; Grand et al., 2017; Leiker et al., 2019; McKay & Ste-Marie, 2020, 2022; St. Germain et al., 2022; Yantha et al., 2022). One possible explanation for this discrepancy between earlier and more recent experiments may be that the self-controlled learning advantage was the result of underpowered designs, which has been highlighted as a problem in motor learning research (see Lohse et al., 2016 for a discussion). When underpowered designs find significant results, they are prone to be false positives with inflated estimates of effects (Button et al., 2013; Lakens & Evers, 2014), which can be further exaggerated with questionable research practices such as p-hacking and selective reporting (e.g., Munafò et al., 2017; Simmons et al., 2011). Thus, a self-controlled learning advantage may not actually exist. Alternatively, if one does exist, then it seems likely that it is a much smaller effect than originally estimated and requires considerably larger samples to reliably detect than those commonly used in motor learning research. Consistent with these ideas, a recent meta-analysis provided compelling evidence that the self-controlled learning advantage is not robust and its prominence in the motor learning literature is due to selective publication of statistically significant results (McKay et al., 2022). We estimated the overall effect of self-controlled practice in retention collapsed across experiments to be significantly smaller than any effect larger than g = .23. This is consistent with the estimates from McKay et al. (2022) after accounting for publication bias (g = -.11 to .26), which suggested either no effect or a small effect in an unknown direction. Taken together, we argue that it may be time for the self-controlled learning advantage to be considered a non-replicable effect in motor learning.
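The inflation of significant effects in underpowered designs can be illustrated with a small simulation. This sketch is not part of the reported analyses and makes several assumptions: two equal groups of 18 (splitting the median N = 36 noted earlier), a true standardized effect of d = 0.2 chosen only for illustration, normally distributed scores, and a hard-coded two-tailed critical t of 2.032 for df = 34. The function name is hypothetical.

```python
import math
import random
import statistics

T_CRIT_DF34 = 2.032  # approximate two-tailed .05 critical t for df = 34 (assumed constant)


def simulate_significant_effects(true_d, n_per_group, n_sims, t_crit, seed=1):
    """Return the observed Cohen's d of every simulated study with |t| > t_crit."""
    rng = random.Random(seed)
    significant = []
    for _ in range(n_sims):
        choice = [rng.gauss(true_d, 1.0) for _ in range(n_per_group)]
        yoked = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        pooled_sd = math.sqrt(
            (statistics.variance(choice) + statistics.variance(yoked)) / 2
        )
        d = (statistics.mean(choice) - statistics.mean(yoked)) / pooled_sd
        t = d * math.sqrt(n_per_group / 2)  # equal-n independent-samples t statistic
        if abs(t) > t_crit:
            significant.append(d)
    return significant


sig = simulate_significant_effects(
    true_d=0.2, n_per_group=18, n_sims=5000, t_crit=T_CRIT_DF34
)

# Smallest |d| that can reach significance with 18 per group
smallest_significant_d = T_CRIT_DF34 * math.sqrt(2 / 18)
print(f"smallest significant |d| = {smallest_significant_d:.2f}")
print(f"proportion significant = {len(sig) / 5000:.3f}")
print(f"mean |d| among significant studies = {statistics.mean(abs(d) for d in sig):.2f}")
```

Because the t statistic is a fixed multiple of d when group sizes are equal, any significant study with 18 participants per group must report an absolute effect of roughly d = 0.68 or larger, which is more than three times the true effect assumed in this sketch. Selective publication of such studies would therefore overstate the effect even when a small true effect exists.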

Given our current replication failure, along with those in recent years (Bacelar et al., 2022; Grand et al., 2017; Leiker et al., 2019; McKay & Ste-Marie, 2020, 2022; St. Germain et al., 2022; Yantha et al., 2022) and the conclusions from McKay et al. (2022), motivational (i.e., OPTIMAL theory) versus information-processing explanations seem moot. Nevertheless, the present results are incompatible with both perspectives (see Footnote 5). Specifically, having choice opportunities during practice did not enhance perceptions of autonomy, competence, or intrinsic motivation in either experiment, inconsistent with OPTIMAL theory. Similarly, self-controlled feedback schedules did not enhance error estimation skills compared to yoked schedules (see Supplementary B) and choice did not interact with feedback characteristics, inconsistent with the information-processing perspective. Instead, the results from Experiments 1 and 2 suggest that feedback characteristics were a more important determinant of motor performance during acquisition and delayed tests of learning than the opportunity to choose. When feedback provided information about the direction of an error or when it contained both direction and magnitude of an error, participants were able to improve at the task throughout acquisition and retain these improvements in skill relative to pre-test. However, when feedback was binary and the direction and magnitude of an error were absent, there was no improvement in skill from baseline levels. This is in contrast with past research that has shown that people can learn motor tasks with binary feedback (e.g., Cashaback et al., 2017, 2019; Izawa & Shadmehr, 2011). One possible explanation for this discrepancy may be the number of practice trials (Magill & Wood, 1986). Practicing with binary feedback may inherently require a longer training period for learning to occur compared to graded and error feedback, which both have greater precision. Additionally, we used a strict criterion with binary feedback where any outcome other than zero error was considered a miss. Thus, binary feedback may be more effective when paired with a tolerance zone such as that used in the bandwidth technique (see Anderson et al., 2020 for a review; Cauraugh et al., 1993; Lee & Carnahan, 1990).

Although unexpected, the influence of feedback characteristics on perceptions of competence may hint at a dissociation between informational and motivational impacts of knowledge-of-results feedback. In Experiment 1, participants who received graded feedback reported significantly lower perceptions of competence than participants who received error feedback. Yet, despite these lower expectations for success, the graded feedback groups did not demonstrate degraded performance or learning compared to the error feedback groups. Participants in Experiment 2 who received binary feedback reported the lowest perceptions of competence and were also the only participants who did not show improvements in task performance from pre-test. The number of “hits” for the spatial and timing goals was quite low for all groups. Although this may have impacted perceptions of competence, the relatively low “hit” rate did not seem to differentially impact intrinsic motivation, as self-reported levels were quite similar for all groups. Future research is necessary to better understand this dissociation of informational and motivational influences of feedback characteristics and how it interacts with the task, individual, and environment.

In two experiments, we failed to observe the predicted benefits of self-controlled feedback on motor learning. Similarly, we failed to find the predicted motivational and informational consequences of choice in either experiment, challenging both the OPTIMAL theory and information-processing explanations of the so-called self-controlled learning advantage. Although the present experiments were not pre-registered, the analysis plan was determined prior to viewing the data. In addition, a suite of sensitivity analyses was conducted to determine the extent to which the present results depended on the chosen analysis methods (see Supplementary A). The sensitivity analyses supported the conclusions of the primary analyses and are consistent with research that has followed pre-registered analysis plans (Bacelar et al., 2022; Grand et al., 2017; Leiker et al., 2019; McKay & Ste-Marie, 2020, 2022; St. Germain et al., 2022; Yantha et al., 2022). Lastly, our results and conclusions are in line with a recent meta-analysis (McKay et al., 2022) that suggests the apparent benefits of self-controlled practice are due to selection bias rather than true effects.

Data availability

The data can be accessed at https://github.com/cartermaclab/expt_sc-feedback-characteristics.

Notes

1. Others have referred to error feedback as quantitative feedback and graded feedback as qualitative feedback (e.g., Magill & Wood, 1986). We use the terminology error, graded, and binary feedback because graded and binary feedback are different forms of qualitative feedback.

2. Six participants (three Choice+Error-Feedback and three Choice+Graded-Feedback) had their second session completed approximately 48 h later because a snowstorm closed the university.

3. The questionnaires can be found in the publicly available project repository in the materials directory.

4. We report an estimate for retention tests to facilitate comparison to a recent meta-analysis (McKay et al., 2022) that produced estimated effects of self-controlled learning at retention specifically.

5. Although the lack of performance improvements in Experiment 2 is compatible with the information-processing perspective, we do not interpret this as support for this view over the motivational one given the conclusions from the recent meta-analysis by McKay et al. (2022).

References

Anderson, D.I., Magill, R.A., Mayo, A.M., & Steel, K.A. (2020). Enhancing motor skill acquisition with augmented feedback. In Skill acquisition in sport: Research, theory and practice. (3rd edn.) Routledge.

Aust, F., & Barth, M. (2020). papaja: Prepare reproducible APA journal articles with R Markdown. https://github.com/crsh/papaja

Bacelar, M.F.B., Parma, J.O., Cabral, D., Daou, M., Lohse, K.R., & Miller, M.W. (2022). Dissociating the contributions of motivational and information processing factors to the self-controlled feedback learning benefit. Psychology of Sport and Exercise, 59, 102119. https://doi.org/10.1016/j.psychsport.2021.102119

Bakeman, R. (2005). Recommended effect size statistics for repeated measures designs. Behavior Research Methods, 37(3), 379–384. https://doi.org/10.3758/bf03192707

Barros, J.A.C., Yantha, Z.D., Carter, M.J., Hussien, J., & Ste-Marie, D.M. (2019). Examining the impact of error estimation on the effects of self-controlled feedback. Human Movement Science, 63, 182–198. https://doi.org/10.1016/j.humov.2018.12.002

Barth, M. (2022). tinylabels: Lightweight variable labels. https://cran.r-project.org/package=tinylabels

Button, K.S., Ioannidis, J.P.A., Mokrysz, C., Nosek, B.A., Flint, J., Robinson, E.S.J., & Munafò, M. R. (2013). Power failure: why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14(5), 365–376. https://doi.org/10.1038/nrn3475

Carter, M.J., Carlsen, A.N., & Ste-Marie, D.M. (2014). Self-controlled feedback is effective if it is based on the learner’s performance: A replication and extension of Chiviacowsky and Wulf (2005). Frontiers in Psychology, 5, 1–10. https://doi.org/10.3389/fpsyg.2014.01325

Carter, M.J., & Patterson, J.T. (2012). Self-controlled knowledge of results: Age-related differences in motor learning, strategies, and error detection. Human Movement Science, 31(6), 1459–1472. https://doi.org/10.1016/j.humov.2012.07.008

Carter, M.J., Rathwell, S., & Ste-Marie, D.M. (2016). Motor skill retention is modulated by strategy choice during self-controlled knowledge of results schedules. Journal of Motor Learning and Development, 4, 100–115. https://doi.org/10.1123/jmld.2015-0023

Carter, M.J., & Ste-Marie, D.M. (2017a). Not all choices are created equal: Task-relevant choices enhance motor learning compared to task-irrelevant choices. Psychonomic Bulletin & Review, 24(6), 1879–1888. https://doi.org/10.3758/s13423-017-1250-7

Carter, M.J., & Ste-Marie, D.M. (2017b). An interpolated activity during the knowledge-of-results delay interval eliminates the learning advantages of self-controlled feedback schedules. Psychological Research Psychologische Forschung, 81(2), 399–406. https://doi.org/10.1007/s00426-016-0757-2

Cashaback, J.G.A., Lao, C.K., Palidis, D.J., Coltman, S.K., McGregor, H.R., & Gribble, P.L. (2019). The gradient of the reinforcement landscape influences sensorimotor learning. PLoS Computational Biology, 15(3), e1006839. https://doi.org/10.1371/journal.pcbi.1006839

Cashaback, J.G.A., McGregor, H.R., Mohatarem, A., & Gribble, P.L. (2017). Dissociating error-based and reinforcement-based loss functions during sensorimotor learning. PLoS Computational Biology, 13(7), e1005623. https://doi.org/10.1371/journal.pcbi.1005623

Cauraugh, J.H., Chen, D., & Radio, S.J. (1993). Effects of traditional and reversed bandwidth knowledge of results on motor learning. Research Quarterly for Exercise and Sport, 64(4), 413–417. https://doi.org/10.1080/02701367.1993.10607594

Chiviacowsky, S., & Wulf, G. (2005). Self-controlled feedback is effective if it is based on the learner’s performance. Research Quarterly for Exercise and Sport, 76(1), 42–48. https://doi.org/10.1080/02701367.2005.10599260

Chiviacowsky, S., & Wulf, G. (2002). Self-controlled feedback: Does it enhance learning because performers get feedback when they need it? Research Quarterly for Exercise and Sport, 73(4), 408–415.

Couvillion, K.F., Bass, A.D., & Fairbrother, J.T. (2020). Increased cognitive load during acquisition of a continuous task eliminates the learning effects of self-controlled knowledge of results. Journal of Sports Sciences, 38(1), 94–99. https://doi.org/10.1080/02640414.2019.1682901

Faul, F., Erdfelder, E., Buchner, A., & Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

Goode, K., & Rey, K. (2022). ggResidpanel: Panels and interactive versions of diagnostic plots using ’ggplot2’. https://goodekat.github.io/ggResidpanel/

Grand, K.F., Bruzi, A.T., Dyke, F.B., Godwin, M.M., Leiker, A.M., Thompson, A.G., ..., Miller, M.W. (2015). Why self-controlled feedback enhances motor learning: Answers from electroencephalography and indices of motivation. Human Movement Science, 43, 23–32. https://doi.org/10.1016/j.humov.2015.06.013

Grand, K.F., Daou, M., Lohse, K.R., & Miller, M.W. (2017). Investigating the mechanisms underlying the effects of an incidental choice on motor learning. Journal of Motor Learning and Development, 5(2), 207–226. https://doi.org/10.1123/jmld.2016-0041

Harms, C., & Lakens, D. (2018). Making ’null effects’ informative: Statistical techniques and inferential frameworks. Translational Research, 3(Suppl 2), 382–393. https://doi.org/10.18053/jctres.03.2017S2.007

Henry, F.M. (1974). Variable and constant performance errors within a group of individuals. Journal of Motor Behavior, 6(3), 149–154. https://doi.org/10.1080/00222895.1974.10734991

Henry, F.M. (1975). Absolute error vs “e” in target accuracy. Journal of Motor Behavior, 7(3), 227–228. https://doi.org/10.1080/00222895.1975.10735039

Iwatsuki, T., Abdollahipour, R., Psotta, R., Lewthwaite, R., & Wulf, G. (2017). Autonomy facilitates repeated maximum force productions. Human Movement Science, 55, 264–268. https://doi.org/10.1016/j.humov.2017.08.016

Iwatsuki, T., Navalta, J.W., & Wulf, G. (2018). Autonomy enhances running efficiency. Journal of Sports Sciences, 37(6), 685–691. https://doi.org/10.1080/02640414.2018.1522939

Izawa, J., & Shadmehr, R. (2011). Learning from sensory and reward prediction errors during motor adaptation. PLoS Computational Biology, 7(3), e1002012. https://doi.org/10.1371/journal.pcbi.1002012

Lakens, D. (2017). Equivalence tests: A practical primer for t tests, correlations, and meta-analyses. Social Psychological and Personality Science, 8(4), 355–362. https://doi.org/10.1177/1948550617697177

Lakens, D. (2013). Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Frontiers in Psychology, 4, 863. https://doi.org/10.3389/fpsyg.2013.00863

Lakens, D., & Evers, E.R.K. (2014). Sailing from the seas of chaos into the corridor of stability: Practical recommendations to increase the informational value of studies. Perspectives on Psychological Science, 9(3), 278–292.

Laughlin, D.D., Fairbrother, J.T., Wrisberg, C.A., Alami, A., Fisher, L.A., & Huck, S.W. (2015). Self-control behaviors during the learning of a cascade juggling task. Human Movement Science, 41, 9–19. https://doi.org/10.1016/j.humov.2015.02.002

Lee, T.D., & Carnahan, H. (1990). Bandwidth knowledge of results and motor learning: More than just a relative frequency effect. The Quarterly Journal of Experimental Psychology, 42(4), 777–789. https://doi.org/10.1080/14640749008401249

Leiker, A.M., Pathania, A., Miller, M.W., & Lohse, K.R. (2019). Exploring the neurophysiological effects of self-controlled practice in motor skill learning. Journal of Motor Learning and Development, 7(1), 13–34. https://doi.org/10.1123/jmld.2017-0051

Lewthwaite, R., Chiviacowsky, S., Drews, R., & Wulf, G. (2015). Choose to move: The motivational impact of autonomy support on motor learning. Psychonomic Bulletin & Review, 22(5), 1383–1388. https://doi.org/10.3758/s13423-015-0814-7

Lohse, K.R., Buchanan, T., & Miller, M.W. (2016). Underpowered and overworked: Problems with data analysis in motor learning studies. Journal of Motor Learning and Development, 4(1), 37–58. https://doi.org/10.1123/jmld.2015-0010

Luft, C.D.B. (2014). Learning from feedback: The neural mechanisms of feedback processing facilitating better performance. Behavioural Brain Research, 261, 356–368. https://doi.org/10.1016/j.bbr.2013.12.043

Magill, R.A., & Wood, C.A. (1986). Knowledge of results precision as a learning variable in motor skill acquisition. Research Quarterly for Exercise and Sport, 57(2), 170–173. https://doi.org/10.1080/02701367.1986.10762195

McAuley, E., Duncan, T., & Tammen, V.V. (1989). Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: A confirmatory factor analysis. Research Quarterly for Exercise and Sport, 60(1), 48–58. https://doi.org/10.1080/02701367.1989.10607413

McKay, B., Carter, M.J., & Ste-Marie, D.M. (2014). Self-controlled learning: A meta analysis. Journal of Sport and Exercise Psychology, 36, s1.

McKay, B., & Ste-Marie, D.M. (2020). Autonomy support and reduced feedback frequency have trivial effects on learning and performance of a golf putting task. Human Movement Science, 71, 102612. https://doi.org/10.1016/j.humov.2020.102612

McKay, B., & Ste-Marie, D.M. (2022). Autonomy support via instructionally irrelevant choice not beneficial for motor performance or learning. Research Quarterly for Exercise and Sport, 93, 64–76. https://doi.org/10.1080/02701367.2020.1795056

McKay, B., Yantha, Z.D., Hussien, J., Carter, M.J., & Ste-Marie, D.M. (2022). Meta-analytic findings in the self-controlled motor learning literature: Underpowered, biased, and lacking evidential value. Meta-Psychology, 6, 1–32. https://doi.org/10.15626/MP.2021.2803

Munafò, M. R., Nosek, B.A., Bishop, D.V.M., Button, K.S., Chambers, C.D., Percie du Sert, N., ..., Ioannidis, J. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1(1), 1–9. https://doi.org/10.1038/s41562-016-0021

Oldfield, R.C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97–113. https://doi.org/10.1016/0028-3932(71)90067-4

Olejnik, S., & Algina, J. (2003). Generalized eta and omega squared statistics: Measures of effect size for some common research designs. Psychological Methods, 8(4), 434–447. https://doi.org/10.1037/1082-989X.8.4.434

Pathania, A., Leiker, A.M., Euler, M., Miller, M.W., & Lohse, K.R. (2019). Challenge, motivation, and effort: Neural and behavioral correlates of self-control of difficulty during practice. Biological Psychology, 141, 52–63. https://doi.org/10.1016/j.biopsycho.2019.01.001

Pedersen, T.L. (2020). Patchwork: The composer of plots. https://patchwork.data-imaginist.com/

R Core Team (2021). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

Re, A.C.D. (2013). Compute.es: Compute effect sizes. In: R Package. https://cran.r-project.org/package=compute.es

Rousselet, G.A., Pernet, C.R., & Wilcox, R.R. (2017). Beyond differences in means: Robust graphical methods to compare two groups in neuroscience. European Journal of Neuroscience, 46(2), 1738–1748.

Rousselet, G.A., & Wilcox, R.R. (2020). Reaction times and other skewed distributions: Problems with the mean and the median. Meta-Psychology, 4, 1–39.

Ryan, R.M. (1982). Control and information in the intrapersonal sphere: An extension of cognitive evaluation theory. Journal of Personality and Social Psychology, 43(3), 450–461. https://doi.org/10.1037/0022-3514.43.3.450

Salmoni, A.W., Schmidt, R.A., & Walter, C.B. (1984). Knowledge of results and motor learning: A review and critical reappraisal. Psychological Bulletin, 95(3), 355–386. https://doi.org/10.1037/0033-2909.95.3.355

Sanli, E.A., Patterson, J.T., Bray, S.R., & Lee, T.D. (2013). Understanding self-controlled motor learning protocols through the self-determination theory. Frontiers in Psychology, 3. https://doi.org/10.3389/fpsyg.2012.00611

Schuirmann, D.J. (1987). A comparison of the two one-sided tests procedure and the power approach for assessing the equivalence of average bioavailability. Journal of Pharmacokinetics and Biopharmaceutics, 15(6), 657–680. https://doi.org/10.1007/BF01068419

Sherwood, D.E. (1996). The benefits of random variable practice for spatial accuracy and error detection in a rapid aiming task. Research Quarterly for Exercise and Sport, 67(1), 35–43. https://doi.org/10.1080/02701367.1996.10607923

Sherwood, D.E. (2009). Spatial error detection in rapid unimanual and bimanual aiming movements. Perceptual and Motor Skills, 108(1), 3–14. https://doi.org/10.2466/PMS.108.1.3-14

Sigrist, R., Rauter, G., Riener, R., & Wolf, P. (2013). Augmented visual, auditory, haptic, and multimodal feedback in motor learning: A review. Psychonomic Bulletin & Review, 20(1), 21–53. https://doi.org/10.3758/s13423-012-0333-8

Simmons, J.P., Nelson, L.D., & Simonsohn, U. (2012). A 21 Word Solution. https://doi.org/10.2139/ssrn.2160588

Simmons, J.P., Nelson, L.D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. https://doi.org/10.1177/0956797611417632

Singmann, H., Bolker, B., Westfall, J., Aust, F., & Ben-Shachar, M.S. (2021). Afex: Analysis of factorial experiments. https://CRAN.R-project.org/package=afex

St. Germain, L., Williams, A., Balbaa, N., Poskus, A., Leshchyshen, O., Lohse, K.R., & Carter, M.J. (2022). Increased perceptions of autonomy through choice fail to enhance motor skill retention. Journal of Experimental Psychology: Human Perception and Performance, 48(4), 370–379. https://doi.org/10.1037/xhp0000992

Ste-Marie, D. M., Carter, M. J., & Yantha, Z. D. (2020). Self-controlled learning: Current findings, theoretical perspectives, and future directions. In Skill acquisition in sport: Research, theory and practice. (3rd edn.) Routledge.

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L.D., François, R., ..., Yutani, H. (2019). Welcome to the tidyverse. Journal of Open Source Software, 4(43), 1686. https://doi.org/10.21105/joss.01686

Wilcox, R.R. (2021). Introduction to robust estimation and hypothesis testing (5th edn). Academic Press.

Woodard, K.F., & Fairbrother, J.T. (2020). Cognitive loading during and after continuous task execution alters the effects of self-controlled knowledge of results. Frontiers in Psychology, 11. https://doi.org/10.3389/fpsyg.2020.01046

Wulf, G., Freitas, H.E., & Tandy, R.D. (2014). Choosing to exercise more: Small choices increase exercise engagement. Psychology of Sport and Exercise, 15(3), 268–271. https://doi.org/10.1016/j.psychsport.2014.01.007

Wulf, G., Iwatsuki, T., Machin, B., Kellogg, J., Copeland, C., & Lewthwaite, R. (2018). Lassoing skill through learner choice. Journal of Motor Behavior, 50(3), 285–292. https://doi.org/10.1080/00222895.2017.1341378

Wulf, G., & Lewthwaite, R. (2016). Optimizing performance through intrinsic motivation and attention for learning: The OPTIMAL theory of motor learning. Psychonomic Bulletin & Review, 23(5), 1382–1414. https://doi.org/10.3758/s13423-015-0999-9

Yantha, Z. D., McKay, B., & Ste-Marie, D. M. (2022). The recommendation for learners to be provided with control over their feedback schedule is questioned in a self-controlled learning paradigm. Journal of Sports Sciences, 40(7), 769–782. https://doi.org/10.1080/02640414.2021.2015945

Zhu, H. (2021). kableExtra: Construct complex table with ’kable’ and pipe syntax. https://CRAN.R-project.org/package=kableExtra

Funding

This work was supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada (RGPIN-2018-05589; MJC) and McMaster University (MJC). LSG was supported by an NSERC Postgraduate Scholarship. JGAC was supported by an NIH U45GM104941 grant. AW and AP were supported by NSERC Undergraduate Student Research Awards.

Author information

Contributions

Conceptualization (LSG, BM, JGAC, MJC); Data curation (LSG, BM, MJC); Formal analysis (LSG); Funding acquisition (MJC); Investigation (LSG, AP, AW, OL, SF); Methodology (LSG, BM, JGAC, MJC); Project administration (LSG, MJC); Software (LSG, MJC); Supervision (LSG, MJC); Validation (BM, MJC); Visualization (LSG, BM, JGAC, MJC); Writing – original draft (LSG, BM, AP, AW, OL, SF, JGAC, MJC); Writing – review & editing (LSG, BM, AP, AW, OL, SF, JGAC, MJC)

Ethics declarations

Ethics approval

This project was approved and conducted in accordance with the approval of the McMaster University Research Ethics Board.

Consent to participate

All participants gave informed consent.

Consent for Publication

All authors approve the manuscript.

Competing interests

None.

Conflict of interest

None.

Supplementary Information

ESM 1

(PDF 363 KB)

About this article

Cite this article

St. Germain, L., McKay, B., Poskus, A. et al. Exercising choice over feedback schedules during practice is not advantageous for motor learning. Psychon Bull Rev 30, 621–633 (2023). https://doi.org/10.3758/s13423-022-02170-5

DOI: https://doi.org/10.3758/s13423-022-02170-5