Abstract

The item-specific proportion congruency (ISPC) effect, whereby Stroop effects are reduced for items that are more likely to be incongruent than congruent, indicates that humans have the remarkable capacity to resolve conflict when it is associated with statistical regularities in the environment. It has been demonstrated that an ISPC signal induced by mostly congruent and mostly incongruent inducer items transfers to a set of distinct but visually similar transfer items that are equally likely to be congruent and incongruent; however, it is unclear what the ISPC signal is associated with that allows it to transfer. To investigate this issue, an animal Stroop task was used to evaluate whether the ISPC signal would transfer to animal pictures that were different but visually similar same-category members (e.g., retrievers to retrievers, Experiment 1), visually dissimilar same-category members with broadly similar features (e.g., retrievers to bulldogs, Experiment 2), and visually dissimilar different-category members with broadly similar features (e.g., retrievers to house cats, Experiment 3). An ISPC effect was observed for the transfer items in each experiment, suggesting that these conflict signals can be linked based on broad feature similarity.

Introduction

Even without conscious awareness, humans have the remarkable ability to resolve conflict based on statistical regularities in the visual environment. One way to measure conflict is the Stroop (1935) task, in which an observer must identify the perceptual color of a color word that can be either congruent (e.g., the word “red” displayed in red) or incongruent (e.g., the word “blue” displayed in yellow) with its meaning. The well-known Stroop effect is that observers identify the perceptual color more quickly when the word is congruent than when it is incongruent. The general notion underlying this result is that performance is slowed for incongruent relative to congruent items because the observer must suppress the automatic tendency to read the word.

While the Stroop effect is extremely robust, it can be affected by both strategic and associative learning processes. One example is the congruency sequence effect (Gratton et al., 1992; Schmidt & De Houwer, 2011): incongruent Stroop items are responded to more quickly when the previous trial was incongruent rather than congruent. Lindsay and Jacoby (1994) demonstrated that the Stroop effect can also be affected by what has been termed the proportion congruency effect (see also Logan & Zbrodoff, 1979; Logan et al., 1984; Lowe & Mitterer, 1982). Here, participants performed a Stroop task in which the proportion of incongruent items was manipulated at the block level, such that a block contained either a relatively high or a relatively low proportion of incongruent items. They showed that the Stroop effect was attenuated in mostly incongruent (MI) relative to mostly congruent (MC) blocks, suggesting that participants had some strategic control over their ability to resolve Stroop conflict.

As an important extension of the proportion congruency effect, Jacoby et al. (2003) varied the likelihood that particular Stroop items were congruent or incongruent within a block of trials. For example, in one of the counterbalancing schemes, the words “red,” “yellow,” and “white” were assigned to an MC group such that they were congruent on 80% of trials, and the words “blue,” “black,” and “green” were assigned to an MI group such that they were incongruent on 80% of trials. Despite there being an overall equal proportion of congruent and incongruent items, Stroop effects were smaller for items belonging to the MI than to the MC group. This phenomenon has been termed the item-specific proportion congruency (ISPC) effect, and Jacoby et al. speculated that it was due either to an associative learning process that linked particular words to particular colors or to an inhibitory process that suppressed word reading for items that were likely to be incongruent.

While there is a robust literature on the ISPC effect (Braem et al., 2019; Bugg & Crump, 2012; Schmidt, 2013), it is not clear how conflict is signalled to the observer so that it may be efficiently resolved (Bugg & Hutchison, 2013; Cochrane & Pratt, 2022; Schmidt & Besner, 2008; Spinelli & Lupker, 2020). Bugg et al. (2011; Bugg & Dey, 2018) made headway on this issue by investigating the ISPC effect using an animal Stroop task (although see Schmidt, 2014, 2019). Instead of color words, participants were shown line drawings of animals (i.e., birds, cats, dogs, or fish) overlaid by animal words (i.e., “bird,” “cat,” “dog,” or “fish”). Much like in the color Stroop task, participants responded more quickly to animal pictures overlaid by congruent rather than incongruent words. Further, these stimuli also produced the ISPC effect, such that Stroop effects were reduced for animal picture–word pairings that belonged to the MI rather than the MC grouping. The ingenuity of this study was that, in addition to the animal picture–word pairings of the MC and MI groups (i.e., inducer items), there were also unique animal pictures from the same animal categories as the inducer items that were equally likely to be congruent or incongruent with the overlaid word (i.e., transfer items). Despite this equal likelihood, Stroop effects were reduced for transfer items from the same animal category as the MI inducer items relative to those from the same category as the MC inducer items.

The finding of Bugg et al. (2011) offers valuable insight into the basis of the ISPC signal, as it indicates that conflict is not linked to precise stimulus representations (see also Bugg et al., 2008). At the same time, it is not clear to what extent the ISPC signal can transfer. That is, while the transfer items used by Bugg et al. were always unique pictures relative to the inducer items, they were also almost always visually very similar to them. For example, both inducer and transfer items of the bird category were always similar-looking songbirds, and both inducer and transfer items of the dog category were always similar-looking retrievers, and so forth (although see Bugg & Dey, 2018, Experiment 4b).

Accordingly, the present study investigated the extent to which the ISPC signal transfers based on feature similarity and category membership. Experiment 1 evaluated whether the ISPC signal would transfer to visually similar animal pictures, as demonstrated by Bugg et al. (2011; Bugg & Dey, 2018; e.g., retrievers to retrievers). Experiment 2 tested whether the ISPC signal would transfer to animal pictures of the same animal category that were visually dissimilar but broadly shared visual features (e.g., retrievers to bulldogs). Experiment 3 then examined whether the ISPC signal would transfer to dissimilar animal pictures of a different ontological category that broadly shared visual features (e.g., retrievers to house cats). If close visual similarity is necessary for the transfer of the ISPC signal, ISPC transfer should occur only in Experiment 1. If broad feature similarity within a category is sufficient, ISPC transfer should occur in Experiments 1 and 2. If broad feature similarity is sufficient even across ontological categories, ISPC transfer should occur in all three experiments.

Experiment 1

The purpose of Experiment 1 was to evaluate whether the ISPC signal transferred to visually similar animal pictures; it was thus a close replication of Bugg and Dey (2018, Experiment 1).

Method

Participants

Thirty-six undergraduates at the University of Toronto participated in exchange for course credit (22 female, ages 17–24 years, M = 18.36 years). All participants reported normal or corrected-to-normal vision and being native English speakers. The sample size of 36 was chosen to match that of Bugg and Dey (2018), whose design was reported to have greater than .95 power at an alpha level of .05.

Apparatus and stimuli

The experiment was conducted using PsychoPy (Version 3.1.5) and an LED monitor with a 144-Hz refresh rate. All displays had a white background with a luminance of 98.11 cd/m². The stimuli were identical to those of Bugg and Dey (2018; Bugg et al., 2011): 12 line drawings of animals, consisting of three birds, three cats, three dogs, and three fish. The bird drawings were songbirds, the cat drawings were shorthaired house cats, the dog drawings were retrievers, and the fish drawings were typical bony fish (Osteichthyes). All line drawings subtended approximately 10° of horizontal and vertical visual angle. The words “bird,” “cat,” “dog,” and “fish” were capitalized, presented in Helvetica font, and superimposed over each line drawing. Each word subtended approximately 1° of vertical and 3° of horizontal visual angle. Both words and line drawings were displayed in black with a luminance of 0.23 cd/m².

Procedure

Participants were seated approximately 60 cm from the computer monitor, with a standing microphone positioned approximately 10 cm in front of their mouths. At the beginning of each experimental trial, a blank display was presented for 1,000 to 1,200 ms. Following this display, an animal line drawing was presented with an animal word overlaid. Participants were instructed to name the animal by saying “cat,” “dog,” “bird,” or “fish” while ignoring the overlaid word. As soon as the microphone detected the onset of a vocal response, the stimulus was removed from the screen. An experimenter remained in the room during the experimental session and coded each response as correct, incorrect, or scratch by key press. A trial was coded as a scratch when the microphone either failed to detect the participant’s first response or detected a sound that did not clearly correspond to one of the response words (e.g., “uhh”). To avoid experimenter bias, the experimenter made these judgments while viewing a monitor that displayed only the correct response.

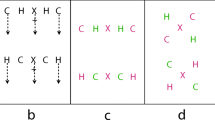

Participants performed three blocks of 144 experimental trials each (432 trials total). There were 216 congruent and 216 incongruent picture–word pairings, presented in a random order over the course of the experimental session. Two of the three animal line drawings in each category were assigned as inducer items, and the remaining drawing was assigned as the transfer item. The two inducer items in each animal category were assigned together to either the MC or the MI grouping. For the MC inducer items, the picture–word pairings were congruent on 75% of trials and incongruent on 25% of trials. For the MI inducer items, the picture–word pairings were incongruent on 75% of trials and congruent on 25% of trials. For all transfer items, the picture–word pairings were congruent on 50% of trials and incongruent on 50% of trials. The assignment of drawings to the inducer and transfer roles, as well as the assignment of categories to the MC and MI groupings, was fully counterbalanced across participants. Examples of the picture–word pairings are presented in Fig. 1, and the frequencies of the picture–word pairings are shown in Table 1.
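Table 1 is not reproduced here, so the exact per-picture frequencies are not available in this text. The following Python sketch generates one block under a stated assumption: each of the 12 drawings appears 12 times per block, the stated proportions are converted to counts (9 congruent presentations for MC inducers, 3 for MI inducers, 6 for transfer items), and incongruent words cycle evenly through the three other category names. The assumption that two categories are MC and two are MI, and the names `build_block` and `congruent_count`, are ours; this is not the authors' experiment script. Under these assumptions each block contains 72 congruent and 72 incongruent trials, which matches the 216/216 totals over three blocks.

```python
import random

CATEGORIES = ("bird", "cat", "dog", "fish")
PRESENTATIONS_PER_PICTURE = 12  # assumption: 12 drawings x 12 presentations = 144 trials


def congruent_count(item_type, pc):
    """Congruent presentations per picture per block implied by the stated proportions."""
    if item_type == "transfer":
        return PRESENTATIONS_PER_PICTURE // 2       # 50% congruent -> 6
    if pc == "MC":
        return PRESENTATIONS_PER_PICTURE * 3 // 4   # 75% congruent -> 9
    return PRESENTATIONS_PER_PICTURE // 4           # 25% congruent -> 3


def build_block(mc_categories, transfer_index=2, seed=None):
    """Return one randomly ordered block of picture-word trials (hypothetical design).

    mc_categories: categories whose inducer items are mostly congruent (assumed two).
    transfer_index: which of the three drawings (0, 1, 2) per category is the transfer item.
    """
    rng = random.Random(seed)
    trials = []
    for category in CATEGORIES:
        pc = "MC" if category in mc_categories else "MI"
        other_words = [c for c in CATEGORIES if c != category]
        for picture in range(3):
            item_type = "transfer" if picture == transfer_index else "inducer"
            n_congruent = congruent_count(item_type, pc)
            n_incongruent = PRESENTATIONS_PER_PICTURE - n_congruent
            # Incongruent trials cycle evenly through the three other words (assumption).
            words = [category] * n_congruent + [
                other_words[i % len(other_words)] for i in range(n_incongruent)
            ]
            for word in words:
                trials.append({
                    "category": category, "picture": picture, "item_type": item_type,
                    "pc": pc, "word": word, "congruent": word == category,
                })
    rng.shuffle(trials)  # trial order randomized within the block
    return trials


if __name__ == "__main__":
    block = build_block(mc_categories={"bird", "dog"}, seed=1)
    print(len(block), sum(t["congruent"] for t in block))
```

Running the script prints `144 72`; counterbalancing would be handled by varying `mc_categories` and `transfer_index` across participants.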

Prior to the experimental trials, participants performed 12 practice trials. These practice trials contained an equal number of congruent and incongruent picture–word pairings, without the item-specific proportions of the MC and MI groupings. Prior to the practice session, the experimenter instructed participants to “say aloud whether the animal picture was a bird, dog, cat, or fish, while ignoring the overlaying word.” During the practice session, the microphone was adjusted to ensure vocal responses were being adequately detected, and the experimenter reiterated the instructions if the participant made an incorrect response. Participants were also encouraged to name the animal picture while avoiding extraneous utterances (e.g., “uhh”). During the practice and experimental sessions, when a vocal response was not adequately detected, participants were instructed to move closer to the microphone or to increase their vocal response volume.

Results

Across all experiments, an alpha criterion of .05 was used to determine statistical significance. For brevity, only analyses relevant to the research question are reported here; complete analyses of all experiments are reported in the online supplement (osf.io/sywm3). None of the results of the present study could be explained by a speed–accuracy trade-off.

Vocal onset response times (RTs) that were less than 200 ms or greater than 3,000 ms, as well as scratch trials, were removed from analysis; these exclusions accounted for 3.91% of observations. The remaining correct RTs were submitted to the nonrecursive outlier elimination procedure (Van Selst & Jolicoeur, 1994), which adjusts the exclusion criterion to the number of observations in each cell so that observations are not systematically excluded based on cell size. This procedure removed an additional 2.45% of observations. The mean RTs of the remaining trials and the corresponding error percentages were submitted to a within-subject ANOVA that treated trial congruency (congruent/incongruent), proportion congruency (MC/MI), and item type (inducer/transfer) as factors. The mean RTs and error percentages are depicted in Fig. 2.
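The two-stage exclusion described above can be sketched in Python as follows. The first stage applies the fixed 200 to 3,000 ms bounds and drops scratch trials; the second is a single-pass trim in which each cell's cutoff is the cell mean plus or minus a multiple of its SD, with the multiple depending on the number of observations in the cell. The sample-size-dependent criterion values are tabulated by Van Selst and Jolicoeur (1994) and are deliberately left as a caller-supplied function here; the constant criterion in the usage example is purely illustrative. The trial-record format, the function names, and the guard for very small cells are our assumptions, not details taken from the study.

```python
from collections import defaultdict
from statistics import mean, stdev


def screen(trials, low=200, high=3000):
    """Stage 1: drop scratch trials and RTs below `low` or above `high` ms."""
    return [t for t in trials if t["code"] != "scratch" and low <= t["rt"] <= high]


def nonrecursive_trim(rts, criterion):
    """Stage 2: single-pass trim of one cell's RTs.

    Keeps RTs within mean +/- criterion(n) * SD, where n is the cell size.
    `criterion` must be supplied by the caller; the published sample-size-dependent
    values (Van Selst & Jolicoeur, 1994) are not reproduced here.
    """
    if len(rts) < 4:  # assumed guard: too few observations to trim meaningfully
        return list(rts)
    m, sd = mean(rts), stdev(rts)
    cutoff = criterion(len(rts)) * sd
    return [rt for rt in rts if abs(rt - m) <= cutoff]


def trim_correct_rts(trials, criterion):
    """Apply both stages and return {cell: [retained correct RTs]}."""
    cells = defaultdict(list)
    for t in screen(trials):
        if t["code"] == "correct":  # only correct RTs enter the outlier procedure
            cells[t["cell"]].append(t["rt"])
    return {cell: nonrecursive_trim(rts, criterion) for cell, rts in cells.items()}


if __name__ == "__main__":
    trials = [{"cell": "MC incongruent", "code": "correct", "rt": rt}
              for rt in (500, 510, 490, 505, 495, 500, 2500)]
    trials += [{"cell": "MC incongruent", "code": "scratch", "rt": 600},
               {"cell": "MC incongruent", "code": "correct", "rt": 150}]
    # Illustrative constant criterion only; not a value from the published table.
    print(trim_correct_rts(trials, criterion=lambda n: 2.0))
```

In this toy example, the 150-ms trial and the scratch trial are removed in the first stage, and the 2,500-ms trial falls outside the illustrative cutoff and is removed in the second; incorrect trials survive the first stage so that they can still contribute to error percentages.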

The three-way interaction of trial congruency, proportion congruency, and item type (p = .36) and the main effect of item type (p = .61) were not significant. Additional within-subject ANOVAs that treated trial congruency (congruent/incongruent) and proportion congruency (MC/MI) as factors were conducted for the inducer and transfer item types separately. The analysis of the inducer item type revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 7.31, p = .011, ηp² = .17, reflecting a larger Stroop effect for the MC (congruent: M = 594 ms, SD = 49 ms; incongruent: M = 702 ms, SD = 38 ms) than MI (congruent: M = 612 ms, SD = 30 ms; incongruent: M = 696 ms, SD = 27 ms) group. The analysis of the transfer item type also revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 13.81, p < .001, ηp² = .28, reflecting a larger Stroop effect for the MC (congruent: M = 597 ms, SD = 33 ms; incongruent: M = 714 ms, SD = 59 ms) than MI (congruent: M = 600 ms, SD = 34 ms; incongruent: M = 684 ms, SD = 34 ms) group.
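Because each factor has two levels, the trial congruency × proportion congruency interaction in these 2 × 2 within-subject analyses has one numerator degree of freedom. It is therefore equivalent to a one-sample t test on each participant's ISPC contrast (MC Stroop effect minus MI Stroop effect), with F(1, n − 1) = t², and partial eta squared can be recovered as F / (F + df_error). The following Python sketch, with hypothetical function names, illustrates that equivalence; it is not the authors' analysis code. Applied to the reported F values, the formula reproduces the reported effect sizes (e.g., 7.31 / (7.31 + 35) ≈ .17).

```python
from math import sqrt
from statistics import mean, stdev


def partial_eta_squared(f, df_error, df_effect=1):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


def ispc_interaction(mc_con, mc_inc, mi_con, mi_inc):
    """Trial congruency x proportion congruency interaction in a 2 x 2 within-subject design.

    Each argument lists per-participant cell mean RTs (ms) in the same participant order.
    Returns (mean ISPC effect in ms, F(1, n - 1), partial eta squared).
    """
    # Per-participant contrast: MC Stroop effect minus MI Stroop effect.
    contrast = [
        (mc_i - mc_c) - (mi_i - mi_c)
        for mc_c, mc_i, mi_c, mi_i in zip(mc_con, mc_inc, mi_con, mi_inc)
    ]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))  # one-sample t against zero
    f = t ** 2  # a 1-df within-subject effect has F equal to the squared contrast t
    return mean(contrast), f, partial_eta_squared(f, n - 1)


if __name__ == "__main__":
    # Reported F values from the Experiment 1 inducer and transfer analyses (df_error = 35).
    for f in (7.31, 13.81):
        print(f, round(partial_eta_squared(f, 35), 2))
```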

Discussion

The present findings revealed that the ISPC effect was present for both the inducer and transfer items and did not differ significantly between the two item types. This replicates the findings of Bugg and Dey (2018; Bugg et al., 2011): the ISPC signal can transfer to visually similar members of the same category.

Experiment 2

Having confirmed that the ISPC signal can transfer to unique but visually similar category members, Experiment 2 investigated whether the ISPC signal can transfer to visually dissimilar animal pictures of the same animal category that share broadly similar visual features.

Method

Participants

Thirty-six undergraduates at the University of Toronto participated in exchange for course credit (22 female, ages 17–22 years, M = 18.75 years). All participants reported normal or corrected-to-normal vision and being native English speakers.

Apparatus and stimuli

The apparatus and stimuli were identical to those of Experiment 1, except that the transfer items were now visually distinct line drawings of the same animal category as the inducer items. Specifically, the bird transfer item was now a line drawing of an ostrich, the dog transfer item was now a line drawing of a bulldog, the cat transfer item was now a line drawing of a hairless cat, and the fish transfer item was now a line drawing of a puffer fish.

Procedure

The procedure was identical to that of Experiment 1.

Results

Vocal onset RTs that were less than 200 ms or greater than 3,000 ms, as well as scratch trials, were removed from analysis; these exclusions accounted for 5.66% of observations. The nonrecursive outlier elimination procedure (Van Selst & Jolicoeur, 1994) removed an additional 2.10% of observations. The mean RTs of the remaining trials and the corresponding error percentages were submitted to a within-subject ANOVA that treated trial congruency (congruent/incongruent), proportion congruency (MC/MI), and item type (inducer/transfer) as factors. The mean RTs and error percentages are depicted in Fig. 3.

Fig. 3. The mean RTs and error percentages of Experiment 2. The error bars represent the standard error of the mean corrected to remove between-subject variability (Cousineau, 2005; Morey, 2008).

The three-way interaction of trial congruency, proportion congruency, and item type (p = .72) and the main effect of item type (p = .26) were not significant. Additional within-subject ANOVAs that treated trial congruency (congruent/incongruent) and proportion congruency (MC/MI) as factors were conducted for the inducer and transfer item types separately. The analysis of the inducer item type revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 14.28, p < .001, ηp² = .29, reflecting a larger Stroop effect for the MC (congruent: M = 607 ms, SD = 31 ms; incongruent: M = 718 ms, SD = 38 ms) than MI (congruent: M = 623 ms, SD = 30 ms; incongruent: M = 708 ms, SD = 25 ms) group. The analysis of the transfer item type also revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 6.95, p = .012, ηp² = .17, reflecting a larger Stroop effect for the MC (congruent: M = 623 ms, SD = 28 ms; incongruent: M = 728 ms, SD = 58 ms) than MI (congruent: M = 620 ms, SD = 34 ms; incongruent: M = 703 ms, SD = 29 ms) group.

Discussion

As in Experiment 1, the ISPC effect was present for both inducer and transfer items and did not differ significantly between them. Thus, an ISPC signal can transfer to visually dissimilar members of the same category.

Experiment 3

Experiment 3 examined whether conflict signals can cross category boundaries. To do so, it used visually dissimilar animal pictures from different animal categories that broadly shared visual features with the inducer items.

Method

Participants

Thirty-six undergraduates at the University of Toronto participated in exchange for course credit (22 female, ages 18–22 years, M = 18.49 years). All participants reported normal or corrected-to-normal vision and being native English speakers.

Apparatus and stimuli

The apparatus and stimuli were identical to those of the previous experiments, with two exceptions. First, a bug category composed of three line drawings of ants was introduced, and the cat category was removed. Second, the transfer items were now visually distinct line drawings of animals from a different category than the inducer items: the transfer item of the bird category was a line drawing of a pterodactyl, the transfer item of the bug category was a crab, the transfer item of the dog category was a shorthaired house cat (i.e., one of the cat line drawings from Experiments 1 and 2), and the transfer item of the fish category was a whale.

Procedure

The procedure was identical to that of the previous experiments, with three exceptions. First, the bug inducer and transfer items were included, to which participants responded by saying “bug” aloud. Second, before the practice session, one inducer item and the transfer item of each animal category were displayed sequentially on the computer monitor, and participants indicated the animal category each item belonged to by typing their response into a textbox presented beneath the line drawing. These line drawings did not include the overlaid word of the animal Stroop task. Third, at the beginning of the practice session, participants were instructed to label the transfer items according to their corresponding inducer category. For example, if the transfer item was the whale picture, participants were to say “fish” aloud, and so forth.

Results

All participants correctly labelled the animal categories of the inducer and transfer items in the initial evaluation, except for one participant who labelled the pterodactyl as “dragon.” Superordinate (e.g., “dinosaur” for the pterodactyl) and subordinate (e.g., “finch” for the songbird) classifications were deemed correct. During the experimental trials, errors in which a transfer item was named by its ontological category (e.g., saying “whale” to the whale transfer item) were extremely rare, accounting for 0.05% of observations.

Vocal onset RTs that were less than 200 ms or greater than 3,000 ms, as well as scratch trials, were removed from analysis; these exclusions accounted for 1.81% of observations. The nonrecursive outlier elimination procedure (Van Selst & Jolicoeur, 1994) removed an additional 1.63% of observations. The mean RTs of the remaining trials and the corresponding error percentages were submitted to a within-subject ANOVA that treated trial congruency (congruent/incongruent), proportion congruency (MC/MI), and item type (inducer/transfer) as factors. The mean RTs and error percentages are depicted in Fig. 4.

The three-way interaction of trial congruency, proportion congruency, and item type (p = .63) was not significant. There was a significant main effect of item type, F(1, 35) = 121.81, p < .001, ηp² = .78, reflecting faster responses to the inducer than transfer items. Additional within-subject ANOVAs that treated trial congruency (congruent/incongruent) and proportion congruency (MC/MI) as factors were conducted for the inducer and transfer item types separately. The analysis of the inducer item type revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 8.88, p = .005, ηp² = .20, reflecting a larger Stroop effect for the MC (congruent: M = 685 ms, SD = 43 ms; incongruent: M = 793 ms, SD = 51 ms) than MI (congruent: M = 714 ms, SD = 52 ms; incongruent: M = 799 ms, SD = 46 ms) group. The analysis of the transfer item type also revealed a significant interaction of trial congruency and proportion congruency, F(1, 35) = 6.79, p = .013, ηp² = .16, reflecting a larger Stroop effect for the MC (congruent: M = 758 ms, SD = 49 ms; incongruent: M = 875 ms, SD = 57 ms) than MI (congruent: M = 769 ms, SD = 38 ms; incongruent: M = 857 ms, SD = 49 ms) group.
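The size of each ISPC effect can be read directly from the cell means reported for the three experiments. The short Python script below simply restates those means and computes the MC and MI Stroop effects and their difference. Because the reported means are rounded to the nearest millisecond, the derived values may be off by about 1 ms, and they carry no information about variability; the significance tests above rest on participant-level data that this sketch does not reproduce. The names `REPORTED_MEANS`, `stroop_and_ispc`, and `item_type_mean` are ours.

```python
# (congruent, incongruent) cell means in ms, as reported in the Results of Experiments 1 to 3.
REPORTED_MEANS = {
    ("Experiment 1", "inducer"): {"MC": (594, 702), "MI": (612, 696)},
    ("Experiment 1", "transfer"): {"MC": (597, 714), "MI": (600, 684)},
    ("Experiment 2", "inducer"): {"MC": (607, 718), "MI": (623, 708)},
    ("Experiment 2", "transfer"): {"MC": (623, 728), "MI": (620, 703)},
    ("Experiment 3", "inducer"): {"MC": (685, 793), "MI": (714, 799)},
    ("Experiment 3", "transfer"): {"MC": (758, 875), "MI": (769, 857)},
}


def stroop_and_ispc(cells):
    """Return (MC Stroop effect, MI Stroop effect, ISPC effect) in ms."""
    mc = cells["MC"][1] - cells["MC"][0]
    mi = cells["MI"][1] - cells["MI"][0]
    return mc, mi, mc - mi


def item_type_mean(experiment, item_type):
    """Unweighted mean of the four congruency x proportion-congruency cells."""
    cells = REPORTED_MEANS[(experiment, item_type)]
    return sum(cells["MC"] + cells["MI"]) / 4


if __name__ == "__main__":
    for (experiment, item_type), cells in REPORTED_MEANS.items():
        mc, mi, ispc = stroop_and_ispc(cells)
        print(f"{experiment}, {item_type}: MC {mc} ms, MI {mi} ms, ISPC {ispc} ms")
    gap = item_type_mean("Experiment 3", "transfer") - item_type_mean("Experiment 3", "inducer")
    print(f"Experiment 3 transfer minus inducer: {gap:.0f} ms")
```

The script yields transfer-item ISPC effects of 33, 22, and 29 ms in Experiments 1 to 3, comparable to the inducer-item effects of 24, 26, and 23 ms, and an unweighted transfer-minus-inducer difference of 67 ms in Experiment 3, in line with the main effect of item type.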

Discussion

As in the previous experiments, the ISPC effect was present for both the inducer and transfer items and did not differ significantly between item types. Thus, the ISPC signal can transfer to visually dissimilar members of a different animal category based on broad feature similarity.

Experiment 4

The conclusions of the previous experiments rely on the assumed difference between similar and dissimilar stimuli. To verify this assumption, participants performed a similarity judgment task in which two animal pictures from the previous experiments were presented and participants rated their similarity on a 5-point Likert scale.

Method

Participants

Sixty-four participants were recruited online via Prolific (prolific.co) and received £1.25 as compensation (34 female, ages 19–25 years, M = 21.77 years). Participants were reported to be native English speakers, born in North America or the United Kingdom, and under 25 years old. The approximate sample size was determined a priori.

Apparatus and stimuli

The experiment was conducted online (pavlovia.org) using PsychoPy (Version 2021.1.2) and was presented in a full-screen window of each participant’s own browser, on their own monitor and computer. All displays had a gray background. The line drawings from the previous experiments were used, with the overlaid word removed. The positioning and size of the stimuli were based on a 16-by-10 grid that scaled with the browser window. Each line drawing was presented in a white box that was 5 units in height and width. The two boxes were positioned 1 unit above the horizontal center of the grid and 3 units to the left and right of the vertical center of the grid. The 5-point rating scale was 7 units wide and 1.4 units high and was centered on the vertical center of the grid, 2.5 units below the horizontal center of the grid. The rating scale included the text “rate the similarity of the animal pictures” in Arial font positioned above the scale, the digits one through five positioned above the notches of the scale, and a description of each rating below each notch. The rating descriptions were “not similar at all,” “slightly similar,” “moderately similar,” “very similar,” and “extremely similar,” respectively.
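The layout above is specified in grid units rather than pixels. The following Python sketch converts it to pixel rectangles for a given window size. How the grid was mapped onto the browser window is not stated; the uniform scaling to the largest centered 16:10 region is our assumption, as are the names `LAYOUT` and `layout_in_pixels`, and the sketch does not reproduce PsychoPy's own unit system.

```python
GRID_W, GRID_H = 16, 10  # grid dimensions from the text

# (center x, center y, width, height) in grid units; origin at grid center, y pointing up.
LAYOUT = {
    "left_box": (-3, 1, 5, 5),
    "right_box": (3, 1, 5, 5),
    "rating_scale": (0, -2.5, 7, 1.4),
}


def layout_in_pixels(win_w, win_h):
    """Convert LAYOUT to (left, top, width, height) pixel rectangles.

    Assumes the grid is scaled uniformly to the largest 16:10 region that fits
    the window and is centered in it; pixel y grows downward from the top edge.
    """
    unit = min(win_w / GRID_W, win_h / GRID_H)  # pixels per grid unit
    rects = {}
    for name, (cx, cy, w, h) in LAYOUT.items():
        px, py = win_w / 2 + cx * unit, win_h / 2 - cy * unit  # center in pixels
        rects[name] = (px - w * unit / 2, py - h * unit / 2, w * unit, h * unit)
    return rects


if __name__ == "__main__":
    for name, rect in layout_in_pixels(1600, 1000).items():
        print(name, [round(v, 1) for v in rect])
```

For a 1600 × 1000 window, one grid unit is 100 px, the left box spans x = 250 to 750 px, and the bottoms of the boxes (650 px) sit above the top of the rating scale (about 680 px), so the elements do not overlap.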

Procedure

The experiment began with a display showing an example trial, a QWERTY keyboard with the response keys highlighted, and the experiment instructions, which read:

In this study you will judge the similarity of two animal pictures. Report similarity using the number keys such that 1 = not similar at all, 2 = slightly similar, 3 = moderately similar, 4 = very similar, and 5 = extremely similar. This experiment should take 5–10 minutes and we ask you to please try your best when judging the animal pictures. Press the space bar when you are ready to start.

After participants pressed the space bar, a blank gray display was presented for 1,000 ms, followed by an experimental trial composed of two animal line drawings and the rating scale. Participants responded by pressing the corresponding number key on their keyboard. Each rating was followed by a 1,000-ms blank gray interval and then the next experimental trial.

The experimental trials were organized into five blocks based on animal category. Participants pressed the space bar to initiate each block of trials. Within each category block, participants compared the three visually similar category members, the visually dissimilar same-category member, and the visually dissimilar different-category member with each other, where the category included such members. As a baseline, participants also compared each line drawing to itself. Both the order of comparisons within each block and the order of blocks were randomized. Participants performed a total of 65 similarity judgments across the experimental session.
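The total of 65 judgments follows from the block structure. Comparing n drawings with each other and with themselves yields n(n + 1)/2 judgments, that is, 15 for a five-drawing block and 10 for a four-drawing block. The text does not list each block's members; the count of 65 is obtained if the bird, dog, and fish blocks contained five drawings and the cat and bug blocks four (the cat block lacking a different-category member and the bug block lacking a dissimilar same-category member), which is consistent with the paired t tests used for those two categories in the Results. The Python sketch below enumerates the comparisons under that reading; the item labels are hypothetical.

```python
from itertools import combinations_with_replacement

# Hypothetical item labels per block, following the stimulus descriptions of Experiments 1-3.
BLOCKS = {
    "bird": ["songbird 1", "songbird 2", "songbird 3", "ostrich", "pterodactyl"],
    "dog": ["retriever 1", "retriever 2", "retriever 3", "bulldog", "house cat"],
    "fish": ["fish 1", "fish 2", "fish 3", "puffer fish", "whale"],
    "cat": ["house cat 1", "house cat 2", "house cat 3", "hairless cat"],  # no different-category member
    "bug": ["ant 1", "ant 2", "ant 3", "crab"],  # no dissimilar same-category member
}


def comparisons(items):
    """Every unordered pair of drawings plus each drawing paired with itself (baseline)."""
    return list(combinations_with_replacement(items, 2))


def judgment_counts(blocks=BLOCKS):
    """Number of similarity judgments in each category block."""
    return {category: len(comparisons(items)) for category, items in blocks.items()}


if __name__ == "__main__":
    counts = judgment_counts()
    print(counts, sum(counts.values()))
```

Running the script prints `{'bird': 15, 'dog': 15, 'fish': 15, 'cat': 10, 'bug': 10} 65`.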

Results and discussion

The primary dependent variable was the similarity rating for comparisons among similar same-category, dissimilar same-category, and dissimilar different-category members. The bird, dog, and fish categories were each assessed with a within-subject ANOVA that treated similar same-category, dissimilar same-category, and dissimilar different-category as levels of the similarity factor. The cat category was assessed with a paired t test that treated similar same-category and dissimilar same-category as levels of the similarity factor. The bug category was assessed with a paired t test that treated similar same-category and dissimilar different-category as levels of the similarity factor. The results of the overall analyses and all item-by-item comparisons are reported in the online supplement (osf.io/sywm3). The mean similarity ratings are shown in Fig. 5. All analyses revealed significant effects indicating that the similar same-category members were rated as more similar to each other than to the visually dissimilar same-category members (all ps < .001), and that the visually dissimilar same-category members were rated as more similar to the similar same-category members than were the visually dissimilar different-category members (all ps ≤ .007). Overall, these results suggest that the similar and dissimilar designations were appropriate.

Fig. 5. The mean similarity ratings of Experiment 4.

General discussion

The present study investigated whether the ISPC signal is linked based on broad visual features. Across Experiments 1 through 3, the ISPC signal transferred to visually similar members of the same category, to visually dissimilar members of the same category with broadly similar features, and to visually dissimilar members of a different ontological category with broadly similar features. Experiment 4 confirmed that the transfer items of Experiments 2 and 3 were indeed visually dissimilar from the inducer items. In all, the most parsimonious explanation for these results is that conflict signals can transfer to visually dissimilar representations based on broad feature similarity.

While the most parsimonious explanation for the present results is that ISPC transfer was based on broad feature similarity, there may be another explanation. Specifically, in addition to broad feature similarity, the response made to the inducer and transfer items was also shared. This is particularly notable given the findings of Bugg and Dey (2018, Experiment 4). In that experiment, participants performed an ISPC transfer task like that of the present study, except that instead of naming the category, they named the specific animal type. For example, for a Labrador member of the dog category they said “lab” instead of “dog,” and for an oriole member of the bird category they said “oriole” instead of “bird,” and so forth. Under these conditions, the ISPC signal did not transfer to the transfer items. However, when a different group of participants performed the identical task but responded with the category name, the ISPC transfer effect was present. Bugg and Dey proposed that this finding indicated that the basis of the response modulated whether participants engaged a category or an item level of cognitive control. That is, when the task goal was to label the animal picture based on its category, cognitive control settings were extended to all category members, and when the task goal was to label the animal pictures based on their specific identity, cognitive control settings were linked on an item-by-item basis. Although the present results and those of Bugg and Dey’s fourth experiment are not easily bridged, ISPC transfer coincided with a shared response here and was eliminated when the shared response was removed in Bugg and Dey’s study, which leaves open the possibility that the response plays a central role in the transfer of the ISPC signal.

It is worth highlighting that the processes underlying the ISPC transfer effect in Experiment 3 may have differed from those in the first two experiments. First, participants were slower to respond to the transfer than to the inducer items. We suspect this is because responding to the transfer items entailed the additional processing step of determining the response associated with the picture, as, unlike the inducer items, the transfer pictures did not directly indicate the required response (see also Neely, 1977). Second, it is not clear whether an instructional level of conflict (i.e., responding “dog” to a picture of a cat) played a role. That is, there were both Stroop and instructional levels of conflict, and it is an open question what resolving multiple forms of conflict means for Stroop task performance. Further, the level of instructional conflict may have differed across the animal categories; for example, it is likely that responding “dog” to a cat picture is more conflicting than responding “fish” to a whale picture. In all, the findings of Experiment 3 ought to be interpreted cautiously until this experimental approach has been investigated more thoroughly.

Regardless of the precise nature of the processes underlying ISPC transfer, the present findings allow us to speculate on its general operating principles. When we encounter an object in the world, it is critically important that information on how to interact with it is encoded so that we can act efficiently when we inevitably encounter the object again. At the same time, a system that associates conflict only with specific object representations would be of little use, because encountering an identical instance of the same object is highly unlikely. A useful system will therefore need to generalize to other objects, especially those that are responded to in the same manner. This is precisely what the present study demonstrates: conflict signals can generalize to representations based on broad feature similarity to promote the associated actions. To put it another way, you would not live long if you could not generalize from one poisonous berry (or things like it) to the rest of the berry bush.

References

Braem, S., Bugg, J. M., Schmidt, J. R., Crump, M. J. C., Weissman, D. H., Notebaert, W., & Egner, T. (2019). Measuring adaptive control in conflict tasks. Trends in Cognitive Sciences, 23(9), 769–783.

Bugg, J. M., & Crump, M. J. C. (2012). In support of a distinction between voluntary and stimulus-driven control: A review of the literature on proportion congruent effects. Frontiers in Psychology, 3(367), 1–16.

Bugg, J. M., & Dey, A. (2018). When stimulus-driven control settings compete: On the dominance of categories as cues for control. Journal of Experimental Psychology: Human Perception and Performance, 44(12), 1905–1932.

Bugg, J. M., & Hutchison, K. A. (2013). Converging evidence for control of color-word Stroop interference at the item level. Journal of Experimental Psychology: Human Perception and Performance, 39(2), 433–449.

Bugg, J. M., Jacoby, L. L., & Chanani, S. (2011). Why it is too early to lose control in accounts of item-specific proportion congruency effects. Journal of Experimental Psychology: Human Perception and Performance, 37(3), 844–859.

Bugg, J. M., Jacoby, L. L., & Toth, J. P. (2008). Multiple levels of control in the Stroop task. Memory & Cognition, 36(8), 1484–1494.

Cochrane, B. A., & Pratt, J. (2022). The item-specific proportion congruency effect can be contaminated by short-term repetition priming. Attention, Perception, & Psychophysics, 84(1), 1–9.

Cousineau, D. (2005). Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson’s method. Tutorials in Quantitative Methods for Psychology, 1(1), 42–45.

Gratton, G., Coles, M. G. H., & Donchin, E. (1992). Optimizing the use of information: Strategic control of activation of responses. Journal of Experimental Psychology: General, 121(4), 480–506.

Jacoby, L. L., Lindsay, D. S., & Hessels, S. (2003). Item-specific control of automatic processes: Stroop process dissociations. Psychonomic Bulletin & Review, 10(3), 638–644.

Lindsay, D. S., & Jacoby, L. L. (1994). Stroop process dissociation: The relationship between facilitation and interference. Journal of Experimental Psychology: Human Perception and Performance, 20(2), 219–234.

Logan, G. D., & Zbrodoff, N. J. (1979). When it helps to be misled: Facilitative effects of increasing the frequency of conflicting stimuli in a Stroop-like task. Memory & Cognition, 7(3), 166–174.

Logan, G. D., Zbrodoff, N. J., & Williamson, J. (1984). Strategies in the color-word Stroop task. Bulletin of the Psychonomic Society, 22(2), 135–138.

Lowe, D. G., & Mitterer, J. O. (1982). Selective and divided attention in a Stroop task. Canadian Journal of Psychology, 36(4), 684–700.

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4(2), 61–64.

Neely, J. H. (1977). Semantic priming and retrieval from lexical memory: Roles of inhibitionless spreading of activation and limited-capacity attention. Journal of Experimental Psychology: General, 106(3), 226–254.

Schmidt, J. R. (2013). Questioning conflict adaptation: Proportion congruent and Gratton effects reconsidered. Psychonomic Bulletin & Review, 20(4), 615–630.

Schmidt, J. R. (2014). List-level transfer effects in temporal learning: Further complications for the list-level proportion congruent effect. Journal of Cognitive Psychology, 26(4), 373–385.

Schmidt, J. R. (2019). Evidence against conflict monitoring and adaptation: An updated review. Psychonomic Bulletin & Review, 26(3), 753–771.

Schmidt, J. R., & Besner, D. (2008). The Stroop effect: Why proportion congruent has nothing to do with congruency and everything to do with contingency. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34(3), 514–523.

Schmidt, J. R., & De Houwer, J. (2011). Now you see it, now you don’t: Controlling for contingencies and stimulus repetitions eliminates the Gratton effect. Acta Psychologica, 138(1), 176–186.

Spinelli, G., & Lupker, S. J. (2020). Item-specific control of attention in the Stroop task: Contingency learning is not the whole story in the item-specific proportion-congruent effect. Memory & Cognition, 48, 426–435.

Stroop, J. R. (1935). Studies on interference in serial verbal reactions. Journal of Experimental Psychology, 18(6), 643–662.

Van Selst, M., & Jolicoeur, P. (1994). A solution to the effect of sample size on outlier elimination. The Quarterly Journal of Experimental Psychology, 47A(3), 631–650.

Funding

Financial support for this study was provided in part by a Natural Sciences and Engineering Research Council of Canada Discovery Grant (2016-06359) awarded to Jay Pratt. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report.

Additional information

Open practices statement

The experiments reported in this article were not preregistered. The data are publicly available on the Center for Open Science website (osf.io/sywm3). Requests for materials can be sent via email to the corresponding author (brett.cochrane@utoronto.ca).

About this article

Cite this article

Cochrane, B.A., Pratt, J. The item-specific proportion congruency effect transfers to non-category members based on broad visual similarity. Psychon Bull Rev 29, 1821–1830 (2022). https://doi.org/10.3758/s13423-022-02104-1

DOI: https://doi.org/10.3758/s13423-022-02104-1