Abstract

In this paper, we study the asymptotic properties of the Maximum Likelihood Estimator (MLE) for a Zero-Inflated Bell regression model. Under some regularity conditions, we establish that the estimator is consistent and asymptotically normal. This lends a substantial support to the empirical findings that have already been obtained by some authors. Monte Carlo simulations are conducted to numerically illustrate the main results. The model is applied to a dataset of healthcare demand in USA.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 INTRODUCTION

Statisticians have long recognized the difficulties of working with count data. Poisson and binomial [26] regression models are the usual tools for analyzing count data. They are applied to many fields such as health economics, epidemiology or environmental sciences. Generalized linear models [25] provide a powerful framework for analysing such data. But real-life applications continuously raise new challenging problems and a huge amount of work has been done to extend the scope of these models. For example, count data often show excess of zeros (that is, a proportion of zeros that cannot be explained by models based on standard distributional assumptions). The variance is considered as a function of the mean. These relationships between variance and mean make sense in a theoretical context where, for example, all the processes leading to the presence/absence or abundance of the species are well modeled and measured without error [16, 20]. In practice, it is illusory to think that all the variables that can affect these processes can be incorporated into the model, either because they are unknown or because they are impossible to measure in the field, and this can lead to a mis-specification of the variance, with the mean remaining theoretically unaffected. The most frequent situation is overdispersion; i.e., when the variance of the observations is higher than the one expected under the model used. Many solutions have been proposed to take into account this overdispersion [20] for an overview among which the development of new models, the negative binomial regression and the zero-inflated models such as Zero-Inflated Poisson (ZIP) and Zero-Inflated Binomial (ZIB). Recently, [3] introduced an alternative distribution model called Bell regression model (BRM) to model count data with overdispersion. BRM is generally preferred over the Poisson regression model to overcome the restriction that the mean equals the variance. In the presence of excess of zeros in the count data (which is very frequent in many applications), the Bell regression model is limited. In order to remedy this situation, [22] have recently proposed a zero-inflation Bell regression. In their works, they have addressed the inference by using the maximum likelihood estimator (MLE). Some simulation results show that, the estimate obtained is approaching the true value of the parameter when the sample size increases; which suggests that the MLE is consistent for this model. However, it is important to note that the asymptotic behaviour of the estimator has not yet been established. The increasing interest for Zero-Inflated Bell regression model in recent years (see [3, 24]) render necessary to establish such result for the parameter estimate. For example, the asymptotic properties are essential when we want to construct a statistic that can test the nullity of some parameter’s components, which in particular, allows us to assess the relevance of the exogenous covariates.

In this new contribution, we provide conditions for the strong consistency and derive the asymptotic distribution of the MLE.

The current paper fills this gap and thus provide rigorous proofs of consistency and asymptotic normality of the maximum likelihood estimators. We also conduct a simulation study to evaluate finite-sample performance of these estimators. All these results provide a firm basis for making statistical inference in the Zero-Inflated Bell regression model.

The rest of this paper is organized as follows. We briefly review ZIBell regression model, basics notations and maximum likelihood estimation are introduced in Section 2. In Section 3 we investigate asymptotic properties of maximum likelihood estimates in this model. Results of a simulation study are reported in Section 4. An application of the proposed ZIBell regression model to the analysis of health-care demand data is described in Section 5. Discussion and perspectives are given in Section 6. Technical proofs are postponed to an Appendix.

2 ZERO-INFLATED BELL REGRESSION MODEL AND BASIC NOTATIONS

This section will briefly illustrate the Zero-Inflated Bell (ZIBell) regression model and introduces some basic notations.

2.1 The ZIBell Regression Model

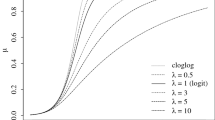

Let \(y_{i}\) be a zero-inflated count response and \(\mathbf{S}_{i}\) a vector of explanatory variables. Let \(\mathbf{X}_{i}\) and \(\mathbf{Z}_{i}\) subsets of \(\mathbf{S}_{i}\), which may overlap one another. The Zero-Inflated Bell regression model is defined by [22]

where \(\beta=(\beta_{1},\dots,\beta_{p})^{\top}\in\mathbb{R}^{p}\) and \(\gamma=(\gamma_{1},\dots,\gamma_{q})^{\top}\in\mathbb{R}^{q}\) are parameters of the regression models to be estimated, \(\top\) denotes the transpose operator, and \(\mathbf{X}_{i}=(X_{i1},X_{i2},\dots,X_{ip})^{\top}\) and \(\mathbf{Z}_{i}=(Z_{i1},Z_{i2},\dots,Z_{iq})^{\top}\) are the corresponding design matrices and \(\text{ZIBell}(\mu,\pi)\) denotes the ZIBell distribution with the probability mass function

where \(\mu>0\), \(W(\cdot)\) is the Lambert function [4], and \(B_{y}\) is the Bell number defined by (see also [1])

Let \(\theta:=(\beta^{\top},\gamma^{\top})^{\top}\in\Theta\subset\mathbb{R}^{p+q}\) denotes the unknown \(k\)-dimensional \((k=p+q)\) parameter in the model (2.1). Assume that the observations \((Y_{1},\mathbf{X}_{1},\mathbf{Z}_{1}),\ldots,(Y_{n},\mathbf{X}_{n},\mathbf{Z}_{n})\) are generated from (2.1) according to the true parameter \(\theta_{0}=\left(\beta_{0}^{\top},\gamma_{0}^{\top}\right)^{\top}\) which is unknown. The log-likelihood function is given by

where \(\delta_{i}=1_{\{Y_{i}=0\}}\). The MLE \(\widehat{\theta}_{n}:=(\hat{\beta}^{\top}_{n},\hat{\gamma}^{\top}_{n})^{\top}\) of \(\theta_{0}\) is

This is obtained by solving the score equation

which can be achieved by nonlinear optimization. In this paper, all estimates are obtained using the R function maxLik [19], which implements Newton-type algorithms.

2.2 Some Further Notations

To obtain the first and second partial derivatives of the log-likelihood function, we make use of the following intermediate notations:

The first partial derivatives of the log-likelihood function for the ZIBell model are as follows:

Then, some tedious albeit not difficult algebra shows that

We set \(S_{n}(\theta)=\partial\ell_{n}(\theta)/\partial\theta\), \(H_{n}(\theta)=-\partial^{2}\ell_{n}(\theta)/\partial\theta\partial\theta^{\top}\), \(F_{n}(\theta)=\mathbb{E}\left(H_{n}(\theta)\right)\) and let \(\mathbb{I}_{r}\) be the identity matrix of order \(r\). \(H_{n}(\theta)\) is assumed to be positive definite.

3 LARGE-SAMPLE PROPERTIES FOR THE MLE

In this section, we start by giving some regularity conditions that are needed to ensure the large-sample properties of the MLE. Now denote by \(N_{n}(\delta)=\{\theta\in\mathcal{C}:|\ ||F_{n}^{\top/2}(\theta-\theta_{0})||\ |\leq\delta^{2}\}\) a neighborhood of the unknown true parameter \(\theta_{0}\) for \(\delta>0\). To study the consistency and the asymptotic normality of the MLE, we consider the following regularity assumptions.

(C1) The covariates are bounded that is, there exist compact sets \(\mathcal{X}\subset\mathbb{R}^{p}\) and \(\mathcal{Z}\subset\mathbb{R}^{q}\) such.

(C2) \(\beta_{0}\) and \(\gamma_{0}\) lie in the interior of known compact sets \(\mathcal{B}\subset\mathbb{R}^{p}\) and \(\mathcal{G}\subset\mathbb{R}^{q}\), respectively.

(C3) There exists a positive constant \(c_{1}\) such that \(n/\mu_{\textrm{min}}\left(F_{n}(\theta_{0})\right)\leq c_{1}\) for every \(n=1,2,\dots\), where \(c_{1}\) is a positive constant.

(C4) For all \(\delta>0\), \(\underset{\theta\in N_{n}(\delta)}{\text{max}}||V_{n}(\theta)-\mathbb{I}_{r}||\rightarrow 0\), where \(V_{n}(\theta):=F_{n}^{-\frac{1}{2}}H_{n}(\theta)F_{n}^{\frac{\top}{2}}\).

Conditions C1–C3 are classical in generalized linear regression and zero-inflated regression models (see [5, 14]). Condition C4 is required in the convergence in probability of \(V_{n}(\theta)\) to \(\mathbb{I}_{r}\) uniformly in \(\theta\in N_{n}(\delta)\), as \(n\rightarrow\infty\).

In what follows, the space \(\mathbb{R}^{r}\) of \(r\)-dimensional (column) vectors is provided with the Euclidean norm \(||\cdot||_{2}\) and the space of (\(r\times r\)) real matrices is provided with the norm \(|\ ||A||\ |_{2}:=\text{max}_{||x||_{2}=1}||Ax||_{2}\) (for notations simplicity, we use \(||\cdot||\) for both norms). Recall that for a symmetric real (\(r\times r\))-matrix \(A\) with eigenvalues \(\mu_{1},\dots,\mu_{r},||A||=\text{max}_{i}|\mu_{i}|\) (from now on, \(\mu_{\textrm{min}}(A)\) and \(\mu_{\textrm{max}}(A)\) will denote the smallest and largest eigenvalues of \(A\), respectively). Our first result states that the solution of (2.3) exists, lies in the neighbourhood \(N_{n}(\delta)\) of \(\theta_{0}\) when \(n\) is sufficiently large and is consistent for \(\theta_{0}\). The following theorem gives the consistency of \(\hat{\theta}_{n}\).

Theorem 3.1. Assume that C1–C4 hold. We have:

(i) \(\mathbb{P}\left(S_{n}(\hat{\theta}_{n})=0\right)\rightarrow 1\) (asymptotic existence),

(ii) \(\hat{\theta}_{n}\overset{p}{\longrightarrow}\theta_{0}=(\beta_{0}^{\top},\gamma_{0}^{\top})^{\top}\) as \(n\rightarrow\infty\) (weak consistency).

The following theorem gives the asymptotic normality of \(\hat{\theta}_{n}\).

Theorem 3.2. Assume that conditions C1–C4 hold. Then, \(F_{n}^{\frac{T}{2}}(\hat{\theta}_{n}-\theta_{0})\) converges in distribution to the Gaussian vector \(\mathcal{N}(0,\mathbb{I}_{r})\), as \(n\rightarrow\infty.\)

To prove the asymptotic normality, we show weak convergence of \(F_{n}^{-\frac{1}{2}}(\hat{\theta}_{n}-\theta_{0})\). More precisely, a Taylor series expansion of \(S_{n}:=S_{n}(\theta_{0})\) about \(\hat{\theta}_{n}\) can be used. By Lemma 6.1 in Appendix, it follows that for every \(a\in\mathbb{R}^{r},a^{\top}F_{n}^{-1/2}S_{n}\) converges in distribution to \(\mathcal{N}(0,1)\). An application of Cramer–Wold device concludes the proof. All details are given in Appendix.

4 A SIMULATION STUDY

This section contains a brief review of the data generation with a discussion of the various factors that are considered in the simulation study, including the zero-inflation proportions and the sample size. To evaluate the performance of the MLE, we report some simulations results.

4.1 The Design of the Experiment

The data are generated from a ZIBell such that:

and

Here, we take \(\beta=(0.7,-0.3,0.5,-0.8,0.5)^{\top}\). We consider successively three values for \(\gamma\), namely :

-

firt setting: \(\gamma=(-0.4,0.9,-0.3,1,0.5,-0.5,0,0)^{\top},\)

-

second setting: \(\gamma=(-0.5,-0.5,0.9,1.3,-0.7,-0.3,0.9,0)^{\top}\),

-

third setting: \(\gamma=(0.2,0.5,0.7,-1,0.8,1.5,0,0)^{\top},\)

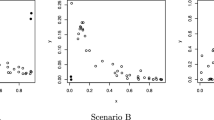

with average proportions of zero-inflated 25, 50, and 90%, respectively. The covariates \(X_{i2},\dots,X_{i5}\) and \(Z_{i3},\dots,Z_{i7}\) are generated as follows: \(X_{i2}\sim\mathcal{N}(0,1)\), \(X_{i3}\sim\mathcal{U}[1,3]\), \(X_{i4}\sim\mathcal{B}(1,1.5)\), \(X_{i5}\sim\mathcal{N}(-2,1)\) and \(Z_{i3}\sim\mathcal{N}(-1,1)\), \(Z_{i4}\sim\mathcal{E}(1)\), \(Z_{i5}\sim\mathcal{N}(0,1)\), \(Z_{i6}\sim\mathcal{B}(0.8)\), \(Z_{i7}\sim\mathcal{U}[2,5]\). Linear predictors in \(\textrm{log}(\mu_{i}(\beta))\) and \(\textrm{logit}(\pi_{i}(\gamma))\) are allowed to share common terms by letting \(X_{i2}=Z_{i2}\). We consider the following sample sizes: \(n=500\), \(1000\), and \(1500\). Finally, for each combination of the simulation design parameters (sample size, proportions of zero-inflation, we simulate \(N=1000\) samples and we calculate the maximum likelihood estimate \(\hat{\theta}.\) Simulations are conducted by using the statistical software R. To solve the likelihood equation, we use the package maxLik [19] which implements various Newton–Raphson-like algorithms.

4.2 Numerical Results

For each combination [sample size \(\times\) proportion of zero-inflation] of the simulation parameters, we calculate the average absolute relative bias (as a percentage) of the estimates \(\hat{\beta}_{j,n}\) and \(\hat{\gamma}_{j,n}\) over the \(N\) simulated samples (for example, the absolute relative bias of \(\hat{\beta}_{j,n}\) is obtained as \(N^{-1}\sum_{t=1}^{N}|(\hat{\beta}_{j,n}^{t}-\beta_{j})|/\beta_{j}\times 100\) , where \(\hat{\beta}_{j,n}^{t}\) denotes the MLE estimate of \(\beta_{j}\) in the \(t\)th simulated sample). We also obtain the mean and root mean square error (RMSE) for the regression parameters. Finally, we provide the empirical coverage probability and average length of \(95\%\)-level Wald confidence intervals for the \(\beta_{j}\) and \(\gamma_{k}\). The numerical results are given in Tables 1–3. As in [22], our study shows that the performance of the MLE is quite good, exhibiting small average absolute relative bias and respectable RMSE in all cases considered; that is, the MLE are quite stable and, more importantly, are very close to the true values. The average absolute relative bias and RMSE of all estimators decrease as the sample size increases and that the empirical coverage probabilities are close to the nominal confidence level, even for moderate sample size. Additional observations arise from our study. In particular, for fixed \(n\), we observe that the performances of \(\hat{\beta}_{j,n}\) remain stable when the average proportion of zero-inflation varies from small to moderate values (here, from 25 to 50% ) and deteriorate when zero-inflation achieves higher values, and the performances of \(\hat{\gamma}_{k,n}\)’s improve and then deteriorate as zero-inflation increases. To assess the normal approximation stated in Theorem 3.2, the estimated densities and normal Q–Q plots of \((\hat{\beta}_{j,n}-\beta_{j})/se(\hat{\beta}_{j,n})\) and \((\hat{\gamma}_{k,n}-\gamma_{k})/se(\hat{\gamma}_{k,n})\), \(j=1,\ldots,5,k=1,\ldots,8\) (with \(se\) denotes the standard error) are plotted in Figs. 3 and 4 for \(n=1500\). From these figures, we remark that the estimated density is very close to that of the normal distribution; which confirms the results of Theorem 3.2.

5 REAL DATA APPLICATION

In this section, we describe an application of the ZIBell regression model to the analysis a real data example. To this end, we consider data from the USA National Medical Expenditure Survey in 1987 and 1988 (NMES) are considered. NMES data have been widely used in the literature to model the demand for medical care [6]. As an illustration, we use the number of physician visit (OFP) as the response variable and the following covariates: number of hospital stays (hosp), sex (gender: 1 for female, 0 for male), age (in years, divided by 10), marital status, education level (school), perceived health level (‘‘health1’’ if health is poor, 0 otherwise and ‘‘health2’’ if health is excellent, 0 otherwise), number of chronic conditions (numchron) and a binary variable indicating whether the individual is covered by medicaid or not (we code 1 if the individual is covered and 0 otherwise). Table 4 shows the mean and standard deviation of the selected variables.

First, we determine appropriate predictors for zero-inflation modelling. We fit a logistic regression model to the indicators \(1_{\{Y_{i}=0\}}\), \(i=1,\dots,n\), considered as the response variable. Note that this is not a model for zero-inflation since some of the 0 may arise from the count distribution. However, we may expect that this rough procedure will still identify a relevant subset of predictors, that will be used in a second step in the logistic model for \(\pi_{i}\). Given the very large number of potential predictors, we use Wald tests to select significant covariates (the least significant covariate at the level \(5\%\) is removed and the model is fitted again, until all remaining covariates are significant ; note that the AIC and BIC criterions decreases at each step of this procedure). For comparison, we run the similar analysis using the ZIP model. Results are provided in Table 5.

Secondly, we will check goodness-of-fit. This is an important aspect of regression modeling. We suggest some additional empirical tools and apply them to our model. Note that, in the ZIBell model, the variance of \(Y_{i}\) is:

Based on this, we define the Pearson residual for the \(i\)th observation as:

where \(\hat{\mu}_{i}\) and \(\hat{\pi}_{i}\) are obtained by replacing \(\beta\) and \(\gamma\) by their estimates in \(\mu_{i}\) and \(\pi_{i}\). If the model is correct, one may expect these residuals to lie within a limited range (e.g., no more than \(5\%\) should be greater than \(1.96\) in absolute value). In our final model, the proportion of residuals greater than \(1.96\) is \(7.6\%\), which is only slightly greater than \(5\%\) and suggests that our model fits reasonably well the data.

We also propose to calculate the Pearson chi-squared statistic

where \(N\) is the number of distinct covariate patterns (i.e., observations with the same values for all covariates) and \(\tilde{Y}_{\ell}\) is the sum of the \(Y_{i}\)’s over individuals \(i\) with the same covariate pattern. We compare \(X^{2}\) with the \(\alpha\)-quantile of the \(\chi^{2}(N-\ell)\), where \(\ell\) is the number of parameters estimated. The result of the test for our final model is significant (\(p\)-value \(=0.029\)), suggesting that the model does not fit well the data. However, this apparent bad fit is due to outliers with the largest residuals. Removing them from the analysis yields an \(X^{2}\) \(p\)-value equal to 0.132, suggesting that our model fits the data well. We performed a similar analysis for the ZIP model and obtained similar results. However, according to the AIC and BIC criteria in Table 5, our model provides a slightly better fit than the ZIP model.

6 DISCUSSION

The ZIBell regression is often used to model count data thanks its simplicity. In this paper, we provide a rigorous basis for maximum likelihood inference in this model. Precisely, we establish consistency and asymptotic normality of the MLE in ZIBell regression. Moreover, our simulation study suggests that the estimator performs well under a wide range of conditions pertaining to sample size and proportion of zero-inflation.

Several other generalizations of ZIBell regression may be developed to account the increasing complexity of experimental data. For example, random effects could be incorporated to the model, in order to take account of correlation among the individuals. Non linear effects may also be introduced in the linear predictors, through unknown functions of the covariates. Asymptotic properties of the statistical inference in these generalizations are still unknown and their rigorous derivation remains an open problem. This is a topic for our future work.

REFERENCES

E. T. Bell, ‘‘Exponential polynomials,’’ Ann. Math. 35, 258-277 (1934a).

P. Billingsley, Convergence of Probability Measures (John Wiley and Sons, 2013).

F. Castellares, S. L. Ferrari, and A. J. Lemonte, ‘‘On the Bell distribution and its associated regression model for count data,’’ Applied Mathematical Modelling. https://doi.org/10.1016/j.apm.2017.12.014

R. M. Corless, G. H. Gonnet, D. E. GHare, D. Jeffrey, and D. E. Knuth, ‘‘On the LambertW function,’’ Adv. Comput. Math. 5 329-359 (1996).

C. Czado and A. Min, Consistency and Asymptotic Normality of the Maximum Likelihood Estimator in a Zero-Inflated Generalized Poisson Regression, Collaborative Research Center 386, Discussion Paper 423 (Ludwig-Maximilians-Universität, München, 2005).

P. Deb and P. K. Trivedi, ‘‘Demand for medical care by the elderly: a finite mixture approach,’’ Journal of Applied Econometrics 12 (3), 313–336 (1997).

D. Deng and Y. Zhang, ‘‘Score tests for both extra zeros and extra ones in binomial mixed regression models,’’ Communications in Statistics—Theory and Methods 44, 2881–2897 (2015).

A. Diop, A. Diop, and J.-F. Dupuy, ‘‘Maximum likelihood estimation in the logistic regression model with a cure fraction,’’ Electronic Journal of Statistics 5, 460–483 (2011).

A. Diop, A. Diop, and J.-F. Dupuy, ‘‘Simulation-based inference in a zero-inflated Bernoulli regression model,’’ Communications in Statistics—Simulation and Computation 45 (10), 3597–3614 (2016).

E. Dietz and D.Böhning, ‘‘On estimation of the Poisson parameter in zero-modifed Poisson models,’’ Computational Statistics and Data Analysis 34 (4), 441–459 (2000).

J.-F. Dupuy, Statistical Methods for Overdispersed Count Data (ISTE Press—Elsevier, 2018).

Essoham Ali, A simulation-based study of ZIP regression with various zero-inflated submodels, Communications in Statistics-Simulation and Computation, https://doi.org/10.1080/03610918.2022.2025840

F. Eicker, ‘‘A multivariate central limit theorem for random linear vector forms,’’ The Annals of Mathematical Statistics 37 (6), 1825–1828 (1966).

L. Fahrmeir and H. Kaufmann, ‘‘Consistency and asymptotic normality of the maximum likelihood estimator in generalized linear models,’’ The Annals of Statistics 13 (1), 342–368 (1985)

J. Feng and Z. Zhu, ‘‘Semiparametric analysis of longitudinal zero-inflated count data,’’ Journal of Multivariate Analysis 102, 61–72 (2011).

R. A. Fisher, ‘‘The negative binomial distribution,’’ Annals od Eugenics 11 (1), 182–187 (2011).

R. V. Foutz, ‘‘On the unique consistent solution to the likelihood equations,’’ Journal of the American Statistical Association 72, 147–148 (1977).

D. B. Hall, ‘‘Zero-inflated Poisson and binomial regression with random effects: a case study,’’ Biometrics 56 (4), 1030–1039 (2000).

A. Henningsen and O. Toomet, ‘‘maxLik: A package for maximum likelihood estimation in R,’’ Computational Statistics 26 (3), 443–458 (2011).

J. Hinde and C. G. B. Demetrio, ‘‘Overdispersion: models and estimation,’’ Computational Statistics and Data Analysis 27, 151–170 (1998).

D. Lambert, ‘‘Zero-inflated Poisson regression, with an application to defects in manufacturing,’’ Technometrics 34, 1–14 (2019).

A. J. Lemonte, G. Moreno-Arenas, and F. Castellares, ‘‘Zero-inflated Bell regression models for count data,’’ J. Appl. Stat. 47 (2), 265–286 (2019).

R. A. Maller, ‘‘Asymptotics of regressions with stationary and nonstationary residuals,’’ Stochastic Processes and Their Applications 105 (1), 33–67 (2003).

Muhammad Amin, Muhammad Nauman Akram and Abdul Majid, On the estimation of Bell regression model using ridge estimator, Communications in Statistics-Simulation and Computation, (2021). https://doi.org/10.1080/03610918.2020.1870694

P. McCullagh and J. A. Nelder, Generalized Linear Models, 2nd ed., Monographs on Statistics and Applied Probability (Chapman and Hall, London, 1989).

J.A. Nelder and R. W. M. Wedderburn, ‘‘Generalized linear models,’’ J. Roy. Statist. Soc. Ser. A 135, 370–384 (1972).

V. T. Nguyen and J.-F. Dupuy, ‘‘Asymptotic results in censored zero-inflated Poisson regression,’’ Communications in Statistics—Theory and Methods 50 (12), 2759–2779 (2021).

R Core Team, A Language and Environment for Statistical Computing. R Foundation for Statistical Computing (Vienna, Austria, 2020). https://www.R-project.org/

M. Ridout, J. Hinde, and C. G. B. Demetrio, ‘‘A score test for testing a zero-inflated Poisson regression model against zero-inflated negative binomial alternatives,’’ Biometrics 57(1), 219–223 (2001).

G. A. F. Seber and A. J. Lee, Linear Regression Analysis. Wiley Series in Probability and Statistics (Wiley, 2012).

ACKNOWLEDGMENTS

Authors are grateful to a referee and the Associate Editor for their comments and suggestions that led substantial improvements of this paper.

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

The authors declare that they have no conflicts of interest.

PROOFS OF ASYMPTOTIC RESULTS

PROOFS OF ASYMPTOTIC RESULTS

Proof of Theorem 3.1. This proof is inspired by the proof of consistency of the MLE of [29]

(i) Proof of the asymptotic existence of \(\hat{\theta}_{n}.\)

Let \(\mathcal{D}_{n}=\ell_{n}(\theta)-\ell_{n}(\theta_{0}).\) For any \(n,\delta>0\), we have the event

where \(\partial N_{n}(\delta)\) is the boundary \(\{\theta\in\mathcal{C}:(\theta-\theta_{0})^{\top}F_{n}(\theta-\theta_{0})\leq\delta^{2}\}\) of \(N_{n}(\delta).\)

It is show below that for any \(\eta>0\), there exist \(\delta>0\) and \(n_{1}\in\mathbb{N}\) such that,

This will imply the existence of a local maximum of \(\ell_{n}\) in \(N_{n}(\delta).\) Due to the positive definition of \(H_{n}\) and the convexity of \(\mathcal{C}\) there is a most one zero of the score function. This ensures that this maximum is global and unique.

Similar to (6.1), we show that for every \(\eta>0\) there exists \(\delta\) and \(n_{1}\in\mathbb{N}\), such that, for some \(\theta\in\partial N_{n}(\delta)\), we have

More precisely, a Taylor series expansion at \(\theta_{0}\) yields

where \(\theta^{\ast}_{0}=\tau\theta+(1-\tau)\theta\) with \(0\leq\tau\leq 1\) lies between \(\theta\) and \(\theta_{0}\). Let \(0<\lambda<\frac{1}{2}\) and for some \(\theta\in\partial N_{n}(\delta)\) we have:

where \(I=\{(\theta-\theta_{0})^{\top}S_{n}(\theta)\geq\lambda\delta^{2},\ \text{for some}\ \theta\in\partial N_{n}(\delta)\}\) and \(J=\{U_{n}(\theta)\leq\lambda\delta^{2},\ \text{for some}\ \theta\in\partial N_{n}(\delta)\}\), respectively. Assuming \(w_{n}(\theta)=\frac{1}{\delta}F^{-\frac{1}{2}}\left(\theta-\theta_{0}\right)\). Then

As a result, it follows that \(\mathbb{P}(I)\leq\mathbb{P}\left(||F^{-\frac{1}{2}}S_{n}(\theta)||>\lambda\delta\right)\). Based on Theorem 1.5 of Seber and Lee (2012), we have \(\mathbb{E}||F^{-\frac{1}{2}}S_{n}(\theta)||^{2}=k\) and Chebyshev’s inequality implies

Now, for event \(J\), we have

Thus \(\mathbb{P}(J)\leq\mathbb{P}(\{\mu_{\text{min}}(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}})\leq 2\lambda\})\). By Condition C4, \(F_{n}^{-\frac{1}{2}}H_{n}(\theta)F_{n}^{-\frac{1}{2}}\) converges in probability to \(\mathbb{I}_{r}\) uniformly in \(\theta\in N_{n}(\delta)\), as \(n\rightarrow\infty\). Thus, by [23], \(\mu_{\text{min}}\left(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}}\right)\) converges in probability to 1 uniformly in \(\theta\in N_{n}(\delta)\), as \(n\rightarrow\infty\).

If \(\theta^{\ast}_{0}=\tau\theta+(1-\tau)\theta\) for some \(0\leq\tau\leq 1\) and \(\theta\in N_{n}(\delta)\), then

From the above, it follows that \(\mu_{\text{min}}(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}})\) converges in probability to 1 as \(n\rightarrow\infty\), since \(|\mu_{\text{min}}(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}})-1|\leq\text{max}_{\theta\in N_{n}(\delta)}|\mu_{\text{min}}(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}})-1|\). Therefore for \(n\geq n_{1},\mathbb{P}(\text{there exists}\;\theta\in\partial N_{n}(\delta)\text{ such that }\;\mu_{\text{min}}(F^{-\frac{1}{2}}H_{n}(\theta^{\ast})F^{-\frac{1}{2}})\leq 2\lambda)\leq\eta/2,\) since \(2\lambda<1\). This implies that \(\mathbb{P}(J)\leq\eta/2\). Finally,

hence the proof of (6.1) and thus, the existence of a unique global maximum of \(\ell_{n}\) on \(N_{n}(\delta)\), which coincides with \(\hat{\theta}_{n}.\)

(ii) Proof of the consistency of \(\hat{\theta}_{n}\)

with probability tending to 1 as \(n\rightarrow\infty\), by (i). By condition C3, \(\mu_{\text{min}}(F_{n})\) tend to \(\infty\) as \(n\rightarrow\infty\). Therefore \(||\hat{\theta}_{n}-\theta_{0}||\) converges to 0 with probability tending to 1 as \(n\rightarrow\infty\), which concludes the proof. \(\Box\)

Proof of Theorem 3.2.

Lemma 6.1. Assume conditions C1–C3 hold. Then \(F_{n}^{-\frac{1}{2}}S_{n}\) converges in distribution to \(\mathcal{N}(0,\mathbb{I}_{r})\), as \(n\rightarrow\infty\), where \(\mathcal{N}(0,\mathbb{I}_{r})\) is \(r\)-dimensional normal distribution with mean vector 0 and covariance matrix \(\mathbb{I}_{r}\).

Proof of Lemma 6.1. It is sufficient to show that a linear combination of \(F_{n}^{-\frac{1}{2}}S_{n}\) converges in distribution to \(\mathcal{N}(0,a^{\top}a)\) for any vector \(a\in\mathbb{R}^{r}(a\neq 0)\). Without loss of generality, we fix \(\|a\|=1\). We notice that \(S_{n}\) can be written as the sum of independent random vectors, namely

where \(S_{n,i}=\left(S_{n,i,1},S_{n,i,2},\dots,S_{n,i,r}\right)\).

Under conditions C1, C2, and C4, components of \(S_{n,i}\) are bounded by some finite positive constant \(c_{2}\) that is, \(|S_{n,i,\ell}|<c+2,\,\ell=1,\dots,r.\) Therefore, \(\|S_{n,i}\|^{2}<c_{3}.\)

Further, define independent random variables \(\xi_{n,i}\) by \(\xi_{n,i}:=a^{\top}F_{n}^{-\frac{1}{2}}S_{n,i}\).

Since \(\mathbb{E}(\xi_{n,i})=0\) and var\((\sum_{i=i}^{n}\xi_{n,i})=1\) , let’s show that Lindeberg’s condition is verified, that is

Let \(\varepsilon>0\), we have:

Now, \(\{|\xi_{n,i}|>\varepsilon\}\) implies that \(\{\mu_{\text{min}}(F_{n})<c_{3}/\varepsilon^{2}\}\), therefore, \(1_{\{|\xi_{n,i}|>\varepsilon\}}\leq 1_{\{\lambda_{\text{min}}(F_{n})<c_{3}/\varepsilon^{2}\}}\). Thus,

Under C3, \(\mu_{\text{min}}(F_{n})\rightarrow\infty\) as \(n\rightarrow\infty.\) Therefore,

Then it follows that, \(\forall a\neq\mathbb{R}^{r},a^{\top}F_{n}^{-\frac{1}{2}}S_{n}\) converges in distribution to \(\mathcal{N}(0,1)\) and by Cramer–Wold device \(F_{n}^{-\frac{1}{2}}S_{n}\) converges in distribution to \(\mathcal{N}(0,\mathbb{I}_{r}).\)

To prove \(F_{n}^{-\frac{1}{2}}(\hat{\theta}_{n}-\theta_{0})\overset{\ell}{\longrightarrow}\mathcal{N}(0,\mathbb{I}_{r})\), the Taylor expansion of \(S_{n}\) at \(\hat{\theta}_{n}\) can be used. From the mean value theorem for vector valued functions (e.g., Heuser, 1981, p. 278), we have

and

By pre-multiplying \(F^{\frac{T}{2}}_{n}\) and integrating with respect to \(\tau\) on \(\left[0,1\right]\), we have

Under Condition C4 we have

It follows from Lemma 6.1 and the continuous mapping theorem [2], that Theorem 3.2 holds. \(\Box\)

About this article

Cite this article

Ali, E., Diop, M.L. & Diop, A. Statistical Inference in a Zero-Inflated Bell Regression Model. Math. Meth. Stat. 31, 91–104 (2022). https://doi.org/10.3103/S1066530722030012

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3103/S1066530722030012