Abstract

Background

Objective criteria for predicting residents' performance do not exist. The purpose of this study was to test the hypothesis that global assessment by an intern selection committee (ISC) would correlate with the future performance of residents.

Methods

A prospective study of 277 residents between 1992 and 1999. Global assessment at the time of interview was compared with subsequent clinical performance (assessed by chief residents) and cognitive performance (assessed by the American Board of Pediatrics in-service training examination).

Results

ISC ratings correlated significantly with clinical performance at 24 and 36 months of training (r = 0.58, P < .001; and r = 0.60, P < .001, respectively). ISC ratings also correlated significantly with in-service examination scores in the 1st, 2nd, and 3rd years of training (r = 0.35, P = .0016; r = 0.39, P = .0003; and r = 0.50, P = .005, respectively).

Conclusions

Global assessment by an ISC predicted residents' clinical and cognitive performances.

Background

Intern selection committees exist to select appropriate medical students from the pool of students applying to their training program. This is often a challenging task. Members of intern selection committees devote substantial resources to the dual goals of selecting applicants who hold future promise and predicting the future performance of applicants [1, 2]. There have been several previous attempts to develop objective and valid criteria for predicting the performance of medical students as residents [2–4]. Many studies have found no relationship between objective measures of medical school performance and subsequent performance during residency [5–8]. However, other studies have demonstrated a modest correlation between medical school performance and residency performance [9–15].

Many training programs, including ours, rely on global subjective assessments made by experienced medical educators during the intern selection process. However, we found very little published data to support this practice. Thus, this study was designed to test the hypothesis that global assessment by an intern selection committee would correlate with the future performance of residents. Specifically, we aimed to answer the following question: Does the global assessment of an intern selection committee correlate with the clinical and cognitive performance of a resident?

Methods

Subjects and setting

We prospectively studied 277 residency applicants who subsequently matched to our pediatric training program between 1992 and 1997. The study was based at the Children's Hospital at Montefiore/Albert Einstein College of Medicine, Bronx, New York. All applicants to the residency program were rated by an Intern Selection Committee (ISC) on a numerical scale ranging from 1 (best) to 4 (worst). Applicants judged to be "outstanding, extremely strong, superior" were assigned a score of "1", those considered "strong, excellent" received a score of "2", and those judged "acceptable, competent" received a score of "3".

The ISC was a twenty-member panel whose members had an average of 10 years of experience (range 7 to 25 years) in intern selection. The same 20 members served on the committee throughout the study. Factors considered by the ISC included performance during the interview, the dean's letter, narrative comments from clinical rotations, clinical grades, and letters of recommendation.

Scores were assigned in two stages. First, a member of the ISC reviewed the applicant's file and assigned a preliminary score. Then, the full committee reviewed every applicant and determined the final ISC score. During the study period, the full committee upheld the preliminary score 99.3% of the time. The ISC rating was assigned at the end of the interview process, before rank lists were submitted to the National Resident Matching Program.
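As a minimal illustration, the ISC scale and the two-stage scoring check can be encoded as below. The names `ISCScore` and `upheld_rate` are hypothetical, the descriptors for a score of 4 are not given in the text, and the example scores are invented. The function simply computes the fraction of applicants whose final committee score matched the preliminary score, which is the quantity reported above as 99.3%.

```python
from enum import IntEnum


class ISCScore(IntEnum):
    """Hypothetical encoding of the ISC scale: 1 is best, 4 is worst."""
    OUTSTANDING = 1  # "outstanding, extremely strong, superior"
    STRONG = 2       # "strong, excellent"
    ACCEPTABLE = 3   # "acceptable, competent"
    LEVEL_4 = 4      # descriptors for this level are not given in the text


def upheld_rate(preliminary, final):
    """Fraction of applicants whose final committee score equals the preliminary score."""
    if not preliminary or len(preliminary) != len(final):
        raise ValueError("need two non-empty score lists of equal length")
    upheld = sum(p == f for p, f in zip(preliminary, final))
    return upheld / len(preliminary)


# Invented scores for five applicants: stage 1 (one member) and stage 2 (full committee).
preliminary = [ISCScore.OUTSTANDING, ISCScore.STRONG, ISCScore.STRONG,
               ISCScore.ACCEPTABLE, ISCScore.OUTSTANDING]
final = [ISCScore.OUTSTANDING, ISCScore.STRONG, ISCScore.ACCEPTABLE,
         ISCScore.ACCEPTABLE, ISCScore.OUTSTANDING]
print(f"upheld {upheld_rate(preliminary, final):.1%} of preliminary scores")
```

Using `IntEnum` keeps the scores orderable, so a numerically lower score still compares as a better rating.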

Evaluation of clinical and cognitive performance

Clinical performance was assessed at the end of 12, 24, and 36 months of training for each resident. Four chief residents, at the Post-Graduate Year 4 level, were asked to rate the clinical performance of each resident using the same scoring scale as the ISC. In our program, the chief residents provide the most direct clinical supervision of all residents and are intimately familiar with the residents' clinical work. The chief residents were blinded to the ISC scores and to residents' scores on the in-service training examination. Chief residents were also blinded to each other's global assessments.

Cognitive performance was assessed by absolute test scores on the American Board of Pediatrics (ABP) in-service training examination administered at the beginning of the 1st, 2nd, and 3rd years of training.

Statistical methods

Agreement among chief resident raters was assessed with the multi-rater hierarchical kappa-type statistics for categorical variables [16]. Linear regression analysis was used to compare ISC ratings with subsequent mean clinical performance ratings and standardized examination scores. Differences in proportions were tested by chi-square statistics. Significance was set at p < 0.01.
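The correlation, regression, and chi-square analyses named above can be sketched with the Python standard library. This is an illustrative sketch under stated assumptions, not the authors' code: `pearson_r`, `ols_fit`, `r_t_statistic`, and `chi_square_2x2` are hypothetical helpers, the example data are invented, and the chi-square shown is the plain 2x2 Pearson test without continuity correction. With a single predictor, testing the regression slope against zero is equivalent to testing the correlation coefficient r against zero, which is why the t statistic is written in terms of r.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ols_fit(x, y):
    """Least-squares slope and intercept for the model y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def r_t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for H0: population correlation = 0.

    Undefined when |r| == 1.
    """
    return r * math.sqrt((n - 2) / (1 - r * r))


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square for the 2x2 table [[a, b], [c, d]], no continuity correction.

    Returns (statistic, p_value). With 1 degree of freedom the upper-tail
    probability equals erfc(sqrt(statistic / 2)).
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return stat, math.erfc(math.sqrt(stat / 2))


# Invented example: ISC scores and four chief-resident ratings per resident.
isc = [1, 1, 2, 2, 3, 3]
chief_ratings = [
    [1, 1, 1, 2], [1, 2, 1, 2], [2, 2, 2, 2],
    [2, 2, 3, 2], [3, 3, 2, 3], [3, 3, 3, 3],
]
clinical = [mean(r) for r in chief_ratings]  # mean clinical rating per resident

r = pearson_r(isc, clinical)
slope, intercept = ols_fit(isc, clinical)
print(f"r = {r:.3f}, t = {r_t_statistic(r, len(isc)):.2f}")
print(f"clinical = {intercept:.3f} + {slope:.3f} * ISC")
print(chi_square_2x2(20, 5, 5, 20))
```

For real analyses, a statistics package would also supply exact t-distribution p-values, which the standard library does not provide.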

Results

There was significant agreement among the four chief residents in the clinical ratings assigned to the 227 residents (kappa = .75, P < .0001).
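The study used the hierarchical kappa-type statistics of Landis and Koch [16], which are too elaborate to reproduce briefly. As a simpler and commonly used stand-in for multi-rater agreement, the sketch below computes Fleiss' kappa for subjects that are each rated by the same number of raters on a categorical scale such as the 1 to 4 ISC scale. It is not the statistic reported above; the function name `fleiss_kappa` is hypothetical and the example ratings are invented.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for subjects that are each rated by the same number of raters.

    ratings: one list of category labels per subject, e.g. [[1, 1, 2, 1], ...]
    categories: all possible labels (every rating must be one of them),
                e.g. range(1, 5) for a 1-4 scale
    """
    categories = list(categories)
    n_subjects = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("each subject needs the same number (>= 2) of ratings")
    counts = [Counter(r) for r in ratings]

    # Observed agreement per subject: share of rater pairs that agree.
    p_i = [
        (sum(c[k] ** 2 for k in categories) - n_raters) / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_bar = sum(p_i) / n_subjects

    # Expected chance agreement from the pooled category proportions.
    total = n_subjects * n_raters
    p_e = sum((sum(c[k] for c in counts) / total) ** 2 for k in categories)

    # Undefined (division by zero) if every rating falls in a single category.
    return (p_bar - p_e) / (1 - p_e)


# Invented example: four raters scoring five residents on a 1-4 scale.
example = [[1, 1, 1, 1], [2, 2, 2, 3], [3, 3, 3, 3], [2, 2, 2, 2], [1, 2, 1, 1]]
print(round(fleiss_kappa(example, range(1, 5)), 3))
```

Like other kappa statistics, the result is 1 for perfect agreement and near 0 when agreement is no better than chance given the pooled category frequencies.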

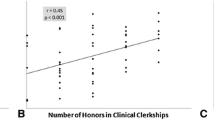

After 24 months of training, there was a significant correlation between the ISC rating and the clinical performance rating (Table 1). This association was maintained at 36 months (Figure 1). Applicants with the numerically highest ISC scores (that is, the least favorable ratings) were more likely to perform poorly clinically, as illustrated by the greater clustering of clinical scores in that range (Figure 1).

There was a significant correlation between the ISC rating and cognitive performance on the American Board of Pediatrics (ABP) in-service training examinations (Table 2). This correlation strengthened as residents advanced through the training program. The relationship between ISC rating and performance on the ABP in-service training examinations is also displayed in Figure 2.

Discussion

In this study, the combined experience of a group of faculty had modest but significant validity in predicting residents' clinical and cognitive performance. Overall, the committee was better at identifying applicants who were less likely to perform well clinically. The observed correlations between ISC ratings and future clinical performance compare favorably with those reported for objective measures of medical school performance, and even exceed those reported for criteria such as National Board of Medical Examiners scores, medical school grade point average, computerized academic class ranking, and Alpha Omega Alpha membership [9, 11, 17, 18].

Other studies have found that performance in medical school can predict performance in several specialties [19–21]. Hojat et al examined the prediction of performance during residency and found that the associations varied by specialty, and for different measures at different levels of performance [22]. Brown and colleagues found that most residents who did poorly had problems with attitude and motivation rather than with knowledge and skills [23]. However, they found little evidence in the medical school record that might have predicted such suboptimal performance during residency [23].

Noteworthy in our study was the consistent increase in the strength of the relationship between ISC ratings and performance as residents advanced through residency. The stronger correlation between ISC ratings and clinical ratings in later years may reflect the different roles of interns and residents in our training program. While residents are expected to synthesize clinical data and solve problems around patient care, interns are mostly required to be meticulous in executing the management plans for their patients. It is possible, therefore, that the potential captured by the ISC score does not become manifest until applicants assume the resident role in our training program.

While it is possible that these findings represent a "self-fulfilling prophecy", we do not believe that this is the case. First, the ISC interviewed and rated over 1,500 applicants during the study period, making it unlikely that members remembered individual ratings. Second, all ratings were deleted from applicant files once the resident matching process was completed. Finally, in subsequent discussions during resident performance reviews, ISC members often could not reliably recall the ratings assigned to specific residents.

Conclusions

This study was designed to test the hypothesis that the subjective global assessment of an intern selection committee is a valid predictor of an applicant's clinical and cognitive performance during residency. We found a significant relationship between the assessment of applicants at the time of selection and their subsequent performance as residents, both clinically and academically. The specific components of faculty "experience" that contributed to the ISC global subjective rating are currently under investigation. However, our findings suggest that the opinions of experienced faculty are a valuable asset that deserves consideration among the criteria for selecting residency applicants.

References

1. Sklar DP, Tandberg DT: The value of self-estimated scholastic standing in residency selection. J Emerg Med. 1995, 13: 683-685. 10.1016/0736-4679(95)00082-L.

2. Sklar DP, Tandberg DT: The relationship between national resident match program rank and perceived performance in an emergency medicine residency. Am J Emerg Med. 1996, 14: 170-172.

3. Brown E, Rosinski EF, Altman DF: Comparing medical school graduates who perform poorly in residency with graduates who perform well. Acad Med. 1993, 68: 1041-1043.

4. Papp KK, Polk HC, Richardson JD: The relationship between criteria used to select residents and performance during residency. Am J Surg. 1997, 173: 326-329. 10.1016/S0002-9610(96)00389-3.

5. Wood PS, Smith AL, Altmaier EM, Tarico VS, Franken EA: A prospective study of cognitive and noncognitive selection criteria as predictors of resident performance. Invest Radiol. 1990, 25: 855-859.

6. Kron IL, Kaiser DL, Nolan SP, Rudolf LE, Muller WH, Jones RS: Can success in the surgical residency be predicted from preresidency evaluation? Ann Surg. 1985, 202: 694-695.

7. Smith SR: Correlations between graduates' performances as first-year residents and their performances as medical students. Acad Med. 1993, 68: 633-634.

8. Borowitz SM, Saulsbury FT, Wilson WG: Information collected during the residency match process does not predict clinical performance. Arch Pediatr Adolesc Med. 2000, 154: 256-260.

9. Markert RJ: The relationship of academic measures in medical school to performance after graduation. Acad Med. 1993, 68 (suppl 2): S31-S34.

10. Arnold L, Willoughby TL: The empirical association between student and resident physician performances. Acad Med. 1993, 68 (suppl 2): S35-S40.

11. Fincher RM, Lewis LA, Kuske TT: Relationship of interns' performances to their self-assessments of their preparedness for internship and to their academic performances in medical school. Acad Med. 1993, 68 (suppl 2): S47-S50.

12. Case SM, Swanson DB: Validity of the NBME Part I and Part II scores for selection of residents in orthopaedic surgery, dermatology, and preventive medicine. Acad Med. 1993, 68 (suppl 2): S51-S56.

13. Erlandson EE, Calhoun JG, Barrack FM, et al: Resident selection: applicant selection criteria compared with performance. Surgery. 1982, 92: 270-275.

14. Amos DE, Massagli TL: Medical school achievements as predictors of performance in a physical medicine and rehabilitation residency. Acad Med. 1996, 71: 678-680.

15. Kesler RW, Hayden GF, Lohr JA, Saulsbury FT: Intern ranking versus subsequent house officer performance. South Med J. 1986, 79: 1562-1563.

16. Landis JR, Koch GG: An application of hierarchical kappa-type statistics in the assessment of majority agreement among multiple observers. Biometrics. 1977, 33: 363-374.

17. Fine PL, Hayward RA: Do the criteria of resident selection committees predict residents' performances? Acad Med. 1995, 70 (9): 834-838.

18. George JM, Young D, Metz EN: Evaluating selected internship candidates and their subsequent performances. Acad Med. 1989, 64 (8): 480-482.

19. Calhoun KH, Hokanson JA, Bailey BJ: Predictors of residency performance: a follow-up study. Otolaryngol Head Neck Surg. 1997, 116: 647-651.

20. Dirschl DR, Dahners LE, Adams GL, Crouch JH, Wilson FC: Correlating selection criteria with subsequent performance as residents. Clin Orthop. 2002, 399: 265-274. 10.1097/00003086-200206000-00034.

21. Boyse TD, Patterson SK, Cohan RH, Korobkin M, Fitzgerald JT, Oh MS, Gross BH, Quint DJ: Does medical school performance predict radiology resident performance? Acad Radiol. 2002, 9: 437-445. 10.1016/S1076-6332(03)80189-7.

22. Hojat M, Gonnella JS, Veloski JJ, Erdmann JB: Is the glass half full or half empty? A reexamination of the associations between assessment during medical school and clinical competence after graduation. Acad Med. 1993, 68 (suppl 2): S69-S76.

23. Brown E, Rosinski EF, Altman DF: Comparing medical school graduates who perform poorly in residency with graduates who perform well. Acad Med. 1993, 68: 806-808.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6920/2/7/prepub

Acknowledgements

I thank Mary McGuire for her assistance with data collection and data entry.

Competing Interests

None

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Ozuah, P.O. Predicting residents' performance: A prospective study. BMC Med Educ 2, 7 (2002). https://doi.org/10.1186/1472-6920-2-7

DOI: https://doi.org/10.1186/1472-6920-2-7