Abstract

As the driving force of the evolutionary game, the strategy update mechanism is crucial to the evolution of cooperative behavior. Existing research on update mechanisms falls mainly into two categories: either all players in the network update their strategies with the same rule, or players use heterogeneous update rules, such as imitation and innovation, and sophisticated update mechanisms have been proposed on this basis. However, most of these studies are based on node dynamics, that is, each individual adopts the same strategy toward all of its neighbors at the same time. In real life, faced with complex social relationships, individuals generally make different decisions for different opponents. Therefore, we build on edge dynamics, which allows each player to adopt different strategies toward different opponents, and analyze how the mixing ratio of two update rules, imitation and myopic, affects the evolution of cooperative behavior. The parameter u is introduced to represent the proportion of myopic players. The simulation results show that, under edge dynamics, the imitation rule plays a leading role in driving the population to a high level of cooperation, compared with the myopic rule, even when the temptation to defect is relatively large. Furthermore, among players who adopt the imitation rule, individuals with a high cooperation rate dominate when u is relatively small, and individuals with a low cooperation rate dominate when u is relatively large. Among players who adopt the myopic rule, cooperation rates of 0.25 and 0.5 dominate regardless of the value of u.

1 Introduction

As is well known, humans are prone to selfishness [1]; in practice, however, cooperation is widespread and contributes to the maintenance and prosperity of society, even though it seems to contradict natural selection [3,4,5]. Sustaining large-scale cooperation in nature therefore poses a tremendous challenge. Game theory has emerged as an efficient means to characterize the conflict between cooperative and selfish behaviors [2,3,4,5], and numerous scholars have devoted considerable effort to this social dilemma, through both theoretical analyses and experimental studies [6,7,8,9].

Among existing approaches, evolutionary game theory is regarded as a simple and effective method [10,11,12,13,14,15]. The authors of [6] introduced this method into a networked population and found that cooperative behavior is greatly promoted by compact clusters of cooperators, which prevent cooperators from being exploited by defectors. To promote cooperation, different mechanisms have been proposed over the past decades, e.g., kin selection [16], direct reciprocity [17], group selection [18], indirect reciprocity [19], and network reciprocity. Moreover, various factors have been incorporated to study their effects on cooperation, such as expressing willingness to cooperate [20], age [21], memory [22], and co-evolution [23]. Different strategy updating rules have also been proposed, such as learning [24], teaching [25], myopic strategy [26], and imitation strategy [27, 28].

Furthermore, to study individual diversity, the authors of [29] introduced social diversity via scaling factors drawn randomly from a given distribution, representing extrinsic factors that determine how the original payoffs map to individual fitness. Most current studies assume that the focal player applies the same strategy to all of its neighbors [30]. In practice, however, a focal player may adjust its strategy according to its evaluation of the environment when playing with different neighbors. We refer to the behavior of link agents that can adopt different strategies toward different neighbors at the same time as interactive diversity; correspondingly, the traditional behavior of players who adopt the same strategy toward all of their neighbors is called interactive identity. Current research suggests that interactive diversity can greatly enhance cooperation [31], and this promotion effect is robust even when different factors, such as game metaphors and population types, are incorporated. The effects of the two typical interaction patterns, interactive identity and interactive diversity, are also studied in [32], and in [33] the influence of two different strategy updating rules, learning and teaching, is studied thoroughly.

There has already been much research on models involving two different update rules [34,35,36]. Here, however, we focus on edge dynamics and consider two updating rules that players can adopt, i.e., the myopic strategy and the imitation strategy. The link agents are accordingly classified into two categories, and the type of each link agent remains fixed throughout a simulation run once determined. The proportion of link agents adopting each updating rule is characterized by a parameter u, and the effect of varying u on the frequency of cooperation is investigated under the interactive diversity scenario. For simplicity, we suppose that each link agent pair plays the traditional Prisoner's dilemma (PD) game and that a link agent can adopt only cooperation (C) or defection (D). The evolutionary dynamics of the PD game are then investigated through Monte Carlo (MC) simulations, and extensive simulations reveal how varying the proportions of the myopic and imitation strategies affects cooperative behavior.

The rest of the manuscript is organized as follows. The proposed evolutionary mechanism, in which two updating rules can be adopted, is described in the Model section. Extensive simulation results are then presented, together with analyses that illustrate the role of the proposed mechanism in promoting cooperation. Finally, conclusions are drawn.

2 Model

In this manuscript, experiments are conducted on square lattice networks of size \(L\times L\) with periodic boundaries. Each player occupies a node of the network and there are no empty nodes; hence, each player has exactly four neighbors. The network structure remains fixed once generated, and interactions take place along the edges connecting players.
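A minimal sketch of the periodic square lattice follows, assuming sites are indexed by (row, column) and that the four neighbors (and hence the four link agents introduced below) are ordered up, right, down, left. This ordering and the function names are illustrative choices, not taken from the original model. Python's modulo operator maps negative indices back into range, which is exactly the periodic boundary.

```python
# Hypothetical direction ordering for the four neighbors of a site.
OFFSETS = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left


def neighbor(i, j, d, L):
    """Site reached from (i, j) along direction d on an L x L lattice
    with periodic boundaries (indices wrap around the edges)."""
    di, dj = OFFSETS[d]
    return (i + di) % L, (j + dj) % L


def neighbors(i, j, L):
    """The k = 4 neighbors of site (i, j)."""
    return [neighbor(i, j, d, L) for d in range(4)]


def opposite(d):
    """The neighbor in direction d sees the focal site in direction (d + 2) % 4."""
    return (d + 2) % 4
```

For example, `neighbors(0, 0, 200)` wraps the corner site to `(199, 0)` above and `(0, 199)` to the left. The `opposite` helper matters for edge dynamics, because each edge joins the link agent of x facing y with the link agent of y facing back toward x.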

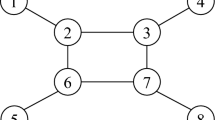

As in previous works, square lattice networks with periodic boundaries are used for the experiments, with \(L=200\), so a total of \(L^{2}\) individuals participate in the evolutionary game. Under the interactive diversity assumption, a focal individual x interacts with each of its four neighbors and may apply a different strategy against each of them. An individual is therefore regarded as consisting of four link agents, as illustrated in Fig. 1. The two link agents on each edge play the traditional PD game, and the initial strategy of each link agent is randomly assigned as either cooperation (C) or defection (D), so that the two strategies are, on average, evenly distributed on the lattice. A link agent pair receives the reward R if both sides cooperate and the punishment P if both defect. If the two sides apply different strategies, the cooperating link agent receives the sucker's payoff S, whereas the defector receives the highest payoff T. A PD game requires \(T>R>P>S\) and \(2R>T+S\). In this manuscript, the considered payoff matrix for the PD game is given as

where b denotes the dilemma strength (\(1<b<2\)).
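The link-agent representation and the pairwise game can be sketched as follows. Because the payoff matrix itself is not reproduced in this section, the code assumes the weak PD parameterization R = 1, T = b, S = P = 0, a common choice in spatial PD studies; note that it sits on the boundary P = S of the strict condition P > S. The nested-list layout `s[i][j][d]` (strategy of the link agent of player (i, j) facing direction d, with 1 = C and 0 = D) is likewise a hypothetical choice.

```python
import random


def pd_payoff(s_x, s_y, b):
    """Payoff of a link agent playing s_x against s_y (1 = C, 0 = D).
    ASSUMPTION: weak PD with R = 1, T = b, S = P = 0, where 1 < b < 2."""
    R, S, T, P = 1.0, 0.0, b, 0.0
    if s_x == 1:
        return R if s_y == 1 else S
    return T if s_y == 1 else P


def init_strategies(L, rng):
    """Randomly assign C or D (probability 1/2 each) to all 4 * L * L link agents."""
    return [[[rng.randint(0, 1) for _ in range(4)] for _ in range(L)] for _ in range(L)]


def cooperation_frequency(s):
    """Fraction of link agents currently playing C."""
    L = len(s)
    return sum(sum(links) for row in s for links in row) / (4 * L * L)
```

With `rng = random.Random(42)` and L = 200, `cooperation_frequency(init_strategies(200, rng))` is close to 0.5, which is why all curves in Fig. 3 start from that value.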

For a given link agent pair, let \(P_{xy}\) denote the payoff earned by the focal individual x from its game with neighbor y. After x has interacted with all of its neighbors, its accumulated payoff \(P_{x}\) is calculated as

\(P_{x}=\sum _{y\in \phi _{x}}P_{xy},\)

where \(\phi _{x}\) denotes the neighboring set of the focal individual x and k indicates the total number of elements in \(\phi _{x}\) (for the square lattice network considered, \(k =\) 4).
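The accumulated payoff can be sketched as below, again assuming a weak PD (R = 1, T = b, S = P = 0) and a hypothetical up/right/down/left ordering of the link agents. The key detail of edge dynamics is that the link agent of x facing y plays only against the link agent of y facing x, not against y's other link agents.

```python
OFFSETS = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left (hypothetical order)


def pd_payoff(s_x, s_y, b):
    # ASSUMPTION: weak PD with R = 1, T = b, S = P = 0 (1 = C, 0 = D)
    if s_x == 1:
        return 1.0 if s_y == 1 else 0.0
    return b if s_y == 1 else 0.0


def accumulated_payoff(s, i, j, b):
    """P_x = sum of P_xy over the k = 4 neighbors y of site x = (i, j).
    s[i][j][d] is the strategy of x's link agent facing direction d; the
    neighbor's link agent facing back toward x has direction (d + 2) % 4."""
    L = len(s)
    total = 0.0
    for d, (di, dj) in enumerate(OFFSETS):
        yi, yj = (i + di) % L, (j + dj) % L
        total += pd_payoff(s[i][j][d], s[yi][yj][(d + 2) % 4], b)
    return total
```

On a fully cooperative lattice every player earns k R = 4; a single player whose four link agents all defect in such a lattice earns 4b, and each of its neighbors loses exactly one unit of R.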

For strategy updating, two types of rules are considered, the myopic strategy (short-sighted behavior) and the imitation strategy, illustrated in Fig. 1a and b, respectively. The rules are detailed below for the link agent facing one particular neighbor; the updating processes for the other link agents of player x are analogous.

Myopic strategy [26]: also referred to as short-sighted behavior. Take \(y_{2}\) as an example. The strategy applied by the focal player x to \(y_{2}\) is \(s_{xy_{2}}\), which is cooperation in Fig. 1a, and the resulting accumulated payoff of x is \(P_{x}\). If x instead applied the reversed strategy \(s_{xy_{2}}^{'}\) (defection in the example of Fig. 1a) to \(y_{2}\), with all other conditions unchanged, a different accumulated payoff \(P_{x}^{'}\) would result. By comparing \(P_{x}\) and \(P_{x}^{'}\) under the Fermi updating rule [37], the better-performing strategy tends to be adopted; that is, the focal player x changes its strategy from \(s_{xy_{2}}\) to \(s_{xy_{2}}^{'}\) with the probability

\(W(s_{xy_{2}}\rightarrow s_{xy_{2}}^{'})=\frac{1}{1+\exp [(P_{x}-P_{x}^{'})/K]},\)

where K quantifies the noise in strategy adoption [29], its inverse 1/K being the selection intensity. As in [12], K is set to 0.1, and the same value is used for the imitation strategy.
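A sketch of the Fermi probability and of one myopic update follows, assuming weak PD payoffs R = 1, T = b, S = P = 0 (an assumption, since the paper's matrix is not reproduced here). Because reversing \(s_{xy_2}\) changes only the game on the edge between x and \(y_2\), the difference \(P_x^{'}-P_x\) reduces to the payoff difference on that single edge, so the other three games need not be recomputed. The function names are hypothetical, and the Fermi function is written in a numerically stable form so that small K (such as 0.001) does not overflow `math.exp`.

```python
import math
import random


def fermi(delta, K):
    """W = 1 / (1 + exp(-delta / K)), with delta = P_new - P_old.
    Evaluated in a form that avoids overflow for small K."""
    z = delta / K
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pd_payoff(s_x, s_y, b):
    # ASSUMPTION: weak PD, R = 1, T = b, S = P = 0 (1 = C, 0 = D)
    if s_x == 1:
        return 1.0 if s_y == 1 else 0.0
    return b if s_y == 1 else 0.0


def myopic_update(s_xy, s_yx, b, K, rng):
    """One myopic update of the link agent of x facing y.
    s_yx is the strategy of y's link agent facing x. Only this edge's game
    changes when s_xy is reversed, so P_x' - P_x equals the edge payoff change."""
    reversed_s = 1 - s_xy
    delta = pd_payoff(reversed_s, s_yx, b) - pd_payoff(s_xy, s_yx, b)
    return reversed_s if rng.random() < fermi(delta, K) else s_xy
```

With b = 1.8 and K = 0.1, a cooperating link agent facing a cooperator switches to D with probability \(1/(1+e^{-8})\), almost 1, whereas against a defector the two options tie under the assumed matrix (S = P = 0) and it switches with probability 0.5.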

Imitation strategy [27]: to update the strategy against a given neighbor, a reference is required, and a parameter p governs its selection. When the focal player x updates its strategy against \(y_{1}\), \(y_{1}\) is selected as the reference with probability p, while each of \(y_{2}\), \(y_{3}\), and \(y_{4}\) is chosen with probability (1-p)/3. As in [38, 39], p is set to 0.919.
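The reference selection can be sketched as below, with directions numbered 0 to 3 as an illustrative convention and `choose_reference` a hypothetical name. With p = 0.919, the facing neighbor is chosen about 91.9% of the time and each of the other three about 2.7% of the time.

```python
import random


def choose_reference(d, p, rng):
    """Direction of the reference when updating the link agent facing direction d:
    d itself with probability p, else one of the other three directions
    uniformly, i.e. each with probability (1 - p) / 3."""
    if rng.random() < p:
        return d
    return rng.choice([e for e in range(4) if e != d])
```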

After the reference is determined, the focal player x updates its strategy against \(y_{1}\) by imitating the strategy of the selected reference under the Fermi updating rule [34]. The corresponding probability is given as

The strategies against the other neighbors are updated in the same way; the mechanism adopted here is similar to that of [38, 39], to which we refer for more details.
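Because the imitation probability is not reproduced here, the following is only a hypothetical sketch of one imitation step. It assumes that the copied strategy is that of the reference's link agent facing x, and that the Fermi comparison uses the accumulated payoffs \(P_x\) and \(P_{ref}\) of x and the reference; both choices are illustrative assumptions, not the authors' stated rule.

```python
import math
import random


def fermi(delta, K):
    """W = 1 / (1 + exp(-delta / K)), overflow-safe."""
    z = delta / K
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def imitation_update(s_xy, s_ref, P_x, P_ref, K, rng):
    """HYPOTHETICAL imitation step for the link agent of x facing y1.
    s_ref: strategy copied from the chosen reference (assumed to be the
    reference's link agent facing x); P_x, P_ref: accumulated payoffs.
    The copy happens with Fermi probability 1 / (1 + exp((P_x - P_ref) / K))."""
    if rng.random() < fermi(P_ref - P_x, K):
        return s_ref
    return s_xy
```

A reference that earns far more than x is copied almost surely, while one that earns far less is almost never copied; at equal payoffs the choice is a coin flip.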

Link agents are thus classified into two categories according to the updating rules they adopt, and a parameter u characterizes this division: for a given u, \(4uL^{2}\) link agents adopt the myopic strategy, while the rest adopt the imitation strategy. For \(0<u<1\), the two rules coexist, so the effect of the updating rules on the level of cooperation can be examined by varying u. The updating rule of each link agent is assigned according to u, and the type of each link agent remains unchanged throughout the simulation once determined.
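The assignment of updating rules can be sketched as a random shuffle of exactly \(4uL^{2}\) myopic labels among all link agents. Rounding when \(4uL^{2}\) is not an integer, the labels `"M"`/`"I"`, and the function name are assumptions for illustration.

```python
import random


def assign_rules(L, u, rng):
    """Return rules[i][j][d] in {'M', 'I'}: exactly round(4 * u * L**2) link agents
    are myopic ('M'), the rest imitators ('I'), placed uniformly at random.
    The labels are drawn once and stay fixed for the whole run."""
    n_links = 4 * L * L
    n_myopic = round(u * n_links)  # ASSUMPTION: round when 4uL^2 is not an integer
    labels = ["M"] * n_myopic + ["I"] * (n_links - n_myopic)
    rng.shuffle(labels)
    return [[labels[4 * (i * L + j): 4 * (i * L + j) + 4] for j in range(L)]
            for i in range(L)]
```

Shuffling a list with a fixed number of labels fixes the proportion exactly; drawing each label independently with probability u would instead fix it only on average.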

The evolutionary process is analyzed by Monte Carlo (MC) simulations with 100,000 MC steps. To ensure accuracy, the results are averaged over 15 independent runs.
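A compact Monte Carlo sketch combining the pieces is given below. Several details are assumptions not stated in the text: the weak PD payoffs (R = 1, T = b, S = P = 0); one MC step defined as \(4L^{2}\) randomly chosen elementary link-agent updates; and, for the imitation rule, copying the strategy of the reference's link agent facing x with a Fermi probability based on the accumulated payoffs of x and the reference. It is an illustrative sketch, not the authors' code.

```python
import math
import random

OFFSETS = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left (hypothetical order)


def fermi(delta, K):
    """Overflow-safe 1 / (1 + exp(-delta / K))."""
    z = delta / K
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pd_payoff(sx, sy, b):
    # ASSUMPTION: weak PD, R = 1, T = b, S = P = 0 (1 = C, 0 = D)
    if sx == 1:
        return 1.0 if sy == 1 else 0.0
    return b if sy == 1 else 0.0


def simulate(L=20, b=1.8, u=0.5, K=0.1, p=0.919, steps=50, seed=0):
    """Return the final fraction of cooperative link agents.
    The link agent of site x facing direction d has flat index 4 * x + d."""
    rng = random.Random(seed)
    n = 4 * L * L
    s = [rng.randint(0, 1) for _ in range(n)]
    n_myopic = round(u * n)
    myopic = [True] * n_myopic + [False] * (n - n_myopic)
    rng.shuffle(myopic)  # rule types are fixed for the whole run

    def nb(x, d):  # neighbor site of x in direction d, periodic boundaries
        i, j = divmod(x, L)
        di, dj = OFFSETS[d]
        return ((i + di) % L) * L + (j + dj) % L

    def payoff(x):  # accumulated payoff P_x over the four games of site x
        return sum(pd_payoff(s[4 * x + d], s[4 * nb(x, d) + (d + 2) % 4], b)
                   for d in range(4))

    for _ in range(steps):
        for _ in range(n):  # ASSUMPTION: one MC step = n random elementary updates
            x, d = rng.randrange(L * L), rng.randrange(4)
            a = 4 * x + d
            if myopic[a]:
                partner = s[4 * nb(x, d) + (d + 2) % 4]
                delta = pd_payoff(1 - s[a], partner, b) - pd_payoff(s[a], partner, b)
                if rng.random() < fermi(delta, K):
                    s[a] = 1 - s[a]
            else:
                # reference: facing neighbor with prob. p, else one of the other three
                r = d if rng.random() < p else rng.choice([e for e in range(4) if e != d])
                z = nb(x, r)
                # HYPOTHETICAL: copy the reference's link agent facing x
                if rng.random() < fermi(payoff(z) - payoff(x), K):
                    s[a] = s[4 * z + (r + 2) % 4]
    return sum(s) / n
```

For example, `sum(simulate(seed=k) for k in range(15)) / 15` averages 15 independent runs. The text uses L = 200 and 100,000 MC steps; a pure-Python loop at that scale would be very slow, so the defaults here are deliberately small, and one would typically also average over the final stationary steps rather than take a single snapshot.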

3 Results and analysis

To reveal how varying the proportion of link agents that adopt each updating rule affects cooperation, extensive experiments are conducted and the simulation results are analyzed below.

First, as mentioned above, the parameter u indicates the proportion of link agents adopting the myopic strategy rather than the imitation strategy. The frequencies of cooperation obtained for different combinations of b and u are shown in Fig. 2. For a fixed b larger than 1.1, the frequency of cooperation first decreases and then increases as the proportion of link agents adopting the imitation strategy decreases (i.e., as u increases), whereas for \(b<1.1\) it decreases as u increases. For a fixed u, the frequency of cooperation decreases as the temptation b grows. The frequency of cooperation is lowest when the temptation is relatively large (around 2) and approximately 40% of link agents adopt the myopic strategy.

To further understand the effect of varying the proportion of link agents adopting the myopic strategy, we also ran experiments for several selected scenarios (\(u = 0, 0.2, 0.5, 0.8, 1\)) with \(b=1.8\); the corresponding evolutionary dynamics are presented in Fig. 3. The frequencies of cooperation all start from 0.5, regardless of the updating rules, because cooperation and defection are randomly assigned to the link agents on the lattice. For \(u=0\), all link agents adopt the imitation strategy. In this case the frequency of cooperation first drops rapidly and remains low for a long time, then rises rapidly until the stationary state is reached, where it is relatively high (approximately 90%) even though the temptation to defect is relatively large. This is the well-known inverse feedback phenomenon in evolutionary game theory, which has been reported in [40, 41]. For \(u=0.2\), a relatively small fraction of link agents adopt the myopic strategy; although the inverse feedback phenomenon still exists, the stationary frequency of cooperation is only approximately 55%. Compared with \(u=0\), this shows that introducing the myopic strategy strongly inhibits cooperation. For \(u=0.5\), the inverse feedback phenomenon disappears, and the frequency of cooperation decreases gradually to a low level of about 25%. For \(u=0.8\), a relatively large fraction of link agents adopt the myopic strategy, and the frequency of cooperation is higher than for \(u=0.5\). When all link agents adopt the myopic strategy (\(u=1\)), the frequency of cooperation increases slightly further, but the promotion effect is not significant. Among the scenarios considered, the frequency of cooperation is lowest for \(u=0.5\).

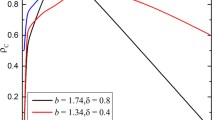

Next, we investigate the relationship between the frequency of cooperation and the parameter u for \(b = 1.2, 1.5\), and 1.8, with the results shown in Fig. 4a. The dependence of the frequency of cooperation on u is V-shaped, with a threshold \(u_{d}\) for each scenario. For \(u<u_{d}\), the frequency of cooperation decreases rapidly as u increases; for \(u>u_{d}\), cooperation improves as more link agents adopt the myopic strategy, although this promotion effect is insignificant. Moreover, \(u_{d}\) varies with b and is usually smaller for larger b. In short, in terms of promoting cooperation, the imitation rule is more sensitive to b, while the myopic rule is less sensitive; consequently, for a fixed b, the cooperation rate first decreases and then increases with u. Fig. 4b further shows how the numbers of different types of cooperative link agents depend on u for \(b=1.8\). Here, cooperative link agents are classified according to the updating rules they adopt: TC, MC, and IC denote the total number of cooperative link agents, the number of cooperators using the myopic strategy, and the number of cooperators using the imitation strategy, respectively, so that TC = MC + IC (in this context, MC does not stand for Monte Carlo). IC decreases as u increases, and the decrease becomes much slower for larger u, while MC increases slowly with u. Overall, TC first decreases rapidly and then increases slowly. Hence, cooperation is greatly improved when link agents adopt the imitation strategy.

Fig. 4 a Relationship between the frequency of cooperation and u for different values of b; b relationships of TC, MC, and IC with u for \(b = 1.8\). Here, TC, MC, and IC denote the total number of cooperative link agents, the number of cooperators with the myopic strategy, and the number of cooperators with the imitation strategy, respectively.
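The three counts plotted in Fig. 4b can be computed as sketched below, assuming flat lists of link-agent strategies and rule flags; the function name and data layout are illustrative. Since every link agent follows exactly one rule, the identity TC = MC + IC holds by construction.

```python
def count_cooperators(strategies, myopic):
    """Return (TC, MC, IC) for flat lists of link-agent strategies (1 = C, 0 = D)
    and rule flags (True = myopic, False = imitation).
    Every link agent has exactly one rule, so TC = MC + IC."""
    mc = sum(1 for s, m in zip(strategies, myopic) if s == 1 and m)
    ic = sum(1 for s, m in zip(strategies, myopic) if s == 1 and not m)
    return mc + ic, mc, ic
```

For example, `count_cooperators([1, 0, 1, 1], [True, True, False, False])` returns `(3, 1, 2)`.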

To understand cooperative behavior from a micro perspective, Fig. 5a–c shows, for \(u = 0.1, 0.5\), and 0.9, the stationary distribution of the link agents' cooperation probability for each type of updating rule. For \(u = 0.1\), most link agents adopt the imitation strategy, and individuals with a cooperation probability of 1 account for almost 40%. For \(u = 0.5\), most individuals have a low cooperation probability, especially a cooperation probability of 0. Although the numbers of link agents adopting the myopic and imitation strategies are almost equal, individuals whose link agents adopt the myopic strategy usually have a higher cooperation probability; in this scenario, the few individuals whose cooperation probability equals 1 are those whose link agents adopt the myopic strategy. For \(u = 0.9\), this phenomenon is even more obvious. Overall, the imitation strategy plays an important role in promoting cooperation in the whole system, whereas individuals adopting the myopic strategy tend to have a relatively high probability of cooperating.
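One way to compute a distribution like that in Fig. 5 is sketched below, assuming that an individual's cooperation probability is the fraction of its four link agents that currently play C. Under this assumption the only possible values are 0, 0.25, 0.5, 0.75, and 1, which is consistent with the rates of 0.25 and 0.5 reported for myopic players. The function name and input layout are hypothetical.

```python
from collections import Counter


def cooperation_rate_distribution(individuals):
    """individuals: one list of four link-agent strategies (1 = C, 0 = D) per player.
    Returns {rate: fraction of players} for rate in 0, 0.25, 0.5, 0.75, 1."""
    counts = Counter(sum(links) / 4 for links in individuals)
    n = len(individuals)
    return {k / 4: counts[k / 4] / n for k in range(5)}
```

Splitting the input by rule type before calling the function gives one histogram per updating rule, as in the panels of Fig. 5.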

In the simulations above, K is fixed at 0.1. Since the noise K also affects the evolutionary dynamics of cooperation, extensive simulations are also conducted for different combinations of u and K with \(b=1.8\) (Fig. 6). K cannot be set to 0, because the Fermi probability involves division by K; the minimum value considered here is therefore 0.001. When all link agents adopt the imitation strategy (\(u=0\)), cooperative behavior is significantly promoted and the level of cooperation is relatively high. For a relatively small fixed u, the frequency of cooperation decreases as K increases, whereas for a large fixed u it increases as K increases. For a fixed K, cooperation is inhibited when u is large. When u is slightly larger, the frequency of cooperation first decreases and then increases as K increases. This is consistent with the previous conclusion that the effect of the myopic strategy on the frequency of cooperation is V-shaped.
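The role of K can be illustrated by evaluating the Fermi probability for a fixed payoff change at several noise levels. The payoff change 0.8 corresponds to b - 1 at b = 1.8, the gain of a cooperating link agent that defects against a cooperator under an assumed weak PD (R = 1, T = b, S = P = 0). The exponent divides by K, so the expression is undefined at K = 0 (Python raises `ZeroDivisionError`), even though its limit as K approaches 0 is a deterministic choice of the better payoff.

```python
import math


def fermi(delta, K):
    """Overflow-safe 1 / (1 + exp(-delta / K)); K = 0 raises ZeroDivisionError."""
    z = delta / K
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Switching probability for a payoff gain (+0.8) and a payoff loss (-0.8)
for K in (0.001, 0.1, 1.0, 10.0):
    print(f"K = {K:<6} W(+0.8) = {fermi(0.8, K):.4f}  W(-0.8) = {fermi(-0.8, K):.4f}")
```

As K shrinks, W approaches a step function (the better strategy is always taken); as K grows, W approaches 1/2 and strategy changes become nearly random, which is why K can shift the balance between the two updating rules.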

Network topology is well known to play an important role in the evolution of cooperation. To test the generality of the conclusions on the myopic strategy, experiments are therefore also performed on other networks, namely the small-world (SW) network and the random network, whose phase diagrams are shown in Fig. 7. On the SW network, the frequency of cooperation is higher when fewer link agents adopt the myopic strategy (i.e., smaller u), especially when u is smaller than 0.1. For a fixed b, the frequency of cooperation first decreases and then increases as more link agents adopt the myopic strategy, so the overall effect of the parameter combinations on the frequency of cooperation is V-shaped. A similar phenomenon exists on the random network, where the overall effect is U-shaped. Comparing with the phase diagram for the lattice network, network heterogeneity appears to play a negative role in cooperation: the performance on the lattice network is much better than on the SW network, and the performance on the random network is the worst.

4 Conclusion

In this manuscript, we investigate how varying the proportion of link agents that adopt different strategy updating rules affects the level of cooperation under interactive diversity. Many studies have considered individuals adopting multiple update rules, such as complex models with imitation and innovation rules. The novelty here is that agents follow heterogeneous interactions, that is, individuals may adopt different strategies toward different neighbors at the same time. We mainly investigate the evolutionary dynamics on the regular lattice network, where players can adopt two updating rules, the myopic strategy (short-sighted behavior) and the imitation strategy, so that link agents fall into two categories whose proportions are characterized by the parameter u. The simulation results show that, in terms of promoting cooperation, the imitation rule is more sensitive to the temptation to defect, while the myopic rule is less sensitive. Therefore, for a fixed b, the cooperation rate first decreases and then increases as the proportion of myopic players increases. Although increasing the temptation to defect does not significantly reduce cooperation among myopic players, the imitation rule plays the leading role in achieving high cooperation rates across the entire network. Furthermore, in the stationary state, the distributions of the cooperation rate under the two updating rules differ considerably. Among imitating players, individuals with a high cooperation rate dominate when u is small, and individuals with a low cooperation rate dominate when u is relatively large. Among myopic players, cooperation rates of 0.25 and 0.5 dominate regardless of u.
Simulations on different networks show that introducing heterogeneity of individual behavior patterns alone alleviates the social dilemma. However, when heterogeneity of the network structure is considered at the same time, cooperation does not improve, implying that when multiple cooperation-promoting mechanisms coexist, they may produce the opposite effect; this is consistent with the findings of Su et al. [38, 39]. We hope that the insights in this manuscript will help in solving social dilemmas.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The data can be generated by the program which implements the model, while the details of the model are already provided.]

References

K. Sigmund, The calculus of selfishness (Princeton University Press, Princeton, 2016)

M. Perc, J.J. Jordan, D.G. Rand et al., Statistical physics of human cooperation. Phys. Rep. 687, 1–51 (2017)

C. Gao, J. Liu, Network-based modeling for characterizing human collective behaviors during extreme events. IEEE Trans. Syst. Man Cybern. Syst. 47(1), 171–183 (2017)

C.P. Roca, J.A. Cuesta, A. Sánchez, Evolutionary game theory: temporal and spatial effects beyond replicator dynamics. Phys. Life Rev. 6(4), 208–249 (2009)

Y. Han, Z. Song, J. Sun et al., Investing the effect of age and cooperation in spatial multigame. Phys. A 541, 123269 (2020)

M.A. Nowak, R.M. May, Evolutionary games and spatial chaos. Nature 359(6398), 826–829 (1992)

D. Vilone, V. Capraro, J.J. Ramasco, Hierarchical invasion of cooperation in complex networks. J. Phys. Commun. 2(2), 025019 (2018)

M.G. Zimmermann, V.M. Eguíluz, Cooperation, social networks, and the emergence of leadership in a prisoner’s dilemma with adaptive local interactions. Phys. Rev. E 72(5), 056118 (2005)

G. Szabó, J. Vukov, A. Szolnoki, Phase diagrams for an evolutionary prisoner’s dilemma game on two-dimensional lattices. Phys. Rev. E 72(4), 047107 (2005)

J.M. Pacheco, A. Traulsen, H. Ohtsuki et al., Repeated games and direct reciprocity under active linking. J. Theor. Biol. 250(4), 723–731 (2008)

P. Zhu, X. Wang, D. Jia et al., Investigating the co-evolution of node reputation and edge-strategy in prisoner’s dilemma game. Appl. Math. Comput. 386, 125474 (2020)

Z. Wang, M. Jusup, L. Shi et al., Exploiting a cognitive bias promotes cooperation in social dilemma experiments. Nat. Commun. 9(1), 1–7 (2018)

A. Szolnoki, M. Perc, Vortices determine the dynamics of biodiversity in cyclical interactions with protection spillovers. New J. Phys. 17(11), 113033 (2015)

J. Shi, D. Hu, R. Tao et al., Interaction between populations promotes cooperation in voluntary prisoner’s dilemma. Appl. Math. Comput. 392, 125728 (2021)

C. Chu, X. Hu, C. Shen et al., Self-organized interdependence among populations promotes cooperation by means of coevolution. Chaos 29(1), 013139 (2019)

T.H. Clutton-Brock, Breeding together: kin selection and mutualism in cooperative vertebrates. Science 296(5565), 69–72 (2002)

M.A. Nowak, Five rules for the evolution of cooperation. Science 314(5805), 1560–1563 (2006)

S.A. West, A.S. Griffin, A. Gardner, Social semantics: altruism, cooperation, mutualism, strong reciprocity and group selection. J. Evol. Biol. 20(2), 415–432 (2007)

M.A. Nowak, K. Sigmund, Evolution of indirect reciprocity by image scoring. Nature 393(6685), 573–577 (1998)

Y. Jiao, T. Chen, Q. Chen, The impact of expressing willingness to cooperate on cooperation in public goods game. Chaos Solitons Fractals 140, 110258 (2020)

A. Szolnoki, M. Perc, G. Szabó, H.-U. Stark, Impact of aging on the evolution of cooperation in the spatial prisoner’s dilemma game. Phys. Rev. E 80, 021901 (2009)

W. Lu, J. Wang, C. Xia, Role of memory effect in the evolution of cooperation based on spatial prisoner’s dilemma game. Phys. Lett. A 382(42–43), 3058–3063 (2018)

M. Perc, A. Szolnoki, Coevolutionary games—a mini review. BioSystems 99, 109–125 (2010)

G. Cimini, A. Sánchez, Learning dynamics explains human behaviour in Prisoner’s dilemma on networks. J. R. Soc. Interface 11(94), 20131186 (2014)

A. Szolnoki, G. Szabó, Cooperation enhanced by inhomogeneous activity of teaching for evolutionary Prisoner’s dilemma games. EPL (Europhysics Letters) 77(3), 30004 (2007)

M. Sysi-Aho, J. Saramäki, J. Kertész et al., Spatial snowdrift game with myopic agents. Eur. Phys. J. B 44(1), 129–135 (2005)

M.A. Nowak, R.M. May, Evolutionary games and spatial chaos. Nature 359, 826–829 (1992)

C. Gao, C. Liu, D. Schenz, X. Li, Z. Zhang, M. Jusup, Z. Wang, M. Beekman, T. Nakagaki, Does being multi-headed make you better at solving problems? A survey of physarum-based models and computations. Phys. Life Rev. 29, 1–26 (2019)

M. Perc, A. Szolnoki, Social diversity and promotion of cooperation in the spatial prisoner’s dilemma game. Phys. Rev. E 77, 011904 (2008)

A. Szolnoki, X. Chen, Gradual learning supports cooperation in spatial prisoner’s dilemma game. Chaos Solitons Fractals 130, 109447 (2020)

A. Szolnoki, M. Perc, Leaders should not be conformists in evolutionary social dilemmas. Sci. Rep. 6, 23633 (2016)

A. Szolnoki, X. Chen, Competition and partnership between conformity and payoff-based imitations in social dilemmas. New J. Phys. 20(9), 093008 (2018)

P. Zhu, X. Hou, Y. Guo et al., Investigating the effects of updating rules on cooperation by incorporating interactive diversity. Eur. Phys. J. B 94(2), 58 (2021)

Z. Danku, Z. Wang, A. Szolnoki, Imitate or innovate: competition of strategy updating attitudes in spatial social dilemma games. EPL 121, 18002 (2018)

A. Szolnoki, Z. Danku, Dynamic-sensitive cooperation in the presence of multiple strategy updating rules. Phys. A 511, 371–377 (2018)

M.A. Amaral, M.A. Javarone, Heterogeneous update mechanisms in evolutionary games: mixing innovative and imitative dynamics. Phys. Rev. E 97, 042305 (2018)

J. Vukov, G. Szabó, A. Szolnoki, Cooperation in the noisy case: prisoner’s dilemma game on two types of regular random graphs. Phys. Rev. E 73(6), 067103 (2006)

Q. Su, A. Li, L. Zhou, L. Wang, Interactive diversity promotes the evolution of cooperation in structured populations. New J. Phys. 18, 103007 (2016)

Q. Su, A. Li, L. Wang, Evolutionary dynamics under interactive diversity. New J. Phys. 19, 103023 (2017)

M. Perc, A. Szolnoki, G. Szabó, Restricted connections among distinguished players support cooperation. Phys. Rev. E 78, 066101 (2008)

A. Szolnoki, M. Perc, Promoting cooperation in social dilemmas via simple coevolutionary rules. Eur. Phys. J. B 67, 337–344 (2009)

Acknowledgements

This work was supported in part by National Key R&D Program of China (Grant no. 2018AAA0100905), National Natural Science Foundation of China (Grant No. 62073263), and Key Technology Research and Development Program of Science and Technology-Scientific and Technological Innovation Team of Shaanxi Province (Grant No. 2020TD-013).

Cite this article

Li, X., Cheng, L., Niu, X. et al. Highly cooperative individuals’ clustering property in myopic strategy groups. Eur. Phys. J. B 94, 126 (2021). https://doi.org/10.1140/epjb/s10051-021-00136-5
