Abstract

This paper deals with the numerical approximation of a stick–slip system, known in the literature as Burridge–Knopoff model, proposed as a simplified description of the mechanisms generating earthquakes. Modelling of friction is crucial and we consider here the so-called velocity-weakening form. The aim of the article is twofold. Firstly, we establish the effectiveness of the classical Predictor–Corrector strategy. To our knowledge, such approach has never been applied to the model under investigation. In the first part, we determine the reliability of the proposed strategy by comparing the results with a collection of significant computational tests, starting from the simplest configuration to the more complicated (and more realistic) ones, with the numerical outputs obtained by different algorithms. Particular emphasis is laid on the Gutenberg–Richter statistical law, a classical empirical benchmark for seismic events. The second part is inspired by the result by Muratov (Phys Rev 59:3847–3857, 1999) providing evidence for the existence of traveling solutions for a corresponding continuum version of the Burridge–Knopoff model. In this direction, we aim to find some appropriate estimate for the crucial object describing the wave, namely its propagation speed. To this aim, motivated by LeVeque and Yee (J Comput Phys 86:187–210, 1990) (a paper dealing with the different topic of conservation laws), we apply a space-averaged quantity (which depends on time) for determining asymptotically an explicit numerical estimate for the velocity, which we decide to name LeVeque–Yee formula after the authors’ name of the original paper. As expected, for the Burridge–Knopoff, due to its inherent discontinuity of the process, it is not possible to attach to a single seismic event any specific propagation speed. More regularity is expected by performing some temporal averaging in the spirit of the Cesàro mean. In this direction, we observe the numerical evidence of the almost convergence of the wave speeds for the Burridge–Knopoff model of earthquakes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Earthquakes occur along fractures in the Earth’s crust, named faults, characterized by a steady accumulation of tension, when big quantities of energy are suddenly released due to the relative motion between the two sides involved. For a better understanding, we recall that Earth’s lithosphere includes the crust and is also composed of a part of the upper mantle. Moreover, it presents a complex structure divided into distinct blocks, the tectonic plates. Indeed, it is along the borders of tectonic plates that the great accumulation of tension we mentioned above takes place.

A pivotal role in our analysis is played by the friction. Indeed, although the existence of forces able to solicit plates is an important factor to explain seismic events, nothing would happen if friction did not inhibit the relative motion between the two different sides of an active fault. Strongly connected with these concepts is the stick–slip phenomenon, associated with the earthquakes by Brace and Byerlee [7]. The borders of a fault exhibit asperities which make the local slip very difficult: As a consequence, tension increases and the motion is inhibited by the balance between tension and friction. Once this equilibrium is compromised, due to the steady accumulation of stress, a slip of the sides involved occurs and a great quantity of energy is released, thus generating an earthquake. The alternation between period of quiescence, in which tension increases, and phases in which tension is released along the fault is a typical example of the stick–slip behavior.

In the last decades, a great effort has been made to investigate the statistical properties of earthquakes. This way of thinking is strongly connected with the idea of self-organized criticality, SOC, developed by Bak et al. [3] and its influence on seismic events [2]. When critical states are reached, little perturbations affecting the elements belonging to the systems can propagate and involve items of any size [40]. In such a framework, this behavior is often illustrated by basic laws collecting the statistical properties of the process studied. As concerns the earthquakes, two important power laws would be a concrete manifestation of the SOC principles: the Gutenberg–Richter law [18] for the magnitude distributions and the Omori law for the aftershocks sequences [36]. In order to point out the SOC paradigm, the earthquake models are often analyzed with the cellular automaton approach, as in the work by Olami, Feder and Christensen [2, 32].

One of the most famous mathematical models developed to study earthquakes and its statistical properties is the Burridge–Knopoff model, proposed in 1967 [8], whose exploration provides a deep insight into the basic mechanism driving the seismic events. Such model has been deeply investigated in order to pursue a statistical study of earthquakes [19] and continues to be a landmark in this research field, due to its non-triviality but, at the same time, its simplicity [13]. On a mathematical level, the associated differential system exhibits a discontinuous right-hand side, arising from the choice of the friction law, the only source of nonlinearity in the model. Lots of models arising from applications exhibit similar characteristics and require careful analysis. An analytical study of a non-smooth friction–oscillator model, qualitatively very close to the Burridge–Knopoff model, is provided in [21]. Also the related numerical problem must be adequately approached: In this sense, some numerical methods are employed for non-smooth systems [1] and suitable regularizations have been performed [17]. Finally, let us remark that also stochastic variations of the Burridge–Knopoff model have been considered [20, 39]. However, we stress the fact that we are going to limit the analysis to the deterministic evolutive case, restricting the random part to the choice of the initial data.

The main original contributions of this paper are two. Firstly, we take advantage of a classical Predictor–Corrector strategy [33] to describe the discontinuous dynamics. The idea, new as a specific application to the Burridge–Knopoff model, is inspired by the necessity of providing a good initial guess to start fixed-point iterations when an implicit method is invoked. Indeed, because several function evaluations are generally required by using the fixed-point method, trying to reduce the computational cost becomes important. So the basic idea consists of using an explicit multistep method to compute a better initial guess and take advantage of this value by employing an implicit multistep method. The procedure is then divided into two parts: The first one is the prediction phase, where an explicit algorithm, named Predictor, furnishes an adequate initial guess, and the second one is the correction phase, where an implicit algorithm is invoked, possibly also several times, to realize the fixed-point scheme. The implicit method used is defined the Corrector because it acts on the predicted initial value. However, it is important to notice that the overall strategy is totally explicit because the predicted value is employed within the implicit method where the dependence on the incoming time instant appears. The second original contribution has been inspired by the fact that some continuous versions of the Burridge–Knopoff model exhibit propagating fronts (see [26, 31]). Hence, we wanted to explore if there is some numerical evidence of similar kind of structures for the original model, where it is known that the analysis is much more intricated due to the inherent discrete structure. The main tool which we consider is a time-dependent and space-averaged value of the solution, which we call LeVeque–Yee formula [24], which has been originally proposed in the field of conservation laws (for details, see later on). As a natural consequence of the inherent discontinuities of the process, it is not possible to attach to a given evolution a given speed of propagation, since the behavior exhibits a number of large oscillations. Much better results are obtained by performing a time average in the spirit of the Cesàro mean which is, on its turn, generalized to the concept of almost convergence [4, 25] of wave speeds: We proceed as in the context of traveling wave theory, specifically, by numerically recognizing the existence of an asymptotic speed.

The contents are organized as follows. In Sect. 2, we introduce the model and describe a particular version among those available as developments of the original one proposed by Burridge and Knopoff, mentioning the important connection with the Gutenberg–Richter law. In Sect. 3, we present the numerical algorithm and analyze the computational strategy used; also, we comment on the results of simulations by starting from the simplest case in order to increase the complexity and consider more articulated configurations. In Sect. 4, we perform simulations aimed at detecting the almost convergence of wave speeds, by means of a suitable wave speed estimate, to get information about traveling fronts: We numerically prove the existence of an asymptotic threshold and discuss a phenomenological explanation concerning the convergence of the wave speed averages. Finally, in Sect. 5 a summary of our results is provided and perspectives on future work are considered.

2 The Burridge–Knopoff model

The model, originally proposed by Burridge and Knopoff in [8], is a spring–block model aiming to reproduce the typical dynamics which take place along an active fault. The goal is pursued through a discrete representation given by a chain of N identical blocks, with mass m, mutually connected by linear springs with elastic constant \(k_c\). A sort of one-dimensional array is generated (Fig. 1). It is also possible studying the dynamics produced by a grid of blocks, within a multidimensional version of the system, thus focusing on a two-dimensional array [28, 29].

The blocks are supposed to rest on a rough surface, where F describes a friction term, and are connected to a moving upper plate by linear springs with elastic constant \(k_p\). As regards the approximation of a real fault, the opposite sides of two different tectonic plates are assumed to be represented by the rough surface and the chain of blocks. The upper surface is supposed to be in motion, precisely at constant velocity V: This contribution induces a solicitation explainable thinking about the role of the external forces acting on a fault. It is assumed that the blocks are initially equally spaced and that the reciprocal distance is a. This means that a does not explicitly appear within the equation of motion for the block i, which is

where \(x_{i}\) is the displacement from the initial equilibrium position. Let us investigate the structure of (1) by analyzing each contribution.

Internal elastic energy As concerns the horizontal springs, it is assumed that a linear interaction takes place among the blocks. This is the conventional adjustment adopted within the Burridge–Knopoff model, but a linear coupling is not the only possibility. For instance, the eventuality of a nonlinear coupling is considered in [12]. Due to the chain structure, producing two neighbors for each block, the internal elastic solicitation consists of two contributions, obviously except for the masses at the edges. (In this case, adequate boundary conditions are required as we will discuss in Sect. 3.) By considering the elastic forces and recalling that the expression \(x_{i}\) is associated with the displacements from equilibrium position, the contribution provided by springs with stiffness \(k_c\) takes the form of the one-dimensional discrete Laplace operator.

External forces We said above that the action of the external forces is realized within the model by the upper surface, in motion with constant velocity V. The blocks deal with this external element through the springs with stiffness \(k_p\). So each mass is affected by another elastic solicitation besides that produced by the horizontal coupling. Of course, to quantify the vertical elastic force, it is necessary to take into account the elongation of springs caused by the upper plate. This consideration simply justifies the presence of the product Vt. It just has to combine this quantity with \(x_{i}\) and \(k_p\) according to the linear elasticity as in (1).

Friction The friction force \(F(\dot{x}_{i})\) is velocity-dependent. This law allows to reproduce the typical stick–slip behavior and introduces an essential instability inside the model. Modelling friction is one of the most delicate issues. Following Carlson and Langer [9,10,11], we consider the so-called velocity-weakening friction (whose specific formula will be given later on). Different choices could have been considered such as the Dieterich–Ruina friction law [15, 16, 34, 37] or the Coulomb friction law used by Muratov in [31]. In addition, another choice has to be made between two different qualitative behaviors: the asymmetric and symmetric versions. Here, the main difference is the constraint of nonnegative block velocity, meaning that back slip is inhibited for each block. We assume this constraint according to [11, 22, 27, 30, 35, 41] and adopt the following (multivalued) functional form

where \(v=\dot{x}_{i}\). This double structure is easily understandable because a law based on the stick–slip dynamics must exhibit a discontinuity, as a consequence of the alternation between sticking and sliding motion for each block. The back sliding motion is forbidden by formally imposing \(F(v)=-\infty\) for \(v<0\). The value \(F_{0}\) corresponds to the maximum static frictional force, so the static friction formally may range in the interval \((-\infty ,F_{0}]\). During a sticking period the elastic resultant force acting on a block is perfectly balanced by the static friction, which means no motion: In this case, equation (1) simply becomes \(m\ddot{x}_{i}=0\) with initial zero velocity. When the resultant force exceeds the threshold \(F_{0}\), a slipping period starts with dynamic friction. As the velocity increases, the value of friction becomes lower, decaying monotonically to zero (see Fig. 2).

Another important feature of the friction law is the role of the parameters \(\sigma\) and \(\alpha\). The first one quantifies a small drop of the friction at the end of a sticking period, and the second one provides information about the decreasing of the dynamic friction force in relation to the increasing of the sliding velocity.

One of the most interesting features of the Burridge–Knopoff model consists of the reproducibility of some important properties related to complex phenomena as real earthquakes, although the system exhibits a relatively simple structure. Among these typical behaviors, the Gutenberg–Richter law plays a significant role and can be used as a powerful instrument to assess the reliability of the model. Such power law establishes that, in a seismic zone, the relationship between the number N of earthquakes with intensity greater than or equal to a given magnitude M and the magnitude itself has the form \(\log _{10}{N}= a-bM\) for some parameters a and b. In order to represent the rate of seismic events, by introducing the total number of events expressed as \(N_{T}=10^{a}\), it is possible to reformulate the previous relationship as

This substitution allows us to understand the meaning of the quantity a in terms of total seismicity rate of an active zone. Finally, as regards the parameter b, in real situations its values are usually very close to 1 in seismic zones [13].

As pointed out in [19], the version under investigation of the Burridge–Knopoff model is not capable of reproducing the smaller earthquakes that follows the main event, as described empirically by the Omori law. However, the modification of the original model has been proposed to include such aftershocks field [5] and it would be a very interesting issue to include such effect into the general picture.

3 Numerical algorithm and its reliability

To start with, we discuss the computational strategy adopted and the numerical algorithm chosen. Once the number of blocks N is established, it is possible to obtain a differential system with equations like (1), namely

for \(i=1\), ..., N, where we assume that \(x_{0}=x_{1}\) and \(x_{N+1}=x_{N}\) for the boundary conditions [8]. The choice of such Neumann-type condition, corresponding to a zero-flux boundary condition, is dictated by the idea that no external effect—except the force of sliding upper plate—should influence the behavior of the block system.

Initial conditions Following [42], in order to avoid a periodic evolution and with the aim of reproducing realistic local tension along a fault, we assign small random displacements from the equilibrium positions for each mass. The blocks are supposed to be at rest, so we force the velocities to be zero in such a case. Remembering that the blocks are initially equally spaced with distance a, the equilibrium positions are \(P_{i}=a(i-1)\) for \(i=1, \dots , N\). Because zero speeds are imposed, all blocks are initially stuck. However, if a simulation would have started with the actual initial conditions, some irregular dynamical motion would be recognized, due to the action of spring and friction forces. On the contrary, we wish to appreciate a realistic charge cycle; that is why we identify the next incoming time of global stick, \(\bar{t}\), and select this one as initial time. This implies that the original initial conditions, and corresponding perturbations, must be updated in \(\bar{t}\): The simulation is now ready to be restarted by setting \(t=0\).

Stick–slip detection To identify a time of stick for a block within the numerical code, we use a criterion based on both the resultant force and velocities: A block is stuck if and only if the elastic resultant force is less than the maximum static frictional force and the velocity is equal to zero. Being back slip inhibited, sign changes of the velocity are interpreted as the tendency of being stuck, so negative values are suppressed and replaced by zero values. Such a strategy allows us to take advantage of the nonnegative velocity constraint characterizing the asymmetric friction law, thus overcoming the need for a further parameter to be used: Indeed, being difficult to numerically detect an exact zero velocity, a tolerance is typically introduced when working with the asymmetric friction framework.

Seismic events Concerning the statistical properties of the model, and specifically referring to the Gutenberg–Richter law, we assume an operative definition to determine whether a seismic event is happening: An earthquake occurs when a blocks starts to slip and ends only where all the blocks are stuck again. This definition implies that, during an event, a block can slip and become stuck alternately; moreover, the elastic coupling produces a sort of propagation along the chain of masses, because a block can trigger the slipping of its neighbors. In order to quantify the magnitude of an earthquake, we introduce the following definition for the magnitude M:

where \(\Delta x_{i}\) is the cumulative displacement of the block i during a given earthquake.

3.1 Numerical adjustment

As regards the numerical integration of the Burridge–Knopoff model with velocity-weakening friction, various methodologies have been employed by using either explicit methods, such as explicit Runge–Kutta [27,28,29,30, 41], or implicit methods, such as implicit Euler [42]. Here, we propose to adopt a Predictor–Corrector strategy, which is, to our knowledge, applied here for the first time to the model. To convince the reader that the algorithm is reliable, we compare it with the results given by different methods, showing that these are the same, also quantitatively.

For reader’s convenience, we briefly recall the scheme [33]. First of all, we consider a general implicit multistep method, for instance, by selecting Adams–Moulton methods from the Adams family:

If \(b_{-1}\ne 0\), an implicit method, named Adams–Moulton, is generated; otherwise, when \(b_{-1}=0\), an explicit method, named Adams–Bashforth, is obtained. That is why we assume \(b_{-1}\ne 0\). In (4), \(y_{n}\) indicates the approximate solution evaluated at time \(t_{n}\); the symbol \(f_{n-j}\), corresponding to \(f(t_{n-j},y_{n-j})\), is the vector field; h is the step size; \(b_{j}\in \mathbb {R}\); \(p\in \mathbb {N}\) is used to quantify the number of steps of the method, precisely \(p+1\), without including the implicit part associated with \(j=-1\). We recall that the Adams methods are derived from the integral representation of the Cauchy’s problem for a given differential system, namely

by using interpolating polynomials in the Lagrange form to approximate the vector field. In order to solve a Cauchy’s problem by using an implicit method such as (4), it is necessary to approach a nonlinear equation. We can rewrite (4) as follows

By taking advantage of (5), we can adopt fixed-point iterations and thus solve the nonlinear equation. For \(k=0,1\ldots ,\) we get

However, the procedure triggered by (6) requires several function evaluations to achieve convergence, due to the iterations needed. The idea behind a Predictor–Corrector strategy, which is inspired by the purpose of reducing the computational cost, is to compute a good initial guess for the fixed-point iterations by recalling an explicit multistep method. This method, called Predictor, provides an adequate guess to be used within the fixed-point scheme (6) generated by the implicit algorithm (4). The implicit method, named Corrector, can be invoked m times, with \(m\ge 1\). When \(m>1,\) the procedure is called Predictor–Multicorrector. In this paper, we choose \(m=1\), so we will continue using simply the wording Predictor–Corrector. The algorithm produced by starting from the Adams methods can be summed up as follows

where the Evaluation step of the vector field f is included. The superscript (0) denotes the guess provided by the Predictor, and the superscript (1), instead, indicates the values provided by the Corrector. The abbreviation usually employed for the overall procedure is PEC. We notice that a Predictor–Corrector strategy, also in the general case \(m\ge 1\), is by construction totally explicit. As regards our numerical adjustment, we adopt the Predictor–Corrector technique in a bit different form, called PECE, in which a further evaluation of f is performed at the end of the sequence. Moreover, the second-order Adams–Bashforth scheme (AB2) is used as Predictor, while the third-order Adams–Moulton method (AM3) is chosen as Corrector. We thus obtain

We point out that the order, q, of the PECE procedure, can be computed as follows

where \(q_{p}\) and \(q_{c}\) are the orders of the Predictor and the Corrector steps, respectively. Therefore, we have generated an overall third-order method.

Evaluating the pros and the cons of the Predictor–Corrector technique, the main advantages consist, firstly, in avoiding to solve an implicit system at every step, whose size would increase with the number of blocks, and, secondly, in ensuring a stronger stability when compared to standard explicit methods. On the other hand, we are forced to adopt a small time step—here chosen equal to \(h=0.001\)—to achieve a good approximation of the solution to the Burridge–Knopoff model [30, 41].

3.2 One block

The aim is to become familiar with the specific trend of the stick–slip dynamics, we start with the evolution in which only one block is involved (see Fig. 3).

The equation of motion can be deduced from (1) by omitting the elastic term associated with the horizontal connecting springs, so that we get

For the values of the parameters, we adopt the list shown in Table 1, as in [35].

To complete the list, we set \(\sigma =0.01\) according to [27] and arbitrarily choose \(\alpha =1\). We anticipate that the quantity \(\alpha\) plays a crucial role for the configurations involving a large amount of distinct blocks.

As initial conditions, we impose \(x(0)=0\) and \(\dot{x}(0)=0\). The evolution does not changes its qualitative behavior by assuming a more realistic small displacement at \(t=0\), and the only difference consists of the duration of the first stick period. When only one block is involved, indeed, the motion exhibits a periodic trend. Figures 4 and 5 show the results in the case of a single block.

Displacement for a single block. All the values used to perform the simulation are available in Table 1 (\(\alpha =1\))

We recognize the alternation between sticking and slipping periods from the qualitative behavior of the graph in Fig. 4: A steep trend characterizes the sliding motion, in opposition to the flat one produced when there is no motion.

When the block is sliding, its velocity achieves some pronounced peaks as shown in Fig. 5a. In Fig. 5b, a qualitative summary is provided by the phase portrait. Although this kind of system is very far from being an accurate representation for seismic events, because lots of other contributions would be required, on a geophysical level we could think about a slipping period as an earthquake and a sticking one as a charge cycle.

Velocity graph (left) and displacement/velocity plane (right) for a single block (for parameters values, see Table 1 with \(\alpha =1\))

Incidentally, let us observe that choosing a reference frame moving at the speed V of the part sliding on top, i.e. setting \(u=x-Vt\), we obtain the autonomous second-order differential equation

which can be, as usual, rephrased as a first-order system by setting

Then, the dynamics can be represented in the phase plane; see Fig. 6.

Phase–plane analysis in the plane (u, v) for system (8) with two different values of \(\alpha\) (\(\alpha =1\) red line , \(\alpha =4\) blue line)

In principle, similar graphs can be provided for any number of blocks being V constant, even if we chose not to use such representation.

Let us stress that, for the case of a single block, different types of models can be considered (among others, see [6] for singular perturbation analysis). In principle, such models are amenable of being extended to the multiblock case and we regard such topic as very riveting. Nevertheless, we decide to focus on the original Burridge–Knopoff model leaving such and other variations for future investigation.

3.3 More blocks

Next, we examine a model involving five masses with the same parameters listed in Table 1 and assuming random initial conditions random. The associated differential system is defined by using (1) and paying attention to include the boundary conditions. For instance, the equation for the first block becomes

In Fig. 7a, we plot the displacements of the blocks, while in Fig. 7b a zoom-in for these trajectories is provided. It is possible to recognize some great slipping phases in which all the blocks are involved; on the other hand, very small displacements happen in regions where the slope is flatter. The pronounced peaks correspond to the most powerful shocks allowed for such a configuration. Finally, looking at the zoom-in Fig. 7b, a sequence of smaller narrow events happens in the period preceding one of the large vertical peaks, interpreted here as foreshocks.

A system involving five blocks. The graph of the displacements as a function of time and its zoom-in of the rectangular region (for parameters values, see Table 1)

Although five blocks are not enough to exhibit satisfying dynamics, it has been helpful to investigate the results in a qualitative way. As can be observed, indeed, the behavior is more complex than in the case of a single block. To support this point of view, in Fig. 8 we take one block as sample and plot the velocity and the phase portrait (trends are very similar for the remaining blocks).

3.4 Many more blocks and the Gutenberg–Richter law

The next steps in our analysis are aimed at arguing the reliability of the Burridge–Knopoff model using the Gutenberg–Richter law (2). In order to achieve this purpose, we increase the number of blocks and consider a system with \(N=200\). In Table 2, the parameters used in this last part of Sect. 3 are listed.

We collect information about the seismic events generated by using the criterion described before to distinguish when an earthquake is occurring. As regards the magnitude, the relationship (3) provides a quantitative definition. First of all, to discuss the results, it is absolutely necessary focusing on the role of the parameter \(\alpha\) introduced by the friction law [10, 22, 27, 41]. As stated in Sect. 2, \(\alpha\) expresses the rate of slipping friction modifying the sliding velocity. As a result, if \(\alpha\) decreases, friction becomes more dissipative; on the contrary, larger values of \(\alpha\) mean less dissipation because the slipping friction decreases more quickly with sliding velocity. On a quantitative level, the value \(\alpha =1\) is an important threshold: The system cannot exhibit large earthquakes for \(\alpha <1\) and different behaviors are noticeable in the case \(\alpha \ge 1\). Indeed, by following [42], we introduce the earthquake distribution \(P=P(M)\), which is the ratio between the number of earthquakes greater than or equal to a given magnitude M, see (3), and the total number of events \(N_{T}\). Operatively we classify magnitudes by establishing the belonging to different ranges such as \([M, M+dM]\), where dM is fixed to be equal to 0.2. According to (2), we represent the distribution of earthquakes by the graph of the function \(M\mapsto \log _{10}[P(M)]\). In the first case considered, \(\alpha =1\) is employed and the result is shown in Fig. 9a.

Earthquakes distribution for a system with 200 blocks: graph of the function \(M\mapsto \log _{10}[P(M)]\) (parameters listed in Table 2)

By observing the graph, it is possible to recognize a behavior very close to a straight line in the central part (between \(M=-3.7\) and \(M=1.7\), approximately), which can be interpreted coherently with the Gutenberg–Richter law. By adopting the linear least squares method (see Fig. 9a), we estimate an exponent \(B\simeq 0.42\) for the power-law trend. We point out that the exponent derived from the simulations of the Burridge–Knopoff model cannot be directly matched to the b-value appearing in the Gutenberg–Richter law (2); indeed, a rescaling would be required in order to make a comparison with the real data [13, 19]. We can take as a reference the relationship \(b=\frac{3}{2}B\) described in [19]. Moreover, according to the results in [27], we notice that by varying the ratio between the stiffness of the springs, \(k_{c}/k_{p}\), usually called \(l^2\) in the literature, a different exponent B is computed: The lower the ratio, the higher the exponent, of course preserving the constraint \(k_{c}>k_{p}\). For instance, by imposing \(l^2=36\) we find \(B\simeq 0.45\) or choosing \(l^2=9\) we obtain \(B\simeq 0.54\). As regards the data providing the results plotted in Fig. 9, a ratio \(l^2=100\) can be deduced from Table 2.

All these considerations make the value of the parameter b ranging in the interval [0.63, 0.81], meaning a flatter slope for the graph in Fig. 9a, being even smaller than the empirical value \(b=1\) in (2). Finding a flatter slope is consistent with other observations available in the literature, as in [11, 27, 42]. At very small magnitude, we notice a steep linear segment in the graph, probably caused by the discreteness of the model [27, 42].

By increasing \(\alpha\), a different qualitative behavior is provided by simulations. For instance, we assume now \(\alpha =4\) (see Fig. 9b) in order to show the main differences by employing more values of \(\alpha\), in what follows. All the other parameters are the same as in the case \(\alpha =1\). In Fig. 9b, we recognize a deviation from the Gutenberg–Richter law at large magnitudes: It is noticeable a sort of peak structure; the trend close to a straight line persists instead in the middle–small range of magnitude, in agreement with the empirical expectation. Finally, at smallest magnitude, as in the case analyzed before, a steep linear segment is observable due to the discreteness of the model. All these qualitative behaviors are consistent with previous works, for example, [11, 27, 35, 42].

Next, we discuss the crucial role played by the parameter \(\alpha\). We have already observed that the lower the value of \(\alpha\) the higher the dissipation; correspondingly, in agreement with [10], we notice that in each earthquake the displacements become smaller. Figure 10 compare the results for \(\alpha =1\) and \(\alpha =4\), showing that the displacements are effectively smaller, confirming the findings in [35].

Next, let us investigate how the distribution of earthquakes changes when \(\alpha\) increases. In Fig. 11 some distributions are plotted by assuming \(\alpha \in \{1,1.5,2,3,4\}\). Hence, the peak structure persists when \(\alpha \not =1\) and, in the interval where the linear behavior agrees qualitatively with the Gutenberg–Richter law, the slope is steeper as \(\alpha\) increases.

Earthquakes distribution for a system with 200 blocks: graph of the function \(M\mapsto \log _{10}[P(M)]\) with \(\alpha \in \{1,1.5,2,3,4\}\). The other parameters used are listed in Table 2

To continue the comparison with previous results, let us recast the definition of in (3) by replacing the decimal logarithm M with the natural one \(M_1\), denoting the magnitude. If the quantity \(P(M_{1})\) is defined as for P(M), an qualitatively equivalent of Fig. 11 is obtainable, very similar to the correspondent graphs shown in [42]. Moreover, if we define by \(R(M_{1})\) the rate of seismic events with magnitudes equal to \(M_{1}\) (operatively in a range such as \([M_{1}, M_{1}+dM_{1}]\)) we achieve the results shown in Fig. 12.

With the aim of expressing the qualitative behavior affecting the dynamics when \(\alpha \not = 1\), in Fig. 12b we have chosen as example the value \(\alpha =2\). It is possible to explain the deviation from the Gutenberg–Richter law in terms of a peak structure by pointing out that, in such a regime, large events are too frequent, consistently with the conclusion in [10, 11, 27].

4 Wave speed estimate and almost convergence

In the previous sections, we have performed numerical simulations aimed at providing a comprehensive overview of the typical dynamics the Burridge–Knopoff model is ruled by, through an effective numerical method based on a Predictor–Corrector strategy. All these achievements define a helpful background to reach the core of our study: Specifically, we take advantage of the discrete structure of the model in order to get results related to the propagating fronts theory, on the ground of the proceedings described before as far as the numerical discretization.

By means of some simple qualitative considerations, it is possible to recognize that the assessment of mechanisms hypothetically attributable to the traveling fronts field, is something worth working on in seismology and related applied mathematics. So far, we have often pointed out how much the Burridge–Knopoff model behavior is bound to be influenced by phenomena of perturbations spreading, due to the intrinsic structure of the system: Indeed, the information about occurring events is conveyed by the connecting springs, allowing distant blocks slipping, within a chain reaction process in which each block can trigger its neighbors motion.

From a mathematical point of view, a suitable analysis is needed in order to prove that such qualitative and empirical features might be framed by a systematic theory based on traveling fronts. In this respect, a class of systems consisting of elastic media and characterized by friction laws allowing the typical stick–slip phenomena, has been proved to be suitable for producing traveling fronts as shocks. In [31], by using the Coulomb friction law and taking into account a continuum version of the model, propagating fronts are investigated, specifically, their existence is shown and corresponding wave speed computation is performed.

Here, we wish to realize something similar by employing a different approach: Basically, we are interested in detecting a specific wave speed, which means recognizing the propagating fronts phenomenon, only by making use of the discrete version of the model provided with the velocity-weakening friction investigated so far. As a consequence, a wave speed estimate is needed: Our choice falls on the formulation provided by LeVeque and Yee in [24], suitably modified and upgraded in order to get a version contextualized in this discrete field. The standard estimate, indeed, has been originally meant to be exploited within the PDE framework, being employed in the conservation laws field, and reads as

where \(c_{n}\) is the space-averaged wave speed estimate, at time \(t^{n}\), related to the traveling wave v approximated over a uniform spatiotemporal mesh (\(\Delta x\) and \(\Delta t\) are the fixed spatial and time steps, respectively), while \([v] {:}{=}v_{+}-v_{-}\) with \(v_\pm\) stationary states. The exploration of the LeVeque–Yee formula (9) has been explored in [23] in the context of hyperbolic reaction–diffusion equations, where its reliability has been proposed.

Recalling that h is the fixed time step and N the total number of blocks, our modified version of formula (9) arises from the requirement of dealing with a system of ODEs, thus implying some specific adjustments leading to

where \(z_{i}^{n}\) denotes the position of the ith block at time \(t^n\) and \([z]=z_{N}^{n}-z_{1}^{n}\) plays the role of the term including the stationary states in (9). As regards the quantity \(\Delta x_{i}^{n}\), we have:

The relation (10) is still a space-averaged wave speed estimate for the velocity c evaluated at time \(t^{n}\) where the multivalued function \(\Delta x_{i}^{n}\) measures the distance between adjacent blocks, replacing the spatial contribution \(\Delta x\) involved in (9).

As a preliminary test on the wave speed, we consider the simplest conceivable trend for the velocity, and we regard at c as a function of the discrete time \(t^{n}\). As regards the parameters, we adopt the same quantities listed in Table 2 with \(\alpha =1\), and we choose \([0,10^{5}]\) as time interval.

The evolution of the wave speed, computed by \(c^n\) given in (10), is shown in Fig. 13, exhibiting an evident oscillatory and non-convergent trend, similarly to the plot in Fig. 5a or in Fig. 8a. All these graphs provide information about the velocity trend; that is why getting profiles characterized by a collection of pronounced peaks (corresponding to seismic events) consistently with the heuristic expectation, regardless of the number of blocks involved. Such graphs are natural effect of the particular dynamics analyzed which is inherently discontinuous, as a consequence of being based on a stick–slip mechanism.

The detection of a specific wave speed is still open and further analysis is required. To this aim, let us define the average quantity

For each n, the relation (11) takes into account the arithmetic mean of the first n available wave speed estimates. In Fig. 14, we can see the results: A convergence towards an asymptotic threshold is now appreciable and the limit value numerically computed is \(L \simeq 1.04\cdot 10^{-3}\) (to be compared with \(V=1.00\cdot 10^{-3}\)).

Wave speed average from (11) as a function of the time \(t^{n}\) for a system involving 200 blocks. Right: zoom in \([0,9\cdot 10^4]\times [0.9\cdot 10^{-3},1.1\cdot 10^{-3}]\)

At this stage, from the mathematical point of view, a discussion is required: The wave speed provided by the limit L of the sequence \(\{\gamma _{n}\}\) is related to the averages convergence for the sequence \(\{c_{n}\}\); that is why we have numerically proved the summability in the sense of Cesàro. Specifically, leaning on the Cesàro means theorem [38], it is well known that if a sequence \(\{\beta _{n}\}\) is convergent to some limiting value b as \(n\rightarrow +\infty\), then the sequence of the averages \(\sigma _{n}:=\frac{1}{n}\sum _{i=1}^{n} \beta _{i}\) converges to b as \(n\rightarrow +\infty\). Thus, although we have computationally proved that a convergence for the sequence \(\{c_{n}\}\) is not recognizable (see Fig. 13), we can certainly state that, if a weaker convergence is verified, it has to happen towards the value L, i.e. the limit for the averages sequence \(\gamma _n\).

Motivated by this result, we want to show that there is numerical evidence of the so-called almost convergence (for details, see [4, 25]) for the sequence \(\{c_{n}\}\). To this purpose, we introduce a new average

which turns out to be equivalent to (11) if \(p=1\). By invoking the Lorentz Theorem [4, 25], stating that a bounded real sequence \(c_n\) is almost convergent to L if and only if

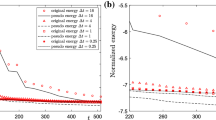

it is possible to provide numerical evidence of almost convergence analyzing the sequence of the averages \(\gamma _{p,n}\). Specifically, we test the almost convergence of the sequence \(\{c_{n}\}\) by computing the (p, n)-dependent mean for different choices of the parameter p, as a function of the discrete time \(t^{n}\). The results are depicted in Fig. 15 starting from the case \(p=1\). The parameters exploited to carry out the numerical test are listed in Table 3.

All the graphs converge towards an asymptotic threshold, whose value is \(L \simeq 1.04\cdot 10^{-3}\), namely the limit already recognized in Fig. 14, thus testifying the almost convergence for \(\{c_{n}\}\) to a value which is distinct from \(V=1\cdot 10^{-3}\) with a relative error equal to \((L-V)/V=4\cdot 10^{-2}\)

Wave speed averages from (12) for different values of the parameter p, as a function of the time \(t^{n}\); the system involves 200 blocks. Right: zoom in \([0,9\cdot 10^4]\times [0.9\cdot 10^{-3},2\cdot 10^{-3}]\)

Such an outcome issues an interesting interpretation in terms of real phenomena as well. Indeed, while the plot in Fig. 13, reproducing the wave speed trend, can be described as a collection of mutually independent events, the function involving the wave speed averages (12) and the related graphs in Fig. 15, imply a dependence from the past sequences. The latter interpretation is somehow more acceptable thinking about the mechanism of mutual induction affecting earthquakes along a fault and the reasonable influence that past earthquakes might have on future seismic events. Moreover, speaking about the traveling fronts theory, the numerical evidence of a limit for the wave speed averages paves the way to state the existence of propagating fronts starting from the specific discrete version of the Burridge–Knopoff model provided with the velocity-weakening friction. To our knowledge, such kind of result is not available at the present.

To conclude this section, we also performed simulations in order to investigate the almost convergence sensitivity of the wave speed relative to the numerical scheme. By considering increasing number of blocks, namely, \(N=512\) and \(N=1024\), we have checked the stability of the asymptotic propagation speed providing always the same value for the limit \(L\simeq 10^{-3}\) which suggests the independence on the number of blocks for N large.

5 Conclusions

In this paper, a systematic investigation of the Burridge–Knopoff model for earthquakes detection has been performed through several numerical simulations. The system is a spring–block model, characterized by a discontinuous right-hand side. Among the different versions for the friction law available in the literature, our choice fell on the one known as velocity-weakening form.

The analysis is carried out with a twofold aim. Firstly, by comparing with the numerical simulations performed by means of different schemes in other papers, we found an excellent (qualitative and quantitative) agreement applying for the first time the classical Predictor–Corrector strategy to the model. In order to provide a complete and accurate investigation of the main features of the model, numerical experiments have been performed starting from the simplest cases, allowing a deep understanding of the so-called stick–slip dynamics, basically the physical process the earthquakes mechanism is ruled by. Afterwards, by increasing the number of blocks, we explored more complex configurations: In particular, attention has been devoted to the Gutenberg–Richter statistical law (2), a standard benchmark in seismology.

Secondly, by taking advantage of the more realistic framework defined by consistently increasing the size of the system, we moved toward the exploration of propagating fronts giving numerical evidence of the almost convergence of wave speeds produced by the dynamics, properly computed through a suitable space-averaged estimate, known as LeVeque–Yee formula in its discrete version (10). The need for a weaker convergence has been justified showing that the standard sequence given by the wave speeds does not converge, as a natural consequence of the inherent discontinuities of the process. Instead, the result involving the convergence of the wave speed averages not only proves itself to be a perfect ground to test the upgraded version of the LeVeque–Yee formula, but also leads to an interesting phenomenological interpretation, in terms of reciprocal influence in time among seismic events. Furthermore, in regard to the traveling fronts theory, getting numerical evidence of a limit for the wave speed averages is a starting point to address the existence of propagating fronts by considering the discrete Burridge–Knopoff model with the velocity-weakening friction.

In terms of perspectives, many more issues are raised by the Burridge–Knopoff model. Future works might be conceived in order to understand completely the discrete model and its dynamics, looking for other evidences related to the presence of propagating fronts. The attempt to find such a correlation is expected to be fruitful and worth being deeply understood, since the model belongs to a class of systems which supports traveling waves. Additionally, a crucial one is to determine the origin of the external force driving the upper plate (at constant speed in the present version). Actually, the main external force causing such accumulation is currently matter of debate: Some scientists suggest this stress is a byproduct of internal mantle convection [13], others propose that the external gravitational contribution is crucial [14]. In this respect, a modified Burridge–Knopoff model is conceivable with periodic forcing with frequency/amplitude dictated by the principal cause of the motion. Modifying such frequency/amplitude parameters could, in principle, provide an indirect proof by comparing the result with real seismological data.

Finally, from the computational point of view, the possibility of improving the simulation effectiveness relying on the parallel computation paradigm is doubtless promising.

References

Acary V, Brogliato B (2008) Numerical methods for nonsmooth dynamical systems: applications in mechanics and electronics, vol 35. Springer Verlag, Berlin

Bak P, Tang C (1989) Earthquakes as a self-organized critical phenomenon. J Geophys Res 94(B11):15635–15637

Bak P, Tang C, Wiesenfeld K (1987) Self-organized criticality: an explanation of 1/f noise. Phys Rev Lett 59(4):381–384

Bennett G, Kalton NJ (1974) Consistency theorems for almost convergence. Trans Am Math Soc 198:23–43

Biswas S, Ray P, Chakrabarti BK (2013) Equivalence of the train model of earthquake and boundary driven Edwards–Wilkinson interface. Eur Phys J B 86:388–395

Bossolini E, Brøns M, Kristiansen KU (2017) Singular limit analysis of a model for earthquake faulting. Nonlinearity 30:2805–2834

Brace WF, Byerlee JD (1966) Stick–slip as a mechanism for earthquakes. Science 153(3739):990–992

Burridge R, Knopoff L (1967) Model and theoretical seismicity. Bull Seismol Soc Am 57(3):341–371

Carlson JM, Langer JS (1989) Properties of earthquakes generated by fault dynamics. Phys Rev Lett 62(22):2632–2635

Carlson JM, Langer JS (1989) Mechanical model of an earthquake fault. Phys Rev A 40(11):6470–6484

Carlson JM, Langer JS, Shaw BE, Tang C (1991) Intrinisc properties of a Burridge–Knopoff model of an earthquake fault. Phys Rev A 44(2):884–897

Comte JC, Tchofo Dinda P, Remoissenet M (2002) Discrete Burridge–Knopoff model, with exact solitonic or compactlike traveling wave solution. Phys Rev E 65:026615

de Arcangelis L, Godano C, Grasso JR, Lippiello E (2016) Statistical physics approach to earthquake occurrence and forecasting. Phys Rep 628:1–91

Doglioni C, Carminati E, Petricca P, Riguzzi F (2015) Normal fault earthquakes or graviquakes. Sci Rep 5:12110

Erickson B, Birnir B, Lavallée D (2008) A model for aperiodicity in earthquakes. Nonlinear Process Geophys 15:1–12

Erickson B, Birnir B, Lavallée D (2011) Periodicity, chaos and localization in a Burridge–Knopoff model of an earthquake with rate-and-state friction. Geophys J Int 187:178–198

Guglielmi N, Hairer E (2015) Classification of hidden dynamics in discontinuous dynamical systems. SIAM J Appl Dyn Syst 14(3):1454–1477

Gutenberg B, Richter CF (1956) Magnitude and energy of earthquakes. Ann Geophys 9(1):1–15

Kawamura H, Hatano T, Kato N, Biswas S, Chakrabarti BK (2012) Statistical physics of fracture, friction, and earthquakes. Rev Mod Phys 84(2):839–884

Kostic S, Vasović N, Franović I, Todorović K, Klinshov V, Nekorkin V (2017) Dynamics of fault motion in a stochastic spring-slider model with varying neighboring interaction and time-delayed coupling. Nonlinear Dyn. 87:2563–2575

Kunze M, Küpper T (1997) Qualitative bifurcation analysis of a non-smooth friction-oscillator model. Z Angew Math Phys 48(1):87–101

Langer JS, Carlson JM, Myers CR, Shaw BE (1996) Slip complexity in dynamic models of earthquake faults. Proc Natl Acad Sci USA 93:3825–3829

Lattanzio C, Mascia C, Plaza RG, Simeoni C (2016) Analytical and numerical investigation of traveling waves for the Allen–Cahn model with relaxation. Math Models Methods Appl Sci 26(5):931–985

LeVeque RJ, Yee HC (1990) A study of numerical methods for hyperbolic conservation laws with stiff source terms. J Comput Phys 86(1):187–210

Lorentz GG (1948) A contribution to the theory of divergent sequences. Acta Math 80(1):167–190

Morales JE, James G, Tonnelier A (2018) Solitary waves in the excitable Burridge–Knopoff model. Wave Motion 76:103–121

Mori T, Kawamura H (2006) Simulation study of the one-dimensional Burridge–Knopoff model of earthquakes. J Geophys Res 111:B07302

Mori T, Kawamura H (2008) Simulation study of earthquakes based on the two-dimensional Burridge–Knopoff model with long-range interactions. Phys Rev E 77:051123

Mori T, Kawamura H (2008) Simulation study of the two-dimensional Burridge–Knopoff model of earthquakes. J Geophys Res 113:B06301

Mori T, Kawamura H (2008) Spatiotemporal correlations of earthquakes in the continuum limit of the one-dimensional Burridge–Knopoff model. J Geophys Res 113:B11305

Muratov CB (1999) Traveling wave solutions in the Burridge–Knopoff model. Phys Rev E 59(4):3847–3857

Olami Z, Feder HJS, Christensen K (1992) Self-organized criticality in a continuous, nonconservative cellular automaton modeling earthquakes. Phys Rev Lett 68(8):1244–1248

Quarteroni A, Sacco R, Saleri F (2000) Numerical mathematics, vol 37. Springer Verlag, Berlin

Ruina A (1983) Slip instability and state variable friction laws. J Geophys Res 88(B12):10359–10370

Saito T, Matsukawa H (2007) Size dependence of the Burridge–Knopoff model. J Phys Conf Ser 89:012016

Scholz CH (1990) The Mechanics of earthquakes and faulting. Cambridge University Press, Cambridge

Scholz CH (1998) Earthquakes and friction laws. Nature 391:37–42

Shawyer BLR, Watson B (1994) Borel’s methods of summability: theory and applications. Oxford University Press, Oxford

Vasović N, Kostić S, Franović I, Todorović K (2016) Earthquake nucleation in a stochastic fault model of globally coupled units with interaction delays. Commun Nonlinear Sci Numer Simul 38:129–171

Winslow N (1997) Introduction to self-organized criticality & Earthquakes. Dept of Geological Sciences, University of Michigan. http://www2.econ.iastate.edu/classes/econ308/tesfatsion/SandpileCA.Winslow97.htm

Xia J, Gould H, Klein W, Rundle JB (2008) Near-mean-field behavior in the generalized Burridge–Knopoff earthquake model with variable-range stress transfer. Phys Rev E 77:031132

Xiong X, Kikuuwe R, Yamamoto M (2015) Implicit Euler simulation of one-dimensional Burridge–Knopoff model of earthquakes with set-valued friction laws. Adv Comput Math 41(6):1039–1057

Acknowledgements

We sincerely thank Dr. Xiaogang Xiong for the fruitful exchange of information on various topics related to the Burridge–Knopoff model; we also like to thank Dr. Chiara Simeoni for her suggestions about the possible developments related to the numerical issues. Last, but not least, we are grateful to the referees for their useful suggestions, which permitted us to produce a better version of the original manuscript.

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors do not have any conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mascia, C., Moschetta, P. Numerical evidences of almost convergence of wave speeds for the Burridge–Knopoff model. SN Appl. Sci. 2, 2053 (2020). https://doi.org/10.1007/s42452-020-03856-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-020-03856-y