Abstract

Good usability and user-friendliness of cyberinfrastructure tools and technologies are crucial for increasing their usage and broader adoption in scientific communities. However, there is limited empirical evidence on how to achieve this effectively in practice. This systematic literature review aims to uncover, analyze, and present how user experience (UX) evaluations have been applied in cyberinfrastructure for scientific research. The Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA) framework is applied to analyze 15 articles on UX studies in cyberinfrastructure, published between 2015 and 2022. Results show that UX studies of cyberinfrastructure use multiple evaluation methods, including interviews, think-aloud, and user surveys. However, these studies primarily focus on the technical effectiveness of the system. This review identified limitations in current evaluation methodologies, including a lack of agreement on what constitutes a UX evaluation process for cyberinfrastructure. One implication is that UX studies of cyberinfrastructure should report clear evidence of the user feedback collected during evaluations. This study highlights the necessity of addressing sociotechnical issues in cyberinfrastructure design by including the voices of all representative stakeholders and keeping the social context in the loop throughout the system development process.


Introduction

Scientific research, such as that conducted in healthcare and bioinformatics, is becoming increasingly cross-disciplinary and collaborative, requiring access to heterogeneous technologies and very large data streams distributed across multiple institutions and organizations. In many cases, the resources required for large-scale scientific research are geographically distributed and therefore difficult for researchers to access in real time. With the increasing complexity of scientific problems, the last two decades have seen a strong need to explore, invest in, and build large-scale distributed scientific collaborations, also known as ‘cyberinfrastructure,’ a vision led by the NSF blue-ribbon committee [2]. For example, the iPlant cyberinfrastructure was developed to support plant biology research [16].

Cyberinfrastructure is a “collaborative virtual organization infrastructure” [20, p. 19], which broadly refers to the assembly of high-speed computing systems, advanced instrumentation technologies, large-scale data storage, visualization systems, and skilled personnel that support scientific research collaborations through high-speed networks across distributed organizations [7, 8, 20, 31]. One part of this assembly is the technological infrastructure of high-performance data collection instruments, sensors, and imaging technologies; the other part is the social infrastructure of heterogeneous scientific disciplines connected through a massive integration of networking, computation, communication, and storage technologies [54]. The formal definition of cyberinfrastructure in the field of Computer Supported Cooperative Work (CSCW) states:

Cyberinfrastructure is a comprehensive technological and sociological solution to complex scientific problems, constituting a research ecosystem of computational systems, data and information management, advanced instruments, visualization environments, and human collaboration, all interconnected through software and advanced networks. Its purpose is to improve scholarly productivity and enable knowledge breakthroughs and discoveries not otherwise possible [62], [63]

The design of novel cyberinfrastructure is complex due to the interconnectedness of human, technical, and social components embedded within larger infrastructures [7, 27, 31]. This complexity is further exacerbated by the diverse range of technologies producing and using large, heterogeneous data streams, a constantly changing hardware and software environment, and varied stakeholder groups [8, 10, 46, 70]. These stakeholder groups include resource providers, computer scientists, domain scientists, and governing bodies, and they interact with the cyberinfrastructure at different levels: some interact with the system directly, while others may never interact with it directly but may be heavily invested in it [30]. Customizing cyberinfrastructure software to accommodate the diverse needs of multiple stakeholder groups poses a significant challenge [54]. Good usability and user-friendliness are crucial for increasing the usage and broader adoption of cyberinfrastructure tools and technologies in scientific communities [26, 59, 60, 68].

According to the international standard on interaction principles, ISO 9241-110:2020 [24], user experience (UX) focuses on user responses and behavior, while usability, as a subset of UX, measures effectiveness, efficiency, and user satisfaction in a specified context of use. Although the need for usability testing and UX evaluations in cyberinfrastructure for scientific research is well documented [30, 49, 50], empirical studies on building and implementing usable cyberinfrastructure are lacking. This gap is attributed to limitations in current evaluation methodologies for cyberinfrastructure designed for multidisciplinary scientific user groups and to a lack of engagement of scientific stakeholders in the system development process [30, 50]. Moreover, a lack of agreement on the UX evaluation process for cyberinfrastructure hinders theory development and the generation of evidence-based practices in the field.

To address the gap in understanding how to conduct UX and usability evaluations in cyberinfrastructure, a systematic literature review is conducted using the PRISMA framework [33] to uncover, analyze, and present trends and issues in UX studies of cyberinfrastructure. The limitations of current UX evaluation methodologies applied to cyberinfrastructure are discussed. The resulting understanding is intended to inform cyberinfrastructure research on how UX evaluations can be integrated into future cyberinfrastructure development.

Background

This section identifies the gaps in research on UX studies of cyberinfrastructure and, motivated by these gaps, describes the central research questions and goal of this paper.

Research on cyberinfrastructure demonstrates the use of cutting-edge and emerging technologies, such as machine learning and artificial intelligence, to develop cyberinfrastructure that facilitates scientific discovery and innovation [22]. One instance involves employing artificial intelligence or machine learning models to help scientific stakeholders make well-informed decisions about cyberinfrastructure resource allocation [42]. Several cyberinfrastructure studies have presented frameworks and systems, such as a cloud-based cyberinfrastructure for managing various types of sensor measurement data [25], for facilitating scientific assessments of river basins [65], and for enabling quick virus detection [36]. From a UX perspective, however, these studies are limited: none of them examines whether the systems are understandable to their intended audience.

A major concern with these rigorous computer science studies is their emphasis on demonstrating cyberinfrastructure usage through correct technical scenarios rather than on designing systems that are inherently interpretable by humans. This approach is commonly known in the scientific community as the black box approach [56], in which the inner workings or processes of a system or model are not transparent or interpretable by humans. In other words, while humans can observe the input and output of the system, they cannot comprehend how the system or model arrived at its predictions or decisions [35, 56].

From a usability standpoint, it is essential to have usable and user-friendly cyberinfrastructure systems that enable users to easily access scientific data, produce accurate scientific visualizations, and derive meaningful insights from the visualized data [10, 26, 46, 60, 68]. Without such systems, users cannot accurately interpret or make sense of complex computational environments, which can lead to poor decisions [57, 60].

A key aspect of cyberinfrastructure is its design maturity, which refers to its current stage of development. High design maturity means that the cyberinfrastructure is fully operational, while low design maturity suggests that it is in an early stage of development [70]. Current research on cyberinfrastructure has focused primarily on conceptual design and development [1, 34, 40]. As cyberinfrastructure development progresses from conceptual demonstrations to fully functional software, the need for user-friendly interfaces for cyberinfrastructure software becomes apparent [34, 40, 46, 60, 68]. The user-facing aspects of this scientific software face usability issues. Further, tailoring the scientific interfaces of cyberinfrastructure software to the specific needs of the science community can present communication, coordination, and knowledge exchange challenges [54, 64]. It is important to note that the scientific interfaces of cyberinfrastructure software differ from conventional bidirectional user interfaces [30, 45, 49, 50]. In this context, traditional usability testing may not suffice for evaluating these scientific interfaces [10, 30, 38]. Scientific software is far more complex than simple simulation software: it is used to run algorithms; gather, store, analyze, and distribute outcomes (from simulations, experiments, or observations); and facilitate various aspects of collaborative scientific endeavors [49].

Research suggests that effective stakeholder engagement is essential for creating functional and sustainable cyberinfrastructure within its organizational context [5, 8, 52]. However, it remains unclear whether the findings on stakeholder engagement can be extrapolated to all types of cyberinfrastructure, as the diverse backgrounds and affiliations of scientific stakeholders can make it difficult to engage them at the required level [5, 27, 52].

Successful development of cyberinfrastructure must consider the interests of all stakeholder groups and their technological, social, and organizational characteristics [6, 40]. There is a need for more intentional, user-friendly design of cyberinfrastructure to ensure its effectiveness. To address this gap, this systematic literature review uncovers, analyzes, and presents trends and issues in the application of UX studies in cyberinfrastructure development. The following research questions guided the study:

RQ1: How do cyberinfrastructure designers report the objectives of their user experience studies?

RQ2: To what extent do user experience studies of cyberinfrastructure reflect the various aspects of usability, namely: (a) the kind of infrastructure, (b) the context, (c) the usability testing approach, and (d) sensitivity to the target audience?

RQ3.a: What are the research outcomes of the user experience studies of cyberinfrastructure?

RQ3.b: What are the challenges identified in applying these user experience studies?

Methodology

To address the research aims, this study follows the Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA) method, which comprises a 27-item checklist and four phases [33]. The Population, phenomenon of Interest, Context (PICo) approach described by Stern et al. [61] guides the review’s focus; PICo is a mnemonic adapted for framing questions in qualitative reviews. In this systematic review, the Population refers to cyberinfrastructure researchers, the phenomenon of Interest to the UX design and research process of developing usable and user-friendly cyberinfrastructure, and the Context to cyberinfrastructure created for scientific research [61].

The study’s design is based on the systematic review process of Liberati et al. [33] and was developed in collaboration between two researchers: the author and a Human-Centered Design (HCD) expert (Footnote 1), who was also an associate professor of Information Science and Learning Technologies and Director of the UX lab at the institute. The following sections describe the search strategy, article selection criteria and procedure, data extraction, and analysis.

Eligibility criteria

Scholarly articles published between 2015 and 2022 were selected because this period reflects a more mature design of cyberinfrastructure, ready to be accepted and used by stakeholders and end-users. In addition, an increased emphasis on developing usable and sustainable cyberinfrastructure has made the application of UX studies in building and maintaining cyberinfrastructure increasingly important [52]. Table 1 shows the inclusion and exclusion criteria used to assess the eligibility of the articles.

Data sources

All searches were conducted in November 2022. A range of online databases, journals, and conference proceedings was selected for this study, guided by library recommendations for the engineering and computer science fields. The databases searched included ACM Digital Library (ACM DL), IEEE Xplore, Scopus, Web of Science, ScienceDirect, and Wiley Interscience. To ensure a thorough literature search, an additional search was conducted via Google Scholar [18]. Although commonly used for searching academic literature, Google Scholar lacks several search features necessary for creating customized queries, so it was employed only as a secondary search tool in this study. This search focused on article titles and primarily considered the first 200 to 300 results [18]. Google Scholar results were sorted by relevance and limited to publications from 2015 to the date of the search (i.e., November 2022).

Search strategy and reliability of search results

The search strategy was developed through an iterative process. Initial searches were conducted, and the results were examined to understand the content returned by the search. The search strategy was refined and executed multiple times to improve the results. Ultimately, two key search terms, ‘cyberinfrastructure’ and ‘user experience,’ were finalized for the study. Synonyms and alternate terms were identified, and a list of search terms was prepared.

The final list of search terms was reviewed by the HCD expert to ensure that it correctly captured the research context of applying UX studies in creating cyberinfrastructure. The HCD expert and the author of this paper showed high agreement during the review of the search terms. Search terms such as experiential, product experience, useful, and emotion were removed to align with the inclusion criteria of the systematic review. For example, experiential was removed because it retrieved studies related to curriculum design, teaching, and game-based studies, which were not a focus of this literature review. Table 2 presents an example query string with the final search terms and Boolean operators.
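Table 2 is not reproduced in this section. As a hedged illustration of how a two-concept Boolean query of this kind is commonly assembled, the sketch below ORs the synonyms within each concept, ANDs the concepts together, and drops the four terms the review reports removing. Only ‘cyberinfrastructure,’ ‘user experience,’ and the removed terms come from the text; the other synonyms and the helper name `build_query` are hypothetical, and each database would wrap a generic string like this in its own field syntax.

```python
# Terms the review reports removing because they retrieved off-topic studies.
REMOVED_TERMS = {"experiential", "product experience", "useful", "emotion"}


def build_query(concept_groups, removed=REMOVED_TERMS):
    """OR the synonyms within each concept group, then AND the groups together."""
    clauses = []
    for terms in concept_groups:
        kept = [t for t in terms if t.lower() not in removed]
        if not kept:
            raise ValueError("every term in a concept group was removed")
        # Quote each term so multi-word phrases are searched as phrases.
        clauses.append("(" + " OR ".join(f'"{t}"' for t in kept) + ")")
    return " AND ".join(clauses)


# Hypothetical candidate lists: "cyber-infrastructure" and "usability" are
# illustrative synonyms; "experiential" and "emotion" show the removal filter.
ci_terms = ["cyberinfrastructure", "cyber-infrastructure"]
ux_terms = ["user experience", "usability", "experiential", "emotion"]

query = build_query([ci_terms, ux_terms])
print(query)
```

Filtering removed terms in code, rather than editing each list by hand, keeps the exclusion decision in one place when the query is rerun against several databases.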

To check the reliability of the search results, a trained graduate student repeated the database search a week after the initial search, applying the same search strategy, keywords, and filters to confirm the number of records returned. No difference was found in the number of records returned. The search filters applied across the selected databases remained the same: a year range of 2015–2022, English language, and conference papers and journal articles as article types.
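The filters and the repeat-search check described above can be expressed as a small sketch, assuming each exported record carries a year, a language, and an article type. The `Record` fields, helper names, and sample records are hypothetical and are not the review’s data.

```python
from dataclasses import dataclass

# Filters reported in the review: 2015-2022, English, journal and conference articles.
YEAR_RANGE = (2015, 2022)
LANGUAGE = "English"
ALLOWED_TYPES = {"journal", "conference"}


@dataclass
class Record:
    title: str
    year: int
    language: str
    article_type: str  # hypothetical field, e.g. "journal", "conference", "book"


def passes_filters(record: Record) -> bool:
    start, end = YEAR_RANGE
    return (
        start <= record.year <= end
        and record.language == LANGUAGE
        and record.article_type in ALLOWED_TYPES
    )


def apply_filters(records):
    return [r for r in records if passes_filters(r)]


def counts_match(first_run, second_run) -> bool:
    """Reliability check: a repeated search should return the same number of filtered records."""
    return len(apply_filters(first_run)) == len(apply_filters(second_run))


# Hypothetical export from one database.
sample = [
    Record("A", 2016, "English", "journal"),
    Record("B", 2014, "English", "journal"),    # outside the year range
    Record("C", 2020, "English", "book"),       # excluded article type
    Record("D", 2022, "German", "conference"),  # excluded language
    Record("E", 2019, "English", "conference"),
]
kept = apply_filters(sample)
```

Comparing only counts, as the reliability check describes, would not catch two runs that return different records of the same size; comparing sets of record identifiers would be a stricter variant.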

Article selection procedure and quality assessment

The article selection process consisted of the following five steps:

- Step 1: Identification – An initial search was conducted, and all results were imported into Microsoft Excel.
- Step 2: Screening – Duplicates were identified using automated searches in Excel and manual review, and then removed.
- Step 3: Eligibility – The remaining articles were assessed for eligibility by reviewing their titles and abstracts against the inclusion and exclusion criteria.
- Step 4: Full-text review – The full texts of the remaining records were reviewed, their quality was assessed, and the inclusion and exclusion criteria were applied.
- Step 5: Selected – Final result selection.
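The review detected duplicates with automated searches in Excel followed by manual review. A comparable automated pass is sketched below in Python as an assumption, not as the author’s procedure: two records are treated as duplicates when their DOIs match or their titles match after lowercasing and stripping punctuation. The record fields and sample entries are hypothetical, and near-duplicates (for example, a conference paper and its later journal version) would still need manual review.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and whitespace to single spaces."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def dedupe(records):
    """Split records into (unique, duplicates), matching on DOI or normalized title."""
    seen = set()
    unique, duplicates = [], []
    for rec in records:
        keys = {("title", normalize_title(rec["title"]))}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        if keys & seen:
            duplicates.append(rec)  # flagged for removal, as in Step 2
        else:
            unique.append(rec)
            seen |= keys
    return unique, duplicates


# Hypothetical exports from four databases.
records = [
    {"title": "UX of a Science Gateway", "doi": "10.1000/xyz1", "source": "Scopus"},
    {"title": "UX of a science gateway.", "doi": "", "source": "IEEE Xplore"},
    {"title": "A Differently Formatted Title", "doi": "10.1000/XYZ1", "source": "ACM DL"},
    {"title": "Another Study", "source": "Web of Science"},
]
unique, duplicates = dedupe(records)
```

Keeping the first occurrence means the order in which database exports are concatenated decides which copy survives; that choice matters only if the copies carry different metadata.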

Based on the retrieved results, the author conducted an additional forward and backward search [32]. The references of the final selected articles were also searched to include any articles missed in the database searches [37, 69]. The same inclusion and exclusion criteria were applied to the articles that appeared eligible for consideration.
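One round of forward and backward searching can be sketched as follows, assuming a citation map is available for each selected article. Backward search follows an article’s references, forward search follows the articles that cite it, and every new candidate is then checked against the same eligibility criteria. The article identifiers, citation maps, and the `is_eligible` predicate are hypothetical placeholders.

```python
def snowball_once(selected, references, cited_by, is_eligible):
    """One round of backward (references) and forward (citing articles) search."""
    candidates = set()
    for article in selected:
        candidates.update(references.get(article, []))  # backward search
        candidates.update(cited_by.get(article, []))    # forward search
    # Drop articles already selected, then apply the same inclusion/exclusion criteria.
    return sorted(c for c in candidates - set(selected) if is_eligible(c))


# Hypothetical citation data for two selected articles, "A" and "B".
references = {"A": ["X", "Y"], "B": ["Y", "A"]}
cited_by = {"A": ["Z"], "B": []}

# Hypothetical criterion: article "Y" fails the inclusion criteria.
new_articles = snowball_once(["A", "B"], references, cited_by, lambda c: c != "Y")
```

Repeating the function on its own output until no new articles appear would turn this single round into iterative snowballing.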

For the quality assessment in Step 4, the articles had to provide sufficient details of the empirical UX or usability study design and analysis, such as the objectives of the UX study in each article, UX study design, participant demographics, and UX study outcomes [3].

Data items

Following Liberati et al.’s [33] guidelines for systematic review, the following data items were extracted from the final corpus of 15 articles to answer the research questions:

- RQ1. How do cyberinfrastructure designers report the objectives of their user experience studies?
  - Source of publication: An APA reference was generated for each article.
  - Objectives of UX study: The overarching objective(s) reported for applying UX studies in cyberinfrastructure.
- RQ2. To what extent do user experience studies of cyberinfrastructure reflect the various aspects of usability?
  - Description of the cyberinfrastructure: A description of the cyberinfrastructure studied in the article, including the integrated technologies (hardware and software) and the expected user interaction.
  - The context of cyberinfrastructure: A description of the context of use of the cyberinfrastructure (e.g., environmental, geographical, educational).
  - Usability testing approach and measures: A description of the system evaluation setting and the usability measures applied (e.g., observations, interviews, think-aloud).
  - Target audience: A summary of users’ demographics, stakeholders, or participants.
- RQ3.a. What are the research outcomes of the user experience studies of cyberinfrastructure?
  - Research outcomes: A summary of the research outcome(s) of the usability/UX study or the user feedback reported in the articles.
- RQ3.b. What are the challenges identified in applying these user experience studies?
  - Challenges: A summary of the challenges identified in applying UX studies in the cyberinfrastructure.

Data synthesis and analysis

The final 15 selected articles were uploaded to ATLAS.ti (Footnote 2), a qualitative data analysis and research software package. ATLAS.ti was used to analyze the articles, extract the data, and categorize the findings. The full text of each article was read several times to understand the context of the cyberinfrastructure, the usability methods and evaluations, and the overall structure of the article. Each article was analyzed using an inductive approach, i.e., data-driven coding and extraction based on the eight identified data items (Sect. 3.5), moving from general to more specific levels [17]. The Results section summarizes the findings of the selected articles.

Results

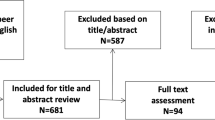

There were four main steps in article selection: Identification, Screening, Eligibility, and Inclusion (Fig. 1). In the identification phase, a keyword search of the selected databases returned 540 papers. After the 540 results were imported into Microsoft Excel, duplicates were identified using automated searches in Excel and manual review. After 194 duplicates were removed, the remaining 346 articles were screened for eligibility by reviewing their titles and abstracts against the inclusion and exclusion criteria. This step excluded 287 articles, leaving 59 articles that were judged relevant. In the eligibility phase, the full texts of these 59 articles were assessed for quality, and the inclusion and exclusion criteria were applied again. Of the 59, 21 articles met the inclusion criteria and were selected for full qualitative review. During data extraction and analysis, a further six articles were removed following quality assessment, leaving a final corpus of 15 articles.
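The counts reported in the selection process form a chain of subtractions that can be checked mechanically. The sketch below recomputes each stage from the reported removals; the class and field names are illustrative, and the 38 full-text exclusions are not stated directly but derived as 59 - 21.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    identified: int
    duplicates_removed: int
    excluded_title_abstract: int
    excluded_full_text: int
    removed_quality: int

    def stages(self) -> dict:
        """Derive the number of records remaining after each selection stage."""
        screened = self.identified - self.duplicates_removed
        full_text = screened - self.excluded_title_abstract
        qualitative = full_text - self.excluded_full_text
        included = qualitative - self.removed_quality
        counts = {
            "screened": screened,
            "full_text_assessed": full_text,
            "qualitative_review": qualitative,
            "included": included,
        }
        if any(n < 0 for n in counts.values()):
            raise ValueError("more records removed than remained at some stage")
        return counts


# Counts from the text; 38 full-text exclusions is derived as 59 - 21.
flow = PrismaFlow(540, 194, 287, 38, 6)
print(flow.stages())
# {'screened': 346, 'full_text_assessed': 59, 'qualitative_review': 21, 'included': 15}
```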

The final 15 articles were categorized according to the research purpose(s) reported for studying the UX of cyberinfrastructure. These categories are shown in Table 3. See Appendix A for the complete bibliography and Appendices B and C for an overview of the publication sources of the 15 articles.

RQ1: How do cyberinfrastructure designers report the objectives of their user experience studies?

The articles were categorized according to the research purpose(s) reported for studying the UX of cyberinfrastructure; some articles reported more than one purpose. Table 3 gives an overview of the inductive categories of objectives of UX studies reported across the 15 articles. Four articles focused on improving the overall UX of the cyberinfrastructure (e.g., [12, 68]; see the complete bibliography in Appendix A). Three articles conducted a usage study to explore ways of enhancing stakeholder engagement for the sustainability of the cyberinfrastructure (e.g., [52, 53, 68]). Four articles aimed to ease access to scientific data (e.g., [39, 60]). Three articles focused on improving social and technical interaction to enhance scientific collaboration and resource management [29, 48, 51]. Three articles aimed to enable informed decision-making [48, 60, 65]. Two articles focused on providing easy-to-use cloud services, one for educational settings [14] and one for multiple distributed scientific communities [38]. One article explored the effectiveness of user support for cyberinfrastructure tools [11].

RQ2: To what extent do user experience studies of cyberinfrastructure reflect the various aspects of usability, namely: (a) the kind of infrastructure, (b) the context, (c) the usability testing approach, and (d) sensitivity to the target audience?

Examination of the final 15 articles identified 12 distinct cyberinfrastructure projects (Table 4). The articles associated with these 12 projects were reviewed in detail, and data were extracted along four dimensions: (a) the kind of infrastructure, (b) the context, (c) the usability testing approach, and (d) sensitivity to the target audience (Table 4).

The kind of infrastructure?

Analysis of the articles associated with the 12 cyberinfrastructure projects found that four projects were reported as fully functional open infrastructures (i.e., Apache Airavata, GENI, ESGF, TeraGrid). Of these four, Apache Airavata, GENI, and TeraGrid were funded by the National Science Foundation (NSF; Footnote 3), a United States federal government agency supporting science and engineering research and education. ESGF was reported as an open-source platform sponsored by multiple countries and regions (i.e., the United States, Europe, Australia). Three projects were reported as fully functional national-scale infrastructures (i.e., DataONE, Jetstream, OOI), of which DataONE and Jetstream aimed to serve multiple scientific disciplines. ArcheoGIS, OnBoard, and Basin Futures were also reported as fully functional infrastructures, each created for a specific scientific discipline. KBCommons was reported as a prototype bioinformatics tool within a campus cyberinfrastructure, and SWATShare was reported as a conceptual model for hydrology science.

Five cyberinfrastructure projects discussed either the integration of the developed cyberinfrastructure with a larger cyberinfrastructure or its use for resource allocation. Examples include TeraGrid and XSEDE, which deliver research services to academic institutions and non-profit research organizations ([29]; see Appendix A for the full bibliography), and the Global Environment for Network Innovations (GENI), which consists of four cyberinfrastructure systems [52, 53]. Jetstream [14] and SWATShare [48] also used existing cyberinfrastructure for resource allocation. OnBoard used a larger cyberinfrastructure of sensors installed in every operating room to manage surgical workflow [51].

Four cyberinfrastructure projects reported that their design was based on a modeling component. For example, Basin Futures included two modeling components, a Rainfall-Runoff and a Reach Model Engine, for water management [65]. ArcheoGIS was based on a Geographic Information Systems (GIS) model to expand the analytical capabilities of virtual globes [13]. KBCommons [60] and SWATShare [48] were based on the OnTimeURB and SWAT models, respectively.

The context of the cyberinfrastructure?

Analysis of the articles associated with the 12 distinct cyberinfrastructure projects found that seven projects clearly reported their context of use, such as biology science (KBCommons), surgery (OnBoard, part of a larger cyberinfrastructure), archaeology (ArcheoGIS), and ocean science (OOI) (Table 4). The other five projects (DataONE, Jetstream, Apache Airavata, TeraGrid, and GENI) did not clearly report their context of use but instead implied that the cyberinfrastructure supported multiple scientific endeavors. Given such varied descriptions of usage, it was difficult to identify the user communities and stakeholder groups interested in each cyberinfrastructure unless these were clearly reported in the article.

Of the 11 identified contexts of use, three projects (four articles) were designed for educational settings: Jetstream [14], GENI [52, 53], and TeraGrid and XSEDE [29]. Three projects (three articles) focused on domain-specific research in academic institutions: KBCommons for biology science [60], OOI for ocean science [39], and SWATShare for hydrology science [48].

The remaining five projects (seven articles) reported the context of use for domain specialists in general. Examples include the Earth System Grid Federation (ESGF) for climate scientists (e.g., [9]), OnBoard for surgical workflow management [51], Basin Futures for water professionals [65], and the 4D Archaeological Geographic Information System (ArcheoGIS) for archaeology experts [13].

What kinds of usability testing approaches?

Across the 15 articles and 12 cyberinfrastructure projects, the usability testing approaches, methods, and measures varied, and the description of usability testing methodologies was inconsistent. Of the 15 articles, 11 used multiple methods to examine the usage of the cyberinfrastructure (e.g., [60]; see Appendix A for the full bibliography), six reported using surveys (e.g., [14]), and one used case studies to assess the usage of the system (i.e., [48]). A total of eight articles reported a single measurement to assess the usability of the system, such as a survey or interviews (e.g., [11, 14, 52]). While some studies used standardized surveys, such as the System Usability Scale (SUS) [60, 68], others used open-ended surveys [14, 39]. Except for ArcheoGIS [13], DataONE [68], and KBCommons [60], no study reported assessing any UX element (e.g., effectiveness, efficiency, user satisfaction) while evaluating the usability of the system. These three studies conducted usage testing of prototypes, giving participants tasks and encouraging them to think aloud concurrently.
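The reviewed articles that used the System Usability Scale do not describe their scoring procedure in this section. For reference, the sketch below applies the standard SUS scoring rule: ten items rated from 1 to 5, where odd-numbered items contribute (response - 1), even-numbered items contribute (5 - response), and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name and the sample responses are hypothetical and are not data from any reviewed study.

```python
from statistics import mean


def sus_score(responses):
    """Standard SUS score (0-100) for one participant's ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded; even items are negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical responses from three participants.
participants = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
scores = [sus_score(p) for p in participants]
study_mean = mean(scores)
print(scores, study_mean)  # [75.0, 100.0, 50.0] 75.0
```

Averaging the per-participant scores gives the study-level SUS score; individual item responses should not be averaged before the odd/even adjustment.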

Eight articles did not report specific details about the usability instruments used (e.g., the type of survey or questionnaire, or the number of tasks) or how they measured the effectiveness of the cyberinfrastructure system through usability tests. Interviews were the most commonly used method (nine articles) for examining the usage of the cyberinfrastructure, followed by observations (seven articles) and surveys (six articles). Think-aloud was employed alongside usability tests in four studies (see Table 4). Except for KBCommons [60], no study reported the results of the quantitative data collected through surveys, which suggests a reliance on non-standardized instruments for collecting usability data. Studies that used qualitative measures, such as think-aloud, interviews, or observations, did not report details of the data analysis process or any thematic categories of user feedback, which suggests a gap in the understanding and execution of qualitative data analysis in the context of cyberinfrastructure.

For what kinds of target audience?

The UX studies of cyberinfrastructure targeted domain experts, scientists, and educators, but participant demographic details were reported inconsistently. UX evaluations are most effective when all representative stakeholder groups with a direct or indirect stake in creating the cyberinfrastructure participate equally. Analysis of the 15 articles revealed that, except for KBCommons [60] and ArcheoGIS [13], no study provided demographic information about the recruited participants (e.g., age, sex ratio, or highest education). KBCommons [60] conducted usability studies with 40 participants and ArcheoGIS [13] with eight; both documented participants’ age, gender, and highest education. In the remaining articles, the most commonly reported participant information was their role or research domain (e.g., students, software developers, project PIs). Most articles reported conducting usage tests with domain experts and educators from various fields, such as water professionals, archaeology professionals, climate scientists, bioinformatics experts, oceanography educators, and surgical staff (Table 4). Although students were observed in classroom settings, the articles mentioned only the domains of the educators and the classes they taught.

RQ3.a: What are the research outcomes of the user experience studies of cyberinfrastructure?

The majority of the selected articles did not explicitly report UX outcomes but implied that the studied system was usable. When outcomes were reported, they were often closely tied to the system’s design, limiting their generalizability to other contexts. This made it difficult to synthesize and summarize the available information on UX and usability in cyberinfrastructure (Table 5).

It is possible to get a sense of the broad descriptions and implications of the UX in the selected cyberinfrastructure literature. For example, several usability studies reported improved access to the scientific data and information in cyberinfrastructure [13, 39, 51, 60, 68]. Specifics of how this access is achieved are reported in a highly contextualized manner detailing the system’s technical design, such as the connection established between ArcheoGIS and the geo-database using PostGIS [13]. Similarly, Singh et al. [60] reported improved access to the biology data through prescriptive systems utilizing the CyVerse computational technology. The usability studies highlighted the significant role of accurate data analysis and visualization for effective decision-making [48, 60, 65], thereby suggesting a need for user-friendly systems that can be easily comprehended by all user groups. Additionally, the survey data collected to test the usability of the ESGF cyberinfrastructure reported that the lack of an effective user support system negatively impacted the overall UX of the system [9, 11, 12].

RQ3.b: What are the challenges identified in applying these user experience studies?

The 15 articles were examined for challenges reported in applying UX studies across the 12 distinct cyberinfrastructure projects (Table 5). However, the reporting of challenges was not consistent. While five projects did not report any challenges in applying UX to cyberinfrastructure development (i.e., [13, 29, 48, 51, 65]), the remaining projects presented UX challenges in a highly contextualized manner. For example, the UX studies of the ESGF cyberinfrastructure identified a need for regular UX evaluations of the user support system, using interviews, user satisfaction surveys, or questionnaires, to enhance user satisfaction [9, 11, 12]. However, most of the selected cyberinfrastructure projects did not report having a dedicated user support system. It therefore remains unclear whether findings about UX challenges within a specific cyberinfrastructure project can be extrapolated to other projects. Overall, all the cyberinfrastructure studies emphasized the importance of effective stakeholder engagement for increasing system usage and adoption [14, 38, 39, 52, 53]. However, in the absence of detailed demographic information on the usability testing participants, it remained unclear whether the UX studies included all representative stakeholder groups. Of the 12 cyberinfrastructure projects, five explicitly reported benefits of applying UX evaluations in cyberinfrastructure design and development (e.g., [51, 60, 68]). For instance, Volentine et al. [68] reported improved system usability, increased stakeholder engagement, and enhanced trust among working members through iterative UX evaluations.

A significant challenge identified was the lack of a sociotechnical design framework for sustained usage over time (e.g., [29, 53, 60]). These studies took a sociotechnical lens to cyberinfrastructure design and advocated employing a sociotechnical design framework for usable and sustainable systems. For example, Singh et al. [60] specifically highlighted the need for a sociotechnical design process to address users' difficulties in comprehending how the system functions and to enable users to engage effectively with complex cyberinfrastructure systems. Similarly, Randall et al. [53] pointed to the need to create a sociotechnical environment for the sustained usage of cyberinfrastructure.

Discussion

The main contributions of this systematic review can be summarized as follows. Firstly, analyzing the 15 articles in the field of UX studies of cyberinfrastructure for scientific research reveals publication trends, objectives of the UX studies, and the context of the cyberinfrastructure developed. Secondly, the analysis demonstrates the extent to which the usability aspects are addressed in these studies by presenting the type of infrastructure, its context, target audience, and the usability testing approach. It also highlights the research outcomes and challenges of UX studies in cyberinfrastructure for scientific research. Thirdly, this review reflects on the limitations of these UX studies and provides implications for future design and development of cyberinfrastructure for scientific research.

Discussion by research questions

RQ1: How do cyberinfrastructure designers report the objectives of their user experience studies?

All cyberinfrastructure projects clearly reported their UX objectives, such as developing user-friendly tools, improving service delivery, or providing easy access to scientific data (Table 3). However, a closer look revealed that most studies (n = 7) did not report any usability goal (effectiveness, efficiency, user satisfaction) or UX metric (performance, issue, behavioral) that they aimed to assess in pursuit of building usable software (Tables 4 and 5) [23, 43]. For example, Apache Airavata [38] and Basin Futures [65] reported their UX objective as building an easy-to-use platform for scientific research but relied on single measures, interviews and surveys, respectively, which alone do not constitute a usability testing approach or a UX evaluation process [66]. Several other studies (n = 5) (e.g., [29, 38, 65]) did not report any user feedback against their UX objectives.
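The usability goals named above can be made measurable. The following is a minimal sketch, assuming a task-based usability test in which each participant attempts each task and the evaluator records success and time on task. Effectiveness is computed as the task completion rate, and efficiency as time-based efficiency (the mean of success divided by time, in goals per second), which is one way to operationalize the ISO 9241-11 notions of effectiveness and efficiency. The `TaskAttempt` record, task names, and numbers are hypothetical and not drawn from any reviewed study.

```python
from dataclasses import dataclass


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record format)."""
    participant: str
    task: str
    completed: bool  # did the participant finish the task successfully?
    seconds: float   # time spent on the task

    def __post_init__(self) -> None:
        # Time-based efficiency divides by time, so it must be positive.
        if self.seconds <= 0:
            raise ValueError("time on task must be positive")


def completion_rate(attempts: list[TaskAttempt]) -> float:
    """Effectiveness: share of attempts completed successfully (0.0 to 1.0)."""
    if not attempts:
        raise ValueError("no attempts recorded")
    return sum(a.completed for a in attempts) / len(attempts)


def time_based_efficiency(attempts: list[TaskAttempt]) -> float:
    """Efficiency: mean of (success / time) over all attempts, in goals per second."""
    if not attempts:
        raise ValueError("no attempts recorded")
    per_attempt = [(1.0 if a.completed else 0.0) / a.seconds for a in attempts]
    return sum(per_attempt) / len(per_attempt)


if __name__ == "__main__":
    # Two hypothetical participants, each attempting the same two tasks.
    attempts = [
        TaskAttempt("P1", "download dataset", True, 60.0),
        TaskAttempt("P2", "download dataset", False, 120.0),
        TaskAttempt("P1", "run model", True, 30.0),
        TaskAttempt("P2", "run model", True, 40.0),
    ]
    print(f"completion rate: {completion_rate(attempts):.2f}")
    print(f"time-based efficiency: {time_based_efficiency(attempts):.4f} goals/s")
```

Keeping the per-attempt records, rather than only the aggregate figures, also makes it possible to report which tasks failed, which is the kind of user-level evidence the reviewed studies rarely presented.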

From a usability standpoint, scientific research objectives such as informed decision-making or access to scientific data should be assessed based on user feedback [66]. For a successful system and comprehensive UX measurement, it is essential to collect user feedback through multiple methods and at different points during system development [66]. UX evaluation and usability testing use several measures, including task-based performance, heuristic evaluation, observation, and think-aloud, to build a usable system [43]. Systematic reviews of UX design in other fields report varied UX evaluation approaches that align with the objectives of the studies reviewed. For example, Kim et al. [28] reviewed UX studies of Virtual Reality (VR) systems and outlined the UX evaluation methods (i.e., task-based usability tests and user satisfaction surveys) used to assess the user environment and user activity in VR systems. Similarly, Pellas et al. [47] reviewed UX studies of game-based interventions in 3D virtual environments and reported the use of mixed-method approaches (e.g., surveys, tests, observations, interviews, student work) for UX evaluation and the development of usable, user-friendly VR environments.

These findings highlight the complexity and variability of cyberinfrastructure design and, consistent with reviews of UX design in other fields (e.g., [28, 47]), point to the need for a specific set of UX elements that can assess the UX of cyberinfrastructure for scientific research. Future researchers are encouraged to reflect on, and explicitly establish, the connection between the scientific objective of a cyberinfrastructure and the chosen UX evaluation methods.

RQ2: To what extent do user experience studies of cyberinfrastructure reflect the various aspects of usability, namely: (a) the kind of infrastructure, (b) the context, (c) the usability testing approach, and (d) sensitivity to the target audience?

Results for the second research question indicate that the reporting of the infrastructure and its use for scientific research is highly contextual. Most studies (n = 7) did not establish a connection between the infrastructure, its use, and its usability, nor did they reflect on how the technology design influenced its usability or vice versa. As a result, the usability testing approach these studies used to gauge usability goals (i.e., effectiveness, efficiency, and user satisfaction) and overall UX objectives remained unclear. For instance, the SWATShare study [48] observed classrooms to evaluate the accuracy of the water assessments performed with the cyberinfrastructure tool but did not include any usability element (e.g., time taken to complete tasks, system errors) or corresponding UX metric (e.g., learnability, user satisfaction) to support its claims about the tool's usability. One possible reason is that cyberinfrastructure comprises a diverse range of technologies, heterogeneous data streams, and varied stakeholder groups, which can be challenging to design for [8, 10, 46, 70]. However, this gap is particularly problematic for researchers trying to identify the UX design characteristics, including learnability, efficiency, errors, and satisfaction, of cyberinfrastructure in similar contexts.

Studies on KBCommons [60] and DataONE [68] employed a range of UX evaluation methods, including eye-tracking, observations, task-based usability tests, and user satisfaction surveys such as the System Usability Scale. These two studies documented both the UX methods used to conduct the usability studies and the user feedback gathered through them. A prevalent observation in the remaining studies was the absence of detailed information about usability testing procedures, including the observation protocols, interview questions, and survey questionnaires used to assess the system. This makes it difficult to grasp the theoretical and practical strategies cyberinfrastructure designers employed in creating a user-friendly system, and it raises concerns about whether the evaluation methods they used genuinely reflect UX measures.
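For readers unfamiliar with the System Usability Scale, a minimal scoring sketch follows, assuming the standard ten-item SUS questionnaire answered on a 1 to 5 agreement scale. Odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the summed contributions are multiplied by 2.5 to yield a score from 0 to 100. The function names and sample responses are illustrative only.

```python
def sus_score(responses: list[int]) -> float:
    """Return the 0-100 SUS score for one participant's ten 1-5 Likert responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded: contribution = response - 1.
        # Even items are negatively worded: contribution = 5 - response.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    # The summed contributions lie in 0..40; scaling by 2.5 maps them onto 0..100.
    return total * 2.5


def mean_sus(all_responses: list[list[int]]) -> float:
    """Average SUS score across participants."""
    if not all_responses:
        raise ValueError("no participants")
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Three hypothetical participants' answers to items 1-10, in order.
    responses = [
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    ]
    for i, r in enumerate(responses, start=1):
        print(f"participant {i}: SUS = {sus_score(r):.1f}")  # 100.0, 50.0, 75.0
    print(f"mean SUS = {mean_sus(responses):.1f}")  # 75.0
```

Reporting per-participant scores alongside the mean lets readers see the spread behind an aggregate usability claim.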

Similarly, participant characteristics were missing from 70% of the reviewed articles, and the distinction between representative stakeholders and end-users was not clearly made. Reporting users' demographic information and engaging diverse stakeholder groups in UX research is recommended [66]. This is especially important when recruiting educators, scientists, and students as participants to represent the diverse stakeholders for whom the cyberinfrastructure is designed. Details of participants' characteristics help explain how the cyberinfrastructure is used in varied contexts and can provide valuable insights into the usability needs and preferences of target stakeholder and end-user groups [28].
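As an illustration of what consistent demographic reporting might look like, the sketch below tabulates participant characteristics field by field. The `Participant` fields (stakeholder group, age band, gender, highest education) are hypothetical choices modeled on the attributes mentioned above, not a schema prescribed by [66] or used by any reviewed study.

```python
from collections import Counter
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Participant:
    """Hypothetical minimal demographic record for one usability-test participant."""
    stakeholder_group: str  # e.g. domain scientist, educator, student, developer
    age_band: str           # banded rather than exact age to protect anonymity
    gender: str
    highest_education: str


def demographic_summary(participants: list[Participant]) -> dict[str, Counter]:
    """Count participants per category for every demographic field."""
    return {
        f.name: Counter(getattr(p, f.name) for p in participants)
        for f in fields(Participant)
    }


if __name__ == "__main__":
    sample = [
        Participant("domain scientist", "30-39", "female", "PhD"),
        Participant("educator", "40-49", "male", "Master's"),
        Participant("educator", "30-39", "female", "PhD"),
        Participant("student", "18-29", "male", "Bachelor's"),
    ]
    for field_name, counts in demographic_summary(sample).items():
        # most_common() orders categories from most to least frequent.
        print(field_name, dict(counts.most_common()))
```

A per-field table of this kind makes it visible at a glance which stakeholder groups were, and were not, represented in an evaluation.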

In light of these insights, researchers and practitioners planning UX studies of cyberinfrastructure can use these findings as a resource for addressing key aspects of usability studies in their own projects, while contributing to the establishment of UX design characteristics of cyberinfrastructure (Table 6).

RQ3.a: What are the research outcomes of the user experience studies of cyberinfrastructure?

Among the reviewed studies, the majority (n = 8) documented findings from usage and usability testing conducted to evaluate the system. For example, the study on ArcheoGIS [13] reported enhanced service delivery as an outcome of usage testing. However, two critical issues emerge from this body of work. First, it remains unclear how these studies arrived at their claims of developing a usable tool because the evaluation methods employed in these studies do not align with the established usability testing methods or UX evaluation metrics. Second, these studies primarily focus on assessing the system’s effectiveness (tasks it can perform), rather than assessing the users’ experience, engagement, and satisfaction with the system. This finding highlights a noticeable disconnect between the intended UX outcomes and the actual design of the cyberinfrastructure for scientific research.

It is crucial to recognize that a research outcome goes beyond an improved usability score. Usage studies of cyberinfrastructure such as GENI [52, 53] and KBCommons [60] produced research outcomes that highlight the need for a sociotechnical framework to create cyberinfrastructure tools and technologies that are both usable and sustainable. The current literature on UX studies of cyberinfrastructure needs a more consistent approach to reporting research outcomes from UX evaluations of cyberinfrastructure tools and technologies. To advance the state of UX studies in cyberinfrastructure design and development, two key issues must therefore be addressed: first, standardizing evaluation methods; and second, broadening the focus from evaluating a system's effectiveness alone to also include efficiency, learnability, user satisfaction, and engagement.

RQ3.b: What are the challenges identified in applying these user experience studies?

This section discusses the challenges identified through the findings of this systematic review. First, these UX studies reveal the absence of an established framework for designing usable cyberinfrastructure. Regarding UX outcomes, some studies consider usability and user satisfaction of prime importance (e.g., [14]), others emphasize a need for stakeholder outreach and engagement for cyberinfrastructure sustainability (e.g., [52]), and a handful show the need for a robust user support system (e.g., [9]). However, there is a disconnect between these UX outcomes and the evaluation methods used to arrive at them. Future research should go beyond assessing the effectiveness of cyberinfrastructure and include evaluations of efficiency, learnability, error prevention, and recovery [66].

Second, the reviewed articles reported usage studies with limited stakeholder groups, suggesting missed opportunities for broader user involvement and increased system usage (e.g., some studies examined UX with only three or four domain experts). This suggests that cyberinfrastructure designers are not taking full advantage of evaluating systems with broader stakeholder and end-user groups (e.g., students, researchers, practitioners). It also raises questions about the effectiveness of the UX evaluation processes conducted by cyberinfrastructure designers. Several of the selected studies do not present any operational definition of usability in the context of the cyberinfrastructure studied and report the successful completion of tasks in a highly contextual manner. It appears that designers of cyberinfrastructure often cite the benefits of usability testing and UX evaluations but do not evaluate their cyberinfrastructure projects to their full potential.

Third, some UX studies presented results from a one-time evaluation of the system without actively involving end-users. These studies relied primarily on the successful execution of technical ‘use cases’ rather than on user feedback, which hinders evidence-based discussion of usability and of the benefits of the UX studies (e.g., [48, 65]). ‘Use cases’ are prevalent in the requirements analysis of engineering systems design, where they help establish the context of the system and serve as a starting point for building a model, followed by a low- or high-fidelity user interface prototype [58]. Usability testing comes much later, as a phase that validates user requirements from the users' perspective. Substituting the execution of technical use cases for usability testing is problematic because the usability of cyberinfrastructure, how it can be used, and its benefits cannot be discussed without clear evidence of users' perspectives.

Finally, the review indicates that the most commonly identified need for designing usable and sustainable cyberinfrastructure was a sociotechnical design framework that considers both the social and technological aspects of cyberinfrastructure development. Sociotechnical studies of cyberinfrastructure outline issues concerning its sustainability over long periods [7]. As a sociotechnical system, cyberinfrastructure is embedded in a pre-existing technological network, organizational arrangements, and social relations; it therefore needs to evolve continually, address changing user needs, and realign the relationships among stakeholders/users, technologies, and organizations [6, 7]. A sociotechnical design framework applies sociotechnical lenses to develop a comprehensible technological design that accounts for the social and organizational context in which the cyberinfrastructure will be used and in which the stakeholders will operate. Without sociotechnical design, complex data-intensive computational systems such as cyberinfrastructure are considered ‘noisy black boxes’ whose workings users cannot comprehend [19, 56, 67].

The analysis of the articles found that no study implemented or focused on a sociotechnical cyberinfrastructure design; instead, development focused primarily on technical innovation. This finding empirically supports what others have suggested: considering both the social and technical aspects of cyberinfrastructure development can enrich explanations of how cyberinfrastructure can be sustained over the long term (e.g., [52, 55]). At the same time, a lack of social and technical considerations can hamper wider adoption of cyberinfrastructure in the scientific community [26, 27].

Implications for practice and future research

This review points to two significant gaps in the literature. First, most UX studies of cyberinfrastructure focused primarily on technological innovation and on proving the system's effectiveness for technical activities, but did not report investigating users' experiences with the system or identifying potential usability issues (e.g., [29, 38, 65]). Future research should therefore evaluate efficiency, learnability, error prevention, and recovery in addition to effectiveness [66]. The reviewed articles aimed to improve access to scientific data, enable informed decisions, and enhance service delivery to researchers, objectives whose achievement depends on how users actually experience the system. Because cyberinfrastructure for scientific research is inherently specialized and designed for a diverse user community, future research must identify the targeted disciplines and sub-disciplines of users to assess potential usability issues before delivering the system (Table 7).

Second, the systematic review reveals the complexity of designing socially innovative [21] cyberinfrastructure. Socially innovative design means developing meaningful ideas and solutions for sustainable design in response to social needs [21, 41]. While user-centered design approaches and prototyping have been found useful for social innovation, research indicates that scaling up social innovations requires close collaboration with the other disciplines involved in system development [21, 41]. Although the articles reported user-centered perspectives and prototyping design approaches, none reported a socially innovative cyberinfrastructure design. Designing usable cyberinfrastructure is a complex and difficult task that poses many challenges for practitioners. Given the diversity of scientific data and the range of stakeholders involved, practitioners struggle to find a comprehensive UX evaluation framework suitable for diverse users. Usability evidence is often inadequate, relying on small case studies or use cases rather than solid evidence or comparative studies. In this context, Frauenberger [15] argues that designing socially innovative cyberinfrastructure is complex because of the entanglement of technological, social, and human interaction. The social and organizational contexts of stakeholders and end-users are essential factors that must be considered during cyberinfrastructure design and development [15].

Limitations of the study

Some limitations should be considered when interpreting the findings and implications of this systematic review. Data were extracted by a single researcher, which may have introduced errors; this is a common issue in large-scale qualitative literature reviews and can arise from the complexity of the data [4]. To mitigate this limitation, multiple publications on the same cyberinfrastructure project were included to understand each project's context fully. In some cases, the reported information was ambiguous or incomplete, necessitating data triangulation across multiple studies. Some studies used the terms collaboratories, e-infrastructure, e-Science, and science gateway to discuss cyberinfrastructure, which made it difficult to identify unique cyberinfrastructure studies. These terms are sometimes described as equivalent to cyberinfrastructure, but this is not entirely accurate: e-Science tends to refer more to cyber-enabled science and somewhat less to the underlying infrastructure [8, 44, 62], whereas collaboratories are more about large-scale distributed scientific collaborations [20]. In some instances, multiple studies presented data on tools and technologies that were part of a larger cyberinfrastructure without explicitly describing the connections between them. This review documented 15 cyberinfrastructure studies that showed evidence of usage evaluation, which is too small a number to support generalized conclusions. However, the small number of studies itself underscores a significant deficiency in UX integration within cyberinfrastructure and indicates an urgent need to establish consensus on UX and usability evaluation methods for cyberinfrastructure for scientific research. This review adhered to a specific definition of cyberinfrastructure [62]; future studies may consider screening the literature using related terminology for cyberinfrastructure.

Conclusion

In conclusion, the review of the selected UX studies of cyberinfrastructure reveals a need for established frameworks for designing usable systems and a lack of reported user feedback in several studies. Developing usable cyberinfrastructure is fraught with challenges, and the impact of usability and UX evaluation approaches on cyberinfrastructure usage remains to be determined. Significant uncertainty remains about how cyberinfrastructure should be developed to address the social and technical needs of stakeholders and end-users. Three of the 15 articles suggested a need for a sociotechnical design framework for cyberinfrastructure research, yet none implemented one; this lack of examples makes it hard for future researchers to follow a sociotechnical design process for cyberinfrastructure. This review highlights the necessity of paying attention to the social context of cyberinfrastructure during its design and development. Future research must focus on understanding which technologies work for which user groups and organizations, in which contexts, and for what purposes, and must keep the social context (i.e., organizational practices) in the loop so that cyberinfrastructure functions reliably.

Appendix A

Bibliography of the final qualified 15 articles in American Psychological Association (APA) reference style.

| Citation | APA Reference of the article |
|---|---|
| Chunpir et al. (2016) | Chunpir, H. I., Curri, E., Zaina, L., & Ludwig, T. (2016). Improving User Interfaces for a Request Tracking System: Best Practical RT. In HIMI 2016: Human Interface and the Management of Information: Applications and Services, 9735 (pp. 391–401). Springer, Cham. |
| Chunpir et al. (2015) | Chunpir, H. I., Rathmann, T., & Ludwig, T. (2015). The need for a tool to support users of e-Science infrastructures in a virtual laboratory environment. Procedia Manufacturing, 3, 3375–3382. |
| Chunpir et al. (2017) | Chunpir, H. I., Williams, D., & Ludwig, T. (2017). User Experience (UX) of a Big Data Infrastructure. In HIMI 2017: Human Interface and the Management of Information: Supporting Learning, Decision-Making and Collaboration, 10274 (pp. 467–474). Springer, Cham. |
| De Roo et al. (2016) | De Roo, B., Lonneville, B., Bourgeois, J., & De Maeyer, P. (2016). From Virtual Globes to ArcheoGIS: Determining the Technical and Practical Feasibilities. Photogrammetric Engineering & Remote Sensing, 82(9), 677–685. |
| Fischer et al. (2017) | Fischer, J., Hancock, D. Y., Lowe, J. M., Turner, G., Snapp-Childs, W., & Stewart, C. A. (2017, October). Jetstream: A cloud system enabling learning in higher education communities. In Proceedings of the 2017 ACM SIGUCCS Annual Conference (pp. 67–72). ACM. |
| Knepper & Chen (2016) | Knepper, R., & Chen, Y. C. (2016, June). Situating Cyberinfrastructure in the Public Realm: The TeraGrid and XSEDE Projects. In dg.o ’16: Proceedings of the 17th International Digital Government Research Conference on Digital Government Research (pp. 129–135). ACM. |
| Marru et al. (2021) | Marru, S., Kuruvilla, T., Abeysinghe, E., McMullen, D., Pierce, M., Morgan, D. G., Tait, S. L., & Innes, R. W. (2021, May). User-Centric Design and Evolvable Architecture for Science Gateways: A Case Study. In 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid) (pp. 267–276). IEEE. |
| McDonnell et al. (2018) | McDonnell, J., Decharon, A., Lichtenwalner, C. S., Hunter-Thomson, K., Halversen, C., Schofield, O., Glenn, S., Ferraro, C., Lauter, C., & Hewlett, J. (2018). Education and public engagement in OOI: Lessons learned from the field. Oceanography, 31(1), 138–146. |
| Rajib et al. (2016) | Rajib, M. A., Merwade, V., Kim, I. L., Zhao, L., Song, C., & Zhe, S. (2016). SWATShare–A web platform for collaborative research and education through online sharing, simulation and visualization of SWAT models. Environmental Modelling & Software, 75, 498–512. |
| Rambourg et al. (2018) | Rambourg, J., Gaspard-Boulinc, H., Conversy, S., & Garbey, M. (2018, November). Welcome OnBoard: An Interactive Large Surface Designed for Teamwork and Flexibility in Surgical Flow Management. In Proceedings of the 2018 ACM International Conference on Interactive Surfaces and Spaces (pp. 5–17). ACM. |
| Randall et al. (2015) | Randall, D. P., Diamant, E. I., & Lee, C. P. (2015, April). Creating sustainable cyberinfrastructure. In CHI ’15: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 1759–1768). ACM. |
| Randall et al. (2018) | Randall, D. P., Paine, D., & Lee, C. P. (2018, October). Educational outreach & stakeholder role evolution in a cyberinfrastructure project. In 2018 IEEE 14th International Conference on e-Science (e-Science) (pp. 201–211). IEEE. |
| Singh et al. (2020) | Singh, K., Li, S., Jahnke, I., Pandey, A., Lyu, Z., Joshi, T., & Calyam, P. (2020, December). A Formative Usability Study to Improve Prescriptive Systems for Bioinformatics Big Data. In 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (pp. 735–742). IEEE. |
| Taylor et al. (2021) | Taylor, P., Rahman, J., O’Sullivan, J., Podger, G., Rosello, C., Parashar, A., Sengupta, A., Perraud, J. M., Pollino, C., & Coombe, M. (2021). Basin futures, a novel cloud-based system for preliminary river basin modelling and planning. Environmental Modelling & Software, 141, 105049 (pp. 1–20). |
| Volentine et al. (2021) | Volentine, R., Specht, A., Allard, S., Frame, M., Hu, R., & Zolly, L. (2021). Accessibility of environmental data for sharing: The role of UX in large cyberinfrastructure projects. Ecological Informatics, 63, 101317 (pp. 1–8). |

Appendix B

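Overview of publication sources and impact of the 15 final qualifying articles (f = number of articles per topic; IF = impact factor; SJR = SCImago Journal Rank; GS = Google Scholar).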

| Journals (n = 6) | f | Article | IF | H-Index | SJR | GS Citations | Year |
|---|---|---|---|---|---|---|---|
| Topic of Environment | 4 | | | | | | |
| Environmental Modelling & Software | | Taylor et al. (2021) | 5.5 | 146 | 1.43 | 3 | 2021 |
| Environmental Modelling & Software | | Rajib et al. (2016) | 5.5 | 146 | 1.43 | 72 | 2016 |
| Oceanography | | McDonnell et al. (2018) | 3.4 | 95 | 1.03 | 6 | 2018 |
| Ecological Informatics | | Volentine et al. (2021) | 4.5 | 60 | 0.87 | 1 | 2021 |
| Topic of Geography | 2 | | | | | | |
| Procedia Manufacturing | | Chunpir et al. (2015) | - | 55 | 0.504 | 13 | 2015 |
| Photogrammetric Engineering and Remote Sensing | | De Roo et al. (2016) | 1.083 | 132 | 0.48 | 3 | 2016 |
| Conference Proceedings (n = 9) | | | | | | | |
| Topic of Engineering | 4 | | | | | | |
| IEEE International Conference on Bioinformatics and Biomedicine (BIBM) | | Singh et al. (2020) | - | 8 | - | 2 | 2020 |
| IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid) | | Marru et al. (2021) | - | 7 | - | - | 2021 |
| 2018 IEEE 14th International Conference on e-Science (e-Science) | | Randall et al. (2018) | - | 7 | - | - | 2018 |
| CHI ’15: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems | | Randall et al. (2015) | - | 202 | 0.54 | 19 | 2015 |
| Topic of Technology in Higher Education | 1 | | | | | | |
| ACM SIGUCCS Annual Conference | | Fischer et al. (2017) | - | 180 | 2.51 | 8 | 2017 |
| Topic of Human Computer Interaction | 3 | | | | | | |
| Human Interface and the Management of Information: Supporting Learning, Decision-Making and Collaboration | | Chunpir et al. (2017) | - | - | - | 4 | 2017 |
| Human Interface and the Management of Information: Applications and Services | | Chunpir et al. (2016) | - | - | - | 4 | 2016 |
| ACM International Conference on Interactive Surfaces and Spaces | | Rambourg et al. (2018) | - | 4 | 0 | 4 | 2018 |
| Topic of Government Research | 1 | | | | | | |
| dg.o ’16: Proceedings of the 17th International Digital Government Research Conference on Digital Government Research | | Knepper & Chen (2016) | - | - | - | 2 | 2016 |

Appendix C

Overview of the number of articles (journal and conference) published per year from 2015 to 2022. The subplot shows the geographical coverage of the number of articles published per country. Note: USA = United States of America.

Data availability and materials

The anonymized datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Notes

HCD Expert: Dr. Isa Jahnke’s Profile (LinkedIn; Founding VP at Technische Universität Nürnberg).

ATLAS.ti: https://atlasti.com.

Dr. Isa Jahnke: LinkedIn Profile; Technische Universität Nürnberg Profile.

Dr. Prasad Calyam: LinkedIn Profile; University of Missouri-Columbia Profile.

Hillary Gould: Profile and Homepage.

References

Alarcon ML, Nguyen M, Debroy S, Bhamidipati NR, Calyam P, Mosa A (2021) Trust Model for Efficient Honest Broker based Healthcare Data Access and Processing. In: 2021 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), IEEE, pp 201–206

Atkins DE, Droegemeier KK, Feldman SI, Garcia-Molina H, Klein ML, Messerschmitt DG et al (2003) Revolutionizing Science and Engineering Through Cyberinfrastructure: Report of the National Science Foundation Blue-Ribbon Advisory Panel on Cyberinfrastructure, National Science Foundation. https://www.nsf.gov/cise/sci/reports/atkins.pdf Accessed 24 Jan 2024

Bano M, Zowghi D, Kearney M, Schuck S, Aubusson P (2018) Mobile learning for science and mathematics school education: a systematic review of empirical evidence. Comput Educ 121:30–58

Belur J, Tompson L, Thornton A, Simon M (2021) Interrater Reliability in systematic review methodology: exploring variation in Coder decision-making. Sociol Methods Res 50(2):837–865

Berente N, Howison J, King JL, Ahalt S, Winter S (2018) Organizing and the Cyberinfrastructure Workforce. https://papers.ssrn.com/abstract=3260715. Accessed 24 Jan 2024

Bietz MJ, Baumer EPS, Lee CP (2010) Synergizing in Cyberinfrastructure Development. Comput Supported Coop Work 19(3):245–281

Bietz MJ, Ferro T, Lee CP (2012) Sustaining the development of cyberinfrastructure: an organization adapting to change. In: Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work, Seattle Washington USA, ACM, pp 901–910

Bietz MJ, Paine D, Lee CP (2013) The work of developing cyberinfrastructure middleware projects. In: Proceedings of the 2013 conference on Computer supported cooperative work, San Antonio Texas USA, ACM, pp 1527–1538

Chunpir HI, Curri E, Zaina L, Ludwig T (2016) Improving user interfaces for a request Tracking System: best practical RT. In: Yamamoto S (ed) Human interface and the management of information: applications and services. Springer International Publishing, Cham, pp 391–401

Chunpir HI, Ludwig T, Badewi A (2014) A Snap-Shot of User Support Services In Earth System Grid Federation (ESGF): A Use Case Of Climate Cyber-Infrastructures, pp 166–713

Chunpir HI, Rathmann T, Ludwig T (2015) The need for a Tool to support users of e-Science infrastructures in a virtual Laboratory Environment. Procedia Manuf 3:3375–3382

Chunpir HI, Williams D, Ludwig T (2017) User experience (UX) of a Big Data infrastructure. In: Yamamoto S (ed) Human interface and the management of information: supporting Learning, decision-making and collaboration. Springer International Publishing, pp 467–474

De Roo B, Lonneville B, Bourgeois J, De Maeyer P (2016) From virtual globes to ArcheoGIS: determining the Technical and practical feasibilities. Photogrammetric Eng Remote Sens 82(9):677–685

Fischer J, Hancock DY, Lowe JM, Turner G, Snapp-Childs W, Stewart CA (2017) Jetstream: A Cloud System Enabling Learning in Higher Education Communities. In: Proceedings of the 2017 ACM SIGUCCS Annual Conference, Seattle Washington USA: ACM, pp 67–72

Frauenberger C (2019) Entanglement HCI the Next Wave? ACM Trans Comput-Hum Interact 27(1):2:1–2

Goff SA, Vaughn M, McKay S, Lyons E, Stapleton AE, Gessler D, Matasci N, Wang L, Hanlon M, Lenards A, Muir A (2011) The iPlant collaborative: cyberinfrastructure for plant biology. Front Plant Sci 2:1–16

Graneheim UH, Lindgren BM, Lundman B (2017) Methodological challenges in qualitative content analysis: a discussion paper. Nurse Educ Today 56:29–34

Haddaway NR, Collins AM, Coughlin D, Kirk S (2015) The role of Google Scholar in evidence reviews and its applicability to grey literature searching. PLoS ONE 10(9):1–17

Herrmann T, Pfeiffer S (2023) Keeping the organization in the loop: a socio-technical extension of human-centered artificial intelligence. AI Soc 38(4):1523–1542

Hey T, Trefethen A (2008) E-science, cyber-infrastructure, and scholarly communication. In: Olson GM, Zimmerman A, Bos N (eds) Scientific collaboration on the Internet, The MIT Press, pp 15–31

Hillgren PA, Seravalli A, Emilson A (2011) Prototyping and infrastructuring in design for social innovation. CoDesign 7(3–4):169–183

Huerta EA, Khan A, Davis E, Bushell C, Gropp WD, Katz DS et al (2020) Convergence of artificial intelligence and high performance computing on NSF-supported cyberinfrastructure. J Big Data 7(88):1–12

Interaction Design Foundation (2016) What is Usability - The Ultimate Guide. https://www.interaction-design.org/literature/topics/usability Accessed 24 Jan 2024

International Organization for Standardization (2020) ISO 9241-110:2020(en), Ergonomics of human-system interaction, Part 110: Interaction principles. https://www.iso.org/obp/ui/#iso:std:iso:9241:-110:ed-2:v1:en. Accessed 24 Jan 2024

Jeong S, Hou R, Lynch JP, Sohn H, Law KH (2019) A scalable cloud-based cyberinfrastructure platform for bridge monitoring. Struct Infrastruct Eng 15(1):82–102

Kee KF, McCain JC (2018) What is Good Feedback in Big Data Projects for Cyberinfrastructure Diffusion in e-Science? In: 2018 IEEE International Conference on Big Data (Big Data), IEEE, pp 2804–12

Kee KF, Schrock AR (2019) Best social and organizational practices of successful science gateways and cyberinfrastructure projects. Future Generation Comput Syst 94:795–801

Kim YM, Rhiu I, Yun MH (2020) A systematic review of a virtual reality system from the perspective of user experience. Int J Human–Computer Interact 36(10):893–910

Knepper R, Chen YC (2016) Situating Cyberinfrastructure in the Public Realm: The TeraGrid and XSEDE Projects. In: Proceedings of the 17th International Digital Government Research Conference on Digital Government Research, ACM, pp 129–35

Lee CP, Bietz MJ, Thayer A (2010) Research-driven stakeholders in cyberinfrastructure use and development. In: 2010 International Symposium on Collaborative Technologies and Systems, pp 163–72

Lee CP, Dourish P, Mark G (2006) The human infrastructure of cyberinfrastructure. In: Proceedings of the 2006 20th anniversary conference on Computer supported cooperative work, Banff Alberta Canada: ACM, pp 483–92

Levy Y, Ellis TJ (2006) A systems approach to conduct an effective literature review in support of information systems research. Informing Sci 9:182–212

Liberati A (2009) The PRISMA Statement for reporting systematic reviews and Meta-analyses of studies that evaluate Health Care interventions: explanation and elaboration. Ann Intern Med 151(4):1–34

Lougee B, Robinson M, Stackpole C, Watson M, Woodward G (2020) Bear: cyberinfrastructure for Long-Tail Researchers at the Federal Reserve Bank of Kansas City. In: Practice and experience in Advanced Research Computing, Portland OR USA: ACM, pp 49–55

Loyola-Gonzalez O (2019) Black-box vs. white-box: understanding their advantages and weaknesses from a practical point of view. IEEE Access 7:154096–154113

Maghded HS, Ghafoor KZ, Sadiq AS, Curran K, Rawat DB, Rabie K (2020) A novel AI-enabled framework to diagnose coronavirus COVID-19 using smartphone embedded sensors: design study. In: 2020 IEEE 21st International Conference on Information Reuse and Integration for Data Science (IRI), IEEE, pp 180–187

Manganello J, Blake N (2010) A Study of Quantitative Content Analysis of Health Messages in U.S. Media from 1985 to 2005. Health Commun 25(5):387–396

Marru S, Kuruvilla T, Abeysinghe E, McMullen D, Pierce M, Morgan DG et al (2021) User-Centric Design and Evolvable Architecture for Science Gateways: A Case Study. In: 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid), IEEE, pp 267–76

McDonnell J, deCharon A, Lichtenwalner CS, Hunter-Thomson K, Halversen C, Schofield O et al (2018) Education and public engagement in OOI: lessons learned from the field. Oceanography 31(1):138–146

Michener WK, Allard S, Budden A, Cook RB, Douglass K, Frame M et al (2012) Participatory design of DataONE—Enabling cyberinfrastructure for the biological and environmental sciences. Ecol Inf 11:5–15

Murray R, Caulier-Grice J, Mulgan G (2010) The Open Book of Social Innovation. Young Foundation, Nesta, London

Nasari A, Le H, Lawrence R, He Z, Yang X, Krell M et al (2022) Benchmarking the performance of accelerators on National Cyberinfrastructure resources for Artificial Intelligence / Machine Learning workloads. In: Practice and experience in Advanced Research Computing, Boston MA USA: ACM, pp 1–9

Nur AI, Santoso HB, Hadi PPO (2021) The Method and Metric of User Experience Evaluation: A Systematic Literature Review. In: 2021 10th International Conference on Software and Computer Applications, Kuala Lumpur Malaysia: ACM, pp 307–17

Olson JS, Hofer EC, Bos N, Zimmerman A, Olson GM, Cooney D, Faniel I (2008) A theory of remote scientific collaboration. In: Olson GM, Zimmerman A, Bos N (eds) Scientific collaboration on the Internet, The MIT Press, pp 73–97

Paine D, Ghoshal D, Ramakrishnan L (2020) Experiences with a flexible user research process to build data change tools. J Open Res Softw 8(1):1–8

Pancake CM (2003) Usability issues in developing tools for the grid — and how visual representations can help. Parallel Process Lett 13(02):189–206

Pellas N, Mystakidis S, Christopoulos A (2021) A systematic literature review on the user experience design for game-based interventions via 3D virtual worlds in K-12 education. Multimodal Technol Interact 5(6):1–23

Rajib MA, Merwade V, Kim IL, Zhao L, Song C, Zhe S (2016) SWATShare–A web platform for collaborative research and education through online sharing, simulation and visualization of SWAT models. Environ Model Softw 75:498–512

Ramakrishnan L, Gunter D (2017) Ten principles for creating usable software for science. In: 2017 IEEE 13th International Conference on e-Science (e-Science), IEEE, pp 210–218

Ramakrishnan L, Poon S, Hendrix V, Gunter D, Pastorello GZ, Agarwal D (2014) Experiences with user-centered design for the Tigres workflow API. In: 2014 IEEE 10th International Conference on e-Science, IEEE, pp 290–297

Rambourg J, Gaspard-Boulinc H, Conversy S, Garbey M (2018) Welcome OnBoard: An Interactive Large Surface Designed for Teamwork and Flexibility in Surgical Flow Management. In: Proceedings of the 2018 ACM International Conference on Interactive Surfaces and Spaces, Tokyo Japan: ACM, pp 5–17

Randall DP, Diamant EI, Lee CP (2015) Creating Sustainable Cyberinfrastructures. In: CHI’15: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, ACM, pp 1759–68

Randall DP, Paine D, Lee CP (2018) Educational outreach & stakeholder role evolution in a cyberinfrastructure project. In: 2018 IEEE 14th International Conference on e-Science (e-Science), IEEE, pp 201–211

Ribes D (2006) Universal informatics: Building cyberinfrastructure, interoperating the geosciences. Dissertation, University of California, San Diego: ProQuest Dissertations Publishing

Ribes D, Lee CP (2010) Sociotechnical studies of Cyberinfrastructure and e-Research: current themes and future trajectories. Comput Supported Coop Work 19(3–4):231–244

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1(5):206–215

Rudin C, Carlson D (2019) The Secrets of Machine Learning: Ten Things You Wish You Had Known Earlier to Be More Effective at Data Analysis. In: Netessine S, Shier D, Greenberg HJ (eds) Operations Research & Management Science in the Age of Analytics, pp 44–72

Seffah A, Djouab R, Antunes H (2001) Comparing and reconciling usability-centered and use case-driven requirements engineering processes. In: Proceedings Second Australasian User Interface Conference AUIC 2001, IEEE, pp 132–9

Singh K, Jahnke I, Mosa A, Calyam P (2021) The winding road of requesting healthcare data for analytics purposes: using the one-interview mental model method for improving services of health data governance and big data request processes. J Bus Analytics 6(1):1–18

Singh K, Li S, Jahnke I, Pandey A, Lyu Z, Joshi T et al (2020) A formative usability study to improve prescriptive systems for bioinformatics big data. In: 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), IEEE, pp 735–742

Stern C, Jordan Z, McArthur A (2014) Developing the review question and inclusion criteria. AJN Am J Nurs 114(4):53–56

Stewart C, Pepin J, Odegard J, Hauser T, Fratkin S, Almes G et al (2008) Developing a coherent cyberinfrastructure from local campus to National facilities: challenges and strategies. EDUCAUSE Campus Cyberinfrastructure Working Group and Coalition for Academic Scientific Computation

Stewart CA, Simms S, Plale B, Link M, Hancock DY, Fox GC (2010) What is cyberinfrastructure. In: Proceedings of the 38th annual ACM SIGUCCS fall conference: navigation and discovery, Norfolk Virginia USA: ACM, pp 37–44

Stocks KI, Schramski S, Virapongse A, Kempler L (2019) Geoscientists’ perspectives on cyberinfrastructure needs: a Collection of user scenarios. Data Sci J 18(21):1–15

Taylor P, Rahman J, O’Sullivan J, Podger G, Rosello C, Parashar A et al (2021) Basin futures, a novel cloud-based system for preliminary river basin modelling and planning. Environ Model Softw 141(105049):1–20

Tullis T, Albert B (2013) Measuring the user experience: collecting, analyzing, and presenting UX Metrics. Elsevier Inc

Varshney LR (2016) Fundamental limits of data analytics in sociotechnical systems. Front ICT 3(2):1–7

Volentine R, Specht A, Allard S, Frame M, Hu R, Zolly L (2021) Accessibility of environmental data for sharing: the role of UX in large cyberinfrastructure projects. Ecol Inf 63(101317):1–8

Webster J, Watson RT (2002) Analyzing the past to prepare for the future: writing a literature review. MIS Q 26(2):xiii–xxiii

Zimmerman A (2007) A socio-technical framework for cyber-infrastructure design. In: Proceedings of e-Social Science, pp 1–10

Acknowledgements

I am deeply grateful to my doctoral advisor, Dr. Isa Jahnke, for her professional advice, support with study design, and reviews regarding the direction of this paper. I sincerely thank Dr. Prasad Calyam for the opportunity to work as a user-centered design researcher in the design, development, and evaluation of the NSF-funded campus cyberinfrastructure project. I also want to thank Hillary Gould, a graduate student, for helping with the initial article search to establish reliability. I am sincerely grateful to the reviewers for their valuable feedback, which helped me improve the paper.

Funding

No funding was obtained for the study.

Author information

Contributions

The author conceptualized the study design, performed the literature search and data analysis, and wrote the original manuscript.

Ethics declarations

Conflict of interest

The author has no conflicts of interest to declare.

Competing interests

The author has no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Singh, K.P. A systematic literature review of the application of user experience studies in cyberinfrastructure for scientific research. Qual User Exp 9, 4 (2024). https://doi.org/10.1007/s41233-024-00069-8

DOI: https://doi.org/10.1007/s41233-024-00069-8