Abstract

This paper investigates the interplay between the algebraic properties of the rings, the combinatorial properties of their corresponding zero-divisor graphs, and the associated Hermitian matrix of such graphs. For a finite ring R, its zero-divisor graph may contain both directed edges and undirected edges; such graphs are called mixed graphs. The Hermitian matrices of mixed graphs are natural generalizations of the adjacency matrices of undirected graphs. In this paper, we completely determine the structure and the Hermitian eigenvalues of the zero-divisor graph \(\Gamma (D\times R)\) by using the structure and the Hermitian eigenvalues of the zero-divisor graph \(\Gamma (R)\). As applications, we investigate \(\Gamma (D\times R)\) for some special R and extend some known results on this topic.


1 Introduction

The concept of zero-divisor graphs was originally developed to explore the relationship between the graph-theoretic properties of these graphs and the ring-theoretic properties of finite commutative rings. Beck [4] first defined the zero-divisor graph \(\Gamma (R)\) of a commutative ring R as the graph with vertex set R, where two vertices x and y are adjacent if and only if their product xy equals zero. This definition was later modified by Anderson and Livingston [2], who defined the zero-divisor graph \(\Gamma (R)\) of a commutative ring R as the graph with vertex set \(Z(R)^*=Z(R){\setminus }\{0\}\), the set of nonzero zero divisors, where two vertices x and y are adjacent if and only if \(xy=0\). This modified definition has gained favor among mathematicians and has led to many significant contributions in the field, including works by Anderson [1, 3], Lucas [13], and Mulay [15].

Redmond [16] extended the concept of zero-divisor graphs to non-commutative rings. Let R be a non-commutative ring, and let \(Z_l(R)\) and \(Z_r(R)\) denote the sets of left and right zero divisors, respectively, where an element x is a left (right) zero divisor if there exists \(y\in R\) such that \(xy=0\) (resp. \(yx=0\)). The set \(Z(R)=Z_l(R)\cup Z_r(R)\) is the set of zero divisors of R, and its complement \(\overline{Z}(R)=R{\setminus } Z(R)\) is the set of cancellable elements. For any subset \(S\subseteq R\), we use \(S^*\) to denote the set of nonzero elements in S, i.e., \(S^*=S{\setminus }\{0\}\). The zero-divisor graph of R has vertex set \(Z(R)^*\), and there is a directed edge from x to y if and only if \(xy=0\).

This definition also applies to commutative rings: if R is commutative, then there is a directed edge from x to y if and only if there is a directed edge from y to x. Throughout this paper, two directed edges sharing the same end-points and having opposite directions are regarded as one undirected edge. For a commutative ring R, the zero-divisor graph defined by Redmond therefore coincides with that defined by Anderson and Livingston. Thus, Redmond’s definition can be considered a natural extension of that given by Anderson and Livingston. Recently, Lu et al. [12] introduced the concept of signed zero-divisor graphs by considering the nilpotent elements of the ring and generalized some results on zero-divisor graphs.
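Redmond's definition can be made concrete by brute force. The following is a minimal sketch, assuming a finite ring is given as a list of elements together with a multiplication function; the helper name `zero_divisor_arcs` and the choice of the ring \(\mathbb {Z}_6\) are illustrative and do not come from the paper.

```python
from itertools import product

def zero_divisor_arcs(elements, mul, zero):
    """Return (vertices, arcs) of the zero-divisor graph in Redmond's sense.

    A nonzero x is a vertex if xy = 0 or yx = 0 for some nonzero y;
    there is an arc (x, y) between distinct vertices whenever xy = 0.
    """
    nonzero = [x for x in elements if x != zero]
    vertices = [x for x in nonzero
                if any(mul(x, y) == zero or mul(y, x) == zero for y in nonzero)]
    arcs = {(x, y) for x, y in product(vertices, repeat=2)
            if x != y and mul(x, y) == zero}
    return vertices, arcs

# Illustrative choice: the commutative ring Z_6.
n = 6
vertices, arcs = zero_divisor_arcs(list(range(n)), lambda a, b: (a * b) % n, 0)

# In a commutative ring every arc has its reverse, so arcs pair up
# into undirected edges, as described in the text.
undirected = {frozenset(a) for a in arcs if (a[1], a[0]) in arcs}
```

For a non-commutative ring the same function returns arcs without their reverses, which is exactly what makes the graph mixed.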

A graph \(\Gamma \) that contains both undirected edges and directed edges is called a mixed graph. If there is a directed edge from x to y, then we write \(x\rightarrow y\) and this edge is denoted by (x, y). If \(x\rightarrow y\) and \(y\rightarrow x\), then we write \(x\leftrightarrow y\) and these two directed edges are regarded as one undirected edge \(\{x,y\}\). For a subset \(U\subseteq V(\Gamma )\), the induced mixed subgraph \(\Gamma [U]\) is the mixed graph obtained from \(\Gamma \) by deleting all vertices in \(V(\Gamma ){\setminus } U\) and their incident edges. The underlying graph \(\overline{\Gamma }\) is the undirected graph with vertex set \(V(\Gamma )\) in which two vertices x and y are adjacent, denoted by \(x\sim y\), if at least one of \(x\rightarrow y\) and \(y\rightarrow x\) holds. If \(\overline{\Gamma }\) is connected, then \(\Gamma \) is called connected. If for any pair of vertices x, y there exists a directed path from x to y, then \(\Gamma \) is called strongly connected. For a vertex \(v\in V(\Gamma )\), the set of in-neighbors of v is \(N^+(v)=\{u\mid u\rightarrow v, v\not \rightarrow u\}\), the set of out-neighbors of v is \(N^-(v)=\{u\mid v\rightarrow u,u\not \rightarrow v\}\) and the set of in-out-neighbors of v is \(N^{\#}(v)=\{u\mid u\leftrightarrow v\}\). The in-degree, out-degree and in-out-degree of v are, respectively, \(d^+(v)=|N^+(v)|\), \(d^-(v)=|N^-(v)|\) and \(d^{\#}(v)=|N^{\#}(v)|\). The maximum in-degree, out-degree and in-out-degree are denoted by \(\Delta ^+(\Gamma )\), \(\Delta ^-(\Gamma )\) and \(\Delta ^{\#}(\Gamma )\). The minimum in-degree, out-degree and in-out-degree are denoted by \(\delta ^+(\Gamma )\), \(\delta ^-(\Gamma )\) and \(\delta ^{\#}(\Gamma )\). We write, for example, \(d^+_{\Gamma }(v)\) for \(d^+(v)\) when we want to emphasize the graph in question. For other notation in graph theory, we refer the reader to [5].
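The three kinds of neighbors can be read off directly from an arc set. The sketch below assumes a mixed graph is stored as a set of ordered pairs, with an undirected edge stored as two opposite arcs; the function name `neighborhoods` and the four-vertex example are hypothetical.

```python
def neighborhoods(vertices, arcs, v):
    """Split the neighbors of v as in the text: N^+ (in), N^- (out), N^# (in-out)."""
    n_in = {u for u in vertices if (u, v) in arcs and (v, u) not in arcs}
    n_out = {u for u in vertices if (v, u) in arcs and (u, v) not in arcs}
    n_both = {u for u in vertices if (u, v) in arcs and (v, u) in arcs}
    return n_in, n_out, n_both

# Hypothetical mixed graph on {a, b, c, d}: a -> b, b <-> c, d -> b.
vertices = ["a", "b", "c", "d"]
arcs = {("a", "b"), ("b", "c"), ("c", "b"), ("d", "b")}

n_in, n_out, n_both = neighborhoods(vertices, arcs, "b")
degrees = (len(n_in), len(n_out), len(n_both))  # (d^+, d^-, d^#) of b
```

Note that with this convention a vertex joined to v by an undirected edge is counted only in \(N^{\#}(v)\), not in \(N^+(v)\) or \(N^-(v)\).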

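The Hermitian matrix of a mixed graph is a natural generalization of the adjacency matrix of an undirected graph; it was introduced independently by Liu and Li [11] and by Guo and Mohar [7]. For a mixed graph \(\Gamma \), the Hermitian matrix of \(\Gamma \) is the square matrix \(H(\Gamma )=(h_{uv})\) indexed by the vertex set of \(\Gamma \) with

\[h_{uv}=\begin{cases}1,&\text{if } u\leftrightarrow v,\\ i,&\text{if } u\rightarrow v \text{ and } v\not \rightarrow u,\\ -i,&\text{if } v\rightarrow u \text{ and } u\not \rightarrow v,\\ 0,&\text{otherwise},\end{cases}\]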

where i is the imaginary unit. It is clear that the Hermitian matrix of \(\Gamma \) coincides with the adjacency matrix of \(\Gamma \) when \(\Gamma \) is undirected. Since \(H(\Gamma )\) is Hermitian, all its eigenvalues are real and can be listed as \(\lambda _1\ge \lambda _2\ge \cdots \ge \lambda _n\). The collection of all these eigenvalues is the spectrum of \(\Gamma \), denoted by \({\text {Sp}}(\Gamma )=\left\{ [\lambda _1]^{m_1},[\lambda _2]^{m_2},\ldots ,[\lambda _s]^{m_s}\right\} \), where \(\lambda _1,\ldots ,\lambda _s\) are the distinct eigenvalues and \(m_1,\ldots ,m_s\) are their multiplicities. The rank of \(\Gamma \) is defined to be the rank of \(H(\Gamma )\).
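As an illustration, the following sketch builds the Hermitian matrix of a small mixed graph in plain Python. It assumes the convention of [7, 11] in which \(h_{uv}=1\) if \(u\leftrightarrow v\), \(h_{uv}=i\) if only \(u\rightarrow v\), \(h_{uv}=-i\) if only \(v\rightarrow u\), and \(h_{uv}=0\) otherwise; the function names and the example graph are hypothetical.

```python
def hermitian_matrix(vertices, arcs):
    """Hermitian matrix of a mixed graph given by a set of arcs (u, v)."""
    index = {v: k for k, v in enumerate(vertices)}
    n = len(vertices)
    H = [[0j] * n for _ in range(n)]
    for u, v in arcs:
        if (v, u) in arcs:
            H[index[u]][index[v]] = 1 + 0j   # undirected edge u <-> v
        else:
            H[index[u]][index[v]] = 1j       # directed edge u -> v
            H[index[v]][index[u]] = -1j      # the same edge seen from v
    return H

def trace_of_square(H):
    """tr(H^2), i.e. the sum of the squared eigenvalues of H."""
    n = len(H)
    return sum(H[j][k] * H[k][j] for j in range(n) for k in range(n))

# Hypothetical mixed graph: a -> b, b <-> c, d -> b.
vertices = ["a", "b", "c", "d"]
arcs = {("a", "b"), ("b", "c"), ("c", "b"), ("d", "b")}
H = hermitian_matrix(vertices, arcs)

n = len(H)
is_hermitian = all(H[j][k] == H[k][j].conjugate()
                   for j in range(n) for k in range(n))
```

Every edge of the underlying graph, directed or not, contributes 2 to \({\text {tr}}(H^2)\), so here `trace_of_square(H)` equals twice the number of edges.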

It is well known that a finite commutative ring with identity can be expressed as a direct sum of local rings. As a result, the study of the zero-divisor graph of a sum of two rings is key to investigating the zero-divisor graphs of general rings. Motivated by this, in this paper we investigate the zero-divisor graph \(\Gamma (D\times R)\) of the ring \(D\times R\), where D is an integral domain and R is an arbitrary ring (not necessarily commutative). First, we completely determine the structure of \(\Gamma (D\times R)\) in terms of the structure of \(\Gamma (R)\) (Theorem 1). Next, we obtain the spectrum of \(\Gamma (D\times R)\) in terms of the spectrum of \(\Gamma (R)\) (Theorem 2). Finally, we present some applications of our results, which extend some existing results in [10, 14, 17].

2 The Structure of \(\Gamma (D\times R)\)

In this part, we investigate the structure of the zero-divisor graph \(\Gamma (D\times R)\) for an integral domain D and a non-trivial ring R. To make the definition of zero-divisor graphs clear, we start off with a simple example.

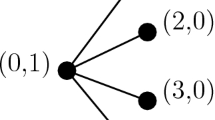

Example 1

Let R be the ring of upper triangular matrices of size \(2\times 2\) over the field \(\mathbb {Z}_2\). The zero-divisor graph \(\Gamma \) of R is presented in Fig. 1.

Let R be a non-trivial ring with zero-divisor graph \(\Gamma \) and D an integral domain. In what follows, we always denote by \(\Gamma '\) the zero-divisor graph of \(D\times R\).

Lemma 1

The vertex set of \(\Gamma '\) is \(Z(D\times R)^*=\left( D^*\times \{0\}\right) \cup (\{0\}\times R^*)\cup \left( D^*\times Z(R)^*\right) \).

Proof

Note that \((a,0)(0,b)=(0,0)\) for any \(a\in D^*\) and \(b\in R^*\). This means that \((D^*\times \{0\})\cup (\{0\}\times R^*)\subseteq Z(D\times R)^*\). Moreover, for any \((a,b)\in D^*\times Z(R)^*\), since \(b\in Z(R)^*\), there exists \(d\ne 0\) such that \(bd=0\) or \(db=0\). Therefore, \((a,b)(0,d)=(0,0)\) or \((0,d)(a,b)=(0,0)\), which implies that \(D^*\times Z(R)^*\subseteq Z(D\times R)^*\). Thus, we have \((D^*\times \{0\})\cup (\{0\}\times R^*)\cup (D^*\times Z(R)^*)\subseteq Z(D\times R)^*\).

Conversely, we need to show that \( Z(D\times R)^*\subseteq (D^*\times \{0\})\cup (\{0\}\times R^*)\cup (D^*\times Z(R)^*)\). Assume that \((a,b)\in Z(D\times R)^*\). If \(a=0\) or \(b=0\), then \((a,b)\in (D^*\times \{0\})\cup (\{0\}\times R^*)\). Therefore, it suffices to show that \((a,b)\in D^*\times Z(R)^*\) when \(a\ne 0\) and \(b\ne 0\). As \(a\in D^*\) holds whenever \(a\ne 0\), we only need to show \(b\in Z(R)^*\). Since \((a,b)\in Z(D\times R)^*\), there exists a nonzero element \((c,d)\in D\times R\) such that \((a,b)(c,d)=(0,0)\) or \((c,d)(a,b)=(0,0)\). If the former occurs, then \(ac=0\) and \(bd=0\). This gives \(c=0\) since \(a\in D^*\) and D is an integral domain, and hence \(d\ne 0\) because \((c,d)\ne (0,0)\). It follows that \(b\in Z_l(R)^*\). If the latter occurs, then \(ca=0\) and \(db=0\). Similarly, we have \(b\in Z_r(R)^*\). Both cases lead to \(b\in Z(R)^*\). \(\square \)
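Lemma 1 can be checked by brute force on a small case. The sketch below assumes the illustrative choice \(D=\mathbb {Z}_3\) and \(R=\mathbb {Z}_4\); the helper `zero_divisors` and the variable names are not from the paper.

```python
from itertools import product

def zero_divisors(elements, mul, zero):
    """Nonzero elements x with xy = 0 or yx = 0 for some nonzero y."""
    nonzero = [x for x in elements if x != zero]
    return {x for x in nonzero
            if any(mul(x, y) == zero or mul(y, x) == zero for y in nonzero)}

s, t = 3, 4  # illustrative sizes: D = Z_3 (a field), R = Z_4
D, R = range(s), range(t)

# Z(R)^* computed directly in R.
ZR = zero_divisors(list(R), lambda a, b: (a * b) % t, 0)

# Left-hand side of Lemma 1: Z(D x R)^* computed directly in D x R.
pairs = list(product(D, R))
pair_mul = lambda x, y: ((x[0] * y[0]) % s, (x[1] * y[1]) % t)
lhs = zero_divisors(pairs, pair_mul, (0, 0))

# Right-hand side of Lemma 1: the union of the three product sets.
rhs = ({(a, 0) for a in D if a != 0}
       | {(0, b) for b in R if b != 0}
       | {(a, b) for a in D if a != 0 for b in ZR})
```

A check of this kind only confirms the statement for the chosen pair of rings; it is not a substitute for the proof.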

Though Lemma 1 gives a partition of the vertex set \(V(\Gamma ')\), we need another partition that could reveal the relations between the vertices more clearly.

Lemma 2

The vertex set \(V(\Gamma ')\) has a partition \(\Pi :\) \(V(\Gamma ')=(D^*\times \{0\})\cup (\{0\}\times \overline{Z}(R))\cup (\{0\}\times Z(R)^*)\cup (D^*\times Z(R)^*)\). Furthermore, the following statements hold.

-

(i)

The vertex sets \(D^*\times \{0\}\), \(\{0\}\times \overline{Z}(R)\) and \(D^*\times Z(R)^*\) are all independent sets.

-

(ii)

For each vertex \(x_1\in D^*\times \{0\}\), \(d^+_{\Gamma '}(x_1)=d^-_{\Gamma '}(x_1)=0\) and \(d^{\#}_{\Gamma '}(x_1)=|R|-1\).

-

(iii)

For each vertex \(x_2\in \{0\}\times \overline{Z}(R)\), \(d^+_{\Gamma '}(x_2)=d^-_{\Gamma '}(x_2)=0\) and \(d^{\#}_{\Gamma '}(x_2)=|D|-1\).

-

(iv)

For each vertex \(x_3=(0,\gamma )\in \{0\}\times Z(R)^*\), \(d^+_{\Gamma '}(x_3)=|D|d^+_{\Gamma }(\gamma )\), \(d^-_{\Gamma '}(x_3)=|D|d^-_{\Gamma }(\gamma )\) and \(d^{\#}_{\Gamma '}(x_3)=|D|(d^{\#}_{\Gamma }(\gamma )+1)-1\).

-

(v)

For each vertex \(x_4=(\alpha ,\gamma )\in D^*\times Z(R)^*\), \(d^+_{\Gamma '}(x_4)=d^+_{\Gamma }(\gamma )\), \(d^-_{\Gamma '}(x_4)=d^-_{\Gamma }(\gamma )\) and \(d^{\#}_{\Gamma '}(x_4)=d^{\#}_{\Gamma }(\gamma )\).

Proof

From Lemma 1, \((D^*\times \{0\})\cup (\{0\}\times R^*)\cup (D^*\times Z(R)^*)\) is a partition of \(V(\Gamma ')\). Note that \(R^*=\overline{Z}(R)\cup Z(R)^*\) is a partition of \(R^*\). We get the partition \(\Pi \).

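For any two vertices \((\alpha ,0),(\alpha ',0)\in D^*\times \{0\}\), since D is an integral domain and hence

\[(\alpha ,0)(\alpha ',0)=(\alpha \alpha ',0)\ne (0,0),\]

we have \((\alpha ,0)\not \sim (\alpha ',0)\) and thus the vertex set \(D^*\times \{0\}\) is an independent set. For two vertices \((0,\beta ),(0,\beta ')\in \{0\}\times \overline{Z}(R)\), since \(\beta \) and \(\beta '\) are not zero divisors and hence

\[(0,\beta )(0,\beta ')=(0,\beta \beta ')\ne (0,0),\]

we have \((0,\beta )\not \sim (0,\beta ')\) and thus \(\{0\}\times \overline{Z}(R)\) is an independent set. For any two vertices \((\alpha ,\beta ),(\alpha ',\beta ')\in D^*\times Z(R)^*\), since \(\alpha \alpha '\ne 0\) and hence

\[(\alpha ,\beta )(\alpha ',\beta ')=(\alpha \alpha ',\beta \beta ')\ne (0,0),\]

we have \((\alpha ,\beta )\not \sim (\alpha ',\beta ')\), and thereby \(D^*\times Z(R)^*\) is an independent set. Thus, (i) holds.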

Assume that \(x_1=(\alpha ,0)\) is an arbitrary vertex in \( D^*\times \{0\}\). For any \((0,b)\in \{0\}\times R^*\), we have

\[(\alpha ,0)(0,b)=(0,b)(\alpha ,0)=(0,0),\]

and thus \(x_1\leftrightarrow (0,b)\). For any \((a,b)\in D^*\times Z(R)^*\), since

\[(\alpha ,0)(a,b)=(\alpha a,0)\ne (0,0)\quad \text{and}\quad (a,b)(\alpha ,0)=(a\alpha ,0)\ne (0,0),\]

we have \(x_1\not \sim (a,b)\). Since \(D^*\times \{0\}\) is an independent set and \(V(\Gamma ')=(D^*\times \{0\})\cup (\{0\}\times R^*)\cup (D^*\times Z(R)^*)\), we have obtained the relations between \(x_1\) and all other vertices. This shows that \(d^+(x_1)=d^-(x_1)=0\) and \(d^{\#}(x_1)=|\{0\}\times R^*|=|R|-1\). Thus (ii) holds.

Assume that \(x_2=(0,\beta )\) is an arbitrary vertex in \(\{0\}\times \overline{Z}(R)\). It has been proved that \(\{0\}\times \overline{Z}(R)\) is an independent set and \(x_2\leftrightarrow x_1\) for any \(x_1\in D^*\times \{0\}\). For any vertex \((0,b)\in \{0\}\times Z(R)^*\), since \(\beta \) is not a zero divisor and hence

\[(0,\beta )(0,b)=(0,\beta b)\ne (0,0)\quad \text{and}\quad (0,b)(0,\beta )=(0,b\beta )\ne (0,0),\]

we have \(x_2\not \sim (0,b)\). Similarly, one can easily verify that \(x_2\not \sim (a,b)\) for any \((a,b)\in D^*\times Z(R)^*\). Therefore, we have obtained the relations between \(x_2\) and all other vertices. This implies that \(d^+(x_2)=d^-(x_2)=0\) and \(d^{\#}(x_2)=|D^*\times \{0\}|=|D|-1\). Thus (iii) holds.

Assume that \(x_3=(0,\gamma )\) is an arbitrary vertex in \(\{0\}\times Z(R)^*\). The relations between \(x_3\) and \((D^*\times \{0\})\cup (\{0\}\times \overline{Z}(R))\) have already been obtained. For any \((0,b)\in \{0\}\times Z(R)^*\), we have \((0,\gamma )\rightarrow (0,b)\) if and only if \((0,\gamma )(0,b)=(0,\gamma b)=(0,0)\) if and only if \(\gamma b=0\) if and only if \(\gamma \rightarrow b\) in \(\Gamma \); similarly, one could verify that \((0,b)\rightarrow (0,\gamma )\) if and only if \(b\rightarrow \gamma \) in \(\Gamma \). For any \((a,b)\in D^*\times Z(R)^*\), we have \((0,\gamma )\rightarrow (a,b)\) if and only if \((0,\gamma )(a,b)=(0,\gamma b)=(0,0)\) if and only if \(\gamma b=0\) if and only if \(\gamma \rightarrow b\) in \(\Gamma \); similarly, one could verify that \((a,b)\rightarrow (0,\gamma )\) if and only if \(b\rightarrow \gamma \) in \(\Gamma \). Therefore, the relations between \(x_3\) and all other vertices have been obtained. It implies that \(d^{+}_{\Gamma '}(x_3)=|D|d^+_{\Gamma }(\gamma )\), \(d^-_{\Gamma '}(x_3)=|D|d^-_{\Gamma }(\gamma )\) and \(d^{\#}_{\Gamma '}(x_3)=|D|d^{\#}_{\Gamma }(\gamma )+|D^*\times \{0\}|=|D|(d^{\#}_{\Gamma }(\gamma )+1)-1\). Thus (iv) holds.

Assume that \(x_4=(\alpha ,\gamma )\) is an arbitrary vertex in \(D^*\times Z(R)^*\). The relations between \(x_4\) and \((D^*\times \{0\})\cup (\{0\}\times \overline{Z}(R))\cup (\{0\}\times Z(R)^*)\) have already been obtained and \(D^*\times Z(R)^*\) is an independent set. We get the relations between \(x_4\) and all other vertices. It leads to \(d^+_{\Gamma '}(x_4)=d^+_{\Gamma }(\gamma )\), \(d^-_{\Gamma '}(x_4)=d^-_{\Gamma }(\gamma )\) and \(d^{\#}_{\Gamma '}(x_4)=d^{\#}_{\Gamma }(\gamma )\). Thus (v) holds.

The proof is completed. \(\square \)
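The degree formulas (ii) to (v) can be compared with a direct computation on a small example. The following sketch assumes the illustrative choice \(D=\mathbb {Z}_3\) and \(R=\mathbb {Z}_6\), a commutative ring with no nonzero element whose square is zero; consequently only the in-out-degree is non-trivial here, and rings containing square-zero elements are not exercised. All function names are hypothetical.

```python
from itertools import product

def graph(elements, mul, zero):
    """Vertices and arcs of the zero-divisor graph (Redmond's definition)."""
    nonzero = [x for x in elements if x != zero]
    V = [x for x in nonzero
         if any(mul(x, y) == zero or mul(y, x) == zero for y in nonzero)]
    A = {(x, y) for x, y in product(V, repeat=2)
         if x != y and mul(x, y) == zero}
    return V, A

def degs(V, A, v):
    """(d^+, d^-, d^#) of v with the conventions of the text."""
    d_in = sum((u, v) in A and (v, u) not in A for u in V)
    d_out = sum((v, u) in A and (u, v) not in A for u in V)
    d_both = sum((u, v) in A and (v, u) in A for u in V)
    return d_in, d_out, d_both

s, t = 3, 6  # illustrative sizes: D = Z_3, R = Z_6
V, A = graph(list(range(t)), lambda a, b: (a * b) % t, 0)
V2, A2 = graph(list(product(range(s), range(t))),
               lambda x, y: ((x[0] * y[0]) % s, (x[1] * y[1]) % t),
               (0, 0))

def predicted(x):
    """Degrees of the vertex x = (a, g) of Gamma' according to Lemma 2."""
    a, g = x
    if g == 0:                      # x in D^* x {0}
        return (0, 0, t - 1)
    if a == 0 and g not in V:       # x in {0} x (cancellable elements)
        return (0, 0, s - 1)
    dp, dm, db = degs(V, A, g)
    if a == 0:                      # x in {0} x Z(R)^*
        return (s * dp, s * dm, s * (db + 1) - 1)
    return (dp, dm, db)             # x in D^* x Z(R)^*

mismatches = [x for x in V2 if degs(V2, A2, x) != predicted(x)]
```

For this pair of rings the list `mismatches` is empty, and \(\Gamma '\) has 13 vertices.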

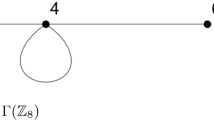

In fact, the proof of Lemma 2 gives the relation between every pair of vertices of \(\Gamma '\), and thereby the structure of \(\Gamma '\) is clear. To describe this graph rigorously, we need some graph operations. For two graphs \(\Gamma _1\) and \(\Gamma _2\), the join \(\Gamma _1\nabla \Gamma _2\) is the graph with vertex set \(V(\Gamma _1)\cup V(\Gamma _2)\) and edge set \(E(\Gamma _1)\cup E(\Gamma _2)\cup \{\{u,v\}\mid u\in V(\Gamma _1),v\in V(\Gamma _2)\}\). In other words, \(\Gamma _1\nabla \Gamma _2\) is obtained from \(\Gamma _1\) and \(\Gamma _2\) by adding all undirected edges between them. We now give a more general definition. For subsets \(S_1\subseteq V(\Gamma _1)\) and \(S_2\subseteq V(\Gamma _2)\), the partial join \((\Gamma _1,S_1)\nabla (\Gamma _2,S_2)\) is the graph with vertex set \(V(\Gamma _1)\cup V(\Gamma _2)\) and edge set \(E(\Gamma _1)\cup E(\Gamma _2)\cup \{\{u,v\}\mid u\in S_1, v\in S_2\}\). For a graph \(\Gamma \), the double cover \(C(\Gamma )\) is the graph with vertex set \(V(\Gamma )\times \{0,1\}\), and \((u,j)\rightarrow (v,k)\) in \(C(\Gamma )\) if and only if \(u\rightarrow v\) in \(\Gamma \) and \(j\ne k\). Write \(V^0(C(\Gamma ))=\{(v,0)\mid v\in V(\Gamma )\}\) and \(V^1(C(\Gamma ))=\{(v,1)\mid v\in V(\Gamma )\}\). The strong double cover \({\text {SC}}(\Gamma )\) is obtained from \(C(\Gamma )\) by adding the directed edges ((u, 0), (v, 0)) when \(u\rightarrow v\) in \(\Gamma \), that is, \({\text {SC}}(\Gamma )\) is obtained from \(C(\Gamma )\) by embedding \(\Gamma \) in \(V^0(C(\Gamma ))\) correspondingly. For a positive integer m, the blow-up \(B_m(\Gamma )\) is the graph obtained from \(\Gamma \) by replacing each vertex u with an independent set \(\{u_1,u_2,\ldots ,u_m\}\), and if \(u\rightarrow v\) in \(\Gamma \) then \(u_j\rightarrow v_k\) for any \(1\le j,k\le m\).
For a subset \(S\subseteq V(\Gamma )\), define a function \(f_S\) on \(V(\Gamma )\) by \(f_S(v)=(m-1)\delta _S(v)+1\), where \(\delta _S(v)=1\) if \(v\in S\) and \(\delta _S(v)=0\) otherwise. The partial blow-up \(B_m(\Gamma ,S)\) is the graph obtained from \(\Gamma \) by replacing each vertex \(u\in V(\Gamma )\) with an independent set \(\{u_1,u_2,\ldots ,u_{f_S(u)}\}\), and if \(u\rightarrow v\) in \(\Gamma \) then \(u_j\rightarrow v_k\) for any \(1\le j\le f_S(u)\) and \(1\le k\le f_S(v)\). These graph operations are illustrated in Fig. 2, in which a fat line between two parts means that every vertex in one part is adjacent to every vertex of the other part by an undirected edge.
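Two of these operations, the join and the blow-up, are sketched below for graphs stored as arc sets (an undirected edge being stored as two opposite arcs). The function names and the small inputs are assumptions made for illustration only.

```python
def join(V1, A1, V2, A2):
    """Join of two vertex-disjoint graphs: add every undirected edge between them."""
    cross = ({(u, v) for u in V1 for v in V2}
             | {(v, u) for u in V1 for v in V2})
    return list(V1) + list(V2), set(A1) | set(A2) | cross

def blow_up(V, A, m):
    """B_m: replace each vertex u by copies (u, 1), ..., (u, m).

    Every arc u -> v lifts to all arcs (u, j) -> (v, k); copies of the
    same vertex stay non-adjacent, so they form an independent set.
    """
    Vb = [(u, j) for u in V for j in range(1, m + 1)]
    Ab = {((u, j), (v, k)) for (u, v) in A
          for j in range(1, m + 1) for k in range(1, m + 1)}
    return Vb, Ab

# Illustrative input: a single arc x -> y, blown up with m = 2.
Vb, Ab = blow_up(["x", "y"], {("x", "y")}, 2)

# The join of the edgeless graphs 2K_1 and 3K_1 is the complete bipartite graph K_{2,3}.
Vj, Aj = join(["a1", "a2"], set(), ["b1", "b2", "b3"], set())
```

The partial versions restrict the same constructions to the chosen subsets \(S_1\), \(S_2\) or S.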

We are now in a position to state the main result of this part.

Theorem 1

Let R be a ring with zero-divisor graph \(\Gamma \) and D an integral domain. Assume that \(|D|=s\), \(|R|=t\) and \(|V(\Gamma )|=|Z(R)^*|=l\). Then, the zero-divisor graph \(\Gamma '\) of \(D\times R\) satisfies:

-

(i)

\(\Gamma '=((t-l-1)K_1\nabla (s-1)K_1, V_1)\nabla (B_{s-1}({\text {SC}}(\Gamma ),V_2),V_3)\) (as shown in Fig. 3), where \(V_1=V((s-1)K_1)\), \(V_2=V^{1}(C(\Gamma ))\) and \(V_3=V^0(C(\Gamma ))\);

-

(ii)

\(|V(\Gamma ')|=(s-1)(l+1)+t-1\);

-

(iii)

\(\Delta ^+(\Gamma ')=s\Delta ^+(\Gamma )\), \(\Delta ^-(\Gamma ')=s\Delta ^-(\Gamma )\) and \(\Delta ^{\#}(\Gamma ')=\max \{s(\Delta ^{\#}(\Gamma )+1)-1,t-1\}\);

-

(iv)

\(\delta ^+(\Gamma ')=\delta ^-(\Gamma ')=0\) and \(\delta ^{\#}(\Gamma ')=\min \{s-1,\delta ^{\#}(\Gamma )\}\);

-

(v)

the underlying graph \(\overline{\Gamma '}\) of \(\Gamma '\) is connected with diameter \(d(\overline{\Gamma '})\le 3\);

-

(vi)

\(\Gamma '\) is strongly connected if and only if \(\Gamma \) is strongly connected.

Proof

According to the proof of Lemma 2, the structure of \(\Gamma '\) is clear and is shown in Fig. 3, where each fat undirected (directed) line between two parts means that there are undirected (resp. directed) edges between every vertex of one part and every vertex of the other part. The first four statements follow. The fifth statement follows from [16, Theorem 3.1]. In what follows, we show (vi).

For convenience, set \(V_0=\{0\}\times \overline{Z}(R)\), \(V_1=D^*\times \{0\}\), \(V_3=\{0\}\times Z(R)^*\) and \(V_4=D^*\times Z(R)^*\) (we use \(V_4\) because the notation \(V_2\) has been used as the vertex set \(V^1(C(\Gamma ))\); in fact, \(V_4\) is obtained from \(V_2\) by replacing each vertex of \(V_2\) with an independent set of size \(s-1\)). Note that \(\Gamma \) is an induced subgraph of \(\Gamma '\), i.e., \(\Gamma =\Gamma '[V_3]\). Therefore, \(\Gamma \) is strongly connected when \(\Gamma '\) is strongly connected. Now we will prove \(\Gamma '\) is strongly connected when \(\Gamma \) is strongly connected. It suffices to show that there is a directed path from x to y for any \(x,y\in V(\Gamma ')\).

Assume that \(x\in V_0\). The case for \(y\in V_0\cup V_1\cup V_3\) is clear. If \(y=(a,b)\in V_4\), since \(\Gamma \) is strongly connected, there exists \(b'\in V(\Gamma )\) such that \(b'\rightarrow b\). Therefore, by taking any \(x_1\in V_1\), we have \(x\rightarrow x_1\rightarrow (0,b')\rightarrow (a,b)=y\). The case for \(x\in V_1\) is similar.

Assume that \(x=(0,b)\in V_3\). The case for \(y\in V_0\cup V_1\) is clear. If \(y\in V_3\) then the statement holds since \(\Gamma =\Gamma '[V_3]\) is strongly connected. If \(y=(a,b')\in V_4\), since \(\Gamma \) is strongly connected, there exists a directed path \(b\rightarrow b_1\rightarrow b_2\rightarrow \cdots \rightarrow b_k\rightarrow b'\). Therefore, we have \(x=(0,b)\rightarrow (0,b_1)\rightarrow (0,b_2)\rightarrow \cdots \rightarrow (0,b_k)\rightarrow (a,b')=y\). The case for \(x\in V_4\) is similar, and we omit the details. \(\square \)
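Statements (ii) and (v) lend themselves to a quick numerical check. The sketch below assumes the illustrative choice \(D=\mathbb {Z}_3\), \(R=\mathbb {Z}_6\) (so \(s=3\), \(t=6\), \(l=3\)) and computes the diameter of the underlying graph by breadth-first search; the helper names are hypothetical.

```python
from collections import deque
from itertools import product

def underlying_graph(elements, mul, zero):
    """Adjacency sets of the underlying undirected zero-divisor graph."""
    nonzero = [x for x in elements if x != zero]
    V = [x for x in nonzero
         if any(mul(x, y) == zero or mul(y, x) == zero for y in nonzero)]
    return {x: {y for y in V
                if y != x and (mul(x, y) == zero or mul(y, x) == zero)}
            for x in V}

def diameter(adj):
    """Largest BFS eccentricity; returns None if the graph is disconnected."""
    best = 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        if len(dist) < len(adj):
            return None
        best = max(best, max(dist.values()))
    return best

s, t, l = 3, 6, 3  # |Z_3|, |Z_6| and |Z(Z_6)^*| = |{2, 3, 4}|
adj = underlying_graph(list(product(range(s), range(t))),
                       lambda x, y: ((x[0] * y[0]) % s, (x[1] * y[1]) % t),
                       (0, 0))
n_vertices = len(adj)
diam = diameter(adj)
```

In this example the bound of (v) is attained: the vertices (0, 1) and (1, 2) are at distance 3.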

It is known that the zero-divisor graph \(\Gamma (R)\) of the ring R is strongly connected if and only if \(Z_l(R)=Z_r(R)\) [16, Theorem 2.3]. Therefore, Theorem 1 (vi) gives a combinatorial explanation of the following simple fact.

Corollary 1

Let R be a ring and D an integral domain. Then \(Z_l(D\times R)=Z_r(D\times R)\) if and only if \(Z_l(R)=Z_r(R)\).

3 The Hermitian Matrix of \(\Gamma (D\times R)\)

In this part, we investigate the Hermitian matrix of the zero-divisor graph \(\Gamma (D\times R)\). We start with some basic definitions on matrices. For a square matrix X, the trace \({\text {tr}}(X)\) is the sum of all diagonal entries of X. Let \(A=(a_{ij})\) be an \(m\times n\) matrix and \(B=(b_{ij})\) a \(p\times q\) matrix. The rank of A is denoted by \({\text {rank}}(A)\). The Kronecker product \(A\otimes B\) is the \(pm\times qn\) block matrix

\[A\otimes B=\begin{pmatrix}a_{11}B&\cdots &a_{1n}B\\ \vdots &\ddots &\vdots \\ a_{m1}B&\cdots &a_{mn}B\end{pmatrix}.\]

We present some well-known properties of the Kronecker product.

Lemma 3

[9] Let A, B, C and D be matrices of suitable sizes. Then we have

-

(i)

\((A\otimes B)^T=A^T\otimes B^T\);

-

(ii)

\({\text {tr}}(A\otimes B)={\text {tr}}(A){\text {tr}}(B)\);

-

(iii)

\({\text {rank}}(A\otimes B)={\text {rank}}(A){\text {rank}}(B)\);

-

(iv)

\((A\otimes B) (C\otimes D)=(AC)\otimes (BD)\).
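The properties (i), (ii) and (iv) are easy to test on small integer matrices. The following is a minimal pure-Python sketch; the helper functions and the sample matrices are illustrative, and the matrix named `E` plays the role of the matrix D in Lemma 3 to avoid a clash with the integral domain D.

```python
def kron(A, B):
    """Kronecker product of matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def matmul(A, B):
    """Ordinary matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def trace(A):
    return sum(A[i][i] for i in range(len(A)))

def transpose(A):
    return [list(col) for col in zip(*A)]

# Sample 2 x 2 integer matrices (arbitrary choices).
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 1]]
C = [[2, 0], [1, 1]]
E = [[1, -1], [0, 3]]

K = kron(A, B)  # a 4 x 4 matrix whose (i, j) block is A[i][j] * B
```

With exact integer arithmetic the identities hold as equalities of lists, so no numerical tolerance is needed.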

As usual, we write \(\textbf{0}_{m,n}\) for the zero matrix of size \(m\times n\), \(I_n\) for the identity matrix of order n, and \(J_{m,n}\) for the all-one matrix of size \(m\times n\).

Assume that R is a ring with \(|R|=t\) and \(|Z(R)^*|=l\), and D is an integral domain with \(|D|=s\). Suppose that \(\Gamma =\Gamma (R)\) is the zero-divisor graph of R with Hermitian matrix H. According to the structure of \(\Gamma '=\Gamma (D\times R)\) obtained in Theorem 1, the Hermitian matrix \(H'\) of \(\Gamma '\) is

It leads to the main result of this part.

Theorem 2

Let R be a ring with zero-divisor graph \(\Gamma \) and let D be an integral domain. If \(\Gamma '\) is the zero-divisor graph of \(D\times R\), then the rank of \(\Gamma '\) is \({\text {rank}}(\Gamma ')=2{\text {rank}}(\Gamma )+2\). Furthermore, the spectrum of \(\Gamma '\) is

where \(s=|D|\), \(t=|R|\), \(l=|Z(R)^*|\), \(r={\text {rank}}(\Gamma )\), \(\theta _s^+(\lambda )=(\lambda +\sqrt{(4s-3)\lambda ^2})/2\) and \(\theta _s^-(\lambda )=(\lambda -\sqrt{(4s-3)\lambda ^2})/2\).

Proof

Assume that H is the Hermitian matrix of \(\Gamma \). Since the elementary transformations of a matrix do not change its rank, we have

In what follows, we get the eigenvalues of \(H'\) by constructing eigenvectors. Assume that \(r={\text {rank}}(H)\), \(x_1,\ldots ,x_r\) are the eigenvectors of H corresponding to the nonzero eigenvalues \(\lambda _1,\ldots ,\lambda _r\), and \(y_1,\ldots ,y_{l-r}\) are the eigenvectors of H corresponding to the eigenvalue 0. For any positive integer m and \(2\le j\le m\), let \(\eta _m(j)\in \mathbb {C}^m\) be the vector whose first entry is 1, j-th entry is \(-1\) and other entries are 0. For \(2\le j_1\le t-l-1\), \(2\le j_2\le s-1\), \(1\le j_3,j_5\le l-r\), \(2\le j_4\le s-1\) and any nonzero vector \(x\in \mathbb {C}^{l}\), by immediate calculations, all the vectors

are linearly independent eigenvectors corresponding to the eigenvalue 0. Therefore, the multiplicity of 0 is at least

Since \(|V(\Gamma ')|=(l+1)(s-1)+t-1\) and \({\text {rank}}(\Gamma ')=2r+2\), such eigenvectors form a basis of the eigenspace of 0.

Now we deduce the remaining \(2r+2\) nonzero eigenvalues. For any nonzero eigenvalue \(\lambda \) of H, let \(\theta _s^+(\lambda )=\frac{\lambda +\sqrt{(4s-3)\lambda ^2}}{2}\) and \(\theta _s^-(\lambda )=\frac{\lambda -\sqrt{(4s-3)\lambda ^2}}{2}\). Note that \(\theta _s^+(\lambda )\) and \(\theta _s^-(\lambda )\) are both nonzero if \(\lambda \ne 0\). For \(1\le j\le r\), we construct the vectors \(z_j=\left( \begin{array}{c}\textbf{0}_{t-l-1,1}\\ \textbf{0}_{s-1,1}\\ \theta _s^+(\lambda _j)x_j\\ \lambda _j x_{j}\otimes J_{s-1,1}\end{array}\right) .\) By direct calculation, in view of Lemma 3, we have

Note that \(\theta _s^+(\lambda _j)\lambda _j+(s-1)\lambda _j^2=(\theta _s^+(\lambda _j))^2\). It leads to \(H'z_j=\theta _s^+(\lambda _j)z_j\). Therefore, \(\theta _s^+(\lambda _j)\) is a nonzero eigenvalue of \(H'\). Similarly, replacing \(\theta _s^+(\lambda _j)\) by \(\theta _s^-(\lambda _j)\), we obtain that \(\theta _s^-(\lambda _j)\) is also a nonzero eigenvalue of \(H'\). This gives 2r nonzero eigenvalues in total. Suppose the remaining two nonzero eigenvalues are \(\alpha \) and \(\beta \). Note that \({\text {tr}}(H')=0\) and

By using the spectral moment of \(\Gamma '\) [6, Page 52], we have

It yields that \(\alpha =\sqrt{(s-1)(t-1)}\) and \(\beta =-\sqrt{(s-1)(t-1)}\).

The proof is completed. \(\square \)
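The identity \(\theta _s^{\pm }(\lambda )\lambda +(s-1)\lambda ^2=(\theta _s^{\pm }(\lambda ))^2\) used in the proof can be verified by a short expansion, spelled out here for convenience. Writing \(\theta =\theta _s^{\pm }(\lambda )\) and using \(\left( \sqrt{(4s-3)\lambda ^2}\right) ^2=(4s-3)\lambda ^2\), we get

\[\theta ^2=\frac{\lambda ^2\pm 2\lambda \sqrt{(4s-3)\lambda ^2}+(4s-3)\lambda ^2}{4}=\frac{\lambda \left( \lambda \pm \sqrt{(4s-3)\lambda ^2}\right) }{2}+(s-1)\lambda ^2=\theta \lambda +(s-1)\lambda ^2.\]

Equivalently, \(\theta _s^+(\lambda )\) and \(\theta _s^-(\lambda )\) are the two roots of \(x^2-\lambda x-(s-1)\lambda ^2=0\), so that \(\theta _s^+(\lambda )\theta _s^-(\lambda )=-(s-1)\lambda ^2\), which is nonzero whenever \(\lambda \ne 0\) and \(s>1\).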

4 Applications

In this part, we present some applications of Theorems 1 and 2 for both commutative and non-commutative rings. If R is a commutative ring, then \(\Gamma (R)\) is an undirected graph; every undirected graph can be viewed as a mixed graph, and the adjacency matrix and the Hermitian matrix of \(\Gamma (R)\) coincide. Hence Theorems 1 and 2 are applicable when R is commutative. Note that \(\Gamma (\mathbb {Z}_p)\) is a null graph for any prime p. For a number a, recall that \(\theta _{a}^+(\cdot )\) and \(\theta _{a}^-(\cdot )\) are the functions given in Theorem 2. For convenience, denote by \(\theta _a^{\pm }(\pm \lambda )\) the four numbers \(\theta _a^{+}(\lambda )\), \(\theta _a^{-}(\lambda )\), \(\theta _a^{+}(-\lambda )\) and \(\theta _a^{-}(-\lambda )\). Since \(\theta _a^+\) and \(\theta _a^-\) are functions, their product is defined as the composition of functions. Similarly, denote by \((\theta _a^{\pm })^k(\pm \lambda )\) the numbers \(f_1f_2\cdots f_k(x)\) for \(f_1,\ldots ,f_k\in \{\theta _a^+,\theta _a^-\}\) and \(x\in \{\pm \lambda \}\). Thus \((\theta _a^{\pm })^k(\pm \lambda )\) represents \(2^{k+1}\) numbers in total. We get the following result.

Lemma 4

For a prime p and an integer \(m\ge 2\), let \(R_m=\underbrace{\mathbb {Z}_p\times \mathbb {Z}_p\times \cdots \times \mathbb {Z}_p}_m\). If \(\Gamma _m\) is the zero-divisor graph of \(R_m\), then we have

-

(i)

\(|\Gamma _m|=p^{m}-(p-1)^{m}-1\);

-

(ii)

\(\Delta (\Gamma _m)=p^{m-1}-1\);

-

(iii)

\(\delta (\Gamma _m)=p-1\);

-

(iv)

\({\text {rank}}(\Gamma _{m})=2^m-2\);

-

(v)

\({\text {Sp}}(\Gamma _m)=\big \{[0]^{p^{m}-(p-1)^{m}-2^m+1},\pm \sqrt{(p-1)(p^{m-1}-1)},{(\theta _p^{\pm })^{m-1-j}}(\pm \sqrt{(p-1)(p^j-1)})\mid \) \(1\le j\le m-2\big \}\).

Proof

We prove these statements by induction on m. Since \(\Gamma (R_1)\) is a null graph, i.e., a graph with no vertex, Theorem 1 indicates that \(\Gamma (R_2)=(p-1)K_1\nabla (p-1)K_1\). Therefore, it is clear that all the statements hold for \(m=2\). Next, assume that all the statements hold for \(m=k\) and it suffices to show that they all hold for \(m=k+1\).

Since \(\Gamma _{k+1}=\Gamma (\mathbb {Z}_p\times R_{k})\), Theorem 1 (ii) implies that

Hence (i) holds. Theorem 1 (iii) implies that

and thus (ii) holds. Theorem 1 (iv) indicates that \(\delta (\Gamma _{k+1})=\min \{p-1,\delta (\Gamma _{k})\}=p-1\), thus (iii) holds. Theorem 2 means that

and hence (iv) holds. From (i) and (iv), the multiplicity of 0 is obtained. By inductive assumption, the nonzero eigenvalues of \(\Gamma _{k}\) are

for \(1\le j\le k-2\). Therefore, Theorem 2 implies that the nonzero eigenvalues of \(\Gamma _{k+1}\) are

for \(1\le j\le k-2\). Note that

Therefore, the spectrum of \(\Gamma _{k+1}\) is as the form of (v) and thereby (v) holds.

The proof is completed. \(\square \)
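Statements (i) to (iii) of Lemma 4 can be compared with a brute-force computation for small p and m. The sketch below is an illustration only: the function name is hypothetical, and the spectral statements (iv) and (v) are not checked.

```python
from itertools import product

def zero_divisor_degrees(p, m):
    """Degrees in the zero-divisor graph of Z_p^m (commutative, hence undirected)."""
    nonzero = [x for x in product(range(p), repeat=m) if any(x)]

    def kills(x, y):
        # Componentwise product of x and y is the zero tuple.
        return all((a * b) % p == 0 for a, b in zip(x, y))

    V = [x for x in nonzero if any(kills(x, y) for y in nonzero)]
    return {x: sum(1 for y in V if y != x and kills(x, y)) for x in V}

results = {}
for p, m in [(2, 2), (2, 3), (3, 2), (3, 3)]:
    deg = zero_divisor_degrees(p, m)
    # (number of vertices, maximum degree, minimum degree)
    results[(p, m)] = (len(deg), max(deg.values()), min(deg.values()))
```

For each listed pair, the triple stored in `results` agrees with \(\left( p^m-(p-1)^m-1,\ p^{m-1}-1,\ p-1\right) \).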

Recently, Mönius [14] gave the spectra of \(\Gamma (R_3)\) and \(\Gamma (R_4)\), in which the author considered the zero-divisor graph as an undirected graph with loops. As an example, we get the spectrum of \(\Gamma _3\) from Lemma 4.

Corollary 2

The spectrum of \(\Gamma _3\) is

Remark 1

Though Lemma 4 only considers the case of \(\mathbb {Z}_p\times \mathbb {Z}_p\times \cdots \times \mathbb {Z}_p\), the case of \(\mathbb {Z}_{p_{_1}}\times \mathbb {Z}_{p_{_2}}\times \cdots \times \mathbb {Z}_{p_{_r}}\) is similar. For example, for two distinct primes p and q with \(p>q\), along the lines of the proof of Lemma 4, we have

Similarly, for three primes \(p_{_1},p_{_2},p_{_3}\) with \(p_{_1}>p_{_2}>p_{_3}\), we have

In fact, for any primes \(p_{_1},p_{_2},\ldots ,p_{_r}\) (not necessarily distinct), the structure and the spectrum of \(\Gamma (\mathbb {Z}_{p_{_1}}\times \mathbb {Z}_{p_{_2}}\times \cdots \times \mathbb {Z}_{p_{_r}})\) can be obtained easily from Theorems 1 and 2.

Since \(\Gamma (\mathbb {Z}_{p^2})=K_{p-1}\) is the complete graph [10], we get the following result immediately from Theorems 1 and 2.

Corollary 3

Let \(\Gamma \) be the zero-divisor graph of \(\mathbb {Z}_q\times \mathbb {Z}_{p^2}\) for primes p and q. Then,

We now turn to non-commutative rings. We consider the non-commutative ring \(U_2(\mathbb {Z}_p)\) for a prime p, where \(U_2(\mathbb {Z}_p)\) is the set of \(2\times 2\) upper triangular matrices with entries from \(\mathbb {Z}_p\).

If \(A=\left( \begin{array}{cc}x&{}y\\ 0&{}z\end{array}\right) \in Z_l(U_2(\mathbb {Z}_p))^*\), then there exists \(B\ne 0\) such that \(AB=0\). It means that the columns of B are solutions of \(Ax=0\). Since \(B\ne 0\), we have \(\det (A)=0\). Therefore, \(xz=0\) and thus at least one of x and z is 0. If \(x=0\) and \(z\ne 0\) then y could be any entry in \(\mathbb {Z}_p\), and thus there are totally \((p-1)p\) choices of A. Similarly, if \(x\ne 0\) and \(z=0\), there are also \((p-1)p\) choices of A. If \(x=0\) and \(z=0\), then \(y\ne 0\), and thus, there are \(p-1\) choices of A. Hence, \(|Z_l(U_2(\mathbb {Z}_p))^*|=(p-1)(2p+1)\).
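The count \((p-1)(2p+1)\) can be confirmed by enumeration for small primes. In the sketch below an upper triangular matrix is stored as a triple `(x, y, z)`; this encoding and the function names are assumptions made for illustration.

```python
from itertools import product

def u2_mul(A, B, p):
    """Product of upper triangular matrices stored as (x, y, z) = [[x, y], [0, z]]."""
    x, y, z = A
    x2, y2, z2 = B
    return ((x * x2) % p, (x * y2 + y * z2) % p, (z * z2) % p)

def left_right_zero_divisors(p):
    """Nonzero left and right zero divisors of U_2(Z_p), found by brute force."""
    zero = (0, 0, 0)
    nonzero = [M for M in product(range(p), repeat=3) if M != zero]
    left = {A for A in nonzero
            if any(u2_mul(A, B, p) == zero for B in nonzero)}
    right = {A for A in nonzero
             if any(u2_mul(B, A, p) == zero for B in nonzero)}
    return left, right

counts = {}
for p in (2, 3, 5):
    left, right = left_right_zero_divisors(p)
    # (|Z_l^*|, |Z_r^*|, whether the two sets coincide)
    counts[p] = (len(left), len(right), left == right)
```

For each of these primes the two sets coincide and have \((p-1)(2p+1)\) elements, namely 5, 14 and 44.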

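It is clear that \(Z_r(U_2(\mathbb {Z}_p))=Z_l(U_2(\mathbb {Z}_p))\). To investigate the zero-divisor graph \(\Gamma (U_2(\mathbb {Z}_p))\), we partition \(Z(U_2(\mathbb {Z}_p))^*\) as \(V_1\cup V_2\cup V_3\cup V_4\cup V_5\), where

\[\begin{aligned} V_1&=\left\{ \left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) \mid x\ne 0\right\} ,&V_2&=\left\{ \left( \begin{matrix}x&y\\ 0&0\end{matrix}\right) \mid x,y\ne 0\right\} ,&V_3&=\left\{ \left( \begin{matrix}0&0\\ 0&z\end{matrix}\right) \mid z\ne 0\right\} ,\\ V_4&=\left\{ \left( \begin{matrix}0&y\\ 0&z\end{matrix}\right) \mid y,z\ne 0\right\} ,&V_5&=\left\{ \left( \begin{matrix}0&y\\ 0&0\end{matrix}\right) \mid y\ne 0\right\} .\end{aligned}\]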

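Assume that \(n_i=|V_i|\) for \(1\le i\le 5\). It is clear that \(n_1=n_3=n_5=p-1\) and \(n_2=n_4=(p-1)^2\). For any \(\left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) ,\left( \begin{matrix}x'&0\\ 0&0\end{matrix}\right) \in V_1\), since

\[\left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) \left( \begin{matrix}x'&0\\ 0&0\end{matrix}\right) =\left( \begin{matrix}xx'&0\\ 0&0\end{matrix}\right) \ne 0,\]

we conclude that there is no edge between any pair of vertices in \(V_1\). For any \(\left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) \in V_1\) and \(\left( \begin{matrix}x'&y\\ 0&0\end{matrix}\right) \in V_2\), since

\[\left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) \left( \begin{matrix}x'&y\\ 0&0\end{matrix}\right) =\left( \begin{matrix}xx'&xy\\ 0&0\end{matrix}\right) \ne 0\quad \text{and}\quad \left( \begin{matrix}x'&y\\ 0&0\end{matrix}\right) \left( \begin{matrix}x&0\\ 0&0\end{matrix}\right) =\left( \begin{matrix}x'x&0\\ 0&0\end{matrix}\right) \ne 0,\]

we conclude that there is no edge between \(V_1\) and \(V_2\). For any \(\left( \begin{matrix}x&y\\ 0&0\end{matrix}\right) ,\left( \begin{matrix}x'&y'\\ 0&0\end{matrix}\right) \in V_2\), since

\[\left( \begin{matrix}x&y\\ 0&0\end{matrix}\right) \left( \begin{matrix}x'&y'\\ 0&0\end{matrix}\right) =\left( \begin{matrix}xx'&xy'\\ 0&0\end{matrix}\right) \ne 0,\]

we conclude that there is no edge between any pair of vertices in \(V_2\). Thus \(V_1\cup V_2\) is an independent set. Similarly, \(V_3\cup V_4\) is an independent set and \(V_5\) induces a clique. By similar calculations, the structure of \(\Gamma (U_2(\mathbb {Z}_p))\) is obtained as stated in the following result. The tedious calculations are omitted.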

Lemma 5

Let \(\Gamma \) be the zero-divisor graph of \(U_2(\mathbb {Z}_p)\) for a prime p, and let \(\pi :\) \(V(\Gamma )=V_1\cup V_2\cup V_3\cup V_4\cup V_5\) be the partition defined above. Then, the edge set of \(\Gamma \) is \(E=E_{1,3}\cup A_{4,1}\cup A_{3,2}\cup X_{2,4}\cup A_{5}\), where

Remark 2

Figure 4 shows the structure of \(\Gamma (U_2(\mathbb {Z}_p))\), where the fat lines mean adding all undirected edges between the two parts, the fat directed lines mean adding all directed edges between the two parts, and the blue fat line represents the edge set \(X_{2,4}\), which contains both directed edges and undirected edges. For \(p=2\), the graph is shown in Fig. 1 with \(V_1=\{u_1\}\), \(V_2=\{u_4\}\), \(V_3=\{u_3\}\), \(V_4=\{u_5\}\) and \(V_5=\{u_2\}\).

From Lemma 5, we get the following result.

Lemma 6

For a prime p, let \(\Gamma \) be the zero-divisor graph of \(U_2(\mathbb {Z}_p)\). Then, we have

(i) \(|\Gamma |=(p-1)(2p+1)\);

(ii) \(\Delta ^+(\Gamma )=(p-1)p\), \(\Delta ^-(\Gamma )=(p-1)p\) and \(\Delta ^{\#}(\Gamma )=p-1\);

(iii) \(\delta ^+(\Gamma )=\delta ^-(\Gamma )=0\) and \(\delta ^{\#}(\Gamma )=p-2\).

Proof

Since \(|Z(U_2(\mathbb {Z}_p))^*|=(p-1)(2p+1)\), statement (i) holds. From Fig. 4, the degrees of all vertices except those in \(V_2\cup V_4\) are known, so it remains to consider the vertices in \(V_2\cup V_4\). For \(v_2=\left( \begin{matrix}x&{}\quad y\\ 0&{}\quad 0\end{matrix}\right) \) and \(v_4=\left( \begin{matrix}0&{}\quad y'\\ 0&{}\quad z\end{matrix}\right) \), we always have \(v_4v_2=0\), while \(v_2v_4=0\) if and only if \(xy'+yz=0\), that is, \(y'=-yz/x\). Since \(z\) ranges over the \(p-1\) nonzero elements of \(\mathbb {Z}_p\) and \(y'\) is then determined by \(z\), there are exactly \(p-1\) vertices in \(V_4\) adjacent to \(v_2\) by undirected edges. Therefore, the degrees of the vertices of \(\Gamma \) are as presented in Table 1, and the result follows. \(\square \)
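The counts in Lemma 6 can be checked by exhaustive search for small primes. The following is a minimal sketch, not part of the original argument. It assumes that a matrix \(\left( \begin{matrix}x&{}\quad y\\ 0&{}\quad z\end{matrix}\right) \) is stored as the triple `(x, y, z)`, that loops are ignored, and that the parts \(V_1,\ldots ,V_5\) are the ones read off from the matrices displayed in the text (with \(V_3\) and \(V_5\) inferred from \(n_3=n_5=p-1\)). The function names `mul`, `part`, `zero_divisor_graph` and `degree_extremes` are hypothetical.

```python
from itertools import product


def mul(a, b, p):
    """Multiply upper triangular matrices (x, y, z) ~ [[x, y], [0, z]] over Z_p."""
    return ((a[0] * b[0]) % p, (a[0] * b[1] + a[1] * b[2]) % p, (a[2] * b[2]) % p)


def part(a):
    """Index i of the part V_i containing the vertex a (assumed labelling)."""
    x, y, z = a
    if x != 0:                      # for a zero divisor, x != 0 forces z = 0
        return 1 if y == 0 else 2
    if z != 0:
        return 3 if y == 0 else 4
    return 5                        # x = z = 0 and y != 0


def zero_divisor_graph(p):
    """Return (vertices, arcs, edges) of the zero-divisor graph of U_2(Z_p)."""
    zero = (0, 0, 0)
    nonzero = [a for a in product(range(p), repeat=3) if a != zero]
    # vertices are the nonzero left or right zero divisors
    vertices = [a for a in nonzero
                if any(mul(a, b, p) == zero or mul(b, a, p) == zero for b in nonzero)]
    arcs, edges = set(), set()
    for u in vertices:
        for v in vertices:
            if u != v and mul(u, v, p) == zero:
                if mul(v, u, p) == zero:
                    edges.add(frozenset((u, v)))   # uv = vu = 0: undirected edge
                else:
                    arcs.add((u, v))               # only uv = 0: arc from u to v
    return vertices, arcs, edges


def degree_extremes(p):
    """Return (max out, max in, max undirected, min out, min in, min undirected)."""
    vertices, arcs, edges = zero_divisor_graph(p)
    out_deg = {v: 0 for v in vertices}
    in_deg = {v: 0 for v in vertices}
    und_deg = {v: 0 for v in vertices}
    for u, v in arcs:
        out_deg[u] += 1
        in_deg[v] += 1
    for e in edges:
        for v in e:
            und_deg[v] += 1
    return (max(out_deg.values()), max(in_deg.values()), max(und_deg.values()),
            min(out_deg.values()), min(in_deg.values()), min(und_deg.values()))


for p in (2, 3, 5):
    vertices, arcs, edges = zero_divisor_graph(p)
    print(p, len(vertices), degree_extremes(p))
```

The search is quadratic in \(p^3\), so it is only meant as a sanity check for very small \(p\).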

Combining Lemma 6 and Theorem 1, the following result is obtained.

Corollary 4

For two primes p and q, let \(\Gamma '\) be the zero-divisor graph of \(\mathbb {Z}_q\times U_2(\mathbb {Z}_p)\). Then, we have

(i) \(|\Gamma '|=p^3 + (2q -2)p^2 - (q-1)p -1\);

(ii) \(\Delta ^+(\Gamma ')=(p-1)pq\), \(\Delta ^-(\Gamma ')=(p-1)pq\) and \(\Delta ^{\#}(\Gamma ')=\max \{pq-1,p^3-1\}\);

(iii) \(\delta ^+(\Gamma ')=\delta ^-(\Gamma ')=0\) and \(\delta ^{\#}(\Gamma ')=\min \{q-1,p-2\}\).
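The vertex count in Corollary 4 (i) can likewise be compared against a brute-force enumeration. The sketch below is illustrative only: it assumes an element of \(\mathbb {Z}_q\times U_2(\mathbb {Z}_p)\) is stored as a tuple `(a, x, y, z)` with componentwise multiplication, and the names `count_vertices` and `formula` are hypothetical.

```python
from itertools import product


def count_vertices(q, p):
    """Count the nonzero zero divisors of Z_q x U_2(Z_p) by exhaustive search.

    An element is stored as (a, x, y, z), meaning (a, [[x, y], [0, z]]).
    """
    def mul(r, s):
        return ((r[0] * s[0]) % q,
                (r[1] * s[1]) % p,
                (r[1] * s[2] + r[2] * s[3]) % p,
                (r[3] * s[3]) % p)

    zero = (0, 0, 0, 0)
    nonzero = [r for r in product(range(q), range(p), range(p), range(p))
               if r != zero]
    # r is a vertex if it annihilates some nonzero s from the left or the right
    return sum(1 for r in nonzero
               if any(mul(r, s) == zero or mul(s, r) == zero for s in nonzero))


def formula(q, p):
    """Vertex count stated in Corollary 4 (i)."""
    return p**3 + (2 * q - 2) * p**2 - (q - 1) * p - 1


for q, p in [(2, 2), (3, 2), (2, 3), (3, 3)]:
    print(q, p, count_vertices(q, p), formula(q, p))
```

The same value also follows from counting units: the ring has \(qp^3\) elements, of which \((q-1)p(p-1)^2\) are units, and every nonzero non-unit of a finite ring is a zero divisor.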

To obtain the Hermitian matrix of \(\Gamma (U_2(\mathbb {Z}_p))\), we need to examine the edges between \(V_2\) and \(V_4\) more closely.

For vertices \(v_2=\left( \begin{matrix}x&{}\quad y\\ 0&{}\quad 0\end{matrix}\right) , v_2'=\left( \begin{matrix}x'&{}\quad y'\\ 0&{}\quad 0\end{matrix}\right) \in V_2\), we say that they are related, denoted by \(v_2\simeq _2 v_2'\), if \(y/x=y'/x'\). It is clear that ‘\(\simeq _2\)’ is an equivalence relation whose equivalence classes are \([1],[2],\ldots ,[p-1]\), where \([j]=\left\{ \left( \begin{matrix}x&{}\quad y\\ 0&{}\quad 0\end{matrix}\right) \mid y/x=j\right\} \) for \(1\le j\le p-1\). Hence \(V_2=\bigcup _{j=1}^{p-1}[j]\). Similarly, two vertices \(v_4=\left( \begin{matrix}0&{}\quad y\\ 0&{}\quad z\end{matrix}\right) , v_4'=\left( \begin{matrix}0&{}\quad y'\\ 0&{}\quad z'\end{matrix}\right) \in V_4\) are related, denoted by \(v_4\simeq _4 v_4'\), if \(y/z=y'/z'\). Again, ‘\(\simeq _4\)’ is an equivalence relation, and its equivalence classes are \([1'],[2'],\ldots ,[(p-1)']\), where \([j']=\left\{ \left( \begin{matrix}0&{}\quad y\\ 0&{}\quad z\end{matrix}\right) \mid y/z=-j\right\} \) for \(1\le j\le p-1\). Hence, \(V_4=\bigcup _{j=1}^{p-1}[j']\). Thus, the undirected edge set between \(V_2\) and \(V_4\) is \(E_{2,4}=\bigcup _{j=1}^{p-1}\left\{ \{v_2,v_4\}\mid v_2\in [j],v_4\in [j']\right\} \), and the directed edge set between \(V_2\) and \(V_4\) is \(A_{2,4}=\{(v_4,v_2)\mid v_4\in [j'],v_2\in [k],\text { for }1\le j,k\le p-1\text { and }j\ne k\}\). Hence, \(X_{2,4}=E_{2,4}\cup A_{2,4}\). By Lemma 5, the Hermitian matrix of \(\Gamma (U_2(\mathbb {Z}_p))\) is given by

where \(\bar{B}^T\) is the conjugate transpose of B and

We can now deduce the spectrum of \(\Gamma \).
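Before it is used, the class description of \(X_{2,4}\) given above can be checked directly. The sketch below is an illustration under stated assumptions: \(v_2\) is stored as the pair `(x, y)` and \(v_4\) as `(y', z)`, the class of \(v_2\) is \(y/x\) and the class of \(v_4\) is \(-y'/z\) as in the definitions of \([j]\) and \([j']\), and the function name `classify_v2_v4` is hypothetical. It relies on Python 3.8 or later for modular inverses via `pow(x, -1, p)`.

```python
def classify_v2_v4(p):
    """Check the description of E_{2,4} and A_{2,4} for U_2(Z_p), p prime.

    v2 = [[x, y], [0, 0]] is stored as (x, y) and v4 = [[0, y'], [0, z]] as
    (y', z), with x, y, y', z all nonzero modulo p.
    Returns (number of undirected pairs, number of arcs from V_4 to V_2).
    """
    units = range(1, p)
    undirected = directed = 0
    for x in units:
        for y in units:
            j = (y * pow(x, -1, p)) % p              # v2 lies in the class [j]
            for yp in units:
                for z in units:
                    k = (-yp * pow(z, -1, p)) % p    # v4 lies in the class [k']
                    # v4*v2 is always zero; v2*v4 has the single possibly
                    # nonzero entry x*y' + y*z in its top right corner
                    v2v4_zero = (x * yp + y * z) % p == 0
                    assert v2v4_zero == (j == k)
                    if v2v4_zero:
                        undirected += 1              # uv = vu = 0
                    else:
                        directed += 1                # only v4*v2 = 0
    return undirected, directed


for p in (2, 3, 5, 7):
    print(p, classify_v2_v4(p))
```

Each class \([j]\) and \([j']\) has \(p-1\) elements, so the two counts returned should be \((p-1)^3\) and \((p-1)^3(p-2)\), respectively.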

Lemma 7

Let \(\Gamma \) be the zero-divisor graph of \(U_2(\mathbb {Z}_p)\). The spectrum of \(\Gamma \) is

where \(\mu _1,\ldots ,\mu _5\) are the roots of the polynomial

Proof

(Sketch of the proof) Suppose \(X=(X_1,X_2,X_3,X_4,X_5)^\textrm{T}\) is a vector with \(X_1,X_3,X_5\in \mathbb {C}^{p-1}\) and \(X_2,X_4\in \mathbb {C}^{(p-1)^2}\).

Firstly, we construct the eigenvectors corresponding to the eigenvalue 0. For a positive integer \(m\ge 2\) and \(2\le j\le m\), recall that \(\eta _m(j)\in \mathbb {C}^{m}\) is the vector with first entry being 1, the j-th entry being \(-1\), and all other entries being 0. Construct the following four types of vectors:

(i) \(X_1=\eta _{p-1}(j)\) for \(2\le j\le p-1\) and \(X_2=X_3=X_4=X_5=\varvec{0}\);

(ii) \(X_3=\eta _{p-1}(j)\) for \(2\le j\le p-1\) and \(X_1=X_2=X_4=X_5=\varvec{0}\);

(iii) \(X_1=X_3=X_4=X_5=\varvec{0}\) and \(X_2=(X_{2,1},X_{2,2},\ldots ,X_{2,p-1})^\textrm{T}\), where \(X_{2,k}=\eta _{p-1}(j)\) for some \(1\le k \le p-1\) and \(2\le j \le p-1\), and \(X_{2,k'}=\varvec{0}\) for \(k'\ne k\);

(iv) \(X_1=X_2=X_3=X_5=\varvec{0}\) and \(X_4=(X_{4,1},X_{4,2},\ldots ,X_{4,p-1})^\textrm{T}\), where \(X_{4,k}=\eta _{p-1}(j)\) for some \(1\le k \le p-1\) and \(2\le j \le p-1\), and \(X_{4,k'}=\varvec{0}\) for \(k'\ne k\).

A direct calculation shows that all these vectors are linearly independent eigenvectors of H corresponding to 0. Hence, 0 is an eigenvalue of H with multiplicity at least \(2p(p-2)\).
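For the reader's convenience, the bound \(2p(p-2)\) is simply the number of vectors listed: types (i) and (ii) contribute \(p-2\) vectors each, while types (iii) and (iv) contribute \(p-2\) vectors for each of the \(p-1\) choices of \(k\), so that

\[(p-2)+(p-2)+(p-1)(p-2)+(p-1)(p-2)=2(p-2)\bigl (1+(p-1)\bigr )=2p(p-2).\]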

Secondly, we construct the eigenvectors corresponding to \(-1\). By taking \(X_1=X_2=X_3=X_4=\varvec{0}\) and \(X_5=\eta _{p-1}(j)\) for \(2\le j\le p-1\), we have \(HX=-X\). Therefore, \(-1\) is an eigenvalue of H with multiplicity at least \(p-2\).

Thirdly, we construct eigenvectors corresponding to each \(\lambda \in \{\pm (p-1)\sqrt{2}\}\). A direct calculation gives \(B\bar{B}^T/(p-1)=(p-3)J_{p-1,p-1}\otimes J_{p-1,p-1}+2I_{p-1}\otimes J_{p-1,p-1}\). Since \(J_{p-1,p-1}\eta _{p-1}(j)=\varvec{0}\) and \(J_{p-1,p-1}J_{p-1,1}=(p-1)J_{p-1,1}\), we have

\[B\bar{B}^T\left( \eta _{p-1}(j)\otimes J_{p-1,1}\right) =2(p-1)^2\,\eta _{p-1}(j)\otimes J_{p-1,1}=\lambda ^2\,\eta _{p-1}(j)\otimes J_{p-1,1}\]

for \(2\le j\le p-1\). Taking \(X_1=X_3=X_5=\varvec{0}\), \(X_2=\eta _{p-1}(j)\otimes J_{p-1,1}\) and \(X_4=\bar{B}^T\left( \eta _{p-1}(j)\otimes J_{p-1,1}\right) /\lambda \), we have

\[HX=\lambda X.\]

Thus, \(\lambda \) is an eigenvalue of H with multiplicity at least \(p-2\).

Finally, we obtain the remaining eigenvalues by using the well-known technique of equitable partitions [8, Page 195]. To keep the paper concise, we omit the background on equitable partitions and apply the technique directly. It is clear that \(\pi \): \(V(\Gamma )=V_1\cup V_2\cup V_3\cup V_4\cup V_5\) is an equitable partition with the quotient matrix

The theory of equitable partitions implies that the other eigenvalues of \(\Gamma \) are those of \(H_{\pi }\). A direct calculation shows that the characteristic polynomial of \(H_\pi \) is

Thus, the other five eigenvalues are exactly the roots of f(x).
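As a consistency check on the multiplicities obtained in the four steps above, note that the lower bounds already add up to the order of \(\Gamma \) from Lemma 6 (i):

\[\underbrace{2p(p-2)}_{\lambda =0}+\underbrace{(p-2)}_{\lambda =-1}+\underbrace{2(p-2)}_{\lambda =\pm (p-1)\sqrt{2}}+\underbrace{5}_{\text {roots of } f}=2p^2-p-1=(p-1)(2p+1)=|\Gamma |.\]

Hence the eigenvalues listed, counted with these multiplicities, account for the whole spectrum of H.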

The proof is completed. \(\square \)
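The eigenvectors used in the proof sketch can be verified numerically for small primes. The following is a minimal sketch under stated assumptions, not the authors' computation. It assumes the usual Hermitian adjacency convention (entry \(1\) for an undirected edge, \(\textrm{i}\) for an arc from u to v, \(-\textrm{i}\) for an arc from v to u); if the convention used in this paper differs by complex conjugation, the eigenvalues are unaffected. It also uses the observation that each of \(V_1,V_3,V_5\) and each class \([k]\), \([k']\) consists of the nonzero scalar multiples of one matrix. The names `hermitian_matrix`, `residual` and `max_residual` are hypothetical.

```python
import math


def hermitian_matrix(p):
    """Vertices and Hermitian matrix H of the zero-divisor graph of U_2(Z_p).

    Assumed convention: H[u][v] = 1 if uv = vu = 0, i if only uv = 0,
    -i if only vu = 0, and 0 otherwise. Matrices are triples (x, y, z).
    """
    def mul(a, b):
        return ((a[0] * b[0]) % p, (a[0] * b[1] + a[1] * b[2]) % p, (a[2] * b[2]) % p)

    zero = (0, 0, 0)
    # In the finite ring U_2(Z_p) the nonzero non-units, i.e. the nonzero
    # matrices with xz = 0, are exactly the nonzero zero divisors.
    verts = [(x, y, z) for x in range(p) for y in range(p) for z in range(p)
             if (x * z) % p == 0 and (x, y, z) != zero]
    n = len(verts)
    H = [[0j] * n for _ in range(n)]
    for i, u in enumerate(verts):
        for j, v in enumerate(verts):
            if i == j:
                continue
            uv, vu = mul(u, v) == zero, mul(v, u) == zero
            if uv and vu:
                H[i][j] = 1 + 0j
            elif uv:
                H[i][j] = 1j
            elif vu:
                H[i][j] = -1j
    return verts, H


def residual(H, X, lam):
    """Largest absolute entry of H X - lam X."""
    return max(abs(sum(h * x for h, x in zip(row, X)) - lam * xi)
               for row, xi in zip(H, X))


def max_residual(p):
    """Largest residual over the eigenvectors built in the proof sketch."""
    verts, H = hermitian_matrix(p)
    n = len(verts)
    pos = {v: i for i, v in enumerate(verts)}
    worst = 0.0
    # Eigenvalues 0 and -1: vectors e_v - e_{cv} inside one cell, where v is
    # the representative whose first nonzero coordinate equals 1.
    reps = [v for v in verts if next(t for t in v if t) == 1]
    for v in reps:
        lam = -1 if v[0] == 0 and v[2] == 0 else 0   # V_5 is the only clique
        for c in range(2, p):
            X = [0j] * n
            X[pos[v]] = 1
            X[pos[tuple(c * t % p for t in v)]] = -1
            worst = max(worst, residual(H, X, lam))
    # Eigenvalues +-(p-1)sqrt(2): X_2 = eta(j) (x) 1, X_4 = (H X_2 on V_4) / lam.
    for lam in (math.sqrt(2) * (p - 1), -math.sqrt(2) * (p - 1)):
        for j in range(2, p):
            X2 = [0j] * n
            for v in verts:
                if v[0] != 0 and v[1] != 0:                      # v in V_2
                    cls = v[1] * pow(v[0], -1, p) % p            # v in class [cls]
                    X2[pos[v]] = 1 if cls == 1 else -1 if cls == j else 0
            HX2 = [sum(h * x for h, x in zip(row, X2)) for row in H]
            X = [X2[i] + (HX2[i] / lam
                          if v[0] == 0 and v[1] != 0 and v[2] != 0 else 0)  # v in V_4
                 for i, v in enumerate(verts)]
            worst = max(worst, residual(H, X, lam))
    return worst


for p in (2, 3, 5):
    print(p, max_residual(p) < 1e-9)
```

The check uses plain Python complex arithmetic so that it has no external dependencies; for larger \(p\) a numerical linear algebra library would be the more practical choice.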

According to Theorem 2, the following result is obtained.

Corollary 5

For two primes p and q, let \(\Gamma '\) be the zero-divisor graph of \(\mathbb {Z}_q\times U_2(\mathbb {Z}_p)\). Then, we have

where \(m_1=(2p + 1)(p - 1) - 6p + p^3 + ((2p + 1)(p - 1) + 1)(q - 2)\) and \(m_2=p-2\).

References

1. Anderson, D.F., Badawi, A.: On the zero-divisor graph of a ring. Commun. Algebra 36, 3073–3092 (2008)

2. Anderson, D.F., Livingston, P.S.: The zero-divisor graph of a commutative ring. J. Algebra 217, 434–447 (1999)

3. Anderson, D.F., Mulay, S.B.: On the diameter and girth of a zero-divisor graph. J. Pure Appl. Algebra 210(2), 543–550 (2007)

4. Beck, I.: Coloring of commutative rings. J. Algebra 116, 208–226 (1988)

5. Bondy, J.A., Murty, U.S.R.: Graph Theory with Applications. North-Holland, Amsterdam (1976)

6. Cvetković, D., Rowlinson, P., Simić, S.: An Introduction to the Theory of Graph Spectra. Cambridge University Press, Cambridge (2010)

7. Guo, K., Mohar, B.: Hermitian adjacency matrix of digraphs and mixed graphs. J. Graph Theory 85(1), 217–248 (2016)

8. Godsil, C., Royle, G.: Algebraic Graph Theory. Springer, Berlin (2001)

9. Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

10. Kuntala, P., Priyanka, B.P.: On the adjacency matrix and neighborhood associated with zero-divisor graph for direct product of finite commutative rings. Int. J. Comput. Appl. Technol. Res. 2(3), 315–323 (2013)

11. Liu, J., Li, X.: Hermitian-adjacency matrices and Hermitian energies of mixed graphs. Linear Algebra Appl. 466, 182–207 (2015)

12. Lu, L., Feng, L.H., Liu, W.J.: Signed zero-divisor graphs over commutative rings. Commun. Math. Stat. (2022). https://doi.org/10.1007/s40304-022-00297-4

13. Lucas, T.G.: The diameter of a zero-divisor graph. J. Algebra 301, 174–193 (2006)

14. Mönius, K.: Eigenvalues of zero-divisor graphs of finite commutative rings. J. Algebraic Comb. 54, 787–802 (2021)

15. Mulay, S.B.: Cycles and symmetries of zero-divisors. Commun. Algebra 30(7), 3533–3558 (2002)

16. Redmond, S.P.: The zero-divisor graph of a non-commutative ring. Int. J. Commut. Rings 1(4), 203–211 (2002)

17. Young, M.: Adjacency matrices of zero-divisor graphs of integers modulo \(n\). Involve 8(5), 753–762 (2015)

Acknowledgements

The authors would like to thank the editors and the anonymous referees for their many valuable suggestions toward improving this paper. L. Feng and W. Liu were supported by NSFC (Grant Nos. 12271527, 12071484) and the Natural Science Foundation of Hunan Province (Grant Nos. 2018JJ2479, 2020JJ4675). L. Lu was supported by NSFC (Grant No. 12001544) and the Natural Science Foundation of Hunan Province (Grant No. 2021JJ40707). G. Yu was supported by NSFC (Grant No. 11861019) and the Natural Science Foundation of Guizhou ([2020]1Z001, [2021]5609).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest to this work.

Additional information

Communicated by Wen Chean Teh.

About this article

Cite this article

Lu, L., Feng, L., Liu, W. et al. Zero-Divisor Graphs of Rings and Their Hermitian Matrices. Bull. Malays. Math. Sci. Soc. 46, 130 (2023). https://doi.org/10.1007/s40840-023-01519-w

DOI: https://doi.org/10.1007/s40840-023-01519-w