Abstract

Partial least squares discriminant analysis (PLS-DA) is one of the most popular tools for data analysis in chemometrics and bioinformatics. As a dimension reduction tool, PLS-DA aims to find a projection of high-dimensional data onto a lower-dimensional subspace such that the different classes in the data are well separated. Despite its success in discrimination and in preserving linear structures, PLS-DA has been reported to perform worse under nonlinear conditions, and its performance on multi-class data is still widely debated. In this paper, we present a new algorithm for supervised nonlinear dimension reduction, called Kernel Locality Preserving PLS-DA (KLPPLS-DA). KLPPLS-DA combines nonlinear subspace learning with local structure preservation to enhance the discrimination of class structures. It learns the discriminant structure in the reproducing kernel Hilbert space (RKHS) while also minimizing the locality-preserving within-class distance, which yields more compact local class structures in the low-dimensional embedding. Two synthetic datasets are used to demonstrate the dimension reduction properties of KLPPLS-DA in comparison with other PLS-DA-based methods. The effectiveness of KLPPLS-DA is further demonstrated on multi-class tumor classification from gene expression data. Experimental results show that KLPPLS-DA consistently outperforms linear PLS-DA-based methods. This indicates that the datasets contain nonlinear features that linear methods cannot effectively capture. KLPPLS-DA also performs far better than kernelized PLS-DA without locality-preserving features.


1 Introduction

In many areas of research such as chemometrics, bioinformatics, and machine learning, data samples are usually represented as points in high-dimensional spaces. Researchers in these fields often face clustering and classification tasks. Most clustering and classification algorithms work well in low dimensions, usually fewer than 100. Their efficiency and effectiveness drop rapidly as the dimensionality increases, a phenomenon usually referred to as the “curse of dimensionality” [1]. With recent advances in computer technologies, there has been an explosion in the amount of data used for classification. This is certainly true for gene expression data generated using advanced microarray technologies. Such data are high-dimensional and contain thousands to tens of thousands of genes. A further difficulty is that publicly available datasets are very small, and some contain fewer than 100 samples. This situation is known as the small sample size problem, in which the number of predictor variables far exceeds the number of samples. Clustering or classifying such datasets directly is almost infeasible. Thus, dimensionality reduction is often needed prior to classification to reduce dimension and extract features that contain most of the necessary information in the data. Two of the most widely used dimension reduction algorithms are principal component analysis (PCA) [2,3,4,5] and partial least squares discriminant analysis (PLS-DA) [6,7,8,9,10,11].

PLS-DA is a supervised dimension reduction algorithm that attempts to maximize between-class covariance in the projected space. Its aim is to capture the global variability of the processed data and maximize between-class separation. Although PLS-DA is seen as an alternative to linear discriminant analysis (LDA), it has been shown in [12, 13] that the eigenstructure of PLS-DA does not capture any within-class information. In [13], Aminu and Ahmad proposed a modification of the PLS-DA algorithm called locality preserving PLS-DA (LPPLS-DA), which encodes within-class information in an affinity graph. LPPLS-DA then finds a projection that respects the graph structure while minimizing the within-class distance. The affinity graph represents a sub-manifold embedded in the ambient space and allows the local within-class data structure to be modeled. The approach increased the discriminating power of conventional PLS-DA in experiments on appearance-based face recognition and on a complex chemical dataset [14]. Another effort to improve the discriminant power of PLS-DA is locally weighted PLS-DA (LW-PLS-DA) [15]. LW-PLS-DA extends the locally weighted PLS approach described in [16], where locally weighted regression [17] was integrated into PLS-DA. It is also possible to incorporate other local manifold modeling techniques into PLS-DA [18]. These methods include Laplacian eigenmaps (LE) [19, 20], locally linear embedding (LLE) [21] and neighborhood preserving embedding (NPE) [22]. Combining a global method such as PLS-DA with a local manifold learning method in a global–local structure preserving framework is among the most recent trends in feature extraction, and very promising results are being reported [23,24,25,26,27,28,29].
It was also emphasized in [30] that, for multi-clustered data in particular, a global–local structure preserving framework can help to preserve information on both global and local clustering structures in the low dimensional embedding.

Both PLS-DA and LPPLS-DA are linear methods and are not equipped to handle nonlinearities. To overcome this limitation, in this paper we propose a new algorithm for discriminant feature extraction, called Kernel Locality Preserving PLS-DA (KLPPLS-DA). Our proposed KLPPLS-DA algorithm is essentially a kernel extension of the linear LPPLS-DA algorithm in reproducing kernel Hilbert space (RKHS). Unlike other kernelized extensions of PLS-DA (see, for example, [31,32,33,34,35]), the proposed KLPPLS-DA combines both the global and the local discriminating structures of the data in RKHS. Kernelized PLS-DA (KPLS-DA) finds global linear patterns in the associated RKHS. KLPPLS-DA goes one step further: it also identifies intrinsic local manifold structures and uses that information to provide more compact within-class structures. In this way, KLPPLS-DA not only provides a nonlinear extension to PLS-DA but also has the potential to provide increased discriminant power. We show that the KLPPLS-DA solutions can be obtained by solving a generalized eigenvalue problem. This formulation reveals the link between KLPPLS-DA and several other classical kernel-based feature extraction methods such as kernel Fisher discriminant (KFD) [36], kernel locality preserving projections (KLPP) [37] and kernel local Fisher discriminant analysis (KLFDA) [38].

The remainder of the paper is organized as follows. In Sect. 2, a brief review of the PLS-DA and LPPLS-DA algorithms is provided. In Sect. 3, global and local structure preservation in RKHS is described and the proposed Kernel Locality Preserving PLS-DA algorithm is outlined. Extensive experimental results on two synthetic datasets and six gene expression datasets are presented in Sect. 4. Finally, concluding remarks and suggestions for future work are given in Sect. 5.

2 A Brief Review of PLS-DA and LPPLS-DA

2.1 PLS-DA

PLS-DA is derived from the well-known PLS algorithm for modeling the linear relationship between two sets of observed variables. The PLS algorithm has been widely used as a feature extraction method to deal with the undersampling and multicollinearity issues usually encountered in high-dimensional data. Suppose the data sets are \(X=[x_1,x_2,\ldots ,x_n]^T\in R^{n \times m}\) and \(Y=[y_1,y_2,\ldots ,y_n]^T\in R^{n \times N}\), where the rows correspond to observations and the columns correspond to variables. The main idea behind PLS is to find projection matrices \(W=[w_1,w_2,\ldots ,w_d]\in R^{m \times d}\) and \(V=[v_1,v_2,\ldots ,v_d]\in R^{N \times d}\), for some number of components d. Each pair of corresponding columns (w, v) maximizes the covariance of the projected data. Mathematically, this is represented by the constrained optimization problem of the form

where \(\bar{X}\) and \(\bar{Y}\) are mean-centered data matrices. In matrix form, (1) can be written as

By using the Lagrangian method (see, for example, [18]), we obtain the PLS weight vector w, which is the component usually sought for dimension reduction. It is the solution of an eigenvalue problem of the form,

Alternatively, one can also cast problem (3) as a subspace optimization problem in the projection matrix \(\hat{W}\), that is

where the columns of \(\hat{W}\) are the d eigenvectors of \(\bar{X}^T\bar{Y}\bar{Y}^T\bar{X}\) (with \(d < m\)) associated with the d largest eigenvalues.
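The eigenvector characterization above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' code. The function name `pls_weights` is hypothetical, both blocks are column-centred inside the function, and the random matrices merely stand in for real data. The matrix \(\bar{X}^T\bar{Y}\bar{Y}^T\bar{X}\) is symmetric, so `numpy.linalg.eigh` applies, and its ascending eigenvalues are re-sorted in descending order.

```python
import numpy as np

def pls_weights(X, Y, d):
    """Return the d leading eigenvectors/eigenvalues of Xc^T Yc Yc^T Xc."""
    Xc = X - X.mean(axis=0)            # mean-centred X (X-bar)
    Yc = Y - Y.mean(axis=0)            # mean-centred Y (Y-bar)
    M = Xc.T @ Yc @ Yc.T @ Xc          # symmetric positive semi-definite, shape (m, m)
    evals, evecs = np.linalg.eigh(M)   # eigh returns eigenvalues in ascending order
    order = np.argsort(evals)[::-1][:d]
    return evecs[:, order], evals[order]

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 5))           # n = 30 observations, m = 5 variables
Y = rng.normal(size=(30, 2))           # N = 2 response variables
W_hat, lam = pls_weights(X, Y, d=2)
Z = (X - X.mean(axis=0)) @ W_hat       # scores in the d-dimensional subspace
```

The matrix \(\bar{X}^T\bar{Y}\bar{Y}^T\bar{X}\) has rank at most N, so at most N eigenvalues are non-zero. Asking for more than N directions would return arbitrary vectors from its null space.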

For discrimination and classification purposes, the response matrix \(\bar{Y}\) is replaced by a dummy (class membership) matrix containing class information.

Let

where \(X_c=[x_{1}^{(c)},x_{2}^{(c)},\ldots ,x_{n_c}^{(c)}]\), \(c=1,2,\ldots , C\), with sample \(x_{i}^{(c)}\) being the ith vector belonging to the cth class, and \(n_c\) denotes the number of samples in the cth class, such that \(\sum _{c=1}^{C}n_c=n\). Then, the class membership matrix \(\bar{Y}\) can be defined as

where \(0_{n_c}\) and \(1_{n_c}\) are \(n_c\times 1\) vectors of zeros and ones, respectively. It was shown in [13] that, given \(\bar{Y}\) as in (6),

where \(\mu \) denotes the total sample mean vector and \(\mu ^{(c)}\) denotes the cth class mean vector. The matrix \(S_b\) in (7) is similar to the between-class scatter matrix in LDA, except for an additional factor \(n_{c}\) in each term of the sum.
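The identity in (7) can be checked numerically. The sketch below assumes that \(\bar{Y}\) in (6) is the 0/1 block matrix with one column per class. Because \(\bar{X}^T1_n=0\), centring \(\bar{Y}\) as well would not change the product. The helper name `class_membership_matrix` is illustrative only.

```python
import numpy as np

def class_membership_matrix(labels):
    """Dummy matrix: row i has a 1 in the column of its class, zeros elsewhere."""
    classes = np.unique(labels)
    return (labels[:, None] == classes[None, :]).astype(float), classes

rng = np.random.default_rng(1)
labels = np.repeat([0, 1, 2], [4, 6, 5])        # samples stored class by class, as in (5)
X = rng.normal(size=(labels.size, 3)) + labels[:, None]
Xc = X - X.mean(axis=0)
Y, classes = class_membership_matrix(labels)

lhs = Xc.T @ Y @ Y.T @ Xc                       # PLS-DA matrix with the dummy responses
mu = X.mean(axis=0)
S_b = np.zeros((3, 3))
for c in classes:
    n_c = np.sum(labels == c)
    diff = X[labels == c].mean(axis=0) - mu
    S_b += n_c**2 * np.outer(diff, diff)        # LDA would use n_c instead of n_c**2
```

Column c of \(\bar{X}^T\bar{Y}\) equals \(n_c(\mu^{(c)}-\mu)\), which is where the squared factor comes from.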

2.2 LPPLS-DA

In [13], locality preserving PLS-DA was proposed to combine PLS-DA and existing manifold learning techniques. More specifically, an affinity graph is constructed to model local neighborhood structures. To further enhance the discriminating ability of the LPPLS-DA method, class information is employed while constructing the neighborhood graph.

The objective of LPPLS-DA is to find a lower-dimensional subspace in which the intrinsic geometrical and discriminant structure of data is preserved. LPPLS-DA finds a projection matrix W that transforms the high-dimensional data set X into a lower-dimensional subspace \(Z=XW\) such that the relative local distances among data samples are preserved and class separation is maximized at the same time. The objective function of LPPLS-DA can be formulated as the following multi-objective optimization problem:

where Y is given by (6), \(L=D-S\) is the graph Laplacian, and D is a diagonal matrix whose i-th diagonal element is \(D_{ii}=\sum _{j}S_{ij}\). The weight matrix S is defined as:

where t is a user-specified parameter. Equation (8) leads to the following optimal solution:

It can be shown that \(W_\textrm{opt}\) is the matrix whose columns are the eigenvectors corresponding to the principal eigenvalues of a generalized eigenvalue problem of the form:

Let \(w_1,w_2,\ldots ,w_d\) be the solutions to (11) associated with the d largest eigenvalues. Thus, a data point x can be mapped into a d-dimensional LPPLS-DA subspace by \(x \mapsto z = W^Tx\), where \(W=[w_1,w_2,\ldots ,w_d]\).
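A compact sketch of LPPLS-DA follows. It rests on several assumptions that are ours rather than the source's:

- The weights of (9) are heat-kernel similarities \(\exp(-\Vert x_i-x_j\Vert^2/t)\), restricted to same-class pairs.
- (11) is taken to be \(\bar{X}^T\bar{Y}\bar{Y}^T\bar{X}w=\lambda\bar{X}^TL\bar{X}w\).
- A small ridge `eps` is added to the right-hand matrix so that a Cholesky-based generalized eigensolver applies.

All function names are hypothetical.

```python
import numpy as np

def class_heat_kernel_weights(X, labels, t):
    """S_ij = exp(-||x_i - x_j||^2 / t) for same-class pairs, 0 otherwise (assumed form of (9))."""
    sq = np.sum(X**2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    same_class = labels[:, None] == labels[None, :]
    return np.where(same_class, np.exp(-dist2 / t), 0.0)

def generalized_eigh(A, B):
    """Solve A w = lam B w for symmetric A and symmetric positive definite B (descending lam)."""
    Lc = np.linalg.cholesky(B)                     # B = Lc Lc^T
    Linv = np.linalg.inv(Lc)
    M = Linv @ A @ Linv.T
    evals, V = np.linalg.eigh((M + M.T) / 2.0)    # symmetrize against round-off
    W = Linv.T @ V                                 # map back: w = Lc^{-T} v
    order = np.argsort(evals)[::-1]
    return evals[order], W[:, order]

def lppls_da(X, labels, d, t=1.0, eps=1e-6):
    """Return the d leading LPPLS-DA directions (columns of W) and their eigenvalues."""
    Xc = X - X.mean(axis=0)
    Y = (labels[:, None] == np.unique(labels)[None, :]).astype(float)
    S = class_heat_kernel_weights(X, labels, t)
    L = np.diag(S.sum(axis=1)) - S                 # graph Laplacian L = D - S
    A = Xc.T @ Y @ Y.T @ Xc                        # between-class (PLS-DA) term
    B = Xc.T @ L @ Xc + eps * np.eye(X.shape[1])   # locality term plus a small ridge
    evals, W = generalized_eigh(A, B)
    return W[:, :d], evals[:d]

rng = np.random.default_rng(2)
labels = np.repeat([0, 1, 2], 10)
X = rng.normal(size=(30, 4)) + 2.0 * labels[:, None]
W, lam = lppls_da(X, labels, d=2, t=5.0)
Z = (X - X.mean(axis=0)) @ W    # embedded training data
```

The between-class matrix has rank at most C - 1, so in general only the first C - 1 eigenvalues are non-zero. This bounds the useful embedding dimension.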

3 Kernel Locality Preserving PLS-DA (KLPPLS-DA)

From the description of LPPLS-DA in the previous section, it can be observed that LPPLS-DA inherits the linear framework of PLS-DA. In other words, just like PLS-DA, it represents each extracted feature as a linear combination of the original variables. However, the locality-preserving feature of LPPLS-DA gives it the added ability to represent locally nonlinear structures. This property makes LPPLS-DA suitable for problems involving data that are globally linear but contain patches that reside on local nonlinear manifolds. Hyperspectral image and gene expression data sets generally exhibit a global nonlinear structure, and LPPLS-DA is likely to be of limited use for such data.

To overcome this shortcoming, we propose a nonlinear extension of LPPLS-DA called kernel locality preserving PLS-DA (KLPPLS-DA). It provides a global nonlinear framework together with a local representation of each class, so that local geometric and class structures are preserved. The main idea of KLPPLS-DA follows the same perspective as in [34, 37]: LPPLS-DA is reformulated in an implicit feature space \({\mathcal {H}}\) induced by some nonlinear mapping \(\phi : R^m \rightarrow {\mathcal {H}}\).

Given input data \(x_1, x_2, \ldots , x_n \in R^m\), the mapped data \(\phi (x_1),\ldots ,\phi (x_n) \in {\mathcal {H}}\) are assumed to have a linearly separable structure. The feature space \({\mathcal {H}}\) is the so-called reproducing kernel Hilbert space (RKHS) with the associated inner product \(\langle .,.\rangle _{{\mathcal {H}}}\). The reproducing property of \({\mathcal {H}}\) allows the inner product to be represented by a kernel function \(k(\cdot ,\cdot )\). More specifically, for any two variables \(x,y \in R^m\),

\(\langle \phi (x),\phi (y)\rangle _{{\mathcal {H}}} = k(x,y)\)

holds, where \(k\) is a positive semi-definite kernel function. Some of the popular kernel functions are the linear kernel, the polynomial kernel and the radial basis function kernel.
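The kernel formulas themselves are not reproduced in this text. The sketch below uses common textbook conventions, which are assumptions here:

- linear kernel: \(k(x,y)=x^Ty\)
- polynomial kernel: \(k(x,y)=(x^Ty+c)^d\)
- RBF kernel: \(k(x,y)=\exp(-\Vert x-y\Vert^2/(2\sigma^2))\)

Each function returns the Gram matrix between the rows of two data matrices.

```python
import numpy as np

def linear_kernel(X, Z):
    """k(x, z) = x^T z for every row pair."""
    return X @ Z.T

def polynomial_kernel(X, Z, degree=2, c=1.0):
    """k(x, z) = (x^T z + c)^degree; the offset c is an assumed convention."""
    return (X @ Z.T + c) ** degree

def rbf_kernel(X, Z, sigma=1.0):
    """k(x, z) = exp(-||x - z||^2 / (2 sigma^2)); the 2 sigma^2 scaling is assumed."""
    sq = np.sum(X**2, 1)[:, None] + np.sum(Z**2, 1)[None, :] - 2.0 * X @ Z.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))   # clip tiny negative round-off
```

With `degree=2` and `sigma=2`, these correspond to the settings \(\sigma=d=2\) used later for the gene expression experiments, provided the assumed conventions match.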

3.1 Partial Least Squares Discriminant Analysis in RKHS

The goal here is to construct a lower-dimensional embedding in \({\mathcal {H}}\) using PLS-DA. This can be done by setting up a subspace optimization problem analogous to (4), in which the optimum projection matrix is obtained from the eigenstructure of the between-class scatter matrix. Depending on the nonlinear transformation \(\phi (.)\), the feature space \({\mathcal {H}}\) can be high-dimensional, even infinite-dimensional when the Gaussian kernel function is used. In practice, however, only n observations are available, so we restrict ourselves to finding a lower-dimensional subspace in the span of the points \(\phi (x_1),\ldots ,\phi (x_n)\). We start with a matrix of mapped, mean-centered training data \(\bar{\Phi } = [\bar{\phi }(x_1), \ldots , \bar{\phi }(x_n)]^T\), where \(\bar{\phi }(x_i)=\phi (x_i)-\mu _{\phi }\). Here \(\mu _{\phi }\) is the global centroid, given by \(\mu _{\phi } = \frac{1}{n}\sum _{i=1}^n\phi (x_i)=\frac{1}{n}\Phi ^T 1_n\), with \(1_n\) an n-element vector whose entries all equal one. Equivalently, we can write \(\bar{\Phi }=[\bar{\phi }(x_1), \ldots , \bar{\phi }(x_n)]^T=H\Phi \), where \(H=I_n-\frac{1}{n}1_n1_n^T\) is the usual centering matrix.
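The centring step can be carried out without ever forming \(\phi\) explicitly, since \(\bar{\Phi}\bar{\Phi}^T = H\Phi\Phi^TH = HKH\). The sketch below checks this with the linear kernel. For that kernel \(\phi\) is the identity map, so explicit centring is available for comparison. The name `center_kernel` is illustrative.

```python
import numpy as np

def center_kernel(K):
    """Return HKH with H = I - (1/n) 1 1^T."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n   # centering matrix
    return H @ K @ H

rng = np.random.default_rng(3)
X = rng.normal(size=(8, 3))
K = X @ X.T                  # linear kernel: phi is the identity map
K_bar = center_kernel(K)
Xc = X - X.mean(axis=0)      # explicit centring in feature space, for comparison
```

Because \(H1_n=0\), every row and column of the centred kernel sums to zero.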

Suppose the mean-centered mapped training set from C classes is represented by

where \(\bar{\Phi }_c=[\bar{\phi }(x_{1}^{(c)}), \bar{\phi }(x_{2}^{(c)}),\ldots ,\bar{\phi }(x_{n_c}^{(c)})]\), \(c=1,2,\ldots , C\), such that \(\bar{\phi }(x_{i}^{(c)})\) is the mean-centered, mapped ith training sample belonging to the cth class. Define the mean of the cth class in the feature space \({\mathcal {H}}\) as \(\mu _{\phi }^{(c)} =\frac{1}{n_c} \sum _{j=1}^{n_c}\phi (x_{j}^{(c)})\) and let \(\bar{Y}\) be defined as in (6). In terms of the mean vectors \(\mu _{\phi }\) and \(\mu _{\phi }^{(c)},c =1,2,\ldots ,C\), we have:

Consequently, by writing \(\bar{\Phi }^T\bar{Y}\bar{Y}^T\bar{\Phi }\) as a sum of outer products of the columns of \(\bar{\Phi }^T\bar{Y}\), we get

The term on the right-hand side of (14) is a slightly altered between-class scatter matrix in the feature space \({\mathcal {H}}\). Now, let U denote a projection matrix in the feature space \({\mathcal {H}}\). Then the subspace optimization problem

finds a linear projection U that maximizes between-class separation in \({\mathcal {H}}\). The optimization problem (15) defines the PLS-DA method in the feature space \({\mathcal {H}}\).

3.2 Locality Projections and Within Class Structure Preservation in RKHS

Following [39], the optimal projection vector u that preserves the local within-class structure in the feature space \({\mathcal {H}}\) is obtained from the minimization problem

where \(L^{\Phi }=D^{\Phi }-S^{\Phi }\) is the graph Laplacian in \({\mathcal {H}}\), \(D^{\Phi }\) is a diagonal matrix whose i-th diagonal element is \(D^{\Phi }_{ii}=\sum _{j}S^{\Phi }_{ij}\) and \(S^{\Phi }_{ij}\) is the weight matrix in \({\mathcal {H}}\) defined as:

The kernel function \(k(x_i,x_j)\) measures the similarity between \(\phi (x_i)\) and \(\phi (x_j)\) in the feature space. Thus, (17) provides a local graph for each class, and preserving the graph structure is akin to preserving the within-class structure. Furthermore, \(S^{\Phi }\) can be written in the block-diagonal form given below:

where for each \(c=1,2,\ldots ,C\), \(S_{\Phi }^{(c)} \in R^{n_c \times n_c}\) and \((S_{\Phi }^{(c)})_{rs}=k(x_{r}^{(c)},x_{s}^{(c)})\), \(r,s=1,2,\ldots ,n_c\). The block-diagonal shape of \(S^{\Phi }\) implies the same block-diagonal shape for the graph Laplacian \(L^{\Phi }\):

where for each \(c=1,2,\ldots ,C\), \(L_{\Phi }^{(c)} = D_{\Phi }^{(c)}- S_{\Phi }^{(c)} \in R^{n_c \times n_c}\) and \((D_{\Phi }^{(c)})_{rr} = \sum _{j}(S_{\Phi }^{(c)})_{rj}\), \(r=1,2,\ldots ,n_c\).
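The block-diagonal structure in (18) and (19) can be reproduced directly. The sketch makes three assumptions. The weights in (17) are \(k(x_i,x_j)\) for same-class pairs and zero otherwise. The RBF kernel uses a \(2\sigma^2\) scaling. The samples are ordered by class so that the blocks are contiguous. The helper names are hypothetical.

```python
import numpy as np

def rbf_kernel(X, Z, sigma=1.0):
    # Gaussian (RBF) kernel; the exp(-||x - z||^2 / (2 sigma^2)) scaling is an assumption
    sq = np.sum(X**2, 1)[:, None] + np.sum(Z**2, 1)[None, :] - 2.0 * X @ Z.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def class_kernel_graph(X, labels, sigma=1.0):
    """Within-class kernel graph S^Phi and its Laplacian L^Phi = D^Phi - S^Phi."""
    K = rbf_kernel(X, X, sigma)
    same_class = labels[:, None] == labels[None, :]
    S = np.where(same_class, K, 0.0)        # k(x_i, x_j) for same-class pairs, else 0
    D = np.diag(S.sum(axis=1))              # degree matrix D^Phi
    return S, D - S

rng = np.random.default_rng(8)
labels = np.repeat([0, 1, 2], [3, 2, 4])    # samples sorted by class, as in (13)
X = rng.normal(size=(labels.size, 2))
S, L = class_kernel_graph(X, labels, sigma=1.5)
```

Each diagonal block of `L` equals the Laplacian built from that class alone, and all off-diagonal blocks are zero.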

By combining (13) and (19), we can write \(\bar{\Phi }^TL^{\Phi }\bar{\Phi }\) as a sum of per-class terms:

It is worth noting the special case where \((S_{\Phi }^{(c)})_{rs}=1/n_c\) for all \(r,s=1,2,\ldots ,n_c\). In this case \(L_{\Phi }^{(c)}\) reduces to the \(n_c\times n_c\) matrix \(I-\frac{1}{n_c}(1_{n_c}1_{n_c}^T)\), with I an identity matrix and \(1_{n_c}\) an \(n_c\)-dimensional vector with all entries equal to one. It can further be shown that, for this special case, the right-hand side matrix in (20) reduces to the within-class scatter matrix of Kernel Fisher Discriminant Analysis (KFDA) [36]. This special case highlights the connection between (16) and the within-class distance in KFDA. KFDA assumes constant similarity in \({\mathcal {H}}\) between training samples of the same class, whereas the objective function in (16) admits varying similarity among training samples. As such, samples from the same class are allowed to have a structure, and this structure is preserved by the similarity graph in (17).
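The special case can be verified numerically with the linear kernel, for which \(\bar{\Phi}=\bar{X}\). Setting every within-class weight to \(1/n_c\) gives \(D^{\Phi}=I\). Then \(\bar{X}^TL^{\Phi}\bar{X}\) coincides with the usual within-class scatter matrix \(\sum_c\sum_{i}(x_i^{(c)}-\mu^{(c)})(x_i^{(c)}-\mu^{(c)})^T\). The random data below are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(4)
labels = np.repeat([0, 1, 2], [3, 5, 4])
X = rng.normal(size=(labels.size, 2))
Xc = X - X.mean(axis=0)                            # linear kernel: Phi_bar = X_bar

same_class = labels[:, None] == labels[None, :]
n_c = np.bincount(labels)[labels]                  # class size for every sample
S = np.where(same_class, 1.0 / n_c[:, None], 0.0)  # constant weight 1/n_c inside each class
L = np.diag(S.sum(axis=1)) - S                     # D = I here, so L = blockdiag(I - 11^T / n_c)

lhs = Xc.T @ L @ Xc                                # right-hand side of (20) in this special case

S_w = np.zeros((2, 2))                             # classical within-class scatter matrix
for c in np.unique(labels):
    Dc = X[labels == c] - X[labels == c].mean(axis=0)
    S_w += Dc.T @ Dc
```

The global centring in \(\bar{X}\) cancels inside each block, because \(I-\frac{1}{n_c}1_{n_c}1_{n_c}^T\) removes any per-class constant shift.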

3.3 Kernel LPPLS-DA: Global and Local Structure Preservation in RKHS

The Kernel LPPLS-DA aims to reduce the dimensionality of data while preserving both its global and local class structures in the feature space \({\mathcal {H}}\). The approach is similar to that of LPPLS-DA, which finds a low-dimensional embedding of the training samples that best preserves both global and local structures of the data. Based on the analysis in Sects. 3.1 and 3.2, global and local structures can be preserved simultaneously by solving a multi-objective optimization problem of the form

where \(U=[u_1,\ldots ,u_d]\) collects d projection directions in \({\mathcal {H}}\) and \(d<n\) is the dimension of the low-dimensional embedding. Following the approach in [13], (21) leads to an optimal projection matrix of the form:

To solve for \(U_{opt}\), first we observe that each column of U, \(u_i, i= 1,2,\ldots , d\) is a solution of the generalized eigenvalue problem

and since each \(u_i \in {\mathcal {H}}\), we can write \(u_i =\nu _{i}^{(1)} + \nu _{i}^{(2)}\), where \(\nu _{i}^{(1)} \in {\mathcal {C}}(\bar{\Phi }^T)\) and \(\nu _{i}^{(2)} \in {\mathcal {C}}(\bar{\Phi }^T)^{\perp }={\mathcal {N}}(\bar{\Phi })\) [40]. Here, \({\mathcal {C}}(\bar{\Phi }^T)\) denotes the column space of \(\bar{\Phi }^T\), whereas \({\mathcal {N}}(\bar{\Phi })\) denotes the null space of \(\bar{\Phi }\), which is the orthogonal complement of \({\mathcal {C}}(\bar{\Phi }^T)\). Since \(\bar{\Phi }\nu _{i}^{(2)}=0\), it follows that

and

Thus, only \(u_i \in {\mathcal {C}}(\bar{\Phi }^T)\) needs to be considered. In other words, there exist coefficients \(\alpha _{ij}\) \((j=1,\ldots ,n)\) such that:

where \(\alpha _i=[\alpha _{i1},\ldots ,\alpha _{in}]^T\).
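The argument that only the span of \(\bar{\Phi}^T\) matters can be illustrated with the linear kernel when there are more features than samples, so that \({\mathcal{N}}(\bar{\Phi})\) is non-trivial. The sketch adds a null-space component to \(u=\bar{\Phi}^T\alpha\). The projections \(\bar{\Phi}u\) stay unchanged and equal \(\bar{K}\alpha\), which is why both quadratic forms in (22) can be rewritten in terms of \(\bar{K}\). This is an illustration, not part of the source's derivation.

```python
import numpy as np

rng = np.random.default_rng(5)
n, m = 6, 10                      # more features than samples, so N(Phi_bar) is non-trivial
X = rng.normal(size=(n, m))
Phi_bar = X - X.mean(axis=0)      # linear kernel: the feature map is the identity
K_bar = Phi_bar @ Phi_bar.T

alpha = rng.normal(size=n)
u_span = Phi_bar.T @ alpha        # component in C(Phi_bar^T), as in (24)

# The last right singular vector has a zero singular value, so it lies in N(Phi_bar).
_, _, Vt = np.linalg.svd(Phi_bar)
nu2 = Vt[-1]
u = u_span + nu2

proj_full = Phi_bar @ u           # projections of the training samples onto u
proj_span = Phi_bar @ u_span      # ... onto its span component only
proj_dual = K_bar @ alpha         # the same projections, using the kernel matrix only
```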

Substituting (24) into (22) results in the following optimization problem:

where \(A = [\alpha _1,\ldots ,\alpha _d]\) is an n by d matrix, and \(\bar{K}=\bar{\Phi }\bar{\Phi }^T=H {\Phi }{\Phi }^TH=HKH\). The matrix \(K=\Phi \Phi ^T\) is called the kernel matrix, whereas \(\bar{K}\) is the centered kernel matrix. The subspace optimization problem (25) is equivalent to the corresponding generalized eigenvalue problem:

and the columns of \(A_\textrm{opt}\) are the first d principal generalized eigenvectors of (26). Each column \(\alpha _i\) of \(A_\textrm{opt}\) gives a projection function \(u_i\) in \({\mathcal {H}}\), and the projection of a data sample x onto the direction \(u_i\) is computed as:

A data sample x can be mapped into a d-dimensional subspace by:

The matrix \(\bar{K}L^{\Phi }\bar{K}\) in (26) is positive semi-definite but singular. Part of the reason is that the mapping from the input space to a feature space by a kernel function often produces a feature space whose dimension is much larger than the number of samples. Moreover, since \(H1_n=0\), the centered kernel matrix satisfies \(\bar{K}1_n=0\), so \(1_n\) always lies in the null space of \(\bar{K}L^{\Phi }\bar{K}\). This singularity can make (25) ill-posed because the denominator might become zero. To this end, we add a multiple of the identity matrix to \(\bar{K}L^{\Phi }\bar{K}\), i.e.,

For \(\mu > 0\), the strategy in (29) makes the denominator of (25) positive and leads to a more numerically stable generalized eigenvalue problem. Alternatively, the strategy can also be viewed as imposing a regularization on \(\Vert \alpha _i \Vert ^2\) that gives preference to solutions with small expansion coefficients. The algorithmic procedure of KLPPLS-DA is formally summarized in Algorithm 1.
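A minimal sketch of the KLPPLS-DA training and projection steps, putting the pieces together, follows. It is our reading of (26) to (29), not the authors' Algorithm 1. It assumes:

- an RBF kernel;
- within-class graph weights equal to the kernel values, as in (17);
- a Cholesky-based solver for the regularized generalized eigenvalue problem;
- centring of test kernel vectors with the training statistics, \(\bar{k}_x = H(k_x - K1_n/n)\).

The class name `KLPPLSDA` and its parameters are hypothetical.

```python
import numpy as np

def rbf_kernel(X, Z, sigma):
    """Gaussian kernel exp(-||x - z||^2 / (2 sigma^2)); the scaling convention is assumed."""
    sq = np.sum(X**2, 1)[:, None] + np.sum(Z**2, 1)[None, :] - 2.0 * X @ Z.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

class KLPPLSDA:
    """Hypothetical minimal KLPPLS-DA: fit solves the regularized problem, transform projects."""

    def __init__(self, d=2, sigma=1.0, mu=1e-3):
        self.d, self.sigma, self.mu = d, sigma, mu

    def fit(self, X, labels):
        n = X.shape[0]
        self.X_train = X
        self.K = rbf_kernel(X, X, self.sigma)                          # kernel matrix K
        self.H = np.eye(n) - np.ones((n, n)) / n                       # centring matrix H
        self.K_bar = self.H @ self.K @ self.H                          # centred kernel HKH
        Y = (labels[:, None] == np.unique(labels)[None, :]).astype(float)
        S = np.where(labels[:, None] == labels[None, :], self.K, 0.0)  # within-class graph
        L = np.diag(S.sum(axis=1)) - S                                 # Laplacian L^Phi
        A = self.K_bar @ Y @ Y.T @ self.K_bar                          # between-class term
        B = self.K_bar @ L @ self.K_bar + self.mu * np.eye(n)          # regularized, as in (29)
        Lc = np.linalg.cholesky(B)                                     # B = Lc Lc^T
        Linv = np.linalg.inv(Lc)
        M = Linv @ A @ Linv.T
        evals, V = np.linalg.eigh((M + M.T) / 2.0)                     # symmetrize round-off
        order = np.argsort(evals)[::-1][: self.d]
        self.alpha = Linv.T @ V[:, order]                              # A_opt = [alpha_1..alpha_d]
        self.eigenvalues = evals[order]
        return self

    def transform(self, X_new):
        Kx = rbf_kernel(self.X_train, X_new, self.sigma)               # k(x_j, x), shape (n, n_new)
        Kx_bar = self.H @ (Kx - self.K.mean(axis=1, keepdims=True))    # centre with training stats
        return Kx_bar.T @ self.alpha                                   # row i: projections of x_i

rng = np.random.default_rng(6)
labels = np.repeat([0, 1, 2], 15)
X = rng.normal(size=(45, 5)) + 3.0 * labels[:, None]
model = KLPPLSDA(d=2, sigma=2.0, mu=1e-3).fit(X, labels)
Z_train = model.transform(X)
```

For training points, the centred kernel vector reduces to the corresponding column of \(\bar{K}\), so `transform(X)` reproduces \(\bar{K}A\). This gives a convenient sanity check.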

4 Experimentation and Results

Our experiments compare the performance of KLPPLS-DA with the other PLS-DA-based methods, namely, the conventional PLS-DA, LPPLS-DA, and kernel PLS-DA, in the supervised learning of low-dimensional subspaces. The objective is to highlight the dimension reduction properties of each method and how these properties affect the classification of real-world datasets in the low-dimensional embedding. For convenience, the dimension reduction mechanisms of each method are summarized in Table .

4.1 Experiment 1: Dimensionality Reduction for Data Visualization

For the experiments in this section, two synthetic datasets are used to compare visually the dimension reduction properties of the PLS-DA family of methods. The synthetic datasets are the Swiss roll and Helix datasets, which are benchmark datasets for nonlinear dimensionality reduction [41,42,43]. Data points for the Swiss roll were generated using the coordinates \(\{x = t \cos t, y = 0.5t \sin t, z\}\), where t is varied from 0 to \(5.5\pi \) in steps of \(5.5\pi /n\) (n is the number of data points), and z consists of random numbers in the interval [0, 30]. For the Helix dataset, data points are generated using the coordinates \(\{x = (2 + \cos 8t)\cos t, y = (2 + \cos 8t)\sin t, z = \sin 8t\}\), where t is varied from \(-\pi \) to \(\pi \) in steps of \(2\pi /n\). Preliminary experiments show that for the kernel methods (KPLS-DA and KLPPLS-DA), the radial basis function (RBF) kernel is the most suitable for both the Swiss roll and Helix data; it is given by

where the parameter \(\sigma \) is set to 10.
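The two synthetic datasets can be generated as below, following the coordinates given above. Three choices are assumptions made for illustration: the uniform distribution for z, the fixed seed, and the labelling by three contiguous segments of t. The source does not specify the random distribution or the exact class assignment shown in its figures.

```python
import numpy as np

def swiss_roll(n, rng):
    """Swiss roll: (t cos t, 0.5 t sin t, z), t from 0 in steps of 5.5*pi/n."""
    t = np.arange(n) * (5.5 * np.pi / n)
    z = rng.uniform(0.0, 30.0, size=n)             # uniform heights in [0, 30) (assumption)
    return np.column_stack([t * np.cos(t), 0.5 * t * np.sin(t), z]), t

def helix(n):
    """Helix: ((2 + cos 8t) cos t, (2 + cos 8t) sin t, sin 8t), t from -pi in steps of 2*pi/n."""
    t = -np.pi + np.arange(n) * (2 * np.pi / n)
    r = 2 + np.cos(8 * t)
    return np.column_stack([r * np.cos(t), r * np.sin(t), np.sin(8 * t)]), t

rng = np.random.default_rng(7)
X_roll, t_roll = swiss_roll(1500, rng)
X_helix, t_helix = helix(1500)
# Hypothetical labelling: three contiguous segments of t, one class each.
labels = np.repeat([0, 1, 2], 500)
```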

4.1.1 Local Class Structure

To simulate datasets with local class structures, 1500 data points are generated for each of the Swiss roll and Helix structures. The data points are divided into three different classes as shown in Fig. . Data from each class are distributed in the same locality. The dimension reduction of the datasets by each PLS-DA-based method is shown in Fig. for the Swiss roll dataset and Fig. for the Helix dataset.

From Figs. 2 and 3, it is observed that neither PLS-DA nor LPPLS-DA can unfold the global nonlinear structure of the Swiss roll and Helix datasets. This is expected due to the linear nature of the projections. The class structures under the LPPLS-DA projection are less spread out (particularly for the Swiss roll data) owing to the minimization of the within-class distance. The Swiss roll dataset was successfully unfolded into linear structures by both the KPLS-DA and KLPPLS-DA projections, which justifies kernelizing the linear methods. Class separation by the KLPPLS-DA projection is much more refined than by the KPLS-DA projection. Here, the minimization of the locality-preserving within-class distance in KLPPLS-DA provides a clear advantage over KPLS-DA. The KPLS-DA projection unfolds the nonlinear structure while preserving the global class structure, but unlike KLPPLS-DA, it does not provide enough separation between the classes. For the Helix dataset in Fig. 3, the KLPPLS-DA projection again unfolds the nonlinear structure into three well-separated linear class structures. The KPLS-DA projection, however, does not fully unfold the nonlinear structure, although it still maintains the global class structure.

4.1.2 Non-local Class Structure

To simulate datasets with non-local class structures, 1500 data points are generated for Swiss roll and Helix structures, and the points are again divided into three different classes. This time, data points for Class 1 are separated into two groups at two different localities as shown in Fig. . The dimension reduction in the datasets by each PLS-DA-based method is shown in Fig. for the Swiss roll dataset and Fig. for the Helix dataset.

Figures 5 and 6 further highlight the limitations of PLS-DA and LPPLS-DA in separating data points of different classes that are embedded within a nonlinear structure. Both methods preserve the original global (and local) distribution of the data points and are unable to bring the two groups of Class 1 into the same locality. In LPPLS-DA, minimizing the locality-preserving within-class distance only reduces the within-class distance within the locality of each group. Fig. 6 shows that the LPPLS-DA projection partially unfolds the nonlinear Helix structure in an attempt to bring the two groups of Class 1 together, but with limited success. On the other hand, the KPLS-DA and KLPPLS-DA projections unfold the Swiss roll and Helix datasets, and this unfolding proves very useful in merging the two groups of Class 1 data. The global class structures from the KPLS-DA and KLPPLS-DA projections are quite similar; however, the KLPPLS-DA projection tends to provide more compact within-class structures.

4.2 Experiment 2: Dimensionality Reduction for the Classification of Real-World Datasets

4.2.1 Benchmark Procedure

To evaluate the performance of the PLS-DA family of methods in dimensionality reduction for the classification of real-world datasets, a benchmark procedure is used. Given a real-world dataset, the procedure involves five main components:

1. Split the dataset into two parts: training and test data;
2. Compute the projection matrix for the low-dimensional embedding using the training data;
3. Use the projection matrix to compute the projections of the training and test data in the low-dimensional embedding according to (28);
4. Use the projected training data to train suitable classifiers;
5. Classify the projected test data using the trained classifiers.
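The five-step procedure above can be sketched as follows. For concreteness, the sketch uses linear PLS-DA (4) as the projection and a plain k-NN classifier with k = 2, both written from scratch in NumPy. Any of the compared methods could replace `plsda_projection`. The function names are hypothetical. Ties between the two neighbours are broken in favour of the smaller label, a choice the source does not specify.

```python
import numpy as np

def knn_predict(Z_train, y_train, Z_test, k=2):
    """Plain k-NN: majority vote among the k nearest training points (Euclidean)."""
    d2 = ((Z_test[:, None, :] - Z_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    votes = y_train[nearest]
    # np.bincount(...).argmax() breaks ties (possible with k = 2) towards the smaller label
    return np.array([np.bincount(v).argmax() for v in votes])

def plsda_projection(X_train, y_train, d):
    """Step 2: learn a d-dimensional PLS-DA projection from the training data only."""
    mean = X_train.mean(axis=0)
    Xc = X_train - mean
    Y = (y_train[:, None] == np.unique(y_train)[None, :]).astype(float)
    evals, evecs = np.linalg.eigh(Xc.T @ Y @ Y.T @ Xc)
    W = evecs[:, np.argsort(evals)[::-1][:d]]
    return lambda X: (X - mean) @ W                  # Step 3 reuses the training mean and W

def benchmark(X, y, d, rng, train_frac=2 / 3, k=2):
    idx = rng.permutation(len(y))                    # Step 1: random split
    n_train = int(round(train_frac * len(y)))
    tr, te = idx[:n_train], idx[n_train:]
    project = plsda_projection(X[tr], y[tr], d)      # Step 2
    Z_tr, Z_te = project(X[tr]), project(X[te])      # Step 3
    y_pred = knn_predict(Z_tr, y[tr], Z_te, k=k)     # Steps 4 and 5 (k-NN just stores Z_tr)
    return float(np.mean(y_pred == y[te]))

rng = np.random.default_rng(9)
y = np.repeat([0, 1, 2], 20)
X = rng.normal(size=(60, 5)) + 10.0 * y[:, None]     # well-separated toy classes
acc = benchmark(X, y, d=2, rng=rng)
```

The projection is learned from the training split only, and the training mean is reused for the test data. This keeps test information out of Step 2.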

For classification in Steps 4 and 5, typical classifiers used in the literature [44,45,46] include, among others, the Support Vector Machine (SVM), the k-NN classifier, the naive Bayes classifier (NBC) and Random Forest (RF). In the experiments in this section, both the SVM and the k-NN classifier (with \(k = 2\)) are used for comparison.

For the kernel methods (kernel PLS-DA and KLPPLS-DA), two kinds of kernel functions are used, which are the radial basis function (RBF) kernel (30) and the polynomial kernel

A series of preliminary experiments show that setting \(\sigma =d=2\) produces overall good results. Thus, these values are used in all of the forthcoming experiments.

4.2.2 Datasets

The experiments in this section are conducted on six publicly available datasets. The important statistics of the datasets are summarized below as well as in Table .

1. The Brain Tumor dataset [47] contains gene expression profiles of 42 patient samples: 10 medulloblastomas, 10 non-embryonal brain tumors (malignant gliomas), 10 central nervous system atypical teratoid/rhabdoid tumors (CNS AT/RT) and renal and extrarenal rhabdoid tumors, 8 supratentorial primitive neuroectodermal tumors (PNETs) and 4 normal human cerebella. Each sample contains 5597 gene expression values.
2. The Colon cancer dataset contains expression levels of 6500 genes for 40 tumors and 22 normal colon tissues, measured using Affymetrix technology. In [48], the 2000 genes with the highest minimal intensity across the samples were selected, and we used this selection in our experiments.
3. The Leukemia dataset [49] contains gene expression levels of 72 patients diagnosed with acute lymphoblastic leukemia (ALL, 47 cases) or acute myeloid leukemia (AML, 25 cases). The number of gene expression levels is 7129. Following the procedure in [50], the dataset was further processed by logarithmic transformation, thresholding, filtering and standardization, so that the final data contain expression values of 3571 genes.
4. The Lymphoma dataset [51] consists of gene expression levels for 62 lymphomas: 42 samples of diffuse large B-cell lymphoma (DLBCL), 11 samples of chronic lymphocytic leukemia (CLL) and 9 samples of follicular lymphoma (FL). The whole dataset contains expression values of 4026 genes.
5. The Prostate dataset [52] contains 102 tissue samples: 52 tumor tissues and 50 normal tissues. The number of gene expression levels is 6033.
6. The SRBCT dataset [53] contains 63 samples, which include both tumor biopsy material [13 Ewing family of tumors (EWS) and 10 rhabdomyosarcoma (RMS)] and cell lines [10 EWS, 10 RMS, 12 neuroblastoma (NB) and 8 Burkitt lymphomas (BL)]. Each sample in this dataset contains 2308 gene expression values.

All data samples were normalized to have a unit norm so that the undesirable effects caused by genes with larger expression values are eliminated.
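A minimal sketch of the per-sample normalization follows. It assumes that "unit norm" means the Euclidean (L2) norm of each sample vector, since the source does not name the norm. A small `eps` guards against division by zero. The function name is hypothetical.

```python
import numpy as np

def normalize_samples(X, eps=1e-12):
    """Scale each sample (row) to unit Euclidean norm."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    return X / np.maximum(norms, eps)        # eps guards against all-zero rows

X = np.array([[3.0, 4.0], [1.0, 0.0], [0.5, 0.5]])
X_unit = normalize_samples(X)                # first row becomes [0.6, 0.8]
```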

4.2.3 Data Visualization

The structure of microarray data may be too complex for linear methods to capture in a very low-dimensional subspace [4]. To briefly compare how well LPPLS-DA and KLPPLS-DA distinguish the different classes in tumor datasets based on gene expression data, we project the gene expression data onto two viewable dimensions. The two-dimensional representations obtained by both methods on the different datasets are shown in Fig. for two-class datasets and Fig. for multi-class datasets. For two-class datasets, both methods produce comparable results, with both clusters in each dataset separated quite well. For multi-class datasets, KLPPLS-DA performs much better than LPPLS-DA on the SRBCT dataset. The two-dimensional embedding obtained from KLPPLS-DA preserves more structural information than that of LPPLS-DA, which gives a much better separation of the four clusters in the SRBCT dataset. The Brain dataset appears to be rather challenging for both LPPLS-DA and KLPPLS-DA. Good separation is observed for samples of medulloblastoma, malignant glioma, and rhabdoid tumors. However, the two-dimensional embeddings of both methods clearly fail to capture the information needed to separate the normal cerebella from the supratentorial primitive neuroectodermal tumors (PNETs). These results also imply that the structure of the data is sometimes too complex to be captured in a two-dimensional subspace.

4.2.4 Data Classification: Average Classification Accuracies

Here, we compare KLPPLS-DA with the other PLS-DA-based methods over increasing embedding dimensions. These methods are conventional PLS-DA, LPPLS-DA, and kernel PLS-DA (KPLS-DA). Each method computes optimal low-dimensional embeddings of the gene expression data for a range of reduced dimensions, and classification accuracies are then calculated for each method at every reduced dimension. These experiments assess how well each method preserves class information in a low-dimensional embedding. Following the benchmark procedure in Sect. 4.2.1, each dataset is randomly partitioned into a training set containing two-thirds of the samples and a test set containing the remaining one-third. To reduce variability, the random splitting is repeated 10 times and the average classification accuracies are reported.
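The evaluation protocol above can be sketched as follows. This is a minimal NumPy illustration, not the authors' code, and it rests on several assumptions:

- `embed` is a hypothetical callable standing in for any of the compared PLS-DA-based methods.
- A plain PCA projection is plugged in only so that the example runs.
- The splits are not stratified.
- A 1-NN classifier with Euclidean distance is used.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=1):
    # Euclidean k-NN with majority vote; labels must be non-negative integers.
    d2 = np.sum((X_test[:, None, :] - X_train[None, :, :]) ** 2, axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    return np.array([np.bincount(y_train[row]).argmax() for row in nearest])

def average_accuracy(X, y, embed, n_dims, n_splits=10, train_frac=2/3, k=1, seed=0):
    """Mean and standard deviation of test accuracy over repeated random splits."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    n_train = int(round(train_frac * n))
    accs = []
    for _ in range(n_splits):
        perm = rng.permutation(n)
        tr, te = perm[:n_train], perm[n_train:]
        # The embedding is learned from the training part only.
        project = embed(X[tr], y[tr], n_dims)
        pred = knn_predict(project(X[tr]), y[tr], project(X[te]), k=k)
        accs.append(np.mean(pred == y[te]))
    return float(np.mean(accs)), float(np.std(accs))

def pca_embed(X_train, y_train, n_dims):
    # Hypothetical stand-in embedding (unsupervised PCA); ignores y_train.
    mu = X_train.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_train - mu, full_matrices=False)
    W = Vt[:n_dims].T
    return lambda Z: (Z - mu) @ W

# Toy data: two well-separated classes (synthetic, not the tumor data).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.5, (30, 5)), rng.normal(10.0, 0.5, (30, 5))])
y = np.repeat([0, 1], 30)
for d in (1, 2, 3):
    print(d, average_accuracy(X, y, pca_embed, n_dims=d))
```

Fitting the embedding inside the loop, on the training indices only, keeps test samples from influencing the projection.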

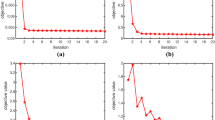

Figure shows the average classification accuracies obtained with the k-NN classifier as the number of dimensions increases. Overall, KLPPLS-DA performs best on all the datasets used in the experiment. For the Colon, Leukemia, and Prostate datasets, KLPPLS-DA achieves the highest accuracy with only a one-dimensional optimal embedding subspace. For the Brain, Lymphoma, and SRBCT datasets, it obtains the highest classification accuracies using only four-, two-, and three-dimensional optimal embedding subspaces, respectively. In Fig. , the average classification accuracies obtained using the SVM classifier are presented. The trends are similar to those in Fig. 9, and KLPPLS-DA again provides the highest accuracy with the lowest dimension. This suggests that the superior performance of KLPPLS-DA does not depend on the choice of classifier, at least for the k-NN and SVM classifiers considered here.

One interesting observation from Figs. 9 and 10 is that KPLS-DA (i.e., kernelized PLS-DA without the locality-preserving feature) performs rather poorly in most of the experiments. On the Brain and Prostate datasets, it performs worse than LPPLS-DA and no better than conventional PLS-DA. We see this as an indication that minimizing the locality-preserving within-class distance is needed to enhance dimensionality reduction of gene expression data.
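The quantity being minimized can be made concrete with a locality-preserving within-class distance of the kind used in supervised variants of locality preserving projections [37]. In the sketch below, heat-kernel weights W_ij are placed only between same-class k-nearest neighbours. The criterion is 0.5 * sum_ij W_ij ||y_i - y_j||^2, which equals trace(Y^T L Y) with graph Laplacian L = D - W. The neighbourhood size k, the heat parameter t, and the symmetrization rule are assumptions, and the graph used in KLPPLS-DA itself may differ.

```python
import numpy as np

def within_class_heat_weights(X, labels, k=3, t=1.0):
    """Heat-kernel weights between same-class k-nearest neighbours (symmetrized)."""
    labels = np.asarray(labels)
    n = X.shape[0]
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
    W = np.zeros((n, n))
    for i in range(n):
        # Candidate neighbours: other samples with the same label.
        same = np.flatnonzero((labels == labels[i]) & (np.arange(n) != i))
        nbrs = same[np.argsort(d2[i, same])[:k]]
        W[i, nbrs] = np.exp(-d2[i, nbrs] / t)
    # i and j are linked if either is among the other's k nearest neighbours.
    return np.maximum(W, W.T)

def locality_within_class_distance(Y, W):
    """0.5 * sum_ij W_ij ||y_i - y_j||^2, computed as trace(Y^T L Y), L = D - W."""
    L = np.diag(W.sum(axis=1)) - W
    return float(np.trace(Y.T @ L @ Y))

# Toy usage: evaluate a random 2-D projection of labelled synthetic data.
rng = np.random.default_rng(0)
X = rng.normal(size=(12, 4))
labels = np.repeat([0, 1, 2], 4)
W = within_class_heat_weights(X, labels, k=2, t=4.0)
Y = X @ rng.normal(size=(4, 2))
print(locality_within_class_distance(Y, W))
```

A smaller value means that same-class neighbours lie closer together in the embedding, i.e., the local class structures are more compact.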

It is worth noting that the performance of KLPPLS-DA is comparable to that of several recently published feature selection methods. For example, [54] reports best classification accuracies of \(84\%\) and \(84.6\%\) for the Colon and SRBCT datasets, respectively, with a k-NN classifier, and \(83.8\%\) and \(93.6\%\), respectively, with an SVM classifier. By comparison, with the k-NN classifier, KLPPLS-DA achieves best classification accuracies of \(>85\%\) on the Colon dataset (see Table ) and \(100\%\) on the SRBCT dataset under the two-thirds/one-third train/test split (see Table ). In another study [55], the best classification accuracies on the Lymphoma dataset are \(100\%\) with both k-NN and SVM. KLPPLS-DA also reaches a best classification accuracy of \(100\%\) with both classifiers (see Table ). However, KLPPLS-DA requires only a two-dimensional optimal embedding subspace to achieve \(100\%\) accuracy, whereas [55] uses an eight-dimensional subspace.

4.2.5 Data Classification: Effects of Different Data Partitioning

In this section, we investigate how sensitive the PLS-DA-based methods are to the data partition, i.e., to the training sample size. Classification accuracy can reasonably be expected to increase with the training sample size, because more information is available to learn the relevant features of the dataset. All six datasets are randomly partitioned into training sets containing one-third, one-half, and two-thirds of the samples. The remaining two-thirds, one-half, and one-third, respectively, form the test sets. As in the previous experiments, the results are averaged over 10 random splits. The best average classification accuracies, their standard deviations, and the corresponding dimensions (in brackets) for the different splits are reported in Tables , 4, , 6, and 8. The original number of features in all six datasets is in the thousands, and 20 components are extracted for each method.
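Each table entry described above (best average accuracy, standard deviation, and the dimension in brackets) can be derived from per-split, per-dimension accuracies, as in the minimal sketch below. Several details are assumptions:

- the array layout;
- the use of the population standard deviation;
- breaking ties in favour of the smallest dimension.

The accuracy values in the usage example are made up for illustration and are not results from the paper.

```python
import numpy as np

def best_over_dimensions(acc):
    """acc[s, d]: test accuracy on random split s using d + 1 extracted components.

    Returns (best mean accuracy, its standard deviation over splits, 1-based dimension).
    """
    acc = np.asarray(acc, dtype=float)
    mean = acc.mean(axis=0)
    d = int(np.argmax(mean))  # argmax returns the first maximum, i.e. the fewest components
    return float(mean[d]), float(acc[:, d].std()), d + 1

def table_cell(acc):
    # Format as "mean ± std (dimension)" in percent.
    m, s, d = best_over_dimensions(acc)
    return f"{100 * m:.2f} ± {100 * s:.2f} ({d})"

# Illustrative accuracies: 2 splits x 3 dimensions (not data from the paper).
acc = [[0.80, 0.90, 0.90],
       [0.70, 0.95, 0.85]]
print(table_cell(acc))
```

In the setting described here, `acc` would have 10 rows (random splits) and 20 columns (extracted components). It would be computed once per training fraction (one-third, one-half, two-thirds) and per method.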

The experimental results in Tables 3, 4, 5, 6, 7 and 8 show that, in general, all the methods achieve higher classification accuracy as the training sample size increases. On all six datasets and for every data partition, KLPPLS-DA (with both RBF and polynomial kernels) achieves consistently higher classification accuracies than the other methods, including LPPLS-DA and KPLS-DA. This indicates that KLPPLS-DA improves on LPPLS-DA in capturing the nonlinear features of gene expression datasets. It also improves on KPLS-DA because it yields more compact local structures in the RKHS.

5 Conclusions

In this paper, we proposed a novel algorithm for nonlinear feature extraction, called Kernel Locality Preserving PLS-DA (KLPPLS-DA). It combines nonlinear subspace learning with enhanced class discrimination via local structure preservation. KLPPLS-DA can therefore resolve nonlinear structure while at the same time discovering the discriminant structure in the low-dimensional RKHS embedding. Experiments on synthetic datasets with nonlinear structures provided a visual understanding of the dimension reduction properties of KLPPLS-DA in comparison with the other PLS-DA-based methods.

The capabilities of KLPPLS-DA were also demonstrated on multi-class tumor classification from gene expression data, where KLPPLS-DA consistently outperformed linear PLS-DA-based methods. KLPPLS-DA also showed a marked improvement over KPLS-DA. This indicates the importance of minimizing the locality-preserving within-class distance in the RKHS for dimensionality reduction of gene expression data. LPPLS-DA and KLPPLS-DA perform comparably in two-dimensional embeddings on most datasets, but in higher dimensions KLPPLS-DA clearly outperforms LPPLS-DA. This points to nonlinear features in the gene expression datasets that LPPLS-DA cannot effectively resolve. KLPPLS-DA also handles the complexity of the gene expression datasets with the fewest dimensions and with small numbers of training samples. In general, its performance appears to be independent of the choice of classifier.

One main disadvantage of KLPPLS-DA is the need to identify optimal values of \(\sigma \) (for the RBF kernel) and d (for the polynomial kernel) for each dataset. In the experiments in Sect. 4, these parameters were determined empirically. For many of the datasets, KLPPLS-DA performs comparably with the RBF and polynomial kernels. However, KLPPLS-DA can perform poorly when \(\sigma \) or d is set to an unsuitable value. Choosing suitable values of \(\sigma \) and d is therefore critical to the success of the method.
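One way to make the choice of σ and d less ad hoc is to score each candidate value with a data-driven criterion. The sketch below is an illustration only, not the procedure used in the paper, where the parameters were set empirically. It defines the two kernels under assumed conventions:

- RBF: k(a, b) = exp(-||a - b||^2 / (2σ^2));
- polynomial: k(a, b) = (a^T b + c)^d, with an assumed offset c = 1.

It then ranks candidate values by centred kernel-target alignment, a heuristic swapped in here for illustration. Cross-validated classification accuracy of the full pipeline is a more direct alternative.

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    # Assumed convention: k(a, b) = exp(-||a - b||^2 / (2 * sigma^2)).
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def poly_kernel(A, B, d, c=1.0):
    # Assumed convention: k(a, b) = (a.b + c)^d with offset c.
    return (A @ B.T + c) ** d

def centered_alignment(K, labels):
    """Centred kernel-target alignment between K and the ideal same-class kernel."""
    labels = np.asarray(labels)
    n = len(labels)
    C = np.eye(n) - np.ones((n, n)) / n
    target = (labels[:, None] == labels[None, :]).astype(float)
    Kc, Tc = C @ K @ C, C @ target @ C
    # np.linalg.norm of a 2-D array defaults to the Frobenius norm.
    return float(np.sum(Kc * Tc) / (np.linalg.norm(Kc) * np.linalg.norm(Tc)))

def select_parameter(X, labels, kernel, grid):
    """Return the grid value with the highest alignment (first one on ties) and all scores."""
    scores = [centered_alignment(kernel(X, X, p), labels) for p in grid]
    return grid[int(np.argmax(scores))], scores

# Toy usage on two synthetic classes (not the tumor data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, (10, 3)), rng.normal(2.0, 0.3, (10, 3))])
labels = np.repeat([0, 1], 10)
best_sigma, sigma_scores = select_parameter(X, labels, rbf_kernel, [1e-3, 0.5, 1.0, 5.0])
best_d, d_scores = select_parameter(X, labels, poly_kernel, [1, 2, 3])
print(best_sigma, best_d)
```

A very small σ drives the RBF kernel matrix towards the identity, so the kernel carries no class information and its alignment score drops.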

References

Souza, F.A., Araújo, R., Mendes, J.: Review of soft sensor methods for regression applications. Chemom. Intel. Lab. Syst. 152, 69–79 (2016)

Yeung, K.Y., Ruzzo, W.L.: Principal component analysis for clustering gene expression data. Bioinformatics 17(9), 763–774 (2001)

Ma, S., Kosorok, M.R.: Identification of differential gene pathways with principal component analysis. Bioinformatics 25(7), 882–889 (2009)

Bartenhagen, C., Klein, H.-U., Ruckert, C., Jiang, X., Dugas, M.: Comparative study of unsupervised dimension reduction techniques for the visualization of microarray gene expression data. BMC Bioinf. 11(1), 567 (2010)

Ma, S., Dai, Y.: Principal component analysis based methods in bioinformatics studies. Brief. Bioinf. 12(6), 714–722 (2011)

Fordellone, M., Bellincontro, A., Mencarelli, F.: Partial least squares discriminant analysis: a dimensionality reduction method to classify hyperspectral data. arXiv preprint arXiv:1806.09347 (2018). https://doi.org/10.48550/arXiv.1806.09347

Nguyen, D.V., Rocke, D.M.: Tumor classification by partial least squares using microarray gene expression data. Bioinformatics 18(1), 39–50 (2002)

Tan, Y., Shi, L., Tong, W., Hwang, G.G., Wang, C.: Multi-class tumor classification by discriminant partial least squares using microarray gene expression data and assessment of classification models. Comput. Biol. Chem. 28(3), 235–243 (2004)

Nguyen, D.V., Rocke, D.M.: Multi-class cancer classification via partial least squares with gene expression profiles. Bioinformatics 18(9), 1216–1226 (2002)

Pérez-Enciso, M., Tenenhaus, M.: Prediction of clinical outcome with microarray data: a partial least squares discriminant analysis (PLS-DA) approach. Hum. Genet. 112(5–6), 581–592 (2003)

Boulesteix, A.-L., Strimmer, K.: Partial least squares: a versatile tool for the analysis of high-dimensional genomic data. Brief. Bioinf. 8(1), 32–44 (2007)

Barker, M., Rayens, W.: Partial least squares for discrimination. J. Chemom. 17(3), 166–173 (2003)

Aminu, M., Ahmad, N.A.: Locality preserving partial least squares discriminant analysis for face recognition. J. King Saud Univ. Comput. Inf. Sci. 34(2), 153–164 (2019)

Aminu, M., Ahmad, N.A.: Complex chemical data classification and discrimination using locality preserving partial least squares discriminant analysis. ACS Omega 5(41), 26601–26610 (2020)

Bevilacqua, M., Marini, F.: Local classification: locally weighted-partial least squares discriminant analysis (LW-PLS-DA). Anal. Chim. Acta 838, 20–30 (2014)

Centner, V., Massart, D.L.: Optimisation in locally weighted regression. Anal. Chem. 70, 4206–4211 (1998)

Postma, G.J., Krooshof, P.W.T., Buydens, L.M.C.: Opening the kernel of kernel partial least squares and support vector machines. Anal. Chim. Acta 705(1–2), 123–134 (2011)

Aminu, M., Ahmad, N.A.: New variants of global-local partial least squares discriminant analysis for appearance-based face recognition. IEEE Access 8, 166703–166720 (2020). https://doi.org/10.1109/ACCESS.2020.3022784

Belkin, M., Niyogi, P.: Laplacian eigenmaps and spectral techniques for embedding and clustering. In: Advances in Neural Information Processing Systems, pp. 585–591 (2002)

Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 15(6), 1373–1396 (2003)

Roweis, S.T., Saul, L.K.: Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500), 2323–2326 (2000)

He, X., Cai, D., Yan, S., Zhang, H.-J.: Neighborhood preserving embedding. In: Tenth IEEE International Conference on Computer Vision (ICCV’05), vol. 2, pp. 1208–1213 (2005). IEEE

Lee, H.: Combining locality preserving projection with global information for efficient recognition. Int. J. Fuzzy Log. Intell. Syst. 18(2), 120–125 (2018)

Song, B., Shi, H.: Temporal-spatial global locality projections for multimode process monitoring. IEEE Access 6(January), 9740–9749 (2018)

Yao, C., Han, J., Nie, F., Xiao, F., Li, X.: Local regression and global information-embedded dimension reduction. IEEE Trans. Neural Netw. Learn. Syst. 29(10), 4882–4893 (2018)

Wan, Y., Chen, X., Zhang, J.: Global and intrinsic geometric structure embedding for unsupervised feature selection. Expert Syst. Appl. 93(March), 134–142 (2018)

Abeo, T.A., Shen, X.-J., Ganaa, E.D., Zhu, Q., Bao, B.-K., Zha, Z.-J.: Manifold alignment via global and local structures preserving PCA framework. IEEE Access 7(March), 38123–38134 (2019)

Zhao, H., Lai, Z., Chen, Y.: Global-and-local-structure-based neural network for fault detection. Neural Netw. 118(October), 43–53 (2019)

Zhao, X., Jia, M.: Fault diagnosis of rolling bearing based on feature reduction with global-local margin Fisher analysis. Neurocomputing 315(November), 447–464 (2019)

Cai, W.: A dimension reduction algorithm preserving both global and local clustering structure. Knowledge-Based Syst. 118(February), 191–203 (2016)

Song, W., Wang, H., Maguire, P., Nibouche, O.: Nearest clusters based partial least squares discriminant analysis for the classification of spectral data. Anal. Chim. Acta 1009, 27–38 (2018)

Štruc, V., Pavešić, N.: Gabor-based kernel partial-least-squares discrimination features for face recognition. Informatica 20(1), 115–138 (2009)

Srinivasan, B.V., Luo, Y., Garcia-Romero, D., Zotkin, D.N., Duraiswami, R.: A symmetric kernel partial least squares framework for speaker recognition. IEEE Trans. Audio Speech Lang. Process. 21(7), 1415–1423 (2013)

Rosipal, R.: Kernel partial least squares for nonlinear regression and discrimination. Neural Netw. World 13(3), 291–300 (2003)

Zhang, L., Tian, F.-C.: A new kernel discriminant analysis framework for electronic nose recognition. Anal. Chim. Acta 816, 8–17 (2014)

Mika, S., Rätsch, G., Weston, J., Schölkopf, B., Müller, K.-R.: Fisher discriminant analysis with kernels. In: Neural Networks for Signal Processing IX: Proceedings of the 1999 IEEE Signal Processing Society Workshop (Cat. No. 98TH8468), pp. 41–48 (1999). IEEE

He, X., Niyogi, P.: Locality preserving projections. In: Advances in Neural Information Processing Systems, pp. 153–160 (2004)

Sugiyama, M.: Dimensionality reduction of multimodal labeled data by local Fisher discriminant analysis. J. Mach. Learn. Res. 8(May), 1027–1061 (2007)

Cheng, J., Liu, Q., Lu, H., Chen, Y.-W.: Supervised kernel locality preserving projections for face recognition. Neurocomputing 67, 443–449 (2005)

Schölkopf, B., Herbrich, R., Smola, A.J.: A generalized representer theorem. In: International Conference on Computational Learning Theory, pp. 416–426 (2001). Springer

Das, S., Pal, N.R.: Nonlinear dimensionality reduction for data visualization: an unsupervised fuzzy rule-based approach. IEEE Trans. Fuzzy Syst. 30(7), 2157–2169 (2022). https://doi.org/10.1109/TFUZZ.2021.3076583

van der Maaten, L.J.P., Postma, E.O., van den Herik, H.J.: Dimensionality reduction: a comparative review. Tilburg University Technical Report TiCC-TR 2009-005 (2009)

Agrafiotis, D.K., Xu, H.: A self-organizing principle for learning nonlinear manifolds. Proc. Natl. Acad. Sci. 99(25), 15869–15872 (2002). https://doi.org/10.1073/pnas.242424399

Cui, Y., Zheng, C.-H., Yang, J., Sha, W.: Sparse maximum margin discriminant analysis for feature extraction and gene selection on gene expression data. Comput. Biol. Med. 43(7), 933–941 (2013). https://doi.org/10.1016/j.compbiomed.2013.04.018

Li, B., Tian, B.-B., Zhang, X.-L., Zhang, X.-P.: Locally linear representation Fisher criterion based tumor gene expressive data classification. Comput. Biol. Med. 53, 48–54 (2014). https://doi.org/10.1016/j.compbiomed.2014.07.018

Zhang, L., Qian, L., Ding, C., Zhou, W., Li, F.: Similarity-balanced discriminant neighbor embedding and its application to cancer classification based on gene expression data. Comput. Biol. Med. 64, 236–245 (2015). https://doi.org/10.1016/j.compbiomed.2015.07.008

Pomeroy, S.L., Tamayo, P., Gaasenbeek, M., Sturla, L.M., Angelo, M., McLaughlin, M.E., Kim, J.Y., Goumnerova, L.C., Black, P.M., Lau, C., et al.: Prediction of central nervous system embryonal tumour outcome based on gene expression. Nature 415(6870), 436–442 (2002)

Alon, U., Barkai, N., Notterman, D.A., Gish, K., Ybarra, S., Mack, D., Levine, A.J.: Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. 96(12), 6745–6750 (1999)

Golub, T.R., Slonim, D.K., Tamayo, P., Huard, C., Gaasenbeek, M., Mesirov, J.P., Coller, H., Loh, M.L., Downing, J.R., Caligiuri, M.A., et al.: Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science 286(5439), 531–537 (1999)

Dudoit, S., Fridlyand, J., Speed, T.P.: Comparison of discrimination methods for the classification of tumors using gene expression data. J. Am. Stat. Assoc. 97(457), 77–87 (2002)

Alizadeh, A.A., Eisen, M.B., Davis, R.E., Ma, C., Lossos, I.S., Rosenwald, A., Boldrick, J.C., Sabet, H., Tran, T., Yu, X., et al.: Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling. Nature 403(6769), 503–511 (2000)

Singh, D., Febbo, P.G., Ross, K., Jackson, D.G., Manola, J., Ladd, C., Tamayo, P., Renshaw, A.A., D’Amico, A.V., Richie, J.P., et al.: Gene expression correlates of clinical prostate cancer behavior. Cancer Cell 1(2), 203–209 (2002)

Khan, J., Wei, J.S., Ringner, M., Saal, L.H., Ladanyi, M., Westermann, F., Berthold, F., Schwab, M., Antonescu, C.R., Peterson, C., et al.: Classification and diagnostic prediction of cancers using gene expression profiling and artificial neural networks. Nat. Med. 7(6), 673–679 (2001)

Sun, L., Zhang, X., Qian, Y., Xu, J., Zhang, S.: Feature selection using neighborhood entropy-based uncertainty measures for gene expression data classification. Inf. Sci. 502, 18–41 (2019)

Huo, Y., Xin, L., Kang, C., Wang, M., Ma, Q., Yu, B.: SGL-SVM: a novel method for tumor classification via support vector machine with sparse group lasso. J. Theor. Biol. 486, 110098 (2020)

Acknowledgements

The authors would like to thank the Ministry of Higher Education Malaysia for the financial support under the FRGS Grant with Project Code: FRGS/1/2020/ICT06/USM/02/1.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Communicated by Rafiqul I. Chowdhury.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Aminu, M., Ahmad, N.A. Effective Dimensionality Reduction Using Kernel Locality Preserving Partial Least Squares Discriminant Analysis. Bull. Malays. Math. Sci. Soc. 46, 87 (2023). https://doi.org/10.1007/s40840-023-01479-1

DOI: https://doi.org/10.1007/s40840-023-01479-1