Abstract

In this work, we introduce a new algorithm for finding the minimum-norm solution of the multiple-sets split variational inequality problem in real Hilbert spaces. The strong convergence of the iterative sequence generated by the algorithm method is established under the condition that the mappings are monotone and Lipschitz continuous. We apply our main result to study the minimum-norm solution of the multiple-sets split feasibility problem and the split variational inequality problem. Finally, a numerical example is given to illustrate the proposed algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(\mathcal {H}_1\) and \(\mathcal {H}_2\) be two real Hilbert spaces, and let \(A:\mathcal {H}_1\longrightarrow \mathcal {H}_2\) be a bounded linear operator. Given nonempty closed convex subsets \(C_i\subseteq \mathcal {H}_1\), \(Q_j\subseteq \mathcal {H}_2\) for \(i=1,2,\ldots ,M\) and \(j=1,2,\ldots , N\), respectively. Let \(F_i: \mathcal {H}_1\longrightarrow \mathcal {H}_1\), \(i=1,2,\ldots ,M\) and \(G_j: \mathcal {H}_2\longrightarrow \mathcal {H}_2\), \(j=1,2,\ldots , N\) be mappings. The multiple-sets split variational inequality problem (MSSVIP), introduced first by Censor et al. [18], can be formulated as follows:

such that

If the solution sets of variational inequality problems \(VIP(C_i, F_i)\) and \(VIP(Q_j, G_j)\) are denoted by \(\hbox {Sol}(C_i, F_i)\) and \(\hbox {Sol}(Q_j, G_j)\), respectively, then the MSSVIP becomes the problem of finding \(x^*\in \displaystyle \bigcap \nolimits _{i=1}^{M}\hbox {Sol}(C_i, F_i)\) such that \(Ax^*\in \displaystyle \bigcap \nolimits _{j=1}^{N}\hbox {Sol}(Q_j, G_j)\).

When \(M=N=1\), the MSSVIP reduces to the split variational inequality problem, shortly SVIP,

such that

where C and Q are two nonempty closed convex subsets of \(\mathcal {H}_1\) and \(\mathcal {H}_2\), respectively, and \(F: \mathcal {H}_1\longrightarrow \mathcal {H}_1\), \(G: \mathcal {H}_2\longrightarrow \mathcal {H}_2\) are two mappings.

The SVIP was introduced and investigated by Censor et al. [18] in the case when F is \(\alpha \)-inverse strongly monotone on \(\mathcal {H}_1\), G is \(\beta \)-inverse strongly monotone on \(\mathcal {H}_2\), which starts from a given point \(x^0\in \mathcal {H}_1\), for all \(n\ge 0\), the next iterate is defined as

where \(\gamma \in \Big (0, \displaystyle \frac{1}{\Vert A\Vert ^2}\Big )\), \(0\le \lambda \le 2\min \{\alpha , \beta \}\), \(P_C^{F, \lambda }=P_C(I-\lambda F)\) and \(P_Q^{G, \lambda }=P_Q(I-\lambda G)\). They showed that the sequence \(\{x^n\}\) converges weakly to a solution of the split variational inequality problem, provided that the solution set of the SVIP is nonempty. If we consider only the problem VIP(C, F), then VIP(C, F) is a classical variational inequality problem, which was studied by many authors, see, for example, [1, 4, 5, 19, 20, 24,25,26, 28, 31, 34, 35, 38, 42, 43] and the references therein.

If \(F_i=0\) for all \(i=1,2,\ldots ,M\) and \(G_j=0\) for all \(j=1,2,\ldots ,N\), then the MSSVIP becomes the multiple-sets split feasibility problem (MSSFP):

The MSSFP, which has been first introduced and studied by Censor et al [17], is a general way to characterize various linear inverse problems which arises in many real-world application problems such as intensity-modulated radiation therapy [14, 15] and medical image reconstruction [22]. A special case of the MSSFP, when \(M=N=1\), is the split feasibility problem (SFP), which is formulated as finding a point \(x^*\in C\) such that \(Ax^*\in Q\), where C and Q are two nonempty closed convex subsets of real Hilbert spaces \(\mathcal {H}_1\) and \(\mathcal {H}_2\), respectively. Recently, it has been found that the SFP has some practical applications in various disciplines such as signal processing, image restoration, intensity-modulated radiation therapy [15, 16, 21] and many other fields. Many iterative projection methods have been developed to solve the MSSFP, the SFP and their generalizations. See, for example, [2, 3, 6,7,8,9,10,11,12, 17, 23, 27, 29, 30, 32, 33, 36, 39,40,41, 44, 45, 47, 48] and the references cited there.

In 2012, Ceng et al. [13, Theorem 3.1] presented a method, which is called the relaxed extragradient method, for finding minimum-norm solution of the SFP. This method is given as: For \(x^0\in \mathcal {H}_1\), the sequence \(\{x^n\}\) is generated by

where \(\nabla f_{\alpha _n}\) stands for \(A^*(I-P_Q)A+\alpha _n I\). They proved that, under some conditions imposed on \(\{\lambda _n\}\), \(\{\alpha _n\}\), \(\{\beta _n\}\), \(\{\gamma _n\}\) and \(\{\delta _n\}\), the sequence \(\{x^n\}\) generated by (1) converges strongly to the minimum-norm solution of the SFP if the solution set \(\varGamma \) of the SFP is nonempty.

Motivated and inspired by the above-mentioned works, in this paper, we present an iterative method for finding minimum-norm solution of the MSSVIP, which is to find a point \(x^*\in \varOmega \) such that

where \(\varOmega =\Big \{x^*\in \displaystyle \bigcap \limits _{i=1}^{M}\hbox {Sol}(C_i, F_i): Ax^*\in \displaystyle \bigcap \limits _{j=1}^{N}\hbox {Sol}(Q_j, G_j)\Big \}\) is the solution set of the MSSVIP and \(A:\mathcal {H}_1\longrightarrow \mathcal {H}_2\) is a bounded linear operator between \(\mathcal {H}_1\) and \(\mathcal {H}_2\).

The paper is organized as follows. Section 2 deals with some definitions and lemmas that will be used in the third section, where we describe the algorithm and prove its strong convergence. We close Sect. 3 by considering some applications to the multiple-sets split feasibility problem and the split variational inequality problem. Finally, in the last section, we perform a numerical example to illustrate the convergence of the proposed algorithm.

2 Preliminaries

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal {H}\). It is well known that for all point \(x\in \mathcal {H}\), there exists a unique point \(P_C(x)\in C\) such that

The mapping \(P_C:\mathcal {H}\longrightarrow C\) defined by (2) is called the metric projection of \(\mathcal {H}\) onto C. It is known that \(P_C\) is nonexpansive. Moreover, for all \(x\in \mathcal {H}\) and \(y\in C\), \(y=P_C(x)\) if and only if

We denote the strong (weak) convergence of a sequence \(\{x^n\}\) to x in \(\mathcal {H}\) by \(x^n\longrightarrow x\) (\(x^n\rightharpoonup x\)), respectively. In what follows, we recall some well-known definitions and lemmas which will be used in this paper.

Definition 1

Let \(\mathcal {H}_1\) and \(\mathcal {H}_2\) be two Hilbert spaces and let \(A: \mathcal {H}_1\longrightarrow \mathcal {H}_2\) be a bounded linear operator. An operator \(A^*: \mathcal {H}_2\longrightarrow \mathcal {H}_1\) with the property \(\langle A(x), y\rangle =\langle x, A^*(y)\rangle \) for all \(x\in \mathcal {H}_1\) and \(y\in \mathcal {H}_2\), is called an adjoint operator.

The adjoint operator of a bounded linear operator A between Hilbert spaces \(\mathcal {H}_1\), \(\mathcal {H}_2\) always exists and is uniquely determined. Furthermore, \(A^*\) is a bounded linear operator and \(\Vert A^*\Vert =\Vert A\Vert \).

Definition 2

A mapping \(F:\mathcal {H}\longrightarrow \mathcal {H}\) is said to be

(i) L-Lipschitz continuous on \(\mathcal {H}\) if

(ii) monotone on \(\mathcal {H}\) if

Lemma 1

Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal {H}\). Let \(G: \mathcal {H}\longrightarrow \mathcal {H}\) be monotone and L-Lipschitz continuous on \(\mathcal {H}\) such that \(\hbox {Sol}(C, G)\ne \emptyset \). Let \(x\in \mathcal {H}\), \(0<\lambda <\dfrac{1}{L}\) and define

Then,

-

(i)

$$\begin{aligned} \langle x-y, z\rangle \ge (1-\lambda L)\Vert x-y\Vert ^2. \end{aligned}$$(3)

-

(ii)

$$\begin{aligned} \Vert x-y\Vert \le \dfrac{\sqrt{1+\lambda ^2L^2}}{1-\lambda L}\Vert x-t\Vert . \end{aligned}$$(4)

-

(iii)

For all \(x^*\in \hbox {Sol}(C, G)\)

$$\begin{aligned} \Vert t-x^*\Vert ^2\le \Vert x-x^*\Vert ^2-\Vert x-t\Vert ^2. \end{aligned}$$(5)

Proof

(i) From the definition of z and the Lipschitz continuity of G, we have

The inequality (3) holds. Since \(1-\lambda L>0\), from (3) we have that \(z\ne 0\) if \(y\ne x\). So t is well defined. (ii) If \(y=x\) then \(t=x\), so (4) holds. Suppose that \(y\ne x\), from the monotonicity and the L-Lipschitz continuity of G, we have

This implies that

So, by the definition of t and (3), we have

and hence

(iii) If \(y=x\) then \(t=x\). The inequality (5) holds. Suppose that \(y\ne x\). Since G is monotone and \(x^*\in \hbox {Sol}(C, G)\), we have

From \(y=P_C(x-\lambda G(x))\) and \(x^*\in C\), we have

Combining the above inequality with \(x-\lambda G(x)-y=z-\lambda G(y)\), we get

It follows from (6) and (7) that

By the definition of t, (3) and (8), we have

The proof is complete. \(\square \)

Lemma 2

([29, Lemma 3]) Let C be a nonempty closed convex subset of a real Hilbert space \(\mathcal {H}\). Let \(F: \mathcal {H}\longrightarrow \mathcal {H}\) be monotone and L-Lipschitz continuous on \(\mathcal {H}\). Assume that \(\lambda _n\ge a>0\) for all n, \(\{x^n\}\) is a sequence in \(\mathcal {H}\) satisfying \(x^n\rightharpoonup \overline{x}\) and \(\lim \limits _{n\longrightarrow \infty }\Vert x^n-y^n\Vert =0\), where \(y^n=P_C(x^n-\lambda _n F(x^n))\) for all n. Then \(\overline{x}\in \hbox {Sol}(C, F)\).

Lemma 3

([37, Remark 4.4]) Let \(\{a_n\}\) be a sequence of nonnegative real numbers. Suppose that for any integer m, there exists an integer p such that \(p\ge m\) and \(a_p\le a_{p+1}\). Let \(n_0\) be an integer such that \(a_{n_0}\le a_{n_0+1}\) and define, for all integer \(n\ge n_0\), by

Then, \(\{\tau (n)\}_{n\ge n_0}\) is a nondecreasing sequence satisfying \(\lim \limits _{n\longrightarrow \infty }\tau (n)=\infty \) and the following inequalities hold:

Lemma 4

( [46, Lemma 2.5]) Assume \(\{a_n\}\) is a sequence of nonnegative real numbers satisfying the condition

where \(\{\alpha _n\}\) is a sequence in (0, 1) and \(\{\xi _n\}\) is a sequence in \(\mathbb {R}\) such that

-

(i)

\(\displaystyle \sum \limits _{n=0}^{\infty }\alpha _n=\infty \);

-

(ii)

\(\limsup \limits _{n\longrightarrow \infty }\xi _n\le 0\). Then, \(\lim \limits _{n\longrightarrow \infty }a_n=0\).

3 The Algorithm and Convergence Analysis

In this section, we propose a strong convergence algorithm for finding the minimum-norm solution of the multiple-sets split variational inequality problem. We impose the following assumptions on the mappings \(F_i: \mathcal {H}_1\longrightarrow \mathcal {H}_1\) and \(G_j: \mathcal {H}_2\longrightarrow \mathcal {H}_2\), \(i=1,2,\ldots ,M\) and \(j=1,2,\ldots , N\).

\((A_F)\): \(F_i\) is monotone and \(L_i\)-Lipschitz continuous on \(\mathcal {H}_1\) for each \(i=1,2,\ldots ,M\).

\((A_G)\): \(G_j\) is monotone and \(\kappa _j\)-Lipschitz continuous on \(\mathcal {H}_2\) for each \(j=1,2,\ldots ,N\).

The algorithm can be expressed as follows

Algorithm 1.

Step 0. Choose \(\{\delta _n\}\subset [\underline{\delta }, \overline{\delta }]\subset \Big (0, \dfrac{1}{\Vert A\Vert ^2+1}\Big )\), \(\{\lambda _n\}\subset [\underline{\lambda }, \overline{\lambda }]\subset \Big (0, \min \limits _{1\le i\le M}\Big \{\displaystyle \frac{1}{L_i}\Big \}\Big )\), \(\{\mu _n\}\subset [\underline{\mu }, \overline{\mu }]\subset \Big (0, \min \limits _{1\le j\le N}\Big \{\displaystyle \frac{1}{\kappa _j}\Big \}\Big )\), \(\{\alpha _n\}\subset (0, 1)\) such that \(\lim \limits _{n\longrightarrow \infty }\alpha _n\!=\!0\), \(\displaystyle \sum \limits _{n=0}^{\infty }\!\alpha _n\!=\!\infty \). Step1. Let \(x^0\in \mathcal {H}_1\). Set \(n:=0\).

Step 2. Compute, for all \(j=1,2,\ldots ,N\)

Step 3. Find among \(w_j^n\), \(j=1,2,\ldots ,N\), the farthest element from \(b^n\), i.e.,

Step 4. Compute, for all \(i=1,2,\ldots ,M\)

Step 5. Find among \(s_i^n\), \(i=1,2,\ldots ,M\), the farthest element from \(a^n\), i.e.,

Step 6. Compute

Step 7. Set \(n:=n+1\), and go to Step 2.

The following theorem shows validity and convergence of the algorithm.

Theorem 1

Suppose that the assumptions \((A_F),(A_G)\) hold. Then, the sequence \(\{x^n\}\) generated by Algorithm 1 converges strongly to the minimum-norm solution of the multiple-sets split variational inequality problem.

Proof

The proof of the theorem is divided into several steps. Let \(x^*=P_\varOmega (0)\). We will prove that \(\{x^n\}\) converges in norm to \(x^*\). We divide the proof into several steps. Step 1. For all \(n\ge 0\), we have

In fact, it holds that

and

Step 2. For all \(n\ge 0\), we show that

Since \(x^*\in \varOmega \), we have \(x^*\in \hbox {Sol}(C_i, F_i)\), \(Ax^*\in \hbox {Sol}(Q_j, G_j)\) for all \(i=1,2,\ldots ,M\), \(j=1,2,\ldots ,N\). From Lemma 1, we get, for all \(n\ge 0\)

Hence,

From (13), we obtain

Step 3. We show that the sequences \(\{x^n\}\), \(\{a^n\}\) and \(\{t^n\}\) are bounded. From Lemma 1, we have, for all \(n\ge 0\)

From (11), (14) and \(\{\delta _n\}\subset {[}\underline{\delta }, \overline{\delta }]\subset \Big (0, \displaystyle \frac{1}{\Vert A\Vert ^2+1}\Big )\), we get

This implies that

So, by induction, we obtain, for every \(n\ge 0\) that

Hence, the sequence \(\{x^n\}\) is bounded and so are the sequences \(\{a^n\}\) and \(\{t^n\}\) thanks to (15). Step 4. We prove that \(\{x^n\}\) converges strongly to \(x^*=P_\varOmega (0)\). Let us consider two cases. Case 1. There exists \(n_0\) such that the sequence \(\{\Vert x^n-x^*\Vert \}\) is decreasing for \(n\ge n_0\). In this case, the limit of \(\{\Vert x^n-x^*\Vert \}\) exists. So, it follows from (10) and (15), for all \(n\ge 0\), that

Since the limit of \(\{\Vert x^n-x^*\Vert \}\) exists, \(\lim \limits _{n\longrightarrow \infty }\alpha _n=0\), \(\{t^n\}\) is bounded, it follows from the above inequality that

Combining (16) and (17), we have

From (14), we find

From (11), (17) and \(\{\delta _n\}\subset [\underline{\delta }, \overline{\delta }]\subset \Big (0, \displaystyle \frac{1}{\Vert A\Vert ^2+1}\Big )\), we get

On the other hand,

which together with (20) implies

From Lemma 1, we have, for all \(i=1,2,\ldots ,M\)

From Lemma 1, we have

Note that

Using the above inequality, (19) and (21), we have

Choose a subsequence \(\{t^{n_k}\}\) of \(\{t^n\}\) such that

Since \(\{t^{n_k}\}\) is bounded, we may assume without loss of generality that \(t^{n_k}\rightharpoonup \overline{t}\). Therefore,

From \(t^{n_k}\rightharpoonup \overline{t}\) and (19), we get \(a^{n_k}\rightharpoonup \overline{t}\). From (23), we have \(\lim \limits _{k\longrightarrow \infty }\Vert a^{n_k}-y_i^{n_k}\Vert =0\) for all \(i=1,2,\ldots ,M\). Note that \(y_i^{n_k}=P_{C_i}(a^{n_k}-\lambda _{n_k} F_i(a^{n_k}))\). From Lemma 2, we get \(\overline{t}\in \hbox {Sol}(C_i, F_i)\) for all \(i=1,2,\ldots ,M\), that is, \(\overline{t}\in \displaystyle \bigcap \nolimits _{i=1}^{M}\hbox {Sol}(C_i, F_i)\).

From \(t^{n_k}\rightharpoonup \overline{t}\) and (26), we get \(x^{n_k}\rightharpoonup \overline{t}\). Thus, \(b^{n_k}=A(x^{n_k})\rightharpoonup A(\overline{t})\). From (25), we have \(\lim \limits _{k\longrightarrow \infty }\Vert b^{n_k}-u_j^{n_k}\Vert =0\) for all \(j=1,2,\ldots ,N\). Note that \(u_j^{n_k}=P_{Q_j}(b^{n_k}-\mu _{n_k} G_j(b^{n_k}))\). From Lemma 2, we obtain \(A(\overline{t})\in \hbox {Sol}(Q_j, G_j)\) for all \(j=1,2,\ldots ,N\), that is, \(A(\overline{t})\in \displaystyle \bigcap \nolimits _{j=1}^{N}\hbox {Sol}(Q_j, G_j)\), which together with \(\overline{t}\in \displaystyle \bigcap \nolimits _{i=1}^{M}\hbox {Sol}(C_i, F_i)\) implies \(\overline{t}\in \varOmega \).

where

Since \(x^*=P_\varOmega (0)\) and \(\overline{t}\in \varOmega \), it follows that

Therefore, since \(\{t^n\}\) is bounded, \(\lim \limits _{n\longrightarrow \infty }\alpha ^n=0\), we get

By Lemma 4, we have \(\lim \limits _{n\longrightarrow \infty }\Vert x^n-x^*\Vert ^2=0\), that is, \(x^n\longrightarrow x^*\). Case 2. Suppose that for any integer m, there exists an integer n such that \(n\ge m\) and \(\Vert x^n-x^*\Vert \le \Vert x^{n+1}-x^*\Vert \). According to Lemma 3, there exists a nondecreasing sequence \(\{\tau (n)\}\) of \(\mathbb N\) such that \(\lim \limits _{n\longrightarrow \infty }\tau (n)=\infty \) and the following inequalities hold for all (sufficiently large) \(n\in \mathbb N\).

From the boundedness of \(\{t^n\}\), \(\lim \limits _{n\longrightarrow \infty }\alpha _n=0\) and (28), we have

Thus,

Thus, from (29), (30) and the boundedness of \(\{x^n\}\), \(\{a^n\}\), \(\{t^n\}\), we obtain

As proved in the first case, we obtain

So, from the boundedness of \(\{t^n\}\) and \(\lim \limits _{n\longrightarrow \infty }\alpha _n=0\), we have

From (10), (15) and (27), we have

Since \(\alpha _{\tau (n)}>0\), it follows from above inequality that

Taking the limit in (33) as \(n\longrightarrow \infty \), and using (31), we obtain that

Therefore, \(x^n\longrightarrow x^*\). This completes the proof of Theorem. \(\square \)

From Algorithm 1 and Theorem 1, by taking \(F_i=0\) for all \(i=1,2,\ldots ,M\) and \(G_j=0\) for all \(j=1,2,\ldots ,N\), we get the following corollary:

Corollary 1

Assume that the solution set \(\varOmega \) of the MSSFP is nonempty. Choose \(x^0\in \mathcal {H}_1\), \(\{\delta _n\}\subset [\underline{\delta }, \overline{\delta }]\subset \Big (0, \dfrac{1}{\Vert A\Vert ^2+1}\Big )\), \(\{\alpha _n\}\subset (0, 1)\) such that \(\lim \nolimits _{n\longrightarrow \infty }\alpha _n=0\) and \(\displaystyle \sum \nolimits _{n=0}^{\infty }\alpha _n=\infty \).

For each iteration \(n\ge 0\), compute

where

Further, we compute

where

and define the next iteration as

Then, the sequence \(\{x^n\}\) converges strongly to the minimum-norm solution of the MSSFP.

Corollary 2

Let C and Q be two nonempty closed convex subset of two real Hilbert spaces \(\mathcal {H}_1\) and \(\mathcal {H}_2\), respectively. Let \(F: \mathcal {H}_1\longrightarrow \mathcal {H}_1\) be monotone and L-Lipschitz continuous on \(\mathcal {H}_1\), \(G: \mathcal {H}_2\longrightarrow \mathcal {H}_2\) be monotone and \(\kappa \)-Lipschitz continuous on \(\mathcal {H}_2\). Suppose that the sequences \(\{\alpha _n\}\), \(\{\delta _n\}\), \(\{\lambda _n\}\) and \(\{\mu _n\}\) satisfy the following restrictions:

Choose \(x^0\in \mathcal {H}_1\), and for each iteration \(n\ge 0\), compute

Further, we compute

and define the next iteration as

Then, the sequence \(\{x^n\}\) converges strongly to the minimum-norm solution of the SVIP, provided that the solution set \(\varOmega =\{x^*\in \hbox {Sol}(C, F): Ax^*\in \hbox {Sol}(Q, G)\}\) of the SVIP is nonempty.

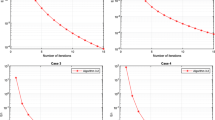

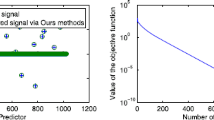

4 Numerical Illustrations

Example 1

Let \(\mathcal {H}_1=\mathbb {R}^4\) with the norm \(\Vert x\Vert =(x_1^2+x_2^2+x_3^2+x_4^2)^{\frac{1}{2}}\) for \(x=(x_1, x_2, x_3, x_4)^T\in \mathbb {R}^4\) and \(\mathcal {H}_2=\mathbb {R}^2\) with the norm \(\Vert y\Vert =(y_1^2+y_2^2)^{\frac{1}{2}}\) for all \(y=(y_1, y_2)^T\in \mathbb {R}^2\).

Let \(A(x)=(x_1+x_3+x_4, x_2+x_3-x_4)^T\) for all \(x=(x_1, x_2, x_3, x_4)^T\in \mathbb {R}^4\). Then, A is a bounded linear operator from \(\mathbb {R}^4\) into \(\mathbb {R}^2\) with \(\Vert A\Vert =\sqrt{3}\). For \(y=(y_1, y_2)^T\in \mathbb {R}^2\), let \(B(y)=(y_1, y_2, y_1+y_2, y_1-y_2)^T\). Then, B is a bounded linear operator from \(\mathbb {R}^2\) into \(\mathbb {R}^4\) with \(\Vert B\Vert =\sqrt{3}\). Moreover, for any \(x=(x_1, x_2, x_3, x_4)^T\in \mathbb {R}^4\) and \(y=(y_1, y_2)^T\in \mathbb {R}^2\), \(\langle A(x), y\rangle =\langle x, B(y)\rangle \), so \(B=A^*\) is an adjoint operator of A.

Let

and

The solution set \(\varOmega =\{x=(x_1, x_2, x_3, x_4)^T\in C_1\cap C_2\cap C_3\cap C_4\cap C_5\cap C_6: A(x)\in Q_1\cap Q_2\}\) of the MSSFP is given by

which can be rewritten as follows

Suppose \(x=(a+4, 1-3a, a, -2a-1)^T\in \varOmega \), then

The above equality holds if and only if \(a=\dfrac{1}{10}\). So the minimum-norm solution \(x^*\) of the MSSFP is \(x^*=\Big (\dfrac{41}{10}, \dfrac{7}{10}, \dfrac{1}{10}, -\dfrac{6}{5}\Big )^T\). Select a random starting point \(x^0=(-3,7,-4,10)^T\in \mathbb {R}^4\) for Algorithm 1. With \(\delta _n=\dfrac{n+1}{5n+6}\), \(\alpha _n=\dfrac{1}{n+2}\), \(\varepsilon =10^{-5}\) and the stopping criterion \(\Vert x^{n+1}-x^n\Vert \le \varepsilon \), we obtain an approximate solution obtained after 111490 iterations (with time 27.2783 seconds) is

which is a good approximation to the minimum-norm solution of the MSSFP \(x^*=\Big (\dfrac{41}{10}, \dfrac{7}{10}, \dfrac{1}{10}, -\dfrac{6}{5}\Big )^T\).

We perform the iterative schemes in PYTHON 3.9 running on a laptop with Intel(R) Core(TM) i5-5200U CPU @ 2.20GHz, 4 GB RAM.

5 Conclusion

The paper has presented an iterative algorithm for finding minimum-norm solutions of the multiple-sets split variational inequality problem in real Hilbert spaces. Under some suitable conditions imposed on parameters, we have proved the strong convergence of the iterative sequence to the minimum-norm solution of the MSSVIP. As special cases, we apply the main theorem to find the minimum-norm solution of the MSSFP and the SVIP. A simple numerical example has been performed to illustrate the performance of the proposed algorithm.

References

Adamu, A., Deepho, J., Ibrahim, A.H., Abubakar, A.B.: Approximation of zeros of sum of monotone mappings with applications to variational inequality and image restoration problems. Nonlinear Funct. Anal. and Appl. 26(2), 411–432 (2021)

Afassinou, K., Narain, O.K., Otunuga, O.E.: Iterative algorithm for approximating solutions of Split Monotone Variational Inclusion, Variational inequality and fixed point problems in real Hilbert spaces. Nonlinear Funct. Anal. and Appl. 25(3), 491–510 (2020)

Anh, P.K., Anh, T.V., Muu, L.D.: On bilevel split pseudomonotone variational inequality problems with applications. Acta Math, Vietnam. 42, 413–429 (2017)

Anh, P.N., Muu, L.D., Strodiot, J.J.: Generalized projection method for non-Lipschitz multivalued monotone variational inequalities. Acta Math, Vietnam. 34, 67–79 (2009)

Anh, T.V.: A strongly convergent subgradient Extragradient-Halpern method for solving a class of bilevel pseudomonotone variational inequalities. Vietnam J. Math. 45, 317–332 (2017)

Anh, T.V.: An extragradient method for finding minimum-norm solution of the split equilibrium problem. Acta Math, Vietnam. 42, 587–604 (2017)

Anh, T.V.: A parallel method for variational inequalities with the multiple-sets split feasibility problem constraints. J. Fixed Point Theory Appl. 19, 2681–2696 (2017)

Anh, T.V.: Linesearch methods for bilevel split pseudomonotone variational inequality problems. Numer. Algorithms 81, 1067–1087 (2019)

Anh, T.V., Muu, L.D.: A projection-fixed point method for a class of bilevel variational inequalities with split fixed point constraints. Optimization 65, 1229–1243 (2016)

Buong, N.: Iterative algorithms for the multiple-sets split feasibility problem in Hilbert spaces. Numer. Algorithms 76, 783–798 (2017)

Byrne, C.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441–453 (2002)

Byrne, C., Censor, Y., Gibali, A., Reich, S.: The split common null point problem. J. Nonlinear Convex Anal. 13, 759–775 (2012)

Ceng, L.C., Ansari, Q.H., Yao, J.C.: Relaxed extragradient methods for finding minimum-norm solutions of the split feasibility problem. Nonlinear Anal. 75, 2116–2125 (2012)

Censor, Y., Ben-Israel, A., Xiao, Y., Galvin, J.M.: On linear infeasibility arising in intensity-modulated radiation therapy inverse planning. Linear Algebra Appl. 428, 1406–1420 (2008)

Censor, Y., Bortfeld, T., Martin, B., Trofimov, A.: A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 51, 2353–2365 (2006)

Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms. 8, 221–239 (1994)

Censor, Y., Elfving, T., Kopf, N., Bortfeld, T.: The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Prob. 21, 2071–2084 (2005)

Censor, Y., Gibali, A., Reich, S.: Algorithms for the split variational inequality problem. Numer. Algorithms 59, 301–323 (2012)

Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Meth. Softw. 26, 827–845 (2011)

Censor, Y., Segal, A.: Iterative projection methods in biomedical inverse problems. In: Censor, Y., Jiang, M., Louis, A.K. (eds.) Mathematical Methods in Biomedical Imaging and Intensity-Modulated Therapy, pp. 65–96. IMRT, Edizioni della Norale, Pisa, Italy (2008)

Combettes, P.L.: The convex feasibility problem in image recovery. Adv. Imaging Electron Phys. 95, 155–270 (1996)

Dadashi, V.: Shrinking projection algorithms for the split common null point problem. Bull. Aust. Math. Soc. 99(2), 299–306 (2017)

Dinh, B.V., Muu, L.D.: Algorithms for a class of bilevel programs involving pseudomonotone variational inequalities. Acta Math, Vietnam. 38, 529–540 (2013)

Dong, Q.L., Jiang, D., Gibali, A.: A modified subgradient extragradient method for solving the variational inequality problem. Numer. Algorithms 79, 927–940 (2018)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementary Problems. Springer, New York (2003)

Hai, N.M., Van, L.H.M., Anh, T.V.: An Algorithm for a Class of Bilevel Variational Inequalities with Split Variational Inequality and Fixed Point Problem Constraints. Acta Math, Vietnam. 46, 515–530 (2021)

He, B.S., Liao, L.Z.: Improvements of some projection methods for monotone nonlinear variational inequalities. J. Optim. Theory Appl. 112, 111–128 (2002)

Huy, P.V., Hien, N.D., Anh, T.V.: A strongly convergent modified Halpern subgradient extragradient method for solving the split variational inequality problem. Vietnam J. Math. 48, 187–204 (2020)

Huy, P.V., Van, L.H.M., Hien, N.D., Anh, T.V.: Modified Tseng’s extragradient methods with self-adaptive step size for solving bilevel split variational inequality problems. Optimization (2020). https://doi.org/10.1080/02331934.2020.1834557

Kim, J.K., Anh, P.N., Anh, T.T.H., Hien, N.D.: Projection methods for solving the variational inequalities involving unrelated nonexpansive mappings. J. Nonlinear Convex Anal. 21(11), 2517–2537 (2020)

Kim, J.K., Tuyen, T.M.: Parallel iterative method for solving the split common null point problem in Banach spaces. J. Nonlinear Convex Anal. 20(10), 2075–2093 (2019)

Kim, J.K., Tuyen, T.M., Ha, M.T.N.: Two projection methods for solving the split common fixed point problem with multiple output sets in Hilbert spaces. Numer. Funct. Anal. Optim. 42(8), 973–988 (2021)

Konnov, I.V.: Combined Relaxation Methods for Variational Inequalities. Springer, Berlin (2000)

Kraikaew, R., Saejung, S.: Strong Convergence of the Halpern Subgradient Extragradient Method for Solving Variational Inequalities in Hilbert Spaces. J. Optim. Theory Appl. 163, 399–412 (2014)

Liu, B., Qu, B., Zheng, N.: A Successive Projection Algorithm for Solving the Multiple-Sets Split Feasibility Problem. Numer. Funct. Anal. Optim. 35, 1459–1466 (2014)

Maingé, P.E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. IAM J. Control Optim. 47, 1499–1515 (2008)

Malitsky, Y.V.: Projected reflected gradient methods for variational inequalities. SIAM J. Optim. 25, 502–520 (2015)

Takahashi, W.: The split feasibility problem in Banach spaces. J. Nonlinear Convex Anal. 15, 1349–1355 (2014)

Takahashi, W.: The split feasibility problem and the shrinking projection method in Banach spaces. J. Nonlinear Convex Anal. 16(7), 1449–1459 (2015)

Takahashi, W.: The split common null point problem in Banach spaces. Arch. Math. 104, 357–365 (2015)

Thong, D.V., Vinh, N.T., Cho, Y.J.: A strong convergence theorem for Tseng’s extragradient method for solving variational inequality problems. Optim Lett. 14, 1157–1175 (2020)

Thuy, N.T.T., Hoai, P.T.T., Hoa, N.T.T.: Explicit iterative methods for maximal monotone operators in Hilbert spaces. Nonlinear Funct. Anal. Appl. 25(4), 753–767 (2020)

Tuyen, T.M., Ha, N.S., Thuy, N.T.T.: A shrinking projection method for solving the split common null point problem in Banach spaces. Numer. Algorithms 81, 813–832 (2019)

Wen, M., Peng, J.G., Tang, Y.C.: A cyclic and simultaneous iterative method for solving the multiple-sets split feasibility problem. J. Optim. Theory Appl. 166(3), 844–860 (2015)

Xu, H.-K.: Iterative algorithms for nonlinear operators. J. London Math. Soc. 66, 240–256 (2002)

Zhao, J.L., Yang, Q.Z.: A simple projection method for solving the multiple-sets split feasibility problem. Inverse Probl. Sci. Eng. 21, 537–546 (2013)

Zhao, J.L., Zhang, Y.J., Yang, Q.Z.: Modified projection methods for the split feasibility problem and the multiple-sets split feasibility problem. Appl. Math. Comput. 219, 1644–1653 (2012)

Acknowledgements

The first author would like to thank Van Lang University, Vietnam, for funding this work.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Communicated by Anton Abdulbasah Kamil.

Rights and permissions

About this article

Cite this article

Cuong, T.L., Anh, T.V. An Iterative Method for Solving the Multiple-Sets Split Variational Inequality Problem. Bull. Malays. Math. Sci. Soc. 45, 1737–1755 (2022). https://doi.org/10.1007/s40840-022-01283-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40840-022-01283-3

Keywords

- Multiple-sets split variational inequality problem

- Minimum-norm solution

- Strong convergence

- Multiple-sets split feasibility problem