Abstract

This paper proposes new regressive proportional odds and partial proportional odds models, together with a framework for predicting trajectories of repeated ordinal outcomes. We illustrate the proposed models using repeated ordinal responses on activities of daily living from older adults, collected biennially through the Health and Retirement Study in the USA. The proposed framework combines marginal and conditional models to obtain the joint model and to predict the joint probability of a sequence of ordinal outcomes, that is, a trajectory. These models substantially reduce over-parameterization, as only one model needs to be fitted for each follow-up. They allow assessing the effect of prior responses on current outcomes and can include interaction terms among previous responses and between prior outcomes and covariates. They also permit a varying number of risk factors at each follow-up. The prediction accuracy for the full, training, and test data is similar and varies between 0.91 and 0.94. The bootstrap simulation shows that the bias of the parameter estimates, accuracy, and predicted joint probabilities is negligible, with very low mean squared error. The models and framework would be instrumental in studying trajectories generated from longitudinal studies. The framework can also be used to analyze big data generated from repeated measures, since it readily accommodates a divide and recombine approach in a statistically valid manner.

1 Introduction

Due to increased life expectancy in many countries, older adults are exposed to a higher risk of adverse health outcomes. They are also vulnerable to increased utilization of health care services and to death [11]. For example, difficulty in activities of daily living (ADL) may prospectively relate to the progression of functional limitations and disability among the elderly [5]. The Health and Retirement Study (HRS) is a nationally representative longitudinal survey in the USA that repeatedly measures ADL as ordinal outcomes. Individuals make transitions over time among different response categories, and a trajectory based on the series of events helps in understanding disease progression [4, 6, 12, 15, 40]. The HRS is a large-scale longitudinal study with more than 20 years of follow-up data on approximately 20,000 people, producing a huge data volume. Nowadays, due to the lower cost of data acquisition, large and complex longitudinal data, termed big data, are captured routinely. As a result, there are new statistical challenges in methodology, theory, and computation in extracting insight from such extensive, complex data. A 2018 special issue of Statistics and Probability Letters, “The role of Statistics in the era of big data”, was devoted to this topic. In this setting, modeling transitions over time among different response categories and predicting trajectory risks based on various risk factors is difficult.

A growing area of interest is the prediction of the joint probability of a sequence of events (a trajectory) based on a specified covariate vector [23, 29, 32, 35, 41, 42]. Modeling these sequences allows us to predict likely future outcomes. Specifically, interest might be in the following questions: (i) What is the expected risk of a patient having a condition, based on previous responses and risk factors? (ii) What is the predicted risk of occurrence of a sequence of events based on specified features at different follow-ups? (iii) What would be the predicted outcome at the subsequent follow-up for specified values of covariates and previous outcomes? (iv) At a given follow-up, what are the interaction effects between risk factors and outcomes of earlier follow-ups, and among previous events? Using the predicted risk of a sequence of outcomes, health care providers can screen individuals, which would help them suggest necessary therapy and preventive measures. A physician can recommend early or regular office visits or prescribe medication to prevent hospitalization based on a patient’s trajectory [37]. The risk prediction can also make a patient aware of the future course of the disease [39].

The prediction of trajectories from a sequence of ordinal outcomes based on specified covariates is a great challenge. To predict the joint probability of a series of events, we need to examine the progression of responses during subsequent follow-ups using a joint (multivariate) model for ordinal outcomes. A multivariate approach is often complicated and would be challenging to develop for a large number of follow-ups [16]. The multistate higher-order Markov model (a conditional model) can be used to study the underlying dependence in consecutive follow-ups [24]. Using this model, one can estimate the risk for a sequence of events [26]. However, these models are of limited use for a large number of follow-ups due to over-parameterization, and one cannot assess the impact of prior outcomes because the data are effectively stratified by them [16, 25]. It is also not possible to include interactions between responses from previous follow-ups, or between previous responses and risk factors, in the model [13]. For many follow-ups, Markov models require a data set with a large sample size throughout the follow-ups.

Figure 1 displays three repeated outcomes, each with three categories, and the twenty-seven possible trajectories (paths). In this case, one needs to fit a total of thirteen models: one marginal model for follow-up one (baseline), three first-order Markov models, and nine second-order Markov models. This could be computationally cumbersome, and the number of models explodes for a large number of repeated responses [14, 17]. Another choice is the regressive logistic model under the Markovian assumption, which includes the binary outcomes from previous times in addition to covariates in the conditional models [7, 8, 23, 36]. Islam and Chowdhury [23] developed a regressive logistic model to predict the joint probability of a sequence of binary outcomes based on specified covariates, which significantly reduces the number of conditional models to be fitted. Chowdhury and Islam [13] extended this model to repeated multinomial responses. The ordinal logistic regression models, in their different variants, are some approaches to modeling an ordinal response [2, 3, 9, 19, 33, 34], for example the proportional odds, partial proportional odds, continuation ratio, stereotype, adjacent category, and baseline category models. However, these are univariate models for a single ordinal outcome only.

Against this backdrop, we proposed two regressive models for repeated ordinal outcomes and derived the joint model, which is a new development. First, we proposed a proportional odds regressive model (POM) for repeated ordinal outcomes. For POM, one needs to test the proportional odds assumption [9]. Second, for cases in which the proportional odds assumption is violated, we proposed a partial proportional odds regressive model (PPOM) for repeated ordinal outcomes. We also applied the multinomial regressive logistic model (MNOM) for repeated responses [13], ignoring the ordinal nature of the outcome variables. We then estimated the risk for a sequence of events for specified covariate values by linking marginal and conditional probabilities. The marginal probability is obtained from the outcome at the first follow-up, and the conditional probabilities are estimated from the subsequent follow-ups using the proposed regressive models. Using data partitioning (training and test data), we computed the prediction accuracy to check for over(under)fitting. Furthermore, we performed 10,000 bootstrap simulations to assess the performance of the proposed models. Finally, we illustrated the proposed methods using follow-up data from the USA’s Health and Retirement Study (HRS).

2 Repeated outcomes and trajectories

Suppose \(Y_1, Y_2\), and \(Y_3\) are three repeated ordinal outcomes with three categories that may represent three states of ADL difficulty (0,1,2). Figure 1 displays the possible transitions between three outcome categories from three follow-ups. A total of twenty-seven distinct trajectories (paths) are possible. Here, the first column shows marginal probabilities, and the second and third are conditional probabilities.
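The counts quoted for this setting can be checked by direct enumeration. The following is a minimal sketch; the function names are illustrative and not part of the paper. It lists all trajectories for three outcomes with three categories and compares the number of models needed when every history of previous outcomes gets its own conditional model (the Markov setup described in the introduction) with the one-model-per-follow-up regressive setup.

```python
from itertools import product


def enumerate_trajectories(n_categories, n_followups):
    """Return every possible outcome sequence (y_1, ..., y_J)."""
    return list(product(range(n_categories), repeat=n_followups))


def markov_model_count(n_categories, n_followups):
    """Models needed when each history of previous outcomes has its own model:
    1 marginal + n_categories first-order + n_categories**2 second-order + ..."""
    return sum(n_categories ** k for k in range(n_followups))


def regressive_model_count(n_followups):
    """One model per follow-up, with previous outcomes entering as covariates."""
    return n_followups


paths = enumerate_trajectories(3, 3)
print(len(paths))                 # 27
print(markov_model_count(3, 3))   # 13
print(regressive_model_count(3))  # 3
```

With six follow-ups and three categories, as in the illustration later in the paper, the same functions give 729 trajectories, 364 stratified models, and 6 regressive models.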

2.1 Notations

Let \({Y_{i1}},{Y_{i2}},...,{Y_{iJ_i}}\) represent the past and present responses for the ith subject at the jth follow-up, where \((i=1,2,...,n \text { and }j=1,2,...,J_i)\) and \(J_i\) is the number of follow-ups for subject i. For simplicity, the subscript i is omitted in what follows unless explicitly specified. Define \(Y_j=s\), where \((s=0,1,2,...,S)\), so that there are \(S+1\) outcome categories. Category 0 may denote a non-event.

Following the notation used in [13], the joint probability mass function of \(Y_1,Y_2,\cdots ,Y_J\) with covariate vector \({\varvec{X=x}}\) can be expressed as:

\(P(Y_1=y_1,Y_2=y_2,\cdots ,Y_J=y_J\mid {\varvec{x}})=P(Y_1=y_1\mid {\varvec{x}})\times P(Y_2=y_2\mid y_1;{\varvec{x}})\times \cdots \times P(Y_J=y_J\mid y_{J-1},\cdots ,y_1;{\varvec{x}}) \qquad (1)\)

where \({\varvec{X}}^\prime =[1,x_1,...,x_p]\) is the vector of covariates for a subject at the first follow-up. It should be noted that \({\varvec{X}}={\varvec{x}}\) can be time dependent. The functions on the right-hand side of Eq. (1) are explained as follows:

\(P(Y_1 = s\mid {\varvec{x}})=P_s({\varvec{x}})\) is the marginal probability function of \(Y_1\) conditional on \({\varvec{x}}\);

\(P(Y_j = s\mid y_{j-1};{\varvec{x}})=P_{s.y_{j-1}}({\varvec{x}})\) is the probability function of \(Y_j\) conditional on \(y_{j-1}\) and \({\varvec{x}}\) of order one;

\(P(Y_j = s\mid y_{j-1}, y_{j-2};{\varvec{x}})=P_{s.y_{j-1},y_{j-2}}({\varvec{x}})\) is the probability function of \(Y_j\) conditional on \(y_{j-1}, y_{j-2}\) and \({\varvec{x}}\) of order two;

\(P(Y_j = s\mid y_{j-1},y_{j-2},\cdots ,y_1;{\varvec{x}})=P_{s.y_{j-1},y_{j-2},\cdots ,y_1}({\varvec{x}})\) is the probability function of \(Y_j\) conditional on \(y_{j-1},\cdots ,y_1\) and \({\varvec{x}}\) of order \(k=j-1\).

The unconditional probability of the left-hand side of Eq. (1) is defined as:

\(P(Y_1 = y_1,Y_2 = y_2,\cdots ,Y_J = y_J\mid {\varvec{x}})=P_{y_1,y_2,\cdots ,y_J}({\varvec{x}})\).

3 Models

3.1 Proportional odds model (POM)

McCullagh [33] proposed the proportional odds model (POM) to analyze ordinal outcomes as a function of covariates. In this model, the coefficients that describe the relationship between the lower levels and all higher levels of the response variable are the same at every threshold (cut point). This is the proportional odds (parallel regression) assumption, which needs to be tested [9]. We assessed the proportional odds assumption using the Brant test [9]. Fitting the POM to the baseline outcome as a function of covariates provides the marginal model.

Let the outcome \(Y_1\) have \(S+1\) categories \((s=0,1,\ldots ,S)\) with associated probabilities \(\pi _0,\pi _1,\ldots ,\pi _S\) and cumulative probabilities \(P(Y_1 \le s)=\pi _0+\cdots +\pi _s\), where \(P(Y_1 \le 0) \le P(Y_1 \le 1) \le \cdots \le P(Y_1 \le S)\). Then the proportional odds model can be shown as:

or equivalently can be expressed in logit form as

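where the \(\alpha _s\) are the threshold parameters (cut points) and \({\varvec{\beta _1}}=[\beta _1,\beta _2,\cdots ,\beta _p]^\prime \) is the vector of regression coefficients corresponding to the covariate vector \({\varvec{X}}=[X_1,X_2,\cdots ,X_p]^\prime \). This model assumes that the effects of the covariates are the same at all cut points (proportional odds). Then the marginal probability of the sth category is the difference between adjacent cumulative probabilities,

\(P(Y_1=s\mid {\varvec{x}})=P(Y_1\le s\mid {\varvec{x}})-P(Y_1\le s-1\mid {\varvec{x}}) \qquad (4)\)

with \(P(Y_1\le -1\mid {\varvec{x}})=0\) and \(P(Y_1\le S\mid {\varvec{x}})=1\).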
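Category probabilities under a proportional odds model are obtained by differencing adjacent cumulative probabilities. The sketch below illustrates this under an assumed parameterization, logit \(P(Y\le s\mid x)=\alpha _s-x^\prime \beta \), which is one common convention and may differ in sign from the one used in the paper. The thresholds and coefficients are hypothetical values chosen only for illustration.

```python
import math


def logistic(v):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-v))


def pom_category_probs(alphas, beta, x):
    """Category probabilities P(Y = s | x), s = 0..S, under proportional odds.

    Assumed convention (not confirmed by the source):
        logit P(Y <= s | x) = alpha_s - x'beta,
    with increasing thresholds, one per cut point.
    """
    eta = sum(b * xi for b, xi in zip(beta, x))
    # Cumulative probabilities at each cut point; the last category closes at 1.
    cumulative = [logistic(a - eta) for a in alphas] + [1.0]
    probs = [cumulative[0]]
    probs += [cumulative[s] - cumulative[s - 1] for s in range(1, len(cumulative))]
    return probs


# Hypothetical thresholds and coefficients, for illustration only.
alphas = [0.5, 2.0]
beta = [0.8, -0.3]
probs = pom_category_probs(alphas, beta, x=[1.0, 2.0])
print(probs)
```

Because the same `beta` is used at every cut point, the cumulative odds ratio between two covariate patterns is identical across thresholds, which is the proportional odds property.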

3.2 Proposed kth-order proportional odds regressive model

Let \(Y_1,\ldots , Y_J\) be repeated ordinal outcomes with \(S+1\) outcome levels (\(s=0,1,2,\ldots ,S\)). Then the proposed kth-order (\(k=j-1\)) proportional odds regressive model can be shown as follows:

where \(\alpha _s\)’s are the threshold parameters and

is the vector of regression coefficients corresponding to the covariate vector

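Here \(D_{11},\ldots ,D_{1S},D_{21},\ldots ,D_{2S},\ldots ,D_{(j-1)1},\ldots ,D_{(j-1)S}\) are the dummy variables for categories \(1, 2,\ldots ,S\) of \(Y_1,\ldots ,Y_{j-1}\), with 0 as the reference category. Then the conditional probability of the sth category is

\(P(Y_j=s\mid y_{j-1},\cdots ,y_1;{\varvec{z}})=P(Y_j\le s\mid y_{j-1},\cdots ,y_1;{\varvec{z}})-P(Y_j\le s-1\mid y_{j-1},\cdots ,y_1;{\varvec{z}}) \qquad (8)\)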
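The covariate vector of the regressive model appends, for every previous outcome, S indicator variables to the risk factors. A minimal sketch of that dummy coding follows; the helper names `dummy_code` and `build_z` and the example values are hypothetical.

```python
def dummy_code(outcome, n_levels):
    """Indicators for categories 1..S of one outcome; category 0 is the reference."""
    return [1 if outcome == s else 0 for s in range(1, n_levels)]


def build_z(x, previous_outcomes, n_levels):
    """Covariate vector for the regressive model at follow-up j.

    x                 : risk factors X_1, ..., X_p
    previous_outcomes : observed (y_1, ..., y_{j-1})
    n_levels          : number of outcome categories, S + 1
    """
    z = list(x)
    for y in previous_outcomes:
        z.extend(dummy_code(y, n_levels))
    return z


# Two hypothetical risk factors and three previous outcomes with three categories.
z = build_z(x=[67.0, 1.0], previous_outcomes=(2, 0, 1), n_levels=3)
print(z)  # [67.0, 1.0, 0, 1, 0, 0, 1, 0]
```

The resulting vector has \(p+(j-1)S\) entries, here 2 + 3 × 2 = 8, matching the number of regression coefficients in the kth-order model.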

3.3 Partial proportional odds model (PPOM)

If the proportional odds assumption is violated for some predictors, alternative models include the unconstrained and constrained partial proportional odds models [38] and the multinomial logistic model, among others [1, 20, p. 290–292]. The unconstrained partial proportional odds model allows non-proportional odds for the subset of q predictors (\(q<p\), where p is the total number of predictors) for which the proportional odds assumption is violated. Then the marginal model using the baseline outcome can be shown as:

or equivalently can be expressed in logit form as

where \(\alpha _s\) are the cut points, \({\varvec{T}}\) is the subset of the covariate vector for which the proportional odds assumption is violated, \(\gamma _s\) is the vector of regression coefficients corresponding to the q covariates in \({\varvec{T}}\), and \({\varvec{\beta _1^\prime }}\) is the vector of regression coefficients of the covariates that are not in \({\varvec{T}}\). Then, using Eq. (4), we can obtain the marginal probability of the sth category.
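The difference from the proportional odds model is that covariates in \({\varvec{T}}\) receive a separate coefficient vector at each cut point. The sketch below assumes the parameterization logit \(P(Y\le s\mid x)=\alpha _s-(x^\prime \beta +t^\prime \gamma _s)\); this sign convention and all numeric values are assumptions made for illustration.

```python
import math


def ppom_category_probs(alphas, beta, x, gammas, t):
    """Category probabilities under an unconstrained partial proportional odds model.

    Assumed convention (not confirmed by the source):
        logit P(Y <= s | x) = alpha_s - (x'beta + t'gamma_s)
    x      : covariates with proportional odds (common coefficients beta)
    t      : covariates violating proportional odds
    gammas : one coefficient vector per cut point, aligned with alphas
    """
    common = sum(b * xi for b, xi in zip(beta, x))
    cumulative = []
    for alpha, gamma in zip(alphas, gammas):
        specific = sum(g * ti for g, ti in zip(gamma, t))
        cumulative.append(1.0 / (1.0 + math.exp(-(alpha - common - specific))))
    cumulative.append(1.0)
    probs = [cumulative[0]]
    probs += [cumulative[s] - cumulative[s - 1] for s in range(1, len(cumulative))]
    # Cut-point-specific slopes can make the cumulative curves cross.
    if min(probs) < 0:
        raise ValueError("cumulative probabilities are not ordered for this covariate pattern")
    return probs


# Hypothetical values: one proportional covariate, one non-proportional covariate.
probs = ppom_category_probs(
    alphas=[0.5, 2.0], beta=[0.8], x=[1.0], gammas=[[0.2], [-0.1]], t=[1.0]
)
print(probs)
```

Setting every entry of `gammas` to zero recovers the proportional odds probabilities for the covariates in `x` alone. The explicit check on negative differences reflects a known practical issue with unconstrained models: fitted cumulative probabilities are not guaranteed to be ordered for every covariate pattern.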

3.4 Proposed kth-order partial proportional odds regressive model

The kth-order partial proportional regressive model for \(Y_1,\ldots ,Y_j\) can be shown as:

where \(\alpha _{j.s}\) are the cut points, \({\varvec{T}}\), \(\gamma _{j.s}\), and \({\varvec{\beta _{j.y_{j-1}}^\prime }}\) are defined analogously to the corresponding terms in Eq. (10), and \({\varvec{Z}}\) is the covariate vector defined in Eq. (7). The conditional probability of the sth category can be estimated using Eq. (8).

3.5 Multinomial regressive logistic model

Chowdhury and Islam [13] presented the kth-order multinomial regressive logistic model. The first-order multinomial regressive model \(P(Y_2\mid y_1; {\varvec{z}})\) for outcomes \(Y_1\) and \(Y_2\) can be shown as:

\({\varvec{Z^\prime }}=\left[ 1,Z_1,...,Z_p,Z_{p+1},\ldots , Z_{p+S}\right] \) \(=\left[ {\varvec{X^\prime }},{\varvec{D^\prime }}\right] =\left[ 1,X_1,\ldots ,X_p,D_{11},\ldots , D_{1S}\right] \). Here \(D_{11},\ldots , D_{1S}\) are the dummy variables for categories \(1,\ldots , S\) of outcome \(Y_1\) with 0 as the reference category and producing a total of \([(p+1)+S]S\) regression coefficients.

The first and all higher-order regressive models are equivalent in form to the corresponding marginal models. The regressive modeling approach requires fitting only one model for each repeated outcome by incorporating previous responses as covariates along with the risk factors. Besides, it allows the divide and recombine technique for large, complex data. Therefore, one can run the models for all the follow-ups in parallel using parallel programming and multiple processors. We can use R, SAS, STATA, or other software capable of fitting POM, PPOM, and MNOM. It is noteworthy that the regressive models for binary outcomes proposed by Islam and Chowdhury [23] and Bonney [7, 8] are special cases of the proposed regressive models shown in Eq. (12) for s=0,1.
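For the multinomial regressive model, each non-reference category has its own coefficient vector, which is where the count of \([(p+1)+S]S\) coefficients for the first-order model comes from. A minimal sketch of the baseline-category logit probabilities follows, with category 0 as the reference; the coefficient values are hypothetical.

```python
import math


def mnom_category_probs(z, betas):
    """Baseline-category logit probabilities with category 0 as reference.

    z     : covariate vector [1, X_1..X_p, D_11..D_1S]
    betas : betas[s - 1] is the coefficient vector for category s = 1..S
    """
    scores = [sum(b * zi for b, zi in zip(beta, z)) for beta in betas]
    denom = 1.0 + sum(math.exp(v) for v in scores)
    return [1.0 / denom] + [math.exp(v) / denom for v in scores]


def first_order_coefficient_count(p, S):
    """Number of regression coefficients in the first-order model: [(p + 1) + S] * S."""
    return ((p + 1) + S) * S


# Hypothetical example: p = 1 covariate, S = 2, previous outcome y_1 = 1.
z = [1.0, 0.4, 1, 0]
betas = [[-1.0, 0.5, 1.2, 0.8], [-2.0, 0.7, 1.0, 2.5]]
probs = mnom_category_probs(z, betas)
print(probs, first_order_coefficient_count(p=1, S=2))
```

In this example the two coefficient vectors hold 4 entries each, giving the 8 coefficients returned by `first_order_coefficient_count(1, 2)`.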

3.6 Predictive models and joint probabilities

The log-likelihood function of the joint mass function in (1) can be obtained as:

For each proposed model, differentiating the log-likelihood with respect to the parameters and equating the derivatives to zero, we obtain the equations whose solutions give the maximum likelihood estimates for parameters. The observed information matrix can be obtained by taking the second derivative. Then using the Newton–Raphson method, the estimates of the parameters are obtained. These provide the fitted models and are used for predictions.

We can predict the risks of a sequence of outcomes for a subject with specified covariate vector \({\varvec{X}}^*={\varvec{x}}^*\) for a particular trajectory as shown in Fig. 1.

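The predicted joint probabilities \({\hat{P}}(Y_1=y_1,Y_2=y_2,\ldots ,Y_j=y_j\mid {\varvec{x}}^*)\) can be obtained as:

\({\hat{P}}(Y_1=y_1,\ldots ,Y_j=y_j\mid {\varvec{x}}^*)={\hat{P}}(Y_1=y_1\mid {\varvec{x}}^*)\times {\hat{P}}(Y_2=y_2\mid y_1;{\varvec{x}}^*)\times \cdots \times {\hat{P}}(Y_j=y_j\mid y_{j-1},\ldots ,y_1;{\varvec{x}}^*) \qquad (14)\)

For simplicity, consider two repeated outcomes \(Y_1\) and \(Y_2\) with categories \(s=0,1\) and 2. Then, using Eq. (14), the predicted joint probability \({\hat{P}}(Y_1=y_1,Y_2=y_2 \mid {\varvec{x}}^*)\) is

\({\hat{P}}_{y_1y_2}({\varvec{x}}^*)={\hat{P}}_{y_1}({\varvec{x}}^*)\times {\hat{P}}_{y_2.y_1}({\varvec{x}}^*),\quad y_1,y_2=0,1,2 \qquad (15)\)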

We can predict the marginal probabilities \({\hat{P}}_0({\varvec{x}}^*); {\hat{P}}_1({\varvec{x}}^*); {\hat{P}}_2({\varvec{x}}^*)\) from the fitted marginal model and the first-order conditional probabilities \({\hat{P}}_{s.y_1}({\varvec{x}}^*)\) from the fitted first-order regressive model using covariate vector \({\varvec{Z}}=[{\varvec{x}}^*,D_{11},D_{12}]^\prime \) where \(D_{11},D_{12}=0,1\). For example, \({\hat{P}}_{1.0}({\varvec{x}}^*)\) and \({\hat{P}}_{2.0}({\varvec{x}}^*)\) are estimated using \({\varvec{Z}}=[{\varvec{x}}^*,0,0]^\prime \); \({\hat{P}}_{1.1}({\varvec{x}}^*)\) and \({\hat{P}}_{2.1}({\varvec{x}}^*)\) are estimated using \({\varvec{Z}}=[{\varvec{x}}^*,1,0]^\prime \); \({\hat{P}}_{1.2}({\varvec{x}}^*)\) and \({\hat{P}}_{2.2}({\varvec{x}}^*)\) are estimated using \({\varvec{Z}}=[{\varvec{x}}^*,0,1]^\prime \) and so on. Then the joint probabilities for two outcomes \({\hat{P}}_{00}={\hat{P}}_0\times {\hat{P}}_{0.0}\); \({\hat{P}}_{01}={\hat{P}}_0\times {\hat{P}}_{1.0}\) and \({\hat{P}}_{02}={\hat{P}}_0\times {\hat{P}}_{2.0}\) and so on.
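The two-outcome case can be sketched numerically as follows. The code assumes proportional odds models with the convention logit \(P(Y\le s)=\alpha _s-\eta \) for both the marginal and the first-order regressive model. All thresholds and coefficients are hypothetical and stand in for fitted values.

```python
import math


def pom_probs(alphas, coefs, z):
    """Category probabilities from cumulative logits (assumed: alpha_s - z'coefs)."""
    eta = sum(c * v for c, v in zip(coefs, z))
    cum = [1.0 / (1.0 + math.exp(-(a - eta))) for a in alphas] + [1.0]
    return [cum[0]] + [cum[s] - cum[s - 1] for s in range(1, len(cum))]


x_star = [1.0]  # hypothetical single covariate value

# Marginal model for Y_1 (hypothetical fitted values).
marginal = pom_probs(alphas=[0.5, 2.0], coefs=[0.8], z=x_star)

# Dummy coding (D_11, D_12) of the previous outcome y_1, reference category 0.
dummies = {0: [0, 0], 1: [1, 0], 2: [0, 1]}

# First-order regressive model for Y_2 given y_1 (hypothetical fitted values).
joint = {}
for y1 in range(3):
    conditional = pom_probs(
        alphas=[0.7, 2.2], coefs=[0.6, 1.5, 3.0], z=x_star + dummies[y1]
    )
    for y2 in range(3):
        joint[(y1, y2)] = marginal[y1] * conditional[y2]

for path, prob in sorted(joint.items()):
    print(path, round(prob, 4))
```

The nine joint probabilities sum to one, and summing over \(y_2\) for a fixed \(y_1\) returns the marginal probability of \(y_1\), which is a quick consistency check on any implementation of the recombination step.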

4 Tests

4.1 Significance of the joint model

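We can test the significance of the joint model using the likelihood ratio test between the joint constant-only model (Red.) and the joint full model (Full) as follows:

\(-2\left[ \ln L_{\text {Red.}}({\varvec{{\hat{\beta }}_0}})-\ln L_{\text {Full}}({\varvec{{\hat{\beta }}_1}})\right] \sim \chi ^2_d \qquad (16)\)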

The degrees of freedom (d) for three models are as follows:

POM=\([(p+S)+(p+S+S)+(p+2S+S)+\cdots +\{p+(j-1)S+S\}]-jS\).

PPOM=\([(p^\prime +S)+(p^\prime +S+S)+(p^\prime +2S+S)+\cdots +\{p^\prime +(j-1)S+S\}]-jS\).

MNOM=\([(p+1)S+(p+1+S)S+(p+1+2S)S+\cdots +\{p+1+(j-1)S\}S]-jS\).

Here \({\varvec{{\hat{\beta }}_0}}\) and \({\varvec{{\hat{\beta }}_1}}\) include the regression coefficients from the constant-only joint model and the full joint model, respectively. Table 1 displays the degrees of freedom for the different models.
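The degrees-of-freedom expressions for POM and MNOM can be evaluated with a short helper. This is a sketch that assumes the same number of covariates p at every follow-up; the values of p, S, j and the log-likelihoods below are hypothetical and are not taken from Table 1 or Table 5.

```python
def df_pom(p, S, j):
    """Joint-model degrees of freedom for POM: the m-th model has p covariates,
    (m - 1) * S previous-outcome dummies and S thresholds; the j * S thresholds
    of the constant-only models are subtracted."""
    full = sum(p + (m - 1) * S + S for m in range(1, j + 1))
    return full - j * S


def df_mnom(p, S, j):
    """Joint-model degrees of freedom for MNOM: each of the S non-reference
    categories has its own intercept and coefficient vector."""
    full = sum((p + 1 + (m - 1) * S) * S for m in range(1, j + 1))
    return full - j * S


def lr_statistic(loglik_reduced, loglik_full):
    """Likelihood ratio statistic -2 [ln L(reduced) - ln L(full)]."""
    return -2.0 * (loglik_reduced - loglik_full)


print(df_pom(p=2, S=2, j=3))           # 12
print(df_mnom(p=2, S=2, j=3))          # 24
print(lr_statistic(-1200.0, -1000.0))  # 400.0
```

The statistic would then be compared with a chi-square distribution on the computed degrees of freedom.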

4.2 Test for order of the regressive model

To test the order of the regressive model, i.e., whether a given response depends on any of the previous ones, we used the test presented by Chowdhury and Islam [13]. The null hypothesis for the kth-order \((k=j-1)\) regressive model is

which can be tested using following test statistic:

which is asymptotically distributed as \(\chi ^2\) with \([p+(j-1)S+S]-\{(j-1)S\}\) degrees of freedom. The term \([p+(j-1)S+S]\) is the total number of parameters of the \((j-1)\)th-order regressive model. The quantity \((j-1)S\) is the number of previous outcomes \(y_1,\ldots ,y_{j-1}\) multiplied by the number of dummy variables per outcome (S). Then we can perform the test as follows:

(i) The likelihood ratio test can be used to test the significance of the overall model at the first stage.

(ii) The Wald test can be used to test the significance of the parameter(s) corresponding to the previous outcomes.

4.3 Overfitting, underfitting and predictive accuracy

We evaluated the performance and predictive capability of the models by estimating the prediction accuracy from the confusion matrix for the training, test, and full data sets, which also allows checking for over(under)fitting [27, p. 21, 29]. Well-fitting models with better discriminative ability and predictive power will have higher prediction accuracy.
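Prediction accuracy from a confusion matrix is the share of observations on the diagonal. The sketch below assumes that the predicted class is the category with the largest predicted probability, which is a common rule but is not stated in the text. The observed and predicted values are made up for illustration.

```python
def predicted_class(probs):
    """Assumed classification rule: the category with the largest probability."""
    return max(range(len(probs)), key=lambda s: probs[s])


def confusion_matrix(observed, predicted, n_levels):
    """Rows are observed categories, columns are predicted categories."""
    matrix = [[0] * n_levels for _ in range(n_levels)]
    for obs, pred in zip(observed, predicted):
        matrix[obs][pred] += 1
    return matrix


def accuracy(matrix):
    """Proportion of observations on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[s][s] for s in range(len(matrix)))
    return correct / total


# Made-up observed and predicted categories for eight subjects.
observed = [0, 0, 1, 2, 2, 1, 0, 0]
predicted = [0, 0, 1, 2, 1, 1, 0, 1]
matrix = confusion_matrix(observed, predicted, n_levels=3)
print(matrix)            # [[3, 1, 0], [0, 2, 0], [0, 1, 1]]
print(accuracy(matrix))  # 0.75
```

Computing this quantity separately on the training and test partitions and comparing the two values is the over(under)fitting check described above: a training accuracy well above the test accuracy would point to overfitting.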

5 An illustration

The panel data from the Health and Retirement Study (HRS), sponsored by the National Institute on Aging (grant number NIA U01AG09740) and conducted by the University of Michigan [21], are used for illustration. We used data from follow-up six to follow-up eleven of the RAND version. At follow-up six, the minimum age of the subjects was 60. The outcome variable considered is the Activity of Daily Living (ADL) Index. The ADL outcomes from follow-up six to follow-up eleven are denoted by \(Y_1, Y_2, Y_3, Y_4, Y_5\), and \(Y_6\), respectively. This index ranges from 0 to 5 and is the sum of five tasks (yes/no): whether respondents faced difficulties in walking, dressing, bathing, eating, and getting in/out of bed. Due to small frequencies, we recategorized all six ADL outcomes: 0 as 0, 1 or 2 as 1, and 3 or more as 2. We termed these categories independent (i.e., free of ADL difficulty), mild ADL difficulty, and severe ADL difficulty, respectively. The explanatory variables considered are: age (in years), marital status (married/partnered = 1, single/separated = 0), whether drink (yes = 1, no = 0), gender (male = 1, female = 0), ncond (number of conditions ever had, range 0–8), white (yes = 1, no = 0), black (yes = 1, no = 0) with others as the reference category, education (in years), veteran status (1 = yes, 0 = no), mobility (mobility index, range 0–5), BMI (body mass index), CESD (mental health index, range 0–8), lmuscle (large muscle index, range 0–4), gskills (gross motor skills, range 0–5), and wrecall (word recall score, range 0–20). More details about the variables can be found in the RAND HRS Longitudinal File 2016 (V1) documentation. Table 2 presents the frequency distribution of the outcome variables \(Y_1, \ldots ,Y_6\).
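The recategorization of the ADL index into three ordinal categories can be written as a small function. The function name and label dictionary are illustrative; the cut points follow the description above.

```python
ADL_LABELS = {0: "independent", 1: "mild", 2: "severe"}


def recode_adl(index):
    """Map the 0-5 ADL index to three categories: 0 -> 0, 1 or 2 -> 1, 3 or more -> 2."""
    if not 0 <= index <= 5:
        raise ValueError("ADL index must be between 0 and 5")
    if index == 0:
        return 0
    if index <= 2:
        return 1
    return 2


recoded = [recode_adl(v) for v in range(6)]
print(recoded)                           # [0, 1, 1, 2, 2, 2]
print([ADL_LABELS[c] for c in recoded])
```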

Tables 3 and 4 display the parameter estimates, significance levels, standard errors, and Brant p-values for testing the proportional odds assumption from POM for the marginal and regressive models. Various predictors are significantly associated with the outcome variables in different models. In particular, gender, mobility, lmuscle, and gskills were found to be significantly associated with the outcome in all models (Tables 3 and 4). Most of the dummy indicators for previous outcomes are significantly and positively associated with the current outcomes, except for some in the higher-order models. The Brant test results indicated a violation of the proportional odds assumption for different covariates in the marginal and all regressive models. We fitted the PPOM to handle the variables that violated the proportional odds assumption in POM. We also fitted the multinomial regressive logistic model by ignoring the ordinal nature of the outcomes. The parameter estimates of PPOM and MNOM are shown in the supplementary materials (Appendix A: Tables 6, 7, 8, 9 and 10). All the models were fitted in parallel using three cores of a desktop computer (Intel i5-4590 CPU, 3.30 GHz, four cores) with an NVIDIA Quadro FX 370 LP graphics card. Fitting the POM for all six follow-ups was fast (CPU time: user 0.45, system 0.09, and elapsed 13.00).

Table 5 displays model statistics, including the log-likelihood values for the constant-only model and the full model, for the marginal and all higher-order models for POM, PPOM, and MNOM. The likelihood ratio test between the constant-only and full models is statistically significant (\(p < 0.001\)) for POM, PPOM, and MNOM. The prediction accuracy based on the confusion matrix for the full, training, and test data varies between 0.91 and 0.94. Prediction accuracy is very similar across POM, PPOM, and MNOM. Also, the accuracy values from the full, training, and test data are very close for POM, PPOM, and MNOM, which indicates the absence of over(under)fitting for all models and good generalization for out-of-sample subjects.

5.1 Predicted trajectories

To illustrate, we showed the prediction for three selected trajectories. Those trajectories are (i) remains ADL difficulty free for all outcomes \(P_{0,0,0,0,0,0}({\varvec{x}})\), (ii) mild ADL difficulty for all outcomes \(P_{1,1,1,1,1,1}({\varvec{x}})\), and (iii) severe ADL difficulty for all outcomes \(P_{2,2,2,2,2,2}({\varvec{x}})\).

5.1.1 Impact of gender on trajectory

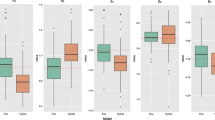

Figure 2 displays the predicted joint probabilities by gender from the three models (POM, PPOM, and MNOM) for the three trajectories. The predicted risk at follow-up six in the graphs is the marginal probability, while the values from follow-up seven onward are the joint probabilities. Table 11 in Appendix presents the outcomes and covariates for all six follow-ups for these subjects. The differences by gender and model are clearest for trajectory \(P_{2,2,2,2,2,2}({\varvec{x}})\). The predicted risk for this trajectory from PPOM is the highest for the male (top line) compared to that of the female (the third solid line from the top). The differences between trajectories by gender and model are due to the significant positive association of gender with the outcomes at all follow-ups, with PPOM showing better predictions because of the violation of the proportional odds assumption (Tables 3 and 4). The first two panels show the other two trajectories, \(P_{0,0,0,0,0,0}({\varvec{x}})\) and \(P_{1,1,1,1,1,1}({\varvec{x}})\), from the different models. The joint probabilities (second follow-up onward) for these trajectories are close to zero because all the observed ADL difficulty from the six follow-ups for these subjects was severe (Table 11 in Appendix). The predicted joint probabilities for trajectory \(P_{2,2,2,2,2,2}({\varvec{x}})\) for all figures are shown in Table 18 in Appendix.

5.1.2 Impact of mobility index on trajectory

Next, we assessed the impact of two values of the mobility index (0 and 5) on a trajectory for a female subject (Fig. 3). Other covariates remain the same as in Table 11 in Appendix. The predicted risks of trajectories for a mobility index of zero were close to one from all three models, and those three trajectories coincided (top line in the bottom panel). A higher value (5) of the mobility index reduces ADL difficulty compared to a value of zero. This reduction is due to the significant negative association of mobility with the outcomes at all follow-ups. For a mobility index of 5, the predicted joint probabilities for the trajectory were highest from POM, followed by PPOM and MNOM, respectively.

5.1.3 Impact of large muscle index on trajectory

The large muscle index showed significant positive associations with the outcomes in the marginal and all regressive models. Figure 4 displays the trajectories for two values (0 and 4) of this index from the three models for a male subject. Zero means no problem with the four tasks included in this index, and four means difficulties with all four tasks. The top lines in the bottom panel, which are for path \(P_{2,2,2,2,2,2}({\varvec{x}})\) from POM, PPOM, and MNOM with a large muscle index value of four, are close to one. For a large muscle index value of zero, these lines were followed by trajectories from MNOM, PPOM, and POM, respectively.

5.1.4 Impact of large muscle index on trajectory with mild ADL difficulties

Next, we assessed the impact of the large muscle index on trajectory \(P_{2,2,2,2,2,2}({\varvec{x}})\) with mild ADL difficulty for all outcomes (Fig. 5). Trajectories from all three models with a value of 4 for this index gave predicted probabilities close to one (top three lines). A large muscle index value of zero lowered the probabilities sharply. The lowest reduction was from POM, followed by PPOM and MNOM, respectively. Controlling the covariates that are significantly associated with the outcomes, together with previous episodes of ADL difficulty, markedly slows the progression of the disease.

5.1.5 Impact of large muscle, mobility index, and previous outcomes on trajectory

Lastly, we assessed the joint impact of a large muscle index of 0 and a mobility index of 5 on trajectory \(P_{2,2,2,2,2,2}({\varvec{x}})\) with mild ADL difficulty for all outcomes (Fig. 6). The highest reduction was from PPOM, followed by POM and MNOM, respectively. It is clear from the figure that by controlling risk factors, ADL difficulty can be reduced significantly over time.

6 Bootstrapping

We performed 10,000 bootstrap simulations and computed the bias, standard error, and mean squared error to measure the accuracy of the parameter estimates and of the predicted joint probabilities for trajectories. We used the nonparametric bootstrap, randomly sampling with replacement from the original longitudinal data, and made no assumptions about how the observations are distributed when generating bootstrap samples. As the bootstrap samples are drawn with replacement, the observations not included in a given bootstrap sample are used as the test data for assessing the model’s generalizing ability. For POM, the estimates from Tables 3 and 4 are treated as the population parameters when computing bias, standard error, and mean squared error. For MNOM, we used Tables 8, 9, and 10 in Appendix as the population parameters. We could not bootstrap the PPOM parameter estimates, as the set of covariates violating the proportional odds assumption varied across bootstrap samples (Tables 6 and 7 in Appendix). For POM, the bias is generally minimal (less than 1 percent) for the parameter estimators of all models, along with very low standard errors and mean squared errors (Tables 12 and 13 in Appendix). The same is true for MNOM (Tables 14, 15 and 16 in Appendix). We did, however, compute bootstrap estimates of the prediction accuracy and of the predicted joint probabilities for trajectories for the full, training, and test data sets for POM, PPOM, and MNOM. The bias of the prediction accuracy from all three models for the full, training, and test data is less than 0.01 percent (Table 17 in Appendix). Table 18 in Appendix displays the bootstrap estimates of the predicted joint probabilities for the subject used in all the graphs. The bootstrap means (\({\bar{x}}\)) of the predicted joint probabilities are very similar to the population values; the biases are negligible, and the average bootstrap estimates essentially coincide with the population estimates.
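The bootstrap summary measures can be sketched as follows. This is a simplified illustration, not the authors' code: each list element stands for one subject, the estimator is a simple proportion rather than a fitted regression model, and the full-sample estimate plays the role of the population value, as in the text. The data and the number of replications are hypothetical.

```python
import random
import statistics


def bootstrap_summary(data, estimator, n_boot, seed=0):
    """Nonparametric bootstrap: bias, standard error and mean squared error of an
    estimator, with the full-sample estimate treated as the population value."""
    rng = random.Random(seed)
    n = len(data)
    target = estimator(data)
    estimates, oob_fractions = [], []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # sample subjects with replacement
        estimates.append(estimator([data[i] for i in idx]))
        # Subjects never drawn form the out-of-bag (test) set for this replicate.
        oob_fractions.append(1.0 - len(set(idx)) / n)
    bias = statistics.fmean(estimates) - target
    se = statistics.stdev(estimates)
    mse = statistics.fmean([(e - target) ** 2 for e in estimates])
    return {
        "bias": bias,
        "se": se,
        "mse": mse,
        "oob_fraction": statistics.fmean(oob_fractions),
    }


def proportion_severe(outcomes):
    """Share of subjects in the severe category (coded 2)."""
    return sum(1 for y in outcomes if y == 2) / len(outcomes)


# Hypothetical outcomes for 300 subjects, one third in each category.
data = [i % 3 for i in range(300)]
summary = bootstrap_summary(data, proportion_severe, n_boot=500)
print(summary)
```

The mean squared error decomposes into the variance of the bootstrap estimates plus the squared bias, so a negligible bias means the mean squared error is driven almost entirely by the standard error.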

7 Conclusion

In this paper, we proposed (i) proportional odds and (ii) partial proportional odds regressive models, along with a framework to predict the risks for a sequence of ordinal responses from longitudinal studies that can make transitions through different trajectories. The proposed models and the risk prediction framework are a new development. We also compared the results from POM and PPOM with the multinomial regressive logistic model of Chowdhury and Islam [13]. Using a well-known longitudinal data set, we illustrated the proposed models, with the estimates computed conditionally for each stage in the process. The conditional estimates are then linked, using the marginal model and the sequence of conditional models, to provide the joint model and the trajectory. The proposed modeling framework allows answering different questions of interest to researchers, clinicians, and policymakers. (i) We can observe the conditional probability estimate, and hence the class prediction, at each stage. This conditional probability allows us to assess the effect of responses from previous follow-ups. We can also compare the predicted sequence from all time points to the observed one. [31] suggested that, for repeated measures, this must generally be conditioned on the previous history of a subject. [30] concluded that the conditional models are of fundamental interest, and that one can make marginal predictions from conditional models. (ii) The estimated joint probability provides the trajectory, which is of vital importance. Using this trajectory, one can see how individual responses change over time, which is the advantage of repeated measures [31]. (iii) The model allows interactions among previous outcomes and between previous outcomes and predictors. The interaction terms may provide a better understanding of the underlying disease process and the relationships between outcomes and related risk factors. (iv) In the proposed regressive model, it is easy to include varying numbers of predictors at each stage. Besides, one can easily add a terminal event at each stage, for example, death as the last category of the outcome variables [18, 25].

We showed the likelihood ratio test and AIC for the marginal and regressive models. When the outcomes are treated as ordinal, the PPOM performed better than the POM, as its AIC was the lowest for the marginal and all regressive models. This pattern was also evident in the figures for predicted trajectories. The classical marginal models (such as GEE) provide the average relationships estimated from repeated observations. However, the transition probabilities may depend on different models, each model representing a transition from one stage to another. [31] examined some important theoretical aspects of the marginal models and demonstrated various limitations. Another alternative is the subject-specific model, which accounts for random effects by including random effect terms in the linear predictor [10]. However, the proposed method provides a more comprehensive, flexible, and attractive setup for addressing the risk prediction of repeated ordinal outcomes emerging from longitudinal studies.

A significant improvement of the proposed approach is the reduction of over-parameterization, as it requires only one model for each follow-up, in contrast to a sequence of conditional models such as Markov models. The proposed modeling framework can readily use other available models for ordinal outcomes (e.g., continuation ratio, stereotype, adjacent category). Also, one can use different machine learning algorithms (e.g., neural network, support vector machine, decision tree, and random forest) for multiclass classification and trajectory prediction in the proposed framework. Besides, the proposed method will be beneficial for analyzing big data with a large number of repeated outcomes, as it readily permits the use of a divide and recombine approach for big data in a statistically valid manner [14, 17]. Follow-ups are a natural conditioning variable that allows data division and recombination for the trajectory using Eq. (1). For a large sample size in the follow-ups, a second-level data division is also possible [28]. One can then analyze all subsets using multiple cores in a single computer or several CPUs in a distributed system [22]. We believe the proposed methods can be applied to the analysis and risk prediction of a sequence of events in many fields of study, such as epidemiology, public health, survival analysis, genetics, reliability, and environmental studies.
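The division by follow-up and the recombination into a trajectory can be sketched in a few lines. This is only an illustration under stated assumptions: the data are a toy set of outcome sequences, the per-subset "model" is a stand-in that uses empirical conditional frequencies rather than a fitted POM or PPOM, recombination is assumed to be the product of the marginal and conditional estimates, and all function names are hypothetical.

```python
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor

def divide_by_followup(sequences):
    """Divide: one subset per follow-up, each holding (history, outcome) pairs."""
    n_followups = len(sequences[0])
    return [
        [(tuple(seq[:t]), seq[t]) for seq in sequences]
        for t in range(n_followups)
    ]

def fit_subset(subset):
    """Stand-in for one model per follow-up: empirical P(outcome | history)."""
    counts = defaultdict(Counter)
    for history, outcome in subset:
        counts[history][outcome] += 1
    return {
        h: {y: n / sum(c.values()) for y, n in c.items()}
        for h, c in counts.items()
    }

def recombine(fits, trajectory):
    """Recombine: multiply the per-follow-up estimates along the trajectory."""
    prob = 1.0
    for t, y in enumerate(trajectory):
        prob *= fits[t].get(tuple(trajectory[:t]), {}).get(y, 0.0)
    return prob

# Toy outcome sequences over three follow-ups (made-up data).
sequences = [(0, 0, 1), (0, 1, 1), (0, 0, 0), (1, 1, 2), (0, 0, 1), (1, 2, 2)]
subsets = divide_by_followup(sequences)

# Each subset is analyzed independently, so the fits can run in parallel.
with ThreadPoolExecutor() as pool:
    fits = list(pool.map(fit_subset, subsets))
```

Because the stand-in model is saturated, `recombine(fits, (0, 0, 1))` reproduces the observed share of that sequence in the toy data, which is a convenient check that division and recombination are consistent. With regression models fitted to each subset, the same structure would yield covariate-dependent trajectory risks.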

References

Agresti, A.: Categorical Data Analysis, 3rd edn. Wiley, Hoboken, New Jersey (2013)

Ananth, C.V., Kleinbaum, D.G.: Regression models for ordinal responses: a review of methods and applications. Int. J. Epidemiol. 26(6), 1323–1333 (1997)

Anderson, J.A.: Regression and ordered categorical variables. J. R. Stat. Soc. Ser. B Methodol. 46(1), 1–30 (1984)

Barnes, D.E., Mehta, K.M., Boscardin, W.J., et al.: Prediction of recovery, dependence or death in elders who become disabled during hospitalization. J. Gen. Intern. Med. 28(2), 261–268 (2013)

Beddoes-Ley, L., Khaw, D., Duke, M., Botti, M.: A profile of four patterns of vulnerability to functional decline in older general medicine patients in Victoria, Australia: a cross sectional survey. BMC Geriatr. 16(150), 1–12 (2016)

Bodilsen, A.C., Klausen, H.H., Petersen, J., et al.: Prediction of mobility limitations after hospitalization in older medical patients by simple measures of physical performance obtained at admission to the emergency department. PLoS ONE 11(5), 1–19 (2016)

Bonney, G.E.: Regressive logistic models for familial disease and other binary trials. Biometrics 42(3), 611–625 (1986)

Bonney, G.E.: Logistic regression for dependent binary observations. Biometrics 43(4), 951–973 (1987)

Brant, R.: Assessing proportionality in the proportional odds model for ordinal logistic regression. Biometrics 46(4), 1171–1178 (1990)

Breslow, N.E., Clayton, D.G.: Approximate inference in generalized linear mixed models. J. Am. Stat. Assoc. 88(421), 9–25 (1993)

Brown, R.T., Diaz-Ramirez, L.G., Boscardin, W.J., Lee, S.J., Williams, B.A., Steinman, M.A.: Association of functional impairment in middle age with hospitalization, nursing home admission, and death. JAMA Int. Med. 179(5), 668–675 (2019)

Bøvelstad, H.M., Nygard, S., Borgan, O.: Survival prediction from clinico-genomic models - a comparative study. BMC Bioinf. 10(413), 1–9 (2009)

Chowdhury, R.I., Islam, M.A.: Regressive models for risk prediction for repeated multinomial outcomes: an illustration using Health and Retirement Study (HRS) data. Biomet. J. (2020). https://doi.org/10.1002/bimj.201800101

Cleveland, W.S., Hafen, R.: Divide and recombine (D&R): data science for large complex data. Stat. Anal. Data Min. ASA Data Sci. J. 7(6), 425–433 (2014)

Fox, E.R., Samdarshi, T.E., Musani, S.K., et al.: Development and validation of risk prediction models for cardiovascular events in black adults. JAMA Cardiol. 1(1), 15–25 (2016)

Gottschau, A.: Markov chain model for multivariate binary panel data. Scand. J. Stat. 21(1), 57–71 (1994)

Guha, S., Hafen, R., Rounds, J., Xia, J., Li, J., Xi, B., Cleveland, W.S.: Large complex data: divide and recombine (D&R) with RHIPE. Stat 1(1), 53–67 (2012)

Harezlak, J., Gao, S., Hui, S.L.: An illness-death stochastic model in the analysis of longitudinal dementia data. Stat. Med. 22(9), 1465–1475 (2003)

Hosmer, D.W., Lemeshow, S.: Applied Logistic Regression, 2nd edn. Wiley, New York (2000)

Hosmer, D.W., Lemeshow, S.: Applied Logistic Regression, 3rd edn. Wiley, New Jersey (2013)

HRS: Public Use Dataset, Health and Retirement Study. University of Michigan, Ann Arbor, MI (2019)

Hwang, H., Ryan, L.: Statistical strategies for the analysis of massive data sets. Biomet. J. (2019). https://doi.org/10.1002/bimj.201900034

Islam, M.A., Chowdhury, R.I.: Prediction of disease status: a regressive model approach for repeated measures. Stat. Methodol. 7(5), 520–540 (2010)

Islam, M.A., Chowdhury, R.I., Huda, S.: Markov Models with Covariate Dependence for Repeated Measures. Nova Science, New York (2009)

Islam, M.A., Chowdhury, R.I., Huda, S.A.: Multistate transition model for analyzing longitudinal depression data. Bull. Malays. Math. Sci. Soc. (2) 36(3), 637–655 (2013)

Islam, M.A., Chowdhury, R.I., Singh, K.P.: A Markov model for analyzing polytomous outcome data. Pak. J. Stat. Oper. Res. 8(3), 593–603 (2012)

James, G., Witten, D., Hastie, T., Tibshirani, R.: An Introduction to Statistical Learning with Applications in R. Springer, New York (2013)

Karim, M.R., Islam, M.A.: Reliability and Survival Analysis. Springer, Singapore (2019)

Lee, K., Daniels, M.J.: A class of Markov models for longitudinal ordinal data. Biometrics 63(4), 1063–1067 (2007)

Lee, Y., Nelder, J.A.: Conditional and marginal models: another view. Stat. Sci. 19(2), 219–228 (2004)

Lindsey, J.K., Lambert, P.: On the appropriateness of marginal models for repeated measurements in clinical trials. Stat. Med. 17(4), 447–469 (1998)

Liski, E.P., Nummi, T.: Prediction in repeated-measures models with engineering applications. Technometrics 38(1), 25–26 (1996)

McCullagh, P.: Regression models for ordinal data. J. R. Stat. Soc. B 42(2), 109–142 (1980)

McCullagh, P., Nelder, J.A.: Generalized Linear Models. Chapman and Hall, London/New York (1983)

Miller, M.E., Ten Have, T.R., Reboussin, B.A., Lohman, K.K., Rejeski, W.J.: Marginal model for analyzing discrete outcomes from longitudinal surveys with outcomes subject to multiple-cause nonresponse. J. Am. Stat. Assoc. 96(455), 844–857 (2001)

Muenz, L.R., Rubinstein, L.V.: Markov models for covariate dependence of binary sequence. Biometrics 41(1), 91–101 (1985)

Pescosolido, B.A.: Patient Trajectories, pp. 1770–1777. American Cancer Society (2013). https://doi.org/10.1002/9781118410868.wbehibs282

Peterson, B., Harrell, F.E.: Partial proportional odds models for ordinal response variables. J. R. Stat. Soc. Ser. C 39(2), 205–217 (1990)

Tripepi, G., Heinze, G., Jager, K.J., Stel, V.S., Dekker, F.W., Zoccali, C.: Risk prediction models. Nephrol. Dial. Transplant. 28(8), 1975–1980 (2013)

Wallace, E., Stuart, E., Vaughan, N., et al.: Risk prediction models to predict emergency hospital admission in community-dwelling adults: a systematic review. Med. Care 52(8), 751–765 (2014)

Wen, Y., He, Z., Li, M., Lu, Q.: Risk prediction modeling of sequencing data using a forward random field method. Sci. Rep. 6(21120) (2016)

Yu, F.: Use of a Markov transition model to analyse longitudinal low-back pain data. Stat. Methods Med. Res. 12(4), 321–331 (2003)

Acknowledgements

We gratefully acknowledge that this study was supported by the HEQEP subproject 3293 of the University Grants Commission of Bangladesh and the World Bank. The authors also thank the HRS (Health and Retirement Study), which is sponsored by the National Institute on Aging and conducted by the University of Michigan, for providing the data.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Shahariar Huda.

Professor M. Ataharul Islam passed away in December 2020. A significant portion of this work was done before he became severely ill.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

See Tables 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17 and 18.

Cite this article

Chowdhury, R.I., Islam, M.A. Predictive Models for Trajectory Risks Prediction from Repeated Ordinal Outcomes. Bull. Malays. Math. Sci. Soc. 45 (Suppl 1), 161–209 (2022). https://doi.org/10.1007/s40840-022-01277-1

DOI: https://doi.org/10.1007/s40840-022-01277-1