Abstract

In this paper, a general five-step discrete-time Zhang neural network is proposed for time-varying nonlinear optimization (TVNO). First, based on the bilinear transform and the Routh–Hurwitz stability criterion, we propose a general five-step third-order Zhang et al. discretization (ZeaD) formula, which is shown to be convergent with truncation error \(O(\tau ^3)\), where \(\tau >0\) is the sampling gap. Second, based on the designed ZeaD formula and the continuous-time Zhang dynamics design formula, a general five-step discrete-time Zhang neural network (DTZNN) model with quartic steady-state error pattern is presented for TVNO, which includes many multi-step DTZNN models as special cases. Third, based on the Jury criterion, we derive the effective domain of the step size in the DTZNN model, which determines its convergence and convergence speed. Fourth, a nonlinear programming model is established to determine the optimal step size. Finally, simulation results and discussions are given to validate the theoretical results obtained in this paper.

1 Introduction

Time-varying nonlinear optimization (TVNO) has a long history [1] and arises naturally in various fields such as robotics, machine learning, signal and image processing, economic management, and control theory; see, e.g., [2, 3, 5, 7,8,9,10,11]. Because it describes the dynamic properties of such processes more accurately than classical static nonlinear optimization, it is better suited to modeling real-world problems.

Let \(f: {\mathcal {R}}^n\times {\mathcal {R}}_+\rightarrow {\mathcal {R}}\) be a convex function parameterized over time, i.e., f(x, t), where \(x\in {\mathcal {R}}^n\) is the decision variable and \(t\ge 0\) is time. The TVNO can be formulated as

\(\min _{x(t)\in {\mathcal {R}}^n} f(x(t),t),\quad t\in [0,t_f],\qquad (1)\)

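where \(t_f\) is the final time of the given time duration. In the following, let g(x(t), t) denote the partial derivative of f(x(t), t) with respect to the first variable x and \(g_t'(x(t),t)\) denote the partial derivative of the mapping g(x(t), t) with respect to the second variable t, i.e.,

\(g(x(t),t)=\frac{\partial f(x(t),t)}{\partial x}\in {\mathcal {R}}^n\)

and

\(g_t'(x(t),t)=\frac{\partial g(x(t),t)}{\partial t}\in {\mathcal {R}}^n.\)

Let H(x(t), t) denote the Hessian matrix of f in problem (1) with respect to x, i.e.,

\(H(x(t),t)=\frac{\partial ^2 f(x(t),t)}{\partial x\,\partial x^\top }\in {\mathcal {R}}^{n\times n},\)

which is assumed to be nonsingular throughout the paper.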

Generally, to find the minimum of f(x(t), t) at each point in time, one usually samples problem (1) at defined sampling times \(t_k\) (\(k=0, 1,\ldots \)) with sampling period \(\tau =t_{k+1}-t_k\) and considers a sequence of time-invariant nonlinear optimizations:

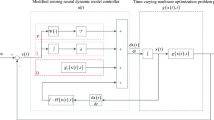

If the expression of the convex function \(f(x(t_{k+1}),t_{k+1})\) is available in advance, problem (2) can be solved by classical iterative algorithms for static nonlinear optimization [12, 13], e.g., the steepest descent method, the conjugate gradient method, the Newton method, and quasi-Newton methods. However, these approaches are very time-consuming, because an inner iteration has to be carried out at every sampling time. Thus one should instead pursue an online approach, which finds an approximate solution, denoted by \(x^*(t_{k+1})\), of problem (2) with a single iteration based on the information \(x(t_j), f(x(t_j),t_j)\), \(g(x(t_j),t_j)\), \(g_t'(x(t_j),t_j), H(x(t_j),t_j)\), where \(j\le k\). As time goes on, the approximate solution \(x^*(t_{k+1})\) quickly approaches the minimum trajectory of problem (2) with high accuracy and stability. To generate approximate solutions of problem (2), we adopt an influential online algorithm, namely the following continuous-time ZNN (CTZNN) model [2] designed by Zhang et al. [2, 14,15,16]:

\({\dot{x}}(t)=-H^{-1}(x(t),t)\big (\gamma g(x(t),t)+g_t'(x(t),t)\big ),\qquad (3)\)

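where the scaling factor \(\gamma >0\) determines the convergence rate. Setting \(t=t_k\) in (3) gives

\({\dot{x}}_k=-H^{-1}(x_k,t_k)\big (\gamma g(x_k,t_k)+g_t'(x_k,t_k)\big ),\qquad (4)\)

where \(x_k=x(t_k)\) and \({\dot{x}}_k={x}'(t)|_{t=t_k}\).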

Further, if a numerical differentiation formula is used to approximate the first-order derivative \({x}'(t)|_{t=t_k}\), DTZNN models with different steady-state error patterns are obtained [2, 5,6,7]. For example, the one-step DTZNN model with \({\mathcal {O}}(\tau ^2)\) steady-state error pattern proposed in [2], which follows from the Euler forward difference, reads

\(x_{k+1}=x_k-H^{-1}(x_k,t_k)\big (h\,g(x_k,t_k)+\tau g_t'(x_k,t_k)\big ),\)

where \(h=\gamma \tau \in (0,2)\) is the step size; the general two-step DTZNN model with \({\mathcal {O}}(\tau ^2)\) steady-state error pattern is presented in [4, 5]:

where \(a_1\in \big (-{1/2},+\infty \big )\); the general three-step DTZNN model with \({\mathcal {O}}(\tau ^3)\) steady-state error pattern is obtained in [6]:

where \(a_1<0\); and the general four-step DTZNN model with \({\mathcal {O}}(\tau ^4)\) steady-state error pattern is investigated in [7]:

where \(a_1\in \big ({1/12},{1/6}\big )\). Besides, some special cases of multi-step DTZNN models are proposed in [16, 17]. In this paper, we further study DTZNN models and present a general five-step DTZNN model for problem (2), which includes the general four-step DTZNN model (9) as a special case, and we prove that its steady-state error pattern is the same as that of the four-step DTZNN model, i.e., \({\mathcal {O}}(\tau ^4)\). We also investigate the feasible region of the step size h, which determines the convergence and convergence speed, as well as the optimal step size. The main contributions of this paper are as follows:

- (1)

We present a general five-step third-order Zhang et al. discretization (ZeaD) formula with truncation error \(O(\tau ^3)\), and discuss its convergence and stability in detail.

- (2)

We design a general five-step discrete-time Zhang neural network (DTZNN) model with quartic steady-state error pattern, which includes many multi-step DTZNN models as its special cases.

- (3)

The feasible region of the step size h in the above DTZNN is identified, and the optimal step size is also discussed.

- (4)

The high efficiency of the designed DTZNN is substantiated by some simulation results.

The remainder of the paper is organized as follows. Some basic results are collected in Sect. 2. In Sect. 3, based on the bilinear transform and the Routh–Hurwitz stability criterion, we propose a general five-step third-order ZeaD formula to approximate and discretize the first-order derivative of a function. In Sect. 4, based on the ZeaD formula and Eq. (4), we obtain a general five-step DTZNN model for problem (2); using the Jury criterion, we determine the feasible region of the step size in this model, and we establish a nonlinear programming model to determine the optimal step size. In Sect. 5, the theoretical results obtained in Sects. 3 and 4 are validated through numerical simulations. Section 6 concludes the paper with a brief remark.

2 Preliminaries

The function f(x, t) in problem (1) is assumed to be convex throughout this paper, so that the set of its stationary points coincides with the set of its global minimizers. Furthermore, we use the following definition to measure the convergence of the online DTZNN algorithm for the sequence of nonlinear optimizations (2).

Definition 2.1

The n-step DTZNN model for problem (2) defined by

\(x_{k+1}=F(x_k,x_{k-1},\ldots ,x_{k-n+1})\)

is convergent with \(O(\tau ^p)\) steady-state error pattern in the sense that, for sufficiently large \(k>0\),

\(\Vert g(x_{k+1},t_{k+1})\Vert ={\mathcal {O}}(\tau ^p),\)

where \(p>0\) is an integer; \(F: {\mathcal {R}}^n\times {\mathcal {R}}^n\times \cdots \times {\mathcal {R}}^n\rightarrow {\mathcal {R}}^n\) is a multivariate mapping; and g(x(t), t) is the partial derivative of f(x(t), t) with respect to x.

Given \(a_i, y(i)\in {\mathcal {R}}\) \((i=0,1,\ldots ,n-1)\), suppose that the sequence \(\{y(k)\}\) is generated by the following nth-order homogeneous difference equation:

\(y(k+n)+a_{n-1}y(k+n-1)+\cdots +a_1y(k+1)+a_0y(k)=0,\quad k=0,1,\ldots ,\qquad (10)\)

with the following nth-degree algebraic equation as its characteristic equation:

\(\lambda ^n+a_{n-1}\lambda ^{n-1}+\cdots +a_1\lambda +a_0=0.\qquad (11)\)

Equation (11) has n roots in the complex field, denoted by \(\lambda _i\) \((i=1,2,\ldots ,n)\). Then we have the following conclusion about \(\{y(k)\}\) and \(\lambda _i\) \((i=1,2,\ldots ,n)\) (see [18]).

Theorem 2.1

The sequence \(\{y(k)\}\) generated by recurrence relation (10) converges to zero for all initial values if and only if all roots of Eq. (11), i.e., \(\lambda _i\) \((i=1,2,\ldots ,n)\), are less than one in modulus.
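Theorem 2.1 can be seen at work on a small example. The Python sketch below (standard library only; the helper `iterate` and the coefficient ordering \([a_0,\ldots ,a_{n-1}]\) are our own conventions) runs recurrence (10) for two hypothetical second-order cases whose characteristic roots are known by construction: \(\lambda ^2-0.5\lambda +0.06=(\lambda -0.2)(\lambda -0.3)\), with both roots inside the unit circle, and \(\lambda ^2-2.1\lambda +1.1=(\lambda -1)(\lambda -1.1)\), with one root outside.

```python
def iterate(coeffs, y_init, n_iter):
    """Run recurrence (10): y(k+n) = -(a_{n-1} y(k+n-1) + ... + a_1 y(k+1) + a_0 y(k)).

    coeffs = [a_0, a_1, ..., a_{n-1}]; y_init = [y(0), ..., y(n-1)].
    Returns the list [y(0), y(1), ..., y(n-1+n_iter)].
    """
    n = len(coeffs)
    y = list(y_init)
    for _ in range(n_iter):
        # y[-n + i] is y(k+i) for the current window y(k), ..., y(k+n-1)
        y.append(-sum(coeffs[i] * y[-n + i] for i in range(n)))
    return y


# Characteristic roots 0.2 and 0.3 (inside the unit circle): the sequence tends to zero.
stable = iterate([0.06, -0.5], [1.0, 1.0], 200)
# Characteristic roots 1 and 1.1 (one outside the unit circle): the sequence blows up.
unstable = iterate([1.1, -2.1], [1.0, 2.0], 100)
print(abs(stable[-1]), abs(unstable[-1]))
```

With these initial values the first sequence is \(8\cdot 0.3^k-7\cdot 0.2^k\) and decays, while the second is \(-9+10\cdot 1.1^k\) and grows without bound.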

Now, consider the general n-step pth-order ZeaD formula defined in [19]:

\({\dot{x}}_k=\frac{1}{\tau }\sum _{i=1}^{n+1}a_ix_{k+i-n}+{\mathcal {O}}(\tau ^p),\qquad (12)\)

where n is the number of steps of ZeaD formula (12); \(a_i\in {\mathcal {R}}\) \((i=1,2,\ldots ,n+1)\) denote the coefficients; \({\mathcal {O}}(\tau ^p)\) denotes the truncation error; and k denotes the updating index. Equation (12) with \(a_1\ne 0, a_{n+1}\ne 0\) is termed an n-step pth-order ZeaD formula.
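To make the definition concrete, the sketch below computes the largest p for which a given coefficient vector satisfies (12). It assumes the indexing under which \(a_1\) multiplies the oldest sample \(x_{k-n+1}\) and \(a_{n+1}\) multiplies \(x_{k+1}\), and it uses the standard moment conditions obtained from Taylor expansion: the truncation error is \(O(\tau ^p)\) when \(\sum _i a_i j_i^m\) equals 1 for \(m=1\) and 0 for every other \(m=0,\ldots ,p\), where \(j_i=i-n\) is the time offset of the ith sample. The function name `zead_order` is hypothetical; the two multi-step examples use the coefficients \((2,-5,-6,1,8)/18\) and \((2,-3,-4,-2,-6,13)/24\), the latter corresponding to \(a_1=1/12,\ a_2=-1/8\) in Remark 3.2.

```python
from fractions import Fraction


def zead_order(coeffs, max_order=10):
    """Return the largest p with (1/tau) * sum_i a_i x_{k+i-n} = x'_k + O(tau^p).

    coeffs = [a_1, ..., a_{n+1}], where a_i multiplies x_{k+i-n}.
    Returns -1 if even the consistency condition sum_i a_i = 0 fails.
    """
    n = len(coeffs) - 1
    offsets = [i - n for i in range(1, n + 2)]      # -(n-1), ..., 0, 1
    order = -1
    for m in range(max_order + 1):
        # m-th moment: in the Taylor expansion it multiplies tau^(m-1) x_k^(m) / m!
        moment = sum(Fraction(a) * j ** m for a, j in zip(coeffs, offsets))
        if moment != (1 if m == 1 else 0):
            break
        order = m
    return order


euler = [Fraction(-1), Fraction(1)]                               # (x_{k+1} - x_k) / tau
four_step = [Fraction(c, 18) for c in (2, -5, -6, 1, 8)]          # x_{k-3}, ..., x_{k+1}
five_step = [Fraction(c, 24) for c in (2, -3, -4, -2, -6, 13)]    # x_{k-4}, ..., x_{k+1}
print(zead_order(euler), zead_order(four_step), zead_order(five_step))  # 1 3 3
```

Exact fractions are used so that a moment that vanishes in theory is tested as exactly zero rather than up to rounding.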

To end this section, we review the simplified stability criterion for linear discrete systems proposed in [20] for the following polynomial:

Theorem 2.2

A necessary and sufficient condition for all roots of polynomial (13) to lie inside the unit circle in the z-plane is:

where \(A_k+B_k=|X_k+Y_k|, A_k-B_k=|X_k-Y_k|\), and

3 The General Five-Step Third-Order ZeaD Formula

In this section, we derive a general five-step third-order Zhang et al. discretization (ZeaD) formula, which approximates the derivative \({\dot{x}}_k\) of x(t) by the values \(x_j\) \((j=k+1,k,\ldots ,k-4)\); this formula will be used to solve the sequence of nonlinear optimizations (2) in the next section.

Theorem 3.1

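The following general five-step third-order ZeaD formula

\({\dot{x}}_k=\frac{1}{\tau }\big (a_6x_{k+1}+a_5x_k+a_4x_{k-1}+a_3x_{k-2}+a_2x_{k-3}+a_1x_{k-4}\big )+{\mathcal {O}}(\tau ^3),\qquad (14)\)

with

\(a_3=\tfrac{1}{6}-10a_1-4a_2,\quad a_4=-1+20a_1+6a_2,\quad a_5=\tfrac{1}{2}-15a_1-4a_2,\quad a_6=\tfrac{1}{3}+4a_1+a_2,\)

is convergent if and only if the following holds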

Proof

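Using the Taylor series expansions of \(x_{k+1}\), \(x_{k-1}\), \(x_{k-2}\), \(x_{k-3}\) and \(x_{k-4}\) at \(t_k\), one has

\(x_{k+j}=x_k+j\tau {\dot{x}}_k+\frac{(j\tau )^2}{2!}{\ddot{x}}_k+\frac{(j\tau )^3}{3!}x_k^{(3)}+{\mathcal {O}}(\tau ^4),\quad j=1,-1,-2,-3,-4.\qquad (19)\text{–}(23)\)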

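Substituting (19)–(23) into (12) with \(n=5\) and \(p=3\), i.e.,

\({\dot{x}}_k=\frac{1}{\tau }\big (a_1x_{k-4}+a_2x_{k-3}+a_3x_{k-2}+a_4x_{k-1}+a_5x_k+a_6x_{k+1}\big )+{\mathcal {O}}(\tau ^3),\qquad (24)\)

yields

\(\frac{b_0}{\tau }x_k+b_1{\dot{x}}_k+b_2\tau {\ddot{x}}_k+b_3\tau ^2x_k^{(3)}+{\mathcal {O}}(\tau ^3)=0,\qquad (25)\)

where

\(b_0=\sum _{i=1}^{6}a_i,\quad b_1=\sum _{i=1}^{6}(i-5)a_i-1,\quad b_2=\frac{1}{2}\sum _{i=1}^{6}(i-5)^2a_i,\quad b_3=\frac{1}{6}\sum _{i=1}^{6}(i-5)^3a_i.\)

It is readily seen that Eq. (25) holds if and only if \(b_i=0\) \((i=0,1,2,3)\). Solving the system of equations \(b_0=b_1=b_2=b_3=0\) for \(a_3,a_4,a_5,a_6\), one has

\(a_3=\tfrac{1}{6}-10a_1-4a_2,\quad a_4=-1+20a_1+6a_2,\quad a_5=\tfrac{1}{2}-15a_1-4a_2,\quad a_6=\tfrac{1}{3}+4a_1+a_2,\)

where \(a_1, a_2\in {\mathcal {R}}\) are two free parameters. Substituting \(a_i\) \((i=3,4,5,6)\) into Eq. (24) yields the general five-step third-order ZeaD formula (14).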
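The elimination step can be reproduced with exact rational arithmetic. The sketch below (Python standard library only; helper names are our own) writes the four conditions \(b_0=b_1=b_2=b_3=0\) as a 4×4 linear system in \(a_3,\ldots ,a_6\), assuming that \(a_i\) multiplies \(x_{k+i-5}\) as in (12) with \(n=5\), and solves it by Gauss–Jordan elimination over the rationals.

```python
from fractions import Fraction as F

OFFSETS = [-4, -3, -2, -1, 0, 1]   # a_1, ..., a_6 multiply x_{k-4}, ..., x_{k+1}


def solve_exact(A, b):
    """Solve the square, nonsingular system A y = b by Gauss-Jordan elimination over Q."""
    n = len(A)
    M = [[F(v) for v in row] + [F(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        pivot = M[col][col]
        M[col] = [v / pivot for v in M[col]]
        for r in range(n):
            factor = M[r][col]
            if r != col and factor != 0:
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def five_step_coeffs(a1, a2):
    """Return [a_1, ..., a_6] of the general five-step third-order ZeaD formula."""
    a1, a2 = F(a1), F(a2)
    A, b = [], []
    for m in range(4):                           # conditions b_0 = b_1 = b_2 = b_3 = 0
        A.append([j ** m for j in OFFSETS[2:]])  # unknowns a_3, a_4, a_5, a_6
        target = 1 if m == 1 else 0              # only the first moment must equal 1
        b.append(target - a1 * OFFSETS[0] ** m - a2 * OFFSETS[1] ** m)
    return [a1, a2] + solve_exact(A, b)


print(five_step_coeffs(F(1, 12), F(-1, 8)))
```

For \(a_1=1/12,\ a_2=-1/8\) this gives \((a_3,a_4,a_5,a_6)=(-1/6,-1/12,-1/4,13/24)\); for general \(a_1,a_2\) the solution agrees with the closed-form expressions stated above.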

To guarantee the zero-stability of (14), according to the definition of zero-stability of a discrete-time system [7], all roots of the following polynomial

\(P(\gamma )=a_6\gamma ^5+a_5\gamma ^4+a_4\gamma ^3+a_3\gamma ^2+a_2\gamma +a_1\qquad (26)\)

must lie in the closed unit disk, i.e., \(|\gamma _i|\le 1\) \((i=1,2,\ldots ,5)\), and every root with \(|\gamma _i|=1\) must be simple. Substituting the bilinear transform \(\gamma =(1+\omega \tau /2)/(1-\omega \tau /2)\) into (26) yields

where

whose Routh’s matrix is

where “−” means that the element is vacant. Then, by the Routh–Hurwitz stability criterion, the general five-step third-order ZeaD formula (14) is stable if and only if \(a_1, a_2\) satisfy the four inequalities given in (15), and the proof is complete. \(\square \)
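The Routh–Hurwitz route yields (15) in closed form. As an independent numerical cross-check, the sketch below tests zero-stability for given \(a_1,a_2\) in exact rational arithmetic, assuming the coefficients \(a_3=\tfrac16-10a_1-4a_2,\ a_4=-1+20a_1+6a_2,\ a_5=\tfrac12-15a_1-4a_2,\ a_6=\tfrac13+4a_1+a_2\) that follow from \(b_0=b_1=b_2=b_3=0\). It divides (26) by \(\gamma -1\) (a root, because the coefficients sum to zero) and applies the Schur–Cohn recursion to the quotient. This is a swapped-in technique rather than the authors' method, it checks the slightly stricter condition that \(\gamma =1\) is the only root on the unit circle, and all helper names are hypothetical.

```python
from fractions import Fraction as F


def five_step_coeffs(a1, a2):
    """[a_1, ..., a_6] of (14); a_i multiplies x_{k+i-5}."""
    a1, a2 = F(a1), F(a2)
    return [a1, a2, F(1, 6) - 10 * a1 - 4 * a2, -1 + 20 * a1 + 6 * a2,
            F(1, 2) - 15 * a1 - 4 * a2, F(1, 3) + 4 * a1 + a2]


def schur_stable(p):
    """True iff every root of the real polynomial p (highest degree first) has |z| < 1."""
    p = list(p)
    while len(p) > 1:
        lead, const = p[0], p[-1]
        if abs(const) >= abs(lead):
            return False
        # Schur-Cohn step: (lead * p(z) - p(0) * z^n p(1/z)) / z has degree n - 1
        p = [lead * x - const * y for x, y in zip(p, p[::-1])][:-1]
    return True


def zero_stable(a1, a2):
    """Root gamma = 1 of (26) and all other roots strictly inside the unit circle."""
    P = five_step_coeffs(a1, a2)[::-1]      # a_6 gamma^5 + a_5 gamma^4 + ... + a_1
    quotient, acc = [], F(0)
    for c in P:                             # synthetic division by (gamma - 1)
        acc = acc + c
        quotient.append(acc)
    assert quotient.pop() == 0              # remainder P(1) = sum of coefficients = 0
    return schur_stable(quotient)


for a1, a2 in [(F(1, 12), F(-1, 8)), (F(-1, 50), F(7, 48)), (F(-1, 48), F(7, 48)),
               (0, F(1, 9)), (0, F(1, 5))]:
    print(a1, a2, zero_stable(a1, a2))
```

With these inputs the check accepts \((1/12,-1/8)\), \((-1/50,7/48)\) and \((0,1/9)\) and rejects \((-1/48,7/48)\) and \((0,1/5)\); at \((-1/48,7/48)\) the quotient has a double root at \(\gamma =-1\), so that point lies on the boundary of the stable region.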

Remark 3.1

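If \(a_1=0\), then the general five-step third-order ZeaD formula (14) reduces to

\({\dot{x}}_k=\frac{1}{\tau }\big ((\tfrac{1}{3}+a_2)x_{k+1}+(\tfrac{1}{2}-4a_2)x_k+(-1+6a_2)x_{k-1}+(\tfrac{1}{6}-4a_2)x_{k-2}+a_2x_{k-3}\big )+{\mathcal {O}}(\tau ^3),\)

which is the same as the general four-step third-order ZeaD formula in [7]. Further, from (15), we get

\(\tfrac{1}{12}<a_2<\tfrac{1}{6},\)

which coincides with that in [7].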
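The endpoints of the interval \((1/12,1/6)\) quoted in Sect. 1 for the four-step model can be checked directly. Assuming that for \(a_1=0\) the coefficients of (26) are \(a_6=\tfrac13+a_2,\ a_5=\tfrac12-4a_2,\ a_4=-1+6a_2,\ a_3=\tfrac16-4a_2\) (our derivation from \(b_0=\cdots =b_3=0\)), the sketch below shows in exact arithmetic that at \(a_2=1/12\) the polynomial has the root \(\gamma =-1\), while at \(a_2=1/6\) it is divisible by \(\gamma ^2+\gamma +1\), whose roots \(e^{\pm 2\pi i/3}\) lie on the unit circle. At either endpoint a root other than \(\gamma =1\) therefore reaches the unit circle. Helper names are our own.

```python
from fractions import Fraction as F


def four_step_poly(a2):
    """Coefficients of (26) for a_1 = 0, highest degree first: a_6 g^5 + ... + a_2 g + a_1."""
    a2 = F(a2)
    return [F(1, 3) + a2, F(1, 2) - 4 * a2, -1 + 6 * a2, F(1, 6) - 4 * a2, a2, F(0)]


def polyval(p, x):
    """Horner evaluation of p (highest degree first) at x."""
    acc = F(0)
    for c in p:
        acc = acc * x + c
    return acc


def poly_divmod(num, den):
    """Polynomial long division over Q; returns (quotient, remainder), highest degree first."""
    num, quot = list(num), []
    while len(num) >= len(den):
        c = num[0] / den[0]
        quot.append(c)
        padded = den + [0] * (len(num) - len(den))
        num = [u - c * v for u, v in zip(num, padded)][1:]   # leading term cancels
    return quot, num


print(polyval(four_step_poly(F(1, 12)), -1))               # lower endpoint: gamma = -1 is a root
print(poly_divmod(four_step_poly(F(1, 6)), [1, 1, 1])[1])  # upper endpoint: zero remainder
```

At \(a_2=1/6\) the full factorization is \(P(\gamma )=\tfrac16\gamma (\gamma -1)(3\gamma -1)(\gamma ^2+\gamma +1)\).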

Remark 3.2

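For (14), if we set \(a_1=1/12,~a_2=-1/8\), then the four inequalities in (15) hold and hence

\({\dot{x}}_k=\frac{1}{24\tau }\big (13x_{k+1}-6x_k-2x_{k-1}-4x_{k-2}-3x_{k-3}+2x_{k-4}\big )+{\mathcal {O}}(\tau ^3),\)

which was proposed in [17].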

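Because of the unknown \({\mathcal {O}}(\tau ^3)\) term, \({\dot{x}}_k\) cannot be computed from (14) directly. Dropping \({\mathcal {O}}(\tau ^3)\) on the right-hand side of (14), we define

\({\dot{\tilde{x}}}_k=\frac{1}{\tau }\big (a_6x_{k+1}+a_5x_k+a_4x_{k-1}+a_3x_{k-2}+a_2x_{k-3}+a_1x_{k-4}\big ),\qquad (29)\)

with \(a_3,\ldots ,a_6\) as given in Theorem 3.1.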

Now let us analyze the truncation error generated by approximating the incomputable \({\dot{x}}_k\) by the computable \({\dot{\tilde{x}}}_k\), which is given in the following remark.

Remark 3.3

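Substituting the fourth-degree Taylor expansions of \(x_{k+1}, x_{k-1}\), \(x_{k-2}, x_{k-3}, x_{k-4}\) at \(t_k\) into (12) with \(n=5\) and \(p=4\), one has

\(\frac{1}{\tau }\sum _{i=1}^{6}a_ix_{k+i-5}-{\dot{x}}_k=\frac{b_0}{\tau }x_k+b_1{\dot{x}}_k+b_2\tau {\ddot{x}}_k+b_3\tau ^2x_k^{(3)}+b_4\tau ^3x_k^{(4)}+{\mathcal {O}}(\tau ^4),\qquad (30)\)

where \(b_i\) \((i=0,1,2,3)\) are the same as those in (25) and

\(b_4=\frac{1}{24}\sum _{i=1}^{6}(i-5)^4a_i=\frac{1}{12}+5a_1+a_2.\qquad (31)\)

So, from (14), the Taylor expansions (19)–(23) (expanded to the fourth degree), (31) and (29), one has

\({\dot{\tilde{x}}}_k={\dot{x}}_k+\big (\tfrac{1}{12}+5a_1+a_2\big )\tau ^3x_k^{(4)}+{\mathcal {O}}(\tau ^4).\)

If we use \({\dot{\tilde{x}}}_k\) to approximate \({\dot{x}}_k\), the truncation error is therefore dominated by

\(\big |\tfrac{1}{12}+5a_1+a_2\big |\,\tau ^3\,|x_k^{(4)}|.\)

Given \(\tau \) and \(x_k^{(4)}\), if we intend to minimize the truncation error of (29), the optimal parameters \(a_1, a_2\) can be determined by the following nonlinear optimization model:

\(\min _{a_1,a_2}\ \big |\tfrac{1}{12}+5a_1+a_2\big |\quad \text{s.t. } a_1,a_2 \text{ satisfy the four inequalities in (15)}.\qquad (32)\)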

Figure 1 illustrates the feasible set of the nonlinear optimization model (32), which indicates that

Fig. 1: Feasible set of the nonlinear optimization model (32)

Solving the nonlinear optimization model (32), we get its optimal solution

\((a_1^*,a_2^*)=\big (-\tfrac{1}{48},\tfrac{7}{48}\big ),\)

and the optimal value is \({1/8}>0\), which indicates that no convergent general five-step fourth-order ZeaD formula exists. The truncation error of the general five-step third-order ZeaD formula (29) becomes smaller as \(a_1\) and \(a_2\) approach \(-{1/48}\) and \(7/48\), respectively, and the numerical experiments in Sect. 5 verify that the numerical performance of the corresponding DTZNN model proposed in the next section improves accordingly. The optimal \(a_1, a_2\) can also be determined by other reasonable criteria, such as \(\min {\sum _{i=1}^5}|\gamma _i|\), where \(\gamma _i\) \((i=1,2,\ldots ,5)\) are the roots of Eq. (26).
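The objective of (32) is easy to evaluate for the parameter pairs used later in Sect. 5. The sketch below, a minimal illustration with our own helper names, computes the leading coefficient \(\frac{1}{24}\sum _i (i-5)^4a_i\) of \(\tau ^3x_k^{(4)}\) directly from the six coefficients (assuming the formulas for \(a_3,\ldots ,a_6\) of Theorem 3.1) and checks it against the closed form \(\tfrac{1}{12}+5a_1+a_2\).

```python
from fractions import Fraction as F


def five_step_coeffs(a1, a2):
    """[a_1, ..., a_6]; a_i multiplies x_{k+i-5}."""
    a1, a2 = F(a1), F(a2)
    return [a1, a2, F(1, 6) - 10 * a1 - 4 * a2, -1 + 20 * a1 + 6 * a2,
            F(1, 2) - 15 * a1 - 4 * a2, F(1, 3) + 4 * a1 + a2]


def leading_error_coeff(a1, a2):
    """Coefficient of tau^3 x_k^(4) in the error of the formula without O(tau^3)."""
    a = five_step_coeffs(a1, a2)
    # enumerate is 0-based, so the time offset of a[i] is i - 4
    return sum(ai * (i - 4) ** 4 for i, ai in enumerate(a)) / 24


pairs = [(F(-1, 48), F(7, 48)), (F(-1, 50), F(7, 48)), (F(1, 12), F(-1, 8)), (F(1, 3), F(-9, 10))]
for a1, a2 in pairs:
    b4 = leading_error_coeff(a1, a2)
    assert b4 == F(1, 12) + 5 * a1 + a2          # agrees with the closed form
    print(f"a1 = {a1}, a2 = {a2}: coefficient = {b4} (approx {float(b4):.4f})")
```

The four coefficients are 1/8, 31/240 (about 0.129), 3/8 and 17/20, which orders the three pairs of Problem 5.1 in the same way as the observed errors. For comparison, with \(a_1=0\) the coefficient is \(\tfrac{1}{12}+a_2\), which exceeds \(1/6\) whenever \(a_2>1/12\).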

4 General Five-Step DTZNN Model and Step Size Range

In the preceding section we derived the general five-step third-order ZeaD formula (29); based on it, in this section we propose a general five-step DTZNN model for the sequence of nonlinear optimizations (2) and study the range of its step size.

Substituting the general five-step third-order ZeaD formula (29) into the left-hand side of (4), the following general five-step DTZNN model is obtained:

\(x_{k+1}=-\frac{1}{a_6}\Big (H^{-1}(x_k,t_k)\big (h\,g(x_k,t_k)+\tau g_t'(x_k,t_k)\big )+a_5x_k+a_4x_{k-1}+a_3x_{k-2}+a_2x_{k-3}+a_1x_{k-4}\Big ),\qquad (33)\)

where the free coefficients \(a_1, a_2\) satisfy the four inequalities given in (15), and the step size \(h=\gamma \tau \) determines the convergence and convergence rate of (33). We now study the convergence of \(\{(x_k,t_k)\}\) generated by (33) and investigate the effective domain of the step size h. First, let us rewrite Eq. (33) as a fifth-order homogeneous difference equation.
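A minimal sketch of iteration (33) is given below. Everything problem-specific in it is hypothetical: the test function \(f(x,t)=\tfrac12(2+\sin t)(x_1-\sin t)^2+\tfrac12(x_2-\cos t)^2\) (chosen because its Hessian is diagonal, so \(H^{-1}\) acts elementwise), the initial point, and the use of the one-step DTZNN model to generate \(x_1,\ldots ,x_4\) before the five-step update can start. The coefficients follow the formulas for \(a_3,\ldots ,a_6\) in Theorem 3.1, with the defaults \(a_1=1/12,\ a_2=-1/8\) of Remark 3.2. The script compares the largest residual \(\Vert g(x_k,t_k)\Vert \) over the second half of the run with that of the one-step model.

```python
import math


def five_step_coeffs(a1, a2):
    """[a_1, ..., a_6] of (14); a_i multiplies x_{k+i-5}."""
    return [a1, a2, 1/6 - 10*a1 - 4*a2, -1 + 20*a1 + 6*a2, 1/2 - 15*a1 - 4*a2, 1/3 + 4*a1 + a2]


# Hypothetical test problem: f(x, t) = 0.5*(2 + sin t)*(x1 - sin t)^2 + 0.5*(x2 - cos t)^2
def grad(x, t):            # g(x, t)
    return [(2 + math.sin(t)) * (x[0] - math.sin(t)), x[1] - math.cos(t)]


def hess_diag(x, t):       # diagonal of H(x, t); H^{-1} is then elementwise division
    return [2 + math.sin(t), 1.0]


def grad_t(x, t):          # g_t'(x, t)
    return [math.cos(t) * (x[0] - math.sin(t)) - (2 + math.sin(t)) * math.cos(t), math.sin(t)]


def run(x0, tau, h, n_steps, a1=1/12, a2=-1/8, five_step=True):
    a = five_step_coeffs(a1, a2)
    xs = [list(x0)]
    for k in range(n_steps):
        t, xk = k * tau, xs[-1]
        # d = H^{-1}(x_k, t_k) (h g(x_k, t_k) + tau g_t'(x_k, t_k))
        d = [(h * g + tau * gt) / hd
             for g, gt, hd in zip(grad(xk, t), grad_t(xk, t), hess_diag(xk, t))]
        if not five_step or k < 4:
            # one-step DTZNN; also used to produce x_1, ..., x_4 (our assumption)
            x_next = [xi - di for xi, di in zip(xk, d)]
        else:
            hist = xs[-5:]                   # x_{k-4}, ..., x_k, multiplied by a_1, ..., a_5
            x_next = [-(d[i] + sum(a[j] * hist[j][i] for j in range(5))) / a[5]
                      for i in range(len(xk))]
        xs.append(x_next)
    return xs


def max_residual(xs, tau, start):
    """max ||g(x_k, t_k)||_2 over k >= start."""
    return max(math.hypot(*grad(x, k * tau)) for k, x in enumerate(xs) if k >= start)


tau, h = 0.01, 0.4524
five = max_residual(run([1.0, 2.0], tau, h, 1000), tau, 500)
euler = max_residual(run([1.0, 2.0], tau, h, 1000, five_step=False), tau, 500)
print(f"max ||g|| on [5, 10] s: five-step {five:.2e}, one-step {euler:.2e}")
```

With \(\tau =0.01\) one would expect the five-step residual to lie several orders of magnitude below the one-step residual, in line with the \(O(\tau ^4)\) versus \(O(\tau ^2)\) steady-state error patterns.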

Theorem 4.1

Suppose that \(\{(x_k,t_k)\}\) is the sequence generated by the general five-step DTZNN model (33) and that the sequence \(\{\Vert H(x_k,t_k)\Vert \}\) is bounded. Then the sequence \(\{g(x_k,t_k)\}\) satisfies the following homogeneous difference equation:

\(a_6{\bar{e}}_{k+1}+(a_5+h){\bar{e}}_k+a_4{\bar{e}}_{k-1}+a_3{\bar{e}}_{k-2}+a_2{\bar{e}}_{k-3}+a_1{\bar{e}}_{k-4}=0,\qquad (34)\)

where \({\bar{e}}_k=g(x_k,t_k)-{\mathcal {O}}(\tau ^4)\).

Proof

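Firstly, the general five-step DTZNN model (33) can be written as

\(H(x_k,t_k)\,\frac{1}{\tau }\big (a_6x_{k+1}+a_5x_k+a_4x_{k-1}+a_3x_{k-2}+a_2x_{k-3}+a_1x_{k-4}\big )=-\gamma g(x_k,t_k)-g_t'(x_k,t_k).\)

Substituting (14) into the left-hand side of the above equality, we have

\(H(x_k,t_k)\big ({\dot{x}}_k+{\mathcal {O}}(\tau ^3)\big )=-\gamma g(x_k,t_k)-g_t'(x_k,t_k).\)

Thus,

\(H(x_k,t_k){\dot{x}}_k+g_t'(x_k,t_k)=-\gamma g(x_k,t_k)+{\mathcal {O}}(\tau ^3),\qquad (35)\)

in which the term \(H(x_k,t_k){\mathcal {O}}(\tau ^3)\) is absorbed into \({\mathcal {O}}(\tau ^3)\) owing to the boundedness of the sequence \(\{\Vert H(x_k,t_k)\Vert \}\). Since the total time derivative of g(x(t), t) is \({\dot{g}}=H{\dot{x}}+g_t'\), (35) gives

\({\dot{g}}(x_k,t_k)=-\gamma g(x_k,t_k)+{\mathcal {O}}(\tau ^3).\qquad (36)\)

Since (14) also holds for g(x(t), t), writing \(g_j=g(x_j,t_j)\) and expanding the left-hand side of (36), the following equation is obtained:

\(\frac{1}{\tau }\big (a_6g_{k+1}+a_5g_k+a_4g_{k-1}+a_3g_{k-2}+a_2g_{k-3}+a_1g_{k-4}\big )+{\mathcal {O}}(\tau ^3)=-\gamma g_k+{\mathcal {O}}(\tau ^3).\)

Multiplying by \(\tau \) and using \(h=\gamma \tau \), we get

\(a_6g_{k+1}+(a_5+h)g_k+a_4g_{k-1}+a_3g_{k-2}+a_2g_{k-3}+a_1g_{k-4}={\mathcal {O}}(\tau ^4),\)

from which Eq. (34) follows directly. This completes the proof. \(\square \)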
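The characteristic polynomial of (34) can be assembled mechanically, which gives a quick consistency check on the specialization used below. The sketch assumes that (34) reads \(a_6\bar e_{k+1}+(a_5+h)\bar e_k+a_4\bar e_{k-1}+a_3\bar e_{k-2}+a_2\bar e_{k-3}+a_1\bar e_{k-4}=0\), i.e. the error relation of the proof multiplied by \(\tau \), together with the coefficient formulas of Theorem 3.1; function names are our own. Because the ZeaD coefficients sum to zero, the polynomial equals h at \(\lambda =1\).

```python
from fractions import Fraction as F


def five_step_coeffs(a1, a2):
    """[a_1, ..., a_6] of (14); a_i multiplies x_{k+i-5}."""
    a1, a2 = F(a1), F(a2)
    return [a1, a2, F(1, 6) - 10 * a1 - 4 * a2, -1 + 20 * a1 + 6 * a2,
            F(1, 2) - 15 * a1 - 4 * a2, F(1, 3) + 4 * a1 + a2]


def dtznn_char_poly(a1, a2, h):
    """Highest degree first: a_6 l^5 + (a_5 + h) l^4 + a_4 l^3 + a_3 l^2 + a_2 l + a_1."""
    a = five_step_coeffs(a1, a2)
    return [a[5], a[4] + F(h), a[3], a[2], a[1], a[0]]


def polyval(p, x):
    """Horner evaluation of p (highest degree first) at x."""
    acc = F(0)
    for c in p:
        acc = acc * x + c
    return acc


h = F(1, 3)
p = dtznn_char_poly(F(1, 12), F(-1, 8), h)
print([24 * c for c in p])            # scaled by 24: 13, 24h - 6, -2, -4, -3, 2
print(polyval(p, 1), polyval(p, -1))  # h and h - 2/3
```

The value \(h-2/3\) at \(\lambda =-1\) vanishes exactly at \(h=2/3\), consistent with the upper bound in Theorem 4.2 below.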

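In the following, let us investigate the step size range of the general five-step DTZNN model (33). For simplicity, we only consider \(a_1={1/12},~a_2=-{1/8}\), i.e., the five-step DTZNN model proposed in [17]:

\(x_{k+1}=\frac{1}{13}\big (6x_k+2x_{k-1}+4x_{k-2}+3x_{k-3}-2x_{k-4}\big )-\frac{24}{13}H^{-1}(x_k,t_k)\big (h\,g(x_k,t_k)+\tau g_t'(x_k,t_k)\big ).\qquad (37)\)

The characteristic equation of the fifth-order difference equation (34) with \(a_1={1/12}\) and \(a_2=-{1/8}\) is

\(13\lambda ^5+(24h-6)\lambda ^4-2\lambda ^3-4\lambda ^2-3\lambda +2=0.\qquad (38)\)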

Lemma 4.1

For any \(h\le 0\), Eq. (38) has at least one real root that is greater than or equal to one.

Proof

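Set

\(G(\lambda )=13\lambda ^5+(24h-6)\lambda ^4-2\lambda ^3-4\lambda ^2-3\lambda +2,\)

which is obviously continuous at any \(\lambda \in {\mathcal {R}}\) and satisfies

\(G(1)=24h.\)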

If \(h\le 0\), we have \(G(1)=24h\le 0\), which, together with \(\lim \limits _{\lambda \rightarrow +\infty }G(\lambda )=+\infty \) and the continuity of \(G(\lambda )\), implies by the intermediate value theorem that \(G(\lambda )\) has a root in \([1,+\infty )\). The proof is complete. \(\square \)

By Lemma 4.1 and Theorem 2.2, model (37) cannot converge for \(h\le 0\), so we only need to consider the case \(h>0\).

Theorem 4.2

The five-step DTZNN model (37) is convergent with \(O(\tau ^4)\) steady-state error pattern if and only if \(0<h<{2/3}\).

Proof

According to Definition 2.1 and Theorem 2.1, the five-step DTZNN model (37) is convergent if and only if

\(|\lambda _i|<1\quad (i=1,2,3,4,5),\)

where \(\lambda _i\) \((i=1,2,3,4,5)\) are the five roots of the characteristic equation (38). For Eq. (38), the parameters \(A_i,~B_i\) \((i=1,2,3,4)\) defined in Theorem 2.2 are as follows:

Therefore, according to Theorem 2.2, the statement \(|\lambda _i|<1\)\((i=1,2,3,4,5)\) is equivalent to the following four inequalities:

From the first and the fourth inequalities, it is obtained that \(0<h<{2/3}\).

Now, let us check that any \(0<h<{2/3}\) satisfies the second and the third inequalities. For the second inequality, if \(0<h<{801/1628}\), it reduces to

i.e.,

which holds for any \(0<h<{801/1628}\). If \({801/1628}\le h<{2/3}\), the second inequality reduces to

i.e.,

which holds for any \({801/1628}\le h<{2/3}\). Overall, the second inequality holds for any \(0<h<{2/3}\).

For any \(0<h<{2/3}\), the third inequality reduces to

i.e.,

which holds for any \(0<h<{2/3}\).

Overall, the five-step DTZNN model (37) is convergent with \(O(\tau ^4)\) steady-state error pattern if and only if \(0<h<{2/3}\). The proof is complete. \(\square \)
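As a spot check of Theorem 4.2 (not a replacement for the Jury-criterion proof), the sketch below applies the Schur–Cohn recursion, a standard exact test of whether all roots of a real polynomial lie strictly inside the unit circle, to (38) at a few rational step sizes, including \(2/3\pm 0.01\) and the three step sizes discussed below. Function names are hypothetical.

```python
from fractions import Fraction as F


def schur_stable(p):
    """True iff all roots of the real polynomial p (highest degree first) satisfy |z| < 1.

    Schur-Cohn recursion: require |p(0)| < |leading coefficient|, then recurse on
    (lead * p(z) - p(0) * z^n p(1/z)) / z, which has degree n - 1.
    """
    p = [F(c) for c in p]
    while len(p) > 1:
        lead, const = p[0], p[-1]
        if abs(const) >= abs(lead):
            return False
        p = [lead * x - const * y for x, y in zip(p, p[::-1])][:-1]
    return True


def char_poly_38(h):
    """Coefficients of (38): 13 l^5 + (24h - 6) l^4 - 2 l^3 - 4 l^2 - 3 l + 2."""
    return [13, 24 * F(h) - 6, -2, -4, -3, 2]


for h in [F(-1, 10), F(0), F(1, 100), F(1, 3), F(1131, 2500), F(197, 300), F(2, 3), F(203, 300)]:
    print(f"h = {float(h):.4f}: all roots inside unit circle -> {schur_stable(char_poly_38(h))}")
```

Exact rational arithmetic matters at \(h=2/3\), where \(\lambda =-1\) is a root and a floating-point test could report either answer.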

At the end of this section, let us investigate the optimal step size of the five-step DTZNN model (37). Generally speaking, the smaller the moduli \(|\lambda _i|\) \((i=1,2,3,4,5)\) are, the faster \(\Vert g(x_k,t_k)\Vert \) decreases. Therefore, we establish the following nonlinear optimization model to determine the optimal step size:

where \({\mathcal {C}}\) denotes the set of complex numbers and \(a_i\) \((i=0,1,\ldots ,5)\) are the coefficients of Eq. (38), i.e.,

\(a_5=13,\quad a_4=24h-6,\quad a_3=-2,\quad a_2=-4,\quad a_1=-3,\quad a_0=2,\)

and the constraints are derived from Vieta's formulas. Note that the objective function is set as \({\sum _{i=1}^5}|\lambda _i|\), which differs from \({\max _{i}}|\lambda _i|\) used in [3], because it yields more encouraging numerical results in Sect. 5. The above model is difficult to solve because \(\lambda _i\in {\mathcal {C}}\), so we use a grid-search method to find the optimal step size. More specifically, we set \(h=\texttt {linspace}(0.01,0.66,1000)\), compute the corresponding objective function values in MATLAB, and take the step size with the smallest objective value as the optimal one. This procedure gives the following results.

The optimal step size is \(h^*=0.2364\) if the objective function is set as \(\max \nolimits _{i}|\lambda _i|\).

The optimal step size is \(h^*=0.4524\) if the objective function is set as \(\sum \nolimits _{i=1}^5|\lambda _i|\).

The optimal step size is \(h^*=0.4121\) if the objective function is set as \(\sum \nolimits _{i=1}^5|\lambda _i|^2\).
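The grid search can be reproduced in a few lines. The sketch below uses only the Python standard library: the roots of (38) are computed by the Durand–Kerner (Weierstrass) iteration in place of MATLAB's root finder, and the grid mirrors linspace(0.01, 0.66, 1000). Names are our own. The printed step sizes can be compared with the three values listed above; agreement to all four digits depends on the grid and the root finder and is not guaranteed.

```python
def durand_kerner(p, iters=500, tol=1e-12):
    """All complex roots of p (highest degree first) by Durand-Kerner iteration."""
    n = len(p) - 1
    c = [x / p[0] for x in p]                      # monic version of p
    roots = [(0.4 + 0.9j) ** k for k in range(n)]  # customary distinct starting guesses
    for _ in range(iters):
        new = []
        for i, r in enumerate(roots):
            val = 0j
            for coef in c:                         # Horner evaluation of p(r) / p[0]
                val = val * r + coef
            denom = 1 + 0j
            for j, s in enumerate(roots):
                if j != i:
                    denom *= r - s
            new.append(r - val / denom)
        shift = max(abs(a - b) for a, b in zip(new, roots))
        roots = new
        if shift < tol:
            break
    return roots


def char_poly_38(h):
    """Coefficients of (38), highest degree first."""
    return [13.0, 24.0 * h - 6.0, -2.0, -4.0, -3.0, 2.0]


n_grid = 1000
hs = [0.01 + (0.66 - 0.01) * i / (n_grid - 1) for i in range(n_grid)]
moduli = [[abs(r) for r in durand_kerner(char_poly_38(h))] for h in hs]

objectives = {
    "max |lambda_i|": max,
    "sum |lambda_i|": sum,
    "sum |lambda_i|^2": lambda m: sum(x * x for x in m),
}
best = {}
for name, obj in objectives.items():
    i_best = min(range(n_grid), key=lambda i: obj(moduli[i]))
    best[name] = hs[i_best]
    print(f"{name:>18}: h* = {hs[i_best]:.4f}")
```

A monic normalization is used so that the Weierstrass correction \(p(r)/\prod _{j\ne i}(r-s_j)\) applies directly.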

5 Numerical Results

In this section, two experiments are presented to test the theoretical results on the five-step third-order ZeaD formula (14) (with \({\mathcal {O}}(\tau ^3)\) dropped) and the five-step DTZNN model (37) obtained in Sects. 3 and 4, respectively. The experiments are carried out in MATLAB R2014a on a personal computer.

Problem 5.1

Consider the target function [4]:

whose derivative is

We use the five-step third-order ZeaD formula (14) with \(a_1=-{1/50},~a_2={7/48}\), \(a_1={1/12},~a_2=-{1/8}\), or \(a_1={1/3},~a_2=-{9/10}\). The sampling gap is set as \(\tau =0.1\) s, and the corresponding results are displayed in Fig. 2 (left: approximate values of \({\dot{f}}_k\); right: absolute errors \(E_{{\dot{f}}_k}\)). From the absolute-error curves, we conclude that the ZeaD formula with \(a_1=-{1/50},~a_2={7/48}\) performs best, followed by the ZeaD formula with \(a_1={1/12},~a_2=-{1/8}\) and then the ZeaD formula with \(a_1={1/3},~a_2=-{9/10}\). This agrees with the theoretical results in Remark 3.3.
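A small experiment in the spirit of Problem 5.1 is sketched below. Since the target function of Problem 5.1 is not reproduced here, the sketch substitutes the hypothetical \(f(t)=\sin t\) (so \({\dot{f}}(t)=\cos t\)), uses \(\tau =0.1\) s and the same three parameter pairs, and applies the formula with \({\mathcal {O}}(\tau ^3)\) dropped, assuming the coefficient formulas of Theorem 3.1. By Remark 3.3 the maximum absolute error should scale roughly like \(\tau ^3|\tfrac{1}{12}+5a_1+a_2|\).

```python
import math


def five_step_coeffs(a1, a2):
    """[a_1, ..., a_6]; a_i multiplies f(t_{k+i-5})."""
    return [a1, a2, 1/6 - 10*a1 - 4*a2, -1 + 20*a1 + 6*a2, 1/2 - 15*a1 - 4*a2, 1/3 + 4*a1 + a2]


def zead_derivative(f, k, tau, a1, a2):
    """Approximate f'(t_k) from f(t_{k-4}), ..., f(t_{k+1}) with the five-step formula."""
    a = five_step_coeffs(a1, a2)
    return sum(a[i] * f((k + i - 4) * tau) for i in range(6)) / tau


f, df = math.sin, math.cos      # hypothetical target function and its exact derivative
tau = 0.1
pairs = [(-1/50, 7/48), (1/12, -1/8), (1/3, -9/10)]
max_err = []
for a1, a2 in pairs:
    errs = [abs(zead_derivative(f, k, tau, a1, a2) - df(k * tau)) for k in range(4, 100)]
    max_err.append(max(errs))
    print(f"a1 = {a1:+.4f}, a2 = {a2:+.4f}: max |error| = {max_err[-1]:.2e}")
```

The three maxima should be ordered in the same way as the coefficients \(|\tfrac{1}{12}+5a_1+a_2|\) (about 0.129, 0.375 and 0.85).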

Problem 5.2

Consider the following time-varying nonlinear optimization [7]:

The sampling gap is set as \(\tau =0.01\) s with time duration being [0,10] s, and five initial state vectors are set as \(x_0=x(0)=[1,2,3,4]^\top \),

First, let us verify that the optimal parameters \(a_1, a_2\) can be obtained from the optimal solution of the nonlinear optimization (32), i.e., \(a_1=-{1/48},~a_2={7/48}\). More specifically, we compare the performance of the DTZNN model (33) with \(a_1=-{1/50}\) (close to \(-1/48\)), \(a_2={7/48}\), \(h=0.4524\) with that of the DTZNN model (33) with \(a_1={1/12},~a_2=-{1/8},~h=0.4524\). The numerical results are shown in Fig. 3, which indicates that the steady-state error \(\Vert g(x_k,t_k)\Vert \) generated by the former is slightly smaller than that generated by the latter.

Second, let us verify that 2/3 is the strict upper bound of the step size in (37). The trajectories of \(\Vert g(x_k,t_k)\Vert \) generated by the DTZNN model (37) with \(h=2/3+0.01, 2/3, 2/3-0.01\) are presented in Fig. 4, from which we find that: (1) the error \(\Vert g(x_k,t_k)\Vert \) generated with \(h=2/3+0.01\) or \(h=2/3\) first fluctuates and then strictly increases with respect to k; thus, the DTZNN model (37) with \(h=2/3+0.01\) or \(h=2/3\) is divergent; (2) the error \(\Vert g(x_k,t_k)\Vert \) generated with \(h=2/3-0.01\) first fluctuates and then strictly decreases with respect to k; thus, the DTZNN model (37) with \(h=2/3-0.01\) is convergent. Comparing the slopes of the three curves gives strong visual evidence that 2/3 is the strict upper bound of the step size in the DTZNN model (37).

Third, let us verify that \(h^*=0.4524\) is an approximately optimal step size of the DTZNN model (37). More specifically, we compare the performance of the DTZNN model (37) with \(h=0.4524\) with that of the DTZNN model (37) with \(h=0.2364\) or \(h=0.4121\). The numerical results are shown in Fig. 5, which shows that all three DTZNN models successfully solve problem (42) with acceptably small steady-state error \(\Vert g(x_k,t_k)\Vert \), while the steady-state error generated with \(h=0.4524\) is slightly smaller than those generated with \(h=0.2364\) or \(h=0.4121\). This indicates that \(h^*=0.4524\) is a better approximation of the optimal step size than 0.2364 or 0.4121.

6 Conclusion

In this paper, based on the bilinear transform and the Routh–Hurwitz stability criterion, a general five-step third-order ZeaD formula has first been proposed. Then, to solve a sequence of nonlinear optimizations, we have proposed a general five-step DTZNN model with \(O(\tau ^4)\) steady-state error pattern based on this formula; the model uses more steps than existing multi-step DTZNN models, and with suitable parameters the leading truncation-error coefficient of its underlying formula can be made smaller than that of the general four-step third-order formula. We have investigated the range of the step size and the optimal step size. Numerical results have been presented, which support the theoretical results obtained in this paper.

References

[1] Polyak, B.T.: Introduction to Optimization. Optimization Software, Inc., New York (1987)

[2] Jin, L., Zhang, Y.N.: Discrete-time Zhang neural network for online time-varying nonlinear optimization with application to manipulator motion generation. IEEE Trans. Neural Netw. Learn. Syst. 26(7), 1525–1531 (2015)

[3] Zhang, Y.N., Gong, H.H., Yang, M., Li, J., Yang, X.Y.: Stepsize range and optimal value for Taylor–Zhang discretization formula applied to zeroing neurodynamics illustrated via future equality-constrained quadratic programming. IEEE Trans. Neural Netw. Learn. Syst., PMID 30137015. https://doi.org/10.1109/TNNLS.2018.2861404

[4] Zhang, Y.N., Zhu, M.J., Hu, C.W., Li, J., Yang, M.: Euler-precision general-form of Zhang et al discretization (ZeaD) formulas, derivation, and numerical experiments. In: IEEE Chinese Control and Decision Conference (CCDC), pp. 6262–6267 (2018)

[5] Sun, M., Tian, M.Y., Wang, Y.J.: Multi-step discrete-time Zhang neural networks with application to time-varying nonlinear optimization. Discrete Dyn. Nat. Soc. 4745759, 1–14 (2019)

[6] Hu, C.W., Kang, X.G., Zhang, Y.N.: Three-step general discrete-time Zhang neural network design and application to time-variant matrix inversion. Neurocomputing 306, 108–118 (2018)

[7] Zhang, Y.N., He, L., Hu, C.W., Guo, J.J., Li, J., Shi, Y.: General four-step discrete-time zeroing and derivative dynamics applied to time-varying nonlinear optimization. J. Comput. Appl. Math. 347, 314–329 (2019)

[8] Zhang, Y.N., Qi, Z.Y., Li, J., Qiu, B.B., Yang, M.: Stepsize domain confirmation and optimum of ZeaD formula for future optimization. Numer. Algorithms, 1–14, accepted (2018)

[9] Simonetto, A.: Time-varying optimization: algorithms and engineering applications. arXiv:1807.07032 (2018)

[10] Xie, Z.T., Jin, L., Du, X.J., Xiao, X.C., Li, H.X., Li, S.: On generalized RMP scheme for redundant robot manipulators aided with dynamic neural networks and nonconvex bound constraints. IEEE Trans. Ind. Inform., 1–10, accepted (2019)

[11] Jin, L., Zhang, Y.N., Li, S., Zhang, Y.Y.: Noise-tolerant ZNN models for solving time-varying zero-finding problems: a control-theoretic approach. IEEE Trans. Autom. Control 62(2), 992–997 (2017)

[12] Wang, Y.J., Xiu, N.H.: Nonlinear Programming Theory and Algorithm. Shaanxi Science and Technology Press, Xian (2008)

[13] Sun, M., Wang, Y.J., Liu, J.: Generalized Peaceman–Rachford splitting method for multiple-block separable convex programming with applications to robust PCA. Calcolo 54(1), 77–94 (2017)

[14] Zhang, Y.N., Li, Z., Yi, C.F., Chen, K.: Zhang neural network versus gradient neural network for online time-varying quadratic function minimization, pp. 807–814 (2008)

[15] Zhang, Y.N., Chou, Y., Chen, J.H., Zhang, Z.Z., Xiao, L.: Presentation, error analysis and numerical experiments on a group of 1-step-ahead numerical differentiation formulas. J. Comput. Appl. Math. 239, 406–414 (2013)

[16] Guo, D.S., Lin, X.J., Su, Z.Z., Sun, S.B., Huang, Z.J.: Design and analysis of two discrete-time ZD algorithms for time-varying nonlinear minimization. Numer. Algorithms 77(1), 23–36 (2018)

[17] Qiu, B.B., Zhang, Y.N., Guo, J.J., Yang, Z., Li, X.D.: New five-step DTZD algorithm for future nonlinear minimization with quartic steady-state error pattern. Numer. Algorithms, 1–21, accepted (2018)

[18] Li, Q.Y.: Numerical Analysis. Tsinghua University Press, Beijing (2008)

[19] Zhang, Y.N., Jin, J., Guo, D.S., Yin, Y.H., Chou, Y.: Taylor-type 1-step-ahead numerical differentiation rule for first-order derivative approximation and ZNN discretization. J. Comput. Appl. Math. 273, 29–40 (2015)

[20] Jury, E.I.: A simplified stability criterion for linear discrete systems. Proc. IRE 50(6), 1493–1500 (1962)

Acknowledgements

The authors thank the two anonymous reviewers for their valuable comments and suggestions that have helped them in improving the paper. This research was partially supported by the National Natural Science Foundation of China and Shandong Province (Nos. 11671228, 11601475, ZR2016AL05).

Additional information

Communicated by Anton Abdulbasah Kamil.

Cite this article

Sun, M., Wang, Y. General Five-Step Discrete-Time Zhang Neural Network for Time-Varying Nonlinear Optimization. Bull. Malays. Math. Sci. Soc. 43, 1741–1760 (2020). https://doi.org/10.1007/s40840-019-00770-4

DOI: https://doi.org/10.1007/s40840-019-00770-4