Abstract

In a recent paper, Das introduced the graph \(\mathcal {I}n(\mathbb {V})\), called subspace inclusion graph on a finite dimensional vector space \(\mathbb {V}\), where the vertex set is the collection of nontrivial proper subspaces of \(\mathbb {V}\) and two vertices are adjacent if one is properly contained in another. Das studied the diameter, girth, clique number and chromatic number of \(\mathcal {I}n(\mathbb {V})\) when the base field is arbitrary, and he also studied some other properties of \(\mathcal {I}n(\mathbb {V})\) when the base field is finite. At the end of the above paper, the author posed the open problem of determining the automorphisms of \(\mathcal {I}n(\mathbb {V})\). In this paper, we give the answer to the open problem.


1 Introduction

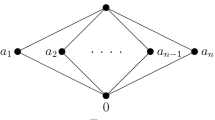

Let \(\mathbb {V}\) be a finite dimensional vector space over a field \(\mathbb {F}\) of dimension greater than 1. Das in [5] defined the subspace inclusion graph \(\mathcal {I}n(\mathbb {V})\) of \(\mathbb {V}\) as follows: the vertex set V of \(\mathcal {I}n(\mathbb {V})\) is the collection of nontrivial proper subspaces of \(\mathbb {V}\) and for \(W_1, W_2 \in V, W_1\) is adjacent to \(W_2\), written as \(W_1\sim W_2\), if either \(W_1 \subset W_2\) or \(W_2\subset W_1\). The main results of [5] are as follows:

Proposition 1.1

(1) The diameter of \(\mathcal {I}n(\mathbb {V})\) is 3 if the dimension of \(\mathbb {V}\) is at least 3;

(2) The girth of \(\mathcal {I}n(\mathbb {V})\) is either 3, 6 or \(\infty \);

(3) The clique number and the chromatic number of \(\mathcal {I}n(\mathbb {V})\) are both \(\mathrm{dim}(\mathbb {V})-1\).
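Part (1) of Proposition 1.1 can be checked by brute force on small cases. The following is a minimal sketch, assuming the base field is \(F_2\) so that vectors can be stored as n-bit integers and vector addition is XOR; the function names `subspaces_f2`, `inclusion_graph` and `diameter` are illustrative and do not come from [5].

```python
from collections import deque


def subspaces_f2(n):
    """All subspaces of F_2^n; vectors are n-bit ints, addition is XOR."""
    zero = frozenset({0})
    found, frontier = {zero}, [zero]
    while frontier:
        nxt = []
        for W in frontier:
            for v in range(1, 1 << n):
                if v not in W:
                    # Over F_2 the span of W and v is W together with the coset W + v.
                    U = W | frozenset(x ^ v for x in W)
                    if U not in found:
                        found.add(U)
                        nxt.append(U)
        frontier = nxt
    return found


def inclusion_graph(n):
    """Adjacency lists of In(V) for V = F_2^n (nontrivial proper subspaces only)."""
    verts = [W for W in subspaces_f2(n) if 1 < len(W) < (1 << n)]
    # For frozensets, `<` is proper inclusion.
    return {W: [U for U in verts if U < W or W < U] for W in verts}


def diameter(adj):
    """Largest BFS eccentricity; assumes the graph is connected."""
    best = 0
    for s in adj:
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        best = max(best, max(dist.values()))
    return best


if __name__ == "__main__":
    for n in (3, 4):
        g = inclusion_graph(n)
        print(n, len(g), diameter(g))
```

For \(n=3\) the graph has 14 vertices (7 lines and 7 planes of \(F_2^3\)), each of degree 3. The enumeration is exponential in n and is only meant for very small spaces.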

If the base field \(\mathbb {F}\) is a finite field with q elements, the author obtained the following result about the vertex degrees of \(\mathcal {I}n(\mathbb {V})\), which will be applied in our result.

Lemma 1.2

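[5, Theorem 6.1] If W is a k-dimensional nontrivial proper subspace of \(\mathbb {V}\), then the degree of W is

\[\mathrm{deg}(W)=\sum _{i=1}^{k-1}\left[ \begin{array}{c}k\\ i\end{array}\right] _q+\sum _{i=1}^{n-k-1}\left[ \begin{array}{c}n-k\\ i\end{array}\right] _q,\]

where \(n=\mathrm{dim}(\mathbb {V})\) and

\[\left[ \begin{array}{c}n\\ i\end{array}\right] _q=\frac{(q^n-1)(q^{n-1}-1)\cdots (q^{n-i+1}-1)}{(q^i-1)(q^{i-1}-1)\cdots (q-1)}\]

is the number of i-dimensional subspaces of an n-dimensional vector space over a field with q elements.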
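The degree count in Lemma 1.2 is easy to evaluate numerically. The sketch below assumes the degree of a k-dimensional subspace W is the number of nontrivial proper subspaces of W plus the number of proper subspaces of \(\mathbb {V}\) properly containing W, each counted with Gaussian binomial coefficients; the names `gaussian_binomial` and `degree` are hypothetical.

```python
def gaussian_binomial(n, k, q):
    """Number of k-dimensional subspaces of an n-dimensional space over F_q."""
    if k < 0 or k > n:
        return 0
    num = den = 1
    for i in range(k):
        num *= q ** (n - i) - 1
        den *= q ** (i + 1) - 1
    return num // den  # the quotient is always an integer


def degree(n, k, q):
    """Degree in In(V) of a k-dimensional subspace, dim V = n, base field F_q."""
    # Nontrivial proper subspaces of W (dimensions 1 .. k-1).
    below = sum(gaussian_binomial(k, i, q) for i in range(1, k))
    # Proper subspaces containing W correspond to nontrivial proper
    # subspaces of the quotient V/W, which has dimension n - k.
    above = sum(gaussian_binomial(n - k, i, q) for i in range(1, n - k))
    return below + above


if __name__ == "__main__":
    print([degree(4, k, 2) for k in (1, 2, 3)])
```

For instance, with \(n=3\) and \(q=2\) every vertex has degree 3: each point of the Fano plane lies on three lines and each line contains three points.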

To date, much research, e.g., [1, 3, 4, 5, 6, 7, 8, 10, 11], has been devoted to connecting graph structures with subspaces of vector spaces.

Automorphisms of graphs are important in algebraic graph theory because they reveal the relationship between the vertices of the graph. Automorphisms of the zero-divisor graph of a ring R, denoted by \(\Gamma (R)\) and defined as the graph with vertex set \(Z(R){\setminus } \{0\}\) in which there is a directed edge from a vertex x to a distinct vertex y if and only if \(xy=0\), have attracted a lot of attention. In [2], Anderson and Livingston proved that the automorphism group of the zero-divisor graph of \(\mathbb {Z}_n\) is a direct product of symmetric groups for \(n \ge 4\) a nonprime integer. It was shown in [9] that Aut\((\Gamma (R))\) is isomorphic to the symmetric group of degree \(p + 1\), when \(R =M_2(\mathbb {Z}_p)\) with p a prime. Park and Han [12] proved that Aut\((\Gamma (R))\cong S_{q+1}\) for \(R = M_2(F_q)\) with \(F_q\) an arbitrary finite field. In [15], Wong et al. determined the automorphisms of the zero-divisor graph whose vertex set consists of all rank one upper triangular matrices over a finite field. Applying the main theorem in [15], Wang [13, 14] determined the automorphisms of the zero-divisor graph defined on all \(n\times n\) upper triangular matrices and on all \(n\times n\) full matrices, respectively, when the base field is finite.

Now, a natural problem arises: What about the automorphisms of \(\mathcal {I}n(\mathbb {V})\)?

Denote by \(\mathrm{dim}(W)\) the dimension of a subspace W of \(\mathbb {V}\). If \(\mathrm{dim}(\mathbb {V})=1\), then the vertex set V of \(\mathcal {I}n(\mathbb {V})\) is empty; if \(\mathrm{dim}(\mathbb {V})=2\), then \(\mathcal {I}n(\mathbb {V})\) is a graph consisting of some isolated vertices; thus, any permutation on V is an automorphism of \(\mathcal {I}n(\mathbb {V})\). If \(\mathrm{dim}(\mathbb {V})\ge 3\), the situation is quite different. One will find that some nontrivial automorphisms do exist. In this paper, we solve the above problem for the case when the base field is finite.

Unless otherwise mentioned, \(\mathbb {V}\) is an n-dimensional vector space over a finite field \(F_q\) of q elements, where \(q=p^m\) and p is a prime integer. Let \(\{\epsilon _1, \epsilon _2, \ldots , \epsilon _n\}\) be a basis of \(\mathbb {V}\). Every \(\alpha \in \mathbb {V}\) can be uniquely written as \(\alpha =\sum _{i=1}^na_i\epsilon _i\) with \(a_i\in F_q\). If \(\alpha =\sum _{i=1}^na_i\epsilon _i, \beta =\sum _{i=1}^nb_i\epsilon _i\) satisfy \(\sum _{i=1}^n a_ib_i=0\), we write \(\alpha \perp \beta \) to denote that \(\alpha , \beta \) are orthogonal. For a subspace W of \(\mathbb {V}\), set

\[W^\perp =\{\alpha \in \mathbb {V}\,{:}\,\alpha \perp w \text{ for all } w\in W\}.\]

It is easy to see that:

(i) \(W^\perp \) is a subspace of \(\mathbb {V}\); (ii) \((W^\perp )^\perp =W\); (iii) \(\mathrm{dim}(W)+\mathrm{dim}(W^\perp )=\mathrm{dim}(\mathbb {V})\).

We note that \(\mathbb {V}\) possibly fails to be the sum of W and \(W^\perp \) since the intersection of W and \(W^\perp \) possibly contains nonzero vectors. Before announcing the main result of this paper, we introduce three standard automorphisms of \(\mathcal {I}n(\mathbb {V})\).

1.1 Involution of \(\mathcal {I}n(\mathbb {V})\)

Let \(\tau \) be the mapping from V, the vertex set of \(\mathcal {I}n(\mathbb {V})\), to itself, which sends any \(W\in V\) to \(W^\perp \). Then \(\tau ^2\) is the identity mapping on V, which implies that \(\tau \) is a bijection on V. Noting that \(W_1\subset W_2\) if and only if \(W_2^\perp \subset W_1^\perp \), we have \(W_1\sim W_2\) if and only if \(\tau (W_1)\sim \tau (W_2)\). Consequently, \(\tau \) is an automorphism of \(\mathcal {I}n(\mathbb {V})\), which is called the involution of \(\mathcal {I}n(\mathbb {V})\).
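The properties of \(\tau \) used above can be verified exhaustively on small examples. The following sketch assumes the base field is \(F_2\), with vectors stored as n-bit integers (bit i holding the coefficient of \(\epsilon _{i+1}\)) and the orthogonality relation \(\sum a_ib_i=0\) evaluated mod 2; the helper names are illustrative only.

```python
def dot(u, v):
    """Standard bilinear form on F_2^n; vectors are n-bit integers."""
    return bin(u & v).count("1") % 2


def perp(W, n):
    """All vectors orthogonal to every vector of W."""
    return frozenset(v for v in range(1 << n) if all(dot(v, w) == 0 for w in W))


def proper_subspaces(n):
    """Nontrivial proper subspaces of F_2^n, built by adjoining one vector at a time."""
    zero = frozenset({0})
    found, frontier = {zero}, [zero]
    while frontier:
        nxt = []
        for W in frontier:
            for v in range(1, 1 << n):
                if v not in W:
                    U = W | frozenset(x ^ v for x in W)  # span of W and v over F_2
                    if U not in found:
                        found.add(U)
                        nxt.append(U)
        frontier = nxt
    return [W for W in found if 1 < len(W) < (1 << n)]


def tau_is_automorphism(n):
    """Check that W -> W^perp is an involution that reverses proper inclusion."""
    verts = proper_subspaces(n)
    involution = all(perp(perp(W, n), n) == W for W in verts)
    reverses = all((A < B) == (perp(B, n) < perp(A, n)) for A in verts for B in verts)
    return involution and reverses


if __name__ == "__main__":
    print(tau_is_automorphism(3), tau_is_automorphism(4))
    W = frozenset({0, 0b011})          # the line spanned by eps_1 + eps_2
    print(sorted(perp(W, 3)))          # contains W itself
```

The last two lines illustrate the remark that \(\mathbb {V}\) need not be the sum of W and \(W^\perp \): over \(F_2\) the vector \(\epsilon _1+\epsilon _2\) is orthogonal to itself, so \([\epsilon _1+\epsilon _2]\subset [\epsilon _1+\epsilon _2]^\perp \).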

1.2 Invertible Linear Transformation

Let \(X=[x_{ij}]\) be an \(n\times n\) invertible matrix over \(F_q\). Then the mapping \(\theta _X\) on \(\mathbb {V}\) defined by

\[\theta _X\left( \sum _{i=1}^na_i\epsilon _i\right) =(\epsilon _1,\epsilon _2,\ldots ,\epsilon _n)X(a_1,a_2,\ldots ,a_n)^T\]

is an invertible linear transformation on \(\mathbb {V}\). The mapping from V to itself, also written as \(\theta _X\), sending any \(W\in V\) to \( \{\theta _X(w):w\in W\}\) is an automorphism of \(\mathcal {I}n(\mathbb {V})\).

1.3 Field Automorphism

Let f be an automorphism of the base field \(F_q\) and let \(\chi _f\) be the mapping on \(\mathbb {V}\) defined by

\[\chi _f\left( \sum _{i=1}^na_i\epsilon _i\right) =\sum _{i=1}^nf(a_i)\epsilon _i.\]

Then the related mapping, also written as \(\chi _f\), on V sending any \(W\in V\) to \(\{\chi _f(w)\,{:}\,w\in W\}\) is an automorphism of \(\mathcal {I}n(\mathbb {V})\), which is called a field automorphism of \(\mathcal {I}n(\mathbb {V})\).
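To see a field automorphism act on subspaces, one can take the smallest field with a nontrivial automorphism. The sketch below assumes \(F_4=F_2[x]/(x^2+x+1)\) with elements encoded as the integers 0 to 3 (bit patterns of polynomials), takes f to be the Frobenius map \(a\mapsto a^2\), and applies it coordinatewise; all names are illustrative.

```python
def gf4_add(a, b):
    """Addition in F_4: coefficientwise addition mod 2."""
    return a ^ b


def gf4_mul(a, b):
    """Multiplication in F_4 = F_2[x]/(x^2 + x + 1); elements are 0..3."""
    prod = 0
    for i in range(2):
        if (b >> i) & 1:
            prod ^= a << i           # carry-less polynomial product
    if prod & 0b100:                 # reduce the x^2 term using x^2 = x + 1
        prod ^= 0b111
    return prod


def frobenius(a):
    """The nontrivial automorphism a -> a^2 of F_4."""
    return gf4_mul(a, a)


def chi(vec):
    """Apply the Frobenius map to every coordinate of a vector over F_4."""
    return tuple(frobenius(a) for a in vec)


def line(vec):
    """The 1-dimensional subspace spanned by vec, as a set of vectors."""
    return frozenset(tuple(gf4_mul(c, x) for x in vec) for c in range(4))


if __name__ == "__main__":
    w = (1, 2, 0)
    image = frozenset(chi(u) for u in line(w))
    print(image == line(chi(w)))     # chi maps the line [w] onto the line [chi(w)]
```

Note that \(\chi _f\) is only semilinear, \(\chi _f(c\alpha )=f(c)\chi _f(\alpha )\), which is still enough for it to map subspaces to subspaces and preserve inclusion.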

The main result of this paper is the following theorem.

Theorem 1.3

If F is a finite field and \(\mathrm{dim}(\mathbb {V})\ge 3\), then a mapping \(\sigma \) on V is an automorphism of \(\mathcal {I}n(\mathbb {V})\) if and only if \(\sigma \) can be uniquely decomposed either as \(\sigma = \theta _X\circ \chi _f \) or as \(\sigma = \tau \circ \theta _X\circ \chi _f\), where \(\tau , \theta _X\) and \(\chi _f\) are as above.

Corollary 1.4

Let \(F=F_q\) with \(q=p^m\), where p is a prime integer. If \(\mathrm{dim}(\mathbb {V})\ge 3\), then the automorphism group of \(\mathcal {I}n(\mathbb {V})\) is isomorphic to a semidirect product of \(PGL_n(F_q)\) by \(\mathbb {Z}_2\times \mathbb {Z}_m\), that is, \(\mathrm{Aut}(\mathcal {I}n(\mathbb {V}))\cong PGL_n(F_q)\rtimes (\mathbb {Z}_2\times \mathbb {Z}_m)\), where \(PGL_n(F_q)\) is the quotient of the group of all \(n\times n\) invertible matrices over \(F_q\) by the normal subgroup of all nonzero scalar matrices over \(F_q\).
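Corollary 1.4 immediately gives the order of the automorphism group, namely \(2m\cdot |PGL_n(F_q)|\). The following sketch computes it, using the standard formula \(|GL_n(F_q)|=\prod _{i=0}^{n-1}(q^n-q^i)\); the function names are hypothetical.

```python
def gl_order(n, q):
    """Order of GL_n(F_q): count ordered bases of F_q^n."""
    order = 1
    for i in range(n):
        order *= q ** n - q ** i
    return order


def aut_order(n, p, m):
    """Order of Aut(In(V)) predicted by Corollary 1.4 for dim V = n >= 3, q = p^m."""
    if n < 3:
        raise ValueError("Corollary 1.4 requires dim V >= 3")
    q = p ** m
    pgl = gl_order(n, q) // (q - 1)   # quotient by the nonzero scalar matrices
    return 2 * m * pgl


if __name__ == "__main__":
    print(aut_order(3, 2, 1))
```

For \(n=3\) and \(q=2\) this gives \(2\cdot 168=336\), which agrees with the known order of the automorphism group of the Heawood graph (the incidence graph of the Fano plane).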

We will give a proof for Theorem 1.3 and Corollary 1.4 in the next section.

2 Proof of Theorem 1.3

The subspace spanned by a subset S of \(\mathbb {V}\) is denoted by [S]. A 1-dimensional subspace W can be written as \(W=[\alpha ]\) with \(\alpha =\sum _{i=1}^na_i\epsilon _i\) a nonzero vector of \(\mathbb {V}\). As \([\alpha ]=[b\alpha ]\) for any \(0\not =b\in F_q\), the expression is not unique. However, if we require that the first nonzero coefficient of \(\alpha \) be 1, that is, \(\alpha \) is of the form

\[\alpha =\epsilon _i+\sum _{j=i+1}^na_j\epsilon _j,\]

then the expression \(W=[\alpha ]\) is unique. Such an expression of a 1-dimensional subspace is called standard. In what follows, all 1-dimensional subspaces will be expressed in standard form. The degree of a vertex \(W\in V\) in \(\mathcal {I}n(\mathbb {V})\) is denoted by d(W). Before giving a proof for Theorem 1.3 we introduce some lemmas for later use. The first one follows from Lemma 1.2 immediately.
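The standard form is a normalization that is simple to compute. Below is a minimal sketch, assuming a prime field \(F_p\) (so that arithmetic is integer arithmetic mod p) and Python 3.8 or later for the modular inverse `pow(a, -1, p)`; the names `standard_form` and `points` are illustrative.

```python
from itertools import product


def standard_form(vec, p):
    """Scale a nonzero vector over F_p (p prime) so its first nonzero entry is 1."""
    for a in vec:
        if a % p:
            inv = pow(a, -1, p)  # modular inverse, Python 3.8+
            return tuple((inv * x) % p for x in vec)
    raise ValueError("the zero vector spans no 1-dimensional subspace")


def points(n, p):
    """Standard representatives of all 1-dimensional subspaces of F_p^n."""
    return {standard_form(v, p) for v in product(range(p), repeat=n) if any(v)}


if __name__ == "__main__":
    print(standard_form((0, 2, 4), 5))
    print(len(points(3, 3)))
```

Each 1-dimensional subspace has exactly one standard representative, so `points(n, p)` has \((p^n-1)/(p-1)\) elements.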

Lemma 2.1

For \(W, W'\in V\), we have \(d(W)=d(W^\perp )\), and \(d(W')<d(W)\) if \(1\le \mathrm{dim}(W)<\mathrm{dim}(W')\le \frac{n}{2}\).

Proof

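Suppose that \(\mathrm{dim}(W)=k\). Then \(\mathrm{dim}(W^\perp )=n-k\); thus, by Corollary 6.2 in [6], we have \(d(W)=d(W^\perp )\). If \(2\le k\le \frac{n}{2}\), then it follows from Lemma 1.2 that the degree of a \((k-1)\)-dimensional subspace U and the degree of a k-dimensional subspace \(U'\), respectively, are

\[d(U)=\sum _{i=1}^{k-2}\left[ \begin{array}{c}k-1\\ i\end{array}\right] _q+\sum _{i=1}^{n-k}\left[ \begin{array}{c}n-k+1\\ i\end{array}\right] _q,\qquad d(U')=\sum _{i=1}^{k-1}\left[ \begin{array}{c}k\\ i\end{array}\right] _q+\sum _{i=1}^{n-k-1}\left[ \begin{array}{c}n-k\\ i\end{array}\right] _q.\]

Further, it can be obtained that

\[d(U)-d(U')=\frac{q^{n-k+1}-q^{k}}{q-1}+\sum _{i=1}^{k-2}\frac{q^{i+1}-q^k}{q^{i+1}-1}\left[ \begin{array}{c}k-1\\ i\end{array}\right] _q+\sum _{i=1}^{n-k-1}\frac{q^{n-k+1}-q^{i+1}}{q^{i+1}-1}\left[ \begin{array}{c}n-k\\ i\end{array}\right] _q.\]

Since \(2\le k\le \frac{n}{2}\), we first have \(n-k-1>k-2\) and \(\frac{q^{n-k+1}-q^{k}}{q-1}> 0\). Next, if we can prove that

\[\frac{q^{i+1}-q^k}{q^{i+1}-1}\left[ \begin{array}{c}k-1\\ i\end{array}\right] _q+\frac{q^{n-k+1}-q^{i+1}}{q^{i+1}-1}\left[ \begin{array}{c}n-k\\ i\end{array}\right] _q>0\quad \text{for } 1\le i\le k-2,\]

then one can easily get \(d(U)-d(U')>0\) and the second assertion follows. In fact, \(\frac{q^{i+1}-q^k}{q^{i+1}-1}<0, \frac{q^{n-k+1}-q^{i+1}}{q^{i+1}-1}>0\) and

\[\frac{q^{i+1}-q^k}{q^{i+1}-1}+\frac{q^{n-k+1}-q^{i+1}}{q^{i+1}-1}=\frac{q^{n-k+1}-q^{k}}{q^{i+1}-1}>0.\]

Moreover, from Lemma 1.2, we see that, for any integers \(n>m\) and \(1\le i\le m\),

\[\left[ \begin{array}{c}n\\ i\end{array}\right] _q>\left[ \begin{array}{c}m\\ i\end{array}\right] _q.\]

Hence, \(\left[ \begin{array}{c}n-k\\ i\end{array}\right] _q > \left[ \begin{array}{c}k-1\\ i\end{array}\right] _q\) for \(2\le k\le \frac{n}{2}\). Consequently, the desired inequality is proved. \(\square \)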
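As a worked numerical illustration of Lemma 2.1, take \(n=6\) and \(q=2\), and write \(d_k\) for the degree of a k-dimensional subspace (a shorthand introduced only for this example). Assuming the degree formula of Lemma 1.2 in the form "number of nontrivial proper subspaces of W plus number of proper subspaces properly containing W", the Gaussian binomial coefficients give

\[\begin{array}{l} d_1=\left[ \begin{array}{c}5\\ 1\end{array}\right] _2+\left[ \begin{array}{c}5\\ 2\end{array}\right] _2+\left[ \begin{array}{c}5\\ 3\end{array}\right] _2+\left[ \begin{array}{c}5\\ 4\end{array}\right] _2=31+155+155+31=372,\\ d_2=\left[ \begin{array}{c}2\\ 1\end{array}\right] _2+\left[ \begin{array}{c}4\\ 1\end{array}\right] _2+\left[ \begin{array}{c}4\\ 2\end{array}\right] _2+\left[ \begin{array}{c}4\\ 3\end{array}\right] _2=3+15+35+15=68,\\ d_3=2\left( \left[ \begin{array}{c}3\\ 1\end{array}\right] _2+\left[ \begin{array}{c}3\\ 2\end{array}\right] _2\right) =2(7+7)=28. \end{array}\]

Thus \(d_1>d_2>d_3\), and by the symmetry \(k\leftrightarrow n-k\) of the formula, \(d_4=d_2=68\) and \(d_5=d_1=372\). In particular, a vertex of degree 372 in this graph must have dimension 1 or 5, which is the way the lemma is used in the proof of Theorem 1.3.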

Lemma 2.2

Let \(\sigma \) be an automorphism of \(\mathcal {I}n(\mathbb {V})\). If \(\sigma \) sends a 1-dimensional subspace to a 1-dimensional subspace, then \(\sigma \) sends every k-dimensional subspace to a subspace of equal dimension, where \(1\le k\le n-1\).

Proof

Suppose \(\sigma \) sends a 1-dimensional subspace \([\alpha _1]\) to a 1-dimensional subspace. Firstly, we consider the case when \(k=1\). Let \([\alpha ]\) be a 1-dimensional subspace different from \([\alpha _1]\). Extend \(\alpha _1, \alpha \) to a basis \(\alpha _1, \alpha , \alpha _3, \ldots , \alpha _n\) of \(\mathbb {V}\), where \(n=\mathrm{dim}(\mathbb {V})\). Then the following \(n-1\) vertices:

\[[\alpha _1],\ [\alpha _1,\alpha ],\ [\alpha _1,\alpha ,\alpha _3],\ \ldots ,\ [\alpha _1,\alpha ,\alpha _3,\ldots ,\alpha _{n-1}]\]

form a maximum clique of \(\mathcal {I}n(\mathbb {V})\) (see the proof for Theorem 5.1 in [6]). The image of this clique under \(\sigma \) is also a maximum clique of \(\mathcal {I}n(\mathbb {V})\). Since \(\sigma \) sends \([\alpha _1]\) to a 1-dimensional subspace of \(\mathbb {V}\), the image of \([\alpha _1,\alpha ,\alpha _3,\ldots ,\alpha _{n-1}]\) under \(\sigma \) must be an \((n-1)\)-dimensional subspace of \(\mathbb {V}\) (otherwise, it follows from Lemma 2.1 that the dimension of this space is 1 and thus this space is not adjacent to the image of \([\alpha _1]\) under \(\sigma \), a contradiction). The following \(n-1\) vertices:

\[[\alpha ],\ [\alpha _1,\alpha ],\ [\alpha _1,\alpha ,\alpha _3],\ \ldots ,\ [\alpha _1,\alpha ,\alpha _3,\ldots ,\alpha _{n-1}]\]

also induce a maximum clique of \(\mathcal {I}n(\mathbb {V})\). Since the image of this clique under \(\sigma \) is also a maximum clique of \(\mathcal {I}n(\mathbb {V})\) and the dimension of the image of \([\alpha _1,\alpha ,\alpha _3,\ldots ,\alpha _{n-1}]\) under \(\sigma \) is \(n-1\), we conclude that the image of \([\alpha ]\) under \(\sigma \) is 1-dimensional (otherwise, by Lemma 2.1, it is \((n-1)\)-dimensional and thus it is not adjacent to the image of \([\alpha _1,\alpha ,\alpha _3,\ldots ,\alpha _{n-1}]\), a contradiction).

Next, we proceed by induction on k, for \(1\le k\le n-1\), to prove that \(\sigma \) sends any k-dimensional subspace to a subspace of equal dimension. The case when \(k=1\) has been proved. Suppose \(\sigma \) sends every i-dimensional subspace to a subspace of equal dimension for \(1\le i\le k-1\). Let W be a k-dimensional subspace. We claim that the dimension of \( \sigma (W)\) is at least k. Otherwise the dimension of \(\sigma (W)\) equals the dimension of a nontrivial proper subspace, say U, of W; thus, \(\sigma (W)\) and \(\sigma (U)\) are not adjacent since they are distinct and have the same dimension (the induction hypothesis implies that \(\mathrm{dim}(\sigma (U))=\mathrm{dim}(U)\)), which contradicts \(U\sim W\). Hence, \(\mathrm{dim}(\sigma (W))\ge \mathrm{dim}(W)\). Observing that \(\sigma ^{-1}\) also sends a 1-dimensional subspace to a 1-dimensional subspace and it sends \(\sigma (W)\) to W, we have

\[\mathrm{dim}(W)=\mathrm{dim}(\sigma ^{-1}(\sigma (W)))\ge \mathrm{dim}(\sigma (W)).\]

Consequently, \(\mathrm{dim}(\sigma (W))=\mathrm{dim}(W)\). \(\square \)

Lemma 2.3

Let \(\sigma \) be an automorphism of \(\mathcal {I}n(\mathbb {V})\) which sends a 1-dimensional subspace of \(\mathbb {V}\) to a 1-dimensional subspace, let W be a k-dimensional subspace of \(\mathbb {V}\) with a basis \(\alpha _1, \alpha _2, \ldots , \alpha _k\), and suppose \(\sigma ([\alpha _i])=[\beta _i]\) for \(1\le i\le k\). If \(\beta _1, \beta _2, \ldots , \beta _k\) are linearly independent, then \(\sigma (W)=[\beta _1, \beta _2, \ldots , \beta _k]\).

Proof

Assume that \(k\ge 2\). For \(1\le i\le k\), applying \(\sigma \) to \([\alpha _i]\subset W\) we have \([\beta _i]\subset \sigma (W)\), which implies that \([ \beta _1, \beta _2, \ldots , \beta _k]\subseteq \sigma (W)\). By Lemma 2.2, the dimension of \(\sigma (W)\) is k, which equals the dimension of \([ \beta _1, \beta _2, \ldots , \beta _k]\); thus, we have \(\sigma (W)=[\beta _1, \beta _2, \ldots , \beta _k]\).\(\square \)

Let I be the \(n\times n\) identity matrix and let \(E_{ij}\), for \(1\le i,j\le n\), be the \(n\times n\) matrix unit whose (i, j) position is 1 and all other positions are 0.

Lemma 2.4

Let \(\sigma \) be an automorphism of \(\mathcal {I}n(\mathbb {V})\) that sends 1-dimensional subspaces to 1-dimensional subspaces, and let \(1\le k\le n\). If \(\sigma \) fixes every \([\epsilon _i]\) for \(i=1,2, \ldots , k-1\), then there exists an invertible matrix A such that \(\theta _{A }\circ \sigma \) fixes every \([\epsilon _j]\) for \(j=1,2, \ldots , k\).

Proof

We only consider the case when \(2\le k\); the case when \(k=1\) can be proved similarly. Denote \([\epsilon _1,\epsilon _2,\ldots ,\epsilon _{k-1}]\) by W. Applying Lemma 2.3, we have \(\sigma (W)=W\). Suppose \(\sigma ([\epsilon _k])=[\alpha ]\) and \(\alpha =\sum _{i=1}^na_i\epsilon _i\). Since \([\epsilon _k]\) and W are not adjacent, neither are their images under \(\sigma \), which implies that \( \alpha \notin W\) and not all of \(a_k,a_{k+1},\ldots ,a_n\) are 0. If \(a_{k}\not =0\), then we take

\[A=I-a_k^{-1}\sum _{i\not =k}a_iE_{ik},\]

and thus \(\theta _{A }\circ \sigma \) fixes \([\epsilon _k]\). If \(a_{k}=0\) and \(a_{ m}\not =0\) for some \(m>k\), let \(P_{km}\) be the permutation matrix obtained from I by interchanging the mth row and the kth row of I, and take

\[A=P_{km}\left( I-a_m^{-1}\sum _{i\not =m}a_iE_{im}\right) ;\]

then \(\theta _{A }\circ \sigma \) fixes \([\epsilon _k]\). Note that \(\theta _{A }\circ \sigma \) also fixes \([\epsilon _i]\) for \(i=1,2, \ldots , k-1\).\(\square \)

Now, we are ready to give a proof for Theorem 1.3.

Proof of Theorem 1.3

The sufficiency of Theorem 1.3 is obvious since a composition of automorphisms of a graph is also an automorphism. For the necessity, we complete the proof by establishing several claims. Let \(\epsilon _1,\epsilon _2,\ldots , \epsilon _n\) be a basis of \(\mathbb {V}\) and let \(\sigma \) be an automorphism of \(\mathcal {I}n(\mathbb {V})\).

Claim 1

Either \(\sigma \) or \(\tau \circ \sigma \) sends \([\epsilon _1]\) to a 1-dimensional subspace of \(\mathbb {V}\).

Clearly, the degree of \(\sigma ([\epsilon _1])\) must equal the degree of \([\epsilon _1]\). Thus by Lemma 2.1, the dimension of \(\sigma ([\epsilon _1])\) is either 1 or \(n-1\). If \(\sigma \) sends \([\epsilon _1]\) to a 1-dimensional subspace of \(\mathbb {V}\), then there is nothing to do. Otherwise, if \(\sigma \) sends \([\epsilon _1]\) to an \((n-1)\)-dimensional subspace of \(\mathbb {V}\), then \(\tau \circ \sigma \) sends \([\epsilon _1]\) to \((\sigma ([\epsilon _1]))^\perp \), which is a 1-dimensional subspace of \(\mathbb {V}\).

If \(\sigma \) sends \([\epsilon _1]\) to an \((n-1)\)-dimensional subspace of \(\mathbb {V}\), we will denote \(\tau \circ \sigma \) by \(\sigma _1\); otherwise, we set \(\sigma _1=\sigma \). Then \( \sigma _1\) sends \([\epsilon _1]\) to a 1-dimensional subspace.

Claim 2

There exists an invertible matrix A such that \(\theta _{A }\circ \sigma _1\) fixes every \([\epsilon _i]\) for \(i=1,2, \ldots , n\).

Applying Lemma 2.4, there is an invertible matrix \(A_1\) such that \(\theta _{A_1}\circ \sigma _1\) fixes \([\epsilon _1]\). Then we can further find an invertible matrix \(A_2\) such that \(\theta _{A_2}\circ \theta _{A_1}\circ \sigma _1\) fixes both \([\epsilon _1]\) and \([\epsilon _2]\). Proceeding in this way, we can find invertible matrices \(A_1, A_2, \ldots , A_n\), in sequence, such that \( \theta _{A_n}\circ \theta _{A_{n-1}}\circ \cdots \circ \theta _{A_1}\circ \sigma _1\) fixes every \([\epsilon _i]\) for \(i=1,2, \ldots , n\). Let \(A=A_nA_{n-1}\cdots A_1\). Then \(\theta _{A }\circ \sigma _1\) is as required.

In the following, we denote \( \theta _{A }\circ \sigma _1\) by \(\sigma _2\). Keep in mind that \(\sigma _2\) sends every nontrivial proper subspace to a subspace of equal dimension (by Lemma 2.2).

Claim 3

For any \(\alpha =\sum _{i=1}^na_i\epsilon _i\not =0\), suppose \(\sigma _2([\alpha ])=[\beta ]\) with \(\beta =\sum _{i=1}^nb_i\epsilon _i\). Then \(b_k=0\) if and only if \(a_k=0\) for \(1\le k\le n\).

Let \(U_k\) denote the subspace spanned by \(\epsilon _1, \ldots , \epsilon _{k-1},\epsilon _{k+1}, \ldots , \epsilon _n\). Then \(\sigma _2\) fixes every \(U_k\) for \(k=1,2, \ldots , n\) (thanks to Lemma 2.3). If \(a_k=0\), then \([\alpha ]\subset U_k\), which implies that \([\beta ]\subset U_k\) and thus \(b_k=0\). By considering \(\sigma _2^{-1}\) we have \(a_k=0\) whenever \(b_k=0\).

For \(1\le i<j\le n\) and \(a\in F_q\), Claim 3 shows that \(\sigma _2\) sends \([\epsilon _i+a\epsilon _j]\) to a vertex of the form \([\epsilon _i+b\epsilon _j]\) with \(b\in F_q\). Thus we can define a function \(f_{ij}\) on \(F_q\) such that \(f_{ij}(0)=0\) and \(\sigma _2([\epsilon _i+a\epsilon _j])=[\epsilon _i+f_{ij}(a)\epsilon _j]\). Next, we will study the properties of \(f_{ij}\).

Claim 4

\(\sigma _2([\epsilon _i+\sum _{j=i+1}^na_j\epsilon _j])=[\epsilon _i+\sum _{j=i+1}^nf_{ij}(a_j)\epsilon _j]\) for \(1\le i<j\le n\).

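Suppose \(\sigma _2([\epsilon _i+\sum _{j=i+1}^na_j\epsilon _j])=[\epsilon _i+\sum _{j=i+1}^nb_j\epsilon _j]\). For \(i<k\le n\), applying \(\sigma _2\) on

\[\left[ \epsilon _i+\sum _{j=i+1}^na_j\epsilon _j\right] \subseteq [\epsilon _i+a_k\epsilon _k,\ \epsilon _{i+1},\ \ldots ,\ \epsilon _{k-1},\ \epsilon _{k+1},\ \ldots ,\ \epsilon _n],\]

then by Lemma 2.3 we have

\[\left[ \epsilon _i+\sum _{j=i+1}^nb_j\epsilon _j\right] \subseteq [\epsilon _i+f_{ik}(a_k)\epsilon _k,\ \epsilon _{i+1},\ \ldots ,\ \epsilon _{k-1},\ \epsilon _{k+1},\ \ldots ,\ \epsilon _n],\]

which implies that \(b_k=f_{ik}(a_k)\) for any \(1\le i<k\le n\).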

Claim 5

Let \(2\le i<j\le n\). Then

(i) \(f_{1j}(ab)=f_{1i}(a)f_{ij}(b)\) for any \(a,b\in F_q\);

(ii) \(f_{1j}(a)=f_{1i}(a)f_{ij}(1)=f_{1i}(1)f_{ij}(a)\) for any \(a\in F_q\). In particular, \(f_{1j}(1)=f_{1i}(1)f_{ij}(1)\).

(iii) \(\frac{f_{1j}(a)}{f_{1j}(1)} =\frac{f_{1i}(a)}{f_{1i}(1)} =\frac{f_{ij}(a)}{f_{ij}(1)}\).

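As \([\epsilon _1]\subset [\epsilon _1+a\epsilon _i+ab\epsilon _j, \epsilon _i+b\epsilon _j]\), applying Lemma 2.3 and Claim 4, we have

\[[\epsilon _1]\subset [\epsilon _1+f_{1i}(a)\epsilon _i+f_{1j}(ab)\epsilon _j,\ \epsilon _i+f_{ij}(b)\epsilon _j],\]

from which it follows that

\[f_{1j}(ab)=f_{1i}(a)f_{ij}(b).\]

Taking \(b=1\), we have

\[f_{1j}(a)=f_{1i}(a)f_{ij}(1).\]

Similarly, we have

\[f_{1j}(a)=f_{1i}(1)f_{ij}(a).\]

If we take \(a=b=1\), we have

\[f_{1j}(1)=f_{1i}(1)f_{ij}(1),\]

which completes the proof of (ii). (iii) follows from (ii) immediately.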

Claim 6

Let \(f=\frac{f_{12}}{f_{12}(1)}\). Then

(i) \(f(a)=\frac{f_{ij}(a)}{f_{ij}(1)}\) for any \(a\in F_q\) and \(1\le i<j\le n\).

(ii) \(f(ab)=f(a)f(b)\) for any \(a,b\in F_q\).

(iii) \(f(1)=1\) and \(f(a^{-1})=f(a)^{-1}\) for \(0\not =a\in F_q\).

(iv) \(f(-1)=-1\) and \(f(-a)=-f(a)\) for any \(a\in F_q\).

(v) \(f(a+b)=f(a)+f(b)\) for any \(a,b\in F_q\).

(vi) f is an automorphism of \(F_q\).

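The definition of f implies that \(f(a)=\frac{f_{12}(a)}{f_{12}(1)}\), and (iii) of Claim 5 implies that

\[\frac{f_{ij}(a)}{f_{ij}(1)}=\frac{f_{1j}(a)}{f_{1j}(1)}=\frac{f_{12}(a)}{f_{12}(1)}=f(a),\]

which proves (i) of this claim.

For any \(a, b\in F_q\),

\[f(ab)=\frac{f_{13}(ab)}{f_{13}(1)}=\frac{f_{12}(a)f_{23}(b)}{f_{12}(1)f_{23}(1)}=f(a)f(b),\]

which proves (ii) of this claim.

The definition of f implies that \(f(1)=1\). If \(a\not =0\), from \(1=f(1)=f(a^{-1}a)=f(a^{-1})f(a)\) we have \(f(a^{-1})=f(a)^{-1}\).

As \(1=f(1)=f((-1)(-1))=f(-1)^2\), we have \(f(-1)=\pm 1\). If \(-1\not =1\) in \(F_q\), then \(f(-1)\not =f(1)=1\) because f is injective (\(\sigma _2\) being a bijection); in either case \(f(-1)=-1\). Further, we have

\[f(-a)=f((-1)a)=f(-1)f(a)=-f(a),\]

which proves (iv).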

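If \(a=0\), (v) is obvious. Assume that \(a\not =0\) and put \(c=a^{-1}b\). By

\[[\epsilon _1+(1+c)\epsilon _3]\subset [\epsilon _1+\epsilon _2+\epsilon _3,\ \epsilon _2-c\epsilon _3],\]

we have

\[[\epsilon _1+f_{13}(1+c)\epsilon _3]\subset [\epsilon _1+f_{12}(1)\epsilon _2+f_{13}(1)\epsilon _3,\ \epsilon _2+f_{23}(-c)\epsilon _3],\]

from which it follows that

\[f_{13}(1+c)=f_{13}(1)-f_{12}(1)f_{23}(-c),\quad \text{that is,}\quad f(1+c)=1-f(-c)=1+f(c).\]

As

\[f(a+b)=f(a(1+a^{-1}b))=f(a)f(1+a^{-1}b)=f(a)\left( 1+f(a^{-1})f(b)\right) ,\]

we further have \(f(a+b)=f(a)+f(b)\).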

(vi) follows from (ii)–(v) immediately.

Claim 7

Set \(f_{11}(1)=1\), then \(\sigma _2([\epsilon _i+\sum _{j=i+1}^na_j\epsilon _j])=[\epsilon _i+\frac{1}{f_{1i}(1)}\sum _{j=i+1}^nf(a_j)f_{1j}(1)\epsilon _j]\) for \(1\le i<j\le n\).

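By Claim 4,

\[\sigma _2\left( \left[ \epsilon _i+\sum _{j=i+1}^na_j\epsilon _j\right] \right) =\left[ \epsilon _i+\sum _{j=i+1}^nf_{ij}(a_j)\epsilon _j\right] .\]

Since \(f_{ij}(a_{j})=f(a_{j})f_{ij}(1)\) and \(f_{ij}(1)=\frac{f_{1j}(1)}{f_{1i}(1)}\), the assertion of this claim is confirmed.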

Claim 8

There is a diagonal matrix D such that \(\chi _f^{-1}\circ \theta _D\circ \sigma _2 \) fixes every 1-dimensional subspace.

Let \(D=\mathrm{diag}(1,f_{12}(1)^{-1},f_{13}(1)^{-1},\ldots , f_{1n}(1)^{-1})\). Then \(\theta _D\circ \sigma _2\) sends any 1-dimensional subspace \([\epsilon _i+\sum _{j=i+1}^na_j\epsilon _j]\) to \([\epsilon _i+\sum _{j=i+1}^nf(a_j)\epsilon _j]\). Further, \(\chi _f^{-1}\circ \theta _D\circ \sigma _2 \) fixes every 1-dimensional subspace. Let \(\sigma _3=\chi _f^{-1}\circ \theta _D\circ \sigma _2\).

Claim 9

\(\sigma _3\) fixes every vertex of \(\mathcal {I}n(\mathbb {V})\).

Claim 8 has shown that \(\sigma _3\) fixes every 1-dimensional subspace of \(\mathbb {V}\). Now, we proceed by induction on the dimension of subspace to prove that \(\sigma _3\) fixes every nontrivial proper subspace of \(\mathbb {V}\). Suppose \(\sigma _3\) fixes every \((k-1)\)-dimensional subspace of \(\mathbb {V}\) and let W be a k-dimensional subspace of \(\mathbb {V}\) with a basis \(w_1,w_2, \ldots , w_k\). The induction hypothesis implies that \(\sigma _3\) fixes \([w_1,w_2,\ldots , w_{k-1}]\). As \([w_k]\subset W,\,[w_1,w_2,\ldots , w_{k-1}]\subset W\), by applying \(\sigma _3\) we have \([w_k]\subset \sigma _3(W),\, [w_1,w_2,\ldots , w_{k-1}]\subset \sigma _3(W)\), and thus \(W\subseteq \sigma _3(W)\). By comparing their dimensions we have \(\sigma _3(W)=W\).

Claim 9 has proved that \(\sigma _3\) is the identity mapping on V. Thus \(\sigma =\tau \circ \theta _X\circ \chi _f\) or \(\sigma =\theta _X\circ \chi _f\), where \(X=A^{-1}D^{-1}\).

Suppose

\[\sigma =\tau ^{\delta _1}\circ \theta _{X_1}\circ \chi _{f_1}=\tau ^{\delta _2}\circ \theta _{X_2}\circ \chi _{f_2}\]

are two decompositions of \(\sigma \), where \(\delta _i=1\) or 0, \(X_1, X_2\) are \(n\times n\) invertible matrices over \(F_q\) and \(f_1, f_2\) are automorphisms of \(F_q\). We first prove that \(\delta _1=\delta _2\). Indeed, if \(\delta _1\not = \delta _2\), say \(\delta _1=1\) and \(\delta _2=0\), then \(\tau ^{\delta _1}\circ \theta _{X_1}\circ \chi _{f_1}\) sends \([\epsilon _1]\) to an \((n-1)\)-dimensional subspace; however, \(\tau ^{\delta _2}\circ \theta _{X_2}\circ \chi _{f_2}\) sends \([\epsilon _1]\) to a 1-dimensional subspace, a contradiction. Thus, \(\delta _1=\delta _2\) and

\[\theta _{X_1}\circ \chi _{f_1}=\theta _{X_2}\circ \chi _{f_2},\]

which further implies that

\[\theta _{X_2^{-1}X_1}=\chi _{f_2}\circ \chi _{f_1}^{-1}.\]

As \(\theta _{X_2^{-1}X_1}\) fixes every \([\epsilon _i]\) for \(i=1,2, \ldots ,n\), we find that \(X_2^{-1}X_1\) is a diagonal matrix. Furthermore, since \(\theta _{X_2^{-1}X_1}\) also fixes every \([\epsilon _i+\epsilon _j]\) for \(i\not =j\), we find that the diagonal matrix must be a nonzero scalar matrix. Hence \(X_2\) must be a nonzero scalar multiple of \(X_1\) and thus \(\theta _{X_2}=\theta _{X_1}\), which implies that \(\chi _{f_2}=\chi _{f_1}\). \(\square \)
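For the smallest admissible case, \(n=3\) and \(q=2\), Theorem 1.3 predicts \(2\cdot |PGL_3(F_2)|\cdot |\mathrm{Aut}(F_2)|=2\cdot 168\cdot 1=336\) automorphisms, and this can be confirmed by exhaustive search. The sketch below is a plain backtracking count, not the method of the proof; it assumes vectors of \(F_2^3\) are stored as 3-bit integers, and the function names are illustrative.

```python
def fano_incidence_graph():
    """In(F_2^3): vertices are the 7 lines {0, v} and 7 planes {0, u, v, u^v}."""
    lines = [frozenset({0, v}) for v in range(1, 8)]
    planes = {frozenset({0, u, v, u ^ v}) for u in range(1, 8) for v in range(u + 1, 8)}
    verts = lines + sorted(planes, key=sorted)
    adj = {W: {U for U in verts if U < W or W < U} for W in verts}
    return verts, adj


def count_automorphisms(verts, adj):
    """Count adjacency-preserving bijections of a connected graph by backtracking."""
    # BFS order: every vertex after the first has an earlier neighbour,
    # which keeps the number of candidate images small.
    order, seen = [verts[0]], {verts[0]}
    for u in order:  # the list grows while we iterate over it
        for w in verts:
            if w in adj[u] and w not in seen:
                seen.add(w)
                order.append(w)
    image, used = {}, set()

    def extend(i):
        if i == len(order):
            return 1
        v = order[i]
        total = 0
        for cand in verts:
            if cand in used:
                continue
            # cand must relate to earlier images exactly as v relates to earlier vertices.
            if all((u in adj[v]) == (image[u] in adj[cand]) for u in order[:i]):
                image[v] = cand
                used.add(cand)
                total += extend(i + 1)
                used.discard(cand)
                del image[v]
        return total

    return extend(0)


if __name__ == "__main__":
    verts, adj = fano_incidence_graph()
    print(len(verts), count_automorphisms(verts, adj))
```

Half of the automorphisms found swap the 1-dimensional and 2-dimensional subspaces; these are the ones with a factor \(\tau \) in their decomposition.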

Proof of Corollary 1.4

Corollary 1.4 follows from Theorem 1.3 and the following observations:

(1) \(\tau \) is of order 2 and it commutes with \(\chi _f\) for any \(f\in \mathrm{Aut}(F_q)\).

(2) The automorphism group of \(F_q\) is a cyclic group of order m, which is isomorphic to the additive group of \(\mathbb {Z}_m\).

(3) \(\theta _X=\theta _Y\) if and only if \(X=aY\) for some nonzero \(a\in F_q\); thus the subgroup of \(\mathrm{Aut}(\mathcal {I}n(\mathbb {V}))\) consisting of all \(\theta _X\) with \(X\in GL_n(F_q)\) is isomorphic to \(PGL_n(F_q)\), the quotient of the group of all \(n\times n\) invertible matrices over \(F_q\) by the normal subgroup of all nonzero scalar matrices over \(F_q\).

(4) The subgroup of \(\mathrm{Aut}(\mathcal {I}n(\mathbb {V}))\) consisting of all \(\theta _X\) with \(X\in GL_n(F_q)\) is a normal subgroup of \(\mathrm{Aut}(\mathcal {I}n(\mathbb {V}))\) since \(\chi _f\cdot \theta _X\cdot \chi _{f^{-1}}=\theta _{f(X)}\) and \(\tau \cdot \theta _X \cdot \tau ^{-1}=\theta _{(X^T)^{-1}}\), where \(X^T\) is the transpose of X.\(\square \)
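Observation (3), which is also the last step of the uniqueness argument for Theorem 1.3, can be illustrated numerically: a diagonal matrix fixes every \([\epsilon _i]\), but it fixes every \([\epsilon _i+\epsilon _j]\) only if it is scalar. The sketch below assumes a prime field \(F_p\) and lets a matrix act on coordinate columns (an assumed convention; for diagonal matrices the choice does not matter). The names are hypothetical.

```python
from itertools import product


def apply(X, vec, p):
    """Coordinates of theta_X(vec): X times the coordinate column of vec, mod p."""
    n = len(vec)
    return tuple(sum(X[i][j] * vec[j] for j in range(n)) % p for i in range(n))


def line(vec, p):
    """The 1-dimensional subspace [vec] of F_p^n as a set of vectors."""
    return frozenset(tuple(c * x % p for x in vec) for c in range(p))


def fixes_all_points(X, p):
    """True if theta_X maps every 1-dimensional subspace of F_p^n to itself."""
    n = len(X)
    return all(
        line(apply(X, v, p), p) == line(v, p)
        for v in product(range(p), repeat=n)
        if any(v)
    )


def diag(entries):
    """Diagonal matrix with the given diagonal entries."""
    n = len(entries)
    return [[entries[i] if i == j else 0 for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    print(fixes_all_points(diag([2, 2, 2]), 3))   # scalar matrix
    print(fixes_all_points(diag([1, 1, 2]), 3))   # moves [eps_2 + eps_3]
```

Among the 64 invertible diagonal \(3\times 3\) matrices over \(F_5\), only the four nonzero scalar matrices pass this test, in line with the kernel of \(X\mapsto \theta _X\) being the group of nonzero scalar matrices.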

Remark

As a topic of further research, one can look into the determining sets and the determining number of the subspace inclusion graph. For the definition of determining sets, one can refer to the paper by Debra L. Boutin, Determining sets, resolving sets, and the exchange property, available at https://arxiv.org/pdf/0808.1427.pdf.

References

1. Akbari, S., Habibi, M., Majidinya, A., Manaviyat, R.: The inclusion ideal graph of rings. Commun. Algebra 43, 2457–2465 (2015)

2. Anderson, D.F., Livingston, P.S.: The zero-divisor graph of a commutative ring. J. Algebra 217, 434–447 (1999)

3. Badawi, A.: On the dot product graph of a commutative ring. Commun. Algebra 43, 43–50 (2015)

4. Das, A.: Non-zero component graph of a finite dimensional vector space. Commun. Algebra 44, 3918–3926 (2016)

5. Das, A.: Subspace inclusion graph of a vector space. Commun. Algebra 44, 4724–4731 (2016)

6. Das, A.: On nonzero component graph of vector spaces over finite fields. J. Algebra Appl. 16, 1750007 (2017)

7. Das, A.: Non-zero component union graph of a finite-dimensional vector space. Linear Multilinear Algebra 65, 1276–1287 (2017)

8. Das, A.: On subspace inclusion graph of a vector space. Linear Multilinear Algebra (2017). https://doi.org/10.1080/03081087.2017.1306016

9. Han, J.: The zero-divisor graph under group actions in a noncommutative ring. J. Korean Math. Soc. 45, 1647–1659 (2008)

10. Laison, J.D., Qing, Y.: Subspace intersection graphs. Discrete Math. 310, 3413–3416 (2010)

11. Nikandish, R., Maimani, H.R., Khaksari, A.: Coloring of a non-zero component graph associated with a finite dimensional vector space. J. Algebra Appl. 16, 1750173 (2017)

12. Park, S., Han, J.: The group of graph automorphisms over a matrix rings. J. Korean Math. Soc. 48, 301–309 (2011)

13. Wang, L.: A note on automorphisms of the zero-divisor graph of upper triangular matrices. Linear Algebra Appl. 465, 214–220 (2015)

14. Wang, L.: Automorphisms of the zero-divisor graph of the ring of matrices over a finite field. Discrete Math. 339, 2036–2041 (2016)

15. Wong, D., Ma, X., Zhou, J.: The group of automorphisms of a zero-divisor graph based on rank one upper triangular matrices. Linear Algebra Appl. 460, 242–258 (2014)

Acknowledgements

The authors are grateful to the referees for their valuable comments, corrections and suggestions.

Additional information

Communicated by Sandi Klavžar.

Dein Wong: Supported by “the National Natural Science Foundation of China (No. 11571360)”.

Cite this article

Wang, X., Wong, D. Automorphism Group of the Subspace Inclusion Graph of a Vector Space. Bull. Malays. Math. Sci. Soc. 42, 2213–2224 (2019). https://doi.org/10.1007/s40840-017-0597-2

DOI: https://doi.org/10.1007/s40840-017-0597-2