Abstract

In this paper, a Pythagorean fuzzy decision-making method based on overall entropy is presented. First, a new definition is proposed for fuzzy entropy for any given Pythagorean fuzzy set (PFS). The proposed definition is based on the relationship between the fuzziness contained in the given PFS and the distance from a point to a line on a projection plane. Some related properties are introduced. Second, the overall entropy of the PFS is determined based on fuzzy entropy and the degree of hesitancy; proofs are presented to formalize some related properties. Third, an entropy weight formula is provided that is based on overall entropy, and a Pythagorean fuzzy decision-making method is developed on this basis. Finally, the effectiveness and practicability of the proposed methods are illustrated by an example and three comparative analyses.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In multi-criteria decision-making (MCDM) problems, criterion weights play an important role in the decision-making process because the proper assignment of criterion weights is conducive to optimally ranking alternatives. However, the weight information of criteria is sometimes unknown in MCDM problem; therefore, finding a method for determining weights is crucial. Various methods have been published in the literature [1,2,3,4,5] for determining weight information, such as the weighted least squares method [1], AHP method [2], principle element analysis [3], and multiple objective programming [3, 4]. Entropy presents a powerful method for determining weight information in MCDM problems where such information is completely unknown. In 1965, Zadeh [6] proposed the concept of fuzzy entropy, which is often used to measure the fuzziness of fuzzy sets (FSs). De Luca and Termini [7] axiomatized fuzzy entropy and defined fuzzy entropy for FSs in 1972.

With the rise of the theory on intuitionistic fuzzy sets (IFSs) [8], numerous achievements [9,10,11,12,13,14] have been made in IFSs. For instance, in 1996, Burille and Bustince [9] proposed the axiomatically defined entropy for IFSs and constructed different entropy formulas for IFSs. In 2001, Szmidt and Kacprzyk [10] extended De Luca and Termini’s definition [7] to IFSs and developed nonprobabilistic entropy for IFSs based on a geometric interpretation of IFSs. In 2016, Zhu and Li [11] presented new axioms of entropy measure in IFSs. Since then, the theory of Pythagorean fuzzy sets (PFSs) [15, 16] was developed to depict the fuzziness of the objective world that IFSs cannot. Consequently, many scholars have studied the entropy measure of PFSs [17,18,19,20]. For example, Yang and Hussain [17] proposed four entropy measures of PFSs based respectively on probability, distance, Pythagorean index, and a min–max operation. Xue et al. [18] presented a new entropy measure for PFSs based on similarity and hesitancy. Wan et al. [19] constructed an entropy measure of PFSs based on the axiomatic definition proposed by Szmidt and Kacprzyk [10]. Peng et al. [20] formalized an axiomatic definition of entropy measure for PFSs and provided 12 entropies of PFSs based on the axiomatic definition of entropy measure given by Burille and Bustince [9]. However, the aforementioned entropy measures of PFSs do not satisfy \(E(P^{\frac{1}{2}})>E(P)\) in some cases, so they do not satisfy the requirement for acceptable performance (which is explained thoroughly in Sect. 5.1). The main contributions of this paper are as follows:

-

A new entropy measure for PFSs is constructed to improve the aforementioned entropies [17,18,19,20].

-

Based on the proposed overall entropy, a new Pythagorean fuzzy decision-making method is presented to solve MCDM problems with completely unknown weight information.

The remainder of this paper is organized as follows: Sect. 2 reviews some basic notions of PFSs and the axiomatic definition of entropy measure of PFSs. Section 3 gives the fuzzy entropy and overall entropy of PFSs according to the degree of hesitancy and the relationship between distance and fuzziness, and introduces their related properties. Section 4 presents a Pythagorean fuzzy decision-making method based on overall entropy, and explains its specific application with an example. Section 5 provides three comparative analyses to illustrate the effectiveness and practicability of the proposed methods. Section 6 concludes the paper.

2 Preliminaries

2.1 Pythagorean Fuzzy Sets

Yager [15, 16] proposed the notion of PFSs, Zhang and Xu [21] elaborated Yager’s notions to offer a more general definition of PFSs.

Definition 1

Let a set X be a universe of discourse, a PFS P in X is an object having the form

where \(\mu _P:X\rightarrow [0,1]\) and \(\nu _P:X\rightarrow [0,1]\) with the condition \(0\le (\mu _P(x))^{2}+(\nu _P(x))^{2}\le 1\). The numbers \(\mu _P(x)\) and \(\nu _P(x)\) represent, respectively, the degree of membership and nonmembership of element x to set P. \(\pi _P(x)= (1-(\mu _P(x))^{2}-(\nu _P(x))^{2})^{\frac{1}{2}}\) is called the degree of indeterminacy, or hesitancy, of x to P. Let PFS(X) be the set of all PFSs in X.

For convenience, the pair \(P(\mu _P(x),\nu _P(x))\) is called a Pythagorean fuzzy number (PFN) and simply denoted by \(\beta =P(\mu _{\beta },\nu _{\beta })\), where \(\mu _{\beta }\in [0,1],\nu _{\beta }\in [0,1]\) and \(0\le (\mu _{\beta })^{2}+(\nu _{\beta })^{2}\le 1\).

Based on the aforementioned definition, Zhang et al. [21] and Peng et al. [22] have explained how to compare two PFNs in terms with a score function and an accuracy function.

Definition 2

Let \(\beta =P(\mu _{\beta },\nu _{\beta })\) be a PFN, then the score function s and accuracy function h of \(\beta \) can be defined as follows:

Theorem 1

Let\(\beta _{1}=P(\mu _{\beta _{1}},\nu _{\beta _{1}})\), \(\beta _{2}=P(\mu _{\beta _{2}},\nu _{\beta _{2}})\)be two PFNs and\(s(\beta _{1})\), \(s(\beta _{2})\), \(h(\beta _{1})\), and\(h(\beta _{2})\)be the scores and accuracy values of\(\beta _{1}\)and\(\beta _{2}\)respectively, then

-

1.

If\(s(\beta _{1})<s(\beta _{2})\), then\(\beta _{1}\prec \beta _{2}\);

-

2.

If\(s(\beta _{1})=s(\beta _{2})\), then

-

a.

If\(h(\beta _{1})<h(\beta _{2})\), then\(\beta _{1}\prec \beta _{2}\);

-

b.

If\(h(\beta _{1})=h(\beta _{2})\), then\(\beta _{1}\sim \beta _{2}\).

-

a.

Using the PFN algorithm, for any positive real number n and PFS P, Yang and Hussain [17] defined a PFS \(P^{n}\) as follows:

where \((\mu _{P}(x))^{n}\in [0,1]\) and \(\sqrt{1-(1-(\nu _{P}(x))^{2})^{n}}\in [0,1]\) respectively denote the degree of membership and nonmembership of element x to set \(P^{n}\) and satisfy the condition \(0\le ((\mu _{P}(x))^{n})^{2}+(\sqrt{1-(1-(\nu _{P}(x))^{2})^{n}})^{2}\le 1\). In addition, \(\pi _{P^{n}}(x)=\sqrt{(1-(\nu _{P}(x))^{2})^{n}-(\mu _{P}(x))^{2n}}\) denotes the degree of indeterminacy, or hesitancy, of the element \(x\in X\) to the set \(P^{n}\).

Remark 1

In fact, \(P^{n}\) is also a PFS on X.

Let \(\psi (x)=(\mu _{P}(x))^{2n}+(\sqrt{1-(1-(\nu _{P}(x))^{2})^{n}})^{2}\)\(=1+(\mu _{P}(x))^{2n}-(1-(\nu _{P}(x))^{2})^{n}\) because \(\psi (x)=1+(\mu _{P}(x))^{2n}-(1-(\nu _{P}(x))^{2})^{n} \le 1+(\mu _{P}(x))^{2n}-(\mu _{P}(x))^{2}+(\nu _{P}(x))^{2}-(\nu _{P}(x))^{2})^{n} =1\) and \(\psi (x)=1+(\mu _{P}(x))^{2n}-(1-(\nu _{P}(x))^{2})^{n}\ge 1+(\mu _{P}(x))^{2n}-1=(\mu _{P}(x))^{2n}\ge 0\), that is, \(\psi (x)\in [0,1]\).

2.2 Axiomatic Definition of Entropy of PFSs

De Luca and Termini [7] formalized an axiomatic definition of fuzzy entropy for FSs. Szmidt and Kacprzyk [10] extended it to an entropy measure of IFSs. Because the PFS developed by Yager [15, 16] is a generalized form of an IFS, Peng et al. [20] used a concept similar to IFSs to give an axiomatic definition of entropy measure of PFSs.

Definition 3

For any \(M, N\in {\text{PFS}}(X)\), an entropy measure E(M) is a mapping \(E: {\text{PFS}}(X)\rightarrow [0, 1]\) that satisfies the following axioms:

- (E1):

-

(Nonnegativity) \(0\le E(M)\le 1\);

- (E2):

-

(Minimality) \(E(M)=0\) iff M is a crisp set;

- (E3):

-

(Maximality) \(E(M)=1\) iff \(\mu _{M}(x)=\nu _{M}(x)\);

- (E4):

-

(Symmetry) \(E(M)=E(M^{c})\);

- (E5):

-

(Resolution) \(E(M)\le E(N)\) if M is less fuzzy than N, that is, \(\mu _{M}(x) \le \mu _{N}(x) \le \nu _{N}(x)\le \nu _{M}(x)\), or \(\nu _{M}(x) \le \nu _{N}(x)\le \mu _{N}(x)\le \mu _{M}(x)\).

Here, \(M^{c}=\{\langle x, \nu _{M}(x),\mu _{M}(x)\rangle |x\in X\}\). M is a crisp set, which means that \(\mu _{M}(x)=1\), \(\nu _{M}(x)=0\) or \(\mu _{M}(x)=0\), \(\nu _{M}(x)=1\).

By considering the characteristics of linguistic variables, for any \(P\in {\text{PFS}}(X)\), if we regard P as “\(LARGE\)” in X, then employing Eq. (3), we consider the following:

\(P^{\frac{1}{2}}\) may be treated as “\(More \ or \ less \ LARGE.\)”

\(P^{2}\) may be treated as “\(Very \ LARGE.\)”

\(P^{\frac{5}{2}}\) may be treated as “\(Quite \ very \ LARGE.\)”

\(P^{3}\) may be treated as “\(Very \ very \ LARGE.\)”

From an intuitive perspective, Yang and Hussain [17] determined that the entropy measures of PFSs ought to satisfy the following requirement for acceptable performance:

Some entropy measures of PFSs [17,18,19,20] do not satisfy the Sequence (4) under some cases. Therefore, we propose a new entropy measure of PFSs that considers both fuzziness and the degree of hesitancy to improve entropy measure, and we compare the proposed entropy measure with existing entropy measures in Sect. 5.1.

3 Entropy of Pythagorean Fuzzy Sets

In this section, fuzzy entropy of PFSs is proposed that is based on the relationship between distance and fuzziness in a geometrical interpretation of PFNs. On this basis, the degree of hesitancy is applied to define the overall entropy of PFSs.

3.1 Fuzzy Entropy of PFSs

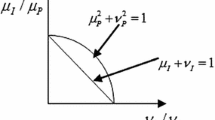

In the following, the fuzzy entropy of PFSs based on the relationship between the fuzziness included in the PFS and the distance from a point to a line on a projection plane is introduced. For convenience of explanation, we explain the geometric meaning of a PFS in terms of a PFN corresponding to a fixed x.

-

Geometric interpretation of PFNs

As illustrated in Fig. 1, for any PFN \(P(\mu ,\nu )\), \(\mu \), \(\nu \), \(\pi \in [0,1]\) and \(\mu ^2+\nu ^2+\pi ^2=1\), thus, the image of PFNs in the three-dimensional coordinate system is the spherical surface \(A_{1}C_{1}B_{1}\). The orthogonal projection of spherical surface \(A_{1}C_{1}B_{1}\) provides a representation of PFNs on a plane, that is, the sector \(A_{2}C_{2}B_{2}\). The orthogonal projections of arcs \(E_{1}F_{1}\), \(C_{1}D_{1}\), and \(G_{1}H_{1}\) are respectively segments \(E_{2}F_{2}\), \(C_{2}D_{2}\), and \(G_{2}H_{2}\). Furthermore, any coordinate in the spherical surface \(A_{1}C_{1}B_{1}\) can be represented in the sector \(A_{2}C_{2}B_{2}\).

-

Relationship between fuzziness and distance

By analyzing Fig. 1, we can determine that points \(A_{2}(1,0)\) and \(B_{2}(0,1)\) are the crisp points, they respectively represent that the corresponding fixed x fully belongs to the PFS and fully does not belong to the PFS, and thus, their fuzziness is \(0\%\). From point \(A_{2}\) to point \(B_{2}\) along arc \(A_{2}B_{2}\), the degrees of membership decreases, and the degree of non-membership increases. For the midpoint \(D_{2}\), the degree of membership and non-membership are both equal to \(\frac{\sqrt{2}}{2}\); at this point, we cannot determine whether the corresponding fixed x belongs to the PFS, and therefore, the fuzziness of the midpoint \(D_{2}\) is \(100\%\). That is, the fuzziness along arc \(A_{2}D_{2}\) increases from 0 to \(100\%\). Similarly, the fuzziness along arc \(D_{2}B_{2}\) decreases from 100 to \(0\%\).

Furthermore, we determine that the fuzziness of any point on segment \(C_{2}D_{2}\) is \(100\%\), which means that the fuzzy entropy of any point on segment \(C_{2}D_{2}\) is equal to 1. In sector \(A_{2}C_{2}B_{2}\), for any points \(P_{1}\) and \(P_{2}\), if the distance from point \(P_{1}\) to segment \(C_{2}D_{2}\) is equal to the distance from point \(P_{2}\) to segment \(C_{2}D_{2}\), then the fuzziness of these two points is equal. In other words, the fuzzy entropy of point \(P_{1}\) is equal to that of point \(P_{2}\). Therefore, in the sector \(A_{2}C_{2}B_{2}\), we judge the fuzziness of a point according to the distance from the point to segment \(C_{2}D_{2}\) and then determine the fuzzy entropy of the point.

-

Origin of fuzzy entropy of PFSs

The distance from the point to segment \(C_{2}D_{2}\) is calculated using Eq. (5), which expresses the distance from a point to a line:

$$\begin{aligned} D({\hat{P}}, l)=\frac{|A\mu _{{\hat{P}}}+B\nu _{{\hat{P}}}+C|}{\sqrt{A^{2}+B^{2}}} \end{aligned}$$(5)where point \({\hat{P}}=(\mu _{{\hat{P}}},\nu _{{\hat{P}}})\), and the equation of line l is denoted by \(A\mu +B\nu +C=0\) (where A, B, and C are arbitrary constants, \(A\ne 0\) or \(B\ne 0)\).

Therefore, in the sector \(A_{2}C_{2}B_{2}\), the equation of segment \(C_{2}D_{2}\) is \(\mu -\nu =0\), for a point \({\hat{P}}=(\mu _{{\hat{P}}},\nu _{{\hat{P}}})\), from Eq. (5), and the distance from point \({\hat{P}}\) to segment \(C_{2}D_{2}\) can be obtained as follows:

$$\begin{aligned} D({\hat{P}},C_{2}D_{2})=\frac{|\mu _{{\hat{P}}}-\nu _{{\hat{P}}}|}{\sqrt{2}}. \end{aligned}$$(6)In the sector \(A_{2}C_{2}B_{2}\), points \(A_{2}\) and \(B_{2}\) are the farthest from segment \(C_{2}D_{2}\):

$$\begin{aligned}&D(A_{2},C_{2}D_{2})=D(B_{2},C_{2}D_{2})=\max (D({\hat{P}},C_{2}D_{2}))\nonumber \\&\quad =\frac{\sqrt{2}}{2}. \end{aligned}$$(7)The greater the distance is from segment \(C_{2}D_{2}\), the smaller the fuzziness and fuzzy entropy must be. To guarantee that the fuzzy entropy is negatively correlated with distance and is evaluated on a closed unit interval, the fuzzy entropy of point \({\hat{P}}\) is defined as

$$\begin{aligned} E^{*}({\hat{P}}) & =1-\frac{D({\hat{P}},C_{2}D_{2})}{D(A_{2},C_{2}D_{2})}=1-\frac{D({\hat{P}},C_{2}D_{2})}{D(B_{2},C_{2}D_{2})} \\ & \quad =1-|\mu _{{\hat{P}}}-\nu _{{\hat{P}}}| .\end{aligned}$$(8) -

New definition of fuzzy entropy of PFSs

Through analysis of the correlation between PFNs and PFSs, the following definition of fuzzy entropy of the PFS can be derived. In addition, because the entropy proposed is to applied to decision-making problems, to maintain generality, we make \(X=\{x_{1},x_{2},\ldots , x_{n}\}\) here.

Definition 4

A real function \(E^{*}: {\text{PFS}}(X)\rightarrow [0, 1]\) is a fuzzy entropy on PFS(X), for a PFS P in X, the fuzzy entropy \(E^{*}(P)\) is defined as follows:

Remark 2

Equation (9) satisfies axioms (E1)–(E4) in Definition 3. In addition, for any \(M,N\in {\text{PFS}}(X)\), if M is less fuzzy than N, we assume \(\mu _{M}(x_{i})\le \mu _{N}(x_{i}) \le \nu _{N}(x_{i})\le \nu _{M}(x_{i})\), we can obtain

Because \(\nu _{M}(x_{i})-\nu _{N}(x_{i})\ge 0\) and \(\mu _{N}(x_{i})-\mu _{M}(x_{i})\ge 0\), \(E(N)-E(M)\ge 0\), that is, \(E(N)\ge E(M)\), the other case can be similarly proved. So the fuzzy entropy \(E^{*}(P)\) satisfies Definition 3.

As previously described, the fuzzy entropy should satisfy the following proposition.

Proposition 1

A real function\(E^{*}\): PFS(X)\(\rightarrow [0, 1]\)is a fuzzy entropy onPFS(X), possessing the following properties:

-

1.

In the sector\(A_{2}C_{2}B_{2}\), the point closest to segment\(C_{2}D_{2}\)has the highest fuzzy entropy, that is, for any\(Q_{1}\in C_{2}D_{2}\), there exists some\(E^{*}(Q_{1})=1\);

-

2.

In the sector\(A_{2}C_{2}B_{2}\), the point farthest from segment\(C_{2}D_{2}\)has the lowest fuzzy entropy, that is,\(E^{*}(A_{2})=E^{*}(B_{2})=0\);

-

3.

In the sector\(A_{2}C_{2}B_{2}\), if segment\(E_{2}F_{2}\)is parallel to segment\(C_{2}D_{2}\), for any\(P_{1}, P_{2}\in E_{2}F_{2}\), \(E^{*}(P_{1})=E^{*}(P_{2})\);

-

4.

In the sector\(A_{2}C_{2}B_{2}\), if segments\(E_{2}F_{2}\)and\(G_{2}H_{2}\)are equidistant from segment\(C_{2}D_{2}\), for any\(P_{1}\in E_{2}F_{2}, Q_{2}\in G_{2}H_{2}\), \(E^{*}(P_{1})=E^{*}(Q_{2})\).

3.2 Overall Entropy of PFSs

Because the degree of hesitancy indicates the reliability of information, it is used to measure the degree of certainty for the relevant information. Therefore, including the degree of hesitancy in the definition of entropy can better measure the total uncertainty contained in a PFS. Xue et al. [18] applied the degree of hesitancy to the entropy of PFSs, but it did not distinguish between different PFSs in some cases (which was explained in Ref. [17]). Here, a new definition of overall entropy of PFSs is given that combines the degree of hesitancy and the aforementioned fuzzy entropy.

Definition 5

A real function \(E:PFS(X)\rightarrow [0,1]\) is an overall entropy on PFS(X), for a PFS P in X, the overall entropy E(P) is defined as follows:

where \(P_{i}\) is a separate element from PFS P, that is, \(E^{*}(P_{i})=1-|\mu _{P}(x_{i})-\nu _{P}(x_{i})|\).

Obviously, the overall entropy E(P) satisfies the following monotonicity.

Remark 3

A real function \(E: PFS(X)\rightarrow [0,1]\) is an overall entropy on PFS(X), having the following properties:

-

1.

Positive correlation: The overall entropy increases monotonically with the increase of hesitancy degree.

-

2.

Negative correlation: In the sector \(A_{2}C_{2}B_{2}\), the greater the distance from segment \(C_{2}D_{2}\) is, the smaller the overall entropy is.

The proof of Remark 3 is apparent from the proposed overall entropy formula. In addition, we provide Fig. 2 to illustrate the relationship among \(E(M_{i}), \mu _{M}(x_{i})\) and \(\nu _{M}(x_{i})\); here, \(M_{i}\) is a separate element from PFS M.

Theorem 2

The overall entropyEonPFS(X) satisfies axioms (E1)–(E5) in Definition 3.

Proof

Verifying that E satisfies axioms (E1)–(E4) is simple. We need only to prove that E satisfies axiom (E5). \(\square \)

(E5) For any \(M, N\in PFS(X)\), if M is less fuzzy than N, we assume \(\mu _{M}(x_{i}) \le \mu _{N}(x_{i}) \le \nu _{N}(x_{i})\le \nu _{M}(x_{i})\), then

Suppose \(\delta =\frac{1}{n}\sum \limits ^{n}_{i=1}[(1-\pi _{M}(x_{i}))(\nu _{M}(x_{i})-\mu _{M}(x_{i}))-(1-\pi _{N}(x_{i}))(\nu _{N}(x_{i})-\mu _{N}(x_{i}))]\). To prove more intuitively that the entropy measure satisfies axiom (E5), we construct the following model 1:

Model 1 \(\min\{\delta \}\)

s.t. \(\left\{ \begin{array}{ll} \mu _{M}(x_{i})\ge 0 \\ \mu _{N}(x_{i})\ge \mu _{M}(x_{i}) \\ \nu _{N}(x_{i})\ge \mu _{N}(x_{i}) \\ \nu _{M}(x_{i})\ge \nu _{N}(x_{i}) \\ \nu _{M}(x_{i})\le 1 \\ (\mu _{M}(x_{i}))^{2}+(\nu _{M}(x_{i}))^{2}\ge 0 \\ (\mu _{M}(x_{i}))^{2}+(\nu _{M}(x_{i}))^{2}\le 1 \\ (\mu _{N}(x_{i}))^{2}+(\nu _{N}(x_{i}))^{2}\ge 0 \\ (\mu _{N}(x_{i}))^{2}+(\nu _{N}(x_{i}))^{2}\le 1 \end{array} \right. \)

A LINGO program was developed to solve Model 1, the results are shown in Fig. 3. Here, \(A=\mu _{M}(x_{i})\), \(B=\mu _{N}(x_{i})\), \(C=\nu _{N}(x_{i})\), and \(D=\nu _{M}(x_{i})\).

It is obvious from Fig. 3 that at least when \(\mu _{M}(x_{i})=\mu _{N}(x_{i})=\nu _{N}(x_{i})=\nu _{M}(x_{i})=0\), there exists \(\min\{\delta \}=0\), that is \(E(N)-E(M)\ge 0\), \(E(N)\ge E(M)\). The other case can be proved in a similar manner. Therefore, The overall entropy satisfies Definition 3.

Theorem 3

LetMandNbe two PFSs defined in\(X=\{x_{1},x_{2},\ldots ,x_{n}\}\), for any\(x_{i}\in X\), either\(M\subseteq N\)or\(M\supseteq N\), the following property holds:

Proof

When \(M\subseteq N\), \(\mu _{M}(x_{i})\le \mu _{N}(x_{i})\), and \(\nu _{M}(x_{i})\ge \nu _{N}(x_{i})\). Therefore

Thus, \(E(M\cup N)+E(M\cap N)=E(M)+E(N)\) holds. The other case can be similarly proved. \(\square \)

Corollary 1

For any\(M\in PFS(X)\), the following properties hold true:

4 Application of Overall Entropy of PFSs in MCDM

Compared with other methods for determining unknown weights, the entropy weight method [23] is more commonly used. Therefore, in this section, an entropy weight method under a Pythagorean fuzzy environment is given that is based on the proposed overall entropy:

On this basis, a decision-making method is introduced that combines the entropy weight method and the technique for order preference by similarity to the ideal solution (TOPSIS) method [24]. An example is provided to illustrate the effectiveness and practicality of this decision-making method.

4.1 Decision-Making Method

In a MCDM problem, suppose \(A=\{A_1, A_2,...,A_m\}\) is a set of alternatives, \(C=\{c_1, c_2,...,c_n\}\) is a finite set of criteria, whose weights are completely unknown. Let \(R=(\gamma _{ij})_{m\times n} \) be a Pythagorean fuzzy decision matrix, \(\gamma _{ij}=P(\mu _{\gamma _{ij}},\nu _{\gamma _{ij}})\) is the evaluation value of alternative \(A_{i}\) under criterion \(c_{j}\) provided by the decision maker, where \(\mu _{\gamma _{ij}}\) and \(\nu _{\gamma _{ij}}\) respectively indicate the degrees of satisfaction and dissatisfaction of the decision maker for the alternative \(A_{i}\) with respect to the criterion \(c_{j}\), such that \(\mu _{\gamma _{ij}}\in [0,1]\), \(\nu _{\gamma _{ij}}\in [0,1]\) and \(0 \le (\mu _{\gamma _{ij}})^{2}+ (\nu _{\gamma _{ij}})^{2}\le 1\).

We now develop an algorithm of the Pythagorean fuzzy MCDM method based on the aforementioned decision-making information, which involves the following steps:

- Step 1::

-

Utilize Eq. (10) to compute the overall entropy of each criterion, and then use Eq. (13) to obtain the weight of each criterion.

- Step 2::

-

Employ Eq. (2) and Theorem 1 to respectively determine the Pythagorean fuzzy postive ideal solution (PIS) \(\gamma ^{+}\) and Pythagorean fuzzy negative ideal solution (NIS) \(\gamma ^{-}\) of each alternative as follows:

$$\begin{aligned} \gamma ^{+}=(\gamma _{1}^{+},\gamma _{2}^{+},...,\gamma _{j}^{+},...,\gamma _{n}^{+}) \end{aligned}$$(14)where \(\gamma _{j}^{+}\) must satisfy \(\max \limits _{i}(s(\gamma _{ij}))\), if \(s(\gamma _{ij})=s(\gamma _{kj})(i,k=1,2,...,m)\), then satisfy \(\max \limits _{i}(h(\gamma _{ij}))\).

$$\begin{aligned} \gamma ^{-}=(\gamma _{1}^{-},\gamma _{2}^{-},...,\gamma _{j}^{-},...,\gamma _{n}^{-}) \end{aligned}$$(15)where \(\gamma _{j}^{-}\) must satisfy \(\min \limits _{i}(s(\gamma _{ij}))\), if \(s(\gamma _{ij})=s(\gamma _{kj})(i,k=1,2,...,m)\), then satisfy \(\min \limits _{i}(h(\gamma _{ij}))\).

- Step 3::

-

Use Eqs. (16) and (17) to compute the distances between alternatives \(A_{i} (i=1,2,\ldots ,m)\) and PIS \(\gamma ^{+}\) and between alternatives \(A_{i} (i=1,2,\ldots ,m)\) and NIS \(\gamma ^{-}\), respectively.

$$\begin{aligned} D(A_{i},\gamma ^{+})= & {} [\frac{1}{2}\sum \limits _{j=1}^{n}w_{j}((\mu _{\gamma _{ij}}-\mu _{\gamma _{j}^{+}})^{2}+(\nu _{\gamma _{ij}}-\nu _{\gamma _{j}^{+}})^{2}\nonumber \\&+(\pi _{\gamma _{ij}}-\pi _{\gamma _{j}^{+}})^{2})]^{\frac{1}{2}} \end{aligned}$$(16)$$\begin{aligned} D(A_{i},\gamma ^{-})= & {} [\frac{1}{2}\sum \limits _{j=1}^{n}w_{j}((\mu _{\gamma _{ij}}-\mu _{\gamma _{j}^{-}})^{2}+(\nu _{\gamma _{ij}}-\nu _{\gamma _{j}^{-}})^{2}\nonumber \\&+(\pi _{\gamma _{ij}}-\pi _{\gamma _{j}^{-}})^{2})]^{\frac{1}{2}} \end{aligned}$$(17) - Step 4::

-

Utilize Eq. (18) to calculate the relative degree of closeness \(\phi (A_{i})\) of each alternative.

$$\begin{aligned} \phi (A_{i})=\frac{D(A_{i},\gamma ^{-})}{D(A_{i},\gamma ^{-})+D(A_{i},\gamma ^{+})} \end{aligned}$$(18) - Step 5::

-

Rank alternatives \(A_{i}\) according to the relative closeness \(\phi (A_{i})\) of each alternative, the alternative \(A_{i}\) with the largest \(\phi (A_{i})\) is the best.

4.2 Illustrative Example

To illustrate a specific application of the developed decision-making method, an example adapted from Ref. [25] is given as follows:

One must select from a panel of five possible emerging technology enterprises \(A_{i}(i=1,2,...,5)\). The experts select four criteria to evaluate the five possible emerging technology enterprises:

-

1.

\(c_{1}\) is technical advancement.

-

2.

\(c_{2}\) is potential market and market risk.

-

3.

\(c_{3}\) is industrialization infrastructure, human resources, and financial conditions.

-

4.

\(c_{4}\) is employment creation and the development of science and technology.

The five emerging enterprises \(A_{i}(i=1,2,...,5)\) are to be evaluated using Pythagorean fuzzy information by the decision maker under the four criteria (whose weights are completely unknown), as presented in Matrix R.

Next, we use the proposed decision-making method to solve this decision-making problem.

- Step 1::

-

Utilize Eq. (10) to compute the overall entropy of each criterion, we can obtain

$$\begin{aligned} E_{1}=0.5758, E_{2}=0.7839, E_{3}=0.9777, E_{4}=0.8912 .\end{aligned}$$Next, employ Eq. (13) to calculate the weight of each criterion as follows:

$$\begin{aligned} w_{1}= & {} \frac{1-E_{1}}{4-\sum \nolimits _{j=1}^{4}E_j}=\frac{0.4242}{0.7714}=0.5499,\\ w_{2}= & {} \frac{1-E_{2}}{4-\sum \nolimits _{j=1}^{4}E_j}=\frac{0.2161}{0.7714}=0.2801,\\ w_{3}= & {} \frac{1-E_{3}}{4-\sum \nolimits _{j=1}^{4}E_j}=\frac{0.0223}{0.7714}=0.0289,\\ w_{4}= & {} \frac{1-E_{4}}{4-\sum \nolimits _{j=1}^{4}E_j}=\frac{0.1088}{0.7714}=0.1410. \end{aligned}$$That is, \(W=\{0.5499,0.2801,0.0289,0.1410\}\).

- Step 2::

-

Employ Eq. (2) and Theorem 1 to respectively determine the Pythagorean fuzzy PIS \(\gamma ^{+}\) and NIS \(\gamma ^{-}\) of each alternative as follows:

$$\begin{aligned} \gamma ^{+}= & {} \{P(1.0,0.0),P(0.6,0.5),P(0.4,0.2),P(0.6,0.4)\},\\ \gamma ^{-}= & {} \{P(0.5,0.8),P(0.4,0.9),P(0.3,0.5),P(0.5,0.8)\}. \end{aligned}$$ - Step 3::

-

Use Eqs. (16) and (17) to compute the distances between alternatives \(A_{i} (i=1,2,\ldots ,m)\) and PIS \(\gamma ^{+}\) and between alternatives \(A_{i} (i=1,2,\ldots ,m)\) and NIS \(\gamma ^{-}\), respectively. The results of this step are shown in Table 1.

Table 1 Distance results for five alternatives - Step 4::

-

Utilize Eq. (18) to calculate the relative degree of closeness \(\phi (A_{i})\) of each alternative. The results of this step are as follows:

$$\begin{aligned} \phi (A_{1})= & {} \frac{0.2708}{0.2708+0.4341}=0.3842,\\ \phi (A_{2})= & {} \frac{0.4603}{0.4603+0.5072}=0.4758,\\ \phi (A_{3})= & {} \frac{0.1905}{0.1905+0.5457}=0.2588,\\ \phi (A_{4})= & {} \frac{0.3064}{0.3064+0.3349}=0.4778,\\ \phi (A_{5})= & {} \frac{0.5873}{0.5873+0.0812}=0.8785. \end{aligned}$$ - Step 5::

-

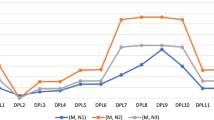

Rank alternatives \(A_{i}\) according to the relative closeness \(\phi (A_{i})\) of each alternative. Figure 4 presents this result. The alternatives are sorted as \(A_{5}\succ A_{4}\succ A_{2}\succ A_{1}\succ A_{3}\), that is, \(A_{5}\) is the optimal alternative.

5 Comparison Analyses

This section provides three comparative analyses. The first analysis compares the overall entropy with existing entropy measures of PFSs. The second analysis compares the proposed entropy weight method with other methods for determining unknown weights. The third analysis compares the developed decision-making method with other Pythagorean fuzzy decision-making methods.

5.1 Comparison with Existing Entropy Measures for PFSs

The following is a comparison of overall entropy and existing entropy measures for demonstrating the superiority of overall entropy. We compare overall entropy with 14 existing entropy measures of PFSs suggested by various researchers, such as

-

1.

Yang and Hussain’s \((E_{E}\), \(E_{PI}\), \(E_{HC}^{\gamma }(\gamma >0)\), \(E_{\min /\max })\) [17];

-

2.

Xue et al. \((E_{X})\) [18];

-

3.

Wan et al. \((E_{W})\) [19];

-

4.

Peng et al. \((E_{i}(i=1,2,...,8))\) [20].

Because Yang and Hussain [17] proved by comparisons that \(E_{PI}\) and \(E_{HC}^{2}\) do not fulfill the aforementioned Sequence (4) and \(E_{X}\) cannot distinguish between different PFSs in some cases, we only compare the overall entropy E with \(E_{E}, E_{HC}^{1}, E_{\min /\max }, E_{W}\), and \(E_{i}(i=1,2,...,8)\) based on the work of Yang and Hussain [17].

First, we give an example to compare the overall entropy E with \(E_{E}, E_{HC}^{1}, E_{\min /\max }, E_{W}\), and \(E_{i}(i=1,2,...,8)\).

Example 1

Assume a PFS P in \(X=\{5,6,7,8\}\):

The results calculated by different entropy measures are shown in Table 2: \(a=0.5285362\) and \(b=0.5285357\). From Table 2, the following results can be obtained:

From the ranking, it can be seen that E, \(E_{E}\), and \(E_{W}\) satisfy Sequence (4), whereas \(E_{HC}^{1}\), \(E_{\min /\max }\), and \(E_{i}(i=1,2,...,8)\) do not. Thus, the performance of \(E, E_{E}\), and \(E_{W}\) is better than that of \(E_{HC}^{1}, E_{\min /\max }\) and \(E_{i}(i=1,2,...,8)\).

Second, we provide another example to further compare E with \(E_{E}\) and \(E_{W}\).

Example 2

Assume a PFS P in \(X=\{3,4,5,6,7\}\):

The results calculated using the three entropy measures are shown in Table 3, and the following ranking can be obtained from Table 3:

This ranking indicates that E satisfies Sequence (4), whereas \(E_{E}\) and \(E_{W}\) do not. Thus, the performance of E is superior to that of \(E_{E}\) and \(E_{W}\).

A comparative analysis of Examples 1 and 2 proves that overall entropy outperforms existing entropy measures. Therefore, overall entropy E is excellent.

5.2 Comparison with Other Methods for Determining Unknown Weights

In the following, we compare the entropy weight method based on overall entropy with Xu’s three methods [26] and grey relational analysis method [27].

(I) Comparison with Xu’s three methods of determining unknown weights

In 2004, Xu [26] proposed the following three methods for determining unknown weights:

-

1.

\(w_{1}=\frac{1-\alpha }{n}+\alpha \), \(w_{i}=\frac{1-\alpha }{n}\), \(i\ne 1\), and \(\alpha \in [0,1]\);

-

2.

\(w_{i}=\frac{1-\alpha }{n}\), \(i\ne n\), \(w_{n}=\frac{1-\alpha }{n}+\alpha \), and \(\alpha \in [0,1]\);

-

3.

\(w_{1}=\frac{1-(\alpha +\beta )}{n}+\alpha \), \(w_{i}=\frac{1-(\alpha +\beta )}{n}\), \(i=2,...,n-1\), \(w_{n}=\frac{1-(\alpha +\beta )}{n}+\beta \), such that \(\alpha ,\beta \in [0,1]\) and \(\alpha +\beta \le 1\).

First, we use Xu’s three methods to solve the decision-making problem in Sect. 4.2. Thus, \(n=4\), if \(\alpha =0.2\) and \(\beta =0.2\), the following weight vectors can be obtained:

Second, Sect. 4.2 reveals that the weight vector obtained by the proposed entropy weight method is \(W=\{0.5499,0.2801,0.0289,0.1410\}\).

Finally, by comparing the weight vectors \(W_{1}, W_{2}, W_{3}\), and W, we find that most of the single weight values in \(W_{1}, W_{2}\), and \(W_{3}\) are the same, that is to say, the weight information generated by Xu’s three methods has a low degree of discrimination. However, the individual weights in W are highly distinct. Therefore, compared with Xu’s three methods, the proposed entropy weight method based on overall entropy better distinguishes the weights of criteria.

(II) Comparison with grey relational analysis method

In 2017, Peng and Dai [27] gave a grey relational analysis method for determining criterion weights:

where

We utilize the aforementioned grey relational analysis method to solve the decision-making method in Sect. 4.2.

By Eq. (22), Matrix S can be obtained:

From Eq. (21), we have

Then the weight information of the four criteria obtained from Eq. (19) is as follows:

that is, \({\hat{W}}=\{0.2537,0.2618,0.2493,0.2352\}\).

For a typical decision-making problem with few sample data, extensive calculation is required to determine weights through grey relational analysis method; however, grey relational analysis method is inconvenient and may lead to the loss of decision-making information. Compared with this method, the entropy weight method based on overall entropy is more convenient.

If one compares \({\hat{W}}\) with the weight vector W obtained by entropy weight method, one finds that the difference among the four weight values in \({\hat{W}}\) is very small, that is, the weight information generated by grey relational analysis has a low degree of discrimination. However, the individual weights in W are distinct. Therefore, compared with grey relational analysis, at least in this decision-making problem, the entropy weight method based on overall entropy has more discriminatory power for determining the weight of each criterion.

From the two aforementioned comparative analyses, we know that Xu’s three methods and the grey relational analysis method have very low discrimination for the weight of each criterion. The weight information generated is thus ineffective. Therefore, if any of Xu’s three methods or the grey relational analysis method is used to replace the entropy weight method in the developed decision-making method in Sect. 4.1 to solve the decision-making problem, the final decision-making results may have certain errors.

5.3 Comparison with Other Pythagorean Fuzzy Decision-Making Methods

Because we compare the proposed overall entropy with the existing entropy measures of PFSs in Sect. 5.1, we do not compare with entropy-based decision methods here, but choose to compare with operator-based decision-making methods and TOPSIS method based on determining weight.

In 2016, Ma and Xu [28] presented Pythagorean fuzzy weighted geometric/averaging (PFWG/PFWA) operators and symmetric Pythagorean fuzzy weighted geometric/averaging (SPFWG/SPFWA) operators, as follows:

Consider the problem of evaluating four hospitals in a region as follows:

-

1.

\(A_{1}\): Class B hospital of Grade II;

-

2.

\(A_{2}\): Class B hospital of Grade III;

-

3.

\(A_{3}\): Class A hospital of Grade II;

-

4.

\(A_{4}\): Class A hospital of Grade III.

The problem has four evaluation criteria, and the weight of the criteria is \(W_{4}=(0.15,0.25,0.25,0.35)\).

-

1.

\(c_{1}\) is hospital quality.

-

2.

\(c_{2}\) is management level.

-

3.

\(c_{3}\) is technical level.

-

4.

\(c_{4}\) is hospital scale and medical equipment.

Next, experts are invited to evaluate the comprehensive strength of the four hospitals. The four hospitals \(A_{i}\)\((i=1,2,3,4)\) were evaluated by experts using Pythagorean fuzzy information, and the results are presented as Matrix B.

The decision-making method presented in Sect. 4.1 is used to solve this decision-making problem, the weight information obtained is \({\tilde{W}}=(0.2134,0.1570, 0.2973,0.3322)\), and other results are listed in Table 4.

The weight vector \(W_{4}\) is used in Steps 2–5 from Sect. 4.1 to solve this decision-making problem, and the results are presented in Table 5.

The four operators of Eqs. (23)–(26) are used to solve this decision-making problem respectively, and the results are listed in Table 6.

The rankings based on the results presented in Tables 4, 5 and 6 as listed in Table 7.

It is obvious from Table 7 that the sorting results obtained by the \(W_{4}\)-based method, PFWG operator, SPFWG operator and SPFWA operator are all \(A_{4}\succ A_{3}\succ A_{2}\succ A_{1}\). According to the actual grades of the four hospitals, \(A_{4}\) is a Class A hospital of Grade III, and \(A_{1}\) is a Class B hospital of Grade II, these are consistent with the ranking results. However, because \(A_{2}\) is a Class B hospital of Grade III and \(A_{3}\) is a Class A hospital of Grade II, the ranking result \(A_{3}\succ A_{2}\) is inconsistent with the actual hospital grades. The ranking results \(A_{4}\succ A_{2}\succ A_{3}\succ A_{1}\) of our method and the PFWA operator are consistent with the actual hospital grades. Our method determines the weight information of each criterion according to actual decision-making information, which can effectively reflect the comprehensive strength of each hospital. Therefore, the proposed decision-making method is effective and practical.

6 Conclusions

Some existing formulas for entropy of PFSs do not satisfy Sequence (4); therefore, they have poor performance to some extent. Decision-making methods based on these entropy formulas are thus also imperfect to some extent. Therefore, this paper proposes a definition of fuzzy entropy of PFSs that is based on the relationship between the fuzziness included in the PFS and the distance from a point to a line on a projection plane. Using fuzzy entropy and the degree of hesitancy, the overall entropy of PFSs can be obtained. On this basis, we developed the new Pythagorean fuzzy decision-making method presented herein.

This paper provides three comparative analyses illustrating the significance of the method, which are summarized as follows:

-

1.

To explain the excellent performance of the proposed overall entropy, we compare it with existing entropy measures for PFSs. The proposed overall entropy satisfies Sequence (4) in some cases in which other entropy measures do not. Therefore, the performance of overall entropy is excellent.

-

2.

To prove the reliability of the proposed entropy weight method, the proposed entropy weight method is compared with Xu’s three methods and grey relational analysis method. Through two comparative analyses, the convenience and effectiveness of the entropy weight method based on overall entropy are illustrated.

-

3.

To evaluate the methodological effectiveness, the developed decision-making method is compared with operator-based decision methods and TOPSIS method based on determining weight. The feasibility and practicality of decision-making method based on the proposed entropy weight method are proved by the consistency between the ranking results and the actual hospital grades.

In summary, relative to other approaches, the proposed overall entropy is superior. This entropy weight method based on overall entropy has the advantages of the ability to compute the unknown weight of each criterion more conveniently and distinguish the weights more effectively. Pythagorean fuzzy decision-making method based on the proposed entropy weight method determines the weight information of each criterion according to actual decision-making information, it can thus more fully reflect the importance of each criterion and is more conducive to obtaining reasonable decision-making results.

In future research, entropy weight method based on overall entropy can be combined with other approaches such as TODIM, VIKOR, and ANP to construct more useful decision-making methods for Pythagorean fuzzy environments. In addition, overall entropy is applied to the solution of unknown weights in this paper, we hope that the proposed overall entropy can be widely applied to other aspects and further increase the research on entropy measures.

Data Availability Statement

All data are included in the manuscript.

References

Chu, A.T.W., Kalaba, R.E., Spingarn, K.: A comparison of two methods for determining the weights of belonging to fuzzy sets. J. Optim. Theory Appl. 27, 531–538 (1979)

Saaty, T.L.: The Analytic Hierarchy Process. McGraw hill, New York (1980)

Fan, Z.P.: Complicated multiple attribute decision making: theory and applications. Ph.D. Dissertation, Northeastern university, Shenyang, China (1996)

Choo, E.U., Wedley, W.C.: Optimal criterion weights in repetitive multicriteria decision making. J. Oper. Res. Soc. 36, 983–992 (1985)

Hwang, C.L., Lin, M.J.: Group Decision Making Under Multiple Criteria: Methods and Applications. Springer, Berlin (1987)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–353 (1965)

De Luca, A., Termini, S.: A definition of non-probabilistic entropy in the setting of fuzzy set theory. Inf. Control 20, 301–312 (1972)

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20, 87–96 (1986)

Burillo, P., Bustince, H.: Entropy on intuitionistic fuzzy sets and on interval-valued fuzzy sets. Fuzzy Sets Syst. 78, 305–316 (1996)

Szmidt, E., Kacprzyk, J.: Entropy for intuitionistic fuzzy sets. Fuzzy Sets Syst. 118, 467–477 (2001)

Zhu, Y.J., Li, D.F.: A new definition and formula of entropy for intuitionistic fuzzy sets. J. Intell. Fuzzy Syst. 30, 3057–3066 (2016)

Li, J.Q., Deng, G.N., Li, H.X., Zeng, W.Y.: The relationship between similarity measure and entropy of intuitionistic fuzzy sets. Inf. Sci. 188, 314–321 (2012)

Xia, M.M., Xu, Z.S.: Entropy/cross entropy-based group decision making under intuitionistic fuzzy environment. Inf. Fusion 13, 31–47 (2012)

Hung, W.L., Yang, M.S.: Fuzzy entropy on intuitionistic fuzzy sets. Int. J. Intell. Syst. 21, 443–451 (2006)

Yager, R.R.: Pythagorean fuzzy subsets. In: Proceeding of The Joint IFSA World Congress and NAFIPS Annual Meeting, Edmonton, Canada, , pp. 57–61 (2013)

Yager, R.R.: Pythagorean membership grades in multicriteria decision making. IEEE Trans. Fuzzy Syst. 22, 958–965 (2014)

Yang, M.S., Hussain, Z.: Fuzzy Entropy for Pythagorean Fuzzy Sets with Application to Multicriterion Decision Making. Complexity. (2018). https://doi.org/10.1155/2018/2832839

Xue, W.T., Xu, Z.S., Zhang, X.L., Tian, X.L.: Pythagorean fuzzy LINMAP method based on the entropy theory for railway project investment decision making. Int. J. Intell. Syst. 33, 93–125 (2018)

Wan, S.P., Li, S.Q., Dong, J.Y.: A three-phase method for Pythagorean fuzzy multi-attribute group decision making and application to haze management. Comput. Ind. Eng. 123, 348–363 (2018)

Peng, X.D., Yuan, H.Y., Yang, Y.: Pythagorean fuzzy information measures and their applications. Int. J. Intell. Syst. 32, 991–1029 (2017)

Zhang, X.L., Xu, Z.S.: Extension of TOPSIS to multiple criteria decision making with Pythagorean fuzzy sets. Int. J. Intell. Syst. 29, 1061–1078 (2014)

Peng, X.D., Yang, Y.: Some results for Pythagorean fuzzy sets. Int. J. Intell. Syst. 30, 1133–1160 (2015)

Zeleny, M.: Multiple Criteria Decision Making, pp. 111–117. McGraw-Hill, New York (1982)

Hwang, C.L., Yoon, K.S.: Multiple Attribute Decision Making: Methods and Applications. Springer, Berlin,Berlin, (1981)

Wei, G.W., Lu, M.: Pythagorean fuzzy Maclaurin symmetric mean operators in multiple attribute decision making. Int. J. Intell. Syst. 33, 1043–1070 (2018)

Xu, Z.S.: Uncertain Multiple Attibute Decision Making: Methods and Applications, pp. 6–7. Springer, Berlin (2015)

Peng, X.D., Dai, J.G.: Approaches to Pythagorean fuzzy stochastic multi-criteria decision making based on prospect theory and regret theory with new distance measure and score function. Int. J. Intell. Syst. 00, 1–28 (2017)

Ma, Z.M., Xu, Z.S.: Symmetric Pythagorean fuzzy weighted geometric/averaging operators and their application in multicriteria decision-making problems. Int. J. Intell. Syst. 00, 1–22 (2016)

Acknowledgements

The authors are very grateful to the editor in chief and anonymous referees for their insightful and constructive comments and suggestions, which have been helpful in improving the paper. This research is supported by the Natural Science Foundation of Anhui Province of China (No.1908085MA07).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

About this article

Cite this article

Xu, TT., Zhang, H. & Li, BQ. Pythagorean Fuzzy Entropy and Its Application in Multiple-Criteria Decision-Making. Int. J. Fuzzy Syst. 22, 1552–1564 (2020). https://doi.org/10.1007/s40815-020-00877-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40815-020-00877-y