Abstract

Middle-school students completed a comprehension assessment. The following day, they read four, 120-word passages, two standard and two non-standard ransom-note passages with altered font sizes. Altering font sizes increased students’ reading time (i.e., reduced reading speed) by an average of 3 s and decreased students’ words correct per minute (WCPM) scores but did not reduce oral reading accuracy or increase the amount of comprehension score variance accounted for by reading accuracy, reading speed, and WCPM measures. While oral reading fluency (ORF) measures accounted for 32 and 36 % of the variance in comprehension scores, the measure of reading speed embedded within ORF measures accounted for almost all of this explained variance. The importance of reading speed and implications for those concerned that ORF measures non-functional aloud word reading accuracy are described.

When oral reading fluency (ORF) assessments are administered, students read intact passages aloud as educators score errors. Results are typically reported as words read correct per minute (WCPM). Within a response-to-intervention framework, WCPM measures are commonly used to evaluate interventions and identify students at risk or with disabilities (Hughes and Dexter 2011). Although difficulties associated with developing equivalent ORF reading passages may limit educators’ and researchers’ ability to use WCPM scores to evaluate interventions (Christ and Ardoin 2009; Poncy et al. 2005), numerous researchers have concluded that ORF measures are reliable and valid measures of students’ global reading skill development [see Marston (1989) and Reschly et al. (2009) for reviews of research and meta-analytic support for ORF measures].

Whereas traditional assessments of academic skill development yielded measures of accurate responding (e.g., percentage of words read correctly), WCPM measures speed of accurate responding (Shapiro 2011). There are several reasons why researchers and educators converted traditional accuracy measures to speed of accurate responding measures. Measuring speed of accurate responding, as opposed to accurate responding, allows for a more sensitive measure of reading skill development (Poncy et al. 2005; Shinn 1995). Additionally, evidence-based theories support a causal relationship between reading speed and reading comprehension (e.g., LaBerge and Samuels 1974; Perfetti 1985; Skinner 1998; Stanovich 1986). Because the purpose or function of most reading is comprehension, ORF assessments may yield a more valid and reliable indirect measure of functional reading skill development than other assessment procedures which only provide measures of accurate reading (Shapiro 2011; Shinn 1995).

The ORF measure WCPM is derived from measures of passage word reading accuracy (i.e., number of words read correct) and a measure of reading speed or time¹ (i.e., seconds spent reading). The accuracy/reading speed ratio (i.e., number of words read correctly / seconds spent reading) is often converted to a common denominator of 60 s to yield the measure WCPM. Alternatively, during ORF assessments, students can be given 60 s to read, which negates the need to convert the measure to a common denominator (Shapiro 2011). Regardless, researchers have developed alternative brief reading measures that also incorporate a measure of reading speed but apply different accuracy measures.

As opposed to measuring oral word reading accuracy, researchers evaluating reading comprehension rate (RCR) assess accurate responding to comprehension questions (Neddenriep et al. 2007; Skinner 1998). Both MAZE and CLOZE procedures involve removing words from passages and then scoring correctly inserted words or correctly selected words (i.e., multiple-choice selection, typically from three options with one correct word and two distractors). These measures are thought to provide a more direct measure of comprehension than ORF (Jenkins and Jewell 1993; Parker et al. 1992; Skinner et al. 2002; Tichá et al. 2009; Wayman et al. 2007), which has been characterized as a measure of word calling. Additionally, some have suggested that these measures may provide a better indication of broad reading skill development than ORF in more advanced readers (Jenkins and Jewell 1993; Skinner et al. 2002).

Rather than evaluating different accuracy measures embedded within brief reading rate measures (e.g., percent comprehension questions answered correctly), researchers have focused on what these brief reading rate measures have in common, an embedded measure of reading speed (Hale et al. 2012). Researchers have reported results from MAZE, RCR, and ORF studies demonstrating that reading speed alone is a strong predictor of measures of broad reading ability (Ciancio et al. 2015; Hale et al. 2012; Jenkins et al. 2003; Skinner et al. 2009; Williams et al. 2011). Williams et al.’s (2011) analysis of ORF scores indicated that reading speed accounted for more than 49, 44, and 47 % of Woodcock-Johnson III (WJ-III) Tests of Achievement (Woodcock et al. 2001) reading composite score variance in fourth-, fifth-, and tenth-grade students, respectively. Converting reading speed into the WCPM rate measure accounted for only 7, 2, and 4 % additional reading composite variance for the fourth-, fifth-, and tenth-grade students, respectively. Based on these findings, Williams et al. suggested that concerns over WCPM measuring non-functional accurate word calling may be unfounded because the measure of accurate aloud word reading had little impact on the amount of global reading skill development accounted for by the ORF measures. Rather, the embedded measure of reading speed within the WCPM measures can account for almost all of the variance in WJ-III Broad Reading Cluster (BRC) scores.

Using BRC scores as their criterion variable, Hale et al. (2012) conducted a similar analysis of MAZE and RCR measures with sixth- and seventh-grade students. During MAZE assessments, Hale et al. deleted ten total words, one word about every 35–45 words per 400-word passage. Students read passages aloud and selected the appropriate word from three options. Thus, their reading was disrupted with this selection task. During RCR assessments, students read intact 400-word passages aloud continuously, without disruption, and then answered ten comprehension questions. Findings with these two measures generally confirmed research with ORF measures (Williams et al. 2011); the embedded measure of reading speed accounted for much of the explained variance in BRC scores. Also, Hale et al. noted an unexpected pattern: the measure of reading speed during MAZE assessments was a stronger predictor of BRC scores (R² = 0.40 for sixth grade and 0.50 for seventh grade) than reading speed during the RCR assessment (R² = 0.36 for sixth grade and 0.22 for seventh grade). Hale et al. posited that these results suggest that measuring reading speed during disrupted reading may be a stronger predictor of broad reading ability than reading speed during typical reading conditions. This difference may be attributable to less skilled readers having fewer available cognitive resources (e.g., attention and working memory) needed to deal with disruptions during reading (LaBerge and Samuels 1974; Perfetti 1985).

Katzir et al. (2013) enhanced text disfluency by altering font size, word spacing, and line length and found that reading the disfluent text enhanced comprehension in fifth-grade students but reduced comprehension in second-grade students. Although Katzir et al. (2013) did not measure reading speed, their finding that the text alteration procedure had a detrimental effect on reading comprehension for lower-level readers but not higher-level readers provides indirect support for the hypothesis that altering fonts may slow down reading speed in weaker readers but not in stronger readers, consequently enhancing the variance in reading speed scores (Hale et al. 2012; Jenkins et al. 2003).

Limitations of Previous Research and Purpose of the Current Study

Researchers who used WJ-III scores as their criterion measure found that most of the variance in global reading skill development can be explained by the measure of reading speed embedded within brief reading rate measures (Hale et al. 2012; Skinner et al. 2009; Williams et al. 2011). These findings support both cognitive and behavioral theories suggesting that reading speed is causally related to reading comprehension and reading skill development (e.g., Breznitz 1987; LaBerge and Samuels 1974; Perfetti 1985; Skinner 1998; Stanovich 1986). Furthermore, these results have applied implications because they may alleviate concerns with ORF measures merely assessing word calling, as opposed to functional reading skills (Williams et al. 2011).

Unfortunately, the overlap between predictor and WJ-III criterion measures used by previous researchers may have confounded these results. In each of the studies evaluating the unique contribution of the reading speed measures embedded within brief reading rate measures (e.g., ORF, RCR, MAZE), researchers used either BRC or reading composite scores from the WJ-III as their criterion measures to assess global reading skill development (Ciancio et al. 2015; Hale et al. 2012; Skinner et al. 2009; Williams et al. 2011). The BRC of the WJ-III is an aggregate measure of scores from three subtests: letter-word identification, reading fluency, and passage comprehension. Two of the three subtests composing the BRC, letter-word identification and reading fluency, are measures of accurate reading and/or accurate reading speed. As ORF is a measure of accurate reading and reading speed, it is reasonable to expect a high correlation between BRC and ORF scores (Hale et al. 2012; Keenan et al. 2008; Paris et al. 2005; Williams et al. 2011). Furthermore, the third subtest composing the BRC, passage comprehension, requires examinees to provide a missing word to complete brief passages. The Gates-MacGinitie uses a different comprehension format in which examinees read passages and answer literal and inferential multiple-choice questions.

Purpose

With the current concurrent validity study, we extended previous research on the relationship between ORF measures (i.e., reading speed, reading accuracy, and WCPM) and reading skill development. Our primary purpose was to examine (a) the amount of variance in reading comprehension that is accounted for by the measure of reading speed embedded within ORF measures and (b) the incremental increases accounted for by altering the measure of reading speed to the measure WCPM. To address problems associated with predictor-criterion variable overlap, we selected the comprehension subtest of the Gates-MacGinitie Reading Test (MacGinitie et al. 2000) as our criterion variable. The Gates-MacGinitie Reading Test (GMRT) comprehension subtest does not incorporate a measure of accurate aloud reading speed; therefore, our analyses should be less influenced by the overlap between our predictor and criterion measures than previous investigations of ORF predictor variables (Ciancio et al. 2015; Williams et al. 2011). Based on the findings of Hale et al. (2012), we also sought to test the hypothesis that reading speed measures would be stronger predictors of reading skill development when passage reading was disrupted. Thus, we assessed WCPM when students read standard passages as well as experimental, ransom-note passages. The ransom-note passages contained randomized font sizes within each word to disrupt reading.

Method

Participants

Participants were 86 sixth-, seventh-, and eighth-grade students from a rural school in eastern Tennessee. The school population (n = 780) is 47 % male and 53 % female. The population is predominantly Caucasian (94 % Caucasian, 3 % African American, 3 % Hispanic). Almost half of the student body (48 %) is eligible for free or reduced lunch. The study sample was predominantly White (92 %) with more males than females (58 % male, 42 % female). Only students whose parents provided written informed consent were given the opportunity to participate.

Materials and Measures

Criterion Variable

The criterion measure used in this study was the group-administered comprehension subtest of the Gates-MacGinitie Reading Tests (GMRT) Fourth Edition (MacGinitie et al. 2000). To complete the comprehension subtest, students silently read brief passages and answered related multiple-choice questions during a 35-min period. Internal consistency values for the total test, as well as the comprehension subtest, are at or above 0.90. Because our predictor scores were raw scores, not grade equivalent scores, we also analyzed raw criterion variable scores. As the GMRT comprehension subtest contains 48 questions, raw scores could range from 0 to 48 questions correct.

Predictor Variables

Researchers have demonstrated that it is difficult to develop equivalent ORF passages when passage equivalence is based solely on readability formulas (Christ and Ardoin 2009; Poncy et al. 2005). To address the difficulty equating passages, we selected four passages developed and evaluated by Forbes et al. (2015). Forbes et al. developed their passages by first selecting six, 400-word passages from the Timed Reading series (Spargo 1989). Next, Forbes et al. reduced each passage to 120 words and then modified passages (e.g., changed words, added or subtracted words, modified sentences) until each passage’s Flesch-Kincaid readability score fell between the seventh- and eighth-grade reading levels. During their study, Forbes et al. employed a control group (23 seventh- and eighth-grade students from the same geographic area as our sample) who read these six passages. Average WCPM scores for four of the six passages ranged between 141 and 148. We used these four passages to collect ORF data for our current investigation.

Our four standard passages were identical to the passages used by Forbes et al. (2015). Each standard passage was printed using Times New Roman 12-point font. To construct the experimental ransom-note passages, we altered each of the four standard passages by manipulating fonts so that the font sizes of each subsequent letter differed with sizes ranging from Times New Roman 10-point font to Times New Roman 16-point font. A phrase in the ransom note style would look like this—A phrase in the ransom note passage would look like this.
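The font manipulation described above can be illustrated with a minimal sketch. It is not the procedure the authors used to typeset their passages; it assumes whole-number point sizes from 10 to 16, assumes that "differed" means no two adjacent letters share a size, and leaves whitespace unsized. The function name `ransom_note_sizes` and the `seed` parameter are hypothetical.

```python
import random

MIN_PT, MAX_PT = 10, 16  # font-size range reported in the text


def ransom_note_sizes(text, seed=None):
    """Pair each character with a point size so adjacent letters never match.

    Assumption: sizes are whole points; whitespace gets no size (None).
    """
    rng = random.Random(seed)
    sizes = list(range(MIN_PT, MAX_PT + 1))
    result = []
    previous = None
    for ch in text:
        if ch.isspace():
            result.append((ch, None))
            continue
        # Draw from every size except the one used for the previous letter.
        size = rng.choice([s for s in sizes if s != previous])
        result.append((ch, size))
        previous = size
    return result


styled = ransom_note_sizes("A phrase in the ransom note style", seed=1)
```

Each `(character, size)` pair could then be handed to whatever typesetting tool is available; that rendering step is outside this sketch.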

Each student read the four different passages, two standard and two ransom-note passages, aloud. We counterbalanced passage types so that for each passage, approximately half of the students received a standard version of the passage and the other half received a ransom-note version. As students read, examiners recorded the number of words read correctly as well as the time spent reading in seconds. For each passage, researchers collected reading accuracy (number of words read correctly), reading speed or time (seconds required to read the passage), and reading rate (WCPM) data. WCPM was calculated by multiplying the number of words read correctly by 60 s and dividing by the number of seconds required to read each passage. For each student and variable (accuracy, speed, and WCPM), average performance on their two standard passages and average performance on their two ransom-note passages were analyzed.
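The WCPM computation described above is simple enough to sketch directly. The student data below are hypothetical, and averaging the two per-passage WCPM scores (rather than computing WCPM from averaged counts and times) is an assumption, since the text does not say which order was used.

```python
def wcpm(words_correct, seconds):
    """Words correct per minute: words read correctly * 60 / seconds to read."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return words_correct * 60 / seconds


def mean_wcpm(passages):
    """Average WCPM over (words_correct, seconds) pairs, one pair per passage."""
    scores = [wcpm(words, secs) for words, secs in passages]
    return sum(scores) / len(scores)


# Hypothetical student: (words read correctly, seconds) on two 120-word passages.
standard_passages = [(118, 50), (119, 51)]
standard_mean = mean_wcpm(standard_passages)
```

Because every passage had 120 words and accuracy was near ceiling, most of the variation in a score computed this way comes from the denominator, seconds to read.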

Procedures

Each student who returned a signed parental consent form and who assented to participate was assessed over two sessions on consecutive days. During the first session, students’ reading comprehension was assessed using the Gates-MacGinitie Reading Test (GMRT). During the second session, students were administered the four, brief oral reading passages. Only students who completed the GMRT were assessed on the second day. All measures were administered and scored by trained graduate students.

The GMRT assessment lasted approximately 40 min with 35 min allotted for completing the test. Once students were quietly seated, the examiner read the standardized instructions for the comprehension subtest, including the completion of two practice items. The examiner then answered any remaining questions and instructed the students to begin. Students who finished early were instructed to review their answers and then close their test booklets.

Brief oral reading assessments lasted approximately 5–7 min. During these sessions, students read two standard passages printed in Times New Roman 12-point font and two ransom-note passages with varied font sizes to a trained graduate student. Before beginning, examiners instructed students to read each passage aloud and to do their best reading. For each passage, the examiner used a stopwatch to record seconds needed to complete the passage. If the student paused for more than 3 s, the examiner provided the word and marked the word as an error. For each student, the four passages (two ransom-note and two standard passages) were administered in a random order.

Procedural Integrity and Inter-Scorer Agreement

All ORF sessions were recorded. In order to evaluate procedural integrity and inter-scorer agreement, a second, trained experimenter randomly selected and independently scored approximately 20 % of the recordings. Procedural integrity checks showed that all researchers delivered standardized instruction as described and administered all assessment procedures in their prescribed sequence. For WCPM, the Pearson product-moment correlation between the original scorer and independent scorer was 1.00. For errors, the correlation between the two scorers was 0.96. Both correlations are acceptable and suggest high inter-rater reliability (House et al. 1981).
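As an illustration of the agreement statistic reported above, the following sketch computes a Pearson product-moment correlation between two scorers. The error counts are hypothetical and are not the study's data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical error counts from the original and the independent scorer.
scorer_a = [2, 0, 5, 1, 3, 4]
scorer_b = [2, 0, 4, 1, 3, 4]
r = pearson_r(scorer_a, scorer_b)
```

A correlation indexes how consistently the two scorers order the recordings, so a high value does not by itself show that every pair of scores matched exactly.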

Data Analysis

Predictor variables from both standard and ransom-note passages were correlated with students’ raw GMRT passage comprehension scores. To determine if altering standard passages to ransom-note passages disrupted reading, paired-samples t tests were used to compare students’ performance on standard and ransom-note passages. To examine the incremental increases in GMRT variance explained by converting reading speed into WCPM, separate sequential regressions were conducted on standard and ransom-note passages. For all analyses, p < .05 was considered statistically significant.
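The paired-samples comparison can be sketched as follows. This is a minimal illustration with hypothetical reading times, not a reproduction of the study's analysis; it returns only the t statistic and degrees of freedom, and the p value would still have to be obtained from a t distribution.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x minus y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD with n - 1).
    standard_error = stdev(diffs) / sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical mean seconds to read for five students on each passage type.
standard_seconds = [50, 48, 55, 60, 52]
ransom_seconds = [53, 50, 59, 62, 56]
t, df = paired_t(standard_seconds, ransom_seconds)
```

A negative t here means that reading times were lower (faster) on the standard passages than on the ransom-note passages.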

Results

Before conducting analyses, researchers screened data for missing data points and outliers resulting in the exclusion of two cases from analyses due to their extreme scores. One excluded case had a standard score of 1 on the GMRT. The other case had unusually low seconds to read scores (i.e., high reading speed scores¹) and unusually low WCPM scores. Across the remaining data, GMRT scores and WCPM scores were approximately symmetrical. Reading time scores were highly skewed right and leptokurtic.

Descriptive Statistics and Comparisons of Standard and Ransom-Note Passage Reading

Mean and standard deviation data for GMRT and predictor variables are presented in Table 1. In Table 1, GMRT Normal Curve Equivalent scores are normed with a mean of 50 and a standard deviation of 21.0. Across all grade levels, GMRT scores averaged 58.7 with a standard deviation of 19.8.

To determine if altering standard passages to ransom-note passages disrupted reading, researchers conducted paired-samples t tests comparing students’ reading accuracy (words read correctly), reading time (seconds required to read passages), and WCPM on standard and ransom-note passages. For each student, mean scores across the two ransom-note and two standard passages were analyzed. Across the two passage types, differences in reading accuracy were not statistically significant, t(83) = 0.24, p = .81. Although our t tests indicated that reading time was statistically significantly lower on the standard passages than the ransom-note passages, t(83) = −4.13, p < .01, the average difference across passage types was only about 3 s (53 vs 56 s). Finally, words correct per minute scores were statistically significantly higher on standard passages than ransom-note passages, t(83) = 6.52, p < .01, with means of 142.5 and 134.2 WCPM, respectively.

Correlations

All Pearson’s product-moment correlations between each predictor variable and GMRT raw scores (see Table 2) were statistically significant at the p < .05 level. Correlations between predictor variables were all statistically significant at the p < .01 level. The correlations between seconds to read¹ and GMRT scores were negative, while reading accuracy and WCPM scores were positively correlated with GMRT scores. Correlations between reading accuracy, reading time or speed, WCPM, and GMRT scores were almost identical between standard and ransom-note passages. Across both passage types, correlations of reading speed and WCPM with GMRT scores were similar with rs ranging from 0.56 to 0.60, while the accuracy measure (i.e., words read correctly) yielded smaller correlations with GMRT scores (i.e., rs of 0.29 and 0.28 for ransom-note and standard passages, respectively).

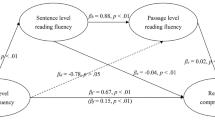

Sequential Regressions

The results of two sequential regression analyses, the first examining standard passages and the second examining ransom-note passages, are displayed in Table 3. For both regressions, GMRT raw scores served as the criterion variable, reading speed or time (i.e., seconds to read) was entered first (i.e., model 1), and then WCPM was added (i.e., model 2). Therefore, in model 2, we analyzed the incremental increase in GMRT score variance accounted for by altering our reading speed measure to the reading rate measure, WCPM, which incorporated the accuracy measure words read correctly. Both standard and ransom-note passages showed similar patterns in predicting GMRT scores. Across both passage types, seconds to read (reading speed or time¹) accounted for a statistically significant amount of comprehension score variance (i.e., 36 and 32 % for standard and ransom-note passages, respectively). Adding WCPM into the regression equation only accounted for an additional 2 % of variance on standard passages and 1 % of variance on ransom-note passages, neither of which reached a level of statistical significance.
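The logic of the two-step analysis can be sketched with the standard formulas for R² with one and two predictors, expressed through pairwise correlations. The data below are hypothetical, and the sketch computes only the variance explained at each step and the increment; the significance test for the increment (an F test on the change in R²) is not shown.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r2_one_predictor(y, x1):
    """R^2 for a regression of y on x1 alone (model 1)."""
    return pearson_r(y, x1) ** 2


def r2_two_predictors(y, x1, x2):
    """R^2 for a regression of y on x1 and x2 together (model 2)."""
    ry1, ry2, r12 = pearson_r(y, x1), pearson_r(y, x2), pearson_r(x1, x2)
    if abs(r12) >= 1:
        raise ValueError("predictors are perfectly collinear")
    return (ry1**2 + ry2**2 - 2 * ry1 * ry2 * r12) / (1 - r12**2)


# Hypothetical scores for eight students (not the study's data).
seconds = [48, 50, 53, 55, 58, 62, 66, 71]
wcpm = [147, 142, 133, 130, 121, 114, 106, 98]
gmrt = [40, 36, 38, 30, 31, 25, 27, 20]

r2_model1 = r2_one_predictor(gmrt, seconds)         # seconds to read entered first
r2_model2 = r2_two_predictors(gmrt, seconds, wcpm)  # WCPM added second
delta_r2 = r2_model2 - r2_model1                    # incremental variance explained
```

Because seconds to read and WCPM are so highly correlated with each other, the second predictor has little unique variance left to contribute, which is the pattern the regressions above describe.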

Discussion

We sought to replicate and extend research on the relationship between WCPM, reading speed, and general reading skill development. The WJ-III BRC, used by previous researchers as a standardized reading measure (e.g., Ciancio et al. 2015; Hale et al. 2012; Williams et al. 2011), includes measures based on oral reading accuracy and speed; consequently, a positive correlation with measures of ORF is to be expected (Keenan et al. 2008; Paris et al. 2005). In the current study, we used the GMRT as an indicator of reading skill. Consequently, these results provide further support to previous researchers who have demonstrated that the measure of reading speed embedded within brief reading rate measures (i.e., MAZE, RCR, ORF) can account for most of reading skill variance explained by the brief reading rate measures (Ciancio et al. 2015; Hale et al. 2012; Skinner et al. 2009; Williams et al. 2011).

For both standard and ransom-note passages, words read correctly was a weaker explanatory variable of GMRT scores than WCPM scores. These results are consistent with others who have asserted that converting accuracy measures (e.g., number or percent of words read correctly) to a rate measure can enhance the amount of validity accounted for by brief reading measures (Shapiro 2011; Shinn 1995). Because oral reading speed was not embedded within our standardized criterion measure (GMRT), our current analysis provides clear support for psychometric and theoretical researchers who found evidence of a strong and perhaps causal relationship between reading speed and reading comprehension (e.g., Breznitz 1987; LaBerge and Samuels 1974; Marston 1989; Perfetti 1985; Reschly et al. 2009).

Our current results have applied implications associated with the specific brief reading rate measure, WCPM. Educators and researchers have expressed concerns with WCPM being a measure of non-functional oral reading accuracy (Chall 1983; Potter and Wamre 1990; Samuels 2007; Skinner et al. 2002). Our sequential analyses suggest that the embedded measure of reading speed, by itself, could account for almost all of the explained standardized reading score variance. Consequently, our findings provide support for others who have suggested that concerns over ORF assessing non-functional word calling accuracy may be unfounded as the measure of reading speed embedded within the WCPM measures can account for almost all of the measures’ concurrent validity (Williams et al. 2011).

Finally, we investigated the effects of disrupted reading by administering matched standard and ransom-note (varied font sizes) passages. Students read statistically significantly slower on the ransom-note passages, but only by an average of three seconds. Based on the findings of Hale et al. (2012), we hypothesized that reading speed during disrupted reading may account for more comprehension score variance than reading speed during a typical reading condition. We did not find evidence to support the hypothesis that disrupting passage reading may strengthen the predictive utility of reading speed scores.

Limitations and Future Research

There are several limitations associated with the current study that should be addressed by future researchers. The subject pool was small and relatively homogeneous. Future researchers should increase both the sample size and diversity of the subject pool. While our population’s mean GMRT standard scores were in the average range, 28 of the 35 students did not complete the GMRT test within the allotted time. This indicates that our population was appropriate as ORF measures are often used to evaluate reading growth in struggling and developing readers (Hughes and Dexter 2011; Shapiro 2011). However, not permitting students sufficient time to complete the assessment introduced the measure of reading speed that we were trying to reduce in our criterion variable. Future researchers may find that they can address this problem by altering GMRT administration procedures by eliminating or lengthening the 35-min time limit to obtain a comprehension measure that does not include a rate measure.

Although we demonstrated that our passage alteration procedures (i.e., changed to contain font sizes varying from 10-point to 16-point font, all Times New Roman) reduced reading speed and words correct per minute, average reductions were less than 6 %. It is possible that the predictive validity of reading speed is a function of the degree of disruption, with improvements only seen after a certain threshold of disruption. Before concluding that disrupting reading does not enhance the predictive utility of ORF measures, future researchers should conduct similar studies using procedures that enhance the degree of disruption. For example, researchers could enhance font size differences, line spacing, and line length (see Katzir et al. 2013). Also, research could cause larger disruptions by varying font type, shade, and color.
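The size of these reductions can be checked against the means reported in the Results. The sketch below uses the reported WCPM means (142.5 and 134.2) and the rounded reading-time means (53 and 56 s); because the time values are rounded, the second percentage is approximate. The function name `percent_change` is hypothetical.

```python
def percent_change(baseline, altered):
    """Percentage change from the baseline (standard) to the altered condition."""
    return (altered - baseline) / baseline * 100


# Means taken from the Results section (standard vs ransom-note passages).
wcpm_change = percent_change(142.5, 134.2)  # about -5.8 %
time_change = percent_change(53, 56)        # about +5.7 %, from rounded means
```

Both changes are a little under 6 % in magnitude, consistent with the figure given above.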

With our font alteration procedures, we slowed reading speed without reducing oral reading accuracy, but we did not measure comprehension on individual passages. If future researchers measure passage comprehension, they may be able to manipulate and study within-subject relationships between passage reading speed and comprehension for that passage. Such studies may be theoretically important because across-subject covariates of reading speed and comprehension development (e.g., vocabulary development, intelligence, processing speed) would be controlled by within-subject comparisons (Paris et al. 2005), which may enhance researchers’ ability to investigate the relationship between reading speed and comprehension.

In the current study, we averaged passage reading scores across passages. As previous researchers have shown wide variation in ORF across passages that were designed to be equivalent (Christ and Ardoin 2009; Poncy et al. 2005), future researchers may want to conduct similar studies analyzing data from each passage separately (see Ciancio et al. 2015 for an example). Because applying ransom-note procedures may be more disruptive (e.g., cause a large increase in time required to read) when reading more difficult passages (e.g., passages at the high instructional or low frustrational level), researchers conducting similar studies should consider manipulating passage difficulty.

Conclusions

The current results support previous researchers who demonstrated that ORF measures are robust and that reading speed is related to and a significant predictor of comprehension skill development (Marston 1989; Reschly et al. 2009). We extended research on the measure of reading speed embedded within brief reading rate measures. Specifically, we found that almost all the GMRT reading comprehension score variance accounted for by WCPM could be explained by the measure of reading speed embedded within the WCPM measure. These results support earlier researchers who conducted studies with criterion variables (i.e., WJ-III scores) that also measured oral reading speed and accuracy (Ciancio et al. 2015; Hale et al. 2012; Williams et al. 2011).

Researchers attempting to enhance brief reading rate measures have focused on applying different measures of accuracy, including oral word reading accuracy (ORF), comprehension answer accuracy (RCR), and word selection accuracy (MAZE). We focused on applying a procedure designed to influence the measure of reading speed embedded within each of these brief reading rate measures. This is the first investigation of ORF measures where investigators experimentally manipulated reading speed. Although we did not find evidence to support the hypothesis that disrupted reading enhanced the validity of the reading speed measure (Hale et al. 2012), because reading speed appears to account for so much reading skill variance, researchers may want to continue to investigate procedures designed to enhance the validity of reading rate measures by assessing the impact of variables designed to influence reading speed.

Notes

Both reading speed and reading time can be measured using seconds required to read a complete passage. Greater reading speed is associated with lower seconds to read. Increased reading time is associated with higher seconds to read.

References

Breznitz, Z. (1987). Increasing first graders’ reading accuracy and comprehension by accelerating their reading rates. Journal of Educational Psychology, 79, 236–242. doi:10.1037//0022-0663.79.3.236.

Chall, J. S. (1983). Stages of reading development. New York: McGraw-Hill. doi:10.1017/s0142716400005166.

Ciancio, D. J., Thompson, K., Schall, M., Skinner, C., & Foorman, B. A. (2015). Accurate reading comprehension rate as an indicator of broad reading in students in first, second, and third grades. Journal of School Psychology, 53, 393–407. doi:10.1016/j.jsp.2015.07.003.

Christ, T. J., & Ardoin, S. P. (2009). Curriculum-based measurement of oral reading: passage equivalence and probe-set development. Journal of School Psychology, 47, 55–75. doi:10.1016/j.jsp.2008.09.004.

Forbes, B. E., Skinner, C. H., Maurer, K. M., Taylor, E. P., Schall, M., Ciancio, D., & Conley, M. (2015). Prompting faster reading during fluency assessments: the impact of skill level and comprehension measures on changes in performance. Research in the Schools, 22, 27–43.

Hale, A. D., Skinner, C. H., Wilhoit, B., Ciancio, D., & Morrow, J. A. (2012). Variance in broad reading accounted for by measures of reading speed embedded within Maze and comprehension rate measures. Journal of Psychoeducational Assessment, 30, 539–554. doi:10.1177/0734282912440787.

House, A. E., House, B. J., & Campbell, M. B. (1981). Measures of interobserver agreement: calculation formulas and distribution effects. Journal of Behavioral Assessment, 3, 37–57. doi:10.1007/bf01321350.

Hughes, C. A., & Dexter, D. D. (2011). Response to intervention: a research-based summary. Theory into Practice, 50, 4–11. doi:10.1080/00405841.2011.534909.

Jenkins, J. R., Fuchs, L. S., van den Broek, P., Espin, C., & Deno, S. L. (2003). Sources of individual differences in reading comprehension and reading fluency. Journal of Educational Psychology, 95, 719–729. doi:10.1037/0022-0663.95.4.719.

Jenkins, J. R., & Jewell, M. (1993). Examining the validity of two measures for formative teaching: reading aloud and maze. Exceptional Children, 59, 421–432.

Katzir, T., Hershko, S., & Halamish, V. (2013). The effect of font size on reading comprehension on second and fifth grade children: bigger is not always better. PLoS One, 8(9), e74061.

Keenan, J. M., Betjemann, R. S., & Olson, R. K. (2008). Reading comprehension tests vary in the skills they assess: differential dependence on decoding and oral comprehension. Scientific Studies of Reading, 12, 281–300. doi:10.1080/10888430802132279.

LaBerge, D., & Samuels, S. J. (1974). Toward a theory of automatic processing in reading. Cognitive Psychology, 6, 293–323. doi:10.1016/0010-0285(74)90015-2.

MacGinitie, W. H., MacGinitie, R. K., Maria, K., Dreyer, L. G., & Hughes, K. E. (2000). Gates-MacGinitie Reading Tests (4th ed.). Itasca, IL: Riverside Publishing.

Marston, D. B. (1989). A curriculum-based measurement approach to assessing academic performance: what it is and why we do it. In M. R. Shinn (Ed.), Curriculum-based measurement: assessing special children (pp. 18–78). New York: Guilford.

Neddenriep, C., Hale, A., Skinner, C. H., Hawkins, R. O., & Winn, B. D. (2007). A preliminary investigation of the concurrent validity of reading comprehension rate: a direct dynamic measure of reading comprehension. Psychology in the Schools, 44, 373–388. doi:10.1002/pits.20228.

Paris, S. G., Carpenter, R. D., Paris, A. H., & Hamilton, E. E. (2005). Spurious and genuine correlates of children’s reading comprehension. In S. G. Paris & S. A. Stahl (Eds.), Children’s reading comprehension and assessment (pp. 131–160). Mahwah, NJ: Erlbaum.

Parker, R., Hasbrouck, J. E., & Tindal, G. (1992). The Maze as a classroom-based reading measure: construction methods, reliability, and validity. Journal of Special Education, 26, 195–218. doi:10.1177/002246699202600205.

Perfetti, C. A. (1985). Reading ability. New York: Oxford University Press. doi:10.1017/s0033291700005304.

Poncy, B. C., Skinner, C. H., & Axtell, P. K. (2005). An investigation of the reliability and standard error of measurement of words read correctly per minute. Journal of Psychoeducational Assessment, 23, 326–338. doi:10.1177/073428290502300403.

Potter, M. L., & Wamre, H. M. (1990). Curriculum-based measurement and developmental reading models: opportunities for cross-validation. Exceptional Children, 57, 16–25.

Reschly, A. L., Busch, T. W., Betts, J., Deno, S. L., & Long, J. D. (2009). Curriculum-based measurement oral reading fluency as an indicator of reading achievement: a meta-analysis of the correlational evidence. Journal of School Psychology, 47, 427–469. doi:10.1016/j.jsp.2009.07.001.

Samuels, S. J. (2007). The DIBELS tests: is speed of barking at print what we mean by reading fluency? Reading Research Quarterly, 42, 563–566.

Shapiro, E. S. (2011). Academic skills problems: direct assessment and intervention (4th ed.). New York: Guilford Press.

Shinn, M. R. (1995). Curriculum-based measurement and its use in a problem solving model. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology-III (pp. 547–567). Washington DC: National Association of School Psychologists.

Skinner, C. H. (1998). Preventing academic skills deficits. In T. S. Watson & F. Gresham (Eds.), Handbook of child behavior therapy: ecological considerations in assessment, treatment, and evaluation (pp. 61–83). New York: Plenum. doi:10.1007/978-1-4615-5323-6_4.

Skinner, C. H., Neddenriep, C. E., Bradley-Klug, K. L., & Ziemann, J. M. (2002). Advances in curriculum-based measurement: alternative rate measures for assessing reading skills in pre- and advanced readers. Behavior Analyst Today, 3, 270–281.

Skinner, C. H., Williams, J. L., Morrow, J. A., Hale, A. D., Neddenriep, C., & Hawkins, R. O. (2009). The validity of a reading comprehension rate: reading speed, comprehension, and comprehension rates. Psychology in the Schools, 46, 1036–1047. doi:10.1002/pits.20442.

Spargo, E. (1989). Timed Readings (3rd ed., Book 4). Providence, RI: Jamestown Publishers.

Stanovich, K. E. (1986). Matthew effects in reading: some consequences of individual differences in the acquisition of literacy. Reading Research Quarterly, 21, 360–407. doi:10.1598/rrq.21.4.1.

Tichá, R., Espin, C. A., & Wayman, M. M. (2009). Reading progress monitoring for secondary school students: reliability, validity, and sensitivity to growth of reading-aloud and maze-selection measures. Learning Disabilities Research and Practice, 24, 132–142. doi:10.1111/j.1540-5826.2009.00287.x.

Wayman, M. M., Wallace, T., Wiley, H. I., Tichá, R., & Espin, C. A. (2007). Literature synthesis on curriculum-based measurement in reading. Journal of Special Education, 41, 85–120. doi:10.1177/00224669070410020401.

Williams, J. L., Skinner, C. H., Floyd, R. G., Hale, A. D., Neddenriep, C., & Kirk, E. (2011). Words correct per minute: the variance in standardized reading scores accounted for by reading speed. Psychology in the Schools, 48, 87–101. doi:10.1002/pits.20527.

Woodcock, R. W., McGrew, K. S., & Mather, N. (2001). Woodcock-Johnson tests of achievement—third edition. Itasca, IL: Riverside Publishing.

About this article

Cite this article

Schall, M., Skinner, C.H., Cazzell, S. et al. Extending Research on Oral Reading Fluency Measures, Reading Speed, and Comprehension. Contemp School Psychol 20, 262–269 (2016). https://doi.org/10.1007/s40688-015-0083-5

DOI: https://doi.org/10.1007/s40688-015-0083-5