Abstract

Introduction

The Core Entrustable Professional Activities for Entering Residency (Core EPAs) are clinical activities all interns should be able to perform on the first day of residency with indirect supervision. The acting (sub) internship (AI) rotation provides medical students the opportunity to be assessed on advanced Core EPAs.

Materials and Methods

All fourth-year AI students were taught Core EPA skills and performed these clinical skills under direct supervision. Formative feedback and direct observation data were provided via required workplace-based assessments (WBAs). Supervising physicians rated learner performance using the Ottawa Clinic Assessment Tool (OCAT). WBA and pre-post student self-assessment data were analyzed to assess student performance and gauge curriculum efficacy.

Results

In the 2017–2018 academic year, 167 students completed two AI rotations at our institution. By their last WBA, 91.2% of students achieved a target OCAT supervisory scale rating for both patient handoffs and calling consults. Paired sample t tests of the student pre-post surveys showed statistically significant improvement in self-efficacy on key clinical functions of the EPAs.

Discussion

This study demonstrates that the AI rotation can be structured to include a Core EPA curriculum that assesses student performance using WBAs of directly observed clinical skills.

Conclusions

Our clinical outcomes data demonstrate that the majority of fourth-year medical students are capable of performing advanced Core EPAs at a level acceptable for intern year by the conclusion of their AI rotations. The WBA data collected can also inform ad hoc and longitudinal summative Core EPA entrustment decisions.

Introduction

Incoming interns lack proficiency in a number of essential skills required for clinical practice [1]. Collectively, these deficiencies may pose a significant threat to patient safety [2]. To address the need for improved preparation for internship in the USA, the Association of American Medical Colleges (AAMC) sponsored the development of Core Entrustable Professional Activities for Entering Residency (Core EPAs) [3]. Despite publications from the Core EPA pilot project, institutions continue to struggle to identify the best structure for a Core EPA–based curriculum that facilitates the collection of direct assessment data, especially when redesigning the final year of medical school [1, 4].

While clinical clerkships provide an opportunity to teach and assess many EPAs, clerkship leaders have noted that the more advanced EPAs are not a priority for their clerkship curricula [5]. Perceived deficiencies also vary across individual EPAs. For example, a recent study demonstrated that the majority of surgical program directors felt incoming interns were unable to perform several EPAs, including the ability to give or receive a patient handover (EPA 8) and to enter and discuss orders and prescriptions (EPA 4), but were better prepared to perform other Core EPAs such as gathering a history and performing a physical examination (EPA 1) [6].

These needs assessments should drive curricular innovation to ensure teaching of the more advanced Core EPAs within the fourth year of medical school. Some authors have suggested that the Core EPAs be introduced incrementally, with more advanced EPAs learned later in the curriculum [7]. This sequencing makes the fourth year of medical school a unique opportunity for residency preparation [8], especially within the acting internship (AI) rotation. The AI should allow medical students to perform a variety of clinical tasks that encompass many EPAs; however, the rotation commonly lacks structure, and requirements vary across institutions [9,10,11]. In addition, there are limited published outcomes data on the integration of the Core EPAs into AIs, including how to perform clinical observations, compile summative assessments, and ensure entrustment of graduating students. Clinical observations are essential to facilitate ad hoc and summative entrustment decisions, which are the basis of the Core EPA project [12,13,14]. Without objective workplace-based assessment (WBA) data, evaluation of students on their AI falls to a more global, summative assessment that may lack objective data on student progress and development of key clinical behaviors.

We aimed to address these issues by adding structure and rigor to the AI rotation in our school-wide, interdepartmental AI curriculum and implementing workplace-based assessments (WBAs) to address the more advanced Core EPAs. Specifically, the objective was to ascertain the effectiveness of a Core EPA–focused AI curriculum in preparing fourth-year medical students for intern year, as assessed by direct observations using WBAs and student pre-post self-assessments. Second, we aimed to use the directly observed outcomes data from these WBAs to evaluate the ability of students to reach the desired supervisory level of performing certain clinical skills with indirect supervision.

Materials and Methods

AI Rotation Curriculum

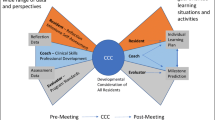

Based on perceived gaps between the skills expected of interns and those of graduating medical students [1, 5], we developed a curriculum focused on acquisition of clinical skills encompassing five designated EPAs. These included entering electronic orders (EPA 4: Enter and discuss orders and prescriptions), calling consults (EPA 6: Provide an oral presentation of a clinical encounter, and EPA 9: Collaborate as a member of an interprofessional team), handing off patients (EPA 8: Give or receive a patient handover to transition care responsibility), and providing cross-coverage (EPA 10: Recognize a patient requiring urgent or emergent care and initiate evaluation and management). The framework and detailed curriculum for the AI may be found in our previously published manuscript [15]. Briefly, a school-wide curriculum was delivered to all AI students on the first day of their AI rotation, using active learning approaches with small group exercises focused on practicing the aforementioned Core EPAs. The AI curriculum built upon the Core EPA–based curriculum and assessment of the clerkship year.

Students underwent formative assessment and received feedback in the clinical environment during their AI rotation through required, direct WBAs of two clinical behaviors encompassing three EPAs: patient handovers (EPA 8) and calling consults (EPA 6 and EPA 9). Students were required to request two WBAs for each designated Core EPA behavior per AI rotation, ideally one in the first half and one in the second half of each rotation.
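A minimal sketch of how the per-rotation request requirement could be checked, assuming a hypothetical export of one student's WBA requests. The `WBARequest` fields, the behavior labels, and the split of the 4-week rotation into weeks 1–2 and 3–4 are illustrative assumptions, not the study's actual data model.

```python
from dataclasses import dataclass

# Hypothetical request record for ONE student; field names are illustrative.
@dataclass
class WBARequest:
    rotation: int   # 1 = first AI, 2 = second AI
    behavior: str   # "handoff" or "consult"
    week: int       # week of the 4-week rotation (1-4)

BEHAVIORS = ("handoff", "consult")
REQUIRED_PER_BEHAVIOR = 2   # two WBA requests per behavior per rotation
ROTATION_WEEKS = 4          # assumed rotation length, split into two halves

def check_rotation(requests, rotation):
    """Map each behavior to (meets_count, spans_both_halves) for one rotation."""
    half = ROTATION_WEEKS // 2
    report = {}
    for behavior in BEHAVIORS:
        weeks = [r.week for r in requests
                 if r.rotation == rotation and r.behavior == behavior]
        meets_count = len(weeks) >= REQUIRED_PER_BEHAVIOR
        # The "ideal" timing: at least one request in each half of the rotation.
        spans_halves = any(w <= half for w in weeks) and any(w > half for w in weeks)
        report[behavior] = (meets_count, spans_halves)
    return report

requests = [
    WBARequest(1, "handoff", 1), WBARequest(1, "handoff", 3),
    WBARequest(1, "consult", 2), WBARequest(1, "consult", 2),
]
print(check_rotation(requests, 1))
# {'handoff': (True, True), 'consult': (True, False)}
```

Keeping the count requirement separate from the timing flag mirrors the text, where two requests are required but their spacing across the rotation is only the ideal.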

Assessment Approach

During the 2017–2018 academic year, 167 students completed two required AI rotations (one critical care and one ward-based) at our institution. Supervising physicians provided formative feedback through required direct observations and completed ad hoc WBAs for patient handovers and calling consults. We developed WBAs for each desired clinical behavior that included checklist items specific to the behavior or Core EPA observed as well as a supervisory scale described below. For patient handoffs, we used I-PASS, a handoff tool with prior validity evidence, to train students to perform handoffs, and we used its associated checklist as part of our WBA to score patient handoff observations [16]. Residents and faculty had experience using I-PASS to perform patient handoffs because it was our institution’s recommended handoff format.

In developing our consult curriculum and WBA, we used a consult observation checklist developed from a prior publication [17]. Although this checklist has not been validated in the undergraduate medical education (UME) setting, it has been used for multiple years to train all incoming interns during their graduate medical education orientation at our institution. Given our faculty and residents’ familiarity with these clinical tools, minimal additional faculty development was needed to use the WBAs based on them.

In addition to these checklists, both WBA forms included the Ottawa Clinic Assessment Tool (OCAT) [18], which was used to assess the level of supervision the student required to perform each behavior. This scale can be used for any clinically observed behavior and is not specific to any particular Core EPA. Given that the ultimate goal of the Core EPA pilot is for graduating medical students to perform the Core EPAs with indirect supervision, we identified a corresponding target OCAT score of 4 or 5 (“I had to be available just in case” and “I did not need to be there,” respectively) as the expected level of competence for our AI students. Both observation forms were piloted with AI students in the preceding academic year (2016–2017) and revised based on faculty and student feedback as well as review of the data obtained from these forms. During that same prior academic year, faculty and residents had been trained in direct observation using WBAs and the OCAT scale as part of a clerkship project, so supervising physicians already had experience using similar WBAs and the OCAT supervisory scale when supervising third-year medical students on their clerkships.
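The target threshold can be expressed as a simple rule. This sketch encodes only the two OCAT anchors quoted above (4 and 5); the wording of levels 1–3 is deliberately left out rather than paraphrased, and the function name is hypothetical.

```python
# Target anchors quoted in the text; OCAT levels 1-3 are intentionally omitted.
TARGET_ANCHORS = {
    4: "I had to be available just in case",
    5: "I did not need to be there",
}

def meets_indirect_supervision_target(ocat_rating: int) -> bool:
    """Return True if an OCAT rating (1-5) reaches the study's target of 4 or 5."""
    if not 1 <= ocat_rating <= 5:
        raise ValueError(f"OCAT rating must be between 1 and 5, got {ocat_rating}")
    return ocat_rating in TARGET_ANCHORS

print([meets_indirect_supervision_target(r) for r in range(1, 6)])
# [False, False, False, True, True]
```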

Lastly, students completed a retrospective pre-post survey after their AI rotations asking about their self-perceived ability to perform EPAs (self-efficacy, defined as the confidence to carry out the courses of action necessary to accomplish desired goals [19]) and their preparedness for internship. This survey was developed based on our objectives for the AI rotation and on the Core EPAs addressed by our curriculum and practiced on the clinical rotation. The survey tool was also piloted in the prior academic year and revised based on course director review of the data.

Setting

Our university is a large, public institution in an urban setting. Our university reformed its previously existing traditional medical school curriculum through the introduction of the “C3 Curriculum” (C3 = centered on the needs of the learner, clinically driven, and competency-based). Within the context of this broader curriculum reform effort, in 2017, the educational leaders sought to restructure the AI experience to address the EPAs. University leadership changed the AI experience from a required, departmentally based, specialty-specific rotation to a centrally administered, interdepartmental rotation with a Core EPA–based curriculum and associated WBAs with directed feedback [15]. The team structure of each AI varied by rotation and department, from working directly with an attending to working on a traditional ward team with interns, residents, and attendings. Students on their AI rotation were expected to perform all duties of a typical intern, including but not limited to placing orders (to be cosigned by supervising physicians), documentation (admission histories and physicals, daily notes, discharge summaries and instructions), patient handoffs, returning and addressing nursing pages (cross-coverage), and calling consults. Throughout the 4-week AI, students were intended to receive graduated responsibility to call consults, perform handoffs, enter orders, and function at the level of an intern under direct supervision. The introduction of WBAs throughout the rotation allowed for multiple objective assessments and structured feedback to the students on these clinical behaviors.

WBAs and direct observation data were collected and managed using REDCap (Research Electronic Data Capture) electronic data capture tools hosted at our institution [20, 21]. The institutional review board at our institution approved this study as exempt.

Results

WBA Data

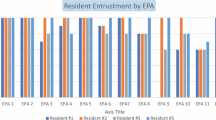

Of the 167 students enrolled in AIs, all had at least two completed formal WBAs of calling consults within one AI rotation, and 158 had WBAs of patient handoffs. Because most students did not have two WBAs completed by supervising physicians in both AIs, we limited our analysis to the last submitted WBA as our measure of the final supervision level achieved for each EPA. For both activities, over 91% of students achieved the target OCAT supervisory score of 4 or 5 (“I had to be available just in case” and “I did not need to be there,” respectively) (see Fig. 1). On the specific I-PASS handoff components of the WBAs, 89% to 98% of students were rated as performing each component “usually” or “always” by their second WBA (see Table 1). Across both AI rotations (two WBAs per rotation), 71 students had all four consult WBAs and 96 had all four handoff WBAs submitted by supervising physicians. Mean ratings for handoff WBAs were lowest at the first WBA at the beginning of the first AI (mean = 4.24, SD = 0.52) and highest at the end of the second AI (mean = 4.41, SD = 0.65). Mean ratings for consults were slightly higher than for handoffs across time points, with the lowest mean rating at the first WBA (mean = 4.39, SD = 0.07) and the highest at the third WBA, the first WBA of the second AI (mean = 4.55, SD = 0.06). A repeated-measures ANOVA with post hoc comparisons found no statistically significant differences between mean ratings at any time points for handoffs or consults despite upward trends (see Fig. 2) (for complete WBA data, see Tables 3 and 4 in the Supplemental Material).
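A minimal sketch of the “last submitted WBA” analysis, assuming a hypothetical flat export with one row per WBA: `(student_id, behavior, sequence, ocat_rating)`, where `sequence` orders a student’s WBAs across both AIs. The denominator used here (students with at least one WBA for that behavior) is an assumption; the text does not state exactly how the percentages were computed.

```python
def last_wba_target_rate(records, behavior, target=4):
    """Share of students whose most recent WBA for `behavior` is rated >= target.

    `records` holds (student_id, behavior, sequence, ocat_rating) tuples; only
    each student's highest-`sequence` WBA for the behavior is counted.
    """
    latest = {}  # student_id -> (sequence, rating) of the latest WBA seen so far
    for student_id, beh, seq, rating in records:
        if beh != behavior:
            continue
        if student_id not in latest or seq > latest[student_id][0]:
            latest[student_id] = (seq, rating)
    if not latest:
        return 0.0
    at_target = sum(1 for _, rating in latest.values() if rating >= target)
    return at_target / len(latest)

# Illustrative records only (not study data).
records = [
    ("s1", "handoff", 1, 3), ("s1", "handoff", 2, 4),
    ("s2", "handoff", 1, 5), ("s2", "handoff", 3, 3),
    ("s3", "consult", 1, 4),
]
print(last_wba_target_rate(records, "handoff"))  # 0.5
```

Keeping only the latest WBA per student means an early low rating does not count against a student who later reaches the target, which matches the use of the last WBA as the final supervision level achieved.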

Self-efficacy

In total, 148 students (89%) completed the retrospective pre-post survey ratings of self-efficacy in performing EPAs for either their first or second AI. Data from the survey after the first AI were analyzed when available; otherwise, data from the survey after the second AI were used, so only one survey was analyzed per student. The majority of students (n = 87) completed the survey after their first AI. Although a substantial number of students (n = 61) completed the survey only after their second AI, significant improvements were still noted. Because few students completed surveys after both AI rotations, the survey data could not be used to compare improvement after the first versus the second AI. Nevertheless, paired t tests of the retrospective pre-post ratings for a single AI rotation showed a statistically significant improvement in self-efficacy across all surveyed Core EPA behaviors (p < 0.05) (see Table 2). Cohen’s d showed moderate to large effect sizes (0.79–1.39).
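The survey-selection rule and the paired analysis can be sketched as follows for a single survey item. Two assumptions are labeled in the code: each survey is represented as a hypothetical `(pre, post)` rating pair, and Cohen’s d is computed as d_z (mean difference divided by the standard deviation of the differences), which is one of several paired effect-size definitions; the text does not say which was used. A p-value would additionally need the t distribution, for example via `scipy.stats.ttest_rel(post, pre)`.

```python
import math
import statistics

def select_survey(surveys_by_ai):
    """Pick one survey per student: the post-AI-1 survey if present, else AI 2.

    `surveys_by_ai` is a hypothetical mapping {1 or 2: (pre, post)}; returns
    None when the student completed neither survey.
    """
    for ai in (1, 2):
        if ai in surveys_by_ai:
            return surveys_by_ai[ai]
    return None

def paired_t_and_dz(pairs):
    """Paired t statistic and Cohen's d_z for a list of (pre, post) ratings."""
    diffs = [post - pre for pre, post in pairs]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired ratings")
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)          # sample SD, n - 1 denominator
    t = mean_d / (sd_d / math.sqrt(n))      # equivalently d_z * sqrt(n)
    d_z = mean_d / sd_d                     # assumed effect-size definition
    return t, d_z

# Illustrative students only: three have an AI-1 survey, one only an AI-2 survey.
students = [
    {1: (2, 4)}, {1: (3, 4), 2: (4, 5)}, {2: (2, 5)}, {1: (3, 3)},
]
pairs = [s for s in map(select_survey, students) if s is not None]
t, d_z = paired_t_and_dz(pairs)
print(pairs)                       # [(2, 4), (3, 4), (2, 5), (3, 3)]
print(round(t, 3), round(d_z, 3))  # 2.324 1.162
```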

On a 5-point Likert scale of preparedness for internship (1 = “not at all prepared,” 5 = “very prepared”), the majority of respondents (77%) chose 4 or 5 following their AI, compared with 28% for the period before their AI. Students showed statistically significant improvement in their confidence performing all five Core EPAs addressed in our AI curriculum (EPAs 4, 6, 8, 9, and 10) (see Table 2). Students also seemed to benefit from additional clinical practice of other Core EPAs that the curriculum did not directly address, including EPA 1 (Gather a history and perform a physical exam) and EPA 2 (Prioritize a differential diagnosis). In fact, the largest effect size in the pre-post AI data was for students’ self-rated ability to independently formulate a patient-specific differential diagnosis (Cohen’s d = 1.39).
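The 77% versus 28% comparison is a “top-two-box” share of 5-point Likert responses. A minimal sketch, applied separately to the retrospective “before” and “after” ratings; the function name is hypothetical and the ratings are made up for illustration.

```python
def top_two_box(ratings):
    """Percentage of 5-point Likert ratings that are 4 or 5."""
    if not ratings:
        raise ValueError("no ratings supplied")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return 100 * sum(r >= 4 for r in ratings) / len(ratings)

before = [2, 3, 4, 3]   # illustrative retrospective "before AI" ratings
after = [4, 5, 4, 3]    # illustrative "after AI" ratings
print(top_two_box(before), top_two_box(after))  # 25.0 75.0
```

This summarizes the shares only; it is not itself a significance test of the paired before and after ratings.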

Discussion

We sought to address the reported gaps in competence of beginning interns [1] through a school-wide curriculum for AIs focused on a number of advanced Core EPAs. Targeted WBAs with direct feedback were designed to foster deliberate and repeated practice in the behaviors underlying each designated Core EPA. The AI rotation provided an ideal setting to teach and evaluate these behaviors given the graduated autonomy and clinical responsibilities it affords students. Fourth-year medical students reported feeling significantly more prepared for internship after the AI rotation. The individualized WBA data also supported this finding, as most students achieved a performance level of needing indirect supervision across selected Core EPAs on their final WBA. While we did not find a statistically significant difference in mean OCAT scores on WBAs longitudinally across the two AI rotations, there was a small trend towards improvement over time. This approach represents a first step towards an overarching assessment within the AI that is grounded in Core EPAs and ad hoc entrustment decisions.

The AI rotation may also be a setting for direct observations of other EPAs. WBAs of EPA 4 (Enter and discuss orders and prescriptions) and EPA 10 (Recognize a patient requiring urgent or emergent care and initiate evaluation and management) are possible candidates, as these EPAs do not seem to be priorities for clerkship education [6]. Ideally, additional EPA implementation would be integrated under a programmatic approach, where AI assessments build upon EPA assessments in the clerkship year as students progress towards entrustment. This approach should also include entrustment committees that make Core EPA entrustment decisions prior to graduation based on clerkship performance, AI assessments, and clinical elective experiences [12]. The standardized, direct clinical observation (WBA) data and ad hoc entrustment decisions obtained through this curriculum will be essential to inform Core EPA entrustment committee decisions.

As noted, a minority of students did not meet the threshold for indirect supervision in performing specific Core EPAs by the conclusion of the AI experience. Because this was a formative exercise, we did not require remediation. As a next step, students could be required to achieve the target performance level on multiple direct observations within the AI rotation before passing the rotation. Identifying the minority of students who do not achieve the desired performance level on one or more behaviors will allow for additional teaching, clinical practice, and directed feedback to promote more deliberate practice and help them reach competency. Additionally, a Core EPA portfolio that allows students and educational leaders to monitor progress towards the target performance level for each behavior, on the path to competency and ultimately entrustment, would provide enhanced feedback to our students. This approach would help ensure that students achieve the target performance level before graduation and would also help adapt the curriculum to student needs.
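The proposed “target on multiple observations” requirement could be encoded as a simple rule. In this sketch the threshold of two at-target WBAs per behavior and the function name are hypothetical choices, not a policy the study adopted; the output lists the behaviors that would trigger additional teaching and observation.

```python
def behaviors_needing_support(ratings_by_behavior, min_at_target=2, target=4):
    """Return behaviors with fewer than `min_at_target` WBAs rated >= `target`.

    `ratings_by_behavior` maps a behavior name to its OCAT ratings (1-5).
    The default of two at-target WBAs is a hypothetical policy choice.
    """
    short = []
    for behavior, ratings in sorted(ratings_by_behavior.items()):
        at_target = sum(1 for r in ratings if r >= target)
        if at_target < min_at_target:
            short.append(behavior)
    return short

print(behaviors_needing_support({"handoff": [3, 4, 5], "consult": [4, 3]}))
# ['consult']
```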

Our study has a number of limitations. This was a single-institution study; therefore, our results may not be representative of student performance and ability at other institutions. In addition, the completion rate of requested WBAs by supervising physicians, estimated at about half, was lower than our goal. Our limited sample of longitudinal WBA data across both AI rotations may have reduced the statistical power to detect small differences and limits generalization of these trends to the full cohort of students. While students were expected to request a minimum number of WBAs for each designated EPA, we could not ensure that a minimum number were submitted by the supervising physician (attending or resident). Students were encouraged to submit additional WBA requests if they were having trouble getting supervisors to complete and submit their WBAs. Additionally, we emphasized that students should inform their supervising physician, before performing the activity in the clinical setting, that they were requesting observation of a specific clinical skill with an associated WBA. Additional faculty and resident development centered on these direct observations could potentially increase WBA completion rate and quality. Furthermore, streamlined assessment tools embedded in a more user-friendly application may increase usability and reduce the burden on faculty of completing these forms. Self-efficacy ratings may not always accurately reflect actual performance. Lastly, our WBAs have not been previously studied or validated, although they included components from previously validated tools, including the OCAT supervisory scale.

Future studies should investigate how entrustment decisions predict the competence of incoming interns. This is the essential question of observation tool validity and could be addressed through program director assessment of our graduating students’ performance as interns. Future studies should also examine characteristics of various tools, such as reliability and usability, to identify the most efficient way to make entrustment decisions. Additionally, future studies should aim to include more robust longitudinal data, especially WBAs, to enable more clearly defined outcomes of student development and allow for further analysis.

Our objective, Core EPA–based WBA data demonstrate the benefit and practicality of this approach for training fourth-year medical students for intern year. Objective clinical data provided by our WBAs can contribute to competency and entrustment decisions regarding students’ ability to perform designated Core EPAs. These curricular changes and the outcomes data provide important insights for others interested in this area and serve as a stepping stone to more competent, entrustable physicians.

Conclusion

Our Core EPA–focused AI curriculum and clinical rotation demonstrate that adding structure and ad hoc WBAs with direct feedback in the clinical setting successfully prepared fourth-year medical students for intern year. In addition, we showed that fourth-year medical students are capable of achieving the desired competency and supervisory scale ratings corresponding to indirect supervision in performing designated clinical skills of advanced Core EPAs by the conclusion of their AI rotations.

References

Angus S, Vu TR, Halvorsen AJ, Aiyer M, McKown K, Chmielewski AF, et al. What skills should new internal medicine interns have in July? A national survey of internal medicine residency program directors. Acad Med. 2014;89:432–5.

Young JQ, Ranji SR, Wachter RM, Lee CM, Niehaus B, Auerbach AD. “July effect”: impact of the academic year-end changeover on patient outcomes: a systematic review. Ann Intern Med. 2011;155:309–15.

Englander R, Flynn T, Call S, Carraccio C, Cleary L, Fulton TB, et al. Toward defining the foundation of the MD degree: Core Entrustable Professional Activities for Entering Residency. Acad Med. 2016;91:1352–8.

Wackett A, Daroowalla F, Lu W-H, Chandran L. Reforming the 4th-year curriculum as a springboard to graduate medical training: one school’s experiences and lessons learned. Teach Learn Med. 2016;28:192–201.

Fazio SB, Ledford CH, Aronowitz PB, Chheda SG, Choe JH, Call SA, et al. Competency-based medical education in the internal medicine clerkship: a report from the Alliance for Academic Internal Medicine Undergraduate Medical Education Task Force. Acad Med. 2018;93:421–7.

Lindeman BM, Sacks BC, Lipsett PA. Graduating students’ and surgery program directors’ views of the Association of American Medical Colleges Core Entrustable Professional Activities for Entering Residency: where are the gaps? J Surg Educ. 2015;72:e184–92.

Meyer EG, Kelly WF, Hemmer PA, Pangaro LN. The RIME model provides a context for entrustable professional activities across undergraduate medical education. Acad Med. 2018;93:954.

Benson NM, Stickle TR, Raszka WV Jr. Going “fourth” from medical school: fourth-year medical students’ perspectives on the fourth year of medical school. Acad Med. 2015;90:1386–93.

Aiyer MK, Vu TR, Ledford C, Fischer M, Durning SJ. The subinternship curriculum in internal medicine: a national survey of clerkship directors. Teach Learn Med. 2008;20:151–6.

Lindeman BM, Lipsett PA, Alseidi A, Lidor AO. Medical student subinternships in surgery: characterization and needs assessment. Am J Surg. 2013;205:175–81.

Elnicki DM, Gallagher S, Willett L, Kane G, Muntz M, Henry D, et al. Course offerings in the fourth year of medical school: how U.S. medical schools are preparing students for internship. Acad Med. 2015;90:1324–30.

Brown DR, Warren JB, Hyderi A, Drusin RE, Moeller J, Rosenfeld M, et al. Finding a path to entrustment in undergraduate medical education: a progress report from the AAMC Core Entrustable Professional Activities for Entering Residency Entrustment Concept Group. Acad Med. 2017;92:774–9.

Hauer KE, Holmboe ES, Kogan JR. Twelve tips for implementing tools for direct observation of medical trainees’ clinical skills during patient encounters. Med Teach. 2011;33:27–33.

Ryan MS, Santen S. Generalizability of the Ottawa Surgical Competency Operating Room Evaluation (O-SCORE) scale to assess medical student performance of the Core EPAs in the workplace. Acad Med. 2020;(accepted).

Garber AM, Ryan MS, Santen SA, Goldberg SR. Redefining the acting internship in the era of entrustment: one institution’s approach to reforming the acting internship. Med Sci Educ [Internet]. 2019 [cited 2019 Mar 20]; Available from: https://doi.org/10.1007/s40670-019-00692-7.

Starmer A, Landrigan C, Srivastava R, Wilson K, Allen A, Mahant S, et al. I-PASS handoff curriculum: faculty observation tools. MedEdPORTAL [Internet]. 2013 [cited 2019 Mar 20]; Available from: https://www.mededportal.org/publication/9570/

Podolsky A, Stern DT, Peccoralo L. The courteous consult: a CONSULT card and training to improve resident consults. J Grad Med Educ. 2015;7:113–7.

Rekman J, Hamstra SJ, Dudek N, Wood T, Seabrook C, Gofton W. A new instrument for assessing resident competence in surgical clinic: the Ottawa Clinic Assessment Tool. J Surg Educ. 2016;73:575–82.

Klassen RM, Klassen JRL. Self-efficacy beliefs of medical students: a critical review. Perspect Med Educ. 2018;7:76–82.

Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O’Neal L, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform. 2019;95:103208.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81.

Funding

VCU receives funding from the Accelerating Change in Medical Education Grant from the American Medical Association. This funding was not related to this study. In addition, REDCap database access at VCU is provided through C. Kenneth and Dianne Wright Center for Clinical and Translational Research grant support (UL1TR002649).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethics Approval

This study was approved by the VCU Institutional Review Board.

Informed Consent

Not applicable

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Adam M. Garber is Associate Professor of Internal Medicine at the Virginia Commonwealth University School of Medicine.

Moshe Feldman is Associate Professor and Assistant Director for Research and Evaluation, Center of Human Simulation and Patient Safety, Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Michael Ryan is Associate Professor of Pediatrics and Assistant Dean for Clinical Medical Education at the Virginia Commonwealth University School of Medicine.

Sally A. Santen is Professor of Emergency Medicine and Senior Associate Dean for Evaluation, Assessment and Scholarship at the Virginia Commonwealth University School of Medicine.

Alan Dow is Associate Professor of Surgery at the Virginia Commonwealth University Health System.

Stephanie R. Goldberg is Associate Professor of Surgery at the Virginia Commonwealth University School of Medicine, Richmond, Virginia.

Supplementary Information

ESM 1

(DOCX 21 kb)

About this article

Cite this article

Garber, A.M., Feldman, M., Ryan, M. et al. Core EPAs in the Acting Internship: Early Outcomes from an Interdepartmental Experience. Med Sci Educ 31, 527–533 (2021). https://doi.org/10.1007/s40670-021-01208-y

DOI: https://doi.org/10.1007/s40670-021-01208-y