Abstract

We propose a simple model for the phenomenon of Eulerian spontaneous stochasticity in turbulence. This model is solved rigorously, proving that an infinitesimal small-scale noise in an otherwise deterministic multi-scale system yields a large-scale stochastic process with Markovian properties. Our model shares intriguing properties with open problems of the modern mathematical theory of turbulence, such as the non-uniqueness of the inviscid limit, the existence of wild weak solutions and the explosive effect of random perturbations. It thereby provides rigorous, often counterintuitive answers to these questions. Besides its theoretical value, our model opens new ways for the experimental verification of spontaneous stochasticity and suggests new applications beyond fluid dynamics.

1 Introduction

Scaling symmetries of space and time shape the modern theory of developed turbulence [17], which assumes that equations of motion for a velocity field \({\mathbf {u}}({\mathbf {r}},t)\) are invariant with respect to the scaling transformations:
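
\[ t,\ {\mathbf {r}},\ {\mathbf {u}} \ \mapsto \ \lambda ^{1-h}t,\ \lambda {\mathbf {r}},\ \lambda ^{h}{\mathbf {u}} \qquad (1) \]
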
for arbitrary \(\lambda > 0\) and \(h \in {\mathbb {R}}\). Notice that this property refers to a wide (so-called inertial) range of scales in which both the forcing and viscous terms are negligible. Multi-scale systems of this kind may possess a fascinating property of spontaneous stochasticity: a small-scale initial uncertainty develops into a randomly chosen large-scale state in finite time, and this behavior is not sensitive to the nature and magnitude of the uncertainty [5, 13, 15, 21, 22, 29, 30, 32].

A simpler form of this phenomenon is the Lagrangian spontaneous stochasticity (LSS) of particle trajectories in a turbulent (non-differentiable) velocity field, also known as Richardson super-diffusion [15, 17]: two particles diverge to distant random states in finite time independently of their initial separation. Another intriguing form is the Eulerian spontaneous stochasticity (ESS) of the velocity field itself: an infinitesimal small-scale noise triggers a stochastic evolution of the velocity field at finite scales and times. The consequences are both theoretical, revising the role of stochasticity in multi-scale classical systems, and practical, e.g., for weather prediction [27, 28]. The ESS suggests a potentially new path for understanding the inviscid limit in developed (Navier–Stokes) turbulence, a problem beset by a number of paradoxes, such as the recently discovered wild and non-unique dissipative weak solutions; see, e.g., [6, 7]. Unlike the LSS, which has been studied in various models [3, 8,9,10, 12, 14, 19], the current knowledge of the ESS is mostly limited to numerical simulations [4, 16, 24, 25, 29, 32]. A rigorous theory of the ESS remains elusive due to its sophisticated (infinite-dimensional) character.

In this paper, we propose an artificial model, constructed as an infinite-dimensional extension of Arnold's (hyperbolic) cat map [1], which yields a rigorously solvable example of ESS. This model is a formally deterministic system with a scaling symmetry, which possesses non-unique (uncountably many) solutions, including analogues of the wild solutions known for the Euler equations of an incompressible ideal fluid [6]. However, solutions are made unique by introducing a viscous-like regularization. Mimicking Navier–Stokes turbulence [2], we study the inviscid limit and prove that it exists for subsequences but yields uncountably many limiting solutions, depending on the chosen subsequence. Then, we prove that adding a random perturbation as part of the regularization yields a unique inviscid limit in the stochastic sense, i.e., a unique and universal probability measure solving the original formally deterministic system with deterministic initial conditions. This probability measure defines a stochastic process with Markovian properties, and its universality means that it does not depend on the specific form of the random perturbation. The counterintuitive property of this spontaneously stochastic solution is that it assigns equal probability (uniform probability density) to all non-unique solutions. The rigorous answers produced by our model shed light on new ways of understanding the problem of non-uniqueness in the developing mathematical theory of turbulence [7].

The paper has the following structure. Section 2 introduces the model and describes basic properties of non-unique solutions. Section 3 defines regularized solutions and studies non-unique inviscid (subsequence) limits. Section 4 introduces random regularization and formulates our main result on the existence and uniqueness of a spontaneously stochastic solution, which is proved in Sect. 5. Section 6 investigates the convergence issues and presents results of numerical simulations. Further applications of obtained results are discussed in Sect. 7.

2 Model

We consider variables \(u_n(t)\) depending on time t and integer indices \(n \in {\mathbb {Z}}^+ = \{0,1,2,\ldots \}\). One can see these variables as describing a multi-scale system with a geometric sequence of spatial scales \(\ell _n = \lambda ^{-n}\) for some \(\lambda > 0\). In this case, the discrete analogue of scaling symmetry (1) with \(h = 0\) becomes

where the index shift \(n \mapsto n+1\) reflects the spatial scaling relation \(\ell _n = \lambda \ell _{n+1}\). Notice that (2) is a symmetry of the Euler equations for an incompressible ideal fluid, in which case the variable \(u_n\) can be introduced by low/high-pass filters or wavelet transforms of the velocity field in the range of scales between \(\ell _n\) and \(\ell _{n+1}\) [17].

We construct an artificial model with symmetry (2) by setting \(\lambda = 2\) and defining variables \(u_n(t)\) on the two-dimensional torus \({\mathbb {T}}^2 = {\mathbb {R}}^2/{\mathbb {Z}}^2\) at discrete times:
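
\[ u_n(t) \in {\mathbb {T}}^2, \qquad t \in \tau _n{\mathbb {Z}}^+, \qquad \tau _n = 2^{-n}, \qquad (3) \]
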
where \(\tau _n\) is interpreted as the “turn-over” time at scale \(\ell _n\). As shown in Fig. 1, all scales and corresponding times define the self-similar lattice:
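
\[ {\mathcal {L}} = \left\{ (n,t):\ n \in {\mathbb {Z}}^+,\ t \in \tau _n{\mathbb {Z}}^+\right\} . \qquad (4) \]
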
Our model is defined by the deterministic relation:
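
\[ u_n(t+\tau _n) = A\left( u_n(t)+u_{n+1}(t)\right) , \qquad (n,t) \in {\mathcal {L}}, \qquad (5) \]
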
where the symmetric \(2 \times 2\) matrix A defines the Arnold’s cat map [1]:
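
\[ A = \begin{pmatrix} 2 & 1\\ 1 & 1 \end{pmatrix}, \qquad u \mapsto Au \ (\mathrm {mod}\ 1). \qquad (6) \]
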
Relation (5) defines the evolution at scale \(\ell _n\) over a single turn-over time \(\tau _n\). Here we limited the inter-scale couplings to the same and the next smaller scale, \(\ell _n\) and \(\ell _{n+1}\), and took advantage of the fact that the map A is linear, hyperbolic, invertible and area-preserving. These properties greatly facilitate the analysis of the model; further generalizations are discussed later. Relation (5) is invariant with respect to the scaling symmetry (2). The resulting structure of the whole system is presented schematically in Fig. 1.
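As a quick sanity check of the properties used above, the following Python sketch (an illustration of ours, not part of the original analysis) verifies for the cat-map matrix \(A\) of relation (6) that \(\det A = 1\) (area preservation), that its inverse is again an integer matrix (so the torus map is invertible), and that its eigenvalues \((3\pm \sqrt{5})/2\) lie off the unit circle (hyperbolicity). Torus points are represented exactly with `fractions.Fraction`; the helper names `det2`, `inverse_unimodular` and `torus_map` are hypothetical.

```python
import math
from fractions import Fraction

A = ((2, 1), (1, 1))  # cat-map matrix of relation (6)


def det2(M):
    """Determinant of a 2x2 matrix given as nested tuples."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def inverse_unimodular(M):
    """Inverse of an integer 2x2 matrix with det = 1; it is again an integer matrix."""
    assert det2(M) == 1
    return ((M[1][1], -M[0][1]), (-M[1][0], M[0][0]))


def torus_map(M, u):
    """Apply u -> M u (mod 1) to a torus point u = (x, y) with exact rational entries."""
    x, y = u
    return ((M[0][0] * x + M[0][1] * y) % 1, (M[1][0] * x + M[1][1] * y) % 1)


# For det = 1 the eigenvalues are (tr +- sqrt(tr^2 - 4)) / 2, i.e. alpha and 1/alpha.
trace = A[0][0] + A[1][1]
alpha = (trace + math.sqrt(trace**2 - 4)) / 2

A_inv = inverse_unimodular(A)
u = (Fraction(3, 7), Fraction(5, 11))
print("det A =", det2(A))                                # area preserving
print("A^-1 =", A_inv)                                   # integer inverse
print("eigenvalues:", alpha, 1 / alpha)                  # alpha > 1 > 1/alpha
print("A^-1 A u == u:", torus_map(A_inv, torus_map(A, u)) == u)
```

Exact rationals are used because the hyperbolic map stretches floating-point round-off by a factor of about 2.6 per step.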

Structure of the multi-scale map for the variables \(u_n(t)\) corresponding to scales \(\ell _n\) and discrete times \(t \in \tau _n {\mathbb {Z}}^+\). Gray arrows represent the Arnold’s cat map (shown on the top of the figure), which appear in the coupling relation (5) and correspond to one turn-over time \(\tau _n\). White circles correspond to initial conditions. Green (\({\mathcal {G}}\)), red (\({\mathcal {R}}\)) and small black (\({\mathcal {B}}\)) circles denote, respectively, the next-time variables, the variables taking arbitrary values in Proposition 1, and the remaining variables

We assume an arbitrary deterministic initial condition:

\[ u_n(0) = u_n^0 \in {\mathbb {T}}^2, \qquad n \in {\mathbb {Z}}^+. \qquad (7) \]

We say that the infinite sequence \(\left( u_n(t)\right) _{(n,t) \in {\mathcal {L}}}\) is a solution of the initial value problem if it satisfies relations (5)–(7) for all \((n,t) \in {\mathcal {L}}\). To describe all solutions, we split the lattice, \({\mathcal {L}} = {\mathcal {I}} \cup {\mathcal {G}} \cup {\mathcal {R}} \cup {\mathcal {B}}\), as shown in Fig. 1. Here \({\mathcal {I}} = \left\{ (n,0): n \in {\mathbb {Z}}^+\right\} \) are the indices of initial conditions and \({\mathcal {G}} = \left\{ (n,\tau _n): n \in {\mathbb {Z}}^+\right\} \) those of the next-time variables. The remaining sets of indices are defined as

Proposition 1

For any given initial condition (7), system (5) has uncountably many solutions. Each solution is determined by the initial conditions \(u_n(t) \in {\mathbb {T}}^2\) for \((n,t) \in {\mathcal {I}}\) and arbitrary values \(u_n(t) \in {\mathbb {T}}^2\) for \((n,t) \in {\mathcal {R}}\), in which case the remaining variables with \((n,t) \in {\mathcal {G}} \cup {\mathcal {B}}\) are defined uniquely.

Proof

Let us write Eq. (5) as

Then, given arbitrary \(N \in {\mathbb {Z}}^+\) and inspecting Fig. 1, one can verify that all variables \(u_n(t)\) with \(n \le N\) and \((n,t) \in {\mathcal {G}}\cup {\mathcal {B}}\) are uniquely defined by the initial conditions at \((n,t) \in {\mathcal {I}}\) and the variables with \(n < N\) and \((n,t) \in {\mathcal {R}}\). Hence, all equations (5) and initial conditions (7) are satisfied for arbitrary \(u_n(t) \in {\mathbb {T}}^2\) at \((n,t) \in {\mathcal {R}}\) and uniquely defined variables at \((n,t) \in {\mathcal {G}}\cup {\mathcal {B}}\). \(\square \)

We notice that the solutions of Proposition 1 include analogues of the so-called wild weak solutions of the Euler equations in fluid dynamics [6]. These are unphysical solutions with compact support in time, i.e., nonzero for \(t \in (t_1,t_2)\) but vanishing both for \(t \le t_1\) and \(t \ge t_2\). Such solutions are constructed in our model by choosing the variables with \((n,t) \in {\mathcal {R}}\) to be zero for times \(t \notin (t_1+1,t_2)\) and nonzero for \(t \in (t_1+1,t_2)\), where \(t_1\) and \(t_2 > t_1+1\) are arbitrary positive integers. One can show using Proposition 1 and relation (10) that this yields uncountably many wild solutions; see Fig. 2.

3 Regularized Solutions

Introducing a regularized system is a conventional way of dealing with non-uniqueness. For any integer \(N \ge 1\), we define the N-regularized system for the finite number of variables \(u_0(t),\ldots ,u_N(t)\) by setting the remaining variables to zero: \(u_n(t) \equiv (0,0)\) for \(n > N\). Thus, the set of variables reduces to \((u_n(t))_{(n,t) \in {\mathcal {L}}_N}\), where \({\mathcal {L}}_N = \left\{ (n,t): n \in \{0,\ldots ,N\},\ t \in \tau _n {\mathbb {Z}}^+\right\} \) is the truncated lattice. Equations of the N-regularized system are given by (5) for \(n < N\), with the equation for \(n = N\) reduced to the form \(u_N(t+\tau _N) = Au_N(t)\). The initial conditions are defined by relations (7) limited to the scales \(n \le N\). This truncation resembles the viscous regularization in fluid dynamics, where the viscous term of the Navier–Stokes equations suppresses the turbulent motion below a certain (so-called Kolmogorov) microscale \(\eta \sim \ell _N\) [17].

One can easily see from Fig. 1 that N-regularized solutions, which we denote by \(u_n^{(N)}(t)\), are uniquely defined by the initial conditions. One can always choose a subsequence \(N_1< N_2 < \cdots \) such that the N-regularized solutions converge for all n and t (see [11, Theorem 3.10.35]):
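
\[ u_n(t) = \lim _{i \rightarrow \infty } u_n^{(N_i)}(t) \quad \text {for all } (n,t) \in {\mathcal {L}}, \qquad (11) \]
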
where \(u_n(t)\) is some solution from Proposition 1. For example, for vanishing initial conditions, this limit yields the vanishing solution at all times \(t \ge 0\), thereby ruling out all the wild solutions mentioned above.
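The uniqueness of N-regularized solutions is easy to see in code: once the finest scale is known, every coarser scale follows from relation (5). The sketch below is our own illustration, assuming relation (5) in the form \(u_n(t+\tau _n) = A(u_n(t)+u_{n+1}(t))\) with \(u_{N+1} \equiv 0\). Nodes \((n,t)\) are stored under hypothetical integer keys \((n,k)\) with \(t = k\tau _n = k2^{-n}\), so the diagonal input \(u_{n+1}(t)\) sits at index \(2k\) on the finer scale. Exact rational arithmetic avoids the round-off that the hyperbolic map would otherwise amplify; the function name `regularized_solution` is ours.

```python
from fractions import Fraction

A = ((2, 1), (1, 1))  # cat-map matrix of relation (6)


def torus_map(M, u):
    """M u (mod 1) for a torus point u = (x, y) with Fraction entries."""
    x, y = u
    return ((M[0][0] * x + M[0][1] * y) % 1, (M[1][0] * x + M[1][1] * y) % 1)


def torus_add(u, v):
    """u + v (mod 1) on the torus."""
    return ((u[0] + v[0]) % 1, (u[1] + v[1]) % 1)


def regularized_solution(u0, T):
    """N-regularized solution u_n^{(N)}(t) for 0 <= t <= T, where N = len(u0) - 1.

    u0[n] is the initial value u_n^0; T is an integer. The result maps (n, k) to
    u_n^{(N)}(k * 2**-n), i.e. k counts turn-over times tau_n = 2**-n at scale n.
    """
    N = len(u0) - 1
    u = {}
    for n in range(N, -1, -1):                 # finest scale first: it feeds the coarser ones
        u[(n, 0)] = u0[n]
        for k in range(1, T * 2**n + 1):
            s = u[(n, k - 1)]
            if n < N:                          # u_{N+1} = 0 is the truncation
                s = torus_add(s, u[(n + 1, 2 * (k - 1))])
            u[(n, k)] = torus_map(A, s)        # relation (5)
    return u


z = (Fraction(0), Fraction(0))
w = (Fraction(1, 7), Fraction(2, 7))
sol = regularized_solution([z, z, w], T=2)     # N = 2, nonzero initial value only at n = N
print(sol[(0, 2)])                             # equals (A^3 + A^4) w (mod 1)
```

With vanishing initial data every entry of the returned dictionary is zero, in line with the remark above that the regularized limit selects the vanishing solution.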

Similar to turbulence models [23], solutions obtained in the regularization limit (11) are non-unique in general, because different subsequences yield different solutions:

Proposition 2

Consider the initial condition (7) with all variables equal to the same value \(u_n^0 = a\). Then, for almost every choice of \(a \in {\mathbb {T}}^2\), there exist uncountably many different solutions obtained as subsequence limits (11) of N-regularized systems.

Proof

Let us focus on the specific variable \(u_0^{(N)}(2)\). By induction with relation (5) represented by gray arrows in Fig. 1, one can verify the formula:

\[ u_0^{(N)}(2) = A^2u_0^0 + \sum _{n = 1}^{N}\left( A^{n+1}+A^{n+2}\right) u_n^0. \qquad (12) \]

Taking into account that all initial values are equal to a, and \(\sum _{n = 1}^N A^n = (A-I)^{-1}(A^{N+1}-A)\) with the identity map I, one reduces (12) to the form:

\[ u_0^{(N)}(2) = (A^2-B)a + BA^Na, \qquad (13) \]

where \(B = A^2(I+A)(A-I)^{-1}\) is a nonsingular matrix with integer components.
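The reduction from (12) to (13) can be checked with exact integer and rational arithmetic. The sketch below, with helper names of our own choosing, builds \(B = A^2(I+A)(A-I)^{-1}\), confirms that it has integer entries, and compares the sum (12) with the closed form (13) for equal initial values. It relies on \(A-I\) having determinant \(-1\), so that its inverse is an integer matrix.

```python
from fractions import Fraction

I2 = ((1, 0), (0, 1))
A = ((2, 1), (1, 1))                        # cat-map matrix (6)


def matmul(P, Q):
    return tuple(tuple(sum(P[i][l] * Q[l][j] for l in range(2)) for j in range(2))
                 for i in range(2))


def matadd(P, Q, sign=1):
    return tuple(tuple(P[i][j] + sign * Q[i][j] for j in range(2)) for i in range(2))


def matpow(M, n):
    R = I2
    for _ in range(n):
        R = matmul(R, M)
    return R


def act(M, u):
    """M u (mod 1) for an integer matrix M and a torus point u with Fraction entries."""
    return tuple(sum(M[i][j] * u[j] for j in range(2)) % 1 for i in range(2))


# A - I = [[1, 1], [1, 0]] has det -1; for det = +-1 we have 1/det == det,
# so the inverse is the integer matrix adj(A - I) * det.
AmI = matadd(A, I2, -1)
det = AmI[0][0] * AmI[1][1] - AmI[0][1] * AmI[1][0]
assert det in (1, -1)
AmI_inv = ((AmI[1][1] * det, -AmI[0][1] * det), (-AmI[1][0] * det, AmI[0][0] * det))
B = matmul(matmul(matpow(A, 2), matadd(I2, A)), AmI_inv)    # B = A^2 (I + A)(A - I)^{-1}


def u0_at_2_sum(u0):
    """Formula (12): A^2 u_0^0 + sum_{n=1}^N (A^{n+1} + A^{n+2}) u_n^0 (mod 1)."""
    N = len(u0) - 1
    total = act(matpow(A, 2), u0[0])
    for n in range(1, N + 1):
        term = act(matadd(matpow(A, n + 1), matpow(A, n + 2)), u0[n])
        total = tuple((s + t) % 1 for s, t in zip(total, term))
    return total


def u0_at_2_closed(a, N):
    """Formula (13) for equal initial values a: (A^2 - B) a + B A^N a (mod 1)."""
    first = act(matadd(matpow(A, 2), B, -1), a)
    second = act(matmul(B, matpow(A, N)), a)
    return tuple((s + t) % 1 for s, t in zip(first, second))


print(B)                                                    # ((11, 7), (7, 4))
a = (Fraction(7, 10), Fraction(1, 2))
print(all(u0_at_2_sum([a] * (N + 1)) == u0_at_2_closed(a, N) for N in range(1, 9)))  # True
```

Since \(\det B = \det (A^2)\det (I+A)/\det (A-I) = -5\), the matrix B is indeed nonsingular.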

The ergodicity of Arnold’s cat map implies that the sequence \(A^Na\) with \(N \in {\mathbb {Z}}^+\) is dense on the torus for almost every \(a \in {\mathbb {T}}^2\). Let us consider such a and an arbitrary \(c\in {\mathbb {T}}^2\), and define \(b \in {\mathbb {T}}^2\) such that \((A^2-B)a+Bb=c\); such b exists because the nonsingular integer matrix B induces a surjective map of the torus. Since \(A^Na\) is a dense orbit, we can choose an infinite subsequence \(N_i\) such that \(A^{N_i}a \rightarrow b\) as \(N_i \rightarrow \infty \). Then, expression (13) yields

\[ \lim _{i \rightarrow \infty } u_0^{(N_i)}(2) = (A^2-B)a + Bb = c. \qquad (14) \]

Similar to (11), we can take a subsequence \(N_{i_k}\) within the sequence \(N_i\), so that \(u_n^{(N_{i_k})}(t)\) converges to a solution \(u_n(t)\) for all n and t. In particular, this implies that \(u_0(2)=c\) for arbitrary \(c\in {\mathbb {T}}^2\), providing uncountably many limits for the regularized system. \(\square \)
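The mechanism of Proposition 2 can be illustrated numerically: scanning the cutoff N and evaluating (13), one finds values of N for which \(u_0^{(N)}(2)\) lands close to any prescribed target c. Generic (irrational) points cannot be stored exactly, so the sketch below assumes, as a stand-in, a rational initial value \(a = w/p\) with a large prime p of our choosing (the hypothetical constant `P_MOD`). Its cat-map orbit is periodic, but the period is far longer than the range of N scanned, so over that range it behaves like a dense orbit. All arithmetic is exact, on integers modulo p; the function names are ours, and B and \(A^2-B\) are the integer matrices from (13).

```python
import math

P_MOD = 1_000_000_007        # hypothetical large prime: a = w / P_MOD stands in for a generic point
A = ((2, 1), (1, 1))         # cat-map matrix (6)
B = ((11, 7), (7, 4))        # B = A^2 (I + A)(A - I)^{-1} from (13)
C = ((-6, -4), (-4, -2))     # A^2 - B


def act_mod(M, w):
    """M w modulo P_MOD for an integer vector w; represents M a (mod 1) for a = w / P_MOD."""
    return ((M[0][0] * w[0] + M[0][1] * w[1]) % P_MOD,
            (M[1][0] * w[0] + M[1][1] * w[1]) % P_MOD)


def torus_dist(p, q):
    """Euclidean distance on the torus R^2 / Z^2."""
    dx, dy = abs(p[0] - q[0]) % 1.0, abs(p[1] - q[1]) % 1.0
    return math.hypot(min(dx, 1 - dx), min(dy, 1 - dy))


def closest_cutoff(w, c, n_max):
    """Return (distance, N) minimising |u_0^{(N)}(2) - c| over 1 <= N <= n_max, using (13)."""
    const = act_mod(C, w)     # (A^2 - B) a
    orbit = w                 # A^N a, advanced one step per N
    best = (math.inf, 0)
    for N in range(1, n_max + 1):
        orbit = act_mod(A, orbit)
        v = act_mod(B, orbit)
        u = tuple(((const[i] + v[i]) % P_MOD) / P_MOD for i in range(2))
        best = min(best, (torus_dist(u, c), N))
    return best


for c in [(0.1, 0.9), (0.5, 0.5), (0.33, 0.77)]:
    d, N = closest_cutoff((1, 2), c, 5000)
    print(f"target {c}: N = {N}, distance {d:.4f}")
```

Different targets c are approached along different cutoff sequences, which is exactly why the deterministic regularization fails to select a unique limit.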

Proposition 2 shows that the regularization does not serve as a proper selection criterion among the infinitely many solutions given by Proposition 1. As we show in the next section, there is a deep reason for this failure of the regularization strategy. Contrary to common intuition, all solutions of Proposition 1 become equally relevant when the stochastic form of regularization is considered.

4 Spontaneously Stochastic Solution

Let us modify the definition of N-regularized solution by adding a random small-scale perturbation. For simplicity, we consider a single random number \(\xi \in {\mathbb {T}}^2\) added to the initial value at the cutoff scale \(n = N\) as

\[ u_N^{(N)}(0) = u_N^0 + \xi , \qquad (15) \]

with \(\xi \) having a Lebesgue integrable probability density \(\rho (\xi )\). This formulation is not only technically convenient, but also highlights an exceptional role of even a single source of randomness at small scales. Generalization to multiple random sources is rather straightforward.

Let us consider the mapping

relating deterministic initial conditions with deterministic N-regularized solutions; recall that \(u_n^{(N)}(t) \equiv 0\) for \(n > N\). For the new random initial condition (15), we introduce the full vector of initial states as

and define the corresponding probability measure as

This measure is a product of Dirac delta functions on the torus \({\mathbb {T}}^2\) for the first N components and the shifted density \(\rho (\xi )\) for the last component. We denote by \(\mu ^{(N)}\) the corresponding probability measure of N-regularized solutions, which is naturally obtained as the image (push-forward) of \(\mu _{\mathrm {ini}}^{(N)}\) by the mapping (16).
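To see the push-forward \(\mu ^{(N)}\) at work, one can sample a single variable. Combining (12) with the perturbed initial condition (15) gives \(u_0^{(N)}(2) = A^2u_0^0 + \sum _{n=1}^N(A^{n+1}+A^{n+2})u_n^0 + (A^{N+1}+A^{N+2})\xi \), so the noise enters through an integer matrix with entries of order \(\alpha ^{N+2}\). The Python sketch below assumes equal initial values a, \(\xi \) uniform in \([0,\varepsilon ]^2\) and a fixed random seed, and estimates how the samples of \(u_0^{(N)}(2)\) populate a coarse grid on the torus. The choices N = 10, \(\varepsilon = 10^{-3}\) and a 4×4 grid, as well as all function names, are ours and serve only as an illustration.

```python
import random
from fractions import Fraction

A = ((2, 1), (1, 1))  # cat-map matrix (6)


def matmul(P, Q):
    return tuple(tuple(sum(P[i][l] * Q[l][j] for l in range(2)) for j in range(2))
                 for i in range(2))


def matpow(M, n):
    R = ((1, 0), (0, 1))
    for _ in range(n):
        R = matmul(R, M)
    return R


def sample_u0_at_2(a, N, eps, n_samples, seed=0):
    """Samples of u_0^{(N)}(2) with all u_n^0 = a and xi ~ Uniform([0, eps]^2) added at n = N."""
    # Integer coefficient matrices of (12): A^2 for n = 0, A^{n+1} + A^{n+2} for n >= 1.
    coeffs = [matpow(A, 2)]
    for n in range(1, N + 1):
        P, Q = matpow(A, n + 1), matpow(A, n + 2)
        coeffs.append(tuple(tuple(P[i][j] + Q[i][j] for j in range(2)) for i in range(2)))
    # Deterministic part, computed exactly and reduced mod 1 before converting to float.
    det = [float(sum(M[i][0] * a[0] + M[i][1] * a[1] for M in coeffs) % 1) for i in range(2)]
    M = coeffs[-1]  # A^{N+1} + A^{N+2} multiplies xi
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        x1, x2 = rng.uniform(0, eps), rng.uniform(0, eps)
        samples.append(((det[0] + M[0][0] * x1 + M[0][1] * x2) % 1.0,
                        (det[1] + M[1][0] * x1 + M[1][1] * x2) % 1.0))
    return samples


def bin_fractions(samples, bins=4):
    """Fraction of samples in each cell of a bins x bins grid on the torus."""
    counts = [[0] * bins for _ in range(bins)]
    for x, y in samples:
        counts[min(int(x * bins), bins - 1)][min(int(y * bins), bins - 1)] += 1
    return [[c / len(samples) for c in row] for row in counts]


a = (Fraction(7, 10), Fraction(1, 2))
fractions_grid = bin_fractions(sample_u0_at_2(a, N=10, eps=1e-3, n_samples=20000))
for row in fractions_grid:
    print(" ".join(f"{f:.3f}" for f in row))  # entries should be close to 1/16 = 0.0625
```

Rerunning with a much smaller perturbation, e.g. `eps=1e-12`, keeps the samples clustered near a single point, because the amplification factor of order \(10^5\) is then far too small; this is the kind of incomplete convergence discussed in Sect. 6.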

Let us consider the standard product topology on the space \(({\mathbb {T}}^2)^{{\mathcal {L}}}\) of sequences indexed by the lattice \({\mathcal {L}}\), and Borel probability measures endowed with the weak-convergence topology; see, e.g., [31]. We say that the original problem (5)–(7) has a spontaneously stochastic solution described by a non-trivial measure \(\mu \) if it is obtained as the limit:

\[ \mu = \lim _{N \rightarrow \infty } \mu ^{(N)}, \qquad (19) \]

in which the regularization is removed. Now we can formulate our main result as

Theorem 1

Problem (5)–(7) has a spontaneously stochastic solution given by the probability measure \(\mu \) specified below by Eqs. (20)–(24). This measure is universal, i.e., independent of the small-scale perturbation \(\xi \).

We postpone the proof to the next section and now describe the measure \(\mu \). This measure is a product of four pieces. The first two are the probability measures \(\mu _{{\mathcal {I}}}\) and \(\mu _{{\mathcal {G}}}\) corresponding, respectively, to the deterministic initial conditions (7) and the next-time variables \(u_n(\tau _n)\) given uniquely by relation (5):

Here \(du_n(t)\) defines the Lebesgue (uniform) probability measure on \({\mathbb {T}}^2\) corresponding to a specific variable \(u_n(t)\). The third piece is given by the measure:

which describes a random uniform choice of variables \(u_n(t)\) from the red set \({\mathcal {R}}\); see Fig. 1. The last piece ensures that all relations (5) are satisfied for \(t > \tau _n\). These relations are verified at points of the black set \({\mathcal {B}}\) using Eq. (5) transformed to form \(u_n(t) = A^{-1}u_{n-1}(t+\tau _{n-1})-u_{n-1}(t)\); see Fig. 1. Therefore, we define

The probability measure \(\mu \) is given by the product:

It is remarkable that the spontaneously stochastic solution \(\mu \) assigns equal probability (uniform distribution) to all solutions of Proposition 1, independently of the random perturbation \(\xi \). One can see, however, that the set of wild solutions discussed above has zero probability under \(\mu \).

Let us consider evolution of the spontaneously stochastic solution \(\mu \) by focusing on integer times. At each \(t \in {\mathbb {Z}}^+\), the solution defines a probability measure \(\mu _t\) on the infinite-dimensional space of variables \({\mathbf {u}}(t) = \left( u_0(t),u_1(t),u_2(t),\ldots \right) \). For example, projecting the measure (20)–(24) at \(t = 1\), we have

where \(u_0(1)\) is deterministic and \(u_n(1)\) with \(n \ge 1\) are random (independent and uniformly distributed). Measure (25) defines a Markov kernel: given a specific initial state \({\mathbf {u}}(0)\), it yields the probability distribution for \({\mathbf {u}}(1)\). Hence, the dynamics of our model at integer times is a Markov process. In our example, \(\mu _t\) reaches the equilibrium state already at \(t = 2\), i.e., \(\mu _t \equiv \mu _{\mathrm {eq}}\) for all \(t \ge 2\), where \(\mu _{\mathrm {eq}}\) is the uniform (Haar) measure on \({\mathbb {T}}^\infty \). As discussed for a different example later on, the convergence of \(\mu _t\) as \(t \rightarrow \infty \) does not always occur in finite time.

By inspecting the proofs of Propositions 1 and 2 and of Theorem 1 one can generalize our results as follows.

Corollary 1

Let us consider a larger class of models given by relation (5), where A is an arbitrary \(m \times m\) matrix with integer elements and \(\det A =1\), thus defining an automorphism of the m-dimensional torus \({\mathbb {T}}^m\). Proposition 1 remains valid with no additional hypothesis. Proposition 2 is valid if we assume that A does not possess eigenvalues which are roots of unity, in which case the induced automorphism of \({\mathbb {T}}^m\) is ergodic [26]. Finally, Theorem 1 remains valid under the two additional assumptions:

- (i) The dominant (maximum absolute value) eigenvalue \(\lambda \) of A is simple and greater than 1.

- (ii) Let \(v = (v_1,\ldots ,v_m)\) be the eigenvector corresponding to \(\lambda \) for the transposed matrix \(A^T\). Then the numbers \(v_1,\ldots ,v_m\) and 1 are rationally independent.

5 Proof of Theorem 1

The weak convergence of measures \(\mu ^{(N)} \rightarrow \mu \) in the product topology [31] follows from the following property, which describes the convergence for all finite-dimensional projections.

Lemma 1

Let \(\left( u_n(t)\right) _{(n,t) \in {\mathcal {S}}} \in {\mathbb {T}}^{2d}\) be any finite set of variables indexed by \({\mathcal {S}} = \{(n_i,t_i):i = 1,\ldots ,d\} \subset {\mathcal {L}}\). Let \(\mu _{{\mathcal {S}}}\) and \(\mu _{{\mathcal {S}}}^{(N)}\) be the corresponding probability measures obtained by projecting the measure \(\mu \) from (20)–(24) and the stochastically regularized measure \(\mu ^{(N)}\). Then
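
\[ \lim _{N \rightarrow \infty } \int \varphi \, d\mu _{{\mathcal {S}}}^{(N)} = \int \varphi \, d\mu _{{\mathcal {S}}} \qquad (26) \]
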
for any continuous observable \(\varphi :{\mathbb {T}}^{2d} \to {\mathbb {R}}\).

Proof

First, we express explicitly an arbitrary variable \(u_n(t)\) in terms of initial conditions \(u_n^0\) and the random quantity \(\xi \) of the stochastically N-regularized problem. For this purpose, we use the polynomials \(P_{n,t}^{(N)}\) defined as

\[ P_{n,t}^{(N)}(x) = \sum _{p} x^{|p|}, \qquad (27) \]

where the sum is taken over all paths following grey (right or up-right diagonal) arrows in Fig. 1, which connect (N, 0) to (n, t), and |p| denotes the number of arrows in the path. Using iteratively the linear relation (5) with the truncation property \(u_n(t) = 0\) for \(n > N\) and the initial conditions (7) and (15), one can check that

\[ u_n^{(N)}(t) = a_{n,t}^{(N)} + P_{n,t}^{(N)}(A)\,\xi , \qquad (28) \]

where \(a_{n,t}^{(N)} \in {\mathbb {T}}^2\) denotes the contribution from deterministic initial conditions. The probability measure \(\mu _{{\mathcal {S}}}^{(N)}\) corresponding to a finite set of random variables \(\left( u_n^{(N)}(t)\right) _{(n,t) \in {\mathcal {S}}} \in {\mathbb {T}}^{2d}\) is obtained using relation (28) as

where \(du_n^{(N)}(t)\) denotes the Lebesgue (uniform) probability measure on \({\mathbb {T}}^2\) corresponding to a specific variable \(u_n^{(N)}(t)\), and \(\rho : {\mathbb {T}}^2 \to {\mathbb {R}}^+\) is a measurable probability density for the random number \(\xi \).
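The path polynomials (27) can be generated without enumerating paths: a path reaching \((n,t)\) ends either with a horizontal arrow from \((n,t-\tau _n)\) or with a diagonal arrow from \((n+1,t-\tau _n)\), and each arrow contributes a factor x. The Python sketch below (our own construction, with hypothetical node keys \((n,k)\), \(t=k2^{-n}\)) stores \(P_{n,t}^{(N)}\) as integer coefficient lists, with the empty list standing for the zero polynomial. As a cross-check, the coefficient of \(u_N^0\) in (12), namely \(A^{N+1}+A^{N+2}\), should coincide with \(P_{0,2}^{(N)}(A)\).

```python
def path_polynomials(N, T):
    """Coefficient lists of P_{n,t}^{(N)}(x) from (27) for all nodes with t <= T (T an integer).

    Keys are (n, k) with t = k * 2**-n; entry j of a list is the number of paths
    with j arrows, and [] is the zero polynomial.
    """
    P = {}
    for n in range(N, -1, -1):                           # finest scale first
        for k in range(T * 2**n + 1):
            if k == 0:
                P[(n, 0)] = [1] if n == N else []        # only the empty path sits at (N, 0)
                continue
            horiz = P[(n, k - 1)]                        # last arrow: (n, t - tau_n) -> (n, t)
            diag = P[(n + 1, 2 * (k - 1))] if n < N else []  # last arrow: (n+1, t - tau_n) -> (n, t)
            total = [0] * max(len(horiz), len(diag))
            for j, c in enumerate(horiz):
                total[j] += c
            for j, c in enumerate(diag):
                total[j] += c
            P[(n, k)] = [0] + total if total else []     # one more arrow: multiply by x
    return P


P = path_polynomials(3, 2)
print(P[(0, 2)])   # [0, 0, 0, 0, 1, 1], i.e. x^4 + x^5 = x^{N+1} + x^{N+2}
print(P[(0, 1)])   # [], i.e. u_0(tau_0) does not depend on xi for N >= 2
```

All coefficients are nonnegative integers by construction, which is the positivity property used later in the proof.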

Now let us analyse an arbitrary set \({\mathcal {S}}\). It is enough to consider \({\mathcal {S}} = {\mathcal {L}}_{n,t}\) in the rectangular region:

for any integer n and t. Both measures \(\mu \) and \(\mu ^{(N)}\) are supported on the linear subspace determined by relations (5). Specifically, according to Proposition 1 and Fig. 1, variables at white nodes correspond to initial conditions, variables at green nodes are determined by initial conditions only, and variables at black nodes are given by initial conditions and by variables at red nodes of the set \({\mathcal {R}}\). These relations do not depend on N. Hence, for both projected measures \(\mu _{\mathcal {S}}\) and \(\mu _{\mathcal {S}}^{(N)}\) with \({\mathcal {S}} = {\mathcal {L}}_ {n,t}\), relations (5) define variables \(u_{n'}(t')\) from \({\mathcal {S}}\) in terms of initial conditions and variables from \({\mathcal {L}}_ {n,t} \cap {\mathcal {R}}\). From this property, one can infer that the relation (26) can be verified for smaller sets of the form:

in which we ignored the remaining deterministic variables.

Projecting the measure \(\mu \) from (20)–(24) on the subspace given by (31), one obtains that \(\mu _{\mathcal {S}}\) is the Lebesgue measure in \({\mathbb {T}}^{2d}\). Then, the integral in the right-hand side of (26) reduces to the mean value of the observable:

where \({\mathbf {w}} = \left( w_{n,t}\right) _{(n,t) \in {\mathcal {S}}} \in {\mathbb {T}}^{2d}\) denotes the vector of variables indexed by \({\mathcal {S}}\), with \(w_{n,t} = u_n^{(N)}(t)\) in the integral on the left-hand side. Let us consider the Fourier expansion:
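
\[ \varphi ({\mathbf {w}}) = \sum _{{\mathbf {k}}} \varphi _{{\mathbf {k}}}\, e^{i{\mathbf {k}}\cdot {\mathbf {w}}}, \qquad (33) \]
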
where we introduced the wavevector \({\mathbf {k}} = \left( k_{n,t}\right) _{(n,t) \in {\mathcal {S}}} \in (2\pi {\mathbb {Z}})^{2d}\); the dot denotes the scalar product. Using (33) in relation (32), the constant term \(\varphi _{{\mathbf {0}}}\) compensates the integral in the right-hand side since \(\varphi _{{\mathbf {0}}} = \int \varphi \, d^{2d} {\mathbf {w}}\). Therefore, it remains to show that

for any nonzero wavevector \({\mathbf {k}}\). Using (29) with the property \(k_{n,t} \in (2\pi {\mathbb {Z}})^2\) and symmetry of the matrix A, we have

where we introduced the scalar \(a_{{\mathbf {k}}}^{(N)} \in {\mathbb {R}}\) and the vector \(A_{{\mathbf {k}}}^{(N)} \in {\mathbb {R}}^2\) as

Notice that the integral in the right-hand side of (35) represents the Fourier coefficient of \(\rho (\xi )\) of order \(-A_{{\mathbf {k}}}^{(N)}\). By the Riemann–Lebesgue lemma the high-order Fourier coefficients of the function \(\rho (\xi )\) converge to zero. Therefore, to conclude the proof it is enough to show that \(\Vert A_{{\mathbf {k}}}^{(N)}\Vert \rightarrow \infty \) as \(N\rightarrow \infty \) for any fixed nonzero wavevector \({\mathbf {k}} \in (2\pi {\mathbb {Z}})^{2d}\).

Using the eigenvalue decomposition of the Arnold’s cat map (6), we can write [1]

\[ A^n = \alpha ^n A_1 + \alpha ^{-n}A_2, \qquad n \in {\mathbb {Z}}^+, \qquad (37) \]

where \(\alpha = (3+\sqrt{5})/2\) and \(\alpha ^{-1}\) are eigenvalues of A, and the symmetric matrices \(A_1\) and \(A_2\) are given by the linear maps:
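
\[ A_1 = \frac{1}{\alpha +1}\begin{pmatrix} \alpha & \alpha -1\\ \alpha -1 & 1 \end{pmatrix}, \qquad A_2 = \frac{1}{\alpha +1}\begin{pmatrix} 1 & 1-\alpha \\ 1-\alpha & \alpha \end{pmatrix}. \qquad (38) \]
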
Substituting (37) into the second expression of (36) yields

Since \(P_{n,t}^{(N)}\) defined in (27) is a polynomial with positive coefficients and \(\alpha > 1\), we have

Using Lemma 2 formulated and proved below, we can order the elements in \({\mathcal {S}} = \{(n_i,t_i): i =1,\ldots ,d\}\) such that

Notice that \(A_1k_{n,t} = \frac{1}{\alpha +1}\begin{pmatrix} \alpha & \alpha -1\\ \alpha -1 & 1 \end{pmatrix} k_{n,t}\) following from (38), where \(\alpha = (3+\sqrt{5})/2\) is irrational. Since this matrix has rank one, \(A_1k_{n,t}\) vanishes only if \(\alpha k_1 + (\alpha -1)k_2 = 0\) for \(k_{n,t} = (k_1,k_2)\). Because the wavevector \(k_{n,t}/(2\pi ) \in {\mathbb {Z}}^2\) has integer components and \(\alpha \) is irrational, this happens only for \(k_{n,t} = 0\); hence \(A_1k_{n,t}\) is nonzero if \(k_{n,t}\) is nonzero. Therefore, using properties (40) and (41) in expression (39), one can see that the magnitude of \(A_{{\mathbf {k}}}^{(N)}\) is dominated by the polynomial \(P_{n_i,t_i}^{(N)}(\alpha )\) with the largest i such that \(k_{n_i,t_i}\) is nonzero. Since \(P_{n_i,t_i}^{(N)}(\alpha ) \rightarrow \infty \) as \(N \rightarrow \infty \), this proves the desired property that \(\Vert A_{{\mathbf {k}}}^{(N)}\Vert \rightarrow \infty \) as \(N\rightarrow \infty \). \(\square \)
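The two facts used at the end of this proof, the spectral decomposition (37)–(38) and the non-vanishing of \(A_1k\) for nonzero integer wavevectors, are easy to probe numerically. The floating-point sketch below is a check on a finite range, not a proof; the range of powers, the box of wavevectors and the function names are our own choices.

```python
import math

alpha = (3 + math.sqrt(5)) / 2
A = ((2, 1), (1, 1))
# Projectors A_1 and A_2 from (38)
A1 = ((alpha / (alpha + 1), (alpha - 1) / (alpha + 1)),
      ((alpha - 1) / (alpha + 1), 1 / (alpha + 1)))
A2 = ((1 / (alpha + 1), (1 - alpha) / (alpha + 1)),
      ((1 - alpha) / (alpha + 1), alpha / (alpha + 1)))


def int_power(n):
    """Exact integer power A^n."""
    R = ((1, 0), (0, 1))
    for _ in range(n):
        R = tuple(tuple(sum(R[i][l] * A[l][j] for l in range(2)) for j in range(2))
                  for i in range(2))
    return R


def spectral_power(n):
    """alpha^n A_1 + alpha^{-n} A_2, cf. (37)."""
    return tuple(tuple(alpha**n * A1[i][j] + alpha**(-n) * A2[i][j] for j in range(2))
                 for i in range(2))


def max_rel_error(n):
    exact, approx = int_power(n), spectral_power(n)
    return max(abs(exact[i][j] - approx[i][j]) / max(1, abs(exact[i][j]))
               for i in range(2) for j in range(2))


def min_norm_A1k(K):
    """Smallest |A_1 k| over integer k != 0 with |k_1|, |k_2| <= K (the 2*pi factor is dropped)."""
    best = math.inf
    for k1 in range(-K, K + 1):
        for k2 in range(-K, K + 1):
            if (k1, k2) != (0, 0):
                v = (A1[0][0] * k1 + A1[0][1] * k2, A1[1][0] * k1 + A1[1][1] * k2)
                best = min(best, math.hypot(*v))
    return best


print(max(max_rel_error(n) for n in range(21)))  # round-off level
print(min_norm_A1k(20))                          # small but clearly positive
```

The minimum over the box is attained near Fibonacci-like pairs such as \(k = (-8, 13)\), reflecting the golden-ratio slope of the unstable direction; it decreases as the box grows but never reaches zero.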

Lemma 2

Elements \((n_j, t_j)\), \(j = 1,\ldots ,d\) of any finite subset \({\mathcal {S}} \subset {\mathcal {R}}\) can be ordered such that (41) holds.

a Every path connecting (N, 0) to (n, t) defines the path connecting (N, 0) to \((n+1,t)\) through the following surgery procedure. The upper (red) part of the path is removed and the remaining (green) part is shifted to the right. Then, the lower (red) part is added to complete the new path. b Black lines connect nodes \((n',t')\) related by Eq. (45), where (n, t) are taken at red points. Polynomials on the same line have finite, nonzero ratios in the limit \(N \rightarrow \infty \). c The path connecting (N, 0) to (n, t) with the largest number of segments

Proof

Observe that the condition \((n,t) \in {\mathcal {R}}\) with \(n \le N\) ensures that \(P_{n,t}^{(N)} (x)\) from (27) is nonzero for any \(x > 0\); see Fig. 1. For any path p from (N, 0) to (n, t) in (27), one constructs a new path \(p'\) from (N, 0) to \((n+1,t)\), as shown in Fig. 3a: removing the final segments at scale n, shifting the remaining part to the right, and adding extra segments at scale N. In this procedure, each removed segment is replaced by \(\tau _n/\tau _N = 2^{N-n}\) added segments. This means that

where we assumed an arbitrarily chosen number \(x > 1\). Notice that the definition (27) implies

where the last two terms correspond to the paths ending, respectively, with the horizontal and diagonal arrows (Fig. 1). Using (42) in (43), we have

Iterating this relation yields

When \((n,t) \in {\mathcal {R}}\), the points \((n',t')\) from (45) belong to a descending diagonal line, as shown in Fig. 3b. Inspecting these diagonal lines and using the property (42), one can deduce that

for any distinct elements \((n_1,t_1)\) and \((n_2,t_2)\) of the set \({\mathcal {R}}\). Here the indices are chosen such that the black line starting at \((n_2,t_2)\) is located to the right of the line starting at \((n_1,t_1)\); see Fig. 3b. In particular, this implies that any finite subset of elements \((n_j,t_j) \in {\mathcal {R}}\) can be ordered satisfying the properties (41). \(\square \)

6 Convergence Rate

We now address practical aspects of convergence: how small can the random perturbation \(\xi \) be, and how large must the number of scales N be, for observing the spontaneously stochastic solution at a given variable \(u_n(t)\)?

Relations (28) and (37) in the proof of Theorem 1 with the limit (40) indicate that the convergence to the spontaneously stochastic limit for each variable \(u_n^{(N)}(t)\) is controlled by the factor:

\[ P_{n,t}^{(N)}(\alpha ) = \sum _{p} \alpha ^{|p|}. \qquad (47) \]

Here \(\alpha = \frac{1}{2}(3+\sqrt{5}) \approx 2.618\), and the sum is taken over all paths following gray (right or up-right diagonal) arrows in Fig. 1 that connect (N, 0) to (n, t); |p| denotes the number of arrows along the path. The factor (47) amplifies the random perturbation induced by \(\xi \) in the variable \(u_n^{(N)}(t)\). Let us assume that \(\xi \) takes small random values of order \(\varepsilon \) and has a sufficiently regular probability density (e.g., Hölder continuous). Then, to observe spontaneous stochasticity at node (n, t), the amplified perturbation must become large: \(P_{n,t}^{(N)}(\alpha ) \varepsilon \gg 1\). This yields the condition:

We now estimate how fast \(P_{n,t}^{(N)}(\alpha )\) grows with N. For this purpose, we compute the longest path (dominant term) in expression (47). This path contains the maximum number of arrows at the smallest scale \(\ell _N\), supplemented with \(N-n\) diagonal arrows (one at every scale) to reach the node (n, t); see Fig. 3c. The number of arrows at scale N is evaluated as \((t-\Delta t)/\tau _N\), where \(\tau _N = 2^{-N}\) is the turn-over time for each arrow and \(\Delta t\) is the time interval occupied by the diagonal arrows at larger scales. This interval is evaluated as

Therefore, the total number of arrows in the path is found as

Using the longest path (50) in expression (47) yields the lower-bound estimate

This expression suggests that the time \(t = 2\tau _n\) is transitional: the factor \(P_{n,t}^{(N)}(\alpha ) \propto \alpha ^N\) grows exponentially in N at \(t \sim 2\tau _n\), while the growth becomes double-exponential with \(P_{n,t}^{(N)}(\alpha ) \propto \left( \alpha ^{t-2\tau _n}\right) ^{2^N}\) at larger times.
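The two growth regimes can be checked directly from definition (47) by dynamic programming over the lattice, using the same horizontal/diagonal recursion as in the path construction. Because \(P_{n,t}^{(N)}(\alpha )\) overflows double precision already for moderate t, the sketch below (function name ours) accumulates logarithms with a log-sum-exp step; it is an illustrative check and is not meant to reproduce the authors' computation behind Fig. 4. At \(t = 2\tau _n\) the recursion gives exactly \(\alpha ^{N-n+1}+\alpha ^{N-n+2}\), growing exponentially in N. At n = 0, t = 3, by our own count the longest path has \(2^N+2\) horizontal arrows at scale N followed by N diagonal arrows, so this single path already gives double-exponential growth.

```python
import math

ALPHA = (3 + math.sqrt(5)) / 2


def log_amplification(N, T, x=ALPHA):
    """Natural log of P_{n,t}^{(N)}(x) for all nodes with t <= T (T an integer).

    Keys are (n, k) with t = k * 2**-n; the value -inf encodes P = 0.
    """
    lx = math.log(x)
    L = {}
    for n in range(N, -1, -1):                          # finest scale first
        for k in range(T * 2**n + 1):
            if k == 0:
                L[(n, 0)] = 0.0 if n == N else -math.inf   # only (N, 0) starts a path
                continue
            terms = [L[(n, k - 1)]]                     # horizontal arrow
            if n < N:
                terms.append(L[(n + 1, 2 * (k - 1))])   # diagonal arrow from scale n + 1
            m = max(terms)
            if m == -math.inf:
                L[(n, k)] = -math.inf
            else:                                       # log(x * sum(exp(terms))), computed stably
                L[(n, k)] = lx + m + math.log(sum(math.exp(s - m) for s in terms))
    return L


# First time at scales n <= 2 where P * eps > 1 for eps = 1e-50 and N = 7 scales.
L = log_amplification(7, 4)
for n in range(3):
    ok = [k / 2**n for k in range(4 * 2**n + 1) if L[(n, k)] / math.log(10) > 50]
    print(f"n = {n}: first time with P*eps > 1:", ok[0] if ok else None)
```

Working in log space keeps every step finite; the same dictionary can be used to colour a lattice plot of \(\log _{10} P_{n,t}^{(N)}(\alpha )\).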

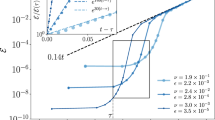

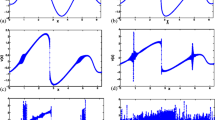

To be more specific, we computed the values of \(P_{n,t}^{(N)}(\alpha )\) numerically using formula (47) and presented the results graphically in Fig. 4. One observes that, in the model with only \(N = 7\) scales and an extremely small noise of amplitude \(\varepsilon \sim 10^{-50}\), the spontaneously stochastic behaviour develops for all variables lying to the right of the transitional (red/yellow) region. Therefore, systems with a moderate number of scales N should demonstrate the spontaneously stochastic behaviour even for extremely small random perturbations. However, larger perturbations are required for convergence in the transitional region. This result is tested numerically in Fig. 5a, b.

7 Discussion

We designed a simple model that demonstrates the Eulerian spontaneous stochasticity (ESS): it is a formally deterministic scale-invariant system with deterministic initial conditions, which has uncountably many non-unique solutions and yields a universal stochastic process when regularized with an infinitesimal small-scale random perturbation. Our work provides a rigorous study of this system, proving the existence of a spontaneously stochastic solution as well as its universality (independence of the vanishing regularization term). The exceptional and counterintuitive property of this solution is that it assigns equal probability (uniform probability density) to all non-unique solutions. At integer times, the solution represents a Markov process converging to the equilibrium (uniform) state.

Amplification factor \(P_{n,t}^{(N)}(\alpha )\) of the initial error evaluated at each point of the lattice in a system with \(N = 7\) scales. The color of each rectangle shows (in logarithmic scale) the value of \(P_{n,t}^{(N)}(\alpha )\) corresponding to the node (n, t) located in the upper left corner of the rectangle; zero values are shown by white color

Each rectangle shows the probability density function (darker colors correspond to higher probabilities) for the variable \(u_n(t) \in {\mathbb {T}}^2\) corresponding to the upper left corner of the rectangle. Only the scales \(n = 0,1,2\) are shown. The results are obtained by simulating numerically \(10^8\) samples of the system with the initial conditions \(u_n(0) = (0.7,0.5)\) for \(n = 0,\ldots ,N\) and the random variable \(\xi \) uniformly distributed in the interval \([0,\varepsilon ]\): a \(N = 7\) and \(\varepsilon = 10^{-10}\), and b \(N = 9\) and \(\varepsilon = 10^{-1}\). Bold red borders designate variables in the transitional region, \(t \sim 2\tau _n\), where the convergence is only exponential in N and is not attained for very small \(\varepsilon \). The last panel c shows analogous results for \(N = 9\) and \(\varepsilon = 10^{-10}\) in the modified system (52)

Our results can be extended to other forms of random regularization, e.g., random variables depending on N or random perturbations added to all variables (noise). In addition, one can use this idea for designing spontaneously stochastic systems with different behaviors by modifying the couplings or imposing extra conditions, such as conserved quantities. For example, Fig. 5c shows the numerical results when Eq. (5) is replaced by

where \(u_{n+1}(t)=\left( x_{n+1}(t), y_{n+1}(t)\right) /2\pi \in {\mathbb {T}}^2\). We see that model (52) yields a more sophisticated spontaneously stochastic solution. A rigorous study of such systems is challenging and leaves important theoretical questions for future work: how can the existence, universality and robustness of spontaneously stochastic solutions be analysed in more general multi-scale models?
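The probability densities in Fig. 5 are Monte Carlo histograms on \({\mathbb {T}}^2\) built from many samples of the random regularization \(\xi \). Since the coupling relation (5) and the modified rule (52) are not restated in this section, the minimal sketch below substitutes the bare Arnold cat map \((x, y) \mapsto (2x + y, x + y) \bmod 1\) for the multi-scale model and only illustrates the sampling-and-histogramming step. The function names, the choice of adding \(\xi \) to the first coordinate, and all parameter values are hypothetical and are not taken from the authors' code.

```python
import random


def cat_map(x, y):
    """One step of Arnold's cat map on the unit torus: (x, y) -> (2x + y, x + y) mod 1."""
    return (2.0 * x + y) % 1.0, (x + y) % 1.0


def torus_histogram(x0, y0, eps, steps, samples, bins, seed=0):
    """Monte Carlo estimate of the PDF of A^steps(x0 + xi, y0) with xi ~ U[0, eps].

    Returns a bins-by-bins grid of densities with respect to the area measure on
    the torus, so a perfectly uniform distribution gives 1.0 in every cell.
    """
    rng = random.Random(seed)
    counts = [[0] * bins for _ in range(bins)]
    for _ in range(samples):
        # Hypothetical regularization: the small random shift xi enters the x-coordinate only.
        x, y = (x0 + rng.uniform(0.0, eps)) % 1.0, y0
        for _ in range(steps):
            x, y = cat_map(x, y)
        # Locate the cell containing (x, y); min() guards against x * bins rounding up to bins.
        i = min(int(x * bins), bins - 1)
        j = min(int(y * bins), bins - 1)
        counts[i][j] += 1
    # Cell probability divided by the cell area 1 / bins**2 gives the density.
    scale = bins * bins / samples
    return [[c * scale for c in row] for row in counts]


# Usage: a tight cluster (no iterations) versus a nearly uniform spread (20 iterations).
clustered = torus_histogram(0.7, 0.5, 1e-3, steps=0, samples=2000, bins=8)
spread = torus_histogram(0.7, 0.5, 1e-3, steps=20, samples=20000, bins=8)
print(max(max(row) for row in clustered))  # all mass in one cell: density bins**2 = 64
print(min(min(row) for row in spread), max(max(row) for row in spread))  # both close to 1
```

Without iterations all samples fall into a single cell, whereas after 20 iterations the histogram is close to 1 in every cell, because the hyperbolic stretching spreads the short segment of initial data over the whole torus. Round-off errors are stretched by the same factor at every step, so in double precision the number of iterations should stay small enough that the accumulated stretching, multiplied by machine epsilon (about \(10^{-16}\)), remains much smaller than the cell size.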

Our model can be used as a prototype for a (first) experimental observation of the ESS in a physical system, e.g., an optical or electric circuit. In such an experiment, the arrows in Fig. 1 represent waveguides, and the coupling nodes are identical signal-processing gates. The scaling symmetry is maintained by choosing the lengths of the connecting waveguides proportional to the turn-over times \(\tau _n\), exploiting the fact that the distance travelled by a signal is proportional to the travel time. The variables \(u_n(t)\) can describe the phases of the propagating signals measured at each node, while the initial conditions are associated with the input signal. A challenge of this setup is reproducing the coupling relation (5), or a similar one that leads to spontaneous stochasticity. Notice that the intrinsic hyperbolicity of Arnold’s cat map can also be recreated in a simple mechanical system [18, 20]. The extremely fast convergence, which is double-exponential in the number of scales, suggests that spontaneous stochasticity in the described experiment will be triggered by natural microscopic noise from the environment already in systems of moderate size, e.g., \(N = 7\) from Fig. 4.
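The hyperbolicity of Arnold’s cat map mentioned above can be made quantitative for the bare map with matrix \(A = \begin{pmatrix} 2 & 1\\ 1 & 1\end{pmatrix}\): its eigenvalues are \(\lambda _\pm = (3 \pm \sqrt{5})/2\), so a generic small displacement is asymptotically stretched by the factor \(\lambda _+ \approx 2.618\) per iteration, and about \(\ln (1/\varepsilon )/\ln \lambda _+\) iterations turn an error of size \(\varepsilon \) into an order-one deviation. The sketch below checks both facts numerically; it concerns the cat map alone, not the multi-scale model (5), and the helper names are hypothetical.

```python
import math

# Integer matrix of Arnold's cat map; it acts on column vectors of T^2 = R^2 / Z^2.
A = ((2, 1), (1, 1))
# Eigenvalues of A are (3 +/- sqrt(5)) / 2; the larger one is the stretching factor.
LAMBDA_PLUS = (3 + math.sqrt(5)) / 2


def apply(m, v):
    """Multiply a 2x2 matrix by a 2-vector (linearized dynamics, so no reduction mod 1)."""
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


def growth_rate(steps=30):
    """Per-step stretching of a small displacement once its stable component has decayed."""
    v = (1.0, 0.0)  # generic initial displacement (not aligned with the stable eigenvector)
    for _ in range(steps):
        v = apply(A, v)
    w = apply(A, v)
    return math.hypot(*w) / math.hypot(*v)


def steps_to_order_one(eps):
    """Smallest k with eps * LAMBDA_PLUS**k >= 1, i.e. iterations for an error eps to reach size ~1."""
    return math.ceil(math.log(1.0 / eps) / math.log(LAMBDA_PLUS))


print(round(growth_rate(), 6))     # 2.618034
print(steps_to_order_one(1e-10))   # 24
```

For \(\varepsilon = 10^{-10}\) about two dozen iterations of the bare map suffice, because the required number grows only logarithmically in \(1/\varepsilon \).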

Finally, the proposed model suggests that the ESS may occur, and find applications, in a broader class of systems: multi-scale systems defined by deterministic rules but generating complex and genuinely stochastic processes. In real-world systems, this stochasticity may be triggered by natural microscopic noise. An experimental confirmation of the ESS would imply that its occurrence should be studied in a wide range of applications, e.g., hydrodynamic turbulence, random-number generation, and neural networks in artificial intelligence or in living organisms.

Data Availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Arnold, V.I., Avez, A.: Ergodic problems of classical mechanics. Benjamin (1968)

Bardos, C.W., Titi, E.S.: Mathematics and turbulence: where do we stand? J. Turbul. 14(3), 42–76 (2013)

Bernard, D., Gawedzki, K., Kupiainen, A.: Slow modes in passive advection. J. Stat. Phys. 90(3), 519–569 (1998)

Biferale, L., Boffetta, G., Mailybaev, A.A., Scagliarini, A.: Rayleigh–Taylor turbulence with singular nonuniform initial conditions. Phys. Rev. Fluids 3(9), 092601(R) (2018)

Boffetta, G., Musacchio, S.: Predictability of the inverse energy cascade in 2D turbulence. Phys. Fluids 13(4), 1060–1062 (2001)

Buckmaster, T., Vicol, V.: Convex integration constructions in hydrodynamics. Bull. Am. Math. Soc. 58(1), 1–44 (2021)

De Lellis, C., Székelyhidi Jr., L.: Weak stability and closure in turbulence. Philos. Trans. R. Soc. A 380 (2022)

Drivas, T.D., Eyink, G.L.: A Lagrangian fluctuation–dissipation relation for scalar turbulence. Part I. Flows with no bounding walls. J. Fluid Mech. 829, 153–189 (2017)

Drivas, T.D., Mailybaev, A.A., Raibekas, A.: Statistical determinism in non-Lipschitz dynamical systems. arXiv:2004.03075 (2020)

E, W., Vanden-Eijnden, E.: Generalized flows, intrinsic stochasticity, and turbulent transport. Proc. Natl. Acad. Sci. 97(15), 8200–8205 (2000)

Engelking, R.: General Topology. Heldermann, Berlin (1989)

Eyink, G., Vishniac, E., Lalescu, C., Aluie, H., Kanov, K., Bürger, K., Burns, R., Meneveau, C., Szalay, A.: Flux-freezing breakdown in high-conductivity magnetohydrodynamic turbulence. Nature 497(7450), 466–469 (2013)

Eyink, G.L.: Turbulence noise. J. Stat. Phys. 83(5), 955–1019 (1996)

Eyink, G.L., Bandak, D.: Renormalization group approach to spontaneous stochasticity. Phys. Rev. Res. 2(4), 043161 (2020)

Falkovich, G., Gawedzki, K., Vergassola, M.: Particles and fields in fluid turbulence. Rev. Mod. Phys. 73(4), 913 (2001)

Fjordholm, U.S., Mishra, S., Tadmor, E.: On the computation of measure-valued solutions. Acta Numer. 25, 567–679 (2016)

Frisch, U.: Turbulence: the Legacy of A.N. Kolmogorov. Cambridge University Press (1995)

Hunt, T.J., MacKay, R.S.: Anosov parameter values for the triple linkage and a physical system with a uniformly chaotic attractor. Nonlinearity 16(4), 1499–1510 (2003)

Kupiainen, A.: Nondeterministic dynamics and turbulent transport. Ann. Henri Poincaré 4(2), 713–726 (2003)

Kuznetsov, S.P.: Example of a physical system with a hyperbolic attractor of the Smale-Williams type. Phys. Rev. Lett. 95(14), 144101 (2005)

Leith, C.E., Kraichnan, R.H.: Predictability of turbulent flows. J. Atmos. Sci. 29(6), 1041–1058 (1972)

Lorenz, E.N.: The predictability of a flow which possesses many scales of motion. Tellus 21(3), 289–307 (1969)

Mailybaev, A.A.: Spontaneous stochasticity of velocity in turbulence models. Multiscale Model. Simul. 14(1), 96–112 (2016)

Mailybaev, A.A.: Spontaneously stochastic solutions in one-dimensional inviscid systems. Nonlinearity 29(8), 2238 (2016)

Mailybaev, A.A.: Toward analytic theory of the Rayleigh-Taylor instability: lessons from a toy model. Nonlinearity 30(6), 2466–2484 (2017)

Mañé, R.: Ergodic theory and differentiable dynamics. Springer (1987)

Palmer, T.N.: Predicting uncertainty in forecasts of weather and climate. Rep. Prog. Phys. 63(2), 71–116 (2000)

Palmer, T.N.: Stochastic weather and climate models. Nat. Rev. Phys. 1(7), 463–471 (2019)

Palmer, T.N., Döring, A., Seregin, G.: The real butterfly effect. Nonlinearity 27(9), R123 (2014)

Ruelle, D.: Microscopic fluctuations and turbulence. Phys. Lett. A 72(2), 81–82 (1979)

Tao, T.: An introduction to measure theory. AMS, Providence (2011)

Thalabard, S., Bec, J., Mailybaev, A.A.: From the butterfly effect to spontaneous stochasticity in singular shear flows. Commun. Phys. 3(1), 1–8 (2020)

Acknowledgements

The work was supported by CNPq (grants 303047/2018-6, 406431/2018-3, 308721/2021-7).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mailybaev, A.A., Raibekas, A. Spontaneously Stochastic Arnold’s Cat. Arnold Math J. 9, 339–357 (2023). https://doi.org/10.1007/s40598-022-00215-0

DOI: https://doi.org/10.1007/s40598-022-00215-0