Abstract

We present a new numerical method for solving fractional delay differential equations (FDDEs), together with its error analysis. We illustrate the applicability and utility of the method by solving various examples. Further, we compare the method with existing methods such as the fractional Adams method (FAM) and the new predictor–corrector method (NPCM) developed by Daftardar-Gejji et al. (Fract Calc Appl Anal 18(2):400–418, 2015). The order of accuracy is shown to be \(O(h^2)\). The new method is more time efficient than FAM and NPCM, and it works for very small values of the order of the fractional derivative, where both FAM and NPCM fail.


1 Introduction

Fractional delay differential equations (FDDEs) are equations involving fractional derivatives and time delays. Unlike ordinary derivatives, fractional derivatives are non-local and can therefore model memory effects, whereas time delays express the dependence on an earlier state of the system. Including fractional derivatives and delays allows real-world problems to be modeled more accurately. FDDEs find numerous applications in physics, chemistry, control systems, electrochemistry, bioengineering, population dynamics and many other areas (Daftardar-Gejji 2014; Epstein and Luo 1991; Davis 2003; Fridman et al. 2000; Kuang 1993). In bioengineering, fractional derivatives improve the understanding of the dynamics that occur in biological tissues. Such understanding is useful in examining nuclear magnetic resonance and magnetic resonance imaging of complex, porous and heterogeneous materials from both living and nonliving systems. In this context, a series of papers in the literature describes the fractional Bloch equation with delay; for recent references, see Bhalekar et al. (2011), Baleanu et al. (2015) and the references therein. Interesting phenomena such as chaos are observed even in one-dimensional fractional delay systems (Willé and Baker 1992). Hence, fractional order delay models are of great importance and have emerged as an interdisciplinary area of research in recent years. Existence and uniqueness theorems for FDDEs are discussed in Maraaba et al. (2008a, b), Morgado et al. (2013) and Wang et al. (2011).

Solving nonlinear FDDEs is computationally demanding because of the non-local nature of fractional derivatives. Developing accurate, time-efficient and computationally economical numerical methods for FDDEs is therefore of primary importance. To this end, Diethelm et al. (2002, 2004) extended the Adams–Bashforth–Moulton method to fractional differential equations (FDEs); the resulting scheme is referred to as the fractional Adams method (FAM). Bhalekar and Daftardar-Gejji (2011a) then used FAM to develop an efficient algorithm for FDEs incorporating a delay term. Daftardar-Gejji et al. (2014) introduced another numerical method for FDEs (NPCM), based on the Daftardar-Gejji and Jafari method (DGJ method) (Daftardar-Gejji and Jafari 2006; Bhalekar and Daftardar-Gejji 2011b; Daftardar-Gejji and Kumar 2018), and later extended NPCM to FDDEs, obtaining a method that is more time efficient than other methods (Daftardar-Gejji et al. 2015). Further, Jhinga and Daftardar-Gejji (2018) introduced the L1-predictor–corrector method (L1-PCM), a combination of the L1 algorithm (Oldham and Spanier 1974) and the DGJ method, for solving FDEs; it was found to be accurate and more time efficient than the existing methods developed using FAM (Bhalekar and Daftardar-Gejji 2011a) and NPCM (Daftardar-Gejji et al. 2015). In the present work, we extend L1-PCM to FDDEs. Although the non-local nature of fractional derivatives reduces the time efficiency of numerical simulations of FDDEs, it is very useful for modelling the memory involved in natural phenomena. The aim of this paper is to develop a method that is more accurate and more time efficient than existing methods. The proposed method converges for very small values of the order \(\alpha \) of the fractional derivative, where existing methods such as FAM and NPCM fail; it also requires the least simulation time and gives better accuracy than these methods. Thus, the proposed method is superior to the existing methods and very useful for solving nonlinear FDDEs.

The paper is organized as follows. Preliminaries and notation are given in Sect. 2. In Sect. 3, a new predictor–corrector formula for FDDEs is derived and its error analysis is carried out. Some illustrative examples are presented in Sect. 4, and conclusions are drawn in Sect. 5.

2 Preliminaries

In this section, we introduce the definitions and notations used throughout this paper (Podlubny 1999; Miller and Ross 1993; Samko et al. 1993).

2.1 Definitions

Definition 1

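The Riemann–Liouville fractional integral of order \(\alpha >0\) of a function \(u \in C[a,b]\) is defined as

\[I_a^{\alpha }u(t)=\frac{1}{\Gamma (\alpha )}\int _a^t (t-s)^{\alpha -1}u(s)\,\mathrm{d}s,\qquad a<t\le b. \tag{1}\]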
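As a numerical illustration of Definition 1, the Python sketch below evaluates \(I_0^{\alpha }u(t)\) by quadrature. It is only an assumed illustration, not part of the method of this paper: the substitution \(w=(t-s)^{\alpha }\) (our choice) removes the weak singularity of the kernel, the midpoint rule is a simple stand-in for any quadrature, and the function name `rl_integral` is hypothetical.

```python
import math


def rl_integral(u, t, alpha, n=20000):
    """Approximate the Riemann-Liouville integral I_0^alpha u(t) for alpha > 0.

    With w = (t - s)**alpha the weakly singular kernel disappears:
        I_0^alpha u(t) = 1/Gamma(alpha + 1) * int_0^{t**alpha} u(t - w**(1/alpha)) dw,
    and the remaining smooth integral is evaluated by the composite midpoint rule.
    """
    upper = t ** alpha
    dw = upper / n
    total = sum(u(t - ((i + 0.5) * dw) ** (1.0 / alpha)) for i in range(n))
    return total * dw / math.gamma(alpha + 1)


# Check against the closed form I_0^alpha s = t**(1 + alpha) / Gamma(2 + alpha).
alpha, t = 0.5, 1.0
approx = rl_integral(lambda s: s, t, alpha)
exact = t ** (1 + alpha) / math.gamma(2 + alpha)
print(approx, exact)
```

For \(u(s)=s\) the closed form \(I_0^{\alpha }s=t^{1+\alpha }/\Gamma (2+\alpha )\) gives a convenient check of the quadrature.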

Definition 2

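The Caputo fractional derivative of order \(\alpha >0\) of a function \(u\in C^m[a,b]\), where \(m \in \mathbb {N}\) and \(m-1<\alpha \le m\), is defined as

\[{}^cD_a^{\alpha }u(t)=\begin{cases} I_a^{m-\alpha }D^m u(t)=\dfrac{1}{\Gamma (m-\alpha )}\displaystyle \int _a^t (t-s)^{m-\alpha -1}D^m u(s)\,\mathrm{d}s, & m-1<\alpha <m,\\[1ex] D^m u(t), & \alpha =m, \end{cases} \tag{2}\]

where \(D^m\) denotes the ordinary mth-order derivative.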
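For \(0<\alpha <1\) (so \(m=1\)), Definition 2 reduces to \({}^cD_0^{\alpha }u=I_0^{1-\alpha }Du\). The sketch below evaluates this numerically, assuming the first derivative \(u'\) is available in closed form; the function name `caputo_01` and the substitution \(w=(t-s)^{1-\alpha }\) are our own illustrative choices.

```python
import math


def caputo_01(du, t, alpha, n=20000):
    """Caputo derivative of order 0 < alpha < 1 at time t (Definition 2 with m = 1).

    Uses cD_0^alpha u(t) = I_0^{1-alpha}(Du)(t), where du is the known first
    derivative of u.  The substitution w = (t - s)**(1 - alpha) removes the weak
    singularity, and the composite midpoint rule evaluates the smooth integral.
    """
    beta = 1.0 - alpha
    upper = t ** beta
    dw = upper / n
    total = sum(du(t - ((i + 0.5) * dw) ** (1.0 / beta)) for i in range(n))
    return total * dw / math.gamma(beta + 1)  # Gamma(beta + 1) = Gamma(2 - alpha)


# u(t) = t**2 has cD^alpha u(t) = 2 t**(2 - alpha) / Gamma(3 - alpha).
alpha = 0.5
approx = caputo_01(lambda s: 2.0 * s, 1.0, alpha)
exact = 2.0 / math.gamma(3 - alpha)
print(approx, exact)
```

Passing a zero derivative returns exactly zero, reflecting the fact that the Caputo derivative of a constant vanishes.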

2.2 DGJ method

Daftardar-Gejji and Jafari (2006) introduced a decomposition method (DGJ method) for solving functional equations of the form

\[u=g+N(u), \tag{3}\]

where g is a known function and N is a nonlinear operator from a Banach space B into itself.

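In this method, the solution u of Eq. (3) is assumed to be of the form

\[u=\sum _{i=0}^{\infty }u_i.\]

The nonlinear operator N(u) is decomposed as

\[N\Bigl (\sum _{i=0}^{\infty }u_i\Bigr )=N(u_0)+\sum _{i=1}^{\infty }\Bigl \{N\Bigl (\sum _{k=0}^{i}u_k\Bigr )-N\Bigl (\sum _{k=0}^{i-1}u_k\Bigr )\Bigr \}=\sum _{i=0}^{\infty }G_i,\]

where \(G_0=N(u_0)\) and \(G_i=\Bigl \{N\left( \sum _{k=0}^{i}u_k\right) -N\left( \sum _{k=0}^{i-1}u_k\right) \Bigr \}, \ i \ge 1.\)

Equation (3) then takes the form

\[\sum _{i=0}^{\infty }u_i=g+\sum _{i=0}^{\infty }G_i.\]

The terms \(u_i,\) \(i=0,1,\ldots \) are obtained by the following recurrence relation:

\[u_0=g,\qquad u_{m+1}=G_m,\quad m=0,1,2,\ldots \]

Then

\[u_1+u_2+\cdots +u_{m+1}=N(u_0+u_1+\cdots +u_m),\quad m=0,1,2,\ldots ,\]

and

\[u=\sum _{i=0}^{\infty }u_i=g+N\Bigl (\sum _{i=0}^{\infty }u_i\Bigr ).\]

The k-term approximation is defined as

\[u\approx u_0+u_1+\cdots +u_{k-1}.\]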
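The DGJ recurrence \(u_0=g\), \(u_1=N(u_0)\), \(u_{m+1}=N(u_0+\cdots +u_m)-N(u_0+\cdots +u_{m-1})\) can be sketched for a scalar equation as follows. The helper `dgj_terms` and the toy equation \(u=0.5+u^2/4\) (root \(2-\sqrt{2}\)) are hypothetical and chosen only for illustration; note that the partial sums satisfy \(u_0+\cdots +u_{m+1}=g+N(u_0+\cdots +u_m)\), so they coincide with a fixed-point iteration.

```python
import math


def dgj_terms(g, N, k):
    """First k terms u_0, ..., u_{k-1} of the DGJ decomposition of u = g + N(u).

    u_0 = g,  u_1 = N(u_0),
    u_{m+1} = N(u_0 + ... + u_m) - N(u_0 + ... + u_{m-1})  for m >= 1.
    """
    terms = [g]
    prev_sum = None  # u_0 + ... + u_{m-1}
    cur_sum = g      # u_0 + ... + u_m
    for _ in range(k - 1):
        nxt = N(cur_sum) if prev_sum is None else N(cur_sum) - N(prev_sum)
        terms.append(nxt)
        prev_sum, cur_sum = cur_sum, cur_sum + nxt
    return terms


# Toy equation u = 0.5 + u**2 / 4 with root 2 - sqrt(2) (chosen only for illustration).
N = lambda u: u * u / 4.0
three_term = sum(dgj_terms(0.5, N, 3))  # k = 3 term approximation
many_terms = sum(dgj_terms(0.5, N, 40))
print(three_term, many_terms, 2.0 - math.sqrt(2.0))
```

Here the three-term approximation is \(0.5+0.0625+0.0166015625=0.5791015625\), already close to the root \(0.5857864\ldots \).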

3 Results

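In this section, we derive the new method for solving fractional delay differential equations (FDDEs). Consider the following FDDE:

\[{}^cD_0^{\alpha }u(t)=f\bigl (t,u(t),u(t-\tau )\bigr ),\qquad t\in [0,T],\quad 0<\alpha <1, \tag{10}\]

\[u(t)=\phi (t),\qquad t\in [-\tau ,0], \tag{11}\]

where \(\tau >0\) is the delay and \(\phi \) is a prescribed initial function.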

Consider a uniform grid \(\{t_n=nh: n=-K,-K+1,\ldots ,-1,0,1,\ldots ,N\}\), where K and N are integers such that \(N=T/h\) and \(K=\tau /h\).

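We use the L1 algorithm (Oldham and Spanier 1974) for the numerical evaluation of fractional derivatives of order \(\alpha \), \(0<\alpha <1\):

\[{}^cD_0^{\alpha }u(t_n)\approx \sum _{j=0}^{n-1}b_{n-j-1}\bigl (u(t_{j+1})-u(t_j)\bigr ), \tag{12}\]

where

\[b_j=\frac{h^{-\alpha }}{\Gamma (2-\alpha )}\bigl [(j+1)^{1-\alpha }-j^{1-\alpha }\bigr ],\qquad j=0,1,\ldots ,n-1. \tag{13}\]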
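The L1 formula with the weights \(b_j=\frac{h^{-\alpha }}{\Gamma (2-\alpha )}[(j+1)^{1-\alpha }-j^{1-\alpha }]\) can be sketched in a few lines of Python. The function names are hypothetical. The check uses \(u(t)=t^2\), whose Caputo derivative \(2t^{2-\alpha }/\Gamma (3-\alpha )\) is known, and the observed order from two step sizes should come out close to \(2-\alpha \), the known accuracy of the L1 formula for smooth functions.

```python
import math


def l1_weights(n, h, alpha):
    """b_0, ..., b_{n-1}: b_j = h**(-alpha)/Gamma(2-alpha) * ((j+1)**(1-alpha) - j**(1-alpha))."""
    c = h ** (-alpha) / math.gamma(2 - alpha)
    return [c * ((j + 1) ** (1 - alpha) - j ** (1 - alpha)) for j in range(n)]


def l1_caputo(u_vals, h, alpha):
    """L1 approximation of cD_0^alpha u(t_n), where u_vals = [u(t_0), ..., u(t_n)]."""
    n = len(u_vals) - 1
    b = l1_weights(n, h, alpha)
    return sum(b[n - j - 1] * (u_vals[j + 1] - u_vals[j]) for j in range(n))


# u(t) = t**2 on [0, 1]; the exact Caputo derivative at t = 1 is 2 / Gamma(3 - alpha).
alpha = 0.5
exact = 2.0 / math.gamma(3 - alpha)
errors = []
for n in (100, 200):
    h = 1.0 / n
    vals = [(j * h) ** 2 for j in range(n + 1)]
    errors.append(abs(l1_caputo(vals, h, alpha) - exact))
observed_order = math.log2(errors[0] / errors[1])
print(errors, observed_order)  # observed order should be close to 2 - alpha
```

Because the L1 formula replaces u by its piecewise linear interpolant, it is exact for linear functions; this gives a second, independent check.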

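Suppose we have already calculated the approximations of \(u(t_j)\), \(j=-K,-K+1,\ldots ,-1,0,1,\ldots ,n-1\), and want to calculate the nth approximation of \(u(t_n)\). Approximating \({}^cD_0^{\alpha }u(t_n)\) by formula (12) and using Eq. (10), we get

\[\sum _{j=0}^{n-1}b_{n-j-1}(u_{j+1}-u_j)=f(t_n,u_n,u_{n-K}), \tag{14}\]

where \(u_k\) denotes the approximate value of the solution of (10) at \(t=t_k\); note that \(u(t_n-\tau )=u(t_{n-K})\) because \(\tau =Kh\). Equation (14) can be rewritten as

\[b_0(u_n-u_{n-1})+\sum _{j=0}^{n-2}b_{n-j-1}(u_{j+1}-u_j)=f(t_n,u_n,u_{n-K}). \tag{15}\]

After rearranging the terms, Eq. (15) takes the following form:

\[u_n=u_{n-1}-\frac{1}{b_0}\sum _{j=0}^{n-2}b_{n-j-1}(u_{j+1}-u_j)+\frac{1}{b_0}f(t_n,u_n,u_{n-K}). \tag{16}\]

Using (13), we get

\[u_n=u_{n-1}-\sum _{j=0}^{n-2}\bigl [(n-j)^{1-\alpha }-(n-j-1)^{1-\alpha }\bigr ](u_{j+1}-u_j)+\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n,u_{n-K}). \tag{17}\]

Equation (17) takes the form

\[u_n=a_{n-1}u_0+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})u_j+\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n,u_{n-K}), \tag{18}\]

where \(a_k:=(k+1)^{1-\alpha }-k^{1-\alpha }\). Note that the \(a_k\)'s have the following properties:

\[a_0=1,\qquad a_k>0,\qquad a_k>a_{k+1}\ (k\ge 0),\qquad a_k\rightarrow 0 \text{ as } k\rightarrow \infty .\]

Consequently, the coefficients of \(u_0,u_1,\ldots ,u_{n-1}\) in (18) are positive and satisfy \(a_{n-1}+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})=1\).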
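The properties of \(a_k=(k+1)^{1-\alpha }-k^{1-\alpha }\) (namely \(a_0=1\), positivity and strict decrease) imply that, when the L1 equation is written as \(u_n=a_{n-1}u_0+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})u_j+\Gamma (2-\alpha )h^{\alpha }f\), the memory term is a convex combination of past values. The sketch below checks this numerically for a few values of \(\alpha \), including a very small one; the function names are hypothetical, and a floating-point check is of course not a proof.

```python
def a_coeffs(n, alpha):
    """a_k = (k + 1)**(1 - alpha) - k**(1 - alpha) for k = 0, ..., n."""
    return [(k + 1) ** (1 - alpha) - k ** (1 - alpha) for k in range(n + 1)]


def check_a_properties(n, alpha, tol=1e-10):
    """Numerically check the properties of a_k used in the scheme, for 0 < alpha < 1."""
    a = a_coeffs(n, alpha)
    starts_at_one = a[0] == 1.0
    positive = all(x > 0.0 for x in a)
    decreasing = all(a[k] > a[k + 1] for k in range(n))
    # Coefficients of u_0, u_1, ..., u_{n-1} in the rearranged L1 equation
    weights = [a[n - 1]] + [a[n - j - 1] - a[n - j] for j in range(1, n)]
    convex = all(w > 0.0 for w in weights) and abs(sum(weights) - 1.0) < tol
    return starts_at_one and positive and decreasing and convex


for alpha in (0.001, 0.5, 0.999):
    print(alpha, check_a_properties(500, alpha))
```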

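It is important to note that Eq. (18) is of the form of Eq. (3) if we identify \(u=u_n\),

\[g=a_{n-1}u_0+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})u_j\]

and

\[N(u_n)=\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n,u_{n-K}). \tag{19}\]

Here g depends only on the previously computed values \(u_0,\ldots ,u_{n-1}\), and the delayed value \(u_{n-K}\) is already known because \(K\ge 1\).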

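Hence, we can employ the DGJ method to obtain an approximate solution. The DGJ algorithm yields the approximate value of \(u_n\) as follows:

\[u_{n,0}=g, \tag{20}\]

\[u_{n,1}=N(u_{n,0})=\Gamma (2-\alpha )h^{\alpha }f(t_n,u_{n,0},u_{n-K}), \tag{21}\]

\[u_{n,2}=N(u_{n,0}+u_{n,1})-N(u_{n,0}). \tag{22}\]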

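The three-term approximation is \(u_n \approx u_{n,0}+u_{n,1}+u_{n,2}=g+N(u_{n,0}+u_{n,1})\). The delay term is given by \(u(t_n-\tau )=u(t_{n-K})\approx u_{n-K}\), where, for \(n\le K\), \(u_{n-K}\) is the value of the prescribed initial function at \(t_{n-K}\in [-\tau ,0]\).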

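Equations (20)–(22) constitute a new predictor–corrector scheme, referred to as L1-PCM, for solving FDDEs:

\[u_n^p=a_{n-1}u_0+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})u_j, \tag{23}\]

\[z_n^p=\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n^p,u_{n-K}), \tag{24}\]

\[u_n^c=u_n^p+\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n^p+z_n^p,u_{n-K}), \tag{25}\]

where \(u_n^p\), \(z_n^p\) are predictors, \(u_n^c\) is the corrector, and \(u_j\) (\(j<n\)) denote the previously computed corrected values.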
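The Python sketch below implements the L1-PCM predictor–corrector steps described above for a scalar FDDE \({}^cD_0^{\alpha }u(t)=f(t,u(t),u(t-\tau ))\) with history \(u=\phi \) on \([-\tau ,0]\). It writes the first predictor as \(u_n^p=a_{n-1}u_0+\sum _{j=1}^{n-1}(a_{n-j-1}-a_{n-j})u_j\) (the L1 memory term after summation by parts), then \(z_n^p=\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n^p,u_{n-K})\) and \(u_n^c=u_n^p+\Gamma (2-\alpha )h^{\alpha }f(t_n,u_n^p+z_n^p,u_{n-K})\). It assumes \(\tau /h\) and \(T/h\) are integers, and it is an unoptimized sketch (the memory sum makes it \(O(N^2)\)), not the authors' code. The function names and the manufactured test problem, whose exact solution is \(u(t)=t^2-t\), are hypothetical; this problem is chosen for illustration and is not claimed to be the equation of Example 2.

```python
import math


def l1_pcm(f, phi, alpha, tau, T, h):
    """Three-term L1-PCM sketch for cD_0^alpha u(t) = f(t, u(t), u(t - tau)), 0 < alpha < 1.

    The history is u(t) = phi(t) on [-tau, 0].  Assumes tau/h and T/h are integers
    and tau >= h, so the delayed value u(t_n - tau) = u_{n-K} is always known.
    Returns [u_0, u_1, ..., u_N] on the grid t_n = n*h.
    """
    K, N = round(tau / h), round(T / h)
    hist = [phi((i - K) * h) for i in range(K + 1)]   # u_{-K}, ..., u_0
    a = [(k + 1) ** (1 - alpha) - k ** (1 - alpha) for k in range(N + 1)]
    q = math.gamma(2 - alpha) * h ** alpha            # factor Gamma(2 - alpha) h^alpha
    u = [hist[K]]                                     # u_0 = phi(0)
    for n in range(1, N + 1):
        t = n * h
        m = n - K
        v = u[m] if m >= 0 else hist[m + K]           # delay term u_{n-K}
        # First predictor: convex combination of the previously corrected values
        up = a[n - 1] * u[0] + sum((a[n - j - 1] - a[n - j]) * u[j] for j in range(1, n))
        zp = q * f(t, up, v)                          # second predictor z_n^p
        u.append(up + q * f(t, up + zp, v))           # corrector u_n^c
    return u


# Manufactured test problem (for illustration only) with exact solution t**2 - t.
ALPHA, TAU = 0.8, 0.5


def f_test(t, u, v):
    return (2.0 * t ** (2 - ALPHA) / math.gamma(3 - ALPHA)
            - t ** (1 - ALPHA) / math.gamma(2 - ALPHA)
            + v - u + 2.0 * TAU * t - TAU ** 2 - TAU)


def exact(t):
    return t * t - t


def max_error(h, T=1.0):
    u = l1_pcm(f_test, exact, ALPHA, TAU, T, h)
    return max(abs(u[n] - exact(n * h)) for n in range(len(u)))


print(max_error(0.02), max_error(0.01))
```

The test right-hand side is obtained by substituting \(u(t)=t^2-t\) into the equation: the Caputo derivative terms reproduce \({}^cD_0^{\alpha }u\), and the remaining terms cancel because \(u(t-\tau )-u(t)=-2\tau t+\tau ^2+\tau \). Halving h should reduce the maximum error.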

3.1 Error analysis

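In this section, we carry out the error analysis of the proposed method. A detailed error analysis of the L1 method is given in Langlands and Henry (2005), Lin and Xu (2007) and Sun and Wu (2006); for sufficiently smooth u it yields

\[\Bigl |{}^cD_0^{\alpha }u(t_n)-\sum _{j=0}^{n-1}b_{n-j-1}\bigl (u(t_{j+1})-u(t_j)\bigr )\Bigr |\le C'h^{2-\alpha }, \tag{26}\]

where \(C'\) is a positive constant. Define \(r_n\) as

\[r_n:={}^cD_0^{\alpha }u(t_n)-\sum _{j=0}^{n-1}b_{n-j-1}\bigl (u(t_{j+1})-u(t_j)\bigr ).\]

In view of Eq. (26),

\[|r_n|\le C'h^{2-\alpha }. \tag{27}\]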

Lemma 1

(Lin and Liu 2007) Let \(a,b>0\) and \(\{\zeta _i\}\) satisfy

then

where \(M_0=\max (|\zeta _0|,|\zeta _1|,\ldots ,|\zeta _{k-1}|).\)

Further, we modify Grönwall's inequality to obtain an error estimate for the proposed method, as follows.

Lemma 2

Suppose that \(c_{j,n}=(n-j)^{1-\alpha }\) (\(j=1,2,\ldots ,n-1\)) and \(c_{j,n}=0\) for \(j \ge n,\) \(0<\alpha <1, \ h,M,T>0, \ kh \le T\) and k is a positive integer. If

then

where C is a positive constant independent of h and k.

Proof

Since \(0<\alpha <1\) and \(n-j\le T/h\) (as \(kh\le T\)), we have \(c_{j,n}=(n-j)^{1-\alpha }\le (T/h)^{1-\alpha }=T^{1-\alpha }h^{\alpha -1}\). Thus, we have

Using Grönwall's inequality (Lemma 1) and the fact that \(h< h^{\alpha -1}\) for \(0<\alpha <1\) and \(0<h<1\), the result follows. \(\square \)

Note that using equations (10), (19), (23) and (27), the error equation can be written as

Theorem 1

Let f(t, u, v) satisfy a Lipschitz condition in the variables u and v with Lipschitz constants \(L_1\) and \(L_2\), respectively. Let u(t) be the exact solution of the IVP (10), and let \(u_k\) denote the approximate solution at \(t=t_k\) obtained from (25). Then, for \(0<\alpha <1\) and sufficiently small h,

\[\max _{0\le k\le N}|u(t_k)-u_k|=O(h^2),\]

where \(N=\lfloor {T/h}\rfloor .\)

Proof

We will show that, for sufficiently small h,

\[|u(t_k)-u_k|\le Ch^2\]

for all \(k \in \{0,1,\ldots ,N\}\), where C is a suitable constant. The proof is by mathematical induction. The base case is assumed to hold. Suppose the result holds for \(k=1,2,\ldots ,n-1\); we prove that it holds for \(k=n\). Let \(e_k=u(t_k)-u_k\) and \(e_k^p=u(t_k)-u_k^p\). The error equation can be written as

Further, we observe that

Using Lemma 2 and Eq. (31) in Eq. (32), and applying the induction hypothesis to the last summand, we get

The last summand in the bracket can be made less than or equal to C / 2 by choosing h sufficiently small, and the sum of the remaining terms in the bracket can be made less than or equal to C / 2 by choosing a suitable \(C>2C'\). Hence, this bound does not exceed \(Ch^2\). Therefore,

\[|u(t_n)-u_n|\le Ch^2,\]

where C is some constant. \(\square \)

Remark: For \(0<\alpha <1\), the order of accuracy of FAM is \(O(h^{1+\alpha })\), whereas for NPCM the order varies between \(O(h^{1+\alpha })\) and \(O(h^{2-\alpha })\). The proposed method has order of accuracy \(O(h^2)\). Since \(2>1+\alpha \) and \(2>2-\alpha \) for \(0<\alpha <1\), the new method attains a higher order of accuracy than both FAM and NPCM.
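Orders of accuracy such as those in the Remark are commonly checked empirically by computing the observed order \(p\approx \log (e_1/e_2)/\log (h_1/h_2)\) from the errors \(e_1,e_2\) at two step sizes \(h_1,h_2\). The short sketch below shows this computation together with the orders quoted in the Remark; the helper names are hypothetical, the quoted orders are copied from the Remark rather than recomputed, and the errors used are synthetic values of the form \(Ch^2\), not results from this paper.

```python
import math


def observed_order(h_coarse, err_coarse, h_fine, err_fine):
    """Estimate p in err ~ C * h**p from errors at two step sizes."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)


def quoted_orders(alpha):
    """Orders of accuracy stated in the Remark, for 0 < alpha < 1."""
    npcm = tuple(sorted((1 + alpha, 2 - alpha)))  # NPCM lies between these two orders
    return {"FAM": 1 + alpha, "NPCM": npcm, "L1-PCM": 2.0}


# Synthetic errors behaving exactly like C * h**2 give an observed order of 2.
print(observed_order(0.1, 3.0 * 0.1 ** 2, 0.05, 3.0 * 0.05 ** 2))
print(quoted_orders(0.25))
```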

4 Illustrations

We present some examples solved by the proposed method to demonstrate its applicability.

Example 1

Consider a fractional order DDE given in Willé and Baker (1992)

We take \(h=0.01\). Figures 1 and 2 present the solution u(t) of system (33)–(34) for \(\alpha =0.96\) and \(\alpha =0.84\), respectively, whereas Figs. 3 and 4 present phase portraits of u(t) versus \(u(t-2)\) for \(\alpha =0.96\) and \(\alpha =0.84\), respectively. We also solve this example for small values of \(\alpha \), such as \(\alpha =0.01\) and \(\alpha =0.001\), and observe that FAM and the three-term NPCM do not converge in these cases, whereas the new method converges. These observations are presented in Tables 1 and 2.

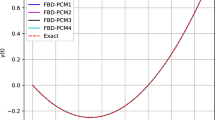

Example 2

Consider the following delay fractional order equation:

The exact solution is \(u(t)=t^2-t\). The absolute and relative errors of the proposed method and the existing methods are compared in Tables 3 and 4, respectively, and the CPU times required by the three methods are compared in Table 5. The proposed method is observed to be more accurate and more time efficient than FAM and the three-term NPCM.
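When tabulating absolute and relative errors against the exact solution \(u(t)=t^2-t\), note that this solution vanishes at \(t=0\) and \(t=1\), so the relative error is undefined there. The hypothetical helper below returns NaN in that case; the approximate values used are placeholders to exercise the function, not values from Tables 3 and 4.

```python
import math


def error_table(times, approx, exact):
    """Rows (t, absolute error, relative error); relative error is NaN where exact(t) == 0."""
    rows = []
    for t, u in zip(times, approx):
        ue = exact(t)
        abs_err = abs(u - ue)
        rel_err = abs_err / abs(ue) if ue != 0.0 else math.nan
        rows.append((t, abs_err, rel_err))
    return rows


# Placeholder approximate values, used only to exercise the function.
rows = error_table([0.5, 1.0], [-0.24, 0.001], lambda t: t * t - t)
for row in rows:
    print(row)
```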

Example 3

Consider the following fractional order Ikeda equation (Jun-Guo 2006):

We have taken \(h=0.001\). The solutions u(t) of the system (37)–(38) for \(\alpha =0.8,\tau =0.15\) and \(\alpha =0.8,\tau =0.1\) are shown in Figs. 5 and 6, respectively, whereas phase portraits are shown in Figs. 7 and 8 for the same values. It is observed that the system shows chaotic behaviour.

Example 4

Consider the following fractional version of the delay differential equation studied in Umeki (2012):

Phase portraits obtained with \(h=0.001\) are shown in Figs. 9 and 10. Chaotic behaviour is observed for \(\alpha =0.7\), whereas stable orbits are obtained for \(\alpha =0.9\). Further, the times required by the proposed method, FAM and NPCM are compared in Table 6; the proposed method is observed to be more time efficient than FAM and NPCM.

Example 5

Consider the fractional order version of the model of the 4-year life cycle of a population of lemmings (Tavernini 1996):

The solution of the system (41)–(42) for \(\alpha =0.99\) with \(h=0.001\) is shown in Fig. 11, and the phase portraits are shown in Figs. 12, 13, 14, 15 and 16. The phase portraits stretch towards the positive side of the axes. The graphs obtained by the new method are the same as those obtained by FAM and the three-term NPCM in Daftardar-Gejji et al. (2015).

5 Conclusions

In this paper, a new predictor–corrector method has been developed for solving nonlinear fractional delay differential equations (FDDEs), and its error analysis has been carried out. Various illustrative examples are solved to demonstrate the applicability of the method. The method is observed to be accurate and more time efficient than the existing numerical methods for FDDEs based on FAM and NPCM. Further, L1-PCM converges for very small values of \(\alpha \), whereas FAM and the three-term NPCM diverge.

References

Baleanu D, Magin RL, Bhalekar S, Daftardar-Gejji V (2015) Chaos in the fractional order nonlinear Bloch equation with delay. Commun Nonlinear Sci Numer Simul 25(1–3):41–49

Bhalekar S, Daftardar-Gejji V (2011a) A predictor–corrector scheme for solving nonlinear delay differential equations of fractional order. J Fract Calc Appl 1(5):1–9

Bhalekar S, Daftardar-Gejji V (2011b) Convergence of the new iterative method. Int J Differ Equ 2011:989065. https://doi.org/10.1155/2011/989065

Bhalekar S, Daftardar-Gejji V, Baleanu D, Magin R (2011) Fractional Bloch equation with delay. Comput Math Appl 61(5):1355–1365

Daftardar-Gejji V (2014) Fractional calculus: theory and applications. Narosa Publishing House, New Delhi

Daftardar-Gejji V, Jafari H (2006) An iterative method for solving nonlinear functional equations. J Math Anal Appl 316(2):753–763

Daftardar-Gejji V, Kumar M (2018) New iterative method: a review. In: Bhalekar S (ed) Frontiers in fractional calculus, vol 1. Bentham Science Publishers, Sharjah, p 233

Daftardar-Gejji V, Sukale Y, Bhalekar S (2014) A new predictor–corrector method for fractional differential equations. Appl Math Comput 244:158–182

Daftardar-Gejji V, Sukale Y, Bhalekar S (2015) Solving fractional delay differential equations: a new approach. Fract Calc Appl Anal 18(2):400–418

Davis L (2003) Modifications of the optimal velocity traffic model to include delay due to driver reaction time. Phys A Stat Mech Appl 319:557–567

Diethelm K, Ford NJ, Freed AD (2002) A predictor–corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn 29(1):3–22

Diethelm K, Ford NJ, Freed AD (2004) Detailed error analysis for a fractional Adams method. Numer Algorithms 36(1):31–52

Epstein IR, Luo Y (1991) Differential delay equations in chemical kinetics. Nonlinear models: the cross-shaped phase diagram and the oregonator. J Chem Phys 95(1):244–254

Fridman E, Fridman L, Shustin E (2000) Steady modes in relay control systems with time delay and periodic disturbances. J Dyn Syst Meas Control 122(4):732–737

Jhinga A, Daftardar-Gejji V (2018) A new finite-difference predictor–corrector method for fractional differential equations. Appl Math Comput 336:418–432

Jun-Guo L (2006) Chaotic dynamics of the fractional-order Ikeda delay system and its synchronization. Chin Phys 15(2):301

Kuang Y (1993) Delay differential equations: with applications in population dynamics, vol 191. Academic Press, London

Langlands T, Henry BI (2005) The accuracy and stability of an implicit solution method for the fractional diffusion equation. J Comput Phys 205(2):719–736

Lin R, Liu F (2007) Fractional high order methods for the nonlinear fractional ordinary differential equation. Nonlinear Anal Theory Methods Appl 66(4):856–869

Lin Y, Xu C (2007) Finite difference/spectral approximations for the time-fractional diffusion equation. J Comput Phys 225(2):1533–1552

Maraaba T, Baleanu D, Jarad F (2008a) Existence and uniqueness theorem for a class of delay differential equations with left and right Caputo fractional derivatives. J Math Phys 49(8):083507

Maraaba TA, Jarad F, Baleanu D (2008b) On the existence and the uniqueness theorem for fractional differential equations with bounded delay within Caputo derivatives. Sci China Ser A Math 51(10):1775–1786

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Morgado ML, Ford NJ, Lima PM (2013) Analysis and numerical methods for fractional differential equations with delay. J Comput Appl Math 252:159–168

Oldham K, Spanier J (1974) The fractional calculus: theory and applications of differentiation and integration to arbitrary order, vol 111. Elsevier, Amsterdam

Podlubny I (1999) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications, vol 198. Elsevier, Amsterdam

Samko SG, Kilbas AA, Marichev OI et al (1993) Fractional integrals and derivatives. Theory and applications, vol 1. Gordon and Breach, Yverdon

Sun Z-Z, Wu X (2006) A fully discrete difference scheme for a diffusion-wave system. Appl Numer Math 56(2):193–209

Tavernini L (1996) Continuous-time modeling and simulation, vol 2. CRC Press, Boca Raton

Umeki M (2012) Chaos in the Battisti–Hirst original model for El Niño southern oscillation. Theor Appl Mech Jpn 60:21–27

Wang Z, Huang X, Shi G (2011) Analysis of nonlinear dynamics and chaos in a fractional order financial system with time delay. Comput Math Appl 62(3):1531–1539

Willé DR, Baker CT (1992) DELSOL—a numerical code for the solution of systems of delay-differential equations. Appl Numer Math 9(3–5):223–234

Acknowledgements

A. Jhinga acknowledges Council of Scientific and Industrial Research, New Delhi, India, for Junior Research Fellowship (09/137(0568)/2017-EMR-I).


Additional information

Communicated by Vasily E. Tarasov.


About this article

Cite this article

Jhinga, A., Daftardar-Gejji, V. A new numerical method for solving fractional delay differential equations. Comp. Appl. Math. 38, 166 (2019). https://doi.org/10.1007/s40314-019-0951-0


DOI: https://doi.org/10.1007/s40314-019-0951-0