Abstract

This article reviews the self-supervised learning methods for CT image denoising and reconstruction. Currently, deep learning has become a dominant tool in medical imaging as well as computer vision. In particular, self-supervised learning approaches have attracted great attention as a technique for learning CT images without clean/noisy references. After briefly reviewing the fundamentals of CT image denoising and reconstruction, we examine the progress of deep learning in CT image denoising and reconstruction. Finally, we focus on the theoretical and methodological evolution of self-supervised learning for image denoising and reconstruction.


1 Introduction

With the emergence of large-scale training data and convolutional neural networks (CNNs) [1], deep learning has become a dominant methodology in computer vision over the last decade [2,3,4,5,6,7]. Deep learning methods enable CNNs to efficiently and effectively solve various image processing problems such as super-resolution [8] and image synthesis [9]. Among such applications, image denoising has also been extensively studied in the era of deep learning. The great success of deep learning-based denoisers has consequently stimulated studies on medical image restoration, including CT image denoising.

Image denoising is one of the oldest image processing tasks. Over several decades, many denoising methods based on Bayesian estimation have introduced image priors such as \(L_2\)-regularization, total-variation, wavelet, partial differential equations, sparse representations, patch-based models, and low-rank assumptions. Well-established theories and applications have led to high-performance denoisers, while they have also fostered the belief that the theoretical bound of denoising capacity is close to being achieved [10]. Recent advances in deep learning-based image denoising have challenged this belief with dramatically improved denoising performance. Although classical image denoisers are limited by inherent inflexibility and long computation time, deep learning is capable of producing highly adaptive denoisers with fast and accurate image processing. For better denoising performance, novel architectures of backbone networks [11,12,13,14] as well as their deployment strategy and applications [15,16,17,18] have been actively explored.

Computed tomography (CT) is typically the first imaging option to produce tomographic images and to examine the internal structures of patients. Although CT scans produce rapid and precise tomographic images, there is concern about the radiation exposure associated with these procedures. Low-dose CT (LDCT) protocols can reduce the radiation dose to patients, but can also reduce the signal-to-noise ratio (SNR) of the projection data. The resultant noisy or blurred CT images further negatively affect diagnostic accuracy, as small changes in structures or textures can lead to a different diagnosis. In order to produce high-quality CT images from the noisy projection data, the trade-off between radiation dose and noise level in acquisition data must be addressed.

In addition to radiation dose, many physical factors, including CT geometry, detector characteristics, motion artifacts, and reconstruction algorithms, are related to the quality of CT images. Although an inverse problem aims to undo such degradation processes, a perfect inversion of the various degradation components in a single reconstruction process is challenging to implement. Instead of formulating and solving the inverse problems, more practical and tractable postprocessing strategies such as image denoising after reconstructing images have also been proposed to enhance the quality of medical images.

For LDCT imaging, advances in deep learning-based image denoising have been adopted in CT image denoising tasks [19,20,21,22,23,24]. Most studies on LDCT image denoising have focused on training CNN-based denoisers in a supervised learning manner with noisy LDCT images and the paired normal-dose CT (NDCT) images. In order to acquire datasets for supervised learning, paired LDCT/NDCT images have been obtained by generating LDCT projections from NDCT projections [25] or by scanning animals/cadavers/patients [19, 21, 22]. However, a large-scale dataset with LDCT/NDCT image pairs of real patients is difficult to acquire, since repeated CT scans deliver an additional and unnecessary radiation dose to the patients. Although existing supervised learning approaches have shown outstanding denoising performance, deep neural networks developed and evaluated with a small pool of LDCT/NDCT image pairs may not generalize across the variety of patients, scanners, and protocols encountered in clinical practice.

As an alternative to methods based on supervised learning, self-supervised learning methods, which do not require clean training targets, have attracted great attention [26, 27]. Typically, self-supervised learning methods first partition the noisy input into blind spots and their complements, and then learn to recover the blind spots by using the complements as contexts. Although self-supervised learning-based image denoising methods effectively reduce the need for input-target pairs in the training process, the inference process needs to be further improved for high quality of the resultant images [28].

In this article, we first introduce CT image denoising and reconstruction problems along with common metrics to assess the quality of the reconstructed images. We also survey deep learning-based image denoising methods as the learning paradigms shift from supervised learning to self-supervised learning. Then, we review deep learning-based CT image denoising and reconstruction with an emphasis on self-supervised learning without ground truth.

2 CT noise and image reconstruction

2.1 CT noise models

For a CT scan, the object is represented as a spatial distribution of the linear attenuation coefficients [29]. Each x-ray measurement represents the line integral of the attenuation coefficients of the object along a particular x-ray path. The measurements form a projection view, and a set of projections is measured at uniformly spaced angles that cover a full or half circular scan. The inverse problem imposed on the tomographic reconstruction is typically defined as follows:

\[{\textbf{b}} = A({\textbf{x}}) + \varvec{\eta }, \tag{1}\]

where \({\textbf{x}}\in {\mathbb {R}}^n\) denotes the unknown attenuation coefficients, \({\textbf{b}} \in {\mathbb {R}}^m\) denotes the measured projections, a mapping function \(A:{\mathbb {R}}^n \rightarrow {\mathbb {R}}^m\) models the forward projection process, and \(\varvec{\eta }\in {\mathbb {R}}^m\) denotes the additive noise. In order to simplify our discussion, we assume a 2D object and the corresponding projections, although a similar discussion can be extended to 3D objects and projections.

With the assumptions of an ideal object and CT geometry, the forward projection model is simplified to the system matrix \(A\in {\mathbb {R}}^{m\times n}\). For ideal geometry, the x-ray source (focal spot) is infinitely small and can be assumed to be a point source. Although the detectors are spaced in the derivation of the reconstruction filters, the shape and dimension of each detector cell are also ignored by assuming that the interactions between the x-ray photons and detector cell take place at the center of the detector cell. As a result, the inverse problem in (1) can be reduced to the following linear equation:

\[{\textbf{b}} = A{\textbf{x}} + \varvec{\eta }, \tag{2}\]

where the error vector \(\varvec{\eta }\) accounts for measurement errors and noise.

Due to the finite sizes of the focal spot, the detector cells, and the reconstructed image pixels, the simple linear approximation of the CT system affects the quality of reconstructed images. In addition, various sources of noise including quantum and electronic noise further degrade image quality. From the x-ray physics model [29], line integrals \({\textbf{b}}\) can be measured by the following:

\[b_i = \ln \left( \frac{N_0}{N_i}\right) , \tag{3}\]

where \(N_0\) and \(N_i\) are blank photon count and measured photon count along line \(l_i\), respectively. The incident photon count is often modeled by \(N_i = N_{q_i} + N_{e_i}\) with the quantum number \(N_{q_i}\sim \textrm{Poisson} \left( N_0 \exp \left( -\left[ A{\textbf{x}} \right] _i\right) \right)\) and electronic noise of detector cell \(N_{e_i} \sim {\mathcal {N}}(0,\sigma _e^2)\). If \(N_i\)’s are independent, the covariance matrix of \({\textbf{b}}\) is given by:

where

2.2 Analytical image reconstruction

In the case of noiseless observations, i.e., \(\varvec{\eta } = {\textbf{0}}\), the linear model in (2) becomes

\[{\textbf{b}} = A{\textbf{x}}, \tag{6}\]

which implies the measurements are perfect. If the system matrix A has full column rank and the system equation in (6) is overdetermined, A has a left inverse \(A^\dagger\), and we can analytically reconstruct the CT image \({\textbf{y}}\) by:

\[{\textbf{y}} = A^{\dagger }{\textbf{b}} = A^{\dagger }A{\textbf{x}}, \tag{7}\]

where the analytically reconstructed image is the same as the real image, i.e., \({\textbf{y}}={\textbf{x}}\). These simplified assumptions allow us to analytically reconstruct CT images by finding a left inverse \(A^{\dagger }\) of A, i.e., \(A^{\dagger }A = I\), as in filtered-backprojection (FBP) algorithms. Despite the theoretical and practical advantages, the simple linear and noiseless models, on which FBP algorithms are based, do not fully reflect real CT physics, especially when low-dose protocols are used.
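As a minimal numerical sketch of the left-inverse argument above, the snippet below uses a small random overdetermined matrix as a stand-in for the system matrix (an assumption made purely for illustration; it is not a CT projector) and the Moore-Penrose pseudo-inverse as one possible choice of \(A^{\dagger }\). It is not an FBP implementation. All variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 12, 5                        # more measurements than unknowns (overdetermined)
A = rng.standard_normal((m, n))     # toy full-column-rank matrix, not a real CT projector
x = rng.standard_normal(n)          # hypothetical attenuation coefficients

A_dagger = np.linalg.pinv(A)        # one left inverse: A_dagger @ A equals the identity
b_clean = A @ x                     # noiseless projections
y_clean = A_dagger @ b_clean        # recovers x up to round-off

eta = 0.1 * rng.standard_normal(m)  # additive measurement noise
y_noisy = A_dagger @ (b_clean + eta)
image_noise = y_noisy - x           # noise carried into the image domain
```

With noiseless data the reconstruction matches `x`, whereas with noisy data the error equals `A_dagger @ eta`, which illustrates how projection noise propagates into the reconstructed image.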

2.3 CT image denoising

In the presence of noise, the analytically reconstructed image \({\textbf{y}}\) is noisy:

\[{\textbf{y}} = {\textbf{x}} + \varvec{\epsilon }, \tag{8}\]

where \(\varvec{\epsilon } \in {\mathbb {R}}^n\) is the noise in the image domain. In the image processing literature, the noise \(\varvec{\epsilon }\) is generally assumed to be zero-mean jointly Gaussian noise, i.e., \(\varvec{\epsilon }\sim {\mathcal {N}}({\textbf{0}}, \Sigma )\). In general image denoising, noise \(\varvec{\epsilon }\) is often assumed to be independent and identically distributed (i.i.d.) Gaussian noise, i.e., \(\varvec{\epsilon }\sim {\mathcal {N}}({\textbf{0}}, \sigma ^2 I)\). In CT image denoising, the noise is often modeled to be zero-mean Gaussian but not independent across pixels, since the covariance matrix can be obtained by:

\[\Sigma _{{\textbf{y}}} = A^{\dagger }\Sigma _{{\textbf{b}}}\left( A^{\dagger }\right) ^{\top }. \tag{9}\]

Given the noisy image \({\textbf{y}}\), the task of denoising CT images is to estimate \({\textbf{x}}\) from \({\textbf{y}}\) with or without the knowledge of \(\Sigma _{{\textbf{y}}}\). In this problem setting, the observation is the noisy image \({\textbf{y}} \in {\mathbb {R}}^n\), which can be obtained using standard image reconstruction methods such as FBP algorithms. Thus, the image denoising problem is to find a denoiser which can be expressed as a function of the form \(\hat{{\textbf{x}}}= g({\textbf{y}}, \Sigma _{{\textbf{y}}})\).

If the joint distribution on \({\textbf{x}}\) and \({\textbf{y}}\) is known, we can assess the performance of the denoiser \(g:{\mathbb {R}}^n \rightarrow {\mathbb {R}}^n\) in terms of the mean squared error (MSE)

\[\textrm{MSE}(g) = {\mathbb {E}}\left\| g({\textbf{y}}) - {\textbf{x}}\right\| _2^2, \tag{10}\]

where the expectation is taken with the joint distribution over image pairs \(({\textbf{x}},{\textbf{y}})\). The best denoiser that minimizes MSE is given by

\[g^{*}({\textbf{y}}) = {\mathbb {E}}({\textbf{x}}|{\textbf{y}}), \tag{11}\]

which is referred to as the minimum MSE (MMSE) estimate.

2.4 Iterative reconstruction

As an alternative to analytical reconstruction algorithms, iterative reconstruction (IR) algorithms with embedded noise models have been studied extensively for decades [30,31,32,33,34,35,36]. In contrast to analytical reconstruction algorithms, the system matrix A of IR algorithms reflects the geometry of the system, the shape of the focal spot, the response of the detector, and many other geometric and physical parameters of a CT system.

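Suppose that the true image \({\textbf{x}}\) is drawn from the image manifold with a priori probability density function \(p ({\textbf{x}})\) and that the measurements follow the likelihood \(p({{\textbf{b}}|{\textbf{x}}})\). From Bayes’ rule, we can express a posteriori probability density function as

\[p({\textbf{x}}|{\textbf{b}}) = \frac{p({\textbf{b}}|{\textbf{x}})\, p({\textbf{x}})}{p({\textbf{b}})}, \tag{12}\]

from which we can reconstruct the image by maximizing the log-posterior:

\[\hat{{\textbf{x}}} = \mathop {\mathrm{arg\,max}}\limits _{{\textbf{x}}} \; \log p({\textbf{b}}|{\textbf{x}}) + \log p({\textbf{x}}). \tag{13}\]

By assuming \(p({{\textbf{b}}|{\textbf{x}}})={\mathcal {N}}(A{\textbf{x}}, \Sigma _{\textbf{b}})\), maximizing the log-likelihood in (13) reduces to minimizing a weighted least-squares data-fidelity term. Then, the image reconstruction problem can be reformulated as:

\[\hat{{\textbf{x}}} = \mathop {\mathrm{arg\,min}}\limits _{{\textbf{x}}} \; \frac{1}{2}\left( A{\textbf{x}} - {\textbf{b}}\right) ^{\top }\Sigma _{{\textbf{b}}}^{-1}\left( A{\textbf{x}} - {\textbf{b}}\right) + f({\textbf{x}}), \tag{14}\]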

where the regularization term \(f({\textbf{x}})\) reflects the a priori distribution on \({\textbf{x}}\). Various handcrafted regularization terms, including total-variation and wavelet sparsity, have been studied to preserve edges and textures in CT images. While IR approaches typically adopt convex optimization algorithms, such as first-order methods, with strong theoretical convergence, the alternating direction method of multipliers (ADMM) [37] has been extensively studied as a solver for the large-scale problem in (14). With the augmented Lagrangian,

the ADMM algorithm updates variables as the following:

Although IR methods have been shown to be practically useful, occasional misrepresentations and excessive computation time limit the clinical use of IR methods. Since IR methods are based on specific selections of filter kernels, regularization terms, and optimization parameters, they may not be adaptive enough to meet the various demands of clinical applications.
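To make the ADMM iteration mentioned in this subsection more concrete, the following is a minimal sketch rather than the formulation used in the cited works. It assumes the standard scaled-form ADMM with the splitting \({\textbf{x}}={\textbf{z}}\), an identity noise covariance, and an \(L_1\) penalty standing in for the regularizer \(f\); the toy matrix, the penalty weight `lam`, and the parameter `rho` are all hypothetical choices.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def admm_l1(A, b, lam=0.1, rho=20.0, n_iter=300):
    """Scaled-form ADMM for min_x 0.5*||Ax - b||^2 + lam*||z||_1 subject to x = z."""
    n = A.shape[1]
    x, z, u = np.zeros(n), np.zeros(n), np.zeros(n)
    lhs = A.T @ A + rho * np.eye(n)   # the x-update solves the same system each iteration
    Atb = A.T @ b
    for _ in range(n_iter):
        x = np.linalg.solve(lhs, Atb + rho * (z - u))  # data-fidelity step
        z = soft_threshold(x + u, lam / rho)           # regularization (proximal) step
        u = u + x - z                                  # scaled dual update
    return x, z

def objective(A, b, x, lam):
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x))

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 20))     # toy system matrix, not a CT projector
x_true = np.zeros(20)
x_true[[3, 11]] = [1.0, -2.0]
b = A @ x_true + 0.01 * rng.standard_normal(40)
x_hat, z_hat = admm_l1(A, b)
```

The split into a data-fidelity step and a proximal step is the property that later allows handcrafted regularizers to be replaced by other operators; the specific regularizer here is only a placeholder.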

2.5 Image quality metrics

In order to evaluate image quality, quantitative metrics can provide meaningful scores. Full-reference metrics measure the quality of the processed image with a given reference image. In this subsection, we introduce commonly used metrics to assess the quality of reconstructed CT images.

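Among many full-reference metrics, the sample MSE between two images \((\hat{{\textbf{x}}}, {\textbf{x}})\) is commonly used:

\[\textrm{MSE}(\hat{{\textbf{x}}}, {\textbf{x}}) = \frac{1}{n}\left\| \hat{{\textbf{x}}} - {\textbf{x}}\right\| _2^2, \tag{17}\]

and the corresponding RMSE is given by

\[\textrm{RMSE}(\hat{{\textbf{x}}}, {\textbf{x}}) = \sqrt{\textrm{MSE}(\hat{{\textbf{x}}}, {\textbf{x}})}, \tag{18}\]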

where the two vectors \(\hat{{\textbf{x}}}\) and \({\textbf{x}}\) denote the reconstructed and true images, respectively.

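As another commonly used full-reference metric, peak signal-to-noise ratio (PSNR) is given by:

\[\textrm{PSNR}(\hat{{\textbf{x}}}, {\textbf{x}}) = 10 \log _{10}\left( \frac{\textrm{MAX}_x^2}{\textrm{MSE}(\hat{{\textbf{x}}}, {\textbf{x}})}\right) , \tag{19}\]

where \(\textrm{MAX}_x\) is the maximum possible pixel value. Note that PSNR is closely related to MSE by its definition. If the MSE between the two images is small, the PSNR value is high.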

Although MSE and PSNR quantitatively measure absolute errors, measuring the perceptual differences between the two images is also important. Structural similarity (SSIM) is a perception-based metric that measures image degradation as a perceived change in structures. Given two image vectors \({\textbf{u}}\) and \({\textbf{v}}\), the SSIM is defined by

\[\textrm{SSIM}({\textbf{u}}, {\textbf{v}}) = \frac{(2\mu _u\mu _v + C_1)(2\sigma _{uv} + C_2)}{(\mu _u^2 + \mu _v^2 + C_1)(\sigma _u^2 + \sigma _v^2 + C_2)}, \tag{20}\]

where \(\mu _u\), \(\mu _v\), \(\sigma ^2_u\), \(\sigma ^2_v\), and \(\sigma _{uv}\) represent sample means, sample variances, and sample covariance, respectively. The constants \(C_1\) and \(C_2\) are predetermined parameters that stabilize the division when the denominator is small. The SSIM metric compares the two images, i.e., \({\textbf{u}}\) and \({\textbf{v}}\), based on three measurements: luminance, contrast, and structure.

Another common metric to assess the quality of medical images is contrast-to-noise ratio (CNR). Although CNR is similar to signal-to-noise ratio (SNR), the mean of the background area is subtracted from the signal as follows:

where \(\mu _r\) and \(\mu _b\) are the mean values of the selected region of interest (ROI) and the background area surrounding the ROI, respectively, and \(\sigma _r\) denotes the standard deviation of the background area.
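The metrics in this subsection can be sketched in a few lines of NumPy. The snippet below is illustrative only: the function names are hypothetical, the SSIM is computed from whole-image statistics rather than with the sliding window used by most toolkits, population (not sample) statistics are used for simplicity, and the CNR form (absolute mean difference divided by the background standard deviation) is an assumption based on the verbal description above.

```python
import numpy as np

def mse(x_hat, x):
    return float(np.mean((x_hat - x) ** 2))

def rmse(x_hat, x):
    return float(np.sqrt(mse(x_hat, x)))

def psnr(x_hat, x, max_val):
    return float(10.0 * np.log10(max_val ** 2 / mse(x_hat, x)))

def ssim_global(u, v, c1, c2):
    """SSIM from whole-image statistics (no sliding window)."""
    mu_u, mu_v = u.mean(), v.mean()
    var_u, var_v = u.var(), v.var()
    cov_uv = np.mean((u - mu_u) * (v - mu_v))
    return float(((2 * mu_u * mu_v + c1) * (2 * cov_uv + c2))
                 / ((mu_u ** 2 + mu_v ** 2 + c1) * (var_u + var_v + c2)))

def cnr(image, roi_mask, background_mask):
    """Assumed form: |mean(ROI) - mean(background)| / std(background)."""
    roi, bg = image[roi_mask], image[background_mask]
    return float(abs(roi.mean() - bg.mean()) / bg.std())

x = np.array([[0.0, 1.0], [2.0, 3.0]])   # toy reference image
x_hat = x + 0.5                          # toy estimate with a constant offset

img = np.array([1.0, 3.0, 10.0, 12.0])   # toy image: two background and two ROI pixels
roi = np.array([False, False, True, True])
```

For example, `psnr(x_hat, x, max_val=3.0)` evaluates the toy pair above, and `cnr(img, roi, ~roi)` treats the last two pixels as the ROI.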

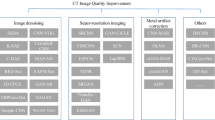

3 Supervised and unsupervised CT denoising

In this section, we provide a comprehensive survey on supervised/unsupervised learning-based image/projection denoising methods with different learning strategies and architectures, such as CNNs, GANs, and transformers. With the clean reference \({\textbf{x}}\) in NDCT domain \({\mathcal {X}}\) and noisy input \({\textbf{y}}\) in LDCT domain \({\mathcal {Y}}\), the training process optimizes a denoising model as follows:

\[{\hat{\theta }} = \mathop {\mathrm{arg\,min}}\limits _{\theta } \; {\mathcal {L}}\left( g_\theta ({\textbf{y}}), {\textbf{x}}\right) , \tag{22}\]

where \(g_\theta :{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) is a neural network-based denoising model, \(\theta\) denotes the network parameters, and \({\mathcal {L}}\) denotes a fidelity criterion such as the \(L_2\) loss.

While \(({\textbf{x}},{\textbf{y}})\) are paired samples with noisy input \({\textbf{y}}\) and clean target \({\textbf{x}}\) for supervised learning, \({\textbf{x}}\) and \({\textbf{y}}\) are not necessarily paired for unsupervised learning. If a large number of input-target pairs are available, the supervised learning setting can enable a large-scale neural network to achieve high denoising performance.

3.1 CNN-based CT denoising

During the past decade, deep learning has developed rapidly to become a dominant machine learning tool in computer vision and image processing. Convolutional neural networks (CNNs), which have been successful in classifying images beyond the human level [1,2,3,4,5], have been applied to other computer vision tasks such as object localization [6] and semantic segmentation [7]. To enhance computational efficiency and precision, architectures based on fully convolutional networks have been suggested to process entire images directly [38,39,40]. In image denoising tasks, CNN-based deep learning methods also outperform classical algorithms [12, 13]. With a dataset consisting of noisy/clean image pairs, CNNs can be trained in a supervised fashion.

In order to improve the quality of LDCT images, CNNs have also been trained with LDCT/NDCT image pairs [20, 21, 41, 42]. Most of these methods utilize patch-wise models that divide the original images into overlapping subimages for training purposes. With limited receptive fields, patch-wise models are able to decrease the number of network parameters to fit the number of training samples [38]. Although patch-wise models can be useful to augment the data set from a limited number of training samples, the reduced receptive fields are not capable of capturing spatially varying noise in CT images [29, 40].

Upon availability of the LDCT/NDCT image dataset [25], deep learning architecture for low-dose CT reconstruction has shown outstanding denoising performance [43]. Inspired by the residual network architecture for image classification [4], a residual learning-based denoiser has successfully outperformed traditional algorithms by a considerable gap. Based on the U-Net architecture [39], residual learning for CT image denoising has been implemented with skip connections [41].

Compared to extensive studies of image denoising for low-dose CT, supervised sinogram/projection denoising has not been thoroughly studied. A supervised/unsupervised sinogram denoising approach with generated noise has been proposed for low-dose CT under a maximum a posteriori (MAP) framework [44]. Rather than supervised projection denoising prior to image reconstruction, the techniques to embed projection domain denoisers as trainable regularization terms have been investigated in deep learning-based iterative reconstruction, as discussed in Sect. 5.

Although CNN-based denoisers have achieved high PSNR and low RMSE values in CT image denoising, altered textures and blurriness have also been reported. In general, MMSE denoising models minimize distortions in terms of \(L_2\)-norm in (10) by compromising perceptual quality [45, 46]. While perceptual losses can explicitly preserve textures and structural characteristics [42], data-driven approaches with auxiliary network architectures such as adversarial training and self-attention can improve perceptual quality indirectly, as introduced in the following subsections.

3.2 GAN-based CT denoising

The deep learning revolution has also allowed us to tackle other challenging tasks such as image synthesis, which generates a variety of realistic images without any input or initialization conditions. For successful image synthesis, we need to train an image generator \(G_\theta ({\textbf{z}})\) to capture the a priori distribution of images \(p({\textbf{x}})\) and map a simple random input \({\textbf{z}}\sim {\mathcal {N}}({\textbf{0}}, I)\) to a valid sample from \(p({\textbf{x}})\). In the task of image synthesis, generative adversarial networks (GANs) [47], which simultaneously train a generator to capture the data distribution and a discriminator model to estimate the probability of whether a sample is from real data or not, have been extensively studied [9, 48,49,50]. The advances of GANs have also been transferred to image denoising [51, 52]. In the original GAN [47], a GAN is optimized by the following minimax problem:

\[\min _G \max _D \; {\mathbb {E}}_{{\textbf{x}}\sim p({\textbf{x}})}\left[ \log D({\textbf{x}})\right] + {\mathbb {E}}_{{\textbf{z}}\sim {\mathcal {N}}({\textbf{0}}, I)}\left[ \log \left( 1 - D(G({\textbf{z}}))\right) \right] , \tag{23}\]

where the generator G transforms a noisy sample to mimic a real sample by defining a data distribution, and the discriminator D is trained for a fixed G.

As an extended method of GAN, cyclic adversarial loss has been proposed to alleviate the unpaired image-to-image translation problem and generate more realistic samples in many applications [9]. With the inverse mapper F of the generator G, the inverse relationship \(F=G^{-1}\) can impose cyclic consistency:

where \(\lambda\) is a parameter to control the importance of the cyclic consistency.

To preserve textures and details in CT images, adversarial learning approaches have been proposed for CT image denoising [53, 54]. In addition to adversarial training, perceptual loss and the Wasserstein distance [8, 50] have been adopted to improve structural representation [20]. A structurally sensitive loss has also been proposed to preserve informative structural features [23]. By transferring noise statistics from projection data into the image domain, a novel training loss has been proposed for better structural preservation performance [24]. The selected supervised/unsupervised CT image denoising methods are compared in Fig. 1.

Despite the potential to preserve texture in CT image denoising, GAN-based approaches require careful selection of hyperparameters and network architectures to avoid mode collapse and capture the underlying data distribution [55]. Since GANs are designed to capture the data distribution without explicit knowledge, generators try to find the output most plausible to the discriminator. As a result, the generators may choose an output from a small set of realistic samples and fail to produce diverse samples. One possible way to stabilize the GAN training process is employing the Wasserstein loss to prevent vanishing gradients and help models escape local minima [50]. As another remedy to mode collapse, multiple generators and discriminators have been used to generate diverse samples [56]. After clustering CT images into multiple imagesets for denoising, each imageset is used to train each generative adversarial network. Each discriminator is dedicated to a specific category and trained to distinguish between denoised images and NDCT images in the category. Thus, this hierarchical architecture prevents the generators from overfitting to a single discriminator.

3.3 Transformer-based CT denoising

Transformers have been adapted from language to computer vision as the backbone for various tasks. For example, shifted window (Swin) transformer modules have computed self-attention in shifted windows and exploited non-local self-similarity [57]. For image denoising, the Swin architecture has been trained with a dataset consisting of noisy/clean image pairs [14]. A similar idea has been applied in CT image denoising. SwinIR has also been trained with LDCT/NDCT image pairs by minimizing a loss function combining \(L_1\) and MS-SSIM terms [58].

Inspired by the long-range dependencies of the transformer, the LDCT denoising network, which incorporates a window-based transformer and a dual enhancement module, has been designed to improve edge, texture, and contextual details [59]. Using each angle of the sinogram view as input, a sinogram transformer can effectively extract structural characteristics [60]. A transformer-based encoder-decoder network with learnable Sobel-Feldman operators has also been proposed to denoise medical images and improve edge information [61]. A convolution-free transformer has previously been suggested to effectively denoise LDCT images [62]. With token rearrangement to encompass local contextual information, the convolution-free transformer is capable of learning long-range interaction from dilated and shifted feature maps.

Fig. 1 Examples of supervised/unsupervised CT image denoising methods. The models have been trained with the AAPM-Mayo LDCT dataset and tested with a clinical LDCT dataset: (a) LDCT, (b) RED-CNN [41], (c) modularized U-Net [42], (d) CycleGAN [9], (e) DnCNN [12], (f) StatCNN [24], (g) StatCGAN [24], and (h) StatCycleGAN [24]. The display window is set to \([-160, 240]\) for all images.

4 Self-supervised CT denoising

While CT denoising approaches based on unsupervised image generation, such as GANs, are able to successfully capture image style and textures from unpaired clean samples, occasional and unexpected artifacts have also been observed in the resultant images. As an alternative to reduce such artifacts and dependency on the clean samples, CT denoising approaches based on self-supervised learning have been recently studied to explore the noisy source domain alone.

In general, CT images represent anatomical structures in which pixel values exhibit a high degree of spatio-temporal correlation [29]. Since medical images can be expressed with much lower dimensions in a specific latent space than the dimension in the image domain [63], it may be possible to recover noisy images without clean references [26, 64, 65]. In this section, we first summarize recent studies on self-supervised learning with blind-spot networks [26,27,28], and then review the studies on CT denoising based on self-supervised learning.

4.1 Noise2Noise

Although deep learning is not fully explainable, its groundbreaking performance has greatly influenced many computer vision and image processing tasks, such as image denoising. As discussed in the previous section, the supervised learning methods typically rely on a large dataset consisting of diverse noisy inputs and clean targets. However, the need for clean targets limits the application of supervised learning-based image denoising methods in various real-world scenarios.

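Recent research has demonstrated that training denoising models does not require clean targets. Remarkably, Noise2Noise has trained a denoising model with two independent observations of the common ground truth [64]. Given the joint distribution between signal and observation, the \(L_2\) loss in (22) can be rewritten as

\[{\hat{\theta }} = \mathop {\mathrm{arg\,min}}\limits _{\theta } \; {\mathbb {E}}_{({\textbf{x}},{\textbf{y}})}\left\| g_\theta ({\textbf{y}}) - {\textbf{x}}\right\| _2^2, \tag{25}\]

where the expectation is taken with the joint distribution over \(({\textbf{x}},{\textbf{y}})\). By the law of total expectation, it is equivalent to

\[{\hat{\theta }} = \mathop {\mathrm{arg\,min}}\limits _{\theta } \; {\mathbb {E}}_{{\textbf{y}}}\left[ {\mathbb {E}}_{{\textbf{x}}|{\textbf{y}}}\left\| g_\theta ({\textbf{y}}) - {\textbf{x}}\right\| _2^2\right] , \tag{26}\]

which results in the MMSE denoiser \(g_{{\hat{\theta }}}({\textbf{y}}) = {\mathbb {E}}({\textbf{x}}|{\textbf{y}})\). If a dataset of two observations is available instead of signal/observation pairs, the Noise2Noise denoiser is trained by

\[{\hat{\theta }} = \mathop {\mathrm{arg\,min}}\limits _{\theta } \; {\mathbb {E}}_{({\textbf{y}},\tilde{{\textbf{y}}})}\left\| g_\theta ({\textbf{y}}) - \tilde{{\textbf{y}}}\right\| _2^2, \tag{27}\]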

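where \(\tilde{{\textbf{y}}}\) denotes the other noisy observation of the signal \({\textbf{x}}\). We can assume additive noise, i.e., \(\tilde{{\textbf{y}}} = {\textbf{x}} + \tilde{\varvec{\epsilon }}\), where \(\varvec{\epsilon }\) and \(\tilde{\varvec{\epsilon }}\) are not necessarily of the same distribution. Given the underlying signal \({\textbf{x}}\), the two noisy observations \({\textbf{y}}\) and \(\tilde{{\textbf{y}}}\) are independent, resulting in the denoiser given by

\[g_{{\hat{\theta }}}({\textbf{y}}) = {\mathbb {E}}(\tilde{{\textbf{y}}}|{\textbf{y}}) = {\mathbb {E}}_{{\textbf{x}}|{\textbf{y}}}\left[ {\mathbb {E}}(\tilde{{\textbf{y}}}|{\textbf{x}})\right] . \tag{28}\]

Furthermore, if the second noisy observation is unbiased, i.e., \({\mathbb {E}}({\tilde{{\textbf{y}}}|{\textbf{x}}})= {\textbf{x}}\), the Noise2Noise denoiser can be expressed as

\[g_{{\hat{\theta }}}({\textbf{y}}) = {\mathbb {E}}_{{\textbf{x}}|{\textbf{y}}}\left[ {\mathbb {E}}(\tilde{{\textbf{y}}}|{\textbf{x}})\right] = {\mathbb {E}}_{{\textbf{x}}|{\textbf{y}}}\left[ {\textbf{x}}\right] , \tag{29}\]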

where the Noise2Noise denoiser can achieve the MMSE estimate, i.e., \(g_{{\hat{\theta }}}({\textbf{y}}) = {\mathbb {E}}({\textbf{x}}|{\textbf{y}})\). Note that the Noise2Noise denoiser requires neither a likelihood distribution \(p({\textbf{y}}|{\textbf{x}})\) nor a priori probability \(p({\textbf{x}})\) for training [64].
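The equivalence between clean and noisy targets can be checked numerically in a deliberately simple setting. The sketch below assumes a scalar Gaussian signal, additive Gaussian noise, and a one-parameter linear denoiser \(g({\textbf{y}}) = w{\textbf{y}}\), for which the MMSE estimate is itself linear; none of these choices come from the cited works, and the variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
x = rng.standard_normal(n)                    # clean signal with unit variance
y = x + 0.5 * rng.standard_normal(n)          # noisy input
y_tilde = x + 0.5 * rng.standard_normal(n)    # second, independent noisy observation

# Least-squares optimal gain w for the denoiser g(y) = w * y.
w_supervised = np.dot(y, x) / np.dot(y, y)          # trained with clean targets
w_noise2noise = np.dot(y, y_tilde) / np.dot(y, y)   # trained with noisy targets only
```

Both gains approach the theoretical value \(1/(1+0.25)=0.8\) as the number of samples grows, since the zero-mean noise in the target averages out of the normal equations.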

4.2 Blind-spot network

Self-supervised image denoising based on blind-spot networks has been proposed, which does not require duplicate observations in the training process. Blind-spot networks are trained by blinding part of the input image and learning to recover the blinded pixels [27]. With the assumption that images are structured, the predictions of self-supervised learning models tend to align with the true pixel values when the noise is independent across pixels [26].

For self-supervised denoising with blind-spot networks, a blind spot \(J\subset \{1,\ldots ,n\}\) is defined. Then, a noisy observation \({\textbf{y}}\in {\mathbb {R}}^n\) is partitioned into pixels in the blind spot \({\textbf{y}}_J\in {\mathbb {R}}^{|J|}\) and its corresponding context \(\Omega _J \in {\mathbb {R}}^{n-|J|}\). Using \(\Omega _J\) and \({\textbf{y}}_J\) as input and target, respectively, the self-supervised learning denoiser is trained by

\[{\hat{\theta }} = \mathop {\mathrm{arg\,min}}\limits _{\theta } \; {\mathbb {E}}_{(\Omega _J, {\textbf{y}}_J)}\left\| g_\theta (\Omega _J) - {\textbf{y}}_J\right\| _2^2, \tag{30}\]

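where \((\Omega _J, {\textbf{y}}_J)\) is equivalent to \({\textbf{y}}\). Assume that \(\Omega _J\) and \({\textbf{y}}_J\) are independent conditioned on \({\textbf{x}}\), which holds, for example, when the additive noise \(\varvec{\epsilon }\) is uncorrelated Gaussian. As a result, the self-supervised learning denoiser is obtained by

\[g_{{\hat{\theta }}}(\Omega _J) = {\mathbb {E}}({\textbf{y}}_J|\Omega _J) = {\mathbb {E}}_{{\textbf{x}}|\Omega _J}\left[ {\mathbb {E}}({\textbf{y}}_J|{\textbf{x}})\right] . \tag{31}\]

If the noise is unbiased, i.e., \({\mathbb {E}}({\textbf{y}}_J|{\textbf{x}})= {\textbf{x}}_J\), the self-supervised learning denoiser can be expressed as

\[g_{{\hat{\theta }}}(\Omega _J) = {\mathbb {E}}_{{\textbf{x}}|\Omega _J}\left[ {\textbf{x}}_J\right] , \tag{32}\]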

where the optimal denoiser can achieve the MMSE estimate from the context \(\Omega _J\), i.e., \(g_{{\hat{\theta }}}(\Omega _J) = {\mathbb {E}}({\textbf{x}}_J|\Omega _J)\). Similarly to the Noise2Noise denoiser, the self-supervised learning denoiser requires neither a noise model \(p({\textbf{y}}|{\textbf{x}})\) nor a priori probability \(p({\textbf{x}})\) for training [26, 27].
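The role of the independence assumption can be illustrated without any neural network. In the sketch below, a fixed four-neighbour average plays the part of a blind-spot predictor (it never sees the centre pixel), every pixel is treated as a blind spot with its own context, and the clean image is a synthetic smooth pattern; these are simplifying assumptions for illustration, not the training procedure of the cited methods.

```python
import numpy as np

rng = np.random.default_rng(0)
size, sigma = 256, 0.1
t = np.linspace(0.0, 2.0 * np.pi, size, endpoint=False)
x = np.outer(np.sin(t), np.cos(t))                 # smooth periodic "clean" image
y = x + sigma * rng.standard_normal((size, size))  # independent zero-mean noise

def blind_spot_predict(img):
    """Predict each pixel from its four neighbours only (centre pixel excluded)."""
    return 0.25 * (np.roll(img, 1, 0) + np.roll(img, -1, 0)
                   + np.roll(img, 1, 1) + np.roll(img, -1, 1))

pred = blind_spot_predict(y)
self_supervised_loss = np.mean((pred - y) ** 2)    # computable without the clean image
true_mse = np.mean((pred - x) ** 2)                # requires the clean image
gap = self_supervised_loss - true_mse              # approximately sigma**2
```

Because the prediction does not depend on the noise of the pixel it predicts, the self-supervised loss differs from the true MSE only by a constant close to the noise variance, so minimizing one also minimizes the other. With spatially correlated noise this offset is no longer constant, which is the difficulty discussed for reconstructed CT images.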

Although the self-supervised learning denoiser \(g_{{\hat{\theta }}}(\Omega _J)\) is the MMSE estimate given the context \(\Omega _J\), it does not use the blind observation \({\textbf{y}}_J\). With a Gaussian approximation to the prior \({\textbf{x}}_J|\Omega _J \sim {\mathcal {N}}(\varvec{\mu }_{J},\Sigma _{J})\), blind observation \({\textbf{y}}_J\) can be incorporated into the denoising process [28]. If blind observation \({\textbf{y}}_J\) is independent of the context \(\Omega _J\) conditioned on \({\textbf{x}}_J\), i.e., \(p({\textbf{y}}_J|{\textbf{x}}_J) = p({\textbf{y}}_J|({\textbf{x}}_J,\Omega _J))\), the posterior distribution is given by

\[p({\textbf{x}}_J|{\textbf{y}}) = p({\textbf{x}}_J|{\textbf{y}}_J, \Omega _J) = \frac{p({\textbf{y}}_J|{\textbf{x}}_J)\, p({\textbf{x}}_J|\Omega _J)}{p({\textbf{y}}_J|\Omega _J)}, \tag{33}\]

where the noise model \(p({\textbf{y}}_J|{\textbf{x}}_J)\) is assumed to be known, and the marginal distribution \(p({\textbf{y}}_J|\Omega _J)\), which acts only as a normalizing term, does not need to be obtained for calculating \({\mathbb {E}}({\textbf{x}}_J | {\textbf{y}})\). For example, additive Gaussian noise, i.e., \({\textbf{y}}_J|{\textbf{x}}_J\sim {\mathcal {N}}({\textbf{x}}_J, \sigma ^2 I)\), and the Gaussian prior, i.e., \({\textbf{x}}_J|\Omega _J \sim {\mathcal {N}}(\varvec{\mu }_{J},\Sigma _{J})\), result in \({\textbf{y}}_J|\Omega _J \sim {\mathcal {N}}(\varvec{\mu }_J, \Sigma _{J} + \sigma ^2 I)\). From (33), the posterior mean is given by

\[{\mathbb {E}}({\textbf{x}}_J|{\textbf{y}}) = \left( \Sigma _J^{-1} + \sigma ^{-2} I\right) ^{-1}\left( \Sigma _J^{-1}\varvec{\mu }_J + \sigma ^{-2}{\textbf{y}}_J\right) , \tag{34}\]

where \(\varvec{\mu }_J\) and \(\Sigma _J\) are assumed to be known from the prior distribution of \({\textbf{x}}_J|\Omega _J\). In order to empirically obtain \(\varvec{\mu }_J = {\mathbb {E}}({\textbf{x}}_J|\Omega _J)\) and \(\Sigma _J= \textrm{Cov}({\textbf{x}}_J|\Omega _J)\), a model can be trained by minimizing the loss of negative log-likelihood:

\[{\mathcal {L}} = \frac{1}{2}\left( {\textbf{y}}_J - \hat{\varvec{\mu }}_J\right) ^{\top }\left( {\hat{\Sigma }}_J + \sigma ^2 I\right) ^{-1}\left( {\textbf{y}}_J - \hat{\varvec{\mu }}_J\right) + \frac{1}{2}\log \det \left( {\hat{\Sigma }}_J + \sigma ^2 I\right) + c, \tag{35}\]

where \(\hat{\varvec{\mu }}_J\) and \({\hat{\Sigma }}_J\) are optimized during the training process as the functions of the context \(\Omega _J\), and c is a constant. Note that the posterior mean in (34) requires the noise model \(p({\textbf{y}}_J|{\textbf{x}}_J)\) and the prior distribution \(p({\textbf{x}}_J|\Omega _J)\) given the context.
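For the Gaussian prior and additive Gaussian noise described above, the posterior mean has a closed form. The sketch below writes it in two algebraically equivalent ways, a gain form and a precision form; the function names and the numerical values of the mean, covariance, and blind observation are hypothetical, and in practice the prior parameters would be predicted by a trained network from the context.

```python
import numpy as np

def posterior_mean(y_j, mu_j, sigma_j, noise_var):
    """Posterior mean of x_J for prior N(mu_j, sigma_j) and noise N(0, noise_var * I)."""
    k = len(y_j)
    gain = sigma_j @ np.linalg.inv(sigma_j + noise_var * np.eye(k))
    return mu_j + gain @ (y_j - mu_j)

def posterior_mean_precision_form(y_j, mu_j, sigma_j, noise_var):
    """The same estimate written with precision matrices."""
    k = len(y_j)
    prior_precision = np.linalg.inv(sigma_j)
    posterior_cov = np.linalg.inv(prior_precision + np.eye(k) / noise_var)
    return posterior_cov @ (prior_precision @ mu_j + y_j / noise_var)

mu = np.array([0.0, 1.0])                    # hypothetical prior mean from the context
cov = np.array([[1.0, 0.5], [0.5, 2.0]])     # hypothetical prior covariance
y_blind = np.array([2.0, -1.0])              # hypothetical blind-spot observation
x_hat = posterior_mean(y_blind, mu, cov, noise_var=1.0)
```

The estimate interpolates between the context-based prediction and the blind observation: a confident prior (small covariance) keeps the result near the prior mean, while low noise variance pulls it toward the observed pixels.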

4.3 CT denoising with Noise2Noise

While self-supervised learning has been actively studied in CT image reconstruction, one main strategy involves converting the given dataset into two statistically independent datasets [66, 67], which are suitable for Noise2Noise training in Sect. 4.1. By splitting CT projections from a single scan into two sets with odd and even indexes [66], a denoising network can learn with two noisy projections from the different noisy datasets with Noise2Noise training. Since the gantry angles of the projections gradually change, the difference between the two consecutive projections is slightly biased and unmatched to the assumption of unbiased noise for Noise2Noise training. As a remedy to mitigate biased noise in the projection domain, Noise2Noise training can be performed with two noisy images from two different projections with odd and even indexes. Another data conversion approach has been proposed to divide each x-ray measurement into two statistically independent measurements that enable Noise2Noise training [67]. In contrast to odd and even projection splitting [66], the difference between two randomly divided measurements is unbiased [67]. Since the measurement splitting method divides the number of photons into two random numbers, Noise2Noise training can be applied in both the count and image domains. Although data splitting methods allow denoising networks to learn with Noise2Noise training, the problem of training and testing denoising networks with noisy data alone needs to be addressed.
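The two data conversion strategies can be sketched on simulated photon counts. The snippet below is an illustration under stated assumptions, not the procedure of [66] or [67]: it ignores electronic noise, draws hypothetical line integrals at random instead of projecting an object, and realizes measurement splitting by binomial thinning, which is known to turn one Poisson count into two independent Poisson counts.

```python
import numpy as np

rng = np.random.default_rng(0)
n_views, n_det, N0 = 360, 128, 1.0e4
line_integrals = rng.uniform(0.5, 3.0, size=(n_views, n_det))  # hypothetical [Ax]_i values
expected = N0 * np.exp(-line_integrals)                        # mean photon counts
counts = rng.poisson(expected)                                 # quantum noise only

# Projection splitting: even- and odd-indexed views form two sinograms.
even_views, odd_views = counts[0::2], counts[1::2]

# Measurement splitting by binomial thinning: each photon is assigned to one half.
half_a = rng.binomial(counts, 0.5)
half_b = counts - half_a

# Line integrals from each half; the blank count is halved accordingly.
b_a = np.log(0.5 * N0 / np.maximum(half_a, 1))
b_b = np.log(0.5 * N0 / np.maximum(half_b, 1))
```

The two halves `b_a` and `b_b` observe the same ray with independent noise, whereas `even_views` and `odd_views` observe neighbouring gantry angles and therefore differ slightly even without noise.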

4.4 CT denoising with blind-spot networks

In contrast to CT data conversion methods that enable Noise2Noise training, blind-spot networks optimize an unbiased estimator with original CT data to predict the true values of pixels in the blind spot [26]. From the discussion in Sect. 4.2, blind-spot networks require the fundamental assumption that noisy observations in blind spots and the corresponding context are statistically independent given the true values. For conventional CT image reconstruction algorithms such as FBP in Sect. 2.1, the noise of the reconstructed images is spatially varying, correlated, and dependent on the true pixels. Hence, directly applying blind-spot networks to self-supervised denoising of CT images may not yield the best results.
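The blind-spot training idea referred to above can be sketched as a masking step followed by a loss evaluated only at the masked pixels. This is a simplified illustration in the spirit of masking-based schemes such as [26], assuming a 2D image stored as a list of rows with at least two pixels; the function names, the regular grid, and the neighbor-average replacement are assumptions made for the example.

```python
def mask_blind_spots(image, stride=4):
    """Replace pixels on a regular grid by the mean of their available
    4-neighbors so the network input carries no information about the
    original value at those positions.

    Returns the masked copy and the list of blind-spot coordinates.
    """
    h, w = len(image), len(image[0])
    masked = [row[:] for row in image]
    coords = []
    for i in range(0, h, stride):
        for j in range(0, w, stride):
            neighbors = [
                image[a][b]
                for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                if 0 <= a < h and 0 <= b < w
            ]
            masked[i][j] = sum(neighbors) / len(neighbors)
            coords.append((i, j))
    return masked, coords


def blind_spot_loss(prediction, noisy, coords):
    """Mean squared error between the prediction and the original noisy
    values, evaluated at the blind-spot pixels only."""
    return sum((prediction[i][j] - noisy[i][j]) ** 2 for i, j in coords) / len(coords)
```

The loss compares the prediction with the noisy value that was hidden from the input, which is only a valid training signal when the noise at the blind spot is independent of the context, as the paragraph above points out.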

On the other hand, self-supervised denoising in the sinogram domain is more efficient and effective since noise in sinograms can be reasonably modeled as statistically independent, as discussed in Sect. 2.1. Comparative studies have shown that the projection domain denoising model outperforms the image domain model in terms of image quality and anatomical details in low-dose CT imaging [70, 71]. By training blind-spot networks with both sinograms and images, self-supervised denoising can utilize the forward physical model and statistical independence [72]. Since reconstructed images and reprojections can be inconsistent with respect to the forward CT model, a self-supervised dual domain denoising approach has been proposed to reduce the gap and improve the quality of reconstructed images [73].

In order to enhance self-supervised CT denoising performance, several studies have focused on exploring the context of blind-spot networks. The pixels in the adjacent slices as well as the neighboring pixels in the same slice have been used as the inter-slice context for self-supervised denoising [74]. To further improve the effectiveness of self-supervised learning, a dual-phase training strategy, which includes initial offline pretraining and subsequent online finetuning, has been introduced and extensively evaluated using public and private clinical datasets of LDCT images. Both image quality metrics and clinical evaluations demonstrate that the self-supervised denoising model simultaneously reduces noise and recovers anatomical details in LDCT images solely based on the images themselves. The experimental findings further indicate that the online finetuning approach enhances the denoising capabilities of pretrained models at test time. The non-local similarity in CT images has been explored to extract dissimilar masks from adjacent slices and determine blind spots [75]. Through experiments with low-dose CT and photon-counting CT images, the similarity-based self-supervised denoising approach has shown potential in various clinical applications.

In Fig. 2, several rule-based/unsupervised/self-supervised denoising methods are compared. As indicated by the arrows, sharp edges (blue) and small details (green) were restored in C2S-FT. In contrast, rule-based methods such as BM3D and NLM resulted in blurred details and unfavorably altered textures. The unsupervised denoising method, i.e., CycleGAN, reduced the level of noise in soft tissues, but failed to retain textures as indicated by the red arrows. By incorporating the inter-slice correlation, C2S-PT improved the denoising performance. Furthermore, C2S-FT, which was finetuned with the test sample, removed coarse-grained noise resulting in a noise level slightly lower than that of the NDCT images.

Fig. 3 Reconstructed CBCT images in the head phantom study (WL=250 and WW=1500). CBCT images were reconstructed from 340 denoised low-dose projections (CTDI\(_\mathrm{w}\)=2.35 mGy) with the short-scan FDK algorithm. Reference images were reconstructed from 680 high-dose projections (CTDI\(_\mathrm{w}\)=94.15 mGy) with the conventional FDK algorithm.

4.5 Self-supervised CBCT denoising

As discussed in Sect. 2.1, the noise of multislice CT (MSCT) projections/sinograms is assumed to be independent and unbiased. Although such noise properties allow self-supervised denoisers to converge to an efficient estimator in (32), conditionally correlated and biased noise given the context can limit the performance and application of blind-spot networks.

Indeed, the noise of cone-beam CT (CBCT), which is commonly used for various interventional imaging purposes, can be correlated and biased in both the projection domain and the image domain. Since low-dose protocols with tube current modulation are often applied to CBCT imaging, projection data and images in CBCT are contaminated by noise with complicated characteristics that depend on factors such as shorter exposure times, x-ray fluence, and detector sensitivity [79]. In addition, x-ray scattering due to the less effective antiscatter grid for flat-panel detectors increases the noise correlation and bias [80,81,82]. Due to biased and highly correlated noise, self-supervised denoising of CBCT images is challenging compared to that of MSCT images [83, 84].

A self-supervised learning framework has been proposed to train a CNN-based denoiser in the projection domain for low-dose CBCT imaging [78]. In order to utilize the uncorrelated noise across the projections, a projection-wise Noise2Noise training strategy, i.e., 2Proj2Proj, learns the true projection from adjacent noisy projections. A blind-spot network-based self-supervised learning model, i.e., 3Proj2Self, is separately trained to learn correlated information in projections. The predicted projections from the two self-supervised denoising methods are fused into the final prediction according to measured statistics.
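The fusion rule is not detailed in this section. Purely as an illustration of how two predictions might be combined according to measured statistics, the sketch below uses inverse-variance weighting, which is a common choice for combining two estimates with independent, zero-mean errors. The function name and the use of per-pixel error variances are assumptions and should not be read as the method of [78].

```python
def fuse_inverse_variance(pred_a, var_a, pred_b, var_b):
    """Combine two estimates of the same pixel with weights that are
    inversely proportional to their (assumed known, positive) error
    variances, so the less noisy estimate contributes more."""
    weight_a = var_b / (var_a + var_b)
    return weight_a * pred_a + (1.0 - weight_a) * pred_b
```

With equal variances the result is the plain average of the two predictions.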

Figure 3 shows the experimental results for selected CBCT image/projection denoising methods. The uncorrected images were reconstructed from the noisy projections and were highly degraded compared to the high-dose reference images. As highlighted with red ROIs, self-supervised projection denoising methods such as 2Proj2Proj and 3Proj2Self successfully retained small details compared to self-supervised image denoising methods such as N2S and PSL. In the yellow ROIs, 3Proj2Self and Proj2Self successfully preserved the sharp edges, while PWLS produced a noisy background.

5 Deep learning-based iterative reconstruction

As discussed in Sect. 2.4, conventional IR methods require noisy measurements alone to solve the inverse problems of reconstructing high-quality CT images by minimizing objective functions in the form of least-squares plus regularization terms. Advances in deep learning have also been incorporated into IR-based CT image reconstruction.

In order to reduce computation time, several studies have focused on unrolling optimization solvers of inverse problems with deep neural networks [85]. A pioneering work has investigated and reimplemented the ADMM algorithm in (16) in deep neural networks [86]. By unrolling a proximal primal-dual optimization method for tomographic reconstruction, the forward operator and the proximal operators have been replaced with convolutional neural networks that can be trained end-to-end with raw measured data [87]. As a deep learning reimplementation of the FISTA algorithm, a proximal operator network has been trained for nonlinear thresholding by updating the gradient matrix during iterations [88]. Furthermore, positivity and monotonicity constraints have been imposed to ensure convergence.
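The notion of unrolling can be made concrete with a small sketch: a fixed number of proximal-gradient (ISTA) iterations for a least-squares problem with an \(\ell_1\) penalty is written as a sequence of layers, each with its own step size and threshold. In a learned unrolled network these per-layer quantities, and often the operators themselves, would be trained from data; here they are plain inputs. This is a generic illustration with hypothetical names, assuming a small dense system matrix stored as a list of rows, and it is not the architecture of [86], [87], or [88].

```python
import math


def matvec(A, x):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def soft_threshold(v, t):
    """Elementwise soft thresholding, the proximal operator of t * |x|."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]


def unrolled_ista(A, y, steps, thresholds):
    """Run len(steps) unrolled ISTA layers starting from zero.

    Layer k takes a gradient step of size steps[k] on 0.5 * ||Ax - y||^2
    and then applies soft thresholding with thresholds[k].
    """
    At = [list(col) for col in zip(*A)]
    x = [0.0] * len(A[0])
    for step, thr in zip(steps, thresholds):
        residual = [p - q for p, q in zip(matvec(A, x), y)]
        grad = matvec(At, residual)
        x = soft_threshold([xi - step * g for xi, g in zip(x, grad)], thr)
    return x
```

Because the number of layers is fixed in advance, the cost of one reconstruction is known beforehand, which is the main computational appeal of unrolling.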

Deep neural networks have also been investigated for learning the regularization term of the optimization problem in (14) from training data. Inspired by plug-and-play prior [89], image denoisers have been used as regularization terms of inverse problems. After training image denoisers with input/target pairs, the pretrained denoisers can be embedded into the regularization term that is minimized along with the data fidelity term. A sparse autoencoder has been proposed for the unsupervised learning of image priors from normal-dose images, and the difference between the reconstructed image and the prior images has been used as a regularization term during the optimization process [90]. In contrast, the denoiser can be updated simultaneously along with intermediate images during optimization iterations. A learned experts' assessment-based reconstruction network (LEARN) uses trainable prior functions with linear transforms and activation functions for CT with sparse data [91]. By training an iteration-dependent denoiser, inverse problems have been solved in multiple steps [92]. A unified supervised-unsupervised (SUPER) learning framework combines supervised and unsupervised priors based on a fixed-point iteration analysis [93].
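A denoiser-as-regularizer iteration can be sketched as alternating a gradient step on the data fidelity term with a call to a denoiser. The example below is a minimal plug-and-play style proximal-gradient loop for 1D signals, assuming the forward operator and its adjoint are supplied as Python callables. The moving-average `box_denoiser` is only a stand-in for a pretrained network, and all names are hypothetical.

```python
def box_denoiser(x):
    """Stand-in denoiser: 3-tap moving average with edge replication."""
    padded = [x[0]] + list(x) + [x[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3.0 for i in range(len(x))]


def pnp_proximal_gradient(y, forward, adjoint, denoiser, step=1.0, n_iter=10):
    """Alternate a gradient step on 0.5 * ||forward(x) - y||^2 with a
    denoising step that plays the role of the regularizer."""
    x = adjoint(y)
    for _ in range(n_iter):
        residual = [p - q for p, q in zip(forward(x), y)]
        grad = adjoint(residual)
        x = denoiser([xi - step * g for xi, g in zip(x, grad)])
    return x
```

Replacing `denoiser` with a different callable at each iteration would give an iteration-dependent variant of the same loop.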

For sparse-view CT, a model coupling convolution and a Swin transformer has introduced a multi-domain consistency loss to learn the similarity between the reconstructed and reference images [94]. Another dual domain transformer model has been introduced to ensure consistency in both the image and projection domains when reconstructing CT images [95]. Despite its potential usefulness in clinical applications, the combination of self-supervised learning and DL-based iterative reconstruction has not been fully explored [96].

6 Discussion

Although DL-based CT image denoising and reconstruction methods have shown high performance on specific datasets, the results cannot be applied directly to different CT imaging scenarios in clinical applications. For example, the AAPM-Mayo LDCT dataset [25], which contains LDCT/NDCT data of 10 patients, does not represent other imaging protocols or the variability of real clinical data. In terms of machine learning, we choose a predictive model that minimizes the training loss between prediction and target values using training data, since training and test data are assumed to be sampled from the same underlying distribution. If the underlying distributions of the training and test data are inconsistent, there are systematic errors in the prediction regardless of how we sample the training data. Thus, collecting a large amount of data from the target application is ideal, but often only a small amount of data is attainable in clinical applications. As a possible way to mitigate errors due to mismatched distributions, self-supervised transfer learning strategies can be utilized to adapt pretrained models to such a small dataset of the target application [74].

Another general problem is that DL-based methods are prone to high variance. With a relatively small dataset, in comparison to the number of model parameters to optimize, the fitted model can be sensitive to the choice of training data. Rather than simply increasing the size of the data, various techniques, including cross-validation and early stopping, have been proposed to avoid overfitting in machine learning. For CT image denoising and reconstruction, physical model-based/data-driven regularizations have been suggested and incorporated into the DL-based methods [90, 91]. In the cases where physical models are likely to fail or clean data are unattainable, unsupervised/self-supervised learning strategies can be considered to reduce the sensitivity of fitted models and achieve robust performance [93].
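Early stopping, mentioned above as one safeguard against overfitting, can be sketched as a patience rule on a monitored loss. The helper name `early_stopping_epoch` and the `patience` parameter are illustrative assumptions; the monitored quantity could be a validation loss or, in a self-supervised setting, a loss on held-out masked pixels.

```python
def early_stopping_epoch(losses, patience=3):
    """Return the index of the epoch whose model should be kept.

    Training is considered stopped once the monitored loss has failed to
    improve on its best value for `patience` consecutive epochs; the
    best epoch seen up to that point is returned.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break
    return best_epoch
```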

As discussed in Sects. 2.1 and 2.3, the noise of CT images can be modeled as unbiased and correlated Gaussian noise derived from the noise of CT projections, which is typically modeled as unbiased and uncorrelated Gaussian noise. The assumption of unbiased and independent noise in CT projections is no longer valid in cases where x-ray interactions severely contaminate the observations. Additionally, the assumption of Gaussian noise in the CT projections is valid only if the numbers of detected photons are large enough for the Poisson distribution of primary quantum noise to be well approximated by a Gaussian distribution. In order to mitigate biased and dependent noise, existing hardware/software-based techniques [81, 82, 97, 98] can be utilized for data preparation. In addition, DL-based CT physics models, which are actively being studied [99, 100], can be incorporated into DL-based CT image denoising and reconstruction.

Due to limited resources in clinical settings, the computational demands of DL-based methods are important in terms of both training and inference time. Since network inference time is typically less than one second, most end-to-end learning networks can complete CT image denoising and reconstruction in a short period of time. Improving performance and adaptability, however, requires more computational resources and time. For an improved quality of the reconstructed images, DL-based image reconstruction methods can train an iteration-dependent network and repeat network inferences at test time, which can take a few seconds [92, 93]. In order to adapt DL-based image denoising methods to test samples, self-supervised learning methods also require a few seconds with early stopping strategies [74].

While quantitative evaluation using simulated datasets is commonly used in many DL-based CT image denoising and reconstruction studies, clinical validation is beneficial in assessing how the DL-based methods perform in real-world settings. As discussed in Sect. 2.5, PSNR strongly depends on MSE, where noise is assumed to be distributed independently and identically between different pixels. Since noise in CT images/projections does not satisfy the i.i.d. assumption, popular image quality metrics such as MSE and PSNR may not be adequate for evaluating the quality of CT images. Despite low MSE and high PSNR values, oversmoothed textures and inaccuracies have occasionally been observed with DL-based methods that have been trained to minimize MSE loss. For diagnostic quality assessments, expert radiologists can review the resultant CT images with subjective criteria such as noise suppression, contrast, sharpness, artifact suppression, and diagnostic acceptability [24]. In addition, single- or multi-center studies with real data are advantageous in increasing clinical coverage and generalizability [78].
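The dependence of PSNR on MSE can be seen directly from the standard definitions, sketched below for images flattened into lists of pixel values. Sect. 2.5 is not reproduced here, so the formulas follow the commonly used definitions, and the `data_range` argument (the span of valid pixel values) is an assumption of this example.

```python
import math


def mse(reference, estimate):
    """Mean squared error over all pixels, each weighted equally."""
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB, a monotone function of MSE."""
    error = mse(reference, estimate)
    if error == 0.0:
        return float("inf")
    return 10.0 * math.log10(data_range ** 2 / error)
```

Since every pixel error enters the sum with the same weight, two images with the same MSE can differ considerably in texture and diagnostic quality, which is consistent with the limitation noted above.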

7 Conclusion

In this review, we have examined the techniques for supervised/unsupervised/self-supervised CT image denoising and reconstruction. We have outlined the primary aspects of image- and projection-domain denoising through the lens of deep learning. Taking into account the rapid advancements in AI technologies and the demands of clinical practice, deep learning-based CT denoising and image reconstruction methods offer substantial promise for clinical use, and the ongoing development of innovative theories and methods is essential to addressing the diverse challenges in clinical settings.

References

Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Adv Neural Inf Process Syst. 2012:1097–105.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: International conference on learning representations, 2015.

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A. Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, p. 1–9.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, p. 770–8.

Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge: MIT Press; 2016.

Sermanet P, Eigen D, Zhang X, Mathieu M, Fergus R, Lecun Y. Overfeat: integrated recognition, localization and detection using convolutional networks. In: International conference on learning representations, 2014.

Pinheiro PH, Collobert R. Recurrent convolutional neural networks for scene labeling. In: 31st International conference on machine learning, 2014.

Johnson J, Alahi A, Fei-Fei L. Perceptual losses for real-time style transfer and super-resolution. In: European conference on computer vision. Springer, 2016, p. 694–711.

Zhu JY, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision, 2017, p. 2223–32.

Chatterjee P, Milanfar P. Is denoising dead? IEEE Trans Image Process. 2009;19(4):895–911.

Chen Y, Pock T. Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans Pattern Anal Mach Intell. 2016;39(6):1256–72.

Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a gaussian denoiser: residual learning of deep cnn for image denoising. IEEE Trans Image Process. 2017;26(7):3142–55.

Liu D, Wen B, Fan Y, Loy CC, Huang TS. Non-local recurrent network for image restoration. Adv Neural Inf Process Syst 2018;31.

Liang J, Cao J, Sun G, Zhang K, Van Gool L, Timofte R. Swinir: image restoration using swin transformer. In: Proceedings of the IEEE/CVF international conference on computer vision, 2021, p. 1833–44.

Liu Y, Qin Z, Anwar S, Ji P, Kim D, Caldwell S, Gedeon T. Invertible denoising network: a light solution for real noise removal. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2021; p. 13365–74.

Zamir SW, Arora A, Khan S, Hayat M, Khan FS, Yang MH, Shao L. Multi-stage progressive image restoration. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2021, p. 14821–31.

Kawar B, Vaksman G, Elad M. Stochastic image denoising by sampling from the posterior distribution. In: Proceedings of the IEEE/CVF international conference on computer vision, 2021, p. 1866–75.

Ohayon G, Adrai T, Vaksman G, Elad M, Milanfar P. High perceptual quality image denoising with a posterior sampling cgan. In: Proceedings of the IEEE/CVF international conference on computer vision, 2021, p. 1805–13.

Chen H, Zhang Y, Zhang W, Liao P, Li K, Zhou J, Wang G. Low-dose CT via convolutional neural network. Biomed Opt Express. 2017;8(2):679–94.

Yang Q, Yan P, Zhang Y, Yu H, Shi Y, Mou X, Kalra MK, Zhang Y, Sun L, Wang G. Low-dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss. IEEE Trans Med Imaging. 2018;37(6):1348–57.

Wolterink JM, Leiner T, Viergever MA, Išgum I. Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging. 2017;36(12):2536–45.

Shan H, Zhang Y, Yang Q, Kruger U, Kalra MK, Sun L, Cong W, Wang G. 3-D convolutional encoder-decoder network for low-dose CT via transfer learning from a 2-D trained network. IEEE Trans Med Imaging. 2018;37(6):1522–34.

You C, Yang Q, Gjesteby L, Li G, Ju S, Zhang Z, Zhao Z, Zhang Y, Cong W, Wang G, et al. Structurally-sensitive multi-scale deep neural network for low-dose CT denoising. IEEE Access. 2018;6:41839–55.

Choi K, Lim JS, Kim S. StatNet: statistical image restoration for low-dose CT using deep learning. IEEE J Sel Top Signal Process. 2020;14(6):1137–50.

AAPM. Low Dose CT Grand Challenge (2016). www.aapm.org/GrandChallenge/LowDoseCT

Batson J, Royer L. Noise2Self: blind denoising by self-supervision. In: International conference on machine learning, 2019, p. 524–33.

Krull A, Buchholz TO, Jug F. Noise2void-learning denoising from single noisy images. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2019, p. 2129–37.

Laine S, Karras T, Lehtinen J, Aila T. High-quality self-supervised deep image denoising. In: Advances in neural information processing systems 2019, p. 6970–80.

Hsieh J. Computed tomography: principles, design, artifacts, and recent advances. SPIE; 2009.

Sidky E, Pan X. Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys Med Biol. 2008;53(17):4777–807.

Yu H, Wang G. Compressed sensing based interior tomography. Phys Med Biol. 2009;54(9):2791–805.

Choi K, Wang J, Zhu L, Suh TS, Boyd S, Xing L. Compressed sensing based cone-beam computed tomography reconstruction with a first-order method. Med Phys. 2010;37:5113–25.

Ritschl L, Bergner F, Fleischmann C, Kachelrieß M. Improved total variation-based CT image reconstruction applied to clinical data. Phys Med Biol. 2011;56(6):1545–61.

Ramani S, Fessler JA. A splitting-based iterative algorithm for accelerated statistical X-ray CT reconstruction. IEEE Trans Med Imaging. 2012;31(3):677–88.

Zeng GL, Li Y, Zamyatin A. Iterative total-variation reconstruction versus weighted filtered-backprojection reconstruction with edge-preserving filtering. Phys Med Biol. 2013;58(10):3413–31.

Choi K, Fahimian B, Li T, Suh TS, Xing L. Enhancement of four-dimensional cone-beam computed tomography by compressed sensing with Bregman iteration. J X-Ray Sci Technol. 2013;21(2):177–92.

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J, et al. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends® Mach Learn. 2011;3(1):1–122.

Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, p. 3431–40.

Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention. Springer, 2015, p. 234–41.

Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in neural information processing systems, 2015, p. 91–9.

Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P, Zhou J, Wang G. Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans Med Imaging. 2017;36(12):2524–35.

Shan H, Padole A, Homayounieh F, Kruger U, Khera RD, Nitiwarangkul C, Kalra MK, Wang G. Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell. 2019;1(6):269.

Kang E, Min J, Ye JC. A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med Phys 2017;44(10)

Zeng D, Wang L, Geng M, Li S, Deng Y, Xie Q, Li D, Zhang H, Li Y, Xu Z, et al. Noise-generating-mechanism-driven unsupervised learning for low-dose CT sinogram recovery. IEEE Trans Radiat Plasma Med Sci. 2021;6(4):404–14.

Blau Y, Michaeli T. The perception-distortion tradeoff. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2018, p. 6228–37.

Freirich D, Michaeli T, Meir R. A theory of the distortion-perception tradeoff in wasserstein space. Adv Neural Inf Process Syst. 2021;34:25661–72.

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. Adv Neural Inf Process Syst. 2014;5:2672–80.

Radford A, Metz L, Chintala S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434 2015.

Isola P, Zhu JY, Zhou T, Efros AA. Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, p. 1125–34.

Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. In: Proceedings of the 34th international conference on machine learning, 2017, p. 214–23.

Divakar N, Venkatesh Babu R. Image denoising via CNNs: an adversarial approach. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2017, p. 80–7.

Dey R, Bhattacharjee D, Nasipuri M. Image denoising using generative adversarial network. Intell Comput Image Process Based Appl. 2020;73–90.

Yi X, Babyn P. Sharpness-aware low-dose CT denoising using conditional generative adversarial network. J Digit Imaging. 2018;31:655–69.

Choi K, Kim SW, Lim JS. Real-time image reconstruction for low-dose CT using deep convolutional generative adversarial networks (GANs). In: SPIE medical imaging 2018: physics of medical imaging, 2018;10573:1057332.

Goodfellow I. NIPS 2016 tutorial: generative adversarial networks. arXiv preprint arXiv:1701.00160 2016.

Choi K, Vania M, Kim S. Semi-supervised learning for low-dose CT image restoration with hierarchical deep generative adversarial network (HD-GAN). In: 2019 41st annual international conference of the IEEE engineering in medicine and biology society (EMBC). IEEE, 2019, p. 2683–6.

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B. Swin transformer: hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF international conference on computer vision, 2021, p. 10012–22.

Zhu L, Han Y, Xi X, Fu H, Tan S, Liu M, Yang S, Liu C, Li L, Yan B. Stednet: Swin transformer-based encoder-decoder network for noise reduction in low-dose CT. Med Phys. 2023;50(7):4443–58.

Li H, Yang X, Yang S, Wang D, Jeon G. Transformer with double enhancement for low-dose CT denoising. IEEE J Biomed Health Inform. 2022;27(10):4660–71.

Yang L, Li Z, Ge R, Zhao J, Si H, Zhang D. Low-dose CT denoising via sinogram inner-structure transformer. IEEE Trans Med Imaging. 2023;42(4):910–21.

Luthra A, Sulakhe H, Mittal T, Iyer A, Yadav S. Eformer: edge enhancement based transformer for medical image denoising. arXiv preprint arXiv:2109.08044 2021.

Wang D, Fan F, Wu Z, Liu R, Wang F, Yu H. Ctformer: convolution-free token2token dilated vision transformer for low-dose CT denoising. Phys Med Biol. 2023;68(6): 065012.

Choi K, Li R, Nam H, Xing L. A Fourier-based compressed sensing technique for accelerated CT image reconstruction using first-order methods. Phys Med Biol. 2014;59(12):3097–119.

Lehtinen J, Munkberg J, Hasselgren J, Laine S, Karras T, Aittala M, Aila T. Noise2Noise: learning image restoration without clean data. In: International conference on machine learning, 2018, p. 2971–80.

Ehret T, Davy A, Morel JM, Facciolo G, Arias P. Model-blind video denoising via frame-to-frame training. In: Proceedings of the IEEE conference on computer vision and pattern recognition, 2019, p. 11369–78.

Wu D, Gong K, Kim K, Li X, Li Q. Consensus neural network for medical imaging denoising with only noisy training samples. In: International conference on medical image computing and computer-assisted intervention. Springer, 2019, p. 741–9.

Yuan N, Zhou J, Qi J. Half2Half: deep neural network based CT image denoising without independent reference data. Phys Med Biol. 2020;65(21): 215020.

Dabov K, Foi A, Katkovnik V, Egiazarian K. BM3D image denoising with shape-adaptive principal component analysis. In: Signal processing with adaptive sparse structured representations, 2009.

Buades A, Coll B, Morel JM. A non-local algorithm for image denoising. IEEE Conf Comput Vis Pattern Recognit. 2005;2:60–5.

Choi K. Self-supervised projection denoising for low-dose cone-beam CT. In: 2021 43rd annual international conference of the IEEE engineering in medicine & biology society (EMBC). IEEE, 2021, p. 3459–62.

Choi K. A comparative study between image-and projection-domain self-supervised learning for ultra low-dose CBCT. In: 2022 44th annual international conference of the IEEE engineering in medicine & biology society (EMBC). IEEE, 2022, p. 2076–9.

Hendriksen AA, Pelt DM, Batenburg KJ. Noise2inverse: self-supervised deep convolutional denoising for tomography. IEEE Trans Comput Imaging. 2020;6:1320–35.

Wang G, Ye JC, De Man B. Deep learning for tomographic image reconstruction. Nat Mach Intell. 2020;2(12):737–48.

Choi K, Lim JS, Kim S. Self-supervised inter-and intra-slice correlation learning for low-dose CT image restoration without ground truth. Expert Syst Appl. 2022;209: 118072.

Niu C, Li M, Fan F, Wu W, Guo X, Lyu Q, Wang G. Noise suppression with similarity-based self-supervised deep learning. IEEE Trans Med Imaging. 2023.

Bai T, Wang B, Nguyen D, Jiang S. Probabilistic self-learning framework for low-dose CT denoising. Med Phys. 2021;48(5):2258–70.

Wang J, Li T, Liang Z, Xing L. Dose reduction for kilovoltage cone-beam computed tomography in radiation therapy. Phys Med Biol. 2008;53(11):2897.

Choi K, Kim SH, Kim S. Self-supervised denoising of projection data for low-dose cone-beam CT. Med Phys. 2023;50(10):6319–33.

Garayoa J, Castro P. A study on image quality provided by a kilovoltage cone-beam computed tomography. J Appl Clin Med Phys. 2013;14(1):239–57.

Fahrig R, Dixon R, Payne T, Morin RL, Ganguly A, Strobel N. Dose and image quality for a cone-beam c-arm CT system. Med Phys. 2006;33(12):4541–50.

Zhu L, Xie Y, Wang J, Xing L. Scatter correction for cone-beam CT in radiation therapy. Med Phys. 2009;36(6Part1):2258–68.

Min J, Pua R, Kim I, Han B, Cho S. Analytic image reconstruction from partial data for a single-scan cone-beam CT with scatter correction. Med Phys. 2015;42(11):6625–40.

Tacher V, Radaelli A, Lin M, Geschwind JF. How i do it: cone-beam CT during transarterial chemoembolization for liver cancer. Radiology. 2015;274(2):320–34.

Bai M, Liu B, Mu H, Liu X, Jiang Y. The comparison of radiation dose between c-arm flat-detector CT (dynact) and multi-slice CT (msct): a phantom study. Eur J Radiol. 2012;81(11):3577–80.

Ongie G, Jalal A, Metzler CA, Baraniuk RG, Dimakis AG, Willett R. Deep learning techniques for inverse problems in imaging. IEEE J Sel Areas Inf Theory. 2020;1(1):39–56.

Sun J, Li H, Xu Z, et al. Deep admm-net for compressive sensing mri. Adv Neural Inf Process Syst. 2016;29.

Adler J, Öktem O. Learned primal-dual reconstruction. IEEE Trans Med Imaging. 2018;37(6):1322–32.

Xiang J, Dong Y, Yang Y. Fista-net: learning a fast iterative shrinkage thresholding network for inverse problems in imaging. IEEE Trans Med Imaging. 2021;40(5):1329–39.

Romano Y, Elad M, Milanfar P. The little engine that could: regularization by denoising (red). SIAM J Imaging Sci. 2017;10(4):1804–44.

Wu D, Kim K, El Fakhri G, Li Q. Iterative low-dose CT reconstruction with priors trained by artificial neural network. IEEE Trans Med Imaging. 2017;36(12):2479–86.

Chen H, Zhang Y, Chen Y, Zhang J, Zhang W, Sun H, Lv Y, Liao P, Zhou J, Wang G. Learn: learned experts’ assessment-based reconstruction network for sparse-data CT. IEEE Trans Med Imaging. 2018;37(6):1333–47.

Chun IY, Huang Z, Lim H, Fessler JA. Momentum-net: fast and convergent iterative neural network for inverse problems. IEEE Trans Pattern Anal Mach Intell. 2020;45(4):4915–31.

Ye S, Li Z, McCann MT, Long Y, Ravishankar S. Unified supervised-unsupervised (super) learning for x-ray CT image reconstruction. IEEE Trans Med Imaging. 2021;40(11):2986–3001.

Li Y, Sun X, Wang S, Li X, Qin Y, Pan J, Chen P. Mdst: multi-domain sparse-view CT reconstruction based on convolution and swin transformer. Phys Med Biol. 2023;68(9): 095019.

Li R, Li Q, Wang H, Li S, Zhao J, Yan Q, Wang L. Ddptransformer: dual-domain with parallel transformer network for sparse view CT image reconstruction. IEEE Trans Comput Imaging. 2022;8:1101–16.

Xia W, Shan H, Wang G, Zhang Y. Physics-/model-based and data-driven methods for low-dose computed tomography: A survey. IEEE Signal Process Mag. 2023;40(2):89–100.

Lee H, Xing L, Lee R, Fahimian BP. Scatter correction in cone-beam CT via a half beam blocker technique allowing simultaneous acquisition of scatter and image information. Med Phys. 2012;39(5):2386–95.

Zhao W, Vernekohl D, Zhu J, Wang L, Xing L. A model-based scatter artifacts correction for cone beam CT. Med Phys. 2016;43(4):1736–53.

Shen C, Nguyen D, Zhou Z, Jiang SB, Dong B, Jia X. An introduction to deep learning in medical physics: advantages, potential, and challenges. Phys Med Biol. 2020;65(5):05TR01.

Nomura Y, Xu Q, Shirato H, Shimizu S, Xing L. Projection-domain scatter correction for cone beam computed tomography using a residual convolutional neural network. Med Phys. 2019;46(7):3142–55.

Funding

This work was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) under Grant No. 202011A02.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Consent to participate

There are no human participants in these studies.

Consent to publish

There are no human participants in these studies.

About this article

Cite this article

Choi, K. Self-supervised learning for CT image denoising and reconstruction: a review. Biomed. Eng. Lett. (2024). https://doi.org/10.1007/s13534-024-00424-w

DOI: https://doi.org/10.1007/s13534-024-00424-w